ABSTRACT

Inquiry learning is an effective learning approach if learners are properly guided. Its effectiveness depends on learners’ prior knowledge, the domain, and their relationship. In a previous study we developed an Experiment Design Tool (EDT) guiding learners in designing experiments. The EDT significantly benefited low prior knowledge learners. For the current study the EDT was refined to also serve higher prior knowledge learners. Two versions were created; the “Constrained EDT” required learners to design minimally three experimental trials and apply CVS before they could conduct their experiment, and the “Open EDT” allowed learners to design as many trials as they wanted, and vary more than one variable. Three conditions were compared in terms of learning gains for learners having distinct levels of prior knowledge. Participants designed and conducted experiments within an online learning environment that (1) did not include an EDT, (2) included the Constrained EDT, or (3) included the Open EDT. Results indicated low prior knowledge learners to benefit most from the Constrained EDT (non-significant), low-intermediate prior knowledge learners from the Open EDT (significant), and high-intermediate prior knowledge learners from no EDT (non-significant). We advocate guidance to be configurable to serve learners with varying levels of prior knowledge.

Introduction

Educational objectives are shifting in our current society, where learners increasingly must learn actively and independently because this has been acknowledged to yield better learning results (SLO Nationaal Expertisecentrum Leerplanontwikkeling, Citation2016). An educational approach that anticipates this trend is inquiry learning, which has been found to be effective for learning as long as learners are guided in the processes involved (Alfieri, Brooks, Aldrich, & Tenenbaum, Citation2011). The essence of this approach is that learners construct knowledge by carrying out inquiries; learners practice (a subset of) inquiry processes such as becoming oriented to the topic of investigation, formulating hypotheses and/or research questions, setting up and conducting experiments, drawing conclusions, and reflecting upon their inquiries (Pedaste et al., Citation2015). Inquiry learning stimulates learners to acquire, integrate, and apply new knowledge (Edelson, Gordin, & Pea, Citation1999), which can lead to deeper processing of knowledge and higher-order understanding (Carnesi & DiGiorgio, Citation2009).

A core inquiry process: designing experiments

Designing experiments is one of the core activities of inquiry learning, situated in the middle of the inquiry cycle as the linchpin between the more theoretical phases of hypothesis generation and drawing conclusions (Osborne, Collins, Ratcliffe, Millar, & Duschl, Citation2003; van Riesen, Gijlers, Anjewierden, & de Jong, Citation2018b). Learners must design experiments by which they can obtain results that are relevant for drawing conclusions regarding their hypothesis or research question. Experiment design thus builds a bridge between the hypothesis or research question, and data analysis and conclusion drawing (Arnold, Kremer, & Mayer, Citation2014).

Designing useful experiments requires understanding of inquiry and possession of inquiry skills, and it entails several aspects and processes that have been found to be difficult for learners of all ages (de Jong & van Joolingen, Citation1998). One of the inquiry processes that has been found to predict conceptual knowledge gains is planning, which includes setting goals, selecting and implementing relevant strategies to meet those goals, and activating prior knowledge (de Jong & Njoo, Citation1992; Schraw, Crippen, & Hartley, Citation2006; Schunk, Citation1996). In experiment design, the goal is usually to further explore a domain by testing a hypothesis or answering a research question. Depending on someone’s prior knowledge of the domain and the specific purpose of the experiment, certain experimentation strategies such as the Control of Variables Strategy, described in the following paragraph, can be selected and implemented in order to work towards that goal. However, learners typically start working on tasks without engaging in spontaneous or serious planning; if they do engage in planning, they are often unsystematic about it, causing them to struggle with the task (de Jong & van Joolingen, Citation1998; Manlove, Lazonder, & de Jong, Citation2006; Veenman, Elshout, & Meijer, Citation1997).

A well-designed experiment typically serves the goal of answering a research question or testing a hypothesis. In their experiment design, learners should design multiple trials in which they include variables that are relevant and required to answer the research question or test the hypothesis. However, they often select variables that have nothing to do with the question or hypothesis and/or neglect important variables that do (de Jong & van Joolingen, Citation1998; van Joolingen & de Jong, Citation1991), especially when they have little or no knowledge of the domain. Learners should also specify the roles of the selected variables by choosing what they want to measure (dependent variable), vary (independent variable) and control for (control variable), and they must decide upon values of the independent and control variables for the experimental trials they will conduct. The selection of relevant variables is influenced by learners’ initial understanding of the domain. A strategy that successful researchers often apply is the Control of Variables Strategy (CVS), in which, over a set of trials, all variables are kept constant except the variable for which they want to study its effect on the dependent variable, allowing them to draw conclusions from unconfounded experiments (Klahr & Nigam, Citation2004). Any effect on the dependent variable that occurs can then be ascribed to the independent variable of interest. Learners, on the other hand, often do not apply CVS, but instead vary too many variables (Klahr & Nigam, Citation2004), which impedes the process of drawing conclusions because any effect found may be due to a variety of influences (Glaser, Schauble, Raghavan, & Zeitz, Citation1992).
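The CVS criterion described above can be made concrete with a small check. The following is a minimal sketch, not part of the study's software: it assumes that each trial is represented as a mapping from variable names to values (independent and control variables only), and all names and values are hypothetical.

```python
from typing import Dict, List

# Hypothetical representation: one trial maps each variable name to its value.
Trial = Dict[str, float]


def varied_variables(trials: List[Trial]) -> List[str]:
    """Return the names of the variables that take more than one value across trials."""
    names = trials[0].keys()
    return sorted(name for name in names if len({t[name] for t in trials}) > 1)


def follows_cvs(trials: List[Trial]) -> bool:
    """True when exactly one variable differs across a set of at least two trials."""
    return len(trials) >= 2 and len(varied_variables(trials)) == 1


# Illustrative values only: mass varies, everything else is held constant.
unconfounded = [
    {"mass": 100, "volume": 50, "fluid_density": 1.0},
    {"mass": 200, "volume": 50, "fluid_density": 1.0},
    {"mass": 300, "volume": 50, "fluid_density": 1.0},
]
# Mass and volume change together, so an effect cannot be attributed to either.
confounded = [
    {"mass": 100, "volume": 50, "fluid_density": 1.0},
    {"mass": 200, "volume": 80, "fluid_density": 1.0},
]

print(follows_cvs(unconfounded))  # True
print(follows_cvs(confounded))    # False
```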

When learners have selected the variables they want to include in their experiment, they should design multiple experimental trials in which they choose different values for the independent variables. Two strategies for choosing those values are to use extreme values, or have equal increments between trials (Veermans, van Joolingen, & de Jong, Citation2006). In order to explore the boundaries of a domain, learners can start an experiment by using extremely low or high values. Using equal increments between trials provides information about whether or not an effect is present, when an effect occurs, the strength of an effect, and the trajectory of the effect (e.g. linear, exponential, etc.).
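The two value-selection strategies can be illustrated as follows. This is a sketch under the assumption that a variable has a known lower and upper bound; the function names and the range used are hypothetical.

```python
from typing import List


def extreme_values(low: float, high: float) -> List[float]:
    """Explore the boundaries of the domain with the lowest and highest value."""
    return [low, high]


def equal_increments(low: float, high: float, n_trials: int) -> List[float]:
    """Spread n_trials values evenly over the range, including both end points."""
    if n_trials < 2:
        raise ValueError("at least two trials are needed to form increments")
    step = (high - low) / (n_trials - 1)
    return [low + i * step for i in range(n_trials)]


print(extreme_values(50, 250))       # [50, 250]
print(equal_increments(50, 250, 5))  # [50.0, 100.0, 150.0, 200.0, 250.0]
```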

Guidance

Guiding learners in designing and conducting experiments helps them to conduct useful and systematic experiments from which they can derive knowledge (Zacharia et al., Citation2015). One of the most frequently applied forms of guidance in online learning environments is heuristics, which are hints or suggestions on how to complete assignments. Novice learners who have yet to learn about effective strategies for setting up experiments benefit especially from heuristics (Veermans et al., Citation2006; Zacharia et al., Citation2015). Examples of heuristics are to “vary one thing at a time”, and to “control all other variables by using the same value across experimental trials” (Veermans et al., Citation2006), which both refer to the Control of Variables Strategy (Klahr & Nigam, Citation2004). Heuristics can be explicitly stated for the learner, or they can be used implicitly, for example, by embedding them in a tool that only allows learners to perform actions that comply with the heuristic(s). Tools are another form of guidance; they transform or take over part of a task and thereby help learners to accomplish tasks they would not have been able to do on their own (de Jong, Citation2006; Reiser, Citation2004; Simons & Klein, Citation2007). One example is a monitoring tool in which experiments – described as a set of values assigned to input and output variables – are stored (Veermans, de Jong, & van Joolingen, Citation2000). The rationale behind this tool is that it allows learners to focus on important relationships within the domain of interest, because the tool takes over part of the task by providing learners with some sort of external memory in which the experimental trials they conduct are automatically stored. Learners can replay the saved trials, and rearrange them in ascending or descending order to be better able to compare results. 
The monitoring tool eliminates the difficulty of remembering the experimental trials that have been conducted and interpreting the results, while simultaneously thinking of appropriate follow-up trials to conduct. Another example is the SCY Experimental Design Tool in which learners can write and evaluate their experiment design by means of a checklist (Lazonder, Citation2014). The tool incorporates an overview and explanations of experimental processes, including the research question, hypothesis, principle of manipulation, materials, and data treatment. Moreover, learners receive instructions on how to perform the task.

Prior knowledge

Prior knowledge is generally found to have a strong effect on students’ learning and performance (e.g. Ausubel, Citation1968; Kalyuga, Citation2007; Ruppert, Golan Duncan, & Chinn, Citation2017), and, more specifically in our context, students’ ability to design and conduct sound experiments (Hailikari, Katajavuori, & Lindblom-Ylanne, Citation2008). Students with lower levels of prior domain knowledge lack theoretical knowledge about important domain-related variables and the relations between them. Students with lower levels of prior knowledge seem to engage in more trial-and-error behaviour (Kalyuga, Citation2007). In inquiry learning, students with low prior knowledge usually design less sophisticated experiments, and in order to design and conduct experiments of comparable quality they require higher levels of guidance than students with high prior knowledge (Kalyuga, Citation2007; Lambiotte & Dansereau, Citation1992; Tuovinen & Sweller, Citation1999). For example, in inquiry learning CVS is applied more frequently by high prior knowledge students than by low prior knowledge students (Bumbacher, Salehi, Wieman, & Blikstein, Citation2018; Schauble, Glaser, Raghavan, & Reiner, Citation1992), and CVS yields better experiments and better conceptual understanding (Bumbacher et al., Citation2018; Klahr & Nigam, Citation2004). Differences in this regard between low and high prior knowledge students can be limited or even eliminated by providing low prior knowledge students with higher levels of guidance. Additional guidance can, for example, be offered in the form of more structured tasks in which the number of possible actions is limited by restricting the number of independent variables to one, or by providing students with CVS as a heuristic (Quintana et al., Citation2004; Veermans et al., Citation2006; Zacharia et al., Citation2015). Quintana et al. (Citation2004) developed a Scaffolding Design Framework, which we adopted for the design of two versions of the Experiment Design Tool as explained in the following section. This framework gives guidelines for designing effective guidance, one of which concerns the importance of guidance that matches students’ prior knowledge. Quintana et al. state that “learning requires continually accessing and building on prior knowledge, so it is critical that new expert practices are connected with learners’ prior conceptions and with their ways of thinking about ideas in the discipline”. However, the framework does not give details about what works and what does not for specific prior knowledge groups. In the following section about the design of the EDTs, the rationales behind the two versions of the EDT that we use in this study are explained in detail based on the framework, a previously conducted study with the EDT, and additional literature about effective guidance for students with different levels of prior knowledge.

The EDT

Based on heuristics and the Scaffolding Design Framework (Quintana et al., Citation2004), an Experiment Design Tool (EDT) was developed by van Riesen et al. (Citation2018b) to help learners design and conduct experiments in an online inquiry learning environment. The EDT scaffolds learners in the complex and possibly overwhelming task of designing experiments by breaking down the process of designing and conducting an experiment into smaller steps, and by taking over parts of these smaller steps for the learner, for example, by automatically assigning the same value to each control variable within an experiment. First, the EDT provides learners with a predefined list of variables that learners can select and include in their experiment as independent, control, or dependent variables. Second, the EDT allows learners to design multiple experimental trials at once and determine values for each variable per trial (within predefined ranges). Third, the learners conduct the prepared experimental trials in a lab and document the results in the EDT. Finally, they analyse results and draw conclusions, which they write down in a conclusion text box. The EDT is meant to provide a structured and constrained learning environment within which learners can design their experiment, thereby allowing learners to design informative experiments.

In a recent study, the EDT was compared with two control conditions (that guided learners in the form of more or less specific research questions) in order to study the effect of the EDT on learners’ conceptual knowledge gains. Results showed that low prior knowledge learners, that is, learners whose conceptual knowledge about the domain did not exceed 25% of a conceptual knowledge test before working with the learning environment, significantly benefited from the EDT (van Riesen et al., Citation2018b). The results of that study indicated that guidance should fit with learners’ prior knowledge about the domain. For the current study, the EDT was further adapted (see Method section for more details) based on observations and findings from the above-mentioned study. In order to make the EDT suitable for more diverse groups of learners, domains, and curricula, we investigated the effect of two configurations of the EDT on the learning gains of learners with different levels of prior knowledge of the domain. Since novices have the tendency to start working on the problem immediately, without systematically considering the options, a more constrained version of the EDT was developed.

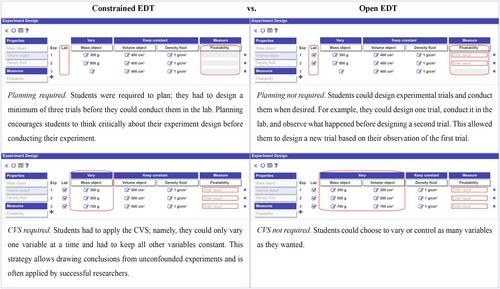

The Constrained EDT offered learners a set structure in which the application of CVS was required, namely, only one variable could be varied at a time, and in which at least three experimental trials had to be designed at once. It was expected that this configuration of the EDT with a high level of guidance would be especially beneficial for low prior knowledge learners. It is generally acknowledged that low prior knowledge learners benefit most from additional guidance (Alexander & Judy, Citation1988). Few low prior knowledge learners engage in planning when they are not guided, despite the fact that planning has been found to be very important for learning (Hagemans, van der Meij, & de Jong, Citation2013; Manlove et al., Citation2006; Zimmerman, Citation2002). Dalgarno, Kennedy, and Bennett (Citation2014) also found that low prior knowledge learners applied CVS noticeably less than learners with higher prior knowledge when they analysed learners’ experimentation strategies, and that learners who applied CVS performed better on a conceptual knowledge post-test than learners who did not apply CVS.

The second configuration, the Open EDT, had a more exploratory nature. The basics of the Open EDT were identical to the Constrained EDT, but learners were free to conduct their designed trials whenever they wanted without having to first design at least three trials, and they were not obliged to apply CVS but could vary more than one variable if desired. It was expected that the Open EDT would be best for low-intermediate prior knowledge learners, because they already possess basic knowledge about the domain, but still need to explore relationships between variables, in contrast to students with low prior knowledge who might lack the very basics that are needed for successful exploration of the domain (Roll, de Baker, Aleven, & Koedinger, Citation2014). Roll, Briseno, Yee, and Welsh (Citation2014) found that low prior knowledge students sometimes perform better when they first explore the domain by means of trial-and-error behaviour, and a review study by Pedaste et al. (Citation2015) showed that an exploratory approach to the domain is beneficial for learners lacking specific knowledge about the domain.

Virtual lab

Learners in the current study conducted their designed experiments in a virtual lab. A virtual lab is a type of online lab that is operated through a medium such as the computer, and is described as a simulation of reality (de Jong, Linn, & Zacharia, Citation2013; Sancristobal et al., Citation2012). An important advantage of virtual labs over physical labs is that they allow variables to take on many values, and learners can conduct an unlimited number of experiments that consume less time than experiments conducted in other types of labs, which provides them with excellent opportunities to gain theoretical understandings (Almarshoud, Citation2011). In a study by Toth, Ludvico, and Morrow (Citation2014) in which virtual labs and hands-on labs about DNA gel-electrophoresis were compared, it was found that virtual labs had significant advantages for gaining conceptual knowledge, and learning was deeper and more purposeful than learning with hands-on labs.

Domains: buoyancy and Archimedes’ principle

The virtual lab that was used in the study was about the domains of buoyancy and Archimedes’ principle. Buoyancy plays an important role in science education and everyday life, it can be challenging for learners of all ages, and its understanding is a prerequisite for understanding Archimedes’ principle (van Riesen et al., Citation2018b). It requires a conceptual understanding of density (mass divided by volume), and floating and sinking; objects placed in a fluid float when the density of the object is lower than the density of the fluid, and they sink when the density of the object is higher than the density of the fluid (Hardy, Jonen, Möller, & Stern, Citation2006). Learners of all ages experience challenges in understanding the relationship between density and floating or sinking; they often think that the floatability of an object is determined by its weight without considering volume as well, they fail to recognise the relationship between mass and volume, or they focus on specific features of objects such as holes in an object that may cause it to float (Driver, Squires, Rushworth, & Wood-Robinson, 1994, in Loverude, Citation2009; McKinnon & Renner, 1971, in Loverude, Kautz, & Heron, Citation2003).
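The density rule described above can be written out in a few lines. This sketch is illustrative only: the function names and example values are hypothetical, and equality of densities is treated as "suspended", the third outcome that can be observed in the virtual lab.

```python
from math import isclose


def density(mass_g: float, volume_cm3: float) -> float:
    """Density is mass divided by volume (here in g/cm^3)."""
    return mass_g / volume_cm3


def behaviour(object_density: float, fluid_density: float) -> str:
    """Compare the object's density with the fluid's density."""
    if isclose(object_density, fluid_density):
        return "suspended"
    return "floats" if object_density < fluid_density else "sinks"


WATER = 1.0  # g/cm^3

# A heavy but large ball still floats: mass alone does not decide the outcome.
print(behaviour(density(200, 250), WATER))  # floats
print(behaviour(density(500, 400), WATER))  # sinks
print(behaviour(density(100, 100), WATER))  # suspended
```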

Archimedes’ principle is related to buoyancy and is often used as additional subject-matter in Dutch education. Archimedes’ principle can be explained in terms of water displacement or forces (van Riesen et al., Citation2018b). Floating objects have the same mass as the fluid they displace, sinking objects have the same volume as the displaced fluid, and suspended objects have the same mass and volume as the fluid they displace (Hughes, Citation2005). When explained in terms of forces, Archimedes’ principle states that “an object fully or partially immersed in a fluid is buoyed up by a force equal to the weight of the fluid that the object displaces” (Halliday, Resnick, & Walker, 1997, in Hughes, Citation2005, p. 469).
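Both formulations of Archimedes’ principle can be checked numerically. The sketch below assumes SI units, an object at rest in the fluid, and g = 9.81 m/s²; the function names and example values are hypothetical and are not taken from Splash.

```python
from math import isclose

G = 9.81  # gravitational acceleration in m/s^2 (assumed value)


def displaced_volume(mass_kg: float, volume_m3: float, fluid_density: float) -> float:
    """Volume of fluid displaced by an object at rest in the fluid."""
    if mass_kg / volume_m3 < fluid_density:
        # Floating: the displaced fluid has the same mass as the object.
        return mass_kg / fluid_density
    # Sinking or suspended: fully submerged, so the displaced volume equals the object's volume.
    return volume_m3


def buoyant_force(displaced_m3: float, fluid_density: float) -> float:
    """Buoyant force equals the weight of the displaced fluid."""
    return fluid_density * displaced_m3 * G


WATER = 1000.0  # kg/m^3

# Floating ball: the buoyant force balances the ball's weight.
v = displaced_volume(0.2, 0.00025, WATER)
print(isclose(buoyant_force(v, WATER), 0.2 * G))  # True

# Sinking ball: the displaced volume equals the ball's own volume.
print(displaced_volume(0.5, 0.0004, WATER) == 0.0004)  # True
```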

In the current study, which is quasi-experimental, students designed and conducted experiments in an online learning environment to answer research questions about buoyancy and Archimedes’ Principle that were provided to them in that environment. Three learning environments with different supports for designing and conducting experiments were compared. Additional support in the form of one of the two configurations of the EDT was provided in the two experimental conditions. Students in the control condition performed their experiments without using any form of the EDT.

Method

Participants

Three secondary schools in the Netherlands participated in the current study, with a total of 160 pre-university students from six third year classes (approximately 15 years old). To make sure that the level of prior knowledge in all three conditions was similar, learners were assigned to one of the three conditions based on grades received during their physics classes (this information was retrieved through their teacher). After the experiment we eliminated four outliers based on their difference scores regarding buoyancy, two outliers based on their difference scores regarding Archimedes’ principle, one student who was observed not to take the study seriously, and 44 students who missed a session. The data from a total of 109 students (36 in the control condition, 36 in the constrained EDT condition and 37 in the open EDT condition) remained for analyses.
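The exclusion counts reported above add up to the final sample, as the following quick check shows (the dictionary keys are shorthand labels, not terms from the study):

```python
recruited = 160
excluded = {
    "outliers (buoyancy difference scores)": 4,
    "outliers (Archimedes' principle difference scores)": 2,
    "did not take the study seriously": 1,
    "missed a session": 44,
}
per_condition = {"control": 36, "Constrained EDT": 36, "Open EDT": 37}

remaining = recruited - sum(excluded.values())
print(remaining)                                 # 109
print(remaining == sum(per_condition.values()))  # True
```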

Learning environments

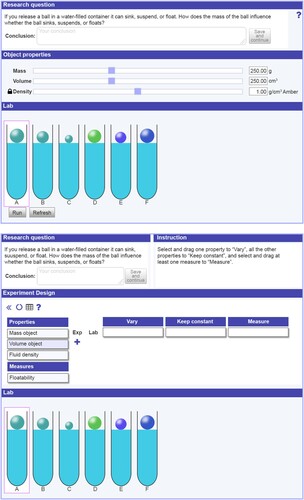

Upon entering the environment, learners saw instructions on the screen telling them about their task of designing and conducting experiments in a virtual lab, called Splash, in order to answer research questions provided to them in the learning environment. After students had read the instructions they could continue to the investigation space (), which included a research question, a conclusion text box, a mechanism to prepare experiments with, Splash (a virtual lab about buoyancy and Archimedes’ principle), and a help button to retrieve domain information upon request. The three learning environments each contained the same set of fourteen research questions. Students were presented with one research question at a time, in order, and they could only continue with the next research question after they had designed and conducted their experiments and had entered their conclusion in the conclusion text box.

Online virtual lab: Splash

Students worked with an online virtual lab called Splash (the lab in ). In Splash, several fluid-filled tubes were displayed; the fluids could be water or fluids with a different density. Students must determine the mass, volume and density of the balls that were provided, which they could then place in the fluid-filled tubes. They could observe whether the balls sank, floated, or suspended in the fluid, how much fluid was displaced by the ball, and how the domain-related forces, such as buoyant force and gravity, acted upon each other.

Support

The learning environments were similarly structured for each of the conditions and only differed in the support offered to students for the processes of designing and conducting experiments (). Learners in the control condition had to use sliders in the Object properties box to adjust the settings for their experiments, whereas learners in the experimental conditions used one of the configurations of the EDT, as is described in more detail in the following sections.

Control condition

The learning environment that was used by students in the control condition offered the least amount of support. Students could prepare their experiments and conduct them directly in Splash by means of sliders that assigned values to the variables in their experiments. They could take notes in their provided booklet and write down everything they considered to be relevant to answer the research question, including their experimental trials and observed results. This learning environment did not include the EDT to help students design their experiments.

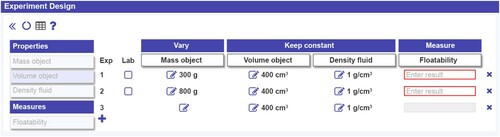

EDT conditions: Constrained EDT and Open EDT

Learners in the two experimental conditions worked with a learning environment that included one of the two configurations of the Experiment Design Tool (EDT) to support learners in the processes of designing and conducting experiments (). The basic functionality of the two configurations was the same. The EDT was developed to address elements that are central in experimentation; it revolved around the independent, control, and dependent variables. The tool presented learners with a list of pre-selected variables to use in their experiment design. For each variable, learners had to decide if they wanted to vary it across experimental trials, control it, or measure it, by dragging it to one of the boxes “vary”, “keep constant”, or “measure”. Learners could plan multiple experimental trials by adding them to the design, and assign values to the independent and control variables for each trial by means of a slider that allowed them to choose from a range of values. Different values across trials could be assigned to variables in the “vary” box while only one value could be assigned to each variable in the “keep constant” box, because that value was automatically copied by the EDT to all other experimental trials within the experiment. At all times, learners could read instructions at the top of their screen on how to use the EDT. The instructions were presented just-in-time and were based on learners’ actions. For example, when they started designing their experiment they received instructions to drag and drop all property variables to the “vary” and “keep constant” boxes, and to drag at least one variable they wanted to measure to “measure”.
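The way the “keep constant” box propagates a single value to every trial can be sketched as follows. This is not the EDT's actual implementation; the data layout, the function name `build_trials`, and the example values are assumptions made for illustration.

```python
from typing import Dict, List


def build_trials(
    vary: Dict[str, List[float]],
    keep_constant: Dict[str, float],
) -> List[Dict[str, float]]:
    """Build one trial per entry in the 'vary' lists and copy each constant into all trials."""
    lengths = {len(values) for values in vary.values()}
    if len(lengths) != 1:
        raise ValueError("each varied variable needs exactly one value per trial")
    n_trials = lengths.pop()
    return [
        {**{name: values[i] for name, values in vary.items()}, **keep_constant}
        for i in range(n_trials)
    ]


trials = build_trials(
    vary={"mass": [100, 200, 300]},
    keep_constant={"volume": 150, "fluid_density": 1.0},
)
for trial in trials:
    print(trial)
```

Because the learner enters each constant only once, the control variables cannot accidentally differ between trials.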

When the experiment design was ready, learners could select the trials they wanted to conduct in Splash. The selected trials were automatically transferred from the EDT to Splash so learners did not have to enter the chosen values twice. In Splash they could observe what happened and write down the results in the EDT. Learners could enter their results for the dependent variables after they had conducted the trials. The completed trials for which they had entered results were all automatically saved in a history table that they could view at all times. Moreover, the history table allowed them to sort variable values in ascending or descending order, which made it easier to reach conclusions or decide whether more trials or even experiments were required to answer the research question. In case learners wanted to conduct more trials or experiments to answer the research question, they could add more trials, adjust their design, or design an entirely new experiment. Any of those options still allowed them to view the history table with all of their completed trials for the research question they were trying to answer.

The two configurations of the tool differed in two aspects, as shown in . One configuration had a more exploratory character and the other configuration offered more structure to the learners. The first way the two configurations differed was in the number of trials learners had to design before they could conduct them in the lab. The second way the two configurations differed was in the application of experiment design strategies.
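The two differences can be summarised as a gate that decides whether a design may be conducted in the lab. The sketch below is a hypothetical reading of the rules described in this article (at least three trials and exactly one varied variable in the Constrained EDT; no such restrictions in the Open EDT); it is not the tool's code.

```python
def may_conduct(configuration: str, n_trials: int, n_varied: int) -> bool:
    """Decide whether the designed trials may be run in the lab.

    Assumption: 'constrained' requires at least three trials and exactly one
    varied variable (CVS); 'open' only requires that a trial exists.
    """
    if configuration == "constrained":
        return n_trials >= 3 and n_varied == 1
    if configuration == "open":
        return n_trials >= 1
    raise ValueError(f"unknown configuration: {configuration!r}")


print(may_conduct("constrained", n_trials=2, n_varied=1))  # False
print(may_conduct("constrained", n_trials=3, n_varied=1))  # True
print(may_conduct("open", n_trials=1, n_varied=2))         # True
```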

Assessment

Students’ knowledge of buoyancy and Archimedes’ principle, the topics in Splash, was assessed both before and after the intervention with parallel pencil-and-paper pre- and post-tests that were based on tests created by van Riesen et al. (Citation2018b). The pre-test contained the same type of questions as the post-test but differed in the values provided within questions and in the order of the questions. The tests that we used in our current study consisted of 58 open-ended questions that measured learners’ understanding of the key concepts and principles of the topics in Splash, with 25 points available for buoyancy and 33 for Archimedes’ principle. Learners had to write down definitions and apply their knowledge by providing the mass, volume, and density of balls and fluids in different situations, the amount of water that was displaced by the ball, and/or forces that were present in the provided situations. Learners were given thirty minutes to complete the test and were allowed to use a calculator and a pen. To determine the reliability of the tests, separate Cronbach’s alphas for both parts (buoyancy and Archimedes’ principle) of the pre- and the post-tests were determined based on the 109 participants that were taken into account in the analyses. The first part of the pre-test (about buoyancy) showed a Cronbach’s alpha of .936 (25 items), and the second part of the pre-test (about Archimedes’ principle) a Cronbach’s alpha of .886 (33 items). Cronbach’s alphas of .921 were found for the buoyancy part of the post-test (25 items) and of .907 for the Archimedes’ principle part of the post-test (33 items), all of which demonstrate high reliabilities.
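For readers unfamiliar with the statistic, Cronbach’s alpha can be computed from an item-score matrix with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The sketch below uses made-up scores for four participants on three items; it does not reproduce the study's data.

```python
from statistics import variance
from typing import List


def cronbach_alpha(scores: List[List[float]]) -> float:
    """scores[p][i] is the score of participant p on item i."""
    k = len(scores[0])
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical item scores (1 = correct, 0 = incorrect).
toy_scores = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 0],
]
print(round(cronbach_alpha(toy_scores), 3))
```

Items that always agree across participants yield an alpha of 1, whereas items that are unrelated to each other pull alpha towards 0.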

Procedure

The study was carried out during four sessions of 50–60 min each, over a period of two and a half weeks, in the computer lab at the learners’ school during their regular physics lessons. At the beginning of the first session learners were told what they were going to do in the four sessions making up the study. Thereafter, they had half an hour to complete the pre-test, which was enough time for all learners to finish. Depending on the condition to which they were assigned, learners were then given instructions and a demonstration on how to perform the tasks for the upcoming lessons within the learning environment. They could ask any questions they (still) had. During the second session learners received a booklet matching the condition they were assigned to. All booklets contained instructions about the tasks they were going to perform, and the research questions they had to answer during the lesson, so that they could see which questions were still coming and take notes for specific questions if they wanted to. In addition, the booklets given to learners in the control group provided specific spaces where they could write down anything they thought might help them answer the research question, such as their experiment design and observed results. All learners worked individually with the learning environment at a computer. Instructions had already been provided to them during the first session, but were also present in the learning environment and on paper, so they could immediately start designing and conducting experiments to learn about buoyancy during the second session. The third session was similar to the second session; learners also worked with the learning environment, but the topic of investigation was Archimedes’ principle instead of buoyancy. During the fourth session learners took the post-test, which they again had half an hour to complete, and they all finished within the allotted time again.

Results

In the current study three conditions were compared, which differed in the support provided for designing and conducting experiments in an online learning environment. First, we explored whether learners in all conditions gained knowledge about buoyancy and Archimedes’ principle. Paired samples t-tests were conducted for each condition and showed significant increases in score from pre- to post-test for buoyancy (control condition: t(35) = −3.941, p < .001, d = 0.66; Constrained EDT condition: t(35) = −3.088, p = .004, d = 0.51; Open EDT condition: t(36) = −3.709, p = .001, d = 0.61) and for Archimedes’ principle (control condition: t(35) = −4.378, p < .001, d = 0.73; Constrained EDT condition: t(35) = −2.711, p = .010, d = 0.45; Open EDT condition: t(36) = −3.630, p = .001, d = 0.60) in all three conditions. Table 1 shows the means and SDs of the pre- and post-test scores for all conditions, as well as the difference scores.
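The article does not state how the effect sizes were computed, but the reported d values are consistent with the common paired-samples conversion d = |t| / √n, with n = df + 1. The following sketch recomputes them from the reported t statistics; the function name is hypothetical.

```python
from math import sqrt


def d_from_paired_t(t: float, df: int) -> float:
    """Effect size for a paired-samples t-test: |t| divided by the square root of n."""
    return abs(t) / sqrt(df + 1)


# (t, df, reported d) as given in the text above.
reported = [
    (-3.941, 35, 0.66),  # buoyancy, control
    (-3.088, 35, 0.51),  # buoyancy, Constrained EDT
    (-3.709, 36, 0.61),  # buoyancy, Open EDT
    (-4.378, 35, 0.73),  # Archimedes' principle, control
    (-2.711, 35, 0.45),  # Archimedes' principle, Constrained EDT
    (-3.630, 36, 0.60),  # Archimedes' principle, Open EDT
]
for t, df, d in reported:
    print(f"t({df}) = {t}: computed d = {d_from_paired_t(t, df):.2f}, reported d = {d:.2f}")
```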

Table 1. Test scores per condition for all students.

Our first principal interest was whether mean conceptual learning gains differed between learners who received different guidance for designing experiments. One-way ANOVAs showed no a priori differences between conditions regarding prior knowledge about buoyancy, F(2, 106) = 0.115, p = 0.892, or about Archimedes’ principle, F(2, 106) = 0.223, p = 0.800. Univariate analyses showed no significant differences between conditions in learning gains for buoyancy, F(2, 106) = 0.37, p = 0.693, η² = .007, or for Archimedes’ principle, F(2, 106) = 1.89, p = 0.156, η² = .034.
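The effect-size values .007 and .034 reported with these F-tests are consistent with (partial) eta squared recovered from F and its degrees of freedom. The sketch below assumes the standard identity η² = (F · df_effect) / (F · df_effect + df_error); the function name is illustrative.

```python
def eta_squared_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover (partial) eta squared from an F statistic and its degrees of freedom."""
    explained = f_value * df_effect
    return explained / (explained + df_error)


# F values and degrees of freedom copied from the text above.
print(round(eta_squared_from_f(0.37, 2, 106), 3))  # 0.007 (buoyancy)
print(round(eta_squared_from_f(1.89, 2, 106), 3))  # 0.034 (Archimedes' principle)
```

In a one-way design with a single factor, eta squared and partial eta squared coincide, so the check does not depend on which of the two was reported.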

Different prior knowledge groups

Our second principal interest was in differences between conditions for learners with distinct levels of prior knowledge regarding the targeted knowledge domain. Here we were only concerned with buoyancy and not Archimedes’ principle. For Archimedes’ principle 93% of all learners had low prior knowledge (scored below 25% correct on the pre-test). This prevented us from performing any useful analyses about that topic. Based on their pre-test scores about buoyancy, learners were classified as (1) low prior knowledge, when they scored 0%–25% on the pre-test, (2) low-intermediate prior knowledge, when they scored 26%–50% on the pre-test, (3) high-intermediate prior knowledge, when they scored 51%–75% on the pre-test, or (4) high prior knowledge learners, when they scored 76%–100% on the pre-test.
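The classification rule above can be sketched as a small function. This is an illustration, not the authors' procedure: the function name is hypothetical, the maximum of 25 points is taken from the caption of Table 2, and the handling of percentages that fall between the stated bands (for example 25.5%) is an assumption, since the text does not specify it.

```python
def prior_knowledge_group(score: float, max_score: float = 25) -> str:
    """Map a buoyancy pre-test score to one of the four prior knowledge groups.

    Bands follow the text: 0-25%, 26-50%, 51-75%, 76-100%.
    Assumption: a percentage between two bands is assigned to the lower band.
    """
    if not 0 <= score <= max_score:
        raise ValueError("score must lie between 0 and max_score")
    percent = 100 * score / max_score
    if percent <= 25:
        return "low"
    if percent <= 50:
        return "low-intermediate"
    if percent <= 75:
        return "high-intermediate"
    return "high"


print(prior_knowledge_group(6))      # low (24%)
print(prior_knowledge_group(12))     # low-intermediate (48%)
print(prior_knowledge_group(13))     # high-intermediate (52%)
print(prior_knowledge_group(22.30))  # high (89.2%)
```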

Because of the low number of learners per group, an independent-samples Kruskal–Wallis test was conducted, which showed a significant difference between conditions only for low-intermediate prior knowledge learners learning about buoyancy, H(2) = 9.14, p = .010. Follow-up Mann–Whitney analyses showed that low-intermediate prior knowledge learners in the control condition and in the Open EDT condition gained significantly more knowledge than low-intermediate prior knowledge learners in the Constrained EDT condition (control vs Constrained EDT: U = 3.5, p = .047, r = 0.60; Constrained EDT vs Open EDT: U = 0.0, p = .010, r = 0.81), and a non-significant effect that approached significance was found in favour of the Open EDT condition compared to the control condition (U = 8.0, p = .062, r = 0.52). Table 2 shows the means and SDs of the pre- and post-test scores for buoyancy for each prior knowledge group per condition, as well as the difference scores.
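To make the reported U values easier to read, the following sketch computes the Mann–Whitney U statistic by direct pair counting. The gain scores in it are hypothetical and are NOT data from the study; they only illustrate that U = 0 arises when every score in one group exceeds every score in the other, and that tied pairs contribute half-points (which is how a value such as 3.5 can occur).

```python
from typing import Sequence


def mann_whitney_u(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Return the smaller of the two U statistics for two independent samples.

    Each (a, b) pair counts 1 if a > b and 0.5 if a == b.
    """
    u_a = 0.0
    for a in group_a:
        for b in group_b:
            if a > b:
                u_a += 1.0
            elif a == b:
                u_a += 0.5
    u_b = len(group_a) * len(group_b) - u_a
    return min(u_a, u_b)


# Hypothetical gain scores for illustration only (not taken from the article).
fully_separated = mann_whitney_u([1, 2, 2, 3], [5, 6, 7, 8])
overlapping = mann_whitney_u([1, 4, 6], [2, 3, 5])
with_tie = mann_whitney_u([1, 2], [2, 3])

print(fully_separated)  # 0.0
print(overlapping)      # 4.0
print(with_tie)         # 0.5
```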

Table 2. Test scores for buoyancy (max = 25) per condition for each prior knowledge group.

The results for the other groups of learners with different levels of prior knowledge are non-significant, but they should not be ignored. Descriptive statistics regarding difference scores between the pre- and post-test, as presented in Table 2, show that the groups of learners with different levels of prior knowledge each gained most knowledge in a different condition; low prior knowledge learners gained most when they worked with the Constrained EDT (non-significant), low-intermediate prior knowledge learners when they worked with the Open EDT (significant), and high-intermediate prior knowledge learners when they were not guided by the EDT (non-significant). High prior knowledge learners did not gain knowledge, but they already had an average pre-test score for buoyancy of 22.30, and therefore had little room to gain any knowledge.

Conclusion and discussion

In the current study we investigated the effect of two versions of the EDT in terms of conceptual learning gain and compared the results to a control condition. One EDT (Constrained EDT) required learners to plan and apply CVS. The other EDT (Open EDT) was more exploratory and provided learners with the same opportunities as learners who worked with the Constrained EDT (i.e. they could design several trials at once, and they could apply CVS just as easily as in the Constrained EDT), but without requiring them to perform the mandatory steps in the Constrained EDT. The two versions of the EDT were based on an earlier version of the EDT (van Riesen et al., 2018b) that was designed according to the Scaffolding Framework of Quintana et al. (2004) along with the theoretical background that supports that framework.

Taking all learners into account, no significant differences were found between conditions regarding knowledge gains. However, as expected, when we distinguished between groups of learners based on their prior knowledge regarding buoyancy, a significant effect was found for low-intermediate learners, insofar as this group performed significantly better with the Open EDT compared to the Constrained EDT on buoyancy. Moreover, descriptive statistics for all prior knowledge groups showed promising trends that point in the direction of even more specific prior knowledge-related differences. Our results showed that each of the three conditions resulted in distinctly (albeit not significantly) higher scores for one specific group of prior knowledge learners on buoyancy; the Open EDT yielded significantly better performance for low-intermediate prior knowledge learners compared to the Constrained EDT, the Constrained EDT showed high knowledge gain for low prior knowledge learners compared to other conditions (non-significant), and the control condition had the best learning gains for high-intermediate prior knowledge learners (non-significant). These (directional) results suggest a coherence between prior knowledge and the type and level of support that is effective for designing experiments, and support the widely acknowledged consensus that prior knowledge has a prominent role in new learning (Alexander & Judy, 1988; Hmelo, Nagarajan, & Day, 2000; Tuovinen & Sweller, 1999).

Although the results are partly directional, they suggest that the match between learners’ prior knowledge and the type of support they require should be handled very delicately. The effects of the interventions within the conditions in our study on learners with distinct levels of prior knowledge may be explained by the characteristics of the conditions, and how they foster or limit the application of certain search methods that learners can apply in their experimentation processes (Klahr & Simon, 1999).

To elaborate, low prior knowledge learners on buoyancy performed best when they were guided by the Constrained EDT (non-significant). This result, even though non-significant in the reported study, is in line with results of our previous study with the EDT (van Riesen et al., 2018b), in which low prior knowledge learners who worked with a more constrained version of the EDT significantly outperformed learners who did not. In the current study the array of possible masses and volumes learners could select was wide. Combined with the interaction between the mass, volume and density of the object and the fluid in which it is placed, this can lead to many experimental trials. Learners who experiment unsystematically and fail to document their experimental trials and results might lose track of their experiments and observations and fail to draw conclusions. Learners who are not properly guided often apply weak search methods such as “generate and test” (Klahr & Simon, 1999), in which they try something and observe whether it leads to the desired outcome without pursuing a structured plan. This strategy can consume a lot of time and can be like trying to find a specific ring in a big box filled with rings and tossing the ring back every time it is not the correct one. The Constrained EDT provided learners with the clearest structure for designing experiments and required learners to plan at least three experimental trials at once, in which they also had to keep all variables constant except for the independent variable. Since low prior knowledge learners still need to figure out the effect each causal variable has on the dependent variables, a clear experimental structure requiring them to vary exactly one variable could help them gain insight into the effect of these variables on the dependent variable, allowing them to work through the learning material step by step (Quintana et al., 2004). Moreover, the Constrained EDT automatically saved all the experimental trials and allowed learners to organise the results for inspection by allowing them to sort each variable in ascending or descending order.
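The two requirements of the Constrained EDT described above (at least three planned trials, and exactly one variable varied across them) can be expressed as a simple check. The sketch below is hypothetical: it is not the EDT's actual implementation, and the variable names `mass`, `volume` and `fluid_density` as well as the trial values are illustrative assumptions.

```python
from typing import Dict, List

Trial = Dict[str, float]


def varied_variables(trials: List[Trial]) -> List[str]:
    """Names of variables that take more than one value across the trials.

    Assumes every trial specifies the same set of variables.
    """
    if not trials:
        return []
    return [
        name
        for name in trials[0]
        if len({trial[name] for trial in trials}) > 1
    ]


def meets_constrained_rules(trials: List[Trial], min_trials: int = 3) -> bool:
    """True if the design has enough trials and varies exactly one variable (CVS)."""
    return len(trials) >= min_trials and len(varied_variables(trials)) == 1


# Illustrative designs (values are made up for the example).
cvs_design = [
    {"mass": 100, "volume": 200, "fluid_density": 1.0},
    {"mass": 200, "volume": 200, "fluid_density": 1.0},
    {"mass": 300, "volume": 200, "fluid_density": 1.0},
]
confounded_design = [
    {"mass": 100, "volume": 200, "fluid_density": 1.0},
    {"mass": 200, "volume": 300, "fluid_density": 1.0},
    {"mass": 300, "volume": 400, "fluid_density": 1.0},
]

print(meets_constrained_rules(cvs_design))         # True
print(meets_constrained_rules(confounded_design))  # False
print(meets_constrained_rules(cvs_design[:2]))     # False
```

Under these assumptions, a design like `confounded_design` would be accepted by a tool that does not enforce CVS but rejected by one that does.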

Interestingly, in contrast with the low prior knowledge learners, low-intermediate prior knowledge learners performed significantly better when they worked with the Open EDT compared to the Constrained EDT. The Open EDT offered learners the same structure for experimental design as the Constrained EDT (i.e. learners could see variables they could keep constant, vary or measure, and the EDT automatically assigned identical values to control variables in different experimental trials), but it differed in that experimental trials could also be conducted when learners had not prepared at least three experimental trials, and it allowed them to vary more than one variable at a time. Learners who have no specific idea about the domain benefit from applying an exploratory approach to the domain, in which they try to find relationships between variables in a systematic way (Pedaste et al., 2015). The Open EDT allows broader exploration than the Constrained EDT because learners who work with the Open EDT can conduct single trials and are not required to apply CVS, giving them the freedom to design and conduct one trial, observe what happens, and design a new trial accordingly. As with the Constrained EDT, all completed trials for which learners have entered the results are automatically documented and learners record their observations for each trial themselves, which then provides them with the opportunity to review their observations at any point. The characteristics of the Open EDT make it very suitable for learners to apply the exploratory search method known as “hill climbing” (Klahr & Simon, 1999), in which they first design several experimental trials that do not necessarily need to be heading in the same direction, then observe what happens, and then design new trials based on the results from the trials that show greatest promise for answering the research question. Our results suggest that the Open EDT is more suitable for learners who already have at least some knowledge about the domain of investigation, but who still have a considerable amount to learn, which fits well with other literature (e.g. Lim, 2004; Pedaste et al., 2015).

Furthermore, high-intermediate prior knowledge learners in the current study gained most knowledge when they were not working with the EDT at all (non-significant). A strand of research supports the finding that more knowledgeable learners require less guidance and apply more sophisticated strategies than their peers who have little prior knowledge (e.g. Alexander & Judy, 1988; Hmelo et al., 2000; Tuovinen & Sweller, 1999). Klahr and Simon (1999) found that more knowledgeable learners often use strong methods that allow them to find solutions with little or no search, for example, by applying known formulas or physics rules. When learners are familiar with the formula for density, ρ = m / V, and when they also know the relationship between object density, fluid density, and floatability, they can simply apply those rules to know whether an object sinks, submerges, or floats in a certain fluid. They only need to conduct a few experimental trials in order to check the correctness of their prior knowledge or extend their knowledge, which they can do more easily by setting up an experimental trial directly in the lab, observing what happens, and continuing with another experimental trial until they feel confident about their answer to the research question. Guidance in the form of the EDT would therefore be unnecessary to aid their learning and might even slow down their learning compared to when no additional support is provided, which seems to have been the case in our current study.
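The "strong method" described above, applying ρ = m / V and comparing object density with fluid density, can be sketched in a few lines. The function names and example values are illustrative assumptions; in particular, reading "submerges" as the case where object and fluid densities are equal is an interpretation of the text, and the default fluid density of 1.0 g/cm³ corresponds to water.

```python
import math


def density(mass_g: float, volume_cm3: float) -> float:
    """Density rho = m / V, in g/cm^3."""
    if volume_cm3 <= 0:
        raise ValueError("volume must be positive")
    return mass_g / volume_cm3


def predict_behaviour(mass_g: float, volume_cm3: float,
                      fluid_density: float = 1.0) -> str:
    """Predict whether an object floats, submerges (stays suspended), or sinks.

    Assumption: 'submerges' is taken to mean object density equals fluid density.
    """
    rho = density(mass_g, volume_cm3)
    if math.isclose(rho, fluid_density):
        return "submerges"
    return "sinks" if rho > fluid_density else "floats"


# Illustrative objects placed in water (values made up for the example).
print(predict_behaviour(50, 100))   # floats  (rho = 0.5)
print(predict_behaviour(100, 100))  # submerges (rho = 1.0)
print(predict_behaviour(300, 100))  # sinks   (rho = 3.0)
```

A learner who already holds this rule needs only a few confirmatory trials, which is consistent with the argument that additional design guidance adds little for this group.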

Lastly, high prior knowledge learners did not gain knowledge of the topic of buoyancy, but it should be noted that they had already scored very high on the pre-test. Despite their very high pre-test scores, high prior knowledge learners who worked with the Constrained EDT even showed a negative learning effect of more than half a point. Similar findings were obtained by Kalyuga (2007), who also found that guidance can have a negative effect on high prior knowledge learners, which he referred to as the “expertise reversal effect”. Results of a recent study by Großmann and Wilde (2018) also suggest that students with higher levels of prior knowledge do not feel worried or anxious during an inquiry learning task and are able to solve the task without support. They argue that students with high levels of prior knowledge might actually be hindered by the provided support. In the present study the redundant additional support may have distracted learners and prevented them from performing the task as well as they could have with less support.

It is important to stress that we have discussed our results based on literature that we mapped to our study, with which we have attempted to explain relationships between learner and learning environment characteristics and learning gains. A limitation of our study is that we focused only on learning gains measured with a pre- and post-test. Multiple methods could have provided us with richer insights into learners’ learning processes and learning outcomes. In future studies additional forms of data collection and analysis, such as log file analyses, should be used in order to track whether or not the learners actually performed actions that could have been encouraged by a specific version of the EDT.

Another limitation is the low number of participants within the different prior knowledge groups. This clearly lowered the power of the current study and may have prevented significant results from emerging. The indications that we have seen in the current study therefore still need to be confirmed in future work. Despite this, we still found a significant effect for low-intermediate prior knowledge learners, but one might wonder how generalisable this finding is because of the low number of participants. However, in a more recent study, in which the Open EDT was also included in one of the conditions (van Riesen, Gijlers, Anjewierden, & de Jong, 2018a), we found similar results. Low-intermediate prior knowledge learners in that study also performed significantly better when they worked with the Open EDT, compared to two other versions of the EDT. Results of both studies combined indicate that the Open EDT is indeed beneficial to low-intermediate prior knowledge learners for designing and conducting experiments in inquiry learning environments.

Findings from our study suggest that prior knowledge influences the degree to which learners benefit from different types and levels of support, and that the match between effective guidance for inquiry learning in an online environment and prior knowledge is a very delicate matter that should be treated carefully. This can be achieved by designing guidance in such a way that it automatically adapts to learners’ prior knowledge levels, or by allowing manual configuration so that teachers can adjust guidance based on learners’ prior knowledge and behaviours. We studied the effectiveness of two configurations of the EDT. The configurability of the EDT allows teachers to provide learners with the level of guidance they require in order for them to learn most effectively; ideally, teachers should regularly monitor learners’ knowledge and adapt the guidance accordingly.

Future research should investigate the distinction between different levels of prior knowledge with respect to their optimal type and level of support from our EDT with a larger sample size to analyse whether the results still hold. More in-depth methods should also be used in order to get a better understanding of the processes learners go through and the rationales behind their choice of experimentation strategies.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes on contributors

Siswa A. N. van Riesen

Siswa A. N. van Riesen recently received her PhD from the Department of Instructional Technology at the University of Twente. Her research focuses on enhancing students’ conceptual knowledge and inquiry skills within online learning environments.

Hannie Gijlers

Hannie Gijlers is an assistant professor at the Department of Instructional Technology at the University of Twente. She received her PhD in Educational Sciences from the University of Twente. Her current research focusses on (collaborative) inquiry learning processes in the context of STEM education. Hannie contributed to the design of several ICT-based learning environments and published several articles on collaborative inquiry learning in international peer-reviewed journals.

Anjo A. Anjewierden

Anjo Anjewierden is a researcher and holds a bachelor’s degree in Computer Science. His main interest is in designing and developing highly interactive learning environments on science topics, and using Learning Analytics, the topic of his PhD thesis, to see how students use these learning environments.

Ton de Jong

Ton de Jong is professor of Instructional Technology. He specializes in inquiry learning (mainly in science domains) supported by technology (online labs, games, modelling environments). Currently he is coordinator of the 7th framework EU Go-Lab project, is associate for the Journal of Engineering Education, and is on the editorial board of eight other journals. He has published papers in Science on inquiry learning with computer simulations (2006) and online laboratories (2013). He is AERA fellow and was elected member of the Academia Europaea in 2014. For more info see: http://users.edte.utwente.nl/jong/Index.htm.

References

- Alexander, P. A., & Judy, J. E. (1988). The interaction of domain-specific and strategic knowledge in academic performance. Review of Educational Research, 58, 375–404. doi:https://doi.org/10.3102/00346543058004375

- Alfieri, L., Brooks, P. J., Aldrich, N. J., & Tenenbaum, H. R. (2011). Does discovery-based instruction enhance learning? Journal of Educational Psychology, 103, 1–18. doi:https://doi.org/10.1037/A0021017

- Almarshoud, A. F. (2011). The advancement in using remote laboratories in electrical engineering education: A review. European Journal of Engineering Education, 36, 425–433. doi:https://doi.org/10.1080/03043797.2011.604125

- Arnold, J. C., Kremer, K., & Mayer, J. (2014). Understanding students’ experiments: What kind of support do they need in inquiry tasks? International Journal of Science Education, 36, 2719–2749. doi:https://doi.org/10.1080/09500693.2014.930209

- Ausubel, D. P. (1968). Educational psychology: A cognitive view. New York: Holt, Rinehart & Winston.

- Bumbacher, E., Salehi, S., Wieman, C., & Blikstein, P. (2018). Tools for science inquiry learning: Tool affordances, experimentation strategies, and conceptual understanding. Journal of Science Education and Technology, 27, 215–235. doi:https://doi.org/10.1007/s10956-017-9719-8

- Carnesi, S., & DiGiorgio, K. (2009). Teaching the inquiry process to 21st century learners. Library Media Connection, 27, 32–36.

- Dalgarno, B., Kennedy, G., & Bennett, S. (2014). The impact of students’ exploration strategies on discovery learning using computer-based simulations. Educational Media International, 51, 310–329. doi:https://doi.org/10.1080/09523987.2014.977009

- de Jong, T. (2006). Computer simulations: Technological advances in inquiry learning. Science, 312, 532–533. doi:https://doi.org/10.1126/science.1127750

- de Jong, T., Linn, M. C., & Zacharia, Z. C. (2013). Physical and virtual laboratories in science and engineering education. Science, 340, 305–308. doi:https://doi.org/10.1126/science.1230579

- de Jong, T., & Njoo, M. (1992). Learning and instruction with computer simulations: Learning processes involved. In E. de Corte, M. Linn, H. Mandl, & L. Verschaffel (Eds.), Computer-based learning environments and problem solving (pp. 411–429). Berlin: Springer-Verlag.

- de Jong, T., & van Joolingen, W. R. (1998). Scientific discovery learning with computer simulations of conceptual domains. Review of Educational Research, 68, 179–201. doi:https://doi.org/10.2307/1170753

- Edelson, D. C., Gordin, D. N., & Pea, R. D. (1999). Addressing the challenges of inquiry-based learning through technology and curriculum design. Journal of the Learning Sciences, 8, 391–450. doi:https://doi.org/10.1207/s15327809jls0803&4_3

- Glaser, R., Schauble, L., Raghavan, K., & Zeitz, C. (1992). Scientific reasoning across different domains. In E. de Corte, M. Linn, H. Mandl, & L. Verschaffel (Eds.), Computer-based learning environments and problem solving (pp. 345–373). Berlin: Springer-Verlag.

- Großmann, N., & Wilde, M. (2018). Experimentation in biology lessons: Guided discovery through incremental scaffolds. International Journal of Science Education, 41, 759–781. doi:https://doi.org/10.1080/09500693.2019.1579392

- Hagemans, M. G., van der Meij, H., & de Jong, T. (2013). The effects of a concept map-based support tool on simulation-based inquiry learning. Journal of Educational Psychology, 105, 1–24. doi:https://doi.org/10.1037/a0029433

- Hailikari, T., Katajavuori, N., & Lindblom-Ylanne, S. (2008). The relevance of prior knowledge in learning and instructional design. American Journal of Pharmaceutical Education, 72, 113. doi:https://doi.org/10.5688/aj7205113

- Hardy, I., Jonen, A., Möller, K., & Stern, E. (2006). Effects of instructional support within constructivist learning environments for elementary school students’ understanding of “floating and sinking”. Journal of Educational Psychology, 98, 307–326. doi:https://doi.org/10.1037/0022-0663.98.2.307

- Hmelo, C. E., Nagarajan, A., & Day, R. S. (2000). Effects of high and low prior knowledge on construction of a joint problem space. The Journal of Experimental Education, 69, 36–56. doi:https://doi.org/10.1080/00220970009600648

- Hughes, S. W. (2005). Archimedes revisited: A faster, better, cheaper method of accurately measuring the volume of small objects. Physics Education, 40, 468–474. doi:https://doi.org/10.1088/0031-9120/40/5/008

- Kalyuga, S. (2007). Expertise reversal effect and its implications for learner-tailored instruction. Educational Psychology Review, 19, 509–539. doi:https://doi.org/10.1007/s10648-007-9054-3

- Klahr, D., & Nigam, M. (2004). The equivalence of learning paths in early science instruction: Effect of direct instruction and discovery learning. Psychological Science, 15, 661–667. doi:https://doi.org/10.1111/j.0956-7976.2004.00737.x

- Klahr, D., & Simon, H. A. (1999). Studies of scientific discovery: Complementary approaches and convergent findings. Psychological Bulletin, 125, 524–543. doi:https://doi.org/10.1037/0033-2909.125.5.524

- Lambiotte, J. G., & Dansereau, D. F. (1992). Effects of knowledge maps and prior knowledge on recall of science lecture content. The Journal of Experimental Education, 60, 189–201. doi:https://doi.org/10.1080/00220973.1992.9943875

- Lazonder, A. W. (2014). Inquiry learning. In J. M. Spector, M. D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of research on educational communications and technology (pp. 453–464). New York: Springer Science + Business Media.

- Lim, B. (2004). Challenges and issues in designing inquiry on the web. British Journal of Educational Technology, 35(5), 627–643. doi:https://doi.org/10.1111/j.0007-1013.2004.00419.x

- Loverude, M. E. (2009). A research-based interactive lecture demonstration on sinking and floating. American Journal of Physics, 77, 897–901. doi:https://doi.org/10.1119/1.3191688

- Loverude, M. E., Kautz, C. H., & Heron, P. R. L. (2003). Helping students develop an understanding of Archimedes’ principle. I. Research on student understanding. American Journal of Physics, 71, 1178–1187. doi:https://doi.org/10.1119/1.1607335

- Manlove, S., Lazonder, A. W., & de Jong, T. (2006). Regulative support for collaborative scientific inquiry learning. Journal of Computer Assisted Learning, 22, 87–98. doi:https://doi.org/10.1111/j.1365-2729.2006.00162.x

- Osborne, J., Collins, S., Ratcliffe, M., Millar, R., & Duschl, R. (2003). What “ideas-about-science” should be taught in school science? A Delphi study of the expert community. Journal of Research in Science Teaching, 40, 692–720. doi:https://doi.org/10.1002/Tea.10105

- Pedaste, M., Mäeots, M., Siiman, L. A., de Jong, T., van Riesen, S. A. N., Kamp, E. T., … Tsourlidaki, E. (2015). Phases of inquiry-based learning: Definitions and the inquiry cycle. Educational Research Review, 14, 47–61. doi:https://doi.org/10.1016/j.edurev.2015.02.003

- Quintana, C., Reiser, B. J., Davis, E. A., Krajcik, J., Fretz, E., Duncan, R. G., … Soloway, E. (2004). A scaffolding design framework for software to support science inquiry. Journal of the Learning Sciences, 13, 337–386. doi:https://doi.org/10.1207/s15327809jls1303_4

- Reiser, B. J. (2004). Scaffolding complex learning: The mechanisms of structuring and problematizing student work. Journal of the Learning Sciences, 13, 273–304. doi:https://doi.org/10.1207/s15327809jls1303_2

- Roll, I., Briseno, A., Yee, N., & Welsh, A. (2014). Not a magic bullet: The effect of scaffolding on knowledge and attitudes in online simulations. Paper presented at the international conference of the learning sciences, Boulder, CO.

- Roll, I., de Baker, R. S. J., Aleven, V., & Koedinger, K. R. (2014). On the benefits of seeking (and avoiding) help in online problem-solving environments. Journal of the Learning Sciences, 23, 537–560. doi:https://doi.org/10.1080/10508406.2014.883977

- Ruppert, J., Golan Duncan, R., & Chinn, C. A. (2017). Disentangling the role of domain-specific knowledge in student modeling. Research in Science Education. doi:https://doi.org/10.1007/s11165-017-9656-9

- Sancristobal, E., Martín, S., Gil, R., Orduña, P., Tawfik, M., Pesquera, A., … Castro, M. (2012). State of art, initiatives and new challenges for virtual and remote labs. Paper presented at the 12th IEEE international conference on advanced learning technologies, Rome.

- Schauble, L., Glaser, R., Raghavan, K., & Reiner, M. (1992). The integration of knowledge and experimentation strategies in understanding a physical system. Applied Cognitive Psychology, 6, 321–343. doi:https://doi.org/10.1002/acp.2350060405

- Schraw, G., Crippen, K. J., & Hartley, K. (2006). Promoting self-regulation in science education: Metacognition as part of a broader perspective on learning. Research in Science Education, 36, 111–139. doi:https://doi.org/10.1007/s11165-005-3917-8

- Schunk, D. H. (1996). Learning theories: An educational perspective (2nd ed.). Englewood Cliffs, NJ: Merrill.

- Simons, K. D., & Klein, J. D. (2007). The impact of scaffolding and student achievement levels in a problem-based learning environment. Instructional Science, 35, 41–72. doi:https://doi.org/10.1007/s11251-006-9002-5

- SLO Nationaal Expertisecentrum Leerplanontwikkeling. (2016). Karakteristieken en kerndoelen: Onderbouw voortgezet onderwijs [Characteristics and headline targets: Lower secondary education]. (Report number: OBVO/16-023). Retrieved from Enschede http://downloads.slo.nl/Documenten/karakteristieken-en-kerndoelen-onderbouw-vo.pdf

- Toth, E. E., Ludvico, L. R., & Morrow, B. L. (2014). Blended inquiry with hands-on and virtual laboratories: The role of perceptual features during knowledge construction. Interactive Learning Environments, 22, 614–630. doi:https://doi.org/10.1080/10494820.2012.693102

- Tuovinen, J. E., & Sweller, J. (1999). A comparison of cognitive load associated with discovery learning and worked examples. Journal of Educational Psychology, 91, 334–341. doi:https://doi.org/10.1037/0022-0663.91.2.334

- van Joolingen, W. R., & de Jong, T. (1991). Supporting hypothesis generation by learners exploring an interactive computer simulation. Instructional Science, 20, 389–404. doi:https://doi.org/10.1007/BF00116355

- van Riesen, S. A. N., Gijlers, H., Anjewierden, A. A., & de Jong, T. (2018a). The influence of prior knowledge on experiment design guidance in a science inquiry context. International Journal of Science Education. doi:https://doi.org/10.1080/09500693.2018.1477263

- van Riesen, S. A. N., Gijlers, H., Anjewierden, A. A., & de Jong, T. (2018b). Supporting learners’ experiment design. Educational Technology Research and Development, 66, 475–491. doi:https://doi.org/10.1007/s11423-017-9568-4

- Veenman, M. V. J., Elshout, J. J., & Meijer, J. (1997). The generality vs domain-specificity of metacognitive skills in novice learning across domains. Learning and Instruction, 7, 187–209. doi:https://doi.org/10.1016/S0959-4752(96)00025-4

- Veermans, K., de Jong, T., & van Joolingen, W. R. (2000). Promoting self-directed learning in simulation-based discovery learning environments through intelligent support. Interactive Learning Environments, 8, 229–255. doi:https://doi.org/10.1076/1049-4820(200012)8:3;1-D;FT229

- Veermans, K., van Joolingen, W. R., & de Jong, T. (2006). Use of heuristics to facilitate scientific discovery learning in a simulation learning environment in a physics domain. International Journal of Science Education, 28, 341–361. doi:https://doi.org/10.1080/09500690500277615

- Zacharia, Z. C., Manoli, C., Xenofontos, N., de Jong, T., Pedaste, M., van Riesen, S. A. N., … Tsourlidaki, E. (2015). Identifying potential types of guidance for supporting student inquiry when using virtual and remote labs in science: A literature review. Educational Technology Research and Development, 63, 257–302. doi:https://doi.org/10.1007/s11423-015-9370-0

- Zimmerman, B. J. (2002). Becoming a self-regulated learner: An overview. Theory Into Practice, 41, 64–70. doi:https://doi.org/10.1207/s15430421tip4102_2