ABSTRACT

Secondary school students often learn new cognitive skills by practicing with tasks that vary in difficulty, amount of support and/or content. Occasionally, they have to select these tasks themselves. Previous studies on task-selection guidance investigated either procedural guidance (specific rules for selecting tasks) or strategic guidance (general rules and explanations for task selection), but never compared them directly. Experiment 1 aimed to replicate these studies by comparing procedural guidance and strategic guidance to a no-guidance condition, in an electronic learning environment in which participants practiced eight self-selected tasks. Results showed no differences between the conditions in the tasks selected during practice or in domain-specific skill acquisition. A possible explanation is an ineffective combination of feedback and feed forward (i.e. the task-selection advice). The second experiment compared inferential guidance (which combines procedural feedback with strategic feed forward) to a no-guidance condition. Results showed that participants selected more difficult, less-supported tasks after receiving inferential guidance than after no guidance. Differences in domain-specific skill acquisition were not significant, but higher conformity to inferential guidance did significantly predict higher domain-specific skill acquisition. Hence, we conclude that inferential guidance can positively affect task selections and domain-specific skill acquisition, but only when conformity is high.

Students often learn new cognitive skills by practicing them on a variety of learning tasks. In traditional education, students usually all receive the same instruction and the same learning tasks. However, in mastery learning, instruction is adapted to the individual needs of each student (Kulik et al., Citation1990). This means that different students practice different tasks, depending on their current learning needs. When learning tasks better meet students' learning needs, these enhanced practice opportunities could improve domain-specific skill acquisition.

Hence, it is useful for secondary school students to practice with individualized learning tasks. However, they do not seem to be very skilled at selecting these tasks themselves and therefore might benefit from task-selection guidance (Bell & Kozlowski, Citation2002; Brand-Gruwel et al., Citation2014). The main aim of the current study is to investigate types of guidance that can improve the quality of practice opportunities that students derive from task selections and, therefore, domain-specific skill acquisition. The first experiment aimed to replicate the effects of two different guidance types from earlier task-selection studies (Kicken et al., Citation2009; Kostons et al., Citation2012; Taminiau, Citation2013; Taminiau et al., Citation2013). We also investigate how conformity to guidance influences the effects of guidance. The second experiment studies the effects of improved guidance on task selection and domain-specific skills.

Task selection and the task-selection process

In a learning environment with task selection, students can choose between tasks that differ on several factors, such as difficulty, available support, and content (e.g. Brusilovsky, Citation1992; Robinson, Citation2001; Van Merriënboer & Kirschner, Citation2018). Domain-specific skill acquisition might improve through enhanced practice opportunities when students choose each of these task factors as described below. Regarding difficulty, students could practice complex cognitive skills according to the simplifying-conditions method (Reigeluth, Citation1999). This method states that each task is preferably a whole task (i.e. a task in which all subskills of the complex skill are practiced at the same time). Students start practicing with the simplest version of this task, and gradually progress to more complex tasks after sufficiently mastering each simpler version. This continues until they have mastered the most complex version of the task, under the assumption that they then have mastered all possible varieties of complexity that they might encounter in real life (Reigeluth, Citation1999; Van Merriënboer & Kirschner, Citation2018).

Similarly, students could select a support level that suits their current learning needs, for instance by decreasing the amount of provided support as their expertise increases (Renkl & Atkinson, Citation2003). Students could determine this themselves by using the cognitive load they experienced (i.e. the strain on working memory when performing a task; Sweller et al., Citation1998) on a previous task (e.g. Camp et al., Citation2001). For example, if the experienced cognitive load is high, students could select a task with high support. Conversely, they could select a task with low support when the experienced load is low (Sweller et al., Citation1998). A highly supported problem-solving task could be a worked example, whereas a less supported task could be a completion problem (cf. Sweller et al., Citation1998). Thus, students could enhance the quality of their practice tasks by first practicing with worked examples, continuing with completion problems, and ending with conventional problems to eventually master the full task without support (Renkl & Atkinson, Citation2003).

Finally, students might enhance their practice opportunities when they practice the same skill with different contents, which is called variability of practice. This enhances transfer, which means that students can apply the learned skill in new contexts that are different from the instructional context (e.g. Detterman & Sternberg, Citation1993; Sweller et al., Citation1998; Van Merriënboer & Kirschner, Citation2018).

To sum up, the quality of practice opportunities may improve when learners work through tasks in a sequence from simple to complex, receive decreasing amounts of support, and work with a variety of contents. Task selections that reflect these patterns have been shown to enhance domain-specific skill acquisition (Camp et al., Citation2001; Corbalan et al., Citation2008; Van Merriënboer & Kirschner, Citation2018).

Task-selection guidance

Task selections might be enhanced when learners are aware of the task features on which they can base their selections. However, previous studies have shown that secondary school students rarely consider difficulty and support when selecting learning tasks (Kostons et al., Citation2010; Nugteren et al., Citation2018). Rather, they mainly select tasks based on their content, and hardly any selections are purposely based on all three features described above (difficulty, support, and content; Nugteren et al., Citation2018). Since students show little awareness of these selection factors, they might benefit from guidance that focuses their attention on these features (Nugteren et al., Citation2018). Two factors could influence the effects of guidance on task selections: the type of guidance and conformity to guidance.

Previous studies have mostly used either procedural or strategic task-selection guidance. Procedural task-selection guidance consists of strict task-selection rules and instructions (Brand-Gruwel et al., Citation2014; Cagiltay, Citation2006; Hannafin et al., Citation1999; Kicken et al., Citation2008). For instance, procedural guidance may indicate precisely which difficulty level and support level a new task should have, given the performance and/or experienced cognitive load on previous tasks, but without possibilities for students to attune the guidance any further to their learning needs. On the other hand, strategic task-selection guidance provides heuristic rules, which give a less specific task-selection suggestion. For instance, strategic guidance can indicate whether the new task should be from a higher or lower level of difficulty, but it leaves it to the learners to specify the exact level (Brand-Gruwel et al., Citation2014; Cagiltay, Citation2006; Hannafin et al., Citation1999; Kicken et al., Citation2008).

Only a few studies have investigated procedural task-selection guidance (Kostons et al., Citation2012; Taminiau et al., Citation2013). Their results were mixed: one study found only partial positive effects on domain-specific skill acquisition (Kostons et al., Citation2012), and another even found higher performance in the control group than in the procedural-guidance group (Taminiau et al., Citation2013). Studies on strategic guidance provided participants with generic suggestions on which tasks to select (Kicken et al., Citation2009; Taminiau, Citation2013), but the positive effects they found were small (Kicken et al., Citation2009; Taminiau, Citation2013). Furthermore, none of these studies directly compared strategic and procedural guidance.

Besides the type of guidance, another factor that might influence the success of guidance is the extent to which students conform to it. None of the studies described above (Kicken et al., Citation2009; Kostons et al., Citation2012; Taminiau, Citation2013; Taminiau et al., Citation2013) forced students to conform to the task-selection rules, so students deviated from them to varying degrees. Overall, low conformity could reduce the chances of finding significant effects of guidance on task selections and domain-specific skill acquisition, because participants who deviate from the guidance become more similar to participants in the control group. The experiments presented here therefore examine both the type of guidance and the effects of conformity.

Experiment 1

The first experiment aimed to replicate the findings from previous studies on task-selection guidance, and to directly compare procedural and strategic guidance. Our first research question is whether guidance can help secondary school students to make different task selections than no guidance (Research Question 1a) and whether these different task selections improve domain-specific skill acquisition (Research Question 1b). Furthermore, we investigate whether higher conformity to procedural and/or strategic guidance improves domain-specific skill acquisition (Research Question 2).

Method

Participants and design

Twenty-five third-year students from a secondary school in the Netherlands participated in this experiment (Mage = 14.40, SD = 0.58 years; 18 females). We obtained parental consent for all of them, and randomly assigned them to one of three conditions: Procedural guidance (n = 7), strategic guidance (n = 9), and no guidance (n = 9).

Materials

Participants completed the experiment within an electronic learning environment on computers at their own school, which was specifically designed for this experiment. It contained the following elements.

Tasks and task database. The tasks in this experiment were also used in earlier studies on task selection (e.g. Corbalan et al., Citation2008; Kostons et al., Citation2010). They are genetics problems, and all consist of a problem statement and five solution steps. See Appendix for an example of a task and these solution steps.

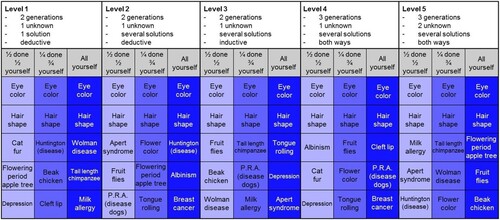

Figure 1 shows the overview diagram from which students selected the tasks. The top white row indicates the five difficulty levels, with the first level containing the easiest tasks and the final level the most difficult. Support varied with the number of worked-out steps, as indicated by the second, grey row. On the highest support level (left), the answers to the first four steps were given as worked-out steps. On the lowest support level (right), participants had to perform all five steps by themselves. Different options were available for content, such as eye color and cat fur.

Figure 1. Overview diagram with all the tasks that could be used for practice in this experiment (translated from Dutch). Participants used this diagram to select eight tasks.
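To make the structure of the diagram concrete, the task database can be sketched as a grid of difficulty, support, and content. This is a hypothetical encoding, not the software used in the experiment. It assumes that a support level equals the number of worked-out steps (4 at the highest level, 0 at the lowest) and that every count in between is a level; the text only specifies the two extremes. The two contents are the examples named above, not the full set.

```python
from dataclasses import dataclass
from itertools import product

TOTAL_STEPS = 5                      # every genetics task has five solution steps
DIFFICULTY_LEVELS = range(1, 6)      # 1 = easiest, 5 = most difficult
# Assumption: support level = number of worked-out steps (4 = most support, 0 = none).
SUPPORT_LEVELS = range(4, -1, -1)
# Only the two example contents named in the text; the real diagram offered more.
CONTENTS = ("eye color", "cat fur")


@dataclass(frozen=True)
class Task:
    difficulty: int
    support: int      # hypothetical: worked-out steps shown to the learner
    content: str

    @property
    def steps_to_perform(self) -> int:
        """Steps the learner has to carry out unaided."""
        return TOTAL_STEPS - self.support


def build_task_database() -> list[Task]:
    """One task per combination of difficulty, support, and content."""
    return [
        Task(d, s, c)
        for d, s, c in product(DIFFICULTY_LEVELS, SUPPORT_LEVELS, CONTENTS)
    ]


database = build_task_database()
print(len(database))  # 50: 5 difficulty levels x 5 support levels x 2 contents
```

Under these assumptions, a task at the highest support level leaves one step for the learner, and a task at the lowest level leaves all five.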

Prior-knowledge test. The prior-knowledge test measured the participants’ skill at solving these tasks before practice. It consisted of five unsupported tasks that required the same five solution steps as the tasks in the database but had different contents. Two tasks were at the first difficulty level, two at the second, and one at the third. The electronic learning environment scored the answers automatically. Each correctly performed step earned 1 point, giving a maximum score of 5 points per task, after which we calculated the mean score across all five tasks. Participants could not skip answers and did not receive information about the correct answers.
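The scoring rule (one point per correct step, then a mean over tasks) can be sketched as follows. The function names and the example answers are hypothetical illustrations, not data from the study.

```python
from statistics import mean

STEPS_PER_TASK = 5


def task_score(steps_correct: list[bool]) -> int:
    """One point per correctly performed solution step, so 0-5 per task."""
    if len(steps_correct) != STEPS_PER_TASK:
        raise ValueError("each task has exactly five solution steps")
    return sum(steps_correct)


def mean_test_score(tasks: list[list[bool]]) -> float:
    """Mean task score over all tasks in the test (also on a 0-5 scale)."""
    return float(mean(task_score(task) for task in tasks))


# Hypothetical answers for a five-task test: 2, 1, 0, 0, and 0 steps correct.
answers = [
    [True, True, False, False, False],
    [True, False, False, False, False],
    [False] * 5,
    [False] * 5,
    [False] * 5,
]
print(mean_test_score(answers))  # 0.6
```

Because the mean is taken over tasks, both test scores stay on the same 0 to 5 scale as a single task.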

Domain-specific skills test. Five tasks measured domain-specific skills after practice. These tasks required the same solution steps as the tasks from the database, but their contents were different. Each of the five tasks represented one of the difficulty levels. The electronic learning environment automatically scored the answers. Each correctly performed step gave 1 point, leading to a maximum score of 5 points per task, after which we calculated a mean score for all five tasks. Participants could not skip answers.

Performance estimate. Participants estimated, on a rating scale from 0 to 5, how many solution steps they had performed correctly on each task during practice. In the guidance conditions, the tutor used these estimates to give participants feedback on the accuracy of their estimates.

Mental effort rating. Participants indicated their invested mental effort after each task during practice on a subjective rating scale from 1 (very, very low mental effort) to 9 (very, very high mental effort; Paas, Citation1992). This measured their experienced cognitive load, which served as input for the task-selection advice.

Guidance. There were three types of guidance: procedural guidance, strategic guidance, and no guidance. Each type was provided by an electronic tutor, which consisted of a static image of a girl with text messages in a text balloon (see Figure 2). We based the design of the tutoring dialogue on the analyses of tutor dialogue patterns by Graesser et al. (Citation1995). The tutor in all conditions guided participants through the experiment by indicating what they had to do and when, and asked participants for performance estimates and mental effort ratings.

Figure 2. Image of the tutor from all three guidance groups, while asking for a performance estimate (translated from Dutch).

Procedural guidance. Procedural guidance provided immediate feedback on the performance estimates, and task-selection advice. The feedback consisted of a statement whether an estimate was correct or not, and if it was incorrect, how many steps were performed correctly.

The procedural task-selection advice (Table 1) used the actual performance scores for advice about difficulty and the mental effort ratings for advice about support. Participants who performed well were advised to proceed to the next difficulty level. However, the advice after low performance was to retry a difficulty level or go back to an easier level. Similarly, when participants had indicated that the previous task was highly effortful, the tutor advised them to select a task with higher support. If the previous task had cost them little effort, the tutor advised them to select a task with equal or less support. Participants did not receive advice about the selection of content. The tutor communicated the advice by repeating the most recent performance estimate and mental effort rating, showing Table 1 and the overview diagram (Figure 1), and explaining how to use the scores and the table to select a new task. The overview diagram showed which tasks participants had performed previously.

Table 1. Procedural advice given to participants for task selection (translated from Dutch).

The tutor asked participants whether they wanted to reconsider any selection that deviated from the advice, and offered them the opportunity either to return to the overview diagram to make a new selection or to proceed with the deviating task. The tutor did not provide advice for the first task, so participants received task-selection advice seven times.
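The procedural rules described above can be sketched as a function that maps the last task and its scores to one exact next task. The cut-offs below are hypothetical placeholders: the actual thresholds were listed in Table 1, which is not reproduced here. Support is again encoded as the assumed number of worked-out steps (0 to 4).

```python
def clamp(value: int, low: int, high: int) -> int:
    """Keep a level inside the range offered by the overview diagram."""
    return max(low, min(high, value))


def procedural_advice(difficulty: int, support: int,
                      performance: int, effort: int) -> tuple[int, int]:
    """Return one exact (difficulty, support) target for the next task.

    performance: actual number of correct steps on the last task (0-5).
    effort: mental effort rating on the last task (1-9).
    All thresholds are hypothetical stand-ins for Table 1.
    """
    # Difficulty follows performance.
    if performance >= 4:        # performed well: next difficulty level
        difficulty_change = 1
    elif performance == 3:      # moderate: retry the same level
        difficulty_change = 0
    else:                       # low: go back to an easier level
        difficulty_change = -1

    # Support follows mental effort (support = worked-out steps).
    if effort >= 6:             # highly effortful: more support
        support_change = 1
    elif effort >= 4:           # moderate effort: equal support
        support_change = 0
    else:                       # little effort: less support
        support_change = -1

    return (clamp(difficulty + difficulty_change, 1, 5),
            clamp(support + support_change, 0, 4))


print(procedural_advice(difficulty=2, support=2, performance=5, effort=2))  # (3, 1)
```

Because the function returns a single cell of the diagram, a learner can follow it without further deliberation, which is the property the Discussion of Experiment 1 associates with non-activating feed forward.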

Strategic guidance. Strategic guidance provided feedback and task-selection advice in a similar way to the procedural tutor, except that it gave more general directions for task selection. The feedback on an incorrect performance estimate only indicated whether participants had performed more or fewer steps correctly than they had estimated.
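The difference between the two feedback types can be sketched as two message functions. The wording of the messages is hypothetical (the tutor's actual Dutch texts are not reproduced); what matters is that procedural feedback reveals the exact number of correct steps, whereas strategic feedback reveals only the direction of the error.

```python
def procedural_feedback(estimate: int, actual: int) -> str:
    """States whether the estimate was correct and, if not, the exact count."""
    if estimate == actual:
        return "Your estimate was correct."
    return f"Your estimate was incorrect: you performed {actual} steps correctly."


def strategic_feedback(estimate: int, actual: int) -> str:
    """States only whether more or fewer steps were correct than estimated."""
    if estimate == actual:
        return "Your estimate was correct."
    direction = "more" if actual > estimate else "fewer"
    return (f"Your estimate was incorrect: you performed {direction} "
            "steps correctly than you estimated.")


print(procedural_feedback(estimate=4, actual=2))
print(strategic_feedback(estimate=4, actual=2))
```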

The strategic task-selection advice had the same structure as the procedural advice. The strategic advice also did not give advice about the selection of content, allowed participants to deviate from the advice, and did not give advice for the first selection. The difference was that the strategic advice did not indicate by how many levels participants had to change the difficulty and support level of the next task, but instead provided a general direction (i.e. go to a higher or lower level) which left room to adapt it to personal needs.
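A sketch of the strategic advice, using the same hypothetical thresholds as the procedural sketch: instead of a single target cell, the advice is a direction, and any level in that direction counts as following it. The labels "higher" and "same or lower" are our own simplification, modeled on the procedural rules (next level after good performance; retry or go back after low performance; equal or less support after low effort).

```python
HIGHER = "higher"
SAME_OR_LOWER = "same or lower"


def strategic_advice(performance: int, effort: int) -> tuple[str, str]:
    """Return only a direction for difficulty and for support.

    Thresholds are hypothetical; support = assumed number of worked-out steps.
    """
    difficulty_direction = HIGHER if performance >= 4 else SAME_OR_LOWER
    support_direction = HIGHER if effort >= 6 else SAME_OR_LOWER
    return difficulty_direction, support_direction


def levels_following(direction: str, current: int, low: int, high: int) -> list[int]:
    """All levels a learner may choose while still following the advice."""
    if direction == HIGHER:
        # At the top of the scale, staying put is the only option left.
        return list(range(min(current + 1, high), high + 1))
    return list(range(low, current + 1))


difficulty_dir, support_dir = strategic_advice(performance=5, effort=2)
print(levels_following(difficulty_dir, current=2, low=1, high=5))  # [3, 4, 5]
print(levels_following(support_dir, current=2, low=0, high=4))     # [0, 1, 2]
```

The returned lists make the "room to adapt" explicit: the learner still has to pick one level from them.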

Control guidance. The control guidance provided neither feedback on the performance estimates nor task-selection advice. Instead, it simply asked students which task they wanted to select next.

Training videos. Participants watched four videos of students correctly solving the five steps of the genetics tasks. These videos acquainted participants with the tasks, which could help them during the solution process, and when making their first selection during practice.

Procedure

After performing the prior-knowledge test, participants watched the training videos. Next, they practiced eight tasks from the database. Finally, participants performed the domain-specific skills test. There was no time limit; mean time spent on the experiment was 43 min and 33 s (SD = 9 min and 57 s).

Data analyses

We analyzed the selected difficulty and support levels separately for the first four and the final four selected tasks. Thus, we calculated four mean selected levels per participant: (1) difficulty level tasks 1–4, (2) difficulty level tasks 5–8, (3) support level tasks 1–4, and (4) support level tasks 5–8. We measured conformity by counting the number of selections that followed the advice. We used nonparametric tests for all comparisons because of the small sample sizes. For the same reason, the results of the regression analysis should be interpreted with caution, as they may not generalize to other samples.
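The split into first and final four tasks, followed by group medians over participants' means, can be sketched as below. The selections are hypothetical values for illustration only.

```python
from statistics import mean, median


def split_means(levels: list[int]) -> tuple[float, float]:
    """Mean selected level on tasks 1-4 and on tasks 5-8 for one participant."""
    if len(levels) != 8:
        raise ValueError("expected eight selected tasks")
    return float(mean(levels[:4])), float(mean(levels[4:]))


def conformity(followed_advice: list[bool]) -> int:
    """Number of selections that followed the advice (advice starts at task 2)."""
    return sum(followed_advice)


# Hypothetical difficulty selections for three participants in one condition.
participants = [
    [1, 2, 2, 3, 3, 3, 4, 4],
    [1, 1, 2, 2, 2, 3, 3, 3],
    [2, 2, 3, 3, 3, 4, 4, 5],
]
first_half = [split_means(p)[0] for p in participants]
second_half = [split_means(p)[1] for p in participants]
print(median(first_half), median(second_half))  # 2.0 3.5
```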

Results

Table 2 shows the median scores from Experiment 1. One participant in the strategic-guidance condition was missing the answer to the final question on the domain-specific skills test, and one participant in the no-guidance condition was missing the final two answers. Therefore, these two participants were excluded from all analyses involving the domain-specific skills test scores.

Research question 1a: Does guidance help secondary school students to make different task selections than no guidance?

Table 2. Medians from Experiment 1.

We first analyzed the differences in selected difficulty and support levels between the conditions with a Kruskal–Wallis test, which revealed no significant differences in the selected difficulty levels between the three types of guidance on tasks 1–4 (Mdn_procedural = 2.25, Mdn_strategic = 2.00, Mdn_control = 2.00), H(2) = 2.82, p = .244, and no significant differences on tasks 5–8 (Mdn_procedural = 3.25, Mdn_strategic = 3.25, Mdn_control = 2.50), H(2) = 3.42, p = .181. There were also no significant differences in the selected support levels between the three conditions on tasks 1–4 (Mdn_procedural = 1.25, Mdn_strategic = 1.00, Mdn_control = 1.00), H(2) = 0.30, p = .862, and no significant differences on tasks 5–8 (Mdn_procedural = 1.50, Mdn_strategic = 0.75, Mdn_control = 1.00), H(2) = 1.49, p = .475. Thus, the selected difficulty and support levels did not differ significantly between the three conditions on either the first four or the final four tasks.

Next, we compared the selected levels between the first four and final four tasks within each condition. For procedural guidance, a Wilcoxon signed-rank test showed a significant increase in the selected difficulty levels between tasks 1–4 (Mdn = 2.25) and tasks 5–8 (Mdn = 3.25), z = −2.23, p = .026, r = −0.60. There was no significant difference in the selected support levels between tasks 1–4 (Mdn = 1.25) and 5–8 (Mdn = 1.50), z = −1.51, p = .131. For strategic guidance, there was a marginally significant increase in the selected difficulty levels between tasks 1–4 (Mdn = 2.00) and tasks 5–8 (Mdn = 3.25), z = −1.84, p = .065, r = −0.43. There was no significant difference in the selected support levels between tasks 1–4 (Mdn = 1.00) and 5–8 (Mdn = 0.75), z = −0.65, p = .518. For the no-guidance condition, there was a significant increase in the selected difficulty levels between tasks 1–4 (Mdn = 2.00) and tasks 5–8 (Mdn = 2.50), z = −2.20, p = .028, r = −0.52. There was no significant difference in the selected support levels between tasks 1–4 (Mdn = 1.00) and 5–8 (Mdn = 1.00), z = −0.61, p = .539. Thus, in all conditions, participants selected roughly the same support levels on the first four and final four tasks. However, participants in all conditions tended to select more difficult tasks during the final four tasks than during the first four tasks (significantly so with procedural guidance and no guidance, and marginally so with strategic guidance).
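The reported effect sizes appear consistent with the common convention r = z / √N. The sketch below assumes, since the text does not state it, that N counts both measurements per participant (2n, one per half of practice). It reproduces the three reported r values for the difficulty comparisons.

```python
from math import sqrt


def effect_size_r(z: float, n_observations: int) -> float:
    """Effect size r = z / sqrt(N)."""
    return z / sqrt(n_observations)


# (z, group size n, reported r) for the difficulty comparisons in Experiment 1.
reported = {
    "procedural": (-2.23, 7, -0.60),
    "strategic": (-1.84, 9, -0.43),
    "no guidance": (-2.20, 9, -0.52),
}
for condition, (z, n, r_reported) in reported.items():
    # Assumption: N counts both halves of practice per participant, i.e. 2n.
    r = effect_size_r(z, 2 * n)
    print(f"{condition}: r = {r:.2f} (reported {r_reported:.2f})")
```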

Research question 1b: Do different task selections improve domain-specific skill acquisition?

Before comparing the differences in domain-specific skill acquisition, we first checked for prior-knowledge differences. A Kruskal–Wallis test showed that the prior-knowledge test scores were not significantly different between the three groups (Mdn_procedural = 0.60, Mdn_strategic = 0.20, Mdn_control = 0.40), H(2) = 1.02, p = .600. Next, we compared the domain-specific skills test scores, which were also not significantly different between conditions (Mdn_procedural = 0.80, Mdn_strategic = 3.20, Mdn_control = 2.50), H(2) = 0.83, p = .661. So, there were no significant differences in domain-specific skill acquisition between the conditions.

Research question 2: Does higher conformity to procedural and/or strategic guidance improve domain-specific skill acquisition?

We investigated whether higher conformity to procedural and strategic guidance improves domain-specific skills. Participants conformed a median of 5.00 times (out of the 7.00 times they received advice) in the procedural-guidance condition, and a median of 4.00 times in the strategic-guidance condition. Advice conformity, pooled across both guidance conditions, was not a significant predictor of domain-specific skills test scores, b = 0.30, t(13) = 1.47, p = .165. Thus, participants who conformed more to the guidance did not perform better on the domain-specific skills test than participants who conformed less.

Discussion

Our results for Research Question 1a suggest that procedural and strategic guidance did not lead participants to make different selections from those made in the control group. This is congruent with earlier studies showing no or only small effects of procedural or strategic guidance (Kicken et al., Citation2009; Kostons et al., Citation2012; Taminiau, Citation2013; Taminiau et al., Citation2013). However, our results did show an increase in selected difficulty levels in all three conditions.

Because the different types of guidance did not lead participants to select different tasks, it is not surprising that the results for Research Question 1b (whether guidance indirectly improves domain-specific skill acquisition) and Research Question 2 (whether higher conformity improves domain-specific skill acquisition) were also not significant.

We identified three issues that might have caused the lack of effect from the procedural and strategic guidance. First, this experiment was underpowered due to its low sample size. This means the results should be interpreted with caution. However, as mentioned above, they are congruent with other studies in which these guidance types were investigated separately (Kicken et al., Citation2009; Kostons et al., Citation2012; Taminiau, Citation2013; Taminiau et al., Citation2013).

Second, the no-guidance condition also asked for performance estimates and mental effort ratings, which might have prompted participants in this condition to consider these factors when selecting new tasks, just as participants in the experimental conditions did. This might have made the control group more similar to the experimental groups than intended.

Third, the procedural and strategic-guidance conditions seemed to lack a good alignment of feedback and task-selection advice (i.e. feed forward). Feedback on task performance provides information about how well a learner has performed and what still needs to be done to attain the learning goal. Feed forward, such as task-selection advice, provides information about which steps can be taken next to enhance the learning process (Hattie & Timperley, Citation2007). Effective feedback and feed forward need to be simple, and they need to activate students to take the necessary steps to reach the learning goal (Boud & Molloy, Citation2013; Hattie & Timperley, Citation2007). Based on this, we would expect the procedural feedback to be more effective in this experiment than the strategic feedback, because the procedural feedback provided exact information on which parts of the tasks required further learning. The strategic feedback was more complex, as participants had to infer which exact parts required further learning. Conversely, we would expect the strategic feed forward to be more effective than the procedural feed forward, because it was more activating: it required participants to decide consciously which exact level they would select. In contrast, participants could follow the procedural feed forward without further thought.

In sum, in the procedural guidance, feedback was simple but feed forward was not activating. In the strategic guidance, feed forward was activating but feedback was more complex. Hence, we would expect a combination of procedural feedback and strategic feed forward to be more effective, because it would align simple and activating components better than only procedural feedback and feed forward, or only strategic feedback and feed forward. Thus, the aim of Experiment 2 was to investigate a new type of guidance that combined simple feedback with activating feed forward. We called this inferential guidance.

Experiment 2

The main goal of the second experiment was to investigate whether inferential guidance can positively affect the task-selection process and thereby improve domain-specific skill acquisition. We retested the research questions from Experiment 1 with modified guidance conditions. First, we aimed to create a control condition that would not direct participants' attention to essential factors for task selection, by removing the performance estimates and mental effort ratings from the original control condition. Second, we combined the procedural feedback with the strategic feed forward in a new guidance condition. This inferential guidance indicated exactly how many steps a participant had performed correctly after a performance estimate, and used this as input for nonspecific task-selection advice. The first research question in Experiment 2 is whether inferential guidance can help secondary school students make different task selections than no guidance (Research Question 1a), and whether these different task selections improve domain-specific skill acquisition (Research Question 1b). The second research question is whether higher conformity to inferential task-selection guidance improves domain-specific skill acquisition (Research Question 2).

Method

Participants and design

We obtained informed and parental consent for 40 participants (Mage = 14.18, SD = 0.50 years, 27 females) and randomly assigned them to one of two conditions: 23 participants in the inferential-guidance condition and 17 participants in the no-guidance condition.

Materials

The tasks, task database, performance estimates, mental effort ratings and the domain-specific skills test were the same as in Experiment 1. The differences between the materials from Experiments 1 and 2 are explained below.

Guidance. Inferential guidance consisted of a combination of the procedural feedback and the strategic feed forward from Experiment 1. Thus, inferential guidance gave an exact specification of how many steps a participant had performed correctly, exactly like the procedural tutor did. Furthermore, it provided strategic task-selection advice by indicating whether a student should go to higher or lower difficulty and support levels, based on their performance scores and mental effort ratings from the previous task. The no-guidance tutor provided instructions for each part of the experiment. It did not ask for performance estimates and mental effort ratings, and did not give task-selection advice.
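A minimal sketch of the inferential combination: exact feedback as in the procedural tutor, followed by direction-only advice as in the strategic tutor. The message wording, the thresholds, and the direction labels are hypothetical, as in the sketches for Experiment 1.

```python
def inferential_guidance(estimate: int, actual: int, effort: int) -> dict[str, str]:
    """Exact (procedural) feedback plus direction-only (strategic) feed forward.

    actual: correct steps on the last task (0-5); effort: rating on the 1-9 scale.
    Thresholds are hypothetical placeholders.
    """
    # Procedural feedback: state the exact number of correct steps.
    if estimate == actual:
        feedback = "Your estimate was correct."
    else:
        feedback = f"Your estimate was incorrect: you performed {actual} steps correctly."

    # Strategic feed forward: a direction only, based on actual performance and effort.
    difficulty = "higher" if actual >= 4 else "same or lower"
    support = "higher" if effort >= 6 else "same or lower"
    return {"feedback": feedback, "difficulty": difficulty, "support": support}


print(inferential_guidance(estimate=4, actual=2, effort=7))
```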

Training videos. Two videos demonstrated the correct solution procedure for the tasks. We showed two videos instead of four, because participants in Experiment 1 expressed frustration about the total length of the experiment and the repetition of information in the videos.

Prior-knowledge test. The prior-knowledge test consisted of the three easiest tasks from the prior-knowledge test in Experiment 1. We shortened the test from five to three tasks and allowed participants to leave answers blank, because participants in Experiment 1 expressed frustration about having to solve tasks without first receiving instructions about the correct solution procedure. These changes made the prior-knowledge test less similar to the domain-specific skills test than it was in Experiment 1, but also less strenuous.

Procedure

The experiment consisted of two sessions, with a maximum of 1 hour per session (i.e. each session lasted one regular school lesson). The first session started with the prior-knowledge test, after which participants watched the videos. Next, participants selected and worked on three tasks from the database. The second session took place during their next lesson (on a different day), in which participants from both conditions selected and worked on another five tasks. Finally, participants performed the domain-specific skills test.

Data analyses

Analyses were performed in the same way as in Experiment 1.

Results

Table 3 shows the median scores from Experiment 2. One participant in the inferential-guidance condition was missing the final two answers on the domain-specific skills test and was therefore excluded from all analyses involving the domain-specific skills test scores.

Research question 1a: Does inferential guidance help secondary school students to make different task selections than no guidance?

Table 3. Medians from Experiment 2.

We first compared differences in selected difficulty and support levels between the two conditions on tasks 1–4 and tasks 5–8. A Mann–Whitney U test revealed that participants in the inferential-guidance condition selected more difficult tasks than those in the no-guidance condition on both tasks 1–4 (Mdn_inferential = 2.50, Mdn_control = 1.75), U = 112.00, z = −2.30, p = .021, r = −0.36, and tasks 5–8 (Mdn_inferential = 3.50, Mdn_control = 1.75), U = 91.50, z = −2.86, p = .004, r = −0.45. However, there was no significant difference in selected support levels between the two conditions on tasks 1–4 (Mdn_inferential = 1.25, Mdn_control = 1.25), U = 168.00, z = −0.76, p = .448. On tasks 5–8, participants in the inferential-guidance condition selected tasks with significantly less support (Mdn = 0.75) than those in the no-guidance condition (Mdn = 1.50), U = 84.00, z = −3.08, p = .002, r = −0.49. So, participants in the inferential-guidance condition selected more difficult tasks than those in the no-guidance condition during both tasks 1–4 and tasks 5–8, and they selected tasks with less support during tasks 5–8.
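As a rough consistency check, the large-sample normal approximation for U can be computed from the group sizes (23 and 17). This sketch omits the correction for ties, which the analysis software may have applied; a tie correction shrinks the standard deviation and would make the values slightly larger in magnitude, which is the direction of the small gaps here. We cannot confirm which correction was used.

```python
from math import sqrt


def mann_whitney_z(u: float, n1: int, n2: int) -> float:
    """Large-sample normal approximation for U, without a correction for ties."""
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


# (U, reported z) from the comparisons above; 23 inferential vs 17 control participants.
reported = [(112.00, -2.30), (91.50, -2.86), (168.00, -0.76), (84.00, -3.08)]
for u, z_reported in reported:
    print(f"U = {u:.2f}: z = {mann_whitney_z(u, 23, 17):.2f} (reported {z_reported:.2f})")
```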

Furthermore, within each guidance condition, Wilcoxon signed-rank tests revealed that participants in the inferential-guidance condition selected more difficult tasks on tasks 5–8 (Mdn = 3.50) than on tasks 1–4 (Mdn = 2.50), z = −3.88, p < .001, r = −0.57. They also selected tasks with less support on tasks 5–8 (Mdn = 0.75) than on tasks 1–4 (Mdn = 1.25), z = −2.69, p = .007, r = −0.40. In the no-guidance condition, there were no significant differences between tasks 1–4 and tasks 5–8 in either the selected difficulty levels (tasks 1–4 Mdn = 1.75, tasks 5–8 Mdn = 1.75), z = −1.30, p = .193, or the selected support levels (tasks 1–4 Mdn = 1.25, tasks 5–8 Mdn = 1.50), z = −0.63, p = .528. Thus, participants in the no-guidance condition tended to select relatively easy, highly supported tasks throughout, whereas participants in the inferential-guidance condition selected more difficult, less-supported tasks on tasks 5–8 than on tasks 1–4.
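The within-group effect sizes appear to follow the convention of dividing z by the square root of the total number of observations, which for a paired design is twice the number of participants. The sketch below assumes 23 participants in the inferential-guidance condition (a figure not stated in this section) and also converts z to a two-tailed p-value under the standard normal distribution. Both are illustrative assumptions, not the authors' documented procedure.

```python
import math


def two_tailed_p(z: float) -> float:
    """Two-tailed p-value for a standard-normal z statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def wilcoxon_r(z: float, n_pairs: int) -> float:
    """Effect size r = z / sqrt(N), counting both measurements of each pair (N = 2n)."""
    return z / math.sqrt(2 * n_pairs)


N_PAIRS = 23  # assumed size of the inferential-guidance group
for label, z in [("difficulty", -3.88), ("support", -2.69)]:
    print(f"{label}: p = {two_tailed_p(z):.4f}, r = {wilcoxon_r(z, N_PAIRS):.2f}")
```

Under these assumptions the sketch yields r of about −0.57 and −0.40 and p of about .0001 and .007, in line with the reported values. Dividing by √23 instead of √46 would give much larger effect sizes, which is why the choice of N matters when comparing r across studies.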

Research question 1b: Do these different task selections improve domain-specific skill acquisition?

A Mann–Whitney U test revealed no significant difference in prior-knowledge test scores between the inferential-guidance (Mdn = 0.33) and no-guidance conditions (Mdn = 0.33), U = 151.50, z = −1.25, p = .211. The difference in domain-specific skills test scores between the inferential-guidance (Mdn = 1.30) and no-guidance conditions (Mdn = 2.20) was also not significant, U = 181.50, z = −0.16, p = .876.

Research question 2: Does higher conformity to inferential guidance improve domain-specific skill acquisition?

Participants in the inferential-guidance condition conformed to the advice a median of 4.00 times. A regression analysis showed that advice conformity significantly predicted domain-specific skills test scores, b = 0.24, t(20) = 3.09, p = .006, R² = .32: participants who conformed more often to the advice scored higher on the domain-specific skills test.
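For a regression with a single predictor, R² follows directly from the t statistic of the slope, R² = t²/(t² + df), and the standard error of the slope is b/t. Assuming that advice conformity was the only predictor (which the text implies but does not state explicitly), the reported t(20) = 3.09 reproduces R² = .32, and df = 20 would correspond to 22 participants. The function names below are illustrative.

```python
def r_squared_from_t(t: float, df: int) -> float:
    """R^2 of a one-predictor regression, recovered from the slope's t and residual df."""
    return t ** 2 / (t ** 2 + df)


def slope_standard_error(b: float, t: float) -> float:
    """Standard error of the slope, since t = b / SE(b)."""
    return b / t


b, t, df = 0.24, 3.09, 20  # values reported for Research Question 2
print(f"R^2 = {r_squared_from_t(t, df):.2f}")
print(f"SE(b) = {slope_standard_error(b, t):.3f}")
print(f"participants = {df + 2}")  # residual df = n - 2 for one predictor
```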

Discussion

Results for Research Question 1a suggest that task selections differed between the inferential-guidance and no-guidance conditions. Participants in the inferential-guidance condition selected more difficult tasks throughout the experiment and, during the second half, practiced with less-supported tasks than participants in the no-guidance condition. Moreover, they progressed through the levels in the way advocated by the simplifying-conditions method (Reigeluth, 1999) and cognitive load theory (Sweller et al., 1998), whereas participants in the no-guidance condition did not. Nevertheless, these differences cannot simply be ascribed to the effects of guidance, because there was also a fair amount of nonconformity to the advice. However, results for Research Question 2 suggest that higher conformity to inferential guidance predicts better domain-specific skill acquisition.

Results for Research Question 1b showed no significant difference between the inferential-guidance and no-guidance conditions in the domain-specific skills acquired by the end of the experiment. Possibly, the effect of inferential guidance on task selection was too small to produce an indirect effect on domain-specific skill acquisition, which in turn might be due to the relatively low number of practice tasks and the amount of nonconformity. The cognitive strategy prompted by the inferential guidance might benefit learning outcomes only once it is automated, which is more likely to occur after more practice tasks with high conformity to the guidance.

General discussion

The current study aimed to investigate the effectiveness of guidance in improving task selection and domain-specific skills. Results from the two experiments suggest that inferential guidance, compared with no guidance, helped participants make more appropriate task selections by stimulating them to select tasks from easy to difficult and from high to low support. Inferential guidance also seemed to improve domain-specific skill acquisition through these improved task selections, but only when participants conformed to the advice. This positive effect could have been caused by the combination of simple feedback with activating feed forward.

When looking at the alignment of feedback and feed forward in previous studies on task-selection guidance, it seems that studies on procedural guidance (Kostons et al., 2012; Taminiau et al., 2013) did not provide any feedback on task performance at all. Furthermore, their feed forward does not seem to have been activating, because participants received an exact step size (Kostons et al., 2012) or specified levels (Taminiau et al., 2013) for each task selection. The studies on strategic guidance (Kicken et al., 2009; Taminiau, 2013) did provide both feedback and feed forward, and their feed forward seems to have been more activating in the experimental conditions than in the control conditions. The feedback in Taminiau (2013) was complex, whereas it is unclear whether the feedback in Kicken et al. (2009) was simple or complex.

The strategic (complex) feedback in Experiment 1 could have caused a discrepancy between the steps students thought they had performed correctly and the steps that actually were correct. The procedural (simple) feedback, in contrast, indicated precisely which steps students had performed correctly, which makes such a discrepancy unlikely. Thus, students could probably use the procedural feedback more easily to improve their task performance.

Our results show that participants did not always conform to the guidance, which is in line with previous studies (Kicken et al., 2009; Taminiau et al., 2013, 2015). However, inferential guidance still had a positive effect despite this. This raises the question of whether a certain degree of learner control might even be a useful addition to task-selection guidance. Future studies could address this by comparing groups of students who receive task-selection guidance with varying degrees of learner control. Relatedly, it would be interesting to investigate whether other factors, such as motivation, influence conformity.

In addition, asking questions may itself be an important prompt, given that the no-guidance condition performed unexpectedly well in the first experiment. The questions might have stimulated participants to reflect on their performance and invested effort, and to use this as input for task selection, without explicitly being instructed to do so. This surprising effectiveness of questions as prompts is encouraging for educational practice, because it suggests that merely prompting students to consider their previous performance and invested mental effort can already improve task selections. Such prompts consume less time and fewer resources than prompts from a teacher or full task-selection advice. More research is needed to verify this effect.

The results from Experiment 2 suggested that higher conformity to the guidance predicts higher domain-specific skills test scores. However, this was not the case in Experiment 1. Furthermore, the effect of conformity also depends on the guidance itself: higher conformity benefits students only if the guidance is well designed. Even though the design guidelines used in this study are well established (e.g. Van Merriënboer & Kirschner, 2018), there is also evidence that their effectiveness depends on how they are operationalized and on the circumstances under which they are applied. For instance, the “simple-to-complex” guideline advocated here can be operationalized with the simplifying-conditions method (Reigeluth, 1999), as was done in this study. This method prescribes that students start with the simplest version of the whole task and end with the most complex version of the whole task. However, the same guideline can also be operationalized through emphasis manipulation (Gopher et al., 1989), which prescribes that students start by working on one task aspect within the whole-task context and end by working on all aspects of the whole task simultaneously. The same holds for the scaffolding guideline: support can be faded in a forward or backward manner, with varying effects (Renkl & Atkinson, 2003). Furthermore, the operationalization of the design guidelines could interact differently with student characteristics, such as prior knowledge, or with contextual characteristics, such as time pressure. Future research is therefore needed to investigate under which circumstances these instructional methods can enhance practice opportunities; the effects of conformity to guidance likely depend on these circumstances as well.
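Renkl and Atkinson (2003) describe fading as the gradual removal of worked-out solution steps: forward fading removes the first step first, whereas backward fading removes the last step first. The sketch below only illustrates the two orders for a hypothetical task with a fixed number of solution steps. The function name and the representation (True for a worked-out step) are assumptions and do not describe how support was implemented in the tasks of this study.

```python
from typing import List


def fading_sequence(n_steps: int, direction: str) -> List[List[bool]]:
    """Return one problem per fading stage; True marks a worked-out (supported) step.

    Stage 0 is a fully worked example; each later stage leaves one more step
    to the learner, until the last stage is a conventional problem.
    direction="forward" removes support from the first step onward,
    direction="backward" removes support from the last step backward.
    """
    if direction not in ("forward", "backward"):
        raise ValueError("direction must be 'forward' or 'backward'")
    stages = []
    for faded in range(n_steps + 1):
        worked = [True] * n_steps
        for i in range(faded):
            idx = i if direction == "forward" else n_steps - 1 - i
            worked[idx] = False
        stages.append(worked)
    return stages


for direction in ("forward", "backward"):
    print(direction)
    for stage in fading_sequence(3, direction):
        print("  " + " ".join("W" if w else "_" for w in stage))
```

For three steps, the forward order prints W W W, _ W W, _ _ W, _ _ _ and the backward order W W W, W W _, W _ _, _ _ _, where W marks a worked-out step. Both orders start and end at the same point and differ only in which steps the learner takes over first.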

In both experiments, there was no significant difference between the conditions on the domain-specific skills test. One possible explanation is the relatively small number of practice tasks, which is also an issue in other task-selection experiments (Taminiau, 2013; Taminiau et al., 2013). Furthermore, the limited effects on domain-specific skill acquisition in this study are consistent with other studies on strategic guidance (Kicken et al., 2009; Taminiau, 2013). Future studies could include more practice tasks and transfer tests; more practice tasks would have the additional benefit of giving the advice more time to take effect.

There were several limitations in this study. First, Experiment 1 lacked power because of its small sample size, so its results should be interpreted with caution. Nevertheless, it provides a direct comparison between procedural and strategic guidance, which are usually tested in separate studies. Its results follow a similar pattern to those of Experiment 2 and support the assumptions behind inferential guidance about combining feedback and feed forward. However, more research with larger samples is needed to further examine these effects. Second, it is unknown why students deviated from the advice. Possibly, these students critically considered the advice but decided that another task would better fit their needs. If so, whether they benefit from guidance depends on the factors on which they base their decisions. Future research could explore this by asking students why they did or did not conform to the guidance. Third, as mentioned above, eight tasks may have been too few to master both the domain-specific skill and the task-selection skill. With more time available, students could have practiced with more tasks over more sessions. Fourth, we did not directly measure task-selection skills. Future research could examine the task-selection patterns of the different groups in more detail, for instance by investigating how adaptive the selections in each guidance group were. If guidance is effective in improving task-selection skills, students in a guidance condition might adapt their selections more to their performance than students in a control condition.

In conclusion, the current study aimed to design a type of guidance that could change task selections and, through these enhanced practice tasks, indirectly improve domain-specific skill acquisition. Results indicate that task-selection guidance might affect the task-selection process if simple feedback is combined with activating feed forward. The effectiveness of the resulting selections is theoretically supported by the simplifying-conditions method (Reigeluth, 1999) and cognitive load theory (Sweller et al., 1998). The effects of merely providing self-assessment prompts remained unclear in this study and could be investigated further in future research. Taken together, the results of the two experiments suggest that the alignment of feedback and feed forward might be important when designing task-selection guidance, as procedural and strategic guidance might have different effects when they are implemented as feedback or feed forward.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Michelle L. Nugteren

Michelle L. Nugteren received a master’s degree in Educational Psychology from Erasmus University Rotterdam in 2012, where she was also enrolled in the Advanced Research Program. In 2013, she was hired as a PhD student at the Open University of the Netherlands on the project ‘Scaffolding self-regulation through peer-tutor guidance: Effects on the acquisition of domain-specific skills and self-regulated learning skills’. This project focuses on the effects of tutor support on task selection and domain-specific skill acquisition. Her other research interests include eye tracking and the four-component instructional design model.

Halszka Jarodzka

Dr. Halszka Jarodzka works as an associate professor at the Welten Institute, Research Centre for Learning, Teaching and Technology at the Open University of the Netherlands, where she chairs a research group on eye tracking in education (https://www.ou.nl/welten-learning-and-expertise-development). She also works part-time as a visiting scholar at the eye tracking laboratory of Lund University in Sweden (http://projekt.ht.lu.se/en/digital-classroom/). Furthermore, she is the founder and coordinator of the Special Interest Group ‘online measures of learning processes’ of the European Association of Research on Learning and Instruction (https://www.earli.org/node/50). Her main research interests lie in the use of eye tracking to understand and improve learning, instruction, and expertise development.

Liesbeth Kester

Liesbeth Kester is a full professor of Educational Sciences at Utrecht University (UU). She chairs the UU division of Education and is director of the academic master’s program in Educational Sciences at UU. She is also educational director of the Dutch Interuniversity Centre for Educational Research (ICO). Her expertise includes multimedia learning, hypermedia learning, personalized learning, and cognitive aspects of learning (for example, prior knowledge and learning, testing and retention, or worked examples and learning), as well as designing and developing flexible learning environments.

Jeroen J. G. Van Merriënboer

Jeroen J. G. van Merriënboer is a full professor of Learning and Instruction and Research Director of the School of Health Profession Education (SHE) at Maastricht University, the Netherlands. He was trained as an experimental psychologist at VU University Amsterdam and received a PhD in Educational Sciences from the University of Twente (1990). His main area of expertise is learning and instruction in the health professions, in particular instructional design and the use of new media in innovative learning environments. He has published widely on cognitive load theory, four-component instructional design (4C/ID, see www.tensteps.info), and lifelong learning in the professions. He has received several academic awards for his publications and his international contributions. His books Training Complex Cognitive Skills and Ten Steps to Complex Learning have had a major impact on the field of instructional design and have been translated into several languages. He has published over 350 articles and book chapters, and more than 35 PhD students have completed their theses under his supervision.

References

- Bell, B. S., & Kozlowski, S. W. J. (2002). Adaptive guidance: Enhancing self-regulation, knowledge, and performance in technology-based training. Personnel Psychology, 55, 267–306. https://doi.org/10.1111/j.1744-6570.2002.tb00111.x

- Boud, D., & Molloy, E. (2013). Rethinking models of feedback for learning: The challenge of design. Assessment & Evaluation in Higher Education, 38, 698–712. https://doi.org/10.1080/02602938.2012.691462

- Brand-Gruwel, S., Kester, L., Kicken, W., & Kirschner, P. A. (2014). Learning ability development in flexible learning environments. In J. M. Spector, M. D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of research on educational communications and technology (pp. 363–372). Springer. https://doi.org/10.1007/978-1-4614-3185-5_29

- Brusilovsky, P. L. (1992). A framework for intelligent knowledge sequencing and task sequencing. In C. Frasson, G. Gauthier, & G. I. McCalla (Eds.), Intelligent tutoring systems (pp. 499–506). Springer. https://doi.org/10.1007/3-540-55606-0_59

- Cagiltay, K. (2006). Scaffolding strategies in electronic performance support systems: Types and challenges. Innovations in Education and Teaching International, 43, 93–103. https://doi.org/10.1080/14703290500467673

- Camp, G., Paas, F., Rikers, R., & Van Merriënboer, J. (2001). Dynamic problem selection in air traffic control training: A comparison between performance, mental effort and mental efficiency. Computers in Human Behavior, 17, 575–595. https://doi.org/10.1016/S0747-5632(01)00028-0

- Corbalan, G., Kester, L., & Van Merriënboer, J. J. G. (2008). Selecting learning tasks: Effects of adaptation and shared control on learning efficiency and task involvement. Contemporary Educational Psychology, 33, 733–756. https://doi.org/10.1016/j.cedpsych.2008.02.003

- Detterman, D. K., & Sternberg, R. J. (Eds.). (1993). Transfer on trial: Intelligence, cognition, and instruction. Ablex Publishing.

- Gopher, D., Weil, M., & Siegel, D. (1989). Practice under changing priorities: An approach to the training of complex skills. Acta Psychologica, 71, 147–177. https://doi.org/10.1016/0001-6918(89)90007-3

- Graesser, A. C., Person, N. K., & Magliano, J. P. (1995). Collaborative dialogue patterns in naturalistic one-to-one tutoring. Applied Cognitive Psychology, 9, 495–522. https://doi.org/10.1002/acp.2350090604

- Hannafin, M., Land, S., & Oliver, K. (1999). Open learning environments: Foundations, methods, and models. In C. M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. 2, pp. 115–140). Lawrence Erlbaum Associates. https://doi.org/10.4324/9781410603784-12

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77, 81–112. https://doi.org/10.3102/003465430298487

- Kicken, W., Brand-Gruwel, S., & Van Merriënboer, J. J. G. (2008). Scaffolding advice on task selection: A safe path toward self-directed learning in on-demand education. Journal of Vocational Education and Training, 60, 223–239. https://doi.org/10.1080/13636820802305561

- Kicken, W., Brand-Gruwel, S., Van Merriënboer, J. J. G., & Slot, W. (2009). The effects of portfolio-based advice on the development of self-directed learning skills in secondary vocational education. Educational Technology Research and Development, 57, 439–460. https://doi.org/10.1007/s11423-009-9111-3

- Kostons, D., Van Gog, T., & Paas, F. (2010). Self-assessment and task selection in learner-controlled instruction: Differences between effective and ineffective learners. Computers and Education, 54, 932–940. https://doi.org/10.1016/j.compedu.2009.09.025

- Kostons, D., Van Gog, T., & Paas, F. (2012). Training self-assessment and task-selection skills: A cognitive approach to improving self-regulated learning. Learning and Instruction, 22, 121–132. https://doi.org/10.1016/j.learninstruc.2011.08.004

- Kulik, C.-L. C., Kulik, J. A., & Bangert-Drowns, R. L. (1990). Effectiveness of mastery learning programs: A meta-analysis. Review of Educational Research, 60, 265–299. https://doi.org/10.3102/00346543060002265

- Nugteren, M. L., Jarodzka, H., Kester, L., & Van Merriënboer, J. J. G. (2018). Self-regulation of secondary school students: Self-assessments are inaccurate and insufficiently used for learning-task selection. Instructional Science, 46, 357–381. https://doi.org/10.1007/s11251-018-9448-2

- Paas, F. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. Journal of Educational Psychology, 84, 429–434. https://doi.org/10.1037/0022-0663.84.4.429

- Reigeluth, C. M. (1999). The elaboration theory: Guidance for scope and sequence decisions. In C. M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. 2, pp. 425–453). Lawrence Erlbaum Associates.

- Renkl, A., & Atkinson, R. K. (2003). Structuring the transition from example study to problem solving in cognitive skill acquisition: A cognitive load perspective. Educational Psychologist, 38, 15–22. https://doi.org/10.1207/S15326985EP3801_3

- Robinson, P. (2001). Task complexity, task difficulty, and task production: Exploring interactions in a componential framework. Applied Linguistics, 22, 27–57. https://doi.org/10.1093/applin/22.1.27

- Sweller, J., Van Merriënboer, J. J. G., & Paas, F. G. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10, 251–296. https://doi.org/10.1023/A:1022193728205

- Taminiau, E. M. C. (2013). Advisory models for on-demand learning (Unpublished doctoral dissertation, Open University of the Netherlands, Heerlen, Netherlands).

- Taminiau, E. M., Kester, L., Corbalan, G., Alessi, S. M., Moxnes, E., Gijselaers, W. H., … Van Merriënboer, J. J. G. (2013). Why advice on task selection may hamper learning in on-demand education. Computers in Human Behavior, 29, 145–154. https://doi.org/10.1016/j.chb.2012.07.028

- Taminiau, E. M. C., Kester, L., Corbalan, G., Spector, J. M., Kirschner, P. A., & Van Merriënboer, J. J. G. (2015). Designing on-demand education for simultaneous development of domain-specific and self-directed learning skills. Journal of Computer Assisted Learning, 31, 405–421. https://doi.org/10.1111/jcal.12076

- Van Merriënboer, J. J. G., & Kirschner, P. A. (2018). Ten steps to complex learning: A systematic approach to four-component instructional design (3rd Rev. ed.). Routledge.

Appendix

Example of a high-support task from difficulty level 1, translated from Dutch.