ABSTRACT

This study explored the effects of directed and undirected online peer feedback types on students’ peer feedback performance, argumentative essay writing skills, and acquisition of domain-specific knowledge. The study used a pre-test and post-test design with four conditions (feedback, feedforward, a combination of feedback and feedforward, and undirected feedback). In this exploratory study, 221 undergraduate students, who were randomly assigned to dyads, engaged in discussions about the pros and cons of “Genetically Modified Organisms (GMOs)”, provided feedback to peers, and wrote an argumentative essay on the topic. Results indicated significant differences among the conditions in the quality of the feedback provided, which implies that peer feedback quality can be enhanced or diminished depending on the feedback type. Results also revealed a significant improvement in students’ argumentative essay performance and domain-specific knowledge acquisition, without significant differences among conditions. We discuss how such an increase in essay quality and learning outcomes might be related to the power of peer feedback regardless of the feedback type. We also discuss why using multiple instructional scaffolds may result in over-scripting, which may diminish the power of peer feedback and the effects of the scaffolds themselves in online learning environments.

1. Introduction

Argumentation is an essential competence across domains and in many aspects of daily life. Argumentation competence comprises the capacity to argue, think critically, and reason logically in order to justify our positions and opinions and contrast them with those of others. In academic settings, argumentation also encompasses the capacity to carry out comparable tasks and to continue learning in the future (Noroozi et al., 2018). Acquiring argumentation competence is crucial for students in higher education, as it reflects the higher-order thinking skills needed to argue critically about complex and controversial issues in a given scientific field (Fan & Chen, 2021; Mokhtar et al., 2020). One of the most common ways to practice argumentation skills in higher education is to write an argumentative essay on a controversial scientific topic (Liunokas, 2020; Wingate, 2012). According to the literature, higher education students’ argumentative essay writing skills are typically below the proficiency level that such writing tasks require (Cooper et al., 1984; Kellogg & Whiteford, 2009).

A good argumentative essay should include the writer’s intuitive opinions and feelings on the topic, because students, like most people, hold gut feelings and intuitive opinions on various controversial scientific issues even when they are not familiar with the topic (Noroozi et al., 2016). This opinion is followed by arguments and data supporting it. Moreover, essays should incorporate arguments that oppose or weaken the opinion (counter-arguments) and should consider and refute the point of view of opponents. Next, the arguments in favor of and against the topic should be integrated, considering the opinions of both the advocates and the opponents of the topic in question (Andrews, 1995; Noroozi et al., 2016; Toulmin, 1958; Wood, 2001). After the integration, students should state their conclusion, since students’ final opinion on the topic often remains unclear after they have argued in favor of and against it (Noroozi et al., 2016). Finally, the presentation and specifics of these elements should be tailored to the domain in question, as conventions vary from domain to domain (Wingate, 2012).

Unfortunately, sound argumentation and depth of elaboration are rather infrequent in students’ essays (Cooper et al., 1984; Kellogg & Whiteford, 2009). Such poor performance in argumentative essay writing can have different causes. In some cases, students are not aware of the characteristics of a good argumentative essay (Noroozi et al., 2016). In other cases, students possess argumentation knowledge but have difficulty putting it into practice, for instance when writing an argumentative essay (Noroozi et al., 2013). Other scholars, such as Driver et al. (2000) and Osborne (2010), argue that the problem is that argumentation is frequently developed indirectly and informally in the classroom. Cooper et al. (1984) argue that the root of the problem is insufficient task practice in the curriculum, combined with the considerable time and effort teachers need to grade essays and provide feedback to students. Even when argumentation is addressed in the classroom, a teacher can only supervise and support one student or a small group of students at a time (Bloom, 1984). In large classes, which are common in online learning settings, providing personalized and effective feedback on essay writing imposes an extreme and almost unaffordable workload on teachers (Banihashem et al., 2021, 2022; Er et al., 2021). To address students’ insufficient argumentative essay writing skills, researchers, teachers, and practitioners have looked for instructional practices that foster students’ writing motivation and improve both the quality of essay writing and the acquisition of domain-specific knowledge through argumentation (Bruning & Horn, 2000; Noroozi et al., 2016).

In the literature, peer learning has been identified as one of the most relevant instructional practices for improving students’ essay-writing capacities (Baker, 2016; Boud et al., 1999; Topping, 2005). In peer learning, “students learn with and from each other without the immediate intervention of a teacher” (Boud et al., 1999, p. 413). According to the literature, the provision of peer feedback in peer learning is a powerful instructional strategy to foster learning (Gabelica et al., 2012; Hattie & Gan, 2011; Hattie & Timperley, 2007; Noroozi et al., 2020; Shute, 2008). Peer feedback is defined as the action taken by a peer to provide information regarding some aspect(s) of one’s task performance or understanding (Hattie & Timperley, 2007). In the context of argumentative essay writing, peer feedback is an effective instructional strategy, particularly in large online courses, where a large cohort of students participate and teachers, because of the extreme workload, struggle to provide one-by-one feedback on students’ essays (Er et al., 2021; Noroozi et al., 2022).

Prior studies suggest that peer feedback can improve students’ argumentative essay writing skills (Latifi et al., 2020, 2021a; Morawski & Budke, 2019; Noroozi et al., 2016, 2022a). For example, Morawski and Budke (2019) found that different types of peer feedback, including online written and oral peer feedback, can improve students’ argumentative essay writing performance in terms of the complexity of arguments, the content quality of arguments, and the quality of the evidence provided in arguments. According to the literature, different peer feedback types can be used to enhance students’ argumentation skills in essay writing (Latifi & Noroozi, 2021). In general, peer feedback types can be categorized into directed and undirected peer feedback (Gielen & De Wever, 2015; Latifi et al., 2021b). In directed peer feedback, students receive support on how to provide feedback; this support can take different forms, such as training, scripts, worked examples, question prompts, sentence openers, templates, and checklists (e.g. Latifi et al., 2020). The rationale behind this type of feedback is that students lack sufficient knowledge and skills to give feedback on their own and therefore need to be directed (Noroozi et al., 2016). In undirected peer feedback, by contrast, students receive no support when providing feedback to their learning partners; the rationale is that such support could limit students’ creativity in delivering effective feedback (Jermann & Dillenbourg, 2003; Tchounikine, 2008). Although both types have their pros and cons, the literature does not provide sufficient evidence on which peer feedback type is more effective, especially in online learning environments.
In online learning environments, students’ feedback performance can be recorded and tracked via online learning systems (Filius et al., 2019; Xie, 2013), which creates opportunities to monitor students’ performance and learning processes during peer feedback activities. In online learning settings, students also have the flexibility to provide feedback anytime and anywhere (Du et al., 2019), and they can provide feedback anonymously, which can influence their honesty and level of criticism (Aghaee & Hansson, 2013; Coté, 2014).

It is critical for teachers to know which peer feedback type is more effective in fostering students’ argumentation skills in essay writing, particularly in online contexts. For example, in online contexts, peer feedback can be delivered synchronously or asynchronously (Chen, 2016; Shang, 2017), in both directed and undirected formats (Latifi et al., 2021b; Van Popta et al., 2017). Some studies have shown that providing feedback on peers’ argumentative essays is a complex task that requires higher-order thinking skills (Foo, 2021; Van Popta et al., 2017). Directed, asynchronous peer feedback is therefore a good option, since students are guided and can take their time to review their peers’ essays (e.g. Chen, 2016; Latifi & Noroozi, 2021). Other scholars, however, argue that directed peer feedback does not always result in successful performance (e.g. Dillenbourg, 2002; Dillenbourg & Tchounikine, 2007; Noroozi, 2013). For example, studies have shown that rigidly directed peer feedback can inhibit natural interaction among peers (Dillenbourg & Tchounikine, 2007). Directed peer feedback can also limit students’ freedom and autonomy in providing feedback, which could negatively affect their learning processes and outcomes (Dillenbourg, 2002; Jermann & Dillenbourg, 2003; Noroozi, 2013; Tchounikine, 2008).

A review of the literature shows that only a few studies have compared the effectiveness of different peer feedback types on students’ argumentative essay writing performance and domain-specific knowledge acquisition (e.g. Latifi et al., 2020, 2021b; Latifi & Noroozi, 2021). Latifi et al. (2020) compared the effects of peer feedback with worked examples and scripted peer feedback on feedback quality, essay quality, and acquisition of domain-specific knowledge. They found that scripted peer feedback resulted in higher feedback quality and had a larger positive impact on students’ argumentative essay writing quality than peer feedback with worked examples. However, the two types did not differ in their effects on students’ domain-specific learning. In another study, Latifi et al. (2021b) compared the effects of different peer feedback types on students’ learning processes and outcomes and on essay quality. They found a significant impact of different peer feedback types, including peer feedback and peer feedforward, on students’ learning processes and outcomes and on essay quality; however, the differences among the supported peer feedback types were not significant (Latifi et al., 2021b).

Furthermore, the literature suggests that, in practice, peer feedback has traditionally been seen as a strategy to diagnose problems in peers’ work and to clarify points for improvement, while recommendations and action plans on how to improve peers’ work going forward are somewhat overlooked (Latifi et al., 2021a; Taghizadeh Kerman et al., 2022; Wimshurst & Manning, 2013). Such constructive recommendations, which feed into the improvement of peers’ work, are called peer feedforward (Wimshurst & Manning, 2013). Peer feedforward refers to advice provided by a learner to a learning partner, which can also include clear action plans on how to act on the given advice (Latifi et al., 2021a). Providing peer feedforward is challenging for students, and they typically do not provide constructive suggestions that can guide peers toward the desired goal (Noroozi & Hatami, 2019). The main reason is that students lack sufficient knowledge and skills to advise on peers’ work and to understand what comes next and how to get there (Taghizadeh Kerman et al., 2022).

One may argue that feedforward is also part of peer feedback and ask why the two should be treated separately. This argument is theoretically valid, as high-quality peer feedback is expected not only to give information on current performance and related issues (peer feedback) but also to include suggestions and action plans for further improvement of the work (peer feedforward) (Nelson & Schunn, 2009; Patchan et al., 2016; Wu & Schunn, 2021). This means that peer feedback, regardless of its quality, can take the form of feedback, feedforward, or a combination of both. In practice, however, students usually focus on the feedback part (what has been done and how) and struggle with, or ignore, providing feedforward (where to go next and how to get there) (Latifi et al., 2021a). This raises a need to design and examine peer feedback types that not only explicitly guide students on how to give feedback on actual performance but also direct them on how to give constructive feedback that can lead peers to attain desired goals.

Although prior studies provide insights into how different peer feedback types influence students’ learning processes and outcomes and their argumentative essay writing skills, they did not specifically examine how directed peer feedback types (feedback, feedforward, and a combination of feedback and feedforward) compare with an undirected peer feedback type in their impact on students’ feedback performance, essay writing, and domain-specific knowledge acquisition. Previous studies mainly compared directed peer feedback types, in which students are guided and supported in different ways, whereas the undirected peer feedback type, in which students provide feedback freely and naturally, has not been studied. There is therefore a need to explore students’ peer feedback performance when they are supported and guided in providing feedback compared with when they provide feedback with freedom and autonomy. In addition, only a few studies have been conducted in this research area, each with a small number of students in a single course, which limits how well their results generalize to other contexts. Moreover, the results of these studies are mixed: one study found a difference between supported peer feedback types (Latifi et al., 2020), whereas another did not (Latifi et al., 2021b). More studies are needed to provide more robust evidence. Therefore, the current study compared the effects of directed peer feedback types (feedback: FB, feedforward: FF, and a combination of feedback and feedforward: FB+FF) and an undirected peer feedback type (UF) on the quality of the peer feedback students provided, their argumentative essay writing skills, and their acquisition of domain-specific knowledge. Given the exploratory nature of this study, we addressed the gap in the literature through research questions rather than hypotheses. 
Accordingly, the following research questions are formulated to guide this study.

What is the quality of students’ peer feedback under FB, FF, FB+FF, and UF conditions?

What are the effects of FB, FF, FB+FF, and UF types on students’ argumentative essay writing quality?

What are the effects of FB, FF, FB+FF, and UF types on students’ domain-specific knowledge acquisition?

2. Method

2.1. Context and participants

The study took place at a university in the Netherlands that specializes in life sciences. The participants were 221 Bachelor of Science (BSc) students enrolled in a course named “Introduction to Molecular Life Sciences and Biotechnology” who followed an online learning module. The mean age of the participants was 18.42 years (SD = 1.34, range 16–28). Most students were Dutch (97%). About 70% of the participants were male and 30% were female.

2.2. Study design

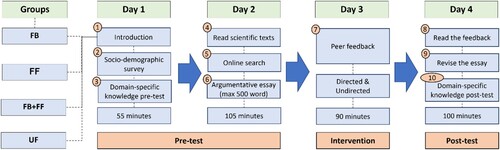

An exploratory study with a pre-test and post-test design was conducted. Students were randomly grouped in pairs and assigned to four conditions with different peer feedback types, namely feedback (FB), feedforward (FF), feedback with feedforward (FB+FF), and undirected feedback (UF). The FB type directs the feedback to the progress that has been made toward the goal (i.e. “How am I going/doing?”). Students in this condition were provided with question prompts to guide them in describing the current state of their peer’s essay and identifying problems in it. The FF type directs the feedback to advice on activities that need to be undertaken to make better progress (i.e. “Where to go next?”). In this condition, students were given question prompts to help them provide suggestions, with action plans, on how their peers could improve their essays. The FB+FF type combines the two aforementioned types; that is, it directs the feedback both to the current state of the essay and to advice on activities to make better progress (Hattie & Timperley, 2007). Finally, the UF type does not guide or direct the feedback, which is left entirely up to the student. In the UF condition, students did not receive any form of guidance or support (e.g. question prompts, scripts, or training) that could direct them in providing feedback on their peers’ essays (see Table 1). The design of the directed peer feedback types was based on the scripts suggested by Noroozi et al. (2016) and Latifi et al. (2021b); however, the types used in the current study were further elaborated to guide the feedback process and to facilitate the provision of directed (FB, FF, and FB+FF) and undirected (UF) feedback. Students in all four conditions were asked to provide peer feedback on their peers’ argumentative essays. 
Students’ feedback on their peers’ essays was built on the structure of a high-quality argumentative essay (Noroozi et al., 2016; Toulmin, 1958), including a) an intuitive opinion on the topic, b) arguments and data in favor, c) counter-arguments and opposing points of view (if any) and data against, d) integration of the pros and cons considering the opinions of advocates and opponents of the topic, and e) a final conclusion (Table 1). Both the peer feedback activity and the essay writing were asynchronous and individual, so students could complete each phase at their own pace, from any location, at any time within the stipulated time frame. In addition, students were anonymous within the system, as usernames were generic (e.g. “Student 1”) (see ).

Table 1. Elements of a high-quality argumentative essay (left), directed peer feedback strategies (feedback “FB”, feedforward “FF”, and their combination “FB+FF”), and undirected peer feedback strategy (UF).

Table 2. The procedure of the study and design of the online learning module.

2.3. Procedure and design of the online learning module

A tailor-made online learning module was implemented based on a regular course assignment that was redesigned as a digital module. The module offers information to students in various formats, such as texts, tables, figures, and images. It presents only necessary and relevant information, together with clear instructions and messages, so that students can focus on the activity at hand. For this study, we used an asynchronous learning format because we wanted students to take their time in writing argumentative essays and providing peer feedback, as these are complex skills that demand high cognitive processing (Van Popta et al., 2017). In addition, the online module allows students to engage with learning materials and tasks only anonymously, so students took part in the peer feedback tasks anonymously. This choice was made to avoid possible emotional distress among students when they receive critical comments from their peers (Noroozi et al., 2016). Moreover, for each input box (i.e. essay and feedback), the system checked that the number of words was within the lower and upper bounds and, if it was not, provided textual and visual feedback. Each text field also had a word counter. In authentic online classrooms, this reduces teachers’ lower-order workload, as the technology can check the formal requirements of the essay and feedback tasks.
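The word-count check described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the module’s actual implementation, which the text does not describe: the names `WordBounds`, `count_words`, and `check_length` are hypothetical, a “word” is assumed to be any whitespace-separated token, and the bounds are the ones given in Section 2.3 for the essay (450–550 words), each directed feedback field (40–60 words), and the undirected feedback field (350–450 words).

```python
# Hypothetical sketch of the module's word-count check; not the actual implementation.
from dataclasses import dataclass


@dataclass(frozen=True)
class WordBounds:
    minimum: int
    maximum: int


# Bounds taken from the procedure described in Section 2.3.
ESSAY = WordBounds(450, 550)
DIRECTED_FEEDBACK_FIELD = WordBounds(40, 60)  # one field per essay element (FB, FF)
UNDIRECTED_FEEDBACK = WordBounds(350, 450)    # single free-text field (UF)


def count_words(text: str) -> int:
    # Assumption: a "word" is any whitespace-separated token.
    return len(text.split())


def check_length(text: str, bounds: WordBounds) -> tuple[bool, str]:
    """Return (is_valid, message) for the textual feedback shown to the student."""
    n = count_words(text)
    if n < bounds.minimum:
        return False, f"{n} words: add at least {bounds.minimum - n} more."
    if n > bounds.maximum:
        return False, f"{n} words: remove at least {n - bounds.maximum}."
    return True, f"{n} words: within {bounds.minimum}-{bounds.maximum}."


if __name__ == "__main__":
    print(check_length("word " * 30, DIRECTED_FEEDBACK_FIELD))
```

Keeping the bounds as data rather than hard-coding them in the check makes it straightforward to attach a different limit to each input box, which is what the FB/FF forms (many short fields) and the UF form (one long field) require.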

The specific learning topic for this module was “insect cells for cultured meat manufacturing”, which falls under the overarching theme of Genetically Modified Organisms (GMOs). The online module consisted of four main phases over four consecutive days, as described below (see Table 2).

On the first day, students received a verbal introduction to the module during class time (20 min). This introduction included all the required information and instructions for students (e.g. information on the research set-up of the study, expected goals, instructions on how to follow the modules, word limits for essays and feedback, deadlines, etc.). Students were also requested to complete questionnaires on socio-demographic information and domain-specific knowledge (35 min).

On the second day, students had to individually read a text about “how to write an argumentative essay” followed by an example of an argumentative essay (30 min), search online for more information sources such as daily papers, periodic journals, and scientific papers (30 min), and write an individual argumentative essay of ca. 500 words (min. 450, max. 550 words) about: “Insect-cell biomass infected with genetically modified baculovirus is a healthy meat alternative” (45 min). Students’ original essays were considered as the pre-test.

On the third day, dyads were formed, and each student in a dyad individually provided feedback to his/her learning partner using a feedback form (90 min). No specific assignment strategy was used to form the dyads; that is, students were randomly assigned to dyads and asked to give feedback to each other. Students in each of the four conditions then completed their peer feedback task. The feedback form for students in the FB and FF conditions contained a question and a text field of ca. 50 words (min. 40, max. 60 words) for each element of a high-quality essay (see Table 1). Students in the FB+FF condition received a feedback form comprising both the FB and the FF forms. In contrast, the feedback form for students in the UF condition consisted of a request to provide feedback and a text field of ca. 400 words (min. 350, max. 450 words). Students in the UF condition did not have to follow any guidelines and were free to structure their comments as they wished.
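The random pairing described above can be sketched as follows. The source states only that students were randomly paired and assigned to conditions; how conditions were allocated, and how the odd number of participants (221) was handled, is not reported. The sketch therefore assumes, hypothetically, that conditions are allocated per group in a balanced round-robin over a shuffled order and that a leftover student joins the last dyad as a triad. All function names are illustrative.

```python
# Hypothetical sketch of random dyad formation and condition assignment.
# Assumptions (not stated in the source): conditions are assigned per group in a
# balanced round-robin over a shuffled order, and with an odd number of students
# the leftover student joins the last dyad, forming one triad.
import random

CONDITIONS = ("FB", "FF", "FB+FF", "UF")


def form_dyads(student_ids, seed=None):
    ids = list(student_ids)
    if len(ids) < 2:
        raise ValueError("need at least two students")
    rng = random.Random(seed)
    rng.shuffle(ids)
    # Consecutive pairs from the shuffled list; stops before a lone final student.
    groups = [ids[i:i + 2] for i in range(0, len(ids) - 1, 2)]
    if len(ids) % 2 == 1:
        groups[-1].append(ids[-1])  # odd count: the last group becomes a triad
    return groups


def assign_conditions(groups, seed=None):
    """Return (group, condition) pairs with near-equal numbers of groups per condition."""
    rng = random.Random(seed)
    shuffled = list(groups)
    rng.shuffle(shuffled)
    return [(g, CONDITIONS[k % len(CONDITIONS)]) for k, g in enumerate(shuffled)]


def feedback_pairs(group):
    """(giver, receiver) pairs: reciprocal within a dyad, a cycle within a triad."""
    return [(group[i], group[(i + 1) % len(group)]) for i in range(len(group))]
```

With 221 students this yields 110 groups (109 dyads and one triad), spread as evenly as possible over the four conditions. Passing a fixed `seed` makes an allocation reproducible for auditing.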

On the fourth and last day, students were invited to read the feedback from their learning partner (15 min) and then individually revise their argumentative essay (45 min; ca. 500 words, min. 450, max. 550 words). Students’ revised essays were considered as the post-test. In addition, students completed a questionnaire on domain-specific knowledge identical to the pre-test (35 min). Finally, students were debriefed (5 min). The total time investment for the students was ca. 335 min.

2.4. Measurements

2.4.1. Measurement of students’ feedback quality

An adjusted version of a coding scheme developed by Noroozi et al. (2016) was used to assess the quality of the feedback given by the students (see Appendix 1). The scheme was aligned with the peer feedback script that students used to provide feedback on their learning partner’s argumentative essay. The coding scheme was developed based on the literature (Andrews, 1995; Toulmin, 1958; Wood, 2001) and the characteristics of a complete and sound argumentative essay in the context of biotechnology (see Table 1), and it was validated through a series of consultation meetings with a panel of experts and teachers (Noroozi et al., 2016). The coding scheme comprises a set of variables with different levels of proficiency that describe the quality of a student’s feedback; each level has a label, points, and a description. The feedback given by all students was coded and scored on the following variables: intuitive opinion, claims in favor of the topic, justification for claim(s) in favor of the topic, claims against the topic, justification for claim(s) against the topic, integration of pros and cons, and conclusion. Each variable was scored between zero and two: zero points if feedback was not present, one point for non-elaborated feedback, and two points for elaborated feedback. The points for all variables were summed to obtain the student’s total score for the quality of the given feedback. Two coders (i.e. the first author and a trained coder) analyzed the feedback data based on the coding scheme. Inter-rater agreement was calculated on a randomly selected 5% of the students’ feedback (equally distributed across the FB, FF, FB+FF, and UF conditions). To ensure the reliability of the coding process, the second coder was first trained in the coding procedure, including the coding rubrics. 
Then, the first author and the trained coder independently coded 5% of the data. The inter-rater agreement was substantial (Cohen’s Kappa = 0.68) according to Landis and Koch (1977). Discrepancies were resolved through discussion until consensus was reached. Afterward, the trained coder coded the remaining data.
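For readers who want to reproduce an agreement statistic of this kind, Cohen’s kappa compares the observed agreement between two coders with the agreement expected by chance from each coder’s marginal category frequencies. The sketch below is illustrative only: it assumes agreement is computed on item-level codes (0, 1, or 2), which the text does not specify, and the example codes are invented, not study data. It also maps kappa onto the Landis and Koch (1977) bands, under which 0.68 falls in “substantial” (0.61–0.80) and 0.87 in “almost perfect” (0.81–1.00).

```python
# Minimal sketch of Cohen's kappa for two coders' item-level codes, plus the
# Landis and Koch (1977) verbal bands used to interpret it.
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: sum over categories of the product of both coders' marginals.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


def landis_koch_label(kappa):
    if kappa < 0:
        return "poor"
    bands = ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial"))
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


if __name__ == "__main__":
    # Invented codes (0 = absent, 1 = non-elaborated, 2 = elaborated), not study data.
    first = [0, 1, 2, 2, 1, 0]
    second = [0, 1, 2, 1, 1, 0]
    k = cohens_kappa(first, second)
    print(round(k, 2), landis_koch_label(k))  # prints 0.75 substantial
```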

2.4.2. Measurement of students’ argumentative essay writing quality

The quality of students’ written argumentative essays on the topic was measured using an adjusted version of a coding scheme developed by Noroozi et al. (2016) (see Appendix 2). The validity of this coding scheme was established in the same way as that of the scheme for feedback quality, namely through a series of consultation meetings with a panel of experts and teachers (Noroozi et al., 2016). The scheme consists of a set of variables with various levels of proficiency that depict the quality of a student’s argumentative essay; each level is defined by a label, points, and a description to facilitate coding. The original and revised essays of all students were coded on the following variables: intuitive opinion, arguments in favor of the topic (pro-arguments), scientific facts in favor of the topic (pro-facts), arguments against the topic (con-arguments), scientific facts against the topic (con-facts), opinion on the topic considering various pros and cons (integration of pros and cons), scientific facts supporting the opinion on the integration of various pros and cons of the topic (integration of pro- and con-facts), and conclusion. Each variable was scored zero, one, or two: zero points if the element was not mentioned, one point if it was non-elaborated, and two points if it was elaborated. The points for all elements were summed to obtain the student’s total score for the quality of the written argumentative essay. The coding scheme was applied in two phases: first to assess students’ original essays and then to assess their revised essays. The gain in the quality of students’ argumentative essays was measured as the mean difference between the scores of the original and the revised essays. 
As with the feedback data, the same two coders (i.e. the first author and a trained coder) analyzed the essay data based on the coding scheme, and inter-rater agreement was calculated following the same procedure used for the quality of students’ feedback. The results showed almost perfect agreement between the two coders (Cohen’s Kappa = 0.87) according to Landis and Koch (1977). Discrepancies were resolved through discussion until consensus was reached. Then, the trained coder coded the rest of the data.
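The essay scoring rule (eight variables, each coded 0, 1, or 2, summed per essay, with the gain taken as the revised score minus the original score) can be expressed as a short sketch. The dictionary keys are hypothetical identifiers for the eight elements listed in the text; the feedback-quality total in Section 2.4.1 follows the same pattern with its seven variables.

```python
# Minimal sketch of the essay rubric total and the pre/post gain. Each of the eight
# variables named in the text is coded 0 (absent), 1 (non-elaborated) or
# 2 (elaborated). The keys below are hypothetical identifiers.
ESSAY_VARIABLES = (
    "intuitive_opinion", "pro_arguments", "pro_facts", "con_arguments",
    "con_facts", "integration_pros_cons", "integration_facts", "conclusion",
)


def essay_score(codes: dict[str, int]) -> int:
    """Sum the 0/1/2 codes over all eight essay variables."""
    missing = set(ESSAY_VARIABLES) - codes.keys()
    if missing:
        raise ValueError(f"missing codes for: {sorted(missing)}")
    for name in ESSAY_VARIABLES:
        if codes[name] not in (0, 1, 2):
            raise ValueError(f"{name} must be coded 0, 1 or 2")
    return sum(codes[name] for name in ESSAY_VARIABLES)


def essay_gain(original: dict[str, int], revised: dict[str, int]) -> int:
    """Gain for one student: revised (post-test) total minus original (pre-test) total."""
    return essay_score(revised) - essay_score(original)
```

A condition’s mean gain is then the average of its students’ individual gains.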

2.4.3. Measurement of students’ domain-specific knowledge acquisition

Students’ domain-specific knowledge acquisition was measured at the pre-test and post-test using a multiple-choice questionnaire. This questionnaire was developed by the course coordinator and comprised 17 items (e.g. “What is a continuous animal cell line?”, “Insects that are commercially cultivated include … ”, “A baculovirus is … ”, and “What is a ‘master cell bank’?”). For each question, students received a point, for a total of 18 points. The domain-specific knowledge score was then calculated for each student on a scale from 0 to 1 (#points/18) and multiplied by 10 to obtain a score on a scale from 0 to 10. The result was used as the domain-specific knowledge score for the given test.
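The rescaling described above amounts to the following computation, sketched here with the 18-point maximum stated in the text. The `knowledge_gain` helper is an assumption: Section 3.3 reports a knowledge “gain” but the text does not define it, so post-test minus pre-test on the 0–10 scale is used here for illustration.

```python
def knowledge_score(points: float, max_points: float = 18) -> float:
    """Rescale raw questionnaire points to a 0-10 score: (points / max_points) * 10."""
    if not 0 <= points <= max_points:
        raise ValueError("points must lie between 0 and max_points")
    return points / max_points * 10


def knowledge_gain(pre_points: float, post_points: float, max_points: float = 18) -> float:
    """Assumed definition: post-test minus pre-test score on the 0-10 scale."""
    return knowledge_score(post_points, max_points) - knowledge_score(pre_points, max_points)
```

For example, a student with 9 of 18 points scores 5.0 on the 0–10 scale.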

2.5. Analysis

A one-way analysis of variance (ANOVA) was used to compare the mean differences in students’ feedback quality across the peer feedback types. Post hoc comparisons were performed using Bonferroni’s test to examine how students’ peer feedback quality differed among the peer feedback types. A one-way multivariate analysis of covariance (MANCOVA) was conducted to compare the effects of the peer feedback types on the quality of students’ written argumentative essays and on their domain-specific knowledge acquisition. This omnibus test was used to avoid an inflated Type I error rate resulting from conducting multiple statistical tests on the same sample. The quality of the feedback received was used as the covariate in the analysis. To remove the effect of the condition on the covariate, the covariate was corrected: for each student, the corrected covariate is the quality of the feedback received minus the mean feedback quality of the student’s condition, that is, $CC_{ij} = C_{ij} - \bar{C}_{i}$, where $i$ indexes the condition and $j$ the student within that condition. Finally, to reduce the impact of potential sources of bias, we used winsorizing; that is, outliers were replaced with the highest value that was not an outlier.
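The covariate correction and winsorizing described above can be sketched as follows. This is a hypothetical illustration, not the authors’ analysis script: the function names are ours, and because the text does not state how outliers were identified, Tukey’s 1.5 × IQR fences (computed with Python’s `statistics.quantiles`) stand in for the authors’ rule, with low outliers treated symmetrically to high ones.

```python
# Minimal sketch: condition-mean correction of the covariate and winsorizing.
# Assumption: the outlier rule is not reported in the source; Tukey's 1.5 x IQR
# fences are used here, and low outliers are treated symmetrically to high ones.
from statistics import mean, quantiles


def center_within_condition(covariate_by_condition):
    """CC_ij = C_ij - mean of C in condition i, for every student j in condition i."""
    centered = {}
    for condition, values in covariate_by_condition.items():
        condition_mean = mean(values)
        centered[condition] = [c - condition_mean for c in values]
    return centered


def winsorize_iqr(values, k=1.5):
    """Replace values outside the IQR fences with the most extreme non-outlier value."""
    q1, _, q3 = quantiles(values, n=4)
    low_fence, high_fence = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    inliers = [v for v in values if low_fence <= v <= high_fence]
    lowest, highest = min(inliers), max(inliers)
    return [min(max(v, lowest), highest) for v in values]
```

Centering within each condition leaves every condition’s covariate mean at zero, so the covariate can no longer carry between-condition differences into the MANCOVA; only each student’s deviation from their own condition’s mean remains.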

3. Results

3.1. What is the quality of students’ peer feedback under FB, FF, FB+FF, and UF conditions?

Welch’s ANOVA indicated a statistically significant difference in the quality of the feedback provided across the four conditions, Welch’s F(3, 92.15) = 46.83, p < .005, η2 = .39, a large effect (Cohen, 1988, pp. 284–287). Post hoc comparisons indicated that the mean score of the FB condition (M = 14.26, SD = 2.08) was significantly different from those of the FF condition (M = 7.95, SD = 3.75), the FB+FF condition (M = 11.25, SD = 2.75), and the UF condition (M = 8.98, SD = 3.45). Similarly, the FB+FF condition differed significantly from the FF and UF conditions. Finally, the UF condition differed significantly from the FF condition. These results indicate that the directed peer feedback script was effective in supporting and directing the provision of feedback (FB) and of combined feedback and feedforward (FB+FF), both of which yielded higher-quality feedback than undirected feedback. In contrast, the script was not very effective in supporting and directing the provision of feedforward (FF), whose quality was even lower than that of undirected feedback (UF).
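The reported test is Welch’s variant of one-way ANOVA, which does not assume equal group variances (the group SDs above range from 2.08 to 3.75). A minimal standard-library sketch of Welch’s F and its degrees of freedom, together with the classical η² = SS_between / SS_total, is given below. It is an illustration rather than the authors’ analysis script (the text does not state how η² was computed alongside the Welch test), and a p-value would additionally require the F distribution’s survival function, for example `scipy.stats.f.sf(f, df1, df2)`.

```python
# Minimal sketch of Welch's one-way ANOVA and classical eta squared from raw scores.
from statistics import mean, variance


def welch_anova(groups):
    """Return (F, df1, df2) for Welch's heteroscedastic one-way ANOVA."""
    k = len(groups)
    if k < 2 or any(len(g) < 2 for g in groups):
        raise ValueError("need >= 2 groups with >= 2 observations each")
    n = [len(g) for g in groups]
    m = [mean(g) for g in groups]
    w = [ni / variance(g) for ni, g in zip(n, groups)]  # weights n_i / s_i^2
    total_w = sum(w)
    weighted_grand = sum(wi * mi for wi, mi in zip(w, m)) / total_w
    a = sum(wi * (mi - weighted_grand) ** 2 for wi, mi in zip(w, m)) / (k - 1)
    lam = sum((1 - wi / total_w) ** 2 / (ni - 1) for wi, ni in zip(w, n))
    b = 1 + 2 * (k - 2) * lam / (k ** 2 - 1)
    return a / b, k - 1, (k ** 2 - 1) / (3 * lam)


def eta_squared(groups):
    """Classical eta squared: between-group over total sum of squares."""
    scores = [x for g in groups for x in g]
    grand = mean(scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_total = sum((x - grand) ** 2 for x in scores)
    return ss_between / ss_total
```

With two groups, Welch’s F reduces to the square of Welch’s t statistic, which is a convenient sanity check; a group with zero variance makes the weights undefined and raises `ZeroDivisionError`.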

3.2. What are the effects of FB, FF, FB+FF, and UF types on students’ argumentative essay writing quality?

The covariate, the quality of feedback received, showed a small but significant relationship with the quality of the argumentative essays, F(1, 165) = 4.14, p = .043, η² = .026, indicating that essay quality was associated with the quality of the feedback received. However, after controlling for the quality of feedback received, there was no significant effect of the directed and undirected peer feedback types on the gain in argumentative essay quality, F(3, 155) = .231, p = .875; that is, the gain was similar in all conditions. A comparison of the estimated marginal means showed that the largest gain, although not significant, was obtained by the FB condition (M = 1.74), followed by the FF, FB+FF, and UF conditions (M = 1.68, 1.50, and 1.20, respectively); see also Table 3.

Table 3. Argumentative essay quality scores for the original and revised essays in all conditions.

3.3. What are the effects of FB, FF, FB+FF, and UF types on students’ domain-specific knowledge acquisition?

The covariate, the quality of feedback received, showed a small but significant relationship with the acquisition of domain-specific knowledge, F(1, 155) = 4.84, p = .02, η² = .03, indicating that knowledge acquisition was associated with the quality of the feedback received. However, after controlling for the quality of feedback received, there was no significant effect of the directed and undirected peer feedback types on domain-specific knowledge acquisition, F(3, 155) = .38, p = .76, indicating that the gain was similar in all conditions. A comparison of the estimated marginal means showed that the largest gain, although not significant, was obtained by the FF condition (M = 11.92), followed by the FB, UF, and FB+FF conditions (M = 9.84, 9.83, and 9.09, respectively); see also Table 4.
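For a univariate F test, partial η² can be recovered from the F statistic and its degrees of freedom: since F = (SS_effect / df_effect) / (SS_error / df_error), partial η² = SS_effect / (SS_effect + SS_error) = F · df_effect / (F · df_effect + df_error). The short sketch below applies this identity to the two tests reported for domain-specific knowledge. It is offered as a consistency check under that assumption, not necessarily as the way the reported values were computed; the function name is hypothetical.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return f_stat * df_effect / (f_stat * df_effect + df_error)


# Covariate test and condition test for domain-specific knowledge.
print(round(partial_eta_squared(4.84, 1, 155), 3))  # 0.03
print(round(partial_eta_squared(0.38, 3, 155), 3))  # 0.007
```

The covariate value reproduces the reported η² = .03, while the condition effect corresponds to well under 1% of explained variance, in line with the non-significant F.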

Table 4. Domain-specific knowledge scores for the pre-test and post-test for all conditions. Scores were transformed such that the maximum possible score was 100.
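The transformation in the Table 4 note is presumably a linear rescaling, score × 100 / maximum possible score. A minimal sketch, assuming that linear form; the function name is hypothetical, and the maximum raw score of 24 in the example is purely illustrative, since the test length is not given in this section.

```python
def rescale_to_100(raw_score, max_possible):
    """Linearly rescale a raw test score so that max_possible maps to 100."""
    if max_possible <= 0:
        raise ValueError("max_possible must be positive")
    return 100 * raw_score / max_possible


# HYPOTHETICAL test with a maximum raw score of 24.
print(rescale_to_100(18, 24))  # 75.0
```

A linear rescaling preserves the ordering of students and the relative size of pre-test to post-test gains, so it changes only the unit in which gains are reported.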

4. Discussion

4.1. Discussion on RQ1

The results for the first research question showed that the quality of the feedback provided differed between directed and undirected peer feedback types. Students in the FB and FB+FF conditions provided higher-quality feedback than students in the UF condition. This finding indicates that the directed peer feedback types FB and FB+FF led to better peer feedback quality than the undirected peer feedback type. The result also suggests that a directed peer feedback type that focuses only on providing feedforward can be less effective than undirected peer feedback. This finding is consistent with prior studies reporting high feedback quality for directed peer feedback types, including scripted peer feedback and combined feedback and feedforward (Latifi et al., 2020, 2021b).

In general, the directed peer feedback types guided and helped students to provide high-quality feedback to their learning partners, which is conducive to improving their writing skills (DeNisi & Kluger, 2000). In addition, the script supported the provision of feedback on the task rather than on personal evaluations or on the effect on the learning partner, which is considered less effective (Hattie & Timperley, 2007). Moreover, the directed peer feedback types allowed students to provide structured, sequential feedback on each element of a high-quality argumentative essay, thus facilitating the improvement of each of these elements. However, all students received theory on argumentative essays and an example of an argumentative essay, which may have played a role in the feedback provision process. This interpretation is supported by the fact that the UF condition, which was not scripted, outperformed the FF condition. This suggests that scaffolding the feedback provision process with theory and examples is effective in fostering high-quality feedback, and that further combining it with scripts that support and direct the feedback is even more effective. Yet, it is necessary to understand why the script was less effective in the FF condition. One possible explanation is that students are not accustomed to receiving feedback containing possible directions or alternative strategies to pursue, but rather corrective feedback on their performance on the actual task. As such, it may have been difficult for them to provide feedback containing alternative directions or strategies. To conclude, effective feedback should 1) reduce the gap between what is understood and what should be understood, and 2) increase students’ effort, motivation, and engagement to reduce that gap (Hattie & Timperley, 2007).
In addition, effective feedback must include 1) information related to the actual task and/or performance, with reference to an expected standard, prior performance, and/or success or failure on (part of) the task (How am I going/doing?), and 2) information on possible directions or alternative strategies to follow (Where to go next?) (Hattie & Timperley, 2007).

4.2. Discussion on RQ2

The quality of the argumentative essays increased from the original to the revised essay in all conditions. In addition, the quality of feedback received showed a small but significant relationship with essay quality. These results are in line with previous research reporting positive effects of peer feedback on writing skills (Gabelica et al., 2012; Kellogg & Whiteford, 2009; Latifi et al., 2020, 2021b; Noroozi et al., 2016). During the peer feedback process, students compared their solutions with those of their learning partners. As a result, students were able to identify and correct mistakes and misconceptions (Shute, 2008), broaden and deepen their reasoning and understanding (Yang, 2010), and understand the difference between their current and expected state, as well as what to do and how to do it in order to improve (DeNisi & Kluger, 2000; Hattie & Timperley, 2007). Moreover, the peer feedback process guided students’ learning (Orsmond et al., 2005), facilitated problem-solving skills and self-regulation (Shute, 2008), and triggered reflection (Phielix et al., 2010).

In this particular study, no significant differences were found between directed (i.e. FB, FF, and FB+FF) and undirected (i.e. UF) feedback in terms of argumentative essay quality. These results differ from previous literature indicating that the effectiveness of feedback is influenced by its type and the way it is provided (Hattie & Timperley, 2007). Our results may be explained by the fact that all conditions received theory on the composition of an argumentative essay and an example of an argumentative essay. In previous studies on argumentation scaffolds to foster argumentation knowledge, students received argumentation theory before engaging in argumentative discourse activities, but the effects of providing theory were not investigated (Kollar et al., 2007). Therefore, the effects of theory and examples on learning outcomes need to be taken into account. We believe that the provision of theory and an example may have diminished the effect of the directed peer feedback scripts and may have made the scripts redundant or even unnecessary. This reasoning is in line with the educational psychology (Wittwer & Renkl, 2010) and cognitive load theory (CLT) (Sweller et al., 1998) literature indicating the positive effects of theory, or instructional explanations, combined with worked examples (also known as example-based learning). For instance, providing theory and examples can prevent misconceptions and inconsistencies and facilitate understanding (Wittwer & Renkl, 2010). In addition, the theory-example combination can foster the acquisition of meaningful and flexible knowledge (Van Gog et al., 2004), since it provides both product-oriented information, i.e. how a problem is solved, and process-oriented information, i.e. the rationale for why certain solution steps should be taken (Wittwer & Renkl, 2010).
Similarly, previous research found that example-based learning is more effective when it is accompanied by problems to be solved (Pashler et al., 2007). Therefore, students supported with instructional scaffolds that combine theory, examples, and practice might benefit more. Nevertheless, the effectiveness of worked examples diminishes as students gain experience, i.e. the expertise-reversal effect (Kalyuga et al., 2003). In addition, experienced students might not need further instructional support; if it is provided anyway, they have to invest cognitive resources in processing redundant information. Redundant information might hamper learning, as it can cause unnecessary overload and suboptimal learning processes, i.e. the redundancy effect (Sweller et al., 1998). The redundancy effect is in line with the idea of over-scripting (Dillenbourg, 2002), which can occur when there is too much scaffolding, or when external support deters the student from the self-regulated application of their internal script (Fischer et al., 2013). Hence, the provision of instructional support should take the student’s internal script into account and should be reduced over time to foster self-directed learning skills (Noroozi & Mulder, 2017). To conclude, we believe that providing theory and an example nullified the effects of the directed peer feedback scripts in this study. In addition, caution should be exercised when piling up or combining instructional scaffolds, as the effects of some scaffolds may be nullified, or overloading may result in suboptimal learning processes, e.g. through the redundancy effect and over-scripting. Last, the theory-example combination seems to be a powerful form of instructional support for fostering argumentative essay writing.

4.3. Discussion on RQ3

The results also revealed an increase in domain-specific knowledge from pre-test to post-test in all conditions. This finding suggests that peer feedback, regardless of its type, is an effective instructional strategy for increasing students’ domain-specific knowledge acquisition. These results are in line with previous research reporting positive effects of peer feedback on domain-specific knowledge acquisition (Latifi et al., 2021b; Noroozi et al., 2022b; Noroozi & Mulder, 2017; Valero Haro et al., 2019). One possible explanation is that peer feedback is generally seen as a process-oriented pedagogical activity in classrooms (Kerman et al., 2022; Shute, 2008) that, to a greater or lesser extent depending on its design, encourages students to engage critically in collaborative discussions on a specific topic, recall their prior knowledge of the topic, analyze and review peers’ work, identify gaps and problems, and suggest points for improvement (Topping, 2009). In addition, peer feedback allows students to reflect on their own knowledge conceptions and confirm, complement, overwrite, or restructure them (Valero Haro et al., 2019). Engaging in such an informative, knowledge-sharing collaborative learning process may improve students’ domain-specific knowledge acquisition. Another plausible explanation is that in this study, students in all conditions received theory on the discussed topic. All conditions thus had an equal chance to acquire basic knowledge of the topic, which can partly explain the knowledge gain in all conditions. Therefore, providing theoretical knowledge may have neutralized the effect of the directed peer feedback types.
As in the discussion on RQ2, the same reasoning applies to domain-specific knowledge acquisition: combining theory and examples could positively affect the acquisition of meaningful and flexible knowledge (Van Gog et al., 2004). In other words, students probably improved their knowledge acquisition in all conditions due to the combination of the theory provided and peer feedback (regardless of its type).

5. Limitations and suggestions for future research and practice

There are some limitations of this study that need to be acknowledged and considered in future studies. First, this study was conducted in vivo, which had both advantages and disadvantages. An advantage is the high practical relevance and ecological validity of the study, owing to the real educational setting rather than a laboratory setting, where motivation may be affected by synthetic learning environments and by rewards for successfully completing the tasks and activities. On the other hand, to level the playing field for all students, all conditions received theory on the composition of an argumentative essay and an example of an argumentative essay. This may have affected the effectiveness of the directed peer feedback scripts, making the scripts redundant or even unnecessary. Therefore, we call for caution when combining multiple instructional scaffolds, as some scaffolds may nullify others, and overloading may result in suboptimal learning processes, e.g. through the redundancy effect and over-scripting. A second possible criticism of the study relates to the scale and coding scheme used to measure the quality of peer feedback. The scale, which was developed and successfully used by Noroozi et al. (2016), ranges from 0 to 2. Such a scale captures less variation than a scale with more points, yet it provides some insight into the quality of peer feedback. Moreover, we created a rubric with examples (depicting the characteristics that had to be met to assign a score) to ensure consistency of the measures and to assess change equally well across the entire range of the construct. In addition, the coding of feedback quality disregarded whether the feedback actually provided by the student corresponded, completely or partially, to the scripted feedback type. This may have influenced the results.
Yet, we believe that the extent to which the feedback script fosters the provision of a given feedback type deserves deeper analysis, and thus further research, to understand the possible reasons and processes behind such behavior. Third, the present study only measured the effect of the intervention in the short term, not in the longer term. Future research should also investigate the effects of theory and worked examples in contrast to scripting (e.g. by feedback type). In addition, the effectiveness of these instructional scaffolds should be evaluated with respect to students’ educational level, as their expertise and cognitive capacity may play a role.

Fourth, the feedback approach of this study is limited because it was designed from a one-way perspective, whereas formative feedback behavior goes beyond that: it includes an argumentative dialogue in which questions are asked to challenge or further sharpen the student’s argumentation, and in which the teacher (or peer) continuously assesses the student’s (peer’s) further argumentation. Future studies should focus on the impacts of dialogic and interactive peer feedback types on feedback quality, essay quality, and domain-specific knowledge acquisition. In addition, this study included no baseline measurement of feedback behavior. Students probably differ considerably in their capacity to give (different types of) feedback; without a baseline, however, the effects of the feedback instructions on feedback behavior cannot be reported as standardized effect sizes for the four feedback types. Moreover, as mentioned above, we acknowledge the risk of over-scripting and the redundancy effect in this study, which may have arisen from overloading students with too much theoretical information combined with examples, scripts, and worked examples. Future longitudinal studies could examine the interaction between the type of feedback and the type of support over time, and could identify the optimal intensity of feedback support for novice and experienced students. Last, future studies should use a longitudinal design to assess student learning, internalization of the constructs, and their application in the same and different contexts.

Despite its limitations, the present study has implications for educational practice in online higher education settings. First, we found that the quality of students’ feedback in online higher education can be influenced by the type of feedback support. Based on this finding, we suggest that teachers create rubrics or scripts in their online courses that provide students with instructions and guidelines on how to give feedback on peers’ essays. In this way, students are likely to perform better than when they do not receive relevant instructions and guidelines. Second, although we found no significant differences between directed and undirected peer feedback types in improving essay quality and domain-specific knowledge acquisition, the directed FB type showed a slightly, though not significantly, larger gain in essay quality than the other peer feedback types. This implies that, although teachers are welcome to adopt different peer feedback types based on their own and their students’ preferences, we recommend using directed peer feedback. However, teachers should take care not to overwhelm students with too many guidelines and instructions, as this risks over-scripting, which can negatively affect students’ learning processes and outcomes.

6. Conclusions

This study provided insights into how different types of peer feedback influenced the quality of students’ peer feedback and argumentative essay writing, as well as their acquisition of domain-specific knowledge, in an online learning environment. We found that the quality of the feedback provided by students differed significantly depending on the type of peer feedback. Directed peer feedback was more effective in supporting students in providing feedback alone or a combination of feedback and feedforward. However, it was less effective in supporting and directing students in providing feedforward alone. These results indicate that the quality of peer feedback can be enhanced or diminished depending on the extent to which, and the way in which, peer feedback is directed. The results also showed an increase in argumentative essay quality and domain-specific knowledge acquisition in all conditions, with no significant differences in gains between conditions. These results suggest that engaging in peer feedback, regardless of its format and type, is a powerful and effective learning strategy for enhancing students’ learning outcomes.

Our results add to the existing literature on the effects of implementing different peer feedback types on the quality of peer feedback and associated learning outcomes in online settings. The results support the evidence that different peer feedback types can lead to different levels of peer feedback quality in online learning environments. These results are important for future practice in online higher education, as they provide a clearer picture of the role of directed and undirected peer feedback types in enhancing students’ learning processes and outcomes.

Availability of data

The data are available from the authors upon request.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Anahuac Valero Haro

Anahuac Valero Haro is a PhD graduate from the Education and Learning Sciences (ELS) Chair Group, Wageningen University, the Netherlands. His research interests include e-learning and distance education, computer-supported collaborative learning, and computer-supported collaborative argumentation to facilitate the acquisition of domain-specific knowledge and argumentation competence.

Omid Noroozi

Omid Noroozi is an Associate Professor at the Education and Learning Sciences (ELS) Chair Group, Wageningen University, the Netherlands. His research interests include collaborative learning, e-learning and distance education, computer-supported collaborative learning (CSCL), argumentative knowledge construction in CSCL, argumentation-based CSCL, CSCL scripts and peer feedback.

Harm J. A. Biemans

Harm J. A. Biemans is an Associate Professor at the Education and Learning Sciences (ELS) Chair Group, Wageningen University, the Netherlands. His research interests concern competence development, competence-based education, educational psychology, educational development and evaluation, and computer-supported collaborative learning.

Martin Mulder

Martin Mulder is an Emeritus Professor from the Education and Learning Sciences (ELS) Chair Group, Wageningen University, the Netherlands. His research interests include competence theory and research, human resource development, computer-supported collaborative learning, competence of entrepreneurs, competence of open innovation professionals, and interdisciplinary learning in the field of food quality management education.

Seyyed Kazem Banihashem

Seyyed Kazem Banihashem is an Assistant Professor at Open University of the Netherlands and a researcher at Wageningen University and Research. His research interests include technology-enhanced learning, learning analytics, learning design, argumentation, formative assessment, and feedback.

References

- Aghaee, N., & Hansson, H. (2013). Peer portal: Quality enhancement in thesis writing using self-managed peer review on a mass scale. International Review of Research in Open and Distributed Learning, 14(1), 186–203. https://doi.org/10.19173/IRRODL.V14I1.1394

- Andrews, R. (1995). Teaching and learning argument. Cassell Publishers.

- Baker, K. M. (2016). Peer review as a strategy for improving students’ writing process. Active Learning in Higher Education, 17(3), 179–192. https://doi.org/10.1177/1469787416654794

- Banihashem, S. K., Farrokhnia, M., Badali, M., & Noroozi, O. (2021). The impacts of constructivist learning design and learning analytics on students’ engagement and self-regulation. Innovations in Education and Teaching International, 1–11. https://doi.org/10.1080/14703297.2021.1890634

- Banihashem, S. K., Noroozi, O., van Ginkel, S., Macfadyen, L. P., & Biemans, H. J. (2022). A systematic review of the role of learning analytics in enhancing feedback practices in higher education. Educational Research Review, 100489. https://doi.org/10.1016/j.edurev.2022.100489

- Bloom, B. S. (1984). The 2 sigma problem: The search for methods of group instruction as effective as one-to-one tutoring. Educational Researcher, 13(6), 4–16. https://doi.org/10.3102/0013189X013006004

- Boud, D., Cohen, R., & Sampson, J. (1999). Peer learning and assessment. Assessment & Evaluation in Higher Education, 24(4), 413–426. https://doi.org/10.1080/0260293990240405

- Bruning, R., & Horn, C. (2000). Developing motivation to write. Educational Psychologist, 35(1), 25–37. https://doi.org/10.1207/S15326985EP3501_4

- Chen, T. (2016). Technology-supported peer feedback in ESL/EFL writing classes: A research synthesis. Computer Assisted Language Learning, 29(2), 365–397. https://doi.org/10.1080/09588221.2014.960942

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). L. Erlbaum Associates. https://doi.org/10.4324/9780203771587

- Cooper, C., Cherry, R., Copley, B., Fleischer, S., Pollard, B., & Sartisky, M. (1984). Studying the writing abilities of a university freshman class: Strategies from a case study. In R. Beach, & L. Bridwell (Eds.), New directions in composition research (pp. 19–52). Guilford.

- Coté, R. A. (2014). Peer feedback in anonymous peer review in an EFL writing class in Spain. GIST Education and Learning Research Journal, 9(9 JUL-DEC), 67–87. https://doi.org/10.26817/16925777.144

- DeNisi, A. S., & Kluger, A. N. (2000). Feedback effectiveness: Can 360-degree appraisals be improved? The Academy of Management Executive, 14(1), 129–139. https://doi.org/10.5465/ame.2000.2909845

- Dillenbourg, P. (2002). Over-scripting CSCL. In P. A. Kirschner (Ed.), Three worlds of CSCL: Can we support CSCL (pp. 61–91). Open University of the Netherlands.

- Dillenbourg, P., & Tchounikine, P. (2007). Flexibility in macro-scripts for computer-supported collaborative learning. Journal of Computer Assisted Learning, 23(1), 1–13. https://doi.org/10.1111/j.1365-2729.2007.00191.x

- Driver, R., Newton, P., & Osborne, J. (2000). Establishing the norms of scientific argumentation in classrooms. Science Education, 84(3), 287–312. https://doi.org/10.1002/(SICI)1098-237X(200005)84:3%3C287::AID-SCE1%3E3.0.CO;2-A

- Du, X., Zhang, M., Shelton, B. E., & Hung, J. L. (2019). Learning anytime, anywhere: A spatio-temporal analysis for online learning. Interactive Learning Environments, 1–15. https://doi.org/10.1080/10494820.2019.1633546

- Er, E., Dimitriadis, Y., & Gašević, D. (2021). Collaborative peer feedback and learning analytics: Theory-oriented design for supporting class-wide interventions. Assessment & Evaluation in Higher Education, 46(2), 169–190. https://doi.org/10.1080/02602938.2020.1764490

- Fan, C. Y., & Chen, G. D. (2021). A scaffolding tool to assist learners in argumentative writing. Computer Assisted Language Learning, 34(1), 159–183. https://doi.org/10.1080/09588221.2019.1660685

- Filius, R. M., De Kleijn, R. A., Uijl, S. G., Prins, F. J., Van Rijen, H. V., & Grobbee, D. E. (2019). Audio peer feedback to promote deep learning in online education. Journal of Computer Assisted Learning, 35(5), 607–619. https://doi.org/10.1111/jcal.12363

- Fischer, F., Kollar, I., Stegmann, K., & Wecker, C. (2013). Toward a script theory of guidance in computer-supported collaborative learning. Educational Psychologist, 48(1), 56–66. https://doi.org/10.1080/00461520.2012.748005

- Foo, S. Y. (2021). Analysing peer feedback in asynchronous online discussions: A case study. Education and Information Technologies, 26(4), 4553–4572. https://doi.org/10.1007/s10639-021-10477-4

- Gabelica, C., Bossche, P. V. D., Segers, M., & Gijselaers, W. (2012). Feedback, a powerful lever in teams: A review. Educational Research Review, 7(2), 123–144. https://doi.org/10.1016/j.edurev.2011.11.003

- Gielen, M., & De Wever, B. (2015). Structuring peer assessment: Comparing the impact of the degree of structure on peer feedback content. Computers in Human Behavior, 52, 315–325. https://doi.org/10.1016/j.chb.2015.06.019

- Hattie, J., & Gan, J. (2011). Instruction based on feedback. In R. E. Mayer, & P. A. Alexander (Eds.), Handbook of research on learning and instruction (pp. 249–271). Routledge.

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

- Jermann, P., & Dillenbourg, P. (2003). Elaborating new arguments through a CSCL script. In J. Andriessen, M. Baker, & D. Suthers (Eds.), Arguing to learn: Confronting cognitions in computer-supported collaborative learning environments (Vol. 1, pp. 205–226). Kluwer Academic Publishers. https://doi.org/10.1007/978-94-017-0781-7_8

- Kalyuga, S., Ayres, P., Chandler, P., & Sweller, J. (2003). The expertise reversal effect. Educational Psychologist, 38(1), 23–31. https://doi.org/10.1207/S15326985EP3801_4

- Kellogg, R. T., & Whiteford, A. P. (2009). Training advanced writing skills: The case for deliberate practice. Educational Psychologist, 44(4), 250–266. https://doi.org/10.1080/00461520903213600

- Kerman, N. T., Noroozi, O., Banihashem, S. K., Karami, M., & Biemans, H. J. (2022). Online peer feedback patterns of success and failure in argumentative essay writing. Interactive Learning Environments, 1–13. https://doi.org/10.1080/10494820.2022.2093914

- Kollar, I., Fischer, F., & Slotta, J. D. (2007). Internal and external scripts in computer-supported collaborative inquiry learning. Learning and Instruction, 17(6), 708–721. https://doi.org/10.1016/j.learninstruc.2007.09.021

- Landis, J. R., & Koch, G. G. (1977). An application of hierarchical kappa-type statistics in the assessment of majority agreement among multiple observers. Biometrics, 33(2), 363–374. https://doi.org/10.2307/2529786

- Latifi, S., & Noroozi, O. (2021). Supporting argumentative essay writing through an online supported peer-review script. Innovations in Education and Teaching International, 58(5), 501–511. https://doi.org/10.1080/14703297.2021.1961097

- Latifi, S., Noroozi, O., Hatami, J., & Biemans, H. J. (2021a). How does online peer feedback improve argumentative essay writing and learning? Innovations in Education and Teaching International, 58(2), 195–206. https://doi.org/10.1080/14703297.2019.1687005

- Latifi, S., Noroozi, O., & Talaee, E. (2020). Worked example or scripting? Fostering students’ online argumentative peer feedback, essay writing and learning. Interactive Learning Environments, 1–15. https://doi.org/10.1080/10494820.2020.1799032

- Latifi, S., Noroozi, O., & Talaee, E. (2021b). Peer feedback or peer feedforward? Enhancing students’ argumentative peer learning processes and outcomes. British Journal of Educational Technology, 52(2), 768–784. https://doi.org/10.1111/bjet.13054

- Liunokas, Y. (2020). Assessing students’ ability in writing argumentative essay at an Indonesian senior high school. IDEAS: Journal on English Language Teaching and Learning, Linguistics and Literature, 8(1), 184–196. https://doi.org/10.24256/ideas.v8i1.134

- Mokhtar, M. M., Jamil, M., Yaakub, R., & Amzah, F. (2020). Debate as a tool for learning and facilitating based on higher order thinking skills in the process of argumentative essay writing. International Journal of Learning, Teaching and Educational Research, 19(6), 62–75. https://doi.org/10.26803/ijlter.19.6.4

- Morawski, M., & Budke, A. (2019). How digital and oral peer feedback improves high school students’ written argumentation—A case study exploring the effectiveness of peer feedback in geography. Education Sciences, 9(3), 178. https://doi.org/10.3390/educsci9030178

- Nelson, M. M., & Schunn, C. D. (2009). The nature of feedback: How different types of peer feedback affect writing performance. Instructional Science, 37(4), 375–401. https://doi.org/10.1007/s11251-008-9053-x

- Noroozi, O. (2013). Fostering argumentation-based computer-supported collaborative learning in higher education. PhD dissertation, Wageningen University and Research, the Netherlands. https://edepot.wur.nl/242736

- Noroozi, O., Banihashem, S. K., & Biemans, H. J. (2022a). Online supported peer feedback tool for argumentative essay writing: Does course domain knowledge matter? In Weinberger, Chen, Hernandez-Leo, & Chen (Eds.), 15th International Conference on Computer-Supported Collaborative Learning 2022 (pp. 356–358). International Society of the Learning Sciences.

- Noroozi, O., Banihashem, S. K., Taghizadeh Kerman, N., Parvaneh Akhteh Khaneh, M., Babayi, M., Ashrafi, H., & Biemans, H. J. (2022b). Gender differences in students’ argumentative essay writing, peer review performance, and uptake in online learning environments. Interactive Learning Environments, 1–15. https://doi.org/10.1080/10494820.2022.2034887

- Noroozi, O., Biemans, H., & Mulder, M. (2016). Relations between scripted online peer feedback processes and quality of written argumentative essay. The Internet and Higher Education, 31, 20–31. https://doi.org/10.1016/j.iheduc.2016.05.002

- Noroozi, O., Hatami, J., Bayat, A., van Ginkel, S., Biemans, H. J., & Mulder, M. (2020). Students’ online argumentative peer feedback, essay writing, and content learning: Does gender matter? Interactive Learning Environments, 28(6), 698–712. https://doi.org/10.1080/10494820.2018.1543200

- Noroozi, O., Kirschner, P., Biemans, H. J. A., & Mulder, M. (2018). Promoting argumentation competence: Extending from first- to second-order scaffolding through adaptive fading. Educational Psychology Review, 30(1), 153–176. https://doi.org/10.1007/s10648-017-9400-z

- Noroozi, O., & Mulder, M. (2017). Design and evaluation of a digital module with guided peer feedback for student learning biotechnology and molecular life sciences, attitudinal change, and satisfaction. Biochemistry and Molecular Biology Education, 45(1), 31–39. https://doi.org/10.1002/bmb.20981

- Noroozi, O., Weinberger, A., Biemans, H. J. A., Mulder, M., & Chizari, M. (2013). Facilitating argumentative knowledge construction through a transactive discussion script in CSCL. Computers & Education, 61, 59–76. https://doi.org/10.1016/j.compedu.2012.08.013

- Orsmond, P., Merry, S., & Reiling, K. (2005). Biology students’ utilization of tutors’ formative feedback: A qualitative interview study. Assessment & Evaluation in Higher Education, 30(4), 369–386. https://doi.org/10.1080/02602930500099177

- Osborne, J. (2010). Arguing to learn in science: The role of collaborative, critical discourse. Science, 328(5977), 463–466. https://doi.org/10.1126/science.1183944

- Pashler, H., Bain, P. M., Bottge, B. A., Graesser, A., Koedinger, K. R., McDaniel, M., & Metcalfe, J. (2007). Organizing instruction and study to improve student learning: A practice guide. National Center for Education Research, U.S. Department of Education. https://files.eric.ed.gov/fulltext/ED498555.pdf

- Patchan, M. M., Schunn, C. D., & Correnti, R. J. (2016). The nature of feedback: How peer feedback features affect students’ implementation rate and quality of revisions. Journal of Educational Psychology, 108(8), 1098–1120. https://doi.org/10.1037/edu0000103

- Phielix, C., Prins, F. J., & Kirschner, P. A. (2010). Awareness of group performance in a CSCL-environment: Effects of peer feedback and reflection. Computers in Human Behavior, 26(2), 151–161. https://doi.org/10.1016/j.chb.2009.10.011

- Shang, H. F. (2017). An exploration of asynchronous and synchronous feedback modes in EFL writing. Journal of Computing in Higher Education, 29(3), 496–513. https://doi.org/10.1007/s12528-017-9154-0

- Shute, V. J. (2008). Focus on formative feedback. Review of Educational Research, 78(1), 153–189. https://doi.org/10.3102/0034654307313795

- Sweller, J., van Merriënboer, J. J. G., & Paas, F. G. W. C. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296. https://doi.org/10.1023/A:1022193728205

- Taghizadeh Kerman, N., Noroozi, O., Banihashem, S. K., & Biemans, H. J. A. (2022). The effects of students’ perceived usefulness and trustworthiness of peer feedback on learning satisfaction in online learning environments. In 8th international conference on higher education advances (HEAd’22), Universitat Politecnica de Valencia. https://doi.org/10.4995/HEAd22.2022.14445

- Tchounikine, P. (2008). Operationalizing macro-scripts in CSCL technological settings. International Journal of Computer-Supported Collaborative Learning, 3(2), 193–233. https://doi.org/10.1007/s11412-008-9039-3

- Topping, K. J. (2005). Trends in peer learning. Educational Psychology, 25(6), 631–645. https://doi.org/10.1080/01443410500345172

- Topping, K. J. (2009). Peer assessment. Theory Into Practice, 48(1), 20–27. https://doi.org/10.1080/00405840802577569

- Toulmin, S. E. (1958). The uses of argument. Cambridge University Press.

- Valero Haro, A., Noroozi, O., Biemans, H. J., & Mulder, M. (2019). The effects of an online learning environment with worked examples and peer feedback on students’ argumentative essay writing and domain-specific knowledge acquisition in the field of biotechnology. Journal of Biological Education, 53(4), 390–398. https://doi.org/10.1080/00219266.2018.1472132

- Van Gog, T., Paas, F., & Van Merriënboer, J. J. G. (2004). Process-oriented worked examples: Improving transfer performance through enhanced understanding. Instructional Science, 32(1), 83–98. https://doi.org/10.1023/B:TRUC.0000021810.70784.b0

- Van Popta, E., Kral, M., Camp, G., Martens, R. L., & Simons, P. R. J. (2017). Exploring the value of peer feedback in online learning for the provider. Educational Research Review, 20, 24–34. https://doi.org/10.1016/j.edurev.2016.10.003

- Wimshurst, K., & Manning, M. (2013). Feed-forward assessment, exemplars and peer marking: Evidence of efficacy. Assessment & Evaluation in Higher Education, 38(4), 451–465. https://doi.org/10.1080/02602938.2011.646236

- Wingate, U. (2012). ‘Argument!’ Helping students understand what essay writing is about. Journal of English for Academic Purposes, 11(2), 145–154. https://doi.org/10.1016/j.jeap.2011.11.001

- Wittwer, J., & Renkl, A. (2010). How effective are instructional explanations in example-based learning? A meta-analytic review. Educational Psychology Review, 22(4), 393–409. https://doi.org/10.1007/s10648-010-9136-5

- Wood, N. V. (2001). Perspectives on argument. Pearson. ISBN: 9780134392882.

- Wu, Y., & Schunn, C. D. (2021). From plans to actions: A process model for why feedback features influence feedback implementation. Instructional Science, 49(3), 365–394. https://doi.org/10.1007/s11251-021-09546-5

- Xie, K. (2013). What do the numbers say? The influence of motivation and peer feedback on students’ behaviour in online discussions. British Journal of Educational Technology, 44(2), 288–301. https://doi.org/10.1111/j.1467-8535.2012.01291.x

- Yang, Y. F. (2010). Students’ reflection on online self-correction and peer review to improve writing. Computers & Education, 55(3), 1202–1210. https://doi.org/10.1016/j.compedu.2010.05.017