Abstract

In this paper, we address the question of whether disinforming news spread online possesses the power to change the prevailing political circumstances during an election campaign. We highlight factors for believing disinformation that until now have received little attention, namely trust in news media and trust in politics. A panel survey in the context of the 2017 German parliamentary election (N = 989) shows that believing disinforming news had a specific impact on vote choice by alienating voters from the main governing party (i.e., the CDU/CSU), and driving them into the arms of right-wing populists (i.e., the AfD). Furthermore, we demonstrate that the less one trusts in news media and politics, the more one believes in online disinformation. Hence, we provide empirical evidence for Bennett and Livingston’s notion of a disinformation order, which forms in opposition to the established information system to disrupt democracy.

So-called “fake news” is not a novel phenomenon, but what certainly is new is its environment of dissemination. Digital and, especially, social media facilitate the widespread distribution of false assertions with a relatively professional layout at minimal cost. Such disinformation campaigns try to undermine the voters’ ability to make their decisions based on accurate beliefs about the political system. This involves a danger for the quality and legitimacy of the democratic process, as a well-informed electorate is essential for the collective autonomy of democracies.

In contrast, a study of “fake news” on social media in the 2016 US presidential election questions its impact on the outcome because of its limited reach (Allcott & Gentzkow, 2017). However, the authors did not empirically test this assumption of minimal effects. We therefore seek empirical clarification on whether disinformation spread online can change the prevailing political circumstances during an election campaign. Moreover, we broaden the understanding of the origins of such disinformation effects in a digital environment: Drawing on theories of social trust, we identify a lack of institutional trust in established news media and politics as a crucial reason why people believe fabricated news to be true.

To address these questions, we present a longitudinal study conducted during the 2017 German federal election. Germany is an appropriate research setting because it has been particularly affected by the European refugee situation, a major anchoring point for online disinformation. Additionally, its long-stable multiparty system has recently been in a state of flux: A new right-wing populist party (i.e., the AfD) has entered the political arena and was elected into the federal parliament for the first time in 2017, a development that could have been fostered by political disinformation disseminated online.

Disinforming News and the Disinformation Order

The term “fake news” has been repeatedly misused by politicians such as Donald Trump as a label to discredit traditional news media (Egelhofer & Lecheler, 2019), which impairs its scientific value. We therefore prefer the term disinforming news (or disnews) to indicate explicitly that it is a specific “species of disinformation” (Gelfert, 2018, p. 103; see also Marwick & Lewis, 2017, p. 44; Wardle & Derakhshan, 2017, p. 20). We define it as untruthful and empirically false news that pretends to be true (see Allcott & Gentzkow, 2017, p. 213). Because it is knowingly false, disinforming news clearly differs from inadvertent misinformation (e.g., honest mistakes) (Weedon, Nuland, & Stamos, 2017, p. 5). It is distinguished from other forms of disinformation (e.g., conspiracy theories) by its distinct news character: By applying news values such as unexpectedness and negativity as well as news formats, disnews purports to hold journalistic credibility (Lazer et al., 2018; Levy, 2017; Tandoc, Lim, & Ling, 2018a, p. 143). Our understanding of disinforming news covers not only propagandistic disinformation fabricated to manipulate (political) attitudes and behavior, but also clickbait disnews, which employs inaccurate information to generate advertising revenue (Allcott & Gentzkow, 2017, p. 217).

From a societal perspective, treating disnews as isolated falsehoods is insufficient. Instead, we agree with Bennett and Livingston (2018, p. 124) in framing the problem as the ongoing systematic division and disruption of democratic public spheres, aimed at destabilizing democratic institutions and processes (e.g., elections). In a similar vein, Lewandowsky, Ecker, and Cook (2017) suggest that disinformation should be embedded in a broader societal context, which they refer to as a post-truth world. In general, this term connotes that previously familiar mechanisms of knowledge production, with certain responsible actors and institutions (e.g., science, politics, and legacy media) on the one hand and corresponding publics on the other, are being fundamentally challenged (Gibson, 2018; Harsin, 2015).

In a post-truth era, a portion of society no longer adheres to the conventional principles of factual reasoning. Instead, these people seek to adopt a different way of viewing the world (Lewandowsky et al., 2017). The originators of disinformation take advantage of this development by creating “alternative information systems that block the mainstream press and provide followers with emotionally satisfying beliefs around which they can organize” (Bennett & Livingston, 2018, p. 132). Accordingly, we are confronted not simply with single pieces of disinformation but with a comprehensive disinformation order. In most countries, this network of disinformation builds on right-wing sentiments and narratives. In Germany, these narratives primarily attack and vilify (Muslim) immigrants, as the refugee situation has topped the national news agenda for a long time (Humprecht, 2019).

Institutional Mistrust as an Origin for Believing Disinforming News

Mere exposure to (dis)information does not necessarily translate into believing it, yet belief is a plausible precondition for a direct electoral effect of truth claims. Therefore, our study first concentrates on the (institutional) reasons for perceiving disnews as true. According to the theory of motivated reasoning, truth judgments are generally driven by two possibly conflicting motivations: the accuracy goal of trying to arrive at a correct conclusion, and the directional goal of arriving at a previously desired outcome. Notably, there is evidence that individuals are more likely to pursue the latter (Kunda, 1990).

People evaluate (political) statements in the light of their predispositions, so that factual beliefs align with their (political) stances (Bartels, 2002). Numerous studies have confirmed this partisan, or confirmation, bias in truth judgments (Reedy, Wells, & Gastil, 2014; Swire, Berinsky, Lewandowsky, & Ecker, 2017). For example, people tend to believe conspiracy theories that correspond to their political attitudes (Swami, 2012; Uscinski, Klofstad, & Atkinson, 2016). Furthermore, selective exposure to partisan (news) media and their content can evoke misperceptions in line with the user’s views (Meirick & Bessarabova, 2016). This holds especially true in online environments, where audiences have a larger choice of attitude-consistent messages (Winter, Metzger, & Flanagin, 2016). Taken together, this research suggests that political ideology is one of the most important predictors of the perceived truthfulness of online disinforming news (Allcott & Gentzkow, 2017).

Another bias that influences truth judgments, and thereby produces misperceptions, is the so-called truth effect (Hasher, Goldstein, & Toppino, 1977): People ascribe more credibility to assertions that they have heard, read, or seen repeatedly. This effect has been demonstrated many times in cognitive psychology as well as in political communication (Dechêne, Stahl, Hansen, & Wänke, 2010; Ernst, Kühne, & Wirth, 2017; Koch & Zerback, 2013). Accordingly, mere exposure to disnews could increase its believability. Indeed, DiFonzo, Beckstead, Stupak, and Walders (2016) found that the repeated presentation of uncertain rumors affected participants’ validity judgments. Likewise, exposure to false news stories seems to enhance their familiarity and perceived accuracy, even when controlling for correction and political ideology (Pennycook, Cannon, & Rand, 2017; Polage, 2012).

(News) media literacy is also a crucial factor in promoting accurate truth judgments. Especially in the online context, media literacy facilitates the authentication of true news and the rejection of false news (Tandoc et al., 2018b). In line with this, Craft, Ashley, and Maksl (2017) demonstrate that greater knowledge about news media is associated with fewer endorsements of conspiracy theories, even when these match political views. Likewise, findings by Kahne and Bowyer (2017) indicate that media literacy helps people assess the veracity of simulated online posts.

In the following, we highlight reasons for believing disnews that have so far received insufficient attention. According to Bennett and Livingston (2018, p. 127), the breakdown of trust in democratic institutions is the central origin that paves the way for the disinformation order. Alternative realities in a post-truth world “emerge from, and take advantage of … a loss of faith in institutions that anchor truth claims” (Gibson, 2018, p. 3180).

In general, the importance of institutional trust is rooted in the differentiation of expert systems in modern society, for example science or law (Giddens, 1990). Whereas we can hardly avoid relying on these systems, we face the continuous risk that our expectations toward them will not be met, or will even be disappointed. Trust is a social mechanism to deal with this risk (Luhmann, 1979). Thus, to trust means that an actor delegates responsibility for a certain action to another actor, knowing that this actor might fail to meet their expectations (Barber, 1983; Simmel, 2004, pp. 173–181).

We can also consider the news media and politics to be such expert systems. Trust in news media refers to the expectation that the news media supply their publics with specific information that helps users orient themselves in a complex and otherwise unmanageable society (Kohring & Matthes, 2007). Accordingly, the need for orientation has been shown to be an important driver of people’s media use for political information and of corresponding media effects (Matthes, 2005; Weaver, 1980). The established news media can satisfy a user’s need for orientation, but only if they are trusted to convey useful political information. In contrast, mistrust toward mainstream media prompts a switch to nonmainstream or alternative media (Tsfati & Peri, 2006). Online media in particular seem to be the perfect venue for mistrustful publics in search of alternative information about the political system (Marwick & Lewis, 2017, pp. 40–41; Tsfati, 2010). At the same time, the digital media environment is an ideal breeding ground for disinformation. As a counter-public to the established information system, the disinformation order offers “facts” that skeptics believe have been missing from the mainstream media all along. People who do not trust the news media should be inclined to believe these “alternative facts” precisely because they dissent from mainstream media coverage, thereby satisfying a need for counter-orientation.

H1: The less the trust in traditional news media, the higher the perceived believability of disinforming news spread online during the German election campaign.

Furthermore, political mistrust should play an important role in believing disinformation. In general, trust in politics refers to “the probability … that the political system (or some part of it) will produce preferred outcomes even if left untended” (Gamson, 1968, p. 54; Easton, 1975, p. 447). It is deemed an indispensable resource for the political system (Easton, 1975, pp. 447–448; Hetherington, 2005). In turn, mistrustful citizens doubt the political system’s capability to make the right decisions. They might even expect the government and the mainstream parties to worsen the problems that society faces (Citrin & Stoker, 2018; Cook & Gronke, 2005). The disinformation order tries to nurture such feelings of mistrust by painting an extremely negative picture of established democratic officials and institutions and by fabricating stories about political malfunctions. According to the theory of motivated reasoning, people who do not trust the political system should tend to believe disinforming news, as it confirms their mistrust.

H2: The less the trust in the political system, the higher the perceived believability of disinforming news spread online during the German election campaign.

Electoral Consequences of Disinforming News

There is reasonable doubt about a comprehensive influence of disnews on the election result, given that disinformation appears to make up only a small share of users’ overall media diet on average (Allcott & Gentzkow, 2017; Grinberg, Joseph, Friedland, Swire-Thompson, & Lazer, 2019). Nevertheless, this aggregate view obscures the fact that exposure to disinformation is extremely concentrated in specific parts of the population, such as elderly and conservative people (Grinberg et al., 2019; Guess, Nyhan, & Reifler, 2018). Because some segments of the population are highly exposed to disnews, exposure within these segments can act as a gateway for the disruptive influence of online disinformation. Hence, to address its direct influence on vote choice, one has to focus on the individual rather than the aggregate level. Moreover, we assume that it is not mere exposure but believing disinformation that makes the difference in people’s vote decisions.

In fact, studies show that distorted beliefs about a political issue can influence people’s vote on a ballot question concerning that issue, even when controlling for preexisting views and political sophistication (Reedy et al., 2014; Wells, Reedy, Gastil, & Lee, 2009). Likewise, there is evidence that voting to leave the European Union in the British referendum (i.e., “Brexit”) was associated with the endorsement of Islamophobic conspiracy theories (Swami, Barron, Weis, & Furnham, 2018). A similar pattern applies to presidential elections. Barrera, Guriev, Henry, and Zhuravskaya (2018) demonstrate that exposure to misleading statements regarding the European refugee situation significantly increased voting intentions for the extreme right-wing candidate Le Pen. Additionally, people who believed false rumors about particular candidates in the 2008 U.S. presidential election were less likely to vote for those candidates (Weeks & Garrett, 2014).

Because previous studies employed cross-sectional research designs, the causal direction of this relationship remains unclear. In addition, most of these investigations deal with other forms of inaccurate information (e.g., misinformation, conspiracy theories, and rumors) rather than disinforming news. We nevertheless build on these findings, as we are concerned with a closely related phenomenon. Hence, we suppose that believing disnews should also affect the outcome of parliamentary elections based on proportional representation, such as in Germany. Here, a causal impact at the individual level would manifest as a change in the probability of voting for a given party. The question, however, is in which direction individual vote choice shifts in reaction to political disinformation. This obviously depends on the ideological orientation of the disinformation, as the framing of news has been shown to affect individual vote decisions in line with a frame’s leaning (Van Spanje & de Vreese, 2014). As mentioned before, disnews articles in online media in Germany are overwhelmingly xenophobic. Such negative framing of immigrants (e.g., as criminal foreigners) prompts negative attitudes toward immigration and its consequences and raises the salience of immigration as a problem that the political system does not appropriately address (Barrera et al., 2018; Igartua & Cheng, 2009).

There are three ways for people to express such political disaffection at the ballot box: First, voters could nonetheless stay loyal to the established political system and vote for one of the mainstream parties. Second, citizens could voice their dissatisfaction by casting their votes for a right-wing populist or extremist party. Third, they could exit the party system entirely by abstaining from the vote (Hirschman, 1970; Hooghe, Marien, & Pauwels, 2011). With no compulsory voting and a new populist party on the rise, both exit and voice were viable options in the 2017 German election. Hence, loyalty does not seem a plausible electoral consequence of believing disinformation. Rather, believing disinformation should prompt people to turn away from the parties representing the established political system (i.e., CDU/CSU, SPD, FDP, Green Party, and Left Party), which disinformation portrays as incapable of solving the refugee situation.

H3a: Higher perceived believability of disinforming news spread online during the German election campaign decreases the likelihood of voting for a mainstream political party.

At the same time, disinformation should promote voice in terms of supporting the “Alternative for Germany” (AfD), which was the most promising right-wing party in Germany according to the polls. As its campaign mainly focused on criticizing (political) elites as well as Islam, the AfD seemed to be the perfect incarnation of political protest in the German context.

H3b: Higher perceived believability of disinforming news spread online during the German election campaign increases the likelihood of voting for the right-wing party AfD.

Lastly, not participating in the election at all (exit) could be another possible outcome of believing online disinformation. However, taking the rather disruptive and remonstrative character of the disinformation order into account, it is debatable whether it induces abstention.

RQ1: Does higher perceived believability of disinforming news spread online during the German election campaign increase the likelihood of abstaining from the vote?

Method

Participants and Design

Our study addressed the institutional antecedents and electoral consequences of disnews using data from a three-wave panel survey conducted during the campaign for the German parliamentary election in fall 2017. The data were collected around two months before the election (Wave 1: July 31–August 8, 2017), shortly after the television debate between the two candidates for the chancellorship (Wave 2: September 4–12, 2017), and right after election day (Wave 3: September 25–28, 2017).

The fieldwork was performed by the German research company “respondi AG.” A quota sample was drawn from the “respondi” online access panel.Footnote1 Quotas representative of the electorate for gender, age, education, and federal state were applied in sampling. Initially, a total of 2,301 people were approached. After removing careless responders and lurkers, identified by a quality-check question, response time, and the amount of missing values, we obtained an adjusted sample of 1,664 respondents in the first wave (American Association for Public Opinion Research [AAPOR] RR1: 72.3%). Of these, 1,267 also took part in Wave 2 (recontact rate AAPOR RR1: 76.1%), and 989 eventually completed the questionnaire in Wave 3 (recontact rate AAPOR RR1: 78.1%). Our analyses are based only on participants who took part in all three waves (N = 989).
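The reported percentages can be checked as simple ratios of consecutive sample sizes. This sketch assumes that each rate is the number of completers divided by the number of people invited at that stage. The full AAPOR RR1 definition also accounts for cases of unknown eligibility, which the text does not report, so this is only an arithmetic check, not a reimplementation of AAPOR's definitions.

```python
def rate(completed: int, base: int) -> float:
    """Percentage of `base` that completed, rounded to one decimal place."""
    return round(100 * completed / base, 1)


# Sample sizes as reported in the text
approached, wave1, wave2, wave3 = 2301, 1664, 1267, 989

rates = {
    "Wave 1 response rate": rate(wave1, approached),
    "Wave 2 recontact rate": rate(wave2, wave1),
    "Wave 3 recontact rate": rate(wave3, wave2),
    # Derived here for illustration; not a figure reported by the authors
    "Retention from Wave 1 to Wave 3": rate(wave3, wave1),
}

for label, value in rates.items():
    print(f"{label}: {value}%")
```

The first three values reproduce the reported 72.3%, 76.1%, and 78.1%. The last line is derived arithmetic: roughly 59% of the adjusted Wave 1 sample completed all three waves.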

On average, this final sample did not differ significantly from the full Wave 1 sample with respect to gender (female: 48.4%, male: 51.6%), education (low: 34.1%, medium: 34.7%, high: 31.2%), political ideology, and the degree of believing disinforming news. Only age differed: Respondents who took part in all three waves were slightly older (Mage = 48.18, SD = 13.49). Because these differences were very small, we assume that our findings are not biased by panel attrition.

Measures

Disnews Exposure and Placebo News Recall

To acquire a stock of disnews articles that circulated online during the election campaign, we applied an approach introduced by Allcott and Gentzkow (2017, pp. 219–220): We repeatedly searched the major German-language fact-checking websites (e.g., “correctiv.org,” “faktenfinder.tagesschau.de,” “mimikama.at”) and gathered a variety of recent stories on political issues that had been identified as deliberately and verifiably false. As expected, nearly all disnews stories in Germany contained right-wing implications, such as skepticism toward the European Union (e.g., “The European Union is going to abolish cash money starting in 2018.”), attacks on politicians (e.g., “The father of the candidate for chancellorship Martin Schulz was a captain of the SS and commander of the concentration camp Mauthausen.”), and above all the exclusion of migrants and refugees (e.g., “Refugees from Arabia cause hepatitis A epidemic across Europe.”).Footnote2 We were careful to represent this spectrum of narratives as precisely as possible in our selection. For each wave, we also made sure to pick disnews that fact-checkers reported as having triggered significant online resonance in the period before that wave (e.g., disnews for Wave 2 were released between Wave 1 and Wave 2). Finally, we condensed each story’s message into a meaningful headline. We also fabricated some placebo disnews, which conveyed similar right-wing narratives but never circulated online. These placebo items were meant to control for a possible inflation of the disnews scores in the subsequent analysis (Allcott & Gentzkow, 2017, p. 220). In addition, we mixed in some true news headlines on the same topics to distract the participants, thereby preventing bias caused by showing only false assertions.

At each wave, we presented these headlines to our respondents (T1: six disnews, four placebos; T2: six disnews, five placebos; T3: seven disnews, five placebos). For each statement, we asked whether they had already encountered it and how they assessed its truthfulness. Responses to the former question were summed across all three waves into two separate scores, one for disnews and one for placebo items, and dichotomized, with 0 = “no disnews exposure” and 1 = “disnews exposure”, as well as 0 = “no placebo news recall” and 1 = “placebo news recall” (disnews exposure: 57.1%; placebo news recall: 47.7%).
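The dichotomization can be sketched as follows. We assume that a respondent counts as exposed if they recognized at least one disnews headline in any wave. This reading is consistent with the reported figures, since the 57.1% exposure share and the 42.9% who saw no false stories at all sum to 100%. The respondent data below are hypothetical.

```python
def dichotomize(recognized_per_wave: list[int]) -> int:
    """1 if at least one headline was recognized across all waves, else 0 (assumed threshold)."""
    return 1 if sum(recognized_per_wave) > 0 else 0


# Hypothetical respondents: number of disnews headlines recognized at T1, T2, T3
respondents = [[0, 0, 0], [1, 0, 2], [0, 0, 1], [0, 0, 0]]

exposure = [dichotomize(r) for r in respondents]
share_exposed = 100 * sum(exposure) / len(exposure)
print(exposure, f"{share_exposed:.1f}%")
```

The same function applies unchanged to the placebo recall counts.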

Disinforming and Placebo News Believability

Perceived believability of the disnews and placebo messages was measured on a 5-point scale ranging from 0 “certainly false” to 4 “certainly true”. For each point in time, we calculated a composite score by averaging the believability ratings of all disnews items, so that the variable reflects the average believability of disnews articles in the respective time period. Per wave, the items showed acceptable internal consistency (T1: M = 1.94, SD = .73, Cronbach’s α = .66; T2: M = 1.44, SD = .76, Cronbach’s α = .73; T3: M = 1.47, SD = .72, Cronbach’s α = .76). In contrast, all placebo news items were condensed into a single composite score across all waves (M = 1.77, SD = .58, Cronbach’s α = .78).
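The composite score and its internal consistency can be illustrated with the standard Cronbach's alpha formula, α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). This is a minimal sketch on hypothetical ratings; it does not reproduce the reported values, which would require the original item data.

```python
from statistics import mean, variance


def composite(ratings: list[float]) -> float:
    """Average believability across one respondent's items (0 = certainly false, 4 = certainly true)."""
    return mean(ratings)


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][j] is respondent j's rating on item i."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's sum score
    item_variance = sum(variance(item) for item in items)  # sample variances (n - 1)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Hypothetical ratings: two items answered by four respondents
items = [[1, 2, 3, 4], [2, 1, 4, 3]]

alpha = cronbach_alpha(items)
respondent_scores = [composite(list(r)) for r in zip(*items)]
print(round(alpha, 2), respondent_scores)
```

Averaging rather than summing keeps each composite on the original 0 to 4 metric, which is why the reported wave means can be read directly against the response scale.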

Trust in Traditional News Media

We applied a scale introduced by Kohring and Matthes (2007; see also Prochazka & Schweiger, 2019) to measure trust in traditional news media at T1, T2, and T3. On a seven-point scale (1 = “not correct at all,” 7 = “fully correct”), respondents rated whether they considered several statements about news coverage of politics correct. The original scale consists of the four subscales “trust in selectivity of topics,” “trust in selectivity of facts,” “trust in accuracy of depictions,” and “trust in journalistic assessment.” To keep our statistical model as parsimonious as possible, we employed a short version that includes only the highest-loading item of each subscale. Consequently, four (reflective) indicators (e.g., “The information in the reporting would be verifiable if examined.”) formed a latent factor “trust in traditional news media” per wave (T1: AVE = .66, Jöreskog’s Rho = .89; T2: AVE = .70, Jöreskog’s Rho = .90; T3: AVE = .69, Jöreskog’s Rho = .90).
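Both reported statistics can be computed from standardized factor loadings. The sketch below uses the usual formulas: AVE is the mean squared loading, and Jöreskog's Rho (composite reliability) is (Σλ)² / ((Σλ)² + Σ(1 − λ²)). It assumes fully standardized loadings with error variances of 1 − λ², and the loadings themselves are hypothetical, not the estimates from this study.

```python
def ave(loadings: list[float]) -> float:
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def joreskog_rho(loadings: list[float]) -> float:
    """Composite reliability, assuming standardized loadings and error variances of 1 - lam**2."""
    squared_sum = sum(loadings) ** 2
    error = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error)


# Hypothetical standardized loadings for four indicators of one latent factor
loadings = [0.85, 0.80, 0.82, 0.78]
print(round(ave(loadings), 2), round(joreskog_rho(loadings), 2))
```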

Trust in Politics

The scale “trust in politics” was measured at T1, T2, and T3 with four items. The indicators mirror the phases of the policy cycle, namely agenda setting, policy formulation, policy adoption, and policy implementation. Respondents were asked to what extent they agreed with four statements (e.g., “In general, one can rely on politics to make the right decisions.”) on a seven-point scale (1 = “not correct at all,” 7 = “fully correct”). The last item (i.e., “Frequently, political decisions are not implemented properly afterward.”) had to be removed due to its poor loading (see results). The latent factors derived from the remaining three indicators performed well in terms of average variance extracted and reliability in each wave (T1: AVE = .66, Jöreskog’s Rho = .85; T2: AVE = .67, Jöreskog’s Rho = .86; T3: AVE = .67, Jöreskog’s Rho = .86).

Voting Intention and Vote Choice

To assess change over time, we collected data on respondents’ voting intention at T1 and their actual vote choice at T3. Voting intention was dichotomized into five dummy variables for the CDU/CSU, the SPD, the mainstream opposition parties (comprising the Green Party, the liberal FDP, and the Left Party), the AfD, and the undecided.Footnote3 Vote choice at T3 was recoded into a nominal variable with five categories: CDU/CSU, SPD, mainstream opposition parties, AfD, and abstention.
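The recoding of voting intention can be sketched as a mapping from raw answers to the five groups, followed by one dummy per group. The answer labels are hypothetical. The handling of answers outside these groups (e.g., small parties) is also an assumption: here they simply receive all-zero dummies, which may differ from the authors' treatment.

```python
# Hypothetical raw answer labels mapped to the five analysis groups
GROUP_OF = {
    "CDU/CSU": "CDU/CSU",
    "SPD": "SPD",
    "Greens": "Mainstream opposition",
    "FDP": "Mainstream opposition",
    "Left": "Mainstream opposition",
    "AfD": "AfD",
    "Undecided": "Undecided",
}
CATEGORIES = ["CDU/CSU", "SPD", "Mainstream opposition", "AfD", "Undecided"]


def intention_dummies(answer: str) -> dict[str, int]:
    """One 0/1 dummy per group; unknown answers get all zeros (assumption)."""
    group = GROUP_OF.get(answer)
    return {category: int(category == group) for category in CATEGORIES}


print(intention_dummies("FDP"))
```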

Controls

Based on our literature review, we included several potential confounders in the T1 questionnaire in addition to our focal variables. Besides the demographics gender, age, and education, “political ideology” was measured on a 10-point scale ranging from 1 “left-leaning” to 10 “right-leaning” (M = 5.06, SD = 2.00). To account for a potential truth effect of disnews spread online, we asked respondents about their “social media news use” with three items on a 5-point scale (1 = “never,” 5 = “very often”) by Choi (2016). These were combined into a composite score with high internal consistency (M = 2.37, SD = 1.21, Cronbach’s α = .93). Additionally, we asked about “traditional news media use” “on TV,” “on the radio,” “in printed newspapers or magazines,” and “on websites of established news media outlets” (M = 4.36, SD = 1.76, Cronbach’s α = .59). Finally, the composite score “news media literacy” was formed from four items capturing a respondent’s ability to assess and handle the relevance, amount, substance, and rationale of news on a 7-point scale (M = 3.64, SD = 1.16, Cronbach’s α = .69).

Results

Preliminary Analysis

Before studying the impact of disnews during the German election, we first had to test whether exposure to online disnews articles had occurred at all. On average, respondents had encountered 2.19 (SD = 3.23; 95% CI: 1.99 to 2.39) of the 19 disinforming news headlines presented to them over all three waves. That equals 11.5%, which seems low at first glance. Given that participants also came across only 15.9% of our true news headlines, however, the amount of false news exposure seems quite substantial. Disnews exposure was only slightly higher than the (necessarily false) average placebo news recall (10.9%). Because this difference was statistically significant (ΔM [988] = .006, p = .03), there nevertheless appears to have been some meaningful exposure to disinformation during the campaign period.Footnote4
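The reported confidence intervals and the exposure share follow from the summary statistics. This sketch assumes a normal-approximation interval, M ± 1.96 · SD / √N. With N = 989, a t-based interval would be practically identical.

```python
from math import sqrt


def mean_ci(m: float, sd: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation 95% confidence interval for a mean."""
    half_width = z * sd / sqrt(n)
    return m - half_width, m + half_width


n = 989
low, high = mean_ci(2.19, 3.23, n)                  # disnews headlines recognized
share = 2.19 / 19                                   # share of the 19 disnews headlines
belief_low, belief_high = mean_ci(1.61, 0.62, n)    # disnews believability across waves

print(f"Exposure 95% CI: {low:.2f} to {high:.2f}; share: {share:.1%}")
print(f"Believability 95% CI: {belief_low:.2f} to {belief_high:.2f}")
```

Both intervals match those reported in the text (1.99 to 2.39 and 1.57 to 1.65), as does the 11.5% share.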

Moreover, although the frequency distribution is strongly right-skewed and zero-inflated, it shows a “long tail” of exposure to disinforming news (see the figure, left panel). Most people saw no false stories at all (42.9%), but some came across a large number during the election campaign: Eighteen percent of our participants reported exposure to five or more disnews articles. Hence, although exposure may be low on average, an individual’s exposure may still be high. Unlike exposure, disinformation beliefs across all waves were approximately normally distributed (M = 1.61, SD = .62; 95% CI: 1.57 to 1.65), meaning that most people neither strongly believed nor strongly disbelieved the false messages that had circulated online (see the figure, right panel).

Measurement Model

To test our main assumptions, we performed structural equation modeling (SEM) using the software Mplus (Muthén & Muthén, 2015). We followed the procedure that Cole and Maxwell (2003, pp. 570–572) suggest for using SEM to test mediational processes in longitudinal designs. Accordingly, we first conducted a confirmatory factor analysis (CFA) using maximum likelihood estimation with robust standard errors (MLR) to assess the adequacy of our measures. The latent constructs were modeled as causing their respective indicators (reflective measures). The measurement model comprised our focal variables “trust in traditional news media” and “trust in politics” at all three points in time. All six latent exogenous variables in the model were allowed to correlate.

The initial model fit the data poorly.Footnote5 We modified it by (a) removing an indicator of the “political trust” factors due to poor loadings, (b) adding a residual correlation between the first two items of “trust in traditional news media,” and (c) allowing the disturbances of corresponding indicators to correlate across time. These changes improved the global fit of the model (see Kline, 2016). Although the χ2 test was significant, χ2 (150) = 314.985, p < .001, which is expected given the large sample size, the approximate fit indices, which are less sensitive to sample size, indicated a good global fit: χ2/df = 2.10, TLI = .98, CFI = .99, RMSEA = .03 (90% CI: .028 to .038).
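Two of the reported indices can be recomputed from χ2, df, and N. The sketch uses the conventional RMSEA point estimate √(max(χ2 − df, 0) / (df · (N − 1))). Mplus may use N instead of N − 1 and a scaled χ2 under MLR, so small discrepancies from the printed value are expected. TLI and CFI would additionally require the baseline model's χ2, which the text does not report.

```python
from math import sqrt


def fit_summary(chi2: float, df: int, n: int) -> tuple[float, float]:
    """Return chi2/df and the RMSEA point estimate sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    ratio = chi2 / df
    rmsea = sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))
    return ratio, rmsea


# Modified measurement model as reported in the text
ratio, rmsea = fit_summary(314.985, 150, 989)
print(f"chi2/df = {ratio:.2f}, RMSEA = {rmsea:.3f}")
```

The recomputed χ2/df of 2.10 matches the text, and the RMSEA of about .033 lies inside the reported 90% CI of .028 to .038.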

Regarding local fit, all standardized factor loadings were above .50 and significant (see Table B2 in the online appendix). The average variance extracted and the reliability of each factor exceeded .60, indicating sufficient convergent validity (see Byrne, 2012, pp. 77–82). Discriminant validity among the factors was also tested and confirmed using the Fornell-Larcker criterion (Fornell & Larcker, 1981). Moreover, a model with all indicators loading on a single latent factor fit the data worse, which speaks against a joint measure of institutional trust. We examined factorial measurement invariance over time (see Widaman & Reise, 1997) by comparing the model with increasingly restricted versions through chi-square difference testing, and accepted configural, weak (equal loadings), partial strong (equal intercepts), and strict (equal residual variances) invariance of our measures (see Table B1 in the online appendix).
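The Fornell-Larcker criterion holds for a pair of factors if the square root of each factor's AVE exceeds the absolute correlation between them. The AVEs below are the T1 values reported in the Measures section. The latent correlation is hypothetical, since the text does not report it.

```python
from math import sqrt


def fornell_larcker(ave: dict[str, float], corr: dict[tuple[str, str], float]) -> dict[tuple[str, str], bool]:
    """True for each factor pair if both sqrt(AVE) values exceed the absolute latent correlation."""
    return {
        (a, b): min(sqrt(ave[a]), sqrt(ave[b])) > abs(r)
        for (a, b), r in corr.items()
    }


ave = {"media_T1": 0.66, "politics_T1": 0.66}   # T1 AVEs reported in the text
corr = {("media_T1", "politics_T1"): 0.45}      # hypothetical latent correlation

result = fornell_larcker(ave, corr)
print(result)
```

Comparing √AVE with |r| is equivalent to the original formulation, which compares AVE with the squared correlation.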

Table 1. Multinomial logistic regression on individual vote choice (T3)

Table 2. Effects of disnews believability on the probability of voting for a specific party

Main Results

After validating our measures, we turn to our first structural model. To exploit the panel design of our study, we specified it as an autoregressive model with cross-lagged effects (Cole & Maxwell, 2003; Finkel, 1995; Jöreskog, 1979). This involves including lagged variables in the model, which yields two types of relationships: autoregressive and cross-lagged. The autoregressive paths (i.e., Yₜ on Yₜ₋₁) express the stability of a variable over time. The cross-lagged paths (i.e., Yₜ on Xₜ₋₁) represent the association between another variable X at one wave and Y at the next, controlling for the stability of Y. A cross-lagged panel model (CLPM) therefore provides some, though not necessarily sufficient, evidence of a causal relationship between X and Y.
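To make the two path types concrete, the following Python sketch simulates two waves of hypothetical data and recovers the autoregressive and cross-lagged coefficients by ordinary least squares. It simplifies under stated assumptions: observed rather than latent variables, only two waves, and made-up population values (a stability of 0.6 for Y, a cross-lagged effect of −0.2 from X to Y, and no effect from Y to X). For a just-identified recursive model of this kind, equation-by-equation OLS should give the same point estimates as ML estimation in SEM software.

```python
import random
from typing import List, Tuple


def ols_two_predictors(y: List[float], x1: List[float],
                       x2: List[float]) -> Tuple[float, float]:
    """OLS slopes of y on x1 and x2 (intercept handled by centering)."""
    n = len(y)
    my, m1, m2 = sum(y) / n, sum(x1) / n, sum(x2) / n
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


# Hypothetical data: X = trust in news media, Y = disnews believability.
rng = random.Random(2017)
x1, y1, x2, y2 = [], [], [], []
for _ in range(5000):
    x_t1 = rng.gauss(0, 1)
    y_t1 = -0.3 * x_t1 + rng.gauss(0, 1)          # within-wave correlation at T1
    x1.append(x_t1)
    y1.append(y_t1)
    x2.append(0.7 * x_t1 + 0.0 * y_t1 + rng.gauss(0, 1))   # no Y -> X effect
    y2.append(0.6 * y_t1 - 0.2 * x_t1 + rng.gauss(0, 1))   # X -> Y cross-lag

y_stability, x_to_y = ols_two_predictors(y2, y1, x1)   # Y(T2) on Y(T1), X(T1)
x_stability, y_to_x = ols_two_predictors(x2, x1, y1)   # X(T2) on X(T1), Y(T1)
print(f"Y autoregressive = {y_stability:.2f}, X->Y cross-lagged = {x_to_y:.2f}")
print(f"X autoregressive = {x_stability:.2f}, Y->X cross-lagged = {y_to_x:.2f}")
```

Estimating both directions side by side mirrors the comparison between the chosen model and the reversed model: only the X → Y cross-lag should differ noticeably from zero here.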

Here, we included our focal variables “trust in traditional news media,” “trust in politics,” and “disnews believability” at T1, T2, and T3. In our full CLPM, every upstream variable had a direct effect on every downstream variable, and all exogenous variables as well as the residuals of all endogenous variables were allowed to correlate within each wave. We then added the controls “gender,” “age,” “education,” “political ideology,” “social media news use,” “traditional news media use,” “news media literacy,” “disnews exposure,” and “placebo news recall” as correlated exogenous variables with direct effects on the focal constructs.⁶

Starting from this baseline CLPM, we sought the most parsimonious model that still fit the data well. We therefore removed nonsignificant paths originating from the controls and fixed all wave-skipping effects (direct paths from T1 to T3) to zero, except for the autoregressive ones. We also removed all paths from “disnews believability” to the trust variables. These restrictions did not significantly worsen the model fit. Overall, this model fit the data well: χ²(427) = 759.726, p < .001, χ²/df = 1.78, TLI = .97, CFI = .98, RMSEA = .03 (90% CI: .025 to .032), SABIC = 64,813.791. We preferred it over a reversed model, in which the paths from the trust variables to “disnews believability” were removed instead, because the reversed model fit the data significantly worse (see Table B1 in the online appendix).
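Under MLR, the difference between two robust chi-square values is not itself chi-square distributed; a common remedy is the Satorra-Bentler scaled difference test, which uses the scaling correction factors Mplus prints for each model. The text does not state which difference test was used, so the Python sketch below is an assumption about how such nested comparisons can be carried out, not a description of the authors' computation, and all input values are hypothetical. The chi-square tail probability is computed with the standard library only.

```python
import math
from typing import Tuple


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square distribution with integer df.

    Builds the regularized upper incomplete gamma Q(df/2, x/2) from
    Q(1, y) = exp(-y) or Q(1/2, y) = erfc(sqrt(y)) via the recurrence
    Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    """
    if x <= 0:
        return 1.0
    y = x / 2.0
    a, q = (1.0, math.exp(-y)) if df % 2 == 0 else (0.5, math.erfc(math.sqrt(y)))
    while a < df / 2.0:
        q += math.exp(a * math.log(y) - y - math.lgamma(a + 1.0))
        a += 1.0
    return q


def scaled_chi2_difference(t0: float, df0: int, c0: float,
                           t1: float, df1: int, c1: float) -> Tuple[float, int, float]:
    """Satorra-Bentler (2001) scaled chi-square difference test.

    Model 0 is the restricted (nested) model, model 1 the less restricted
    one; t0/t1 are robust (MLR) chi-squares and c0/c1 their scaling
    correction factors. Returns (test statistic, df, p-value).
    """
    ddf = df0 - df1
    cd = (df0 * c0 - df1 * c1) / ddf   # can become negative in small samples
    trd = (t0 * c0 - t1 * c1) / cd
    return trd, ddf, chi2_sf(trd, ddf)


# Hypothetical comparison; none of these numbers come from the study.
stat, ddf, p = scaled_chi2_difference(t0=512.4, df0=300, c0=1.15,
                                      t1=498.9, df1=290, c1=1.16)
print(f"scaled chi2 difference({ddf}) = {stat:.2f}, p = {p:.3f}")
```

A nonsignificant result, as in this made-up example, is what "the restrictions did not significantly worsen the model fit" means in practice.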

To test our first two hypotheses, we estimated indirect effects from T1 to T3 using bootstrapping with 10,000 draws. Overall, the independent variables accounted for 53.4% of the variance in the central outcome “disnews believability” at T3 (see Figure 2). Over and above the autoregressive effect of the lagged dependent variable and several controls, “trust in news media” at T1 had a significant negative total effect (the sum of all nonspurious, time-specific effects) on “disnews believability” at T3 (B = −.08, β = −.11, SE = .03, p < .01 [95% CI: −.17 to −.05]). Likewise, the total effect of “trust in politics” at T1 on “disnews believability” at T3 was negative and significant (B = −.08, β = −.13, SE = .03, p < .01 [95% CI: −.20 to −.06]). Hence, the less people trust the established news media and politics, the more they tend to believe online disinformation to be true, supporting hypotheses H1 and H2.
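The bootstrap logic behind these tests can be illustrated on a single chain X → M → Y. The Python sketch below simulates hypothetical data (trust at T1, disnews believability at T2 and T3, with made-up path values of −0.5 and 0.6 and no direct wave-skipping path), estimates the indirect effect as the product of two OLS paths, and forms a percentile confidence interval from resampled cases. Because the direct T1 → T3 path is absent, the total effect here equals the indirect effect. It uses 1,000 draws for speed rather than the 10,000 reported above, and it is not the Mplus model itself, which sums several such paths.

```python
import random
from typing import List, Tuple


def slope(y: List[float], x: List[float]) -> float:
    """Simple OLS slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx


def partial_slope(y: List[float], x: List[float], z: List[float]) -> float:
    """OLS slope of y on x, controlling for z."""
    n = len(y)
    my, mx, mz = sum(y) / n, sum(x) / n, sum(z) / n
    sxx = sum((a - mx) ** 2 for a in x)
    szz = sum((c - mz) ** 2 for c in z)
    sxz = sum((a - mx) * (c - mz) for a, c in zip(x, z))
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    szy = sum((c - mz) * (b - my) for c, b in zip(z, y))
    return (szz * sxy - sxz * szy) / (sxx * szz - sxz * sxz)


def indirect_effect(x: List[float], m: List[float], y: List[float]) -> float:
    """Product of the paths x -> m and m -> y (the latter controlling for x)."""
    return slope(m, x) * partial_slope(y, m, x)


def percentile_ci(x: List[float], m: List[float], y: List[float],
                  draws: int = 1000, level: float = 0.95,
                  seed: int = 1) -> Tuple[float, float]:
    """Percentile bootstrap interval for the indirect effect (case resampling)."""
    rng = random.Random(seed)
    n = len(x)
    boot = []
    for _ in range(draws):
        idx = [rng.randrange(n) for _ in range(n)]
        boot.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx],
                                    [y[i] for i in idx]))
    boot.sort()
    alpha = (1.0 - level) / 2.0
    return boot[int(alpha * draws)], boot[int((1.0 - alpha) * draws) - 1]


# Hypothetical data: trust (T1) -> believability (T2) -> believability (T3).
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(400)]
m = [-0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [0.6 * mi + rng.gauss(0, 1) for mi in m]   # no direct x -> y path
point = indirect_effect(x, m, y)
lower, upper = percentile_ci(x, m, y)
print(f"indirect effect = {point:.3f}, 95% percentile CI [{lower:.3f}, {upper:.3f}]")
```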

Figure 2. Most parsimonious CLPM; manifest indicators, error terms, control variables, covariances between the variables, and wave-skipping autoregressions are omitted (N = 974). Note. The figure displays standardized regression coefficients; ns = not significant; †p < .10, *p < .05, **p < .01, ***p < .001; R² = coefficient of determination.

To test our further hypotheses, we estimated another structural equation model including our voting variables. It differed from the previous model in that the trust variables were included only at T1 and “disnews believability” only at T1 and T2. Vote choice at T3 was introduced as the central endogenous variable. Because vote choice is an unordered categorical outcome, we used multinomial logistic regression for parameter estimation. To capture changes in vote decisions over the course of the campaign, we also added voting intention at T1 (in the form of our dummy variables) as an exogenous predictor.⁷ Beyond the previously mentioned confounders, we also included “placebo news believability” to rule out spurious effects of “disnews believability” on voting behavior. Moreover, we used “disnews exposure” as a grouping variable to estimate separate effects for people who actually encountered online disinformation during the campaign.⁸

This model fit the data better than an intercept-only model without the predictors' effects on vote choice, and removing the nonsignificant paths did not significantly deteriorate the goodness of fit (see Table B1 in the online appendix). The McFadden pseudo-R² of .47 indicated high explanatory power (McFadden, 1974).⁹ Because vote choice at T3 comprises five categories, the multinomial logistic regression yields four sets of estimates. Each set represents the effect of a given independent variable on the log-odds of one outcome relative to a fixed base category (i.e., the CDU/CSU).
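With the CDU/CSU as base category, each of the four coefficient sets is a log-odds contrast against that category, and McFadden's pseudo-R² compares the log-likelihood of the fitted model with that of the intercept-only model. The Python sketch below illustrates both ideas; the coefficients and the log-likelihood values are hypothetical, and the intercept-only log-likelihood is computed from sample shares, which is its maximum-likelihood solution.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Coefs = Dict[str, Sequence[float]]


def mnl_probabilities(x: Sequence[float], coefs: Coefs, base: str) -> Dict[str, float]:
    """Category probabilities of a multinomial logit with a fixed base category.

    coefs maps each non-base category to (intercept, slope_1, ..., slope_k);
    the base category's linear predictor is fixed at 0, so every coefficient
    is a log-odds contrast against the base.
    """
    eta = {base: 0.0}
    for cat, b in coefs.items():
        eta[cat] = b[0] + sum(bj * xj for bj, xj in zip(b[1:], x))
    top = max(eta.values())                      # subtract max for stability
    expo = {c: math.exp(v - top) for c, v in eta.items()}
    total = sum(expo.values())
    return {c: v / total for c, v in expo.items()}


def log_likelihood(data: List[Tuple[Sequence[float], str]], coefs: Coefs, base: str) -> float:
    """Log-likelihood of observed choices under given coefficients."""
    return sum(math.log(mnl_probabilities(x, coefs, base)[y]) for x, y in data)


def null_log_likelihood(choices: List[str]) -> float:
    """Maximized log-likelihood of the intercept-only model (sample shares)."""
    n = len(choices)
    return sum(k * math.log(k / n) for k in Counter(choices).values())


def mcfadden_r2(ll_model: float, ll_null: float) -> float:
    """McFadden's pseudo-R2: 1 - LL(model) / LL(intercept-only)."""
    return 1.0 - ll_model / ll_null


# Hypothetical coefficients: (intercept, disnews believability).
base = "CDU/CSU"
coefs = {"SPD": (0.1, 0.8), "Opposition": (-0.2, 0.5),
         "AfD": (-1.5, 2.0), "Abstain": (-1.0, 1.4)}
probs = mnl_probabilities([1.0], coefs, base)
print({c: round(v, 3) for c, v in probs.items()})

# Hypothetical maximized log-likelihoods of a fitted and a null model.
print(f"McFadden pseudo-R2 = {mcfadden_r2(-700.0, -1000.0):.2f}")
```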

The results show high autoregressive estimates of voting intention on vote choice, indicating rather low volatility (see Table 1): most people who intended to vote for a party at the beginning of the campaign apparently cast their vote for that party. Nevertheless, believing disinformation had a significant effect on vote choice relative to the CDU/CSU.¹⁰ More precisely, a one-unit increase in “disnews believability” increased the odds of voting for the AfD rather than the CDU/CSU more than sevenfold (B = 2.03, OR = 7.62, SE = .71, p < .01). Similarly, the odds of voting for the SPD instead of the CDU/CSU increased about fivefold (B = 1.67, OR = 5.33, SE = .59, p < .01). The positive effects on voting for an established opposition party (B = 1.08, OR = 2.96, SE = .58, p = .06) and on abstaining (B = 1.42, OR = 4.13, SE = .86, p = .10) fell short of the 5% significance level. Taken together, the coefficients suggest that disinformation beliefs lower the odds of voting for the main governing party.
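The odds ratios and p-values above follow from the reported log-odds coefficients and standard errors: OR = exp(B), and a two-sided Wald test uses z = B/SE. The short Python check below recomputes them from the rounded values in the text. Small discrepancies, for example exp(2.03) ≈ 7.61 versus the reported 7.62, are expected because B is rounded to two decimals; the assumption that the p-values come from a normal-theory Wald test is ours.

```python
import math

# Log-odds coefficients (B) and standard errors of "disnews believability",
# contrasted with the base category CDU/CSU, as reported in the text.
reported = {
    "AfD": (2.03, 0.71),
    "SPD": (1.67, 0.59),
    "established opposition": (1.08, 0.58),
    "abstention": (1.42, 0.86),
}


def odds_ratio(b: float) -> float:
    """Odds ratio implied by a log-odds coefficient."""
    return math.exp(b)


def wald_p(b: float, se: float) -> float:
    """Two-sided p-value of the Wald z test, z = B / SE (normal approximation)."""
    return math.erfc(abs(b / se) / math.sqrt(2.0))


for outcome, (b, se) in reported.items():
    print(f"{outcome:<24} OR = {odds_ratio(b):.2f}  z = {b / se:.2f}  "
          f"p = {wald_p(b, se):.3f}")
```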

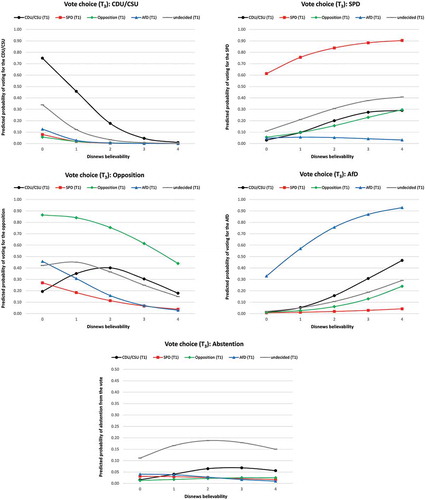

However, log-odds and odds ratios are hard to interpret on their own and can be misleading when it comes to statements about probabilities. We therefore calculated and plotted the predicted probabilities of voting for a specific party at varying levels of disnews believability to address our remaining hypotheses and research question. To obtain a more fine-grained picture, we grouped the probabilities by voting intention at T1 while holding all other covariates constant at their sample means or modes (see Figure 3). To estimate the average influence of believing disinformation on these probabilities, we also calculated the marginal effects (ME), including their standard errors, on a specific vote choice relative to voting intention at T1 (see Table 2).¹¹
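For a multinomial logit, the marginal effect of a covariate on the probability of category j is ∂Pⱼ/∂x = Pⱼ(βⱼ − Σₘ Pₘβₘ), with the base category's coefficients fixed at zero, so the sign of a marginal effect need not match the sign of the corresponding log-odds coefficient. The Python sketch below evaluates these effects for two intention groups, using hypothetical coefficients for disnews believability and a hypothetical dummy for an SPD voting intention at T1 (with a CDU/CSU intention as reference). It illustrates the formula only and is not the authors' estimation code.

```python
import math
from typing import Dict, Sequence

Coefs = Dict[str, Sequence[float]]


def probabilities(x: Sequence[float], coefs: Coefs, base: str) -> Dict[str, float]:
    """Multinomial-logit probabilities; the base category's predictor is 0."""
    eta = {base: 0.0}
    for cat, b in coefs.items():
        eta[cat] = b[0] + sum(bj * xj for bj, xj in zip(b[1:], x))
    top = max(eta.values())
    expo = {c: math.exp(v - top) for c, v in eta.items()}
    total = sum(expo.values())
    return {c: v / total for c, v in expo.items()}


def marginal_effects(x: Sequence[float], coefs: Coefs, base: str, k: int) -> Dict[str, float]:
    """Analytic dP_j/dx_k for every category j at covariate profile x.

    dP_j/dx_k = P_j * (beta_jk - sum_m P_m * beta_mk); coefs hold
    (intercept, slope for x[0], slope for x[1], ...), hence index k + 1.
    """
    p = probabilities(x, coefs, base)
    beta = {c: 0.0 if c == base else coefs[c][k + 1] for c in p}
    weighted = sum(p[c] * beta[c] for c in p)
    return {c: p[c] * (beta[c] - weighted) for c in p}


# Hypothetical coefficients: (intercept, disnews believability, intends SPD at T1).
base = "CDU/CSU"
coefs = {
    "SPD": (-1.0, 0.9, 3.0),
    "Opposition": (-1.5, 0.3, 0.5),
    "AfD": (-2.5, 1.4, 0.2),
    "Abstain": (-2.0, 0.6, 0.4),
}
believability_mean = 0.5   # hypothetical sample mean
for group, intends_spd in (("intended CDU/CSU", 0.0), ("intended SPD", 1.0)):
    me = marginal_effects([believability_mean, intends_spd], coefs, base, k=0)
    print(group, {c: round(v, 3) for c, v in me.items()})
```

The effects sum to zero across categories because the probabilities always sum to one; standard errors would additionally require the delta method or simulation.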

Figure 3. Probabilities of voting for a specific party against disnews believability, grouped by voting intention. Note. Predicted probabilities of voting for the CDU/CSU (top-left panel), the SPD (top-right panel), an opposition party (middle-left panel), the AfD (middle-right panel), and abstaining (lower panel) at varying levels of disnews believability; separate predictions for different voting intentions at T1, with all other covariates set at their sample means/modes; based on estimates from the multinomial logistic regression model in Table 1.

In hypothesis H3a, we claimed that believing disnews decreases the likelihood of voting for a mainstream political party. With regard to the CDU/CSU (Figure 3, top-left panel), this hypothesis appears to be supported: as the odds ratios already indicated, believing disinformation generally appears to reduce the probability of voting for the main governing party. However, the corresponding marginal effects were significant only for people who intended to vote for the CDU/CSU or were still undecided at T1. On average, a one-unit increase in disnews believability decreased their probability of voting for the CDU/CSU by 30.0 percentage points (SE = .11, p = .01) and 9.5 percentage points (SE = .04, p = .02), respectively. By contrast, we found no such tendency for the other governing party, the SPD (Figure 3, top-right panel). Surprisingly, disnews believability in fact increased the probability of voting for the SPD in most groups (except AfD supporters), although not significantly. In particular, former CDU/CSU supporters appeared more inclined to vote for the SPD the more they believed disinformation to be true; this marginal effect just missed the 5% significance level (ME = .105, SE = .06, p = .05). As expected, the effects of disinformation on the probability of voting for an established opposition party (Figure 3, middle-left panel) were mostly negative, but none was significant. Hence, H3a was confirmed only with regard to the main governing party, the CDU/CSU.

Hypothesis H3b predicted a positive effect of disnews beliefs on voting for the right-wing protest party. In general, the AfD indeed seems to benefit from disinformation beliefs (Figure 3, middle-right panel). However, the only significant gain in probability occurred among former CDU/CSU supporters: for them, a one-unit increase in disnews believability raised the probability of voting for the AfD by 9.9 percentage points (SE = .05, p = .05). Accordingly, H3b was partly corroborated, namely for voters initially leaning toward the CDU/CSU.

Finally, regarding RQ1, we found no evidence that disnews affected the probability of abstaining from the vote (Figure 3, lower panel). Regardless of voting intention, all slopes for abstention were flat, and the marginal effects were close to zero and far from significant.

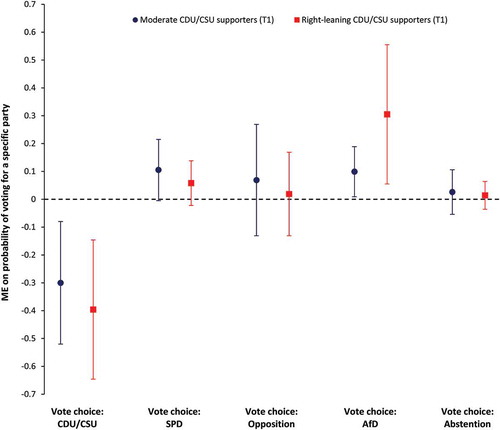

Overall, the most striking result is that former CDU/CSU supporters were less likely to vote for this party the more they believed disinformation. Instead, these voters tended to choose either the AfD or the SPD. To check the robustness of this finding, we re-estimated the marginal effects for the group that previous research identifies as most susceptible to online disnews, namely right-leaning voters.¹² The direction of all effects holds for this part of the electorate (see Table B4 in the online appendix). However, the effect of disnews on voting for the AfD becomes much stronger and more clearly significant for CDU/CSU supporters with right-wing attitudes, while the effect on voting for the SPD almost disappears in this group (see Figure 4). This indicates that right-leaning voters who had initially intended to vote for the CDU/CSU switched almost exclusively to the AfD when they believed disinformation.
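Note 12 describes this robustness check as evaluating the marginal effects with political ideology fixed at 2 SD above its mean. The Python sketch below shows that step on a hypothetical three-outcome model (CDU/CSU as base, SPD, AfD), approximating each marginal effect with a central finite difference; the coefficients, the ideology mean and SD, and the believability mean are all made up, so only the mechanics, not the numbers, carry over to the study.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical model: covariates [disnews believability, political ideology];
# each tuple is (intercept, slope_believability, slope_ideology).
COEFS = {"SPD": (0.5, 0.6, -0.2), "AfD": (-6.0, 1.8, 0.5)}
BASE = "CDU/CSU"


def probabilities(x: Sequence[float]) -> Dict[str, float]:
    """Multinomial-logit probabilities with the base category's predictor at 0."""
    eta = {BASE: 0.0}
    for cat, b in COEFS.items():
        eta[cat] = b[0] + sum(bj * xj for bj, xj in zip(b[1:], x))
    top = max(eta.values())
    expo = {c: math.exp(v - top) for c, v in eta.items()}
    total = sum(expo.values())
    return {c: v / total for c, v in expo.items()}


def numerical_me(x: List[float], k: int, h: float = 1e-5) -> Dict[str, float]:
    """Central-difference derivative of each probability with respect to x[k]."""
    up, down = list(x), list(x)
    up[k] += h
    down[k] -= h
    p_up, p_down = probabilities(up), probabilities(down)
    return {c: (p_up[c] - p_down[c]) / (2 * h) for c in p_up}


ideology_mean, ideology_sd = 4.9, 1.8   # hypothetical sample moments
believability_mean = 1.0                # hypothetical sample mean
profiles = {
    "moderate (mean ideology)": [believability_mean, ideology_mean],
    "right-leaning (mean + 2 SD)": [believability_mean,
                                    ideology_mean + 2 * ideology_sd],
}
for label, x in profiles.items():
    me = numerical_me(x, k=0)            # k = 0: disnews believability
    print(label, {c: round(v, 3) for c, v in me.items()})
```

Because the model contains no interaction term, the marginal effects differ between the two profiles only through the predicted probabilities at each profile.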

Figure 4. Effects of disnews believability for former (moderate and right-leaning) CDU/CSU supporters. Note. Marginal effects of the variable “disnews believability” on the probability of voting for a specific party, grouped by political ideology; effects only apply to people who intended to vote for the CDU/CSU at T1; all other covariates are held constant at their means/modes; 95% confidence intervals are reported; based on estimates from the multinomial logistic regression model in Table 1.

Discussion

Political debates in the run-up to the 2017 German parliamentary election expressed severe concerns about the influence of political disinformation on the Internet. Our study sheds light on this matter in two ways. First, we analyzed possible institutional antecedents of believing online disnews. In light of our findings, one cannot ignore that the success of disinformation is also a defeat for democratic institutions. While there is certainly no general loss of institutional trust in Germany, a specific portion of the German population has become strongly skeptical of legacy news media and the political system in recent years. From their point of view, professional journalists and politicians have discredited themselves in covering and dealing with important political topics such as the refugee situation. As a consequence, these doubly mistrustful people turn to alternative facts for orientation and confirmation, which is reflected in our finding that the less one trusts news media and politics, the more one believes online disinformation. We thus provide empirical evidence for Bennett and Livingston's (2018) notion of a disinformation order that emerges from a breakdown of institutional trust and forms in opposition to the established information system.

Beyond these antecedents, we also examined the electoral consequences of disnews beliefs in a multiparty context. Here, our research makes a significant contribution to political communication research by addressing this relationship over time. The longitudinal design allowed us to draw conclusions about the impact of political disinformation on vote switching, although our results on this matter are mixed. Our data show that false news intentionally spread on the Internet did play a role in diminishing loyalty and raising voice in the German election. In contrast, abstention from the vote (the exit option) remained unaffected by online disinformation, probably because of its rather inflammatory (anti-immigration) narratives. Because of its disruptive, right-leaning nature, believing disnews apparently alienated voters from the main governing party, the Christian Democrats, and notably drove them into the arms of the AfD. Accordingly, disinformation beliefs were apparently one of the reasons for the electoral success of the right-wing populists in the 2017 parliamentary election. At the same time, believing disnews evidently did not encourage a decision against the other governing party, the Social Democrats (SPD). If anything, the SPD benefited from disinformation, even attracting former supporters of its coalition partner.

There may be a plausible explanation for this rather puzzling observation. In Germany, disinformation has mainly focused on purported problems caused by immigration from Islamic countries. The public debate about the refugee situation centered on Chancellor Angela Merkel, who was deemed responsible for Germany's “welcome policy.” Misinformed individuals might have felt vindicated in primarily blaming Merkel, the head of the Christian Democrats (CDU/CSU), for the alleged misconduct concerning Muslim immigrants. Against this background, it makes sense that only the CDU/CSU suffered considerably from disinformation in Germany. Former supporters of this party mainly voiced their disnews-induced disaffection by switching to the most obvious protest party, the AfD. This tendency holds especially for the party's most conservative faction, whose demands the AfD's right-wing populist claims probably match best. For the more moderate supporters, even the SPD apparently served as a voice option, because it sought to replace Angela Merkel with its own candidate, Martin Schulz, thereby offering an alternative to her policy beyond a rightist ideology.

Although we could not demonstrate a meaningful disloyalty or voice effect of disnews beyond people who initially supported the Christian Democrats, it would be wrong to conclude that online disinformation did not matter otherwise. It is possible that the intervals between waves were too short to detect a causal impact of disinforming news. According to our data, most voters were already certain about their decision two months before election day, which limits the scope for media effects during that period. One possibility, however, is that disnews shaped voting intentions for the AfD (or for other parties) long before the election, e.g., during the peak of the refugee situation in 2015.

However, our study deliberately focused on vote switching during the peak of the election campaign because this provided the strictest test of an electoral influence of disnews. We leave the long-term effects of online political disinformation to future research. Another question is whether our results generalize to other democracies. Of course, cross-country differences shape the characteristics of national disinformation orders (e.g., their amount, structure, and narratives) and their social impact. Nevertheless, their role as a right-wing counter-public should apply across nations and unfold with similar implications. Moreover, if such disruptions occur even in Germany, where overall institutional trust is comparatively high, the electoral consequences of disnews might be even graver in more polarized countries such as the USA.

Like all internet-based research, our study faces a potential sampling bias due to recruitment from an online access panel. We nonetheless attempted to ensure a high degree of representativeness by employing quotas for gender, age, education, and federal state. Additionally, participants in the online access panel were recruited using both online and offline procedures, which may increase population coverage.

In addition, some of our self-reported measures may be problematic. Respondents may have been reluctant to report an intention to vote for the AfD because of the perceived social stigma attached to it as an extremist party. However, our longitudinal design may have partly compensated for this potential bias. Moreover, the share of AfD voters in our sample (14.8%) slightly exceeded the party's actual election result (12.6%), which speaks against underreporting. Beyond that, the variable “disnews believability” might partly capture general xenophobic and populist attitudes. In that case, its effects on vote choice would not be attributable solely to disinformation that actually circulated online but would also be biased by stable traits. We took two measures to minimize this bias. First, we restricted our vote-specific analysis to the subsample that reported exposure to our disnews headlines, thereby excluding people who claimed to believe them without in fact having read any. Second, we included the perceived believability of made-up placebo news conveying similar narratives to control for potential spurious effects that did not stem from disnews stories actually distributed during the campaign.

Furthermore, we could not capture all disinforming news disseminated online during the election period, so we may have missed some effects by leaving out some false stories. Two reasons make this seem unlikely. First, we relied on the falsehoods that the major fact-checking websites in Germany identified as the most attention-grabbing. Second, we deliberately considered a wide range of narratives, assuming that omitted disnews would, because of its similar message pattern, exert influence in the same direction.

Altogether, we provide evidence for the political impact of online disinformation: it may lead individuals to base their vote choice on false information, thereby undermining important democratic principles. However, to understand the problem in its entirety, we have to go beyond “fake news” and look at its societal background. That is, we should understand disinforming news not merely as an isolated phenomenon but as a symptom of a more deep-rooted public disaffection with the news media and the political system. Effective measures against political disinformation should therefore address its social root cause by trying to regain trust in democratic institutions.

Open Scholarship

This article has earned the Center for Open Science badges for Open Data and Open Materials through Open Practices Disclosure. The data and materials are openly accessible at https://doi.org/10.7801/313

Disclosure statement

There was no potential conflict of interest.

Supplemental material

Supplemental data for this article can be accessed on the publisher’s website at https://doi.org/10.1080/10584609.2019.1686095.

Data availability statement

The data described in this article are openly available in the Open Science Framework at https://doi.org/10.7801/313

Additional information

Funding

Notes on contributors

Fabian Zimmermann

Fabian Zimmermann is a research associate and doctoral student at the Department of Media and Communication Studies, University of Mannheim, Germany. His research addresses political disinformation, media trust and distrust, as well as mediatization of society.

Matthias Kohring

Matthias Kohring is a professor of media and communication studies at the University of Mannheim, Germany. His research addresses public communication, journalism theory, trust in news media, and science communication.

Notes

1. The “respondi AG” (https://www.respondi.com/) satisfies the ESOMAR guidelines on market, opinion, and social research. It combines online and offline procedures to recruit participants for its online access panel.

2. See Table A1 in the online appendix for a detailed description of the survey measures including all question wordings and items.

3. We combined the mainstream opposition parties into one category to prevent estimation problems due to the low vote shares of the individual parties.

4. The comparison between exposure to actual disnews and exposure to made-up placebo news only accounts for an overestimation of true exposure due to the headlines’ perceived plausibility. It misses a possible underestimation of true exposure, namely real disnews articles that people saw but forgot.

5. See Table B1 in the online appendix for the global fit measures corresponding to all models that have been estimated in the analysis.

6. The following model estimations were based only on a subsample of N = 974 because of missing values in the control variable “political ideology.”

7. We did not include a dummy variable for the Christian Democrats (CDU/CSU), as it served as base category in the upcoming analysis.

8. The following analysis of the effects of “disnews believability” on vote choice is based only on the parameter estimates from the group reporting exposure to disinformation.

9. Because this model included multinomial logistic regression, chi-square-based fit indices could not be computed; goodness-of-fit assessments were therefore based on log-likelihood values. In addition, the estimation was based only on a subsample of N = 791 because of missing values in the variables “voting intention” and “vote choice.”

10. We also estimated multinomial logistic regression models with each of the other parties as base category. These can be found in Table B3 in the online appendix.

11. The computed marginal effects (ME) give the instantaneous rate of change in the probability of the DV with respect to the IV, evaluated at the IV's sample mean (holding all other covariates constant).

12. This time, we computed the marginal effects of disnews believability (at its sample mean) on the probability to vote for a specific party holding the other covariates at their means or modes, but fixing political ideology at 2 SD above its mean.

References

- Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31, 211–236. doi:10.1257/jep.31.2.211

- Barber, B. (1983). The logic and limits of trust. New Brunswick, NJ: Rutgers University Press.

- Barrera, O., Guriev, S., Henry, E., & Zhuravskaya, E. (2018). Facts, alternative facts, and fact checking in times of post-truth politics. Retrieved from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3004631

- Bartels, L. M. (2002). Beyond the running tally: Partisan bias in political perceptions. Political Behavior, 24, 117–150. doi:10.1023/A:1021226224601

- Bennett, W. L., & Livingston, S. (2018). The disinformation order: Disruptive communication and the decline of democratic institutions. European Journal of Communication, 33, 122–139. doi:10.1177/0267323118760317

- Byrne, B. M. (2012). Structural equation modeling with Mplus: Basic concepts, applications, and programming. New York, NY: Taylor and Francis.

- Choi, J. (2016). Why do people use news differently on SNSs? An investigation of the role of motivations, media repertoires, and technology cluster on citizens’ news-related activities. Computers in Human Behavior, 54, 249–256. doi:10.1016/j.chb.2015.08.006

- Citrin, J., & Stoker, L. (2018). Political trust in a cynical age. Annual Review of Political Science, 21, 49–70. doi:10.1146/annurev-polisci-050316-092550

- Cole, D. A., & Maxwell, S. E. (2003). Testing mediational models with longitudinal data: Questions and tips in the use of structural equation modeling. Journal of Abnormal Psychology, 112, 558–577. doi:10.1037/0021-843X.112.4.558

- Cook, T. E., & Gronke, P. (2005). The skeptical American: Revisiting the meanings of trust in government and confidence in institutions. Journal of Politics, 67, 784–803. doi:10.1111/j.1468-2508.2005.00339.x

- Craft, S., Ashley, S., & Maksl, A. (2017). News media literacy and conspiracy theory endorsement. Communication and the Public, 2, 388–401. doi:10.1177/2057047317725539

- Dechêne, A., Stahl, C., Hansen, J., & Wänke, M. (2010). The truth about the truth: A meta-analytic review of the truth effect. Personality and Social Psychology Review, 14, 238–257. doi:10.1177/1088868309352251

- DiFonzo, N., Beckstead, J. W., Stupak, N., & Walders, K. (2016). Validity judgments of rumors heard multiple times: The shape of the truth effect. Social Influence, 11, 22–39. doi:10.1080/15534510.2015.1137224

- Easton, D. (1975). A re-assessment of the concept of political support. British Journal of Political Science, 5, 435–457. doi:10.1017/S0007123400008309

- Egelhofer, J. L., & Lecheler, S. (2019). Fake news as a two-dimensional phenomenon: A framework and research agenda. Annals of the International Communication Association, 1–20. doi:10.1080/23808985.2019.1602782

- Ernst, N., Kühne, R., & Wirth, W. (2017). Effects of message repetition and negativity on credibility judgments and political attitudes. International Journal of Communication, 11, 3265–3285.

- Finkel, S. E. (1995). Causal analysis with panel data. Thousand Oaks, CA: Sage.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18, 39–50. doi:10.2307/3151312

- Gamson, W. A. (1968). Power and discontent. Homewood, IL: The Dorsey Press.

- Gelfert, A. (2018). Fake news: A definition. Informal Logic, 38, 84–117. doi:10.22329/il.v38i1.5068

- Gibson, T. A. (2018). The post-truth double helix: Reflexivity and mistrust in local politics. International Journal of Communication, 12, 3167–3185.

- Giddens, A. (1990). The consequences of modernity. Stanford, CA: Stanford University Press.

- Grinberg, N., Joseph, K., Friedland, L., Swire-Thompson, B., & Lazer, D. (2019). Fake news on Twitter during the 2016 U.S. presidential election. Science, 363, 374–378. doi:10.1126/science.aau2706

- Guess, A., Nyhan, B., & Reifler, J. (2018). Selective exposure to misinformation: Evidence from the consumption of fake news during the 2016 U.S. presidential campaign. Brussels, Belgium: European Research Council. Retrieved from http://www.dartmouth.edu/~nyhan/fake-news-2016.pdf

- Harsin, J. (2015). Regimes of posttruth, postpolitics, and attention economies. Communication, Culture & Critique, 8, 327–333. doi:10.1111/cccr.12097

- Hasher, L., Goldstein, D., & Toppino, T. (1977). Frequency and the conference of referential validity. Journal of Verbal Learning and Verbal Behavior, 16, 107–112. doi:10.1016/S0022-5371(77)80012-1

- Hetherington, M. J. (2005). Why trust matters: Declining political trust and the demise of American liberalism. Princeton, NJ: Princeton University Press.

- Hirschman, A. O. (1970). Exit, voice, and loyalty: Responses to decline in firms, organizations, and states. Cambridge, MA: Harvard University Press.

- Hooghe, M., Marien, S., & Pauwels, T. (2011). Where do distrusting voters turn if there is no viable exit or voice option? The impact of political trust on electoral behaviour in the Belgian regional elections of June 2009. Government and Opposition, 46, 245–273. doi:10.1111/j.1477-7053.2010.01338.x

- Humprecht, E. (2019). Where ‘fake news’ flourishes: A comparison across four Western democracies. Information, Communication & Society, 22, 1973–1988. doi:10.1080/1369118X.2018.1474241

- Igartua, J. J., & Cheng, L. (2009). Moderating effect of group cue while processing news on immigration: Is the framing effect a heuristic process? Journal of Communication, 59, 726–749. doi:10.1111/j.1460-2466.2009.01454.x

- Jöreskog, K. G. (1979). Statistical models and methods for analysis of longitudinal data. In K. G. Jöreskog & D. Sörbom (Eds.), Advances in factor analysis and structural equation models (pp. 129–169). Cambridge, MA: Abt Books.

- Kahne, J., & Bowyer, B. (2017). Educating for democracy in a partisan age: Confronting the challenges of motivated reasoning and misinformation. American Educational Research Journal, 54, 3–34. doi:10.3102/0002831216679817

- Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). New York, NY: Guilford Press.

- Koch, T., & Zerback, T. (2013). Helpful or harmful? How frequent repetition affects perceived statement credibility. Journal of Communication, 63, 993–1010. doi:10.1111/jcom.12063

- Kohring, M., & Matthes, J. (2007). Trust in news media: Development and validation of a multidimensional scale. Communication Research, 34, 231–252. doi:10.1177/0093650206298071

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108, 480–498. doi:10.1037/0033-2909.108.3.480

- Lazer, D. M. J., Baum, M. A., Benkler, Y., Berinsky, A. J., Greenhill, K. M., Menczer, F., … Zittrain, J. L. (2018). The science of fake news: Addressing fake news requires a multidisciplinary effort. Science, 359, 1094–1096. doi:10.1126/science.aao2998

- Levy, N. (2017). The bad news about fake news. Social Epistemology Review and Reply Collective, 6(8), 20–36. Retrieved from https://social-epistemology.com/2017/07/24/the-bad-news-about-fake-news-neil-levy/

- Lewandowsky, S., Ecker, U. K. H., & Cook, J. (2017). Beyond misinformation: Understanding and coping with the “post-truth” era. Journal of Applied Research in Memory and Cognition, 6, 353–369. doi:10.1016/j.jarmac.2017.07.008

- Luhmann, N. (1979). Trust and power. Chichester, UK: Wiley & Sons.

- Marwick, A., & Lewis, R. (2017). Media manipulation and disinformation online. New York, NY: Data & Society Research Institute. Retrieved from https://datasociety.net/output/media-manipulation-and-disinfo-online/

- Matthes, J. (2005). The need for orientation towards news media: Revising and validating a classic concept. International Journal of Public Opinion Research, 18, 422–444. doi:10.1093/ijpor/edh118

- McFadden, D. (1974). Conditional logit analysis of qualitative choice behavior. In P. Zarembka (Ed.), Frontiers in econometrics (pp. 105–142). New York, NY: Academic Press.

- Meirick, P. C., & Bessarabova, E. (2016). Epistemic factors in selective exposure and political misperceptions on the right and left. Analyses of Social Issues and Public Policy, 16, 36–68. doi:10.1111/asap.12101

- Muthén, L. K., & Muthén, B. O. (2015). Mplus user’s guide (7th ed.). Los Angeles, CA: Muthén & Muthén.

- Pennycook, G., Cannon, T. D., & Rand, D. G. (2017). Prior exposure increases perceived accuracy of fake news. Social Science Research Network. Retrieved from https://ssrn.com/abstract=2958246

- Polage, D. C. (2012). Making up history: False memories of fake news stories. Europe’s Journal of Psychology, 8, 245–250. doi:10.5964/ejop.v8i2.456

- Prochazka, F., & Schweiger, W. (2019). How to measure generalized trust in news media? An adaptation and test of scales. Communication Methods and Measures, 13, 26–42. doi:10.1080/19312458.2018.1506021

- Reedy, J., Wells, C., & Gastil, J. (2014). How voters become misinformed: An investigation of the emergence and consequences of false factual beliefs. Social Science Quarterly, 95, 1399–1418. doi:10.1111/ssqu.12102

- Simmel, G. (2004). The philosophy of money (3rd enlarged ed., D. Frisby, Ed.). London, UK: Routledge.

- Swami, V. (2012). Social psychological origins of conspiracy theories: The case of the Jewish conspiracy theory in Malaysia. Frontiers in Psychology, 3, 1–9. doi:10.3389/fpsyg.2012

- Swami, V., Barron, D., Weis, L., & Furnham, A. (2018). To Brexit or not to Brexit: The roles of Islamophobia, conspiracist beliefs, and integrated threat in voting intentions for the United Kingdom European Union membership referendum. British Journal of Psychology, 109, 156–179. doi:10.1111/bjop.12252

- Swire, B., Berinsky, A. J., Lewandowsky, S., & Ecker, U. K. H. (2017). Processing political misinformation: Comprehending the Trump phenomenon. Royal Society Open Science, 4, 160802. doi:10.1098/rsos.160802

- Tandoc, E. C., Jr., Lim, Z. W., & Ling, R. (2018a). Defining “fake news”: A typology of scholarly definitions. Digital Journalism, 6, 137–153. doi:10.1080/21670811.2017.1360143

- Tandoc, E. C., Jr., Ling, R., Westlund, O., Duffy, A., Goh, D., & Zheng Wei, L. (2018b). Audiences’ acts of authentication in the age of fake news: A conceptual framework. New Media & Society, 20, 2745–2763. doi:10.1177/1461444817731756

- Tsfati, Y. (2010). Online news exposure and trust in the mainstream media: Exploring possible associations. American Behavioral Scientist, 54, 22–42. doi:10.1177/0002764210376309

- Tsfati, Y., & Peri, Y. (2006). Mainstream media skepticism and exposure to sectorial and extranational news media: The case of Israel. Mass Communication and Society, 9, 165–187. doi:10.1207/s15327825mcs0902_3

- Uscinski, J. E., Klofstad, C., & Atkinson, M. D. (2016). What drives conspiratorial beliefs? The role of informational cues and predispositions. Political Research Quarterly, 69, 57–71. doi:10.1177/1065912915621621

- Van Spanje, J., & de Vreese, C. (2014). Europhile media and Eurosceptic voting: Effects of news media coverage on Eurosceptic voting in the 2009 European parliamentary elections. Political Communication, 31, 325–354. doi:10.1080/10584609.2013.828137

- Wardle, C., & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policy making (Council of Europe report DGI(2017)09). Strasbourg, France: Council of Europe.

- Weaver, D. H. (1980). Audience need for orientation and media effects. Communication Research, 7, 361–376. doi:10.1177/009365028000700305

- Weedon, J., Nuland, W., & Stamos, A. (2017). Information operations and Facebook. Menlo Park, CA: Facebook, Inc. Retrieved from https://fbnewsroomus.files.wordpress.com/2017/04/facebook-and-information-operations-v1.pdf

- Weeks, B. E., & Garrett, R. K. (2014). Electoral consequences of political rumors: Motivated reasoning, candidate rumors, and vote choice during the 2008 US presidential election. International Journal of Public Opinion Research, 26, 401–422. doi:10.1093/ijpor/edu005

- Wells, C., Reedy, J., Gastil, J., & Lee, C. (2009). Information distortion and voting choices: The origins and effects of factual beliefs in initiative elections. Political Psychology, 30, 953–969. doi:10.1111/j.1467-9221.2009.00735.x

- Widaman, K. F., & Reise, S. P. (1997). Exploring the measurement invariance of psychological instruments: Applications in the substance use domain. In K. J. Bryant, M. Windle, & S. G. West (Eds.), The science of prevention: Methodological advances from alcohol and substance abuse research (pp. 281–324). Washington, DC: American Psychological Association.

- Winter, S., Metzger, M. J., & Flanagin, A. J. (2016). Selective use of news cues: A multiple motive perspective on information selection in social media environments. Journal of Communication, 66, 669–693. doi:10.1111/jcom.1224