ABSTRACT

Research has shown that in addition to top-down and bottom-up processes, biases produced by the repetition priming effect and reward play a major role in visual selection. Action control research argues that bidirectional effect-response associations underlie the repetition priming effect and that such associations are also achievable through verbal instructions. This study evaluated whether verbally induced effect-response instructions bias visual selective attention in a visual search task in which these instructions were irrelevant. In two online experiments (Exp. 1, N = 100; Exp. 2, N = 100), participants memorized specific verbal instructions before completing speeded visual-search classification tasks. In critical trials of the visual search task, a priming stimulus specified in the verbal instructions matched the target stimulus (positive priming). In addition, the design of Experiment 2 accounted for the repetition priming effect caused by frequent appearance of the target object. Reaction time analysis showed that verbal instructions inhibited visual search. Response error analysis showed that the verbal effect-response instructions formed an association between the verbally specified stimulus and the response. The results also showed that the target object’s frequent appearance strongly affected visual search. Overall, the findings suggest that verbal instructions extend the list of selection biases that modulate visual selective attention.

Because people can process only a limited amount of information at one time, they need to selectively focus their attention on a behaviourally relevant scene or object. The predominant models of attentional control describe selective attention in terms of interplay between bottom-up and top-down processes (Carrasco, Citation2011). These models provide rich flexibility for exploring human attention from a variety of perspectives and explain how attention navigates actions and perception through the interplay of physical properties (colour, shape, location) and the behavioural relevance of various objects.

While such a theoretical split into different processes explains many aspects of selective attention, an alternative framework argues that selective attention is also controlled by selection biases that might overcome the salience of either physical properties or the behavioural relevance of stimuli (Awh et al., Citation2012). For example, history-based selection or reward associated with specific stimuli biases selective attention, making it more sensitive to those stimuli (e.g., Anderson et al., Citation2011b). A large body of research has provided evidence that past episodes of encountering and selecting specific objects bias attentional selection through the repetition priming mechanism (e.g., Logan, Citation1990; Theeuwes, Citation2018; Theeuwes & Failing, Citation2020), arguing that such an influence on attentional selection acts beyond top-down and bottom-up processes.

Furthermore, research on action control argues that associative learning underlies the repetition priming effect (Henson et al., Citation2014; Soldan et al., Citation2012). Specific actions with specific objects result in the formation of bidirectional associations between those actions and objects (stimulus-response and response-effect associations; Elsner & Hommel, Citation2001; Frings et al., Citation2020; Shin et al., Citation2010). This formation, in turn, can serve as a unified priming mechanism that navigates attentional focus toward previously encountered stimuli (Hommel, Citation2005; Memelink & Hommel, Citation2013; Müsseler & Hommel, Citation1997). Moreover, encountering such a stimulus also triggers the response associated with it. Therefore, the repetition priming effect involves an interplay between perception and actions, and this interplay is based on the associative learning principle (Soldan et al., Citation2012).

Parallel to action-control research, the last decade has seen the rapid development of research on verbal information and verbal action planning, and their effect on behavioural control (Brass et al., Citation2017; Liefooghe & De Houwer, Citation2018; Martiny-Huenger et al., Citation2017; Meiran et al., Citation2015a; Meiran et al., Citation2015b). These lines of research investigate how verbal instructions formulated in a stimulus-response (Liefooghe et al., Citation2012; Liefooghe et al., Citation2018), response-effect (Theeuwes et al., Citation2015), or effect-response (Damanskyy et al., Citation2022) manner influence cognitive control. Despite growing evidence that verbal instructions, formulated in a stimulus-response manner, are highly important to action control, little is known about whether verbal instructions serve as a selection bias. Studying how verbal instructions modulate selective attention can provide valuable insights into the topic of selection biases. This study investigates whether verbal instructions formulated in an effect-response manner affect visual selective attention.

Selective attention

Selective attention is the ability to select specific stimuli, select behavioural responses, access particular memories, or navigate behaviourally relevant thoughts at a given moment (Maurizio, Citation1998). Human perception is continuously exposed to complex input from surroundings targeting the five perceptual domains of sight, hearing, smell, touch, and taste. Visual selective attention is one of the central topics in research on perception because it provides a rich experimental flexibility that allows scholars to investigate attention across multiple visual domains (e.g., colour, shape, location; Carrasco, Citation2011). On a conceptual level, visual selective attention operates by representations of a priority map (Awh et al., Citation2012; Theeuwes, Citation2013, Citation2018; Treisman & Gelade, Citation1980) and integrates three sources of influence: current goal (top-down), physical salience (bottom-up), and selection history.

Bottom-up attention is based on the basic salient visual features of a stimulus (e.g., orientation, colour, motion, size). Research from this perspective focuses mainly on a feature singleton (Yantis & Egeth, Citation1999), which implies that when a presented stimulus is locally unique in one visual dimension (colour, shape, orientation, or size), it attracts focus toward itself. Numerous studies (for a review see Carrasco, Citation2011) have demonstrated that a unique salient feature in the visual field can capture human focus independently of the task at hand (e.g., a red flower on a green background, a light point in the dark).

Whereas the bottom-up process is often called stimulus-driven, the top-down process entails task-driven factors that shape and navigate perception (Theeuwes, Citation2018). Yarbus’ (Citation1967) classic study demonstrated an example of the top-down guidance of selective attention. Participants viewed a family room scene and had to answer specific questions about that scene. Participants’ attentional focus varied depending on the specific task they were asked to perform. For example, the eye saccades of participants whose task was to identify the people’s ages differed from the saccades of both those whose task was to remember object locations and those who had no particular task. These differences indicated that task-relevant factors navigated selective attention.

While many studies have explained selective attention solely from bottom-up and top-down perspectives, Awh et al. (Citation2012) proposed an integrative framework specifying a modified taxonomy of attentional control. According to this model, three sources of attentional control contribute to the priority map that guides selective attention: current goal, physical salience, and selection history. The concept of selection history adds two sources of influence on the priority map. The underlying notion is that attention is often driven by neither salient stimuli nor the goal of an observer. Indeed, in many cases, attention guidance is biased by a reward associated with specific stimuli (Anderson et al., Citation2011a; Anderson & Yantis, Citation2013; Bucker & Theeuwes, Citation2014; Failing & Theeuwes, Citation2015; Failing & Theeuwes, Citation2016; Libera & Chelazzi, Citation2006) or by previous history-based selection in terms of the repetition priming effect (Theeuwes & Van der Burg, Citation2011, Citation2013; for a review, see Kristjánsson & Campana, Citation2010; Lamy & Kristjansson, Citation2013).

Repetition priming from verbal instructions

Repetition priming is a change in the reaction time or response accuracy to a stimulus due to prior presentation of the same stimulus (Henson et al., Citation2014; Logan, Citation1990). Encountering the same stimulus and performing the same response is sufficient for automatic stimulus-response associations to emerge, meaning that the repetition priming effect involves associative learning (Henson et al., Citation2014; Soldan et al., Citation2012). In addition, a large body of research demonstrated that stimulus-response associations are bidirectional (Elsner & Hommel, Citation2001).

Bidirectionality implies that associative learning also emerges from response-effect associations in which a stimulus serves as an effect of a particular response. For example, participants may perceive that a particular response (left keypress) leads to a playback of a specific sound (high or low pitch). A temporal overlap between such a response and its effect results in the formation of bidirectional response-effect association. When the participants hear the same sound again, they effortlessly and automatically retrieve a previously formed response-effect association that provides a faster and more direct route of responding (e.g., Elsner & Hommel, Citation2001).

However, a growing body of research on verbal instructions has demonstrated that verbally induced priming can also form stimulus-response associations linking perception and actions. The empirical evidence comes primarily from two different research directions: instruction-based research and implementation intentions. Within instruction-based research, verbal instructions are treated as a simple form of stimulus-response mappings (e.g., “if cat press left; if dog, press right”) that have an immediate effect. Such mappings are translated into procedural representations in working memory, enabling their execution through reflexive behaviour (Brass et al., Citation2017; Liefooghe et al., Citation2012; Meiran et al., Citation2012; Meiran et al., Citation2015a; Van ‘t Wout et al., Citation2013). However, several studies emphasize that the effect of verbal instructions also relies on representations in long-term memory (Liefooghe & De Houwer, Citation2018; Pfeuffer et al., Citation2017).

In contrast, implementation intentions research emphasizes a specific verbal action plan (e.g., “If I pass a supermarket, I will buy bread”) as a critical component of action planning, and participants are asked to repeat this plan several times to ensure encoding and remembering (Gollwitzer & Sheeran, Citation2006). Such planning creates the direct perception-action link between the anticipated situation (critical cue) and the intended behaviour (e.g., passing a supermarket serves as a critical cue that automatically triggers the planned action of buying bread). The execution of such a plan does not require conscious involvement. As soon as the individual encounters that cue, it triggers a specific behaviour linked to it. The theory of implementation intentions suggests that such verbal planning can serve as an alternative path to the strategic automaticity of action control (Gollwitzer, Citation1999; Gollwitzer, Citation2014) and that the effect of this action planning may be observed over days or even weeks (Conner & Higgins, Citation2010; Papies et al., Citation2009).

While both implementation intention and instruction-based research provided empirical evidence that verbally induced stimulus-response associations influence response selection and retrieval, an open question remains as to whether these associations influence selective attention. Several studies using visual search tasks found that while using verbal cues influences visual selective attention, this influence is not as effective as using specific visual cues (Knapp & Abrams, Citation2012; Schmidt & Zelinsky, Citation2009; Wolfe et al., Citation2004).

However, these studies within the visual search paradigm used verbal primes as simple textual cues, with participants aware that these textual cues were relevant for an upcoming task. Furthermore, these studies did not present the textual cues as instruction sentences or action plans in a stimulus-response format. In contrast, research on verbal instructions argues that verbal instructions can have an unintentional priming effect in tasks in which those instructions are irrelevant, especially when participants are asked to form an intention to execute given priming instructions (Sheeran et al., Citation2005).

The present experiments

This study investigated whether verbally induced stimulus-response associations affect visual selective attention as a selection bias in the facilitation paradigm. In Awh et al.'s (Citation2012) framework, the effect of selection biases can either facilitate the top-down or bottom-up processes or work in opposition to them. Thus, if verbal instructions act as a selection bias, the effect of that bias should be observable through one of those characteristics.

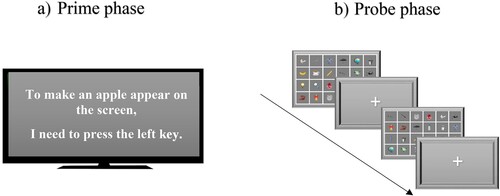

The general design of these present experiments involved prime-probe phases similar to those of other studies of implementation intention and instruction-based research (Liefooghe & De Houwer, Citation2018; Martiny-Huenger et al., Citation2017). In the prime phase, participants formed a specific verbal action plan with specific stimulus-response associations. The probe phase involved probe trials to evaluate whether previously verbally induced associations influenced participants’ selective attention and behavioural responses in a subsequent, two-alternative forced-choice task (2AFC). In this task, participants categorized a target stimulus as either a fruit or a vegetable. To evaluate whether verbally induced associations modulate visual selective attention, the 2AFC task was embodied in a visual search task with the additional objective of finding a target stimulus among distractors (Wolfe, Citation1994).

In the present experiments, a stimulus that was specified in the verbal instructions matched one of the target stimuli in the 2AFC visual search task (facilitation paradigm; Logan, Citation1990). If the verbal instructions prime visual selective attention, then the participants’ performance – upon encountering a critical stimulus from the prime phase – would result in faster and more accurate responses than their responses to control target stimuli. Moreover, according to the stimulus-response priming principle (Henson et al., Citation2014), encountering a stimulus associated with a specific response should also lead to unintentional retrieval of that response. Therefore, in a compatible condition in which required and retrieved responses matched (left-left; right-right), I expected faster and more accurate responses than in an incompatible condition containing a reversed pattern (left-right; right-left).

In the present study, all target and distractor stimuli represented different real objects. Although a common procedure within the visual search paradigm involves using a feature singleton in which target and distractor stimuli differ on one or a few dimensions (e.g., colour, shape, size; Yantis & Egeth, Citation1999), using real objects is not new in these types of tasks (Bravo & Farid, Citation2004; Ehinger et al., Citation2009; Fletcher-Watson et al., Citation2008; Schmidt & Zelinsky, Citation2009; Yang & Zelinsky, Citation2009). Moreover, Bravo and Farid (Citation2004) pointed out that using different object categories from real life as target or distractor stimuli can provide certain advantages for visual search tasks. First, real objects allow researchers to avoid highly artificial stimuli; second, real objects have more practical applications and bring the situation closer to real life (i.e., ecological validity; Orne, Citation2002).

In addition, several types of verbal instruction formulations appear in the research on verbal instructions: stimulus-response (Martiny-Huenger et al., Citation2017), response-effect (Theeuwes et al., Citation2015), and effect-response (Damanskyy et al., Citation2022). The effect-response formulation is an action-effect modification of the stimulus-response formulation. Damanskyy et al. (Citation2022) found that the effect-response formulation does not diminish the effectiveness of stimulus-response associations. Rather, such a formulation provides more flexibility in wording an instruction sentence. Therefore, in the present study, I formulated the verbal instructions in an effect-response manner (e.g., “to make an apple appear on the screen, I need to press the left key”) in which a critical stimulus (i.e., apple) was formulated as the effect of a response (i.e., “I need to press the left key”).

Experiment 1

Methods

Participants

To determine the minimum sample size given d = 0.2, I ran a simulation analysis in R (R Core Team, Citation2021) using the simr package (Green et al., Citation2016).Footnote1 Based on the results, 100 English-speaking participants took part in the first experiment (64 females, 30 males, and six participants who did not specify their gender). After the data cleaning described in the following “Data Preparation” subsection, the analyzed sample included 88 participants. Overall, the participants’ ages ranged from 30 to 45 years (M = 35.8, SD = 4.46). All participants were recruited through the recruiting portal Prolific and received monetary compensation for their participation. The local Ethics Committee of the Arctic University of Norway approved the study, and all participants provided informed consent prior to the experimental procedures.

Materials

The experiment was programmed with PsychoPy v.2020.2.1 (Peirce et al., Citation2019) and uploaded to Pavlovia.org, thereby allowing online participation. All participants received a link to the experiment via Prolific, allowing them to participate remotely. The recruiting portal only allowed participation with a desktop computer or laptop with a keyboard.

Design

The experiment followed a 2 (prime: critical vs. control) by 2 (compatibility: compatible vs. incompatible) mixed design. Prime was a within-subject factor that specified whether a target stimulus in the visual search task represented a priming stimulus from the verbal instructions sentence (apple; critical trials) or control stimuli (all other fruits and vegetables; control trials). Figure 1 illustrates all target stimuli. Compatibility was a between-subject factor that specified whether the response associated with the priming stimulus in the verbal instructions sentence matched or mismatched the instructed response for the 2AFC visual search task (“apple”-left/right, fruits-left/right).
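The two factors can be read as simple coding rules. The following is a minimal sketch of that coding, not the experiment's actual code: the function names are hypothetical, and "left"/"right" stand for the “A” and “L” keys.

```python
# Hypothetical helpers illustrating the 2 x 2 design; names are not from the study.
VALID_KEYS = ("left", "right")  # "left" = "A" key, "right" = "L" key


def code_prime(target: str, primed_stimulus: str = "apple") -> str:
    """Within-subject factor: is the search target the stimulus named in the instruction?"""
    return "critical" if target == primed_stimulus else "control"


def code_compatibility(instructed_key: str, fruit_key: str) -> str:
    """Between-subject factor: does the key named in the priming sentence
    match the key the participant must press for fruits in the search task?"""
    for key in (instructed_key, fruit_key):
        if key not in VALID_KEYS:
            raise ValueError(f"unknown key: {key!r}")
    return "compatible" if instructed_key == fruit_key else "incompatible"
```

Because the primed stimulus (apple) is a fruit, only the fruit key matters for compatibility: a participant instructed “left” who must press left for fruits is in the compatible group.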

Procedure

Prime Phase. Participants saw a critical verbal instruction formulated in an effect-response manner: “To make an apple appear on the screen, I need to press the left/right key.” No time frame restricted participants when memorizing the priming sentence. Prior to the prime phase, participants were clearly instructed that any reference to “left” or “right” meant the “A” or “L” key, respectively. Response specification (press left vs. press right) was randomly counterbalanced. Participants were asked to remember these instructions because they would have to apply them during the final part of the experiment. Appendix B presents all the instructions that the participants saw.

Probe Phase. All probe trials had the same between-trial interval (“+”) of 500 ms. Afterward, participants saw 24 figures arranged in a 6 × 4 grid for 3000 ms. The locations of all 24 stimuli changed randomly in each trial. In each trial, one of those 24 stimuli was a target stimulus. For each control stimulus, the critical stimulus appeared in a proportion of 3:1 (approximately 2000 critical trials versus 500 trials for each control stimulus). In total, participants worked through 96 probe trials. The participants’ task was to find – as quickly and accurately as possible – a target stimulus and categorize it as a fruit or vegetable by pressing either the left (“A”) or right (“L”) key, respectively (keypress conditions were counterbalanced). Six different fruits and six different vegetables represented target stimuli, including the priming stimulus (“apple”). The other 23 objects belonged to different categories. Before the probe phase, participants performed 10 practice trials without the priming stimulus (“apple”). Figure 2 provides a schematic presentation of the prime and probe phases.

Figure 2. The Prime and Probe Phases.

Note. The prime phase included a single presentation of a critical verbal action-effect sentence without any deadline. After the prime phase, participants performed a visual search task (96 trials). During the probe phase, the participants’ task was to find either a fruit or a vegetable and press the “A” or “L” key, respectively. In one-third of the trials, the target stimulus was an apple (priming stimulus from the prime phase). The location of all figures changed randomly in each trial, and there was only one target stimulus among the distractors.
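The probe-phase structure described above can be sketched as a trial generator. This is an illustration under stated assumptions, not the PsychoPy code used in the study: apart from “apple”, “onion”, and “potato”, all stimulus names are placeholders; the grid is assumed to have 6 columns and 4 rows; and targets are drawn by weighted sampling (weight 3 for the critical stimulus, 1 for each control), since the exact per-stimulus trial counts are not given.

```python
import random

GRID_ROWS, GRID_COLS = 4, 6   # assumed orientation of the "6 x 4" grid (24 cells)
N_TRIALS = 96

# Only apple, onion and potato are named in the text; the rest are placeholders.
FRUITS = ["apple"] + [f"fruit_{i}" for i in range(2, 7)]
VEGETABLES = ["onion", "potato"] + [f"vegetable_{i}" for i in range(3, 7)]
DISTRACTORS = [f"distractor_{i:02d}" for i in range(1, 24)]  # 23 non-food objects


def make_trials(n_trials=N_TRIALS, critical="apple", seed=None):
    """Return a list of probe trials, each with one target among 23 distractors."""
    rng = random.Random(seed)
    targets = FRUITS + VEGETABLES
    # Assumption: 3:1 weighting of the critical stimulus against each control.
    weights = [3 if t == critical else 1 for t in targets]
    trials = []
    for target in rng.choices(targets, weights=weights, k=n_trials):
        cells = DISTRACTORS + [target]
        rng.shuffle(cells)  # stimulus locations change randomly on every trial
        grid = [cells[r * GRID_COLS:(r + 1) * GRID_COLS] for r in range(GRID_ROWS)]
        trials.append({
            "target": target,
            "category": "fruit" if target in FRUITS else "vegetable",
            "prime": "critical" if target == critical else "control",
            "grid": grid,
        })
    return trials
```

Weighted sampling keeps the sketch short; a fixed, pre-counted trial list would be the closer match if exact proportions per participant are required.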

At the end of the experiment, after completing all trials in the visual search task, participants performed a simple one-trial task. They had to press a corresponding key that would lead to the appearance of an “apple” on the screen as specified in the priming instructions during the prime phase. I created this task solely to avoid participant deception in the prime phase. Therefore, this task was not included in statistical analyses.Footnote2

Data preparation

I used R to prepare and analyze the data (R Core Team, Citation2021). I excluded three participants whose ages fell outside of the predetermined criteria (i.e., younger than 30 years or older than 45 years). I removed one outlying participant whose responses included more than 25% incorrect keypresses (not “A” or “L”) during the test phase. In addition, 8.4% of critical trials were removed to account for a potential inter-trial priming effect (Lamy & Kristjansson, Citation2013). Other participants’ incorrect keypresses were also excluded (4.56% data lost). Participants missed the response deadline in only 0.07% of trials. After I applied the boxplot method (Tukey, Citation1977), I excluded eight participants due to an excessive amount of response errors (more than 10%). The response error analysis included 6956 observations.

To evaluate whether a specific target control stimulus caused a deviation in participants’ response times and response errors compared to their responses to the other control stimuli, I applied the boxplot method (Tukey, Citation1977). This method was applied separately for response times and response errors. The boxplot analysis of the response errors revealed that the “onion” (bottom-right stimulus in Figure 1) caused an excessive amount of response errors (9% compared to the upper whisker boundary of 4.44%). Therefore, I removed this stimulus from the analysis. The remainder of the response errors that the other control stimuli produced fell within the interquartile range (2.79% – 4.44%).
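The boxplot rule can be sketched as follows. This assumes the conventional Tukey fences (1.5 × IQR beyond the quartiles) and Python's "inclusive" quartile method; the text does not state which quartile definition was used, so fence values may differ slightly from R's defaults. Function names are hypothetical.

```python
from statistics import quantiles


def tukey_fences(values, k=1.5):
    """Lower and upper boxplot fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _median, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_outliers(rate_by_stimulus, k=1.5):
    """Return the stimuli whose rate falls outside the fences."""
    lower, upper = tukey_fences(list(rate_by_stimulus.values()), k)
    return sorted(name for name, rate in rate_by_stimulus.items()
                  if rate < lower or rate > upper)


# Made-up error rates (percent), purely to show the call; not the study's data.
example = {"s1": 1.0, "s2": 2.0, "s3": 3.0, "s4": 4.0, "s5": 5.0, "s6": 100.0}
flagged = flag_outliers(example)
```

The same function applies to per-stimulus mean response times or to per-participant error rates, which is how the method is used at the different steps described in this subsection.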

Prior to the response time analysis, all response errors were excluded from the data (3.45% data lost). Individual response times beyond the mean ± 2.5 SD, calculated by participant and within-participant conditions, were also excluded (1.52% data lost). The response time variable was log-transformed to handle skewness (Judd et al., Citation1995). Boxplot analysis revealed that response times to the “onion” (1671 ms) and “potato” (1464 ms) deviated from the overall interquartile range (1242–1389 ms). Therefore, these two stimuli (Figure 1) were not included in the analysis. The final response time analysis included 6266 observations.
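The trimming and transformation steps can be sketched as below. This is an illustration, not the author's R code: the row keys (`participant`, `prime`, `rt_ms`) are hypothetical, the within-participant condition is taken to be the prime factor, and the natural logarithm is assumed for the transform.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def trim_and_log(rows, n_sd=2.5):
    """Drop RTs beyond mean +/- n_sd SDs within each participant x prime cell,
    then add a natural-log RT to every remaining row."""
    cells = defaultdict(list)
    for row in rows:
        cells[(row["participant"], row["prime"])].append(row["rt_ms"])

    bounds = {}
    for key, rts in cells.items():
        if len(rts) < 2:
            # SD is undefined for a single observation: keep it.
            bounds[key] = (-math.inf, math.inf)
        else:
            m, s = mean(rts), stdev(rts)
            bounds[key] = (m - n_sd * s, m + n_sd * s)

    kept = []
    for row in rows:
        low, high = bounds[(row["participant"], row["prime"])]
        if low <= row["rt_ms"] <= high:
            kept.append({**row, "log_rt": math.log(row["rt_ms"])})
    return kept
```

The bounds are computed once per cell from the untrimmed data, so a removed value still contributed to the mean and SD that excluded it; iterative trimming would be a different procedure.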

Data analysis

To fit and analyze the mixed models, I used the lme4, lmerTest, and emmeans packages (Douglas et al., Citation2015; Kuznetsova et al., Citation2017). I calculated all confidence intervals in the result sections using bootstrap statistics with 1,000 simulations (Efron & Tibshirani, Citation1993). The statistical model included a by-participant random intercept to account for variability between participants. To account for variability in the target stimuli, the model also included a random intercept for the visual identity of the target stimuli. The regression analysis treated the two main factors as dummy variables. The final models for reaction times and response errors were specified as follows:
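Read from the description above, the two models could be written roughly as follows. This is a sketch in assumed notation rather than the author's own specification: i indexes participants, j target stimuli, and k trials; Prime and Compat are the 0/1 dummy variables; u and w are the random intercepts for participants and stimulus identity.

```latex
\begin{align}
\log(\mathrm{RT}_{ijk}) &= \beta_0 + \beta_1\,\mathrm{Prime}_{j} + \beta_2\,\mathrm{Compat}_{i}
  + \beta_3\,\mathrm{Prime}_{j}\,\mathrm{Compat}_{i} + u_i + w_j + \varepsilon_{ijk} \\
\operatorname{logit} P(\mathrm{Error}_{ijk} = 1) &= \gamma_0 + \gamma_1\,\mathrm{Prime}_{j} + \gamma_2\,\mathrm{Compat}_{i}
  + \gamma_3\,\mathrm{Prime}_{j}\,\mathrm{Compat}_{i} + u_i + w_j \\
u_i \sim \mathcal{N}(0, \sigma_u^2), \quad
w_j &\sim \mathcal{N}(0, \sigma_w^2), \quad
\varepsilon_{ijk} \sim \mathcal{N}(0, \sigma^2)
\end{align}
```

Under this reading, the interaction coefficients (β₃, γ₃) carry the test of whether the critical-versus-control difference depends on compatibility. If a random intercept shows no variance, the corresponding term (for example, w in the error model) would simply be dropped.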

Table 1. Descriptive Statistics of the Response Time Test Phase and Response Errors.

Results and discussion

Reaction time

Random Factors. Response times as a function of prime and compatibility varied in intercepts across participants (SD = 0.09, χ²(1) = 349.3, p < .001), indicating significant by-participant variation around the average intercept. Response times also varied in intercepts across stimulus identity (SD = 0.02, χ²(1) = 5.2, p = .002), indicating significant by-stimulus identity variation around the average intercept.

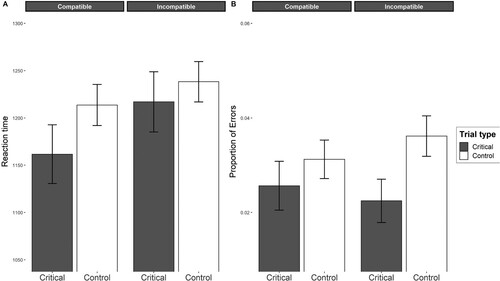

Fixed Factors. The two-way interaction term between prime and compatibility was not significant (b = −0.01, 95% CI [−0.06, 0.01], p = .162), indicating that the difference between critical and control trials did not differ between compatible and incompatible groups. In the compatible group, the difference between critical and control trials was not significant (b = −0.04, 95% CI [−0.00, 0.90], p = .115). In the incompatible group, the same difference between critical and control trials was also not significant (b = −0.01, 95% CI [−0.03, 0.06], p = .495). In contrast, a between-subject comparison revealed a marginally significant difference between the critical trials in the compatible and incompatible groups (b = 0.04, 95% CI [−0.00, 0.10], p = .073), showing that participants in the compatible group tended to respond to the critical priming stimulus faster than participants in the incompatible group.

The marginally significant difference between the compatible and incompatible groups suggests the presence of an instruction-compatibility effect caused not only by the priming stimulus but also by the priming response, as participants responded faster to the priming stimulus (apple) when the required response matched the priming response (left-left; right-right). In contrast, participants responded more slowly to the same priming stimulus when the required response in the visual search task did not match the priming response from the priming action-effect sentence (left-right; right-left). Figure 3A illustrates this regression model. Appendix presents a table with all fixed factor results.

Figure 3. An Illustration of Mixed-Models Analysis.

Note. The plot illustrates the results from the linear mixed model (A) and generalized linear mixed model (B), with confidence intervals derived from these regression analyses. The mean values on both graphs represent marginally estimated means.

Response error

Random Factors. Response errors as a function of prime and compatibility varied in intercepts across participants (SD = 0.38, χ²(1) = 4.9, p = .026), indicating significant by-participant variation around the average intercept. As response errors did not vary in intercepts across stimulus identity (SD = 0.00, χ²(1) = 0.0, p = 1), the final model did not include target object identity as a random factor.

Fixed Factors. The two-way interaction between prime and compatibility was also not significant (b = 0.28, 95% CI [−3.46, 0.98], p = .365), indicating that the difference between critical and control trials did not differ between compatible and incompatible groups. In the compatible group, participants’ responses were not significantly more accurate in critical trials than in control trials (b = 0.20, 95% CI [−2.29, 0.66], p = .368). In the incompatible group, participants’ responses were significantly more accurate in critical trials than in control trials (b = 0.49, 95% CI [0.06, 0.94], p = .027). These results indicate that participants responded more accurately when the priming stimulus from the verbal instructions matched the target stimulus in the visual search task. These results did not indicate that the associated response with the priming stimulus influenced participants’ accuracy.

The between-subject comparison did not reveal any significant difference in the critical trials between the compatible and incompatible groups (b = 0.13, 95% CI [−7.68, 0.42], p = .638), showing that the response associated with the priming stimulus did not affect the participants’ accuracy. Figure 3B illustrates this regression model, and Appendix presents a table with all fixed factor results.

Discussion

Experiment 1 demonstrated that the verbal action-effect priming sentence influenced participants’ performance in the visual search task. Although participants did not respond faster in critical trials than in control trials in either group, the between-subject comparison showed that participants in the compatible group responded marginally faster to the priming stimulus than participants in the incompatible group. Response error analysis provided no statistical evidence that the response specified in the priming sentence influenced participants’ accuracy: accuracy in the critical trials did not significantly differ between the two groups. The only significant difference emerged in the incompatible group, in which participants responded more accurately in the critical trials than in the control trials. This pattern is contrary to the initial hypothesis (i.e., that participants’ responses in the critical trials would be less accurate than their responses in the control trials).

One possible alternative explanation for these results is the repetition priming effect of the critical target stimulus. As this stimulus appeared more frequently than any other control target stimulus, participants’ familiarity with the target object may have influenced their responses. The repetition priming effect could have interfered with the effect of the verbal instructions and distorted the overall results. Therefore, Experiment 2 accounted for the potential influence of the repetition priming effect by separating the effect of the verbal instructions from the repetition priming effect.

Experiment 2

Despite the significant findings in Experiment 1, the design of that experiment did not account for the potential influence of familiarity with the target object (Hout & Goldinger, Citation2010) that can develop during the probe phase. The familiarity effect implies that participants’ response times and accuracy gradually improve due to multiple repetitions of the target stimuli. This concept shares the core idea of the repetition priming effect (Logan, Citation1990), which states that multiple repetitions of the same stimuli cause a priming effect.

In many behavioural studies evaluating the repetition priming effect, the priming occurs during a priming phase, and the subsequent evaluation of that effect occurs during a probe phase (e.g., Eder & Dignath, 2017; Hommel, 2009; Hommel & Hommel, 2004). However, an unequal number of stimuli appearing during the probe phase can cause the same repetition priming effect to emerge passively, thereby distorting the overall results. In Experiment 1, the critical stimulus appeared at a proportion of 3:1 to the control stimuli; this unequal proportion could have caused a repetition priming effect during the probe phase and distorted the effect of the verbal instructions.

Therefore, Experiment 2 repeated Experiment 1 with an adjustment to account for the possible passive repetition priming effect. The critical stimulus from the verbal priming phase remained the same as in Experiment 1 (i.e., apple). However, it appeared an equal number of times as each of the other control stimuli. Furthermore, one of the control stimuli appeared three times more often than all of the other stimuli (i.e., repetition priming). This allowed me to evaluate specifically whether the repetition priming effect can occur solely during the probe phase.

Methods

Participants

I recruited the same sample size as in Experiment 1. A total of 100 English-speaking participants participated in the study (52 females, 42 males, and five participants who did not specify their gender). After the data cleaning described in the “Data Preparation” subsection, the analyzed sample included 90 participants. The participants’ ages ranged from 30 to 45 years (M = 36.5, SD = 4.5). All participants were recruited through the recruiting portal Prolific and received monetary compensation for their participation. The local Ethics Committee of the Arctic University of Norway approved the study, and all participants provided informed consent prior to the experimental procedures.

Design

The experiment followed a 3 (prime: critical vs. control vs. frequency) by 2 (compatibility: compatible vs. incompatible) mixed design. Prime was a within-subject factor that specified whether a target stimulus in the visual search task represented a priming stimulus from the verbal instructions sentence (apple; critical trials) or control stimuli (all other fruits and vegetables; control trials), or repetition priming (carrot; frequency trials). Compatibility was a between-subject factor that specified whether the associated response with a priming stimulus from the verbal instructions sentence matched or did not match the instructed response for the visual search task (“apple”-left/right, fruits-left/right).
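As an illustration only, the two design factors described above could be coded per trial and per participant roughly as follows. This is a minimal sketch, not the study's own script: the function names and the string labels are hypothetical, and only the stimulus names "apple" and "carrot" come from the text.

```python
# Stimulus names taken from the text; everything else is a hypothetical sketch.
CRITICAL_STIMULUS = "apple"    # named in the verbal instruction sentence
FREQUENCY_STIMULUS = "carrot"  # most frequent target in Experiment 2


def prime_level(target: str) -> str:
    """Within-subject factor: classify a trial by its target stimulus."""
    if target == CRITICAL_STIMULUS:
        return "critical"
    if target == FREQUENCY_STIMULUS:
        return "frequency"
    return "control"


def compatibility(instructed_side: str, fruit_side: str) -> str:
    """Between-subject factor.

    instructed_side: side paired with "apple" in the memorized sentence.
    fruit_side: side assigned to fruits in the visual search task.
    """
    return "compatible" if instructed_side == fruit_side else "incompatible"
```

Because apple is a fruit, comparing the instructed side with the side assigned to fruits is sufficient to decide whether a participant belongs to the compatible or the incompatible group.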

Prime and Probe Phases. The prime phase was identical to that in Experiment 1, and the probe phase was similar to that in Experiment 1 but with certain adjustments to evaluate the repetition priming effect. First, the number of target stimuli was reduced to eight. As the boxplot analysis showed in Experiment 1, two stimuli from the vegetable category deviated from the other stimuli in terms of reaction times and response errors. Therefore, I excluded these two stimuli from Experiment 2. I also removed two randomly chosen stimuli from the fruit category (pineapple and strawberry). As in Experiment 1, the apple was a critical target stimulus.

Second, I used the programme PsychoPy to choose one random stimulus from the vegetable category to be a frequency stimulus (carrot). The verbal priming stimulus appeared an equal number of times as each of the other control stimuli, and the frequency stimulus appeared at a 3:1 proportion. The visual search task was identical to the one in Experiment 1. The participants’ task was to find a target stimulus and identify it as a fruit or vegetable as quickly as possible. Participants did not receive any information about the frequency stimulus. They were therefore unaware that this stimulus would appear most often in the task.
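To make the 3:1 proportion concrete, a probe-phase trial list with one over-represented target could be assembled along the following lines. This is a sketch under stated assumptions rather than the PsychoPy code used in the study: the function name, the placeholder stimulus names other than "apple" and "carrot", and the number of repetitions per stimulus are all hypothetical.

```python
import random
from collections import Counter

# "apple" and "carrot" come from the text; the remaining six names are placeholders.
STIMULI = ["apple", "carrot", "fruit_2", "fruit_3", "fruit_4",
           "vegetable_2", "vegetable_3", "vegetable_4"]


def build_probe_trials(stimuli, frequency_stimulus, base_reps, ratio=3, seed=None):
    """Return a shuffled list of target stimuli.

    Every stimulus appears base_reps times, except the frequency stimulus,
    which appears ratio * base_reps times (3:1 by default).
    """
    rng = random.Random(seed)
    trials = []
    for stimulus in stimuli:
        reps = base_reps * ratio if stimulus == frequency_stimulus else base_reps
        trials.extend([stimulus] * reps)
    rng.shuffle(trials)
    return trials


# Hypothetical usage: 10 repetitions per stimulus, 30 for the frequency stimulus.
trials = build_probe_trials(STIMULI, "carrot", base_reps=10, seed=1)
counts = Counter(trials)
```

In this sketch the critical stimulus (apple) is treated like any control stimulus with respect to frequency, which mirrors the adjustment described for Experiment 2.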

Data preparation and data analysis

The second experiment involved the same data preparation and data analysis procedures as Experiment 1. I excluded one participant whose age fell outside the sampling criteria (younger than 30 or older than 45). I removed two outliers who made more than 25% incorrect keypresses (not “A” or “L”) during the probe phase. Other participants’ incorrect responses were also excluded (2.56% data lost). Participants missed the response deadline in only 0.30% of trials. Application of the boxplot method (Tukey, 1977) led to the exclusion of seven participants due to an excessive number of response errors (more than 10%). In addition, 6.92% of trials were excluded to account for intra-trial priming (i.e., the same target stimulus appearing two or more times in a row). The response error analysis included 8740 observations.
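The exclusion of immediate target repetitions can be sketched as below. The sketch assumes that only the repeated trial (the second and any later occurrence in a run) is flagged and that the function is applied to one participant's trial sequence at a time; the text does not specify either detail, and the function name is hypothetical.

```python
def flag_immediate_repetitions(targets):
    """Return one boolean per trial: True if the target equals the previous trial's target."""
    flags = []
    previous = None
    for target in targets:
        flags.append(target == previous)
        previous = target
    return flags


# Hypothetical usage on a short sequence of targets from one participant.
sequence = ["apple", "apple", "carrot", "carrot", "carrot", "apple"]
flags = flag_immediate_repetitions(sequence)
kept = [t for t, repeated in zip(sequence, flags) if not repeated]
```

Dropping the flagged trials leaves only trials whose target differs from the target of the immediately preceding trial.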

Before the response time analysis, all response errors were excluded from the data (3.05% data lost). Individual response times beyond the mean ± 2.5 SD, calculated by participant and within-participant conditions, were also excluded (1.92% data lost). The response time analysis included 7722 observations. The final statistical model was identical to that in Experiment 1, with prime and compatibility as fixed factors and random intercepts for participants and stimulus identity.
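Separately from the statistical model, the response-time trimming rule stated in this subsection (mean ± 2.5 SD, computed per participant and within-participant condition) can be sketched as follows. This is a minimal illustration, not the analysis script used in the study; the function name, the dictionary keys, and the use of the sample standard deviation are assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_response_times(trials, criterion=2.5):
    """Keep trials whose RT lies within mean +/- criterion * SD of their cell.

    trials: list of dicts with the (hypothetical) keys
            'participant', 'condition', and 'rt'.
    A cell is one participant-by-condition combination.
    """
    cells = defaultdict(list)
    for trial in trials:
        cells[(trial["participant"], trial["condition"])].append(trial["rt"])

    bounds = {}
    for key, rts in cells.items():
        if len(rts) < 2:
            continue  # SD is undefined for a single value; leave the cell untrimmed
        m, sd = mean(rts), stdev(rts)
        bounds[key] = (m - criterion * sd, m + criterion * sd)

    kept = []
    for trial in trials:
        key = (trial["participant"], trial["condition"])
        if key not in bounds:
            kept.append(trial)
            continue
        low, high = bounds[key]
        if low <= trial["rt"] <= high:
            kept.append(trial)
    return kept
```

Computing the bounds within each participant-by-condition cell means that a slow participant's typical responses are not removed merely because they are slow relative to the whole sample.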

Table 2. Descriptive Statistics of the Response Time Test Phase and Response Errors.

Results and discussion

Reaction time

The effect of reaction time as a function of prime and compatibility varied in intercepts across participants (SD = 0.09, χ2(1) = 393.3, p < .001), indicating significant by-participant variation around the average intercept. The effect of response times as a function of prime and compatibility varied in intercepts across stimulus identity (SD = 0.02, χ2(1) = 26.6, p < .001), indicating significant by-stimulus identity variation around the average intercept.
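For readers who prefer notation, the random-intercept structure described here can be written schematically as follows. This is a sketch inferred from the description in the text and Note 1, not the exact model specification: the symbols, the indicator coding of the three-level prime factor, and the Gaussian residual term are assumptions.

```latex
\[
\begin{aligned}
y_{t} &= \beta_0
  + \boldsymbol{\beta}_{P}^{\top}\mathbf{x}^{\mathrm{prime}}_{t}
  + \beta_{C}\,x^{\mathrm{comp}}_{i(t)}
  + \boldsymbol{\beta}_{PC}^{\top}\bigl(\mathbf{x}^{\mathrm{prime}}_{t}\,x^{\mathrm{comp}}_{i(t)}\bigr)
  + u_{i(t)} + w_{j(t)} + \varepsilon_{t}, \\
u_{i} &\sim \mathcal{N}(0,\sigma_{u}^{2}), \qquad
w_{j} \sim \mathcal{N}(0,\sigma_{w}^{2}), \qquad
\varepsilon_{t} \sim \mathcal{N}(0,\sigma^{2}).
\end{aligned}
\]
```

Here t indexes trials, i(t) is the participant and j(t) the stimulus identity on trial t, the vector x^prime holds two indicator variables for the three prime levels, and x^comp codes the compatibility group. Under this reading, the SDs reported above correspond to the by-participant intercept SD (0.09) and the by-stimulus intercept SD (0.02).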

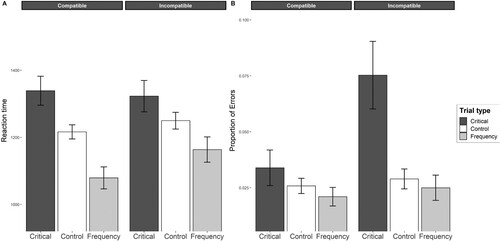

The two-way interaction between prime and compatibility was significant (b = 0.04, 95% CI [0.01, 0.07], p < .001), indicating that the differences among the critical, control, and frequency trials in the compatible group differed from the corresponding differences in the incompatible group. In the compatible group, participants’ responses were significantly slower in the critical trials than in the control trials (b = 0.09, 95% CI [−0.15, −0.03], p = .014). Participants’ responses were also significantly slower in the critical trials than in the frequency trials (b = 0.21, 95% CI [−0.29, −0.13], p < .001). Participants’ responses in the control trials were also significantly slower than their responses in the frequency trials (b = 0.11, 95% CI [0.06, 0.18], p = .005). These results indicated that the response times were fastest when the target stimulus was the most-repeated stimulus in the visual search task (i.e., repetition priming effect). The results also showed that the verbal priming stimulus had an inhibitory influence on response times.

In the incompatible group, the response times in the critical and control trials were not significantly different (b = 0.05, 95% CI [−0.12, 0.01], p = .123). In contrast, the response times in the frequency trials were significantly faster than the response times in the critical trials (b = 0.12, 95% CI [−0.21, −0.04], p = .013) and the response times in the control trials (b = −0.07, 95% CI [0.00, 0.13], p = .050). These findings indicated that the participants responded significantly faster to the most-repeated target stimulus than to the verbal priming stimulus or control stimuli.

In addition, the between-subject analysis revealed that participants’ response times in the critical trials did not significantly differ between the compatible and incompatible groups (b = −0.01, 95% CI [−0.07, 0.04], p = .689). Response times in the control trials also did not differ between the compatible and incompatible groups (b = −0.02, 95% CI [−0.08, 0.05], p = .213). Finally, the analysis revealed a significant difference in the response times in the frequency trials between the compatible and incompatible groups (b = −0.07, 95% CI [−0.12, −0.02], p = .002). Figure 4A illustrates this pattern of findings. Appendix presents a table with all of the fixed factor results.

Figure 4. An Illustration of Mixed-Models Analysis.

Note. The plot illustrates the results from the linear mixed model (A) and generalized linear mixed model (B), with confidence intervals derived from these models. The mean values on both graphs represent marginally estimated means.

Response errors

The effect of response errors as a function of the prime and compatibility varied in intercepts across participants (SD = 0.54, χ2(1) = 18.6, p < .001), indicating significant by-participant variation around the average intercept. However, the effect of response errors as a function of the prime and compatibility did not vary in intercepts across stimulus identity (SD = 0.00, χ2(1) = 0, p = .99). Therefore, the final model did not include stimulus identity as a random factor.

The overall two-way interaction between prime and compatibility was marginally significant (b = −0.37, 95% CI [−0.83, 0.06], p = .091), suggesting that the differences among the critical, control, and frequency trials tended to differ between the compatible and incompatible groups. In the compatible group, the results showed no significant difference between the critical and control trials (b = 0.28, 95% CI [−0.72, 0.33], p = .258). There was also no significant difference between the frequency and control trials (b = 0.21, 95% CI [−0.19, 0.68], p = .315). The participants’ accuracy was only marginally different between the critical and frequency trials (b = 0.49, 95% CI [−1.12, 0.14], p = .090).

In the incompatible group, the participants’ accuracy was significantly different between the critical and control trials (b = 1.06, 95% CI [−1.54, −0.60], p < .001). The participants’ accuracy was also significantly different between the critical and frequency trials (b = 1.15, 95% CI [−1.75, −0.54], p < .001). However, the participants’ accuracy did not significantly differ between the control and frequency trials (b = 0.15, 95% CI [−0.41, 0.59], p = .532).

The between-subject comparison showed that participants’ responses in the compatible group were significantly more accurate in the critical trials than participants’ responses in the incompatible group (b = 0.84, 95% CI [0.20, 1.54], p = .008). In comparison, participants’ accuracy did not significantly differ between the groups in the control trials (b = −0.11, 95% CI [−0.45, 0.10], p = .561) or in the frequency trials (b = −0.17, 95% CI [−0.45, 0.76], p = .599). Figure 4B illustrates this pattern of findings. Appendix presents a table with all of the fixed factor results.

Discussion

In terms of reaction time analysis, the results showed that participants responded faster to the frequency stimulus than to the critical and control stimuli. These findings indicate that the most-repeated stimulus (carrot) in the probe phase caused the repetition priming effect. Furthermore, the results showed that the participants’ responses to the verbal priming stimulus (apple) were slowest in comparison to their responses to the control or frequency stimuli. These results contradict the findings in Experiment 1 and highlight that the results in Experiment 1 were caused by the repetition priming effect that overrode the effect of verbal priming.

In terms of response errors, the results from Experiment 2 indicate that the verbal action-effect sentence formed an association between the priming stimulus and the priming response, leading to improved response accuracy in the critical trials in the compatible group compared to the incompatible group. These results replicate previous findings on verbal instructions and indicate that verbal instructions formulated in an action-oriented manner (i.e., stimulus-response) function as a unified priming mechanism (Muhle-Karbe et al., 2017).

General discussion

The present study investigated whether a verbal priming action-effect sentence acts as a selection bias that influences visual search. The main idea behind the action-effect sentence was to evaluate not only the perceptual aspect of verbal priming but also the behavioural aspect through verbal effect-response associations. Specifically, a priming sentence formulated in an effect-response manner should not only prime perceptual areas, making them more sensitive to a specific stimulus; it should also prime a particular response selection associated with that stimulus, thereby acting as a unified priming mechanism.

Overall, the results show that the verbal action-effect sentence influenced visual search performance. In terms of reaction time, the verbal instructions slowed participants’ responses. Responses were also slowed in the compatible condition, when the target stimulus and response direction required in the visual search task matched the stimulus and response direction specified in the priming action-effect sentence. In terms of response errors, the priming action-effect sentence influenced participants’ accuracy as a unified (i.e., stimulus-response) priming mechanism, as the results in Experiment 2 showed: accuracy was significantly better in the compatible condition than in the incompatible condition. This supports the previous findings of Damanskyy et al. (2022) and Theeuwes et al. (2015), which showed that a verbal priming action-effect sentence forms an association between a verbally specified stimulus and a response.

The repetition priming effect of the most frequent stimulus

In addition to the verbal priming effect, the present studies evaluated the repetition priming effect that could appear in the probe phase due to one of the target stimuli appearing most frequently. Repetition priming implies that when participants encounter a specific target object more often, their performance gradually improves in relation to that object throughout the visual search trials because the most-repeated object primes the participants’ search template. Within the visual search paradigm, this effect has often been evaluated in studies on familiarity with the target object (Hout & Goldinger, 2010).

The findings from Experiment 2 demonstrated that repetition priming occurred when one of the control stimuli appeared at a 3:1 proportion with the other target stimuli. These results indicated that the repetition priming effect can occur passively during the probe phase, facilitating response times. Furthermore, the results of Experiment 2 suggested that the effect found in Experiment 1 was caused by the repetition priming effect rather than by the verbal priming sentence. As participants’ responses were facilitated in Experiment 1 and not inhibited as in Experiment 2, this facilitation indicates that the effect of verbal instructions (the inhibition of response times) was modified by the repetition priming effect (the facilitation of responses). This is consistent with the argument of Huang et al. (2013) that learning based on verbal instructions is more flexible to adaptation and change than learning based on the active repetition of the same behaviour.

The results in Experiment 2 show that the frequency stimulus showed a significant interaction with compatibility. When participants’ responses to the most-frequent stimulus matched the response specified in the verbal priming action-effect sentence, participants’ response times were facilitated. However, this pattern of compatibility was not observed in the control condition in the two experiments. These findings potentially indicate that the part of a verbal priming sentence that specifies the response direction (i.e., “left” or “right”) can have an independent priming effect, regardless of whether this part of the verbal priming sentence is syntactically connected to a specific stimulus. Consequently, when responses to the most-frequent stimulus are habituated and become automatic, they might be more conducive to an additional priming effect. However, these findings require replication to validate this point.

The inhibition of visual searches

Previous studies within the visual search paradigm (Schmidt & Zelinsky, 2009; Wolfe et al., 2004) and the implementation intention paradigm (Wieber & Sassenberg, 2006) argued that verbal information affects visual selective attention. This effect has also been observed within the facilitation paradigm (i.e., the facilitation of visual searches; Knapp & Abrams, 2012; Schmidt & Zelinsky, 2009). However, my findings demonstrated that when verbal instructions are formulated in an action-oriented manner (i.e., action-effect) and are irrelevant to the visual search task, these instructions can slow down visual search performance. Considering previous studies on verbal instructions (Hartstra et al., 2012; Muhle-Karbe et al., 2017; Van ’t Wout et al., 2013), I suggest two possible explanations for this inhibitory effect.

First, this inhibitory effect may be related to variability in the search templates that participants formed. When participants comprehended the verbal action-effect sentence, they retrieved the representation of a critical stimulus (apple) from long-term memory, which influenced their search template. Given that each participant could have had a different representation of the critical stimulus, high variability in the search templates could have arisen among participants.

Consequently, when participants encountered a critical stimulus as a target object, their search template could have contained a different representation of the target object, causing a conflict between the template and the displayed target and inhibiting their responses. However, this idea does not explain the instruction-compatibility effect within the response errors. If participants formed different object representations of the critical stimulus, then the associated response should only have been associated with those specific object representations and should not have led to better accuracy in the compatible condition than in the incompatible condition. However, as the response error analysis showed, participants’ accuracy was significantly better in the compatible condition than in the incompatible one, highlighting the presence of the instruction-compatibility effect.

Second, the deceleration of response times may be related to the relevance of the verbal priming sentence. In the studies of Knapp and Abrams (2012) and Schmidt and Zelinsky (2009), participants were aware that the verbal cues they received were relevant to the upcoming visual search task. In contrast, in my experiments, I explained to participants that the verbal action-effect sentence was irrelevant to the visual search task, but they needed to remember it nonetheless, as they would have to apply it after the visual search task. Therefore, encountering the verbal priming stimulus as a target stimulus could have caused a spontaneous memory retrieval of the behavioural intention coded in the verbal sentence, inhibiting their responses.

Research on implementation intention (for a review, see Chen et al., 2015) has suggested that when participants form a verbal plan with the intention to execute it in the future, the execution of that plan will occur through a prospective memory mechanism as spontaneous memory retrieval. For example, Rummel et al. (2012) argued that when participants encountered stimuli that were specified in a previously learned verbal action plan, they spontaneously retrieved the previously learned action intention (i.e., prospective memory; McDaniel et al., 2008), which may have interfered with the current ongoing task. When participants encountered the verbally specified stimulus (i.e., apple) in Experiment 2, their responses could have been decelerated, not necessarily by the variability in the search templates but by the spontaneous memory retrieval of the action-effect sentence from the priming phase. This could have interfered with the ongoing task and caused delays in their response times.

Verbal instructions as selection bias

The results of Experiment 2 demonstrated how the repetition priming effect biases selective attention. When the same stimulus is encountered more often, it biases selective attention unintentionally and automatically, making participants’ search templates more sensitive to that stimulus and facilitating top-down attentional modulation (Grill-Spector et al., 2006). The results of Experiment 2 also demonstrate that the priming action-effect sentence inhibited visual search performance. This inhibition can also be considered an unintentional effect that worked in opposition to top-down attentional modulation.

Interpreting the present findings according to Awh et al.'s (2012) framework, I suggest that verbal instructions fall under the category of selection bias. Notably, however, verbal instructions can affect cognition in different ways (Braem et al., 2017). As the present study demonstrates, along with the findings of Knapp and Abrams (2012) and Schmidt and Zelinsky (2009), the effect of verbal primes depends on their relevance to the task in which they are evaluated. In addition, the underlying mechanisms of verbal instructions differ from the priming mechanisms of active behaviour in terms of flexibility to changes (Huang et al., 2013) and the practice effect (Pfeuffer et al., 2018). Therefore, exactly how verbal instructions influence cognitive control is debatable (Blache, 2017) because this question relates to how language influences cognition on a neural level (see Perlovsky & Sakai, 2014; and Poeppel, 2012, for an extended discussion).

Limitations and Future Directions

In the present study, the priming stimulus specified in the verbal instructions remained constant throughout the probe trials. Therefore, my findings do not answer the question of whether participants encoded specific features of the priming stimulus (apple) or an entire specific representation thereof that was potentially retrieved from long-term memory. Treisman and Gelade (1980) argued that the priority map of attentional focus does not necessarily encode an entire representation of a specific stimulus but only specific features thereof – that is, feature-based attention (for a review, see Carrasco, 2011). Moreover, using real objects as target stimuli, Yang and Zelinsky (2009) demonstrated that visual searches are based on a category-defined principle. Future studies can therefore provide additional insight into this topic by manipulating specific features of a priming stimulus (e.g., colour, size, shape) that can explain how a priority map precisely encodes a priming stimulus that is only presented verbally.

Although Experiments 1 and 2 demonstrated that the modulation of visual selective attention was achieved in a spatially independent manner, these results do not exclude the possibility that spatial attention (Carrasco, 2011) affects visual search. To account for the possible effect of spatial attention, I randomly varied the location of the stimuli in each trial. Nevertheless, spatial sensitivity might be more relevant when a specific location is involved in purposive behaviour (Moore & Zirnsak, 2017). Therefore, the precise answer to whether selection bias can also overcome the spatial dimension requires an experimental procedure in which the spatial dimension is systematically manipulated.

Conclusion

The findings of this study showed that verbal instructions modulated visual selective attention. Although the verbal instructions were irrelevant to the visual search task, they unintentionally affected visual search performance. I interpret these findings as evidence that verbal instructions extend the list of selection biases. While selection biases based on selection history have been studied primarily in behavioural research, biases based on active behaviour cannot explain all the flexibility that humans have within their cognitive control. The present findings highlight the importance of language in cognitive control and show how verbal instructions interact with it.

Ethical approval

This study was performed in line with the principles of the American Psychological Association. The Ethics Committee of the Arctic University of Norway granted ethical approval for this study (March 2020/No: 2017/1912).

Consent to participate

Informed consent was obtained from all individual participants included in the study prior to participation.

Availability of data and material

The datasets generated during the current study are available via the Open Science Framework (OSF): https://osf.io/4w6k9/?view_only=b8b2208fd93b4d2299eeaefc60238929.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Correction Statement

This article was originally published with errors, which have now been corrected in the online version. Please see Correction (https://doi.org/10.1080/13506285.2023.2289292)

Additional information

Funding

Notes

1 The simulation power analysis was based on the following statistical model: Outcome = prime * compatibility + (1|participants). This model did not include stimulus identity as a random factor. In contrast, the main models in the present study include stimulus identity as a random factor.

2 For a post-hoc exploratory analysis on a subgroup of participants who answered correctly in the memory task, see the supplementary materials available at: https://osf.io/4w6k9/?view_only=b8b2208fd93b4d2299eeaefc60238929

References

- Anderson, B. A., Laurent, P. A., & Yantis, S. (2011a). Learned value magnifies salience-based attentional capture. PLoS One, 6(11), e27926. https://doi.org/10.1371/journal.pone.0027926

- Anderson, B. A., Laurent, P. A., & Yantis, S. (2011b). Value-driven attentional capture. Proceedings of the National Academy of Sciences, 108(25), 10367–10371. https://doi.org/10.1073/pnas.1104047108

- Anderson, B. A., & Yantis, S. (2013). Persistence of value-driven attentional capture. Journal of Experimental Psychology: Human Perception and Performance, 39(1), 6–9. https://doi.org/10.1037/a0030860

- Awh, E., Belopolsky, A. V., & Theeuwes, J. (2012). Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences, 16(8), 437–443. https://doi.org/10.1016/j.tics.2012.06.010

- Blache, P. (2017). Light and deep parsing: A cognitive model of sentence processing. In (pp. 27–52). Cambridge University Press. https://doi.org/10.1017/9781316676974.002

- Braem, S., Liefooghe, B., De Houwer, J., Brass, M., & Abrahamse, E. (2017). There are limits to the effects of task instructions: Making the automatic effects of task instructions context-specific takes practice. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(3), 394–403. https://doi.org/10.1037/xlm0000310

- Brass, M., Liefooghe, B., Braem, S., & De Houwer, J. (2017). Following new task instructions: Evidence for a dissociation between knowing and doing. Neuroscience & Biobehavioral Reviews, 81(Pt A), 16–28. https://doi.org/10.1016/j.neubiorev.2017.02.012

- Bravo, M. J., & Farid, H. (2004). Search for a category target in clutter. Perception, 33(6), 643–652. https://doi.org/10.1068/p5244

- Bucker, B., & Theeuwes, J. (2014). The effect of reward on orienting and reorienting in exogenous cuing. Cognitive, Affective, & Behavioral Neuroscience, 14(2), 635–646. https://doi.org/10.3758/s13415-014-0278-7

- Carrasco, M. (2011). Visual attention: The past 25 years. Vision Research, 51(13), 1484–1525. https://doi.org/10.1016/j.visres.2011.04.012

- Chen, X.-j., Wang, Y., Liu, L.-l., Cui, J.-f., Gan, M.-y., Shum, D. H. K., & Chan, R. C. K. (2015). The effect of implementation intention on prospective memory: A systematic and meta-analytic review. Psychiatry Research, 226(1), 14–22. https://doi.org/10.1016/j.psychres.2015.01.011

- Conner, M., & Higgins, A. R. (2010). Long-term effects of implementation intentions on prevention of smoking uptake among adolescents: A cluster randomized controlled trial. Health Psychology, 29(5), 529–538. https://doi.org/10.1037/a0020317

- Damanskyy, Y., Martiny-Huenger, T., & Parks-Stamm, E. J. (2022). Unintentional response priming from verbal action–effect instructions. Psychological Research, 87, 161–175. https://doi.org/10.1007/s00426-022-01664-0

- Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

- Eder, A. B., & Dignath, D. (2017). Influence of verbal instructions on effect-based action control. Psychological Research, 81(2), 355–365. https://doi.org/10.1007/s00426-016-0745-6

- Efron, B., & Tibshirani, R. J. (1993). An introduction to the bootstrap (Vol. 57). Chapman & Hall/CRC.

- Ehinger, K. A., Hidalgo-Sotelo, B., Torralba, A., & Oliva, A. (2009). Modelling search for people in 900 scenes: A combined source model of eye guidance. Visual Cognition, 17(6-7), 945–978. https://doi.org/10.1080/13506280902834720

- Elsner, B., & Hommel, B. (2001). Effect anticipation and action control. Journal of Experimental Psychology: Human Perception and Performance, 27(1), 229–240. https://doi.org/10.1037/0096-1523.27.1.229

- Failing, M., & Theeuwes, J. (2016). Reward alters the perception of time. Cognition, 148, 19–26. https://doi.org/10.1016/j.cognition.2015.12.005

- Failing, M. F., & Theeuwes, J. (2015). Nonspatial attentional capture by previously rewarded scene semantics. Visual Cognition, 23(1-2), 82–104. https://doi.org/10.1080/13506285.2014.990546

- Fletcher-Watson, S., Findlay, J. M., Leekam, S. R., & Benson, V. (2008). Rapid detection of person information in a naturalistic scene. Perception, 37(4), 571–583. https://doi.org/10.1068/p5705

- Frings, C., Hommel, B., Koch, I., Rothermund, K., Dignath, D., Giesen, C., Kiesel, A., Kunde, W., Mayr, S., Moeller, B., Möller, M., Pfister, R., & Philipp, A. (2020). Binding and retrieval in action control (BRAC). Trends in Cognitive Sciences, 24(5), 375–387. https://doi.org/10.1016/j.tics.2020.02.004

- Gollwitzer, P. (1999). Implementation intentions: Strong effects of simple plans. American Psychologist, 54(7), 493–503. https://doi.org/10.1037/0003-066X.54.7.493

- Gollwitzer, P., & Sheeran, P. (2006). Implementation intentions and goal achievement: A meta-analysis of effects and processes. Advances in Experimental Social Psychology, 38, 69–119. https://doi.org/10.1016/S0065-2601(06)38002-1

- Gollwitzer, P. M. (2014). Weakness of the will: Is a quick fix possible? Motivation and Emotion, 38(3), 305–322. https://doi.org/10.1007/s11031-014-9416-3

- Green, P., MacLeod, C. J., & Nakagawa, S. (2016). SIMR: An R package for power analysis of generalized linear mixed models by simulation. Methods in Ecology and Evolution, 7(4), 493–498. https://doi.org/10.1111/2041-210X.12504

- Grill-Spector, K., Henson, R., & Martin, A. (2006). Repetition and the brain: Neural models of stimulus-specific effects. Trends in Cognitive Sciences, 10(1), 14–23. https://doi.org/10.1016/j.tics.2005.11.006

- Hartstra, E., Waszak, F., & Brass, M. (2012). The implementation of verbal instructions: Dissociating motor preparation from the formation of stimulus–response associations. NeuroImage, 63(3), 1143–1153. https://doi.org/10.1016/j.neuroimage.2012.08.003

- Henson, R. N., Eckstein, D., Waszak, F., Frings, C., & Horner, A. J. (2014). Stimulus–response bindings in priming. Trends in Cognitive Sciences, 18(7), 376–384. https://doi.org/10.1016/j.tics.2014.03.004

- Hommel, B. (2005). Perception in action: Multiple roles of sensory information in action control. Cognitive Processing, 6(1), 3–14. https://doi.org/10.1007/s10339-004-0040-0

- Hommel, B. (2009). Action control according to TEC (theory of event coding). Psychological Research, 73(4), 512–526. https://doi.org/10.1007/s00426-009-0234-2

- Hommel, B. (2004). Coloring an action: Intending to produce color events eliminates the Stroop effect. Psychological Research, 68(2), 74–90. https://doi.org/10.1007/s00426-003-0146-5

- Hout, M. C., & Goldinger, S. D. (2010). Learning in repeated visual search. Attention, Perception & Psychophysics, 72(5), 1267–1282. https://doi.org/10.3758/APP.72.5.1267

- Huang, T.-R., Hazy, T. E., Herd, S. A., & O'Reilly, R. C. (2013). Assembling old tricks for new tasks: A neural model of instructional learning and control. Journal of Cognitive Neuroscience, 25(6), 843–851. https://doi.org/10.1162/jocn_a_00365

- Judd, C. M., McClelland, G. H., & Culhane, S. E. (1995). Data analysis: Continuing issues in the everyday analysis of psychological data. Annual Review of Psychology, 46(1), 433–465. https://doi.org/10.1146/annurev.ps.46.020195.002245

- Knapp, W. H., & Abrams, R. A. (2012). Fundamental differences in visual search with verbal and pictorial cues. Vision Research, 71, 28–36. https://doi.org/10.1016/j.visres.2012.08.015

- Kristjánsson, Á., & Campana, G. (2010). Where perception meets memory: A review of repetition priming in visual search tasks. Attention, Perception, & Psychophysics, 72(1), 5–18. https://doi.org/10.3758/APP.72.1.5

- Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2017). lmerTest package: Tests in linear mixed effects models. Journal of Statistical Software, 82(13), 1–26. https://doi.org/10.18637/jss.v082.i13

- Lamy, D. F., & Kristjánsson, Á. (2013). Is goal-directed attentional guidance just intertrial priming? A review. Journal of Vision, 13(3), 14. https://doi.org/10.1167/13.3.14

- Della Libera, C., & Chelazzi, L. (2006). Visual selective attention and the effects of monetary rewards. Psychological Science, 17(3), 222–227. https://doi.org/10.1111/j.1467-9280.2006.01689.x

- Liefooghe, B., Braem, S., & Meiran, N. (2018). The implications and applications of learning via instructions. Acta Psychologica, 184, 1–3. https://doi.org/10.1016/j.actpsy.2017.09.015

- Liefooghe, B., & De Houwer, J. (2018). Automatic effects of instructions do not require the intention to execute these instructions. Journal of Cognitive Psychology, 30(1), 108–121. https://doi.org/10.1080/20445911.2017.1365871

- Liefooghe, B., Wenke, D., & De Houwer, J. (2012). Instruction-based task-rule congruency effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38(5), 1325–1335. https://doi.org/10.1037/a0028148

- Logan, G. D. (1990). Repetition priming and automaticity: Common underlying mechanisms? Cognitive Psychology, 22(1), 1–35. https://doi.org/10.1016/0010-0285(90)90002-L

- Martiny-Huenger, T., Martiny, S. E., Parks-Stamm, E. J., Pfeiffer, E., & Gollwitzer, P. M. (2017). From conscious thought to automatic action: A simulation account of action planning. Journal of Experimental Psychology: General, 146(10), 1513–1525. https://doi.org/10.1037/xge0000344

- Corbetta, M. (1998). Frontoparietal cortical networks for directing attention and the eye to visual locations: Identical, independent, or overlapping neural systems? Proceedings of the National Academy of Sciences, 95(3), 831–838. https://doi.org/10.1073/pnas.95.3.831

- McDaniel, M. A., Howard, D. C., & Butler, K. M. (2008). Implementation intentions facilitate prospective memory under high attention demands. Memory & Cognition, 36(4), 716–724. https://doi.org/10.3758/MC.36.4.716

- Meiran, N., Cole, M. W., & Braver, T. S. (2012). When planning results in loss of control: Intention-based reflexivity and working-memory. Frontiers in Human Neuroscience, 6, 104. https://doi.org/10.3389/fnhum.2012.00104

- Meiran, N., Pereg, M., Kessler, Y., Cole, M., & Braver, T. (2015a). Reflexive activation of newly instructed stimulus–response rules: Evidence from lateralized readiness potentials in no-go trials. Cognitive, Affective, & Behavioral Neuroscience, 15(2), 365–373. https://doi.org/10.3758/s13415-014-0321-8

- Meiran, N., Pereg, M., Kessler, Y., Cole, M. W., & Braver, T. S. (2015b). The power of instructions: Proactive configuration of stimulus–response translation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(3), 768–786. https://doi.org/10.1037/xlm0000063

- Memelink, J., & Hommel, B. (2013). Intentional weighting: A basic principle in cognitive control. Psychological Research, 77(3), 249–259. https://doi.org/10.1007/s00426-012-0435-y

- Moore, T., & Zirnsak, M. (2017). Neural mechanisms of selective visual attention. Annual Review of Psychology, 68(1), 47–72. https://doi.org/10.1146/annurev-psych-122414-033400

- Muhle-Karbe, P. S., Duncan, J., De Baene, W., Mitchell, D. J., & Brass, M. (2017). Neural coding for instruction-based task sets in human frontoparietal and visual cortex. Cerebral Cortex, 27(3). https://doi.org/10.1093/cercor/bhw032

- Müsseler, J., & Hommel, B. (1997). Blindness to response-compatible stimuli. Journal of Experimental Psychology: Human Perception and Performance, 23(3), 861–872. https://doi.org/10.1037/0096-1523.23.3.861

- Orne, M. T. (2002). On the social psychology of the psychological experiment: With particular reference to demand characteristics and their implications. Prevention & Treatment, 5(1). https://doi.org/10.1037/1522-3736.5.1.535a

- Papies, E. K., Aarts, H., & de Vries, N. K. (2009). Planning is for doing: Implementation intentions go beyond the mere creation of goal-directed associations. Journal of Experimental Social Psychology, 45(5), 1148–1151. https://doi.org/10.1016/j.jesp.2009.06.011

- Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., Kastman, E., & Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior Research Methods, 51(1), 195–203. https://doi.org/10.3758/s13428-018-01193-y

- Perlovsky, L., & Sakai, K. L. (2014). Language and cognition. Frontiers in Behavioral Neuroscience, 8, 436. https://doi.org/10.3389/fnbeh.2014.00436

- Pfeuffer, C. U., Moutsopoulou, K., Pfister, R., Waszak, F., & Kiesel, A. (2017). The power of words: On item-specific stimulus–response associations formed in the absence of action. Journal of Experimental Psychology: Human Perception and Performance, 43(2), 328–347. https://doi.org/10.1037/xhp0000317

- Pfeuffer, C. U., Moutsopoulou, K., Waszak, F., & Kiesel, A. (2018). Multiple priming instances increase the impact of practice-based but not verbal code-based stimulus-response associations. Acta Psychologica, 184, 100–109. https://doi.org/10.1016/j.actpsy.2017.05.001

- Poeppel, D. (2012). The maps problem and the mapping problem: Two challenges for a cognitive neuroscience of speech and language. Cognitive Neuropsychology, 29(1-2), 34–55. https://doi.org/10.1080/02643294.2012.710600

- R Core Team. (2021). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

- Rummel, J., Einstein, G. O., & Rampey, H. (2012). Implementation-intention encoding in a prospective memory task enhances spontaneous retrieval of intentions. Memory, 20(8), 803–817. https://doi.org/10.1080/09658211.2012.707214

- Schmidt, J., & Zelinsky, G. J. (2009). Search guidance is proportional to the categorical specificity of a target cue. Quarterly Journal of Experimental Psychology, 62(10), 1904–1914. https://doi.org/10.1080/17470210902853530

- Sheeran, P., Webb, T. L., & Gollwitzer, P. M. (2005). The interplay between goal intentions and implementation intentions. Personality and Social Psychology Bulletin, 31(1), 87–98. https://doi.org/10.1177/0146167204271308

- Shin, Y. K., Proctor, R. W., & Capaldi, E. J. (2010). A review of contemporary ideomotor theory. Psychological Bulletin, 136(6), 943–974. https://doi.org/10.1037/a0020541

- Soldan, A., Clarke, B., Colleran, C., & Kuras, Y. (2012). Priming and stimulus-response learning in perceptual classification tasks. Memory, 20(4), 400–413. https://doi.org/10.1080/09658211.2012.669482

- Theeuwes, J. (2013). Feature-based attention: It is all bottom-up priming. Philosophical Transactions of the Royal Society B: Biological Sciences, 368(1628), 20130055. https://doi.org/10.1098/rstb.2013.0055

- Theeuwes, J. (2018). Visual selection: Usually fast and automatic; seldom slow and volitional. Journal of Cognition, 1(1), 29. https://doi.org/10.5334/joc.13

- Theeuwes, J., & Failing, M. (2020). Attentional selection: Top-down, bottom-up and history-based biases. Cambridge University Press.

- Theeuwes, J., & Van der Burg, E. (2011). On the limits of top-down control of visual selection. Attention, Perception, & Psychophysics, 73(7), 2092–2103. https://doi.org/10.3758/s13414-011-0176-9

- Theeuwes, J., & Van der Burg, E. (2013). Priming makes a stimulus more salient. Journal of Vision, 13(3), 21. https://doi.org/10.1167/13.3.21

- Theeuwes, M., De Houwer, J., Eder, A., & Liefooghe, B. (2015). Congruency effects on the basis of instructed response-effect contingencies. Acta Psychologica, 158, 43–50. https://doi.org/10.1016/j.actpsy.2015.04.002

- Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97–136. https://doi.org/10.1016/0010-0285(80)90005-5

- Tukey, J. W. (1977). Exploratory data analysis. Addison-Wesley.

- Van ’t Wout, F., Lavric, A., & Monsell, S. (2013). Are stimulus–response rules represented phonologically for task-set preparation and maintenance? Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(5), 1538–1551. https://doi.org/10.1037/a0031672

- Wieber, F., & Sassenberg, K. (2006). I can't take my eyes off of it – attention attraction effects of implementation intentions. Social Cognition, 24(6), 723–752. https://doi.org/10.1521/soco.2006.24.6.723

- Wolfe, J. M. (1994). Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review, 1(2), 202–238. https://doi.org/10.3758/BF03200774

- Wolfe, J. M., Horowitz, T. S., Kenner, N., Hyle, M., & Vasan, N. (2004). How fast can you change your mind? The speed of top-down guidance in visual search. Vision Research, 44(12), 1411–1426. https://doi.org/10.1016/j.visres.2003.11.024

- Yang, H., & Zelinsky, G. J. (2009). Visual search is guided to categorically-defined targets. Vision Research, 49(16), 2095–2103. https://doi.org/10.1016/j.visres.2009.05.017

- Yantis, S., & Egeth, H. E. (1999). On the distinction between visual salience and stimulus-driven attentional capture. Journal of Experimental Psychology: Human Perception and Performance, 25(3), 661–676. https://doi.org/10.1037/0096-1523.25.3.661

- Yarbus, A. L. (1967). Eye movements and vision (Translated from Russian by Basil Haigh). Plenum Press.

Appendices

Appendix A

Table A1. Results from the Reaction Time Analysis of the Fixed Effects in Experiment 1.

Table A2. Results from the Response Error Analysis of the Fixed Effects in Experiment 1.

Table A3. Results from the Reaction Time Analysis of the Fixed Effects in Experiment 2.

Table A4. Results from the Response Error Analysis of the Fixed Effects in Experiment 2.

Appendix B

Slide 1

INFORMED CONSENT

Welcome to this study on concentration. We will ask you to perform a simple attention speed categorization task. You will be presented with a series of different figures; your task will be to find a specific figure among others and to press either the left (A) or right (L) key on your keyboard. More detailed instructions follow the informed consent information below.

VOLUNTARY PARTICIPATION

Your participation in this study is voluntary. You may leave the study at any time without needing to provide a reason. If you choose to leave before completing the study, your data will not be stored.

CONFIDENTIALITY

The recorded data will only be used for scientific purposes. Participation in this study is anonymous. The only personal information collected from you will be your age and gender. No additional participant identifiers will be recorded, and none of the information collected can be used to identify participants. As the data will be stored anonymously, individual data cannot be made available on request.

CONTACT INFORMATION

If you have questions about this study, you may contact the lead researcher Yevhen Damanskyy at [email protected].

Press the spacebar to continue (or close your browser if you do not want to participate now).

By pressing the spacebar, I acknowledge that I have read and understood these terms and conditions and agree to participate in the study.

Slide 2

This study can only be completed using a traditional desktop PC or laptop with a physical keyboard. If you are reading this on a tablet or smartphone, you will need to switch to a personal computer and restart the study.

IMPORTANT! If you are colourblind, unfortunately you will not be able to complete the tasks correctly. In this case, we kindly ask you not to participate in this study and just close your browser page.

To prepare for the task, please place your chair in a comfortable position so that you can easily reach the keyboard with both hands. You will be using the A and L keys. Please place your left and right index fingers on the respective left (A) and right (L) keys and press each key a few times to get a sense of how they work.

For further instructions, press the spacebar.

Slide 3

At the end of the experiment, you will be presented with a single task with the following instructions.

Please read and memorize the following instructions:

TO MAKE AN APPLE APPEAR ON THE SCREEN,

I NEED TO PRESS THE LEFT (A) KEY.

It is important that you memorize the capitalized sentence above; please repeat the sentence in your head a few times to make sure that you have memorized it.

Press the spacebar to continue.

Slide 4

Please repeat the instruction sentence from the previous screen in your head a few times.

Please also remember that “left” refers to the “A” key, and “right” refers to the “L” key.

Press the spacebar when you are done.

Slide 5