Abstract

Left-hemispheric language dominance has been suggested by observations in patients with brain damage as early as the 19th century, and has since been confirmed by modern behavioural and brain imaging techniques. Nevertheless, most of these studies have been conducted in small samples with predominantly Anglo-American background, which limits generalization and may obscure differences between cultural and linguistic backgrounds. To overcome this limitation, we conducted a global dichotic listening experiment using a smartphone application for remote data collection. The results from over 4,000 participants with more than 60 different language backgrounds showed that left-hemispheric language dominance is indeed a general phenomenon. However, the degree of lateralization appears to be modulated by linguistic background. These results suggest that more emphasis should be placed on cultural/linguistic specificities of psychological phenomena and on the need to collect more diverse samples.

We would like to thank the iDichotic users from around the world who shared their test results with us.

No potential conflict of interest was reported by the authors.

The present work was funded by the European Research Council (ERC) Advanced Grant [no. 249516] to Prof. Kenneth Hugdahl.

For the past 50 years, dichotic listening has served as a non-invasive method for the study of hemispheric lateralization of speech perception (Hugdahl, 2011). Its hallmark finding is a preference to report the right-ear over the left-ear stimulus of two simultaneously presented consonant-vowel (CV) syllables or words, a phenomenon called the right-ear advantage (REA; Bryden, 1988; Hugdahl, 1995; Kimura, 1961). The REA is an indicator of left-lateralized processing of language and has been validated with a variety of methods, such as functional magnetic resonance imaging (fMRI; e.g., Hund-Georgiadis, Lex, Friederici, & von Cramon, 2002; van den Noort, Specht, Rimol, Ersland, & Hugdahl, 2008; Westerhausen, Kompus, & Hugdahl, 2014), positron emission tomography (Hugdahl et al., 1999), the Wada procedure (e.g., Hugdahl, Carlsson, Uvebrant, & Lundervold, 1997; Strauss, Gaddes, & Wada, 1987), as well as lesion studies (e.g., Gramstad, Engelsen, & Hugdahl, 2003; Pollmann, Maertens, von Cramon, Lepsien, & Hugdahl, 2002; Sparks, Goodglass, & Nickel, 1970).

Although the REA is one of the best-described perceptual phenomena in neuropsychological research, it is based on results obtained from small samples in the laboratory. In addition, subjects typically fall into the so-called “WEIRD” category, that is, they come from Western, educated, industrialized, rich and democratic cultures (Jones, 2010). Moreover, Arnett (2008) reported on the dominance of samples from English-speaking countries, and particularly the USA, as they are represented in 82% of studies published in APA journals. One main reason for this state of affairs in psychological research is the set of constraints that accompany running experiments in the laboratory, which make it impossible to collect large-scale data from different countries and cultures within the same experimental design or paradigm and within reasonable time. As a consequence, empirical evidence for models and theories of general psychological phenomena is heavily biased towards a minority, about 5% of the world's population (see Arnett, 2008), and any findings made on the basis of this minority may not necessarily generalize to all humans (see Henrich, Heine, & Norenzayan, 2010). Indeed, previous studies have shown that cultural differences exist for complex tasks, e.g., in fairness and economic decision-making (Henrich et al., 2006), as well as for basic perceptual phenomena such as the Müller-Lyer illusion (Segall, Campbell, & Herskovits, 1966). Thus, universality should not be assumed before the phenomenon in question has been explored across a range of cultures. The internet and smartphones offer an opportunity for a paradigm shift in psychological research by allowing researchers to collect more diverse and larger samples, under various real-life conditions, yet with the same experimental paradigm (Gosling, Sandy, John, & Potter, 2010; see Miller, 2012). In this way, data collected globally should provide results that better represent the world's population and at the same time identify cultural/linguistic specificities among sub-populations.

In this paper we present the results of a large-scale (>4000 subjects) and international (>60 native languages) field experiment on language lateralization using a mobile app for data collection (iDichotic). The app served as a self-administered dichotic listening test based on the CV-paradigm (Hugdahl & Andersson, 1986), and it has previously been shown to produce both reliable and valid results (Bless et al., 2013). App-users from around the world could participate in this field experiment by submitting their test results to our database. This is the largest and (culturally/linguistically) most diverse sample of subjects that has been tested for language lateralization, allowing us to explore whether the phenomenon in question (i.e., the REA, indicating left-lateralized processing of language) is indeed a universal phenomenon (generality of the REA). Also, having data collected from individuals of different language backgrounds with exactly the same experimental paradigm allowed us to test for differences between language groups (specificity of the REA). We expected to find the REA irrespective of language background (generality) and sought to examine the differences in the magnitude of the REA between languages (specificity). Based on our previous study in a smaller sample using the same paradigm (Bless et al., 2013), we expected to find differences in the REA between language groups. However, other studies using different dichotic listening paradigms (multiple responses per trial) have not found cross-cultural differences (Cohen, Levy, & McShane, 1989; Nachshon, 1986). In addition, we also expected the size of the REA to be modulated by sex (males > females), handedness (right-handers > left-handers) and age (increasing REA with age), as reported in previous studies (Hirnstein, Westerhausen, Korsnes, & Hugdahl, 2013; Hiscock, Inch, Jacek, Hiscock-Kalil, & Kalil, 1994; Hugdahl, 2003).

METHODS

Database and samples

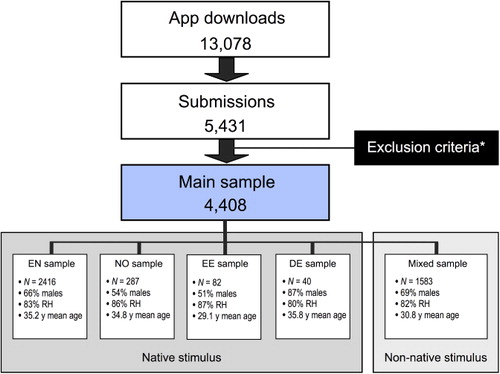

The complete database consists of 5,431 submissions from iDichotic app-users around the world. The app was promoted via various media channels (radio, web, newspapers). Since the data were collected under uncontrolled experimental conditions, through self-administration, a number of exclusion criteria were applied (see below) to control for this. This resulted in the exclusion of 1,022 data sets (19%) and a remaining main sample size of 4,408 participants (66% males, 83% right-handers, mean age 33.6) from 64 different native language backgrounds for the main analysis. Two thousand eight hundred twenty-five participants received the stimulus materials in their native language, while 1,583 participants received the materials in a non-native language (see for an overview). The experiment was carried out in accordance with the Declaration of Helsinki and informed consent was obtained prior to submission of the test results.

Material

The app iDichotic was developed in-house using the iOS software development kit (Apple Inc., Cupertino, CA) and is available free of charge on the App Store, which makes it compatible with iPhone, iPad and iPod touch devices. The test is based on the standard Bergen dichotic listening paradigm (Hugdahl, 2003; Hugdahl & Andersson, 1986). In this paradigm, the stimuli consist of the six CV-syllables /ba/, /da/, /ga/, /ta/, /ka/ and /pa/, presented via headphones in pairs, one syllable played in the right-ear channel and the other syllable played simultaneously in the left-ear channel. In this way, all possible combinations are presented forming 30 unlike pairs (e.g., /ba/-/ka/, /ta/-/ka/ etc.) and 6 like pairs (e.g., /da/-/da/, /ta/-/ta/). The stimuli were available in four different language-sets, that is, the syllables were spoken with constant intonation and intensity by native speakers of (British) English, Norwegian, German and Estonian. The six stimuli of each language-set varied in their length due to differences in the voice-onset time (VOT) of the consonant. Two types can be distinguished: those with long VOT (i.e., /pa/, /ta/, /ka/) and those with short VOT (i.e., /ba/, /da/, /ga/) (see Rimol, Eichele, & Hugdahl, 2006). Previously, this stimulus set-up has yielded laterality estimates that were successfully validated against the sodium amytal procedure (e.g., Hugdahl et al., 1997; Strauss et al., 1987), as well as minimally invasive (e.g., Hugdahl et al., 1999) and non-invasive neuroimaging techniques (e.g., Van der Haegen, Westerhausen, Hugdahl, & Brysbaert, 2013; van den Noort et al., 2008; Westerhausen et al., 2014). Also, independent of length difference within the stimulus set of one language, there were differences in the overall length between the four language-sets (English: 480–550 msec, Norwegian: 400–500 msec, German: 320–380 msec, Estonian: 320–390 msec). This is due to the syllables being recorded to sound as natural/native as possible, with the intention to preserve the specific phonetic character of each language. The inter-stimulus interval was kept constant for all language-sets at 4,000 msec.
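The pair counts given above (30 unlike and 6 like pairs) follow from crossing the six syllables with themselves over the two ear channels. The short sketch below only illustrates that arithmetic; the function and variable names are hypothetical and are not taken from the iDichotic app.

```python
from itertools import product

# The six consonant-vowel syllables named in the text.
SYLLABLES = ["ba", "da", "ga", "ta", "ka", "pa"]


def build_pairs(syllables):
    """Return (unlike, like) lists of (left_ear, right_ear) syllable pairs."""
    # Every ordered combination of a left-ear and a right-ear syllable.
    all_pairs = list(product(syllables, repeat=2))
    unlike = [(left, right) for left, right in all_pairs if left != right]
    like = [(left, right) for left, right in all_pairs if left == right]
    return unlike, like


unlike_pairs, like_pairs = build_pairs(SYLLABLES)
print(len(unlike_pairs), len(like_pairs))  # 30 6
```

Treating the pairs as ordered means that each unlike combination occurs once with each syllable in each ear, which is what yields 6 × 5 = 30 unlike pairs.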

Procedure

The experiment was implemented as a self-administration test. First, the iDichotic app had to be downloaded from the App Store and installed on a mobile device (e.g., iPhone). As part of the app and before the start of the test, participants were asked to report on a set of variables, including stimulus language (English, Norwegian, German, Estonian), age (in years), sex, handedness (3 alternatives: right, left, both) and native language (including the main English dialects). They also performed a simple hearing test to control for hearing asymmetries that would bias the test results (see exclusion criteria below). In this test, using a horizontal volume scroll-bar, a 1000 Hz tone had to be adjusted to the point it became “just inaudible” (separate for left and right ear). Participants were reminded (via a pop-up window) to wear the headphones in correct orientation (left-channel headphone on the left ear, right-channel headphone on the right ear) and adjust the main volume to a comfortable level. In the next step, test instructions were presented on the screen corresponding to the non-forced condition of the Bergen dichotic listening paradigm (Hugdahl, 2003). That is, participants were instructed to listen to a series of syllable pairs (36 pairs) and respond after each trial (1 pair per trial) by selecting (on the touch-screen) the syllable they had heard best. Participants had 4 sec to respond before the next syllable pair was presented. A response was counted as correct when the selected syllable matched the syllable presented to either the right or left ear on each trial; if the response did not match either syllable, or no response was given, it was counted as an error. The error was calculated as follows:

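The laterality index (LI) was calculated to quantify the magnitude of language lateralization using this formula:

$$\mathrm{LI} = \frac{\mathrm{RE} - \mathrm{LE}}{\mathrm{RE} + \mathrm{LE}} \times 100$$

where RE and LE denote the number of correct right-ear and left-ear reports, respectively.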

Thus, a REA (left-hemispheric language dominance) was indicated by a positive LI, while a left-ear advantage (right-hemispheric language dominance) was indicated by a negative LI. At completion of the 3-minute-long test, the results were displayed together with the option to submit them (see below) to our database.
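As an illustration of how the sign of the LI maps onto an ear advantage, the following minimal sketch assumes the conventional dichotic listening definition, LI = (RE − LE) / (RE + LE) × 100, with RE and LE being the number of correct right-ear and left-ear reports. The function name and the example counts are hypothetical and are not data from this study.

```python
def laterality_index(right_correct: int, left_correct: int) -> float:
    """Laterality index in percent; positive values indicate a right-ear advantage."""
    total = right_correct + left_correct
    if total == 0:
        # No correct reports at all: the index is undefined.
        raise ValueError("LI is undefined when there are no correct reports")
    return 100.0 * (right_correct - left_correct) / total


# Hypothetical participant: 18 correct right-ear and 12 correct left-ear reports.
print(laterality_index(18, 12))  # 20.0 (right-ear advantage)
print(laterality_index(0, 10))   # -100.0 (only left-ear reports)
```

An absolute LI of 100 arises only when one ear yields no correct reports at all, which is the situation addressed by the exclusion criteria.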

Data collection

The data were collected via secure file transfer protocol during the period between 10 August 2012 and 27 January 2014. The majority of the data was collected during a six-week period as a result of a series of media promotions of the app. The end point of data collection was arbitrary. Since the aim was to attain the largest possible sample, no a priori sample size was calculated, but effect sizes were considered in the interpretation of effects. The submission of test results was optional, anonymous at all times and required accepting the terms of informed consent (via pop-up notification). The submitted file contained the test scores, participant-variables, submission date and app-ID (date of app download + random number).

Exclusion criteria

First, in order to guarantee that a participant was able to identify the syllables, we decided that at least 6 out of 30 (20%) total reports needed to be correct in dichotic trials. For homonym trials, 3 out of 6 (50%) were considered adequate. Further, cases that had an absolute LI of 100%, indicating that there was no correct identification of stimuli presented to one (the right or the left) ear, were also excluded. Although it cannot be completely ruled out that this is in fact a “true” REA/LEA, data from laboratory experiments very rarely show a 100% ear advantage (see Hugdahl, 2003). Thus, we considered it more likely that most of these cases were artefactual, e.g., due to a unilateral hearing loss or a broken headphone piece. Also, a hearing asymmetry of more than 20% was a criterion for exclusion. The threshold is deduced from previous experiments under soundproof conditions, which showed that an asymmetry of more than 6 dB (10% of normal conversation of 60 dB) would start affecting the size of the ear advantage (see Hugdahl, Westerhausen, Alho, Medvedev, & Hämäläinen, 2008). However, since we used a crude measurement of hearing ability, and given the potentially noisy environments in which it was conducted, we found it reasonable to double the threshold level. In addition, participants under the age of eight were excluded, since it cannot be expected that they were able to read and understand the instructions. From around the age of eight, however, performance approaches “adult-like” behaviour, as has been shown in previous longitudinal studies with the same paradigm (Westerhausen, Helland, Ofte, & Hugdahl, 2010). Finally, double submissions from the same participant were excluded, that is, only the first results-submission was counted, in order to avoid re-test/practice effects.
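The exclusion criteria above can be read as a simple filter over submissions. The sketch below is only one possible reading, not the procedure actually used: the record fields are hypothetical, repeat submissions are assumed to be identified by the app-ID, submissions are assumed to arrive in chronological order, and a participant whose first submission fails the criteria is assumed to be dropped entirely.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    # All field names are hypothetical, chosen for illustration only.
    app_id: str               # assumed to identify the participant
    dichotic_correct: int     # correct reports in the 30 unlike-pair trials
    homonym_correct: int      # correct reports in the 6 like-pair trials
    laterality_index: float   # LI in percent
    hearing_asymmetry: float  # left/right hearing asymmetry in percent
    age: int                  # age in years


def passes_criteria(s: Submission) -> bool:
    """Apply the thresholds stated in the text to a single submission."""
    return (
        s.dichotic_correct >= 6            # at least 6 of 30 dichotic trials correct
        and s.homonym_correct >= 3         # at least 3 of 6 homonym trials correct
        and abs(s.laterality_index) < 100  # exclude absolute LI of 100%
        and s.hearing_asymmetry <= 20      # exclude asymmetry of more than 20%
        and s.age >= 8                     # exclude participants under eight
    )


def filter_submissions(submissions):
    """Keep each participant's first submission only, and only if it passes."""
    seen = set()
    kept = []
    for s in submissions:  # assumed to be in chronological order
        if s.app_id in seen:
            continue  # later submission from the same app-ID: ignored
        seen.add(s.app_id)
        if passes_criteria(s):
            kept.append(s)
    return kept
```

Marking an app-ID as seen before checking the criteria means a repeat submission is never used in place of a rejected first attempt; whether the original analysis handled such cases this way is not stated in the text.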

Data analysis

The data were analyzed with five analyses of variance (ANOVAs) using the right ear/left ear scores as dependent variable (for details see ). The purpose of the first analysis (Analysis 1) was to explore our hypothesis on the generality of the REA irrespective of language background as well as the expected effects of sex (male, female), handedness (right-handers, left-handers, ambidextrous), age and the use of stimulus language (native, non-native syllables). Further, the role of “nativeness” was explored by investigating the effect of phonetic overlap on the ear scores (Analysis 2a/b). Phonetic overlap was defined as the degree to which the native language and the stimulus language overlap with regard to place of articulation of the six stop consonants. For example, a native English speaker using the English syllables is assigned an overlap of six, since native language and stimulus language have identical points of articulation (for details see ). For this purpose, we looked at the two sub-samples separately, one with complete phonetic overlap (native-stimulus sample; Analysis 2a) and another with varying degrees of phonetic overlap (non-native stimulus sample; Analysis 2b). Finally, in order to examine the effect of language/dialect background independently of the stimulus language and compare language/dialect groups directly, we analyzed the language groups with more than 100 participants (English, Danish, Norwegian, Hindi, Chinese, Spanish; Analysis 3a), and the English language dialects separately (North American, British, Australian; Analysis 3b). Of note, non-right-handers (ambidextrous, left-handers) were excluded in Analysis 2a/b and Analysis 3a/b, since keeping the factor handedness would result in an ANOVA design with fewer than 10 subjects in one or more cells.

Across all analyses the effects of interest were: (1) the interaction of the factor Ear with the respective language group factor, indicating differences in the magnitude of the ear advantage, and (2) the main effects of the language group factor, representing differences in the overall (average across ears) performance level. In general, significant main and interaction effects were followed up by pairwise t-test comparisons and lower level ANOVAs. In order to control the familywise error rate, the Bonferroni correction was applied in the omnibus ANOVAs (i.e., adjusted alpha = 0.05/5 = 0.01). Fisher's least significant difference procedure (alpha = 0.05) was used for the post-hoc tests. In addition, effect-size measures were provided indicating the proportion of explained variance, i.e., η². Mean LI scores were calculated for the various language/dialect sub-samples to describe the magnitude of the ear advantage in a commonly accepted form (for the formula, see above). The statistical analyses were performed in PASW 18.0 (IBM SPSS, New York, USA).
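The Bonferroni adjustment described above amounts to dividing the familywise alpha by the number of omnibus ANOVAs. The sketch below merely spells out that arithmetic; the helper names and the example p-values are hypothetical.

```python
def bonferroni_alpha(familywise_alpha: float, n_tests: int) -> float:
    """Per-test significance threshold under the Bonferroni correction."""
    return familywise_alpha / n_tests


def is_significant(p_value: float, alpha: float) -> bool:
    """An effect counts as significant only if p falls below the adjusted alpha."""
    return p_value < alpha


# Five omnibus ANOVAs (Analyses 1, 2a, 2b, 3a, 3b) at a familywise alpha of 0.05.
adjusted_alpha = bonferroni_alpha(0.05, 5)

# Hypothetical p-values: .02 would pass an uncorrected .05 threshold,
# but not the adjusted threshold of .01.
print(is_significant(0.02, adjusted_alpha))   # False
print(is_significant(0.005, adjusted_alpha))  # True
```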

RESULTS

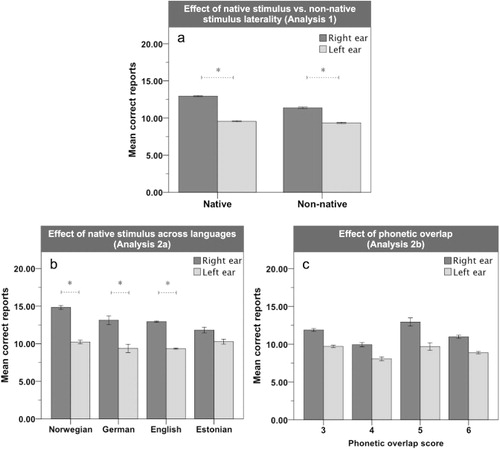

In the main sample (Analysis 1), there was a significant main effect of Ear, F(1, 4395) = 30.78, p < .0001, right ear > left ear, equivalent to a REA of LI = 12.5 (SE = 0.4), and significant interactions between Ear × Handedness, F(2, 4395) = 10.29, p < .0001, and Ear × Stimulus language, F(1, 4395) = 9.04, p < .01 (see ). Post-hoc analyses revealed that all three handedness groups showed a significant REA (p < .001). However, the interaction was based on right-handers displaying a significantly larger REA than left-handers (p < .0001), whereas there were no significant differences of the REA between the ambidextrous group and either right- or left-handers (p > .05). With regard to stimulus language, both the native and non-native stimulus sub-samples showed a significant REA (p < .0001), with the native-stimulus sample displaying a significantly larger REA than the non-native sample [LI = 14.4 (SE = 0.5) and LI = 9.1 (SE = 0.7), respectively]. Furthermore, there were main effects of Stimulus language, F(1, 4395) = 47.70, p < .001, native > non-native, and Age, F(2, 4395) = 57.57, p < .0001. No other main or interaction effects were significant (ps > .043).

In the native-stimulus sample (Analysis 2a), there was a significant main effect of Ear, F(1, 2339) = 41.84, p < .0001, η² = .018, right ear > left ear, indicating a REA of LI = 15.4 (SE = 0.6), and a significant interaction between Ear × Native language, F(3, 2339) = 4.42, p < .01, η² = .006 (see ). Post-hoc analyses on the interaction effect showed a significant REA (p < .05) in all but the Estonian sub-sample, and the REAs differed significantly between certain native language groups (p < .05): Norwegian > (English > Estonian), but not between the other groups (ps > .05). Furthermore, there were main effects of Native language, F(3, 2339) = 35.02, p < .0001, η² = .043, Norwegian > (English = German = Estonian), and Age, F(1, 2339) = 34.13, p < .0001, η² = .014. No other main or interaction effects were significant (ps > .36).

In the non-native stimulus sample (Analysis 2b), there was a significant interaction between Ear × Sex, F(1, 933) = 7.18, p < .01, η² = .008. Post-hoc analyses of the interaction effect showed a significant REA in males (p < .0001) but not in females (p > .05), and the REA was significantly larger in males than females. Furthermore, there was a main effect of Phonetic overlap, F(3, 933) = 18.11, p < .0001, η² = .055, 3 > 4, 3 = 5, 3 > 6, 4 < 5, 4 < 6, 5 > 6. Importantly, the interaction of Ear × Phonetic overlap did not reach significance, F(3, 933) = 0.71, p = .54 (see ). No other main or interaction effects were significant (ps > .01).

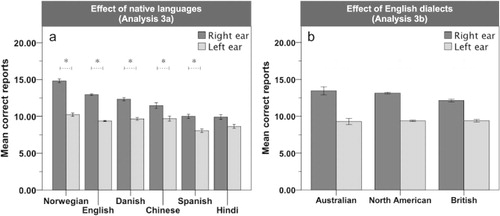

When looking at only the largest language groups (N > 100) of the main sample (Analysis 3a), there was a significant main effect of Ear, F(1, 3015) = 42.97, p < .0001, η² = .014, right ear > left ear, indicating a REA of LI = 14.1 (SE = 0.5), and a significant interaction between Ear × Native language, F(5, 3015) = 7.23, p < .0001, η² = .012 (see ). Post-hoc analyses revealed that all of the language groups except for the Hindi group showed a significant REA (p < .05; for mean LI see ). The interaction was based on the REA being larger in the Norwegian and the English group compared to all other groups (p < .05). Further, the REA was larger in the Norwegian than the English group (p < .05), while no significant differences were found between the other language-groups (ps > .13). Furthermore, there were main effects of Native language, F(5, 3015) = 63.59, p < .0001, η² = .095, Norwegian > English > (Danish = Chinese) > Hindi = Spanish, and Age, F(1, 3015) = 33.97, p < .0001, η² = .011. No other main or interaction effects were significant (ps > .01).

In the native English sample (Analysis 3b), there was a significant main effect of Ear, F(1, 2015) = 54.73, p < .0001, η² = .026, right ear > left ear, indicating a REA of LI = 15.2 (SE = 0.6). The effect of interest, i.e., the Ear × Dialect interaction, was not significant, F(2, 2015) = 3.81, p = .02, η² = .004 (see ). Furthermore, there were main effects of Dialect, F(2, 2015) = 10.45, p < .0001, η² = .010, (North American = Australian) > British, and Age, F(1, 2015) = 31.77, p < .0001, η² = .016. No other main or interaction effects were significant (ps > .14).

DISCUSSION

The present study assessed the generality and specificity of the REA in a large, international sample, using a mobile-app version of the Bergen dichotic listening paradigm. Averaged across all sub-samples, an overall REA (LI = 12.5%) was found, and a significant REA emerged in most of the language sub-samples (see ), indicating that left-lateralized processing of language is indeed a “universal” phenomenon. However, we also found specificity of the REA emerging as differences in magnitude between the language groups. These differences appeared to cut across language families. For example, Danish showed a significantly smaller LI than Norwegian, although the languages are closely related (both are part of the North Germanic language family), while languages as diverse as Chinese and Estonian displayed similar LIs. This raises the question whether these variations are due to methodological or sampling issues or indeed indicate valid differences in language laterality, which we attempt to answer in the following paragraphs.

The observed interaction of stimulus language and ear in the main sample (Analysis 1) suggests that “nativeness” of the stimulus language has an effect on the magnitude of the ear advantage, that is, performing the task with non-native (unfamiliar) as opposed to native (familiar) stimuli appears to produce a reduction in the measured laterality. The question, however, is whether the use of native vs. non-native stimuli can also explain the differences in the magnitude of the ear advantage observed between languages. Since the languages for which we had native syllables (English, German, Norwegian, Estonian) were selected arbitrarily, it might be that these selected languages (by chance) display stronger mean LIs than the other languages (specified as non-native). In this case, the main effect of “nativeness” would reflect true differences between languages in the LI rather than an effect of congruency between stimuli and native language. To differentiate between these opposing interpretations we further explored the data with regard to the phonetic overlap between the stimulus language and the native language of the participant. If the use of native vs. non-native stimuli alone was to explain the “nativeness”-effect, the following observations would be predicted: (1) in the languages for which native stimuli were used, there should be no effect of language background, and (2) in the languages for which non-native stimuli were used, a significant effect of phonetic overlap could be expected. However, neither of these predictions was borne out (see Analyses 2a/b; relevant effects not significant together with high test power); therefore, the differences cannot be related to the stimulus-language congruency alone. Moreover, the largest language groups (English, Danish, Norwegian, Hindi, Chinese, Spanish) as well as the English dialect groups showed variations of LIs (Analysis 3a/b), although the latter emerged only as a trend. This suggests that the differences in the magnitude of the REA reflect true differences between the languages, whereas the differences between the English dialects are more subtle and therefore did not influence the REA substantially.

There are distinct linguistic features that may contribute to different degrees of lateralization. For example, there is evidence that speakers of tonal languages, such as Chinese, display greater variability in the degree and direction of language lateralization than speakers of non-tonal languages, such as English and Spanish (e.g., Valaki et al., 2004). In an fMRI study, Li et al. (2010) observed a rightward asymmetry in frontoparietal regions when native speakers of Mandarin Chinese were asked to make judgements about lexical tones relative to when subjects were making judgements about consonants and rhymes, indicating a more bilateral, less asymmetrical processing of language in tonal languages. This could be reflected in the present study, by the fact that native speakers of Chinese showed a smaller REA, compared to native speakers of English and Spanish. However, since Chinese is the only language that uses tone to distinguish between monosyllables in the present database, other explanations need to be considered. Languages also differ with regard to their rhythmic structure. Rhythm rests on a combination of different acoustic properties, but the parameter that is most often linked to rhythm in the literature is duration (Loukina, Kochanski, Rosner, Keane, & Shih, 2011). For example, Estonian and Finnish are languages that use vowel-duration changes to signal phonetic distinctions, and this function is largely carried out by the right hemisphere (Kirmse et al., 2008). This may be one explanation for the relatively small LI of the Estonian sub-sample. Finally, it is possible that the differences in LIs in the non-native stimulus sample were influenced by the level of English proficiency, with less proficient subjects being more left-lateralized in their second language (see Hull & Vaid, 2007). However, since we do not know the proficiency level of the participants, this hypothesis cannot be explored with the current data.

In addition, there were other factors (sex, handedness) that affected the magnitude of the REA, as expected based on previous studies (Hugdahl, 2003; Roup, Wiley, & Wilson, 2006; Voyer, 2011). In the main sample (Analysis 1), handedness appeared to affect language lateralization, with right-handers displaying stronger left-lateralization than left-handers, as widely reported (see Corballis, 1989; Ocklenburg, Beste, Arning, Peterburs, & Güntürkün, 2014) and in line with previous results obtained with the current paradigm (Hugdahl, 2003) or with neuroimaging techniques (e.g., Knecht et al., 2000; Pujol, Deus, Losilla, & Capdevila, 1999; Westerhausen et al., 2006). Further, the sex effect, with males being more left-lateralized than females, was significant in the non-native stimulus sample (Analysis 2b) and is in accordance with previous dichotic listening studies (Bless et al., 2013; Hirnstein et al., 2013; Hiscock et al., 1994; for a review, see Voyer, 2011). It should be noted that both factors (sex and handedness) had only limited effects on the REA (as indicated by small effect sizes) and no interaction with language background, despite similar sample-composition (more than 60% males in 13 of 16 sub-samples, see ). For example, Norwegian and Estonian samples had a balanced ratio of males and females, yet they displayed very different degrees of lateralization (18.3% and 6.1%, respectively); on the other hand, Hindi and Chinese had different sample compositions with regard to the factor sex, yet they showed similar degrees of lateralization (5.9% and 6.4%, respectively). There was no significant effect of age on the ear advantage, which is contrary to what might be expected based on previous reports (Bellis & Wilber, 2001; Gootjes, Van Strien, & Bouma, 2004; Roup et al., 2006).

In summary, the observed differences are stimulus-independent and related to the way the sounds are processed, as a more or less lateralized function, in native speakers of different languages. It may be that in some languages, e.g., tonal languages (Chinese) or languages with certain rhythmic characteristics (Estonian), a less lateralized processing of speech sounds is more efficient. In addition, laterality appears to be influenced by handedness and sex, although to a lesser degree than by language background; it may also vary within a person, e.g., as a function of hormonal fluctuations (e.g., Wadnerkar, Whiteside, & Cowell, 2008).

Beyond laterality, we also found that performing the task with non-native stimuli resulted in more errors (i.e., lower overall performance) than when native stimuli were used. Thus, analogously to what was discussed regarding the variability of the laterality, one might ask whether this is a methodological artefact of the “nativeness” of the stimulus material, or reflects “natural” variations across languages. For the former to be true, there should be: (1) no main effect of native language in the native stimulus sample, and (2) a main effect of phonetic overlap in the non-native stimulus sample, with a positive linear relationship between the overlap score and correct reports. Since the results showed a main effect of native language in both samples, but no systematic relationship between phonetic overlap and performance, it can be concluded that the different performance levels were not simply due to the “nativeness” of the stimulus material but also related to cross-linguistic variations. In addition, age had an effect on performance in all but the non-native stimulus samples. This is in line with previous reports that have shown a decline in cognitive performance with age (e.g., Passow et al., 2012; Salthouse, 2009).

Limitations

Certainly, large-scale data collection using smartphones also has its limitations, mainly embodied in the lack of control over the experimental parameters. Some of the “noise”, however, can be removed by using strict exclusion criteria. For example, participants who only gave right-ear responses may have had a hearing loss or failed to plug in the left earbud and were excluded from the analysis.

Despite the global reach of the experiment, the current sample is not without bias towards English-speaking participants. However, this bias is substantially reduced, to about 55%, compared to the 82% reported by Arnett (2008), and a further reduction is to be expected from future studies as smartphones become more widely available and affordable. Furthermore, although participants from 64 different native language backgrounds were part of the present study, many cultures were only represented in small numbers. Future experiments of this kind should specifically target non-English, non-Western cultures, for example, by translating the app into various languages and deploying recruitment campaigns via social media and news platforms in those countries. Another bias is found in the fact that the current app only runs on iOS devices (e.g., iPhones). Although there is no evidence to suggest that Apple users would perform differently on a language-laterality test than, e.g., Android users, such a restriction should be avoided if possible, also considering the goal of reaching a larger audience.

The present study cannot answer, beyond speculation, why language laterality differs between speakers of various native language backgrounds and English dialects (see discussion above). It might therefore have been useful to include a few more items in the pre-test settings, for example, English proficiency level or education level. However, since we intended this part to be short and simple, to avoid overloading participants with questions and information before the actual test started, we included only the most basic variables.

Finally, it may be argued that participants should be tested only with their native stimuli, to avoid a confounding effect of stimulus-language congruency. However, this study was intended to collect a global sample, and as it was not possible to provide native syllables in all languages, some participants had to be tested with non-native stimuli. In addition, the present results showed that the choice of stimulus language alone could not explain the observed differences in language lateralization between the language groups.

Conclusions

This study reveals that the REA is a general perceptual effect that emerges across languages but differs in magnitude between them. These differences may be at least partly related to linguistic aspects of the languages themselves, with a bias towards specific phonological features (e.g., vowel duration) in the native language or dialect affecting the processing of language as a more or less lateralized function. These results suggest that more emphasis should be placed on cultural and linguistic specificities of psychological phenomena, as suggested by Henrich et al. (2010), and on the need to collect more diverse, cross-cultural/cross-linguistic samples. The study further shows that smartphone-based data collection is an effective method for gaining access to larger and more diverse populations.

References

- Arnett, J. J. (2008). The neglected 95%: Why American psychology needs to become less American. American Psychologist, 63, 602–614. doi:10.1037/0003-066X.63.7.602

- Bellis, T. J., & Wilber, L. A. (2001). Effects of aging and gender on interhemispheric function. Journal of Speech Language and Hearing Research, 44, 246–263. doi:10.1044/1092-4388(2001/021)

- Bless, J. J., Westerhausen, R., Arciuli, J., Kompus, K., Gudmundsen, M., & Hugdahl, K. (2013). “Right on all occasions?” – On the feasibility of laterality research using a smartphone dichotic listening application. Frontiers in Psychology, 4, 42. doi:10.3389/fpsyg.2013.00042

- Bryden, M. P. (1988). An overview of the dichotic listening procedure and its relation to cerebral organization. In K. Hugdahl (Ed.), Handbook of dichotic listening: Theory, methods and research (pp. 1–43). Oxford: John Wiley & Sons.

- Cohen, H., Levy, J. J., & McShane, D. (1989). Hemispheric specialization for speech and non-verbal stimuli in Chinese and French-Canadian subjects. Neuropsychologia, 27, 241–245. doi:10.1016/0028-3932(89)90175-9

- Corballis, M. C. (1989). Laterality and human evolution. Psychological Review, 96, 492–505. doi:10.1037/0033-295X.96.3.492

- Gootjes, L., Van Strien, J. W., & Bouma, A. (2004). Age effects in identifying and localising dichotic stimuli: A corpus callosum deficit? Journal of Clinical and Experimental Neuropsychology, 26, 826–837. doi:10.1080/13803390490509448

- Gosling, S. D., Sandy, C. J., John, O. P., & Potter, J. (2010). Wired but not WEIRD: The promise of the Internet in reaching more diverse samples. Behavioral and Brain Sciences, 33(2–3), 94–95. doi:10.1017/S0140525X10000300

- Gramstad, A., Engelsen, B. A., & Hugdahl, K. (2003). Left hemisphere dysfunction affects dichotic listening in patients with temporal lobe epilepsy. International Journal of Neuroscience, 113, 1177–1196. doi:10.1080/00207450390212302

- Henrich, J., Heine, S. J., & Norenzayan, A. (2010). The weirdest people in the world? Behavioral and Brain Sciences, 33(2–3), 61–83; discussion 83–135. doi:10.1017/S0140525X0999152X

- Henrich, J., McElreath, R., Barr, A., Ensminger, J., Barrett, C., Bolyanatz, A., … Ziker, J. (2006). Costly punishment across human societies. Science, 312, 1767–1770. doi:10.1126/science.1127333

- Hirnstein, M., Westerhausen, R., Korsnes, M. S., & Hugdahl, K. (2013). Sex differences in language asymmetry are age-dependent and small: A large-scale, consonant–vowel dichotic listening study with behavioral and fMRI data. Cortex, 49, 1910–1921. doi:10.1016/j.cortex.2012.08.002

- Hiscock, M., Inch, R., Jacek, C., Hiscock-Kalil, C., & Kalil, K. M. (1994). Is there a sex difference in human laterality? I. An exhaustive survey of auditory laterality studies from six neuropsychology journals. Journal of Clinical and Experimental Neuropsychology, 16, 423–435. doi:10.1080/01688639408402653

- Hugdahl, K. (1995). Dichotic listening: Probing temporal lobe functional integrity. In R. J. Davidson & K. Hugdahl (Eds.), Brain asymmetry (pp. 123–156). Cambridge, MA: MIT Press.

- Hugdahl, K. (2003). Dichotic listening in the study of auditory laterality. In K. Hugdahl & R. J. Davidson (Eds.), The asymmetrical brain (pp. 441–476). Cambridge, MA: MIT Press.

- Hugdahl, K. (2011). Fifty years of dichotic listening research – Still going and going and …. Brain and Cognition, 76, 211–213. doi:10.1016/j.bandc.2011.03.006

- Hugdahl, K., & Andersson, L. (1986). The “forced-attention paradigm” in dichotic listening to CV-syllables: A comparison between adults and children. Cortex, 22, 417–432. doi:10.1016/S0010-9452(86)80005-3

- Hugdahl, K., Bronnick, K., Kyllingsbaek, S., Law, I., Gade, A., & Paulson, O. B. (1999). Brain activation during dichotic presentations of consonant-vowel and musical instrument stimuli: A 15O-PET study. Neuropsychologia, 37, 431–440. doi:10.1016/S0028-3932(98)00101-8

- Hugdahl, K., Carlsson, G., Uvebrant, P., & Lundervold, A. J. (1997). Dichotic-listening performance and intracarotid injections of amobarbital in children and adolescents. Preoperative and postoperative comparisons. Archives of Neurology, 54, 1494–1500. doi:10.1001/archneur.1997.00550240046011

- Hugdahl, K., Westerhausen, R., Alho, K., Medvedev, S., & Hämäläinen, H. (2008). The effect of stimulus intensity on the right ear advantage in dichotic listening. Neuroscience Letters, 431(1), 90–94. doi:10.1016/j.neulet.2007.11.046

- Hull, R., & Vaid, J. (2007). Bilingual language lateralization: A meta-analytic tale of two hemispheres. Neuropsychologia, 45, 1987–2008. doi:10.1016/j.neuropsychologia.2007.03.002

- Hund-Georgiadis, M., Lex, U., Friederici, A. D., & von Cramon, D. Y. (2002). Non-invasive regime for language lateralization in right and left-handers by means of functional MRI and dichotic listening. Experimental Brain Research, 145, 166–176. doi:10.1007/s00221-002-1090-0

- Jones, D. (2010). Psychology. A WEIRD view of human nature skews psychologists' studies. Science, 328, 1627. doi:10.1126/science.328.5986.1627

- Kimura, D. (1961). Some effects of temporal-lobe damage on auditory perception. Canadian Journal of Psychology/Revue canadienne de psychologie, 15, 156–165. doi:10.1037/h0083218

- Kirmse, U., Ylinen, S., Tervaniemi, M., Vainio, M., Schroger, E., & Jacobsen, T. (2008). Modulation of the mismatch negativity (MMN) to vowel duration changes in native speakers of Finnish and German as a result of language experience. International Journal of Psychophysiology, 67, 131–143.

- Knecht, S., Drager, B., Deppe, M., Bobe, L., Lohmann, H., Floel, A., … Henningsen, H. (2000). Handedness and hemispheric language dominance in healthy humans. Brain, 123, 2512–2518.

- Li, X., Gandour, J. T., Talavage, T., Wong, D., Hoffa, A., Lowe, M., & Dzemidzic, M. (2010). Hemispheric asymmetries in phonological processing of tones versus segmental units. Neuroreport, 21, 690–694.

- Loukina, A., Kochanski, G., Rosner, B., Keane, E., & Shih, C. (2011). Rhythm measures and dimensions of durational variation in speech. The Journal of the Acoustical Society of America, 129, 3258–3270. doi:10.1121/1.3559709

- Miller, G. (2012). The smartphone psychology manifesto. Perspectives on Psychological Science, 7, 221–237. doi:10.1177/1745691612441215

- Nachshon, I. (1986). Cross-language differences in dichotic-listening. International Journal of Psychology, 21, 617–625. doi:10.1080/00207598608247609

- Ocklenburg, S., Beste, C., Arning, L., Peterburs, J., & Güntürkün, O. (2014). The ontogenesis of language lateralization and its relation to handedness. Neuroscience and Biobehavioral Reviews, 43, 191–198. doi:10.1016/j.neubiorev.2014.04.008

- Passow, S., Westerhausen, R., Wartenburger, I., Hugdahl, K., Heekeren, H. R., Lindenberger, U., & Li, S.-C. (2012). Human aging compromises attentional control of auditory perception. Psychology and Aging, 27, 99–105. doi:10.1037/a0025667

- Pollmann, S., Maertens, M., von Cramon, D. Y., Lepsien, J., & Hugdahl, K. (2002). Dichotic listening in patients with splenial and nonsplenial callosal lesions. Neuropsychology, 16(1), 56–64. doi:10.1037/0894-4105.16.1.56

- Pujol, J., Deus, J., Losilla, J. M., & Capdevila, A. (1999). Cerebral lateralization of language in normal left-handed people studied by functional MRI. Neurology, 52, 1038. doi:10.1212/WNL.52.5.1038

- Rimol, L. M., Eichele, T., & Hugdahl, K. (2006). The effect of voice-onset-time on dichotic listening with consonant–vowel syllables. Neuropsychologia, 44, 191–196. doi:10.1016/j.neuropsychologia.2005.05.006

- Roup, C. M., Wiley, T. L., & Wilson, R. H. (2006). Dichotic word recognition in young and older adults. Journal of the American Academy of Audiology, 17, 230–240; quiz 297–238. doi:10.3766/jaaa.17.4.2

- Salthouse, T. A. (2009). When does age-related cognitive decline begin? Neurobiology of Aging, 30, 507–514. doi:10.1016/j.neurobiolaging.2008.09.023

- Segall, M. H., Campbell, D. T., & Herskovits, M. J. (1966). The influence of culture on visual perception. Indianapolis, IN: Bobbs-Merrill.

- Sparks, R., Goodglass, H., & Nickel, B. (1970). Ipsilateral versus contralateral extinction in dichotic listening resulting from hemisphere lesions. Cortex, 6, 249–260. doi:10.1016/S0010-9452(70)80014-4

- Strauss, E., Gaddes, W. H., & Wada, J. (1987). Performance on a free-recall verbal dichotic listening task and cerebral dominance determined by the carotid amytal test. Neuropsychologia, 25, 747–753. doi:10.1016/0028-3932(87)90112-6

- Valaki, C. E., Maestu, F., Simos, P. G., Zhang, W., Fernandez, A., Amo, C. M., … Papanicolaou, A. C. (2004). Cortical organization for receptive language functions in Chinese, English, and Spanish: A cross-linguistic MEG study. Neuropsychologia, 42, 967–979. doi:10.1016/j.neuropsychologia.2003.11.019

- van den Noort, M., Specht, K., Rimol, L. M., Ersland, L., & Hugdahl, K. (2008). A new verbal reports fMRI dichotic listening paradigm for studies of hemispheric asymmetry. Neuroimage, 40, 902–911. doi:10.1016/j.neuroimage.2007.11.051

- Van der Haegen, L., Westerhausen, R., Hugdahl, K., & Brysbaert, M. (2013). Speech dominance is a better predictor of functional brain asymmetry than handedness: A combined fMRI word generation and behavioral dichotic listening study. Neuropsychologia, 51(1), 91–97. doi:10.1016/j.neuropsychologia.2012.11.002

- Voyer, D. (2011). Sex differences in dichotic listening. Brain and Cognition, 76, 245–255. doi:10.1016/j.bandc.2011.02.001

- Wadnerkar, M. B., Whiteside, S. P., & Cowell, P. E. (2008). Dichotic listening asymmetry: Sex differences and menstrual cycle effects. Laterality, 13, 297–309.

- Westerhausen, R., Helland, T., Ofte, S., & Hugdahl, K. (2010). A longitudinal study of the effect of voicing on the dichotic listening ear advantage in boys and girls at age 5 to 8. Developmental Neuropsychology, 35, 752–761. doi:10.1080/87565641.2010.508551

- Westerhausen, R., Kompus, K., & Hugdahl, K. (2014). Mapping hemispheric symmetries, relative asymmetries, and absolute asymmetries underlying the auditory laterality effect. Neuroimage, 84, 962–970. doi:10.1016/j.neuroimage.2013.09.074

- Westerhausen, R., Woerner, W., Kreuder, F., Schweiger, E., Hugdahl, K., & Wittling, W. (2006). The role of the corpus callosum in dichotic listening: A combined morphological and diffusion tensor imaging study. Neuropsychology, 20, 272–279. doi:10.1037/0894-4105.20.3.272