Abstract

Objectives

The number of bilateral adult cochlear implant (CI) users and bimodal CI users is expanding worldwide. The addition of a hearing aid (HA) in the contralateral non-implanted ear (bimodal) or a second CI (bilateral) can provide CI users with some of the benefits associated with listening with two ears. Our aim was to examine whether bilateral and bimodal CI users demonstrate binaural summation, binaural unmasking and a fluctuating masker benefit.

Methods

Direct audio input was used to present stimuli to 10 bilateral and 10 bimodal Cochlear™ CI users. Speech recognition in noise (speech reception threshold, SRT) was assessed monaurally, diotically (identical signals in both devices) and dichotically (antiphasic speech) with different masking noises (steady-state and interrupted), using the digits-in-noise test.

Results

Bilateral CI users demonstrated a trend towards better SRTs with both CIs than with one CI. Bimodal CI users showed no difference between the bimodal SRT and the SRT for CI alone. No significant differences in SRT were found between the diotic and dichotic conditions for either group. Analyses of electrodograms created from bilateral stimuli demonstrated that substantial parts of the interaural speech cues were preserved in the Advanced Combination Encoder, an n-of-m channel selection speech coding strategy, used by the CI users. Speech recognition in noise was significantly better with interrupted noise than with steady-state masking noise for both bilateral and bimodal CI users.

Conclusion

Bilateral CI users demonstrated a trend towards binaural summation, but bimodal CI users did not. No binaural unmasking was demonstrated for either group of CI users. A large fluctuating masker benefit was found in both bilateral and bimodal CI users.

Introduction

Candidacy criteria for cochlear implantation have changed considerably over the years, mainly due to technical improvements and the resulting improved success of cochlear implantation. Consequently, the number of cochlear implant (CI) candidates is increasing, including people with residual hearing in one or both ears (Leigh et al., Citation2016; Snel-Bongers et al., Citation2018). These CI users regularly opt to wear a contralateral hearing aid (HA) to obtain the benefits of bimodal hearing (Devocht et al., Citation2015; Dunn et al., Citation2005; Gifford et al., Citation2007; van Loon et al., Citation2017). In addition, the attitude towards those with bilateral severe-to-profound hearing loss is also changing. Bilateral implantation is already considered the standard of care for prelingually deafened children in many countries, and is commonly recommended and performed in some countries for adults as well (Smulders et al., Citation2017). The prevailing thought is that the addition of a HA in the contralateral non-implanted ear (bimodal) or a second CI (bilateral) can provide CI users with some of the benefits associated with listening with two ears, such as improved localization ability and improved speech recognition when speech and noise are spatially separated. It has been demonstrated that bilateral and bimodal CI users can benefit from head shadow (better-ear listening) and binaural summation (Dunn et al., Citation2005; Gifford et al., Citation2014; Kokkinakis and Pak, Citation2014; Schafer et al., Citation2011; van Hoesel, Citation2012; van Loon et al., Citation2014), but they often benefit less from other binaural effects such as squelch or binaural unmasking (Gifford et al., Citation2014; Kokkinakis and Pak, Citation2014; Schleich et al., Citation2004; Sheffield et al., Citation2017). 
CI users have also been shown to have reduced ability to use temporal fluctuations in masking noise to improve speech recognition (Cullington and Zeng, Citation2008; Nelson et al., Citation2003; Zirn, Polterauer, et al., Citation2016), which further impairs speech recognition in everyday life situations.

Previously, we used the Dutch digits-in-noise (DIN) test in the free-field, with spatially separated loudspeakers for speech and noise, to demonstrate the head shadow effect in unilateral, bilateral and bimodal CI users (van Loon et al., Citation2014, Citation2017). Although measurements in the free-field better represent daily listening situations, there are many factors that are difficult to control which influence the results (e.g. room acoustics, background noise and head movements) and the results often reflect combinations of binaural processes. In other studies we used headphone presentation to measure the specific individual factors (i.e. binaural summation, binaural unmasking, and fluctuating masker benefit) in normal-hearing adults (Smits et al., Citation2016) and children (Koopmans et al., Citation2018).

Binaural summation

Binaural summation refers to the benefit obtained when identical signals are presented to both ears instead of just one. It is determined by comparing monaural to binaural speech recognition in noise with identical signals presented to both ears (i.e. diotic speech recognition, S0N0). Mixed results have been reported in the literature on binaural summation for bilateral and bimodal CI users. Some studies have demonstrated no binaural summation for bilateral CI users when signal and noise (i.e. speech-shaped noise or interfering talkers) were both presented from the front (Laske et al., Citation2009; Rana et al., Citation2017; van Hoesel and Tyler, Citation2003). Other studies found that some bilateral CI users experience binaural summation (Reeder et al., Citation2014; Schleich et al., Citation2004; Tyler et al., Citation2002; van Hoesel, Citation2012). Most studies show an improvement in speech recognition in noise with the addition of a HA (Hoppe et al., Citation2018; van Hoesel, Citation2012), but Morera et al. (Citation2005) did not find a benefit when using four-talker babble and a fixed signal-to noise ratio (SNR). Schafer et al. (Citation2011) conducted a meta-analysis to evaluate the findings on binaural advantages in bilateral and bimodal CI users. The authors concluded that the addition of a contralateral CI or HA significantly improved speech recognition in noise. Kokkinakis and Pak (Citation2014) also found a benefit, both in bimodal and bilateral CI users, when speech was presented in four-talker babble.

Binaural unmasking

Binaural unmasking refers to the improvement in speech recognition in noise due to interaural time differences (ITDs). It is determined by comparing diotic and dichotic (antiphasic) speech recognition. Dichotic speech recognition is obtained with identical noise signals in both ears, while the speech signal presented to one of the two ears is phase-inverted (SπN0). The binaural auditory system uses the interaural speech cues, reflected by the difference between the monaural signals, to improve speech recognition scores in noisy conditions.

Binaural unmasking is rarely demonstrated for bilateral or bimodal CI users (Bernstein et al., Citation2016; Ching et al., Citation2005; Sheffield et al., Citation2017; van Hoesel et al., Citation2008), although Zirn, Arndt, et al. (Citation2016) did report binaural unmasking for bilateral CI users, and Sheffield et al. (Citation2017) reported binaural unmasking for several of their bimodal participants. Because temporal fine-structure information is not coded in most speech coding strategies, it is expected that bilateral electrical stimulation patterns (electrodograms) will be identical and therefore there will be no binaural unmasking.

Fluctuating masker benefit

Fluctuating masker benefit refers to the benefit of listening to temporarily more favorable SNRs which occur due to interruptions in the noise, i.e. so-called ‘dip listening.’ It is determined by comparing speech recognition in steady-state noise to speech recognition in interrupted noise. The limited number of studies on the fluctuating masker benefit for CI users have reported no (Cullington and Zeng, Citation2008; Nelson et al., Citation2003; Stickney et al., Citation2004; Zirn, Polterauer, et al., Citation2016) or limited fluctuating masker benefit for CI users (Nelson et al., Citation2003).

The aim of the current study was to examine whether CI users with a second CI or a contralateral HA demonstrate binaural summation, binaural unmasking and a fluctuating masker benefit. The digits-in-noise (DIN) test was selected for this study because it is a feasible, reliable and valid test for measuring speech recognition in noise in CI users (Kaandorp et al., Citation2015). To examine these effects, speech recognition in noise was measured with an Australian English DIN test in different listening conditions (monaural, diotic and dichotic) and with two different masking noises (steady-state and interrupted noise) in a group of bilateral CI users and a group of bimodal CI users. We hypothesized that both groups would demonstrate binaural summation and a small fluctuating masker benefit, with better performers achieving higher fluctuating masker benefits. We also hypothesized that participants would demonstrate no significant binaural unmasking, and that electrodograms would be essentially identical for the normal and antiphasic signal because the CI speech coding strategy encodes envelope information but does not explicitly encode temporal fine-structure information needed for binaural unmasking.

Materials and methods

Study participants

Ten bilateral CI users (3 females, 7 males; age range 20–91 years) and 10 bimodal CI users (6 females, 4 males; age range 29–84 years) participated in this study. All participants were native English speakers and had Cochlear™ sound processors. Bilateral and bimodal participants who did not use CP910 sound processors were fitted with a CP910 processor for the purpose of the study to ensure access to direct audio input (DAI) and similar sound processing across users. Both the bilateral and bimodal CI users had at least 6 months of experience with their CI(s) and attained phoneme scores in quiet of at least 50% with their CI as assessed at a recent visit to the clinic. Table 1 lists the demographic characteristics of the participants.

Table 1 Demographic characteristics of bilateral and bimodal CI patients

Bilateral CI users

Monaural audio cables were connected to the accessory sockets of each CI processor, and the accessory mixing ratio of both CIs was set to accessory only.

Bimodal CI users

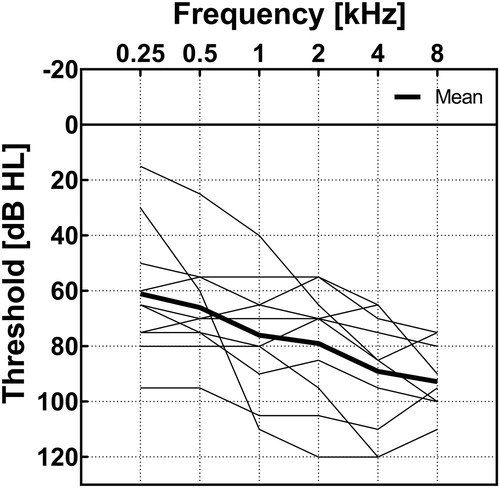

All bimodal CI users reported daily use of their hearing aid. For this study, they were fitted with the ReSound ENZO2 (EN998-DW, GN Hearing) HA prior to testing. This ensured access to DAI, which is not available with all HA models, and that the sound stimuli were presented at predefined levels. The fitting was performed by a HA audiologist, in a sound-treated testing booth, according to the NAL-NL2 prescription rule. Pure-tone audiometry was repeated prior to the HA fitting if no audiometry had been performed in the last 6 months. Target measurements were verified using Real-Ear Measurements (REMs) at 65 dB SPL and fine-tuned where necessary. The HA was programmed with two programs: Program 1 set to standard microphone settings, and Program 2 set to default DAI settings. It should be noted that the DAI default setting yields a 3 dB advantage to the DAI signal but does not disable the HA microphone. The accessory mixing ratio of the CI was set to accessory only. The pure-tone thresholds of the HA ear of the bimodal CI users are shown in Figure 1.

Digits-in-noise test

The DIN test measures speech recognition in noise in normal-hearing children and adults, listeners with severe-to-profound hearing loss and CI users (Kaandorp et al., Citation2015; Koopmans et al., Citation2018; Smits et al., Citation2013, Citation2016). In the standard test setup for CI users, stimuli are presented via a loudspeaker in a sound-treated booth (Kaandorp et al., Citation2015, Citation2017). Recent studies have demonstrated that the DIN test can also be administered reliably by a calibrated tablet-computer connected to the CI sound processor using DAI via an audio cable (de Graaff et al., Citation2016, Citation2018). In this study, test stimuli were presented via DAI to the CI sound processors (bilateral) or the CI sound processor and the contralateral HA (bimodal). This ensured the preservation of detailed and ear-specific cues that may be disrupted by room acoustics (e.g. noise and reverberation) and head movements in free field measurement conditions, enabling the study of subtle binaural effects and the effect of interruptions in the masking noise on speech recognition.

In this study, the DIN test used a validated set of Australian-English digit-triplets. Series of randomly chosen digit-triplets (e.g. 6-5-2) were presented in background noise to estimate the speech reception threshold (SRT). The SRT represents the SNR at which the listener correctly recognizes 50% of the triplets.

In this study, steady-state noise and 16-Hz interrupted noise with a modulation depth of 100% were used. In the studies of Smits et al. (Citation2016) and Koopmans et al. (Citation2018), interrupted noise with a modulation depth of 15 dB was used to avoid the possible effect of ambient noise on the measured SRT. However, because we expected to find no or only a small fluctuating masker benefit for these participants (Zirn, Polterauer, et al., Citation2016), we used a modulation depth of 100% to maximize the possible benefit of the noise interruptions on the SRT for the CI users. The effect of ambient noise or reverberation on the SRT in interrupted noise was reduced by using DAI. The two noise types had the same spectrum as the average spectrum of the digits, and the noise was presented continuously throughout the test (de Graaff et al., Citation2016). Continuous presentation of background noise was required as the relatively slow-acting advanced sound processing features of the CP910 CI processor and ReSound ENZO2 HA need time to fully activate during speech recognition testing (de Graaff et al., Citation2016).
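
As a rough illustration of what 16-Hz interrupted noise with 100% modulation depth looks like, the sketch below gates a noise signal fully on and off with a square wave. It is not the stimulus generation used in the study: the 50% duty cycle, the 16 kHz sample rate and the white Gaussian carrier (rather than noise shaped to the average digit spectrum) are all assumptions made for the example, and the function name `interrupted_noise` is hypothetical.

```python
import random

def interrupted_noise(duration_s, fs=16000, rate_hz=16.0, duty=0.5, seed=0):
    """Gate Gaussian noise fully on and off (100% modulation depth)."""
    rng = random.Random(seed)
    samples = []
    for i in range(int(duration_s * fs)):
        phase = (i * rate_hz / fs) % 1.0      # position within the current gating cycle
        gate = 1.0 if phase < duty else 0.0   # assumed square-wave gate: on, then silent
        samples.append(gate * rng.gauss(0.0, 1.0))
    return samples

noise = interrupted_noise(1.0)
on_samples = sum(1 for x in noise if x != 0.0)
print(f"{on_samples} of {len(noise)} samples carry noise")
```

During the silent half of each cycle the momentary SNR is very favorable, which is the opportunity for dip listening that the 100% modulation depth was chosen to maximize.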

The overall presentation level was fixed at 65 dB SPL and the initial SNR was 0 dB. An adaptive procedure was used, in which the SNR of each digit-triplet depended on the correctness of the response on the previous digit-triplet, with the subsequent digit-triplet presented at a 2 dB higher SNR after an incorrect response, and 2 dB lower SNR after a correct response. This was the same test procedure as used by de Graaff et al. (Citation2016). This test procedure was specifically designed for use in CI patients in that it included two dummy digit-triplets with a fixed SNR of +6 and +2 dB which preceded the actual test. These dummy triplets were used to ensure that the noise reduction algorithms of the CI sound processor had settled, whilst maintaining the attention of the participant. Each test consisted of a total of 26 digit-triplet presentations. The SRT was calculated as the average SNR of the 7th to what would be the 27th digit-triplet. The SNR of the not-presented 27th digit-triplet was based on the SNR and correctness of the response of the 26th digit-triplet.
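
The adaptive rule and the SRT calculation described above can be sketched as follows. This is a minimal illustration, not the test software: it models only the 26 adaptive presentations starting at 0 dB SNR (the two dummy triplets are assumed to precede this track and are left out), and the function name `run_din_track` and the response pattern are hypothetical.

```python
def run_din_track(responses, start_snr=0.0, step=2.0, first_scored=7):
    """Simulate the one-up, one-down track and return (SRT, list of SNRs).

    responses: one boolean per presented triplet (True = triplet repeated correctly).
    """
    snrs = [start_snr]
    for correct in responses:
        # Lower the SNR by one step after a correct response, raise it after an error.
        snrs.append(snrs[-1] - step if correct else snrs[-1] + step)
    # snrs now holds one more value than there were presentations:
    # the last entry is the SNR of the triplet that would have come next.
    scored = snrs[first_scored - 1:]
    return sum(scored) / len(scored), snrs

responses = [True, False] * 13          # hypothetical listener, 26 presentations
srt, track = run_din_track(responses)
print(f"SRT = {srt:.2f} dB SNR from {len(track)} SNR values")
```

With 26 responses the track holds 27 SNR values, so the average runs over the 7th to the 27th value (21 values), with the 27th derived from the 26th response as in the text.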

Procedures

The study followed a within-subject repeated-measures design, and each participant completed all tasks within one session. The test battery per participant consisted of four conditions (monaural [left CI and right CI for bilateral CI users, or CI and HA for bimodal CI users], diotic, dichotic), with two different masking noises (steady-state and interrupted noise). The test battery was preceded by two practice tests (diotic, one with steady-state and one with interrupted masking noise). Two tests (test and retest) were performed in each condition. Thus, each participant performed a total of 18 DIN tests: 2 practice tests + (2 tests × 4 conditions × 2 noise types). Test presentation order was counterbalanced across participants, with half of the participants starting with steady-state noise. Testing was completed in a sound-treated booth. Participants were instructed to repeat the digit-triplets to the experimenter who entered the responses into a tablet computer.
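
The count of 18 tests can be checked by enumerating the battery. The sketch below does this for a bilateral participant; the labels are illustrative, and the list order is not the counterbalanced presentation order used in the study.

```python
from itertools import product

conditions = ["left CI", "right CI", "diotic", "dichotic"]  # bilateral group
noises = ["steady-state", "interrupted"]
runs = ["test", "retest"]

# Two diotic practice tests, one per noise type, precede the battery.
practice = [("diotic", noise, "practice") for noise in noises]
battery = list(product(conditions, noises, runs))
schedule = practice + battery

print(len(practice), len(battery), len(schedule))
```

For a bimodal participant, the two monaural entries would be "CI" and "HA" instead; the totals are the same.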

The study design followed that described by de Graaff et al. (Citation2016, Citation2018). The tests were administered with a standard Windows tablet computer (Lenovo Thinkpad 10), using software developed by Cochlear Technology Center (Mechelen, Belgium). The stimuli were presented directly to the CI sound processor via an audio cable. The setup was calibrated such that the signals presented through the audio cable were delivered at an intensity equal to the intensity of an acoustic signal of 65 dB SPL (de Graaff et al., Citation2016). The accuracy of the internal sound level meter of the CP910 sound processor was determined by comparing the sound pressure levels of pure tones and speech-shaped noise read out directly from the internal sound level meter to those measured with a Brüel & Kjær Type 2250 sound level meter. Differences were within 1 dB. The internal sound level meter of the sound processor was also used to calibrate the tablet computer. For the binaural measurements, a splitter cable was used, with a stereo jack connected to the tablet computer and two single-channel sockets connected via audio cables to the HA and CI, or to both CIs.

DIN test conditions

Speech recognition was assessed monaurally (left and right ear) and binaurally (diotic and dichotic). For the diotic condition, the noise and speech were identical for both ears (S0N0). For the dichotic condition, the noise was identical for both ears while the speech signal presented to one of the two ears was phase-inverted (SπN0). Bilateral CI users were assessed in the following conditions: left CI only, right CI only and CI + CI (diotic and dichotic). Bimodal CI users were assessed in the HA only, CI only and CI + HA (diotic and dichotic) conditions. During monaural speech recognition testing, the audio cable of the contralateral ear (either CI or HA) was disconnected.
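
A minimal sketch of how two-channel S0N0 and SπN0 stimuli could be built is given below. It is an illustration under stated assumptions, not the study software: a 440 Hz tone stands in for a digit-triplet, the noise is white rather than speech-shaped, only the speech is scaled to set the SNR (the study fixed the overall level at 65 dB SPL), and `make_binaural` is a hypothetical helper.

```python
import math
import random

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def make_binaural(speech, noise, snr_db, dichotic=False):
    """Return (left, right) for S0N0 (diotic) or SπN0 (dichotic) presentation."""
    gain = rms(noise) / rms(speech) * 10 ** (snr_db / 20)  # scale speech to the target SNR
    scaled = [gain * s for s in speech]
    sign = -1.0 if dichotic else 1.0                       # phase inversion is a sign flip
    left = [s + n for s, n in zip(scaled, noise)]
    right = [sign * s + n for s, n in zip(scaled, noise)]  # noise is identical in both ears
    return left, right

rng = random.Random(1)
fs = 16000
speech = [math.sin(2 * math.pi * 440 * t / fs) for t in range(fs)]  # stand-in for a triplet
noise = [rng.gauss(0.0, 1.0) for _ in range(fs)]

diotic_left, diotic_right = make_binaural(speech, noise, snr_db=-10)
dichotic_left, dichotic_right = make_binaural(speech, noise, snr_db=-10, dichotic=True)
print(diotic_left == diotic_right, dichotic_left == dichotic_right)
```

In the diotic case the two channels are sample-for-sample identical; in the dichotic case they differ only in the sign of the speech component.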

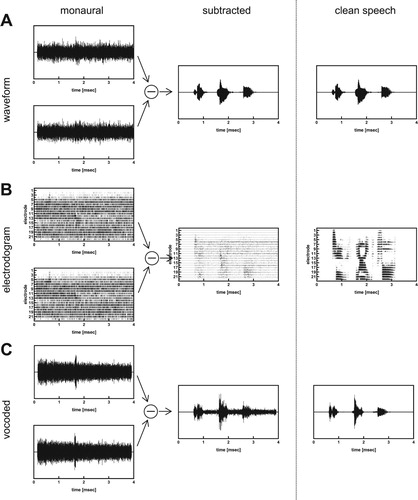

Analyses of electrodograms

All participants used the Advanced Combination Encoder (ACE), an n-of-m channel selection speech coding strategy. This coding strategy encodes envelope information but does not explicitly encode temporal fine-structure information. If temporal fine-structure information is lost during processing, the electrical pulse trains delivered by the implant would be essentially identical for the normal and phase-inverted signals and binaural unmasking could not occur. Electrodograms were created from the two (monaural) waveforms mimicking all the processing of a real CI processor. Differences between the two electrodograms were calculated to determine if any of the interaural speech cues were preserved. The electrodograms were resynthesized in noise-band vocoder stimuli to analyze the waveforms. The vocoder used pink noise signals, which were band-pass filtered by fourth-order Butterworth filters corresponding to the channels of the cochlear implant.
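
To make the idea of n-of-m channel selection concrete, the toy sketch below keeps the n largest channel envelopes in a single analysis frame and discards the rest. It is a simplified illustration, not the ACE implementation or the electrodogram analysis used here: the band amplitudes are invented, m = 4 and n = 2 are chosen only for readability, and adding instantaneous band amplitudes is a crude stand-in for envelope extraction from band-pass filtered signals.

```python
def n_of_m_select(envelopes, n):
    """Keep the n largest channel envelopes of one frame and zero the others."""
    ranked = sorted(range(len(envelopes)), key=lambda ch: envelopes[ch], reverse=True)
    kept = set(ranked[:n])
    return [env if ch in kept else 0 for ch, env in enumerate(envelopes)]

# Hypothetical band amplitudes for one frame, m = 4 channels, arbitrary integer units
speech_bands = [6, 0, 3, 0]
noise_bands = [5, 4, -5, 2]

standard = [abs(nb + sb) for sb, nb in zip(speech_bands, noise_bands)]  # speech in phase
inverted = [abs(nb - sb) for sb, nb in zip(speech_bands, noise_bands)]  # speech phase-inverted

frame_standard = n_of_m_select(standard, n=2)
frame_inverted = n_of_m_select(inverted, n=2)
print(frame_standard, frame_inverted)
```

In this toy frame, inverting the speech changes the band envelopes of the mixture and therefore which channels are selected. This suggests one way in which an envelope-based strategy might still produce different pulse patterns for the standard and phase-inverted signals, which is the possibility the electrodogram comparison is meant to test.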

Results

Due to technical errors in data collection, data were missing or unreliable for both the steady-state and interrupted masking noise for two bilateral CI users in the dichotic condition, for one bilateral CI user in the left CI condition with interrupted masking noise, and for two bilateral CI users in the right CI condition.

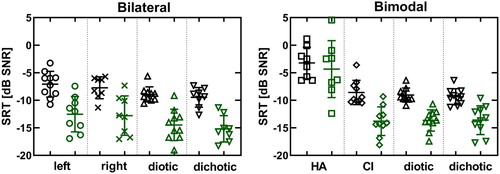

The test and retest SRTs for each condition were averaged. Table 2 shows the mean SRTs and Figure 2 shows the individual SRTs for the different conditions and masking noises for the participants. On average, the monaural SRTs of the bilateral CI users were not significantly different between left and right CI nor between the first-implanted CI and the second-implanted CI, for both steady-state noise and interrupted noise (all p-values > 0.25, without correction for multiple comparisons). Therefore, the monaural SRTs for the left and right ears of the bilateral CI users were averaged and used as the mean monaural SRT. The effects of the choice of monaural reference ear on the calculated binaural summation are described in the Discussion section.

Figure 2 Speech recognition in noise for 10 bilateral (left panel) and 10 bimodal (right panel) CI users. The SRT was measured in four different conditions (different symbols) and with two different masking noises. The SRTs measured with steady-state noise are represented by the black symbols, the SRTs measured with interrupted noise are represented by the green symbols. The symbols represent individual scores and the horizontal lines represent the mean ± 1 standard deviation.

Table 2 Mean SRT for the DIN tests (mean SRT for complete cases in brackets) measured in different conditions and with different masking noises

We used linear mixed models with participant as a random effect to analyze the data, because these models include all available data and not only those subjects with a complete dataset. For both groups of CI users, analyses were conducted to compare the effects of noise type and condition on SRT. For the bilateral CI users, linear mixed models revealed a significant overall effect of condition [F(2,49) = 4.964, p = 0.011] and type of noise [F(1,49) = 82.747, p < 0.001] on mean SRT. There was no significant interaction between condition and noise [F(2,49) = 0.041, p = 0.960]. For the bimodal CI users, linear mixed models revealed a significant overall effect of condition [F(3,68) = 34.298, p < 0.01] and type of noise [F(1,68) = 38.624, p < 0.01] on mean SRT. The interaction between condition and noise [F(3,68) = 2.023, p = 0.119] was not significant. Where effects were statistically significant, post hoc tests were conducted to examine binaural summation, binaural unmasking and the fluctuating masker benefit; these are reported below.

Binaural summation

For the bilateral CI users, the post hoc comparisons showed significantly better SRTs in the dichotic condition than in the monaural condition (p = 0.013). SRTs in the diotic condition were not significantly better than in the monaural condition (p = 0.077) although inspection of individual data shows a trend towards better SRTs in the diotic condition (see the Discussion section and supplemental material). Additionally, diotic and dichotic SRTs were averaged and paired samples t tests were performed to clarify the somewhat conflicting results when comparing monaural to binaural SRTs. Mean SRTs in bilateral conditions were significantly better than in monaural conditions for steady-state noise (t(8) = −3.923, p = 0.008) and for interrupted noise (t(8) = −3.769, p = 0.010); Bonferroni corrections were used for multiple comparisons. Thus, our results reveal a trend towards binaural summation in steady-state noise (1.6 dB) and in interrupted noise (2.0 dB) for the bilateral CI users.

For the bimodal CI users, the post hoc comparisons revealed that the SRTs in the HA alone condition were significantly worse than the SRTs in all other conditions (CI alone, diotic and dichotic; all p-values < 0.001). There were no significant differences in mean SRT when comparing CI alone and diotic conditions, even when omitting the Bonferroni correction for multiple comparisons. Averaging diotic and dichotic SRTs and performing paired samples t tests, as for the bilateral CI users, did not show significant differences between monaural and binaural SRTs. Thus, there was no binaural summation in the group of bimodal CI users.

Binaural unmasking

The pairwise comparison of the diotic with dichotic condition was non-significant (p = 1.00) for the bilateral CI users. For the bimodal CI users, there were also no significant differences in mean SRT when comparing diotic and dichotic conditions (p = 1.00). Thus, our results suggest that bilateral and bimodal CI users do not benefit from binaural unmasking. Note the small number of participants and the specific paradigm used to measure binaural unmasking.

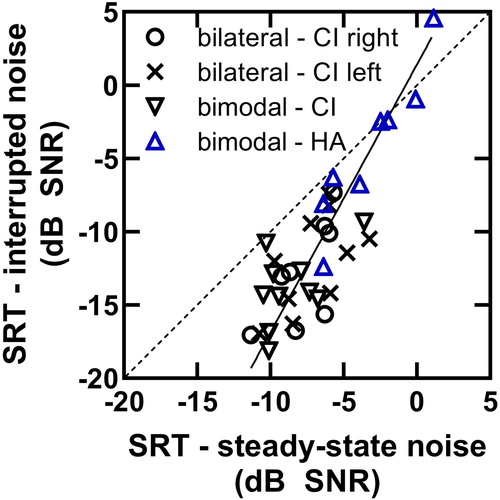

Fluctuating masker benefit

The conditions with interrupted noise resulted in significantly better SRTs than the conditions with steady-state noise for both the bilateral and bimodal CI users (p < 0.001 for both groups). Thus, both groups of participants benefited from interruptions in the masking noise, which yielded an average fluctuating masker benefit of 5.5 dB for bilateral CI users and 3.8 dB for bimodal CI users. Exploration of the individual data showed a trend towards increased benefit for bimodal users with better auditory thresholds. Due to the small number of participants, we could not test for statistical significance of the effect of auditory thresholds on bimodal benefit.

The effect of CI speech coding strategy on interaural speech cues

No significant binaural unmasking was found for the bilateral CI users. To explore whether the lack of binaural unmasking was due to the sound coding strategy of the CI sound processor or because the CI users cannot take advantage of interaural speech cues, electrodograms of a standard and a phase-inverted stimulus were compared. Figure 3A shows acoustic waveforms for the standard and phase-inverted digit-triplet ‘294’ at an SNR of −10 dB. The difference between the two waveforms (subtracted waveform in Figure 3A) yields the clean speech waveform. The interaural speech cues represented by the subtracted waveform can be used by an intact binaural auditory system and provide a benefit of 5–6 dB SNR in normal-hearing listeners (Smits et al., Citation2016). Electrodograms were created from the two (monaural) waveforms (Figure 3B). The difference between the two electrodograms illustrates that some interaural speech cues are preserved, as demonstrated by the similarity between the subtracted electrodogram and the electrodogram of the clean speech signal. Finally, the electrodograms were resynthesized in noise-band vocoder stimuli (Figure 3C). Again, the difference between the standard and phase-inverted signals resembles the vocoded signal of the electrodogram of the clean speech signal. These results demonstrate that at least some of the interaural speech cues are preserved during ACE speech coding of the dichotic signals.
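
The statement that subtracting the two acoustic waveforms yields the clean speech follows directly from the way the stimuli are constructed. The short sketch below shows it with invented integer samples: with standard = speech + noise and inverted = −speech + noise, the noise cancels in the difference and twice the speech remains.

```python
speech = [3, -1, 4, 0, -2]    # arbitrary illustrative samples
noise = [10, -7, 2, 5, -9]    # identical noise in both channels

standard = [s + n for s, n in zip(speech, noise)]    # speech in phase
inverted = [-s + n for s, n in zip(speech, noise)]   # speech phase-inverted

difference = [a - b for a, b in zip(standard, inverted)]
recovered = [d // 2 for d in difference]             # difference is exactly 2 x speech
print(recovered == speech)
```

The same cancellation is not guaranteed after CI processing, because the electrodograms are derived through nonlinear steps; that is why the electrodogram difference is compared with the electrodogram of the clean speech rather than assumed to equal it.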

Figure 3 (A) From left to right: monaural acoustic waveforms of standard and phase-inverted digit-triplet (2-9-4) in steady-state noise at an SNR of −10 dB, the difference between both monaural waveforms (subtracted), and the waveform of the clean speech signal. (B) Electrodograms of the monaural acoustic waveforms, the difference between both electrodograms representing interaural speech cues, and the electrodogram of the clean speech signal. (C) Monaural acoustic waveforms of the vocoded electrodograms, the difference between the waveforms, and the waveform of the clean speech signal

Discussion

Binaural summation

Binaural summation was determined by comparing monaural and binaural SRTs. For bilateral CI users, there was a trend towards better SRTs for binaural conditions than for monaural conditions. For bimodal CI users, monaural speech recognition with the CI was significantly better than with the HA alone, but binaural speech recognition was not significantly better than monaural speech recognition with the CI.

Previous studies have shown that normal-hearing listeners experience binaural summation with the DIN test. Smits et al. (Citation2016) reported a small, but significant, benefit (between 0.5 and 0.8 dB) from the diotic condition compared to the monaural condition. van Loon et al. (Citation2014) reported binaural summation of 0.9 dB for normal-hearing listeners in a free-field setup with the DIN test in steady-state noise, and binaural summation of 1.8 dB for a small group of five bilateral CI users with the same setup. Although the binaural summation for the CI users reported by van Loon et al. (Citation2014) was not significant, it corresponds with the 1.6 dB found in the current study. Some studies have demonstrated no binaural summation for bilateral CI users (Laske et al., Citation2009; Rana et al., Citation2017; van Hoesel and Tyler, Citation2003). However, other studies have found that some bilateral CI users experience binaural summation (Kokkinakis and Pak, Citation2014; Reeder et al., Citation2014; Schleich et al., Citation2004; Tyler et al., Citation2002).

It should be noted that the magnitude of the calculated binaural summation depends on the chosen monaural reference SRT (Davis et al., Citation1990; van Hoesel, Citation2012). In the current study, we did not find differences in mean SRT between left and right nor between first-implanted and second-implanted CI. Therefore, we averaged the monaural SRTs to reduce random error. Significant differences in speech perception often exist between the first and second CI. If differences exist, averaging monaural SRTs would result in invalid results for binaural summation. For example, the calculated binaural summation would be half of the difference between the two monaural SRTs when there is no true binaural summation. When comparing the diotic SRT to the better ear monaural SRT, it could yield an underestimation of the true binaural summation. If the chosen reference ear is based on different criteria (e.g. first-implanted CI), then a potential risk of overestimating the true binaural summation occurs, for example, when speech recognition with the second-implanted CI is much better than with the first-implanted CI. The derived binaural summation would then consist of a better-ear advantage (i.e. the difference between the monaural SRTs from the second-implanted CI and first-implanted CI) and the true binaural summation.
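
The dependence on the reference ear can be made concrete with a small worked example. The values below are invented for illustration and are not study data: a hypothetical listener has no true binaural summation, so the binaural SRT simply equals the better-ear SRT, and the second-implanted CI is the better ear.

```python
def binaural_summation(monaural_ref_srt, binaural_srt):
    """Positive values mean the binaural SRT is better (lower) than the reference."""
    return monaural_ref_srt - binaural_srt

# Hypothetical monaural SRTs in dB SNR (illustrative values only)
first_ci, second_ci = -2.0, -6.0
binaural = min(first_ci, second_ci)   # no true summation: binaural equals better ear

by_average = binaural_summation((first_ci + second_ci) / 2, binaural)
by_better_ear = binaural_summation(min(first_ci, second_ci), binaural)
by_first_ci = binaural_summation(first_ci, binaural)

print(by_average, by_better_ear, by_first_ci)
```

With the averaged reference the apparent summation is 2 dB, half of the 4 dB difference between the two ears. With the better-ear reference it is 0 dB, and with the first-implanted CI as reference it is 4 dB, which is entirely a better-ear advantage.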

We recognize that the differences observed between conditions could be partly due to small sample sizes and the choices that we made for the monaural reference SRT and binaural SRT. Results for paired sample t tests between monaural SRTs (first CI, better CI and the average monaural SRT) and binaural SRTs (diotic SRT and average of diotic and dichotic SRT) are provided as supplemental material. Although all 12 comparisons showed a better mean value for the binaural SRT than for the monaural SRT, only 5 of the 12 were statistically significantly different. Thus, our results suggest binaural summation for the group of bilateral CI users, but further study with a larger group of participants, and/or more SRT measurements to reduce measurement error, is needed to confirm this conclusion.

We did not find significant binaural summation in our group of bimodal CI users. Because of the much better SRTs in the CI alone conditions than in the HA alone conditions (differences are 5.3 and 8.9 dB for steady-state noise and interrupted noise, respectively), and because the better ear (i.e. CI alone) was chosen for the reference monaural SRT, no risk of underestimation or overestimation of the true binaural summation exists (van Hoesel, Citation2012). Most studies show an improvement in speech recognition in noise when adding a HA (Hoppe et al., Citation2018; Kokkinakis and Pak, Citation2014; van Hoesel, Citation2012). However, Morera et al. (Citation2005) did not find a benefit. Hoppe et al. (Citation2018) demonstrated binaural summation in a large group (148) of bimodal CI users. Different from our group of participants and also different from most other studies is that in the Hoppe et al. (Citation2018) study, participants generally had relatively good hearing thresholds in the HA ear and the mean monaural SRT with CI was similar to the mean monaural SRT with HA. Hoppe et al. (Citation2018) did not find a significant relationship between binaural summation and pure-tone thresholds, but the relationship with speech recognition in quiet with the HA was significant and showed less binaural summation for participants with poorer speech recognition in quiet. van Hoesel (Citation2012) analyzed data from four studies and showed a decrease in binaural summation for speech recognition in noise with an increasing difference between HA and CI scores. It is likely that we found no binaural summation in our group of bimodal CI users because of the relatively large differences between speech recognition scores with CI and HA. Importantly, the small number of participants could very well have influenced the results.

Binaural unmasking

Binaural unmasking was assessed by comparing diotic (same speech and noise signal, S0N0) and dichotic (phase-inverted speech signal and same noise signal, SπN0) speech recognition in noise. We hypothesized that neither group of CI users would demonstrate binaural unmasking. Indeed, the interaural phase difference introduced in the speech signal was found to have no effect on speech recognition for bilateral and bimodal CI users. In normal-hearing listeners, binaural unmasking results in an improvement in speech recognition of 5.5–6.0 dB for digit-triplets (Smits et al., 2016). These values were obtained in a group of young normal-hearing listeners. Because binaural processing deteriorates with increasing age, even when audiometric thresholds are within the normal range (Moore, 2016), results could be expected to be lower for participants in the current study based on age alone.
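The diotic (S0N0) and dichotic (SπN0) conditions described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the stimulus generation used in the study: the function name, the sample rate, the tone standing in for speech and the Gaussian noise are all hypothetical.

```python
import math
import random


def make_stimuli(speech, noise, invert_speech_in_right_ear):
    """Return (left, right) ear signals for S0N0 (False) or SπN0 (True)."""
    sign = -1.0 if invert_speech_in_right_ear else 1.0
    left = [s + n for s, n in zip(speech, noise)]
    # A 180-degree phase shift of the speech equals multiplying it by -1;
    # the noise is identical in both ears (N0).
    right = [sign * s + n for s, n in zip(speech, noise)]
    return left, right


rng = random.Random(0)
fs = 16000  # sample rate in Hz (assumed)
# Stand-in "speech": a 500 Hz tone; real digit-triplet recordings would go here.
speech = [0.1 * math.sin(2 * math.pi * 500 * i / fs) for i in range(fs // 10)]
noise = [rng.gauss(0.0, 1.0) for _ in speech]

diotic_left, diotic_right = make_stimuli(speech, noise, False)
dichotic_left, dichotic_right = make_stimuli(speech, noise, True)

# Interaural difference: zero for S0N0, twice the speech for SπN0 (noise cancels).
diotic_diff = [l - r for l, r in zip(diotic_left, diotic_right)]
dichotic_diff = [l - r for l, r in zip(dichotic_left, dichotic_right)]
```

The cancellation of the noise in the interaural difference holds for these acoustic signals because the mixing is linear; it says nothing by itself about what a sound processor transmits to each ear.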

Because CIs do not transmit the temporal fine-structure information of the signal, it was expected that CI users would lack interaural speech cues needed for binaural unmasking. This is supported by Sheffield et al. (2017) who used the same conditions as in our study (S0N0 and SπN0) to measure SRTs for sentences presented auditory-visually to bimodal CI users pre- and post-implantation. They found that approximately half of the participants experienced binaural unmasking preoperatively. However, the majority of bimodal CI users had no binaural unmasking postoperatively (Sheffield et al., 2017). Using a different paradigm with speech and steady-state noise in one ear and the same noise, but without speech, in the other ear, Bernstein et al. (2016) found no binaural unmasking for bilateral CI users. van Hoesel et al. (2008) did not observe binaural speech unmasking resulting from a 700 µs ITD applied to speech in diotic noise in bilateral CI users. Using a similar paradigm, Ching et al. (2005) applied a 700 µs ITD to the noise and did not find binaural unmasking in bimodal CI users. However, Zirn, Arndt, et al. (2016) reported a small but significant amount of binaural unmasking for bilateral CI users using the same paradigm as in our study. They reported a difference in SRT of approximately 0.5–0.6 dB between S0N0 and SπN0 for sentences in steady-state noise measured in a group of 12 MED-EL CI users.

The analyses of electrodograms unexpectedly showed that subtracting the monaural electrodograms yielded a substantial amount of interaural speech cues in the resulting electrodogram, even for the low SNR of −10 dB. It is unclear whether listeners can use these cues without specific training. A more detailed look at the subtracted electrodogram showed that the noise perfectly cancels out during the first 500 msec where no speech is present. When the mixed speech and noise signals introduce differences between the two monaural signals, the electrodograms show differences which last until the end of the stimuli. It is most likely that some slow-acting features in the sound coding strategy act differently for the two signals, resulting in activation patterns where nothing would be expected (e.g. the last 750 msec of the stimuli where no speech is present and the noise is identical for both signals). This is also visible in the subtracted vocoded signals. It is remarkable that introducing the 180-degree phase shift to the speech signal has such an apparent effect on the subtracted electrodogram, even for an SNR of −10 dB. It should be noted that, although the results show that interaural speech cues are at least partly preserved by the ACE speech coding strategy, differences between fitting parameters in both sound processors could be detrimental to these interaural speech cues. Furthermore, differences in the electrode-neuron interface and in pitch-to-place of stimulation may exist between the two ears, potentially limiting the possibility to fully utilize these cues. Researchers have developed specific speech processing strategies in an attempt to provide temporal fine-structure cues to bilateral CI users (Laback et al., 2015). Stimuli to both sides can be synchronized by using controlled electrical signals delivered by research processors (Todd et al., 2019). It is important to use these research processors when exploring the possibilities for bilateral CI users to use bilateral speech cues, but this approach does not give insight into the use of these cues in realistic listening situations with standard clinical speech processors. For bimodal CI users, the processing delay for the HA will be much larger than for the CI (Zirn et al., 2019), which would be detrimental for binaural unmasking.

Fluctuating masker benefit

The fluctuating masker benefit was assessed by comparing speech recognition in steady-state noise with speech recognition in interrupted noise. Both groups of CI users demonstrated a large and significant fluctuating masker benefit, 5.4 and 3.8 dB for bilateral and bimodal CI users, respectively. It is well known that normal-hearing individuals experience an improvement in speech recognition in fluctuating noise (Rhebergen et al., 2008; Smits et al., 2016; Smits and Houtgast, 2007). Hearing-impaired listeners often experience less fluctuating masker benefit than normal-hearing listeners (Bernstein and Grant, 2009; Desloge et al., 2010; Smits and Festen, 2013). Nelson et al. (2003) found a small amount of fluctuating masker benefit for CI users, depending on the frequency of interruption. Cullington and Zeng (2008) and Stickney et al. (2004) found no fluctuating masker benefit for different fluctuating maskers in CI users and normal-hearing listeners who listened through an implant simulation.

Possible explanations for the discrepancy between our findings and the findings in the literature may be the stimulus presentation mode, type of fluctuating noise, and speech material used. We used DAI to directly present stimuli to the sound processor, whereas some other studies used loudspeakers to present stimuli. However, Zirn, Polterauer, et al. (2016) also used an audio cable to investigate fluctuating masker benefit in a group of high performing CI users, and they found no fluctuating masker benefit. Unlike our study, which used interrupted noise, they used speech-modulated noise which means that CI users need to segregate speech from noise. In interrupted noise, CI users can use clean speech fragments during noise interruptions for recognition. The large fluctuating masker benefit found in our study might also be explained by the speech material that we used (i.e. digit-triplets). Smits and Festen (2013) showed that the fluctuating masker benefit decreases with increasing SNR. Both Kwon et al. (2012) and Zirn, Polterauer, et al. (2016) used sentences as speech material. SRTs for sentences are in general worse than SRTs for digit-triplets (Kaandorp et al., 2015; Smits et al., 2013), which implies less fluctuating masker benefit when using sentences than when using digit-triplets.
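The idea that interrupted noise leaves clean speech fragments can be illustrated with a short Python sketch that gates a noise signal on and off with a square wave. The function name, the 16 Hz interruption rate, the 50% duty cycle, the complete silencing of the noise during gaps and the sample rate are assumptions chosen for illustration; they are not taken from the description of the masker in this section.

```python
import random


def interrupt_noise(noise, fs, rate_hz=16.0, duty_cycle=0.5):
    """Gate a noise signal on and off with a square wave (assumed parameters)."""
    period = fs / rate_hz  # samples per on/off cycle
    gated = []
    for i, sample in enumerate(noise):
        phase = (i % period) / period  # position within the current cycle, 0..1
        # Noise is present in the first part of each cycle and silenced in the rest.
        gated.append(sample if phase < duty_cycle else 0.0)
    return gated


rng = random.Random(1)
fs = 16000  # sample rate in Hz (assumed)
noise = [rng.gauss(0.0, 1.0) for _ in range(fs)]  # 1 s of steady-state noise
interrupted = interrupt_noise(noise, fs)

# Fraction of samples in which speech would be heard without any masker.
silent_fraction = sum(1 for v in interrupted if v == 0.0) / len(interrupted)
```

In a speech-modulated masker, by contrast, the noise level varies continuously and seldom drops to zero, so comparable noise-free fragments are not available.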

Another explanation for the large fluctuating masker benefit found in our study compared to other studies could be the relatively good SRTs in steady-state noise for the CI users in the current study. The fluctuating masker benefit for hearing-impaired listeners decreases with increasing SRTs in steady-state noise (Smits and Festen, 2013). Figure 4 gives an indication that the large fluctuating masker benefit could be due to the good (low) SRTs in steady-state noise found in our study. It shows the SRT in interrupted noise versus the SRT in steady-state noise for the monaural conditions. The data were fitted with a linear function, represented by the solid line, using Deming's regression to account for the errors in both x and y values. The slope of this function, 1.8 (95% CI: 1.6–2.1), indicates a decrease in fluctuating masker benefit with increasing SRT in steady-state noise. This might indicate that the CI users in this study are relatively high-performing CI users. Both Kwon et al. (2012) and Zirn, Polterauer, et al. (2016) showed fluctuating masker benefit for some of their high-performing CI users. However, Kwon et al. (2012) created a condition that promoted fluctuating masker benefit, because the masker was presented in gaps of the speech signal.
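For readers unfamiliar with Deming's regression, the closed-form fit can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the function name is hypothetical, the error-variance ratio `delta` is assumed to be 1 (orthogonal regression) because the ratio is not stated here, and the SRT pairs are invented values that lie exactly on a line.

```python
import math


def deming_fit(x, y, delta=1.0):
    """Fit y = intercept + slope * x by Deming regression.

    delta is the ratio of the error variance in y to the error variance in x;
    delta = 1.0 (orthogonal regression) is an assumption, not from the study.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equally long samples with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xx = sum((xi - mean_x) ** 2 for xi in x) / (n - 1)
    s_yy = sum((yi - mean_y) ** 2 for yi in y) / (n - 1)
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / (n - 1)
    if s_xy == 0:
        raise ValueError("slope is undefined when the covariance is zero")
    term = s_yy - delta * s_xx
    slope = (term + math.sqrt(term ** 2 + 4 * delta * s_xy ** 2)) / (2 * s_xy)
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical SRT pairs in dB SNR; NOT data from this study.
srt_steady = [-10.0, -8.0, -6.0, -4.0]
srt_interrupted = [2.0 * s + 1.0 for s in srt_steady]  # exactly on a line
slope, intercept = deming_fit(srt_steady, srt_interrupted)
```

For a fitted line y = a + bx, the fluctuating masker benefit x − y equals −a + (1 − b)x, so any slope b greater than 1 implies a benefit that shrinks as the SRT in steady-state noise increases.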

Figure 4. SRT in interrupted noise versus SRT in steady-state noise for the monaural conditions in bilateral and bimodal CI users. Black symbols represent data from measurements with a CI and blue symbols represent data from measurements with a HA. The diagonal dashed line represents equal performance, with the distance between points below the dashed line and the diagonal representing the fluctuating masker benefit. The solid line shows a fitted linear function suggesting a decrease in fluctuating masker benefit with an increase in SRT in steady-state noise.

Conclusion

The current study demonstrated a trend toward binaural summation in bilateral CI users but not in bimodal CI users. The latter may be due to the large difference in speech recognition with CI and with HA. Although the effect of binaural unmasking on speech recognition in noise is large in normal-hearing listeners, no binaural unmasking was demonstrated for either group of CI users. Electrodogram analyses showed that a substantial proportion of interaural speech cues are preserved in the ACE speech coding strategy. However, the absence of binaural unmasking in bilateral CI users showed that this group could not use these cues to improve speech recognition in noise. A large fluctuating masker benefit was found in both bilateral and bimodal CI users, when speech recognition in steady-state masking noise was compared to speech recognition in interrupted masking noise. These results indicate that both bilateral and bimodal CI users are able to use the temporarily higher SNRs that arise from interruptions in the masking noise.

Statement of ethics

Participants enrolled in the study voluntarily and provided informed consent at the beginning of the study. The study was approved by the Human Research Ethics Committee of The University of Western Australia.

Disclaimer statements

Contributors None.

Funding None.

Conflict of interest None.

Ethics approval None.

Supplemental data for this article can be accessed at https://doi.org/10.1080/14670100.2021.1894686

Acknowledgements

The authors would like to thank Siew-Moon Lim and Kayla Harvey from Ear Science Institute Australia (Subiaco, Australia) for their help with the recruitment of participants, HA fitting and data collection. The authors would also like to thank Ann de Bolster and Obaid Qazi from Cochlear Technology Center (Mechelen, Belgium) for contributions to the development and programming of the speech recognition tool, and for creating the electrodograms and vocoded signals. GN Hearing Benelux B.V. is acknowledged for providing the ReSound ENZO hearing aids used in this study.

References

- Bernstein, J.G., Goupell, M.J., Schuchman, G.I., Rivera, A.L., Brungart, D.S. 2016. Having two ears facilitates the perceptual separation of concurrent talkers for bilateral and single-sided deaf cochlear implantees. Ear & Hearing, 37(3): 289–302. doi:10.1097/AUD.0000000000000284.

- Bernstein, J.G., Grant, K.W. 2009. Auditory and auditory-visual intelligibility of speech in fluctuating maskers for normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America, 125(5): 3358–3372. doi:10.1121/1.3110132.

- Ching, T.Y., van Wanrooy, E., Hill, M., Dillon, H. 2005. Binaural redundancy and inter-aural time difference cues for patients wearing a cochlear implant and a hearing aid in opposite ears. International Journal of Audiology, 44(9): 513–521. doi:10.1080/14992020500190003.

- Cullington, H.E., Zeng, F.G. 2008. Speech recognition with varying numbers and types of competing talkers by normal-hearing, cochlear-implant, and implant simulation subjects. The Journal of the Acoustical Society of America, 123(1): 450–461. doi:10.1121/1.2805617.

- Davis, A., Haggard, M., Bell, I. 1990. Magnitude of diotic summation in speech-in-noise tasks: performance region and appropriate baseline. British Journal of Audiology, 24(1): 11–16. doi:10.3109/03005369009077838.

- de Graaff, F., Huysmans, E., Merkus, P., Goverts, S.T., Smits, C. 2018. Assessment of speech recognition abilities in quiet and in noise: a comparison between self-administered home testing and testing in the clinic for adult cochlear implant users. International Journal of Audiology, 57(11): 872–880. doi:10.1080/14992027.2018.1506168.

- de Graaff, F., Huysmans, E., Qazi, O.U., Vanpoucke, F.J., Merkus, P., Goverts, S.T., Smits, C. 2016. The development of remote speech recognition tests for adult cochlear implant users: the effect of presentation mode of the noise and a reliable method to deliver sound in home environments. Audiology and Neurotology, 21(Suppl. 1): 48–54. doi:10.1159/000448355.

- Desloge, J.G., Reed, C.M., Braida, L.D., Perez, Z.D., Delhorne, L.A. 2010. Speech reception by listeners with real and simulated hearing impairment: effects of continuous and interrupted noise. The Journal of the Acoustical Society of America, 128(1): 342–359. doi:10.1121/1.3436522.

- Devocht, E.M., George, E.L., Janssen, A.M., Stokroos, R.J. 2015. Bimodal hearing aid retention after unilateral cochlear implantation. Audiology and Neurotology, 20(6): 383–393. doi:10.1159/000439344.

- Dunn, C.C., Tyler, R.S., Witt, S.A. 2005. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. Journal of Speech, Language, and Hearing Research, 48(3): 668–680. doi:10.1044/1092-4388(2005/046).

- Gifford, R.H., Dorman, M.F., McKarns, S.A., Spahr, A.J. 2007. Combined electric and contralateral acoustic hearing: word and sentence recognition with bimodal hearing. Journal of Speech, Language, and Hearing Research, 50(4): 835–843. doi:10.1044/1092-4388(2007/058).

- Gifford, R.H., Dorman, M.F., Sheffield, S.W., Teece, K., Olund, A.P. 2014. Availability of binaural cues for bilateral implant recipients and bimodal listeners with and without preserved hearing in the implanted ear. Audiology and Neurotology, 19(1): 57–71. doi:10.1159/000355700.

- Hoppe, U., Hocke, T., Digeser, F. 2018. Bimodal benefit for cochlear implant listeners with different grades of hearing loss in the opposite ear. Acta Oto-Laryngologica, 138(8): 713–721. doi:10.1080/00016489.2018.1444281.

- Kaandorp, M.W., Smits, C., Merkus, P., Festen, J.M., Goverts, S.T. 2017. Lexical-access ability and cognitive predictors of speech recognition in noise in adult cochlear implant users. Trends in Hearing, 21: 2331216517743887. doi:10.1177/2331216517743887.

- Kaandorp, M.W., Smits, C., Merkus, P., Goverts, S.T., Festen, J.M. 2015. Assessing speech recognition abilities with digits in noise in cochlear implant and hearing aid users. International Journal of Audiology, 54(1): 48–57. doi:10.3109/14992027.2014.945623.

- Kokkinakis, K., Pak, N. 2014. Binaural advantages in users of bimodal and bilateral cochlear implant devices. The Journal of the Acoustical Society of America, 135(1): EL47–EL53. doi:10.1121/1.4831955.

- Koopmans, W.J.A., Goverts, S.T., Smits, C. 2018. Speech recognition abilities in normal-hearing children 4 to 12 years of age in stationary and interrupted noise. Ear & Hearing, 39(6): 1091–1103. doi:10.1097/AUD.0000000000000569.

- Kwon, B.J., Perry, T.T., Wilhelm, C.L., Healy, E.W. 2012. Sentence recognition in noise promoting or suppressing masking release by normal-hearing and cochlear-implant listeners. The Journal of the Acoustical Society of America, 131(4): 3111–3119. doi:10.1121/1.3688511.

- Laback, B., Egger, K., Majdak, P. 2015. Perception and coding of interaural time differences with bilateral cochlear implants. Hearing Research, 322: 138–150. doi:10.1016/j.heares.2014.10.004.

- Laske, R.D., Veraguth, D., Dillier, N., Binkert, A., Holzmann, D., Huber, A.M. 2009. Subjective and objective results after bilateral cochlear implantation in adults. Otology & Neurotology, 30(3): 313–318. doi:10.1097/MAO.0b013e31819bd7e6.

- Leigh, J.R., Moran, M., Hollow, R., Dowell, R.C. 2016. Evidence-based guidelines for recommending cochlear implantation for postlingually deafened adults. International Journal of Audiology, 55(Suppl 2): S3–S8. doi:10.3109/14992027.2016.1146415.

- Moore, B.C.J. 2016. Effects of age and hearing loss on the processing of auditory temporal fine structure. Advances in Experimental Medicine and Biology, 894: 1–8. doi:10.1007/978-3-319-25474-6_1.

- Morera, C., Manrique, M., Ramos, A., Garcia-Ibanez, L., Cavalle, L., Huarte, A., et al. 2005. Advantages of binaural hearing provided through bimodal stimulation via a cochlear implant and a conventional hearing aid: a 6-month comparative study. Acta Oto-Laryngologica, 125(6): 596–606. doi:10.1080/00016480510027493.

- Nelson, P.B., Jin, S.H., Carney, A.E., Nelson, D.A. 2003. Understanding speech in modulated interference: cochlear implant users and normal-hearing listeners. The Journal of the Acoustical Society of America, 113(2): 961–968. doi:10.1121/1.1531983.

- Rana, B., Buchholz, J.M., Morgan, C., Sharma, M., Weller, T., Konganda, S.A., et al. 2017. Bilateral versus unilateral cochlear implantation in adult listeners: speech-on-speech masking and multitalker localization. Trends in Hearing, 21: 2331216517722106. doi:10.1177/2331216517722106.

- Reeder, R.M., Firszt, J.B., Holden, L.K., Strube, M.J. 2014. A longitudinal study in adults with sequential bilateral cochlear implants: time course for individual ear and bilateral performance. Journal of Speech, Language, and Hearing Research, 57(3): 1108–1126. doi:10.1044/2014_JSLHR-H-13-0087.

- Rhebergen, K.S., Versfeld, N.J., Dreschler, W.A. 2008. Learning effect observed for the speech reception threshold in interrupted noise with normal hearing listeners. International Journal of Audiology, 47(4): 185–188. doi:10.1080/14992020701883224.

- Schafer, E.C., Amlani, A.M., Paiva, D., Nozari, L., Verret, S. 2011. A meta-analysis to compare speech recognition in noise with bilateral cochlear implants and bimodal stimulation. International Journal of Audiology, 50(12): 871–880. doi:10.3109/14992027.2011.622300.

- Schleich, P., Nopp, P., D'Haese, P. 2004. Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear & Hearing, 25(3): 197–204. doi:10.1097/01.aud.0000130792.43315.97.

- Sheffield, B.M., Schuchman, G., Bernstein, J.G.W. 2017. Pre- and postoperative binaural unmasking for bimodal cochlear implant listeners. Ear & Hearing, 38(5): 554–567. doi:10.1097/AUD.0000000000000420.

- Smits, C., Festen, J.M. 2013. The interpretation of speech reception threshold data in normal-hearing and hearing-impaired listeners: II. Fluctuating noise. The Journal of the Acoustical Society of America, 133(5): 3004–3015. doi:10.1121/1.4798667.

- Smits, C., Houtgast, T. 2007. Recognition of digits in different types of noise by normal-hearing and hearing-impaired listeners. International Journal of Audiology, 46(3): 134–144. doi:10.1080/14992020601102170.

- Smits, C., Goverts, S.T., Festen, J.M. 2013. The digits-in-noise test: assessing auditory speech recognition abilities in noise. The Journal of the Acoustical Society of America, 133(3): 1693–1706. doi:10.1121/1.4789933.

- Smits, C., Watson, C.S., Kidd, G.R., Moore, D.R., Goverts, S.T. 2016. A comparison between the Dutch and American-English digits-in-noise (DIN) tests in normal-hearing listeners. International Journal of Audiology, 55(6): 358–365. doi:10.3109/14992027.2015.1137362.

- Smulders, Y.E., Hendriks, T., Eikelboom, R.H., Stegeman, I., Santa Maria, P.L., Atlas, M.D., Friedland, P.L. 2017. Predicting sequential cochlear implantation performance: a systematic review. Audiology and Neurotology, 22(6): 356–363. doi:10.1159/000488386.

- Snel-Bongers, J., Netten, A.P., Boermans, P.B.M., Rotteveel, L.J.C., Briaire, J.J., Frijns, J.H.M. 2018. Evidence-based inclusion criteria for cochlear implantation in patients with postlingual deafness. Ear & Hearing, 39(5): 1008–1014. doi:10.1097/AUD.0000000000000568.

- Stickney, G.S., Zeng, F.G., Litovsky, R., Assmann, P. 2004. Cochlear implant speech recognition with speech maskers. The Journal of the Acoustical Society of America, 116(2): 1081–1091. doi:10.1121/1.1772399.

- Todd, A.E., Goupell, M.J., Litovsky, R.Y. 2019. Binaural unmasking with temporal envelope and fine structure in listeners with cochlear implants. The Journal of the Acoustical Society of America, 145(5): 2982–2993. doi:10.1121/1.5102158.

- Tyler, R.S., Gantz, B.J., Rubinstein, J.T., Wilson, B.S., Parkinson, A.J., Wolaver, A., et al. 2002. Three-month results with bilateral cochlear implants. Ear & Hearing, 23(Suppl 1): 80S–89S. doi:10.1097/00003446-200202001-00010.

- van Hoesel, R., Bohm, M., Pesch, J., Vandali, A., Battmer, R.D., Lenarz, T. 2008. Binaural speech unmasking and localization in noise with bilateral cochlear implants using envelope and fine-timing based strategies. The Journal of the Acoustical Society of America, 123(4): 2249–2263. doi:10.1121/1.2875229.

- van Hoesel, R.J. 2012. Contrasting benefits from contralateral implants and hearing aids in cochlear implant users. Hearing Research, 288(1–2): 100–113. doi:10.1016/j.heares.2011.11.014.

- van Hoesel, R.J., Tyler, R.S. 2003. Speech perception, localization, and lateralization with bilateral cochlear implants. The Journal of the Acoustical Society of America, 113(3): 1617–1630. doi:10.1121/1.1539520.

- van Loon, M.C., Goverts, S.T., Merkus, P., Hensen, E.F., Smits, C. 2014. The addition of a contralateral microphone for unilateral cochlear implant users: Not an alternative for bilateral cochlear implantation. Otology & Neurotology, 35(9): e233–e239.

- van Loon, M.C., Smits, C., Smit, C.F., Hensen, E.F., Merkus, P. 2017. Cochlear implantation in adults with asymmetric hearing loss: benefits of bimodal stimulation. Otology & Neurotology, 38(6): e100–e106. doi:10.1097/MAO.0000000000001418.

- Zirn, S., Angermeier, J., Arndt, S., Aschendorff, A., Wesarg, T. 2019. Reducing the device delay mismatch can improve sound localization in bimodal cochlear implant/hearing-aid users. Trends in Hearing, 23: 2331216519843876. doi:10.1177/2331216519843876.

- Zirn, S., Arndt, S., Aschendorff, A., Laszig, R., Wesarg, T. 2016. Perception of interaural phase differences with envelope and fine structure coding strategies in bilateral cochlear implant users. Trends in Hearing, 20. doi:10.1177/2331216516665608.

- Zirn, S., Polterauer, D., Keller, S., Hemmert, W. 2016. The effect of fluctuating maskers on speech understanding of high-performing cochlear implant users. International Journal of Audiology, 55(5): 295–304. doi:10.3109/14992027.2015.1128124.