ABSTRACT

This paper contributes to the social media moderation research space by examining the still under-researched “shadowban”, a form of light and secret censorship targeting what Instagram defines as borderline content, particularly affecting posts depicting women’s bodies, nudity and sexuality. “Shadowban” is a user-generated term for the platform’s “vaguely inappropriate content” policy, which hides users’ posts from its Explore page, dramatically reducing their visibility. While research has already focused on algorithmic bias and on social media moderation, there are, at present, no studies on how Instagram’s shadowban works. This autoethnographic exploration of the shadowban provides insights into how it manifests from a user’s perspective, applying a risk society framework to Instagram’s moderation of pole dancing content to show how the platform’s preventive measures are affecting user rights.

Introduction

This article provides one of the first contributions to research on Instagram’s “shadowban”, a user-generated term for the Facebook-owned photo streaming app’s “vaguely inappropriate content” policy, which dramatically reduces posts’ visibility by hiding them from its Explore page without warning (Josh Constine 2019).

Instagram’s moderation of nudity has previously been condemned by artists, performers, activists and celebrities after bans on pictures of female but not male nipples, which caused global online and offline protests such as #FreeTheNipple (Tarleton Gillespie 2018; Instagram n.d.b). Although Facebook and Instagram stopped removing breastfeeding and mastectomy pictures following the protests (Gillespie 2018), nudity on these platforms continues to be viewed as problematic and is moderated through a variety of obscure techniques, such as shadowbans (Susanna Paasonen, Kylie Jarrett and Ben Light 2019; Katrin Tiidenberg and Emily van der Nagel 2020).

Techniques similar to shadowbanning have been used in Internet forums since the 1970s, but the term itself seems to have appeared in the early 2000s on the Something Awful website, where in 2001 moderators began to diminish the reach of those joining forums to troll others (Samantha Cole 2018). In 2016, shadowbans became a conservative conspiracy theory, with the far-right website Breitbart arguing Twitter was shadowbanning Republicans to diminish their influence (ibid).

Shadowbans are a cross-platform moderation technique implemented by platforms like Twitter—which prevents shadowbanned accounts’ usernames from appearing in search results (Liam Stack 2018)—and TikTok—which allegedly hid black creators from newsfeeds (Trinady Joslin 2020). Given my experience with Instagram censorship and the app’s growing role in self-promotion and user self-expression (Jesselyn Cook 2019, 2020), this paper focuses on Instagram’s shadowban.

Throughout 2019 and 2020, Instagram used shadowbans to hide pictures and videos it deemed inappropriate without deleting them, preventing freelancers, artists, sex workers, activists and, largely, women from reaching new audiences and potentially growing their pages (Carolina Are 2019a; Cook 2019, 2020; Sharine Taylor 2019; Mark Zuckerberg 2018). Hiding or removing social media content is, for Gillespie, “akin to censorship”: it limits users’ speech and prevents them from participating on platforms, causing a chilling effect when posting (Gillespie 2018, 177).

Informed by an autoethnography of my experience on Instagram and my interviews with its press team, this paper examines Instagram’s censorship of nudity through a world risk society framework. World risk society theory (Ulrich Beck 1992, 2006; Anthony Giddens 1998) is based on the idea that institutions and businesses ineffectively attempt to reduce risks for their citizens or customers by restricting civil liberties. In this way, corporations attempt to avoid undesirable events by arbitrarily identifying risks to prevent, increasing the marginalisation of society’s ‘others’ (ibid). This paper will show that, on Instagram, these preventive governance measures result in unfair moderation.

Social media moderation research has explored the practice of ‘flagging’ content (Kate Crawford and Tarleton Gillespie 2016); algorithmic bias in moderation (Reuben Binns 2019; Gillespie 2018; Kaye 2019; Paasonen et al. 2019; etc.); censorship of sex or sexual content (Tiidenberg and van der Nagel 2020); and the ‘assembling’ of social media moderation and content recommendation processes to perpetuate sexism (Ysabel Gerrard and Helen Thornham 2020). However, research has yet to examine examples of Instagram’s shadowban.

While nudity and sexualisation of women are common in advertising and the media (J. V. Sparks and Annie Lang 2015), social media offered users the opportunity to portray their sexuality on their own terms, sparking movements and trends that normalised different bodies and sexual practices (Sarah Banet-Weiser 2018; Rachel Cohen et al. 2019). This deviation from mainstream notions of which bodies and activities are deemed acceptable to depict in a public forum nonetheless resulted in platforms limiting nudity and sexual expression to appeal to advertisers (Tarleton Gillespie 2010; Paasonen et al. 2019; Tiidenberg and van der Nagel 2020).

Facebook/Instagram’s governance of bodies following this shift has been found to rely on old-fashioned and non-inclusive depictions of bodies: leaked reports showed that the company based its Community Standards on advertising guidelines by Victoria’s Secret (Salty 2019) or tried to codify the acceptability of different modes of breast cupping or grabbing (David Gilbert 2020), using standards more akin to sexist advertising (Sparks and Lang 2015) than to the progressive sexual practices showcased by the platforms’ own users. Shadowbans are a key technique through which these standards are implemented.

Instagram reportedly uses light censorship through the shadowban, meaning that if a post is sexually suggestive but does not depict a sex act, it may not appear in Explore or hashtag pages, seriously impacting creators’ ability to gain new audiences (Are 2019b, 2019c; Constine 2019).

Shadowbannable content is what Zuckerberg (2018) defined as “borderline” when he introduced the policies he rolled out across Facebook brands. Borderline content approaches the line of acceptability set by Facebook/Instagram and, in the company’s experience, generates higher engagement even when people do not approve of it. Facebook understands nudity as borderline and has successfully hidden it from its platforms: “photos close to the line of nudity, like with revealing clothing or sexually suggestive positions, got more engagement on average before we changed the distribution curve to discourage this” (ibid). Zuckerberg fails to define what “revealing clothing” or “sexually suggestive positions” are, but admits Facebook’s algorithms “proactively identify 96% of the nudity” they take action against, compared to 52% of hate speech (ibid).

Facebook/Instagram’s approach, which affects women, athletes, educators, artists, sex workers, the LGBTQIA+ community and people of colour, has been condemned as “secret censorship of a person, topic or community,” negatively impacting those who rely on these platforms to earn a living (Cook 2020). In a social media economy that thrives on visibility and its resulting opportunities (Banet-Weiser 2018), social media platforms hold the tools to distribute that visibility, and these tools are unequally available to users performing different actions, to different bodies from different racial and socio-economic backgrounds, and to those engaging in different types of labour.

World risk society and Instagram

This paper applies Beck’s (1992, 2006) and Giddens’ (1998) world risk society theory to Instagram moderation. A sense of security is a crucial part of the social contract, a trade-off between individual liberty and security (Barbara Hudson 2003). Risks are undesired, threatening events (ibid) that become apparent through what Beck calls “techniques of visualization,” like the mass media (Beck 2006, 332). Modern society has become so preoccupied with risks that, for Beck, it has become a risk society “debating, preventing and managing risks that it itself has produced” (ibid). Beck’s world risk society “addresses the increasing realization of the irrepressible ubiquity of radical uncertainty in the modern world,” rendering institutions’ inefficiency in calculating and preventing those risks apparent to the public (ibid, 338). This perception of risks is reconfiguring contemporary institutions and consciousness, so much so that our understanding of modernity focuses more on its risks than on its benefits (Hudson 2003). The risk narrative is ironic for Beck, as society’s main institutions scramble to “attempt to anticipate what cannot be anticipated” (Beck 2006, 329), anticipating the wrong risks without preventing disasters arising from risks that could not be calculated.

Risk is “a socially constructed phenomenon” for Beck, and it creates inequalities because “some people have a greater capacity to define risks than others” (ibid, 333). Powerful actors in society, such as the insurance industry, can define risks and, while they cannot prevent them, they can spread their cost, alleviating uncertainty (Beck 2006; Merryn Ekberg 2007; Giddens 1998). Heightened perceptions of risk have therefore benefited private insurance firms, marginalising society’s undesirables such as the poor, who are excluded from these schemes (Hudson 2003). Indeed, perceptions of risk cannot be separated from risk’s politics, which for Ekberg are connected with “liberty, equality, justice, rights and democracy” and with groups interested in risk prevention policies (Ekberg 2007, 357).

Social media platforms are among the most representative elements of the risks of late modernity, providing a new space with risks and opportunities that make world risk society theory a valid framework for understanding moderation.

While social media movements such as the Arab Spring, #MeToo, #BlackLivesMatter and #OccupyWallStreet (Luke Sloan and Annabel Quan-Haase 2017) initially positioned these platforms as “an opportunity for marginalised people to represent themselves” (Sonja Vivienne 2016, 10), throughout the second half of the 2010s, increasing concerns about misinformation and conspiracy theories, hate speech, online abuse and harassment brought them under society’s scrutiny (Jamie Bartlett 2018; Emma Jane 2014).

Social media platforms are owned by corporate entities in charge of a space that is being used for expression and debate (Bartlett 2018; Kaye 2019). Similarly to the institutions discussed by Beck (1992, 2006) and Hudson (2003), they have responded to risks by introducing and changing their community guidelines, governance mechanisms enforced through algorithmic moderation (Kaye 2019). Additionally, social media present regulatory issues similar to those of what Beck (2006) calls the new risks, which do not respect borders or nation-states, partly because of the fast, exponential growth the platforms experienced and partly due to breaches and abuse happening in different jurisdictions (Kaye 2019).

However, since to protect citizens from risks “states increasingly limit civil rights and liberties” (Beck 2006, 330), the governance methods used by social media platforms, which respond to risk in similar ways, raise concerns regarding fair moderation, protection of the vulnerable, and monopolies where a handful of platforms apply the same moderation techniques to swathes of online content (Kaye 2019; Gillespie 2010; Paasonen et al. 2019; José van Dijck, David Nieborg and Thomas Poell 2019).

Critics of the risk society approach argue that it focuses too heavily on industrialisation or on modern risks, downplaying other risks and politicising decision-making (Lucas Bergkamp 2016). Others state that Beck’s rejection of class in favour of risk distribution is at odds with the inequalities he discussed (Dean Curran 2013). However, precisely because social media moderation places such a strong emphasis on safety (Zuckerberg 2018), world risk society theory can help us understand content governance on Instagram. In particular, two key aspects of world risk society theory are appropriate to conceptualise controversies in Instagram moderation: that preventive measures restrict civil liberties, and that they create power imbalances favouring those in charge of identifying risks, who choose specific undesirable elements to be restricted and prevented (Beck 1992, 2006; Giddens 1998).

Risk and moderation of nudity

Social media moderation is driven by in-platform laws known as community guidelines: every platform has them—from Facebook and Instagram’s Community Standards to YouTube’s “common sense” regulation—but most platforms have struggled to distinguish between troubling content and content that is in the public interest or covered by freedom of expression rights (Gillespie 2010; Kaye 2019). Aside from guiding how their platforms should be presented to audiences, advertisers and governments, Facebook/Instagram’s Community Guidelines ask users to comply with local law (Kaye 2019).

Community guidelines are enforced through human moderation and algorithms. Human moderators, contractors who make split-second decisions over content they might not be familiar with in order to earn their commission, seem to deal primarily with appeals (S. Suri and M. L. Gray 2019). For Suri and Gray (ibid), this moderation system is creating an increasingly precarious workforce, and previous research has recommended that social media platforms should increase their reliance on human moderation for fairer content governance and better working conditions (Binns 2019). Indeed, the algorithms used to enforce Community Standards have been deemed opaque, unclear and inconsistent (Bartlett 2018; Kaye 2019; Paasonen et al. 2019). So far, social media companies have not provided insights into the making of their algorithms, avoiding any stakeholder participation in deciding what content remains online (Sangeet Kumar 2019). Because of this, for Kaye, algorithmically enforced community standards “encourage censorship, hate speech, data mining, disinformation and propaganda” (Kaye 2019, 12).

In Instagram’s Community Guidelines section, “safety” is used in connection with both abuse and nudity: “We want Instagram to continue to be an authentic and safe place for inspiration and expression. […] Post only your own photos and videos and always follow the law. Respect everyone on Instagram, don’t spam people or post nudity” (Instagram n.d.b). The guidelines add:

We know that there are times when people might want to share nude images that are artistic or creative in nature, but for a variety of reasons, we don’t allow nudity on Instagram. This includes photos, videos, and some digitally-created content that show sexual intercourse, genitals, and close-ups of fully-nude buttocks. It also includes some photos of female nipples, but photos of post-mastectomy scarring and women actively breastfeeding are allowed. Nudity in photos of paintings and sculptures is OK, too (ibid).

If for Paasonen et al. “sexuality is largely comprised of men looking at naked women,” then Instagram’s guidelines’ focus on female and not male nipples should not surprise us, since “the female body remains the marker of sex and object of sexual desire, as well as symbol of obscenity,” while male bodies are allowed broader self-expression (Paasonen et al. 2019, 49). Social media moderation may therefore be replicating the male gaze (Laura Mulvey 1989), where the active male codes community guidelines to moderate the passive female as an erotic spectacle, a sexual object to be consumed but contained for viewers’ safety—a gaze that may stem from the largely male workforce of Silicon Valley (Charlotte Jee 2021).

The securitisation of society to prevent risks often materialises through the preventive exclusion of undesirable elements (Beck 2006; Daniel Moeckli 2016), which on Instagram translates to the exclusion of nudity—particularly women’s bodies. Indeed, while hate speech and harassment affecting women have now become a fixture of online spaces, Instagram conflates nudity, sex and sexuality with unsafe content (Adrienne Massanari 2017, 2018; Paasonen et al. 2019). Harassment has emotional, psychological and economic costs for victims, driving women and minorities to stop contributing to online spaces, yet it is nudity, sex and sexuality that bear the brunt of social media’s censorship (Paasonen et al. 2019).

Instagram’s regulation of nudity and sexuality shows a typically North American mentality (Kaye 2019; Paasonen et al. 2019), with platforms born and based in the United States affecting the visibility of nudity and sexuality on apps used around the world (Paasonen et al. 2019). Paasonen et al. define these culturally specific values as “puritan”, characterised by “wariness, unease, and distaste towards sexual desires and acts deemed unclean and involving both the risk of punishment and the imperative for control”, so much so that sexuality must be feared, governed and avoided (ibid, 169). This promotes the view of sex and sexuality as risky and harmful, using archaic conceptions of decency that undermine the centrality of sex in people’s lives. The authors also point out the absurdity of categorising nudity and sexual activity in the same area as graphic violence, which likens female nipples to animal cruelty, dismemberment or cannibalism and stigmatises sexual activity and those working in the sex industry (ibid).

This approach has become evident following the approval of FOSTA/SESTA by the US Congress, one of the main drivers of online nudity censorship (Paasonen et al. 2019; Tiidenberg and van der Nagel 2020). After FOSTA/SESTA, an exception to Section 230 of the Communications Decency Act, which ruled that platforms were not liable for what was posted on them, social media companies have been deleting and censoring an increasing number of posts showing skin for fear of being seen as promoting or facilitating prostitution (H.R. 1865—Allow States and Victims to Fight Online Sex Trafficking Act of 2017). Following the new law, platforms like Instagram banned sex-work-related content, even when it is not sexually explicit, conflating sex work with trafficking, and female sexuality with sex work, in an attempt to prevent the risk of appearing to facilitate prostitution (Are 2019b; Paasonen et al. 2019, 62).

In July 2019, recreational pole dancers, who, like strippers, use dance poles in performances and exercise classes, also became affected by FOSTA/SESTA: since pole dance’s aesthetic originates from and replicates that of stripping, and since the sport requires a degree of nudity to be practised, the two practices may be too similar for automated moderation to separate (Are 2019b, 2019c). Censorship of sex work trickling down to more mainstream users posting nudity, like recreational pole dancers (Are 2019b, 2019c; Cook 2020; Taylor 2019 etc.), shows that, just as offline society has turned to technological means of risk control (Beck 1992, 2006; Giddens 1998; Moeckli 2016), Instagram has chosen to fight this risk—and therefore nudity—through technology.

Yet Instagram’s moderation should not be separated from discussions about offline privilege and inequalities. Debbie Ging and Eugenia Siapera (2018) state that the technology sector’s toxic and unequal working culture can affect algorithms, since women’s exclusion from IT offices can influence social media moderation processes. Bartlett (2018) argues that algorithms perpetuate offline privilege. Lastly, the censorship of nudity experienced by many users is in stark contrast with hyper-sexualised social media posts by celebrities, which are often promoted by platforms (Carolina Are and Susanna Paasonen 2021).

Precisely because of offline systemic privilege, users are left to their own devices online: as a result, a variety of sex workers have had their accounts deleted, while in August 2019, Instagram had to apologise to pole dancers, Carnival dancers and other communities for shadowbanning their posts (Are 2019d; Paasonen et al. 2019; Taylor 2019). The platform denied wanting to target specific communities, arguing that content and hashtags were moderated “in error” (Are 2019d).

By applying world risk society theory to shadowbans of nudity, this article develops knowledge on the shadowban and the techniques applied by Instagram when moderating pole dancing content, highlighting the effects of the platform’s unequal risk control.

Methods

To answer the question “Which censorship techniques do pole dancers posting content featuring nudity on Instagram experience?”, this research takes a qualitative approach through autoethnography, seeking “to describe and systematically analyze personal experience in order to understand cultural experience” (Carolyn Ellis, Tony E. Adams and Arthur P. Bochner 2011, 273). Using tenets of autobiography and ethnography, autoethnography is an “interpretation and creation of knowledge rooted in the native context” (Rahul Mitra 2010, 15).

Towards this purpose, I started noting down my experiences of running the pole dance and blogging Instagram account @bloggeronpole (currently at 15,700 followers) from late 2018, when I became more interested in reaching new audiences with my dancing and when rumours of Instagram censorship started spreading in pole dance networks. The account has followed my progress as, initially, a recreational pole dancer, having taken up the sport in 2016 while away from most of my loved ones during a Master’s degree in Australia. I did not sign up for a class because it was sold to me under a specific aesthetic, but because I was struggling to exercise alone due to anxiety and depression caused by exiting an abusive relationship. Pole dancing looked challenging and required focus, and while I joined it uncritically, my growing interest in pole provided opportunities for self-reflection about nudity and sexuality through blogging and posting on Instagram. Starting from very blurry videos of me wearing traditional, modest sportswear and holding onto the pole for dear life, the pole dancing aesthetic of my Instagram gradually developed: thanks to further training and teaching qualifications, I started posting videos showcasing more polished, musical and improved dancing; I used better lighting and shooting equipment, as well as clearer backgrounds to shift the focus from what was behind me to my movement; finally, I started dancing in skimpier outfits, due to the need for more advanced grip but also as a homage to a sport created by strippers. This increasingly taxing but rewarding digital labour, consisting of both Instagram posts and articles about pole dancing, resonated with my blog’s existing audience, who followed me as I performed at international competitions and events and as I became a pole dance instructor in 2019.

Consistent with Banet-Weiser’s (2018) economy of visibility, through pole dancing I have made myself visible in ways that require physical effort and digital labour spread across years spent building a platform on social media. As a woman and as an abuse survivor performing actions that deviate from offline, mainstream notions of acceptability, I had not thought this visibility was available to me before finding my network of pole dancers on social media.

Due to Instagram’s censorship, I feared losing the biggest platform on which I shared my writing and dancing journey. Instagram is both a work resource and a tool for my own expression, a situation in which many fellow pole dancers and sex workers found themselves and with which they identified. Therefore, this autoethnography is also informed by questions answered by Instagram’s press team and published on bloggeronpole.com, my fitness, lifestyle and activism blog, launched in December 2017. It currently averages 10,000 readers per month, with a Domain Authority of 40 (Moz n.d.; Siteworthtraffic n.d.). Because of this blog, and because of the audience gained as a blogger and as a pole dancer, I started blending my research interests with my pole dance persona, providing information about and campaigning against social media censorship.

Finally, this research utilises posts shared by @EveryBODYVisible, the 17,100-follower account of the #EveryBODYVisible anti-censorship campaign I launched with other pole dancers in early October 2019.

To answer the research question, this paper initially takes a narrative approach, showcasing pre- and post-shadowban experiences. It then presents censorship techniques and their repercussions through the data gathered, applying this paper’s theoretical framework to the shadowban.

These experiences are relevant to this paper for a variety of reasons. Firstly, my PhD research explored online abuse and harassment, with a focus on legislation tackling abusive online content. Secondly, promoting my classes and performances through Instagram as a pole dance performer and instructor meant that a variety of my posts were censored or shadowbanned. Furthermore, I interviewed Instagram’s press team for my blog multiple times, asking for clarifications on their moderation and hearing outright denial of the shadowban until, in July 2019, a petition signed by nearly 20,000 pole dancers forced them to officially apologise to us through bloggeronpole.com (Are 2019d; Rachel Osborne 2019). Additionally, as one of the founding members of #EveryBODYVisible, I have been part of informing the account’s audience and experimenting with different techniques to boost their Instagram visibility. Therefore, this study blends research experience around moderation with the experience of posting on Instagram as a censored user, creating and joining a successful global campaign against censorship, and the interviews with Instagram’s press team, published in blog form. Precisely because of these experiences and because of the developing status of social media moderation of bodies, autoethnography is an appropriate method for understanding how Instagram moderates specific content.

This research presents a set of limitations. Critics of autoethnography claim that examining one’s own experience results in researchers being overly immersed in—and not impartial about—their own research (Mitra 2010). Ethnographic studies also risk being subject to the researcher’s understanding of a subject, their background and opinion (ibid). This is evident in the experiences analysed in this paper, which reflect the moderation of a white, cis-gender woman’s body, meaning that moderation of bodies from a different background may result in different observations. However, the fact that even a white, cis-gender woman pole dancing inside a dance studio can face considerable social media censorship raises the important question of the effects censorship can have on users from less privileged backgrounds, and is therefore worthy of investigation.

Those fearing I may be biased as one of the founding members of #EveryBODYVisible should examine the experiences shown in this paper, which highlight that, whether one interacts with Instagram as a user, a journalist or a researcher, the platform does not explain how its moderation works. Examining this from the perspective of a user with a specific experience therefore becomes valid and applicable to a variety of different user populations. What is more, @everybodyvisible is at present run by volunteers on a not-for-profit basis. Therefore, like #EveryBODYVisible, this article raises awareness about and examines an issue rather than profiting from it.

The availability of social media posts demands new ways to protect participants’ rights to privacy and consent (Association of Internet Researchers Citation2019). In this case, posts from @everybodyvisible and interviews with Instagram’s press team were already posted with the informed consent of the people involved: every post shared on @everybodyvisible was preceded by users clearing their stories for media and sharing purposes, while interviews with Instagram’s press team were already authorised to appear on my blog. Furthermore, this analysis is largely centred on the researcher’s own experience of censorship and therefore mainly on my own account—and while researchers who share their own experiences publicly may find themselves at risk of online abuse (Massanari Citation2018), this risk already manifests itself through my growing social media following, which I manage by establishing boundaries in interactions with my audience, blocking or muting accounts I do not wish to engage with and limiting my time on social media. I have also so far managed the sharing of personal experience through research by blending my pole dance activist persona with my researcher one, having been offered support by my institution’s communication department in case issues were to arise.

With the above issues in mind and given the unique circumstances of this study, the benefits of analysing the shadowban from a user perspective outweigh the drawbacks. While this research may appear to be a set of conjectures to the reader, this is precisely my intent: for many users, knowing why and how Instagram is restricting their content—and why specific types of content are restricted as opposed to others—is still a matter of trial and error, a conjecture, due to the opacity of social media moderation and to platforms’ refusal to let users, researchers or international media outlets in on their internal guidelines (Bartlett 2018; Kaye 2019; Kumar 2019). For all the above reasons, this study takes a step towards understanding Instagram’s shadowban, to push for more platform transparency and to advocate for clearer, fairer and more equal moderation of social media content.

Research timeline

This section showcases my experience with Instagram moderation pre- and post-shadowban, providing examples of pre- and post-shadowban engagement and of the events that led to a wider awareness of Instagram’s censorship.

I converted my Instagram account from a personal one into a business account, changing the name to @bloggeronpole in the summer of 2017, before relaunching the homonymous blog in December 2017. Content on the @bloggeronpole profile is a blend of personal, blogging, work and pole dancing pictures, showing increasing amounts of skin due both to increased self-confidence and to the need for friction between my skin and the pole for grip (Carolina Are 2018).

I started teaching pole dance and twerk classes as a substitute instructor in December 2018, promoting them via Instagram through increasing digital labour, consisting of the creation of posts including pole trick poses, choreographies, freestyles and performances, in a living example of Banet-Weiser’s (2018) economy of visibility. Around March 2019, I began noticing drops in engagement and deletion of posts due to unspecified violations of community guidelines, mirroring other pole dancers’ experiences. At the time, when I emailed them, the platform’s press team were keen to deny the existence of the shadowban (Are 2019a), despite increasing media reports of new censorship guidelines (Constine 2019).

Throughout the spring and early summer of 2019, engagement on @bloggeronpole continued to drop, in inverse proportion to my growing number of followers (gained through media interviews and at pole competitions), suggesting that the account was shadowbanned on and off and that potential audiences were not seeing my content.

The situation shifted in July 2019, when a variety of queer and sex toy brands became unable to advertise on Instagram and YouTube (Are Citation2019b). At the same time, along with #femalefitness (but not #malefitness), the majority of pole dance hashtags, which are essential for finding dance inspiration, were hidden from Instagram's Explore page for apparent community guidelines violations (Are Citation2019b; Kerry Justich Citation2019). Posts under other pole dance-related hashtags did not appear in those hashtags' "recent" tab, showing that Instagram was hiding posts from its Explore page without even warning users.

Following an uproar within the pole dancing community, a Change.org petition asking Instagram to stop censoring pole dance was started and signed by nearly 20,000 people (Osborne Citation2019). During this stage, I spoke with Instagram's press team two weeks in a row, receiving further denials that the platform was targeting specific communities (Are Citation2019b, Citation2019c). However, the platform did admit to censoring specific hashtags, without ever using the term shadowban (ibid).

Following these interviews, I was invited into a wider coalition of world-famous pole dancers, including @michelleshimmy (an Australian dancer and studio owner with 186,000 followers) and @upartists and @poledancenation (pole dance appreciation accounts and networks with 185,000 and 222,000 followers respectively at the time of writing), to put new questions to Instagram about its moderation. After each coalition member asked their networks to share their moderation concerns, I emailed these to Instagram's press team, receiving an apology attributed to an anonymous Facebook spokesperson, which I documented in my blog post:

A number of hashtags, including #poledancenation and #polemaniabr, were blocked in error and have now been restored. We apologise for the mistake. Over a billion people use Instagram every month, and operating at that size means mistakes are made – it is never our intention to silence members of our community (Are Citation2019d).

The apology was notable because my communication with Instagram, which started in March 2019, had until then only featured quotes "on background" (i.e., to be paraphrased) and no admission of wrongdoing. In July, by contrast, Instagram apologised directly. While the reasons behind this direct apology fall outside this research's scope, it is worth noting that in 2013 a petition backed by 20,000 people, arising from a controversy over Instagram's deletion of pictures showing mastectomy scars, also resulted in an apology from the platform (Paasonen et al. Citation2019). This raises questions about how high-profile a complaint must be for Instagram to apologise.

Following the apology and coverage in international news outlets such as CTV News Canada and Yahoo! Lifestyle, the accounts involved in the petition and I received an outpouring of stories from people across the globe, beyond pole dancing or sex work, suggesting that Instagram's censorship also affected sex educators, yogis, athletes, performers, people of colour, the LGBTQIA+ community and more (Justich Citation2019; Jeremiah Rodriguez Citation2019). This led the group to commit to launching a more inclusive anti-censorship campaign.

In October 2019, the coalition, renamed #EveryBODYVisible, launched the @everybodyvisible Instagram account, promoted through online word-of-mouth via coalition members' networks. On 29 October 2019, @everybodyvisible launched its #EveryBODYVisible campaign, encouraging censored people around the world to share either its logo or a censored picture of themselves, tagging the chiefs of Instagram and Facebook, such as Instagram CEO Adam Mosseri and Facebook's Chief Operating Officer Sheryl Sandberg, with the message:

WE WANT: Clear guidelines, equally-applied ‘community standards’, right of appeal, and an urgent review into algorithmic bias disproportionately affecting the visibility of women, femme-presenting people, LGBTQIA folx, People of Color, sex workers, dancers, athletes, fitness fans, artists, photographers & body-positive Instagram users (@everybodyvisible 2019).

The campaign was shared by photographer Spencer Tunick and by celebrity burlesque performer Dita Von Teese. As a result, during launch week the tagged sections of Instagram chiefs' profiles mainly featured pictures of bottoms, nude women and diverse bodies, prompting Mosseri to acknowledge in an Instagram story that @everybodyvisible's demands were reasonable.

Following coverage by the BBC, The Huffington Post and other news outlets, @everybodyvisible reached over 17,000 followers and continued sharing stories of censorship from deleted or shadowbanned users (Cook Citation2019; Thomas Fabbri Citation2019). The account also began experimenting with techniques to "trick" the algorithm, such as suggesting that users change their gender to male (@everybodyvisible 2019; Cook Citation2019). Although I did not feel comfortable "impersonating" a different gender for fear of Instagram retaliating against my account, some users did follow the tip and received boosts in engagement similar to pre-shadowban levels after doing so (Cook Citation2019).

In February 2020, Instagram CEO Adam Mosseri denied that the shadowban existed in an Instagram story Q&A. He was subsequently accused of lying by censored users and by The Huffington Post (Cook Citation2020). In June 2020, however, Mosseri published a blog post admitting the shadowban of users such as people of colour, activists, athletes and adult performers, burying the admission in a more PR-friendly pledge to promote Black creators following the #BlackLivesMatter protests (Carolina Are Citation2020).

Instagram censorship techniques

From the early days of the shadowban rumours, I experienced a steady decrease in engagement, inversely proportional to my account's growth in followers. Reasoning in mere numbers, it would be fair to assume that, once an account gains new followers, more people would see its posts and engage with its content. However, the @bloggeronpole account received less engagement throughout 2019 and 2020 than in 2018 (pre-shadowban), even though its following doubled. For example, a choreography created for a class in December 2018, when the account averaged 3,000 Instagram followers and I had been training for only two years, received significantly more engagement than a more professional (and more clothed) choreography posted in 2020, when the account averaged 6,000 followers (see the accompanying figure, which depicts video stills from the choreographies).

While audience preferences are outside this research's scope, it would be reasonable to expect that, after receiving instructor training and gaining more dancing experience in 2019 and 2020, my engagement would have increased without the shadowban. However, this was not the case.

User conjectures like the ones above are a natural result of Instagram's lack of clarity about how accounts and content perform on the platform. Users are left to guess whether their content went unseen because of the shadowban or was merely not interesting enough to warrant engagement, as the platform makes no attempt to clarify any aspect of its moderation. Given this limbo, it would be fair to assume that, for Instagram, allowing accounts to keep posting while restricting how far their content travels is preferable both to the risks that come with fully deleting a borderline account and to the risk of that content reaching a wider public. With the shadowban, users posting nudity are left helpless, as the accounts they use to promote their work, reach new audiences and express themselves are obscured from Instagram's main homepage without any notification (Are Citation2019a, Citation2019b, Citation2019c, Citation2019d; Cook Citation2019). While the shadowban is not as crippling as outright account deletion, the sense of powerlessness that comes from posting content into a void, particularly after the digital labour of crafting posts in the hope of reaching old and new audiences, reveals a power imbalance in which platforms create norms of acceptability that users are not privy to. Users, in short, do not seem to have a right to know what happens to their content.

One specific type of censorship I experienced during my analysis was hashtag censorship. While Instagram did not use the term shadowban to describe it, the platform admitted to hiding certain hashtags and content:

Note that they never used the S word once with me, or the word censorship, but said they will temporarily remove the ‘Most Recent’ section and only display ‘Top Posts’ of the hashtag – which is what we call a shadowban. […] Instagram told me they will also no longer show hashtags related to a shadowbanned hashtag. So what most likely happened then is that Instagram censored one ‘#pdsomethingsomething’ hashtag, meaning that all the other #pd hashtags we use to look up moves have been affected by censorship as a result1 (Are Citation2019b).

This technique seems to be at odds with the function of hashtags, which is to form communities around themes, topics or events (Paasonen et al. Citation2019). Therefore, in regulating and restricting hashtags to pre-empt the risks surrounding those that may appear problematic, platforms undermine the community-forming function that hashtags serve on their own services. In a game of whack-a-mole, a variety of accounts engaging with @everybodyvisible lamented that hashtags linking to abusive or offensive content were not censored, while the accounts they engaged with were. This is a further example of how Instagram applies risky labels to certain hashtags arbitrarily but cannot predict all the risks embedded within the platform.

A further censorship technique was Instagram's algorithm flagging a post in which I was wearing nude-coloured underwear and automatically deleting it for violating Community Guidelines. With this type of censorship, users receive a notification when a post is removed or an account is disabled (@everybodyvisible 2019), and are sometimes even able to appeal against the decision (ibid). Once again, users involved with @everybodyvisible, and particularly sex workers, expressed frustration about the lack of clarity behind account or post deletion and about the difficulty of getting deleted accounts back, highlighting a power imbalance between "othered" accounts and Instagram, which constructs the risks around them without directly explaining violations.

The Shadowban Cycle

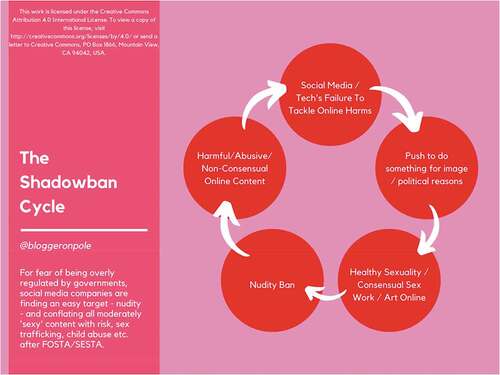

This paper will now situate the shadowban within a world risk society framework in what it conceptualises as The Shadowban Cycle.

Beck (Citation1992, Citation2006) argued that, while scrambling to prevent risks, institutions and businesses restrict civil liberties without being able to prevent all risks from manifesting. This aspect of Beck's world risk society theory is currently manifesting itself through Instagram moderation. As argued by Paasonen et al. (Citation2019) and Kaye (Citation2019), and as stated by Zuckerberg himself (Citation2018), Instagram and Facebook's algorithms have become more proficient at tackling and removing nudity. It would then be fair to say that, at a time when social media platforms are increasingly under pressure from users and governments to fix issues such as online abuse and hate speech (Kaye Citation2019), they have found an easy target in women's bodies and content featuring nudity, through a puritanical moderation approach characterised by the male gaze (Mulvey Citation1989; Paasonen et al. Citation2019). This paper calls this phenomenon "The Shadowban Cycle" (Figure 2).

Figure 2. Shadowban Cycle graphic by Are (Citation2020).

By The Shadowban Cycle, this paper means the following. As harmful online content such as hate speech and online harassment began to proliferate, social networking platforms found themselves playing a game of whack-a-mole to take it down while also coping with the exponential growth of their audiences (Kaye Citation2019). They faced political and reputational pressure to be seen to be tackling these issues. Therefore, particularly after FOSTA/SESTA made them liable for sexual content posted on their platforms, social media companies identified such content as an easy target, censoring different manifestations of nudity instead of approaching the thornier issue of regulating speech (Are Citation2019c, Citation2019d, Citation2019e). This has resulted in censorship techniques such as the shadowban and account deletion, in the "othering" of a variety of user groups, and in the replication of puritan, conservative values that conflate trafficking with sex, sex with a lack of safety, and a lack of safety with women's bodies.

Conclusion

This paper examined and conceptualised Instagram's shadowban through an autoethnography of the researcher's own lived, blogged experiences. As such, it has limitations, and while some of these have already been discussed above, it is worth mentioning further potential limits to its findings.

Firstly, moderation of hashtags and posts is in constant flux, with certain content being hidden and restored within hours, days or weeks. Therefore, it is not always possible to know whether content has been removed or hidden because of Instagram's shadowban. Additionally, although community guidelines and Instagram's responses have been used to understand the platform's moderation, the lack of access to Instagram's internal policies or moderation teams means that users, and researchers, are left to conjecture about the techniques the platform implements.

While this research focused on the experience of a particular Instagram community, further studies may wish to examine censorship on other platforms. Future research might also interview different user communities, such as male athletes or artists, about their experience of moderation and/or censorship. Additional studies might also observe Instagram moderation from the inside, studying the work of human moderators.

The censorship techniques showcased throughout this paper show that women's bodies are a target on Instagram, and that users are often left to reverse-engineer its algorithm in the hope of securing some visibility for their posts. Particularly with regard to the shadowban, this paper's findings show an overall lack of clarity about how content performs on Instagram, and a sense of discrimination felt by women, artists, performers, sex workers, athletes and others when it comes to the platform's content moderation. This lack of clarity and overall sense of discrimination raise questions about platforms' role in policing the visibility of different bodies, professions, backgrounds and actions, and in creating norms of acceptability that have a tangible effect on users' offline lives and livelihoods, as well as on general perceptions of what should and should not be seen. Indeed, in a social media economy where visibility is required to thrive (Banet-Weiser Citation2018), depriving users of the option to be visible feels like a denial of opportunity. As for the researcher's own experience as a pole dancer, particularly during the global Covid-19 pandemic, which has seen classes move online after many dance studios closed for public health reasons, the lack of online visibility brings the stress of it translating into lost earnings, and the frustration of having built a platform through years of work only for its content not to be seen.

The shadowban and Instagram's policing of nudity and sexuality, particularly in connection with women's bodies, are indeed both a feminist and a freedom of expression issue: women's bodies and their performance of specific activities can be both a form of expression and a source of income. Yet that expression and labour are viewed as risky, borderline and worth hiding in a way that, as the examples of hashtag censorship cited above suggest, men's bodies and actions are not.

Leaving platforms in charge of the visibility of women, and particularly of women who do not conform to platforms' notions of acceptability, is a form of silencing. It aligns with mainstream depictions of women, nudity and sexuality that conform only to specific gazes, without making space for users' own gaze and agency (Cohen et al. Citation2019; Mulvey Citation1989; Sparks and Lang Citation2015). This can have a chilling effect on women and other users posting nudity and sexuality, shrinking online spaces for content that does not align with platforms' norms.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Carolina Are

Dr Carolina Are is a researcher focusing on moderation, online abuse, conspiracy theories and online subcultures. She is a blogger, writer, pole dance instructor, performer and activist with six years' experience in PR and social media management. Following her campaigning against Instagram censorship, her research has also focused on algorithmic bias against women. She currently teaches in the fields of criminology, technology and journalism.

References

- 115th Congress. 2017–2018. “H.R.1865 - Allow States and Victims to Fight Online Sex Trafficking Act of 2017.” Congress.gov. https://www.congress.gov/bill/115th-congress/house-bill/1865

- Are, Carolina. 2018. “Pole Dance: Your Questions Answered.” Blogger On Pole. https://bloggeronpole.com/2018/01/pole-dance-your-questions-answered/

- Are, Carolina. 2019a. “Why The Instagram Algorithm Is Banning Your Posts.” Blogger On Pole. https://bloggeronpole.com/2019/03/why-instagram-is-banning-your-posts/

- Are, Carolina. 2019b. “What Instagram’s Pole Dance Shadowban Means For Social Media.” Blogger On Pole. https://bloggeronpole.com/2019/07/what-instagram-pole-dance-shadowban-means-for-social-media/

- Are, Carolina. 2019c. “Instagram Denies Censorship of Pole Dancers and Sex Workers.” Blogger On Pole. https://bloggeronpole.com/2019/07/instagram-denies-censorship-of-pole-dancers-and-sex-workers/

- Are, Carolina. 2019d. “Instagram Apologises to Pole Dancers about the Shadowban.” Blogger On Pole. https://bloggeronpole.com/2019/07/instagram-apologises-to-pole-dancers-about-the-shadowban/

- Are, Carolina. 2019e. “Instagram Censors EveryBODYVisible Campaign against Instagram Censorship – LOL.” Blogger On Pole. https://bloggeronpole.com/2019/10/everybodyvisible/

- Are, Carolina. 2020. “Instagram Quietly Admitted Algorithm Bias … But How Will They Fight It?” Blogger On Pole. https://bloggeronpole.com/2020/06/instagram-quietly-admitted-algorithm-bias-but-how-will-it-fight-it/

- Are, Carolina, and Susanna Paasonen. 2021. “Sex in the Shadows of Celebrity.” Porn Studies Forum. [Forthcoming].

- Association of Internet Researchers. 2019. “Internet Research: Ethical Guidelines 3.0.” Association of Internet Researchers. https://aoir.org/reports/ethics3.pdf

- Banet-Weiser, Sarah. 2018. Empowered: Popular Feminism and Popular Misogyny. Durham: Duke University Press.

- Bartlett, Jamie. 2018. The People Vs Tech: How the Internet Is Killing Democracy (And How We Save It). London: Ebury Digital.

- Beck, Ulrich. 1992. Risk Society: Towards a New Modernity. Translated by Mark Ritter. London: Sage.

- Beck, Ulrich. 2006. “Living in the World Risk Society.” Economy and Society 35 (3): 329–345. doi:10.1080/03085140600844902.

- Bergkamp, Lucas. 2016. “The Concept of Risk Society as a Model for Risk Regulation – Its Hidden and Not so Hidden Ambitions, Side Effects, and Risks.” Journal of Risk Research 20 (10): 1275–1291. doi:10.1080/13669877.2016.1153500.

- Binns, Reuben. 2019. “Human Judgement in Algorithmic Loops: Individual Justice and Automated Decision-Making.” https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3452030

- Cohen, Rachel, Lauren Irwin, Toby Newton-John, and Amy Slater. 2019. “#bodypositivity: A Content Analysis of Body Positive Accounts on Instagram.” Body Image 29: 45–57. doi:10.1016/j.bodyim.2019.02.007.

- Cole, Samantha. 2018. “Where Did the Concept of ‘Shadow Banning’ Come From?” Vice. https://www.vice.com/en/article/a3q744/where-did-shadow-banning-come-from-trump-republicans-shadowbanned

- Constine, John. 2019. “Instagram Now Demotes Vaguely ‘Inappropriate’ Content.” TechCrunch. https://techcrunch.com/2019/04/10/instagram-borderline/

- Cook, Jesselyn. 2019. “Women Are Pretending To Be Men On Instagram To Avoid Sexist Censorship.” The Huffington Post. https://www.huffingtonpost.co.uk/entry/women-are-pretending-to-be-men-on-instagram-to-avoid-sexist-censorship_n_5dd30f2be4b0263fbc99421e?ri18n=true

- Cook, Jesselyn. 2020. “Instagram’s CEO Says Shadow Banning ‘Is Not A Thing.’ That’s Not True.” The Huffington Post. https://www.huffingtonpost.co.uk/entry/instagram-shadow-banning-is-real_n_5e555175c5b63b9c9ce434b0?ri18n

- Crawford, Kate, and Tarleton Gillespie. 2016. “What Is a Flag For? Social Media Reporting Tools and the Vocabulary of Complaint.” New Media & Society 18 (3): 410–428. doi:10.1177/1461444814543163.

- Curran, Dean. 2013. “Risk Society and the Distribution of Bads: Theorizing Class in the Risk Society.” The British Journal of Sociology 64 (1): 44–62. doi:10.1111/1468-4446.12004.

- Ekberg, Merryn. 2007. “The Parameters of the Risk Society: A Review and Exploration.” Current Sociology 55 (3): 343–366. doi:10.1177/0011392107076080.

- Ellis, Carolyn, Tony E. Adams, and Arthur P. Bochner. 2011. “Autoethnography: An Overview.” Historical Social Research/Historische Sozialforschung 36 (4 (138)): 273–290.

- Everybodyvisible. n.d. “Home Page.” https://everybodyvisible.com/

- Fabbri, Thomas. 2019. “Why Is Instagram Deleting the Accounts of Hundreds of Porn Stars?” BBC Trending. https://www.bbc.co.uk/news/blogs-trending-50222380

- Gerrard, Ysabel, and Helen Thornham. 2020. “Content Moderation: Social Media’s Sexist Assemblages.” New Media & Society 22 (7): 1266–1286. doi:10.1177/1461444820912540.

- Giddens, Anthony. 1998. “Risk Society: The Context of British Politics.” In The Politics of Risk Society Order, edited by J. Franklin, 23–34. Cambridge: Polity Press.

- Gilbert, David. 2020. “Leaked Documents Show Facebook’s Absurd ‘Breast Squeezing Policy’.” Vice. https://www.vice.com/en/article/7k9xnb/leaked-documents-show-facebooks-absurd-breast-squeezing-policy

- Gillespie, Tarleton. 2010. “The Politics of Platforms.” New Media & Society 12 (3): 347–364. doi:10.1177/1461444809342738.

- Gillespie, Tarleton. 2018. Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions that Shape Social Media. New Haven, CT: Yale University Press.

- Ging, Debbie, and Eugenia Siapera. 2018. “Special Issue on Online Misogyny.” Feminist Media Studies 18 (4): 515–524. doi:10.1080/14680777.2018.1447345.

- Hudson, Barbara. 2003. Justice in the Risk Society: Challenging and Re-affirming Justice in Late Modernity. London: SAGE.

- Instagram. n.d.a. instagram.com/bloggeronpole

- Instagram. n.d.b. instagram.com/everybodyvisible

- Instagram. n.d.c. https://help.instagram.com/477434105621119

- Jane, Emma. 2014. “‘Back to the Kitchen, Cunt’: Speaking the Unspeakable about Online Misogyny.” Continuum, Journal of Media & Cultural Studies 28 (4): 558–570. doi:10.1080/10304312.2014.924479.

- Jee, Charlotte. 2021. “A Feminist Internet Would Be Better for Everyone.” MIT Technology Review. https://www.technologyreview.com/2021/04/01/1020478/feminist-internet-culture-activist-harassment-herd-signal/

- Joslin, Trinady. 2020. “Black Creators Protest TikTok’s Algorithm with #imblackmovement.” Daily Dot. https://www.dailydot.com/irl/tiktok-protest-imblackmovement/

- Justich, Kerry. 2019. “Pole Dancer Says Instagram Is Censoring ‘Dirty and Inappropriate’ Photos: Our Community Is ‘Under Attack’.” Yahoo.com. https://www.yahoo.com/lifestyle/pole-dancing-community-says-its-under-attack-on-instagram-172415125.html

- Kaye, David. 2019. Speech Police: The Global Struggle to Govern the Internet. New York: Columbia Global Reports.

- Kumar, Sangeet. 2019. “The Algorithmic Dance: YouTube’s Adpocalypse and the Gatekeeping of Cultural Content on Digital Platforms.” In Internet Policy Review: Transnational Materialities, edited by J. van Dijck and B. Rieder, Vol. 8. (2). https://policyreview.info/articles/analysis/transnational-materialities

- Massanari, Adrienne. 2017. “#gamergate and the Fappening: How Reddit’s Algorithm, Governance, and Culture Support Toxic Technocultures.” New Media & Society 19 (3): 329–346. doi:10.1177/1461444815608807.

- Massanari, Adrienne. 2018. “Rethinking Research Ethics, Power, and the Risk of Visibility in the Era of the ‘Alt-right’ Gaze.” Social Media + Society, April-June 1–9.

- Mitra, Rahul. 2010. “Doing Ethnography, Being an Ethnographer: The Autoethnographic Research Process and I.” Journal of Research Practice 6 (1): 1–21.

- Moeckli, Daniel. 2016. Exclusion from Public Space: A Comparative Constitutional Analysis. Cambridge: Cambridge University Press.

- Moz. n.d. https://moz.com/link-explorer

- Mulvey, Laura. 1989. Visual and Other Pleasures. Bloomington: Indiana University Press.

- Osborne, Rachel. 2019. “Instagram, Please Stop Censoring Pole Dance.” change.org/p/instagram-com-instagram-stop-censoring-pole-dance-fitness

- Paasonen, Susanna, Kylie Jarrett, and Ben Light. 2019. #NSFW: Sex, Humor, And Risk In Social Media. Cambridge: MIT Press.

- Rodriguez, Jeremiah. 2019. “Instagram Apologizes to Pole Dancers after Hiding Their Posts.” CTVNews.ca. https://www.ctvnews.ca/sci-tech/instagram-apologizes-to-pole-dancers-after-hiding-their-posts-1.4537820

- Salty. 2019. “Exclusive: Victoria’s Secret Influence on Instagram’s Censorship Policies.” Saltyworld.net. https://saltyworld.net/exclusive-victorias-secret-influence-on-instagrams-censorship-policies/

- Siteworthtraffic. n.d. https://www.siteworthtraffic.com/

- Sloan, Luke, and Annabel Quan-Haase. 2017. The SAGE Handbook of Social Media Research Methods. London: SAGE Publications.

- Sparks, J. V., and Annie Lang. 2015. “Mechanisms Underlying the Effects of Sexy and Humorous Content in Advertisements.” Communication Monographs 82 (1): 134–162. doi:10.1080/03637751.2014.976236.

- Stack, Liam. 2018. “What Is a ‘Shadow Ban,’ and Is Twitter Doing It to Republican Accounts?” The New York Times. https://www.nytimes.com/2018/07/26/us/politics/twitter-shadowbanning.html

- Suri, S., and M. L. Gray. 2019. Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass. Boston: Houghton Mifflin Harcourt.

- Taylor, Sharine. 2019. “Instagram Apologises For Blocking Caribbean Carnival Content.” Vice. https://www.vice.com/en_in/article/7xg5dd/instagram-apologises-for-blocking-caribbean-carnival-content

- Tiidenberg, Katrin, and Emily van der Nagel. 2020. Sex and Social Media. Melbourne: Emerald Publishing.

- van Dijck, José, David Nieborg, and Thomas Poell. 2019. “Reframing Platform Power.” In Internet Policy Review: Transnational Materialities, edited by J. van Dijck and B. Rieder, Vol. 8. (2). https://policyreview.info/articles/analysis/transnational-materialities

- Vivienne, Sonja. 2016. Digital Identity and Everyday Activism: Sharing Private Stories with Networked Publics. Basingstoke: Palgrave Macmillan.

- Zuckerberg, Mark. 2018. “A Blueprint for Content Governance and Enforcement.” Facebook. https://www.facebook.com/notes/mark-zuckerberg/a-blueprint-for-content-governance-and-enforcement/10156443129621634/