Abstract

Maintaining high quality standards has consistently been a primary goal of industry. With rising demand and increasing customisation, manufacturers must strike a balance between cost, manufacturing time, and quality. The technological advancements of Industry 4.0 have made it possible to implement accurate quality prediction frameworks on manufacturing lines. Machine learning and artificial intelligence offer several benefits for quality prediction in manufacturing, but they also have a number of limitations that must be taken into consideration. The current study aims to highlight these benefits and drawbacks. To do so, it reviews the literature on quality prediction and monitoring in Industry 4.0 manufacturing lines. The results show that the reviewed methods have many merits, but six significant drawbacks must be accounted for if the studied quality prediction frameworks are to be implemented successfully. The study can serve as a ‘map’ for production managers and manufacturing experts as they weigh the benefits and drawbacks of popular quality prediction models, because it provides the information needed to determine the extent to which these methods can be applied to new or existing manufacturing lines.

1. Introduction

Quality is a term with many definitions. The American Society for Quality defines quality as ‘the characteristics of a product or service that bear on its ability to satisfy stated or implied needs’ and as ‘a product or service free of deficiencies’ (ASQ, n.d.). Joseph Juran defined quality as ‘fitness for use’, and Philip Crosby defined it as ‘conformance to requirements’ (ASQ, n.d.). Common to all of these definitions is that quality is ultimately judged by how well a product or service satisfies the customer.

Quality has always been a central focus of manufacturing, and this is especially true today: with rising demand and customisation, industries must balance cost, manufacturing time, and quality. The growing emphasis on sustainable manufacturing requires manufacturers to deliver higher-quality, more complex products at lower cost while minimising resource use (and waste) throughout the industrial ecosystem (Powell et al., 2022). The recent trend toward environmental awareness, driven by regulatory, consumer, and moral considerations, also calls for a shift in manufacturing philosophy (Kalpande & Toke, 2021).

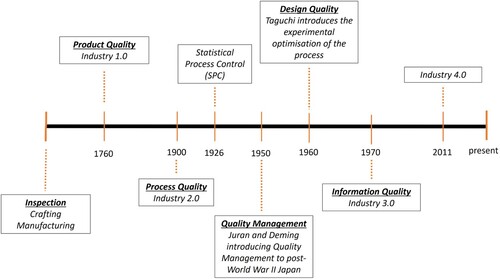

Quality is also one of the most important performance indicators and can strongly affect a manufacturer's ability to remain competitive. Various quality models have been introduced to assess quality, both empirical (e.g. inspection) and mathematical (e.g. statistical process control (SPC) charts and machine learning methods). The focus has moved from inspection, practised since the earliest days of craftsmanship, to information quality, which is concerned with gathering quality information and using it to optimise the manufacturing process.

The Zero Defect Manufacturing (ZDM) concept is highly relevant to meeting the growing demand for high-quality goods. The fundamental principle behind the ZDM methodology is not merely to identify faults and defects but to forecast them and to recommend how they can be avoided (Nazarenko et al., 2021).

With the introduction of Industry 4.0 (the Fourth Industrial Revolution), manufacturing is shifting towards the ‘intelligent’ or ‘smart’ factory: one that is fully integrated and automated and in which manufacturing is optimised (Zolotová et al., 2020; Silvestri et al., 2020). This shift increases productivity and changes the conventional production relationships between suppliers, producers, and customers, as well as between humans and machines (Vaidya et al., 2018; Rüßmann et al., 2015). Industry 4.0 uses Cyber-Physical Systems (CPS) to automate production processes and communicate information. Data mining and industrial control have both improved substantially with the introduction of technologies such as the Internet of Things (IoT), the Industrial Internet of Things (IIoT), machine learning, artificial intelligence, and big data analytics (Sahoo & Lo, 2022).

In recent years, Artificial Intelligence (AI) has been substantially successful in automating processes associated with human thinking, such as planning, decision making, and problem solving. This progress has been driven by recent advances in deep learning and neural networks. AI systems can handle large amounts of data from a wide variety of sources, such as images, text, audio, and 3D geometry. Consequently, AI-enabled strategies that aim to improve the forecasting, design, and control capabilities of advanced manufacturing processes are proliferating. These strategies take advantage of the ongoing digitalisation of manufacturing and the use of large-scale data acquisition platforms (Mozaffar et al., 2022).

AI has been widely used in manufacturing to develop predictive process modeling systems (Petri et al., 1998), to improve manufacturing accuracy (Warnecke & Kluge, 1998), to develop control models for production processes (Institute of Electrical and Electronics Engineers, n.d.), to provide predictive analytics for the quality assurance of assembly processes (Burggräf et al., 2021), and for early quality classification and prediction (S. Stock et al., 2022). Machine learning, and AI more generally, offer several advantages for the quality prediction and control of manufacturing and assembly processes, but some drawbacks and restrictions must be taken into account.

The present research aims to highlight the advantages and weaknesses of the approaches widely used for quality monitoring and prediction in Industry 4.0 manufacturing lines. To this end, a literature review of research on quality prediction and monitoring in Industry 4.0 manufacturing lines was conducted with the purpose of: (1) presenting the research conducted on quality prediction in Industry 4.0 manufacturing processes, (2) providing insight into the strengths and weaknesses of the research in this area, (3) identifying the research gaps that need to be addressed, and (4) examining whether widely used methods such as Statistical Process Control (SPC) can adequately address the highly customised and complex manufacturing lines of Industry 4.0.

The literature review led to three research objectives, which the current research aims to achieve in order to provide a holistic approach to quality prediction in industrial settings. These objectives are:

(1) Provide historical background on the evolution of industry, from Industry 1.0 to Industry 4.0.

This background links the technological achievements of each period to their impact on manufacturing. The historical context will show which technological achievements enabled the transition from the inspection phase to information quality, and to the automation introduced by Industry 4.0. Moreover, the pillars of Industry 4.0 will be presented, and their contribution to manufacturing automation and to quality prediction and monitoring will be discussed. Overall, this historical context is intended to give the reader a clear picture of how industrial processes have been transformed.

(2) Present the evolution of quality models from Industry 1.0 to Industry 4.0.

Analysing the evolution of quality models will highlight the aspects of manufacturing on which these models focused in order to measure and improve quality. This analysis will provide a holistic understanding of how the focus of quality models evolved from Industry 1.0 to Industry 4.0.

(3) Analyse quality prediction and monitoring methods in industrial settings, especially in Industry 4.0.

This analysis will outline the quality prediction and monitoring methods that have been widely used from Industry 1.0 to Industry 4.0. More specifically, the current research aims to identify the advantages and weaknesses of these methods, focusing especially on the machine learning and, more generally, AI approaches that are widely used for quality monitoring and prediction in Industry 4.0.

The remainder of the manuscript is organised as follows: The literature that is pertinent to the evolution of Industry and the evolution of quality models from Industry 1.0 to Industry 4.0 is reviewed in the following section (Section 2). The research methodology used in this study is included in Section 3, and the study's findings are presented in Section 4. The results are discussed in Section 5. In Section 6, this paper’s main conclusions and suggestions for additional research are presented alongside the managerial implications of the present research.

2. Literature review

2.1. Evolution of industry (from Industry 1.0 to Industry 4.0)

2.1.1. Industry 1.0

The First Industrial Revolution (Industry 1.0) spans approximately 1760–1840 and is characterised by the use of steam power and the mechanisation of production (Vinitha et al., 2020). Steam power and water power played a crucial part in the expansion of the industrial revolution, which began with the transition from manual to mechanised production. Among the first industries to be influenced by the industrial revolution were textile manufacturing, the iron industry, steam power, machine tools, chemicals, cement, gas lighting, glassmaking, agriculture, paper machines, transportation, and mining, together with canals and improved waterways, roads, and railways (Vinitha et al., 2020).

2.1.2. Industry 2.0

The Second Industrial Revolution (Industry 2.0) is characterised by a constellation of technologies and management approaches, together with the use of electricity, that expanded mass production in the early twentieth century (Zonnenshain & Kenett, 2020). Walter Shewhart, a physicist, engineer, and statistician, proposed the use of control charts, a statistical tool, to manage production operations (Shewhart, 1926). Controlling the process with control charts reduced the need for inspection, saving time and money while improving quality. The focus of data analysis shifted from inspection to process performance and to understanding process variation. Consequently, statistical models and probability began to play a crucial role as quality assessment tools (Zonnenshain & Kenett, 2020).

2.1.3. Industry 3.0

The Third Industrial Revolution (Industry 3.0) began in the 1970s and was driven by the partial automation of industry through the use of computers (Rifkin, 2011). Computers made ‘mass customisation’ possible (Davis, 1997). In its most basic form, mass customisation combines the scalability of large, continuous-flow production systems with the flexibility of job shops. During this period, the manufacturing sector moved towards automation, and engineering expanded considerably within the production sector. Increasingly large parts of the production process were automated, reducing the need for human intervention. Electronics and computer-controlled hardware are essential to the operation of such automation, which improves both the dependability and the effectiveness of the industrial system.

2.1.4. Industry 4.0

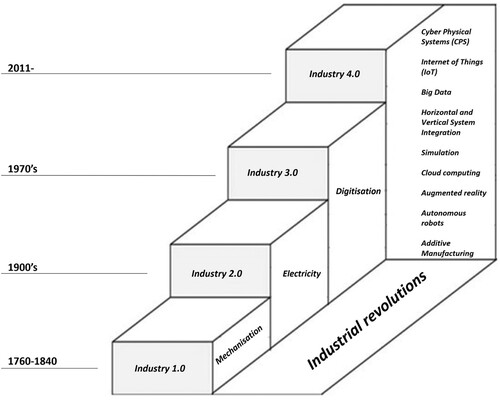

The term ‘Industrie 4.0’ was first used in Germany in 2011 by the German National Academy of Science and Engineering and was translated into English as ‘Industry 4.0’ (Kagermann et al., 2013). Industry 4.0 marks the beginning of the Fourth Industrial Revolution, which is driven by cyber-physical systems (CPS) and the Internet of Things and Services. Germany holds a leading position in the field of CPS, with approximately 20 years of expertise to draw on. Cyber technologies that connect products to the Internet lay the foundation for innovative services that, among other things, allow Internet-based diagnostics, maintenance, and operation to be carried out economically and effectively. They also support new business models, operating concepts, and smart controls, as well as a focus on the user and the user's specific requirements (Jazdi, 2014). The evolution from Industry 1.0 to Industry 4.0 is illustrated in the following figure.

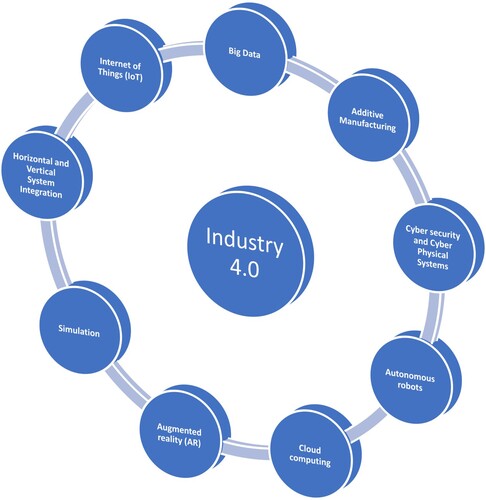

The nine pillars of Industry 4.0, which transform the factory into a ‘smart’ factory and introduce automation, are: Big Data and Analytics, Industrial Internet of Things (IIoT), Horizontal and Vertical System Integration, Simulation, Cloud Computing, Augmented Reality (AR), Autonomous Robots, Additive Manufacturing (AM), and Cyber Security and Cyber-Physical Systems (CPS).

2.1.4.1. Big data and analytics

Owing to the rapid expansion of the Internet, vast amounts of information are generated and gathered every day, and traditional technologies are unable to process and analyse them. Big Data technologies, by contrast, make such analyses possible (Witkowski, 2017). Because information is compiled from a wide variety of sources, the databases are constantly expanding, but Big Data makes it possible to manage and use this information quickly and effectively.

Big Data is characterised by four dimensions (the ‘4 V’): the Volume of data, the Variety of data, the Velocity with which new data are generated and analysed, and the Value of data. Big Data enables more sophisticated analysis than was previously feasible with the available tools. Even information gathered in different, incompatible systems, databases, and websites can be analysed and merged with this technology to give a more accurate picture of the circumstances of a particular business or individual (Witkowski, 2017).

2.1.4.2. Internet of things (IoT)

In the Internet of Things (IoT) paradigm, many of the objects around us are connected to a network in one form or another. Technologies such as Radio Frequency Identification (RFID) and sensor networks address the challenge of integrating information and communication systems invisibly into our surroundings. In this model, services are treated as commodities and are delivered in a manner analogous to traditional commodities (Gubbi et al., 2013).

The Internet of Things is distinguished by three primary characteristics: omnipresence, optimisation, and context. Omnipresence refers to the availability of information about an object's location and its physical or atmospheric conditions; optimisation reflects the fact that today's objects offer more than a simple connection to human operators at human-machine interfaces; and context refers to the possibility of advanced interaction between objects and their existing environment, with an immediate response if anything changes.

The Internet of Things opens up new opportunities for improving performance. For instance, road transport vehicles may be regulated automatically according to the hosts' specifications, allowing trucks to run at predetermined intervals and at a constant speed, which optimises their fuel use and reduces their environmental impact (Witkowski, 2017).

2.1.4.3. Horizontal and vertical system integration

The Industry 4.0 paradigm is fundamentally defined by three dimensions: (1) horizontal integration across the entire value creation network, (2) end-to-end engineering across the entire product life cycle, and (3) vertical integration and networked manufacturing systems (T. Stock & Seliger, 2016).

Horizontal integration across the entire value creation network describes the intelligent cross-linking and digitalisation of value creation modules, both within and across companies, throughout the value chain of a product life cycle and between the value chains of adjacent product life cycles. End-to-end engineering across the entire product life cycle refers to the intelligent cross-linking and digitalisation that occurs throughout all stages of a product's life cycle, from the acquisition of raw materials through the manufacturing system and product use to the product's end of life. Vertical integration and networked manufacturing systems describe the intelligent cross-linking and digitalisation between the different aggregation and hierarchical levels of a value creation module, from manufacturing stations to manufacturing cells, lines, and factories.

These systems also integrate associated value chain activities, such as marketing or technological innovation (Acatech, 2015). Complete digital integration and automation of manufacturing in both the vertical and horizontal dimensions entails automating not only production processes but also communication and collaboration, particularly in areas where processes are standardised (Erol et al., 2016).

2.1.4.4. Simulation

In plant operations, simulations are increasingly used to exploit real-time data, creating virtual models that mirror the physical world and can include equipment, products, and humans. This reduces machine set-up times and improves product quality. For instance, Siemens and a German machine-tool vendor collaborated to develop a virtual machine that simulates the machining of parts using data from the physical machine, reducing the set-up time for the actual machining process by as much as 80 percent (Rüßmann et al., 2015). Reproducing an entire value chain virtually also makes it possible to use simulations as a decision-support tool (Schuh et al., 2014).

2.1.4.5. Cloud computing

Industry 4.0 requires businesses to improve data sharing across sites and company boundaries, with reaction times of milliseconds or even faster (Vaidya et al., 2018; Rüßmann et al., 2015). A cloud-based IT platform acts as the technological backbone for the connectivity and communication of the various components that make up the Application Centre for Industry 4.0. Integration services make it possible to link the controls of machining centres and robots, as well as decoupled sensors such as cameras and temperature probes, to the platform (Landherr et al., 2016). This concept can be extended to a collection of shop-floor equipment or to an entire plant, yielding a ‘digital production’ (Marilungo et al., 2017).

2.1.4.6. Augmented reality (AR)

Augmented reality-based systems support a wide range of services, such as selecting components in a warehouse and sending repair instructions via mobile devices. In industry, augmented reality can provide workers with real-time information, improving both decision-making and work procedures. For instance, workers may receive instructions on how to replace a particular part while they are looking at the actual system that needs to be repaired (Vaidya et al., 2018; Rüßmann et al., 2015). Augmented reality glasses are one example of a technology that could display this information directly in the worker's field of view (Rüßmann et al., 2015).

2.1.4.7. Autonomous robots

Autonomous production methods driven by robots that can perform tasks intelligently are a crucial aspect of Industry 4.0. Autonomous robots are complex systems involving the interaction or cooperation of many different kinds of software components. They are designed to carry out high-level activities independently or with very limited external control. They are required in circumstances in which human control is impractical or not cost-effective because (1) they operate in environments that are highly unpredictable, uncertain, and constantly changing, (2) they must meet real-time constraints to perform successfully, and (3) they frequently interact with other agents, including people and other machines (Bensalem et al., 2009).

This facet of Industry 4.0 emphasises safety, flexibility, versatility, and collaborative working. Because robots' working areas no longer need to be separated from those of people, integrating robots into human workstations is more cost-effective and productive and opens the door to a wide variety of potential industrial applications. The latest technological advances are producing a growing number of industrial robots, which help drive the industrial revolution. In Industry 4.0, robots and people will work together on interlinked tasks, using intelligent sensor-based human-machine interfaces. The use of robots is expanding to a variety of tasks, such as production, logistics, and office management (for document distribution), and robots may be operated remotely (Aiman et al., 2016).

2.1.4.8. Additive manufacturing (AM)

With the advent of Industry 4.0, additive manufacturing techniques are becoming increasingly popular. These techniques allow individualised goods to be produced in small quantities, and they offer several benefits to the construction industry. High-performance, decentralised additive manufacturing systems will reduce transportation distances and the amount of stock kept on hand (Rüßmann et al., 2015). Processes such as selective laser melting (SLM), selective laser sintering (SLS), and fused deposition modeling (FDM) can make production more efficient and cost-effective, for example by implementing inline quality control. In addition, Industry 4.0 seeks an economically viable realisation of hybrid production systems that combine additive manufacturing with other well-established manufacturing processes, such as turning, milling, and/or welding (Landherr et al., 2016).

2.1.4.9. Cyber security and cyber-physical systems (CPS)

The increasing connectivity and use of standard communication protocols that come with Industry 4.0 have greatly increased the need to protect critical industrial systems and production lines from cyber security threats. Consequently, secure and reliable communications are vital, as is a sophisticated identity and access management system for both computers and users (Rüßmann et al., 2015).

Cyber-physical systems (CPS) have been characterised as systems in which natural and human-made systems (physical space) are tightly integrated with computing, communication, and control systems (cyberspace) (Bagheri et al., 2015). Over the years, researchers in systems and control have pioneered powerful methods and tools in system science and engineering, such as time- and frequency-domain methods, state-space analysis, system identification, filtering, prediction, optimisation, robust control, and stochastic control.

Over the same period, computer science researchers have made significant progress in developing new programming languages, real-time computing techniques, visualisation methods, compiler designs, embedded system architectures and systems software, and innovative approaches to ensuring the reliability of computer systems, cyber security, and fault tolerance. Computer scientists have also created a wide array of powerful modeling formalisms and verification tools. Cyber-physical systems research aims to develop new CPS science and supporting technology by integrating knowledge and engineering principles from across the computational and engineering disciplines (networking, control, software, human interaction, learning theory, as well as electrical, mechanical, chemical, biomedical, materials science, and other engineering disciplines) (Baheti et al., 2015).

A CPS consists of a control unit, usually one or more microcontrollers, which controls the sensors and actuators needed to interact with the physical environment and processes the acquired data. These embedded systems require a communication link to share information with other embedded systems or with the cloud. Data sharing is the most crucial aspect of a CPS because, for example, the data can then be linked and analysed centrally. In other words, a CPS is an embedded system capable of transmitting and receiving data over a network. Internet-connected CPS are commonly referred to as the ‘Internet of Things’ (Jazdi, 2014). Cyber-physical systems are primarily intended to interact and collaborate with human operators to achieve shared objectives, such as reducing the number of incidents that result in failure (Silvestri et al., 2020; Ansari et al., 2018).
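To make the sense, process, and communicate cycle of a CPS more concrete, the following is a minimal Python sketch of a single CPS node. It is an illustration under stated assumptions, not a reference design: the temperature sensor, the cooling actuator, the on/off control rule with a hysteresis band, and the JSON message format are all hypothetical, and actual network transmission is replaced by collecting the messages in a list.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class SensorReading:
    sensor_id: str
    value: float  # hypothetical temperature reading in degrees Celsius


def control_step(value: float, setpoint: float, band: float, cooler_on: bool) -> bool:
    """On/off control with a hysteresis band: decide the (hypothetical) actuator state."""
    if value > setpoint + band:
        return True   # too hot: switch the cooler on
    if value < setpoint - band:
        return False  # cool enough: switch the cooler off
    return cooler_on  # inside the band: keep the previous state


def to_message(reading: SensorReading, cooler_on: bool) -> str:
    """Serialise the reading and actuator state for sharing with other systems."""
    payload = asdict(reading)
    payload["cooler_on"] = cooler_on
    return json.dumps(payload)


def run_node(values, setpoint=71.0, band=1.0):
    """Simulate one CPS node: sense, actuate, and publish one message per cycle."""
    cooler_on = False
    messages = []
    for value in values:
        reading = SensorReading(sensor_id="T1", value=value)
        cooler_on = control_step(reading.value, setpoint, band, cooler_on)
        # In a real CPS this message would be sent to the cloud or to other embedded systems.
        messages.append(to_message(reading, cooler_on))
    return messages


if __name__ == "__main__":
    for msg in run_node([70.0, 73.5, 71.0, 68.5]):
        print(msg)
```

The hysteresis band is one simple design choice that keeps the actuator from switching on and off repeatedly when the reading hovers near the setpoint; the point of the sketch is that every control decision is also published as data that can be linked and analysed centrally.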

2.2. Contribution of Industry 4.0 elements to quality management

The nine pillars of Industry 4.0 have revolutionised the industrial sector. In particular, many of these pillars can contribute to the optimisation of quality management, in both quality improvement and quality control, because they introduce new technologies and at the same time provide tools that can improve manufacturing processes.

Big Data allows industry to use large amounts of heterogeneous data while identifying the data that are essential for improving manufacturing processes. With Big Data, data can now be analysed at a more sophisticated level than was previously possible.

The Internet of Things (IoT) and Horizontal and Vertical System Integration enable remote systems to be interconnected through a central network and allow complete digital integration and automation of manufacturing in both the vertical and horizontal dimensions. In this way, automation is built into manufacturing lines, which can improve performance and product quality because previously independent manufacturing systems can now work and communicate as a unit.

Simulations create virtual models of real-world scenarios, which can improve product quality and shorten machine set-up times.

Cloud computing provides connectivity and timely information transfer between systems, which helps to optimise production times.

The use of augmented reality in the workplace can help employees make better decisions and perform their jobs more efficiently.

Autonomous robots and Cyber-Physical Systems can be employed in highly complex manufacturing processes, where they improve production quality by reducing both the number of incidents that result in failure and the influence of human error.

2.3. Evolution of quality models

Quality models have evolved considerably over the ages. Zonnenshain and Kenett (2020) highlighted some key milestones in this evolution.

As a first milestone, they refer to the Old Testament, in which the Creator, on the sixth day and after completing the creation, inspected the work to see whether any further addition or improvement was needed. They use this example to highlight that inspection was the leading quality model for many centuries, when craft-based manufacturing systems prevailed. Inspection has served as a cornerstone of quality assurance. Inspections (in the sense of measurement, testing, and observation) provide the information that drives all other components of a quality system, and they remain a useful way of separating defective items from good ones. Inspection dates back to the beginnings of craftsmanship: individual workers checked their own work, masters inspected the work of their apprentices, and consumers carefully inspected products before buying them. As products grew more complex, specially trained individuals or groups were frequently designated as inspectors (Godfrey, 1986).

Eli Whitney (1765–1825), an American inventor, mechanical engineer, and manufacturer, is credited with the second significant milestone. As Zonnenshain and Kenett (2020) state, this achievement involved specifying the parts to be assembled before final assembly. Whitney created machine tools that allowed an untrained worker to make a specific part, which was then measured and compared with a specification. This marks the change in quality approach introduced by Industry 1.0 and mass production: instead of inspecting the product at the end of the manufacturing process, mass production introduced the inspection of parts before assembly. In a sense, this was the first attempt at a product quality prediction framework, as the quality of the final product was linked to the specifications of its parts before final assembly.
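Checking parts against their specifications before assembly, and using the result to anticipate the quality of the final product, can be illustrated with a short Python sketch. The part names, dimensions, symmetric tolerances, and the rule that an assembly is predicted to be acceptable only when every specified part conforms are simplifying assumptions for illustration; in practice, how the tolerances of mating parts combine also matters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    """Hypothetical specification for one part dimension (values in mm)."""
    nominal: float
    tolerance: float  # symmetric tolerance: nominal ± tolerance

    def conforms(self, measured: float) -> bool:
        """True if the measured value lies within the specification limits."""
        return self.nominal - self.tolerance <= measured <= self.nominal + self.tolerance


def assembly_expected_ok(measurements: dict, specs: dict) -> bool:
    """Predict an acceptable assembly only if every specified part is measured and conforms."""
    return all(
        name in measurements and spec.conforms(measurements[name])
        for name, spec in specs.items()
    )


if __name__ == "__main__":
    specs = {"shaft": Spec(10.00, 0.05), "hole": Spec(10.10, 0.05)}
    print(assembly_expected_ok({"shaft": 10.02, "hole": 10.11}, specs))  # True
    print(assembly_expected_ok({"shaft": 10.07, "hole": 10.11}, specs))  # False
```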

In 1926, Shewhart introduced statistical process control (SPC) charts (Shewhart, 1926). Before their introduction, inspection had focused mostly on locating and removing defective products, or lots of products, before they were shipped to the customer. Shewhart realised that statistical methods could instead be used to increase the amount of good product being manufactured (Godfrey, 1986). This led to a ‘scientification’ of the manufacturing process, with the focus turning to a more mathematical approach: analysing the variation and errors in the collected data and improving the manufacturing process with statistical tools. At this point, the focus had shifted from product quality to process quality (Zonnenshain & Kenett, 2020).
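The control-chart idea can be made concrete with a small Python sketch. It assumes a Shewhart individuals (X) chart, which is only one of several chart types, with 3-sigma limits and sigma estimated from the average moving range divided by the constant d2 = 1.128. The measurement values are invented for illustration, and the baseline is assumed to come from a process that is in statistical control.

```python
from statistics import mean

D2 = 1.128  # standard d2 constant for moving ranges of two consecutive points


def individuals_chart_limits(baseline):
    """Return (LCL, centre line, UCL) for a Shewhart individuals (X) chart.

    Sigma is estimated as the average moving range divided by d2, the usual
    practice when measurements are taken one at a time.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline measurements")
    centre = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / D2
    return centre - 3 * sigma_hat, centre, centre + 3 * sigma_hat


def out_of_control(points, lcl, ucl):
    """Indices of new measurements that fall outside the 3-sigma limits."""
    return [i for i, x in enumerate(points) if x < lcl or x > ucl]


if __name__ == "__main__":
    # Hypothetical baseline from a process assumed to be in control.
    baseline = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 10.0]
    lcl, centre, ucl = individuals_chart_limits(baseline)
    print(f"LCL={lcl:.3f}  CL={centre:.4f}  UCL={ucl:.3f}")
    print(out_of_control([10.1, 10.7, 9.3, 10.0], lcl, ucl))  # [1, 2]
```

Points outside the limits signal that the process itself may have changed, which is exactly the shift from sorting finished products to monitoring process performance described above.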

Sixty years later, Joseph Juran developed his Quality Trilogy, a universal method for managing quality based on Shewhart's work. This event is considered the beginning of the era of quality management, a movement in which other disciplines, such as industrial engineering, operations management, and supply-chain management, also participate. Its overarching goals are to achieve gains in quality, speed, delivery reliability, flexibility, and price. W. Edwards Deming, who together with Juran applied quality management principles with enormous success in devastated post-World War II Japan, was a significant contributor to this movement (Zonnenshain & Kenett, 2020; Connor, 1986; Juran, 1986).

While working directly with top executives in Japan, Deming and Juran both realised that straightforward statistical quality control methods, if implemented consistently across the business, could play a significant role in raising the competitiveness of Japanese goods (Godfrey, Citation1986).

In the 1960s, the Japanese engineer Genichi Taguchi introduced to the industrial sector new methods for designing statistically planned experiments, intended to improve products and processes by building in design-based robustness (Zonnenshain & Kenett, Citation2020) (Godfrey, Citation1986) (Taguchi, Citation1987). Although Taguchi introduced these methods in the 1960s, it was not until 1980 that Bell Laboratories applied them to improve the performance of their most complex industrial processes. Taguchi’s methods added the element of design quality to the existing quality frameworks: based on experiments, an optimal manufacturing setting could be established and defects could thus be minimised.
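To make the idea of a design-based robustness criterion concrete, the sketch below computes Taguchi's ‘smaller-the-better’ signal-to-noise (S/N) ratio, S/N = -10 log10((1/n) Σ y_i²), for a few candidate settings and picks the one with the highest ratio. The setting names and replicate measurements are hypothetical, and a full Taguchi study would arrange the trials according to an orthogonal array, which is not shown here.

```python
import math


def sn_smaller_the_better(values):
    """Taguchi 'smaller-the-better' signal-to-noise ratio in dB.

    S/N = -10 * log10(mean(y^2)); a higher value means a smaller and
    more consistent quality loss.
    """
    mean_square = sum(v * v for v in values) / len(values)
    return -10.0 * math.log10(mean_square)


# Hypothetical replicate measurements of a deviation from target (mm)
# for three hypothetical candidate machine settings.
trials = {
    "setting_A": [0.12, 0.15, 0.11],
    "setting_B": [0.08, 0.09, 0.10],
    "setting_C": [0.05, 0.20, 0.02],
}

sn = {name: sn_smaller_the_better(ys) for name, ys in trials.items()}
best = max(sn, key=sn.get)  # the setting with the highest S/N ratio

for name, value in sn.items():
    print(f"{name}: S/N = {value:.2f} dB")
print("Most robust setting:", best)
```

Settings B and C have the same mean deviation (0.09 mm), but B is far more consistent, so it receives the higher S/N ratio; rewarding low variability as well as a low mean is what the ratio is designed to do.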

Three decades later, industry began to face the phenomenon of big data. Advances in sensor technology and data processing software now offer new options for controlling processes and products. As a result, integrated models that combine data from a variety of sources began to be considered (Godfrey & Kenett, Citation2007). With the introduction of data analytics and manufacturing execution systems (MES), the focus of quality began to migrate towards information quality.

To improve the manufacturing process, information quality focuses on gathering high-quality information. To capitalise on the opportunities presented by their data, organisations have begun recruiting data scientists, who have become involved, in one capacity or another, in organisations' infrastructures and in the quality of their data (Zonnenshain & Kenett, Citation2020).

As can be concluded, quality models have undergone many changes. The stages of that evolution () are: (1) Inspection, (2) Product Quality, (3) Process Quality, (4) Quality Management, (5) Design Quality and (6) Information Quality.

During the Inspection phase, the focus of quality models was on the manufactured product. Then, this focus shifted to the parts that were used to build the final product. It can be seen that this shift in the focus, from the final product to the parts that are used to manufacture the final product, is the first attempt to implement a quality prediction framework in the manufacturing area.

With the introduction of mathematical models, the manufacturing area underwent a ‘scientification’, and the focus of quality models shifted from creating good parts to optimising the manufacturing processes to avoid defects. To work more efficiently and boost business productivity, however, this approach needed to be implemented on a wider scale and to cover more aspects than just the manufacturing process. In a sense, quality models shifted from improving only the manufacturing processes to improving the productivity of the business as a whole, from manufacturing to delivery reliability.

Until then, manufacturing processes had been optimised with statistical methods fed with data from the manufacturing line. With Taguchi’s methods, however, optimisation would happen after the proposed change to the system had been validated experimentally. This can be considered the first simulation approach in the manufacturing industry.

With the introduction of new sensors and technologies in manufacturing, the amount of available data deriving from the manufacturing processes expanded. This enabled scientists to acquire valuable information from the produced data and thus focus on studying these data to improve the manufacturing processes and minimise the generation of defects. This signifies a shift in the focus of quality models toward Information quality.

In Industry 4.0, where sensor technology has become accessible to the vast majority of manufacturers, recording diverse data about the manufacturing process has created the need to classify these data according to their quality and their impact on manufacturing. This has motivated researchers to explore information quality in greater depth.

3. Research methodology

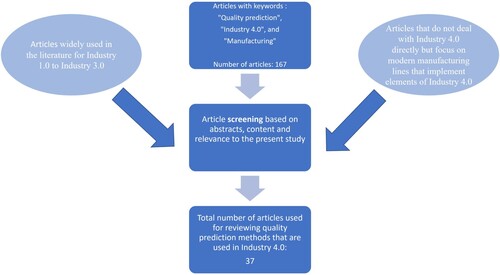

To examine the quality assessment methods used in Industry 4.0 manufacturing processes, a literature review was conducted to identify relevant research in this area. More specifically, the search moved from broader to more specific keywords in order to identify relevant material on quality prediction in Industry 4.0. The keyword initially used in the ScienceDirect database was ‘Quality prediction’. This search yielded 783,025 results, which was expected, as the keyword is broad and does not adequately specify the context of the current research.

As a second step, the keyword ‘Industry 4.0’ was added alongside ‘Quality prediction’. This search yielded 181 results: 26 review articles, 140 research articles, 7 book chapters, 1 conference abstract, 1 editorial, 2 mini-reviews, 2 short communications, 1 software publication and 1 item categorised as ‘Other’.

Furthermore, to narrow the research area accurately, the keyword ‘Manufacturing’ was added to the search criteria. This search yielded 167 results: 24 review articles, 130 research articles, 7 book chapters, 1 conference abstract, 1 editorial, 2 mini-reviews and 2 short communications. These 167 documents were then screened, and those not directly relevant to the purpose of the present work were eliminated.

However, in addition to these 167 documents, we also considered references widely used in the literature for Industry 1.0 to Industry 3.0 (e.g. (Tannock, Citation1992) and (Zorriassatine & Tannock, Citation1997)), in order to present the quality approaches they describe and to demonstrate why these approaches cannot, in most cases, adequately address the complex manufacturing scenarios of Industry 4.0. Similarly, we considered references that do not deal with Industry 4.0 directly but focus on modern manufacturing lines that implement elements of Industry 4.0. In total, 37 papers are included in this research; when analysed, they highlight the advantages and limitations of the approaches widely used for quality monitoring and prediction in Industry 4.0 manufacturing lines. The selected papers cover a wide range of manufacturing processes and industries and were deemed suitable for demonstrating widely used methods alongside their advantages and drawbacks. The selection process of these papers can be seen in .

4. Results

The first quality control approach was introduced during the inspection phase, which began in the era of artisanship: the product was inspected after it was manufactured to assess its quality. This type of quality control is called Post-Process Monitoring. Its aim is to establish, through inspection, causal links between the final product and the manufacturing processes used to create it. Although it can provide insights for optimising the manufacturing process, it cannot adequately explain complex manufacturing processes or accurately identify why a possible defect occurred and which stage of the process is responsible for it. Inspection is not the path to quality; rather, it is a tool for mitigating the effects of its absence (Tannock, Citation1992).

Even though they were created in the 1920s, traditional Statistical Process Control (SPC) approaches are still widely used today (Colosimo et al., Citation2021). SPC is the application of statistical methods to maintain quality control over a procedure or manufacturing method. SPC tools and techniques can assist in monitoring the behaviour of processes, locating problems in internal systems, and identifying potential solutions to production problems (American Society for Quality, Citationn.d.).

SPC control charts are commonly used to create and maintain statistical control of essential outputs from manufacturing and other complex processes where process variation occurs from numerous sources (Zorriassatine & Tannock, Citation1997). There are many sorts of charts to choose from; nevertheless, those that Shewhart initially developed continue to be the most useful ones. They can be utilised with variable (continuous) data as well as attribute (discrete) data, and they comprise time-series graphs that illustrate the outcomes of periodic sampling and evaluation of some quality characteristic based on the output of the process.
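As an illustration of the chart type Shewhart introduced, the following sketch computes the centre line and control limits of an X-bar chart and an R chart from subgroups of five measurements, using the standard tabulated constants for n = 5 (A2 = 0.577, D3 = 0, D4 = 2.114), and then flags new subgroup means that fall outside the limits. The shaft-diameter data are hypothetical.

```python
from statistics import mean

# Tabulated Shewhart constants for subgroups of size n = 5.
A2, D3, D4 = 0.577, 0.0, 2.114


def xbar_r_limits(subgroups):
    """Return (LCL, CL, UCL) tuples for the X-bar chart and the R chart."""
    xbars = [mean(g) for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean, mean_range = mean(xbars), mean(ranges)
    xbar_chart = (grand_mean - A2 * mean_range, grand_mean, grand_mean + A2 * mean_range)
    r_chart = (D3 * mean_range, mean_range, D4 * mean_range)
    return xbar_chart, r_chart


def out_of_control(values, limits):
    """Indices of values that fall outside the (LCL, CL, UCL) limits."""
    lcl, _, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical in-control shaft diameters (mm): four subgroups of five parts.
baseline = [
    [10.0, 10.2, 9.9, 10.1, 9.8],
    [10.1, 9.9, 10.0, 10.2, 9.8],
    [9.9, 10.0, 10.1, 10.0, 10.0],
    [10.2, 10.0, 9.8, 10.0, 10.0],
]
xbar_limits, r_limits = xbar_r_limits(baseline)
print("X-bar chart (LCL, CL, UCL):", xbar_limits)
print("R chart (LCL, CL, UCL):", r_limits)

# Hypothetical means of new subgroups collected during production.
new_means = [10.05, 9.95, 10.30]
print("Out-of-control subgroups:", out_of_control(new_means, xbar_limits))
```

The limits are estimated from in-control subgroups before being used to monitor new production data; only the third new subgroup mean lies above the upper control limit.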

In recent years, numerous SPC approaches have been implemented by enterprises all over the world, particularly as a component of quality improvement projects like Six Sigma. The dissemination of control charting methods has been significantly aided by the development of statistical software packages as well as highly developed techniques for the collection of data (American Society for Quality, Citationn.d.).

In 2016, Weese et al. (Weese et al., Citation2016) conducted a comprehensive literature review on the use of statistical learning approaches in statistical process monitoring. They observed that many contemporary systems generate data from several streams and/or with a hierarchical structure, and that autocorrelation and cyclical patterns frequently occur in the output. Conventional SPC methods, which are model-based, are not well suited to monitoring such data (Colosimo et al., Citation2021)(Weese et al., Citation2016). Several significant methodological issues in process monitoring and surveillance continue to hinder SPC practice. The detection power of traditional SPC tools decreases quickly as data dimensionality increases, and these tools frequently require a stationary process and a fixed baseline (Weese et al., Citation2016). Additionally, traditional SPC tools frequently rely on linear dimensionality reduction techniques, so they cannot adequately address these process monitoring challenges. Moreover, a baseline sample, traditionally referred to as the Phase I sample, must be established before monitoring can begin, and one of the initial challenges is how to establish such a sample in a complex, high-dimensional setting. Traditional statistical approaches may exist for many of these scenarios, but the intricacies of the data in many of them may lend themselves well to algorithmic solutions such as Machine Learning and AI (Colosimo et al., Citation2021)(Weese et al., Citation2016).
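A small numerical sketch can make the high-dimensional Phase I problem concrete. A common multivariate monitoring statistic is Hotelling's T², which requires the inverse of the covariance matrix estimated from the Phase I sample; when that sample has fewer observations (n) than measured variables, the sample covariance has rank at most n − 1 and is therefore singular. The example uses synthetic Gaussian data and NumPy; it is an illustration under those assumptions, not a method taken from the cited works.

```python
import numpy as np


def phase1_estimates(baseline):
    """In-control mean vector and covariance matrix from a Phase I sample
    (rows = observations, columns = measured variables)."""
    return baseline.mean(axis=0), np.cov(baseline, rowvar=False)


def hotelling_t2(x, mu, cov):
    """Hotelling T^2 distance of observation x from the Phase I estimates."""
    diff = x - mu
    return float(diff @ np.linalg.solve(cov, diff))


rng = np.random.default_rng(0)  # synthetic data only

# Low-dimensional case: 50 baseline observations of 3 variables.
low = rng.normal(size=(50, 3))
mu, cov = phase1_estimates(low)
print("rank", np.linalg.matrix_rank(cov), "of", cov.shape[0])
print("T^2 of a shifted observation:", hotelling_t2(mu + 3.0, mu, cov))

# High-dimensional case: 20 baseline observations of 40 variables.
# The sample covariance has rank at most 20 - 1 = 19 < 40, so it is singular
# and cannot be inverted to compute T^2.
high = rng.normal(size=(20, 40))
_, cov_high = phase1_estimates(high)
print("rank", np.linalg.matrix_rank(cov_high), "of", cov_high.shape[0])
```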

The revolutionary technologies introduced during the age of Industry 4.0 are disrupting existing manufacturing processes and driving their transformation. Traditional production tactics, such as mass production, have not proven effective in coping with greater product personalisation and low-volume outputs (Martinez et al., Citation2022). By contrast, flexible and reconfigurable manufacturing lines have tackled this issue efficiently. However, as a direct result of product personalisation, the time available to optimise manufacturing lines has been drastically reduced, which has led to an increase in the percentage of defective items. Improved quality management strategies are therefore necessary to meet the requirements of the present situation.

One of the most promising current methodologies is Zero Defect Manufacturing (ZDM), which aims to minimise and mitigate failures within manufacturing processes. ZDM is an innovative idea with the potential to transform completely the way people think about production (Psarommatis et al., Citation2020).

The ZDM strategy can be put into practice in two distinct ways: product-oriented ZDM and process-oriented ZDM. Product-oriented ZDM investigates the flaws present on the actual parts and works to find a solution, whereas process-oriented ZDM investigates the flaws present in the manufacturing equipment and uses that information to determine whether the products produced are satisfactory. The latter fits within the framework of predictive maintenance (Psarommatis et al., Citation2020). Because accurate and timely quality prediction can reduce the number of defects in a product, it is a very useful instrument in manufacturing.

In 2022, Martinez et al. suggested employing well-established cyber-physical architectures to support zero-defect manufacturing solutions (Martinez et al., Citation2022). The manufacturing process is monitored using inspection methods that are already in place, with product quality and machine health as the primary focuses. A forecasting model relating rework ratio to tool life is briefly described, and it is demonstrated that preventative tool replacements can reduce the rework needed due to faulty screw-fastening procedures by between 2 and 3 percent. Because the present data analysis is restricted to linear correlations, they recommend further research into possible nonlinear interactions between variables and KPIs.
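Why a purely linear analysis can be limiting is easy to show with a toy example: the Pearson (linear) correlation coefficient can be zero even when a KPI is fully determined, nonlinearly, by a process variable. The data below are hypothetical and unrelated to the screw-fastening case study; the U-shaped dependence is chosen purely for illustration.

```python
import math


def pearson(x, y):
    """Pearson (linear) correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical process variable (deviation from a nominal setting) and a
# hypothetical KPI with a purely illustrative U-shaped dependence on it.
x = [-3, -2, -1, 0, 1, 2, 3]
kpi = [v * v for v in x]

r_linear = pearson(x, kpi)                         # linear association only
r_transformed = pearson([v * v for v in x], kpi)   # after a nonlinear transform
print(f"Pearson r(x, kpi)   = {r_linear:.3f}")
print(f"Pearson r(x^2, kpi) = {r_transformed:.3f}")
```

The first coefficient is zero although the KPI depends entirely on x; the relationship only becomes visible once a nonlinear term is considered.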

In 2021, Pang et al. built a quality control system for cyber-physical systems (CPS) using a Back Propagation (BP) neural network (Pang et al., Citation2021). Based on their findings, they concluded that the intelligent data-driven system is both an essential approach and an effective instrument for product quality prediction and control in CPS.

In 2021, Vishnu et al. introduced a Digital Twin (DT) framework for the Computer Numerical Control (CNC) machining process (Vishnu et al., Citation2021). This framework makes it possible to simulate, predict, and optimise machining quality at both the process planning stage and the machining stage, and predictive models are constructed to forecast surface roughness at both stages. Turning this DT into a high-fidelity representation is difficult, since it requires precise prediction models and optimisation modules for machining quality. Another disadvantage is that, when adjusting machining quality while machining is in progress, the operator controls only a limited number of parameters, such as feed rate and spindle speed. The predictive models for estimating surface roughness at both stages require additional data to increase their accuracy, and an appropriate strategy must be chosen to obtain better predictions. Finally, the authors suggest that future studies investigate optimisation techniques appropriate for this DT.

In 2019, Wang et al. developed a method that uses generative neural networks to accurately predict work-in-progress (WIP) product quality (G. Wang et al., Citation2019). The technique is based on unsupervised learning and a time-delayed feed-forward neural network, because existing machine learning methods in this area suffer from manual feature extraction and noise. The generative neural network can address these problems despite the small amount of available data, since it bases its prediction of product status on encoded inputs. According to the experimental findings, acceptable predictive classification accuracy is attained, and the majority of WIP items that violate control limits during the production process are correctly captured. In addition, autoencoders used as unsupervised feature extractors provide an automated way of creating features from the original dataset without adding prior information or assumptions. The authors recommend that future work extend the system to process multiple product quality attributes simultaneously, rather than one particular feature at a time.
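A time-delayed feed-forward network does not receive a single measurement as input but a window of the most recent observations. The sketch below shows only this generic input construction; the function name, window length and data are hypothetical, and it does not reproduce the generative model or the autoencoder feature extraction of Wang et al.

```python
def time_delay_windows(series, delay):
    """Build (input, target) pairs for a time-delayed feed-forward network:
    each input holds the previous `delay` observations and the target is the
    next observation. Hypothetical helper for illustration."""
    if delay < 1 or delay >= len(series):
        raise ValueError("delay must be between 1 and len(series) - 1")
    inputs = [series[i - delay:i] for i in range(delay, len(series))]
    targets = [series[i] for i in range(delay, len(series))]
    return inputs, targets


# Hypothetical WIP quality measurements taken at successive process stages.
measurements = [0.50, 0.52, 0.49, 0.55, 0.58, 0.61]
X, y = time_delay_windows(measurements, delay=3)
for window, target in zip(X, y):
    print(window, "->", target)
```

Each row of `X` would be fed to the network's input layer, so the network can exploit the recent history of the process rather than a single snapshot.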

According to Su et al. (Su et al., Citation2018), neural networks can be employed efficiently to forecast irregularities in wafer dicing saw operations, achieving a chipping prediction accuracy of 75%. This enables real-time monitoring of production conditions and, consequently, early identification of possible issues. Early identification helps prevent product failure, which reduces material costs and wasted machining time and improves overall manufacturing efficiency.

In 2021, Bai et al. presented an AdaBoost-Long Short-Term Memory (LSTM) model with rough knowledge for predicting the quality of manufactured goods (Bai et al., Citation2021). AdaBoost is used to reinforce the model, the LSTM serves as the regression tool, and rough set (RS) theory is used to evaluate the importance of the multiple parameters. Drawing on information extraction and ensemble learning, the proposed model achieves the best performance on the reported metrics. As a result, the framework has the potential not only to make more efficient use of information, thereby making the model more responsive to its input variables, but also to improve prediction performance. Additionally, there is a statistically significant difference between the proposed model and the comparison models, which makes it a potential first choice for manufacturing quality prediction in practice. The researchers believe that further relevant data collection is required to test and enhance the performance of the proposed model.

Gyasi et al. suggested an artificial neural network (ANN) model based on Infrared Thermography (IRT), which demonstrated good weld monitoring and control performance (Gyasi et al., Citation2019). Although the findings are encouraging, there are a few problems and constraints to consider. If the measurement point is too close to the solidifying weld pool, the weld pool temperatures will exceed the highest recordable temperature, making the measurement unusable. Conversely, if the measurement point is too far from the weld pool, the temperature will drop below the minimum measurable value. In modelling ANN-based solutions for actual welding scenarios, one of the most difficult challenges is determining the input and output parameters of the ANN: the input parameters should not be directly causally linked to one another, whereas the output parameters should have some causal link with the input parameters.

Saadallah et al. conducted research on the problem of early quality classification by utilising a convolutional neural network (CNN) on time series sensor data from an automotive real-world case study (Saadallah et al., Citation2022). The approach not only improved the accuracy of classification, but it also achieved early quality prediction in a real-world use case involving the automotive industry.

Krauß et al. investigated the scope of application of AutoML in production as well as its limitations (Krauß et al., Citation2020). AutoML is a strategy that aims to eliminate the need for human effort in machine learning projects by automating the procedures these projects require. They argue that AutoML performs poorly with unsupervised and reinforcement learning and has difficulty handling complicated data types, such as diverse high-dimensional data. Further studies about quality prediction in the manufacturing processes of Industry 4.0 can be found in Table 1.

As can be deduced from the information presented above, machine learning is used extensively for quality assessment in the production and assembly lines of Industry 4.0. Previous literature reviews in this area lead to the same conclusion.

Kang et al. conducted a comprehensive literature review, which examines the application of machine learning for production lines as well as the state of the art in this field (Kang et al., Citation2020). Several issues that occurred on manufacturing lines were successfully resolved with the help of machine learning.

In recent years, quality control and fault detection have emerged as two primary areas of research, and machine learning techniques have proven effective in both. Because of the high complexity of the processes and the large amount of data produced by certain types of production lines, machine learning is most commonly used in metal and semiconductor manufacturing. Access to data and the quality of that data are the two most important factors determining how well machine learning works. In most cases, supervised learning outperforms semi-supervised and unsupervised learning strategies. To increase the performance of machine learning models, it helps to have more data for each feature and balanced data.
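One simple way to obtain the balanced data mentioned above is random oversampling of the minority class, sketched below in plain Python. The inspection data and labels are hypothetical, and oversampling is only one of several balancing strategies (undersampling and synthetic sample generation are common alternatives).

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Balance classes by duplicating randomly chosen samples of each smaller
    class until every class has as many samples as the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, n in counts.items():
        pool = [x for x, lbl in zip(samples, labels) if lbl == cls]
        for _ in range(target - n):
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y


# Hypothetical inspection data: 8 conforming parts ("ok") and 2 defective ones.
X = [[0.10], [0.20], [0.15], [0.12], [0.18], [0.11], [0.17], [0.14], [0.90], [0.85]]
y = ["ok"] * 8 + ["defect"] * 2
Xb, yb = random_oversample(X, y)
print(Counter(yb))
```

Oversampling should be applied only to the training split; duplicating samples before splitting would leak copies of the same defective parts into the evaluation data.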

Another literature study, by Bertolini et al., confirms that the even distribution of ML applications across many different industrial fields demonstrates the flexibility of ML approaches and their excellent potential for operations management tasks (Bertolini et al., Citation2021). In addition, the applications of machine learning in Industry 4.0 and additive manufacturing (AM) appear promising, and the preliminary findings are encouraging.

Caiazzo et al. investigated the most recent developments in ZDM-related methodology (Caiazzo et al., Citation2022) and showed that machine learning is used extensively for prediction, in accordance with the literature reviews mentioned above. According to Liu et al. (Citation2022), enabling AI in the product design stage offers a potential answer to the problem of accounting for a wide range of design variables and the complex interactions between them in order to achieve the desired level of production performance. In addition, incorporating AI into the AM production and assessment stages enables not only the optimisation of the fabrication process for tailored, human-centred products but also a complete and effective evaluation of product quality performance.

In addition, Dogan and Birant (Dogan & Birant, Citation2021) argue that implementing ML in manufacturing can serve as a foundation for models that estimate the future behaviour of the system, which can yield approximately accurate forecasts of the possible future actions and reactions of the manufacturing system. The ability to make accurate predictions from the available data can vary widely depending on the properties of the machine learning algorithm. Nevertheless, the general capacity of ML algorithms to produce accurate predictions has been demonstrated and validated in the manufacturing industry.

There are several benefits that Machine Learning brings to the quality prediction of Industry 4.0 manufacturing and assembly processes; however, there are also a number of downsides and limitations that need to be taken into consideration.

In (Kang et al., Citation2020), it is stated that good-quality data is not always available, which can negatively affect Machine Learning algorithms. One way to tackle this is by using data pre-processing techniques.

In (Bertolini et al., Citation2021), it is concluded that most of the issues relate either to producing useful benchmark datasets or to the limited interpretability of the results obtained. However, both issues may already be satisfactorily resolved by newly developing techniques. The only real remaining challenge may be to provide practitioners with an appropriate key for interpreting and selecting relevant ML approaches, so that they do not get lost in the overwhelming number of scientific works published in the field.

The need to select a suitable machine learning method to achieve the desired level of fault detection was also highlighted by Fu et al. (Citation2022), whose literature study concentrates on machine learning techniques for defect detection in metal laser-based additive manufacturing.

In (Caiazzo et al., Citation2022), it is highlighted that many areas around ZDM need improvement. Of these, the topics that particularly interest us are the Extension of the Detection strategy to different industrial processes and Data Collection Management. Regarding the former, the Detection approach has been analysed thoroughly, but the analysis has concentrated only on specific industrial procedures. Regarding Data Collection Management, there is no unified procedure for collecting, managing, or elaborating data within a coherent framework. This deficiency frequently becomes a barrier to putting ZDM techniques into practice in actual manufacturing environments.

According to (Liu et al., Citation2022), the remaining problems and future research opportunities are: (1) developing sophisticated AM-oriented AI approaches to manage data with high heterogeneity and high dimensionality, but limited availability in highly customised cases; (2) making full use of physics knowledge in AI methods for customised AM applications while ensuring process security and data privacy; and (3) incorporating people into the design, manufacturing, and quantification processes.

The following are the key challenges that machine learning encounters in manufacturing, as noted by the vast majority of researchers and stated in the findings of the research carried out by (Dogan & Birant, Citation2021) (also supported by (Wuest et al., Citation2016)).

The major benefit of machine learning, and what sets it apart from other approaches, is that it can automatically learn from varied environments and adapt as conditions change. Because the industrial environment is dynamic and ever-changing, the ML system needs to be able to learn and adapt to new conditions, and the system designer needs to be able to respond to any situation that may arise.

Acquiring data that are both accurate and relevant is another significant challenge, because these data strongly influence the performance of machine learning algorithms.

One of the most common issues in applying machine learning in the manufacturing industry is data pre-processing, because it carries substantial weight in determining the outcomes. This is also evident from , which shows that all of the mentioned studies used some form of data pre-processing.
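As a minimal sketch of what such pre-processing can involve, the function below imputes missing sensor readings with the median of the observed values and then standardises the channel to zero mean and unit variance. The channel and its values are hypothetical; real pipelines typically also handle outliers, sensor drift and time alignment, which are omitted here.

```python
from statistics import mean, median, pstdev


def preprocess(column):
    """Minimal pre-processing of one sensor channel (hypothetical helper):
    1) impute missing readings (None) with the median of the observed values,
    2) standardise to zero mean and unit variance (z-scores).
    Assumes at least one reading was observed."""
    observed = [v for v in column if v is not None]
    fill = median(observed)
    imputed = [fill if v is None else v for v in column]
    mu, sigma = mean(imputed), pstdev(imputed)
    if sigma == 0:  # constant channel: nothing to scale
        return [0.0 for _ in imputed]
    return [(v - mu) / sigma for v in imputed]


# Hypothetical temperature channel with two dropped readings.
raw = [71.0, None, 73.0, 72.0, None, 74.0]
z = preprocess(raw)
print(z)
```

Median imputation is used here because it is less sensitive to outlying readings than the mean; the choice of imputation and scaling method is itself one of the pre-processing decisions that influences the final results.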

Another key challenge is deciding which machine learning (ML) strategy and which algorithm to use.

The interpretation of the data is the last important challenge to overcome (also supported by (Wuest et al., Citation2016)).

Table 1. Further studies about quality prediction in the manufacturing and assembly processes of Industry 4.0.

5. Discussion of the results

It can be seen that the approaches currently used for quality assessment in Industry 4.0 manufacturing lines have a number of limitations that need to be addressed. The aforementioned review papers serve as the main papers for this research because they comprehensively outline the drawbacks of the manufacturing aspect each of them focuses on. As these papers have studied many works in their respective manufacturing areas, the drawbacks they identify result from broader research rather than from a single case. Even though they concentrate on various aspects of manufacturing, common drawbacks can be identified among all of them. Based on how frequently each disadvantage was reported in these review papers, combined with the drawbacks identified in the other studied papers and the authors’ own research, these drawbacks are summarised below, from the most to the least important.

(1) It is not always possible to obtain high-quality, highly accurate data. Most of the time, successful pre-processing is required, since it has a substantial influence on the final output.

As concluded from the research discussed above, data quality plays a vital role in the performance of machine learning algorithms. Although installing sensors in industry has allowed large amounts of data to be acquired, data quality needs to be addressed in more detail in order to make good use of these data.

(2) The performance of the aforementioned algorithms is adversely affected by high-dimensional data that is also extremely heterogeneous yet of limited availability in highly customised use cases.

Although the amount of available data in Industry 4.0 may be large, high dimensionality, expressed as an inadequate ratio between gathered samples and measured variables, can negatively affect the performance of quality prediction models. For that reason, a more systematic approach to data acquisition systems needs to be established. For instance, strategic placement of sensors (e.g. after simulating the process) could lead to a more robust and efficient data acquisition framework, as only important information would be measured and the dimensionality of the datasets would remain low. This would boost the predictive accuracy of quality prediction methods and, at the same time, reduce implementation and production costs (e.g. the installation cost of redundant sensors or the cost of maintaining servers to store unnecessary measurements).
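The text proposes simulation-guided sensor placement. A simpler, data-driven heuristic that pursues the same goal of low dimensionality is to drop channels that are almost perfectly correlated with a channel already kept, which raises the ratio of samples to variables. The sketch below implements that greedy correlation filter; the channel names, readings and the 0.95 threshold are hypothetical, and it is a different technique from the one the text describes.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def prune_redundant(channels, threshold=0.95):
    """Greedily keep a channel only if its absolute correlation with every
    already-kept channel is below `threshold` (hypothetical default)."""
    kept = []
    for name, values in channels.items():
        if all(abs(pearson(values, channels[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical sensor channels recorded over the same five samples.
channels = {
    "temp_1": [20.0, 21.0, 22.0, 23.0, 24.0],
    "temp_2": [20.1, 21.1, 22.0, 23.1, 24.0],  # near-duplicate of temp_1
    "vibration": [0.3, 0.1, 0.4, 0.2, 0.5],
}
n_samples = 5
kept = prune_redundant(channels)
print("samples per variable before:", n_samples / len(channels))
print("kept:", kept, "samples per variable after:", n_samples / len(kept))
```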

(3) One of the most critical challenges continues to be deciding which strategy and algorithm to use.

In the course of this study, it became apparent that there is no universal approach to quality prediction in Industry 4.0 manufacturing lines. Although ML and AI are the most popular ‘families’ of algorithms for quality prediction, researchers still rely on trial and error to find the best solution for each specific case, because modern manufacturing lines are highly customised and complex. This is a problem that needs to be addressed: in industry, where time and cost are of the essence, universal frameworks, each addressing a specific group of cases, would speed up the implementation of quality prediction mechanisms and reduce the cost and time spent finding the optimal solution.
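The trial and error described above is commonly organised as a cross-validated comparison of candidate models. The sketch below compares two deliberately simple hypothetical candidates (a constant mean predictor and an ordinary least-squares line) by their average held-out mean squared error over k interleaved folds; in practice the candidates would be the ML models under consideration, and the data here are synthetic.

```python
def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Second candidate: ordinary least-squares line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def kfold_mse(fit, xs, ys, k=5):
    """Average held-out mean squared error over k interleaved folds."""
    errors = []
    for i in range(k):
        train = [(x, y) for j, (x, y) in enumerate(zip(xs, ys)) if j % k != i]
        held_out = [(x, y) for j, (x, y) in enumerate(zip(xs, ys)) if j % k == i]
        model = fit([x for x, _ in train], [y for _, y in train])
        errors.append(sum((model(x) - y) ** 2 for x, y in held_out) / len(held_out))
    return sum(errors) / k


# Synthetic data: a quality measure that grows linearly with a process setting,
# plus a small alternating disturbance.
xs = list(range(20))
ys = [2.0 + 0.5 * x + (0.1 if x % 2 == 0 else -0.1) for x in xs]

candidates = {"mean": fit_mean, "linear": fit_line}
scores = {name: kfold_mse(fit, xs, ys) for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(scores, "->", best)
```

Because every candidate is scored on data it was not trained on, the comparison penalises models that merely memorise the training samples; a universal framework of the kind discussed above would narrow down which candidates are worth including in such a comparison.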

(4) There is no guarantee that the results can be interpreted in any given situation.

Due to the complexity of the state-of-the-art algorithms introduced in recent years, interpreting the results might not be feasible for manufacturing experts who are not familiar with the underlying mathematics and methods of quality prediction algorithms. This makes it harder to implement such models in manufacturing lines, since industries rarely invest in solutions whose outputs cannot be fully interpreted at any given time.
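One widely used, model-agnostic way of making a black-box quality predictor more interpretable is permutation feature importance: shuffle one input variable at a time and measure how much the prediction error increases. The sketch below is a minimal implementation; the model is a hypothetical hand-written function standing in for a trained black box, and the data are randomly generated, so neither reflects a method from the reviewed studies.

```python
import random

def mse(model, rows, targets):
    """Mean squared error of the model over a dataset."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(model, rows, targets, n_repeats=10, seed=0):
    """Mean increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increase = 0.0
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increase += mse(model, permuted, targets) - baseline
        importances.append(increase / n_repeats)
    return importances

# Hypothetical black-box model: quality depends strongly on feature 0,
# weakly on feature 1, and not at all on feature 2.
def black_box(row):
    return 3.0 * row[0] + 0.5 * row[1]

data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random(), data_rng.random()] for _ in range(200)]
targets = [black_box(r) for r in rows]
print(permutation_importance(black_box, rows, targets))
```

The resulting scores rank the variables by how much the predictor relies on them, which gives manufacturing experts a starting point for interpretation without requiring them to inspect the model internals.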

(5) Quality prediction algorithms need to learn and adapt to new conditions more effectively.

Modern manufacturing systems are highly reconfigurable and their states change frequently. In most cases, the quality prediction models implemented in these systems cannot accurately capture such changes and adapt to them. This is closely related to the lack of universal frameworks discussed in (3), as both problems stem from the complexity and customisation of manufacturing lines. Consequently, a lack of adaptability translates into lost efficiency and higher production costs (e.g. readjusted solutions must be implemented at each system change).
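A common building block for this kind of adaptability is a drift detector that monitors a model's prediction error and signals when the process has changed, so that the model can be retrained. The sketch below implements the Page-Hinkley test for an upward shift in the mean of a stream. The parameter values (`delta`, `threshold`) and the simulated error stream are assumptions chosen for illustration and would need tuning for each line.

```python
class PageHinkley:
    """Page-Hinkley test for detecting an increase in the mean of a data stream."""

    def __init__(self, delta=0.005, threshold=1.0):
        self.delta = delta          # magnitude of change that is tolerated
        self.threshold = threshold  # alarm threshold (often written as lambda)
        self.reset()

    def reset(self):
        """Clear the statistics, e.g. after the model has been retrained."""
        self.n = 0
        self.mean = 0.0
        self.cumulative = 0.0  # m_t = sum of (x_i - running mean - delta)
        self.minimum = 0.0     # M_t = minimum of m_0..m_t

    def update(self, x):
        """Add one observation; return True if a change in the mean is detected."""
        self.n += 1
        self.mean += (x - self.mean) / self.n  # incremental running mean
        self.cumulative += x - self.mean - self.delta
        self.minimum = min(self.minimum, self.cumulative)
        return self.cumulative - self.minimum > self.threshold

# Hypothetical stream of absolute prediction errors; the process changes at sample 50.
errors = [0.1] * 50 + [0.6] * 50
detector = PageHinkley(delta=0.005, threshold=1.0)
alarm_at = next((i for i, e in enumerate(errors) if detector.update(e)), None)
print(alarm_at)  # index of the first sample at which drift is flagged
```

After an alarm, a typical response is to retrain or recalibrate the quality model on recent data and call `reset()`; how much recent data to use is itself a design decision for each line.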

(6) Complete customisation must also ensure that data privacy and the security of processes are maintained.

With the introduction of computer systems and the interconnectivity of Industry 4.0, data pipelines are responsible for transmitting information from the shopfloor to the technology providers and vice versa. This information is usually confidential, so security measures must be in place to ensure that data privacy and the security of processes are maintained. In particular, middleware components need to be kept up to date with the latest advances in cyber security in order to safeguard the confidentiality of the data and the manufacturing processes.
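As one concrete example of such a measure, the sketch below attaches an HMAC-SHA256 tag to each shopfloor message using Python's standard `hmac` and `hashlib` modules, so that the receiving side can detect tampering. An HMAC protects integrity and authenticity only; confidentiality additionally requires encryption (for example, transporting the messages over TLS). The shared key, message fields and function names are hypothetical, and key management is out of scope.

```python
import hashlib
import hmac
import json

SHARED_KEY = b"replace-with-a-securely-provisioned-key"  # hypothetical key

def sign_message(payload: dict, key: bytes = SHARED_KEY) -> dict:
    """Serialise the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}

def verify_message(message: dict, key: bytes = SHARED_KEY) -> dict:
    """Return the decoded payload if the tag is valid, otherwise raise ValueError."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("message integrity check failed")
    return json.loads(message["body"])

msg = sign_message({"machine": "press-07", "temperature": 212.4})
print(verify_message(msg))
```

Serialising with sorted keys ensures that sender and receiver compute the tag over identical bytes, regardless of the order in which fields were added to the payload.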

6. Conclusions, managerial implications, and proposals for future work of the present research

6.1. Conclusions

In summary, quality models have gone through several stages of evolution to reach their current state. From the inspection of the finished product, to statistical modelling, and finally to the application of Machine Learning and AI for quality prediction, quality models have continually improved by exploiting the new capabilities of each manufacturing era. Their focus shifted from the inspection of Product Quality, to the monitoring and optimisation of Process Quality, to Quality Management, and then to Design Quality, before finally turning to Information Quality. With the introduction of Industry 4.0, which made sensor technology available to the vast majority of manufacturers, the resulting volume of data enabled the use of Machine Learning and AI to achieve greater accuracy in quality prediction.

Despite the immense success that Artificial Intelligence has had in industry, it still has some limitations. These include the negative impact of a lack of quality data on prediction models, the fact that interpretation of the solutions is not always guaranteed, the difficulty of adapting prediction frameworks to changes in the system state, and the difficulty of transferring a solution to similar cases due to the high level of customisation. If these limitations are overcome, however, we see no reason why AI could not reach unprecedented levels of accuracy, even in highly complex and customised manufacturing processes. The authors believe that AI will overcome these limitations, as the advances of the last two decades indicate an exponential improvement in its capabilities.

6.2. Managerial implications

The current research can act as a guidance tool for both experts in the area of manufacturing and production managers in industries to weigh the advantages and drawbacks of widely used quality prediction models. As automation and the implementation of predictive tools are on the rise, the current work can act as a ‘map’ that gathers the information required to assess to what extent these methods can be implemented in new or existing manufacturing lines.

More specifically, for new manufacturing lines, the current manuscript can help in the strategic setup of the plant/factory so as to avoid the drawbacks mentioned in Section 5 (e.g. through the strategic installation of sensors, an efficient data acquisition system that captures in depth the information produced by the manufacturing line, and proper security measures to ensure the confidentiality of processes and data).

For existing manufacturing lines, the present study can help identify the causes of possible bottlenecks in already implemented quality prediction frameworks or, as for new lines, provide guidance for the strategic implementation of quality prediction models.

6.3. Future work

The present research focused on presenting the advantages and limitations of quality prediction models used in Industry 4.0 manufacturing lines. Although the Total Quality Management approach focuses on increasing the efficiency and adaptability of business operations as a whole (Toke & Kalpande, 2020), the current study focused on the technological aspects of manufacturing. Further studies could examine the causal links between the implementation of quality prediction models and gains in delivery reliability and financial growth. Future studies could also examine how Industry 4.0 elements could address the challenges that industries face in achieving statutory and regulatory compliance regarding quality.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

References

- Acatech. (2015). Acatech: Umsetzungsstrategie Industrie 4.0 Ergebnisbericht der Plattform Industrie 4.0. www.vdma.org.

- Aiman, M., Bahrin, K., Othman, F., Hayati, N., Azli, N., & Talib, F. (2016). Industry 4.0: A review on industrial automation and robotic (Vol. 78). www.jurnalteknologi.utm.my.

- American Society for Quality. (n.d.). What is statistical process control?

- Ansari, F., Khobreh, M., Seidenberg, U., & Sihn, W. (2018). A problem-solving ontology for human-centered cyber physical production systems. CIRP Journal of Manufacturing Science and Technology, 22, 91–106. https://doi.org/10.1016/j.cirpj.2018.06.002

- ASQ. (n.d.). Quality glossary. Retrieved November 16, 2022, from https://asq.org/quality-resources/quality-glossary/q

- Ayvaz, S., & Alpay, K. (2021). Predictive maintenance system for production lines in manufacturing: A machine learning approach using IoT data in real-time. Expert Systems with Applications, 173, 114598. https://doi.org/10.1016/j.eswa.2021.114598

- Bagheri, B., Yang, S., Kao, H. A., & Lee, J. (2015). Cyber-physical systems architecture for self-aware machines in industry 4.0 environment. IFAC-PapersOnLine, 48(3), 1622–1627. https://doi.org/10.1016/j.ifacol.2015.06.318

- Baheti, R., Gill, H., Lee, J., Bagheri, B., & Kao, H. A. (2015). Cyber-physical Systems. Manufacturing Letters, 3(October 2017).

- Bai, Y., Li, C., Sun, Z., & Chen, H. (2017). Deep neural network for manufacturing quality prediction. 2017 Prognostics and System Health Management Conference, PHM-Harbin 2017 - Proceedings. https://doi.org/10.1109/PHM.2017.8079165

- Bai, Y., Xie, J., Wang, D., Zhang, W., & Li, C. (2021). A manufacturing quality prediction model based on AdaBoost-LSTM with rough knowledge. Computers & Industrial Engineering, 155, 107227. https://doi.org/10.1016/j.cie.2021.107227

- Bak, C., Roy, A. G., & Son, H. (2021). Quality prediction for aluminum diecasting process based on shallow neural network and data feature selection technique. CIRP Journal of Manufacturing Science and Technology, 33, 327–338. https://doi.org/10.1016/j.cirpj.2021.04.001

- Behnke, M., Guo, S., & Guo, W. (2021). Comparison of early stopping neural network and random forest for in-situ quality prediction in laser based additive manufacturing. Procedia Manufacturing, 53, 656–663. https://doi.org/10.1016/j.promfg.2021.06.065

- Bensalem, S., Gallien, M., Ingrand, F., Kahloul, I., & Thanh-Hung, N. (2009). Designing autonomous robots: Toward a more dependable software architecture. IEEE Robotics & Automation Magazine, 16. https://doi.org/10.1109/MRA.2008.931631

- Bertolini, M., Mezzogori, D., Neroni, M., & Zammori, F. (2021). Machine Learning for industrial applications: A comprehensive literature review. Expert Systems with Applications, 175, 114820. https://doi.org/10.1016/j.eswa.2021.114820

- Burggräf, P., Wagner, J., Heinbach, B., Steinberg, F., Pérez, M. A. R., Schmallenbach, L., Garcke, J., Steffes-Lai, D., & Wolter, M. (2021). Predictive analytics in quality assurance for assembly processes: Lessons learned from a case study at an industry 4.0 demonstration cell. Procedia CIRP, 104, 641–646. https://doi.org/10.1016/j.procir.2021.11.108

- Bustillo, A., Urbikain, G., Perez, J. M., Pereira, O. M., & Lopez de Lacalle, L. N. (2018). Smart optimization of a friction-drilling process based on boosting ensembles. Journal of Manufacturing Systems, 48, 108–121. https://doi.org/10.1016/j.jmsy.2018.06.004

- Caiazzo, B., di Nardo, M., Murino, T., Petrillo, A., Piccirillo, G., & Santini, S. (2022). Towards Zero Defect Manufacturing paradigm: A review of the state-of-the-art methods and open challenges. Computers in Industry, 134, 103548. https://doi.org/10.1016/j.compind.2021.103548

- Chhor, J., Gerdhenrichs, S., & Schmitt, R. H. (2021). Predictive quality for hypoid gear in drive assembly. Procedia CIRP, 104, 702–707. https://doi.org/10.1016/j.procir.2021.11.118

- Colosimo, B. M., del Castillo, E., Jones-Farmer, L. A., & Paynabar, K. (2021). Artificial intelligence and statistics for quality technology: An introduction to the special issue. Journal of Quality Technology, 53(5), 443–453. https://doi.org/10.1080/00224065.2021.1987806

- O’Connor, P. D. T. (1986). Quality, productivity and competitive position, W. Edwards Deming, Massachusetts Institute of Technology, Center for Advanced Engineering Study, 1982. No. of pages: 373. Quality and Reliability Engineering International, 2(4). https://doi.org/10.1002/qre.4680020421

- Davis, S. (1997). Future perfect: Tenth Anniversary Edition.

- Dengler, S., Lahriri, S., Trunzer, E., & Vogel-Heuser, B. (2021). Applied machine learning for a zero defect tolerance system in the automated assembly of pharmaceutical devices. Decision Support Systems, 146. https://doi.org/10.1016/j.dss.2021.113540

- Dogan, A., & Birant, D. (2021). Machine learning and data mining in manufacturing. Expert Systems with Applications, 166, 114060. https://doi.org/10.1016/j.eswa.2020.114060

- Erol, S., Jäger, A., Hold, P., Ott, K., & Sihn, W. (2016). Tangible industry 4.0: A scenario-based approach to learning for the future of production. Procedia CIRP, 54, 13–18. https://doi.org/10.1016/j.procir.2016.03.162

- Fu, Y., Downey, A. R. J., Yuan, L., Zhang, T., Pratt, A., & Balogun, Y. (2022). Machine learning algorithms for defect detection in metal laser-based additive manufacturing: A review. Journal of Manufacturing Processes, 75, 693–710. https://doi.org/10.1016/j.jmapro.2021.12.061

- Gejji, A., Shukla, S., Pimparkar, S., Pattharwala, T., & Bewoor, A. (2020). Using a support vector machine for building a quality prediction model for center-less honing process. Procedia Manufacturing, 46, 600–607. https://doi.org/10.1016/j.promfg.2020.03.086

- Godfrey, A. B. (1986). Report: The history and evolution of quality in AT&T. AT&T Technical Journal, 65(2), 9–20. https://doi.org/10.1002/j.1538-7305.1986.tb00289.x

- Godfrey, A. B., & Kenett, R. S. (2007). Joseph M. Juran, a perspective on past contributions and future impact. Quality and Reliability Engineering International, 23(6), 653–663. https://doi.org/10.1002/qre.861

- Gubbi, J., Buyya, R., Marusic, S., & Palaniswami, M. (2013). Internet of Things (IoT): A vision, architectural elements, and future directions. Future Generation Computer Systems, 29(7), 1645–1660. https://doi.org/10.1016/j.future.2013.01.010

- Gyasi, E. A., Kah, P., Penttilä, S., Ratava, J., Handroos, H., & Sanbao, L. (2019). Digitalized automated welding systems for weld quality predictions and reliability. Procedia Manufacturing, 38, 133–141. https://doi.org/10.1016/j.promfg.2020.01.018

- Institute of Electrical and Electronics Engineers. (n.d.). Advanced process defect monitoring model and prediction improvement by artificial neural network in kitchen manufacturing industry: A case of study. In 2019 IEEE Workshop on Metrology for Industry 4.0 and Internet of Things: Proceedings, Naples, Italy, June 4-6, 2019.

- Jazdi, N. (2014). Cyber physical systems in the context of Industry 4.0. Proceedings of 2014 IEEE International Conference on Automation, Quality and Testing, Robotics, AQTR 2014. https://doi.org/10.1109/AQTR.2014.6857843

- Juran, J. M. (1986). The quality trilogy: A universal approach to managing for quality.

- Kagermann, H., Wahlster, W., Helbig, J., Hellinger, A., Stumpf, M. A. V., Treugut, L., Blasco, J., Galloway, H., & Findeklee, U. (2013). Recommendations for implementing the strategic initiative INDUSTRIE 4.0.

- Kalpande, S. D., & Toke, L. K. (2021). Assessment of green supply chain management practices, performance, pressure and barriers amongst Indian manufacturer to achieve sustainable development. International Journal of Productivity and Performance Management, 70(8), 2237–2257. https://doi.org/10.1108/IJPPM-02-2020-0045