ABSTRACT

Theory on participatory and collaborative governance maintains that learning is essential to achieve good environmental outcomes. Empirical research has mostly produced individual case studies, and reliable evidence on both antecedents and environmental outcomes of learning remains sparse. Given conceptual ambiguities in the literature, we define governance-related learning in a threefold way: learning as deliberation; as knowledge- and capacity-building; and as informing environmental outputs. We develop nine propositions that explain learning through factors characterizing governance process and context, and three propositions explaining environmental outcomes of learning. We test these propositions drawing on the ‘SCAPE’ database of 307 published case studies of environmental decision-making, using multiple regression models. Results show that learning in all three modes is explained to some extent by a combination of process- and context-related factors. Most factors matter for learning, but with stark differences across the three modes of learning, thus demonstrating the relevance of this differentiated approach. Learning modes build on one another: Deliberation is seen to explain both capacity building and informed outputs, while informed outputs are also explained by capacity building. Contrary to our expectations, none of the learning variables was found to significantly affect environmental outcomes when considered alongside the process- and context-related variables.

1. Introduction

Theory on participatory and collaborative governance maintains that learning plays an essential part in achieving good environmental governance outcomes (Armitage, 2008; Leach, Weible, Vince, Siddiki, & Calanni, 2013). It is assumed that both the design of governance processes, and the way processes are actually conducted, impact whether and how such learning occurs (Armitage et al., 2018; Challies, Newig, Kochskämper, & Jager, 2017; Gerlak, Heikkila, Smolinski, Huitema, & Armitage, 2018; Heikkila & Gerlak, 2013; Leach et al., 2013; Newig, Challies, Jager, Kochskämper, & Adzersen, 2018; Rodela & Stagl, 2011). With this contribution we test these claims, analyzing how and under what conditions learning occurs in participatory governance, and how it may contribute to the environmental quality of decision-making outcomes.

Learning in environmental governance has been studied predominantly through individual case studies (Gerlak et al., 2018). While there is merit in rich, qualitative case accounts, relying solely on single or small-N studies makes comparability across cases difficult. Despite conceptual advances in the field, cumulation of empirical evidence has been limited (Gerlak et al., 2018). Hence, robust empirical evidence on which conditions enable or facilitate learning, and on whether and how learning actually improves environmental outcomes, is still lacking. This contribution draws on a database of 307 coded case studies on public environmental governance – the ‘SCAPE’ database (Newig, Adzersen, Challies, Fritsch, & Jager, 2013).¹ Data were derived from a meta-analysis of published case studies with varying degrees of public and stakeholder participation from 22 developed Western democracies. Qualitative case study data were transformed into numeric data through a coding process utilizing a comprehensive, theoretically-informed coding scheme (case survey method). The method thus combines the richness of case study research with the rigor of a quantitative, large-N comparative analysis (Larsson, 1993).

We study learning in three different respects (described in detail in the next section): deliberative learning in collaborative or participatory environmental decision-making processes; learning and capacity building on the part of the participating actors; and knowledge gains and innovation incorporated into the resulting decision. All three aspects of learning are expected to lead to more environmentally oriented decisions, and to foster the acceptance of decisions by stakeholders, and hence implementation and compliance.

The remainder of this article is organized as follows. In the subsequent section, we elaborate on the conceptual foundations of learning in participatory governance settings and derive a set of hypotheses on both the contextual and process-related conditions under which learning likely occurs, and the environmental governance effects of learning. We then describe the case survey method used and briefly characterize the resulting dataset. Drawing on the case survey data, we use a step-wise multiple regression approach in order to (1) identify the causal factors, including different dimensions of participatory governance, that impact different dimensions of learning, and (2) test to what extent the different dimensions of learning impact the environmental standard of outputs, controlling for the influence of the other causal factors involved. After presenting and discussing the results of our analysis, we conclude by identifying broader implications for research and practice on learning in environmental governance.

2. Concepts and theory

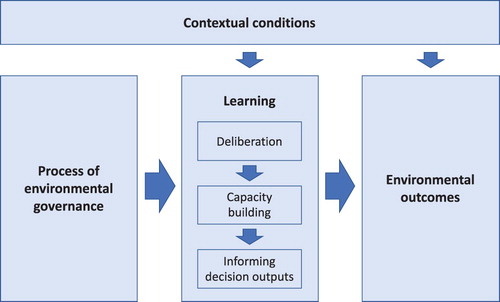

Our conceptualization of the role of learning in environmental governance is outlined in Figure 1. We are interested in the process features and contextual conditions that enable learning in (participatory) environmental governance, and how learning, among other factors, shapes and contributes to environmental governance outcomes.

Figure 1. Conceptual model to assess how features of a (participatory) governance process and contextual conditions impact different kinds of learning, and how learning impacts environmental outcomes.

2.1. Conceptualizing learning

As shown in the recent overview by Gerlak et al. (2018), concepts of learning differ hugely within the academic literature on learning in environmental policy and governance. Some authors focus on learning as mutual exchange and deliberation (Daniels & Walker, 1996; Newig, Günther, & Pahl-Wostl, 2010). Others focus on good information as the basis for decision-making:

In the context of public administration, learning can be understood as the process by which people develop a more comprehensive and accurate understanding of the science, technology, law, economics, and politics that underlie the decisions they make or the recommendations they advance. (Leach et al., 2013, p. 2)

Acknowledging these different perspectives on learning – or rather, these different concepts, which are all subsumed under the learning label – we consider three distinct and complementary modes of learning, which are derived from the literature. As knowledge plays a key role in the context of learning – as the substance of learning (Dunlop & Radaelli, 2018) – and has been regarded as the ‘currency’ of collaboration (Emerson & Nabatchi, 2015), we express the three perspectives on learning in terms of the different ways in which they relate to knowledge: learning as knowledge-exchange, as knowledge-building, and as knowledge-uptake (see Table 1). We assume that the three modes of learning are interrelated: that deliberation may benefit capacity building, and that both deliberation and capacity building may benefit informed decision outputs (see Figure 1).

Our first mode is learning in the sense of deliberation. It is very much about the process, and less about the outcomes of learning. Here, learning is conceptualized as a process of exchange among participants, often termed ‘social learning’: ‘Social learning is the process of framing issues, analyzing alternatives, and debating choices in the context of inclusive public deliberation’ (Daniels & Walker, 1996, p. 73). Public deliberation, in turn, has been defined as a means by which ‘opinions can be revised, premises altered, and common interests discovered’ (Reich, 1988, p. 44). Deliberation implies ‘equality among the participants, the need to justify and argue for all types of (truth) claims, and an orientation toward mutual understanding and learning’ (Renn, 2004, p. 292). Learning in the sense of deliberation can be conceived as knowledge-exchange (Emerson & Nabatchi, 2015, p. 62).

Second, we consider learning in the sense of knowledge and capacity building by individuals. In their analysis of participation processes, Webler and Tuler (2002) distinguish between process, outcomes related to the policy objectives, and outcomes related to capacity building. Here, we focus on capacity building, which includes aspects such as civic competence, knowledge levels, self-confidence, and ability to cooperate (Baird, Plummer, Haug, & Huitema, 2014; Webler & Tuler, 2002). In defining variables for their case survey of participatory processes across the United States, Beierle and Cayford (2002, p. 13) study capacity building, and understand capacity as participants’ ‘ability to understand environmental problems, get involved in decision-making, and act collectively to implement change’ (Beierle & Cayford, 2002, pp. 45–46). While we assume capacity building to profit from deliberative learning, participants’ capacity building in one decision-making process may also enable them to better deliberate in subsequent processes. Learning in this sense may be expressed as knowledge-building.

Finally, we consider learning in the sense of an informed output, acknowledging the role of information, knowledge and innovation. This concept of learning builds on the argument that one of the key functions of participatory processes is to harness (lay, local) knowledge of relevance to decision-making that is not already available to the decision-makers in charge (usually the responsible government authorities) (Fischer, 2000; Smith, 2003; Wynne, 1992). Interaction and dialogue among diverse participants potentially produces innovative results through the exchange of different perspectives, information, and knowledge conducive to mutual learning (Fazey et al., 2013; Heikkila & Gerlak, 2013). We ask: Has new knowledge, information, or insight been made available for decision-making; have innovative solutions been found; and is any of this incorporated into the decision? We use decision and output synonymously, referring to the agreement, plan, contract, bill or other somewhat formalized product of decision-making. Learning in this sense refers to ‘outcomes related to the policy objectives’ (Webler & Tuler, 2002), and can be classified as learning as knowledge-uptake.

2.2. Factors assumed to foster learning

Below, we consider nine variables that figure prominently in the literature on learning in environmental governance. While many of the identified factors are hypothesized to impact all three kinds of learning outlined above, some are specifically linked to just one or two. We begin by discussing factors characterizing governance processes (hypotheses 1 to 6), and subsequently discuss contextual conditions (hypotheses 7 to 9).

2.2.1. Factors characterizing the governance process

In participatory environmental governance settings, learning is generally thought to be fostered through ‘intensive’ processes (Beierle & Cayford, 2002; Daniels & Walker, 1996). There appears to be some agreement in the literature on measuring the ‘intensity’ of participation according to three dimensions (Fung, 2006; Newig & Kvarda, 2012): communication and information exchange; delegation of power to participants; and breadth of participant involvement.

Opportunities for participants in an environmental governance process to communicate intensively are in many ways a prerequisite for learning (Heikkila & Gerlak, 2013). In particular, structured methods for (knowledge) exchange have been observed to foster learning among participants, because they serve to focus and channel communication and exchange on aspects deemed of particular importance, rather than allowing for very open and unstructured discussions. Structured methods include individual interviews, participatory modeling (Renn, 2006; Rowe & Frewer, 2005), transactive memory systems (Heikkila & Gerlak, 2013), and methods that translate between ‘lay’ and ‘expert’ types of knowledge (Edelenbos et al., 2011). Such methods, which also include professional facilitation or moderation, can also help achieve procedural fairness (Leach et al., 2013). In principle, these methods can be hypothesized to benefit all three kinds of learning: Deliberation should profit from structured exchange, as should capacity building; and in particular, the uptake of information in decision-making should be fostered by structured methods that help to identify information that is more important to incorporate. We therefore hypothesize that:

H 1: Intensive communication among those involved in an environmental governance process benefits learning.

H 2: Structured methods of facilitation and knowledge exchange in an environmental governance process benefit learning.

As a measure of ‘genuine’ participation, delegation of power to participants may have a more indirect influence on learning. Daniels and Walker (1996) argued that ‘when people are given opportunities to “do” – to participate in tasks, to speak from their experiences, to be ‘players’ – they are more likely to learn than when they passively observe’ (Daniels & Walker, 1996, p. 75). Therefore, we may assume that the more participants have the opportunity to shape decision outputs, the more likely they are to learn. On this basis we can expect positive effects of power delegation on deliberation and on capacity building, and of course by its very definition on the uptake of learning in decisions and outputs. Therefore, we hypothesize that:

H 3: Power delegation to participants in an environmental governance process benefits learning.

H 4: Broad non-state actor participation in an environmental governance process benefits learning.

H 5: A diverse set of stakeholders in an environmental governance process benefits learning.

Finally, the duration of a process has been identified as an important factor impacting learning. Several studies from the United States on collaborative partnerships have shown that it takes time for participants to learn and build capacity (Beierle & Cayford, 2002; Leach et al., 2013). It is therefore hypothesized that:

H 6: The duration of a participatory environmental governance process is positively associated with learning and capacity building among participants.

2.2.2. Factors characterizing contextual conditions

Trust towards other participants as well as governmental actors has repeatedly been identified as a factor for the ‘success’ of collaborative governance (Emerson & Nabatchi, 2015), and specifically as conducive to learning (Juerges, Weber, Leahy, & Newig, 2018; Leach et al., 2013). Trusting others arguably makes the uptake of information and the updating of beliefs more likely. Moreover, true deliberation is more likely to occur if participants trust each other’s intentions.

H 7: A trustful setting in an environmental governance process, where participants trust each other and governmental actors, impacts positively on deliberation and capacity building among participants.

H 8: An attitude of cooperativeness among participants in an environmental governance process is conducive to learning.

H 9: Conflictual settings in environmental governance processes are likely to impact learning.

2.3. Assumed relations between learning and environmental outcomes

Why should learning lead to improved environmental outcomes? Drawing on earlier work by the authors (Newig et al., 2018), we consider arguments linking the different forms of learning to the environmental standard of decision-making outputs. By ‘output’ we mean the decision made at the end of the decision-making process, which is typically set down in writing, in the form of a management plan, a permit, a law, etc.²

Deliberation is expected to lead to a common good orientation of the discourse, characterized by ‘preferences and justifications which are “public-spirited” in nature [because] preferences held on purely self-interested grounds become difficult to defend in a deliberative context’ (Smith, 2003, p. 63). Deliberation is expected to ‘transform initial policy preferences (which may be based on private interest […], prejudice and so on) into ethical judgements on the matter in hand’ (Miller, 1992, p. 62) and toward an output that secures benefits for all parties as well as the environment (Aldred & Jacobs, 2000).

H 10: Learning as deliberation benefits the environmental standard of the decision output.

H 11: Learning as capacity building benefits the environmental standard of the decision output.

H 12: Learning as informed decision outputs benefits the environmental standard of the decision output.

3. Data and methods

3.1. Data: case-survey meta-analysis

Our analysis utilizes the ‘SCAPE’ database (Newig et al., 2013) comprising 307 cases of public environmental decision-making, covering a range of more- and less-participatory processes from standard administrative decision-making to highly inclusive and collaborative processes. This database was compiled through a case survey meta-analysis (Larsson, 1993; Newig & Fritsch, 2009a) of published case studies. This type of meta-analysis involves conversion of the rich qualitative information contained in narrative case study accounts into quantitative data, and as such represents a numeric interpretation of the case study texts. This approach therefore suits our research aims particularly well, as it provides the means to synthesize emergent findings where empirical evidence is mainly contained in a large number of single or small-N comparative case studies.

We define a ‘case’ as a public environmental decision-making process aimed at reaching a collectively binding decision, which is to a lesser or greater extent participatory in the above outlined sense. In order to be able to test our specific hypotheses on the links between participation, learning, and the environment, we quantify for each case (1) the ‘degree’ of participation, (2) the extent of learning achieved through the process, and (3) the environmental standard of the output or decision, each in multiple dimensions and thus via a number of different variables.

In conducting the case survey, we proceeded in the following steps:

Case study identification and selection: Based on a thorough search of several scientific databases and library catalogues³ for studies published up until 2014 in English, German, French, or Spanish, we identified over 3300 texts, containing more than 2000 cases of environmental decision-making with varying degrees of participation. We limited our search to cases from Europe, North America, and Australia and New Zealand. Given the varied terminology with which participatory processes are described in the literature, we used a number of combinations of search terms in several iterations. Our search targeted peer-reviewed journal articles, books, edited volumes, theses, working papers, and various forms of grey literature, so long as these were publicly available. Having continued the search until saturation was reached and no new cases were being discovered, we assume that we have covered a nearly complete set of relevant, publicly available cases. The identified texts were screened for suitability, and those containing insufficient information for our purposes were eliminated, resulting in a database of 639 ‘codeable’ cases, from which we randomly sampled 307 cases for full coding.

Coding scheme development: Based on our conceptualization of participatory decision-making processes (described above), we developed an analytical coding scheme (Newig et al., 2013) to capture information on process attributes, outputs and outcomes, and environmental impacts, as well as relevant contextual factors. These components were broken down into 259 quantitative, and additional qualitative variables. Most variables were coded on a five-point quantitative scale (from 0 to 4).

Case coding: Each case was independently read and coded by three trained raters. In addition to the coding of actual variables, each rater assigned a confidence score to each measurement (4-point scale, 0 = insufficient information to code the variable; 3 = explicit, detailed and reliable information). After initial coding, raters met to discuss and address coding mistakes and explore divergent interpretations; however, to be able to accommodate different interpretations of the texts, raters were not required to seek agreement on codings (Kumar, Stern, & Anderson, 1993). Despite this deliberate assimilation of divergent codings, interrater reliability, measured through G(q,k) (Putka, Le, McCloy, & Diaz, 2008) was 0.77, and interrater agreement (rWG; James, Demaree, & Wolf, 1984) was 0.73, indicating high validity of data overall. Finally, the three rater scores were averaged, weighted with the respective confidence scores, as suggested by van Bruggen, Lilien, and Kacker (2002).
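
The aggregation and agreement measures named in the coding step can be illustrated with a minimal sketch. It assumes that confidence scores enter as simple linear weights and that rWG is computed in its single-item form against a uniform null distribution; the text does not spell out either detail, and the function names and example codes are hypothetical.

```python
from statistics import variance

def confidence_weighted_mean(codes, confidences):
    """Average rater codes, weighting each by its confidence score (0-3)."""
    total = sum(confidences)
    if total == 0:
        return None  # no rater had enough information to code the variable
    return sum(c * w for c, w in zip(codes, confidences)) / total

def r_wg(codes, scale_points=5):
    """Single-item r_WG: 1 minus observed variance over uniform-null variance."""
    null_variance = (scale_points ** 2 - 1) / 12  # discrete uniform distribution
    return 1 - variance(codes) / null_variance

# Hypothetical codes from three raters for one variable (0-4 scale)
codes = [2, 3, 3]
confidences = [1, 3, 2]
print(confidence_weighted_mean(codes, confidences))
print(r_wg(codes))
```

A code backed by a confidence of 0 drops out of the average entirely, which is one plausible reading of "insufficient information to code the variable".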

3.2. Specification of variables

3.2.1. Learning variables

Following the multi-dimensional conceptualization of learning outlined above, we distinguish between learning as deliberation, capacity building, and informed outputs.

To assess deliberation, we measured the

degree to which deliberation in the sense of a ‘rational’ discourse among participants took place. The notion of deliberation refers to a process of interaction, exchange and mutual learning preceding any group decision. During this process, participants disclose their respective (relevant) values and preferences, avoiding hidden agendas and strategic game playing. Agreements are based on rational arguments, and principles such as laws of formal logic and analytical reasoning. (Newig et al., 2013)

Learning as capacity building, as understood here, assesses whether and how participants and the wider public were able to learn and develop capacities during a participatory process. We capture this learning mode through two interrelated variables: societal learning and individual capacity building. Societal learning measures the ‘degree to which participants, stakeholders or broader society learned about the issue such that they gained new or improved understanding or knowledge of the issue, enabling them potentially to contribute to future joint problem solving efforts’ (Newig et al., 2013). Individual capacity building assesses the

degree to which the skills and capabilities of individual participants or stakeholders were enhanced through involvement in or engagement with the DMP [decision-making process]. These skills and capabilities may be specific to the issue at hand, or incidental and applicable to a range of social situations. (Newig et al., 2013)

Finally, we operationalize learning as informed outputs by assessing the extent to which new knowledge and innovation informed the output. Information gain is defined as the

degree to which additional information in the sense of contextualized, local (including traditional and indigenous) knowledge informed the output. This kind of knowledge is characterized as implicit, informal, context-dependent, and resulting from collective experience, and can concern known parameters and/or new perspectives. This includes knowledge that may be ‘expert’ knowledge (e.g. of local people) but not in the sense of knowledge that is published (e.g. in a handbook). (Newig et al., 2013)

Innovation, the second variable, asks:

did the output present an innovative, novel solution in the sense of a solution addressing the issue at hand that had not been discussed before the DMP? This need not be an innovation in the sense of an ‘invention’ in global comparison. (Newig et al., 2013)

Again, both variables were measured on a five-point scale, with 0 indicating the absence, and 4 the high abundance of the variable. As both variables contribute to learning as reflected in the output, we aggregated them into one.⁴

3.2.2. Environmental outcomes

In order to be able to compare the environmental quality of governance outputs across diverse contexts, we follow Underdal (2002) who assesses regime effectiveness against a hypothetical collective optimum; i.e. ‘one that accomplishes […] all that can be accomplished – given the state of knowledge at the time’ (Underdal, 2002, p. 8). On this basis, our output variable captures the

degree to which the environmental output aimed at an improvement (or tolerated a deterioration) of environmental conditions […]. This is to be assessed moving from the ‘business as usual’ scenario (projected trend) towards a hypothetical ‘optimal’ (or ‘worst case’) condition. (Newig et al., 2013)

We distinguish between two different but related aspects of environmental quality of decision outputs, namely the extent to which the output aligns with conservation and natural resource protection goals. We define conservation as aiming ‘to preserve, protect or restore the natural environment and ecosystems […] largely independently of their instrumental value to humankind’; and natural resource protection as aiming ‘to protect, preserve, enhance or restore stocks and flows of natural resources that are of instrumental value to humans, and provide for their sustainable use’ (Newig et al., 2013). As both dimensions are related and statistically correlated (r = 0.89, p < .001), they were aggregated to form a single scale (alpha = .94), which we call Environmental Standard of the Output.
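
The relation between the reported correlation and the reported scale reliability can be checked with a short sketch. It assumes the standardized form of Cronbach's alpha, which depends only on the number of items and their mean inter-item correlation; the text does not state which variant was computed, so this is a plausibility check and not a reconstruction of the authors' calculation.

```python
def standardized_alpha(n_items, mean_inter_item_r):
    """Standardized Cronbach's alpha from the mean inter-item correlation."""
    k, r = n_items, mean_inter_item_r
    return k * r / (1 + (k - 1) * r)

# Two items (conservation, natural resource protection) correlated at r = 0.89
alpha = standardized_alpha(2, 0.89)
print(round(alpha, 2))  # 0.94
```

Under this assumption, a two-item scale with r = 0.89 gives 1.78 / 1.89, which rounds to the alpha of .94 reported above.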

3.2.3. Independent variables

As outlined above, we conceptualize participation as a multi-dimensional concept, comprising the dimensions of non-state actor representation, communication intensity and power delegation. For a detailed description of independent variables, including some descriptive statistics, see the online supplementary material.

We operationalize the representation of non-state actors as the average

extent to which the composition of participants in the process mirrors the interest constellation in the public. Full representation is reached when there are a sufficient number of representatives and when those representatives are fully accepted as such by their constituencies. (Newig et al., 2013)

In this, we consider all concerned actors from civil society and private business, as well as individual citizens.

Intensity of communication is operationalized as a composite variable, combining variables that measure the intensity of one-way information flows to and from participants (information dissemination and consultation), as well as two-way dialogue among participants and process organizers. Further, we consider whether communication took place directly, through the variable face-to-face. We constructed a composite factor (alpha = .93) by means of a principal component analysis (PCA) with oblique rotation (promax).

Finally, power delegation to participants was operationalized through the ‘degree to which the process design provided the possibility for participants […] to develop and determine the output’ (Newig et al., 2013).

Beyond these basic dimensions of participation we identified more specific process-related and contextual factors potentially influencing learning. Aiming to go beyond mapping flows of communication, we assess to what extent structured communication methods were used during the process to facilitate knowledge exchange and learning. Our measurement of such methods includes variables to assess methods of information elicitation, aggregation and knowledge integration, alongside a measurement for professional facilitation. Again, these were aggregated to a composite factor (alpha = .89) by means of a PCA.

Two further variables assess the diversity of interests and societal sectors represented in the process. To this end, we computed a Shannon diversity index (a) for the relationship between pro-nature and pro-development interests, and (b) for the relative abundance of actors from different societal sectors (government, private sector, civil society, lay citizens).
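
As an illustration of the diversity measure, the following sketch computes a Shannon index over actor counts per category. It assumes the natural logarithm and no normalization, neither of which is specified in the text; the function name and the example counts are hypothetical.

```python
import math

def shannon_index(counts):
    """Shannon diversity H = -sum(p_i * ln p_i) over non-empty categories."""
    total = sum(counts)
    if total == 0:
        raise ValueError("at least one actor is required")
    proportions = [c / total for c in counts if c > 0]
    return -sum(p * math.log(p) for p in proportions)

# Hypothetical actor counts: government, private sector, civil society, lay citizens
balanced = shannon_index([5, 5, 5, 5])
dominated = shannon_index([17, 1, 1, 1])
print(balanced, dominated)
```

The index is highest when actors are spread evenly across categories and falls toward zero as one category dominates, which is the property that makes it usable as a diversity predictor.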

Learning as a process evolves over time. Therefore, we introduced a variable for process duration into our model, measuring the time between the first interaction and the final decision reached in a participatory process.

Finally, we assessed a set of contextual factors that we hypothesized as contributing to the success of learning processes and products. To this end, we measured the extent of existing value conflicts, as well as the cooperativeness of the actors involved (PCA over all interests, alpha = .89). For the measurement of trust, we computed a composite variable combining the initial levels of trust with changes in trust levels during the decision-making process.

3.3. Methods of analysis

We conducted a series of regression analyses with the three modes of learning and, finally, the environmental standard of the output as dependent variables. The rationale is to provide a basic path analysis that traces the effects of the identified process characteristics on the three modes of learning, and ultimately the effect of learning on the environmental standard of governance outputs (see Leach et al., 2013 for a similar approach). To this end, we first fit a model with deliberation as dependent variable and participation-related factors as independent variables. In a next step, capacity building served as dependent variable of regression models that use the same independent variables as the previous model, plus deliberation as a predictor for capacity building. In step three, we fit regression models that rely on participation variables, deliberation, and capacity building as explanatory factors for informed outputs. The final step includes all participatory and learning variables as predictors for the environmental standard of the output.
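
The sequence of models described above can be written down as a small specification table. This sketch only assembles dependent variables and predictor sets for the four steps; it does not fit any model, and all variable names are hypothetical placeholders for the coded factors.

```python
# Hypothetical variable names standing in for the process and context factors
PROCESS_CONTEXT = [
    "communication_intensity", "structured_methods", "power_delegation",
    "representation", "interest_diversity", "sector_diversity",
    "duration", "trust", "cooperativeness", "value_conflict",
]

def build_model_steps(base):
    """Return (dependent variable, predictors) for each step of the path analysis."""
    return [
        ("deliberation", list(base)),
        ("capacity_building", list(base) + ["deliberation"]),
        ("informed_outputs", list(base) + ["deliberation", "capacity_building"]),
        ("environmental_standard",
         list(base) + ["deliberation", "capacity_building", "informed_outputs"]),
    ]

for dependent, predictors in build_model_steps(PROCESS_CONTEXT):
    print(dependent, "~", " + ".join(predictors))
```

Each step reuses the predictors of the previous one and adds the learning mode that was just modelled, which is what allows the results to be read as a path from process design to environmental standard.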

Generally, our models performed well and met the criteria for linearity, homoscedasticity and normality, without undue influence of any outliers (see online supplementary material). However, as the last two sets of models – with informed outputs and the environmental standard of the output as dependent variables – showed signs of heteroscedasticity, we computed robust standard errors.

Table 1. Three modes of learning.

4. Results and discussion

We find, first, that all three forms of learning occur to a considerable degree in the cases studied. Considering the original, non-aggregated learning variables (all measured on a scale from 0 to 4), Deliberation has an arithmetic mean of 1.74 across all cases, Capacity Building of 1.69, and Informed outputs of 1.09.⁵ This suggests that learning as knowledge exchange (deliberation) is most likely to happen, followed by learning as knowledge-building (capacity building). However, it is less likely that outputs are actually informed by information acquired during the process, or even that the output includes innovation generated in the process. Below, we describe and discuss the results of the multiple regression models linking process and context factors, modes of learning, and environmental outcomes (see Table 2).

Table 2. Results of the regression analysis.

4.1. Explaining learning through process- and context-related factors

Table 2 shows eight regression models, clustered into four sets, each of which relates to a different dependent variable: Clusters 1–3 show models which explain the three modes of learning; cluster 4 shows models which explain the environmental standard of the output. Our results show that our conceptual model, consisting of carefully selected variables representing process and context features of participatory decision-making, adequately describes our data and captures a large proportion of its variance. As the overall significant and high R² values indicate, all three modes of learning can be explained to some extent by the process- and context-related factors we have investigated. The models further highlight that the identified modes of learning can indeed be interpreted as a sequence, where capacity building benefits from deliberation and where the uptake of knowledge in the output is fostered by the previous modes of deliberation and capacity building. Comparing model (2a) with (2b), and (3a) with (3b), we can observe a significant (p < .001) improvement in model fit⁶ (increased R² and decreased AIC) between the respective models (a) and (b). This indicates – together with the significant individual effects of deliberation and capacity building – that the previous modes of learning contribute significantly to the explanatory power of our models.
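
The two fit criteria used in this comparison can be sketched as follows. The example assumes a least-squares model with Gaussian errors, for which AIC can be computed from the residual sum of squares up to a constant that is identical for models fitted to the same cases. The sums of squares and parameter counts are invented for illustration and are not taken from Table 2.

```python
import math

def r_squared(rss, tss):
    """Share of variance explained: 1 - residual SS / total SS."""
    return 1 - rss / tss

def aic_ols(rss, n_obs, n_params):
    """AIC of a least-squares fit with Gaussian errors, up to an additive constant."""
    return n_obs * math.log(rss / n_obs) + 2 * n_params

# Hypothetical sums of squares for a model (a) and an extended model (b)
n, tss = 307, 400.0
model_a = {"rss": 200.0, "k": 11}
model_b = {"rss": 180.0, "k": 12}
for name, m in (("a", model_a), ("b", model_b)):
    print(name, round(r_squared(m["rss"], tss), 2), round(aic_ols(m["rss"], n, m["k"]), 1))
```

Adding a predictor can never lower R², so the drop in AIC is the more informative signal: it shows that the gain in fit outweighs the penalty for the extra parameter.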

However, we also observe that goodness-of-fit decreases with the causal distance of each learning mode from the actual decision-making process: model (1a), assessing learning as deliberation, achieves an R² of .67, model (2a) with learning as capacity building as dependent variable has an R² of .54, while model (3a) for learning informing decision outputs has an R² of .46. This decrease in model fit can be interpreted as a sign of increasing causal distance (Gerring, Citation2007) between independent variables and the phenomenon these aim to explain. Deliberation, itself a process feature, is more directly determined by choices of process design than is capacity building among participants and stakeholders. The fate of learning products and their incorporation into the output is even further out of the ambit of process design decisions and subject to many confounding influences, as our analysis suggests.

Beyond these broader trends, the models reveal particularly distinct patterns of factors determining the respective kinds of learning, providing specific answers to the hypotheses formulated above.

Communication variables show significant effects for all three kinds of learning, emphasizing the essential importance of communicative exchange for learning in all of its facets. In particular, we find the use of structured communication methods for information elicitation and aggregation, and for facilitation, to be a stable and significant predictor for all kinds of learning, confirming hypothesis 2. However, the mere intensity of communication ceteris paribus displays contradictory influence depending on the mode of learning, thus showing a mixed result for hypothesis 1. While communication intensity has a positive effect on deliberation, this effect vanishes when it comes to capacity building, and even becomes significantly negative for learning products reflected in the output. This highlights that learning does not automatically flow from communicative interactions of any kind, but depends on certain communicative qualities. While the intensity of communication may still be an essential ingredient in deliberation (model 1a), particularly models (2b) and (3b), controlling for deliberation and the use of structured communication methods, suggest that communication without these qualities may introduce dynamics that restrict capacity building and knowledge uptake in the output. Our large-N analysis of course cannot reveal these dynamics in detail, but the literature suggests for example politicized communication (Wood, Citation2015), groupthink (Janis, Citation1982), or communication as the mere voicing of individual opinions and concerns without wider discussion (Kochskämper, Challies, Jager, & Newig, Citation2018), as relevant factors in this respect.

Hypothesis 3, postulating a positive effect of power delegation to participants, is supported for learning as deliberation and as reflected in the output, with no detected effect for capacity building. We predicted the positive effect of power delegation on the uptake of learning products into the output earlier in Section 2, as power over the specific content of a political output may be a prerequisite for participants to make use of capacities developed and knowledge gained. For deliberation however, being taken seriously as a participant seems to foster willingness to engage wholeheartedly in a participatory process, to open up to the viewpoints and knowledge of fellow participants, and to strive for solutions for the common good (see also Daniels & Walker, Citation1996).

For the hypotheses related to the composition of the participant group (hypotheses 4–5) we could not find conclusive evidence in any of our models.

The duration of the decision-making process is significantly correlated with learning as capacity building. This provides support for hypothesis 6, emphasizing that capacity building is a process that takes time, and therefore has resource and commitment requirements that organizers must address (Beierle & Cayford, Citation2002; Leach et al., Citation2013). However, process duration did not explain any of the other learning modes, suggesting that deliberation may happen independently of a longer process, and that learning may likewise inform outputs independently of process duration.

Turning to the contextual factors, trustful relationships among stakeholders and government actors apparently play an important role in explaining deliberation and capacity building. In models (1a) and (2b) trust emerged as a robust significant factor, confirming hypothesis 7 for these modes of learning. Trustful relationships may make it more likely that participants open up to the perspectives and knowledge of others, as participants trust each other’s intentions and consider each other as credible and legitimate sources of information, fostering deliberation and capacity building overall. Note that we did not assume trust to impact informed decision outputs, and indeed we do not find any significant coefficients that would indicate this.

Other contextual conditions – cooperative attitudes and conflict levels (hypotheses 8 and 9) – show significant effects for learning as capacity building (model (2)). The levels of both cooperativeness and value conflict are positively correlated with capacity building. While a positive relation was expected for cooperativeness, we did not necessarily expect a positive relation for value conflicts (nor did we expect a negative relation). As outlined above, conflict levels may have different effects on capacity building, on the one hand hindering productive interaction, and on the other acting as a catalyst for questioning one’s own positions and for learning about complex issues and situations. Our results point towards the latter effect.

Taken together, these findings reveal insightful patterns of co-variance and correlation for each mode of learning: Each mode of learning supports the subsequent one, with deliberation fostering capacity building, and both fostering informed decision outputs. While deliberation and informed decision outputs are largely correlated with process-related factors, capacity building is mainly related to contextual conditions. Our findings reinforce the sequential relation between deliberation, capacity building, and learning as informing decision outputs. This could also be relevant from the perspective of process organizers and participants. Advancing deliberation may be most easily achieved, and might be fostered, through process design that promotes communication and power delegation to participants. Deliberation may then serve as a catalyst for capacity building. However, in this case more demanding contextual (and mixed) rather than procedural factors also come into play. Levels of conflict, trust and cooperativeness have here proven significant predictors. However, these are much harder for process organizers to plan for and influence, and suggest that special attention must be paid to the institutional and societal context in which a decision-making process plays out. Finally, deliberation and capacity building may foster the incorporation of knowledge into decision outputs. Here again, our results suggest that special attention to procedural features is warranted, especially concerning communication structures.

4.2. The impact of learning on the environmental standard of the output

Inspecting hypotheses 10–12 reveals a mixed picture. While in model (4c) different kinds of learning display significant positive effects on the environmental standards of the output, these effects vanish when controlling for the influence of the previously identified process- and context-related variables (model 4b). Best model fit is actually reached in the model where learning variables were left out altogether (model 4a with lowest AIC). Hence, we cannot find robust support for hypotheses 10–12. Instead, power delegated to participants, a trustful atmosphere, and cooperativeness show significant effects (see note 7).
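One way in which an effect can appear in a model containing only learning variables and disappear once further variables are controlled for is a shared antecedent. The following minimal sketch shows this pattern on synthetic data; it assumes, purely for illustration, that a single context factor (here called `trust`) drives both learning and the outcome, and that learning has no direct effect. The variable names and effect sizes are hypothetical, and the sketch makes no claim about which mechanism actually operates in the cases analyzed here.

```python
import numpy as np

def ols_slopes(X, y):
    """Return OLS slope coefficients (intercept fitted but not returned)."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[1:]

rng = np.random.default_rng(1)
n = 5000  # large synthetic sample so that the pattern is stable

# Assumed data-generating process: trust drives both variables,
# and learning has no direct effect on the outcome by construction.
trust = rng.normal(size=n)
learning = 0.8 * trust + rng.normal(size=n)
outcome = 0.7 * trust + rng.normal(size=n)

# Learning as the only predictor (analogous in spirit to a learning-only model)
b_learning_only = ols_slopes(learning[:, None], outcome)[0]

# Learning plus the context factor (analogous in spirit to a full model)
b_learning_ctrl, b_trust = ols_slopes(np.column_stack([learning, trust]), outcome)

print(f"learning only:        b_learning = {b_learning_only:.2f}")
print(f"learning + trust:     b_learning = {b_learning_ctrl:.2f}, b_trust = {b_trust:.2f}")
```

In this constructed example the coefficient on learning is clearly positive when it is the only predictor and close to zero once trust is included, while trust retains its effect. Whether the same holds in observational case data cannot be read off such a sketch.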

These findings suggest that participation of stakeholders in environmental decision-making sets in motion processes and mechanisms through which participation influences the environmental standard of the output, beyond the identified modes of learning. Exploring what those mechanisms may be goes beyond the scope of this analysis. However, other studies emphasize the role of environmental agency (Brody, Citation2003), also in the sense of the environmental orientation of the entity organizing participatory processes (Kochskämper et al., Citation2018), government commitment (see Mukhtarov et al., 2018, in this special issue), and the wider institutional context as important factors (Newig & Fritsch, Citation2009b) – aspects that were not a focus of this study, but would warrant further research.

5. Conclusions

In this study we have tested whether learning in environmental governance can be explained by collaborative and participatory process features and contextual conditions, and whether learning affects environmental governance outcomes. Drawing on a case survey of 307 cases of public environmental decision-making allows for some generalization across very different kinds of environmental governance processes, encompassing 22 different countries, a wide range of issues, and several decades of environmental governance. To our knowledge, this is the largest meta-study on environmental governance processes available. The general patterns we find may of course change when considering subsets of cases, such as different jurisdictions or issue areas. Despite their broad range, there is no guarantee that our cases are representative of all the environmental decision-making processes having taken place, most of which have not been described in scholarly publications. With these caveats in mind, we point to three main findings.

First, our analysis demonstrates that it is useful to distinguish the three modes of learning we introduced: learning as deliberation, learning as capacity building, and learning as informed decision outputs. We find that, as expected, the modes of learning build on one another: deliberation fosters capacity building, and both these modes foster informed decision outputs. Moreover, the learning variables show distinct patterns as to their antecedent factors.

Second, we were able to identify factors conducive to learning. Most prominent are structured methods of communication, facilitation and knowledge-exchange, which foster all modes of learning. To a lesser extent, power delegation and process duration also affect learning. Context factors such as trust and stakeholder cooperativeness, and the level of value conflict, were found to foster learning, too. Contrary to expectation, neither non-state actor participation, nor stakeholder diversity mattered significantly for any of the studied learning variables. From a practitioner point of view, these findings highlight the importance of using methods for structuring communication and knowledge exchange and the role of professional facilitation – as opposed to simply giving participants opportunities for open exchange.

Finally, and against all expectations, we found no evidence for the assumption that learning benefits environmental outcomes. So, is learning therefore unimportant? Arguably, the effects of learning are more complex and indirect. Learning can be ascribed a value in itself; deliberation and capacity building can be seen as desirable from a social point of view. Learning may also lead to a conscious decision not to change policy. Further, learning may have indirect effects on the environment, which we were not able to assess in our study. For example, these may occur on longer timescales or at other stages of the policy process, such as during the implementation phase.

Having said all this, we must acknowledge that learning by itself may play less of an immediate role in generating strong environmental outcomes than has been widely assumed in the literature. More attention may be needed to establish in which ways and under which specific conditions learning in its different modes may in fact be able to benefit environmental outcomes. This, we suggest, could be done by conducting careful within-case analysis through causal process tracing, potentially relying on the database used here or similar datasets of cases.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes on contributors

Jens Newig is full professor and head of the research group on Governance and Sustainability at Leuphana University Lüneburg, Germany.

Nicolas W. Jager is a postdoctoral researcher in the research group on Governance and Sustainability at Leuphana University Lüneburg, Germany.

Elisa Kochskämper is completing her PhD in the research group on Governance and Sustainability at Leuphana University Lüneburg, Germany.

Edward Challies is a senior lecturer with the Waterways Centre for Freshwater Management at the University of Canterbury, Christchurch, New Zealand, and an adjunct senior research associate with the research group on Governance and Sustainability at Leuphana University Lüneburg, Germany.

Notes

1 This was generated as part of the project ‘EDGE – Evaluating the Delivery of Participatory Environmental Governance using an Evidence-based Research Design’.

2 Outputs of participatory processes, for example, are often not legally binding, and considerable time may elapse until they are adopted by the political system, challenged in court, and finally implemented.

3 Sources searched include: BASE; Google Books; Google Scholar; GVK+; Science Direct; SciVerse Hub; Scopus; SpringerLink; SSRN; Web of Science; Wiley Interscience.

4 Although the correlation between the two variables is relatively low (r = .29, p < .001), we decided to aggregate these variables nonetheless given their conceptual relation as part of the same mode of learning.

5 Means were calculated over the original variables.

6 See online supplementary material for more information about the model comparison.

7 Here, we cannot exclude the possibility that these factors may stem from a ‘halo effect’ whereby stakeholders in the original case studies attribute a higher degree of environmental effectiveness simply due to their positive feeling and the atmosphere of trust and cooperation in the process (Leach & Sabatier, Citation2005). As the data for environmental output stringency stems from very different sources, and most case study authors do not rely merely on stakeholder judgements but on their own assessment of the text of agreements, we do not deem this effect to be substantially distorting.

References

- Aldred, J., & Jacobs, M. (2000). Citizens and wetlands: Evaluating the Ely citizens’ jury. Ecological Economics, 34, 217–232.

- Armitage, D. (2008). Governance and the commons in a multi-level world. International Journal of the Commons, 2(1), 7–32.

- Armitage, D., Dzyundzyak, A., Baird, J., Bodin, Ö, Plummer, R., & Schultz, L. (2018). An approach to assess learning conditions, effects and outcomes in environmental governance. Environmental Policy and Governance, 28(1), 3–14.

- Baird, J., Plummer, R., Haug, C., & Huitema, D. (2014). Learning effects of interactive decision-making processes for climate change adaptation. Global Environmental Change, 27(1), 51–63.

- Beierle, T. C., & Cayford, J. (2002). Democracy in practice. Public participation in environmental decisions. Washington, DC: Resources for the Future.

- Brody, S. D. (2003). Measuring the effects of stakeholder participation on the quality of local plans based on the principles of collaborative ecosystem management. Journal of Planning Education and Research, 22(4), 407–419.

- Challies, E., Newig, J., Kochskämper, E., & Jager, N. W. (2017). Governance change and governance learning in Europe: Stakeholder participation in environmental policy implementation. Policy and Society, 36(2), 288–303.

- Daniels, S. E., & Walker, G. B. (1996). Collaborative learning: Improving public deliberation in ecosystem-based management. Environmental Impact Assessment Review, 16, 71–102.

- Dunlop, C. A., & Radaelli, C. M. (2018). Does policy learning meet the standards of an analytical framework of the policy process? Policy Studies Journal, 46(S1), S48–S68.

- Emerson, K., & Nabatchi, T. (2015). Collaborative governance regimes. Washington, DC: Georgetown University Press.

- Edelenbos, J., van Buuren, A., & van Schie, N. (2011). Co-producing knowledge: Joint knowledge production between experts, bureaucrats and stakeholders in Dutch water management projects. Environmental Science and Policy, 14(6), 675–684.

- Fazey, I., Evely, A. C., Reed, M. S., Stringer, L. C., Kruijsen, J., White, P. C. L., … Trevitt, C. (2013). Knowledge exchange: A review and research agenda for environmental management. Environmental Conservation, 40(1), 19–36.

- Fischer, F. (2000). Citizens, experts, and the environment. The politics of local knowledge. Durham: Duke University Press.

- Fung, A. (2006). Varieties of participation in complex governance. Public Administration Review, 66(Special Issue), 66–75.

- Gerlak, A. K., Heikkila, T., Smolinski, S. L., Huitema, D., & Armitage, D. (2018). Learning our way out of environmental policy problems: A review of the scholarship. Policy Sciences, 51(3), 335–371.

- Gerring, J. (2007). Case study research. Principles and practices. Cambridge: Cambridge University Press.

- Heikkila, T., & Gerlak, A. K. (2013). Building a conceptual approach to collective learning: Lessons for public policy scholars. Policy Studies Journal, 41(3), 484–512.

- James, L. R., Demaree, R. G., & Wolf, G. (1984). Estimating within-group interrater reliability with and without response bias. Journal of Applied Psychology, 69(1), 85–98.

- Janis, I. L. (1982). Groupthink (2nd ed.). Boston, MA: Houghton Mifflin.

- Juerges, N., Weber, A., Leahy, J., & Newig, J. (2018). The role of trust in natural resource management conflicts: A forestry case study from Germany. Forest Science, 64(3), 330–339.

- Kochskämper, E., Challies, E., Jager, N. W., & Newig, J. (2018). Participation for effective environmental governance: Evidence from European Water Framework Directive implementation. Oxon: Routledge.

- Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Englewood Cliffs, NJ: Prentice-Hall.

- Kumar, N., Stern, L. W., & Anderson, J. C. (1993). Conducting interorganizational research using key informants. Academy of Management Journal, 36(6), 1633–1651.

- Larsson, R. (1993). Case survey methodology: Quantitative analysis of patterns across case studies. Academy of Management Journal, 36(6), 1515–1546.

- Leach, W. D., & Sabatier, P. A. (2005). Are trust and social capital the keys to success? Watershed partnerships in California and Washington. In P. A. Sabatier, W. Focht, M. Lubell, Z. Trachtenberg, A. Vedlitz, & M. Matlock (Eds.), Swimming upstream. Collaborative approaches to watershed management (pp. 233–258). Cambridge: MIT Press.

- Leach, W. D., Weible, C. M., Vince, S. R., Siddiki, S. N., & Calanni, J. C. (2013). Fostering learning through collaboration: Knowledge acquisition and belief change in marine aquaculture partnerships. Journal of Public Administration Research and Theory, 23(2), 1–32.

- Miller, D. (1992). Deliberative democracy and social choice. Political Studies, XL(Special Issue), 54–67.

- Newig, J., Adzersen, A., Challies, E., Fritsch, O., & Jager, N. (2013). Comparative analysis of public environmental decision-making processes: A variable-based analytical scheme. INFU Discussion Paper No. 37/13. Lüneburg. Retrieved from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2245518

- Newig, J., Challies, E., Jager, N. W., Kochskämper, E., & Adzersen, A. (2018). The environmental performance of participatory and collaborative governance: A framework of causal mechanisms. Policy Studies Journal, 46(2), 269–297.

- Newig, J., & Fritsch, O. (2009a). The case survey method and applications in political science. APSA 2009 Paper, Toronto. Retrieved from SSRN: http://ssrn.com/abstract=1451643

- Newig, J., & Fritsch, O. (2009b). Environmental governance: Participatory, multi-level – and effective? Environmental Policy and Governance, 19(3), 197–214.

- Newig, J., Günther, D., & Pahl-Wostl, C. (2010). Synapses in the network. Learning in governance networks in the context of environmental management. Ecology and Society, 15(4).

- Newig, J., & Kvarda, E. (2012). Participation in environmental governance: Legitimate and effective? In K. Hogl, E. Kvarda, R. Nordbeck, & M. Pregernig (Eds.), Environmental governance. The challenge of legitimacy and effectiveness (pp. 29–45). Cheltenham: Edward Elgar.

- Putka, D. J., Le, H., McCloy, R. A., & Diaz, T. (2008). Ill-structured measurement designs in organizational research: Implications for estimating interrater reliability. Journal of Applied Psychology, 93(5), 959–981.

- Reich, R. B. (1988). Policy making in a democracy. In R. B. Reich (Ed.), The power of public ideas (pp. 123–156). Cambridge, MA & London, UK: Harvard University Press.

- Renn, O. (2004). The challenge of integrating deliberation and expertise: Participation and discourse in risk management. In T. L. McDaniels & M. J. Small (Eds.), Risk analysis and society: An interdisciplinary characterization of the field (pp. 289–366). Cambridge: Cambridge University Press.

- Renn, O. (2006). Participatory processes for designing environmental policies. Land Use Policy, 23, 34–43.

- Rodela, R. (2011). Social learning and natural resource management: The emergence of three research perspectives. Ecology and Society, 16(4).

- Rowe, G., & Frewer, L. J. (2005). A typology of public engagement mechanisms. Science, Technology, & Human Values, 30(2), 251–290.

- Smith, G. (2003). Deliberative democracy and the environment. London: Routledge.

- Underdal, A. (2002). One question, two answers. In E. L. Miles, A. Underdal, S. Andresen, J. Wettestad, J. B. Skjærseth, & E. M. Carlin (Eds.), Environmental regime effectiveness: Confronting theory with evidence (pp. 3–46). Cambridge: MIT Press.

- van Bruggen, G. H., Lilien, G. L., & Kacker, M. (2002). Informants in organizational marketing research: Why use multiple informants and how to aggregate responses. Journal of Marketing Research, 39(4), 469–478.

- Webler, T., & Tuler, S. (2002). Unlocking the puzzle of public participation. Bulletin of Science, Technology & Society, 22(3), 179–189.

- Weible, C. M., & Nohrstedt, D. (2013). The advocacy coalition framework. In E. Araral, S. Fritzen, M. Howlett, M. Ramesh, & X. Wu (Eds.), Routledge handbook of public policy. London: Routledge.

- Wood, M. (2015). Puzzling and powering in policy paradigm shifts: Politicization, depoliticization and social learning. Critical Policy Studies, 9(1), 2–21.

- Wynne, B. (1992). Misunderstood misunderstanding: Social identities and public uptake of science. Public Understanding of Science, 1(3), 281–304.