Abstract

There have long been calls for improving the link between strategic planning and performance management. Despite such calls, there are few empirical studies of how performance management system design relates to performance information use in public sector organizations, although such knowledge can be important for implementing and evaluating strategies to improve these organizations’ performance. Utilizing data from 135 public sector organizations in Norway, consisting of municipalities, counties, central government agencies, and central government-owned higher education institutions, this paper analyzes how strategy formulation and performance measurement system design relate to purposeful performance information use. Multivariate analysis with partial least squares path modeling (PLS-PM) found positive relationships between both formal strategic planning and logical incrementalism and performance measurement system design, as well as a positive relationship between performance measurement system design and performance information use. There were no significant direct relationships between formal strategic planning or logical incrementalism and performance information use, or between organizational size or government sector and performance information use. Therefore, performance measurement system design seems to be an important link between strategy formulation and performance information use.

Introduction

Strategic management, performance management, and performance information use are important for public sector organizations to operate effectively (George et al., 2019; Gerrish, 2016; Nitzl et al., 2018; Pollanen et al., 2017; Vinzant & Vinzant, 1996). Strategic management is defined as “an approach to strategizing by public organizations or other entities which integrates strategy formulation and implementation and typically includes strategic planning to formulate strategies, ways of implementing strategies, and continuous strategic learning” (Bryson & George, 2020, p. 8). Performance information can be used for many purposes (Behn, 2003), including aiding strategy formulation, monitoring strategy implementation, and evaluating performance and strategy implementation. For example, Sohn et al. (2022) found that performance information was related to budget changes in government programs in South Korea over a ten-year period. In addition to being used purposefully, performance information may also be used for legitimation (Korac et al., 2020). Moreover, performance information may be under-utilized or not used at all, even when it is available (Pollitt, 2006; van Dooren, 2004). Performance information can also be overused and have many unintended, “perverse” effects (de Bruijn, 2007; Radin, 2006). Rubin et al. (2023), for example, in a study of the micro-level consequences of performance regimes in U.S. schools, found that using different types of performance information affected job satisfaction and turnover intention differently.
Common to both skeptics and advocates of using performance information in politics and public administration is the concern for designing management systems that support democratic and strategic planning processes and for using performance information to improve decision making and public value. This paper studies how strategy formulation and the design of performance measurement systems relate to purposeful performance information use in public sector organizations. By “purposeful” we mean the use of performance information to support decision-making and manage organizational performance (Korac et al., 2020).

In their systematic review, van Helden and Reichard (2016) found that public sector organizations measure various dimensions of performance and use performance information intensively, but that the links between strategies and performance measurement systems were weak. Moreover, the applicability of strategy and performance management is argued to vary with, for example, environmental factors, the degree of technological uncertainty, and the degree of ambiguity of outcomes. Hence, one could expect that the context for performance management, for example the organizational environment and the organizations’ strategic planning, as well as the design of the performance measurement systems, would vary not only between organizations in the private and public sectors, as van Helden and Reichard (2016) discussed, but also between different types of organizations within the public sector. Therefore, one could also expect that public sector organizations such as municipalities, counties, agencies, and government-owned higher education institutions (HEIs) formulate strategies and design their performance measurement systems differently in order to use performance information purposefully. If strategy formulation varies between public sector organizations, and these organizations design their performance measurement systems differently, then it is worth studying the link between strategic planning and the performance measurement system and how this link affects performance information use.

Despite calls for improving the link between strategic planning and performance management (Poister, 2010) and between performance management and performance information use (Pollitt, 2006), and despite an extensive literature on strategic management (see, for example, Bryson & George, 2020; Ferlie & Ongaro, 2022; Joyce & Drumaux, 2014) as well as on performance management (see, for example, de Bruijn, 2007; Moynihan, 2008; Talbot, 2010; van Dooren et al., 2015), linking strategic planning and performance management remains challenging in practice, and more evidence is still needed. Kroll (2015a) systematically reviewed studies on drivers (determinants) of performance information use and identified several drivers, but there still seem to be few empirical studies of the links between environmental factors, strategic planning, and the design of performance measurement systems, and of their impacts on performance information use in different types of public sector organizations. This paper contributes to filling part of this gap in the literature.

Increased knowledge of the link between strategic planning and performance management in public sector organizations is important because much of the public sector strategy and performance management literature is general. There is, therefore, a need for more empirical studies to analyze the applicability of common theories and models and to inform policymakers and practitioners in designing and using strategic planning and performance management systems in different contexts. There is much research on performance information use (Kroll, 2015a), and there are many studies of the adoption and use of strategic planning and performance management in different public sector contexts (for cases, see, for example, Andrews et al., 2012; Frederickson & Frederickson, 2006; Joyce & Drumaux, 2014; Kuhlmann, 2010; Moore, 1995; Moynihan, 2008). However, because strategic management and performance management are both large and partly distinct academic fields, theory and teaching may still be based on fashionable perceptions that are wrong or overstated and therefore of little use for practice (Hatry, 2002). There is, therefore, still a need to connect the insights from these academic fields with more empirical research.

So far, evidence-based knowledge on the link between strategic planning, performance management, and performance information use across different types of public sector organizations has thus been sparse. Our research question is, therefore: how do strategic plan formulation and performance measurement system design relate to performance information use in public sector organizations?

This paper uses data on strategic planning and management in 135 public sector organizations. The data stem from a 2020 survey of all municipalities, counties, central government administrative bodies such as agencies, and government-owned higher education institutions in Norway. The data therefore represent different types of public sector organizations.

We use these data to analyze whether there is any basis for assuming differences in performance management design and use of performance information between local and central government organizations and between large and small organizations. We find that explanations of performance information use other than type of public sector organization and organizational size are needed. Multivariate analysis of path models with composite measures (PLS-PM) showed positive relationships between formal strategic planning, logical incrementalism, and the design of the performance measurement system. It was the design of the performance measurement system, however, that had the strongest effect on performance information use in the public sector organizations. The results of our analysis are relevant for practice and contribute to theory and policy-making in public administration in general, and to public sector strategic planning (George et al., 2019) and performance management (Gerrish, 2016) in particular.
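The kind of path model referred to here can be sketched in code. The block below is a minimal, illustrative implementation of PLS path modeling (Wold's iterative algorithm with Mode A outer weights and a centroid inner weighting scheme, followed by ordinary least squares for the path coefficients), applied to simulated data. It is not the authors' estimation procedure: the text does not report the software or algorithm settings used, and the function names (`pls_pm`, `standardize`, `indicators`), the latent-variable labels (`strategy`, `design`, `use`), and the simulated data are all hypothetical. The indirect effect of strategy formulation on performance information use via performance measurement system design is computed as the product of the two corresponding path coefficients.

```python
import numpy as np


def standardize(a):
    """Center columns and scale them to unit (population) variance."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0)


def pls_pm(X, blocks, paths, max_iter=300, tol=1e-8):
    """Minimal PLS-PM sketch: Mode A outer weights, centroid inner scheme,
    OLS path coefficients. Returns {(source, target): coefficient}."""
    X = standardize(X)
    n = X.shape[0]
    names = list(blocks)
    idx = {name: k for k, name in enumerate(names)}
    # Symmetric adjacency matrix of the inner (structural) model
    adj = np.zeros((len(names), len(names)))
    for s, t in paths:
        adj[idx[s], idx[t]] = adj[idx[t], idx[s]] = 1.0

    def composites(weights):
        # Latent-variable scores: standardized weighted sums of each block
        return np.column_stack(
            [standardize(X[:, blocks[k]] @ weights[k]) for k in names]
        )

    weights = {k: np.ones(len(cols)) for k, cols in blocks.items()}
    for _ in range(max_iter):
        scores = composites(weights)
        # Centroid scheme: neighbours enter with the sign of their correlation
        inner = np.sign(np.corrcoef(scores, rowvar=False)) * adj
        proxies = scores @ inner
        new_weights = {}
        for k in names:
            block = X[:, blocks[k]]
            w = block.T @ proxies[:, idx[k]] / n  # Mode A: covariances
            new_weights[k] = w / np.std(block @ w)  # unit-variance composite
        delta = max(np.abs(new_weights[k] - weights[k]).max() for k in names)
        weights = new_weights
        if delta < tol:
            break

    scores = composites(weights)
    coefs = {}
    for target in names:
        preds = [s for s, t in paths if t == target]
        if preds:  # OLS of each endogenous composite on its predecessors
            A = scores[:, [idx[s] for s in preds]]
            beta, *_ = np.linalg.lstsq(A, scores[:, idx[target]], rcond=None)
            coefs.update({(s, target): float(b) for s, b in zip(preds, beta)})
    return coefs


# Hypothetical simulated data: strategy -> design -> use, no direct path
rng = np.random.default_rng(0)
n = 500
strategy = rng.normal(size=n)
design = 0.6 * strategy + 0.8 * rng.normal(size=n)
use = 0.6 * design + 0.8 * rng.normal(size=n)


def indicators(latent, k=3):
    """k noisy survey-style indicators per latent variable."""
    return np.column_stack([latent + 0.5 * rng.normal(size=n) for _ in range(k)])


X = np.hstack([indicators(strategy), indicators(design), indicators(use)])
blocks = {"strategy": [0, 1, 2], "design": [3, 4, 5], "use": [6, 7, 8]}
paths = [("strategy", "design"), ("design", "use"), ("strategy", "use")]
coefs = pls_pm(X, blocks, paths)
indirect = coefs[("strategy", "design")] * coefs[("design", "use")]
print(coefs, indirect)
```

Because the simulated structure lets strategy affect use only through design, the two paths through `design` should come out clearly positive and the direct `strategy` to `use` path close to zero, which is the pattern of an indirect rather than a direct relationship. Dedicated PLS-PM software additionally reports bootstrap standard errors for such coefficients, which this sketch omits.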

The remainder of the paper is organized as follows. Section “Literature review and hypotheses” reviews the literature and presents two theoretical perspectives, together with mechanisms and hypotheses that may explain performance information use in public sector organizations. Section “Research methods and data” presents the research design and data. Section “Analysis” presents the empirical results. Section “Discussion” discusses the results. Section “Conclusion” concludes and gives suggestions for further research.

Literature review and hypotheses

Performance information use

The use of performance information in the planning process varies between organizations and types of organizations. In his systematic review of determinants of performance information use, Kroll (2015a) examined 25 studies, restricted to purposeful, managerial use. A majority of these came from the United States, and only two studies had mixed data from different tiers of government and different types of organizations. He identified measurement system maturity, stakeholder involvement, leadership support, support capacity, innovative culture, and goal clarity as important factors, whereas organizational size was among the factors that were often not found to be significant. Kroll also recommended that future research include contingency factors and indirect effects.

There are several empirical studies of performance information use in municipalities and agencies that were not included in Kroll’s (2015a) review or that have been published since. These studies support the view that the following factors are important for performance information use: national contexts and policy sectors (Askim, 2007); strategic planning (Johnsen, 2016; Poister & Streib, 2005; Vandersmissen et al., 2024); types of information users, including managers (Grøn & Kristiansen, 2022; Johansen et al., 2018; Micheli & Pavlov, 2019; Taylor, 2011) and politicians (Askim, 2009; ter Bogt, 2004); performance measurement system design and maturity (Askim & Johnsen, 2023; Cavalluzzo & Ittner, 2004; Gerrish, 2016; Han & Moynihan, 2022); types of performance information (ter Bogt, 2004; Johansen et al., 2018; Kroll, 2013); training in performance management implementation (Kroll & Moynihan, 2015); learning from performance management (Askim et al., 2007); and institutionalization of performance measurement (Dimitrijevska-Markoski & French, 2019).

Abdel-Maksoud et al.’s (2015) study of public sector organizations in Canada is a notable addition to the literature, as it uses mixed data from organizations across different tiers of government. They studied different types of strategic decision-making and found that strategic performance measures of efficiency and effectiveness were related to performance, and that efficiency measures were related to both strategy implementation and strategy assessment decisions. Kroll (2015b) found that managers in German cities used performance information in a goal-directed way for action, corroborating the importance of goal clarity and possibly of formal strategic planning. Kroll (2016) studied the link between performance information use and organizational performance and found that the impact of managerial information use on performance was stronger in organizations that had adopted a strategy for change (prospecting). It seems, therefore, that strategic planning, performance measurement system design, and performance information use are linked to one another as well as to performance.

Theoretical perspectives and mechanisms explaining performance information use in public sector organizations

We use two partially overlapping theoretical perspectives as a starting point for the ensuing analysis, namely organizational structural contingency theory and new institutional organizational theory.

Structural contingency theory explains the organization’s structure based on traits of the environment, the nature of the tasks, and the size of the organization (Donaldson, 2001). The main concern of structural contingency theory is rational adaptation, that is, improving the fit of an organization’s technology and structure to important traits of the environment, such as uncertainty and complexity. Management control theory is largely based on structural contingency theory (Otley, 1999) and holds that there is no one right way to design a performance management system and that such systems should be designed according to external conditions and the organization’s strategies. Nonetheless, management control and public management theory offer models for analyzing typical configurations (Bedford & Malmi, 2015) as well as for analyzing how performance measurement can lead to perverse behavior (de Bruijn, 2007, p. 31) if the systems do not fit the organization’s tasks, strategies, and culture.

New institutional organizational theory concerns the relationship between organizational structure and norms in the organization’s environment (Powell & DiMaggio, 1991). The central tenet of institutional theory is that the behavior of organizations and their members is constrained by institutions and normative regulations, and that organizations adapt to norms in the environment to become more legitimate, beyond purely “rational” adaptations (Meyer & Rowan, 1977). While structural contingency theory explains heterogeneity among organizations, new institutional theory is often used to explain why organizations become similar (isomorphism) (DiMaggio & Powell, 1983). The diffusion of structures is explained by coercive isomorphism (demands from the environment, in particular regulations from the government), mimetic isomorphism (imitation of organizations that appear successful, in particular under uncertainty), or normative isomorphism (in particular, through professional standards and education). The choice of organizational structures and processes also encompasses management systems and hence affects performance information use.

Thus, the prevalence of performance management and the degree of performance information use can be analyzed as a function of organizations’ adaptations to norms in the environment and of organizational design contingent on task uncertainty and on management support capacity, which varies with organizational size. We identify five mechanisms that may explain differences in performance information use. These mechanisms provide a basis for formulating four hypotheses about how performance information use differs between organizations in central and local government, between organizations of different sizes, and between organizations with different approaches to strategy formulation and performance management design.

Based on the three types of isomorphism in new institutional organizational theory, we develop three mechanisms to explain why different types of public sector organizations design performance management and hence use performance information differently.

Central and local government organizations are subject to different regulations

The first mechanism is that central government regulations put demands on how public sector organizations design their performance measurement systems and hence affect performance information use in central and local government organizations (coercive isomorphism).

The purpose of performance measurement and management is to evaluate to what extent the results of organizational activities contribute to public value and comply with strategic plans. Performance management is the measurement and assessment of performance indicators (PIs) for efficiency, effectiveness, and equity, to improve decision making and organizational performance (Moynihan, 2008). van Dooren et al. (2015, p. 32) have defined performance measurement and its relationship to performance information simply as:

Performance measurement is the bundle of deliberative activities for quantifying performance. The result of these activities is performance information.

Performance measurement is the basis for performance management and can be divided into three main forms: monitoring, benchmarking, and management by objectives. These three generic forms correspond to what Hood (2007) termed the intelligence, rankings, and targets applications of performance measurement.

Within the three generic forms of performance management, there are many different performance management models. Monitoring (Wholey & Hatry, 1992), for example in the form of mixed scanning (Etzioni, 1967), uses previous results of organizations or activities as background information (intelligence). Benchmarking uses other entities’ processes or results, ideally best practices, as a basis for ranking organizations or services in order to learn and improve (Ammons, 1999). Management by objectives, in which the formulation of objectives, self-control, and assessment of results are central, may use goals and targets but is more an administrative than a political process (Drucker, 1976, pp. 16–19). In practice, organizations mix elements from different performance management forms and models when they design their systems. Systems that mix two or more elements thus have more extensive designs than pure systems.
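The three generic forms differ mainly in the reference point a result is compared with: the organization's own past results (monitoring), other entities (benchmarking), or a predefined target (management by objectives). The sketch below illustrates this with hypothetical indicator values for a single organization. The function names and the simple count used as an "extensiveness" index are illustrative assumptions, not the measures used in this study.

```python
def monitoring(history, current):
    """Monitoring (intelligence): change from the organization's own last result."""
    return current - history[-1]


def benchmarking(peers, current):
    """Benchmarking (rankings): rank among peers, 1 = best, assuming higher is better."""
    return 1 + sum(1 for p in peers if p > current)


def management_by_objectives(target, current):
    """Management by objectives (targets): is the target met?"""
    return current >= target


def design_extent(*forms_used):
    """Hypothetical extensiveness index: number of generic forms a system mixes."""
    return sum(bool(f) for f in forms_used)


# Hypothetical indicator values for one organization
history, current = [0.80, 0.82], 0.85
peers, target = [0.90, 0.84, 0.78], 0.88

change = monitoring(history, current)  # about +0.03 versus the previous result
rank = benchmarking(peers, current)  # 2: one peer scores higher
target_met = management_by_objectives(target, current)  # False: 0.85 < 0.88
extent = design_extent(True, True, False)  # 2: monitoring + benchmarking
print(change, rank, target_met, extent)
```

The same indicator value thus yields three different kinds of performance information, and a system combining several forms produces more of it, which is one way to read "more extensive designs".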

The regulation of public sector organizations’ performance management systems may differ between organizational fields. Specifically, in many countries central government organizations are directly governed by detailed central government regulations, whereas local government organizations, in particular municipalities and counties, are often fully or partly autonomous. Local and central government organizations may therefore have different performance management system designs and performance information use because they must comply with different regulations.

The comparability of services within central and local government organizations differs

Imitation (mimetic isomorphism) is a second mechanism from new institutional organizational theory that can explain both the diffusion of certain management models and performance information use. When organizations are uncertain about how to perform a task effectively and legitimately, they tend to imitate other, similar organizations that appear to be successful (DiMaggio & Powell, 1983).

The comparability of services within central and local government organizations may, however, differ. This may, in turn, affect professional judgements regarding the appropriateness of specific performance management models for collecting relevant information for comparisons and learning (imitation), and hence affect performance information use.

Municipalities and counties are in a different situation from many central government organizations with regard to opportunities for comparing their services and performance with other services and organizations. Municipalities and counties may find such comparisons easier because there are always many other municipalities and counties that can serve as benchmarks. In Norway, for example, the KOSTRA system has since 2002 provided comparable indicators for municipalities and counties, facilitating monitoring and benchmarking (Askim & Johnsen, 2023). The purpose of the comparisons and the use of the performance information is not necessarily pure copying but learning in a broad sense.

A project to develop a KOSTRA-like system of comparable data for central government services in Norway (STATRES) was terminated in 2007 due to problems in providing comparable data, although some sector-specific systems provide comparable indicators for hospitals and higher education institutions. Central government organizations are often alone in providing a national public good. Unless a central government organization has a decentralized structure with regional branches that provide comparable services, it is often, like many agencies, “one of a kind,” with few or no organizations to compare itself with. Central government organizations can certainly look to similar foreign entities, but it is not a given that corresponding foreign entities operate in the same manner or are subject to the same regulations.

The coercion and imitation mechanisms will contribute to similarities among organizations sharing the same type of norms. However, the norms and comparability may vary between different types of public sector organizations and thus lead to different uses of performance information. Our first hypothesis reflects the expectation that local government organizations, which have many similar organizations to compare with, use performance information more than central government organizations:

Hypothesis 1: Local government organizations use performance information more than central government organizations.

Public sector organizations use different performance management models

The third mechanism from new institutional organizational theory for explaining the diffusion of certain performance management models and their impacts on performance information use is pressure from professional norms (normative isomorphism). Performance management guidelines can in this context be seen as norms. Organizations in central and local government are expected to use the performance management models that are recommended for them. Central and local government organizations may therefore apply different performance management models because different models have diffused in their respective fields, and these models may function as norms of good practice to adopt.

We cannot state a priori whether designing performance measurement systems with traditional performance management models such as management by objectives (Drucker, 1976) or with newer versions such as management by objectives and results (Lægreid et al., 2006) and the balanced scorecard (Johnsen, 2001; Kaplan & Norton, 1996) affects the use of performance information most. Moreover, organizations often blend different typical performance management models in their local systems. It is, nevertheless, interesting to study empirically whether, and if so how, the design of public sector organizations’ performance measurement systems affects how the organizations follow up and use the performance information.

Hypothesis 2: Organizations with an extensive design of their performance measurement system use performance information more than organizations with a simple design of their performance measurement system.

Subsequently, we use structural contingency theory to develop two more mechanisms to explain how strategic planning and organizational design may affect performance management, and hence performance information use.

Larger organizational size provides more support capacity for performance management

Structural contingency theory implies that organizational size can have an impact on how organizations design their structures. Our fourth mechanism states that as organizational size increases, both support capacity (including management support capacity for performance measurement and performance information use) and the need for formal performance management systems to ensure organizational control and organizational learning increase. As an organization grows, it often specializes by hiring management specialists in various fields, and management capacity and competence (support capacity) increase. It is, therefore, reasonable to believe that large central and local government organizations have larger departments for corporate governance, and thus more resources to design and maintain performance measurement systems and to follow up the ensuing performance information, than smaller organizations.

Some earlier studies have been inconclusive on how municipal size affects the use of performance management in municipalities (van Helden & Johnsen, 2002). Other empirical research indicates that organizational size is not related to performance information use but could be related to performance management adoption, as Kroll (2015a) discussed. Korac et al. (2020), however, found a significant positive relationship between organizational size and purposeful use of performance information in Austrian local governments. It is therefore still worth studying whether organizational size, or factors that vary with size such as support capacity, affect performance information use.

Hypothesis 3: Large organizations use performance information more than small organizations.

Measurability and goal clarity facilitate performance information use

From structural contingency theory, we also know that an organization’s tasks may have an impact on how it designs its structures and uses information, including performance measurement and performance information. Tasks may be related to goal clarity, which is an important driver of performance information use. Some theories indicate that performance information is suitable for purposes such as control and accountability when tasks are easy to measure and goals are clear, but that it may also be useful for learning, goal formation, and transparency when there is much uncertainty and ambiguity (Bryson et al., 2024). Thus, task uncertainty may be related to the strategic planning approach.

Strategy is broadly defined as the identification of important issues and the alignment of internal structures, systems, and processes, to fit the organization to an anticipated but uncertain future environment for the organization to survive and perform effectively and legitimately (Ferlie & Ongaro, 2022; Moore, 1995). Bryson and George (2020, pp. 7–8) define strategic planning as:

(…) a reasonably deliberate and deliberative approach to strategizing by public organizations or other entities that focuses on strategy formulation and typically includes (a) analyzing the existing mandates, mission, values, and vision; (b) formulating updated mission, values, and vision statements; (c) analyzing the internal and external environment to identify strategic issues; and (d) formulating concrete and implementable strategies to address the identified issues.

There are several approaches to the process of formulating strategic plans in public sector organizations (Bryson, 2015; Bryson et al., 2009). We focus on formal strategic planning (the Harvard policy model) and logical incrementalism, which are two common models for conceptualizing the planning formulation process. The rational-linear Harvard policy model views strategic planning as a formal, centralized process that aims to establish the best fit between the organization and its environment by means such as SWOT (strengths, weaknesses, opportunities, and threats) analysis. Adapted to public management, this approach pays due regard to stakeholders and top-management commitment (Moore, 1995). Another well-known approach, which developed from observations of strategic planning in practice and from critique of centralized, formal planning, is logical incrementalism (Quinn, 1982). The core of logical incrementalism is to adapt the strategic plan to unfolding changes through incremental adjustments while learning from performance feedback.

In practice, many organizations blend the two process models to produce a strategy (if any). Vandersmissen et al. (2024) measured strategic planning as one variable consisting of indicators for formal strategic planning and logical incrementalism, without separating the two forms of strategy formulation. They found that external relations mediated the relationship between strategic planning and managers’ and citizens’ perceptions of performance.

Many tasks are assumed to become less complex and more tangible, that is, more “measurable” (van Dooren et al., 2015) and with more specific goals, in formal strategic planning processes than in constantly adapting strategy formulation processes, which may increase performance information use.

Different types of tasks are typically associated with local and central government organizations. It is therefore natural, on the one hand, to look at what distinguishes central and local government tasks. In Norway there are three administrative tiers: the central government, responsible for national tasks; counties, responsible for regional tasks; and municipalities, responsible for local tasks. Many municipal and regional tasks are more specific and geographically delimited than central government tasks, and therefore probably more measurable (and comparable). Many national tasks tend to be very complex, and thus less measurable. Among other things, the central government has the main responsibility for policy formulation and legislation in all areas, and central government organizations also perform inspections and supervision, and often engage more in governance than in direct service provision to end users. Hence, one can assume that many tasks and services in local government organizations are more measurable and more amenable to formal strategic planning and performance management than tasks and services in many central government organizations.

On the other hand, another relationship is also conceivable. Many local government organizations, such as municipalities and counties in Norway, are generalist (multi-purpose) organizations and cover many tasks within their geographical area of responsibility, resulting in a broad portfolio of tasks. Many central government organizations have only one or a small number of tasks; a single-purpose agency, for example, has a narrow portfolio of tasks. If the information that a broad portfolio of tasks generates for formal strategic planning becomes too extensive, it is difficult for decision-makers to maintain an overview. This information overload may hamper the use of the performance information, even though many services are relatively easy to measure. It should be noted that performance information is complex and may pose challenges for providers as well as users of information (Walker et al., 2018). This problem may also apply to municipalities, for example, even though many municipal services are easier to measure than many central government agencies’ services. One common means of overcoming information overload from complex performance measurement is to apply the balanced scorecard model, which many municipalities use.

This discussion, however, leads to an ambiguous conclusion as to whether central or local government organizations have the most measurable services and the service portfolio most amenable to performance management. There may nevertheless be reasons to believe that organizations in central and local government differ in how easily their performance can be measured. Assuming that performance information is used more when performance measurements are simpler and provide an overview, this fifth mechanism may help explain both the design of performance measurement systems and performance information use. Moreover, the design of the performance measurement system and performance information use should be seen in relation to the organizations' strategies. We therefore study how strategy formulation by formal strategic planning and by logical incrementalism (Poister et al., 2013; Quinn, 1982) may affect performance measurement system design and performance information use.

We hypothesize that organizations use performance information more when goals are clear and services more measurable than when goals are unclear and services less measurable. Clear goals may result, for example, from formal strategic planning processes that have used management tools and formulated specific goals. Unclear goals may arise, for example, in ongoing strategy formulation by logical incrementalism.

Hypothesis 4: Organizations which formulate strategies by formal strategic planning use performance information more than organizations which formulate strategies by logical incrementalism.

Research methods and data

Research design

The research design is an observational study. Most of the data stem from a survey on strategic planning and management in a population study of all 356 municipalities, 10 counties, 72 central government organizations (mostly agencies), and 21 state-owned higher education institutions (universities and university colleges) in Norway in 2020.

The data were collected in an electronic survey from September 2020 to April 2021, during the height of the covid-19 pandemic. It is often argued that surveys of organizations' management practices should collect responses from multiple respondents in each organization. However, a previous similar survey with a multiple-respondent design did not yield multiple responses from the organizations. Moreover, public sector organizations experience “survey fatigue”, which was probably reinforced during the pandemic. The survey was therefore designed as a single-respondent survey, and the respondents were asked to reply on behalf of their organizations. Up to three reminders were sent to non-respondents.

Of the 498 organizations in the population, 86 municipalities, 7 counties, 40 central government organizations (agencies), and 11 government-owned higher education institutions responded. This gives a total of 144 public sector organizations and a response rate of 28.9 percent. The response rate ranged from 24.2 percent among the municipalities to 70 percent among the counties (see Table 1). One municipality did not identify itself and therefore could not be included in analyses involving size variables. The responding organizations were on average larger than the non-responding organizations. The exception was the seven responding counties, which were on average smaller than the three non-responding counties. A test of differences in organizational size between responding and non-responding organizations (Welch's t-test for independent samples) indicated that the responding municipalities were significantly larger than the non-responding municipalities. This pattern may reflect that large municipalities tend to use strategic planning and performance management more than small municipalities and were also more likely to respond to the survey.

Table 1. Man-Years and Population by Non-Responding and Responding Organizations (N = 498).

One probable cause of the relatively low response rate among the municipalities was that many of them were preoccupied with adapting to and implementing measures against the covid-19 pandemic during the data collection period. The purpose of our analysis was, however, not to generalize from the responding sample to the population of Norwegian public sector organizations but to probe certain mechanisms. The sample was therefore deemed adequate for our analysis.

The survey was sent to the organizations' main email address and addressed to the chief administrative/executive officer and/or the person mainly responsible for corporate strategic planning. The chosen informant was asked to answer all questions on behalf of the organization. The respondents were most often chief administrative/executive officers, assistant chief administrative/executive officers, or members of the top management team. We therefore deemed the data to have face validity concerning strategic planning and management and deliberate performance information use in public sector organizations.

In addition to the survey data, we collected administrative data on organizational size from official records. For the municipalities and counties, we collected data on agreed man-years at year-end 2020 from Statistics Norway's KOSTRA database. We collected similar man-year data for the central government organizations from The Agency for Public Management and Financial Management. In addition to man-years for all the organizations, we collected municipal and county population figures for 2020 from Statistics Norway, because population is a common measure of local government organizations' size.

One may question whether higher education institutions differ so much from other public organizations that they should be excluded from the study. We ran the analyses both with and without these organizations, and this did not affect the main results. We have therefore included higher education institutions in the reported analysis.

We used Jamovi 2.3.28 (The Jamovi project, 2022) for most of the univariate and bivariate analyses and for the principal component analysis. For the multivariate partial least squares path modeling (PLS-PM), we used ADANCO 2.3.2 (Henseler & Dijkstra, 2015). In reporting the PLS-PM results, we followed the guidelines of Benitez et al. (2020). Listwise deletion of cases with missing values left a usable sample of 135 cases.

Measurement

From the survey, we originally selected seventeen items to measure our four main concepts: performance information use (the dependent variable), formal strategic planning, logical incrementalism, and performance measurement system design. All four variables were measured by replicating questions from existing research instruments (Poister et al., 2013; Poister & Streib, 2005). Table 2 documents the survey questions used to measure the four variables. All items were measured on a seven-point Likert scale from 1 = Disagree strongly to 7 = Agree strongly.

Table 2. Survey Questions Used in the Measurement Models.

A principal component analysis showed that all the relevant items initially considered loaded on three fixed components. The lowest Kaiser-Meyer-Olkin (KMO) statistic was 0.85, above the commonly used minimum threshold of 0.6. Bartlett's test of sphericity was significant (p < .001), below the threshold of p < .05 (Hair et al., 2018). The assumptions for conducting principal component analysis were therefore satisfied. Table 3 reports the results of the principal component analysis for the final measurement models.
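Both sampling-adequacy checks can be computed directly from an item correlation matrix. The following is a minimal, standard-library-only sketch, not the procedure Jamovi uses internally. Per-item measures of sampling adequacy (MSA) come from the anti-image partial correlations, and the lowest of them corresponds to the "lowest KMO" value reported. Bartlett's statistic compares the determinant of the correlation matrix with that of an identity matrix. The 4-item matrix is hypothetical and is not the study's data. A p-value for Bartlett's statistic would come from the chi-square distribution, for example `scipy.stats.chi2.sf(stat, df)`.

```python
import math


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def log_det(matrix):
    """log|det(matrix)| by Gaussian elimination; row swaps only flip the sign."""
    n = len(matrix)
    a = [[float(v) for v in row] for row in matrix]
    total = 0.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        if abs(a[col][col]) < 1e-12:
            raise ValueError("matrix is singular")
        total += math.log(abs(a[col][col]))
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return total


def kmo(corr):
    """Per-item MSA values and the overall Kaiser-Meyer-Olkin statistic."""
    p = len(corr)
    inv = invert(corr)
    # Anti-image (partial) correlations: -inv_ij / sqrt(inv_ii * inv_jj).
    partial = [[-inv[i][j] / math.sqrt(inv[i][i] * inv[j][j]) for j in range(p)]
               for i in range(p)]
    r2 = [[corr[i][j] ** 2 if i != j else 0.0 for j in range(p)] for i in range(p)]
    q2 = [[partial[i][j] ** 2 if i != j else 0.0 for j in range(p)] for i in range(p)]
    msa = [sum(r2[j]) / (sum(r2[j]) + sum(q2[j])) for j in range(p)]
    total_r2 = sum(sum(row) for row in r2)
    total_q2 = sum(sum(row) for row in q2)
    return msa, total_r2 / (total_r2 + total_q2)


def bartlett_sphericity(corr, n):
    """Bartlett's chi-square statistic and df for H0: the correlation matrix is an identity."""
    p = len(corr)
    stat = -(n - 1 - (2 * p + 5) / 6) * log_det(corr)
    return stat, p * (p - 1) // 2


# Hypothetical 4-item correlation matrix with every inter-item correlation equal to 0.5.
corr = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
msa, overall = kmo(corr)
stat, df = bartlett_sphericity(corr, n=135)
print(round(min(msa), 2), round(overall, 2))  # 0.8 0.8
print(round(stat, 1), df)                     # 153.3 6
```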

Table 3. Principal Component Analysis (N = 135).

We originally measured strategic planning with nine items taken from Poister et al.'s (2013) survey instrument for measuring strategy formulation as formal strategic planning and logical incrementalism. One item (var10) had a large unique variance (0.70) not shared with its factor, so we removed it from the formal strategic planning scale. We subsequently measured formal strategic planning (FSP) and logical incrementalism (LI) with four indicators each, with satisfactory reliability.

Further, we used items from Poister and Streib's (2005) survey instrument to measure performance measurement system design (PMS design) as an emergent variable (Henseler, 2021) with four elements. The first three elements are measuring time series data, using the system for comparisons and benchmarking, and reporting on goal achievement. Hood (2007) termed these intelligence, rankings, and targets, respectively. The fourth element is linking the strategy to the performance measurement system by changing the performance indicators to align with new strategies. This resembles what de Bruijn (2007) termed “liveliness” in performance measurement. The weight for benchmarking was close to zero, so this element was discarded from the final emergent variable.
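An emergent variable is a weighted composite of its indicators. The following is a minimal sketch that assumes fixed, hypothetical weights. In the study, ADANCO estimated the Mode B weights, and this code does not replicate that estimation. The sketch standardizes each indicator, forms the weighted sum, and rescales the result to unit variance. It also computes the variance inflation factors (VIFs) for three indicators, which is the collinearity check reported for the measurement models. Each VIF is a diagonal element of the inverse of the indicators' correlation matrix. The indicator scores, weights, and correlations are invented for illustration.

```python
import statistics


def standardize(values):
    """Rescale values to mean 0 and population SD 1 (a constant column would divide by zero)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


def composite_scores(indicators, weights):
    """Weighted sum of standardized indicators, rescaled to mean 0 and variance 1."""
    z_cols = [standardize(col) for col in indicators]
    n_obs = len(z_cols[0])
    raw = [sum(w * z[i] for w, z in zip(weights, z_cols)) for i in range(n_obs)]
    return standardize(raw)


def vif_three(r12, r13, r23):
    """VIFs of three indicators from their pairwise correlations.

    Each VIF is a diagonal element of the inverse 3x3 correlation matrix,
    i.e. the matching cofactor divided by the determinant.
    """
    det = 1 + 2 * r12 * r13 * r23 - r12**2 - r13**2 - r23**2
    return ((1 - r23**2) / det, (1 - r13**2) / det, (1 - r12**2) / det)


# Hypothetical 7-point scores for five organizations on the three retained elements.
time_series = [5, 6, 4, 7, 3]
goals = [6, 6, 5, 7, 4]
strategy_link = [4, 5, 4, 6, 2]
scores = composite_scores([time_series, goals, strategy_link], weights=[0.3, 0.4, 0.4])
print([round(s, 2) for s in scores])
print(vif_three(0.5, 0.5, 0.5))  # (1.5, 1.5, 1.5); values below 3 meet the threshold
```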

Finally, we used five items to measure a latent variable for our dependent variable, purposeful performance information use. The five indicators were selected from a 19-item research instrument (Poister & Streib, 2005). That instrument measured how information from strategic planning was used to influence the focus on mission, goals, and priorities; external relations; management and decision making; employee supervision and development; and performance.

Table 4 reports descriptive statistics for the indicators as well as the results for the measurement models. Performance information use, formal strategic planning, and logical incrementalism were measured as latent variables with consistent Mode A, which yields consistent parameter estimates similar to those in covariance-based structural equation modeling (SEM). Performance measurement system design was measured as an emergent variable using Mode B, because it is a design variable formed by its indicators. These indicators measure the extent to which the organization has included one or more performance measurement elements: management by objectives, monitoring by time series, benchmarking by comparison with others, and linking changes in the strategic plan to changes in the performance measurement system. All three latent variables had high reliability scores, from 0.84 to 0.87, above the recommended threshold of 0.70, indicating good construct reliability. Average variance extracted (AVE) ranged from 0.56 to 0.59, above the threshold of 0.50, indicating good convergent validity. One of the 13 indicator loadings for the latent variables was marginally below 0.70. It was retained because of its theoretical importance, and all the indicator loadings were significant (p < 0.001). The weights of the three indicators for the emergent variable were all significant (p < 0.01). The variance inflation factor (VIF) was below the recommended threshold of 3 for all three indicators. The highest heterotrait-monotrait (HTMT) ratio was 0.81, between formal strategic planning and logical incrementalism. This is below the threshold of 0.85, indicating satisfactory discriminant validity.
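The reliability and validity criteria above have simple closed forms. The following minimal sketch computes them from hypothetical standardized loadings and a hypothetical indicator correlation matrix. ADANCO reports several reliability coefficients, and the text does not say which one was used. Jöreskog's composite reliability (rho_c) is shown here as one common choice. AVE is the mean squared loading. HTMT divides the mean between-construct correlation by the geometric mean of the average within-construct correlations.

```python
import math
import statistics


def composite_reliability(loadings):
    """Jöreskog's composite reliability (rho_c) from standardized loadings."""
    total = sum(loadings)
    error_variance = sum(1 - lam**2 for lam in loadings)
    return total**2 / (total**2 + error_variance)


def average_variance_extracted(loadings):
    """AVE: the mean squared standardized loading."""
    return statistics.fmean([lam**2 for lam in loadings])


def htmt(corr, block_a, block_b):
    """Heterotrait-monotrait ratio of correlations for two constructs.

    corr is the indicator correlation matrix; block_a and block_b hold the
    row/column indices of each construct's indicators.
    """
    hetero = statistics.fmean([corr[i][j] for i in block_a for j in block_b])

    def monotrait(block):
        # Mean correlation among distinct indicators of the same construct.
        return statistics.fmean(
            [corr[i][j] for k, i in enumerate(block) for j in block[k + 1:]])

    return hetero / math.sqrt(monotrait(block_a) * monotrait(block_b))


loadings = [0.8, 0.8, 0.8, 0.8]  # hypothetical standardized loadings
print(round(composite_reliability(loadings), 3))       # 0.877 (threshold 0.70)
print(round(average_variance_extracted(loadings), 2))  # 0.64 (threshold 0.50)

# Hypothetical correlations: 0.6 within each construct, 0.3 between them.
corr = [[1.0, 0.6, 0.3, 0.3],
        [0.6, 1.0, 0.3, 0.3],
        [0.3, 0.3, 1.0, 0.6],
        [0.3, 0.3, 0.6, 1.0]]
print(round(htmt(corr, [0, 1], [2, 3]), 2))  # 0.5 (threshold 0.85)
```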

Table 4. Assessment of Measurement Models (N = 135).

The potential risk of common method bias

The indicators for the multi-item variables were measured in the same survey and with the same response method (Likert scales). Common method bias is therefore a potential problem in our study, even though the survey data were collected from four sub-samples. Podsakoff et al. (2003, pp. 881–885) identify several factors that may lead respondents to give similar answers to different items. These include respondents' tendency to appear consistent and rational, implicit assumptions about relationships between items, social desirability (the wish to present oneself in a favorable light or to behave in a culturally acceptable manner), yea-saying, introductory respondent instructions, and the use of identical scales. This may in turn bias construct validity and reliability measures as well as estimates of relationships between constructs (Podsakoff et al., 2012), although the gravity of the problem may be exaggerated (George & Pandey, 2017).

Probably the most suitable statistical method for controlling common method bias, the CFA marker variable technique (Podsakoff et al., 2012), was not available in our case. Harman's single-factor test, on the other hand, is a simple but not watertight method for revealing such bias (Jakobsen & Jensen, 2015). In our case, this test shows that the first factor accounts for 47 percent of the variance in all indicators, slightly below the recommended limit of 50 percent. In addition, convergent validity and discriminant validity (Henseler, 2021) are satisfactory in our model. Convergent validity means that the items for a latent variable converge to represent the underlying construct, and it was measured by the average variance extracted (AVE). Discriminant validity means that conceptually different constructs are also statistically different. This might indicate that common method bias is not a substantial problem in our study.
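Harman's test asks whether a single factor accounts for most of the variance across all items. It is usually run as an unrotated single-factor or principal component solution. The following minimal sketch approximates it with the first principal component's share of total variance in an item correlation matrix. It finds the largest eigenvalue by power iteration. The study's 47 percent figure came from the authors' own software. The matrix below is hypothetical and was chosen to show a case that would exceed the 50 percent rule of thumb.

```python
import math


def first_component_share(corr, iterations=1000, tol=1e-12):
    """Share of total variance captured by the first principal component.

    Power iteration finds the largest eigenvalue of the correlation matrix;
    the total variance of standardized items equals the number of items.
    Assumes a unique dominant eigenvalue (e.g. mostly positive correlations).
    """
    p = len(corr)
    vec = [1.0 / math.sqrt(p)] * p  # unit-length starting vector
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(corr[i][j] * vec[j] for j in range(p)) for i in range(p)]
        new_value = sum(v * w for v, w in zip(vec, product))  # Rayleigh quotient
        norm = math.sqrt(sum(v * v for v in product))
        vec = [v / norm for v in product]
        if abs(new_value - eigenvalue) < tol:
            eigenvalue = new_value
            break
        eigenvalue = new_value
    return eigenvalue / p


# Hypothetical item correlation matrix: four items, all correlated at 0.5.
corr = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
share = first_component_share(corr)
print(round(share, 3), "below 0.50" if share < 0.5 else "at or above 0.50")  # 0.625 at or above 0.50
```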

Analysis

We first analyze bivariate relationships before we present the multivariate analysis.

Hypothesis 1: Different use of performance information in local and central government

To test hypothesis 1, Table 5 compares mean performance information use in local and central government. The mean for local government organizations was 5.26 (std. dev. = 0.88), whereas the mean for central government organizations was higher, 5.57 (std. dev. = 0.81). A Welch's independent samples t-test, which does not assume equal variances, showed that this 0.31 difference was significant (Welch's t = −2.07, df = 106.97, p < 0.05). This suggests that performance information use differed significantly between local and central government organizations. Thus, central government organizations reportedly used performance information more than local government organizations.
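Welch's statistic can be reproduced from group summaries. The same test was used earlier in the non-response comparison of organizational size. The following is a minimal sketch. The means and standard deviations are those reported above, but the group sizes are hypothetical placeholders. The actual sizes are in Table 5, and the placeholders were merely chosen to sum to the usable sample of 135, so the printed t and df will not exactly match the reported values. A two-tailed p-value would come from the t distribution, for example `2 * scipy.stats.t.sf(abs(t), df)`.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom from group summaries."""
    v1 = sd1**2 / n1  # squared standard error of mean 1
    v2 = sd2**2 / n2  # squared standard error of mean 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Reported means and SDs; N_LOCAL and N_CENTRAL are hypothetical placeholders.
N_LOCAL, N_CENTRAL = 85, 50
t, df = welch_t(5.26, 0.88, N_LOCAL, 5.57, 0.81, N_CENTRAL)
print(f"difference = {5.26 - 5.57:.2f}, t = {t:.2f}, df = {df:.1f}")
```

Unlike Student's t-test, the Welch version does not pool the variances, so its degrees of freedom fall between min(n1, n2) − 1 and n1 + n2 − 2.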

Table 5. Performance Information Use by Sector (N = 135).

Agencies had the highest mean performance information use and counties the lowest, with higher education institutions second highest. There are possible explanations for the high performance information use in central government organizations. Agencies are closely monitored by their ministries. Higher education institutions have good opportunities for comparison with other higher education institutions and compete for students. Municipalities also have good opportunities for comparison but do not compete directly, even though they compete for businesses and for citizens “voting with their feet” (Tiebout, 1956).

Therefore, the mechanism stating that organizations with good opportunities for comparison use performance information more than organizations with poor opportunities for comparison was only partly supported.

For formal strategic planning and logical incrementalism, higher education institutions scored highest and municipalities lowest.

Hypothesis 2: Design of the performance measurement system

To test hypothesis 2, we start by analyzing the bivariate correlation between performance measurement system design and performance information use (Table 6). The strong, positive, and significant correlation (r = 0.62, p < .001) corroborates hypothesis 2. Organizations with an extensive performance measurement system design use performance information more than organizations with a simple design. The multivariate analysis also supports this result (Figure 1 and Table 7).
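Table 6 reports Spearman correlations. Spearman's rho is the Pearson correlation of ranks, with tied values given the average of the ranks they span. This suits scores built from 7-point Likert items, where ties are common. The following minimal sketch uses hypothetical scores for six organizations and omits significance testing.

```python
import math


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # positions start..end hold ranks start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical 7-point scores for six organizations.
pms_design = [3, 5, 5, 6, 7, 4]
info_use = [4, 5, 6, 6, 7, 4]
print(round(spearman(pms_design, info_use), 2))  # 0.94
```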

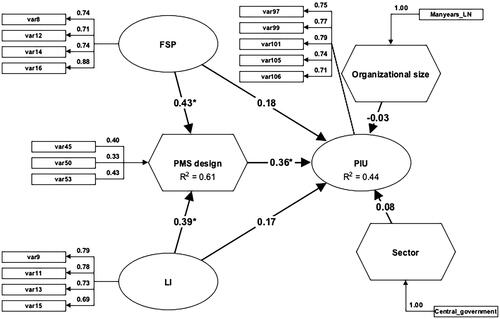

Figure 1. Main results from the measurement models and the structural model (N = 135). PLS-PM results, 300 iterations, 5,000 bootstrap samples. SRMR = 0.06 (HI95 = 0.05, HI99 = 0.06). Highest HTMT = 0.81. *p < 0.05; **p < 0.01; ***p < 0.001, two-tailed p-values. Path-coefficients are standardized (beta) coefficients.

Table 6. Spearman Correlations (N = 135).

Table 7. Assessment of the Structural Model (N = 135).

Higher education institutions had the most extensive performance measurement systems, and counties seemed to have the least extensive ones. Note, however, that several counties had recently merged in a regional structural reform completed in 2020. At the time of the study, they may not yet have prioritized adapting their performance measurement systems to the new strategies and structures.

Hypothesis 3: Organizational size

Hypothesis 3 stated that big organizations use performance information more than small organizations. Table 6 shows that hypothesis 3 was not supported, because the bivariate relationship was weak (r = 0.08) and not significant. Organizational size was not statistically significant in the multivariate analysis either (see Figure 1 and Table 7).

Hypothesis 4: Strategic planning process

To test hypothesis 4 on strategy formulation, as well as the direct and indirect relationships, we conducted a multivariate analysis. Performance information use was the dependent variable, formal strategic planning and logical incrementalism were exogenous (independent) variables, and performance measurement system design was the mediating variable. A dichotomous variable for sector (local government organization = 0, central government organization = 1) and organizational size (man-years, natural log-transformed) were included as control variables for hypotheses 1 and 3, respectively. We chose variance-based partial least squares path modeling (PLS-PM) to estimate the model. PLS-PM can estimate path models with composites as well as latent variables and can also handle small samples (Henseler, 2021).

Table 7 reports the results for the structural model, and Figure 1 visualizes the path model. The model explained 44 percent of the variation in performance information use (R² = 0.44) and 61 percent of the variation in performance measurement system design (R² = 0.61). The global fit of the estimated model was satisfactory (SRMR = 0.06). The SRMR was marginally higher than the 95 percent quantile of the reference distribution (HI95 = 0.05) but within the 99 percent quantile (HI99 = 0.06). Formal strategic planning and logical incrementalism had positive relationships with performance measurement system design (beta = 0.43 and 0.39, respectively), both statistically significant at p < 0.05. Formal strategic planning had a medium effect (f² = 0.17) and logical incrementalism a weak effect (f² = 0.13) on performance measurement system design, marginally supporting hypothesis 4. Performance measurement system design had a positive and significant relationship with performance information use (beta = 0.36, p < 0.05), with a weak effect (f² = 0.09), supporting hypothesis 2.
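The effect sizes and global fit index above follow standard formulas. Cohen's f² for a predictor is the drop in R² when that predictor is removed, divided by the variance left unexplained by the full model. By Cohen's conventional cut-offs, the values described as "weak" here fall in the "small" band. SRMR is the root mean square of the differences between the observed and model-implied correlations. The following minimal sketch shows both. ADANCO computes these from its own estimates, and the HI95 and HI99 reference quantiles come from bootstrapping, which is not reproduced here. The R² values and correlation matrices in the examples are hypothetical.

```python
import math


def f_squared(r2_included, r2_excluded):
    """Cohen's f^2 for one predictor: R^2 lost when it is dropped, relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def effect_label(f2):
    """Cohen's conventional cut-offs: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


def srmr(observed, implied):
    """Standardized root mean square residual between two correlation matrices.

    Averages squared residuals over the p(p + 1) / 2 non-redundant elements
    (lower triangle including the diagonal).
    """
    p = len(observed)
    residuals = [(observed[i][j] - implied[i][j]) ** 2
                 for i in range(p) for j in range(i + 1)]
    return math.sqrt(sum(residuals) / len(residuals))


# Labels for the f^2 values reported above.
print([effect_label(f2) for f2 in (0.17, 0.13, 0.09)])  # ['medium', 'small', 'small']

# Hypothetical R^2 values: dropping a predictor lowers R^2 from 0.61 to 0.55.
print(round(f_squared(0.61, 0.55), 3))  # 0.154

# Hypothetical observed vs. model-implied correlations for three indicators.
observed = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
implied = [[1.0, 0.4, 0.4], [0.4, 1.0, 0.4], [0.4, 0.4, 1.0]]
print(round(srmr(observed, implied), 3))  # 0.071
```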

Neither formal strategic planning nor logical incrementalism had a statistically significant direct effect on performance information use. The effects of both on performance information use were mediated by performance measurement system design.
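In a single-mediator model, the indirect effect is the product of the path into the mediator and the path from the mediator to the outcome. From the reported standardized coefficients, the implied point estimates are about 0.43 × 0.36 ≈ 0.15 for formal strategic planning and 0.39 × 0.36 ≈ 0.14 for logical incrementalism. Whether such products differ from zero is typically judged with a bootstrap confidence interval, and Figure 1 notes 5,000 bootstrap samples. The following minimal sketch illustrates a percentile bootstrap for one mediator using ordinary least squares on simulated observed variables. It substitutes a simpler technique for ADANCO's consistent PLS estimation and uses 1,000 draws to keep it fast. The data are synthetic and are not the study's.

```python
import random


def mean(values):
    return sum(values) / len(values)


def slope(y, x):
    """OLS slope of y on x (with intercept)."""
    mx, my = mean(x), mean(y)
    return (sum((a - mx) * (b - my) for a, b in zip(x, y))
            / sum((a - mx) ** 2 for a in x))


def slopes_two(y, x1, x2):
    """OLS slopes of y on x1 and x2 (with intercept) from centered normal equations."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12**2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def indirect_effect(x, m, y):
    """a * b: path x -> m times path m -> y (controlling for x)."""
    a = slope(m, x)
    b, _direct = slopes_two(y, m, x)
    return a * b


def bootstrap_ci(x, m, y, draws=1000, level=0.95, seed=1):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(draws):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        estimates.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int((1 - level) / 2 * draws)]
    upper = estimates[int((1 + level) / 2 * draws) - 1]
    return lower, upper


# Point estimates implied by the reported standardized paths (a * b).
print(round(0.43 * 0.36, 3), round(0.39 * 0.36, 3))  # 0.155 0.14

# Simulated data (not the study's): x -> m -> y with no direct x -> y path.
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(300)]
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [0.4 * mi + rng.gauss(0, 1) for mi in m]
ci = bootstrap_ci(x, m, y)
print(round(indirect_effect(x, m, y), 3), ci)
```

An interval that excludes zero supports an indirect effect. A non-significant direct path alongside a significant indirect path is the pattern described above.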

Hypotheses 1 and 3 were not corroborated, as both control variables had small and non-significant relationships with performance information use.

Discussion

Performance information use in central and local government organizations

More detailed regulation of performance management in central government than in local government may be one explanation for the differing degrees of performance information use.

In Norway, for example, the financial management regulations for central government (specifically, section 4 of the central government's Financial Regulations) require all central government organizations to formulate goals, set targets, and measure performance. They must also ensure that results are achieved efficiently and effectively, in accordance with the priorities set by superior authorities and in compliance with rules and bureaucratic norms of good practice. Decision-making should be based on sound and sufficient information. Moreover, the organizations should design their control systems with due regard to specificity, risk, and materiality. This means that all central government organizations covered by the regulations are required to have a form of performance management that involves setting goals and measuring performance.

The municipalities and counties are also subject to regulations on planning and financial management. The Local Government Act of 1992, revised in 2018, is based largely on theory and norms of good strategic planning and financial management in both community planning and corporate governance. However, the municipalities and counties do not face the same detailed requirements as the central government organizations. The municipal and county authorities are required to report in a standardized format to the public via Statistics Norway, and hence to the central government (the KOSTRA system). How they design their performance measurement systems and use their performance information, for example in annual reporting, is nevertheless their own prerogative. Although the municipalities and counties are not regulated in as much detail as the central government organizations, performance management is an established practice in most municipalities and counties. Arm's-length performance management is also an established principle in the central government's governance of local government (Meld. St. 12 (2011–2012)). It is conceivable that widespread norms and established practices in the municipalities also contribute to extensive performance information use, particularly through balanced scorecards and benchmarking (Askim, 2004; Askim et al., 2007; Johnsen, 1999; Madsen et al., 2019).

Central government organizations are statutorily required to have a form of performance management that can be interpreted as management by objectives and results (Lægreid et al., 2006). It is conceivable that some central government organizations practice performance management because the regulations require it, without this affecting their actual performance information use as much as in the municipalities and counties. Performance management may then function mostly as “window dressing” for external legitimacy. The self-reported data in our analysis, however, indicate widespread purposeful use of performance information in central government organizations.

Regulation may play an important part in performance information use, but other factors are also important for understanding differences in performance information use.

Performance measurement system design

Performance measurement system design turned out to be the variable with the strongest relationship with and the strongest effect on performance information use. Moreover, formal strategic planning and logical incrementalism were both related to performance measurement system design, but formal strategic planning had a slightly stronger positive relationship to performance measurement system design and performance information use than logical incrementalism. Thus, strategy formulation may be important for performance information use but mainly via its link to performance measurement system design.

In designing their performance measurement systems, central government organizations could be expected to use some variant of monitoring and management by objectives more than local government organizations. One example is management by objectives and results (Lægreid et al., 2006). Municipalities and counties, on the other hand, could be expected to use balanced scorecards (Madsen et al., 2019) and benchmarking more than central government organizations, or at least more than agencies. An analysis of the elements of performance measurement system design by sector showed that this was the case. Central government organizations reported performance against goals (i.e., management by objectives and results/targets) more than local government organizations did, and local government organizations used benchmarking (rankings) more than central government organizations did.

The Norwegian Agency for Public and Financial Management (DFØ) largely interprets section 4 of the central government's Financial Regulations as management by objectives. The agency is an authoritative professional body for the central government administration. Its interpretations of the regulations serve as norms for other central government agencies' understanding of the regulations and for their management practices. For example, the agency published guidelines on management by objectives and performance measurement in 2006 (The Norwegian Agency for Public & Financial Management, 2006).

We expected the municipalities and counties to use balanced scorecards more than the central government organizations, but we had no data available for this comparison. The municipalities are not required to use balanced scorecards, but the ministry's guides clearly signal that doing so is advisable (a norm). In 2002, the Ministry of Local Government and Regional Development published a guide to implementing balanced scorecards in municipalities and counties (Ministry of Local Government & Regional Development, 2002). In 2004, the same ministry published another guide on performance management, covering balanced scorecards and other forms of systematic performance measurement in the local government sector (Ministry of Local Government & Regional Development, 2004). We therefore assume that the balanced scorecard is a common model for performance management in municipalities.

Several studies have analyzed the extent to which municipalities have adopted balanced scorecards. A 2008 survey showed that more than half of the municipalities used balanced scorecards (Hovik & Stigen, 2008, pp. 33, 103). A 2014 survey found that 65 percent of the municipalities did so (Daleq & Hobbel, 2014). A 2019 survey, however, found that only 36 percent of the municipalities used balanced scorecards, but that survey had a low response rate (Madsen et al., 2019).

Measurability and performance information use

Strategic planning can be formal or incremental, and in practice it is often a blend of the two (the bivariate correlation between formal strategic planning and logical incrementalism was r = 0.81). Either way, by using management tools and processes, strategic planning can help prioritize tasks and clarify objectives and goals to improve performance. Such planning seems to increase measurability, facilitate a better fit between the strategy and the performance management system, and increase the use of performance management over time.

Management support capacity

Organizational size is an often-used indicator of management support capacity, among other concepts. A common assumption is that big organizations have more management support capacity and/or competence. They could therefore devote more resources to designing their performance measurement systems and use performance information more than small organizations. Our analysis, however, did not support this assumption. The bivariate relationship between organizational size and performance measurement system design was somewhat stronger (r = 0.20) but also not significant (Table 6). Organizational size reflects many factors, including management capacity, which affects the supply of information, and uncertainty, which affects the demand for information. Used in isolation as an indicator, organizational size therefore conflates different mechanisms. Factors related to size remain interesting and may be relevant for explaining performance measurement system design as well as performance information use, but other factors also appear to be at play.

Comparability

One reason to expect municipalities, and to some extent counties, to use performance information more than many central government organizations is that municipalities have good opportunities for finding comparable organizations and services. Moreover, municipalities and counties in Norway and many other countries have readily available, comparable performance indicators suitable for monitoring, benchmarking, and annual reporting. For many central government organizations, finding relevant comparisons and obtaining data are much more challenging. The municipalities and counties therefore have better opportunities than most central government organizations for designing their performance measurement systems and using performance information for benchmarking. Higher education institutions, however, also have ample opportunities for benchmarking and readily available data for such use, an opportunity they seem to have grasped. A bivariate analysis indicated that many municipalities had not grasped this opportunity to the same extent or may have been unwilling to engage in comparisons. Similarly, two studies reported that municipal officials varied, for several reasons, in their willingness to embrace comparisons: Siverbo and Johansson (2006) studied relative performance measurement (benchmarking) in Swedish municipalities, and Ammons and Rivenbark (2008) studied a US benchmarking project. We have no systematic data on training in performance management reforms in Norway. However, benchmarking networks have been used systematically to increase competence and performance information use in Norwegian municipalities (Askim et al., 2007), and many municipalities have routinely used performance information for benchmarking for a long time (Hovik & Stigen, 2008).

Even though many municipalities have good opportunities for benchmarking, many may have lacked the motive or will to make full use of this opportunity. They may also not have institutionalized such routines to the same degree as the more heavily regulated central government organizations.

We pointed earlier to the breadth of the service portfolio and the danger of information overload. The large number of services that multi-purpose municipalities must monitor and manage may make performance management more challenging for them than for many single-purpose organizations, even though many municipal services are relatively easy to measure.

Other factors

Performance information use may vary not only between organizations, which we have analyzed, but also within organizations across organizational echelons, which we have not. In a UK case study, Micheli and Pavlov (2019) found that senior management used performance information purposefully to improve efficiency and effectiveness, as well as passively for monitoring, control, and reporting. Frontline staff, by contrast, used it only passively, missing opportunities to improve services. In a representative study of public managers in Denmark, Grøn and Kristiansen (2022) also found that top managers used performance information more than other echelons did, in particular information on outcomes and user satisfaction. These results diverge from Taylor's (2011) earlier finding, in her study of Australian state government agencies, that middle-level managers used performance information for decision making more than top-level managers did.

Since Kroll's (2015a) review, more research has examined how individual factors such as politicians' background, leadership style, motivation, and involvement explain performance information use (Askim, 2009; Moynihan & Lavertu, 2016), and how specific purposes such as learning, and perceptions of performance (negativity bias), affect performance information use (Andersen & Nielsen, 2020; George et al., 2020; Holm, 2018; Johnsen, 2012; Nielsen, 2014). Performance information use may therefore also vary by organizational position and level, but how this variation is patterned is not yet systematically known.

Conclusions

Previous research has found mixed evidence regarding the link between strategy and performance management in public sector organizations but has identified many factors affecting performance information use. We analyzed how strategic planning, measured as formal strategic planning and logical incrementalism, was related to performance measurement system design and performance information use. In addition, we studied direct as well as indirect effects. Our mediating and control variables, performance measurement system design and organizational size, correspond to measurement system maturity and support capacity, which are among the major drivers identified in earlier studies, and we explored how environmental contingencies for four different types of public sector organizations were related to performance information use. We found no support for the notion that local government organizations (owing to greater measurability and comparability) or large organizations use performance information more than central government organizations or small organizations. Other factors are at play: our analysis indicates that strategy formulation, through formal strategic planning as well as logical incrementalism, performance measurement system design, and the link between these are positively related to performance information use. Most importantly, the design of the performance measurement system seems to be a crucial factor in linking strategy formulation to the use of performance information for strategy implementation.
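To make the distinction between direct and indirect effects concrete, the decomposition below shows, in general form, how a mediated effect is obtained in a linear path model such as the structural (inner) model in PLS-PM. It is an illustrative sketch, not a reproduction of this study's specification or estimates: the symbols are assumptions introduced here, with $X$ standing for strategy formulation (formal strategic planning or logical incrementalism), $M$ for performance measurement system design, $Y$ for performance information use, and control variables omitted for simplicity.

```latex
\begin{aligned}
M &= a X + \varepsilon_M, \\
Y &= c' X + b M + \varepsilon_Y, \\
\text{indirect effect of } X \text{ on } Y &= a\,b, \\
\text{total effect of } X \text{ on } Y &= c' + a\,b.
\end{aligned}
```

Under this reading, a non-significant direct path $c'$ combined with positive paths $a$ and $b$ is the pattern that supports interpreting performance measurement system design as the link between strategy formulation and performance information use.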

Once the role of performance measurement system design is recognized, the link between strategy and performance information use in public sector organizations may be stronger than is often assumed. Moreover, given the important mediating effect of performance measurement system design, practitioners may reap more benefit from strategic planning by designing their performance measurement systems wisely. Providing information that is useful for monitoring and implementing the chosen strategy, and probably also heeding the informational needs of different stakeholders and organizational echelons, are cues for designing the performance management system better.

Our findings have implications for theory and practice. Public management regulations affect strategic planning and performance management systems to some degree, but these factors are also partly at the discretion of the public sector organizations themselves. Hence, public sector organizations that want to improve performance information use should formulate strategies whose objectives and targets capture substantive issues and reduce uncertainty and ambiguity, and should design their performance measurement systems to mitigate information overload and to fit the organization's specificity, risk, and materiality.

This study has some limitations. First, most of the data are observational and correlational and come from a survey with a single respondent per organization. Replicating existing research instruments has been important for securing reliable and valid variables, but observational and correlational data cannot establish causality or secure internal validity. Second, the responding organizations were on average larger than the organizations in the population. Third, the data come from one country with a specific public management context. Public management reforms over many years have probably made strategic planning and performance management norms relatively similar across many countries, but national and local public management regulations and practices may still vary across countries. Norway, together with the other Nordic countries, is consistently rated as having an effective public sector and a high degree of digitized services. This could indicate that the Norwegian public management context has an innovative culture with strong leadership support, which could have a bearing on the results. The external validity of some of the empirical relationships may be low, but we still believe the relationships between the factors have general relevance.

Strategic planning and performance measurement are means to manage performance to create public value. Further studies could incorporate measures of organizational performance as well as additional intermediate variables such as goal clarity, training, and stakeholder involvement in both the strategic planning and the performance measurement system design processes. It would also be interesting to identify the specific performance management models used in designing performance measurement systems, to study whether some models fit strategic planning better than others and have a greater impact on performance information use and performance.

Acknowledgements

We acknowledge the comments we received when an earlier version of the paper was presented at the European Group of Public Administration (EGPA) annual conference, Permanent Study Group XI Strategic Management in Government, Lisbon, 7–9 September 2022. We also acknowledge the constructive comments from the Editor and the three reviewers.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Åge Johnsen

Åge Johnsen is a Professor of Public Policy at Oslo Business School, Oslo Metropolitan University, Norway. His research interests are public management reforms, strategic planning, performance management, change management, and audit and evaluation in the public sector.

Kerstin Solholm

Kerstin Emma Gunhild Ståhlbrand Solholm is a Senior Advisor at Innovation Norway. She works with performance management and governance.

Per Arne Tufte

Per Arne Tufte is an Associate Professor at Oslo Business School, Oslo Metropolitan University, Norway. His research interests include professionalism in financial advisory work in social services, social science research methodology, and welfare reforms.

References

- Abdel-Maksoud, A., Elbanna, S., Mahama, H., & Pollanen, R. (2015). The use of performance information in strategic decision making in public organizations. International Journal of Public Sector Management, 28(7), 528–549. https://doi.org/10.1108/IJPSM-06-2015-0114

- Ammons, D. N. (1999). A proper mentality for benchmarking. Public Administration Review, 59(2), 105–109. https://doi.org/10.2307/977630

- Ammons, D. N., & Rivenbark, W. C. (2008). Factors influencing the use of performance data to improve municipal services: Evidence from the North Carolina benchmarking project. Public Administration Review, 68(2), 304–318. https://doi.org/10.1111/j.1540-6210.2007.00864.x

- Andersen, S. C., & Nielsen, H. S. (2020). Learning from performance information. Journal of Public Administration Research and Theory, 30(3), 415–431. https://doi.org/10.1093/jopart/muz036

- Andrews, R., Boyne, G. A., Law, J., & Walker, R. (2012). Strategic management and public service performance. Palgrave Macmillan.

- Askim, J. (2004). Performance management and organizational intelligence: Adapting the balanced scorecard in Larvik municipality. International Public Management Journal, 7(3), 415–438.

- Askim, J. (2007). How do politicians use performance information? An analysis of the Norwegian local government experience. International Review of Administrative Sciences, 73(3), 453–472. https://doi.org/10.1177/0020852307081152

- Askim, J. (2009). The demand side of performance measurement: Explaining councillors’ utilization of performance information in policymaking. International Public Management Journal, 12(1), 24–47. https://doi.org/10.1080/10967490802649395

- Askim, J., Johnsen, Å., & Christophersen, K. A. (2007). Factors behind organizational learning from benchmarking: Experiences from Norwegian municipal benchmarking networks. Journal of Public Administration Research and Theory, 18(2), 297–320. https://doi.org/10.1093/jopart/mum012

- Askim, J., & Johnsen, Å. (2023). Performance management and accountability: The role of intergovernmental information systems. In F. Teles (Ed.), Handbook on local and regional governance (pp. 437–452). Edward Elgar. https://doi.org/10.4337/9781800371200.00042

- Bedford, D. S., & Malmi, T. (2015). Configurations of control: An exploratory analysis. Management Accounting Research, 27(2), 2–26. https://doi.org/10.1016/j.mar.2015.04.002

- Behn, R. D. (2003). Why measure performance? Different purposes require different measures. Public Administration Review, 63(5), 586–606. https://doi.org/10.1111/1540-6210.00322

- Benitez, J., Henseler, J., Castillo, A., & Schuberth, F. (2020). How to perform and report an impactful analysis using partial least squares: Guidelines for confirmatory and explanatory IS research. Information & Management, 57(2), 103168. https://doi.org/10.1016/j.im.2019.05.003

- Bryson, J. M. (2004). What to do when stakeholders matter: A guide to stakeholder identification and analysis techniques. Public Management Review, 6(1), 21–53. https://doi.org/10.1080/14719030410001675722

- Bryson, J. M. (2015). Strategic planning for public and nonprofit organizations. In J. Wright (Ed.), International encyclopedia of the social & behavioral sciences (2nd edition). Elsevier.

- Bryson, J. M., Crosby, B. C., & Bryson, J. K. (2009). Understanding strategic planning and the formulation and implementation of strategic plans as ways of knowing: The contributions of actor-network theory. International Public Management Journal, 12(2), 172–207. https://doi.org/10.1080/10967490902873473

- Bryson, J. M., & George, B. (2020). Strategic management in public administration. In B. G. Peters & I. Thynne (Eds.), Oxford research encyclopedia, politics. Oxford University Press.

- Bryson, J. M., George, B., & Seo, D. (2024). Understanding goal formation in strategic public management: A proposed theoretical framework. Public Management Review, 26(2), 539–564. https://doi.org/10.1080/14719037.2022.2103173

- Cavalluzzo, K. S., & Ittner, C. D. (2004). Implementing performance measurement innovations: Evidence from government. Accounting, Organizations and Society, 29(3–4), 243–267. https://doi.org/10.1016/S0361-3682(03)00013-8

- Daleq, B., & Hobbel, M. A. (2014). Spredning av balansert målstyring i norske kommuner. [The diffusion of balanced scorecard in Norwegian municipalities] [Master Thesis in Business Administration]. Handelshøyskolen i Trondheim.

- de Bruijn, H. (2007). Managing performance in the public sector (2nd edition). Routledge.

- DiMaggio, P. J., & Powell, W. W. (1983). The iron cage revisited: Institutional isomorphism and collective rationality in organizational fields. American Sociological Review, 48(2), 147–160. Reprinted in revised edition in W. W. Powell & P. J. DiMaggio (Eds.). (1991). The new institutionalism in organizational analysis (pp. 63–82). University of Chicago Press.

- Dimitrijevska-Markoski, T., & French, P. E. (2019). Determinants of public administrators’ use of performance information: Evidence from local governments in Florida. Public Administration Review, 79(5), 699–709. https://doi.org/10.1111/puar.13036

- Donaldson, L. (2001). The contingency theory of organizations. Sage.

- Drucker, P. F. (1976). What results should you expect? A user’s guide to MBO. Public Administration Review, 36(1), 12–19. https://doi.org/10.2307/974736

- Etzioni, A. (1967). Mixed scanning. A “third” approach to decision-making. Public Administration Review, 27(5), 385–392. https://doi.org/10.2307/973394

- Ferlie, E., & Ongaro, E. (2022). Strategic management in public services organizations: Concepts, schools and contemporary issues (2nd edition). Routledge.

- Frederickson, D. G., & Frederickson, H. G. (2006). Measuring the performance of the hollow state. Georgetown University Press.

- George, B., & Pandey, S. K. (2017). We know the yin – but where is the yang? Toward a balanced approach on common source bias in public administration scholarship. Review of Public Personnel Administration, 37(2), 245–270. https://doi.org/10.1177/0734371X17698189

- George, B., Baekgaard, M., Decramer, A., Audenaert, M., & Goeminne, S. (2020). Institutional isomorphism, negativity bias and performance information use by politicians: A survey experiment. Public Administration, 98(1), 14–28. https://doi.org/10.1111/padm.12390

- George, B., Walker, R., & Monster, J. (2019). Does strategic planning improve organizational performance? A meta-analysis. Public Administration Review, 79(6), 810–819. https://doi.org/10.1111/puar.13104

- Gerrish, E. (2016). The impact of performance management on performance in public organizations: A meta-analysis. Public Administration Review, 76(1), 48–66. https://doi.org/10.1111/puar.12433

- Grøn, C. H., & Kristiansen, M. B. (2022). What gets measured gets managed? The use of performance information across organizational echelons in the public sector. Public Performance & Management Review, 45(2), 448–472. https://doi.org/10.1080/15309576.2022.2045615

- Hair, J. F., Babin, B. J., Anderson, R. E., & Black, W. C. (2018). Multivariate data analysis (8th edition). Cengage.

- Han, X., & Moynihan, D. (2022). Does managerial use of performance information matter to organizational outcomes? The American Review of Public Administration, 52(2), 109–122. https://doi.org/10.1177/02750740211048891

- Hansen, J. R. (2011). Application of strategic management tools after an NPM-inspired reform: Strategy as practice in Danish schools. Administration & Society, 43(7), 770–806. https://doi.org/10.1177/0095399711417701

- Hansen, J. R., Pop, M., Skov, M. B., & George, B. (2022). A review of open strategy: Bridging strategy and public management research. Public Management Review, 26(3), 678–700. https://doi.org/10.1080/14719037.2022.2116091

- Hatry, H. P. (2002). Performance measurement: Fashions and fallacies. Public Performance & Management Review, 25(4), 352–358. https://doi.org/10.1080/15309576.2002.11643671