Abstract

Trust and privacy have emerged as significant concerns in online transactions. Sharing information on health is especially sensitive but it is necessary for purchasing and utilizing health insurance. Evidence shows that consumers are increasingly comfortable with technology in place of humans, but the expanding use of AI potentially changes this. This research explores whether trust and privacy concern are barriers to the adoption of AI in health insurance. Two scenarios are compared: The first scenario has limited AI that is not in the interface and its presence is not explicitly revealed to the consumer. In the second scenario there is an AI interface and AI evaluation, and this is explicitly revealed to the consumer. The two scenarios were modeled and compared using SEM PLS-MGA. The findings show that trust is significantly lower in the second scenario where AI is visible. Privacy concerns are higher with AI but the difference is not statistically significant within the model.

Introduction

Artificial intelligence (AI), particularly machine learning and deep learning, is disrupting insurance and health. In insurance, natural language processing is utilized extensively by virtual agents interacting with the consumer, and AI is also used to detect fraudulent claims (Wang and Xu 2018). In health it is used for diagnosis and treatment (He et al. 2019). The use of AI creates value by offering insight and turning insight into action. This happens for several services across several channels and across the whole value chain. Humans still have an important role, but they need support to be able to utilize vast amounts of data and respond quickly. AI is broad and immature in relation to its potential, so this is a challenging area. This is an interdisciplinary topic that goes beyond the narrow focus of developing the necessary technology and testing the usability. Its interdisciplinary nature is comparable to the emergence of other technologies, such as blockchain. There are many questions that need answers, but these questions are heavily influenced by the present capability and the extant diffusion of the technology. The benefits of AI for the consumer in health insurance include customized offers and real-time adaptation of fees. Currently the interface between the consumer purchasing health insurance and AI raises some barriers such as trust and Personal Information Privacy Concern (PIPC) (Gulati, Sousa, and Lamas 2019). The consumer is not a passive recipient of the increasing role of AI. Many consumers have beliefs on what this technology should do. Furthermore, regulation is moving toward making it necessary for the use of AI to be explicitly revealed to the consumer (European Commission 2019). Therefore, the consumer is an important stakeholder and their perspective should be understood and incorporated into future AI solutions in health insurance.
This research identified two scenarios: one with limited AI that is not in the interface and whose presence is not explicitly revealed to the consumer, and a second scenario where there is an AI interface and AI evaluation, and this is explicitly revealed to the consumer.

The insights AI offers can be summarized into optimization, search and recommendation, and diagnosis and prediction. In addition to the improving technology of AI, the capabilities are also increasing because of the technologies it interacts with. These technologies include big data, internet of things, increased computing capabilities and blockchain (Riikkinen et al. 2018). In general, there is far more data and more capabilities to analyze it. This raises the question whether the impact of AI can be evaluated and guided in isolation or if all these technologies should be evaluated as a new context. Blockchain technology can support the internet of things in terms of security and integrity of the data; the internet of things creates far more data; and big data and AI need to analyze it, for which they need more computing capabilities.

The challenges to AI depend on the specific implementation, the information system it is part of and the specific context. One challenge is implementations of AI that have negative impacts, for example on individuals’ health (He et al. 2019). The risks caused by AI seem to come from either using its capabilities to do something harmful more effectively or by AI making incorrect evaluations. In a fully automated system, mistakes will be implemented directly. If AI is working with humans, the humans may act based on the incorrect evaluation. As AI can be unpredictable, this raises some questions in terms of control and how to manage the risks. Society, governments and other institutions like the European Union are attempting to regulate and offer guidelines on how to move forward with AI in an ethical way, reducing the risks to the consumer (European Commission 2019). This research focuses on the barriers from the consumer’s perspective when they are purchasing health insurance online.

AI offers some unique capabilities but most of the impact is as a part of a wave of innovation that will optimize and create new products, services, business models and business ecosystems. AI can be seen as the catalyst because it can harness the breadth of hardware and software in a way that was not possible before: It can mask the complexity and provide the value to the health insurance consumer. This increased role of AI, and the ecosystem of technologies it utilizes, influence the consumer’s attitude. The consumer will interpret some capabilities AI offers as enablers and some as risks and concerns. For example, limited trust and PIPC may be barriers.

The changes from related technologies and other trends in society, like greener living, mean people want to see different principles and values from their insurers. Therefore, the new ethical, privacy and trust challenges AI brings can be approached as part of a holistic reevaluation of the relationship between a consumer and their health insurer. New business models may require a new ethical perspective. Ethics and regulation are evolving as the uses and business models of AI evolve. The new ways of interacting with the consumer, the new interfaces and even the new business models must consider the enablers and barriers to AI in health insurance from the consumer’s perspective.

The next section will review health insurance, trust and PIPC to provide the theoretical foundation. This will be followed by the methodology that explains how the model is tested in the two scenarios, with and without visible AI. Finally, the analysis and the conclusion are presented.

Theoretical background

AI in health insurance raises many new issues grounded in the existing, and widely validated, constructs of ease of use, usefulness, trust and Personal Information Privacy Concern (PIPC).

Perceived ease of use and usefulness in health insurance with AI

Information systems have been divided into hedonic and utilitarian (van der Heijden 2004). Purchasing health insurance is mostly utilitarian as it is something useful but not necessarily an enjoyable process and the motivation to do it is not enjoyment. Adoption and attitude toward the use of a technology are influenced by some factors that are similar across different contexts and some that are different depending on the context. Perceived ease of use and perceived usefulness (Davis 1989; Venkatesh, Thong, and Xu 2016) have been found to influence adoption and use across several contexts such as adopting insurance (Lee, Tsao, and Chang 2015). In systems that are more focused on hedonism, a construct for enjoyment could be included along with ease of use and usefulness, but here it is not included. While these measures have been validated in the insurance sector, the items used to operationalize them need to be adapted to capture the increased role of AI in health insurance. AI in health insurance can influence perceived ease of use with a personal assistant in several ways. Personal assistants are used in the interaction with the consumer and utilize machine learning for natural language processing and analyzing the consumer request. Firstly, the system using AI and a personal assistant can keep a constant state across many consumer queries. This means the system will keep all the relevant information together and utilize it for each query, so the consumer needs to make less effort. A second example is that AI can interpret unstructured data like pictures and emails from the consumer, so that information does not need to be reentered manually. AI in health insurance can influence perceived usefulness positively by processing applications fast and making customized offers. Some insurers offer customized quotes in minutes (Baloise 2019).

Trust in health insurance with AI

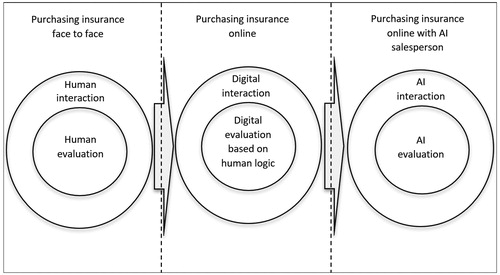

Trust is important where there is an exchange of value that involves some risk. The higher the risk the more important trust becomes. Making a purchase online is perceived to have higher risk compared to face to face and therefore the role of trust is more significant (McKnight and Chervany 2001). There is an additional risk in purchasing health insurance online because the consumer is taking a risk that the insurer will fulfill their duty and cover the consumer if they make a claim. Insurance companies and the information systems they use have developed sufficiently to build trust in the typical current scenario with limited AI that is not visible to the consumer. The increasing role of AI across the health insurance supply chain brings new sources of distrust that come from the lack of human attributes in more stages of the supply chain, both front-end and back-end, and the real or perceived unpredictability of AI. The consumer’s trust in a virtual agent they are interacting with can be reduced by the lack of human factors like visual and auditory emotions (Torre, Goslin, and White 2020). As Figure 1 illustrates, the interaction of the consumer can be divided into three scenarios: Firstly, a traditional face to face interaction without utilizing technology. Secondly, a digital interaction online that still utilizes human logic primarily and uses AI in a limited way that is not visible to the consumer. Lastly, the third scenario involves the consumer interacting purely with an AI interface and with the underlying logic and decision making also based on AI.

The consumer’s trust online is influenced by the psychology of the consumer and sociological influences. The psychology of the individual consumer may give them a propensity to trust (Kim and Prabhakar 2004) which is similar to a disposition to trust (Zarifis et al. 2014) and a trusting stance (McKnight et al. 2011). The social dimension of trust includes institution-based trust that covers structural assurance and situational normality (McKnight and Chervany 2001). Structural assurance refers to guarantees, seals of approval and protection from the bank or card used to make the transaction (Sha 2009). Situational normality can include reviews and conforming to social norms.

The risk the consumer perceives from AI having a significant and decisive role in every aspect of their health insurance can lower trust. There is limited familiarity with this level of AI, the ethics of AI are unclear, AI can be unpredictable, the transparency can be limited and the control the humans in the insurance company have over AI is unclear.

Personal information privacy concerns (PIPC) from threats by AI in health insurance

When a consumer enters personal information such as their date of birth, their bank account details and medical information on their health to acquire health insurance online, there is a concern about how this information is used, shared and stored securely. For a consumer to purchase health insurance they need to provide an extensive amount of information; enough for a criminal to commit fraud. Even if misuse or a security breach is revealed to the consumer, they cannot change their health information like they can change their bank account details to protect their privacy. Therefore, sharing this health information causes privacy concerns (Bansal, Zahedi, and Gefen 2010). These privacy concerns can be a key predictor of using health services online (Park and Shin 2020). Privacy concerns can be divided into perceived privacy control and perceived privacy risks (Dinev et al. 2013). Perceived privacy control can include confidentiality, secrecy and anonymity. Perceived privacy risks can include the sensitivity of the information and the level of regulation (Dinev et al. 2013).

Using AI in health insurance can reduce the perceived information control and increase the perceived risk. The lack of understanding of the role of AI in this context, the unpredictability of AI, the low transparency on the algorithms, the lack of humanness and the unclear ethics may increase the concerns over personal information privacy. Furthermore, AI is part of an ecosystem of technologies such as big data that enhance each other’s capabilities and pervasiveness. These increased capabilities and pervasiveness can elevate privacy concerns. When a consumer enters their personal information during the process of acquiring health insurance, they may be thinking about how this personal information could be used against them in the future. Currently, insurance companies offer privacy assurances and privacy seals that can reduce privacy concern (Hui, Teo, and Sang-Yong 2007); however, these do not explicitly cover the role of AI.

Research model and hypotheses

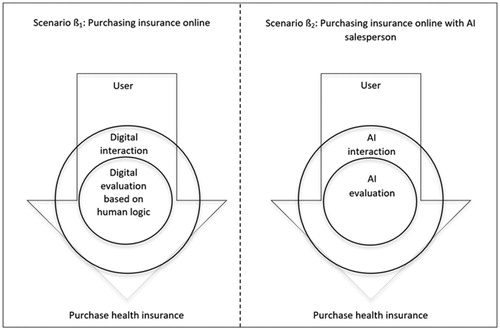

The online consumer evaluates the advantages and disadvantages of purchasing a product or service online before moving forward. Some weaknesses, or even threats, can be overlooked if the advantages are enough. An example of this is the privacy paradox (Norberg, Horne, and Horne 2007) where consumers still give their personal information despite their concerns. Therefore, it is necessary to identify all the relevant factors and model their relationship. The literature review identified two enablers and two barriers to the use of AI in insurance from the consumer’s perspective. The previous section on trust also identified how trust is influenced by the humanness of the interface with the insurer, and the humanness of the logic that leads to the evaluation of the insurance offered. As the consumer will be explicitly informed about their interaction with AI (European Commission 2019), this may influence their attitude and raise the barriers of insufficient trust and information privacy concerns. To better understand the scenario where the consumer purchases health insurance with an AI interface and AI logic and decision-making, this new scenario needs to be contrasted with the typical existing scenario. In the existing scenario there is limited AI that is not in the interface, and its presence is not explicitly revealed to the consumer. Therefore, as illustrated in Figure 2, two scenarios are contrasted: ß1, with limited AI, not explicitly revealed to the consumer, and ß2, with an AI interface and AI evaluation explicitly revealed to the consumer.

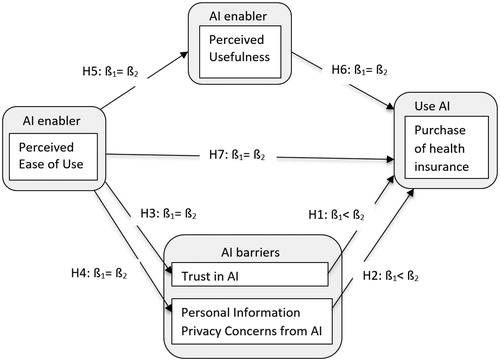

For these scenarios to be tested they are modeled as illustrated in Figure 3. The seven hypotheses proposed cover the relationships between the five constructs. The first two constructs are the enablers: firstly, the additional ease of use offered by AI, and secondly, the additional usefulness offered by AI. The second two constructs are the two barriers of trust and privacy concern. The final construct is the decision to purchase health insurance online.

The literature suggests that additional layers of technology, additional capabilities of technology and a reduction in the role of humans in a process can be perceived as an increase in the risk and therefore a reduction in trust and an increase in information privacy concern (McKnight et al. 2011). Therefore, the first and second hypotheses are the following:

H1: The consumer will have lower trust if the use of AI is visible to them during the process of purchasing health insurance online.

H2: The consumer will have higher Personal Information Privacy Concern if the use of AI is visible to them during the process of purchasing health insurance online.

The literature suggests that perceived ease of use has a positive influence on perceived usefulness, trust, privacy concern and the purchase of health insurance in both scenarios (Gefen, Karahanna, and Straub 2003; Al-Khalaf and Choe 2020). Similarly, perceived usefulness has a positive influence on the purchase in both scenarios. Therefore, the final five hypotheses are the following:

H3: Perceived ease of use will have the same influence on trust in AI if AI is visible during the purchase of health insurance online.

H4: Perceived ease of use will have the same influence on Personal Information Privacy Concern from AI if AI is visible during the purchase of health insurance online.

H5: Perceived ease of use will have the same influence on perceived usefulness of AI if AI is visible during the purchase of health insurance online.

H6: Perceived usefulness will have the same influence on the purchase of health insurance online if AI is visible.

H7: Perceived ease of use will have the same influence on the purchase of health insurance online if AI is visible.

Research methodology

Measures

While the five constructs of the model have been widely utilized and validated, the items applied to measure them need to be adapted to the context of purchasing health insurance online with AI. Table 1 illustrates the five constructs and the sources of the items that were adapted. Each question has a seven-point Likert scale for the participant to give their feedback from strongly disagree (1) to strongly agree (7).

Table 1. Constructs and their indicators.

Data collection

This research evaluates whether a consumer purchasing health insurance without visible AI will have higher trust and lower PIPC compared to when there is visible AI in the interaction. As this is evaluated from the consumer’s perspective, two consumer journeys are used with two separate groups of participants. The two journeys used are based on the process a consumer goes through in five insurers that were evaluated. The first group, ß1, is given a consumer journey for purchasing insurance in a process where AI is not visible and there is human participation from the insurance company. The second group, ß2, is given a consumer journey for purchasing health insurance in a process where AI is used at every step and this role of AI is made clear to the consumer. The participants then completed a survey that covers the seven hypotheses. The survey was disseminated using the SoSci Survey platform, which meets GDPR requirements and stores the data within the EU. The minimum sample size for a statistical power of 80% and a significance level of 1% for the model of five latent variables with three indicators each was calculated to be 176 (Hair et al. 2014). For the first group 248 participants completed the first survey and for the second group 237 completed the second survey. After incomplete and unreliable surveys were taken out, 221 were left for the first survey and 217 for the second. The participants were UK residents. The demographic information for both groups is presented in Table 2.

Table 2. Demographic information for both groups.

Data analysis technique

The model was tested with Structural Equation Modeling (SEM) with the Partial Least Squares method (PLS). The relationships between the five variables were tested for each of the two groups separately and then the two groups were compared with each other across the seven hypotheses. The two groups were compared by applying PLS multigroup analysis (MGA) and bootstrapping with Smart PLS. First the measurement model is evaluated, followed by the structural model.

Data analysis and results

The purpose of the multigroup analysis was to evaluate the effect of the moderator variable, in this case having visible human involvement, between the two groups. The Smart PLS-MGA will show whether the difference is statistically significant. The null hypothesis H0 is that there is no difference between the two groups and the alternative hypothesis H1 is that there is a difference. As PLS-MGA is a new and evolving analysis method, simpler descriptive statistics were also implemented.

Descriptive statistics

The differences between the mean values of the two groups are not large, as illustrated in Table 3. However, two points about the difference between the scenarios must be highlighted: Firstly, they are consistent across the six items forming the two constructs of trust and PIPC. Secondly, in the first scenario, trust is marginally above 4, so marginally positive, and PIPC is marginally below 4. This positive trust and negative PIPC is conducive to a purchase. In the second scenario, despite the small difference, this is reversed and therefore trust and PIPC are not conducive toward a purchase. This illustrates how small changes in perceptions on trust and PIPC can nevertheless be decisive.

Table 3. Mean values for both groups.
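The reading of the means against the scale midpoint can be sketched in a few lines of Python. This is only an illustration of the reasoning above: the item means are hypothetical placeholders and are not the values reported in Table 3, and the function names are assumptions introduced for this sketch.

```python
from statistics import mean

LIKERT_MIDPOINT = 4  # neutral point of the seven-point scale (1 to 7)


def construct_mean(item_means):
    """Average the item means that form one construct."""
    return mean(item_means)


def conducive_to_purchase(trust_mean, pipc_mean, midpoint=LIKERT_MIDPOINT):
    """Trust above the midpoint together with PIPC below it favours a purchase."""
    return trust_mean > midpoint and pipc_mean < midpoint


# Hypothetical item means for illustration only, NOT the values in Table 3.
without_visible_ai = {"trust": [4.1, 4.2, 4.0], "pipc": [3.9, 3.8, 3.9]}
with_visible_ai = {"trust": [3.9, 3.8, 3.9], "pipc": [4.1, 4.2, 4.1]}

for label, group in (("no visible AI", without_visible_ai),
                     ("visible AI", with_visible_ai)):
    trust = construct_mean(group["trust"])
    pipc = construct_mean(group["pipc"])
    print(label, round(trust, 2), round(pipc, 2),
          conducive_to_purchase(trust, pipc))
```

With placeholder values on either side of 4, a shift of only a few tenths of a scale point is enough to flip the outcome of the check, which is the point made about small but decisive differences.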

Measurement model

The reflective measurement model was evaluated in several ways. Table 4 shows the results of the measurement model analysis. The factor loadings are over 0.7 so the indicators appear to be sufficiently reliable. The Composite Reliability (CR) is above 0.7 so the construct reliability between the items and the latent variable is sufficient (Hair et al. 2014). The convergent validity is evaluated by the Average Variance Extracted (AVE), which is above the required minimum of 0.5. The discriminant validity is below the 0.85 threshold (Hair et al. 2014).

Table 4. Results of the measurement model analysis.
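For readers who wish to reproduce the reliability checks, the following minimal sketch computes CR and AVE from standardized loadings using the standard textbook formulas, and applies the thresholds named above (0.7 for loadings and CR, 0.5 for AVE). The loadings are hypothetical and are not taken from Table 4; the function names are assumptions made for this illustration and do not correspond to any Smart PLS interface.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each error variance is 1 - loading^2.
    """
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def passes_thresholds(loadings, min_loading=0.7, min_cr=0.7, min_ave=0.5):
    """Apply the three thresholds to one construct."""
    return (
        all(l > min_loading for l in loadings)
        and composite_reliability(loadings) > min_cr
        and average_variance_extracted(loadings) > min_ave
    )


# Hypothetical loadings for one construct with three reflective items.
example_loadings = [0.82, 0.85, 0.79]
print(round(composite_reliability(example_loadings), 3))
print(round(average_variance_extracted(example_loadings), 3))
print(passes_thresholds(example_loadings))
```

The same functions could be applied to each of the five constructs in each group once the actual loadings are available.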

The tests for measurement invariance are illustrated in Table 5. There is some invariance for two of the fifteen indicators, PEOU-3 and UI-1. Each variable has three reflective items, which reduces the influence of each item with some invariance. The influence of a small degree of measurement invariance of an item in PLS-MGA is a topic of debate (Sarstedt, Henseler, and Ringle 2011), with some considered acceptable in multigroup analysis, as different groups may have some difference in their understanding of the measurement model (Rigdon, Ringle, and Sarstedt 2010).

Table 5. Test for measurement invariance.

Structural model

The coefficient of determination R² for the endogenous latent variables is ‘weak’ for P (0.004, 0.018) and T (0.004, 0.026), and ‘moderate’ for PU (0.339, 0.419) and UI (0.562, 0.633) (Chin 1998). This was the same across both groups. The path coefficients are presented in Table 6. The final column evaluates the difference between the two models and whether the hypotheses are supported. Values below 0.05 or above 0.95 are significant in the PLS-MGA analysis. The paths T-UI (H1) and PU-UI (H6) have a significant difference. There was a difference expected for H1, which is supported. The difference for H6 was not expected. There was also a difference expected for PIPC-UI but none was found. The remaining hypotheses had no differences, as expected. Therefore, the hypotheses H1, H3, H4, H5 and H7 are supported by PLS-MGA and the hypotheses H2 and H6 are not supported.

Table 6. Multi-group comparison test results.
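The decision rule used for the final column (values below 0.05 or above 0.95 are significant) can be illustrated with a small sketch. The pairwise comparison of bootstrap estimates below is a simplified illustration of the idea behind a bootstrap-based group comparison; it is an assumption made for this example and is not claimed to be the exact estimator implemented in Smart PLS. The bootstrap values are hypothetical.

```python
from itertools import product


def share_group1_exceeds(boot_group1, boot_group2):
    """Share of all bootstrap pairs in which group 1's path estimate is larger."""
    pairs = list(product(boot_group1, boot_group2))
    return sum(b1 > b2 for b1, b2 in pairs) / len(pairs)


def is_significant(p_value, alpha=0.05):
    """A value below alpha or above 1 - alpha signals a group difference."""
    return p_value < alpha or p_value > 1 - alpha


# Hypothetical bootstrap path coefficients for one path in each group.
path_without_visible_ai = [0.30, 0.32, 0.35, 0.31]
path_with_visible_ai = [0.10, 0.12, 0.15, 0.11]

p = share_group1_exceeds(path_without_visible_ai, path_with_visible_ai)
print(p, is_significant(p))
```

A value near 0.5 indicates that neither group's estimate is systematically larger, while values near 0 or 1 indicate a consistent difference in one direction, which is why both tails are treated as significant.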

Discussion

Theoretical contributions

AI is having an increasing influence and the health insurance sector is both intrinsically important and likely to be an important benchmark for the satisfactory exchange of sensitive information with AI. This research brought together the literature of AI, insurance, health, e-commerce, trust and Personal Information Privacy Concerns (PIPC). It is important to understand the consumer’s perspective as they have beliefs on what the role of AI should be. Furthermore, the insurer must explicitly inform them if they are using AI when communicating with them in the interface (European Commission 2019). This research identified two scenarios: one with limited AI that is not in the interface and whose presence is not explicitly revealed to the consumer, and a second scenario where there is an AI interface and AI evaluation, and this is explicitly revealed to the consumer.

The two scenarios were modeled and compared using SEM PLS-MGA. Both models were similar in terms of which paths were strong and which were weak. The pathways from PEOU to PU, PU to UI, T to UI and PIPC to UI were strong while the paths from PEOU to UI, T and PIPC were weak. This indicates that the model captures most relationships well apart from PEOU which only has a strong influence on PU.

The analysis gives further support to the hypotheses H1, H3, H4, H5 and H7. However, hypotheses H2 and H6 are not supported. Therefore, both the descriptive statistics and PLS-MGA support a different level of trust with and without visible AI involvement. Furthermore, it is also supported that trust is higher without visible AI involvement. This extends literature on how trust is lower in certain contexts (Thatcher et al. 2011) to the context of health insurance with AI. Our findings also extend the literature on how different forms of AI interaction influence trust (Torre, Goslin, and White 2020). Most of the current literature is focused on the use of language by clearly visible AI so it is beneficial that this research also evaluated the less visible AI. While the mean of PIPC was lower without visible AI involvement, PLS-MGA did not identify this as statistically significant within the model. Therefore, there is some support that privacy concerns that were validated to be stronger in other contexts (Dinev et al. 2015) are also stronger in this context with AI (Park and Shin 2020). More specifically, there is some support that privacy concerns influence the sharing of personal information on health (Park and Shin 2020; Chen, Zarifis, and Kroenung 2017).

Implications for practice

The implications for practice are related to how the reduced trust and increased privacy concern with visible AI are mitigated.

Avoid being explicit about the use of AI

In some parts of the world the use of AI must be stated and visible so there is no choice for health insurers. If there is no legal requirement to be explicit about the use of AI, then the insurer may decide not to be explicit about its use. This strategy has the limitation that several uses of AI such as chatbots and natural language processing are hard to conceal.

Mitigate the lower trust with explicit AI

The first step to mitigating the lower trust caused by the explicit use of AI is to acknowledge this challenge and the second step is to understand it. The quantitative analysis supports the existence of this challenge and the literature review indicates what causes it. The causes are the reduced transparency and explainability. A statement at the start of the consumer journey about the role AI will play and how it works may reinforce transparency and help to explain it, which will then reinforce trust. Secondly, the level of the importance of trust is increased as the perceived risk is increased. Therefore, the risks should be reduced. Thirdly, it should be illustrated that the increased use of AI does not reduce the inherent humanness. For example, it can be shown how humans train AI and how AI adopts human values. Alternatively, as it has been proven that trust building can focus on human characteristics (benevolence, integrity and ability) or system characteristics (helpfulness, reliability and functionality) (Lankton, McKnight, and Tripp 2015), trust building can focus on system characteristics.

Lastly, beyond the specific consumer and their journey, society can be influenced to build trust toward AI. In addition to the psychological responses to the specific consumer journey, there are also the social influences. Therefore, society in general can be offered a positive narrative on the trustworthiness of AI and share positive experiences in social learning.

Mitigate the higher personal information privacy concern (PIPC) with explicit AI

The consumer is concerned about how AI will utilize their financial, health and other personal information. Health insurance providers offer privacy assurances and privacy seals, but these do not explicitly refer to the role of AI. Assurances can be provided about how AI will use, share and securely store the information. These assurances can include some explanation of the role of AI and cover confidentiality, secrecy and anonymity. For example, while the consumer’s information may be used to train machine learning it can be made clear that it will be anonymized first. The consumer’s perceived privacy risks can be mitigated by making the regulation that protects them clear.

Limitations and future research direction

Many theories and models of trust have been found to be valid across different cultures; however, it has also been proven that there can be some variation across cultures (Connolly 2013). Therefore, the model developed here could be further explored in different cultures.

While it was shown that trust is different with and without visible AI the role of PIPC should be explored further. The differences identified offer new avenues for further exploration. Furthermore, the value and limitations of SEM PLS-MGA were visible in this type of methodology.

Conclusion

This research identified two consumer journeys for purchasing health insurance: The first has limited AI that is not visible in the interface and its presence is not explicitly revealed to the consumer. The second has AI in the interface and the evaluation, and its presence is explicitly revealed to the consumer.

The two scenarios were modeled and compared using SEM PLS-MGA. For both models Perceived Usefulness, Trust and Personal Information Privacy Concern (PIPC) influenced the use of health insurance. Both the descriptive analysis and PLS-MGA support the lower level of trust with visible AI involvement in comparison to when AI is not visible. The mean of PIPC was higher with visible AI but this was not statistically significant within the model. These contributions clarify the relationship between the consumer, AI and the health insurance provider and set an agenda for future research on this topic. This agenda might be extended beyond health insurance to other transactions and applications, particularly those that require sensitive information.

References

- Al-Khalaf, E., and P. Choe. 2020. Increasing customer trust towards mobile commerce in a multicultural society: a case of Qatar. Journal of Internet Commerce 19 (1):32–61. doi:10.1080/15332861.2019.1695179.

- Baloise. 2019. “Friday (Baloise Insurance).” 2019. https://www.friday.de/lp/corporate-en.

- Bansal, G., F. M. Zahedi, and D. Gefen. 2010. The impact of personal dispositions on information sensitivity, privacy concern and trust in disclosing health information online. Decision Support Systems 49 (2):138–150. doi:10.1016/j.dss.2010.01.010.

- Chen, L., A. Zarifis, and J. Kroenung. 2017. The role of trust in personal information disclosure on health-related websites. European Conference on Information Systems Proceedings 2017, 771–786. Guimaraes.

- Chin, W. W. 1998. The partial least squares approach to structural equation modelling. In Modern methods for business research, ed. G. A. Marcoulides, 295–336. Mahwah, NJ: Lawrence Erlbaum Associates.

- Connolly, R. 2013. Ecommerce trust beliefs: examining the role of national culture. In Information systems research and exploring social artifacts, eds. P. Isaias and M. B. Nunes, 20–42. Hershey: IGI Global. doi:10.4018/978-1-4666-2491-7.ch002.

- Davis, F. 1989. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly 13 (3):319–340. doi:10.2307/249008.

- Dinev, T., H. Xu, J. H. Smith, and P. Hart. 2013. Information privacy and correlates: an empirical attempt to bridge and distinguish privacy-related concepts. European Journal of Information Systems 22 (3):295–316. doi:10.1057/ejis.2012.23.

- Dinev, T., V. Albano, H. Xu, A. D’Atri, and P. Hart. 2015. Individuals’ attitudes towards electronic health records: a privacy calculus perspective. In Advances in healthcare informatics and analysis, eds. A. Gupta, V. Patel, and R. Greenes, 19–50. New York: Springer.

- European Commission. 2019. “Ethics Guidelines for Trustworthy AI.” https://ec.europa.eu/digital

- Gefen, D., E. Karahanna, and W. S. Straub. 2003. Trust and TAM in online shopping: an integrated model. MIS Quarterly 27 (1):51–90. doi:10.2307/30036519.

- Gulati, S., S. Sousa, and D. Lamas. 2019. Design, development and evaluation of a human-computer trust scale. Behaviour & Information Technology 38 (10):1004–1015. doi:10.1080/0144929X.2019.1656779.

- Hair, J., T. Hult, C. Ringle, and M. Sarstedt. 2014. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM). SAGE Publications. Vol. 46. Thousand Oaks. doi:10.1016/j.lrp.2013.01.002.

- He, J., S. L. Baxter, J. Xu, J. Xu, X. Zhou, and K. Zhang. 2019. The practical implementation of artificial intelligence technologies in medicine. Nature Medicine 25 (1):30–36. doi:10.1038/s41591-018-0307-0.

- Heijden, H. v d. 2004. User acceptance of hedonic information systems. MIS Quarterly 28 (4):695–704.

- Hoque, R., and G. Sorwar. 2017. Understanding factors influencing the adoption of MHealth by the elderly: An extension of the UTAUT model. International Journal of Medical Informatics 101 (September 2015):75–84. doi:10.1016/j.ijmedinf.2017.02.002.

- Hui, K. L., H. H. Teo, and T. L. Sang-Yong. 2007. The value of privacy assurance: an exploratory field experiment. MIS Quarterly 31 (1):19–33. doi:10.1177/0969733007082112.

- Kim, K. K., and B. Prabhakar. 2004. Initial trust and the adoption of B2C E-commerce. ACM SIGMIS Database: The DATABASE for Advances in Information Systems 35 (2):50–64. doi:10.1145/1007965.1007970.

- Lankton, N., H. McKnight, and J. Tripp. 2015. Technology, humanness, and trust: rethinking trust in technology. Journal of the Association for Information Systems 16 (10):880–918. doi:10.17705/1jais.00411.

- Lee, C.-Y., C.-H. Tsao, and W.-C. Chang. 2015. The relationship between attitude toward using and customer satisfaction with mobile application services: an empirical study from the life insurance industry. Journal of Enterprise Information Management 44 (3):347–367. doi:10.1108/MRR-09-2015-0216.

- McKnight, H., and N. L. Chervany. 2001. What trust means in E-commerce customer relationships: an interdisciplinary conceptual typology. International Journal of Electronic Commerce 6 (2):35–59. doi:10.1080/10864415.2001.11044235.

- McKnight, H., M. Carter, J. B. Thatcher, and P. Clay. 2011. Trust in a specific technology: an investigation of its components and measures. ACM Transactions on Management Information Systems 2 (2):1–25. doi:10.1145/1985347.1985353.

- McKnight, H., V. Choudhury, and C. Kacmar. 2002. Developing and validating trust measures for E-commerce: an integrative typology. Information Systems Research 13 (3):334–359. doi:10.1287/isre.13.3.334.81.

- Norberg, P., D. Horne, and D. Horne. 2007. The privacy paradox: personal information disclosure intentions versus behaviors. Journal of Consumer Affairs 41 (1):100–126. doi:10.1111/j.1745-6606.2006.00070.x.

- Park, Y. J., and D. Shin. 2020. Contextualizing privacy on health-related use of information technology. Computers in Human Behavior 105:106204–106209. doi:10.1016/j.chb.2019.106204.

- Rigdon, E. E., C. M. Ringle, and M. Sarstedt. 2010. Structural modeling of heterogeneous data with partial least squares. In Review of marketing research, ed. N. K. Malhotra, 7th ed., 255–296. Armonk: Sharpe.

- Riikkinen, M., H. Saarijärvi, P. Sarlin, and I. Lähteenmäki. 2018. Using artificial intelligence to create value in insurance. International Journal of Bank Marketing 36 (6):1145–1168. doi:10.1108/IJBM-01-2017-0015.

- Sarstedt, M., J. Henseler, and C. M. Ringle. 2011. Multigroup analysis in partial least squares (PLS) path modeling: alternative methods and empirical results. Advances in International Marketing 22 (2011):195–218. doi:10.1108/S1474-7979(2011)0000022012.

- Sha, W. 2009. Types of structural assurance and their relationships with trusting intentions in business-to-consumer e-commerce. Electronic Markets 19 (1):43–54. doi:10.1007/s12525-008-0001-z.

- Thatcher, J. B., H. McKnight, E. W. Baker, R. Ergün Arsal, and N. H. Roberts. 2011. The role of trust in postadoption IT exploration: an empirical examination of knowledge management systems. IEEE Transactions on Engineering Management 58 (1):56–70. doi:10.1109/TEM.2009.2028320.

- Torre, I., J. Goslin, and L. White. 2020. If your device could smile: people trust happy-sounding artificial agents more. Computers in Human Behavior 105:106215. doi:10.1016/j.chb.2019.106215.

- Venkatesh, V., J. Y. L. Thong, and X. Xu. 2016. Unified theory of acceptance and use of technology: a synthesis and the road ahead. Journal of the Association for Information Systems 17 (5):328–376. doi:10.17705/1jais.00428.

- Wang, Y., and W. Xu. 2018. Leveraging deep learning with LDA-based text analytics to detect automobile insurance fraud. Decision Support Systems 105:87–95. doi:10.1016/j.dss.2017.11.001.

- Wu, I. L., J. Y. Li, and C. Y. Fu. 2011. The adoption of mobile healthcare by hospital’s professionals: an integrative perspective. Decision Support Systems 51 (3):587–596. doi:10.1016/j.dss.2011.03.003.

- Xu, H., T. Dinev, H. J. Smith, and P. Hart. 2008. Examining the formation of individual’s privacy concerns: toward an integrative view. International conference on information systems, 1–16.

- Zarifis, A., L. Efthymiou, X. Cheng, and S. Demetriou. 2014. Consumer trust in digital currency enabled transactions. Lecture Notes in Business Information Processing 183:241–254. doi:10.1007/978-3-319-11460-6_21.

- Zhao, Y., Q. Ni, and R. Zhou. 2018. What factors influence the mobile health service adoption? A meta-analysis and the moderating role of age. International Journal of Information Management 43:342–350. doi:10.1016/j.ijinfomgt.2017.08.006.