ABSTRACT

Background

UNESCO [(2015). Quality Physical Education Guidelines for Policymakers. Paris: UNESCO Press.] highlighted the importance of developing physical literacy (PL) from childhood, although it remains unclear how best to evidence an individual’s PL journey. The aim of this study was to explore key stakeholders’ views of current practice, future directions and effective implementation of PL assessment, with a view to informing the development of a rigorous, authentic, and feasible PL assessment.

Methods

Purposive samples of children aged 6–7 years (n = 39) and 10–11 years (n = 57), primary school teachers (n = 23), and self-defined experts with an interest in PL (academics n = 13, practitioners n = 8) were recruited to take part in a series of concurrent semi-structured focus groups. Each group included a maximum of six participants, lasted on average 40 minutes (range 30–90), and was audio-recorded and transcribed verbatim. Data were analysed using deductive and inductive thematic analysis, and key themes were represented by pen profiles.

Results

Higher order themes of (i) existing assessments, (ii) demand for PL assessment, (iii) acceptability of PL assessment, and (iv) factors related to implementation of PL assessments were identified. All stakeholders viewed the assessment of PL as important, but in line with well-established barriers within physical education (PE), assessment was not a priority in many schools, resulting in a variability in existing practice. No assessment of the affective and cognitive domains of PL was reported to be in use at participating schools. All stakeholder groups recognised the potential benefits of using technology within the assessment process. Children recognised that teachers were constantly making judgements to help them improve, and agreed that assessment could help record this, and that assessment should be differentiated for each child. Teachers widely reported that future assessment should be time-efficient, simple, and useful.

Conclusion

Study findings revealed a demonstrable need for a feasible PL assessment that could be effectively used in schools. To our knowledge, this is the first attempt to involve these stakeholders, and triangulate data, to inform future PL assessment and practice. Findings provide an evidence base to inform the onward development of a conceptually aligned PL assessment tool, suitable for use in schools. In turn, this will enable robust, empirical evidence to be collated, to evidence theory, and inform practice and policy.

Introduction

Physical literacy (PL) is commonly defined as being ‘an individual’s motivation, confidence, physical competence, knowledge and understanding to value and take responsibility for engagement in physical activities for life’ (International Physical Literacy Association (IPLA), 2017), though it has various interpretations internationally (Edwards et al. 2017; Shearer et al. 2018; Keegan et al. 2019). While PL has been identified as a guiding framework and overarching goal of quality physical education (PE) (UNESCO, 2015), there is a lack of empirical evidence linking PL to health and well-being outcomes, physical activity (PA) determinants, or its own defining elements (Cairney et al. 2019). In part, this may have resulted from the difficulty in defining the concept, debate regarding the appropriateness of assessment, and ultimately, the lack of an accepted measure (Giblin, Collins, and Button 2014; Edwards et al. 2017, 2018; Robinson, Randall, and Barrett 2018; Barnett et al. 2019). Nevertheless, it has been argued that appropriate assessment of childhood PL could improve the standards, expectations, and profile of PL and PE, which in turn could lead to more ‘physically literate’ and active children (Tremblay and Lloyd 2010; Corbin 2016).

Throughout compulsory education, assessment is a critical aspect of pedagogical practice and accountability systems (DinanThompson and Penney 2015). Assessment of PL could be used to chart an individual’s progress, promote learning, and highlight key areas for support and development (Penney et al. 2009; Green et al. 2018). In line with Edwards et al. (2018), the current paper uses the term assessment as it is widely used and understood within educational and physical activity contexts. The term assessment should be taken to include measurement, charting, monitoring, tracking, evaluating, characterising, observing, or indicating physical literacy. Primary teachers have reported that assessment in PE provides structure and focus to the planning, teaching, and learning process, which positively impacts both the teacher and child (Ní Chrónín and Cosgrave 2013). Teachers themselves have, however, cited barriers to implementing assessment in PE, for example: the lack of priority given to PE, limited time, space, and expertise (Lander et al. 2016; van Rossum et al. 2019); difficulty in assessment differentiation and limited availability of samples (Ní Chrónín and Cosgrave 2013); and varied beliefs, understandings, and engagement regarding assessment (DinanThompson and Penney 2015). Thus, considering the feasibility of an assessment tool is of vital importance when determining appropriate use within educational contexts (Barnett et al. 2019).

Despite being imperative to successful implementation, feasibility is often overlooked or not reported within the development stages of assessments (Klingberg et al. 2019). Bowen et al. (2009) identified eight areas for consideration in feasibility studies: acceptability, demand, implementation, practicality, adaptation, integration, expansion, and limited efficacy. Acceptability (to what extent is a new assessment judged as suitable?), demand (to what extent is a new assessment likely to be used?), and implementation (to what extent can an assessment be successfully delivered to intended participants?) provide useful areas of focus when considering the feasibility of PL assessment in primary schools. Exploration of these areas can provide information around Can it work? [PL assessment], Does it work? [existing PL assessments, if applicable] and Will it work? [ideas for future PL assessment], allowing potential solutions to the well-established barriers encountered when assessing in PE to be identified and explored with key stakeholders. ‘Experts’ are often consulted in the design of new assessment methods (Longmuir and Tremblay 2016; Longmuir et al. 2018; Barnett et al. 2019; Keegan et al. 2019), yet it is the teachers, support staff, and children who have the expertise on what would be feasible and acceptable for implementation within a school context (Morley et al. 2019). Thus, those involved in primary PE – the assessment users – should be involved in formative stages of research (Jess, Carse, and Keay 2016). Until recently, despite teachers being widely encouraged to develop PL, teachers’ beliefs regarding the concept had not been examined (Roetert and Jefferies 2014). Children’s voices are also often neglected due to perceived barriers to involving children in research, e.g. interaction preference, linguistic and cognitive ability, and the validity and reliability of responses (Jacquez, Vaughn, and Wagner 2013). Research that involves children can be empowering and increases the likelihood that results will be accepted, meaningful, and valid (Jacquez, Vaughn, and Wagner 2013). Taken together, there is a lack of research that explores teachers’ and children’s perceptions of assessment in PE and of PL, and more research is warranted.

The aim of this study was to explore key stakeholders’ (academics/practitioners, teachers, and children) perceptions of PL assessment in primary PE, including current practice, with a view to informing future PL assessment development. Following Bowen et al. (2009), three areas of feasibility were used as a framework for analysis: acceptability, demand, and implementation. These areas were deemed of particular importance in formative PL assessment work in order to provide pragmatic recommendations that would optimise the implementation of PL assessments in practice.

Methods

Design

A pragmatic research approach was undertaken using purposeful sampling with key PL stakeholders. Focus groups involving academics/practitioners with an interest in PL, teachers who regularly deliver primary PE, and children in Key Stage One (KS1, 6–7 year olds) and Key Stage Two (KS2, 10–11 year olds) were conducted concurrently between summer and winter 2018 across the United Kingdom (UK) (see Table 1). The study was granted ethical approval by the Research Ethics Committee of Liverpool John Moores University (Ref. 18/SPS/037) and adheres to the Consolidated Criteria for Reporting Qualitative studies (COREQ) checklist (Tong, Sainsbury, and Craig 2007). All adult participants provided written informed consent to take part in the study, together with brief demographic and biographic information. Headteacher and parent/carer consent was obtained for child participants, who themselves gave verbal assent.

Table 1. Participant distribution and school description.

Participants and settings

Children and teachers

Fourteen UK primary schools were invited to take part in the study via publicly available email lists, with eight head teachers providing gatekeeper consent (see Table 1). Following guidance on conducting focus groups with children (Gibson 2007; Angell, Alexander, and Hunt 2015), groups of four to six 6–7 year olds and 10–11 year olds were randomly selected to take part. A total of 39 children (18 female) aged 6–7 years participated in 6 focus groups, and 57 children (25 female) aged 10–11 years participated in 10 focus groups. In total, 135 teachers and 115 teaching assistants (TAs) were invited to take part in the study. Of these, 23 teaching staff (19 female; 15 teachers, 8 TAs; approximately 10% response rate) agreed to take part in six focus groups. Reasons for non-participation were not collected.

Experts

A convenience sample of academics/practitioners with an interest in PL assessment was sought to represent expert perspectives. 2018 IPLA (International Physical Literacy Association) Conference delegates were invited to take part in focus groups at the conference venue. Other academics/practitioners known to work within PL were contacted via publicly available email addresses and invited to take part in an additional focus group held at a University setting.

Forty academics/practitioners were invited to take part. Reasons for refusal to take part were mainly time constraints and lack of availability. Twenty-one participants (8 practitioners, 13 academics; 14 female) took part, with ages ranging from 25 to 60+ years. Participants classified themselves as working within education (n = 11), sport (n = 5), research (n = 2), or a combination of these sectors (n = 3), and had a minimum of three years’ experience within that field. Two participants self-identified their PL experience level as ‘expert’, six as ‘proficient’, nine as ‘competent’, and four as ‘beginner’. Throughout this paper, this group will be referred to as ‘academics/practitioners’.

Data collection

Interactive focus groups were used to stimulate engagement, interest, and discussion. A semi-structured focus group guide was based on recommendations from Bowen et al. (2009) to explore the feasibility of a PL assessment with regard to (i) acceptability, (ii) demand, and (iii) implementation. Whilst all focus group schedules addressed these areas, the wording of questions was altered for different participant groups and checked by researchers experienced in conducting research with children (fourth and last author) and a Health and Care Professions Council Registered Practitioner Psychologist (third author). The focus group guide was piloted, which resulted in refinement of the ordering and wording of questions. All focus groups were conducted by the first and second author, who both had experience in facilitating focus groups and working with children. Focus groups with teachers and children were conducted in a quiet space on the school premises, such as a spare classroom or the staff room.

With the children’s focus groups, autonomous engagement was encouraged by offering children choices and nurturing a supportive relationship, providing opportunities where children could voice their needs and opinions (Domville et al. 2019). Data collection was undertaken using an adaptation of the Write, Draw, Show, and Tell method (Noonan et al. 2016). At the start of each focus group, as an icebreaker, children were asked to write or draw about ‘a time they knew they had done well in PE’ and were then invited to talk about their drawings to prompt further discussion. This activity prompted children to recall and relate to their own experience while also participating in an engaging, creative task relevant to the focus group topic. The facilitator then prompted the children to think beyond their experiences in PE to other assessment methods they were familiar with, and to consider positives and negatives of the assessment experience. After this point, the facilitator introduced the concept of physical literacy using a series of images of children displaying various characteristics of physical literacy, with descriptions underneath using the stem ‘this person is … ’. For example, a picture of a child jumping into a swimming pool was paired with the description ‘this person is brave when swimming’; ‘brave’ was chosen as a child-friendly term to represent confidence, persistence, and willingness to try new things, which are affective elements consistent with international conceptualisations of physical literacy (Whitehead 2010; Dudley 2015; Longmuir et al. 2015; Longmuir and Tremblay 2016; Edwards et al. 2017; Keegan et al. 2019). A further example was an image of a child looking hot and tired, with the description ‘this child tries really hard when playing games’, to represent fitness as an element within physical competence. Approximately four images and descriptions were given for each domain (physical, affective, cognitive). All images were chosen in consultation with the research team, who have considerable experience working in physical literacy, physical activity, and with children. The facilitator read aloud each characteristic description and discussed these with the group. Children were then invited to ask questions around these characteristics and physical literacy in general, and the discussion was deemed to reach saturation when no more questions were being asked. The facilitator then prompted the focus group to discuss different ways participants could assess these characteristics.

The academics/practitioner and teacher focus groups followed a similar structure: participants were encouraged to discuss current experiences of assessment of PL and/or in PE, including positive and/or negative aspects, to suggest potential ways to overcome any barriers, and to discuss what an ‘ideal assessment’ would look like.

Focus groups with children lasted between 30 and 45 minutes. Focus groups with teachers lasted between 45 and 60 minutes, while expert focus groups lasted between 60 and 90 minutes. All focus groups were audio-recorded using a digital dictaphone and transcribed verbatim.

Data analysis

Transcripts were imported into NVivo 12 (QSR International) for data handling. Transcripts were initially analysed through a deductive process using Bowen et al. (2009) as a thematic framework. An inductive process was also used, enabling additional themes to be generated (Braun and Clarke 2006, 2019). Pen profiles represented analysis outcomes (MacKintosh et al. 2011) including self-defining and verbatim quotations and frequency data. Participant identifiers included participant number and stakeholder grouping, and focus group number, e.g. P1, expert (Participant 1 Expert Focus Group 1). For profile inclusion, the threshold was a minimum of 25% consensus within a theme and themes not reaching consensus were reported within the narrative (Foulkes et al. 2020). For transparency, the total number and percentage of individual participants who spoke in relation to a theme is therefore presented.
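
The frequency and threshold rule described above can be illustrated with a minimal sketch. This is not the authors' NVivo workflow: the function name, data structure, and participant codes below are hypothetical, and the sketch assumes that 'consensus' means the share of individual participants in a stakeholder group who spoke in relation to a theme.

```python
from dataclasses import dataclass

# Assumption: the 25% inclusion threshold is applied to the share of
# participants in a stakeholder group who spoke in relation to a theme.
CONSENSUS_THRESHOLD = 0.25


@dataclass
class ThemeSummary:
    theme: str
    n_speakers: int    # unique participants who spoke to the theme
    percent: int       # share of the group, rounded to a whole percent
    in_profile: bool   # True if the theme meets the inclusion threshold


def summarise_theme(theme, speaker_ids, group_size, threshold=CONSENSUS_THRESHOLD):
    """Count unique speakers for a theme and apply the inclusion threshold."""
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    n_speakers = len(set(speaker_ids))  # each participant is counted once
    share = n_speakers / group_size
    return ThemeSummary(theme, n_speakers, round(share * 100), share >= threshold)


# Hypothetical coded references for a stakeholder group of 23 participants.
coded = {
    "perceived demand": ["P1", "P2", "P3", "P4", "P5", "P6", "P7", "P8", "P9", "P2"],
    "illustrative minor theme": ["P1", "P4", "P4"],
}

for theme, speakers in coded.items():
    print(summarise_theme(theme, speakers, group_size=23))
```

Under these assumptions, a theme raised by 9 of 23 participants (39%) would appear in the pen profile, whereas a theme raised by 2 of 23 would instead be reported within the narrative.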

Methodological rigour

Recommendations made by Smith and McGannon (2018) regarding qualitative methodology guided data collection and analysis. As theory-free knowledge is not possible, the lead authors (first and second) acted as critical friends for one another (Smith and McGannon 2018). The lead authors independently back-coded the data analysis process from pen profiles to themes, codes, and transcripts to allow for dialogue regarding the acknowledgement of multiple truths, perspectives, and results to emerge from the research process. The lead authors then presented the pen profiles and verbatim quotations to the remaining co-authors, as a further means of cooperative triangulation (MacKintosh et al. 2011). Methodological rigour, credibility, and transferability were developed by verbatim transcription of data and triangular member-checking procedures involving comparison of data and pen profiles. The authors critically reflected on their engagement with the analysis and cross-examined the data, providing opportunity to explore, challenge, and extend interpretations within the data (Braun and Clarke 2019).

Results

Stakeholders’ perceptions of PL are presented within three higher order deductive themes: acceptability, demand, and implementation. Participants also discussed existing assessments, and this was therefore included as an inductive higher order theme. In order to offer a more comprehensive and detailed insight into perceptions of PL assessment, the findings are presented across the academics/practitioner, teacher, and child narratives.

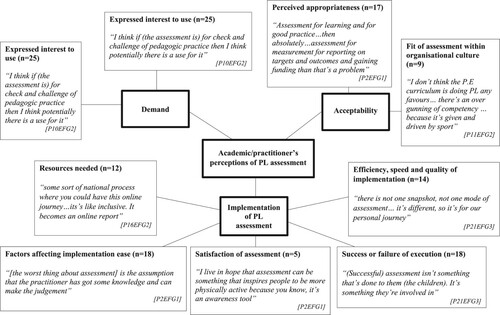

Academics/practitioners

Figure 1 presents a pen profile representing the higher and lower order themes conceptualised in the academics/practitioner focus groups. The most commonly cited higher order themes by frequency were demand (n = 21, 100%) and implementation (n = 21, 100%), followed by acceptability (n = 19, 90%). The most commonly cited lower order themes by frequency were success or failure of execution (n = 18, 86%), perceived demand (n = 17, 81%), and perceived appropriateness (n = 17, 81%). The inductive lower order theme of existing assessments (n = 15, 71%) was recognised within demand.

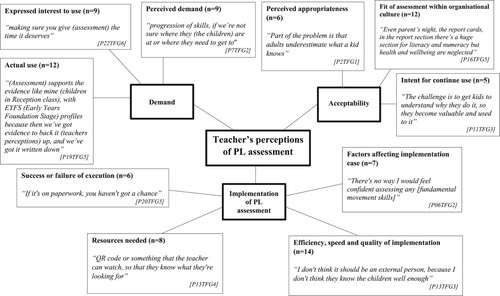

Teachers

Figure 2 presents a pen profile representing the higher and lower order themes conceptualised in the teacher focus groups. The most commonly cited higher order themes by frequency were acceptability (n = 17, 74%), followed by implementation (n = 16, 70%), and demand (n = 15, 65%). The most commonly cited lower order themes by frequency were efficiency, speed, and quality of execution (n = 14, 61%), fit with organisational culture (n = 12, 52%), and perceived demand (n = 9, 39%).

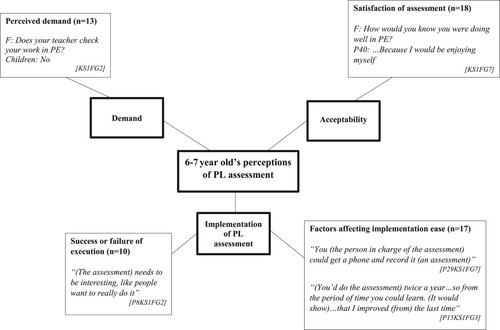

Children aged 6–7 years old

Figure 3 presents a pen profile representing the higher and lower order themes conceptualised in the 6–7-year-old focus groups. The most commonly cited higher order themes by frequency were implementation (n = 38, 97%), followed by acceptability (n = 23, 59%) and demand (n = 23, 59%). The most commonly cited lower order themes by frequency were the inductive themes of role of others (n = 21, 54%), self-awareness (n = 21, 54%), and equipment (n = 18, 46%). The most frequently cited deductive themes were satisfaction (n = 18, 46%) and factors affecting implementation ease (n = 17, 44%).

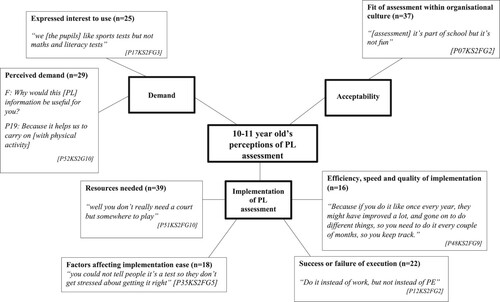

Children aged 10–11 years old

Figure 4 presents a pen profile representing the higher and lower order themes conceptualised in the 10–11-year-old focus groups. The most commonly cited higher order themes by frequency were implementation (n = 49, 86%), followed by acceptability (n = 37, 65%) and demand (n = 29, 51%). The most commonly cited lower order themes by frequency were resources needed (n = 39, 68%), equipment (n = 39, 68%), and fit with organisational culture (n = 37, 65%).

Discussion

The aim of this study was to explore stakeholders’ views of current practice, future directions, and effective implementation of PL assessment, with a view to informing future PL assessment. All stakeholder groups viewed the assessment of PL as important, but despite this, findings suggest an identifiable gap in the assessment of the affective and cognitive domains of PL (i.e. motivation, confidence, and knowledge and understanding). All stakeholders proposed technology and self-assessment/reflection as part of an assessment process, with several other factors suggested to improve the feasibility of a potential PL assessment tool. The following discussion is organised by the higher order themes identified, triangulating perspectives across the three stakeholder groups.

Acceptability

Academics/practitioner participants highlighted several barriers regarding the perceived appropriateness of PL assessment including the concept of PL itself.

‘PL doesn’t lend itself readily … to being assessed’ (P4EFG1)

Both teacher and academics/practitioner participant responses indicated that fit within organisational culture and perceived appropriateness were of importance. Potential future assessment of PL was deemed to fit in with perceptions of existing school processes and policies for recording evidence. Teachers perceived the acceptability of an assessment as being linked closely to approval or support from management staff within the school: ‘If your head teacher hasn’t got that mentality, then it’s doomed’ (P1EFG1). Research exploring headteacher and PE co-ordinator perceptions, at an organisational level, noted that headteachers’ beliefs and values greatly influence a school’s PA opportunities (Domville et al. 2019). The current study concurs with these findings, with teachers citing the need for continuing professional development (CPD), PE curriculum time, support for extra-curricular activities, and a need to engage headteachers by targeting curriculum and policy-level change.

Within the child focus groups, participants accepted that assessment is ‘part of school but it’s not fun’ (P19KS2FG4). A prevalent factor that influenced perceptions of satisfaction regarding assessment acceptability was the need for it to be a fun and enjoyable experience, also cited by academics/practitioners. Children described how they would enjoy an assessment of PL and how they would know they were successful. Participants across all stakeholder groups spoke of what would make an assessment fun, for example: level of challenge, being active, working with peers, and positive teacher feedback. Research has consistently linked enjoyment to meaningfulness, motivation, and more autonomously regulated behaviour in relation to PE and PA (Haerens et al. 2015; Beni, Ní Chróinín, and Fletcher 2019; Domville et al. 2019). Assessment should be positioned at an appropriate challenge level, and many children spoke candidly of the importance of this. In one school, children noted a ‘growth mindset’ regarding assessment and learning: ‘I wouldn’t feel bad if I got a red [a low mark], because mistakes help you learn’ (P40KS1FG7). Although this may demonstrate that some children within the study found it possible to frame lower marks as a positive learning experience, this is not always the case (López-Pastor et al. 2013). Constructive feedback should be provided, and creating a needs-supportive environment around assessment is crucial (Black and Wiliam 2009; Tolgfors 2018, 2019). In order for the assessment to have both educational impact and inspire learning, participants should feel empowered (López-Pastor et al. 2013; Tolgfors 2018). One academic/practitioner participant noted that assessment itself could ‘inspire people to be active’ (P2EFG1), while in focus groups many children spoke of how an assessment would motivate them to improve different skills over time: ‘Because if you do it like once every year, they [the student] might have improved a lot, and gone on to do different things, so you need to do it every couple of months, so you keep track’ (P48CFG9). An appropriate PL assessment has the potential to create a motivational climate whereby children can become autonomously motivated to improve their own lifelong PL (DinanThompson and Penney 2015). Yet the current study findings indicate the need for the ‘right balance’ between theory and practice, i.e. an assessment that is authentic and aligned to PL theory, yet realistic to contextual demands and supported by appropriate training and guidance.

Demand

Academics/practitioners also expressed a clear demand for assessment to chart progress in children and inform best practice within the education sector. The majority of academics/practitioners agreed that ‘ … to get governments involved, they want something tangible, don’t they? and the only way you can do that is by assessing in some way’ (P4EFG1) and that ‘developing a tool that allows us [teachers] to measure progress in PE will allow us to assess the methodologies that we employ in class’ (P10EFG2). Others felt ‘[Assessment is] not just for governments, it’s just to communicate something in meaningful terms’ (P1EFG1). Others spoke of the importance of tangible evidence for PL and its potential to provide accountability and subsequently influence policy; a prevalent factor in wider assessment research (López-Pastor et al. 2013; Ní Chrónín and Cosgrave 2013; DinanThompson and Penney 2015; Tolgfors 2019). Specific examples included supporting evidence for funding, protected PE curriculum time, and to advocate for the value of PE and PL.

Implementation

Across all stakeholder groups, the class teacher was deemed best placed to administer PL assessment, but it was stated that primary teachers vary in confidence, ability, and knowledge of PL. Many teachers cited a negative experience of external agencies coming into schools to deliver PE: ‘because I don’t think they know the children well enough … And they don’t have that whole view of the child’ (P5TFG2). If teachers were to administer an assessment it should be ‘ … just short, simple, that it’s easy for everybody to understand’ (P17TFG5).

Child participants also identified the class teacher as the most suitable to be put ‘in charge’ of assessment, identifying barriers and potential solutions to this. For example, children suggested a TA would be able to help with an assessment, and the recording of an assessment could improve implementation. The pivotal role of others in assessment was discussed frequently within the child groups suggesting that family, friends, teachers, and coaches could support and administer assessments, but this person had to be trusted to provide a positive and safe experience.

It was also identified in several focus groups that children themselves could be a part of the assessment implementation process. Such involvement could be an important part of children’s learning and a potential opportunity to ease the burden on the teachers themselves, and it aligns with the wider person-centred philosophy of PL (Green et al. 2018). Self-assessment in children has also been found to promote self-regulated learning and self-efficacy (Panadero, Jonsson, and Botella 2017), with children in this study recognising positive ways they already helped their friends and classmates in PE using technology such as tablets or phones to ‘ … Record yourself doing that [new skill] every day, and then as you start to do it, you’ll get better every day’ (P36KS2FG7). Technology may facilitate authentic assessment by enabling teachers and students to share the experience via platforms such as app-based software (Rossum, Morley, and Morley 2018). In the present study, children often stopped themselves mentioning technology when they realised it challenged current perceptions of PE. Teachers too advocated the use of technology, self-assessment, and peer assessment. At the surface level, it was perceived that such strategies have the potential to overcome challenges relating to time, competence, and confidence in relation to assessment. In addition, these strategies could provide the opportunity for children to reflect and support one another, and could empower children to take ownership of their relationship with physical activity and develop their self-awareness. The assessment of physical literacy itself should be an enjoyable and motivating learning experience and could be seen as an ‘Assessment as Learning’ opportunity, that is, assessment that is integrated with learning implicitly (Torrance 2007; Tolgfors 2019).

What about video evidence, so some children videoing each other and watching it back and assessing it together. Teachers don’t need to do it, the kids can. They can talk together, making it less formal. Motivating each other. (P02TFG1)

Record yourself doing that [new skill] every day, and then as you start to do it, you’ll get better every day. (P36CFG7)

Child participants explained why they thought it would be important for an assessment to be differentiated for children of different ages and abilities ‘ … we’re a tiny bit older and they’re a tiny bit younger … we won’t get better if it’s too easy, and if it’s too hard’ (KS1FG6). The use of longitudinal assessment to capture changes and provide support over time was identified as being important while ensuring that constructive and meaningful feedback was translated to the children.

Academics/practitioners also referred to issues that were not necessarily specific to PL assessment but indicative of the challenges faced in implementing any assessment, such as space, equipment, lack of training, and lack of teaching assistants present in PE lessons, with time the most prevalent barrier. Teachers suggested they would require training and support in order to deliver an assessment effectively. Children gave a variety of suggestions in response to how long an assessment should take [not longer than a PE lesson] and how often it should be conducted: ‘ … Twice a year … So that from the period of time you could learn. [It would show] … that I improved [from] the last time’ (P15KS1FG3). Klingberg et al. (2019) suggested that a ‘good’ assessment of fundamental movement skills in pre-schoolers should take less than 10 minutes. Considering that an average UK class contains approximately 30 pupils, assessing a whole class individually in one lesson is not appropriate. Adults seemed to feel that an ideal assessment would allow them to administer and provide feedback during a lesson, removing the potential for further ‘paperwork’ outside of class time. Members of the academics/practitioner group were consistent in suggesting that the assessment should be a regular process, over time, to build up a longitudinal picture of an individual’s PL journey.

It is evident that stakeholders perceive a large number of factors to affect the successful implementation of a PL assessment. Crucially, as a guiding principle, future assessment should consider the balance between the burden on the child and teacher versus the potential benefit of a comprehensive and time-consuming assessment process.

Existing assessments

The inductive theme of existing assessments highlighted that academics/practitioners were aware of existing PL tools and their limitations (content, consistency, limited applied use). No teachers or students recalled the use of PL assessment in their schools. Assessment within primary PE was rare, and when it was used, teachers and children only referred to the physical domain. These assessments often resulted in children being sedentary for prolonged periods of a lesson, which is the opposite of what a PE lesson is trying to achieve.

During the child groups, participants shared assessment experiences in reference to key curricular subjects (i.e. Maths and English). Unfortunately, children often reported negative testing experiences, where they felt under pressure: ‘ … You’re doing the test … it gets you like really worried and stuff’ (P19KS2FG4). Participants within the child focus groups associated taking part in PE with fun and welcomed the idea of an assessment in this context. Therefore, it may not be the assessment itself that children are wary of, but the experience typically surrounding formal assessments. Even in the youngest age group, children understood why an assessment was important.

P15 You can practise words so you know how you spell them.

P18 Because when you’re older, you want to be able to spell anything. (KS1FG3)

The purpose of PL is to engage and support children in PA participation for life (Whitehead 2001, 2010), and a supportive and nurturing assessment environment should be fostered. Examples of how practitioners can achieve this include providing opportunities to practise activities before being assessed, performing activities as part of a small group, and pairing children with similar abilities to work together. Although assessment in other areas of the curriculum is common, research from Edwards et al. (2019) identified that pedagogical and assessment practices are often not transferred from other subjects into the PE context, where they could also be of benefit. Our findings suggest that although participants indicated demand for an appropriate PL assessment, existing assessments do not meet the needs of the teachers who would use them. Therefore, future PL assessment tools should consider existing successful pedagogical and assessment practices in other areas of the curriculum that can be transferred into a PE context, but should also be aware and wary of the negative aspects of existing assessments.

Strengths and limitations

To the best of our knowledge, this is the first study to qualitatively investigate stakeholders’ perceptions of PL assessment and one of few studies in wider PE/PA assessment research to consider children and teachers as stakeholders. Findings inform a number of recommendations that should influence future PL assessment considerations. This study has implications for future research (stakeholder involvement in assessment development, the need for longitudinal PL assessment, formative, and summative PL assessment to support both learning and accountability) and practice (holistic assessment that considers all PL domains, consideration of assessment feasibility in context, conducted by the class teacher, and subsequently teacher CPD). The findings do not allow conclusions to be drawn on specific assessments; further research is needed to evaluate the feasibility of specific PL assessments and how successfully these can be used within a primary school context.

A further strength of this study is the number of focus groups conducted across different stakeholder groups, throughout the UK, and in a range of demographic settings. Comparisons of experiences across the UK were outside of the scope of the current study and could warrant further exploration. Although 14 schools were contacted, only approximately 10% of teachers agreed to take part in the present study. We acknowledge that those who agreed to take part may have had more positive experiences with PL, PE and assessment, and thus been more willing to participate. Furthermore, whilst it is generally agreed that assessment of PL is important beyond school PE (Barnett et al. 2019), this was also outside the scope of the current project, and we would encourage that parents/guardians also be considered stakeholders in this age group.

Conclusions

To our knowledge, this is the first study to explore and triangulate the perceptions of children, teachers, and PL academics/practitioners, to inform the development of future PL assessment and practice. Critically, the inclusion of the children’s voice has enabled new and important insight into young people’s perceptions of PL and PL assessment. The findings from this study contribute to the evidence base to inform the onward development of a conceptually aligned PL assessment tool, suitable for use in schools. In turn, this will enable robust, empirical evidence to be collated, to evidence theory, and inform practice and policy.

Findings suggest that although participants indicated demand for an appropriate PL assessment, existing assessments do not meet the needs of all stakeholders. Notably, there is a clear lack of assessments relating to the affective and cognitive domains used in current primary PE practice. Any future assessments of PL should consider the development and incorporation of assessment of these elements as part of a holistic and conceptually aligned PL assessment. Participants identified numerous factors that can influence implementation and acceptability of an assessment, and those developing an assessment should consider the balance between the purpose of the assessment and the potential assessment burden. This should include the consideration of logistical issues such as time, training and resources needed, as well as the theoretical and philosophical implications of assessing PL. In order to implement PL assessment within a school setting while enabling the teacher to be the assessor, which participants in this study clearly called for, CPD for teachers will be crucial. Further studies are also needed to consider the implications of using the suggested strategies of technology, peer assessment, and self-assessment in this population and the appropriateness of these methods in practice. Stakeholder groups also expressed the importance of providing a positive experience for children where engagement is celebrated and their relationship with PA can be nurtured. Ultimately, future PL assessment should strive to be an integral part of an individual’s physical literacy journey, whereby the assessment itself is a learning experience and the process of participating in an assessment contributes to the development of physical literacy and, in turn, engagement in lifelong physical activity.

Acknowledgements

The authors state that no financial interest or benefit arose from the direct applications of this research.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Angell, C., J. Alexander, and J. A. Hunt. 2015. “‘Draw, Write and Tell’: A Literature Review and Methodological Development on the ‘Draw and Write’ Research Method.” Journal of Early Childhood Research 13 (1): 17–28. doi:https://doi.org/10.1177/1476718X14538592.

- Association Internationale des Écoles Supérieures d’Éducation Physique (AIESEP). 2020. AIESEP Position Statement on Physical Education Assessment. https://aiesep.org/scientific-meetings/position-statements/

- Barnett, L. M., D. A. Dudley, R. D. Telford, D. R. Lubans, A. S. Bryant, W. M. Roberts, P. J. Morgan, et al. 2019. “Guidelines for the Selection of Physical Literacy Measures in Physical Education in Australia.” Journal of Teaching in Physical Education 38 (2): 119–125. doi:https://doi.org/10.1123/jtpe.2018-0219.

- Beni, S., D. Ní Chróinín, and T. Fletcher. 2019. “A Focus on the How of Meaningful Physical Education in Primary Schools.” Sport, Education and Society 24 (6): 624–637. doi:https://doi.org/10.1080/13573322.2019.1612349.

- Black, P., and D. Wiliam. 2009. “Developing the Theory of Formative Assessment.” Educational Assessment, Evaluation and Accountability 21 (1): 5–31. doi:https://doi.org/10.1007/s11092-008-9068-5.

- Bowen, D. J., M. Kreuter, B. Spring, L. Cofta-Woerpel, L. Linnan, D. Weiner, S. Bakken, et al. 2009. “How We Design Feasibility Studies.” American Journal of Preventive Medicine 36 (5): 452–457. Elsevier. doi:https://doi.org/10.1016/j.amepre.2009.02.002.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. doi:https://doi.org/10.1191/1478088706qp063oa.

- Braun, V., and V. Clarke. 2019. “Reflecting on Reflexive Thematic Analysis.” Qualitative Research in Sport, Exercise and Health 11 (4): 589–597. Routledge. doi:https://doi.org/10.1080/2159676X.2019.1628806.

- Cairney, J., H. Clark, D. Dudley, and D. Kriellaars. 2019. “Physical Literacy in Children and Youth-a Construct Validation Study.” Journal of Teaching in Physical Education 38 (2): 84–90. doi:https://doi.org/10.1123/jtpe.2018-0270.

- Corbin, C. B. 2016. “Implications of Physical Literacy for Research and Practice: A Commentary.” Research Quarterly for Exercise and Sport 87 (1): 14–27. doi:https://doi.org/10.1080/02701367.2016.1124722.

- DinanThompson, M., and D. Penney. 2015. “Assessment Literacy in Primary Physical Education.” European Physical Education Review 21 (4): 485–503. doi:https://doi.org/10.1177/1356336X15584087.

- Domville, M., P. M. Watson, D. Richardson, and L. E. F. Graves. 2019. “Children’s Perceptions of Factors That Influence PE Enjoyment: A Qualitative Investigation.” Physical Education and Sport Pedagogy 24 (3): 207–219. doi:https://doi.org/10.1080/17408989.2018.1561836.

- Dudley, D. A. 2015. “A Conceptual Model of Observed Physical Literacy.” The Physical Educator 72 (5).

- Edwards, L. C., A. S. Bryant, R. J. Keegan, K. Morgan, S. M. Cooper, and A. M. Jones. 2018. “‘Measuring’ Physical Literacy and Related Constructs: A Systematic Review of Empirical Findings.” Sports Medicine 48 (3): 659–682. Springer International. doi:https://doi.org/10.1007/s40279-017-0817-9.

- Edwards, L. C., A. S. Bryant, R. J. Keegan, K. Morgan, and A. M. Jones. 2017. “Definitions, Foundations and Associations of Physical Literacy: A Systematic Review.” Sports Medicine 47 (1): 113–126. Springer International Publishing. doi:https://doi.org/10.1007/s40279-016-0560-7.

- Edwards, L. C., A. S. Bryant, K. Morgan, S. M. Cooper, A. M. Jones, and R. J. Keegan. 2019. “A Professional Development Program to Enhance Primary School Teachers’ Knowledge and Operationalization of Physical Literacy.” Journal of Teaching in Physical Education 38 (2): 126–135. doi:https://doi.org/10.1123/jtpe.2018-0275.

- Foulkes, J. D., L. Foweather, S. J. Fairclough, and Z. Knowles. 2020. “‘I Wasn’t Sure What It Meant to be Honest’ – Formative Research Towards a Physical Literacy Intervention for Preschoolers.” Children 7 (7): 76. doi:https://doi.org/10.3390/children7070076.

- Giblin, S., D. Collins, and C. Button. 2014. “Physical Literacy: Importance, Assessment and Future Directions.” Sports Medicine 44 (9): 1177–1184. Springer International Publishing. doi:https://doi.org/10.1007/s40279-014-0205-7.

- Gibson, F. 2007. “Conducting Focus Groups with Children and Young People: Strategies for Success.” Journal of Research in Nursing 12 (5): 473–483. doi:https://doi.org/10.1177/17449871079791.

- Green, N. R., W. M. Roberts, D. Sheehan, and R. J. Keegan. 2018. “Charting Physical Literacy Journeys Within Physical Education Settings.” Journal of Teaching in Physical Education 37 (3): 272–279. doi:https://doi.org/10.1123/jtpe.2018-0129.

- Haerens, L., N. Aelterman, M. Vansteenkiste, B. Soenens, and S. Van Petegem. 2015. “Do Perceived Autonomy-Supportive and Controlling Teaching Relate to Physical Education Students’ Motivational Experiences Through Unique Pathways? Distinguishing Between the Bright and Dark Side of Motivation.” Psychology of Sport and Exercise 16 (P3): 26–36. doi:https://doi.org/10.1016/j.psychsport.2014.08.013.

- Heitink, M. C., F. M. Van der Kleij, B. P. Veldkamp, K. Schildkamp, and W. B. Kippers. 2016. “A Systematic Review of Prerequisites for Implementing Assessment for Learning in Classroom Practice.” Educational Research Review 17: 50–62. Elsevier Ltd. doi:https://doi.org/10.1016/j.edurev.2015.12.002.

- Hyndman, B., and S. Pill. 2018. “What’s in a Concept? A Leximancer Text Mining Analysis of Physical Literacy Across the International Literature.” European Physical Education Review 24 (3): 292–313. doi:https://doi.org/10.1177/1356336X17690312.

- International Physical Literacy Association (IPLA). 2017. IPLA Definition. https://www.physical-literacy.org.uk/.

- Jacquez, F., L. M. Vaughn, and E. Wagner. 2013. Youth as Partners, Participants or Passive Recipients: A Review of Children and Adolescents in Community-Based Participatory Research (CBPR). doi:https://doi.org/10.1007/s10464-012-9533-7.

- Jess, M., N. Carse, and J. Keay. 2016. “The Primary Physical Education Curriculum Process: More Complex That You Might Think!!.” Education 3-13 44 (5): 502–512. doi:https://doi.org/10.1080/03004279.2016.1169482.

- Keegan, R. J., L. M. Barnett, D. A. Dudley, R. D. Telford, D. R. Lubans, A. S. Bryant, W. M. Roberts, et al. 2019. “Defining Physical Literacy for Application in Australia: A Modified Delphi Method.” Journal of Teaching in Physical Education 38 (2): 105–118. doi:https://doi.org/10.1123/jtpe.2018-0264.

- Klingberg, B., N. Schranz, L. M. Barnett, V. Booth, and K. Ferrar. 2019. “The Feasibility of Fundamental Movement Skill Assessments for Pre-School Aged Children.” Journal of Sports Sciences 37 (4): 378–386. doi:https://doi.org/10.1080/02640414.2018.1504603.

- Lander, N., P. J. Morgan, J. Salmon, and L. M. Barnett. 2016. “Teachers Perceptions of a Fundamental Movement Skill (FMS) Assessment Battery in a School Setting.” Measurement in Physical Education and Exercise Science 20 (1): 50–62. doi:https://doi.org/10.1080/1091367X.2015.1095758.

- Longmuir, P. E., and M. S. Tremblay. 2016. “Top 10 Research Questions Related to Physical Literacy.” Research Quarterly for Exercise and Sport 87 (1): 28–35. doi:https://doi.org/10.1080/02701367.2016.1124671.

- Longmuir, P. E., K. E. Gunnell, J. D. Barnes, K. Belanger, G. Leduc, S. J. Woodruff, and M. S. Tremblay. 2018. “Canadian Assessment of Physical Literacy Second Edition: A Streamlined Assessment of the Capacity for Physical Activity Among Children 8 to 12 Years of Age.” BMC Public Health 18 (2): 1–12.

- Longmuir, P. E., C. Boyer, M. Lloyd, Y. Yang, E. Boiarskaia, W. Zhu, and M. S. Tremblay. 2015. “The Canadian Assessment of Physical Literacy: Methods for Children in Grades 4 to 6 (8 to 12 Years).” BMC Public Health 15 (1): 1–11.

- López-Pastor, V. M., D. Kirk, E. Lorente-Catalán, A. MacPhail, and D. Macdonald. 2013. “Alternative Assessment in Physical Education: A Review of International Literature.” Sport, Education and Society 18 (1): 57–76. doi:https://doi.org/10.1080/13573322.2012.713860.

- MacKintosh, K. A., Z. R. Knowles, N. D. Ridgers, and S. J. Fairclough. 2011. “Using Formative Research to Develop CHANGE!: A Curriculum-Based Physical Activity Promoting Intervention.” BMC Public Health 11 (1): 831. doi:https://doi.org/10.1186/1471-2458-11-831.

- Morley, D., T. Van Rossum, D. Richardson, and L. Foweather. 2019. “Expert Recommendations for the Design of a Children’s Movement Competence Assessment Tool for Use by Primary School Teachers.” European Physical Education Review 25 (2): 524–543. doi:https://doi.org/10.1177/1356336X17751358.

- Ní Chróinín, D., and C. Cosgrave. 2013. “Implementing Formative Assessment in Primary Physical Education: Teacher Perspectives and Experiences.” Physical Education and Sport Pedagogy 18 (2): 219–233. doi:https://doi.org/10.1080/17408989.2012.666787.

- Noonan, R. J., L. M. Boddy, S. J. Fairclough, and Z. R. Knowles. 2016. “Write, Draw, Show, and Tell: A Child-Centred Dual Methodology to Explore Perceptions of Out-of-School Physical Activity.” BMC Public Health 16 (1): 1–19.

- O’Loughlin, J., D. N. Chróinín, and D. O’Grady. 2013. “Digital Video: The Impact on Children’s Learning Experiences in Primary Physical Education.” European Physical Education Review 19 (2): 165–182. doi:https://doi.org/10.1177/1356336X13486050.

- Panadero, E., A. Jonsson, and J. Botella. 2017. “Effects of Self-Assessment on Self-Regulated Learning and Self-Efficacy: Four Meta-Analyses.” Educational Research Review 22: 74–98. Elsevier Ltd. doi:https://doi.org/10.1016/j.edurev.2017.08.004.

- Penney, D., R. Brooker, P. Hay, and L. Gillespie. 2009. “Curriculum, Pedagogy and Assessment: Three Message Systems of Schooling and Dimensions of Quality Physical Education.” Sport, Education and Society 14 (4): 421–442. doi:https://doi.org/10.1080/13573320903217125.

- Robinson, D. B., L. Randall, and J. Barrett. 2018. “Physical Literacy (mis)Understandings: What do Leading Physical Education Teachers Know About Physical Literacy?” Journal of Teaching in Physical Education 37 (3): 288–298. doi:https://doi.org/10.1123/jtpe.2018-0135.

- Roetert, E. P., and S. C. Jefferies. 2014. “Embracing Physical Literacy.” Journal of Physical Education, Recreation & Dance 85 (8): 38–40. doi:https://doi.org/10.1080/07303084.2014.948353.

- Rossum, T. van, and D. Morley. 2018. “The Role of Digital Technology in the Assessment of Children’s Movement Competence During Primary School Physical Education Lessons.” 48–68. doi:https://doi.org/10.4324/9780203704011-4.

- Shearer, C., H. R. Goss, L. C. Edwards, R. J. Keegan, Z. R. Knowles, L. M. Boddy, E. J. Durden-Myers, and L. Foweather. 2018. “How is Physical Literacy Defined? A Contemporary Update.” Journal of Teaching in Physical Education 37 (3): 237–245. doi:https://doi.org/10.1123/jtpe.2018-0136.

- Stiglic, N., and R. M. Viner. 2019. “Effects of Screentime on the Health and Well-Being of Children and Adolescents: A Systematic Review of Reviews.” BMJ Open 9 (1): e023191. BMJ Publishing Group. doi:https://doi.org/10.1136/bmjopen-2018-023191.

- Smith, B., and K. R. McGannon. 2018. “Developing Rigor in Qualitative Research: Problems and Opportunities within Sport and Exercise Psychology.” International Review of Sport and Exercise Psychology 11 (1): 101–121.

- Tolgfors, B. 2018. “Different Versions of Assessment for Learning in the Subject of Physical Education.” Physical Education and Sport Pedagogy 23 (3): 311–327. doi:https://doi.org/10.1080/17408989.2018.1429589.

- Tolgfors, B. 2019. “Transformative Assessment in Physical Education.” European Physical Education Review 25 (4): 1211–1225. doi:https://doi.org/10.1177/1356336X18814863.

- Tong, A., P. Sainsbury, and J. Craig. 2007. Consolidated Criteria for Reporting Qualitative Research (COREQ): A 32-item Checklist for Interviews and Focus Groups. doi:https://doi.org/10.1093/intqhc/mzm042.

- Torrance, H. 2007. “Assessment as Learning? How the Use of Explicit Learning Objectives, Assessment Criteria and Feedback in Post-Secondary Education and Training Can Come to Dominate Learning.” Assessment in Education: Principles, Policy and Practice 14 (3): 281–294. doi:https://doi.org/10.1080/09695940701591867.

- Tremblay, M., and M. Lloyd. 2010. “Physical Literacy Measurement – The Missing Piece.” Physical & Health Education Journal 76 (1): 26–30. http://www.albertaenaction.ca/admin/pages/48/Physical Literacy Article PHE Journal 2010.pdf.

- United Nations Educational, Scientific, and Cultural Organization (UNESCO). 2015. Quality Physical Education Guidelines for Policymakers. Paris: UNESCO Press.

- van Rossum, T., L. Foweather, D. Richardson, S. J. Hayes, and D. Morley. 2019. “Primary Teachers’ Recommendations for the Development of a Teacher-oriented Movement Assessment Tool for 4–7 Years Children.” Measurement in Physical Education and Exercise Science 23 (2): 124–134. doi:https://doi.org/10.1080/1091367X.2018.1552587.

- Whitehead, M. 2001. “The Concept of Physical Literacy.” European Journal of Physical Education 6 (2): 127–138. doi:https://doi.org/10.1080/1740898010060205.

- Whitehead, M. 2010. “Physical Literacy: Throughout the Lifecourse.” In Physical Literacy: Throughout the Lifecourse. Routledge Taylor & Francis Group. doi:https://doi.org/10.4324/9780203881903.

- Young, L., J. O’Connor, and L. Alfrey. 2019. “Physical Literacy: A Concept Analysis.” Sport, Education and Society 25 (8): 946–959.