ABSTRACT

Selective outcome reporting can result in overestimation of treatment effects, research waste, and reduced openness and transparency. This review aimed to examine selective outcome reporting in trials of behavioural health interventions and determine potential outcome reporting bias. A review of nine health psychology and behavioural medicine journals was conducted to identify randomised controlled trials of behavioural health interventions published since 2019. Discrepancies in outcome reporting were observed in 90% of the 29 trials with corresponding registrations/protocols. Discrepancies included 72% of trials omitting prespecified outcomes; 55% of trials introduced new outcomes. Thirty-eight percent of trials omitted prespecified and introduced new outcomes. Three trials (10%) downgraded primary outcomes in registrations/protocols to secondary outcomes in final reports; downgraded outcomes were not statistically significant in two trials. Five trials (17%) upgraded secondary outcomes to primary outcomes; upgraded outcomes were statistically significant in all trials. In final reports, three trials (7%) omitted outcomes from the methods section; three trials (7%) introduced new outcomes in results that were not in the methods. These findings indicate that selective outcome reporting is a problem in behavioural health intervention trials. Journal- and trialist-level approaches are needed to minimise selective outcome reporting in health psychology and behavioural medicine.

Selective outcome reporting in clinical trials involves reporting only some of the prespecified outcomes examined, including outcomes that were not prespecified, and/or changing the importance of outcomes in terms of primary and secondary importance (Kirkham et al., Citation2010; Thomas & Heneghan, Citation2022). Outcome reporting bias (ORB) arises from selective outcome reporting and involves reporting a subset of prespecified outcomes in the final publication based on knowledge of the results (Hutton & Williamson, Citation2000). This can occur due to awareness that significant results are more likely to be published than negative or null findings (DeVito & Goldacre, Citation2019; Hopewell et al., Citation2009) or that there may be a ‘time lag’ in publishing studies with null and/or negative findings (Dwan et al., Citation2008).

Selective outcome reporting is problematic because it can lead to overestimation of treatment effects and inflated effect sizes (Ioannidis, Citation2008; Macura et al., Citation2010; Shah et al., Citation2020; Thomas & Heneghan, Citation2022), increased frequency of false positive results (Olsson-Collentine et al., Citation2023), and misinterpretation of trial findings (Cristea & Naudet, Citation2019; Thomas & Heneghan, Citation2022). Selective outcome reporting can also contribute to research waste (Thomas & Heneghan, Citation2022; Yordanov et al., Citation2018) and removes transparency and openness in research reporting (Hagger, Citation2019; Ioannidis et al., Citation2017). Despite the adverse consequences of selective outcome reporting, high levels have been observed in trials within and across health areas. For example, in a systematic review of studies examining ORB in reports of randomised controlled trials (RCTs), 40–62% of studies reported at least one outcome that was changed, omitted, or introduced (Dwan et al., Citation2013). In a review of trials submitted to the BMJ, 14% of reported outcomes were not specified a priori, and 10% of pre-specified outcomes were not reported (Weston et al., Citation2016). Trials across a range of health areas have identified selective outcome reporting. For instance, in a review of surgical RCTs, 30% of outcomes were found to be incompletely reported (Wang et al., Citation2023); 14% of pharmacotherapy trials examined in another review demonstrated irregularities between published primary outcomes and those included in the trial registration (Lancee et al., Citation2022). A review of rehabilitation trials identified discrepancy rates of 61% and 27% between trial abstracts and main texts respectively, and study registrations (Komukai et al., Citation2024). 
A review of psychotherapy randomised controlled trials found that only 4.5% of reviewed trials had no discrepancies between the article and the trial registry (Bradley et al., Citation2017).

Evidence of selective outcome reporting in health psychology and behavioural medicine is scant. However, the prevalence of selective outcome reporting has been shown to be similar across psychological disciplines, with over 40% of American (John et al., Citation2012) and Italian (Agnoli et al., Citation2017) and over 50% of Brazilian (Rabelo et al., Citation2020) psychology researchers self-reporting some form of selective outcome reporting in their research. Examinations of differences between doctoral dissertations in psychology and subsequent publications have also found that 18% omitted outcomes and 9% added new outcomes in publications (Cairo et al., Citation2020). Further, a review of concordance between behavioural health trial registrations and primary outcome reporting specifically in the journal BMC Public Health identified that 70% of papers had selectively reported outcomes (Taylor & Gorman, Citation2022).

Behavioural interventions, which aim to change health and/or health behaviour outcomes (Michaelsen & Esch, Citation2022), focus on a range of disease areas and health outcomes. Findings from trials of these interventions inform health research, practice, and policy (Heneghan et al., Citation2017; Matvienko-Sikar et al., Citation2020). Thus, selective outcome reporting in such trials represents a ‘threat to evidence-based healthcare’ (Weston et al., Citation2016). Moreover, research in health psychology and behavioural medicine has the potential to profoundly influence individual, community, and population health and wellbeing (Segerstrom et al., Citation2023).

There is an increasing focus on improving the conduct and reporting of research in health psychology and behavioural medicine, particularly in terms of openness and transparency (Hagger, Citation2019; Kwasnicka et al., Citation2021; Segerstrom et al., Citation2023), which is impeded by selective reporting practices (Hagger, Citation2019; Ioannidis et al., Citation2017). For instance, a recent survey of international experts in open science and health psychology identified ‘examination of the extent that open science behaviours are currently practiced in health psychology’ as the top research priority in this area (Norris, Prescott, et al., Citation2022). Examining selective outcome reporting in trials of behavioural health interventions will likely enable identification of whether, and to what extent, selective reporting occurs, which is important to guide future research to improve the conduct, reporting, and transparency of trials of behavioural health interventions. To date, we are not aware of such a review across the health psychology and behavioural medicine literature. The aim of this review was therefore to examine selective outcome reporting in a broad sample of trials of behavioural health interventions, and to determine the potential for ORB.

Materials and methods

This review is reported in line with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Page et al., Citation2021). The review was pre-registered on PROSPERO (registration number: CRD42022345015).

Eligibility criteria

Inclusion criteria

Studies were eligible for inclusion if they reported on a RCT of a behavioural health intervention. The definition of a behavioural health intervention was operationalised as any intervention involving the active participation of any target group, with the goal of changing health outcomes and/or health behaviour outcomes (Michaelsen & Esch, Citation2022). Eligible studies had to report at least one health or health behaviour outcome. To gain insight into selective outcome reporting across health psychology and behavioural medicine trials, there were no restrictions on specific types of health or health behaviour outcomes. Studies were required to be published in or after January 2019. This range was chosen to allow sufficient time following the publication of the Consolidated Standards of Reporting Trials Statement for Reporting Social and Psychological Interventions (CONSORT-SPI 2018) in July 2018 (Montgomery et al., Citation2018) for researchers to follow guidelines regarding outcome reporting in this area. As such, the date range was chosen to capture those papers for which we would expect best practice in outcome reporting due to area-specific guidance on this presented in CONSORT-SPI 2018. Studies were required to be published in English, but there were no restrictions on trial location, population, or intervention or behaviour type.

Exclusion criteria

Studies that did not report on a RCT were excluded. We thus excluded quasi-randomised studies, feasibility/pilot trials, interim analyses, and secondary analyses of trials.

Search strategy

To enable the inclusion of a broadly representative sample of trials of behavioural health interventions, nine health psychology and behavioural medicine journals were searched: Health Psychology, Journal of Health Psychology, British Journal of Health Psychology, Journal of Behavioral Medicine, Annals of Behavioral Medicine, Psychology & Health, Applied Psychology: Health and Well-Being, Translational Behavioral Medicine, and International Journal of Behavioral Medicine. These journals were chosen as they are all Q1 or Q2 journals, with good impact factors (currently ranging from 3.1 to 8.1), and are recognised by the research community in these areas as publishing robust and high-quality behavioural health research. Journals were searched for articles published from 1 January 2019 to 14 June 2023. Each journal was searched individually using the online journal/publisher search function and the following broad search strategy: Random* Contro* Trial OR RCT. Broad search terms were used to identify all potentially eligible RCTs of behavioural health interventions because this review was not focused on specific intervention types or behaviours.

Protocols and trial registrations for included studies were identified by hand-searching papers for references to a trial registration and/or a published protocol. Where no trial registration or protocol was reported in-text, a manual search was conducted up to 14 June 2023 on ClinicalTrials.gov and the World Health Organization's (WHO's) International Clinical Trials Registry Platform (ICTRP) search portals to identify corresponding registrations. Where protocols and/or trial registrations could not be identified through journal and registry searches, corresponding trial authors were emailed.

Screening

Titles and abstracts of identified papers were independently screened in duplicate by two reviewers (JOS, KMS, SK, or ST), followed by full text screening, which was also conducted independently in duplicate by two reviewers (JOS, KMS, SK, or ST). Inter-rater reliability was κ > 0.8 at all stages, indicating very high levels of agreement (McHugh, Citation2012). Any disagreements during screening were resolved by consensus discussion and/or recourse to a third reviewer where needed.
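As an illustration of the agreement statistic reported above, the following minimal sketch computes Cohen's kappa for two reviewers' include/exclude decisions. The function name `cohens_kappa` is hypothetical and the decision lists are invented for illustration; they are not data from this review.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must rate the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical screening decisions (illustrative only, not review data).
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "exclude", "exclude", "exclude", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2))
```

Kappa corrects raw percentage agreement for the agreement expected by chance, which is why it is preferred over simple agreement when most records are excluded at screening.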

Data extraction

Data were extracted from the methods and results sections of each trial report and from the corresponding protocol/trial registration, where available, under the following headings: general information (author name, title, date of publication, country, protocol/trial registration, year of protocol/registration); study information (health area, intervention type, setting, population, outcome type); participant characteristics (sample size); intervention characteristics (intervention target, duration, mode of delivery, number of assessments); outcome reporting (number and details of pre-specified outcomes in protocol/registration and methods, number and details of outcomes reported in the results section, number and details of statistically significant and null outcomes); and selective outcome reporting (number and details of prespecified outcomes not reported, number of new outcomes introduced, changes in outcome importance level). Statistically significant outcomes were defined as those reported as significant in trial reports, typically if p < 0.05 or, in the absence of a p-value, if the effect estimate had a CI indicative of p < 0.05. All data were extracted using a pre-specified data extraction form (Supplementary File 1) by at least one reviewer (JOS, SK) and checked by a second reviewer (KMS, SK). For trial registrations and protocols, data were extracted from the most recent version.

Identification of outcome reporting discrepancies

At least one reviewer (JOS or SK) compared outcomes reported in the results sections of included studies with outcomes reported in the methods sections and the study protocols/registrations where available. All comparisons were checked by a second reviewer (KMS). Classification of consistencies and discrepancies between prespecified outcomes and those reported in results sections was informed by previously described classification approaches (Chan et al., Citation2004; Howard et al., Citation2017; Mathieu, Citation2009). Studies were considered to have no discrepancies from prespecified outcomes if all outcomes were reported in the methods and results section as they were in the study protocol/registration (where available). Similarly, if no study protocol/registration was available, studies were considered to have no discrepancies in outcome reporting between methods and results, if outcomes were reported in the study results section as they were in the methods section.

Decisions on discrepancies were informed by previously used methods (Chan et al., Citation2004; Mathieu, Citation2009) and were noted if: (1) a pre-specified outcome in the study protocol/registration was omitted from the results paper; (2) a new outcome that was not prespecified in the protocol/registration was introduced in the results paper; (3) an outcome included in the methods was omitted from the results; (4) a new outcome that was not included in methods was introduced in the results; (5) a primary outcome in either the methods and/or study protocol/registration was downgraded to a secondary outcome in the results; (6) a secondary outcome in the methods and/or study protocol/registration was upgraded to a primary outcome in the results. In addition, discrepancies were considered to potentially indicate bias if an outcome introduced in the results section, that was not included in the protocol/registration or the methods section, was reported as statistically significant. Discrepancies were also considered to potentially indicate bias if an outcome upgraded from a secondary outcome to a primary outcome was subsequently reported as statistically significant, or an outcome downgraded from a primary outcome to a secondary outcome was subsequently reported as non-significant.
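The six discrepancy criteria and the potential-bias rules above can be sketched as simple set comparisons. This is a minimal illustration only: the class `TrialOutcomes`, the function `classify_discrepancies`, the exact-name matching of outcomes, and the example trial are all assumptions for illustration; in the review itself, comparisons were made by reviewers rather than by code.

```python
from dataclasses import dataclass, field


@dataclass
class TrialOutcomes:
    """Outcome name -> importance level ('primary' or 'secondary'), by source."""
    registration: dict = field(default_factory=dict)  # protocol/registration
    methods: dict = field(default_factory=dict)       # methods section
    results: dict = field(default_factory=dict)       # results section
    significant: set = field(default_factory=set)     # outcomes reported as significant


def classify_discrepancies(trial):
    reg, meth, res = trial.registration, trial.methods, trial.results

    def prespecified_levels(outcome):
        # Levels assigned to an outcome in the registration and/or the methods.
        return {reg.get(outcome), meth.get(outcome)}

    found = {
        # (1) prespecified in the registration but missing from the results
        "omitted_vs_registration": sorted(set(reg) - set(res)),
        # (2) in the results but not in the registration (only if one exists)
        "new_vs_registration": sorted(set(res) - set(reg)) if reg else [],
        # (3) in the methods but missing from the results
        "omitted_vs_methods": sorted(set(meth) - set(res)),
        # (4) in the results but not in the methods
        "new_vs_methods": sorted(set(res) - set(meth)),
        # (5) primary beforehand, secondary in the results
        "downgraded": sorted(o for o, lvl in res.items()
                             if lvl == "secondary" and "primary" in prespecified_levels(o)),
        # (6) secondary beforehand, primary in the results
        "upgraded": sorted(o for o, lvl in res.items()
                           if lvl == "primary" and "secondary" in prespecified_levels(o)),
    }
    # Potential bias: new-and-significant, upgraded-and-significant,
    # or downgraded-and-non-significant outcomes.
    new_everywhere = set(res) - set(reg) - set(meth)
    found["potential_bias"] = sorted(
        {o for o in new_everywhere if o in trial.significant}
        | {o for o in found["upgraded"] if o in trial.significant}
        | {o for o in found["downgraded"] if o not in trial.significant}
    )
    return found


# Hypothetical trial (illustrative only, not review data).
example = TrialOutcomes(
    registration={"weight": "primary", "steps": "secondary", "mood": "secondary"},
    methods={"weight": "primary", "steps": "secondary", "sleep": "secondary"},
    results={"weight": "secondary", "steps": "primary",
             "sleep": "secondary", "stress": "secondary"},
    significant={"steps", "stress"},
)
report = classify_discrepancies(example)
print(report)
```

Exact string matching is a deliberate simplification; real registrations often describe the same outcome with different wording, measures, or time points, which is why manual comparison with a second-reviewer check was used.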

Data synthesis

Data were assessed using narrative synthesis and in tabular format. Descriptive statistics were calculated for study characteristics. Outcome reporting was presented narratively and in tabular format to include the number and percentage of the types of outcome discrepancies as well as the number and percentage of trials for which there were no discrepancies. Results are presented first in terms of discrepancies between prespecified outcomes in the study protocol/registration and the results. As discrepancies between methods and results can be considered to occur at a different level to discrepancies from pre-specified outcomes, such discrepancies are then presented separately. Descriptive subgroup analysis was conducted for trials with a corresponding protocol/registration based on whether the protocol/registration was dated before or after publication of CONSORT-SPI 2018 (i.e., before or after 2019). This analysis represents a change from the review protocol and was conducted to examine whether the availability of CONSORT-SPI 2018 had an impact on selective outcome reporting. Relationships between ORB and statistical significance were also examined descriptively because the small sample size would limit the meaningfulness of inferential statistics.

Results

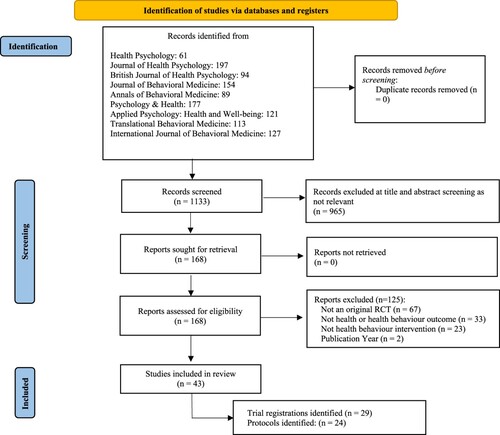

The search of the nine health psychology and behavioural medicine journals identified 1134 potentially relevant records. Of these, 43 trials were eligible for inclusion in this review. See Figure 1.

Figure 1. PRISMA flow chart of included studies from original search to final stage. Note. All trials with protocols also had corresponding registrations.

The majority of the 43 trials were conducted in North America (n = 16, 37%) or Europe (n = 14, 33%). Health areas targeted included physical activity and/or sedentary behaviour (n = 10, 24%), obesity and/or weight (n = 8, 19%), and diet (n = 5, 12%). Behavioural health interventions examined included physical activity interventions (n = 8, 19%), implementation intention interventions (n = 4, 9%), and informational/educational interventions (n = 4, 9%). The majority of populations included were non-clinical (n = 29, 67%) and the majority of trials were conducted with adults (n = 41, 95%). Twenty-nine trials (67%) were registered on a trial registry; 24 (56%) also had a published protocol. The majority of trials were registered and/or had published a protocol before 2019. The mean sample size was 239 participants. See Table 1 for full study characteristics.

Table 1. Study characteristics.

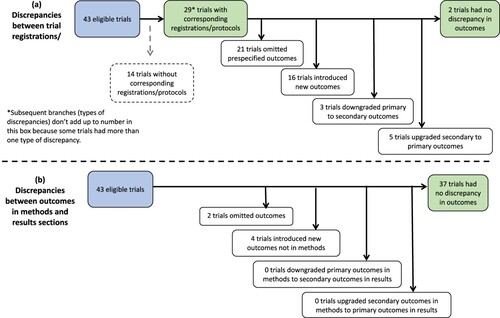

Discrepancies in outcome reporting were observed for trials published in all reviewed journals. Twenty-eight of the 43 reviewed trials (65%) demonstrated at least one discrepancy in findings either between the trial protocol/registration and the results paper or between the methods section and the results section of the final report. Only three of the 29 trials with discrepancies (10%) reported reasons for discrepancies. Reasons for discrepancies included omission due to missing outcome data and modification to data collection due to COVID-19; one trial stated some outcomes were ‘not reported here’ but did not provide additional information. See Table 2 (see Supplementary File 2 for study-level discrepancies). In a subgroup analysis based on whether trials had protocols/registrations prior to 2019, the majority of both trials with protocols/registrations prior to 2019 (86%) and after 2019 (90%) demonstrated some discrepancies between the protocol/registration and the results. More trials with protocols/registrations after 2019 (76%) than those with protocols/registrations before 2019 had outcomes omitted (see Supplementary File 3).

Table 2. Outcome reporting in included trials (n=43).

Discrepancies or changes to levels of outcome importance between trial registration/protocol and results

Corresponding registrations/protocols were available for 29 (67%) of the 43 included trials (Table 2(a)). Twenty-six (90%) of these 29 trials had at least one discrepancy from the prespecified outcomes. Twenty-one (72%) of the 29 trials omitted from the results paper at least one outcome prespecified in the trial registration/protocol. Sixteen (55%) of the 29 trials introduced new outcomes in the results paper that were not included in the trial registration and/or protocol. Eleven (38%) of the 29 trials both omitted prespecified outcomes and introduced new outcomes in the results paper.
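The percentages in the paragraph above follow directly from the reported counts. As a minimal worked check, the sketch below recomputes them using the counts stated in the text; the helper name `n_percent` and the dictionary labels are hypothetical.

```python
def n_percent(count, total):
    """Format a count as 'n (p%)', with the percentage rounded to a whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    return f"{count} ({round(100 * count / total)}%)"


TRIALS_WITH_REGISTRATION = 29  # denominator reported in the text

# Counts as reported in the text for trials with a registration/protocol.
reported_counts = {
    "any discrepancy": 26,
    "omitted prespecified outcome": 21,
    "introduced new outcome": 16,
    "both omitted and introduced": 11,
}

for label, count in reported_counts.items():
    print(f"{label}: {n_percent(count, TRIALS_WITH_REGISTRATION)}")
```

Keeping the denominator explicit matters here because some results in this review are expressed relative to the 29 trials with registrations/protocols and others relative to all 43 included trials.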

The number of outcomes reported in the results paper that were prespecified in trial registrations/protocols ranged from 1 to 17 outcomes (median = 7), with 20%-100% of outcomes in the results papers being prespecified in trial registrations/protocols. The number of outcomes that were prespecified in trial registrations/protocols but were omitted from the results paper ranged from 1 to 20 outcomes (median = 5); 14%-83% of prespecified outcomes were omitted. The number of new outcomes that were introduced in the results paper (i.e., not prespecified in trial registrations/protocols) ranged from 1 to 9 outcomes (median = 2); 13%-83% of outcomes reported in the results were newly introduced and not prespecified in trial registrations/protocols.

In terms of changes to levels of outcome importance, in three (10%) of the 29 trials with corresponding registrations/protocols, outcomes were downgraded from a primary outcome in the trial registration and/or protocol to a secondary outcome in the published results. In five (17%) of the 29 trials, outcomes were upgraded from a secondary outcome to a primary outcome.

Discrepancies between trial methods and results sections

Six (14%) of the 43 reviewed trials had discrepancies between the methods and results sections of the published report. Three trials (7%) omitted from the results outcomes that were included in the published methods section (Table 2(b)). Of these, one trial omitted two (13%) of 17 outcomes, one omitted one (10%) of 10 outcomes, and the other omitted five (45%) of 11 outcomes. Three trials (7%) introduced new outcomes in the published results that were not included in the methods section. The number of outcomes introduced ranged from one to two; the percentage of outcomes reported in the results that were newly introduced ranged from 10% to 67%. There were no observed instances of the level of outcome importance being changed between the methods and the results sections in any reviewed trials. In a subgroup analysis based on whether trials had protocols/registrations prior to 2019, no trials with protocols/registrations prior to 2019 demonstrated any discrepancies between the methods and the results. Nineteen percent of trials with protocols/registrations after 2019 demonstrated discrepancies (see Supplementary File 3).

Outcome reporting bias

In 19 trials, outcomes were introduced in the results section that were not in the protocol/registration (n = 16; Table 2(a)) and/or the methods section (n = 3; Table 2(b)). See Table 3. Across these 19 trials, a newly added outcome was reported as statistically significant in 16 trials (84%). Of the 16 trials in which outcomes were introduced that were not in the protocol/registration, 26 newly added outcomes were reported as statistically significant across 13 trials (81%). Of the three trials in which outcomes were introduced in the results that were not in the methods, three newly added outcomes were reported as statistically significant across all three trials (100%). Of the three trials in which outcomes were downgraded from primary outcomes in the protocol/registration to secondary outcomes in the results (Table 2(a), Table 3), one outcome was reported as statistically significant in one trial (33%); seven downgraded outcomes were reported as null across two trials. Of the five trials in which outcomes were upgraded from a secondary outcome in the protocol/registration to a primary outcome in the results (Table 2(a), Table 3), all upgraded outcomes were reported as statistically significant.

Table 3. Potential outcome reporting bias.

Discussion

To our knowledge, this review is the first examination of selective outcome reporting in a broad cohort of trials of behavioural health interventions in health psychology and behavioural medicine. Our findings demonstrate that some form of selective outcome reporting occurred in 67% of the trials included in this review. This is higher than previously observed levels of selective outcome reporting in broad cohorts of RCTs in health research, where selective outcome reporting ranged from 40-62% (Dwan et al., Citation2013; Weston et al., Citation2016), and in specific health areas, such as 15% in obesity clinical trials (Rankin et al., Citation2017). This finding is comparable to findings from a review of outcome reporting in behavioural health trials published specifically in the journal BMC Public Health (Taylor & Gorman, Citation2022). Outcome reporting discrepancies identified in our review included omission of pre-specified outcomes, introduction of new outcomes, and/or changes to prespecified levels of outcome importance in final published results. Identified discrepancies may be due to ‘spin’ or bias to present significant and/or novel/‘exciting’ findings, poor reporting behaviours, and/or peer-review and editorial practices that fall short of ensuring transparency and confidence in research results.

Our findings have important and worrying implications for health psychology and behavioural medicine because selective outcome reporting compromises transparency (Hagger, Citation2019; Ioannidis et al., Citation2017), contributes to research waste (Thomas & Heneghan, Citation2022; Yordanov et al., Citation2018), and impacts evidence syntheses (Ioannidis et al., Citation2017). Further, selective outcome reporting distorts pooled treatment effects (Ioannidis, Citation2008; Macura et al., Citation2010; Shah et al., Citation2020; Thomas & Heneghan, Citation2022), which can lead to mis-informed decision making, with important implications for healthcare professionals and for patient, community, and population health and wellbeing (Segerstrom et al., Citation2023).

Making pre-specified research plans, such as protocols and trial registrations, publicly available enhances transparency and enables evaluations of outcome reporting (Calméjane et al., Citation2018; Chen et al., Citation2019; Thomas & Heneghan, Citation2022; Weston et al., Citation2016). That over half of the reviewed trials had a corresponding published protocol and/or trial registration is encouraging, and is comparable to findings in specific health areas, such as physical activity behaviour change interventions (Norris et al., Citation2022). However, that journals had published RCTs without corresponding trial registrations and/or protocols is surprising and worrying. It is particularly worrying given the availability of guidance such as CONSORT-SPI 2018 (Grant et al., Citation2018), and given that most of the journals searched in this review either require or encourage pre-registration, with some journals recommending specific registries, such as ClinicalTrials.gov.

Our findings indicated that more trials omitted than introduced outcomes. However, it was not possible to examine whether this was due to knowledge of results, which is a common reason for such omissions (Smyth et al., Citation2011), because we did not have data on omitted outcomes. There are other potential reasons why trial authors may change outcomes; for instance, as noted in our findings, in one instance outcome discrepancies were due to steps taken to modify data collection during COVID-19. Regardless of the reasons for outcome omission, if outcomes were measured and subsequently not reported, this amounts to a waste of research time and resources. For instance, evidence from a recent review of 329 therapeutic, public health, and rheumatology-specific interventions found that the median time spent collecting data was 56.1 hours for primary outcomes and 190.2 hours for secondary outcomes (Gardner et al., Citation2022). That review also found a considerable range in costs for data collection, from approximately £53 in a small drug trial to almost £31,900 in a large phase III prostate cancer screening trial; the median overall data cost was found to be £8016 (Gardner et al., Citation2022). Given that our review identified a large number of omitted outcomes, this implies a large amount of resource waste in these trials.

Introduction of new outcomes, and changing levels of importance assigned to prespecified outcomes, as were observed in this review, are also problematic and reduce transparency, particularly where discrepancies are not explained. Trial sample sizes are derived from the effect sizes researchers expect to observe in their primary outcome if the intervention is effective (Schulz & Grimes, Citation2005). Thus, changing a secondary outcome to a primary outcome impacts whether the trial is sufficiently powered to detect an effect in that outcome. Further, changing levels of importance is often tied to knowledge of outcome results and so introduces ORB (Hutton & Williamson, Citation2000). While we did not identify evidence of ORB for newly introduced outcomes in this review, in the majority of trials in which changes to the level of outcome importance were identified, the change was found to favour a statistically significant result, indicating ORB.
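To illustrate why upgrading a secondary outcome can undermine statistical power, the sketch below uses the standard normal-approximation formula for a two-arm comparison of means, n per group = 2(z_{1-α/2} + z_{1-β})² / d². The function name `n_per_group` and the two standardised effect sizes are hypothetical values chosen for illustration; they are not drawn from any trial in this review.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-arm comparison of means."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power
    return ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


# Hypothetical standardised effects (illustrative assumptions only).
n_primary = n_per_group(0.5)    # effect assumed for the registered primary outcome
n_secondary = n_per_group(0.3)  # smaller effect plausible for a secondary outcome
print(n_primary, n_secondary)
```

A trial sized for the larger assumed effect would recruit far fewer participants than the smaller effect requires, so an outcome promoted to primary after the fact may be tested in a sample that was never designed to detect it.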

While discrepancies between methods and results sections were less frequent than discrepancies between protocols and the final report in the reviewed studies, identification of such discrepancies indicates issues with reporting behaviour and peer-review/editorial practices. Previous research has found that peer-reviewers often fail to identify discrepancies and deficiencies in reported methods and results (Chauvin et al., Citation2019; Hopewell et al., Citation2014) and there have been calls for greater robustness in peer-review and editorial processes (Ioannidis et al., Citation2017; Weston et al., Citation2016). Health psychology and behavioural medicine journals can help to minimise selective outcome reporting at the author, reviewer, and editorial levels, for instance by requiring authors to account for any discrepancies in outcome reporting as a submission requirement, in addition to requiring pre-registration. While all reviewed journals instruct authors to follow relevant reporting guidelines, with most specifying CONSORT (Moher et al., Citation2010), only one journal (International Journal of Behavioral Medicine) provides explicit instruction on reporting and explaining differences between prespecified and reported outcomes in the final manuscript. Such explicit instruction is needed in all journals, whereby authors must declare any outcome discrepancies and, where discrepancies occur, confirm that they are explained in the text and/or in a supplementary file. Authors should be made aware that disclosing changes from protocols does not represent misconduct but instead better aligns with accurate, transparent, and complete reporting (Ioannidis et al., Citation2017).

It is the responsibility of peer-reviewers and editors to ensure that such transparent outcome reporting behaviour occurs, and that reporting guidance is followed. However, recommendations for peer-reviewers and editors to more actively check trial protocols and registrations against trial publications should take into account the burden-related challenges they face because, for the most part, peer-reviewers and editors act in a voluntary capacity (Severin & Chataway, Citation2021). As such, more pragmatic approaches are also needed, such as health psychology and behavioural medicine journals explicitly requiring (not just encouraging) pre-registration, structured reporting, and inclusion of clear statements and/or supplementary files explaining changes from protocols/registrations or, in the absence of changes, statements that changes have not occurred. For instance, the finding that only 10% of trials with discrepancies reported reasons for them highlights a clear gap in reporting and peer-review/editorial checking. Requiring trialists to include such information provides an ‘easy win’ for journals and editors to maximise transparency moving forward. Future research into development and implementation of improved peer-review and editorial processes is needed to support improvements in transparent outcome reporting at this level.

Health psychology and behavioural medicine journals are making efforts to enhance transparency and openness. This includes the simultaneous publication of a statement from the Behavioral Medicine Research Council entitled ‘Open Science in Health Psychology and Behavioral Medicine’ in three leading journals in the field (i.e., Health Psychology, Psychosomatic Medicine & Annals of Behavioral Medicine, see Segerstrom et al., Citation2023) encouraging the increased use of open research practices and providing resources for researchers. The introduction of submissions of ‘registered reports’ (where peer review happens before data collection commences) and calls for pre-registration for all types of behaviour change interventions and research studies generally will help to improve transparency in outcome reporting and minimise selective outcome reporting (O’Connor, Citation2021; Thomas & Heneghan, Citation2022). Such efforts are applicable across study designs, which is important given that selective outcome reporting can also be problematic in non-randomised design studies and systematic reviews. For instance, 43% of Cochrane systematic reviews included in a recent examination demonstrated discrepancies from the review protocols (Shah et al., Citation2020). The role of selective outcome reporting in non-RCT studies in health psychology and behavioural medicine warrants further examination to identify and minimise selective outcome reporting across research conducted in this area.

Further efforts to improve outcome reporting in behavioural health trials in health psychology and behavioural medicine should also focus on trialist motivations and behaviours in outcome choice, measurement, and reporting. This includes the need for research to better understand the causes of selective outcome reporting, and the barriers and facilitators to full and transparent outcome reporting, as has been done in other outcome methods areas, such as core outcome set use (Bellucci et al., 2021; Hughes et al., 2022; Matvienko-Sikar et al., 2022). Behavioural approaches to maximise open and transparent research practices (Norris & O’Connor, 2019) can also potentially help to improve outcome reporting. For instance, two recent studies have used the behaviour change wheel framework to better understand barriers and facilitators and to develop behaviour change theory-based approaches to increase uptake of core outcome sets (Matvienko-Sikar et al., 2022) and pre-registration (Osborne & Norris, 2022).

This study has some limitations. First, including RCTs published in nine health psychology and behavioural medicine journals limits generalisability of the findings beyond these journals. However, the chosen journals are recognised as publishing high quality research and cover a large proportion of published health psychology and behavioural medicine research. By limiting the review to papers published in these journals, we also limited the review to studies published in the English language, further limiting generalisability. Second, inclusion of papers published from 2019, while intended to capture best practice in outcome reporting following publication of the CONSORT-SPI-2018 guidance (Montgomery et al., 2018), limited our ability to examine potential changes over time; future research could examine this, particularly in relation to specific health outcomes and/or interventions. Third, the majority of trials commenced before publication of the CONSORT-SPI-2018 checklist (Grant et al., 2018). However, the CONSORT statement (Moher et al., 2010) was available to all trialists during trial conduct, and all trials were published after the CONSORT-SPI-2018 checklist (Grant et al., 2018). As such, all trials should include reasons for changes, as outlined in CONSORT guidelines (Grant et al., 2018; Moher et al., 2010). Interestingly, only studies with protocols/registrations that predated CONSORT-SPI 2018 included explanations of discrepancies. Fourth, we did not contact the authors of the papers to identify reasons for discrepancies, and so have limited knowledge of why discrepancies arose, because only three papers provided reasons. Further research in this area would facilitate better understanding of why discrepancies occur and help to minimise their occurrence. Finally, not all studies had corresponding protocols or trial registrations and so, in these instances, examinations were limited to discrepancies between methods and results sections only. However, we identified that discrepancies still emerged between these sections, highlighting an area for improvement in trial reporting. In addition, if no study protocol/registration was available, we classified studies as having no discrepancies where changes were not evident from the methods section. It is plausible that studies without protocols/registrations are more likely to have selective outcome reporting than studies with protocols/registrations. Therefore, we are likely underestimating selective outcome reporting by only comparing methods and results sections for papers without protocols/registrations.

As noted by Moher (2007), researchers have a moral obligation to transparently and completely report trial outcomes. However, our findings indicate high levels of selective outcome reporting and some evidence of ORB. Such findings clearly indicate an important area for improvement in health psychology and behavioural medicine. To reduce selective outcome reporting in this area, top-down and bottom-up efforts and approaches are needed. These include improved peer-review and editorial processes, as well as improved trialist conduct and reporting behaviours. Such processes and behaviours should include, at a minimum, pre-registering trials completely and accurately, adhering to pre-registered plans, transparently explaining any changes from the protocol, and adhering to reporting guidelines; peer-review and editorial checks that these actions have occurred are also essential. Reducing selective outcome reporting in this area will improve the quality and robustness of reporting of behavioural health interventions, with important implications for patient and public health.

Authors' contributions

KMS was involved in conceptualisation, methodology, investigation, data curation, analysis, supervision, writing the manuscript draft, and reviewing and editing it. JOS was involved in methodology, investigation, data curation, analysis, writing the original draft, and review and editing. SK was involved in investigation, data curation, analysis, and review and editing of the manuscript draft. ST was involved in investigation, and review and editing of the manuscript draft. KA, MB, SMcH, DOC, VS, ET, KD, IJS, and JK were involved in conceptualisation, methodology, and review and editing of the manuscript draft.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Agnoli, F., Wicherts, J. M., Veldkamp, C. L. S., Albiero, P., & Cubelli, R. (2017). Questionable research practices among Italian research psychologists. PLoS One, 12(3), e0172792. https://doi.org/10.1371/journal.pone.0172792

- Bellucci, C., Hughes, K., Toomey, E., Williamson, P. R., & Matvienko-Sikar, K. (2021). A survey of knowledge, perceptions and use of core outcome sets among clinical trialists. Trials, 22(1), 937. https://doi.org/10.1186/s13063-021-05891-5

- Bradley, H., Rucklidge, J., & Mulder, R. (2017). A systematic review of trial registration and selective outcome reporting in psychotherapy randomized controlled trials. Acta Psychiatrica Scandinavica, 135(1), 65–77. https://doi.org/10.1111/acps.12647

- Cairo, A. H., Green, J. D., Forsyth, D. R., Behler, A. M. C., & Raldiris, T. L. (2020). Gray (Literature) matters: Evidence of selective hypothesis reporting in social psychological research. Personality and Social Psychology Bulletin, 46(9), 1344–1362. https://doi.org/10.1177/0146167220903896

- Calméjane, L., Dechartres, A., Tran, V. T., & Ravaud, P. (2018). Making protocols available with the article improved evaluation of selective outcome reporting. Journal of Clinical Epidemiology, 104, 95–102. https://doi.org/10.1016/j.jclinepi.2018.08.020

- Chan, A.-W., Hróbjartsson, A., Haahr, M. T., Gøtzsche, P. C., & Altman, D. G. (2004). Empirical evidence for selective reporting of outcomes in randomized trials: Comparison of protocols to published articles. JAMA, 291(20), 2457. https://doi.org/10.1001/jama.291.20.2457

- Chauvin, A., Ravaud, P., Moher, D., Schriger, D., Hopewell, S., Shanahan, D., Alam, S., Baron, G., Regnaux, J.-P., Crequit, P., Martinez, V., Riveros, C., Le Cleach, L., Recchioni, A., Altman, D. G., & Boutron, I. (2019). Accuracy in detecting inadequate research reporting by early career peer reviewers using an online CONSORT-based peer-review tool (COBPeer) versus the usual peer-review process: A cross-sectional diagnostic study. BMC Medicine, 17(1), 205. https://doi.org/10.1186/s12916-019-1436-0

- Chen, T., Li, C., Qin, R., Wang, Y., Yu, D., Dodd, J., Wang, D., & Cornelius, V. (2019). Comparison of clinical trial changes in primary outcome and reported intervention effect size between trial registration and publication. JAMA Network Open, 2(7), e197242. https://doi.org/10.1001/jamanetworkopen.2019.7242

- Cristea, I. A., & Naudet, F. (2019). Increase value and reduce waste in research on psychological therapies. Behaviour Research and Therapy, 123, 103479. https://doi.org/10.1016/j.brat.2019.103479

- DeVito, N. J., & Goldacre, B. (2019). Catalogue of bias: Publication bias. BMJ Evidence-Based Medicine, 24(2), 53–54. https://doi.org/10.1136/bmjebm-2018-111107

- Dwan, K., Altman, D. G., Arnaiz, J. A., Bloom, J., Chan, A.-W., Cronin, E., Decullier, E., Easterbrook, P. J., Von Elm, E., Gamble, C., Ghersi, D., Ioannidis, J. P. A., Simes, J., & Williamson, P. R. (2008). Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS One, 3(8), e3081. https://doi.org/10.1371/journal.pone.0003081

- Dwan, K., Gamble, C., Williamson, P. R., Kirkham, J. J., & the Reporting Bias Group. (2013). Systematic review of the empirical evidence of study publication bias and outcome reporting bias—an updated review. PLoS One, 8(7), e66844. https://doi.org/10.1371/journal.pone.0066844

- Gardner, H., Elfeky, A., Pickles, D., Dawson, A., Gillies, K., Warwick, V., & Treweek, S. (2022). A good use of time? Providing evidence for how effort is invested in primary and secondary outcome data collection in trials. Trials, 23(1), 1047. https://doi.org/10.1186/s13063-022-06973-8

- Grant, S., Mayo-Wilson, E., Montgomery, P., Macdonald, G., Michie, S., Hopewell, S., & Moher, D., on behalf of the CONSORT-SPI Group. (2018). CONSORT-SPI 2018 explanation and elaboration: Guidance for reporting social and psychological intervention trials. Trials, 19(1), 406. https://doi.org/10.1186/s13063-018-2735-z

- Hagger, M. S. (2019). Embracing open science and transparency in health psychology. Health Psychology Review, 13(2), 131–136. https://doi.org/10.1080/17437199.2019.1605614

- Heneghan, C., Goldacre, B., & Mahtani, K. R. (2017). Why clinical trial outcomes fail to translate into benefits for patients. Trials, 18(1), 122. https://doi.org/10.1186/s13063-017-1870-2

- Hopewell, S., Collins, G. S., Boutron, I., Yu, L.-M., Cook, J., Shanyinde, M., Wharton, R., Shamseer, L., & Altman, D. G. (2014). Impact of peer review on reports of randomised trials published in open peer review journals: Retrospective before and after study. BMJ, 349, g4145. https://doi.org/10.1136/bmj.g4145

- Hopewell, S., Loudon, K., Clarke, M. J., Oxman, A. D., & Dickersin, K. (2009). Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database of Systematic Reviews, 2009(1), MR000006. https://doi.org/10.1002/14651858.MR000006.pub3

- Howard, B., Scott, J. T., Blubaugh, M., Roepke, B., Scheckel, C., & Vassar, M. (2017). Systematic review: Outcome reporting bias is a problem in high impact factor neurology journals. PLoS One, 12(7), e0180986. https://doi.org/10.1371/journal.pone.0180986

- Hughes, K. L., Williamson, P. R., & Young, B. (2022). In-depth qualitative interviews identified barriers and facilitators that influenced chief investigators’ use of core outcome sets in randomised controlled trials. Journal of Clinical Epidemiology, 144, 111–120. https://doi.org/10.1016/j.jclinepi.2021.12.004

- Hutton, J. L., & Williamson, P. R. (2000). Bias in meta-analysis due to outcome variable selection within studies. Journal of the Royal Statistical Society Series C: Applied Statistics, 49(3), 359–370. https://doi.org/10.1111/1467-9876.00197

- Ioannidis, J. P. A. (2008). Why most discovered true associations are inflated. Epidemiology, 19(5), 640–648. https://doi.org/10.1097/EDE.0b013e31818131e7

- Ioannidis, J. P., Caplan, A. L., & Dal-Ré, R. (2017). Outcome reporting bias in clinical trials: Why monitoring matters. BMJ, 356, j408. https://doi.org/10.1136/bmj.j408

- John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science, 23(5), 524–532. https://doi.org/10.1177/0956797611430953

- Kirkham, J. J., Dwan, K. M., Altman, D. G., Gamble, C., Dodd, S., Smyth, R., & Williamson, P. R. (2010). The impact of outcome reporting bias in randomised controlled trials on a cohort of systematic reviews. BMJ, 340, c365. https://doi.org/10.1136/bmj.c365

- Komukai, K., Sugita, S., & Fujimoto, S. (2024). Publication bias and selective outcome reporting in randomized controlled trials related to rehabilitation: A literature review. Archives of Physical Medicine and Rehabilitation, 105(1), 150–156. https://doi.org/10.1016/j.apmr.2023.06.006

- Kwasnicka, D., ten Hoor, G. A., van Dongen, A., Gruszczyńska, E., Hagger, M. S., Hamilton, K., Hankonen, N., Heino, M. T. J., Kotzur, M., Noone, C., Rothman, A. J., Toomey, E., Warner, L. M., Kok, G., Peters, G.-J., & Luszczynska, A. (2021). Promoting scientific integrity through open science in health psychology: Results of the Synergy Expert Meeting of the European health psychology society. Health Psychology Review, 15(3), 333–349. https://doi.org/10.1080/17437199.2020.1844037

- Lancee, M., Schuring, M., Tijdink, J. K., Chan, A., Vinkers, C. H., & Luykx, J. J. (2022). Selective outcome reporting across psychopharmacotherapy randomized controlled trials. International Journal of Methods in Psychiatric Research, 31(1), e1900. https://doi.org/10.1002/mpr.1900

- Macura, A., Abraha, I., Kirkham, J., Gensini, G. F., Moja, L., & Iorio, A. (2010). Selective outcome reporting: Telling and detecting true lies. The state of the science. Internal and Emergency Medicine, 5(2), 151–155. https://doi.org/10.1007/s11739-010-0371-z

- Mathieu, S. (2009). Comparison of registered and published primary outcomes in randomized controlled trials. JAMA, 302(9), 977. https://doi.org/10.1001/jama.2009.1242

- Matvienko-Sikar, K., Byrne, M., Clarke, M., Kirkham, J., Kottner, J., Mellor, K., Quirke, F., Saldanha, J., Smith, I., Toomey, V., & Williamson, E. (2022). Using behavioural science to enhance use of core outcome sets in trials: Protocol. HRB Open Research, 5, 23. https://doi.org/10.12688/hrbopenres.13510.1

- Matvienko-Sikar, K., Terwee, C. B., Gargon, E., Devane, D., Kearney, P. M., & Byrne, M. (2020). The value of core outcome sets in health psychology. British Journal of Health Psychology, 25(3), 377–389. https://doi.org/10.1111/bjhp.12447

- McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282. https://doi.org/10.11613/BM.2012.031

- Michaelsen, M. M., & Esch, T. (2022). Functional mechanisms of health behavior change techniques: A conceptual review. Frontiers in Psychology, 13, 725644. https://doi.org/10.3389/fpsyg.2022.725644

- Moher, D. (2007). Reporting research results: A moral obligation for all researchers. Canadian Journal of Anesthesia/Journal canadien d'anesthésie, 54(5), 331–335. https://doi.org/10.1007/BF03022653

- Moher, D., Hopewell, S., Schulz, K. F., Montori, V., Gøtzsche, P. C., Devereaux, P. J., Elbourne, D., Egger, M., & Altman, D. G. (2010). CONSORT 2010 explanation and elaboration: Updated guidelines for reporting parallel group randomised trials. BMJ, 340, c869. https://doi.org/10.1136/bmj.c869

- Montgomery, P., Grant, S., Mayo-Wilson, E., Macdonald, G., Michie, S., Hopewell, S., & Moher, D. (2018). Reporting randomised trials of social and psychological interventions: The CONSORT-SPI 2018 Extension. Trials, 19(1), 1–14. https://doi.org/10.1186/s13063-018-2733-1

- Norris, E., & O’Connor, D. B. (2019). Science as behaviour: Using a behaviour change approach to increase uptake of open science. Psychology & Health, 34(12), 1397–1406. https://doi.org/10.1080/08870446.2019.1679373

- Norris, E., Prescott, A., Noone, C., Green, J. A., Reynolds, J., Grant, S., & Toomey, E. (2022a). Open Science Research Priorities in Health Psychology [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/a7vrz

- Norris, E., Sulevani, I., Finnerty, A. N., & Castro, O. (2022b). Assessing Open Science practices in physical activity behaviour change intervention evaluations. BMJ Open Sport & Exercise Medicine, 8(2), e001282. https://doi.org/10.1136/bmjsem-2021-001282

- O’Connor, D. B. (2021). Leonardo da Vinci, preregistration and the Architecture of Science: Towards a More Open and Transparent Research Culture. Health Psychology Bulletin, 5, 39–45. https://doi.org/10.5334/hpb.30

- Olsson-Collentine, A., Van Aert, R. C. M., Bakker, M., & Wicherts, J. (2023). Meta-analyzing the multiverse: A peek under the hood of selective reporting. Psychological Methods. https://doi.org/10.1037/met0000559

- Osborne, C., & Norris, E. (2022). Pre-registration as behaviour: Developing an evidence-based intervention specification to increase pre-registration uptake by researchers using the Behaviour Change Wheel. Cogent Psychology, 9(1), 2066304. https://doi.org/10.1080/23311908.2022.2066304

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ, 372, n71. https://doi.org/10.1136/bmj.n71

- Rabelo, A. L. A., Farias, J. E. M., Sarmet, M. M., Joaquim, T. C. R., Hoersting, R. C., Victorino, L., Modesto, J. G. N., & Pilati, R. (2020). Questionable research practices among Brazilian psychological researchers: Results from a replication study and an international comparison. International Journal of Psychology, 55(4), 674–683. https://doi.org/10.1002/ijop.12632

- Rankin, J., Ross, A., Baker, J., O’Brien, M., Scheckel, C., & Vassar, M. (2017). Selective outcome reporting in obesity clinical trials: A cross-sectional review. Clinical Obesity, 7(4), 245–254. https://doi.org/10.1111/cob.12199

- Schulz, K. F., & Grimes, D. A. (2005). Sample size calculations in randomised trials: Mandatory and mystical. The Lancet, 365(9467), 1348–1353. https://doi.org/10.1016/S0140-6736(05)61034-3

- Segerstrom, S. C., Diefenbach, M. A., Hamilton, K., O’Connor, D. B., Tomiyama, A. J., Bacon, S. L., Behavioral Medicine Research Council, Bennett, G. G., Brondolo, E., Czajkowski, S. M., Davidson, K. W., Epel, E. S., Revenson, T. A., & Ruiz, J. M. (2023). Open science in health psychology and behavioral medicine: A statement from the behavioral medicine research council. Annals of Behavioral Medicine, 57(5), 357–367. https://doi.org/10.1093/abm/kaac044

- Severin, A., & Chataway, J. (2021). Overburdening of peer reviewers: A multi-stakeholder perspective on causes and effects. Learned Publishing, 34(4), 537–546. https://doi.org/10.1002/leap.1392

- Shah, K., Egan, G., Huan, L. N., Kirkham, J., Reid, E., & Tejani, A. M. (2020). Outcome reporting bias in Cochrane systematic reviews: A cross-sectional analysis. BMJ Open, 10(3), e032497. https://doi.org/10.1136/bmjopen-2019-032497

- Smyth, R. M. D., Kirkham, J. J., Jacoby, A., Altman, D. G., Gamble, C., & Williamson, P. R. (2011). Frequency and reasons for outcome reporting bias in clinical trials: Interviews with trialists. BMJ, 342, c7153. https://doi.org/10.1136/bmj.c7153

- Taylor, N. J., & Gorman, D. M. (2022). Registration and primary outcome reporting in behavioral health trials. BMC Medical Research Methodology, 22(1), 41. https://doi.org/10.1186/s12874-021-01500-w

- Thomas, E. T., & Heneghan, C. (2022). Catalogue of bias: Selective outcome reporting bias. BMJ Evidence-Based Medicine, 27(6), 370–372.

- Wang, A., Menon, R., Li, T., Harris, L., Harris, I. A., Naylor, J., & Adie, S. (2023). Has the degree of outcome reporting bias in surgical randomized trials changed? A meta-regression analysis. ANZ Journal of Surgery, 93(1–2), 76–82. https://doi.org/10.1111/ans.18273

- Weston, J., Dwan, K., Altman, D., Clarke, M., Gamble, C., Schroter, S., Williamson, P., & Kirkham, J. (2016). Feasibility study to examine discrepancy rates in prespecified and reported outcomes in articles submitted to The BMJ. BMJ Open, 6(4), e010075. https://doi.org/10.1136/bmjopen-2015-010075

- Yordanov, Y., Dechartres, A., Atal, I., Tran, V.-T., Boutron, I., Crequit, P., & Ravaud, P. (2018). Avoidable waste of research related to outcome planning and reporting in clinical trials. BMC Medicine, 16(1), 87. https://doi.org/10.1186/s12916-018-1083-x