Abstract

Guide dogs are sense-able agents that can assist Visually Impaired Persons (VIPs) in achieving mobility. But could a guide dog be replaced by a robot dog? Based on video recordings and ethnomethodological ‘conversation analysis’ of VIPs moving through a street environment with either a guide dog or a remotely operated robodog, this paper shows the multisensory and semiotic capacities of non-human agents as assistants in navigational activities. It also highlights the differences between their types of agency and sense-ability, and thus their different roles in situations of assisted mobility and disability mobility. This paper contributes to research on assisted and disability mobility involving humans and non-humans by showing how they work not as individual agents, but as ‘VIP + guide dog’ and ‘VIP + robodog + operator’ assemblages, and by demonstrating that these assemblages distribute and co-construct the practical perception of the material world that is necessary for accomplishing mobility.

Introduction

Dogs are mobile, and they can assist in the mobile activities of human beings. For thousands of years (Freedman and Wayne 2017), dogs have been used systematically as companions and helpers – for instance as watchdogs, hunting dogs, sled dogs and herding dogs. Today they also operate as specialised working dogs in the police force and the military, and as search-and-rescue dogs, detection dogs, therapy dogs, cadaver dogs and service dogs. Service dogs – or assistance dogs – are working dogs specially trained to assist people with disabilities. Examples include mobility assistance dogs, medical assistance dogs, hearing dogs for the deaf, and guide dogs for the blind. What most service dogs have in common is that their job is to assist with mobile activities, and that in many countries they have a legal right to enter all areas of public life. Walking together with, and being guided by, a service dog occurs within ‘phenomenal fields’ (Garfinkel 2002) as ‘microgeographies of everyday life’ (Cresswell 2011), and presents itself as a perspicuous setting for studying ‘micro-mobility’ (Fortunati and Taipale 2017), ‘corporeal mobility’ (Urry 2007), and ‘mobile formations’ (McIlvenny, Broth, and Haddington 2014).

However, as technology opens up new opportunities and the development of mobile robots gains momentum, further questions emerge: Can service dogs be replaced by mobile robots? Will a robot take over the job of a guide dog? In this paper, I apply an ethnomethodological approach (Garfinkel 1967) to a praxiological version of the question, namely: How do visually impaired people who use either a guide dog or a robodog accomplish mobile, navigational activities? This research question entails a narrow focus on the minute details of naturally organised, orderly multimodal actions as they occur in situated mobile settings. To research the question, this article investigates an experimental setting in which a VIP navigates first with a guide dog, and then with the commercial robodog Spot, controlled by a human operator.

The paper explores assisted and disability mobility with regard to visual impairment, guide dogs and robots. It contributes to the intersection between disability and mobilities, as suggested by Goggin (2016, 533), who states in Mobilities that the ‘strategically important zone where disability is crucially important is the shaping of new aspects of society and social relationships with digital technologies.’ The research presented in this paper contributes to mobility research by offering insights into assisted and disability mobilities in the context of non-human agents that combine with a VIP as emerging assemblages. The paper demonstrates how the different assemblages produce joint mobile actions based on the co-construction of distributed perception (cf. Due 2021a). Multisensory perception of the world in assisted mobility settings is not a property of an individual’s cognitive processes, but rather of an emerging and socially accountable assemblage, in this case either the ‘VIP + robodog + operator’ or the ‘VIP + guide dog’, that builds actions in concert. In both cases there is a ‘bodily contact space’ (Cekaite 2016, 40) between the agents. But, as I will show in this paper, the harness has a ‘functional signification’ (Garfinkel 2002, 84) as a tool for achieving the ‘mediated haptic sociality’ (Due 2021c) necessary for being mobile as an assemblage.

Materials and methods

Applying an ethnomethodological framework

Ethnomethodology is a branch of sociology that deals with aspects of social order and knowledge in situ as practical accomplishments. Focusing on social activities as situated, mobile human productions – the thisness (haecceity) (Garfinkel 2002) of whatever is occurring – this approach seeks to analyse the intelligibility and accountability of social activities ‘from within’ those activities themselves. As society is always in the making, in flux, in movement, each and every situation is, strictly speaking, new. Garfinkel highlights the fact that we cannot imagine society – rather, it is ‘only actually found out’ (Garfinkel 2002, 96). This implies a respecification of practices otherwise taken for granted within sociology, psychology, linguistics, and so on. A priori representational methods are not regarded as sufficient, since the details of action and interaction can only be examined in and through each case. Consequently, each and every finding is discovered, and its particular details, as locally and endogenously produced, cannot be imagined in advance. Furthermore, coordinated action between human beings does not require shared meanings or a shared understanding of each other’s ‘inner mental states’ (Edwards 1997). This makes it an ideal approach for studying interactions with non-human agents, because we ‘only’ study observable actions. Likewise, perception is not regarded as just a cognitive experience, but also as something tied practically to particular circumstances (Gibson 1979) and accomplished within social interactions as observable actions (Nishizaka 2020; Goodwin 2007; Due 2021a; Eisenmann and Lynch 2021; Garfinkel 1952; Cekaite 2020). When deployed in combination with conversation analysis, ethnomethodology can show how sequential organisation underpins human sociality (Kendrick et al. 2020; Sacks, Schegloff, and Jefferson 1974; Schegloff 2007). 
This approach, abbreviated to EM/CA, therefore enables the study of interactions between humans and non-humans.

An ethnomethodological and video ethnographic approach to mobility, as represented in Mobilities, has explored, for instance, cycling (Lloyd 2017, 2019; McIlvenny 2015) and the use of technology while walking (Laurier, Brown, and McGregor 2016; Licoppe 2016). As such, an ethnomethodological approach to mobile activities fits well with what Sheller and Urry call a new mobilities paradigm, one that problematises sedentarist theory that ‘treats as normal stability, meaning, and place, and treats as abnormal distance, change, and placelessness’ (Sheller and Urry 2006, 208). This paper makes a further contribution to the mobilities paradigm by highlighting how mobility is tied to perception and its social and co-creative nature, even in the context of non-human agents.

An EM/CA approach to visual impairment and the practice of navigating

EM/CA research on visual impairment dates back to the early days of ethnomethodology (see Robillard 1999). Garfinkel calls marginal cases involving VIPs ‘natural experiments’ (Rawls, Whitehead, and Duck 2020, 8ff), because their mobile practices may reveal taken-for-granted aspects of ordinary society. Psathas is particularly interested in one of the most obvious issues of vision loss: how to be mobile in the world. In several studies (e.g. Psathas 1976, 1992) he investigates navigation, mobility, orientation, wayfinding and walking and shows them to be practical accomplishments. Relieu, too, focuses on walking and talking, demonstrating the spatial embeddedness of talk in several publications (Morel and Relieu 2011; Quéré and Relieu 2001; Relieu 1994). Due and Lange study the use of the white cane (Due and Bierring Lange 2018a), the guide dog (Due and Lange 2018), and obstacle detection (Due and Bierring Lange 2018b) in mobile contexts. They show, for instance, how obstacle detection is accomplished on the move and highlight the almost unimaginable issues that arise when dealing with the irregularities of societal objects such as signs and bikes. Vom Lehn (2010) and Kreplak and Mondémé (2014) study mobility in museums, highlighting some of the tactile resources used by VIPs when exploring objects in spatial settings. The key insights from these ethnomethodological studies of navigation are that, for the VIP, walking is finely organised in minute detail; that it requires both multisensory perception and co-operative resources, such as a white cane, a companion, technology, or a guide dog, in order to succeed; and that problems may arise that cannot be anticipated in exact detail beforehand.

An EM/CA approach to non-human interaction

Ethnomethodological research has shown that it is possible to study interspecies interaction as practical action (cf. Goode 2007; Laurier, Maze, and Lundin 2006). The projection of the next relevant actions is key for sociality to evolve, and for joint action and activity to be accomplished. This has been shown consistently in EM/CA (Auer 2005; Goodwin 2002; Mondada 2006; Schegloff 1984; Streeck 1995; Streeck and Jordan 2009). There is also strong evidence of sequence and preference organisation in interaction among other animals (Rossano 2013). Ethnomethodological research on VIPs and guide dogs has shown that there is finely embodied, coordinated monitoring between dogs and their owners on the move, with a particular focus on gaze and head direction (Mondémé 2011a). Studies have shown how interspecies coordination is accomplished (Mondémé 2020) without taking into consideration what kind of agent a dog ‘really is’ compared to a human. Rather, the emphasis is on how they accomplish current situated activities together, moment by moment, in mobile settings. While this line of ethnomethodological research has illustrated shared walking and ‘interspecies intercorporeality’ (Due 2021c), work still remains to be done on precisely how, when navigating, obstacle detection is embedded in an interactional semiotic ecology of distributed perception between the sense-able agents.

In this paper, I contrast interactions involving a guide dog with those involving a robodog (Spot) equipped with artificial intelligence (AI) and with sensing and semiotic capabilities. Prior studies of robotic guide dogs and mobility dogs as assistive technologies (Estrada 2016; Manduchi and Kurniawan 2018) are scarce, and have only been conducted using robotic prototypes in experimental situations. Examples include the renowned MELDOG project (Tachi et al. 1981) and other forms of simple, computer vision-based guidance systems, which resemble suitcases on wheels (e.g. Guerreiro et al. 2019; Iwatsuka, Yamamoto, and Kato 2004) or are designed as flying drones (Folmer 2015). A few studies have compared dogs with robot dogs, focusing, for example, on how to embody the appeal of dogs in physical robots (Konok et al. 2018; Krueger et al. 2021) or on the enjoyment of walking with a digitally augmented robot dog (Norouzi et al. 2019). However, these mobile robots are not only highly experimental, but also move using wheels. Spot, on the other hand, is a robot with legs – a mobile machine that enables locomotion on uneven surfaces and can navigate stair-like obstacles. In addition, Spot is already commercially available. Studying dog and robot interactions, Kerepesi et al. (2006, 92) suggest that ‘more attention should be paid in the future to the robots’ ability to engage in cooperative interaction with humans’. This paper is a response to that call.

In the last couple of years, human-robot interaction (HRI) has also been examined through video-based analysis from an ethnomethodological conversation analytic perspective. While, to my knowledge, no research so far has examined four-legged robots in social interaction from an EM/CA perspective, past research has shed light on the use of permanently fixed robots like Pepper (Due 2019) and Nao (Pelikan and Broth 2016), as well as autonomous setups (Gehle et al. 2017), more experimental moving robots (Yamazaki et al. 2019) and quasi-autonomous systems such as telepresence robots (Due 2021b). Physical robots typically have sensors capable of processing information about the local environment, and a material structure that occupies positions in space. Interactions with intelligent robotic systems change the situation from a human participation framework (Goffman 1974) to a ‘synthetic situation’ (Knorr-Cetina 2009), which has consequences for practical action. Findings from EM/CA analysis of human-robot interaction suggest that the design and development of robots seldom take into account the detailed sequential responsiveness and pragmatics of human action formation and interaction (Albert, Housley, and Stokoe 2019; Pitsch 2016). This paper contributes to EM/CA research in human-robot interaction by showing how assisted mobility is possible based on co-creative perceptive actions within emerging assemblages.

Data and methodology

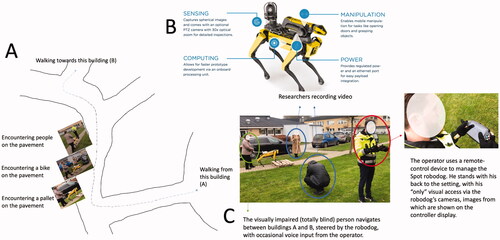

The data in this paper were collected as part of the research project BlindTech, which studies visually impaired people’s use of new technologies. The video ethnographic data come from a study conducted within an experimental setting. The visually impaired participants were asked to walk along a designated outdoor route, from building A to building B (Figure 2(A)).

Figure 2. (A) The path that the visually impaired person is navigating. (B) The robodog Spot. (C) The operator who controls the robodog.

In this paper, I focus on one visually impaired person who walked the route first with her guide dog and then with the robodog. Along the specified route, the VIP had to navigate around a pallet (a wooden construction raised 15 cm) and a cargo bike, both placed on the pavement. The VIP knew from the outset that she would need to pass these objects, but she did not know their exact locations. As the route was more familiar the second time, the two situations are not strictly comparable. However, this is not considered problematic, because the goal of the study is neither to predict outcomes by introducing different variables nor to work with randomisation or statistical models. Apart from the design of the route, there were no formalised protocols or strict procedures for the experimental design. The goal was not to compare according to equal measurements, but to provide knowledge of the enacted and embodied practices. The video-recorded actions can thus be regarded as naturally organised within the situation (Garfinkel 1991).

The robot known as Spot has several sensors and video cameras, as well as advanced AI algorithms that enable computer vision and 3D mapping of the environment. The robot is currently controlled remotely by an operator, but it autonomously coordinates its micro-movements and obstacle detection, which reduces the risk of overturning and/or collision. Although the robot can be programmed to walk a specific route completely autonomously, this is not yet considered safe enough for legal use by VIPs. The operator controls the robodog’s overall direction and pace, while the robot autonomously adjusts to local environments and obstacles, maintaining its balance, direction, pace, and so on. The operator uses a joypad with a screen that gives him visual access to the robot’s point of view through its onboard cameras, and he moves a joystick to determine the robot’s direction and pace. For example, if the operator steers the robot towards an obstacle, it will autonomously either step over it or, if it is ‘un-stepable’, avoid collision by turning away and around, using its sensor capacities. The operator can set the obstacle detection and distance parameters for avoiding collision in advance. In this experiment, the robot is set to swerve away autonomously from obstacles when it is about 30 cm from them, and it is then up to the operator to get it back on track. From an analytical perspective, it is impossible to separate all of the operator’s actions from the robot’s autonomous micro-adjustments: they merge into a single assemblage.
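To make this entanglement of operator and robot more concrete, the following Python sketch models the division of labour described above as a simple shared-control rule. It is a hypothetical simplification, not Spot’s actual control software or its SDK: the names `Command` and `blend`, the 90-degree turn and the speed cap are illustrative assumptions. Only the roughly 30 cm swerve threshold, and the split between operator-set direction and pace on the one hand and autonomous local adjustment on the other, come from the description above.

```python
from dataclasses import dataclass
from typing import Optional

# Swerve threshold from the text: about 30 cm in this experiment.
SWERVE_DISTANCE_M = 0.30


@dataclass
class Command:
    """Hypothetical motion command: direction relative to current facing, and pace."""
    heading_deg: float
    speed_mps: float


def blend(operator: Command,
          obstacle_distance_m: Optional[float],
          obstacle_steppable: bool) -> Command:
    """Combine the operator's global command with the robot's local adjustment.

    Hypothetical simplification, not Spot's actual control logic:
    - no obstacle, or a steppable one: the operator's command passes through
      (the robot would step over the obstacle);
    - an unsteppable obstacle within SWERVE_DISTANCE_M: the robot overrides the
      command, turning away and slowing down, until the operator steers back.
    """
    if obstacle_distance_m is None or obstacle_steppable:
        return operator
    if obstacle_distance_m <= SWERVE_DISTANCE_M:
        # Illustrative values only: a 90-degree turn away, capped at 0.3 m/s.
        return Command(heading_deg=operator.heading_deg + 90.0,
                       speed_mps=min(operator.speed_mps, 0.3))
    return operator


# The operator pushes straight ahead at 1 m/s; an unsteppable object is 25 cm away.
print(blend(Command(0.0, 1.0), 0.25, obstacle_steppable=False))
# prints Command(heading_deg=90.0, speed_mps=0.3)
```

What leaves `blend` is a single command. Someone watching the robot move sees only this output and, without access to the joystick input, cannot tell which part came from the operator and which from the robot’s own adjustment. This mirrors the analytical point that the two cannot be fully separated.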

In this experiment, two VIPs each walked the route six times in one day, using a white cane, a guide dog and a robodog. The VIPs participated in the experiment voluntarily, and they have been anonymised in this paper in accordance with national GDPR rules. This research does not engage with any form of personal data. Furthermore, this is not considered to be animal research, since it was not an experimental laboratory study and since the dog was owned by, and under the supervision of, the VIP involved. This experimental study was permitted by, and conducted on the property of, the Teknologisk Institut, Denmark. Further approval of the study was not required. Video material was used to capture natural interaction as it happens. Transcripts of specific excerpts have been chosen to show the sequential organisation of both talk-in-interaction and embedded bodily actions. The aim was to provide a close representation of the actual actions in a video excerpt and to perform a detailed analysis. This, of course, is not ‘objective’ data, but data constructed through the different research phases (Ochs 1979). The notation system follows the Jeffersonian system for verbal transcripts (Jefferson 2004), with frame grabs included from the video. Each image from the video has a timestamp and a corresponding number marked with #. The ordering principle follows the sequential unfolding of events in time.

Results

The analysis focuses on assisted mobility between the VIP and the two forms of non-human agent. It does this by unpacking the sequential organisation and the actions that make up the different observable practices involved. The emphasis is on what can be observed as displayed, practical action that is consequential within a specific situation. The analysis centres on the obstacles along the route, namely a pallet on the pavement and a parked cargo bike. The interactional ecologies of the two situations are quite different, because the robot is not, like the dog, an independent non-human agent, but part of an assemblage in which the operator controls global movements and the robot makes its own autonomous micro-adjustments.

Analysing how the VIP + dog assemblage navigates a pallet on the pavement

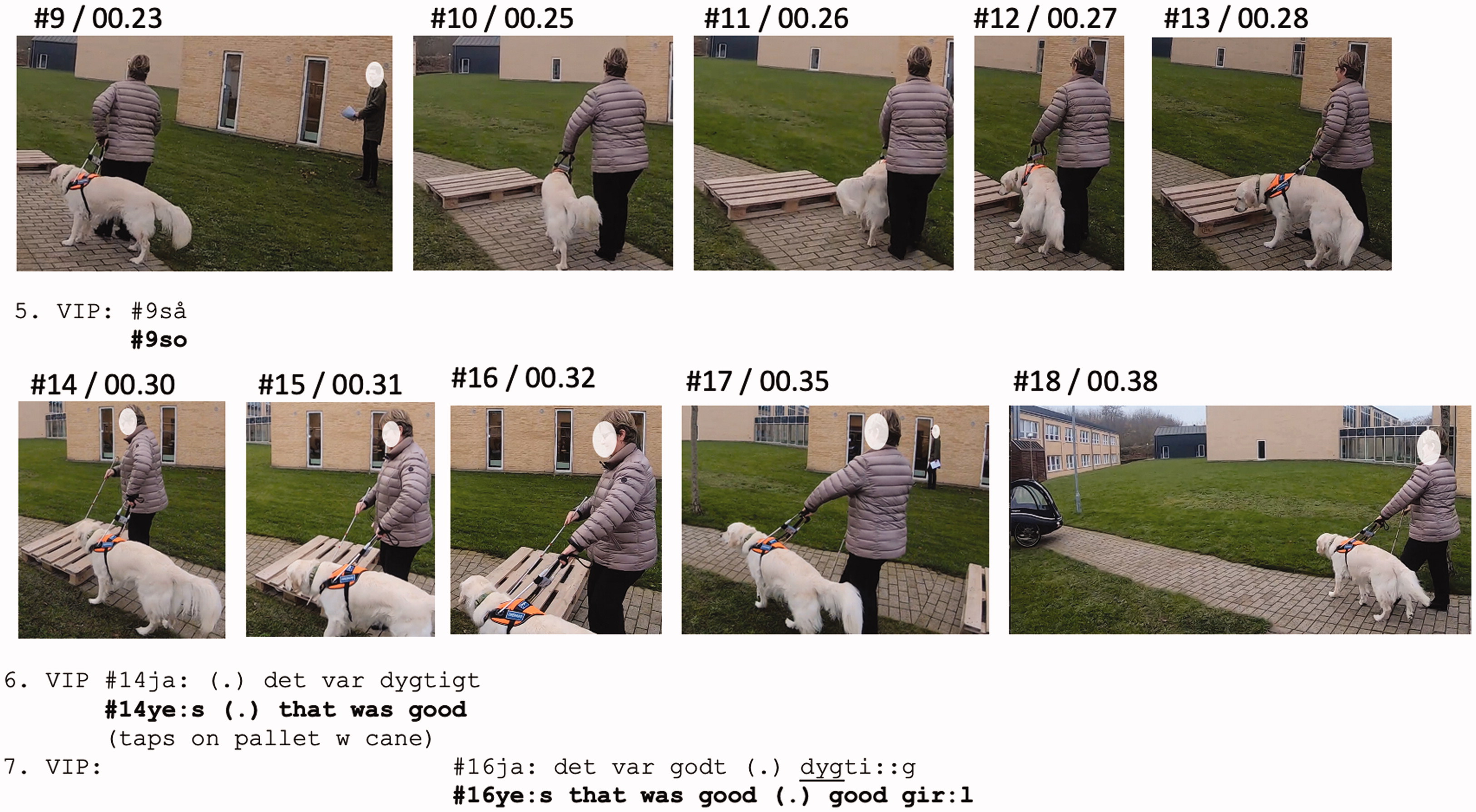

The first excerpt shows how the VIP and the dog walk over a pallet. The VIP knows there is a pallet somewhere on the pavement, and understands that she should pass it, or at least detect it. But she does not know exactly where it is. The dog is not trained to walk over pallets. Generally, guide dogs are trained to seek out the easiest way around an obstacle, rather than crossing over it. Before encountering the pallet, the VIP + dog assemblage has been walking for about 10 metres with no obstacles.

In line 5, the VIP produces a minimal verbal particle while moving her left steering arm from a bent, holding position to being extended forward (#9–10). The dog treats this multimodal gestalt as a signal to proceed with walking. While this is a situated action, it is also a known paired action; one that the dog has been trained to recognise (Guide Dogs for the Blind 2019). The trajectory of the dog’s body is oriented towards going around the obstacle on the right (#10), instead of over the top of it. The VIP first follows it with her bodily alignment (#11). However, as the dog is about to move right around the pallet, the VIP steps onto the grass with her right foot (#11) and responds by pulling the dog’s harness leftwards, placing her leg and knee close to the dog and gently pushing it back to the pavement (#11–12). The VIP is legally blind, and there are no signs of her perceiving the nearby obstacle. The dog immediately responds to the VIP’s bodily actions by stopping and turning first its head, and then its entire body, leftwards (#12–13).

While the dog treats the trajectory up and over the pallet as dispreferred (which is observable from the way it seeks to guide the VIP around the pallet instead), the VIP treats the trajectory away from the pavement and onto the grass as dispreferred. It is therefore observable that they have different preferred strategies for moving forward, and that this entails bodily negotiation in this interspecies assemblage. The VIP utilises not only the distributed perception provided by the dog and the haptic sensory feedback coming through the harness, but also the sensory input from her feet and the white cane, both of which touch the different kinds of substrate, thus creating a multisensory gestalt embedded within the interspecies assemblage. It is observable how the VIP, via this multisensory and distributed perception, recognises that the dog’s projected trajectory goes around the obstacle. The VIP senses the pallet with the cane in #13 only after she has steered the dog back to the pavement. After this, she compliments the dog. As the VIP + dog assemblage together encounters the pallet, the VIP reaches out and uses her white cane to tap the obstacle (#14–16), while offering affiliative encouragement to the dog for guiding her towards the pallet and enabling her to locate it: ‘ye:s (.) that was good ye:s that was good (.) good gir:l’ (l. 6–7). This is a compliment to the dog both for guiding the VIP towards the pallet and for attempting to go around it, i.e. detection and obstacle avoidance in the same sequence. Although the VIP presumably receives tactile and auditory sensory input about the shape of the pallet and the demarcation between the pavement and the grass area (#15), she nevertheless bodily and verbally accepts the new trajectory as a haptic sensation distributed by the dog through the mediating harness – that is, not to go up and over the pallet, but around it. 
Notice how in #15 the VIP holds the dog back by withdrawing her left steering arm, as she observably recognises the ‘off-track’ path away from the pavement – and simultaneously detects the edge of the pallet. But then, shortly afterwards, she accepts the trajectory with her bodily alignment and with positive encouragement for the dog (l. 7, #16–18).

This analysis therefore indicates that neither the dog alone nor the VIP alone manages to navigate around the obstacle. Instead, this action is a co-constructive accomplishment between the agents: one which constitutes them as a temporary assemblage, whereby perception of the outer world is distributed through the different agents’ various sensory and semiotic capacities, enabling them to be mobile as one assemblage. EM/CA research has consistently shown how actions carried out through interactions are designed and produced as co-constructed, even within a simple utterance (Goodwin 1979). Recent developments have shown that this is also true of interspecies interactions (Mondémé 2020). This short analysis has also shown just how detailed the co-constructive organisation of a simple obstacle-passing activity may be, and the extent to which it relies on multimodal and multisensorial communication in a distributed perceptual field (Due 2021a). The next example shows how the same obstacle is managed by the VIP + robodog + operator assemblage, which configures a quite different situated ecology.

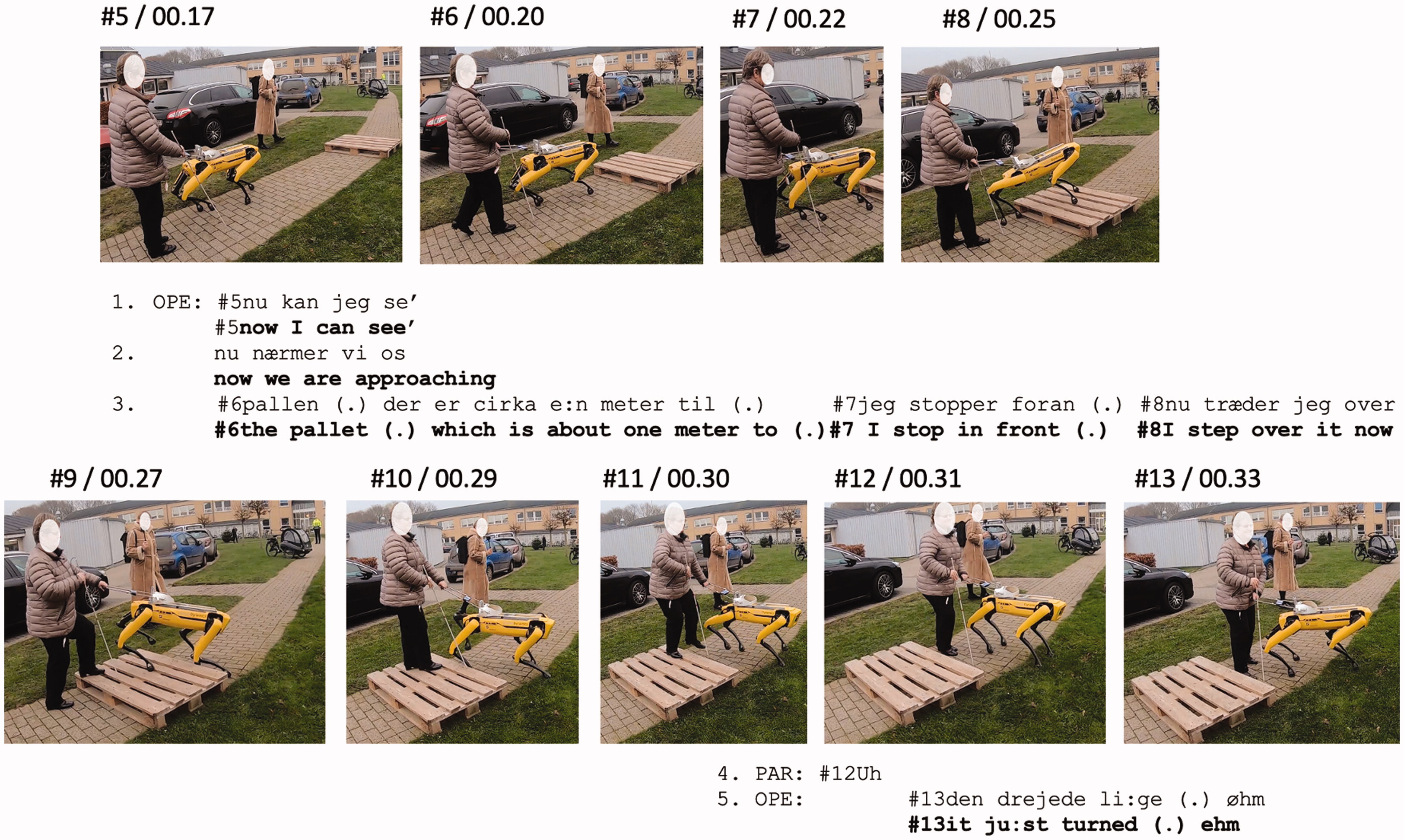

Analysing how the VIP + robodog + operator assemblage navigates a pallet on the pavement

Although the navigational route is the same one that the VIP has already walked with her guide dog, the interactional ecology is very different when navigating with the robodog. As mentioned above, the robodog is controlled by a human operator. He cannot see the scene directly, only as it is mediated through the cameras on the robot, but he is able to interact verbally with the VIP. As they approach the pallet, the operator describes what he can see: ‘now I can see we are approaching the pallet which is about one meter to (.) I stop in front (.) I step over it now’ (l. 1–3). While he is talking, the operator simultaneously steers the robodog straight towards, and onto, the pallet. It is noticeable that the VIP simply follows the movements of the robodog towards the pallet (#5–7). This is presumably accomplished based both on the pull felt in the harness and on the verbal comments, which are designed as ‘online commentaries’ (Heritage and Stivers 1999). The operator then slows down and stops the robodog, commenting on the obstacle’s spatial location (‘in front’, l. 3, #7), before continuing across the pallet.

This sequence seems uncomplicated, but the next part causes problems. While stepping up onto the pallet, the robodog ends up at a skewed angle (#8). As the harness is a solid structure, the VIP ‘needs’ to respond to, and follow, the positioning of the robodog, and so the VIP steps up onto the pallet in a similarly skewed position relative to the pavement. As she has walked this route before, the VIP presumably knows that she needs to go straight, but she still adjusts to the angle of the robodog (#9–10). Consequently, when she steps down, following the robodog, she moves sideways (#11). Although she presumably receives tactile feedback about her position via the white cane touching the edge of the pallet (#10), the robodog pulls so quickly that she cannot adjust or account for it in situ. Note that the robodog has no ability to sense haptically through the harness, and is therefore unable to sense any hesitation from the VIP. Within a second, she is pulled down from the pallet, sideways. This stepping down is consequently marked by an accountable ‘response cry’ (Goffman 1978) (‘UH’, l. 4), which frames the situation as problematic (Due and Bierring Lange 2018b). Although the operator can see through the robot’s cameras, and hence can guide accordingly, he cannot perceive the ‘total environment’, which includes the robodog’s own position in space. He is steering the robot forward, but the robot autonomously ends up in the turned or skewed position on the pallet, causing the operator to respond (in the next position) with an evaluative description: ‘it ju:st turned (.) ehm’ (l. 5). This turn functions as an account of both the skewed position and the VIP’s response cry.

Neither the VIP, the robodog, nor the operator accomplishes this mobile activity alone. They do so as a moving assemblage in the situation, based on the distributed perception from each of the agents. Compared to the example with the guide dog, it is noticeable that the robodog + operator pulls via the harness without any reciprocity in this kind of mediated haptic sociality. The fine-tuned responsiveness of the guide dog, which constantly monitors and reacts to the bodily and tactile signs from the VIP, is lacking in the robodog. This is visible when the VIP steps down from the pallet. However, although there is no reciprocity in the robot’s design, the operator is present with audio-visual access and responds in situ. Although the VIP cannot see the trajectory of the route, she still has a working vestibular system, which enables perception of her body in relation to gravity, movement, orientation in space and balance (Highstein, Fay, and Popper 2004). This, in combination with tactile and auditory sensory input from the white cane and her feet, means that she recognises that there is a problem, but does not (cannot) correct it in situ. This analysis demonstrates that the fine-tuned and detailed reciprocity, adjustment and other-monitoring achieved between the VIP and the guide dog is not matched by the rougher pulling of the robodog, nor by the responsiveness available from the operator. But it also shows that the VIP, the robodog and the operator are each able to distribute different forms of perception, based on their different sensory capacities. Taken together, this is what enables the emerging assemblage to be mobile.

Analysing how the VIP + dog assemblage navigates a parked bike on the pavement

Immediately after passing the pallet on the pavement, the route is straight for five to six metres along the pavement. Then they encounter a parked cargo bike. This excerpt starts at the moment the dog recognises the obstacle.

Three metres before encountering the bike parked on the pavement, the dog starts to orient towards it as an obstacle that it has to deal with. This is observable in the way it starts turning away from the pavement and towards the grass area (#19), probably to avoid a possible collision. The guide dog does not simply signal another trajectory, but carefully steps in front of the VIP, slowing down to signal that there is trouble ahead. The VIP probably senses this signalling: the bodily actions produced by the dog and transferred via tactile feedback from the harness, plus the dog touching her leg (#19). This is also observable in how she responds to the dog’s actions in the next position: she pulls the dog to the left, puts her white cane in front of her for detection purposes (#20), and then verbally treats the stopping as dispreferred, using a forward-projecting command: ‘walk forward (0.8)’ (l. 8). She produces this command while simultaneously starting to move forward, slightly ahead of the dog (#21). As she finishes the turn with the command ‘find way’ (l. 8), she taps on the pavement with the white cane to detect possible obstacles (#22), then extends the radius of the detecting cane by moving her arm forward in a semicircle (#23).

It is evident that the dog is responding to the bike as an obstacle ahead, and also that it becomes aware of this obstacle at an early stage, prompting the VIP to try to locate it using her white cane (#20–23). Responding to the command ‘find way’ (l. 8), the dog chooses to move away from the pavement onto the grass area in order to pass the bike (#24), but then immediately pulls back from the bike and moves in front of the VIP (#25), thereby visibly and bodily displaying to the VIP that it treats the obstacle as potentially dangerous. Because the dog is trained to recognise vehicles such as cars and bikes as objects that can move, it is presumably showing concern about the possible consequences of the bike’s mobility affordances in and through its careful approach to the object, while also using its own body as a ‘shield’ to protect the VIP (#25).

Again, the VIP treats this reaction from the dog in the next position in the same fashion as before (cf. #21–23). She does this sequentially, keeping the dog in a holding position with her withdrawn left arm (#26), while also moving her own body slightly forward and then extending her right arm, using the white cane to detect the area nearby (#27). The VIP responds interactionally to the dog’s signalling by trying to locate a possible obstacle in close proximity, but as there is no observable sign that she detects one, she upgrades her verbal command: ‘come then’ (l. 9).

The bodily position that the VIP takes in #26–28 displays an orientation towards a straight trajectory, one in which her feet remain on the border between the pavement and the grass. What is noticeable as this scene unfolds is that the dog continuously resists staying on the pavement while passing, and eventually succeeds in guiding the VIP around the bike by moving off the pavement. Following the command in line 9 (‘come then’), the VIP again scans the area with her white cane (#30), and in so doing she hits the bike (#31), which prompts her to produce a second movement command: ‘walk forward (0.4) come (click sound with mouth)’ (l.10). The dog responds by aligning its body and the direction of its head with the VIP’s body (#32). Then, as the dog moves forward around the bike, the VIP responds with the acknowledgment token ‘yes’ (l.10). It is noticeable in #32b that while the VIP projects a straight trajectory along the pavement, the dog starts moving around the bike. As the dog keeps its distance from the obstacle, the VIP ends up walking on the grass again (#33).

Although the VIP treats the grass as dispreferred compared to the pavement, she eventually accepts the trajectory communicated by the dog. Just as in the first example of dealing with the pallet, it is evident that the dog not only anticipates obstacles and attends to the organisational features of both the trajectory and the obstacles, but also engages in minutely detailed interaction and collaboration with the VIP, which enables adjustments to be made within tenths of a second. The navigation around the obstacle is accomplished in a semiotically rich field, with multisensory inputs and communication. The VIP relies on feedback from the dog via the tactility of the harness, which establishes a mediated haptic sociality, and on the dog’s bodily actions, including stepping in front of the VIP as a ‘shield’, while simultaneously communicating with the dog through bodily movements and tactile senses. These actions, and their inherently social reciprocity, are sensorily accessible to the VIP, and they form part of her anticipation of what lies ahead. She receives warnings about an upcoming obstacle as distributed perception, but does not place complete trust in the dog alone. In situations in which the dog signals an obstacle ahead, the VIP employs her white cane as a resource for establishing a multisensory phenomenal field in which perception can be distributed between the agents as one moving assemblage. The semiotic ecology of signs from the dog, from the VIP, and from sensory inputs via the white cane and the VIP’s feet (along with the more subtle vestibular and proprioceptive sense systems) unfolds as an assemblage gestalt that constitutes the resources for navigation in situ. Together, VIP and dog form an assemblage in movement, based on co-constructed actions embedded within a distributed perceptual field.
By comparison, this semiotic and interactional richness is not found in the case of the robodog + operator, as we will see in our final example.

Analysing how the VIP + robodog + operator assemblage navigates a bike parked on the pavement

This final excerpt deals with the same obstacle: the bike parked on the pavement. However, the way the VIP + robodog + operator assemblage recognises and moves around this obstacle differs substantially from the way the VIP + guide dog assemblage accomplished the same task. It is noticeable that the VIP carries the cane in a passive, non-detecting mode, with her right arm bent in the same position throughout the whole sequence of walking past the obstacle (#16–20). This technique is typically used when a VIP is guided by means other than tapping the cane from side to side. As we saw in the prior analysis, the guide dog displays an orientation towards the obstacle long before reaching the bike, exhibiting and communicating concern about its current position. However, the dog lacks contextual knowledge about the bike and its possible future movements. The robodog, controlled as it is by an operator who can see through the robot’s cameras, recognises the obstacle for what it really is: a bike that will not move, because there is no rider on it, and which cannot be walked over (unlike the pallet). Without slowing down – and thereby without displaying an orientation towards the obstacle as a serious source of potential trouble – the robodog continues straight ahead, but then pulls slightly right to walk past the bike (#17). What is particularly interesting, compared to the practice of walking with the guide dog, is that as the robodog makes an autonomously produced sharp turn to avoid the bike, the VIP, without hesitation, is pulled away from the pavement and onto the grass (#18). In #19, the VIP is seen walking on the grass, passively following the robodog’s lead, without any objection or any treatment of this trajectory as dispreferred. Shortly after the sharp turn back to the pavement (#19), the robodog + operator resumes a straight trajectory along the pavement (#20).

What is striking in this final example is how the robodog + operator establish assisted mobility by ‘pulling’ the VIP around the obstacle. The VIP tacitly (and rather passively) follows the robodog + operator’s lead without engaging in coordination, negotiation, or other forms of direct collaboration with the assemblage (e.g. about grass versus pavement). Nor does she observably orient towards other sensory inputs, e.g. from her feet or the cane. It is evident from this example that a straightforward trajectory with minimal divergence from the pavement is non-accountable in situ, even when it involves stepping onto a presumably dispreferred grass area. This is likely because the VIP trusts the operator’s judgements and his steering of the robodog, rather than the robodog alone. While this kind of robotic assemblage mobility is thus efficient in many ways, it also seems to reduce the agency and engagement of the VIP.

Discussion: Being mobile as an assemblage through distributed perception

Assisted mobility and disability mobility are part of the ‘microgeographies of everyday life’ (Cresswell Citation2011), which in this paper have been studied as examples of ‘micro-mobility’ (Fortunati and Taipale Citation2017) and ‘corporeal mobility’ (Urry Citation2007) by focusing on two types of ‘mobile formations’ (McIlvenny, Broth, and Haddington Citation2014). Research has shown that mobile opportunities are not equally allocated. People with impairments may have a harder time getting around, and, as theorised by e.g. Cresswell (Citation2010) and Sheller (Citation2016), there is always an underlying politics in mobility – or what Massey called the ‘power geometries’ (Massey Citation1994) of everyday life – because immobility can lead to social exclusion (cf. Hannam, Sheller, and Urry Citation2006). Obstacles on the pavement, like abandoned pallets and parked cargo bikes, are very ‘troublesome’ for visually impaired people (Due and Bierring Lange Citation2018b). Such obstacles are what Gleeson has termed ‘a critical manifestation, and cause, of social oppression because it reduces the ability of disabled people to fully participate in urban life’ (Gleeson Citation1996, 138). The current paper contributes to work on disability and mobilities (Goggin Citation2016) by showing the intricacies of non-human assistance. Assisted mobility using a guide dog is a well-known strategy by which VIPs overcome restricted mobility, and in this paper I have analysed precisely how such assisted mobility is accomplished in minute detail, as joint multimodal and multisensorial action in an assemblage. This has been contrasted with the use of a guiding robodog steered by a human operator. In the following discussion, I describe the general findings of this paper and the variations between the two types of assemblages with non-human agents.

The analysis shows overall that the VIP is not an isolated entity or individual, but momentarily assembled with a non-human agent, and that assisted mobility as such is a jointly co-constructed accomplishment. An assemblage is not a fixed and merged totality describable as a cyborg (Haraway Citation1991) or a hybrid (Callon and Law Citation1997), but rather a situated achievement in which each actor can be fully separated again (Due Citation2021b, Citation2022; Dant Citation2004). According to Deleuze and Guattari (Citation2004), assemblages consist of heterogeneous elements that function together momentarily, remaining fully heterogeneous while at the same time being connected in an emergent production. In this paper I have performed what Garfinkel calls an ‘ethnomethodological misreading’ (Eisenmann and Lynch Citation2021; Garfinkel Citation1991) of a concept, respecifying it as a situated, practical accomplishment, i.e. making it praxeological. Thus, the process of assembling the VIP + non-human agents and producing accountable actions was shown to attain, at the same time, its own independent ontological status in practical terms. The assemblage was very concretely tied to the connecting harness, and the emerging interactional ecology stretched along a material-discursive continuum (Deleuze and Guattari Citation2004) encompassing material obstacles and semiotic productions. This ‘territorialization’ can easily be ‘deterritorialized’ again, for example when the harness is removed and the VIP leaves the non-human agent, making the assemblages fluid and continually in flux (cf. Feely Citation2016; Monforte, Pérez-Samaniego, and Smith Citation2020).

In this paper I have focused on the harness as the enabler of this situated assemblage. We have seen how the VIP achieved a form of mediated haptic sociality (Due Citation2021c) in which she oriented towards sensory information transferred kinesthetically through the harness and used it as a tool for guiding and steering (cf. Michalko Citation1999). However, the analysis showed substantial differences between the non-human agents in this respect. The harness, together with other multimodal actions (in the form of using body parts and verbal commands for steering), configured the VIP + guide dog as an assemblage with observable reciprocity – a form also described as interspecies intercorporeality (Due Citation2021c) because of its inter-bodily sensitivity. The same was not the case with the VIP + robodog + operator, where the VIP was instead pulled by the harness, and only minimal reciprocity was observed, in the way the operator responded to a response cry by the VIP. Whereas the dog’s deviations were interactionally managed as subtle multimodal negotiations moment by moment, producing an observable orientation towards a reciprocity of perspectives (Schutz Citation1953), the robodog + operator’s deviation was shown to occasion an audible response cry with no interactional adjustment.

The dog resisted crossing the pallet, and initially struggled to walk past the bike, too. Obviously, it did not know what the pallet or the bike ‘really’ was, but it observably oriented to both of them not as uncomplicated obstacles but as potentially troublesome ones. Consequently, it diverted from the pavement to the grass in order to solve the navigational problem. The robodog + operator, on the other hand, displayed mundane, taken-for-granted knowledge (Garfinkel Citation1967) about the affordances of pallets and bikes. The operator could easily see, via the robodog’s cameras, that the bike was static, with no rider on it, and therefore unlikely to make sudden, unprojectable movements. The human operator was therefore building on indexically relevant knowledge about the obstacles in these specific situations. We could argue that the dog made more of the environment relevant for assisted mobility than was necessarily seen from a human perspective. However, the robodog caused the most concern due to its problematic encounter with the pallet. This was where the VIP was pulled roughly and completely away from the pavement and onto the grass. The VIP + guide dog assemblage oriented towards the easiest problem-solving route while adhering to an organisational form in which the pavement seemed preferable to the grass, thereby enabling micro-adjustments along the way. The robodog + operator, by contrast, oriented towards the larger goal of carrying out the activity efficiently, regardless of the surface involved. While the VIP interacted with the guide dog to determine the preferred route, engaging in rich, semiotic, and multisensory actions, she was much more passive when following the robodog, which was shown to be unable to adjust to sensations through its harness.

Assisted mobility is, as illustrated, a joint activity based on co-constructed actions within an emerging assemblage, which in turn are based on distributed perception from very different kinds of agents acting with varying degrees of agency in a phenomenal field. The ‘phenomenal field’ (Garfinkel Citation2002; Garfinkel and Livingston Citation2003; Merleau-Ponty Citation2002) is a ‘praxeological achievement’ (Eisenmann and Lynch Citation2021). In this paper I have studied it as a set of embodied, practical actions achieved by different mobile assemblages that come together momentarily in order to accomplish mobile activities. I have shown, in accordance with Garfinkel’s work on the phenomenal field (cf. Fele Citation2008), how the perception of specific features within the mobile environment occurs through concrete, practical, sensorial actions which are distributed and made part of a particular organisation of the world, while at the same time this world (its obstacles) imposes constraints on the production of action. The organising principle for being mobile is not in the head of the VIP alone, but in a situated, demonstrable and observable interaction with the environment while navigating as an assemblage. The accomplishment of walking together, with either a guide dog or a robodog + operator, is therefore based on observable, practical actions related to the ‘praxeology of perception’ (Coulter and Parsons Citation1990). Both the guide dog and the robodog were shown to be sense-able and action-able agents, but in very different ways. Overall, both agents were able to sense, produce actions and communicate sensory information in situated contexts, thus contributing to the co-construction of perception. However, whereas the dog, as a living creature, was held accountable for divergences, the robot on its own was not; accountability was instead attributed to the robodog + operator as an assemblage.
Although the robodog produced micro-actions autonomously (such as the skewed positioning on the pallet), based on its sensory capacities, and although these actions were communicated as haptic sensations through the harness, thus displaying its situated agency and bearings in relation to human life, the overall responsibility was placed on the assemblage.

Thus, to answer the initial question – could a guide dog be replaced by a robot dog? – the simple answer is ‘no’, because the emergent production of the different assemblages is indeed a substantial difference that makes a difference. Future studies on disability mobility could explore in more detail the ‘power geometries’ (Massey Citation1994) that might be addressed by turning away from a solipsistic treatment of the individual and towards an understanding of emergent, situated assemblages in which the work of perception is distributed, and thus contribute to the program set out by Hannam, Sheller, and Urry (Citation2006) to reduce social exclusion.

Acknowledgments

This research was conducted as part of the research project BlindTech. I want to thank Rikke Nielsen, Birgit Christensen, and the team at the Teknologisk Institut for help with data collection.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Albert, Saul, William Housley, and Elizabeth Stokoe. 2019. “In Case of Emergency, Order Pizza: An Urgent Case of Action Formation and Recognition.” In Proceedings of the 1st International Conference on Conversational User Interfaces, 1–2. CUI ’19, New York, NY, USA: Association for Computing Machinery.

- Auer, Peter. 2005. “Projection in Interaction and Projection in Grammar.” Text – Interdisciplinary Journal for the Study of Discourse 25 (1): 7–36. doi:10.1515/text.2005.25.1.7.

- Callon, Michel, and John Law. 1997. “After the Individual in Society: Lessons on Collectivity from Science, Technology and Society.” Cahiers Canadiens de Sociologie [Canadian Journal of Sociology] 22 (2): 165–182. doi:10.2307/3341747.

- Cekaite, Asta. 2016. “Touch as Social Control: Haptic Organization of Attention in Adult–Child Interactions.” Journal of Pragmatics 92 (January): 30–42. doi:10.1016/j.pragma.2015.11.003.

- Cekaite, Asta. 2020. “Human-to-Human Touch in Institutional Settings: A Commentary.” Social Interaction. Video-Based Studies of Human Sociality 3 (1). doi:10.7146/si.v3i1.120246.

- Coulter, Jeff, and E. D. Parsons. 1990. “The Praxiology of Perception: Visual Orientations and Practical Action.” Inquiry 33 (3): 251–272. doi:10.1080/00201749008602223.

- Cresswell, Tim. 2010. “Towards a Politics of Mobility.” Environment and Planning D: Society and Space 28 (1): 17–31. doi:10.1068/d11407.

- Cresswell, Tim. 2011. “Mobilities I: Catching Up.” Progress in Human Geography 35 (4): 550–558. doi:10.1177/0309132510383348.

- Dant, Tim. 2004. “The Driver-Car.” Theory, Culture & Society 21 (4–5): 61–79. doi:10.1177/0263276404046061.

- Deleuze, G., and F. Guattari. 2004. A Thousand Plateaus: Capitalism and Schizophrenia. London; New York: Continuum.

- Due, Brian L. 2019. “Laughing at the Robot: Incongruent Robot Actions as Laughables.” Mensch Und Computer 2019 - Workshopband. Bonn: Gesellschaft für Informatik e.V. doi:10.18420/muc2019-ws-640.

- Due, Brian L. 2021a. “Distributed Perception: Co-Operation between Sense-Able, Actionable, and Accountable Semiotic Agents.” Symbolic Interaction 44 (1): 134–162. doi:10.1002/symb.538.

- Due, Brian L. 2021b. “RoboDoc: Semiotic Resources for Achieving Face-to-Screenface Formation with a Telepresence Robot.” Semiotica 2021 (238): 253–278. doi:10.1515/sem-2018-0148.

- Due, Brian L. 2021c. “Interspecies Intercorporeality and Mediated Haptic Sociality: Distributing Perception with a Guide Dog.” Visual Studies 0 (0): 1–14. doi:10.1080/1472586X.2021.1951620.

- Due, Brian L. 2022. “The Haecceity of Assembling by Distributing Perception.” In Re-Configuring Human-Robot Interaction, edited by Andreas Bischof, Eva Hornecker, Antonia Lina Krummheuer, and Matthias Rehm. Hokkaido, Japan: Originally Sapporo.

- Due, Brian L., and Simon Bierring Lange. 2018a. “Semiotic Resources for Navigation: A Video Ethnographic Study of Blind People’s Uses of the White Cane and a Guide Dog for Navigating in Urban Areas.” Semiotica 2018 (222): 287–312. doi:10.1515/sem-2016-0196.

- Due, Brian L., and Simon Bierring Lange. 2018a. “The Moses Effect: The Spatial Hierarchy and Joint Accomplishment of a Blind Person Navigating.” Space and Culture 21 (2): 129–144. doi:10.1177/1206331217734541.

- Due, Brian L., and Simon Bierring Lange. 2018b. “Troublesome Objects: Unpacking Ocular-Centrism in Urban Environments by Studying Blind Navigation Using Video Ethnography and Ethnomethodology.” Sociological Research Online 24 (4): 475–495. doi:10.1177/1360780418811963.

- Edwards, Derek. 1997. Discourse and Cognition. SAGE.

- Eisenmann, Clemens, and Michael Lynch. 2021. “Introduction to Harold Garfinkel’s Ethnomethodological ‘Misreading’ of Aron Gurwitsch on the Phenomenal Field.” Human Studies 44 (1): 1–17. doi:10.1007/s10746-020-09564-1.

- Estrada, Judy. 2016. Visually Impaired: Assistive Technologies, Challenges and Coping Strategies. New York: Nova Science Publishers, Incorporated.

- Feely, Michael. 2016. “Disability Studies after the Ontological Turn: A Return to the Material World and Material Bodies without a Return to Essentialism.” Disability & Society 31 (7): 863–883. doi:10.1080/09687599.2016.1208603.

- Fele, Giolo. 2008. “The Phenomenal Field: Ethnomethodological Perspectives on Collective Phenomena.” Human Studies 31 (3): 299–322. doi:10.1007/s10746-008-9099-4.

- Folmer, Eelke. 2015. “Exploring the Use of an Aerial Robot to Guide Blind Runners.” ACM SIGACCESS Accessibility and Computing 112 (112): 3–7. doi:10.1145/2809915.2809916.

- Fortunati, Leopoldina, and Sakari Taipale. 2017. “Mobilities and the Network of Personal Technologies: Refining the Understanding of Mobility Structure.” Telematics and Informatics 34 (2): 560–568. doi:10.1016/j.tele.2016.09.011.

- Freedman, Adam H., and Robert K. Wayne. 2017. “Deciphering the Origin of Dogs: From Fossils to Genomes.” Annual Review of Animal Biosciences 5 (February): 281–307. doi:10.1146/annurev-animal-022114-110937.

- Garfinkel, Harold. 1952. The Perception of the Other: A Study in Social Order. Cambridge, Mass: Harvard University.

- Garfinkel, Harold. 1967. Studies in Ethnomethodology. Englewood Cliffs, N. J.: Prentice Hall.

- Garfinkel, Harold. 1991. “Respecification: Evidence for Locally Produced, Naturally Accountable Phenomena of Order, Logic, Reason, Meaning, Methods, Etc. in and of the Essential Haecceity of Immortal Ordinary Society (I) – an Announcement of Studies.” In Ethnomethodology and the Human Sciences, edited by Graham Button, 10–19. Cambridge: Cambridge University Press.

- Garfinkel, Harold. 2002. Ethnomethodology’s Program: Working out Durkheim’s Aphorism. Lanham MD: Rowman & Littlefield Publishers.

- Garfinkel, Harold, and Eric Livingston. 2003. “Phenomenal Field Properties of Order in Formatted Queues and Their Neglected Standing in the Current Situation of Inquiry.” Visual Studies 18 (1): 21–28. doi:10.1080/147258603200010029.

- Gehle, Raphaela, Karola Pitsch, Timo Dankert, and Sebastian Wrede. 2017. “How to Open an Interaction between Robot and Museum Visitor?: Strategies to Establish a Focused Encounter in HRI.” In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, 187–95, HRI ’17, New York, NY, USA: ACM. doi:10.1145/2909824.3020219.

- Gibson, James J. 1979. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin.

- Gleeson, B. J. 1996. “A Geography for Disabled People?” Transactions of the Institute of British Geographers 21 (2): 387–396. doi:10.2307/622488.

- Goffman, Erving. 1974. Frame Analysis: An Essay on the Organization of Experience. Cambridge, MA: Harvard University Press.

- Goffman, Erving. 1978. “Response Cries.” Language 54 (4): 787–815. doi:10.2307/413235.

- Goggin, Gerard. 2016. “Disability and Mobilities: Evening up Social Futures.” Mobilities 11 (4): 533–541. doi:10.1080/17450101.2016.1211821.

- Goode, David. 2007. Playing with My Dog Katie: An Ethnomethodological Study of Dog-Human Interaction. West Lafayette, Indiana: Purdue University Press.

- Goodwin, Charles. 1979. “The Interactive Construction of a Sentence in Natural Conversation.” In Everyday Language: Studies in Ethnomethodology, edited by G. Psathas, 97–121. New York: Irvington Publishers.

- Goodwin, Charles. 2002. “Time in Action.” Current Anthropology 43 (S4): S19–S35. doi:10.1086/339566.

- Goodwin, Charles. 2007. “Participation, Stance and Affect in the Organization of Activities.” Discourse & Society 18 (1): 53–74. doi:10.1177/0957926507069457.

- Guerreiro, João, Daisuke Sato, Saki Asakawa, Huixu Dong, Kris M. Kitani, and Chieko Asakawa. 2019. “CaBot: Designing and Evaluating an Autonomous Navigation Robot for Blind People.” In The 21st International ACM SIGACCESS Conference on Computers and Accessibility, 68–82, ASSETS ’19, New York, NY, USA: Association for Computing Machinery.

- Guide Dogs for the Blind. 2019. “Training Phase Descriptions.” https://www.guidedogs.com/uploads/files/Puppy-Raising-Manual/Training-Phase-Descriptions.pdf.

- Hannam, Kevin, Mimi Sheller, and John Urry. 2006. “Editorial: Mobilities, Immobilities and Moorings.” Mobilities 1 (1): 1–22. doi:10.1080/17450100500489189.

- Haraway, Donna. 1991. “A Cyborg Manifesto. Science, Technology, and Socialist-Feminism in the Late Twentieth Century.” In Simians, Cyborgs and Women: The Reinvention of Nature, 149–181. New York: Routledge.

- Heritage, J., and T. Stivers. 1999. “Online Commentary in Acute Medical Visits: A Method of Shaping Patient Expectations.” Social Science & Medicine 49 (11): 1501–1517. doi:10.1016/s0277-9536(99)00219-1.

- Highstein, Stephen M., Richard R. Fay, and Arthur N. Popper. 2004. The Vestibular System. New York: Springer Science & Business Media.

- Iwatsuka, K., K. Yamamoto, and K. Kato. 2004. “Development of a Guide Dog System for the Blind People with Character Recognition Ability.” In Proceedings of the 17th International Conference on Pattern Recognition, 2004, ICPR 2004, Vol.1, 453–456.

- Jefferson, Gail. 2004. “Glossary of Transcript Symbols with an Introduction.” In Conversation Analysis: Studies from the First Generation, edited by Gene H. Lerner, 13–31. Amsterdam: John Benjamins Publishing Co.

- Kendrick, Kobin H., Penelope Brown, Mark Dingemanse, Simeon Floyd, Sonja Gipper, Kaoru Hayano, Elliott Hoey, et al. 2020. “Sequence Organization: A Universal Infrastructure for Social Action.” Journal of Pragmatics 168 (October): 119–138. doi:10.1016/j.pragma.2020.06.009.

- Kerepesi, A., E. Kubinyi, G. K. Jonsson, M. S. Magnusson, and Á. Miklósi. 2006. “Behavioural Comparison of Human-Animal (Dog) and Human-Robot (AIBO) Interactions.” Behavioural Processes 73 (1): 92–99. doi:10.1016/j.beproc.2006.04.001.

- Knorr-Cetina, Karin. 2009. From Pipes to Scopes. The Flow Architecture of Financial Markets. Konstant. Bibliothek der Universität Konstanz.

- Konok, Veronica, Beta Korcsok, Ádám Miklósi, and Márta Gácsi. 2018. “Should We Love Robots? – the Most Liked Qualities of Companion Dogs and How They Can Be Implemented in Social Robots.” Computers in Human Behavior 80: 132–142. doi:10.1016/j.chb.2017.11.002.

- Kreplak, Yaël, and Chloé Mondémé. 2014. “Artworks as Touchable Objects.” In Interacting with Objects: Language, Materiality, and Social Activity, edited by Maurice Nevile, Pentti Haddington, Trine Heinemann, and Mirka Rauniomaa, 295–318. Amsterdam; Philadelphia: John Benjamins Publishing.

- Krueger, F., K. C. Mitchell, G. Deshpande, and J. S. Katz. 2021. “Human–Dog Relationships as a Working Framework for Exploring Human–Robot Attachment: A Multidisciplinary Review.” Animal Cognition 24 (2): 371–385. doi:10.1007/s10071-021-01472-w.

- Laurier, Eric, Barry Brown, and Moira McGregor. 2016. “Mediated Pedestrian Mobility: Walking and the Map App.” Mobilities 11 (1): 117–134. doi:10.1080/17450101.2015.1099900.

- Laurier, Eric, Ramia Maze, and Johan Lundin. 2006. “Putting the Dog Back in the Park: Animal and Human Mind-in-Action.” Mind, Culture, and Activity 13 (1): 2–24. doi:10.1207/s15327884mca1301_2.

- Lehn, Dirk vom. 2010. “Discovering ‘Experience-Ables’: Socially Including Visually Impaired People in Art Museums.” Journal of Marketing Management 26 (7–8): 749–769. doi:10.1080/02672571003780155.

- Licoppe, Christian. 2016. “Mobilities and Urban Encounters in Public Places in the Age of Locative Media. Seams, Folds, and Encounters with ‘Pseudonymous Strangers.’” Mobilities 11 (1): 99–116. doi:10.1080/17450101.2015.1097035.

- Lloyd, Mike. 2017. “On the Way to Cycle Rage: Disputed Mobile Formations.” Mobilities 12 (3): 384–404. doi:10.1080/17450101.2015.1096031.

- Lloyd, Mike. 2019. “The Non-Looks of the Mobile World: A Video-Based Study of Interactional Adaptation in Cycle-Lanes.” Mobilities 14 (4): 500–523. doi:10.1080/17450101.2019.1571721.

- Manduchi, Roberto, and Sri Kurniawan. 2018. Assistive Technology for Blindness and Low Vision. Boca Raton: CRC Press.

- Massey, Doreen. 1994. “Power-Geometry and a Progressive Sense of Place.” In Travellers’ Tales: Narratives of Home and Displacement, edited by G. Robertson, M. Mash, L. Tickner, J. Bird, B. Curtis, and T. Putnam. London: Routledge.

- McIlvenny, Paul. 2015. “The Joy of Biking Together: Sharing Everyday Experiences of Vélomobility.” Mobilities 10 (1): 55–82. doi:10.1080/17450101.2013.844950.

- McIlvenny, Paul, Mathias Broth, and Pentti Haddington. 2014. “Moving Together: Mobile Formations in Interaction.” Space and Culture 17 (2): 104–106. doi:10.1177/1206331213508679.

- Merleau-Ponty, Maurice. 2002. Phenomenology of Perception. London; New York: Routledge.

- Michalko, Rod. 1999. The Two-in-one: Walking with Smokie, Walking with Blindness. Philadelphia: Temple University Press.

- Mondada, Lorenza. 2006. “Participants’ Online Analysis and Multimodal Practices: Projecting the End of the Turn and the Closing of the Sequence.” Discourse Studies 8 (1): 117–129. doi:10.1177/1461445606059561.

- Mondémé, Chloé. 2011a. “Animal as Subject Matter for Social Sciences: When Linguistics Addresses the Issue of a Dog’s ‘Speakership.’” In Non-Humans in Social Science: Animals, Spaces, Things, edited by Petr Gibas, Karolína Pauknerová, and Marco Stella, 87–105. Červený Kostelec: Pavel Mervart.

- Mondémé, Chloé. 2011b. “Dog-Human Sociality as Mutual Orientation.” https://portal.findresearcher.sdu.dk/en/publications/dog-human-sociality-as-mutual-orientation.

- Mondémé, Chloé. 2020. La Socialité Interspécifique: Une Analyse Multimodale Des Interactions Homme-Chien. Limoges: Lambert-Lucas.

- Monforte, Javier, Víctor Pérez-Samaniego, and Brett Smith. 2020. “Traveling Material↔Semiotic Environments of Disability, Rehabilitation, and Physical Activity.” Qualitative Health Research 30 (8): 1249–1261. doi:10.1177/1049732318820520.

- Morel, Julien, and Marc Relieu. 2011. “Locating Mobility in Orientation Sequences.” Nottingham French Studies 50 (2): 94–113. doi:10.3366/nfs.2011-2.005.

- Nishizaka, Aug. 2020. “Multi-Sensory Perception during Palpation in Japanese Midwifery Practice.” Social Interaction. Video-Based Studies of Human Sociality 3 (1). doi:10.7146/si.v3i1.120256.

- Norouzi, Nahal, Kangsoo Kim, Myungho Lee, Ryan Schubert, Austin Erickson, Jeremy Bailenson, Gerd Bruder, and Greg Welch. 2019. “Walking Your Virtual Dog: Analysis of Awareness and Proxemics with Simulated Support Animals in Augmented Reality.” 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 157–168.

- Ochs, E. 1979. “Transcription as Theory.” In Developmental Pragmatics, edited by E. Ochs and B. Schieffelin. New York, NY: Academic Press.

- Pelikan, Hannah R. M., and Mathias Broth. 2016. “Why That Nao?: How Humans Adapt to a Conventional Humanoid Robot in Taking Turns-at-Talk.” In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, 4921–32. CHI’16, New York, NY, USA: ACM.

- Pitsch, Karola. 2016. “Limits and Opportunities for Mathematizing Communicational Conduct for Social Robotics in the Real World? Toward Enabling a Robot to Make Use of the Human’s Competences.” AI & SOCIETY 31 (4): 587–593. doi:10.1007/s00146-015-0629-0.

- Psathas, George. 1976. “Mobility, Orientation, and Navigation: Conceptual and Theoretical Considerations.” Journal of Visual Impairment & Blindness 70 (9): 385–391. doi:10.1177/0145482X7607000904.

- Psathas, George. 1992. “The Study of Extended Sequences: The Case of the Garden Lesson.” In Text in Context: Contributions to Ethnomethodology, edited by Graham Watson and Robert M. Seiler, 99–122. Newbury Park: SAGE.

- Quéré, Louis, and Marc Relieu. 2001. “Modes de Locomotion et Inscription Spatiale Des Inégalités. Les Déplacements Des Personnes Atteintes de Handicaps Visuels et Moteurs Dans l’espace Public.” Convention Ecole des Hautes Etudes en Sciences Sociales/Ministère de l’équipement, du transport et du logement-Direction générale de l’urbanisme, de l’habitat et de la construction. Paris, Centre D'etude Des Mouvements Sociaux.

- Rawls, Anne Warfield, Kevin A. Whitehead, and Waverly Duck, eds. 2020. Black Lives Matter – Ethnomethodological and Conversation Analytic Studies of Race and Systemic Racism in Everyday Interaction. A Free Book in Coordination with Our Series Directions in Ethnomethodology and Conversation Analysis. Routledge.

- Relieu, Marc. 1994. “Les Catégories Dans L’action. L’apprentissage Des Traversées de Rue Par Des Non-Voyants [Categories in Action. Blind Persons Learning to Cross the Street].” Raisons Pratiques. L’enquête Sur Les Catégories 5: 185–218.

- Robillard, Albert B. 1999. Meaning of a Disability: The Lived Experience of Paralysis. Philadelphia: Temple University Press.

- Rossano, Federico. 2013. “Sequence Organization and Timing of Bonobo Mother-Infant Interactions.” Interaction Studies. Social Behaviour and Communication in Biological and Artificial Systems 14 (2): 160–189. doi:10.1075/is.14.2.02ros.

- Sacks, Harvey L., Emmanuel A. Schegloff, and Gail Jefferson. 1974. “A Simplest Systematics for the Organization of Turn-Taking for Conversation.” Language 50 (4): 696–735. doi:10.1353/lan.1974.0010.

- Schegloff, Emmanuel A. 1984. “On Some Gestures’ Relation to Talk.” In Structures of Social Action, 266–296. Cambridge: Cambridge University Press.

- Schegloff, Emmanuel A. 2007. Sequence Organization in Interaction: A Primer in Conversation Analysis. Cambridge: Cambridge University Press.

- Schutz, Alfred. 1953. “Common-Sense and Scientific Interpretation of Human Action.” Philosophy and Phenomenological Research 14 (1): 1–38. doi:10.2307/2104013.

- Sheller, Mimi. 2016. “Uneven Mobility Futures: A Foucauldian Approach.” Mobilities 11 (1): 15–31. doi:10.1080/17450101.2015.1097038.

- Sheller, Mimi, and John Urry. 2006. “The New Mobilities Paradigm.” Environment and Planning A: Economy and Space 38 (2): 207–226. doi:10.1068/a37268.

- Streeck, Jürgen. 1995. “On Projection.” In Social Intelligence and Interaction, edited by E. Goody, 87–110. Cambridge, England: Cambridge University Press.

- Streeck, Jürgen, and J. S. Jordan. 2009. “Projection and Anticipation: The Forward-Looking Nature of Embodied Communication.” Discourse Processes 46 (2–3): 93–102. doi:10.1080/01638530902728777.

- Tachi, S., K. Tanie, K. Komoriya, Y. Hosoda, and M. Abe. 1981. “Guide Dog Robot—Its Basic Plan and Some Experiments with Meldog Mark I.” Mechanism and Machine Theory 16 (1): 21–29. doi:10.1016/0094-114X(81)90046-X.

- Urry, John. 2007. Mobilities. Cambridge: Polity.

- Yamazaki, Akiko, Keiichi Yamazaki, Yusuke Arano, Yosuke Saito, Emi Iiyama, Hisato Fukuda, Yoshinori Kobayashi, and Yoshinori Kuno. 2019. “Interacting with Wheelchair Mounted Navigator Robot.” doi:10.18420/muc2019-ws-651.