Abstract

Purpose: Eye-gaze technology offers professionals a range of feedback tools, but it is not well understood how these are used to support decision-making or how professionals understand their purpose and function. This paper explores how professionals use a variety of feedback tools and provides commentary on their current use and ideas for future tool development.

Methods and Materials: The study adopted a focus group methodology with two groups of professional participants: those involved in the assessment and provision of eye-gaze technology (n = 6) and those who interact with individuals using eye-gaze technology on an ongoing basis (n = 5). Template analysis was used to provide qualitative insight into the research questions.

Results: Professionals highlighted several issues with existing tools and gave suggestions on how these could be made better. It is generally felt that existing tools highlight the existence of problems but offer little in the way of solutions or suggestions. Some differences of opinion related to professional perspective were highlighted. Questions about automating certain processes were raised by both groups.

Conclusions: Discussion highlighted the need for different levels of feedback for users and professionals. Professionals agreed that current tools are useful to identify problems but do not offer insight into potential solutions. Some tools are being used to draw inferences about vision and cognition which are not supported by existing literature. New tools may be needed to better meet the needs of professionals and an increased understanding of how existing tools function may support such development.

IMPLICATIONS FOR REHABILITATION

Professionals sometimes make use of feedback tools to infer the cognitive and/or visual abilities of users, although the tools are not designed or validated for these purposes, and the existing literature does not support this.

Some eye-gaze feedback tools are perceived as a “black box”, leaving professionals uncertain as to how to usefully interpret and apply the outputs.

There is an opportunity to improve tools that provide feedback on how well an eye-gaze system is working or how effectively a user can interact with this technology.

Professionals identified that tools could be better at offering potential solutions, rather than simply identifying the existence of problems.

Background

Eye-gaze technology refers to a method of accessing and operating computers or other assistive technology (AT) devices using the direction and rest of a user’s gaze as an input method. Assistive eye-gaze technology (hereafter: eye-gaze technology) can be used to interact with computers by users with severe motor disorders or movement limitations resulting from conditions including cerebral palsy and motor neuron disease [Citation1,Citation2]. Eye-gaze technology continuously monitors the user’s gaze point, the point at which their line of sight intersects with the display. It uses this data to control a computer interface, such as by translating gaze data into cursor movement. Thus, eye-gaze can be used as a substitute for a conventional mouse, keyboard, or touchscreen for those whose movements or level of accurate control impact or prohibit conventional use. Using eye-gaze technology, users can access a full range of mainstream and AT functions, including voice output communication software, computer control, gaming, and control of external devices, such as environmental control systems and smart home devices [Citation3]. As such, eye-gaze has the potential to increase the participation of both children and adults, some of whom may have previously not had a reliable method of accessing a computer [Citation4].

It is important to distinguish at this point between eye-gaze and eye-tracking technologies; the latter refers to a passive technology that records data on a variety of eye movements and ocular features but does not enable control [Citation5]. Since eye tracking is primarily used in research contexts and its use with disabled participants is rare [Citation6], this technology is outside the scope of discussion in this paper.

Assessment and use of eye-gaze technology

Despite the enabling potential of eye-gaze technology, research into its use remains at an early stage [Citation7]. Few studies have focused on the assessment of individuals for eye-gaze technology. In recent years, Karlsson and colleagues [Citation8] have proposed a set of clinical guidelines for decision-making around this technology which were drawn from stakeholder consultation with users, support workers, professionals, and others working with the technology. These guidelines bring to the fore the multidisciplinary nature of eye-gaze assessment, with the range of professionals involved likely to depend on the function for which the technology is intended. In addition to the user and their family, the core team is likely to include professionals from occupational therapy, specialist clinical technical professions, educators, and speech and language therapy [Citation9].

Assessment for eye-gaze technology in the UK typically involves a range of professionals similar to those proposed in Karlsson’s guidelines. This is due in part to the creation in 2014 of a network of centrally commissioned Specialist Services for augmentative and alternative communication (AAC) [Citation10]. Whilst these services are commissioned for the assessment and provision of high-tech AAC (including eye-gaze), the daily support of such technology frequently falls to local team members. Anecdotally, this is often led by a speech and language therapist, and the response rate in the above study would suggest this is not uncommon, with this profession being the largest group of responders [Citation8].

Included within these guidelines is the suggestion that professionals working with eye-gaze should have an established understanding of the different hardware and software options and capabilities available to support users, but professionals often report difficulties in accessing reliable information about the technology and its use [Citation11].

Feedback and feedback tools

Since the entry of eye-gaze into the AT market [Citation12], both eye-gaze technology hardware and software have developed significantly, with reduced costs and a proliferation of different hardware and software interfaces now available on the market. Hardware devices (in the form of eye-gaze cameras) have been released by different manufacturers, with each device being accompanied by manufacturer-specific software that allows configuration of various aspects of control and customisation of the user interface, for example, changing the cursor or “selection indicator” that lets the user know that they are targeting an item for selection [Citation13]. In the absence of any standardisation of these software packages or hardware components, manufacturers of specialist AT software often simplify this situation by providing a single control panel for eye-gaze access, allowing professionals and users to set parameters (such as dwell time, selection indicators) within their software using a consistent interface.

Eye-gaze cameras and their accompanying software can offer a range of feedback on aspects such as a user’s positioning relative to the camera, the accuracy of their gaze point, or the reliability of their calibrations. The algorithms used within all assistive eye-gaze technology are proprietary and this also extends to details of how feedback is generated. These algorithms, including tracking algorithms and methods of calibration, are typically considered by manufacturers to be commercially sensitive and are therefore undisclosed.

Eye-gaze technology developers provide professionals with a variety of different feedback tools to inform how systems are configured or used. Feedback tools are designed to give users and professionals insight into key operational aspects of the device (such as the position of the user and the quality of their calibration) or into the users’ performance in tasks (such as a “heat map”, which is a summary representation of where the users’ gaze point has been registered most often). In practice, professionals rely on the output of a variety of feedback tools for decision-making; however, these outputs are typically the outcome of undisclosed algorithmic calculations.

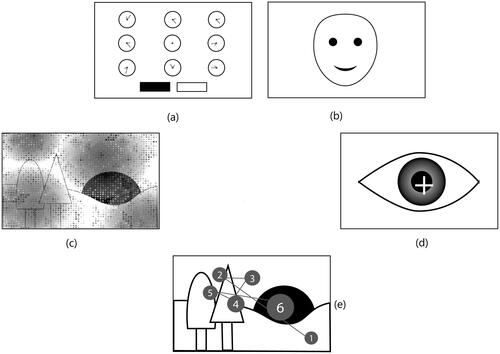

For this study, the authors selected five feedback tools that are commonly featured in eye-gaze technology software, illustrated in Figure 1:

Figure 1. Graphical illustrations of the different feedback displays discussed in this paper: (a) calibration plot, (b) positioning guide, (c) heatmap, (d) live feed, (e) scanpath. The actual topic prompts used examples from existing AT software and can be viewed in the Supplemental Materials for this paper.

Calibration plot (Figure 1a): provides feedback on the outcome of a calibration procedure. The calibration procedure is designed to provide extra information about a user’s eyes and eye movements with the goal of increasing the accuracy and precision of the tracking algorithm.

Positioning guide (Figure 1b): provides information on the position of the user, relative to the eye-gaze camera, helping to ensure they are positioned within the trackbox, the area where the camera can track their eyes.

Heatmap (Figure 1c): a “two-dimensional graphical representation of data where the values of a variable are shown as colours” [Citation14], usually overlaid on the original stimulus material. In eye-gaze systems, the data represented are typically fixation locations and durations or total gaze duration.

Live feed (Figure 1d): a live video feed of the eye or whole face, often highlighting tracked features (pupil and corneal glint).

Scanpath (Figure 1e): a graphical representation of a user’s fixations around the screen area, typically as a sequence of ordered fixations, illustrated by circles of increasing size corresponding to fixation duration, and connected by lines. Scanpaths are often overlaid on the graphical displays that elicited the fixation data.
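To make the heatmap and scanpath descriptions above more concrete, the following is a minimal illustrative sketch in Python of how fixation data could, in principle, be summarised into each display. It is not the method of any manufacturer, since those algorithms are undisclosed. The names `Fixation`, `heatmap_grid`, and `scanpath`, the grid dimensions, and the radius scaling (`min_radius`, `ms_per_px`) are all assumptions introduced for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    """A single fixation (hypothetical structure, for illustration only)."""
    x: float            # horizontal screen position in pixels
    y: float            # vertical screen position in pixels
    duration_ms: float  # how long the gaze rested at this position


def heatmap_grid(fixations, screen_w, screen_h, cols, rows):
    """Sum fixation duration (ms) in each cell of a rows x cols grid.

    A display layer would then map each cell's total to a colour.
    """
    grid = [[0.0] * cols for _ in range(rows)]
    for f in fixations:
        if not (0 <= f.x < screen_w and 0 <= f.y < screen_h):
            continue  # skip fixations registered outside the screen area
        col = int(f.x * cols / screen_w)
        row = int(f.y * rows / screen_h)
        grid[row][col] += f.duration_ms
    return grid


def scanpath(fixations, min_radius=5.0, ms_per_px=20.0):
    """Build drawing primitives for an ordered scanpath.

    Returns (circles, lines): one (order, x, y, radius) tuple per fixation,
    with radius increasing with fixation duration, and one line segment
    between each pair of consecutive fixations.
    """
    circles = [
        (order, f.x, f.y, min_radius + f.duration_ms / ms_per_px)
        for order, f in enumerate(fixations, start=1)
    ]
    lines = [((a.x, a.y), (b.x, b.y)) for a, b in zip(fixations, fixations[1:])]
    return circles, lines


# Example with made-up data: three fixations on a 1000 x 600 pixel screen.
example = [
    Fixation(100, 100, 200),
    Fixation(120, 110, 300),
    Fixation(900, 500, 400),
]
print(heatmap_grid(example, 1000, 600, cols=2, rows=2))
print(scanpath(example))
```

In this sketch the heatmap discards the order of fixations and keeps only where gaze time accumulated, whereas the scanpath preserves order and per-fixation duration. How fixations are identified from raw gaze samples in the first place is a separate step that is not shown here and that real systems do not disclose.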

Feedback tools are known to be used in both assessment and in everyday use of eye-gaze technology but, anecdotally, professionals often find their use and interpretation challenging. Further, tools are often perceived as being useful in measuring several aspects of performance, including system and tracking performance, as well as aspects of a user’s performance, such as their use of vision, gaze behaviours, and cognition. Although feedback tools may be advertised as being able to measure some of these aspects of performance, these tools are not typically designed for this purpose and none that we know of are externally validated instruments. This situation is reflected in the literature, where the authors know of no studies exploring how professionals make use of the feedback tools existing in current eye-gaze technology systems.

This study seeks to further the conversation about how feedback from eye-gaze technology is presented to professionals and how they make use of it to further their own understanding and to make decisions about when and how to use the technology with individual users.

Methods

This study aimed to investigate the attitude of AT professionals towards feedback given by eye-gaze devices, how they understand and apply that feedback in practice, and their thoughts on how existing feedback methods could be developed or improved. The research questions for the study were:

What feedback provided by eye-gaze technology do AT professionals find helpful?

How is feedback provided by eye-gaze technology used by AT professionals?

How do AT professionals describe performance with eye-gaze technology?

What information about a user’s vision, gaze and cognition do AT professionals gain from feedback provided by eye-gaze technology?

What improvements or changes in feedback provided by eye-gaze technology would AT professionals find helpful?

The study uses a phenomenological approach to explore these questions through consultation with a group of professionals with relevant viewpoints. The research team did not seek to recruit or represent a total sample of professionals and did not recruit with the target of achieving any theoretical data saturation, since the development or formation of a new theory was not the goal of the work [Citation15]. Instead, the research team considered sample adequacy and a variety of professional backgrounds and contexts to be more important than the number of participants. Principles of the consolidated criteria for reporting qualitative research were followed in reporting the study in this paper [Citation16].

Recruitment of participants

Ethical approval for the study was granted by the School of Psychology Research Ethics Committee at the University of Leicester (Ethics Reference: 37538). In line with the methodological approach adopted for this study, purposive sampling was used to recruit two groups of participants. A decision was made to cap focus groups at a maximum of eight participants to ensure all participants were able to contribute. Recruitment was terminated when the research team felt that the information power was sufficient to provide helpful insight into the study’s objectives [Citation17]. Two groups of participants were defined by the research team, considering these to represent the groups of professionals who work most closely with eye-gaze and its users. The inclusion criteria for each group were as follows:

Group 1 (Assessment Group): AT professionals involved in the assessment and provision of eye-gaze technology in the UK.

Group 2 (Daily Use Group): professionals who interact with individuals using eye-gaze technology on an ongoing basis, in schools, colleges, or residential settings in the UK.

Participants were not excluded from the study based on client group, years of experience, or specific professional credentials, as it was intended that the groups would reflect the range of different professionals and roles that support eye-gaze assessment and implementation.

The study was advertised on relevant professionally-focused forums in the UK and social media channels, and some direct approaches to professionals were made by a member of the research team. This purposive sampling approach meant that participants were only recruited if they had significant, sustained exposure to eye-gaze technology in either assessment or use and support. A small incentive in the form of a shopping voucher was offered for participation in the study. Potential participants who expressed an interest in the study were provided with a participant information sheet and consented to the study.

Eleven participants were recruited. Participants’ professional roles are presented in Table 1. Several other potential participants were approached but were unable to participate due to a lack of time to commit to the study. No participants dropped out of the study before or during the focus groups. A product representative from an AAC company that supplies eye-gaze cameras responded to the recruitment approaches. The inclusion criteria and potential conflict of interest were reviewed at this point and discussed within the research team and with external advisors. It was concluded that product representatives are an integral part of the provision of eye-gaze technology in the UK and are frequently involved in assessments alongside state registered or independent professionals; capturing this viewpoint was therefore important to addressing the research questions.

Table 1. Participant professional roles (all participants are UK-based).

Data collection

A single focus group session was carried out with each group of participants. Both focus groups were conducted using Microsoft Teams videoconferencing software and consent was given by each participant to record and transcribe the content. Participants typically connected to the focus groups from their place of work. Sessions lasted around 90 minutes and were facilitated by two members of the research team, with one chairing the discussion. There was no need for repeat focus groups or individual interviews.

Research team positioning and reflexivity

All members of the research team have a doctoral-level academic background and work either in academia or in specialist clinical services at management level. The first and second authors have extensive experience working clinically in the assessment, provision, and support of eye-gaze technology. The third author has considerable research experience in the use of eye tracking to measure aspects of visual performance and human computer interaction. The research team has experience in conducting qualitative research in relevant fields. Several of the participants had pre-existing relationships with the research team through working in the same field for several years. It was not considered that these relationships would impact participants’ involvement since there was no conflict of interest with any researcher and the topics of discussion were related to a specific technology, rather than any aspect of service delivery or clinical practice where participants and members of the research team might have common ground.

Materials

The discussion guide and focus group topic prompts (see Supplemental Material for a PDF of the topic prompts—the discussion guide followed the same structure as the topic prompts) were developed by the research team based on the study objectives, research questions, and overarching methodology. The initial topic guides were then presented to three independent experts in the field for review: a senior research fellow at a major research and teaching hospital, a clinical lead for a specially commissioned AAC service, and an independent therapist with a clinical research background and over 40 years’ experience as a clinician and clinical lead in AAC and AT service delivery. Reviewers were given the study objectives in advance and then shown the research questions, before being shown the discussion guide and topic prompts. Feedback from the reviewers, obtained during interview sessions with the lead author, was subsequently incorporated into the design of the materials.

All three reviewers validated the approach and the overall goals of the study. Reviewers also confirmed that the feedback tools selected were representative of those typically found in eye-gaze technology and that the examples of feedback selected to use in the focus groups were representative and likely to be used in practice by focus group participants. One reviewer suggested the inclusion of multiple examples for two feedback tools (calibration plots and positioning guides) where there was significant variance in how the information was presented by different manufacturers. This suggestion was adopted and the topic prompts adapted accordingly. Reviewers also made suggestions on the amount of time allocated to each section of the focus group, and these allocations were changed accordingly.

Focus groups were conducted by the first and third authors, with the first author acting as chair and leading the discussion. Both focus groups followed the same structure: introductions from the researchers and from all participants were followed by a brief overview of the work, during which the research team shared the motivation for the study; an initial question (“What information or feedback do you get from eye-gaze devices which you consider to be helpful?”) then invited general discussion within the groups; participants were then shown examples of the five different types of feedback commonly offered by eye-gaze technology and invited to discuss these.

For each feedback type, the researchers had selected what they considered to be one or two representative examples, a selection that was confirmed through pilot testing with reviewers. Efforts were made to select examples that were not obviously from any particular software and to provide examples that included representation from a range of manufacturers. However, given the small size of the AT manufacturer community and the professional experience of all participants, it was possible for individual manufacturers to be identified from the topic prompts. Each example was accompanied by the same set of prompt questions:

What information are you getting from this sort of feedback?

How might you use this information to inform your practice or make decisions?

What do you think about the way this information is presented?

Do you have any ideas about how this information could be presented differently?

Data analysis

Data were analysed thematically using a codebook approach based on the principles of template analysis [Citation18]. Focus groups were transcribed initially using the Microsoft Teams automatic transcription functionality. Transcripts were then reviewed and corrected by the first author, using the original video recordings of the sessions for reference. This review of transcripts also served to better familiarise the first author with the data. The transcripts from the two focus group sessions constituted the data analysed in this study.

Transcripts were imported into NVivo 12 software. Preliminary coding followed, with transcripts iteratively coded by the first author during successive re-readings. Codes were identified when there were recurrent mentions or descriptions of a phenomenon by participants in either group, as described in the thematic analysis literature [Citation18,Citation19]. This process resulted in the identification of 42 individual codes. Definitions were then developed for these codes and the first author undertook several sessions of clustering similar codes together into themes and sub-themes, with particular attention paid to merging codes that frequently overlapped. The result was a template comprising 18 sub-themes under five main themes. To ensure the robustness of the process, the second and third authors completed a validation exercise according to the following protocol:

For each code, a descriptive summary was provided by the first author.

The allocation of these codes to the sub-themes and themes was then checked and approved by the second and third authors.

For each theme and sub-theme, a further descriptive summary was developed by the first author.

Two representative quotes from each sub-theme were extracted by the first author.

The second and third authors reviewed the quotes to confirm that they were reflective of the sub-theme or theme to which they had been ascribed.

Full agreement on the template was reached within the group during a discussion session.

Another round of coding of the complete data was then carried out by the first author. Participants did not provide feedback on the findings, since the aim of this work was exploratory rather than to develop a theory or a concrete set of recommendations.

Results

Results are presented as a descriptive interpretation of each sub-theme, organised under its top-level theme. A full list of themes and sub-themes is presented in Table 2. Direct quotes from participants are included to aid the interpretation of the analysis. Quotes have been edited to ensure the anonymity of participants and, where required, to increase readability.

Table 2. Themes and subthemes identified during transcript analysis.

The two groups of participants are referred to as the Assessment Group and the Daily Use Group in this section. Commonly used acronyms for Speech and Language Therapist (SLT) and Occupational Therapist (OT) are also used throughout.

How professionals use feedback tools

Discussions in both groups focused on the different uses of feedback provided by the eye-gaze technology feedback tools and how professionals made use of the five feedback types.

Adjustments (device, environment, user)

Using feedback to inform adjustments was discussed in both focus groups. Participants involved in assessment (Assessment Group) and daily use (Daily Use Group) both reported instances of their using feedback tools to determine adjustments to the eye-gaze device setup, the environment, or a particular aspect of the user. Participants discussed the positioning guide as an exemplar of a useful piece of feedback in this regard, describing that this feedback tool supported adjustments to the relative position of the user and the eye-gaze camera.

Given how critical the positioning of the device is, the orientation of the device relative to the user, I just find [the positioning guide] so useful, especially for clients who’ve got a difficult time positioning a system for use, I just think [it’s really useful] having that very precise sliding indicator to show where you are, distance-wise. (P01, Clinical Scientist, Assessment Group)

Calibration plots were also described by both groups as a source of feedback that might prompt adjustment to the device setup or the user’s position:

Sometimes if there’s a bad line along the top that can indicate that I just need to push the device up or down a little bit which tends to fix that. (P04, OT, Assessment Group)

[calibration] could potentially inform the position of the device as well. Just having to physically raise the device or lower the device if we’re struggling at a particular point and recalibrate. That’s still the same, it’s an indicator, not a concrete thing. (P03, AAC Product Representative, Assessment Group)

[impact of the environment can be unclear] without the video: too much light coming in and things like that. (P08, SLT, Daily Use Group)

Although you can’t tell that [a bad result on the positioning guide] is glare. [It could be] cleaning glasses or somebody pointing and blocking the camera […]. (P06, Clinical Technologist, Assessment Group)

So it might be their glasses are in the way. So when they’re looking at the top left, the rim of their glasses is in the way or they’ve got a reflection from the screen on their glasses. (P08, SLT, Daily Use Group)

Informing hardware choice

Although the choice of eye-gaze hardware was not central to either discussion, both groups recounted examples of having used the feedback offered by different manufacturers to determine which hardware they would prefer to use. The one feedback type that was specifically cited as a potential determiner of hardware choice was the live feed: participants in the Assessment Group described a preference for cameras and software that offered this as an option.

Several members of the Assessment Group also reported that they kept a stock of older hardware that was no longer commercially available because they valued certain functionality that was no longer available in the manufacturer’s offering:

I was a fan of the very early [MANUFACTURER] software – they had little lines showing the [location of] the sample points compared to where the final decided average gaze point [was] and that direction could really be helpful, seeing if it’s all to the left and all to the right. (P06, Clinical Technologist, Assessment Group)

We also still keep around a number of the old [MANUFACTURER] cameras with the old software so that we can get the lines with the separate eyes for those really complex users. (P02, OT, Assessment Group)

Guiding intervention

Both groups provided examples of when the feedback discussed had been used to make decisions on the interventions they might use with a person, beyond those directly related to the device or positioning.

Professionals in both groups discussed the potential for calibration performance to impact their choice of AAC vocabulary, with a good calibration linked to the selection of vocabulary with more items on the screen. It was also discussed that calibration might feed into decisions on what type or level of activities should be attempted with the eye-gaze system:

And I think as well from [calibration] data you would then use that to support you with a decision about the amount of cells that you’re going to provide somebody, whether they’re text to speech or whether they’re using their symbol-based vocabulary or whether they are using some cause and effect games or different games, and how many symbols or cells? Or are they using [full] computer access. So you use all this information for that. (P08, SLT, Daily Use Group)

Participants who worked daily with users of eye-gaze technology discussed that, if calibration outcomes were not optimal, ways of improving performance would be sought, rather than considering alternative methods of accessing a device. The use of training software to increase eye-gaze performance was discussed extensively by the Daily Use Group:

If the [calibration] doesn’t look marvellous and the bottom left is hard [in the example shown to focus group participants] and so you might try some specific targeted activities to try and improve people’s ability to access the whole screen. […] We might start using some specific targeted eye gaze training and games and things alongside communication or IT to target specific areas of the screen. (P11, OT, Daily Use Group)

[Regarding learners who are using eye-gaze] It’s not the last thing we try, but it’s not the first thing. Direct access takes quite a lot of cognitive loading out of what you’re trying to do. Most of us, if we can directly access a keyboard or directly access our iPhone, that’s what we choose to do. So you’ve probably been through quite a lot of different ways of trying to access things along your journey to looking at eye-gaze, because it’s quite tiring, the equipment can be quite expensive, it can need a certain amount of knowledge to be able to set it up and use it effectively. So I definitely wouldn’t give up if I got a bad calibration, for probably years, because it’s got the potential to enable you to sort of access things that other access methods won’t have. (P11, OT, Daily Use Group)

Informing interface design

Participants in both groups discussed that they used certain types of eye-gaze feedback to inform the design of interfaces for users. Both groups mentioned using calibration outcomes to guide the dimensions and placement of cells in an AAC system—relocating items if users were having difficulty targeting the calibration point in one particular part of the screen. Similarly, using calibration as a measure of overall accuracy was felt by participants in both groups to be a suitable way of determining what size of item or complexity of vocabulary layout to offer to a user.

[calibration] is kind of what determines, if I’m doing AAC, what vocabulary size I’m gonna kick off with. (P04, OT, Assessment Group)

The Daily Use Group discussed the use of heatmaps to overlay information about where the user was looking and, in turn, make decisions about where to position onscreen items:

Heat mapping and the [live feed] are really useful for that. So, if you’re creating individualised grid sets, you can arrange stuff where you want it: the most high frequency cells in the areas that they are able to access most readily. (P11, OT, Daily Use Group)

User presentation

Participants in both groups felt that they could use feedback methods to gain information regarding an eye-gaze user’s individual presentation, by which they were referring to a user’s oculomotor abilities, their cognition, or specific types of visual impairment or visual function.

Specific oculomotor disorders were mentioned in both groups, with strabismus and nystagmus both mentioned by name in the Assessment Group:

When you have a client with a strabismus, I think it’s very important to have data from each eye separately and not combined when you’re getting calibration feedback data. (P06, Clinical Technologist, Assessment Group)

We’ve also specifically targeted one eye before. We’ve got information that’s showing us that it’s picking up two eyes, but if somebody has a divergent squint it can be quite confusing, and if you can then tell software just to target one eye which has a more reliable gaze that can be really useful (P11, OT, Daily Use Group)

I’ve had it where people have had damage to their eyes before and they didn’t know [about it]. And then, having that calibration really helped to be able to then identify “Oh we’ll just use the right eye” (P08, SLT, Daily Use Group)

[On the use of heatmaps] So are they fixating? Are they tracking? Are they able to scan? Because it sometimes might appear as if they are, [but I use heat maps] as an analysis to reflect on where their skill set is and are they just exploring their screen? Do we need to do early visual attention stuff? Or are they starting to fixate and scan? (P09, SLT, Daily Use Group)

We can see whether or not this person’s eyes are matching the movements on the video when we watch it back. So we know whether or not they are purposefully moving their eyes to do what we expect somebody to do. So it is useful to tick off certain areas that we might be concerned about. (P04, OT, Assessment Group)

[At the early stages of assessment] If it’s the reflexive stuff, we wanna know if they can see [things on the screen]. So we’re ticking off whether or not their eyes are moving involuntarily, reflexively if you see what I mean. We’re not looking to see if they’re volitionally doing stuff, we don’t tend to do that very much, but maybe we should do. (P04, OT, Assessment Group)

Both groups cited a lack of input from other specialisms, such as ophthalmology, as a reason they would make use of eye-gaze feedback to learn more about an individual user’s presentation. In this scenario, professionals feel that they are trying to obtain information that might be helpful to support their decision-making, using the tools available to them in the absence of a full assessment.

We’re trying to figure out whether there’s a visual pursuit issue first. If it’s the reflexive stuff, we wanna know if they can see [a stimulus] because we don’t really get any CVI or ocular data from other professionals. So we need to know if [they] can see it. (P04, OT, Assessment Group)

The use of eye-gaze to obtain information about a user’s cognitive ability was discussed in both groups, although it was rarely named as such by either group. Instead, participants tended to refer to the idea of users making progress through skills that might be considered cognitive, such as cause and effect, object fixation, choice-making, etc. Here the use of eye-gaze training software packages was suggested by some participants as a way of developing visual and cognitive skills.

[If a poor calibration is reported] we might start using some specific targeted eye gaze training and games and things alongside communication or IT to target specific areas of the screen. (P11, OT, Daily Use Group)

For some students, eye gaze does fail just because […] the cognition isn’t quite there for them to control their eye movements in that way. (P09, SLT, Daily Use Group)

Support and training

Both groups of professionals would be expected to support others in using eye-gaze technology—either providing support and training for families and carers working with individual users or for other professionals providing general support. Both groups discussed types of eye-gaze feedback that they found useful in providing such support.

Participants in both groups recounted scenarios where feedback had been useful to give others an idea of what to look for as an indicator that the device was working well or not working as intended. Examples used by both groups were the colour coding of calibration plots and the face avatar used in some positioning guides.

It’s easier to say “[this is] the optimum position to be in and look, like this, with a smiley face” – that’s a nice easy way to describe that positioning to people. (P11, OT, Daily Use Group)

Both groups gave examples of having used feedback to illustrate specific points during training, having shown examples of a particular feedback type to show others “what good looks like”, or explaining how the device functions.

A lot of these sorts of tools allows people to see how it’s working: I will show clinicians photographs like [the topic prompt], showing how it works. (P04, OT, Assessment Group)

Remote support was raised in the Assessment Group. This was likely more of a concern for this group of professionals because of their working contexts and less frequent direct contact with individual users. In this scenario, professionals are unlikely to have direct “screen share” access to the device and hence the feedback from the device is typically described to them by a third party or viewed on an external camera. As such, the need for different levels of feedback for different users and functions recurred:

When you’re supporting people over the phone and [they say] “the eye gaze isn’t working”, you can say “what’s the calibration looking like?”. If it’s lines, it’s much more difficult for them to describe [than if the device is giving feedback in the form of colours and numbers]. (P04, OT, Assessment Group)

Discussion with other stakeholders

Both groups reported that they had used feedback in discussions with users, families, and other professionals. Examples of cases where having feedback could both help and hinder discussion were provided.

The Daily Use Group discussed heatmaps as helpful discussion aids when feeding back to other stakeholders involved in eye-gaze provision. The group related this to the need to measure and share progress, or to aid target setting for individuals:

I find [heatmaps] really useful as well for showing other people: it’s a nice way to explain success with eye gaze or areas of progress as well. Which areas are they focusing on more? Is there a reason for that? How close are they to those target areas as well? (P10, SLT, Daily Use Group)

[heatmaps] include some things that don’t support an argument either way. And then it would be used against me to prove the other case. (P01, Clinical Scientist, Assessment Group)

We can get [calibration plots] in a nice clear visual, coloured form so that we can feedback to the person and their family: “well you know green is good and red is not so good” (P05, SLT, Assessment Group)

When [you’re with] the family who are looking for [hope], and you get these [green calibration points], sometimes that’s immediately translated by the family as like, “Oh great, they can use eye gaze”. [But if the user is subsequently unable to use eye-gaze functionally] the family then start potentially thinking that it’s the clinician that’s the problem because “the system actually understood them really well because [the calibration was all green]”. (P04, OT, Assessment Group)

Trial and error

Both groups expressed frustration at having to rely on trial and error, even once they had the information from the different feedback tools. Participants in the Assessment Group said that they were able to achieve good outcomes for users by making incremental adjustments through trial and error, but felt that this process could be expedited and made more systematic if the device could provide feedback on, for example, dwell times:

[Users] get given a device and it’s 1.5 second dwell time and that just becomes “how I use it”, when they could be achieving better by bringing that dwell time down in a controlled and informed way. Rather than “Slow down a bit. How’s that? Speed it up. How’s that?” (P01, Clinical Scientist, Assessment Group)

Sometimes it is positioning in the room or something else that’s throwing it off, and if you could angle them differently, that would make a big difference. At the moment, that seems to be quite trial and error. It’s just like, “Let’s see if we turn the lights down, does that make a difference? If we move over here, is that any better?” (P11, OT, Daily Use Group)

Unhelpful feedback

There was general agreement across both groups that most feedback offered by eye-gaze devices was to some extent useful, with two notable exceptions: heatmaps and scan paths.

The groups had contrasting opinions on the usefulness of heatmaps: the Assessment Group concluded that they added little of use to the assessment and decision-making process, whereas the Daily Use Group felt that they were a useful tool for gaining insight, measuring progress, and sharing information about a user’s performance.

[Heatmaps] give you so much information. I think these are great and it’s brilliant. (P08, SLT, Daily Use Group)

[Responding to the question “What information do you get from a heatmap?”] Nothing […] I’ve never found them useful. (P01, Clinical Scientist, Assessment Group)

There was some variability in the Assessment Group’s feelings about heatmaps, with participants suggesting use cases such as ensuring that users were engaged with the screen or finding areas of the screen that were more difficult to access. Overall, however, this group was much more cautious about the use of heatmaps than the Daily Use Group.

Both groups felt that scan paths were not helpful to their practice, with no participant in either group using them regularly. Both groups felt that scan paths were more of a “research” tool or a tool to give very specific insight that was not relevant to the selection and provision of eye-gaze devices.

Isn’t this more used like for research applications like [putting] an eye-gaze camera underneath a shelf to see where someone looks when they walk into Boots? (P01, Clinical Scientist, Assessment Group)

The Assessment Group felt that there were possible insights to be gained from scan paths but had significant worries that the data they presented was over-simplified: simply joining recorded fixations with straight lines rather than offering a true and accurate representation of gaze behaviour.

I feel like it’s just connecting the fixations, or what the algorithm says is a fixation, and it’s a little unclear sometimes what the algorithm is defining as a fixation. And then it’s just connecting [them] with straight lines. And [the user] could have gone off screen and we wouldn’t know. (P06, Clinical Technologist, Assessment Group)

There was some discussion in the Assessment Group that, properly executed, the overlay of scan paths on recorded video of onscreen content, coupled with audio recorded in the room, could potentially provide some insight. There was debate about exactly what useful information this method could provide over and above simple observation of cursor movement within other software.

I was watching back a recording and I can hear what information that individual is getting so this could be useful if I’m commenting on the star on the Christmas tree and at the same time [the user] then looked towards it, that is more useful for me because I’m like, “OK you’ve listened to what I’ve said, you’ve followed that”. (P03, AAC Product Representative, Assessment Group)

I would do a calibration and then go straight into using an AAC grid, typically. If something’s up, I might put on a motivating video but I don’t really mind about scan paths because I just want to see whether or not they are moving their eyes in a way that I would expect. (P04, OT, Assessment Group)

The Daily Use Group concurred that a scan path offered very little of interest beyond what they felt could be ascertained from a heatmap, describing it as a more complicated way of presenting similar data.

I very rarely use these, it takes a long time to pick apart. I think for the targets I’m usually working on and what I’m interested in, the heatmap is sufficient enough. I don’t tend to need this kind of data […] It would take a long time to explain to someone else. (P09, SLT, Daily Use Group)

Timing and availability of feedback tools

Participants in both groups discussed the timing and accessibility of feedback and these two sub-themes were often inter-related. For many in the Assessment Group, timing of feedback was considered a challenge, with devices not offering feedback in a timely fashion, leaving professionals to spot challenges or changes in a user’s performance before seeking the feedback to diagnose or explore further what the issues might be:

You’re only going here when you think “Why isn’t that working?” And it’s like a puzzle. You’re getting a clue and the more information you have, the more things you could try. (P06, Clinical Technologist, Assessment Group)

[Perhaps it would be better to do calibration and] not show it necessarily and go straight in. And then be able to go back and look at the data afterwards. So almost like calibrate, try whatever you’re doing and then go “OK that wasn’t quite ready. Let’s go look at the result”. So almost give that impression to the individual themselves to go try it. Is it working? Brilliant. [If not] then we can go back and look at our data from the calibration 5 minutes ago rather than being presented with it instantly. (P03, AAC Product Representative, Assessment Group)

I’ve wondered in the past whether or not the systems could provide guidance in lieu of a clinician. Things like: “Your accuracy seems to have dropped a bit, would you care to switch over to this two-hit keyboard I’ve got ready?” We do explain to clients and [say in training that] if you notice the accuracy is not very good you can try and recalibrate, you can check your position, you can do all these other things or if you just wanna get on with stuff you can switch to a two hit keyboard. But it rarely happens. (P04, OT, Assessment Group)

Or what you could do is have everything on the screen, but you change it to a two-step. So your camera just turns into a two-step when your access to eye gaze is more difficult. (P08, SLT, Daily Use Group)

Participants in the Assessment Group discussed limits to the types of interjections and advice they would be comfortable with a device offering. Although no consensus was reached, there was a general feeling that certain interventions should not be outsourced to a device. The group discussed, for example, whether a device could recommend the provision of eye drops if a corneal glint could not be identified:

I’d also be a bit cautious around anything that says, “you might need eye drops” because from a manufacturing point of view it’s kind of dodgy ground of giving advice. I don’t know who’s at the other end of it and, if I tell them to do something that actually could cause harm, not that eye drops would necessarily, but I can see a can of worms depending on what advice we give would be. (P03, AAC Product Representative, Assessment Group)

The [CAMERA] in its original form, when it had the two LEDs on the front to show when it’s got sight of one or both eyes. That was something which is hugely useful. Because otherwise you can only really evaluate the stability of the tracking whilst you’ve got a track status window open in front of you (P01, Clinical Scientist, Assessment Group)

Professionals’ confidence in feedback tools

The confidence that the two groups of professionals had in the feedback from eye-gaze devices was the subject of much discussion in both groups.

Clarity of feedback

Clarity of feedback was linked in discussions to how easy the feedback was to interpret, with a particular focus on design choices, such as colours or labelling.

The Daily Use Group viewed the clarity of feedback through the lens of usability. Discussion focused on how less experienced staff and support workers might use or interpret different types of feedback, relating this closely to the theme of experience and role. The discussion was characterised by the use of terms such as “user friendly” and “simplicity”, with decisions around colour coding of calibration plots or positioning guides viewed as attempts to simplify the information for a less experienced user.

I think [colour coding] makes it easier for the end user because it’s not necessarily us that’s going to be doing this every day. It’s gonna be the support workers, so it does need to be user friendly. (P08, SLT, Daily Use Group)

By contrast, the Assessment Group felt that simplifying the information made it opaque, perhaps obscuring the full picture:

At the moment, we’ve got the manufacturer labels of red is poor, yellow is average or something like that. And then green is good. I think [we need] something to actually qualify that a bit more. Because I wouldn’t say that is a fair reflection: the yellow being average. No, I’d say “red is a tracking error, yellow is bad and green is only good if it’s above 89” (P01, Clinical Scientist, Assessment Group)

Transparency of method

This theme was primarily discussed by the Assessment Group and only touched upon tangentially by the Daily Use Group. The Assessment Group expressed a perceived discrepancy between the presentation of the feedback and their own observations, relating this to a lack of access to the algorithms and calculations that generate the visual representations of each type of feedback:

Sample data from each point is very useful [in a calibration plot], rather than an average of where the projected gaze point is, compared to the offered gaze point. Seeing the grouping of where the sample points were and where those cumulatively appear can be very useful. I think, more transparency as to what the feedback means. So for example: 89? Well, what does that mean exactly? (P06, Clinical Technologist, Assessment Group)

[There] was a suggestion of “apply eye drops”. OK, but how does [the device] distinguish that the reflection that it’s seeing [means] there’s a problem with that reflection that probably needs eye drops? How does it know that’s on the cornea, it’s not a reflection on the lens of the pair of glasses? (P01, Clinical Scientist, Assessment Group)

In the Daily Use Group, the processes by which feedback was generated were discussed less. The group felt that most feedback tools were rendered simply to increase their usability and that they had ways of getting more information if required:

It’s who that information is useful for: where they’ve had a red calibration, for example, having that information to say “they did look at it for a period of time, but it wasn’t long enough to collect the data, so that’s why it’s red” […] But I don’t think that’s useful for service users. (P05, SLT, Assessment Group)

I suppose that’s where you use your information from the [live feed], isn’t it? In terms of that functionality of where they’re looking and how long they’re looking for, you get that information from the [live feed], do we need to necessarily know that from the calibration? (P05, SLT, Assessment Group)

Objectivity

The Assessment Group presented a clear distinction between feedback methods that they saw as purely objective, such as calibration plots, and those that were considered to have an element of subjectivity, such as heatmaps. This distinction was not overtly discussed by participants in the Daily Use Group.

The Daily Use Group felt that heatmaps were a valuable source of information, which could provide insight into a user’s looking behaviours, vision abilities, preferences, and attention. This group also felt that heatmaps were a good way to measure performance or progress and a basis for discussion with families, caregivers, and other support workers:

I find these really useful as well for showing other people: it’s a nice way to explain success with eye gaze or kind of areas of progress as well. Which areas are they focusing on more? Is there a reason for that? How close are they to those target areas as well? (P10, SLT, Daily Use Group)

They’re very easy to give to parents and teachers and everyone (P09, SLT, Daily Use Group)

Because it would be able to get that data because it’s got that data with the visual, so it would be able to do that if you needed, say, hard evidence. If a parent was really struggling to understand why the individual was struggling with the top right corner, let’s say, and then you could have that information. (P08, SLT, Daily Use Group)

The Assessment Group viewed both heatmaps and scan paths with scepticism, even going so far as to say that they did not provide any clinically useful information.

I’ve never found them useful because I don’t know about [what else was going on while the heatmap was generated]. The thing is, people get distracted and your normal saccadic eye movement [means] you will look around all over the place anyway. So yeah. So I’ve never found [heatmaps] useful. (P01, Clinical Scientist, Assessment Group)

This was linked to the discussion in this group about the uncertainty around how the information in these feedback tools was calculated:

I feel like [SOFTWARE] gives misleading data. I feel like it’s just connecting the fixations, [or what] the algorithm says is a fixation. And again, it’s a little unclear sometimes what the algorithm is defining as a fixation. (P06, Clinical Technologist, Assessment Group)

If you’re validating calibration by playing a Peppa Pig video or something, before you actually watch back the perceived eye movements, you do get the heatmap appear. And sometimes that can indicate that there is something going on, because all of the pattern is in the top left corner, whereas Peppa Pig was hanging around [on the right of the screen] and this is after a calibration, so you can see straight away [that] something’s up here because we would have thought this young person would be looking at Peppa Pig. It doesn’t give us any answers. It’s just one of the sort of triggers that you’re, like, “OK, let’s have a look at this, let’s figure out what’s going on” and it’s the first moment where you’re, like, “Oh that’s disappointing because it looked like they were watching Peppa Pig and it looked like they calibrated, but something’s awry”. But I wouldn’t use it to make any […] It doesn’t really solve any problems. (P04, OT, Assessment Group)

Even though someone could get a really good calibration, they then may not be able to use eye gaze. Often in our setting it can be due to cognitive difficulties. So, because it was very difficult to explain to a family because they were seeing [a] really good calibration, we played some family videos and we were using the heat mapping so that afterwards we [could show] that when they were playing [the user was] not looking at them: they were looking somewhere completely different. But his calibration was really good. So on paper, he looked like a good eye-gaze user, but he was having a lot of difficulties. So that’s the only instance I have used [a heatmap] and I have to say it was very, very, very useful. It was upsetting, but it was useful. (P05, SLT, Assessment Group)

I use a lot for showing staff what’s happening when they’re using [eye-gaze] and lots in target setting. So, finding out exactly where they are in their visual attention, visual development and then going from there, based on the activities that you’re choosing to target specific skills to work up towards using eye gaze for to access communication. (P09, SLT, Daily Use Group)

It’s really good for assessment data: the teachers that we work with like this, they can keep those in terms of a measure of progress as well (P07, SLT, Daily Use Group)

The objectivity of different feedback types had implications for how professionals in the Assessment Group felt about eye-gaze devices making adjustments, changes, or suggestions for the user. Where data was felt to be more objective (such as in accuracy of calibration), there was a greater willingness to potentially automate processes:

[If] there’s nothing subjective around its interpretation: the device would be able to tell you: at the end of calibration, at any point did it lose sight of your eyes. That would be very useful. (P01, Clinical Scientist, Assessment Group)

Impact of prior knowledge and experience on use of feedback tools

Both focus groups discussed the need for feedback tools to be useful to the intended audience. There was consensus between the groups that a “one size fits all” approach to feedback could cause frustration to users and to professionals and support staff at all levels.

Amount of feedback

The amount of feedback available to professionals was a subject of discussion in both focus groups. Both groups made a distinction between themselves and two other groups: support workers/families and the users of the technology. There was consensus between the focus groups that these groups would have different requirements from eye-gaze feedback and that it was potentially useful to be able to switch between these:

[Professionals need] a Nerd Mode or an advanced mode. I don’t know if you have gone onto YouTube and pressed the Nerd Mode button and it just gives you tons of information about the video that you’re watching, like all the codec data and stuff. That’s fascinating: but most people don’t want it. Having a little Nerd Mode button for us to [press] and it will just give you tons of data – that would be really handy. (P04, OT, Assessment Group)

[It’s good to keep feedback] as simple as possible for staff in care settings: being able to say “look at the numbers, if it’s green it’s good, roughly” and then having – I love that – a Nerd Mode for more detailed analysis when it’s needed. (P03, AAC Product Representative, Assessment Group)

I don’t think [providing more information on calibration outcomes] would be useful for everyone, and again, it’s who the information is useful for. I don’t think that’s useful for service users or for support staff. But therapists and professionals looking at it, perhaps we’re interpreting things differently. (P10, SLT, Daily Use Group)

Role, experience, and judgement

The Assessment Group highlighted that feedback too often relied on the knowledge of the professional using it for interpretation. Where feedback was presented with little annotation or labelling, the group felt that there was an unwarranted requirement for the professionals to interpret something that could be made more transparent:

On the [MANUFACTURER] positioning guide, I can never remember which is near and which is far and I use them every day. And so if I can’t remember, I don’t know how other people are going to remember these things. (P04, OT, Assessment Group)

It does get a bit confusing. [Suppliers will say] “it doesn’t have to be green all the way around, it could be red all the way around and that could actually be a good calibration for that individual”. (P08, SLT, Daily Use Group)

Because there’s a lot of people that are not computer literate that we work with who are meant to be supporting individuals, so having things that are very easy to look at is very positive. (P08, SLT, Daily Use Group)

Use of feedback tools in combination

Combining with observation

There was a general feeling that certain types of feedback (in particular calibration plots) were always used in combination with observations of the user. This was closely linked to the feeling that feedback often gave an indication that something was wrong, but did not always provide or suggest any solutions.

The Daily Use Group discussed the importance of viewing feedback alongside observations of a user’s gaze in low-tech or no-tech communication. This was often related to observations of the use of gaze for communication in other contexts being the basis for a trial of eye-gaze technology:

[I would] look at their use of eye pointing, eye gaze in low tech situations: do they use eye pointing to make choices from objects? Are they using the low tech E-Tran setup or anything like that? Because if they’re consistently able to use a low-tech system and that’s been the rationale behind trialling a high tech eye gaze [device], it may be something external [to the user]. And so I’d definitely look at their eye pointing skills outside of a computer based context. (P09, SLT, Daily Use Group)

If you are doing it remotely though, [the positioning guide might look] perfect and you’re like “there’s no positioning problem that I need to solve”. So what we always had to do was have somebody with a camera on WhatsApp or something so that we could actually look at the scene just to check that it was actually in the right position. (P04, OT, Assessment Group)

When [the positioning guide shows] lovely level eyes in the middle of the screen, that looks very nice to me as an OT, but it’s not actually always the best way for somebody to access eye-gaze technology. Sometimes people can see better, attend better, track better when their head is on the side, and one eye is higher than the other, and all the things I don’t like. So I think the sort of [ideal positioning indicators] aren’t always maybe the ones we want. Or they’re what we want, but they might not be what’s best for the learner. (P11, OT, Daily Use Group)

Similarly, when heatmaps were discussed, the groups felt that additional data gained from either observing the session or recording the audio within the room provided a useful extra layer of interpretation. Both groups proposed functionality for capturing elements of the scene or environmental data alongside the currently available feedback, such as providing a video of the user during remote support sessions or giving information on ambient light levels during setup and usage.

Combining multiple feedback types

Less frequently discussed by either group was the option to combine multiple types of feedback. In both groups, this was limited only to certain scenarios, generally occurring when the professional was seeking to gather more information for decision-making.

I’d use [calibration plots] to further my assessment. So I’d try and see if we can improve some of those points by looking at the [positioning guide], by maybe just changing the targets that we’re looking at, just to try and see. I then go to my grids and go “Right, I’m gonna have to change things here to make it easier for them”. So yeah, I do further assessment I guess, I’d use it to help me further in my assessments. (P05, SLT, Assessment Group)

There was some discussion in the Daily Use Group that the use of heatmaps and scan paths in combination, for example, could offer a more comprehensive picture of what had taken place in an eye-gaze assessment session:

[Scan paths have] the real life timings of where somebody’s eyes are looking and flowing. The [heat map] is just “this is around about where they looked”. (P08, SLT, Daily Use Group)

Related to this topic, both groups reflected that they felt it would be helpful to be able to retrospectively review certain types of feedback. Whilst scan paths and heatmaps do, in some cases, offer the option to watch the session back, there is no similar facility for calibration attempts, which the Assessment Group in particular felt would be helpful.

Discussion

This study provides insight into how professionals involved in eye-gaze provision and support perceive and interpret different types of feedback provided by eye-gaze technology. The discussion section of this paper is organised around the findings related to each of the five research questions.

What feedback provided by eye-gaze technology do professionals find helpful?

The findings from this study suggest that professionals find calibration plots, positioning guides, and live video feeds helpful. Professionals do not seem to find scan path plots helpful, with only a small number of examples of their use being put forward, and no participant using them routinely.

The usefulness of heatmaps appears to be perceived differently by professionals depending on their professional perspective. Those supporting individuals using eye-gaze technology daily appear to perceive heatmaps as a useful tool for a variety of purposes including assessing aspects of a person’s visual function, gaining insight into their cognition, understanding preferences and motivation, and measuring progress over time. Those involved in assessment, by contrast, were generally dismissive of the use of heatmaps in decision-making and tended to use this tool only for purposes such as exploring whether a user was engaging with the whole screen area or whether their gaze was being registered only in a particular part of the screen.

How is feedback provided by eye-gaze technology used by professionals?

This study suggests that the predominant use of feedback tools by professionals is live within sessions, with calibration plots and positioning guides being used to provide immediate information which can be used to make adjustments in support of individual users. The study suggests that current feedback tools are good at identifying the existence of a problem, but comparatively less good at giving insight into causes or potential solutions. Professionals expressed an interest in having feedback tools record and store elements of the process to which the feedback tool relates for subsequent, more in-depth analysis.

Heatmaps were the tool for which, in this study, there appeared to be most variance between professionals involved in different aspects of eye-gaze technology support. Heatmaps were given as an example of a feedback tool used retrospectively to draw inferences about how a person is using the technology or the appropriateness of activities and software chosen for or by the user. However, those using eye-gaze technology to support individuals in daily use appear to perceive heatmaps as being more scientific, more data-driven, and more allied to the world of research than do those professionals involved in eye-gaze technology assessment. This research suggests that heatmaps are sometimes presented as evidence of skills or as concrete representations of skills or abilities. It is known that the interpretation of heatmaps, in particular the attribution of specific intent to recorded gaze points and patterns, is highly subjective and may be prone to confirmatory biases [Citation5]. There are also several pragmatic issues in using heatmaps generated by AT software. When such tools are used in research, the data they summarise is less valid without accompanying information, such as details of the calibration, the duration of exposure, information about how the software has categorised a fixation, and a legend giving some sense of the duration or fixation count represented by the colour gradient [Citation5,Citation14].

It is interesting to note that professionals occasionally repurpose feedback tools for purposes other than those for which they are designed. The use of calibration plots to determine the size and layout of AAC vocabularies is an example of this: if a user is having difficulties calibrating on one side of the screen, cells may be compressed to the other, where their accuracy is better. Whilst there may be validity in this, there is a risk that this presents a technical solution to a user problem. This practice reinforces the suggestion from this study that feedback tools do not provide useful insight into the causes of problems that users experience: there may be many reasons why certain screen areas are hard to access and the discussion suggests that feedback tools are used more to identify a problem than to explore its cause. Further, the use of calibration plots to make decisions about vocabulary size, or even the choice of activity itself, suggests that there may be scenarios where eye-gaze has been decided upon as an access method and other considerations are adapted to fit this choice. There may be multiple reasons for this, such as other access methods having already been trialled and found to be unsuitable. The use of feedback tools for functions other than those intended may speak to a broader feeling that professionals lack the specific tools for some areas of decision-making and are instead “making do” with whichever tool they deem to be closest to their requirements.

This study suggests that professionals sometimes use multiple different feedback types to guide the same decisions, for example using both the positioning guide and the calibration plot to prompt adjustments to the relative positions of the device and the user. This may be interpreted as indicating that neither feedback method in isolation provides the complete picture. This sentiment was underlined by professionals noting that a live video feed could provide extra troubleshooting information, but that this feedback tool was not always available.

Using feedback in combination with clinical observation appears to be standard practice among professionals. In some cases, it was felt that there was a need for professionals to interpret feedback (as was the case with heatmaps) or that the output of a feedback tool required a level of professional competence to be translated into changes to the system. In other cases, there were more pragmatic concerns, with the Occupational Therapists in both groups highlighting the potential for increased risk if changes were made to a user’s position based only on an attempt to get the positioning guide to report a “good” position.

How do professionals describe performance with eye-gaze technology?

The concept of eye-gaze performance is not well defined in assistive technology literature or practice, but this study suggests that professionals view performance as a construct of both in-person and technical factors.

The most common way in which performance was discussed was in the context of progress: professionals appear to use eye-gaze technology feedback to measure the progress of their eye-gaze users, including tracking the development of “early” eye-gaze skills, such as fixation, tracking, and visual scanning. There is, to the authors’ knowledge, no support in the existing literature for inferring or measuring visual ability or specific cognitive processes from eye-gaze performance. Whilst there exists some literature on the measurement of specific eye movements [Citation20], studies tend to focus on neurotypical populations [Citation21] or those without physical disability. Such studies also tend to use eye-tracking systems [Citation22,Citation23] with a higher sampling rate and more sophisticated software than is on offer in eye-gaze cameras. Where literature exists on eye-gaze technology, its focus is more on functional use, satisfaction, and evaluation of the technology itself [Citation4].

In this study, the discussion centred on the use of heatmaps as a way of measuring the development of skills and this is an area in which practice appears to not be aligned with the literature. In addition to the subjectivity of their interpretation, heatmaps created from eye-gaze devices are often recorded for varying lengths of time and with unknown or unreported calibration accuracy, making comparisons between them unlikely to be valid in representing progress with a particular skill [Citation14]. Borgestig et al. [Citation24] suggest that there is a need to measure change in performance over long periods (>1 year) for some children at the very early stages of learning to use eye-gaze technology. In practice, the feedback tools discussed in this study do not lend themselves well to measuring progress in this way, since they do not offer methods to objectively chart changes in accuracy or time on task, for example. In this context, it is perhaps understandable that heatmaps may be used as a proxy.

It is interesting to observe that the question of moving from eye-gaze to another access method, based on comparative performance, was not discussed in either group, although this may have been due to the focus of the project and perceptions of what the research team was interested in. Indeed, those supporting eye-gaze use daily appear reluctant to consider abandoning eye-gaze technology, even in the face of relatively poor performance. The justification given for this was that eye-gaze was sometimes the last resort and hence was pursued as far as was practical. This finding may be suggestive of resources at a local level being scarce, so eye-gaze might not be considered until all other access methods had been trialled and, presumably, shown not to be appropriate. However, it is equally the case that eye-gaze systems are becoming cheaper and it is more common to find systems purchased for use by a whole class, year group, or school. In this eventuality, it is possible that eye-gaze is used simply because it is available to professionals, an aspect of decision-making that has been reported elsewhere in the published literature [Citation11]. The idea that eye-gaze is persisted with, whether significant progress is seen or not, may also be linked to the amount of expectation connected to the technology, with discussions elsewhere in the literature [Citation8,Citation25] on the allure of this technology, particularly where other access methods have been unsuccessful.

The tendency to persist with eye-gaze may also be explained by the emergence of software packages that claim to help users develop skills, such as fixation or cause and effect. Such packages support the idea that professionals should continue to persist with the technology, but the activities are not typically externally validated and the efficacy of this approach is yet to be explored by researchers [Citation26].

What information about a user’s vision, gaze, and cognition do professionals gain from feedback provided by eye-gaze technology?

This study suggests that existing eye-gaze feedback tools are being used to deduce specific information about a user’s vision, their use of gaze, or their cognition. Evidence supporting such deductions is, however, not well established [Citation27–30].

In the discussion around vision, there is an important distinction to be made between visual impairment, which refers to disorders or impairments of the eye, ocular-motor systems or cortical and subcortical visual systems, and visual ability, which describes how a person functions in vision-related activities [Citation31,Citation32].

Regarding aspects of visual impairment related to structure and function, participants felt that there was a role for the technology to support the identification of specific issues, namely strabismus and nystagmus. In the case of strabismus, professionals appear to use calibration plots to identify a user’s dominant eye to be used in eye-gaze control. The simplification of feedback from this tool was perceived as a hindrance since it was difficult to separate out the data from each eye without separately calibrating each. This is perhaps another example of professionals “making do” when feedback tools are either absent or not meeting their needs. Professionals assessing for eye-gaze appear to perceive that any nystagmus severe enough to impact eye-gaze control would be observable without the need for an eye tracker. The use of existing feedback tools (heatmaps, calibration plots) to make an assessment of a user’s range of eye movements appears prevalent. Taken together, the above suggests professionals in this study are using eye-gaze technology to identify compensatory strategies for impairments of structure and function, rather than to diagnose or describe them.

Using eye-gaze technology to provide meaningful insight into a person’s visual ability is a considerably more vexing question. Professionals appear to use existing eye-gaze feedback tools to judge whether individuals were fixating and what these fixations likely meant in the context of the activity in which they occurred. This appears to be particularly the case in those working with eye-gaze technology daily and in the use of heatmaps. This practice appears contrary to the prevailing understanding that heatmaps merely aggregate gaze data and that conclusions drawn about what made people look at one area for longer are “highly speculative” if based solely on these maps [Citation5, p. 241].

Moving beyond gaze behaviours, it is known that cognitive functioning is often assumed rather than assessed, in particular for children with movement disorders who may be unable to access standard assessment materials [Citation33]. Therefore, there exists a risk that feedback tools such as heatmaps may be used as confirmatory tools for the preconceptions of those using them; it is known in the broader literature that heatmaps are prone to confirmation bias [Citation5,Citation14]. This study suggests that professionals are inferring specific cognitive behaviours or abilities from feedback provided by eye-gaze technology. This practice again seems contrary to the literature, which suggests that this inference is challenging and imprecise, especially when the activities have not been designed and validated for this purpose [Citation27,Citation34].

When using eye-gaze as a control device, users are subject to the “Midas Touch” problem: the fact that the same channel (i.e., the eye orientation) is being used to both receive and transmit information [Citation20,Citation35]. This makes disentangling unintended (reflexive) eye movements from intended (volitional) gaze shifts difficult, since the device has no way of differentiating between them and both appear the same in feedback tools such as heatmaps. Fixation data can be used as an indicator of attention, since people tend to direct their gaze to the focus of their attention, using a combination of saccadic and smooth pursuit eye movements [Citation36,Citation37]. However, attention can also be drawn (overtly or covertly) to elements of a game or interface designed to capture the user’s attention, or captured by sudden onsets [Citation38] or motion in the background, even when those are not relevant to the task at hand [Citation39].

What improvements in feedback provided by eye-gaze technology would professionals find helpful?

Feedback tailored for different stakeholders