ABSTRACT

This paper analyses the associations between computer use in schools and at home and test scores by using TIMSS data covering over 900,000 children in fourth grade. When controlling for school fixed effects, pupils who use computers at school, especially those who use them frequently, are found to achieve less than pupils who never use computers. Daily computer use at home is negatively associated with test scores, although monthly, and sometimes weekly, use is positively associated with pupil performance. There is no significant difference between subjects and only small gender and country differences are observed. Moreover, the results suggest that the negative association of computer use at school is larger among low-performing pupils than among high-performing pupils. The findings suggest a negative association between computer use at school and test scores but do not reject the possibility that computers have a positive impact on test scores if they are used optimally.

1. Introduction and background

Among policy makers, it is widely believed that investment in Information and Communications Technology (ICT) has an important role in improving education (Organisation for Economic Co-operation and Development [OECD], 2006, 2010). Supporters propose that tasks such as taking notes, information searches, and collaboration can be made more efficient than with more traditional methods such as pen and paper. Previous research on computers in education has, however, found mixed results and the estimated impacts are often small and insignificant. See, e.g., Bulman and Fairlie (2016) and Hall, Lundin, and Sibbmark (2019) for a literature overview.

An argument against computers in classrooms is that computers have a distraction effect. In a quasi-experiment, Carter, Greenberg, and Walker (2017) found that students at a military college who were allowed to use computers at lectures performed significantly worse than other students. Without supervision, students were more likely to use a computer for non-productive tasks, such as social media or chatting during lectures. Correspondingly, increased computer access did not improve grades or cognitive ability among younger children, and the computers were mostly used for entertainment purposes (Beuermann, Cristia, Cueto, Malamud, & Cruz-Aguayo, 2015).

Positive effects of computers are mostly found in studies where the intervention includes supervision and guided computer sessions. For example, Barrow, Markman, and Rouse (2009) report a positive effect of using computer-assisted learning in algebra classes among children. They suggest that the positive effect is explained by motivational factors. The computers let the pupils learn at their own pace, which makes the lectures more engaging. Similarly, Muralidharan, Singh, and Ganimian (2019) observed increased knowledge in Hindi and Maths among students who participated in after school programmes with computer access.Footnote1

In contrast to the experimental approach, Fuchs and Wössmann (2004) examined the relationship of computer use and test scores with observational data from eighth graders in the Programme for International Student Assessment (PISA) study. They report an inverted u-shape between the frequency of computer use at school and test scores. Students who used computers a few times a year or several times a month achieved slightly higher maths scores than never users and significantly higher than students who used computers at school several times a week.

The experimental evidence suggests a distinction between simply increasing computer access and implementing computers in education with supervision. It is, therefore, interesting that Falck, Mang, and Wössmann (2018) focus on the effect of different computer activities in the classroom on test scores. They used teacher-level data in each subject to exploit within-student between-subject variation and found positive effects of using computers to look up information but adverse effects of using computers to practise skills. The overall effect of computer use was, however, close to zero.

Studies such as Hull and Duch (2019) and Yanguas (2020) use quasi-experimental approaches as they exploit one-laptop-per-child programmes and evaluate the long-term effects of computers on a larger scale. Hull and Duch (2019) found the effects on test scores in mathematics to be small and the effects only appeared after a few years. In contrast, Yanguas (2020) did not find any positive effect of increased computer access on test scores or cognitive skills. In fact, the results suggest that educational attainment may even decrease by participating in the one-laptop-per-child programme.

This paper contributes to the current literature by studying the associations between computer use and test scores among young pupils. The analysis is based on data from more than 900,000 fourth graders from the 2011 and 2015 waves of the Trends in International Mathematics and Science Study (TIMSS).

The focus is on younger children, in contrast to the previous studies using TIMSS or PISA data, as there are reasons to believe that the association between computer use and test scores is different for 15-year-old students and 10-year-old children. For example, children who fall behind early in school tend to be disadvantaged later in their education (see, e.g., Hernandez, 2011). It is, therefore, of great interest to study computer use among children at younger ages.

Since the TIMSS study is based on observational data, this paper cannot provide a relationship with a causal interpretation such as an experimental study would. The computer use is not randomly distributed in the treatment and control group, which means that the findings in this study should be interpreted in terms of conditional correlations rather than as causal relationships.Footnote2 On the other hand, the empirical approach allows for a larger set of observations than an experimental study. More importantly, the estimated results in observational studies are based on children’s actual computer use, instead of ideal or closely monitored computer use in an experimental setting. Both experimental and observational methods have their strengths and weaknesses. The experimental studies are beneficial in the sense that one can determine the actual treatment effect of computers in education. It is, however, debatable whether the result will reappear if computers are implemented under other circumstances than an experiment. A study with an observational approach can, therefore, complement experimental findings with larger external validity, even if estimated associations are not causal.

Previous studies using TIMSS or PISA data have either reported the result by each country separately or used country fixed effects to account for unobserved heterogeneity between countries. This paper contributes to the literature by instead using school fixed effects. This is important because there may be unobserved heterogeneity between schools that can affect learning outcomes and test scores. For example, schools with more resources are more likely to have access to computers and can also invest in high-quality teachers. This can lead to an upward bias in the computer estimate if teacher quality or school resources are ignored in the analysis. School fixed effects will thus be used to control for this kind of unobserved heterogeneity, unlike earlier studies, which mostly use country fixed effects. Furthermore, computer use might have different implications by gender, different countries (OECD and non-OECD countries), and among high- and low-performing pupils. For this reason, this paper also contributes to the existing literature by studying the impact of computers in different subsamples.

The main analysis uses a regression model that allows for plausible values to be used as dependent variables. The same model is applied to subsamples to determine the association between computer use and test scores among girls and boys and in different countries. Finally, an unconditional quantile regression (UQR) is utilised to analyse the impact of computer use in different parts of the test score distribution.

The results suggest an overall negative association between frequent computer use and test scores by regressing test scores on the pupils’ computer use and other determinants of academic achievement. Initially, there is a positive association between computer use and test scores when no background controls are included. When background controls and country fixed effects are added, the inverted u-shape found in previous studies appears but the size of the shape shrinks when school fixed effects are introduced. The positive association between computer use and test scores almost disappears for school users when school fixed effects are included, and only moderate use of computers at home is associated with higher test scores. The impact of computers is similar between subjects but it varies more between the TIMSS waves.

The subsample analysis shows no significantly different association between computer use at school and test scores across gender. However, girls’ scores are significantly more positively associated with computer use at home compared to boys’ computer use. Computer use at school is more positively, or less negatively, associated with test scores in OECD countries than in non-OECD countries. Finally, there is evidence that computer use in schools is more negatively associated with test scores among low-performing pupils than among individuals in the middle and top of the score distribution.

The paper is organised as follows: Section 2 defines the educational production function. Section 3 describes the TIMSS study and descriptive data. Section 4 presents the empirical specification. Section 5 reports the results and Section 6 concludes.

2. Educational production function

The framework of an educational production function is a common approach to explore the relationship between educational inputs and outputs. An educational production function is used to analyse the role of educational inputs such as class size, socioeconomic status, teacher characteristics or computer use on outputs such as test scores or educational attainment (Hanushek, 1979). The following theoretical framework is inspired by Fuchs and Wössmann (2004) and Fiorini (2010).

Pupil achievement can be described by a production function that is built upon a number of variables that either increase or decrease the pupil’s performance. The educational production function for each pupil is here described by:

$$A_i = f\left(C_i^{S}, C_i^{H}, I_i, H_i, S_i, T_i\right) \qquad (1)$$

where $A_i$ is the achievement for individual $i$. Achievement is given by the test scores and is a function of the inputs: computer use at school, $C_i^{S}$, computer use at home, $C_i^{H}$, individual characteristics, $I_i$, home background, $H_i$, school factors, $S_i$, and teacher characteristics, $T_i$.

Computer use at school and at home is not randomly assigned. This implies that computer use is influenced by other observable and unobservable characteristics in the educational production function. For example, computer use at school is related to computer access at school, which in turn is determined by a school’s resources. Correspondingly, pupils from more advantaged backgrounds are more likely to have access to computers at home and are therefore more likely to use them there.

We assume that

$$C_i^{S} = g\left(H_i, S_i, T_i\right) \qquad (2)$$

$$C_i^{H} = h\left(I_i, H_i, S_i, T_i\right) \qquad (3)$$

That is, computer use at school is determined by home, school, and teacher factors, whereas home use is also influenced by individual characteristics. If these assumptions are true, they imply that $C_i^{S}$ and $C_i^{H}$ will be correlated with $H_i$, $S_i$ and $T_i$, and with $I_i$, $H_i$, $S_i$ and $T_i$, respectively. Hence, bias arises if these are not considered in the model. There is, however, no clear theoretical background for the self-selection of computer use at school at the student level. Both high- and low-ability pupilsFootnote3 can be assigned to computers in education: pupils who are ahead of their peers can be assigned to use computers in class, as could individuals who are falling behind.

TIMSS data provide a rich set of observable background information, including information about both pupil and family background. School fixed effects are included to control for unobserved heterogeneity between schools, which solves the omitted variable problem at the school level.

Still, if unobservable characteristics in Equations (2) and (3) are correlated with test scores, there is a potential risk of omitted variable bias. An example of an omitted variable relates to the parents’ involvement in their child’s education, which is positively correlated with test scores. If parents who find education important are more likely to give their child access to a computer, the positive effect can be absorbed by the computer estimates unless this is captured by other control variables. Even if school fixed effects control for unobservable characteristics across schools, they do not fully account for selection as computer use is not randomly assigned at the student level.

3. Data

This paper uses data from the 2011 and 2015 waves of TIMSS, where questions regarding ICT in education are included in the student, home, school, and teacher questionnaires. Questions on, for example, the number of computers per school or internet access at home are asked in the school and home questionnaires, but the most relevant question regarding pupils’ computer habits is found in the student questionnaire.

In both waves, the pupils are asked how often they use a computer at school and at home. Their answers are reported in four categories: “every day or almost every day”, “once or twice a week”, “once or twice a month”, and “never or hardly never”, which are referred to here as “daily”, “weekly”, “monthly”, and “never”. The questions about the pupils’ computer use at school and home differ somewhat between the two waves: while the questions in 2015 asked to what extent the student used computers or tablets for schoolwork, the questions in 2011 were only about computer use as such, implying that the pupils’ answers can also reflect how often they use computers for social media, chatting, and playing games. Therefore, computer use in 2015 is likely to be more correlated with the amount of schoolwork than computer use in 2011, which might explain some of the decrease in computer use at home in 2015. Another distinction is that tablets were not defined as computers in the questionnaire from 2011. The complete questions from the questionnaires can be found in the Appendix.

Section 3.1 aims at giving a background of TIMSS while Section 3.2 discusses the chosen background variables in the educational production function.

3.1. Trends in International Mathematics and Science Study (TIMSS)

TIMSS has been carried out every four years since 1995 and aims to explore the factors that contribute to academic success. It enables participating countries to measure the effectiveness of their educational systems in a global context and focuses on differences in curricula between different school systems. The target populations in TIMSS are all enrolled pupils in the fourth and eighth grade, but the eighth graders will not be analysed in this paper. The selection of pupils is made in two stages. The schools are sampled in the first stage, and one or more classes in the selected school are randomly selected in the second stage. Weights are used in the analysis to compensate for the students’ probabilities of being sampled (Mullis, Martin, & Gonzalez, 2016).

Each pupil, parent, teacher, and principal answers a questionnaire with background questions. These surveys are designed to address questions about the pupils’ motivations and socioeconomic backgrounds, the schools’ characteristics, and the teachers’ backgrounds and teaching approaches. The test contains questions on mathematics and science, in both multiple-choice and open-ended formats. No single pupil can answer all questions in the time available for the test. The questions are therefore divided into booklets and blocks, with each student answering one booklet. A booklet contains two blocks of mathematics and two blocks of science, with each block containing 10–14 question items. There are in total 28 blocks distributed over 14 different booklets. For a more detailed description of the booklet design, see Mullis (2013). The scores are reported on a scale from 0 to 1000 points. The scale’s centre point remains constant (at 500 points) for every assessment cycle and the standard deviation is 100 points. The scale is set to correspond to the achievement distribution of TIMSS 1995 to make the results comparable over time (Mullis et al., 2016).

The goal of TIMSS is to collect data on achievement for a representative sample of pupils. To give an unbiased estimate of pupils’ knowledge, TIMSS uses item response theory (IRT) scaling methods to construct an overall description of the achievement of the entire student population. It is common to measure latent variables, such as knowledge and achievement, by estimating several scores, so-called plausible values, that are drawn from a distribution, given the individual ability, background and the difficulty of the question. The reported plausible values in TIMSS are imputed values and not the actual test score in a traditional sense. Thus, plausible values should be used to analyse achievement in the population rather than the performance for an individual test-taker. The plausible values in TIMSS are constructed from the combined responses of individual students and the booklets they were assigned. Each of these estimates should then be weighted together to give an unbiased estimate.Footnote4 Using plausible values is especially useful in large-scale surveys since the test is given during a limited time and the pupil only answers a subset of the total item pool. The importance of using all plausible values is, however, under discussion. Jerrim, Lopez-Agudo, Marcenaro-Gutierrez, and Shure (2017) compare changes to OLS estimates when one or all five plausible values are used and only find trivial changes in effect sizes. The survey-makers and others, such as Wu (2005) and Von Davier, Gonzalez, and Mislevy (2009), emphasise plausible values as an important feature of the test design. As the plausible value scores fluctuate within individuals and between countries, the results will depend on the choice of the plausible value. Hence, this paper will use all five plausible values to conduct the analysis. Details of the implementation of plausible values are further described in Section 4.

A problem with TIMSS data is the number of missing values that arise when the questionnaires are not completely filled in. This results in a smaller sample size because observations that contain a missing value are dropped when running a regression. A complete case regression could result in a non-response bias if there is non-randomness in the missing values. For example, if unemployed parents avoid stating their occupational status, a complete case regression could give biased estimates because pupils with unemployed parents would be dropped from the sample. A table in the Appendix reports the missing values in the dataset. In most cases, the fraction of missing values is small, but some important variables have a large number of missing values. A dummy category for the missing values in these variables is created to avoid a large reduction in the number of observations and missing-value bias. This procedure is applied for the variables father’s employment status, mother’s employment status, father’s occupation, and mother’s occupation, since for these the proportion of missing values is over 15% (a limit that follows the TIMSS principle of a minimum school-response and class-response rate of 85%; see Mullis et al., 2016). Missing categories are also used for highest parental education in 2015. Furthermore, neither England nor the United States completed the home survey and both are therefore excluded from the analysis.
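The dummy-category approach described above can be illustrated with a minimal Python sketch. The function name, the category labels, and the six example pupils are hypothetical and are not taken from the TIMSS files; the sketch only shows how a separate indicator for non-response keeps every pupil in the estimation sample, whereas a complete case analysis would drop those with a missing answer.

```python
import numpy as np

def dummy_code_with_missing(values, categories):
    """Return indicator columns for each category plus a 'missing' column.

    values     : list of labels, with None marking a non-response
    categories : ordered list of substantive categories; the first one
                 is treated as the omitted reference category
    """
    columns = {}
    for cat in categories[1:]:
        columns[cat] = np.array([1.0 if v == cat else 0.0 for v in values])
    # Non-responses get their own indicator instead of being dropped.
    columns["missing"] = np.array([1.0 if v is None else 0.0 for v in values])
    return columns

# Hypothetical father's employment status for six pupils.
status = ["employed", None, "unemployed", "employed", None, "employed"]
dummies = dummy_code_with_missing(status, ["employed", "unemployed"])

n_kept = len(status)                             # sample size with the dummy category
n_complete = sum(v is not None for v in status)  # sample size under complete case analysis
```

The coefficient on the missing indicator has no substantive interpretation of its own; its purpose is only to retain the observations so that the other coefficients are estimated on the full sample.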

3.2. Descriptive data

After excluding observations with missing values, but including dummy categories for missing values, the datasets contain 440,834 and 499,273 individuals for TIMSS 2011 and 2015, respectively. In total, 37 countries and regions are represented in 2011 and 51 in 2015, with 32% of the observations belonging to an OECD country in 2011 and 42% in 2015. A list of participating countries and regions (such as Hong Kong, Ontario, and Quebec) can be found in the Appendix. Table 1 shows the descriptive statistics of the key variables. An extensive list of all variables included in the analysis can be found in the Appendix.

Table 1. Computer use in TIMSS 2011 and TIMSS 2015

Pupil characteristics are captured by age, gender, and birth country. Age is assumed to be an important part of the education production function even if the overall effect of age on educational outcomes is under discussion. There is some evidence that older children perform better on tests, given that they have not been retained or skipped a grade because of low or high ability (Bedard & Dhuey, 2006; Mühlenweg & Puhani, 2010). On the other hand, other studies suggest that younger students benefit from having older peers, especially in the long run (Black, Devereux, & Salvanes, 2011; Cascio & Schanzenbach, 2016; Fredriksson & Öckert, 2014). Pupils in both samples are on average ten years old, and the ages range between 9 and 11 years.

Gender is assumed to have an impact on educational outcomes as the previous literature reports gender gaps, often in favour of boys in mathematics test scores (see, e.g., Dickerson et al., 2015; Fryer & Levitt, 2010; Robinson & Lubienski, 2011; Rodríguez-Planas & Nollenberger, 2018). The gender distribution is equal in TIMSS 2011 and 2015, with approximately 50% girls in both years.

Another pupil characteristic that is likely to affect achievement is immigration status. For example, Dustmann, Frattini, and Lanzara (2012) compared PISA test scores among immigrant children and found performance gaps in educational performance. The achievement was, however, heterogeneous across countries and was strongly related to family background. An indicator variable for being born outside the test country is, however, only included in the model in the analysis of TIMSS 2015 as TIMSS 2011 did not collect this information.

The second main part of the educational production function is home factors. Several studies highlight the importance of family background for children’s educational performance. Parental support and expectations of the pupil particularly matter for academic achievement (Hattie, 2009). TIMSS identifies the level of parental education, parental occupation, parental working status and books at home by a parental questionnaire and these variables are included in the analysis to control for home background. Books at home are a proxy for socioeconomic status, with more books being associated with higher socioeconomic status. It is especially suitable to use when working in a cross-country sample as the measure is comparable over different cultures (Schütz, Ursprung, & Wössmann, 2008; Wössmann, 2016). The number of books can show how the parents value knowledge, and may also reveal the family’s financial situation as families with low economic resources are less likely to spend their money on books. TIMSS combines parental background and books at home to measure home resources for learning, which can be used as an index for socioeconomic factors.Footnote5

Socioeconomic factors, measured by home resources, seem to be only weakly correlated with the frequency of computer use at school but do influence how much the pupil uses a computer at home. Data reported in the Appendix show that most pupils, regardless of their family background, use a computer once or twice a week at school (between 40% and 46%). Resources at home are more related to computer use at home. In 2011, almost half of the pupils with many (47%) and some resources (49%) use a computer every day at home and only a minority among these pupils never use a computer at home (4% and 7%). As expected, pupils with few resources use a computer at home less frequently than pupils with more resources. Merely 29% of the low-resource pupils use a computer every day at home and 39% report that they never use a computer at home. The frequency of computer use declines in 2015 as the question is redefined so that computer use is related to schoolwork. In 2015, among pupils with many resources, the daily computer use at home is only 34% and the share of pupils who never use a computer increases to 17%. Forty per cent of the pupils with few resources never use a computer at home and almost 28% use a computer every day.

Not surprisingly, computer use at home and in schools is correlated to some degree. Pupils who use the computer a lot in schools also tend to use it more frequently at home and the same pattern appears for the pupils with less use. The correlation is, however, never larger than 65% between the categories and varies to a larger extent in the weekly and monthly categories (see the Appendix).

The largest differences in computer use at school between OECD and non-OECD countries are observed in daily and weekly use in 2011, but these differences are only marginal in 2015 (see the Appendix). The computer use at home is relatively similar between country types except among never users in 2011 and for daily use in 2015. More exactly, the share of never users in 2011 and daily users in 2015 is approximately 6 and 5 percentage points larger in non-OECD countries, while the differences are smaller for the other categories. The Appendix also shows how computer use varies across genders: boys tend to use a computer more frequently in general. For example, 52% of boys use a computer every day at home compared to 44% of girls in 2011. The computer use was more homogeneous in 2015, when the only notable difference was in daily and weekly use, with boys tending to use a computer more frequently than girls at both locations.

4. Empirical specification

The analysis is based on a regression with mathematics and science test scores as the dependent variables and the variables in the educational production function as independent variables. The test scores for each subject are reported as five plausible values; hence, the regression is executed with the pv-module, which enables the use of the provided TIMSS weights and of five plausible values as the dependent variable.Footnote6 This module estimates the test scores with several plausible values by calculating the statistics for each plausible value and then taking the average of these to calculate the population statistic. The provided student weights and jackknife repeated replication (JRR) techniques are applied to account for the sampling design of TIMSS and to calculate the standard errors.Footnote7
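The averaging over plausible values can be sketched in a few lines of Python. This is a minimal illustration and not the pv-module itself: the function name and the numbers are hypothetical, and the variance formula is the standard multiple-imputation combining rule (average sampling variance plus an inflated between-value variance), which is assumed here rather than taken from the text. In practice, the sampling variance of each estimate would come from the JRR procedure.

```python
import numpy as np

def combine_plausible_values(estimates, sampling_variances):
    """Combine one coefficient estimated separately on each plausible value.

    estimates          : coefficient from the regression on each plausible value
    sampling_variances : sampling variance of each of those coefficients
                         (assumed to be supplied, e.g. from a jackknife)
    Returns the averaged point estimate and its standard error.
    """
    estimates = np.asarray(estimates, dtype=float)
    sampling_variances = np.asarray(sampling_variances, dtype=float)
    m = estimates.size

    point = estimates.mean()               # average over plausible values
    within = sampling_variances.mean()     # average sampling variance
    between = estimates.var(ddof=1)        # variance across plausible values
    total_variance = within + (1.0 + 1.0 / m) * between
    return point, np.sqrt(total_variance)

# Hypothetical coefficients for daily school use from five plausible values.
coefs = [-30.0, -28.0, -32.0, -29.0, -31.0]
variances = [4.0, 4.0, 4.0, 4.0, 4.0]
point, se = combine_plausible_values(coefs, variances)
```

The between-value term is what is lost when only one plausible value is used: the point estimate may change little, but the standard error then ignores the uncertainty in the imputed scores.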

The educational production function is empirically estimated by the following equation:

$$A_{ij} = C_{ij}^{S}\beta_1 + C_{ij}^{H}\beta_2 + X_{ij}\gamma + \mu_j + \varepsilon_{ij} \qquad (4)$$

Here, $A_{ij}$ denotes the test score of individual $i$ in school $j$; $C_{ij}^{S}$ and $C_{ij}^{H}$, described in the previous section, are vectors of computer use consisting of indicator variables for daily, weekly, or monthly use; $X_{ij}$ is a vector of the control variables containing information about student and home background and teacher variables; $\mu_j$ represents school fixed effects; and $\varepsilon_{ij}$ is an error term. The included variables are computer use at school, computer use at home, gender, student age, country of origin, resources at home, parents’ highest education, father’s working status, father’s occupation, mother’s working status, mother’s occupation, teacher’s gender, teacher’s education and years of teaching experience.
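To illustrate why school fixed effects matter, the following Python sketch simulates data in which an unobserved school-level factor raises both computer use and test scores. All names and parameter values are hypothetical and chosen only for illustration; the sketch uses a single computer-use indicator, no weights and no plausible values, so it is a simplified stand-in for the estimation described above. School fixed effects are implemented through the within transformation, that is, by subtracting school means before running OLS.

```python
import numpy as np

def within_estimator(y, x, school):
    """OLS slope of y on x after subtracting school means from both."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    school = np.asarray(school)
    y_dm, x_dm = y.copy(), x.copy()
    for s in np.unique(school):
        mask = school == s
        y_dm[mask] -= y[mask].mean()
        x_dm[mask] -= x[mask].mean()
    return (x_dm @ y_dm) / (x_dm @ x_dm)

rng = np.random.default_rng(0)
n_schools, n_pupils = 200, 25
school = np.repeat(np.arange(n_schools), n_pupils)

# Unobserved school resources, constant within each school (hypothetical).
resources = rng.normal(size=n_schools)[school]

# Daily computer use is more likely in well-resourced schools.
daily_use = (rng.normal(size=school.size) + resources > 0).astype(float)

# Assumed data-generating process: a negative association of daily use,
# a positive contribution of school resources, plus pupil-level noise.
true_effect = -30.0
score = (500.0 + true_effect * daily_use + 40.0 * resources
         + rng.normal(scale=50.0, size=school.size))

pooled = np.polyfit(daily_use, score, 1)[0]        # ignores school differences
fe = within_estimator(score, daily_use, school)    # school fixed effects
```

In this simulated setting the pooled slope is pushed upwards because well-resourced schools both use computers more and score higher, while the within-school estimate recovers a value close to the assumed effect. The within estimator cannot, however, remove selection that operates between pupils in the same school.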

Methods of quantile regression are utilised to analyse how computer use is associated with test scores among low-, median- and high-achieving pupils. One issue with quantile regression is that the quantiles are conditional on the covariates, which leads to a result that is not always generalisable or applicable to a population. The use of unconditional quantile regression (UQR) is therefore suggested to measure different quantiles when there are many covariates (Borah & Basu, 2013; Borgen, 2016; Killewald & Bearak, 2014). In contrast to quantile regression, UQR defines the quantiles before the regression and is consequently not influenced by the covariates.

The UQR is estimated by running a regression of the recentred influence function (RIF) of the unconditional quantile on the explanatory variables. Firpo, Fortin, and Lemieux (2009) and Borgen (2016) define the RIF as:

$$\mathrm{RIF}(Y; q_\tau, F_Y) = q_\tau + \frac{\tau - \mathbb{1}\{Y \le q_\tau\}}{f_Y(q_\tau)} \qquad (5)$$

where $Y$ is the outcome variable; $q_\tau$ is the value of $Y$ at the quantile $\tau$; $F_Y$ is the cumulative distribution function of $Y$; $f_Y(q_\tau)$ is the density of $Y$ at $q_\tau$; $\mathbb{1}\{Y \le q_\tau\}$ is an indicator function that identifies whether the value of the outcome variable for the individual is at or below $q_\tau$; and RIF is a dummy variable, holding $q_\tau - (1-\tau)/f_Y(q_\tau)$ for values at or below the chosen quantile and $q_\tau + \tau/f_Y(q_\tau)$ for values above the chosen quantile. The estimates from the UQR show the test score for the chosen quantile $\tau$. See Killewald and Bearak (2014); instructions for the method can be found in Firpo et al. (2009) and Borgen (2016).
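The construction of the RIF can be sketched in Python as follows. The function name, the simulated scores, and the use of a Gaussian kernel with a rule-of-thumb bandwidth to estimate the density are assumptions made for this illustration; the text does not state which density estimator is used in the analysis.

```python
import numpy as np

def rif_quantile(y, tau, bandwidth=None):
    """Recentred influence function of the tau-th unconditional quantile.

    The density at the quantile is estimated with a Gaussian kernel;
    the default bandwidth is a rule-of-thumb choice (an assumption).
    Returns the RIF values and the sample quantile.
    """
    y = np.asarray(y, dtype=float)
    q = np.quantile(y, tau)
    if bandwidth is None:
        bandwidth = 1.06 * y.std(ddof=1) * y.size ** (-0.2)

    # Kernel density estimate of Y evaluated at the quantile q.
    u = (q - y) / bandwidth
    density = np.exp(-0.5 * u ** 2).sum() / (y.size * bandwidth * np.sqrt(2.0 * np.pi))

    # Indicator equals one for observations at or below the quantile.
    below = (y <= q).astype(float)
    rif = q + (tau - below) / density
    return rif, q

# Simulated scores on a TIMSS-like scale (hypothetical data).
rng = np.random.default_rng(1)
scores = rng.normal(500.0, 100.0, size=2000)
rif, q10 = rif_quantile(scores, 0.10)
```

The resulting variable takes only two values, one for pupils at or below the quantile and one for pupils above it, and its sample mean is approximately equal to the quantile itself. Regressing it on the explanatory variables by OLS then gives the UQR estimates for that quantile.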

5. Results

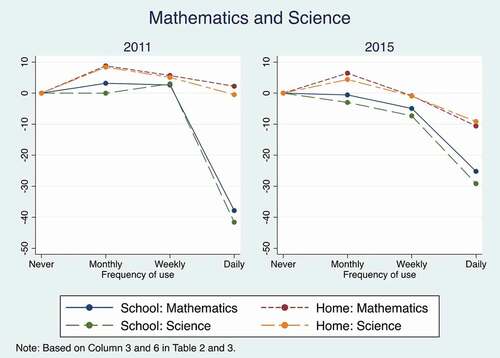

The estimated associations between computer use and test scores in mathematics and science are reported in Tables 2 and 3, with standard errors in parentheses. The associations for computer use are reported by frequency of use and location, and never users are selected as the reference category. Columns 1 and 4 only include variables for computer use, while columns 2 and 5 add control variables for student background and country fixed effects. The main results with school fixed effects are reported in columns 3 and 6 and the corresponding estimates are illustrated in the accompanying figure. Because school fixed effects, besides controlling for variation across schools within countries, control for all variation across countries, country fixed effects are not included when school fixed effects are included.

Table 2. Regression on computer use at school and at home and test scores in mathematics and science (2011)

Table 3. Regression on computer use at school and at home and test scores in mathematics and science (2015)

This section first describes how the results are affected by model specifications, while the second part presents the main results. The final section compares the impact of computers on boys and girls, OECD and non-OECD countries, and low-, median- and high-performing pupils.

In columns 1 and 4, there is an overall significant and positive association between computer use and test scores, except for daily use at school. Monthly users are the highest-performing group of pupils and they achieve up to 74 and 57 points more than never users in 2011 and 2015, respectively. The positive estimates for computer use decline when variables for student background and country fixed effects are added in columns 2 and 5. Estimates for weekly and monthly computer use at school become less positive, and the estimate for daily use is less negative in this specification. Furthermore, the standard errors shrink and the significance decreases for weekly and monthly use at home when background controls are included.

The results reported in columns 2 and 5 with country fixed effects support some of the findings of an inverted u-shape reported by Fuchs and Wössmann (Citation2004). They found that computer use and performance are positively related for monthly users, and that the relationship declines and becomes significantly negative for students who use a computer more than a few times a week. This inverted u-shape is most apparent for school use in 2011. In 2015, by contrast, the u-shape appears for home use (most of all in mathematics), while the estimates for school use are insignificant and, for science, negative.

When country fixed effects are replaced with school fixed effects, the positive estimates of computer use shrink in magnitude and their significance levels weaken in 2011 (see note 8). The inverted u-shape, which is observed in the simple model with only computer use, diminishes and almost disappears in the specification with background controls and school fixed effects.

The regression estimates from columns 3 and 6, with background variables and school fixed effects, are shown in Figure 1 to give an overview of the main result. The estimated test scores are plotted by subject, frequency of use, and location, and the results from each TIMSS wave are reported in a separate graph. There is a consistent and recurring pattern across years and subjects: computer use at home is associated with higher achievement than computer use at school, and moderate use (monthly and weekly) is associated with higher scores than daily use. Mathematics and science results follow the same patterns. Significance levels are not shown in the figure, but some of the estimates become insignificant in the main model with school fixed effects. Daily school use is significantly associated with lower test scores for both subjects in both years, but no distinct pattern in significance levels appears for the other estimates.

Even though the pattern is consistent between the years, the size of the estimates and the significance levels vary across the waves. In general, computer use and test scores are positively associated for more groups in 2011 than in 2015, but the estimates for daily use are more negative in 2011. The negative association is especially distinct for school users, as daily users score around 25–42 points less than never users according to the point estimates. Given that 100 points on the TIMSS scale equal one standard deviation, these estimates are equivalent to roughly 0.25 and 0.4 standard deviations, respectively. Except for moderate use in 2011, computer use at school is always negatively associated with test scores, while the result for home computer use is more ambiguous. Moderate use of computers at home shows a small, significant positive association with test scores: weekly use is associated with higher test scores in 2011, but not in 2015, and the estimates for monthly users are positive and significant in both years. The largest difference between the waves appears for daily computer use at home: there is a significant negative estimate for daily home users in 2015, while the corresponding groups in 2011 show a zero or small negative association.
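The conversion from TIMSS scale points to standard deviations used above is simple arithmetic and can be written out as a short sketch. The function and constant names are illustrative; the only input taken from the text is that 100 scale points correspond to one standard deviation.

```python
# The text states that 100 points on the TIMSS scale equal one standard deviation.
TIMSS_SD_POINTS = 100.0


def points_to_sd(points, sd_points=TIMSS_SD_POINTS):
    """Express a difference in TIMSS scale points in standard-deviation units."""
    return points / sd_points


# The two ends of the range reported for daily school users versus never users.
for pts in (25, 42):
    print(f"{pts} points -> {points_to_sd(pts):.2f} SD")
```

The upper end of the range, 42 points, corresponds to 0.42 standard deviations before rounding.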

The results suggest an overall negative association between computer use in schools and test scores, where the estimated differences between never users and daily users are between 0.25 and 0.4 standard deviations in favour of the never users. In contrast, studies such as Muralidharan et al. (Citation2019) or Hull and Duch (Citation2019) report positive effects of increased computer access on test scores of 0.37 and 0.13 standard deviations, respectively. The estimated associations in this study are more in line with the findings from Carter et al. (Citation2017), who report that students who were allowed to use computers scored 0.18 standard deviations lower than their counterparts. A number of studies did not, however, find any significant impact of computer use on educational outcomes (see, e.g., Beuermann et al., Citation2015; Falck et al., Citation2018; Yanguas, Citation2020).

These results also show the importance of including controls for determinants of the educational production function and support the suggestion that test scores are determined by many factors other than computer use. Including background controls and school fixed effects changes the estimates significantly, with school fixed effects adjusting for unobserved heterogeneity between schools. Fuchs and Wössmann (Citation2004) also report a negative association between computer use at school and test scores when computers are used frequently (several times a week or more). The size of the negative association is, however, larger in this study. They report that frequent users achieved 4 points less than never users, while the estimated association for daily users is 41 points in this study. Note that weekly and daily computer use are both considered frequent use in the PISA data used by Fuchs & Wössmann. In conclusion, location and frequency of use matter when analysing the impact of computer use on test scores. School use is associated with lower test scores than computer use at home, and daily computer use is rarely positively associated with test scores. Even if the negative associations are larger in absolute terms in this study than in the findings of Fuchs & Wössmann, a negative association of 0.4 standard deviations is not considerably large in the TIMSS context.

5.1. Subgroup comparisons

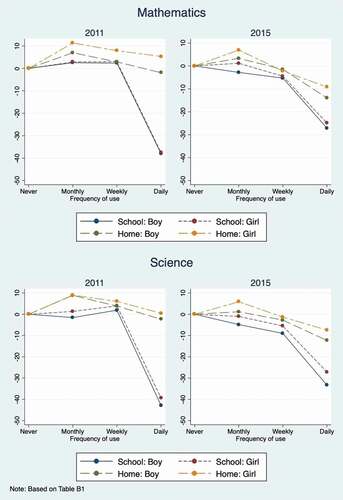

The association between computer use and test scores is likely to vary between heterogeneous groups of pupils. Therefore, additional analyses are conducted on subsamples based on gender, OECD membership, and low-, median- and high-performing pupils. The first two analyses use the same method as the main model, while the last comparison uses unconditional quantile regression to determine the impact of computers in different parts of the distribution. The results from the gender and country comparisons are presented in figures, and complete tables of regression estimates and their significance levels are found in the Appendix.

Figure 2 reports the results from the gender comparison. The results are divided into four separate graphs instead of two because of the increased number of reported estimates. The two graphs at the top of the figure show regression estimates for mathematics scores, while the bottom graphs illustrate the science results. The comparison of girls and boys by school and home use is visible within each graph. As in the main result, home use is more positively associated with test scores than school use, and moderate use is still the most positively associated with test scores. The trends are almost identical between subjects, showing that the impact of computers is similar for mathematics and science. Computer use at home tends to be more positively associated with test scores for girls than for boys, but the gender differences in associations tend to be small. The largest gender difference is observed for home use and mathematics results in 2011, with girls scoring between 3 and 13 points higher at every frequency of use. Overall, there is no clear evidence that one gender benefits more from computer use than the other.

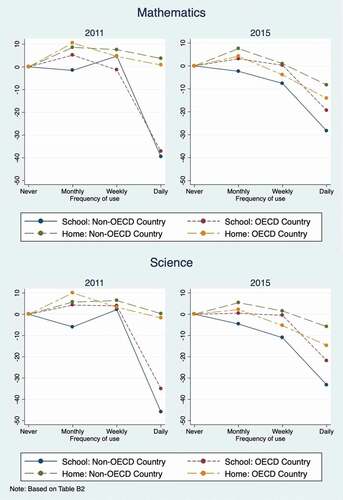

A country-by-country comparison could be made to analyse heterogeneous effects at the country level (e.g. Spiezia, Citation2011). Instead, this paper divides the participating countries into OECD and non-OECD countries, assuming that the countries within each group are somewhat homogeneous. Nonetheless, descriptive data show that there are no large differences in computer use between the two groups of countries (see Table A7 in the Appendix).

Figure 3 shows regression estimates from a model with background variables and school fixed effects on subsamples of OECD and non-OECD countries. The figure reports each subject and year in a separate graph to highlight country differences. There is an overall positive association between monthly use and test scores. Daily use and test scores are negatively associated in both groups of countries, and computer use in schools is more negatively associated with test scores than home use, although the results on country differences are somewhat ambiguous. The trend lines for school use in OECD countries are almost always above those of the non-OECD group, indicating that computer use in schools is less negatively associated with test scores in OECD countries than in non-OECD countries. The result is more or less the opposite for home users. In non-OECD countries, test scores are more positively associated with all frequencies of computer use at home than in OECD countries in 2015, but only with more frequent use (weekly and daily) in 2011.

Figure 3. Associations between computer use and test scores in mathematics and science by OECD membership

The final part of this analysis focuses on the impact of computers at different points of the test score distribution. Do the associations between computer use and test scores differ between low-, middle- and high-performing pupils? An unconditional quantile regression (UQR) is applied to analyse how the associations vary along the test score distribution. School weights are used instead of student weights, and only the first plausible value for each subject is used as the dependent variable. The standard errors are robust and clustered at school level (see note 9). The estimates from the UQR should not be directly compared to the pv-regression, as the models differ in weights and in the application of plausible values. However, comparing the results presented in columns 3 and 6 in Tables 2 and 3 with those in Table B3 in the Appendix, where OLS regression with the same weights and robust standard errors as in the UQR is used, indicates that the difference in weights and application of plausible values has only a small impact on the estimates.
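The idea behind unconditional quantile regression, following Firpo et al. (Citation2009), is to replace the outcome with its recentered influence function (RIF) for the quantile of interest and then run an ordinary regression on that transformed outcome. The sketch below illustrates only the RIF step, using simulated scores, a simple order-statistic quantile and a Gaussian kernel density with a rule-of-thumb bandwidth. All of these choices are assumptions made for illustration and are not taken from the paper or from the xtrifreg command.

```python
import math
import random
import statistics


def empirical_quantile(values, tau):
    """Return the tau-th sample quantile (simple order-statistic definition)."""
    ordered = sorted(values)
    index = min(len(ordered) - 1, max(0, math.ceil(tau * len(ordered)) - 1))
    return ordered[index]


def gaussian_kde_at(values, point, bandwidth):
    """Gaussian kernel density estimate of the sample, evaluated at one point."""
    norm = 1.0 / (len(values) * bandwidth * math.sqrt(2.0 * math.pi))
    return norm * sum(math.exp(-0.5 * ((point - v) / bandwidth) ** 2) for v in values)


def rif_quantile(values, tau, bandwidth):
    """RIF(y; q_tau) = q_tau + (tau - 1[y <= q_tau]) / f(q_tau)."""
    q = empirical_quantile(values, tau)
    density = gaussian_kde_at(values, q, bandwidth)
    return [q + (tau - (1.0 if v <= q else 0.0)) / density for v in values]


# Simulated scores (hypothetical): mean 500, standard deviation 100.
random.seed(0)
scores = [random.gauss(500.0, 100.0) for _ in range(1000)]

# Rule-of-thumb bandwidth (an assumption, not the paper's choice).
bandwidth = 1.06 * statistics.stdev(scores) * len(scores) ** (-1 / 5)

q10 = empirical_quantile(scores, 0.10)
rif = rif_quantile(scores, 0.10, bandwidth)
mean_rif = sum(rif) / len(rif)

# The RIF takes one value for scores at or below the quantile and another
# above it; its sample mean is approximately the quantile itself.
print(round(q10, 1), round(mean_rif, 1))
```

Regressing `rif` on the computer-use indicators and controls, after removing school means to account for school fixed effects, would then give estimates interpreted as associations with the unconditional 10th percentile of the score distribution.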

Tables 4 and 5 show that the relationship between computer use at school and test scores varies between quantiles. Background controls and school fixed effects are included in all the regressions. Each quantile of the test score distribution is represented by one column. The result is in line with the previous finding that daily use in school is negatively related to test scores. Here the same is found for daily use in both years, both subjects, and across all percentiles. In 2011, for example, a pupil in the 10th percentile who used a computer every day scores 62 points lower in mathematics than a non-user. The estimate for daily computer use is, by contrast, less negative in the 90th percentile: a pupil in the 90th percentile scores only 12 points lower than a pupil who never used a computer in school. The same pattern for daily use also appears for the science scores in 2011 and for both subjects in 2015. The results also show that all computer use in school is negatively associated with test scores for low-performing students. For both subjects and years, the associations are negative for all frequencies in the 10th percentile, and the associations are significant except for monthly use in 2015. Furthermore, the standard errors are larger in the lower part of the distribution, indicating that the estimates are less precise. The estimates in the 50th and 90th percentiles are in most cases positive and significant but small in size.

Table 4. Unconditional quantile regression on computer use at school and at home and test scores in mathematics and science 2011

Table 5. Unconditional quantile regression on computer use at school and at home and test scores in mathematics and science 2015

The results for computer use at school do not apply to computer use at home. The pupils who benefit most from computer use at home are in the 10th or 50th percentile. Overall, computer use at home is more positively associated with test scores in 2011 than in 2015, at least when a computer is used monthly or weekly. Daily computer use and test scores are in most cases negatively associated for both high- and low-achieving pupils. Still, the negative association is not as large as for daily computer use at school. The largest negative estimate is −19 points, compared to estimates of −72 points for daily school use.

In conclusion, the results show that the estimates for computer use vary in different parts of the test score distribution, suggesting that the association between computer use at school and test scores is more negative among low-performing pupils than among high-performing pupils. Daily computer use at school is negatively associated with test scores, but the negative association is smaller among high-achieving pupils. This does not hold for computer use at home, where the estimates in the middle and lower end of the score distribution are more positively associated with computer use. The test scores in the 90th percentile are also positively associated with monthly computer use, and the negative association is most evident for weekly and daily home use in 2015.

6. Conclusion

This paper examines the relationship between computer use and test scores among 10-year-old pupils in TIMSS 2011 and 2015. It analyses the impact of computers by including a large set of background controls for determinants that influence pupils’ performance, as well as school fixed effects to control for unobserved heterogeneity between schools. This study finds little evidence of a positive association between computer use in schools and pupils’ learning, except in 2011 for moderate use and among high-performing pupils. Monthly and weekly computer use is positively associated with test scores, but the associations are mostly weak. The results could be affected by selection, but given the extensive controls they can be seen as a piece of evidence suggesting that one cannot simply implement computers in education and expect achievement to increase. Consistent with previous studies (see e.g. Beuermann et al., Citation2015; Carter et al., Citation2017; Yanguas, Citation2020), this study finds mostly no or weak evidence that computers in education increase achievement. The evidence for positive effects of using computers reported in previous studies (e.g. Barrow et al., Citation2009; Muralidharan et al., Citation2019) is limited to cases where computers have been implemented in aided computer sessions, and even then the size of the positive effects is relatively small. The results of this paper also suggest that daily use of computers in school might affect test scores negatively, if computers are used as they were used in 2011 and 2015.

The relationship between computers and test scores varies by frequency of use and location. School use is more negatively associated with test scores than home use, with some groups achieving 70 points (about 0.7 standard deviations) less than pupils who never use a computer. On the other hand, if computers are used in moderate amounts, performance is positively related to home use. The estimated positive relation is, however, small. Analysis of gender and country subsamples shows similar trends, with gender and country seeming to matter relatively little.

More interesting is that the association between computer use at school and test scores varies in different parts of the test score distribution. The results show larger negative estimates of computer use at school among low-performing pupils than among high-performing pupils, while the opposite holds for home use. The positive relation of home use at the bottom of the distribution is, however, not large and is non-significant in many cases. Overall, the test score estimates are often no larger than ten points, which in a TIMSS context corresponds to 0.10 of a standard deviation of the test score distribution. Even if the observed difference is not substantial, there is a distinct pattern of frequent use of computers in school being negatively associated with test scores, especially among low- and median-performing students.

The positive association of computer use at home is weaker in this paper than in previous studies that used similar methods on older pupils. The inclusion of school fixed effects seems to be one reason for the observed results. A contributing explanation may be that fourth graders do not benefit from computers in the same way as eighth graders. Pupils at this age may need training with traditional methods to acquire the basic knowledge that will allow them to use a computer more efficiently later in their education. Another explanation might be that the distraction effect is more important among young pupils. It may be that younger pupils are more likely to use computers for entertainment purposes rather than educational activities, as Beuermann et al. (Citation2015) observed.

Note that the focus of this paper is the association between computer use and test scores, not the potential of computers. The results show a negative association between daily computer use and test scores given how computers were used in 2011 and 2015. What is shown in this study is that computer use at school was generally associated with lower test scores in mathematics and science, but the results do not provide causal evidence on the effect of computers in education. The findings from this study do not, however, reject the possibility that computers have a positive impact on educational achievement if they are optimally implemented. Therefore, the result is not in conflict with the results of studies that evaluate the effect of computers by studying one-to-one laptop programmes or computer sessions guided by teachers. The results from this study reflect the actual computer use by pupils, which perhaps explains why they are more in line with studies that analyse the impact of increased computer access than with studies of the effect of guided computer sessions.

Future studies could focus on finding methods to estimate the causal effect of computer use on achievement when computers are implemented on a larger scale, or investigate the difference between simply increasing computer access and increasing computer access within a monitored programme. It would also be interesting to determine the role of computers in subjects other than mathematics and science.

Acknowledgments

The author would like to thank Tomas Raattamaa, Olle Westerlund, David Granlund, Magnus Wikström, Nafsika Alexiadou and two anonymous referees for helpful comments and suggestions. Financial support by Handelsbanken (ref. P2016-0299) is gratefully acknowledged.

Disclosure statement

No potential conflict of interest was reported by the author.

Additional information

Notes on contributors

Linn Karlsson

Linn Karlsson is a PhD student at Umeå University, where she furthers her research in Economics of Education. Her interests include ICT in education, admissions and adult education.

Notes

1. The relationship between computer use and education has been investigated by a number of other studies. See, e.g. Banerjee, Cole, Duflo, and Linden (Citation2007), Cristia, Ibarrarán, Cueto, Santiago, and Severín (Citation2012), Leuven, Lindahl, Oosterbeek, and Webbink (Citation2007), Machin, McNally, and Silva (Citation2007) and Spiezia (Citation2011).

2. It is important to make a distinction between causal and non-causal relationships. Non-causal relationships mistaken for causal effects can lead to false or misleading conclusions, as described by DiNardo and Pischke (Citation1997).

3. Pupils with special needs are excluded from the TIMSS study in the sampling process. Special needs pupils include children with physical, mental or severe learning disabilities (LaRoche, Joncas, & Foy, Citation2016).

4. See Foy et al. (Citation2015) for a detailed description of plausible values and how to apply plausible values in regression analysis.

5. For example, a student is considered to have many resources if there are at least 100 books and 25 children's books at home and at least one parent has a university degree and a professional occupation.

6. Pv is a Stata module written by Kevin Macdonald, designed for estimation on large-scale international assessments with plausible values. For a further description of the module, see Macdonald (Citation2008).

7. TIMSS uses a two-stage cluster design which allows the regression errors to be correlated within schools and within classes. Appropriate weights and variance estimation techniques (such as jackknife repeated replication) are required to get accurate point estimates and standard errors (LaRoche et al., Citation2016).

8. The computer-use estimates from the specification with country fixed effects and from the specification with school fixed effects are tested for significant differences. Of the 24 estimates for computer use, 16 are significantly affected by using school fixed effects instead of country fixed effects. The estimates that are not statistically different at p < 0.05 are daily and monthly computer use for both subjects and both years.

9. The Stata command xtrifreg allows unconditional quantile regression (UQR) with a large number of fixed effects. It does not support the use of several plausible values or student weights. It was written by Borgen (Citation2016) and is a version of the UQR developed by Firpo et al. (Citation2009). See Killewald and Bearak (Citation2014) for a formal econometric description.

References

- Banerjee, A. V., Cole, S., Duflo, E., & Linden, L. (2007). Remedying education: Evidence from two randomized experiments in India. The Quarterly Journal of Economics, 122(3), 1235–1264.

- Barrow, L., Markman, L., & Rouse, C. E. (2009). Technology’s Edge: The Educational Benefits of Computer-Aided Instruction. American Economic Journal: Economic Policy, 1(1), 52–74.

- Bedard, K., & Dhuey, E. (2006). The persistence of early childhood maturity: International evidence of long-run age effects. The Quarterly Journal of Economics, 121(4), 1437–1472.

- Beuermann, D. W., Cristia, J., Cueto, S., Malamud, O., & Cruz-Aguayo, Y. (2015). One laptop per child at home: Short-term impacts from a randomized experiment in Peru. American Economic Journal: Applied Economics, 7(2), 53–80.

- Black, S. E., Devereux, P. J., & Salvanes, K. G. (2011). Too young to leave the nest? The effects of school starting age. The Review of Economics and Statistics, 93(2), 455–467.

- Borah, B. J., & Basu, A. (2013). Highlighting differences between conditional and unconditional quantile regression approaches through an application to assess medication adherence. Health Economics, 22(9), 1052–1070.

- Borgen, N. T. (2016). Fixed effects in unconditional quantile regression. The Stata Journal: Promoting communications on statistics and Stata, 16(2), 403–415.

- Bulman, G., & Fairlie, R. W. (2016). Technology and education: Computers, software, and the internet. In E. A. Hanushek, S. Machin, & L. Woessmann (Eds.), Handbook of the Economics of Education. Amsterdam: Elsevier.

- Carter, S. P., Greenberg, K., & Walker, M. S. (2017). The impact of computer usage on academic performance: Evidence from a randomized trial at the United States Military Academy. Economics of Education Review, 56, 118–132.

- Cascio, E. U., & Schanzenbach, D. W. (2016). First in the class? Age and the education production function. Education Finance and Policy, 11(3), 225–250.

- Cristia, J., Ibarrarán, P., Cueto, S., Santiago, A., & Severín, E. (2012). Technology and child development: Evidence from the one laptop per child program. American Economic Journal: Applied Economics, 9(3), 295–320.

- Dickerson, A., McIntosh, S., & Valente, C. (2015). Do the maths: An analysis of the gender gap in mathematics in Africa. Economics of Education Review, 46, 1–22. https://doi.org/10.1016/j.econedurev.2015.02.005

- DiNardo, J. E., & Pischke, J.-S. (1997). The returns to computer use revisited: Have pencils changed the wage structure too? The Quarterly Journal of Economics, 112(1), 291–303.

- Dustmann, C., Frattini, T., & Lanzara, G. (2012). Educational achievement of second-generation immigrants: An international comparison. Economic Policy, 27(69), 143–185.

- Falck, O., Mang, C., & Wössmann, L. (2018). Virtually no effect? Different uses of classroom computers and their effect on student achievement. Oxford Bulletin of Economics and Statistics, 80(1), 1–38.

- Fiorini, M. (2010). The effect of home computer use on children’s cognitive and non-cognitive skills. Economics of Education Review, 29(1), 55–72.

- Firpo, S., Fortin, N. M., & Lemieux, T. (2009). Unconditional quantile regressions. Econometrica, 77(3), 953–973.

- Foy, P., Martin, M. O., Mullis, I. V. S., Yin, L., Cotter, K., & Liu, J. (2015). Reviewing the TIMSS Advanced 2015 Achievement Item Statistics. In Methods and Procedures in TIMSS Advanced 2015 (pp. 11.1–11.19). Retrieved from https://timssandpirls.bc.edu/publications/timss/2015-a-methods/chapter-11.html

- Fredriksson, P., & Öckert, B. (2014). Life-cycle Effects of Age at School Start. The Economic Journal, 124(579), 977–1004.

- Fryer Jr, R. G., & Levitt, S. D. (2010). An empirical analysis of the gender gap in mathematics. American Economic Journal: Applied Economics, 2(2), 210–240.

- Fuchs, T., & Wössmann, L. (2004). Computers and student learning: Bivariate and multivariate evidence on the availability and use of computers at home and at school. Brussels Economic Review, 2004(47), 359–386.

- Hall, C., Lundin, M., & Sibbmark, K. (2019). A laptop for every child? The impact of ICT on educational outcomes (Working Paper Series 2019:26). IFAU - Institute for Evaluation of Labour Market and Education Policy.

- Hanushek, E. A. (1979). Conceptual and empirical issues in the estimation of educational production functions. The Journal of Human Resources, 14(3), 351–388.

- Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Routledge.

- Hernandez, D. J. (2011). Double Jeopardy: How Third-Grade Reading Skills and Poverty Influence High School Graduation. Annie E. Casey Foundation.

- Hull, M., & Duch, K. (2019). One-to-One technology and student outcomes: Evidence from Mooresville’s digital conversion initiative. Educational Evaluation and Policy Analysis, 41(1), 79–97.

- Jerrim, J., Lopez-Agudo, L. A., Marcenaro-Gutierrez, O. D., & Shure, N. (2017). What happens when econometrics and psychometrics collide? An example using the PISA data. Economics of Education Review, 61, 51–58.

- Killewald, A., & Bearak, J. (2014). Is the motherhood penalty larger for low-wage women? A comment on quantile regression. American Sociological Review, 79(2), 350–357.

- LaRoche, S., Joncas, M., & Foy, P. (2016). Sample Design in TIMSS 2015. In Methods and Procedures in TIMSS 2015 (pp. 3.1–3.37). Retrieved from http://timss.bc.edu/publications/timss/2015-methods/chapter-3.html

- Leuven, E., Lindahl, M., Oosterbeek, H., & Webbink, D. (2007). The effect of extra funding for disadvantaged students on achievement. The Review of Economics and Statistics, 89(4), 721–736.

- Macdonald, K. (2008). PV: Stata module to perform estimation with plausible values (Statistical Software Components S456951). Boston College Department of Economics, revised 03 Feb 2019.

- Machin, S., McNally, S., & Silva, O. (2007). New Technology in Schools: Is There a Payoff? The Economic Journal, 117(522), 1145–1167.

- Mühlenweg, A. M., & Puhani, P. A. (2010). The evolution of the school-entry age effect in a school tracking system. Journal of Human Resources, 45(2), 407–438.

- Mullis, I.V.S. & Martin, M.O. (Eds.) (2013). TIMSS 2015 Assessment Frameworks. Retrieved from Boston College, TIMSS & PIRLS International Study Center website: http://timssandpirls.bc.edu/timss2015/frameworks.html.

- Martin, M. O., Mullis, I. V. S., & Hooper, M. (Eds.). (2016). Methods and Procedures in TIMSS 2015. Retrieved from Boston College, TIMSS & PIRLS International Study Center website: http://timssandpirls.bc.edu/publications/timss/2015-methods.html.

- Muralidharan, K., Singh, A., & Ganimian, A. J. (2019). Disrupting education? Experimental evidence on technology-aided instruction in India. American Economic Review, 109(4), 1426–1460.

- Organisation for Economic Co-operation and Development. (2006). Are students ready for a technology-rich world?: What PISA studies tell us. OECD Publishing.

- Organisation for Economic Co-operation and Development. (2010). Are the New Millennium Learners Making the Grade?: Technology Use and Educational Performance in PISA 2006. OECD Publishing.

- Robinson, J. P., & Lubienski, S. T. (2011). The development of gender achievement gaps in mathematics and reading during elementary and middle school: Examining direct cognitive assessments and teacher ratings. American Educational Research Journal, 48(2), 268–302. https://doi.org/10.3102/0002831210372249

- Rodríguez-Planas, N., & Nollenberger, N. (2018). Let the girls learn! It is not only about math… it's about gender social norms. Economics of Education Review, 62, 230–253. https://doi.org/10.1016/j.econedurev.2017.11.006

- Schütz, G., Ursprung, H. W., & Wössmann, L. (2008). Education policy and equality of opportunity. Kyklos, 61(2), 279–308.

- Spiezia, V. (2011). Does Computer Use Increase Educational Achievements? Student-level Evidence from PISA. OECD Journal: Economic Studies, 2010(1), 1–22.

- Von Davier, M., Gonzalez, E., & Mislevy, R. (2009). What are plausible values and why are they useful. IERI Monograph Series, 2(1), 9–36.

- Wössmann, L. (2016). The Importance of School Systems: Evidence from International Differences in Student Achievement. Journal of Economic Perspectives, 30(3), 3–32.

- Wu, M. (2005). The role of plausible values in large-scale surveys. Studies in Educational Evaluation, 31(2–3), 114–128.

- Yanguas, M. L. (2020). Technology and educational choices: Evidence from a one-laptop-per-child program. Economics of Education Review, 76, 101984.

Appendix A.

Table A1. Questionnaire questions

Table A2. Missing values in each variable

Table A3. Participating countries in TIMSS 2011 and 2015

Table A4. Categorical variables

Table A5. Continuous variables

Table A6. Resources at home and computer use

Table A7. OECD membership and computer use

Table A8. Gender and computer use

Table A9. Correlation between computer use in school and at home

Appendix B.

Table B1. Gender-specific regressions

Table B2. OECD and non-OECD country regressions

Table B3. Standard OLS regression