Abstract

Policymakers have demonstrated an interest in using measures of student achievement to inform high-stakes teacher personnel decisions, but the idea of using student outcomes as a teacher performance measure is complex to implement for a variety of reasons, not least of which is the fact that there is no universally agreed-upon statistical methodology for translating student achievement into a measure of teacher performance. In this article, we use statewide data from North Carolina to evaluate different methodologies for translating student test achievement into measures of teacher performance at the elementary level. In particular, we focus on the extent to which teacher effect estimates generated from different modeling approaches differ, and on the extent to which classroom-level characteristics predict these differences. We find that estimates from models that include only lagged achievement scores and student background characteristics are highly correlated with estimates from specifications that also include classroom characteristics, while estimates from value-added models (VAMs) with school fixed effects are less highly correlated with the others. Teacher effectiveness estimates based on median student growth percentiles are highly correlated with estimates from VAMs that include student background characteristics, despite the fact that the two methods for estimating teacher effectiveness are, at least conceptually, quite different. But even when the correlations between performance estimates generated by different models are quite high for the workforce as a whole, there are still sizable differences in teacher rankings generated by different models that are associated with the composition of students in a teacher's classroom.

1. STUDENT ACHIEVEMENT AS A TEACHER PERFORMANCE MEASURE

Policymakers appear increasingly inclined to use measures of student achievement, often state assessment results, to inform high-stakes teacher personnel decisions. This trend has been spurred on by the federal government's Teacher Incentive Fund and Race to the Top (RttT) grant programs, each of which urges states and localities to tie teacher performance to compensation, renewal, and tenure.[1] There are good reasons for this: current teacher evaluation systems in most school districts appear to be far from rigorous.[2] The schemes used in the vast majority of school systems in the country place nearly all teachers in the top-ranked performance category. A recent study (Weisburg et al. 2009), for instance, showed that more than 99% of teachers in districts using binary ratings were rated satisfactory and about 95% of teachers received one of the top two ratings in districts using a broader range of ratings. As Secretary of Education Arne Duncan put it, “Today in our country, 99% of our teachers are above average” (Gabriel 2010).

This “Lake Wobegon Effect,” where nearly all teachers are evaluated as above average, flies in the face of considerable empirical evidence that teachers differ substantially from each other in effectiveness.[3] It also does not reflect the assessment of the teacher workforce by administrators or other teachers (Tucker 1997; Jacob and Lefgren 2008; Weisburg et al. 2009). Evaluation systems that fail to recognize the true differences among teachers greatly hamper the ability of policymakers to make well-informed decisions about the teaching workforce, the key education resource over which they have control.

In theory, most human resource policies in schools could, and arguably should, depend on teacher performance evaluations. There are in fact multiple avenues through which performance measures might be used to improve the quality of the teacher workforce and hence student achievement. Performance evaluations could, for instance, help identify teachers who are struggling and would benefit from professional development or mentoring (Darling-Hammond et al. 2012), or help identify teacher characteristics that are aligned with effectiveness, which in turn might improve the screening and hiring processes in many districts (Rockoff and Speroni 2011). And, as noted above, there is a current push to use such evaluations for higher-stakes purposes: to financially reward effectiveness under pay-for-performance systems (Podgursky and Springer 2007), or for selective retention purposes such as helping determine tenure (Goldhaber and Hansen 2010) and layoffs (Boyd et al. 2010; Goldhaber and Theobald 2011). None of these uses is possible, however, if there is no variation in measured performance.

But while conceptually simple, the idea of using student outcomes as a teacher performance measure is difficult to implement for a variety of reasons, not least of which is the fact that there is no universally agreed-upon statistical methodology for translating student achievement measures into teacher performance.[4] Under RttT, a number of state-level evaluation systems that use student growth on standardized tests as a metric of teacher job performance are currently being implemented.[5] The fact that different states will be using different methods to translate student test scores into a measure of teacher performance raises the question of whether the choice of model matters. In this article, we extend the literature on the implications of model specification for teacher performance rankings using statewide data from North Carolina, and we explore the extent to which classroom-level characteristics predict between-model differences in teacher rankings.

Our findings are consistent with research that finds high correlations in value-added teacher performance rankings regardless of whether models include student or classroom-level covariates. Correlations between the aforementioned models and those that include school fixed effects are far lower. Much less is known about how performance rankings compare between value-added measures and performance estimates based on median student growth percentiles (SGPs). Interestingly, we find these SGP-based measures of teacher performance to be highly correlated with estimates from value-added models (VAMs) that include lagged scores and student background characteristics, despite the fact that the two methods for estimating teacher effectiveness are, at least conceptually, quite different. But, even when the correlations between performance estimates generated by different models are quite high for the workforce as a whole, there are still sizable differences in teacher rankings generated by different models that are associated with the composition of students in a teacher's classroom.

2. USING STUDENT TEST PERFORMANCE AS A GAUGE OF TEACHER EFFECTIVENESS

There are two broad categories of models that states use to associate student growth with teacher effectiveness: SGP models (also commonly known as the “Colorado Growth Model”) and VAMs.[6] The primary distinctions between the two approaches are the estimation methodology and the control variables included in each model.

SGPs, by design, are descriptive measures of growth that provide an easily communicated metric to teachers and stakeholders. Betebenner, an advocate of SGPs and the author of the SGP package that calculates them, warns that they are not intended to be aggregated for the purpose of making causal inferences about the reasons for classroom-level differences in student growth (Betebenner 2007).[7] Because they do not include covariates, such as receipt of free or reduced price lunch, SGPs do not explicitly account for differences in student background. This omission makes it difficult to make strong causal claims. Nevertheless, SGPs may implicitly account for differences in student backgrounds by using a functional form that compares students who are quite similar to each other in terms of baseline achievement. Although many states have committed to using SGPs as a component of teacher performance evaluations, few studies have compared effectiveness estimates based on SGPs with other measures.

VAMs have long been used by economists to assess the effects of schooling attributes (class size, teacher credentials, etc.) on student achievement (Hanushek 1979, 1986). More recently, they have been used in an attempt to identify the contributions of individual teachers to the learning process (Ballou, Sanders, and Wright 2004; McCaffrey et al. 2004; Gordon, Kane, and Staiger 2006). In VAMs, teacher performance estimates are generally derived in one step.[8] Furthermore, unlike SGPs, VAM performance estimates are often intended to be interpreted as causal, because the models used to estimate them typically include student covariates. The academic literature, however, is divided concerning the extent to which different VAM specifications can be used to generate unbiased estimates of the effectiveness of individual teachers using nonexperimental data (Ballou, Sanders, and Wright 2004; McCaffrey et al. 2004; Kane and Staiger 2008; Rothstein 2010; Chetty, Friedman, and Rockoff 2011; Koedel and Betts 2011; Goldhaber and Chaplin 2012).[9]

The question of whether VAM or SGP estimates of teacher performance are in fact unbiased measures of true teacher performance is clearly important, but it is not the issue we address. Instead, this article focuses on the extent to which estimates from different models are comparable.[10] A small body of literature compares estimates of teacher performance generated from different VAM specifications.[11] In general, teacher effects from models including and excluding classroom-level variables tend to be highly correlated with one another (r > 0.9) (Ballou, Sanders, and Wright 2004; Harris and Sass 2006; Lockwood et al. 2007), while models including and excluding school fixed effects yield estimates with correlations close to 0.5 (Harris and Sass 2006). Harris and Sass (2006) also found lower correlations between estimates from student fixed effects and student random effects models (r = 0.45) and between student fixed effects models and models with student characteristics (r = 0.39). Research has also found that VAM estimates are more sensitive to the choice of outcome measure than to model specification (Lockwood et al. 2007; Papay 2010). At the high school level, there is less agreement between traditional models that use a prior-year test score and those that use same-year, cross-subject achievement scores to control for student ability (Goldhaber et al. 2011).

While the above research does suggest relatively high levels of overall agreement among various VAMs, even strong correlations can result in cross-specification differences in teacher classifications that could be problematic from a policy perspective. For instance, one of the criticisms of the Los Angeles Times’ well-publicized release of teacher effectiveness estimates connected to teacher names is that the estimates were sensitive to model specification. Briggs and Domingue (2011) compared teachers’ ratings from a specification they argue is consistent with the one used to estimate effectiveness for the Times to ratings from specifications that include additional control variables.[12] They reported correlations between the two specifications of 0.92 in math and 0.79 in reading. However, when teachers were classified into effectiveness quintiles, only about 60% of teachers retained the same effectiveness category in math, and only 46% did so in reading (Briggs and Domingue 2011). These findings are not surprising to statisticians, but teachers’ unions view the results of value-added analysis as “inconsistent and inconclusive” (Los Angeles Times 2010).

Another concern for policymakers who wish to tie teacher performance to student test scores is the stability of teacher effect estimates over time. Models may generate unbiased estimates of teacher effectiveness but still be unstable from one year to the next. Using a large dataset of elementary and middle school math tests in Florida, McCaffrey et al. (2009) estimated several VAMs and found year-to-year correlations generally ranging from 0.2 to 0.5 in elementary schools and from 0.3 to 0.6 in middle schools. Comparing teacher rankings, they found that about a third of teachers ranked in the top quintile were again in the top quintile the next year. Goldhaber and Hansen (2013) performed similar analyses using statewide elementary school data from North Carolina over a 10-year period. They found year-to-year correlations of teacher effectiveness estimates ranging from 0.3 to 0.6 and concluded that these magnitudes are consistent with the stability of performance measures in occupations other than teaching. For instance, the year-to-year correlation in batting averages for professional baseball players, a metric used by managers for high-stakes decisions, is about 0.36 (Glazerman et al. 2010; MET 2010).[13]

Despite the increasing popularity of SGPs, there are few formal studies comparing SGPs to other models that produce teacher effect estimates. Goldschmidt, Choi, and Beaudoin (2012) focused on differences between SGPs and VAMs at the school level and found that school effects estimated with SGPs were most highly correlated with effects generated by simple panel growth models and by covariate-adjusted VAMs with school random effects, with correlations between 0.23 and 0.91.[14] They also found a high level of agreement between SGPs and VAMs with random effects when schools were divided into quintiles of effectiveness: the two measures place elementary schools in the same performance quintile 55% of the time and within one quintile 95% of the time. In addition, school-level effectiveness estimates based on SGPs are less stable over time (with correlations ranging between 0.32 and 0.46) than estimates generated by VAMs with school fixed effects (with correlations ranging between 0.71 and 0.81).

Ehlert et al. (2012) also investigated school-level differences between various models and found that estimates from school-level median SGPs, single-stage fixed effects VAMs, and proportional two-stage VAMs had correlations of about 0.85. Despite this overall level of agreement, they found meaningful differences between the estimates from SGP and VAM models for the most advantaged and disadvantaged schools. For example, only 4% of the schools in the top quartile according to the SGP model are high-poverty schools, while 15% of the schools in the top quartile according to the two-stage VAMs are considered high poverty. Furthermore, schools that fall into the top quartile of effectiveness according to the proportional two-stage method but outside the top quartile according to the SGP model have an aggregate free/reduced price lunch rate of about 70%, whereas schools in the top quartile according to the SGP model but outside the top quartile according to the two-stage VAM have an aggregate free/reduced price lunch rate of about 33%.

We found only two studies that compare SGP measures of teacher performance to measures generated by other types of models. While not the focus of their study, Goldhaber and Walch (2012) noted correlations between a covariate-adjusted VAM and median SGPs of roughly 0.6 in reading and 0.8 in math. Wright (2010) compared teacher effectiveness estimates based on SGPs to those generated by the EVAAS multivariate response model. While Wright does not report direct correlations between the two models, he does find that median SGPs are more strongly negatively correlated with classroom poverty level than are the corresponding EVAAS estimates.

3. DATA AND ANALYTIC APPROACH

3.1. North Carolina Data

The data we use for this analysis are managed by Duke University's North Carolina Education Research Data Center (NCERDC) and consist of administrative records of all teachers and students in North Carolina. This dataset includes student standardized test scores in math and reading, student background information, and teacher employment records and credentials from school years 1995–1996 through 2008–2009.[15]

While the dataset does not explicitly match students to their classroom teachers, it does identify the person who administered each student's end-of-grade tests. There is good reason to believe that at the elementary level most of the listed proctors are, in fact, classroom teachers. To increase confidence in our teacher–student link, we take several precautionary measures. First, we use a separate personnel file to eliminate proctors who are not designated as classroom teachers, or who are listed as teaching a grade that is inconsistent with the grade level of the proctored exam. We also restrict the analytic sample to include only self-contained, nonspecialty classes in grades 3–5 and only include classrooms with at least 10 students for whom we can calculate an SGP and no more than 29 students (the maximum number of elementary students per classroom in North Carolina). We estimate two-year effects for adjacent years, thus requiring that teachers be in the sample for at least two consecutive years. In addition to these teacher-level restrictions, we also exclude students missing prior-year test scores from the analytic sample, since prescores are required in the models we use to estimate teacher effectiveness.
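The classroom- and teacher-level restrictions can be expressed as a short filtering routine. The sketch below is illustrative only: the record layout and `restrict_sample` are hypothetical, class size is counted before students without prior scores are dropped (an assumption on our part), and the personnel-file checks on proctors are omitted.

```python
from collections import defaultdict


def restrict_sample(records, min_sgp_students=10, max_class_size=29):
    """Apply sample restrictions like those described above.

    `records` is a hypothetical list of student dicts with keys 'teacher_id',
    'year', 'has_prior_score', and 'has_sgp'. Returns {(teacher_id, year): students}.
    """
    classrooms = defaultdict(list)
    for rec in records:
        classrooms[(rec["teacher_id"], rec["year"])].append(rec)

    valid = {}
    for key, students in classrooms.items():
        n_with_sgp = sum(1 for s in students if s["has_sgp"])
        if n_with_sgp >= min_sgp_students and len(students) <= max_class_size:
            # Students without a prior-year score cannot enter the models.
            valid[key] = [s for s in students if s["has_prior_score"]]

    # Two-year estimates need at least two consecutive valid years per teacher.
    years = defaultdict(set)
    for teacher, year in valid:
        years[teacher].add(year)
    return {
        (t, y): s for (t, y), s in valid.items()
        if y - 1 in years[t] or y + 1 in years[t]
    }
```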

These restrictions leave us with a sample of 20,844 unique teachers and 76,518 unique teacher-year observations spanning 13 years. Descriptive statistics for the restricted and unrestricted teacher samples are displayed in Table 1. We find the restricted and unrestricted samples to be similar in terms of observable characteristics.

Table 1. Descriptive statistics for restricted and unrestricted samples

Table 2. Descriptions of teacher effectiveness measures

3.2. Methods of Estimating Teacher Performance

In our analysis, we explore the extent to which four different teacher performance estimates compare to one another. These are generated using SGPs or VAMs as we describe below.

Student Growth Percentiles

SGPs are generated using a nonparametric quantile regression model in which student achievement in one period is assumed to be a function of one or more years of prior achievement (Koenker 2005). Quantile regression is similar to OLS, but instead of fitting the conditional mean of the dependent variable given the independent variables, it fits conditional quantiles of the dependent variable (Castellano 2011). The calculation of SGPs employs polynomial splines (specifically B-splines, or “basis splines”) as basis functions, which produce smooth, nonlinear regression lines that model the nonlinearity, heteroscedasticity, and skewness of test score data over time.
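The difference between fitting a conditional mean and a conditional quantile lies in the loss being minimized. A minimal sketch, with no covariates and a brute-force search over the observed values, shows that minimizing the check (pinball) loss recovers a quantile rather than a mean. Real quantile regression software instead solves a linear program and includes covariates such as the spline terms described here; the function names are hypothetical.

```python
def check_loss(residual, tau):
    """Check (pinball) loss: tau * r for r >= 0 and (tau - 1) * r for r < 0."""
    return residual * (tau - (residual < 0))


def best_constant(scores, tau):
    """Return the observed score that minimizes total check loss.

    With no covariates, quantile regression reduces to this problem, and the
    minimizer is a tau-th sample quantile; OLS would return the mean instead.
    """
    return min(scores, key=lambda c: sum(check_loss(y - c, tau) for y in scores))


scores = [1, 2, 3, 4, 100]
print(best_constant(scores, 0.5))  # 3 (the median, unaffected by the outlier)
print(sum(scores) / len(scores))   # 22.0 (the mean, pulled toward 100)
```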

Calculation of a student's SGP is based upon the conditional density associated with the student's score at time t, using prior scores as conditioning variables (Betebenner 2009).[16] Given assessment scores for t occasions, t ≥ 2, the τth conditional quantile for the current test score, A_t, is based upon the previous test scores, A_{t−1}, A_{t−2}, …, A_1:

$$Q_{A_t}\left(\tau \mid A_{t-1}, A_{t-2}, \ldots, A_1\right) = \sum_{m=1}^{t-1} \sum_{k=1}^{7} \phi_{mk}\left(A_m\right) \beta_{mk}(\tau) \tag{1}$$

The B-spline-based quantile regression procedure is used to accommodate nonlinearity, heteroscedasticity, and skewness in the conditional density of the dependent variable. In Equation (1), the seven cubic B-spline basis functions, φ_{mk}, k = 1, 2, …, 7, piece cubic polynomials together to “smooth” irregularities in each previous test measure, m = 1, …, t − 1, and β_{mk}(τ) are the corresponding coefficients for quantile τ (Betebenner 2009).

When computing SGPs, we allowed the function to automatically calculate knots, boundaries, and LOSS.HOSS values; by default the program places knots at the 0.2, 0.4, 0.6, and 0.8 quantiles.[17] We also used the program's default arguments, which produce rounded SGP values from 1 to 99 for each student. Considering the limitations of how SGPs are calculated by the SGP package in the statistical program R, we generate SGPs in two ways: using a maximum of three prior scores, and using only one prior score.[18] However, because the teacher effectiveness estimates are very similar regardless of whether we use a single year or multiple years (r = 0.96 for math; r = 0.93 for reading), we only report the results for SGPs based on a single prior-year score.
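The SGP package estimates Equation (1) with B-spline quantile regressions. As a much cruder stand-in, swapped in purely for illustration and not the package's method, the sketch below captures the core idea of comparing students with similar baseline achievement: it ranks each student's current score among students in the same prior-score bin and returns values from 1 to 99. The function names and the `n_bins` parameter are hypothetical.

```python
import bisect


def percentile_1_99(value, peers):
    """Percent of peers scoring below `value` (ties count half), clipped to 1..99."""
    below = sum(p < value for p in peers) + 0.5 * sum(p == value for p in peers)
    return min(99, max(1, round(100 * below / len(peers))))


def binned_growth_percentiles(prior, current, n_bins=10):
    """Crude SGP stand-in: rank each student's current score among students
    whose prior score falls in the same quantile bin of the prior distribution."""
    ordered = sorted(prior)
    edges = [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]
    bins = [bisect.bisect_right(edges, p) for p in prior]
    peers = {}
    for b, y in zip(bins, current):
        peers.setdefault(b, []).append(y)
    return [percentile_1_99(y, peers[b]) for b, y in zip(bins, current)]


prior = [0, 0, 0, 0, 10, 10, 10, 10]
current = [1, 2, 3, 4, 11, 12, 13, 14]
print(binned_growth_percentiles(prior, current, n_bins=2))
```

In this toy example, students who hold the same position relative to their baseline peers receive the same percentile regardless of their starting level, which is the sense in which SGPs condition on prior achievement.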

Student achievement, as measured by SGPs, is translated into teacher performance by taking the median SGP, which we refer to as the median growth percentile (MGP), across students for each teacher. We generate two-year teacher effectiveness estimates by pooling each teacher's students over two consecutive years and finding the MGP for that time period.[19] We compare the MGP estimates to effectiveness estimates derived from various VAM specifications.
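Pooling two consecutive years and taking the median can be sketched in a few lines. The input layout and `two_year_mgps` are hypothetical, and we assume overlapping two-year windows when a teacher appears in three or more consecutive years, which may not match the authors' construction.

```python
from collections import defaultdict
from statistics import median


def two_year_mgps(rows):
    """rows: iterable of (teacher_id, year, sgp) tuples.

    Returns {(teacher_id, first_year): MGP}, pooling a teacher's students
    from `first_year` and the following year before taking the median.
    """
    sgps = defaultdict(list)
    for teacher, year, sgp in rows:
        sgps[(teacher, year)].append(sgp)
    mgps = {}
    for (teacher, year), current in sgps.items():
        following = sgps.get((teacher, year + 1))  # .get does not insert keys
        if following is not None:
            mgps[(teacher, year)] = median(current + following)
    return mgps


rows = [("A", 2005, 10), ("A", 2005, 50), ("A", 2006, 60), ("A", 2006, 90), ("B", 2005, 40)]
print(two_year_mgps(rows))  # {('A', 2005): 55.0}
```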

Value-Added Models

A typical method for estimating teacher effectiveness includes a fixed effect for each teacher spanning multiple time periods (in our case, based on two years of student–teacher matched data):

$$A_{ijt} = \alpha A_{i(t\,\text{prior})} + X_{it}\beta + \kappa_{jt}\gamma + \tau_{jt} + \epsilon_{ijt} \tag{2}$$

where i indexes students, j indexes teachers, and t indexes time period. In the above general formulation, the estimated average teacher effect over each two-year time period, t, is typically derived by regressing achievement at a particular point in time (A_{ijt}) on measures of prior achievement (A_{i(t prior)}), a vector of student background characteristics (X_{it}), a vector of classroom characteristics (κ_{jt}), and teacher fixed effects (τ_{jt}).[20,21] The error term (ϵ_{ijt}) is assumed to be uncorrelated with the predictors in the regression.
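A minimal sketch of estimating a specification like Equation (2) on simulated data, using numpy least squares with explicit teacher dummies. It simplifies under stated assumptions: a single time period, only two student covariates, no classroom characteristics, and made-up data-generating parameters. `teacher_fixed_effects` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def teacher_fixed_effects(y, X, teacher_ids):
    """OLS of achievement y on covariates X plus one dummy per teacher.

    No separate intercept is included, so each dummy coefficient absorbs the
    constant; only differences between teachers are meaningful.
    """
    ids = sorted(set(teacher_ids))
    column = {t: k for k, t in enumerate(ids)}
    dummies = np.zeros((len(y), len(ids)))
    dummies[np.arange(len(y)), [column[t] for t in teacher_ids]] = 1.0
    coef, *_ = np.linalg.lstsq(np.hstack([X, dummies]), y, rcond=None)
    return dict(zip(ids, coef[X.shape[1]:]))


# Simulated data with made-up parameters, for illustration only.
rng = np.random.default_rng(0)
n_teachers, class_size = 50, 100
true_effect = rng.normal(0, 0.2, n_teachers)
teacher = np.repeat(np.arange(n_teachers), class_size)
prior = rng.normal(0, 1, teacher.size)
frl = rng.binomial(1, 0.5, teacher.size)
y = 0.7 * prior - 0.1 * frl + true_effect[teacher] + rng.normal(0, 0.5, teacher.size)

est = teacher_fixed_effects(y, np.column_stack([prior, frl]), teacher.tolist())
print(np.corrcoef(true_effect, [est[t] for t in range(n_teachers)])[0, 1])
```

Explicit dummies keep the sketch transparent but scale poorly; with tens of thousands of teachers, within-teacher demeaning or sparse solvers are the usual alternatives.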

We estimate three variants of Equation (2). The student background VAM includes achievement on math and reading tests from the previous year; a vector of student background characteristics (gender, race/ethnicity, free/reduced price lunch status (FRL), learning disability, limited English proficiency, and parents’ education level); and teacher fixed effects.[22] The classroom characteristics VAM builds on the student background VAM by also including the following classroom-level variables: classroom percentages of FRL, disability, and minority students; the percentage of students whose parents have a bachelor's degree or higher; average prior-year math and reading achievement; and class size.[23] We also estimate a school fixed effects VAM, in which we replace the classroom-level characteristics with school fixed effects. This specification yields estimates identified from within-school variation in student performance/teacher effectiveness.
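One intuition for why school fixed effects change the rankings: when few teachers switch schools, teacher effects in such a model are identified mainly relative to colleagues in the same school. The sketch below mimics that by centering estimates within school. This is an approximation introduced here for intuition, assuming no teacher mobility and equal weighting of teachers; it is not how the school fixed effects VAM is estimated, and `center_within_school` is a hypothetical name.

```python
from collections import defaultdict
from statistics import mean


def center_within_school(effects, school_of):
    """Re-express each teacher effect relative to the mean effect in its school."""
    by_school = defaultdict(list)
    for teacher, effect in effects.items():
        by_school[school_of[teacher]].append(effect)
    school_mean = {school: mean(vals) for school, vals in by_school.items()}
    return {t: e - school_mean[school_of[t]] for t, e in effects.items()}


effects = {"t1": 0.3, "t2": 0.4, "t3": -0.2, "t4": -0.1}
school_of = {"t1": "X", "t2": "X", "t3": "Y", "t4": "Y"}
print(center_within_school(effects, school_of))
```

In this example, the strongest teacher in the stronger school (t2) and the strongest teacher in the weaker school (t4) end up with the same centered effect, one reason within-school estimates can diverge from the other specifications.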

Our main analysis focuses on these three VAM specifications, along with the median SGPs. In addition, we estimate six other variations of Equation (2): (1) a simple specification controlling only for prior-year same-subject achievement; (2) a specification that includes student achievement from two prior years; (3) a specification with student- and school-level characteristics; (4) one that includes only same-subject and cross-subject prior achievement; (5) a specification that uses the same covariates as the student background VAM but excludes students with a disability in math, reading, or writing from the sample; and (6) a specification that includes student fixed effects. Many of these specifications yielded teacher effectiveness estimates that were highly correlated (i.e., a correlation over 0.95 in math and 0.92 in reading) with at least one of the three VAM specifications described above, and they are all rarely used by states and districts to assess teacher performance. For the sake of parsimony, we do not describe these results in Section 4.[24] Table 2 provides a summary description of each of the teacher effectiveness models we use in our final comparison.

Finally, it is common practice to account for measurement error with an empirical Bayes (EB) shrinkage adjustment, in which each teacher effectiveness estimate is weighted toward the grand mean based on the reliability of the estimate (Aaronson, Barrow, and Sander 2007; Boyd et al. 2008). Estimates with higher standard errors (i.e., those that are less reliable) are shrunk more toward the mean, while more reliable estimates are less affected. We find the adjusted estimates to be very similar to the unadjusted estimates and therefore report results using the unadjusted estimates.[25]
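The shrinkage step can be written in a few lines. In this sketch the signal (true-effect) variance is taken as known, whereas in practice it is estimated from the data; `eb_shrink` and the example numbers are hypothetical.

```python
def eb_shrink(estimates, std_errors, signal_var):
    """Shrink each estimate toward the grand mean by its reliability.

    reliability = signal_var / (signal_var + se**2): noisier estimates (larger
    standard errors) have lower reliability and are pulled closer to the mean.
    """
    grand_mean = sum(estimates) / len(estimates)
    return [
        grand_mean + signal_var / (signal_var + se ** 2) * (est - grand_mean)
        for est, se in zip(estimates, std_errors)
    ]


# The first two teachers share a raw estimate but differ in precision.
print(eb_shrink([0.3, 0.3, -0.6], [0.05, 0.2, 0.1], signal_var=0.04))
```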

4. RESULTS

Before discussing agreement and disagreement between estimates of individual teacher effects generated by the various models, we briefly note a few findings about the estimated impact of teacher effectiveness on student achievement. We look at the impact of a one standard deviation change in teacher effectiveness on student achievement, measured in standard deviation units (effect size), from each of the VAMs used in this analysis. Table 3 reports these effect sizes for math and reading and includes both unadjusted estimates and effect sizes adjusted for sampling error.[26]

Table 3. Estimated effect sizes for VAMs

Our findings suggest that a one standard deviation increase in the distribution of teacher effectiveness (for example, moving from the median to the 84th percentile) translates to an increase of about 0.15–0.25 standard deviations of student achievement, magnitudes consistent with findings in other studies (e.g., Aaronson, Barrow, and Sander 2007; Goldhaber and Hansen, forthcoming).[27] Two additional patterns emerge. First, both the unadjusted and adjusted measures tend to be larger for math. This is not surprising given that teacher effectiveness (and schooling resources in general) tends to explain a larger share of the variance in student outcomes in math than in reading (e.g., Aaronson, Barrow, and Sander 2007; Koedel and Betts 2007; Schochet and Chiang 2010).
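The parenthetical mapping from one standard deviation to the 84th percentile holds if teacher effectiveness is approximately normally distributed, which is an assumption. The arithmetic can be checked with the standard library; `normal_cdf` is a hypothetical helper.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


# Percentile reached by moving one SD above the median of a normal distribution.
print(round(100 * normal_cdf(1.0), 1))  # 84.1
```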

Second, in most of the models the sampling error adjustment reduces the estimated magnitude of changes in teacher quality by 10%–20%. It is notable, however, that the adjustment has a larger effect in the school fixed effects specification, particularly in reading. As we noted above, the validity of the different model estimates is not the focus of our article, but the fact that the different models yield teacher effect estimates with varying precision is certainly a policy-relevant issue. For instance, there may well be a tradeoff between using an unbiased but noisy measure of teacher effectiveness and one that is slightly biased but more precisely estimated.[28] This is a point we return to briefly in the conclusion.
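One common way to adjust the spread of teacher effects for sampling error subtracts the average squared standard error from the variance of the estimated effects. The article's own adjustment is described in its notes and may differ; the sketch below, including the function name and numbers, is an illustrative assumption.

```python
import math
from statistics import mean, pvariance


def adjusted_sd(estimates, std_errors):
    """SD of true teacher effects: variance of the estimates minus the average
    sampling variance (floored at zero), then square-rooted."""
    signal_var = pvariance(estimates) - mean(se ** 2 for se in std_errors)
    return math.sqrt(max(signal_var, 0.0))


raw = [-0.2, 0.2]
print(math.sqrt(pvariance(raw)), adjusted_sd(raw, [0.1, 0.1]))
```

In this made-up example the adjustment shrinks the standard deviation of teacher effects from 0.2 to about 0.17; noisier estimates would shrink it further.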

4.1. Teacher Effects Across Model Specifications

Table 4 reports the Pearson correlation coefficients between the various estimates of teacher effectiveness.[29,30]

Table 4. Pairwise correlation matrix of teacher effectiveness estimates

Our findings are consistent with the existing literature in several respects. First, we find that the correlations are higher for estimates of teacher effectiveness in math than reading; for each of the model comparisons, the correlations are generally between 0.05 and 0.10 higher for math (Panel A) than for reading (Panel B), again, consistent with findings that the signal-to-noise ratio tends to be higher for math than reading estimates.

Research on peer effects tends to suggest that the composition of the classrooms in which students are enrolled can affect their achievement. In particular, being in a school with high concentrations of student poverty (Caldas and Bankston 1997) or with low-achieving peers (Lavy, Silva, and Weinhardt 2012) has been found to have a negative impact on student achievement. However, peer effects at the classroom level are generally found to be small relative to the effects of one's own background (Burke and Sass 2008).[31] We too find that many of these classroom-level characteristics are statistically significant, but estimates from the models that include classroom-level variables (a classroom measure of poverty, prior-year test scores, parental education, percent of students with a disability, percent minority, and class size) are highly correlated (r = 0.99) with those from the Student Background VAM specification, which does not include classroom-level controls.[32]

As we noted above, we are unaware of any research (other than Wright 2010, who compares MGPs to the EVAAS model) focusing on differences between VAM teacher effectiveness estimates and those generated from SGPs. While SGP models are conceptually distinct from VAMs, the resulting estimates of teacher effectiveness turn out to show a high level of agreement: we find correlations over 0.9 for math and 0.8 for reading between MGPs and the VAM specifications that control for student- or classroom-level covariates.[33] The lowest correlations are found between these models and the School FE VAMs: the correlations between math estimates from the School FE VAMs and the other specifications range from 0.61 (MGPs) to 0.65 (Student Background VAM) and are lower in reading (ranging from 0.48 to 0.58).

As discussed in Ballou, Mokher, and Cavalluzzo (2012), it is arguably more important for different models to generate similar performance ratings for the most effective and least effective teachers than for teachers in the middle of the distribution, since teachers at the tails are more likely to be affected by high-stakes policies such as tenure and merit pay. While Ballou, Mokher, and Cavalluzzo (2012) reported a greater degree of disagreement between models for the most and least effective teachers than for those in the middle of the distribution, we find that differences in teacher effects are fairly consistent throughout the effectiveness distribution.[34]
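Whether disagreement is concentrated in the tails can be checked by grouping teachers by quintile under one model and averaging the gap in percentile ranks under another. A minimal sketch; the function names are hypothetical, and this is not necessarily the comparison reported in the article's notes.

```python
def percentile_ranks(values):
    """Percentile rank (0 to 100) of each value; requires at least two values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    for position, i in enumerate(order):
        ranks[i] = 100 * position / (len(values) - 1)
    return ranks


def disagreement_by_quintile(model_a, model_b):
    """Mean absolute percentile-rank gap between two models, grouped by each
    teacher's quintile under model_a (index 0 = bottom, 4 = top)."""
    ranks_a, ranks_b = percentile_ranks(model_a), percentile_ranks(model_b)
    totals, counts = [0.0] * 5, [0] * 5
    for a, b in zip(ranks_a, ranks_b):
        q = min(int(a // 20), 4)
        totals[q] += abs(a - b)
        counts[q] += 1
    return [t / c if c else float("nan") for t, c in zip(totals, counts)]
```

Roughly equal values across the five quintiles would correspond to disagreement that is fairly consistent throughout the distribution; larger values in the first and last entries would indicate more disagreement in the tails.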

While the correlations across model specifications suggest the extent to which different models produce similar estimates of teacher effectiveness across the entire sample, they do not suggest whether these estimates may be systematically different for teachers instructing different types of students. We explore this issue below.

4.2. Classroom Characteristics Predicting Model Agreement/Disagreement

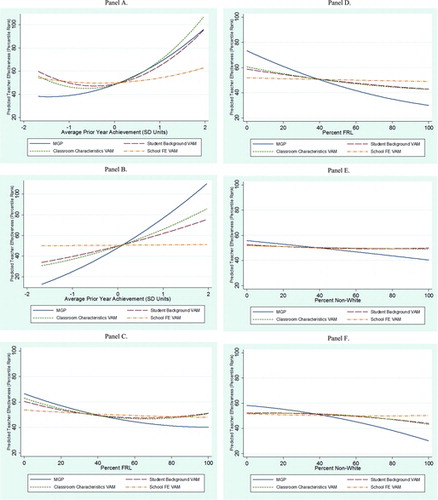

To assess the relationship between the student composition of teachers’ classrooms and their rankings according to different models, we first rank teachers on the performance distribution according to each model and then estimate the percentile rankings as a function of three classroom-level student characteristics: class average prior-year achievement (a simple average of math and reading test scores across students in a classroom), the percentage of students receiving free/reduced price lunch, and the percentage of minority students in the classroom.[35] In Figure 1, we plot the predicted percentile ranks of teacher effectiveness for each model against each of the classroom characteristics.
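The rank-then-regress step can be sketched as follows. The article's exact specification is given in its notes; here, as an assumption for illustration, percentile ranks are regressed by OLS on average prior achievement (with a squared term to allow curvature such as the U-shaped pattern noted for math), percent FRL, and percent minority, using simulated data with made-up parameters. All function names are hypothetical.

```python
import numpy as np


def percentile_rank(x):
    """Percentile rank (0 to 100) of each teacher's estimate under one model."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty(len(x))
    ranks[np.argsort(x)] = np.arange(len(x))
    return 100 * ranks / (len(x) - 1)


def design(prior_ach, pct_frl, pct_minority):
    """Regressors: intercept, prior achievement and its square, %FRL, %minority."""
    p, f, m = np.broadcast_arrays(
        *(np.atleast_1d(np.asarray(v, dtype=float)) for v in (prior_ach, pct_frl, pct_minority))
    )
    return np.column_stack([np.ones_like(p), p, p ** 2, f, m])


def fit_rank_model(estimates, prior_ach, pct_frl, pct_minority):
    """OLS coefficients of percentile rank on the classroom characteristics."""
    X = design(prior_ach, pct_frl, pct_minority)
    coef, *_ = np.linalg.lstsq(X, percentile_rank(estimates), rcond=None)
    return coef


def predicted_rank(coef, prior_ach, pct_frl, pct_minority):
    """Predicted percentile rank(s) for given classroom characteristics."""
    return design(prior_ach, pct_frl, pct_minority) @ coef


# Simulated classrooms with made-up parameters, for illustration only.
rng = np.random.default_rng(1)
n = 2000
prior = rng.normal(0, 0.5, n)
frl = rng.uniform(0, 100, n)
minority = rng.uniform(0, 100, n)
estimates = 0.3 * prior - 0.002 * frl + rng.normal(0, 0.2, n)
coef = fit_rank_model(estimates, prior, frl, minority)
print(predicted_rank(coef, [0.0, 1.0], 50, 50))
```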

In general, as average prior achievement increases, the predicted percentile rank of effectiveness increases. For example, going from an average prior achievement score of 0 to 1 (in standard deviations of student achievement) results in an increase of about 15 percentile points in math for MGPs, the Student Background VAM, and the classroom characteristics VAM (panel (A)). We see a similar pattern for these three measures in reading (panel (B)), but for MGPs the difference between 0 and 1 standard deviations of prior student achievement is even larger (about 25 percentile points). There is some evidence of a U-shaped nonlinear relationship in math. The relatively flat lines for the School FE VAMs in both math and reading show little variation in predicted percentile ranks across the distribution of prior achievement.

Looking across the distribution of percent FRL, we find lower predicted percentile ranks for high-poverty classrooms under each model. The difference in effectiveness between high- and low-poverty classrooms is greatest for MGPs: going from a 20% FRL classroom to an 80% FRL classroom results in a drop in ranking of about 15 percentile points in math (panel (C)) and about 25 points in reading (panel (D)). The difference between the 20% and 80% marks is smaller for the Student Background VAM and the Classroom Characteristics VAM, with a drop of about 5–7 percentile points in math and about 10 percentile points in reading. As was the case with average prior achievement, there is much less variation in the predicted School FE VAM rankings across the distribution of percent FRL.

MGPs also show the greatest differences across the distribution of percent minority, with a drop of about 8 percentile points in math (panel (E)) and about 20 in reading (panel (F)) when we compare classrooms with 20% and 80% minority students. There is relatively little variation across the distribution in the predicted percentile ranks from the three VAMs. These results suggest that the models behave differently for different classroom compositions.

We illustrate how different classroom compositions might affect rankings for different models by focusing on three different classroom types. Specifically, we define classrooms as being “advantaged,” “average,” or “disadvantaged” based on the aggregate student-level average prior achievement and the percentage of the classroom enrolled in the free/reduced price lunch program. Advantaged classrooms are those in the highest quintile of prior achievement and the lowest quintile of percent FRL; disadvantaged classrooms are those in the bottom quintile of prior achievement and top quintile of percent FRL; average classrooms are in the middle quintile for both classroom characteristics.Footnote36

For each of the model specifications, we generate percentile ranks for individual teachers and then average the percentile ranks within each of the stylized classroom types. The results of this exercise are reported in Table 5.

Table 5. Average percentile ranks for stylized classrooms

The results suggest that more advantaged classrooms tend to be staffed with more effective teachers, but the magnitude of the differences in average percentile rank between advantaged and disadvantaged classrooms varies considerably across models. The School FE VAM, for example, suggests little teacher inequity across classroom types as compared to the other models, a finding that results from the fact that the school fixed effect absorbs much of the variation in teacher effectiveness.
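The stylized-classroom comparison can be sketched as below, assuming classroom-level inputs (average prior achievement, percent FRL) and each teacher's percentile rank under one model are already in hand. The rank-order quintile rule (ties broken by sort order) and the function names are illustrative assumptions; the labels follow the definitions given earlier: top prior-achievement quintile and bottom FRL quintile for “advantaged,” the reverse for “disadvantaged,” and the middle quintile of both for “average.”

```python
from statistics import mean
from typing import Dict, List, Sequence


def quintiles(values: Sequence[float]) -> List[int]:
    """Assign each value a quintile from 1 (lowest) to 5 (highest) by rank order."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    result = [0] * n
    for position, i in enumerate(order):
        result[i] = position * 5 // n + 1
    return result


def classroom_type(prior_q: int, frl_q: int) -> str:
    """Label a classroom using the stylized definitions."""
    if prior_q == 5 and frl_q == 1:
        return "advantaged"
    if prior_q == 1 and frl_q == 5:
        return "disadvantaged"
    if prior_q == 3 and frl_q == 3:
        return "average"
    return "other"


def average_rank_by_type(prior: Sequence[float], frl: Sequence[float],
                         ranks: Sequence[float]) -> Dict[str, float]:
    """Average teacher percentile rank within each stylized classroom type."""
    groups: Dict[str, List[float]] = {}
    for p, f, r in zip(quintiles(prior), quintiles(frl), ranks):
        groups.setdefault(classroom_type(p, f), []).append(r)
    return {label: mean(rs) for label, rs in groups.items() if label != "other"}
```

Running `average_rank_by_type` once per model on the same classrooms, and differencing the averages, yields the kind of model-by-model comparison summarized in Table 5.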

Table 6. Intertemporal stability: correlations of teacher effectiveness estimates over time

Another notable finding is that the Classroom Characteristics VAM shows a similar distribution of teacher effectiveness to the Student Background VAM, which does not include classroom aggregate covariates. One might expect the inclusion of classroom-level covariates to reduce (or eliminate) the estimated differences in teacher effectiveness across classrooms, given that the effectiveness estimates should be orthogonal to these classroom characteristics by construction. There are two possible explanations for why this is not the case. The first is that this model includes a classroom variable, class size, that is not used in categorizing stylized classrooms. We find that being in a larger class tends to have a small (but statistically significant) negative effect on student achievement, and that disadvantaged students tend to be assigned to slightly smaller classes.Footnote37 The second is that the Classroom Characteristics VAM may not fully capture the relationship between classroom characteristics and student achievement, both because the classroom characteristics are not interacted with all of the other covariates in the model and because their effects are estimated within teacher. To the degree that teachers tend to be assigned to similar classrooms over time, the within-teacher estimates will be based on a restricted range, and they may fail to fully capture the effect on student achievement of assignment to classrooms with different student compositions (e.g., very high- or low-poverty classrooms). As a result, the coefficients on the classroom-level variables may be attenuated, decreasing their value as control variables in the VAM.Footnote38

As we have stressed throughout, our comparison of models does not suggest whether one or another is more valid, but given the magnitude of the differentials presented above, the results certainly suggest that even when overall correlations between measures of effectiveness are high, certain models result in higher estimates for certain teachers, depending on the composition of their classrooms. For example, the Student Background VAM and MGPs are highly correlated (correlation coefficient of 0.93 in math); however, the average percentile rank for teachers of advantaged classes is 4 percentile points higher for the MGPs, while the average rank is about 7 points lower than that of the Student Background VAM for teachers in disadvantaged classes. We find even bigger differences in reading, where average rankings for advantaged classes are 11 percentile points higher according to MGPs than with Student Background VAM, while in disadvantaged classrooms MGPs are about 10 points lower. In sum, the models have a higher degree of agreement for teachers in the middle of the distribution of classroom characteristics and larger differences at the tails of the classroom composition distribution. Whether the magnitudes of the differences across models are meaningful is a policy question, but our findings suggest it is important to look at the tails of the distribution of classroom characteristics to get a full picture of how teacher effectiveness estimates behave across specifications.

4.3. Intertemporal Stability

In this section, we investigate the intertemporal stability of VAMs and MGPs. Intertemporal stability is an important issue because a high degree of instability, that is, wide swings in estimated performance from one year to the next, is seen as a signal that VAMs are unreliable (Darling-Hammond et al. 2012). Such instability in turn makes the use of student-growth-based measures of teacher effectiveness challenging from a policy standpoint (Glazerman et al. 2010; Baker 2012). The fact that some specific VAMs generate effectiveness estimates that are only moderately correlated from year to year is well documented (e.g., Goldhaber and Hansen 2013; McCaffrey et al. 2009), but, to our knowledge, no studies have investigated the intertemporal stability of teacher estimates from School FE VAMs or MGPs.

In Table 6, we show the intertemporal correlations of performance estimates for math (panel (A)) and reading (panel (B)). The first column reports the correlation between effectiveness estimates from adjacent two-year time periods with no overlapping students (e.g., the estimate that includes student scores from the 2000–2001 and 2001–2002 school years compared to the estimate that includes student scores from the 2002–2003 and 2003–2004 school years). The next column reports the correlation between estimates with a one-year gap (e.g., the estimate that includes student scores from the 2000–2001 and 2001–2002 school years compared to the estimate that includes student scores from the 2003–2004 and 2004–2005 school years, with 2002–2003 as the gap year).
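A minimal sketch of how adjacent and one-year-gap correlations could be computed, assuming each teacher's estimates are stored in a dictionary keyed by the first fall year of the two-year window (so `2000` stands for the 2000–2001 and 2001–2002 school years). The data layout and function names are hypothetical; only teachers with estimates in both windows contribute, and the statistic is an ordinary Pearson correlation.

```python
from math import sqrt
from typing import Dict, List, Sequence, Tuple

Estimates = Dict[str, Dict[int, float]]  # teacher id -> {window start year: estimate}


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def paired(estimates: Estimates, first: int,
           second: int) -> Tuple[List[float], List[float]]:
    """Collect estimates for teachers observed in both windows."""
    xs: List[float] = []
    ys: List[float] = []
    for by_window in estimates.values():
        if first in by_window and second in by_window:
            xs.append(by_window[first])
            ys.append(by_window[second])
    return xs, ys


def window_correlation(estimates: Estimates, start: int, gap_years: int) -> float:
    """Correlate a two-year window with a later, non-overlapping window.

    gap_years=0 compares adjacent windows (e.g., 2000 vs 2002);
    gap_years=1 leaves one school year between them (e.g., 2000 vs 2003).
    """
    xs, ys = paired(estimates, start, start + 2 + gap_years)
    return pearson(xs, ys)
```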

Not surprisingly, performance estimates from adjacent two-year periods are more highly correlated than are estimates with gap years between them. This finding has been previously documented for particular VAM specifications (Goldhaber and Hansen 2013; McCaffrey et al. 2009).

We find the highest intertemporal correlations for the Student Background VAM and the Classroom Characteristics VAM (0.63 or greater for adjacent time periods in math, 0.47 or greater in reading), with slightly lower correlations for MGPs (0.59 for adjacent time periods in math, 0.43 in reading) and substantially lower correlations for the School FE VAMs (0.32 in math, 0.18 in reading).Footnote39 While some critics of value-added and other test-based measures of teacher performance cite low intertemporal correlations as problematic, as noted in Section 2, these correlations are comparable to the year-to-year correlations in performance of professional baseball players (Glazerman et al. 2010; MET 2010).

We would expect a higher degree of intertemporal variability for the School FE VAMs, since it is likely easier for a teacher to change relative position within a school than across the whole workforce. For example, an above-average teacher in a school of above-average teachers may have within-school rankings that vary from year to year but still be consistently above average relative to all teachers in the sample.
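The intuition that within-school rankings are less stable can be illustrated with a small simulation. This is not the School FE VAM itself but a hypothetical variance-components setup: each teacher's true effect is a school component plus a within-school component, held fixed across two periods, and each period's estimate adds independent noise. Demeaning estimates by school (a stand-in for school fixed effects) removes the stable between-school signal but none of the noise, so the period-to-period correlation falls. All standard deviations are arbitrary illustrative values.

```python
import random
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def simulate(n_schools: int = 500, per_school: int = 10, sd_school: float = 0.1,
             sd_within: float = 0.1, sd_noise: float = 0.1,
             seed: int = 1) -> Tuple[float, float]:
    """Return (workforce-wide, within-school) correlations across two periods.

    True teacher effects (school component + within-school component) are held
    fixed across periods; each period adds independent estimation noise.
    """
    rng = random.Random(seed)
    raw: Dict[int, List[float]] = {1: [], 2: []}
    within: Dict[int, List[float]] = {1: [], 2: []}
    for _ in range(n_schools):
        school = rng.gauss(0.0, sd_school)
        true = [school + rng.gauss(0.0, sd_within) for _ in range(per_school)]
        for period in (1, 2):
            est = [t + rng.gauss(0.0, sd_noise) for t in true]
            school_mean = sum(est) / len(est)
            raw[period].extend(est)
            # Demeaning by school stands in for a school fixed effect.
            within[period].extend(e - school_mean for e in est)
    return pearson(raw[1], raw[2]), pearson(within[1], within[2])
```

Under these assumptions the expected correlation is the share of estimate variance that is persistent signal, so the workforce-wide correlation should land near 0.02 / 0.03 (about 2/3) and the within-school correlation near 0.01 / 0.02 (about 1/2); exact values depend on the seed.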

5. CONCLUSIONS

Policymakers wishing to use student growth measures as an indicator of a teacher's job performance rightly worry about the properties of the estimates generated from different models, as well as the extent to which model choice might influence teacher rankings. We explore these issues in this descriptive paper examining the extent to which different methods of translating student test scores into measures of teacher performance produce consistent rankings of teachers, whether classroom characteristics predict these differences, and the stability over time of different performance estimates.

To be clear, we do not believe that the results presented here provide any definitive guidance about which model ought to be adopted; the impact on students of using a student growth measure as a factor in teacher performance ratings will depend both on how it is used and on whether its use leads to behavioral changes. SGPs, for instance, are described as a means of sidestepping “many of the thorny questions of causal attribution and instead provide descriptions of student growth that have the ability to inform discussions about assessment outcomes and their relation to education quality” (Betebenner 2009, p. 43). For the purpose of starting conversations about student achievement, SGPs might be a useful tool, but one might wish to use a different methodology for rewarding teacher performance or making high-stakes teacher selection decisions.Footnote40

Complicating matters is the fact that how teachers respond to the use of student growth measures may be influenced by their perceptions of whether the growth measures are fair and accurate, and these perceptions may or may not line up with the true properties of a measure. For example, as we have discussed, there are differences in the precision of the estimates generated by different models, and there may well be a tradeoff among the validity, transparency, and reliability of measures. Models that include a more comprehensive set of student and classroom variables are arguably more valid, but they are also probably less transparent to teachers than MGPs, because teachers cannot calculate the measure themselves by aggregating the performance of their students. Likewise, an unbiased but noisy measure of teacher performance might undermine teachers’ confidence in the system because its estimates are less reliable over time.

Given that, for at least some uses of student-growth measures of teacher performance, we care about teachers’ reactions to the measure, it is not possible to determine whether one model is preferable to another without assessing the behavioral effects of using student growth measures on the teacher workforce. We do believe it makes sense for policymakers to be transparent with stakeholders about how teacher rankings can be affected by model choice. This study has shown that although different models may produce estimates that are highly correlated, models can still generate arguably meaningful differences in teacher rankings based on the type of students teachers are serving.

The choice of model is often a joint decision among district or state leaders, principals, and teachers. In this article, we have provided research to inform stakeholders’ perceptions of the reliability, fairness, and accuracy of estimates generated from four different models. The differences in teacher rankings across models suggest that the student makeup of teachers’ classrooms may predict how high (or low) their estimates are. Moreover, we show that the more causal models, which arguably provide more accurate estimates of teacher performance (i.e., school and student fixed effects specifications), produce estimates that are far less stable over time than the less saturated specifications.

Notes

The 2010 application for the TIF states “an applicant must demonstrate, in its application, that it will develop and implement a [performance-based compensation system] that rewards, at differentiated levels, teachers and principals who demonstrate their effectiveness by improving student achievement…” Furthermore, the application states that preference is given to applicants planning to use value-added measures of student growth “as a significant factor in calculating differentiated levels of compensation…” Similarly, the RttT selection criteria outlined in the 2010 application include the design and implementation of evaluation systems that “differentiate effectiveness using multiple rating categories that take into account data on student growth…as a significant factor…”

For reviews, see Goldhaber (2010) and Toch and Rothman (2008).

See, for instance, Aaronson, Barrow, and Sander (2007), Goldhaber, Brewer, and Anderson (1999), and Rivkin, Hanushek, and Kain (2005).

Several large firms—e.g., SAS, Value Added Research Center at University of Wisconsin, Mathematica, and Battelle for Kids—offer competing, though not necessarily fundamentally different, services for this translation process.

We use the terms teacher job performance and teacher effectiveness interchangeably.

Several states, including Tennessee, Ohio, Pennsylvania, and North Carolina, have contracted with SAS to use the Education Value-Added Assessment System (EVAAS), a particular type of value-added model. EVAAS uses several different types of models, depending on the objective (estimating school, district, or teacher effectiveness) and available data. None of the models include student background characteristics as control variables, but some models do include prior scores as covariates. The EVAAS layered teacher multivariate response model is a longitudinal, linear mixed model in which multiple years and subjects of student test scores are modeled simultaneously. In the univariate response model, scores are modeled separately by year, grade, and subject, with prior scores included as covariates. For more detail on EVAAS, see Wright et al. (2010).

However, in practice this is exactly how SGPs have been used. For example, Denver Public Schools uses teacher-level median student growth percentiles to award teachers bonuses (Goldhaber and Walch 2012). Barlevy and Neal (2012) recommended a pay-for-performance scheme that uses a similar system.

However, other common estimation approaches include two-stage random effects or hierarchical linear modeling. For more information, see Kane and Staiger (2008).

For more on the theoretical assumptions underlying typical VAMs, see Harris, Sass, and Semykina (2010), Rothstein (2010), and Todd and Wolpin (2003).

We are unaware of any research that assesses the validity of SGP-based measures of individual teacher effects.

For an example of VAM comparisons at the school level, see Atteberry (2011).

Additional variables at the student level included race, gender, grade (4th or 5th), prior-year test score, and alternate-subject prior-year test score; teacher-level variables included years of experience, education, and credential status. Specifics about the methodology used to estimate the value-added reported by the Los Angeles Times are described in Buddin (2010).

The intertemporal stability is similar to other high-skill occupations thought to be comparable to teaching (Goldhaber and Hansen 2013).

The Simple Panel Growth Model is described as a simple longitudinal mixed effects model, where the school effects are defined as the deviation of a school's trajectory from the average trajectory. The covariate-adjusted VAMs with random school effects predict student achievement using multiple lagged math and reading scores and student background variables as covariates, along with school random effects.

This dataset has been used in many published studies (Clotfelter, Ladd, and Vigdor 2006; Goldhaber 2007; Goldhaber and Anthony 2007; Jackson and Bruegmann 2009).

B-spline cubic basis functions, described in Wei and He (2006), are used in the parameterization of the conditional percentile function to improve goodness of fit, and the calculations are performed using the SGP package in R (R Development Core Team 2008).

Knots are the internal breakpoints that define a spline function. Splines must have at least two knots (one at each endpoint of the spline). The greater the number of knots, the more “flexible” the function used to model the data. In this application, knots are placed at the quantiles stated above, meaning that an equal number of sample observations lie in each interval. Boundaries are the points at which to anchor the B-spline basis (by default, the range of the data). For more information on knots and boundaries, see Racine (2011). LOSS: lowest obtainable scale score; HOSS: highest obtainable scale score.
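As a small illustration of quantile-based knot placement, the sketch below uses Python's `statistics.quantiles` to place interior knots so that each interval holds roughly the same number of observations. The quintile cut points (`n_intervals=5`) are a hypothetical choice for the example, not necessarily the quantiles used in the article, and the observed data range stands in for the LOSS/HOSS boundaries.

```python
from bisect import bisect_right
from statistics import quantiles
from typing import List, Sequence, Tuple


def quantile_knots(scores: Sequence[float], n_intervals: int = 5) -> List[float]:
    """Interior knots at equally spaced sample quantiles (n_intervals - 1 cut points)."""
    return quantiles(scores, n=n_intervals, method="inclusive")


def boundaries(scores: Sequence[float]) -> Tuple[float, float]:
    """Default boundary anchors: the observed range of the data."""
    return min(scores), max(scores)


def counts_per_interval(scores: Sequence[float], knots: Sequence[float]) -> List[int]:
    """Number of observations falling in each interval defined by the knots."""
    counts = [0] * (len(knots) + 1)
    for s in scores:
        counts[bisect_right(knots, s)] += 1
    return counts
```

For scale scores 1 through 100, the interior knots fall at 20.8, 40.6, 60.4, and 80.2, and each of the five intervals holds 20 observations.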

In particular, the conditional distribution of a student's SGP is limited to a sample of students having at least an equal number of prior score histories and values.

We also generate teacher effectiveness estimates based on the mean of the student growth percentiles for each teacher but find the estimates to be highly correlated with the MGPs (r = 0.96 in math; r = 0.93 in reading). We choose to only report the results for MGPs.
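The aggregation from student growth percentiles to teacher-level medians and means can be sketched as below. To keep the example self-contained, the SGP step is a deliberately simplified stand-in: each student's current score is ranked among peers in the same prior-score group, rather than through the B-spline quantile regression used by the SGP package. The grouping, the midpoint percentile convention, and all function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean, median
from typing import Dict, Hashable, List, Sequence, Tuple


def simple_sgps(prior_group: Sequence[Hashable], current: Sequence[float]) -> List[int]:
    """Simplified SGPs: percentile of each current score among same-prior-group peers.

    Uses the midpoint convention 100 * (below + 0.5 * ties) / n, rounded to an
    integer and clipped to 1..99. A stand-in for quantile-regression SGPs.
    """
    peers: Dict[Hashable, List[float]] = defaultdict(list)
    for g, score in zip(prior_group, current):
        peers[g].append(score)
    sgps = []
    for g, score in zip(prior_group, current):
        group = peers[g]
        below = sum(1 for s in group if s < score)
        ties = sum(1 for s in group if s == score)  # includes the student
        pct = 100.0 * (below + 0.5 * ties) / len(group)
        sgps.append(min(99, max(1, round(pct))))
    return sgps


def teacher_summaries(teacher_ids: Sequence[Hashable],
                      sgps: Sequence[int]) -> Dict[Hashable, Tuple[float, float]]:
    """Return {teacher: (median SGP, mean SGP)} over that teacher's students."""
    by_teacher: Dict[Hashable, List[int]] = defaultdict(list)
    for t, s in zip(teacher_ids, sgps):
        by_teacher[t].append(s)
    return {t: (median(v), mean(v)) for t, v in by_teacher.items()}
```

The median is less sensitive than the mean to a few students with extreme growth percentiles; with real classroom sizes the two summaries tend to move together, consistent with the high correlations reported above.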

Lockwood and McCaffrey (2012) argued that measurement error in prior-year test scores results in biased teacher effectiveness estimates and recommended using a latent regression that includes multiple years of prior scores to mitigate the bias.

Teacher fixed effects for the Student Background and Classroom Characteristics VAMs are generated with the user-written Stata program fese (Nichols 2008); the School FE VAM estimates, which require two levels of fixed effects, are generated with the user-written Stata program felsdvreg (Cornelissen 2008).

Note that some student background variables are not available in all years. VAMs include separate indicators for learning disability in math, reading, and writing.

Because we estimate VAMs with teacher fixed effects, the effects of the classroom characteristics are identified off of variation within teachers across years. Classroom-level variables are continuous, and their effects are assumed to be linear. If teachers have similar classroom characteristics across time periods (for example, classrooms with 70% FRL and 72% FRL in consecutive years), the effect of percent FRL will be based on small within-teacher differences and may be attenuated (Ehlert et al. 2012). Ashenfelter and Krueger (1994) discussed a similar problem with using pairs of twins to investigate the economic returns to schooling: within-pair differences in schooling were small, so measurement error made up a large share of the identifying variation.
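The attenuation argument can be checked with a small simulation. The setup is hypothetical: each teacher's classroom percent FRL varies only slightly around a teacher-specific mean across two periods (on the order of the 70% vs. 72% example), the characteristic is observed with independent measurement error of similar size, and achievement depends on the true value with slope `beta`. Teacher effects are drawn independently of FRL here, so the pooled slope is not confounded (in real data it could be). The pooled regression, which relies mostly on between-teacher variation, recovers a slope close to `beta`; the within-teacher (teacher fixed effects) slope is pulled toward zero by roughly the share of within-teacher variation that is signal rather than error.

```python
import random
from typing import List, Sequence, Tuple

Group = Tuple[List[float], List[float]]  # (observed FRL shares, outcomes) for one teacher


def ols_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Simple-regression OLS slope of ys on xs (with an intercept)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def pooled_slope(groups: List[Group]) -> float:
    """Slope ignoring teacher identity (uses between- and within-teacher variation)."""
    xs = [x for gx, _ in groups for x in gx]
    ys = [y for _, gy in groups for y in gy]
    return ols_slope(xs, ys)


def within_slope(groups: List[Group]) -> float:
    """Teacher fixed-effects slope: demean x and y within each teacher, then pool."""
    sxy = sxx = 0.0
    for xs, ys in groups:
        mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
        sxy += sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        sxx += sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def simulate(n_teachers: int = 2000, beta: float = -1.0, sd_between: float = 0.2,
             sd_within: float = 0.03, sd_error: float = 0.03,
             seed: int = 7) -> Tuple[float, float]:
    """Return (pooled slope, within-teacher slope) for simulated two-period data.

    Shares are not clipped to [0, 1]; that is harmless for this illustration.
    """
    rng = random.Random(seed)
    groups: List[Group] = []
    for _ in range(n_teachers):
        teacher_mean = rng.gauss(0.5, sd_between)
        teacher_effect = rng.gauss(0.0, 0.2)  # independent of FRL by construction
        xs: List[float] = []
        ys: List[float] = []
        for _period in range(2):
            true_frl = teacher_mean + rng.gauss(0.0, sd_within)
            xs.append(true_frl + rng.gauss(0.0, sd_error))  # measured with error
            ys.append(beta * true_frl + teacher_effect + rng.gauss(0.0, 0.05))
        groups.append((xs, ys))
    return pooled_slope(groups), within_slope(groups)
```

With these defaults, the within-teacher slope should sit near beta * 0.03^2 / (0.03^2 + 0.03^2) = -0.5, while the pooled slope stays close to -1.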

See Goldhaber, Walch, and Gabele (2012) for comparisons of these omitted specifications (available online at http://www.cedr.us/papers/working/CEDR%20WP%202012-6_Does%20the%20Model%20Matter.pdf). We also estimate two-stage random effects VAMs similar to the school-level VAMs described in Ehlert et al. (2012). We find these estimates to be highly correlated (r = 0.99) with estimates from the teacher single-stage Student Background VAM and Classroom Characteristics VAM specifications described above.

The correlations between the adjusted and unadjusted effects are greater than 0.97 in math and greater than 0.94 in reading for each of the VAMs.

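Adjusted effect sizes are calculated using the following equation:

$$\hat{\sigma}_{\tau}^{\mathrm{adj}} = \sqrt{\hat{\sigma}^2_{\hat{\tau}} - \frac{\sum_j k_j \, SE(\hat{\tau}_j)^2}{\sum_j k_j}},$$

where $\hat{\sigma}^2_{\hat{\tau}}$ represents the variance of the estimated teacher effects, $k_j$ represents the number of student observations contributing to each estimate, and $SE(\hat{\tau}_j)$ represents the standard errors of the estimated teacher effects.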

Research that estimates within-school teacher effects tends to find smaller effect sizes, in the neighborhood of 0.10 (Hanushek and Rivkin 2010).

For instance, the student fixed effects specifications (not reported here), which are favored by some academics in estimating teacher effectiveness, were very imprecisely estimated.

Recall that the various model specifications and methods of estimating teacher effectiveness are described earlier in the article. We do not focus on the extent to which effectiveness in one subject corresponds to effectiveness in another subject. For more information on cross-subject correlations, see Goldhaber, Cowan, and Walch (2012).

Spearman rank correlations are similar to the reported correlation coefficients. Additionally, the correlations between the EB-adjusted VAM estimates are very similar to the reported correlations using the unadjusted estimates; in nearly all cases, the differences in correlation coefficients are 0.01 or less.

For example, in math at the elementary level, Burke and Sass (2008) found that a one-point increase in mean peer achievement results in a statistically significant gain score increase of 0.04 points, equivalent to an increase of 0.0015 standard deviations of achievement gains.

One of the classroom-level variables we include is class size, and one might hypothesize that the high correlation between models with and without classroom-level controls arises because having a more challenging class (e.g., high-poverty students) tends to be offset by having a smaller class. To test this, we re-estimate the Classroom Characteristics VAM excluding class size, but find that this has little effect on the correlations. This finding is consistent with Harris and Sass (2006).

These correlations are higher than those reported in a similar comparison using school effectiveness measures (Ehlert et al. 2012). One explanation for this is that random factors (e.g., students having the flu on testing day) that influence student achievement, and hence teacher effectiveness measures, are relatively more important when the level of aggregation is the classroom rather than the school. This is indeed what one would expect if the random shocks primarily occur at the classroom level but are not strongly correlated across classrooms in a school.

The means of the absolute value of the difference between Student Background and Classroom Characteristics VAM estimates are consistently close to 0.02 in math and 0.013 in reading at the 10th, 25th, 50th, 75th, and 90th percentiles of the effectiveness distribution.

We focus exclusively on prior achievement, free/reduced price lunch, and minority students because there is relatively little variation in other student characteristics at the classroom level in the North Carolina sample. Since we estimate two-year teacher effects, we calculate classroom-level characteristics based on two years of data. To allow for nonlinear relationships, we also include squared and cubed terms for each classroom characteristic.

The analytic sample includes 7,672 “advantaged” classrooms, 3,820 “average” classrooms, and 8,002 “disadvantaged” classrooms according to these definitions. Since the student characteristics are aggregated over two years these are not true classrooms, but instead are averages across two classrooms.

When we exclude class size from the Classroom Characteristics VAM, we find a more (but not completely) equitable distribution of teacher effectiveness across stylized classrooms.

Ehlert et al. (2012) discussed a similar case in which school-level control variables are included in VAMs with school fixed effects. The school-level control variables are identified using variation within schools over time, and the changes in student composition within schools over time are often small, making it unlikely that these variables capture systematic differences in school context. Therefore, the ratio of variation due to measurement error to total identifying variation will be greater when school fixed effects are included in the model, attenuating the coefficients on the school-level covariates. This same issue arises with the estimation of teacher fixed effects.

While not reported here, we also estimated teacher effects with VAMs that included student fixed effects and found the year-to-year correlations to be much lower than for the other VAMs and MGPs, due to the imprecision of the estimates. This is consistent with McCaffrey et al. (2009).

Ehlert et al. (2012) made the case for using a proportional, two-stage value-added approach that assumes the correlation between growth measures of effectiveness and student background covariates is zero, forcing comparisons to be between similar teachers and schools.

REFERENCES

- Aaronson, D., Barrow, L., and Sander, W. (2007), “Teachers and Student Achievement in the Chicago Public High Schools,” Journal of Labor Economics, 25, 95–135. Available at http://www.journals.uchicago.edu/doi/abs/10.1086/508733.

- Ashenfelter, O., and Krueger, A. (1994), “Estimates of the Economic Return to Schooling From a New Sample of Twins,” The American Economic Review, 84, 1157–1173.

- Atteberry, A. (2011), Defining School Value-Added: Do Schools that Appear Strong on One Measure Appear Strong on Another? Evanston, IL. Available at http://www.eric.ed.gov/ERICWebPortal/search/detailmini.jsp?_nfpb=true&_&ERICExtSearch_SearchValue_0=ED518222&ERICExtSearch_SearchType_0=no&accno=ED518222.

- Baker, B.D. (2012), “The Toxic Trifecta, Bad Measurement & Evolving Teacher Evaluation Policies.” Available at http://nepc.colorado.edu/blog/toxic-trifecta-bad-measurement-evolving-teacher-evaluation-policies.

- Ballou, D., Mokher, C.G., and Cavalluzzo, L. (2012), “Using Value Added Assessment for Personnel Decisions: How Omitted Variables and Model Specification Influence Teachers’ Outcomes.” Available at http://www.aefpweb.org/sites/default/files/webform/AEFP-Using VAM for personnel decisions_02-29-12.pdf.

- Ballou, D., Sanders, W.L., and Wright, P.S. (2004), “Controlling for Student Background in Value-Added Assessment of Teachers,” Journal of Educational and Behavioral Statistics, 29, 37–65. Available at http://jeb.sagepub.com/cgi/content/abstract/29/1/37.

- Barlevy, G., and Neal, D. (2012), “Pay for Percentile,” American Economic Review, 102, 1805–1831.

- Betebenner, D. (2007), Estimation of Student Growth Percentiles for the Colorado Student Assessment Program, Dover, NH: National Center for the Improvement of Educational Assessment. Available at http://www.cde.state.co.us/dedocs/Research/PDF/technicalsgppaper_betebenner.pdf.

- ——— (2009), “Norm- and Criterion-Referenced Student Growth,” Educational Measurement: Issues and Practice, 28, 42–51. Available at http://doi.wiley.com/10.1111/j.1745-3992.2009.00161.x.

- Boyd, D.J., Grossman, P., Lankford, H., Loeb, S., and Wyckoff, J. (2008), Measuring Effect Sizes: The Effect of Measurement Error. National Conference on Value-Added Modeling, Madison, WI: University of Wisconsin.

- Boyd, D.J., Lankford, H., Loeb, S., and Wyckoff, J.H. (2010), Teacher Layoffs: An Empirical Illustration of Seniority vs. Measures of Effectiveness, Washington, DC: National Center for Analysis of Longitudinal Data in Education Research. Available at http://www.caldercenter.org/UploadedPDF/1001421-teacher-layoffs.pdf.

- Briggs, D., and Domingue, B. (2011), Due Diligence and the Evaluation of Teachers: A Review of the Value-Added Analysis Underlying the Effectiveness Rankings of Los Angeles Unified School District Teachers by the Los Angeles Times, Boulder, CO: National Education Policy Center. Available at http://greatlakescenter.org/docs/Policy_Briefs/Briggs_LATimes.pdf.

- Buddin, R. (2010), How Effective are Los Angeles Elementary Teachers and Schools? Available at http://www.latimes.com/media/acrobat/2010-08/55538493.pdf.

- Burke, M.A., and Sass, T.R. (2008), “Classroom Peer Effects and Student Achievement,” CALDER Working Paper no. 18. Available at http://www.urban.org/UploadedPDF/1001190_peer_effects.pdf.

- Caldas, S.J., and Bankston, C. (1997), “Effect of School Population Socioeconomic Status on Individual Academic Achievement,” The Journal of Educational Research, 90, 269–277. Available at http://apps.webofknowledge.com.offcampus.lib.washington.edu/full_record.do?product=WOS&search_mode=GeneralSearch&qid=17&SID=2FC8FA7Hp8kFmOCeOOF&page=1&doc=2.

- Castellano, K. (2011), Unpacking Student Growth Percentiles: Statistical Properties of Regression-Based Approaches With Implications for Student and School Classifications, Doctoral Dissertation, University of Iowa. Available at http://ir.uiowa.edu/etd/931.

- Chetty, R., Friedman, J.N., and Rockoff, J.E. (2011), “The Long-Term Impacts of Teachers: Teacher Value-Added and Student Outcomes in Adulthood,” NBER Working Paper no. 17699.

- Clotfelter, C., Ladd, H., and Vigdor, J. (2006), “Teacher–Student Matching and the Assessment of Teacher Effectiveness,” The Journal of Human Resources, 41, 778–820.

- Cornelissen, T. (2008), “The Stata Command Felsdvreg to Fit a Linear Model With Two High-Dimensional Fixed Effects,” Stata Journal, 8, 170–189.

- Darling-Hammond, L., Amrein-Beardsley, A., Haertel, E., and Rothstein, J. (2012), “Evaluating Teacher Evaluation: Popular Modes of Evaluating Teachers Are Fraught With Inaccuracies and Inconsistencies, But the Field has Identified Better Approaches,” Phi Delta Kappan, 93, 8–15. Available at http://uwashington.worldcat.org.offcampus.lib.washington.edu/title/evaluating-teacher-evaluation/oclc/779377670&referer=brief_results.

- Ehlert, M., Koedel, C., Parsons, E., and Podgursky, M. (2012), “Selecting Growth Measures for School and Teacher Evaluations: Should Proportionality Matter?” Working Paper no. 1210, University of Missouri.

- Gabriel, T. (2010, September 2), “A Celebratory Road Trip for Education Secretary,” New York Times, p. A24. Available at http://www.nytimes.com/2010/09/02/education/02duncan.html.

- Glazerman, S., Loeb, S., Goldhaber, D., Staiger, D., Raudenbush, S., and Whitehurst, G. (2010), Evaluating Teachers: The Important Role of Value-Added. Available at http://www.brookings.edu/~/media/Files/rc/reports/2010/1117_evaluating_teachers/1117_evaluating_teachers.pdf.

- Goldhaber, D. (2007), “Everyone's Doing It, But What Does Teacher Testing Tell Us About Teacher Effectiveness?” Journal of Human Resources, 42, 765–794.

- ——— (2010), When the Stakes Are High, Can We Rely on Value-Added? Exploring the Use of Value-Added Models to Inform Teacher Workforce Decisions, Washington, DC: Center for American Progress. Available at http://www.americanprogress.org/issues/2010/12/pdf/vam.pdf.

- Goldhaber, D., and Anthony, E. (2007), “Can Teacher Quality be Effectively Assessed? National Board Certification as a Signal of Effective Teaching,” Review of Economics and Statistics, 89, 134–150.

- Goldhaber, D., Brewer, D., and Anderson, D. (1999), “A Three-Way Error Components Analysis of Educational Productivity,” Education Economics, 7, 199–208.

- Goldhaber, D., and Chaplin, D. (2012), “Assessing the ‘Rothstein Falsification Test.’ Does it Really Show Teacher Value-added Models are Biased?” Mathematica Working Paper. Available at http://www.mathematica-mpr.com/publications/pdfs/education/rothstein_wp.pdf.

- Goldhaber, D., Cowan, J., and Walch, J. (2012), “Is a Good Elementary Teacher Always Good? Assessing Teacher Performance Estimates Across Contexts and Comparison Groups,” CEDR Working Paper no. 2012-7.

- Goldhaber, D., Goldschmidt, P., Sylling, P., and Tseng, F. (2011), “Assessing Value-Added Model Estimates of Teacher Contributions to Student Learning at the High School Level,” CEDR Working Paper no. 2011-4.

- Goldhaber, D., and Hansen, M. (2010), “Using Performance on the Job to Inform Teacher Tenure Decisions,” American Economic Review, 100, 250–255.

- ——— (2013), “Is it Just a Bad Class? Assessing the Long-Term Stability of Estimated Teacher Performance,” Economica, 80, 589–612.

- Goldhaber, D., and Theobald, R. (2011), “Managing the Teacher Workforce in Austere Times: The Implications of Teacher Layoffs,” CEDR Working Paper no. 2011-1.2. Available at http://www.cedr.us/papers/working/CEDR%20WP%202011-1.2%20Teacher%20Layoffs%20(6-15-2011).pdf.

- Goldhaber, D., and Walch, J. (2012), “Strategic Pay Reform: A Student Outcomes-Based Evaluation of Denver's ProComp Teacher Pay Initiative,” Economics of Education Review, 31, 1067–1083.

- Goldhaber, D., Walch, J., and Gabele, B. (2012), “Does the Model Matter: Exploring the Relationship Between Different Student Achievement-Based Teacher Assessments,” CEDR Working Paper no. 2012-6. Available at http://www.cedr.us/papers/working/CEDR%20WP%202012-6_Does%20the%20Model%20Matter.pdf.

- Goldschmidt, P., Choi, K., and Beaudoin, J.P. (2012), Growth Model Comparison Study: A Summary of Results, Washington, DC. Available at http://www.ccsso.org/Documents/2012/Summary_of_Growth_Model_Comparison_Study_2012.pdf.

- Gordon, R.J., Kane, T.J., and Staiger, D.O. (2006), Identifying Effective Teachers Using Performance on the Job. Hamilton Project White Paper, Washington, DC: Brookings Institution. Available at http://www.brook.edu/views/papers/200604hamilton_1.htm.

- Hanushek, E. (1979), “Conceptual and Empirical Issues in the Estimation of Education Production Functions,” Journal of Human Resources, 14, 351–388.

- ——— (1986), “The Economics of Schooling: Production and Efficiency in Public Schools,” Journal of Economic Literature, 24, 1141–1177.

- Hanushek, E.A., and Rivkin, S.G. (2010), “Generalizations About Using Value-added Measures of Teacher Quality,” American Economic Review, 100, 267–271.

- Harris, D., and Sass, T.R. (2006), “Value-Added Models and the Measurement of Teacher Quality,” unpublished manuscript.

- Harris, D., Sass, T., and Semykina, A. (2010), Value-Added Models and the Measurement of Teacher Productivity, Washington, DC, unpublished manuscript.

- Jackson, C., and Bruegmann, E. (2009), “Teaching Students and Teaching Each Other: The Importance of Peer Learning for Teachers,” American Economic Journal: Applied Economics, 1, 85–108.

- Jacob, B., and Lefgren, L. (2008), “Can Principals Identify Effective Teachers? Evidence on Subjective Performance Evaluation in Education,” Journal of Labor Economics, 26, 101–136.

- Kane, T., and Staiger, D. (2008), “Estimating Teacher Impacts on Student Achievement: An Experimental Evaluation,” NBER Working Paper no. 14607. Available at http://www.nber.org/papers/w14607.pdf.

- Koedel, C., and Betts, J. (2007), Re-Examining the Role of Teacher Quality in the Educational Production Function, San Diego, CA: University of Missouri. Available at http://economics.missouri.edu/working-papers/2007/WP0708_koedel.pdf.

- ——— (2011), “Does Student Sorting Invalidate Value-Added Models of Teacher Effectiveness? An Extended Analysis of the Rothstein Critique,” Education Finance and Policy, 6, 18–42.

- Koenker, R. (2005), Quantile Regression (Econometric Society Monographs) (p. 366), Cambridge: Cambridge University Press. Available at http://www.amazon.com/Quantile-Regression-Econometric-Society-Monographs/dp/0521608279.

- LA Times (2010, December 24), “Study Critical of Teacher Layoffs,” Los Angeles Times. Available at http://www.latimes.com/news/local/teachers-investigation/la-me-teachers-study-critical-ap,0,6451518.story.

- Lavy, V., Silva, O., and Weinhardt, F. (2012), “The Good, the Bad, and the Average: Evidence on Ability Peer Effects in Schools,” Journal of Labor Economics, 30, 367–414.

- Lockwood, J.R., and McCaffrey, D.F. (2012), “Reducing Bias in Observational Analyses of Education Data by Accounting for Test Measurement Error,” unpublished manuscript, RAND Corporation.

- Lockwood, J.R., McCaffrey, D.F., Hamilton, L., Stecher, B., Le, V.-N., and Martinez, J.F. (2007), “The Sensitivity of Value-Added Teacher Effect Estimates to Different Mathematics Achievement Measures,” Journal of Educational Measurement, 44, 47–67.

- McCaffrey, D., Koretz, D., Lockwood, J.R., Louis, T.A., and Hamilton, L.S. (2004), “Models for Value-Added Modeling of Teacher Effects,” Journal of Educational and Behavioral Statistics, 29, 67–101.

- McCaffrey, D., Sass, T.R., Lockwood, J.R., and Mihaly, K. (2009), “The Intertemporal Variability of Teacher Effect Estimates,” Education Finance and Policy, 4, 572–606.

- MET (2010), Learning About Teaching: Initial Findings from the Measures of Effective Teaching Project, Bill & Melinda Gates Foundation. Available at http://www.metproject.org/downloads/Preliminary_Findings-Research_Paper.pdf.

- Nichols, A. (2008), “FESE: Stata Module to Calculate Standard Errors for Fixed Effects,” Boston College Department of Economics. Available at http://econpapers.repec.org/software/bocbocode/s456914.htm.

- Papay, J. (2010), “Different Tests, Different Answers: The Stability of Teacher Value-Added Estimates Across Outcome Measures,” American Educational Research Journal, 48, 163–193.

- Podgursky, M., and Springer, M. (2007), “Teacher Performance Pay: A Review,” Journal of Policy Analysis and Management, 26, 909–949.

- R Development Core Team (2008), R: A Language and Environment for Statistical Computing, Vienna, Austria: R Foundation for Statistical Computing. Available at http://www.r-project.org.

- Racine, J. (2011), A Primer on Regression Splines. Available at http://cran.r-project.org/web/packages/crs/vignettes/spline_primer.pdf.

- Rivkin, S., Hanushek, E., and Kain, J. (2005), “Teachers, Schools, and Academic Achievement,” Econometrica, 73, 417–458.

- Rockoff, J., and Speroni, C. (2011), “Subjective and Objective Evaluations of Teacher Effectiveness: Evidence From New York City,” Labour Economics, 18, 687–696.

- Rothstein, J. (2010), “Teacher Quality in Educational Production: Tracking, Decay, and Student Achievement,” Quarterly Journal of Economics, 125, 175–214.

- Schochet, P., and Chiang, H. (2010), Error Rates in Measuring Teacher and School Performance Based on Student Test Score Gains. NCEE 2010-4004. National Center for Education Evaluation and Regional Assistance. Available at http://www.eric.ed.gov/ERICWebPortal/search/detailmini.jsp?_nfpb=true&_&ERICExtSearch_SearchValue_0=ED511026&ERICExtSearch_SearchType_0=no&accno=ED511026.

- Toch, T., and Rothman, R. (2008), Rush to Judgment: Teacher Evaluation in Public Education, Washington, DC: Education Sector. Available at http://www.educationsector.org/usr_doc/RushToJudgment_ES_Jan08.pdf.

- Todd, P., and Wolpin, K. (2003), “On the Specification and Estimation of the Production Function for Cognitive Achievement,” Economic Journal, 113(485), F3–F33.

- Tucker, P. (1997), “Lake Wobegon: Where All the Teachers are Competent (Or, Have We Come to Terms With the Problem of Incompetent Teachers?),” Journal of Personnel Evaluation in Education, 11, 103–126.

- Wei, Y., and He, X. (2006), “Conditional Growth Charts,” The Annals of Statistics, 34, 2069–2097.

- Weisburg, D., Sexton, S., Mulhern, J., and Keeling, D. (2009), The Widget Effect: Our National Failure to Acknowledge and Act on Differences in Teacher Effectiveness, New York: The New Teacher Project.

- Wright, P. (2010), An Investigation of Two Nonparametric Regression Models for Value-Added Assessment in Education, SAS. Available at http://www.sas.com/resources/whitepaper/wp_16975.pdf.

- Wright, P., White, J., Sanders, W., and Rivers, J. (2010), “SAS EVAAS Statistical Models,” SAS White Paper. Available at http://www.sas.com/resources/asset/SAS-EVAAS-Statistical-Models.pdf.