
Abstract

This paper introduces a novel method for instructing wearable robotic arms through neck movements to enhance user control. By capturing head movements representing four aiming directions and analysing surface electromyography (sEMG) signals from specific neck muscles, the study uses the random forest algorithm for movement recognition. Results show that using sEMG signals from six muscles improves classification accuracy and that applying the variational mode decomposition (VMD) algorithm enhances feature extraction. Notably, right-side muscles, particularly the sternocleidomastoid, have a significant impact on movement classification. These findings provide theoretical support for better recognition of neck movements.

1. Introduction

Wearable combat arms are a type of wearable robotic arm that can augment human strength and quickly give the body additional capabilities. With the rapid development of related disciplines, robotic arms that give a wearer "three heads and six arms" have gradually become possible, meeting the wearer's demand to complete multiple tasks simultaneously and expanding the range of human activities and fields of application. Wearable robotic arms have been developed for many years and have accumulated a large body of work. The concept was first proposed by Asada at MIT in the United States, who introduced supernumerary robotic limbs (SRL), which provide two additional robotic arms for human users (Llorens-Bonilla et al., 2012). In subsequent research, the team optimised and expanded the functionality of SRL (Bright & Asada, 2017; Parietti et al., 2015; Parietti & Asada, 2014). After years of development, the field of wearable robotic arms has accumulated a wealth of research, and institutions such as Keio University and Waseda University in Japan, Koryo University in South Korea, and Arizona State University and Cornell University in the United States have all achieved significant results (Al-ada et al., 2019; Gopinath & Weinberg, 2016; Maekawa et al., 2019; Nabeshima et al., 2019; Nguyen et al., 2019; Seo et al., 2016). Most wearable robotic arm designs have abandoned control methods such as button pressing and gestures, because these arms are primarily intended to complete their own tasks while freeing the wearer's hands. Common control methods currently include voice control, mapping of leg movements, electroencephalogram (EEG) control, eye-movement control, and control by an operator other than the wearer. In 2017, Tomoya Sasaki et al. from Keio University, Japan, developed a robotic arm system called MetaLimbs, which is controlled by mapping the user's lower-limb movements to the robotic arms. The system is attached to the waist and uses bend sensors placed at the user's feet and knees so that movements of these body parts drive the robotic arms. Its sensors provide real-time force feedback that helps users position their toes correctly for grasping while controlling the arms with their feet (Sasaki et al., 2017, 2018; Tomoya et al., 2017). Similarly, the Third Thumb, designed by New Zealand designer Danielle Clode in 2017, controls the bending and rotation of a prosthetic finger through foot movements. This wearable hand-augmentation device, also known as the "sixth finger", can assist users with disabilities in a variety of tasks by cooperating with the other fingers, from replacing lost functionality to extending the capabilities of the human body; its development is currently moving towards neuroscientific methods (Amoruso et al., 2022; Kieliba et al., 2020, 2021). In 2018, Christian I. Penaloza et al. proposed an intelligent external limb controlled by the human brain, which performs operations based on signals received from a brain–machine interface when the user imagines movements. The system's visual context awareness enables it to recognise objects and human behaviour, supplementing the information from the brain–machine interface and allowing more complex actions to be performed in response to the same signal (Penaloza et al., 2018). Yongtian He considers the performance of brain–machine-interface control of lower-limb systems to be relatively good, but owing to issues such as small sample sizes and safety hazards, practical application is still far off (He et al., 2018). Catherine Véronneau et al. developed a waist-worn external limb robot that uses master–slave control: another operator coordinates the robotic arm through a miniaturised handheld arm (Veronneau et al., 2020). In addition, Reuben Aronson's team attempted to send commands to wearable robotic arms through eye tracking. By analysing hand–eye coordination during human–machine interaction, they combined visual attention with operational intent recognition, attempting to predict the user's intent from eye-gaze patterns. Areas of interest in the displayed image were clustered and extracted based on gaze patterns, and the objects in each area were classified, enabling the robotic arm to perform grasping tasks (Aronson & Admoni, 2019; Koochaki & Najafizadeh, 2018). In 2020, Zhang Yuzhuo et al. developed a voice-controlled intelligent handwriting robot system that provides voice recognition, comparison and correction, text conversion, real-time handwriting from voice input, font switching, and handwriting precision control (Zhang et al., 2020). In 2022, Zhang Bingkai used voice control to enable a Dobot robot to play board games, with control precision and voice-recognition accuracy meeting the requirements of playing Go; this offers a new form of entertainment for elderly or disabled people (Zhang et al., 2022). Masaaki Fukuoka et al. from Keio University also used facial expressions to determine the user's intent, enabling the wearer to control a robotic arm through facial expressions (Fukuoka et al., 2019). Each of these control methods has its own advantages and disadvantages and can effectively replace manual control of robotic arms; specific control strategies can be designed for the particular environment and requirements.
The operational environment of wearable combat robotic arms is more complex and diverse. Voice control is vulnerable to noise interference, and some operational tasks require silence, which makes speech-based communication impractical. During combat, the limbs are the main effectors of movement, so using them to transmit robotic arm commands would interfere with the wearer's own actions. In addition, the control accuracy currently achievable with brainwave and eye-movement control does not meet the requirements. Therefore, this article transmits instructions based on sEMG signals from the neck muscles. sEMG is a technique in which the electrical potentials on the surface of muscles are picked up by electrodes, amplified, and recorded. These potentials arise from the interaction between neurons and muscle cells during muscle contraction. sEMG can be used to study muscle motor control, muscle diseases, and rehabilitation, both in the laboratory and in practical environments. It provides information such as muscle activity intensity, frequency, timing, and phase, which is important for understanding muscle mechanics and motor coordination, and it has been widely used to verify the interaction and comfort of wearable equipment. In 2022, Zhu Penglin et al. designed a lower-limb wearable exoskeleton control system based on sEMG signals from the main muscles involved in shoulder flexion and abduction. The system controlled the servomotors of the thigh-related joints based on sEMG signals from the shoulder muscles and provided the relevant torque to complete the movement based on three-dimensional gait data collected from patients with special gaits (Zhu et al., 2022). In 2016, Xu Chaoli et al. proposed an intelligent mobile robot control method based on sEMG signals from the calf muscles, which can be used to determine the user's motion intent.
The minimum redundancy maximum relevance (mRMR) algorithm was used to identify and classify four motion intentions (forward, backward, left turn, and right turn), achieving real-time coordination and interaction between humans and robots (Xu et al., 2016). The neck muscles are rarely used as the primary muscles in tactical actions or combat missions. However, together with the cervical vertebrae they give the head six degrees of freedom, allowing a variety of movements that can convey a significant amount of information. The neck muscles have a low fat content and are clearly visible owing to their location, making them ideal sEMG collection sites. Therefore, control commands for wearable robotic arms can be derived from sEMG data of the neck muscles.

This article uses sEMG data from the neck muscles to classify four dynamic head movements. Each dynamic movement is separated into several static movement segments for recognition and classification. Two features, the integrated EMG (iEMG) and information entropy, are extracted; in addition, VMD is used to decompose the muscle data, and a subset of the resulting intrinsic mode functions (IMFs) is used for classification analysis. Finally, the feasibility of controlling wearable robotic arms with neck sEMG signals is verified through experiments. The influence of the number of muscles and of the VMD features on classification performance is analysed, and the relationship between the neck muscles and neck movements is discussed based on the experimental results.

2. Problem analysis

2.1. Combat robotic arm problem analysis

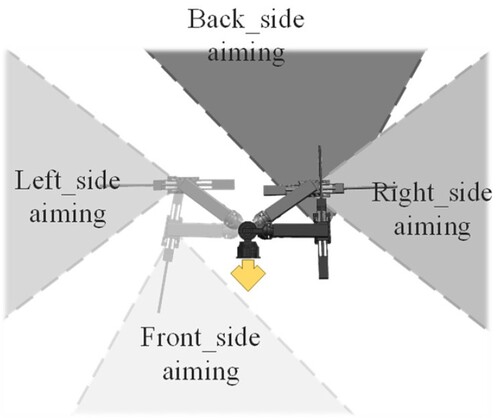

In combat environments, wearable equipment faces higher requirements for human–computer interaction. Wearable combat robotic arms can protect the wearer by carrying lethal end-effectors and providing a degree of precise strike capability. During missions, the arm can perform strikes in the wearer's blind spots, such as behind the wearer, or take over strike tasks when both of the wearer's hands are occupied. In operation, the arm's structure can be simplified to four degrees of freedom divided into two groups: a tracking group near the end effector, responsible for target tracking, and an adjustment group near the body, responsible for large-scale posture adjustment. On this basis, the wearer-centred coordinate system can be divided into four areas, each corresponding to a preset pose of the adjustment group. When a command is given, the robotic arm quickly moves to the preset pose and begins searching for targets; once a target is found, it locks on and tracks it within the search range allowed by the arm's structure. The area division and adjustment-group poses are shown in Figure 1.

During combat, the wearer's limbs must take part in the task, so sending instructions through limb movements may interrupt the task and reduce operational efficiency. Remote personnel who are not present on the battlefield find it harder than the wearer to grasp the situation and make tactical decisions. The battlefield is also noisy, which makes voice commands difficult to capture, and voice commands cannot be used in tasks that require silence. Overall, compared with these methods, neck sEMG signals occupy neither the wearer's hands nor voice and are unaffected by ambient noise, so they suit a wider range of scenarios and conditions.

2.2. Choosing neck exercises

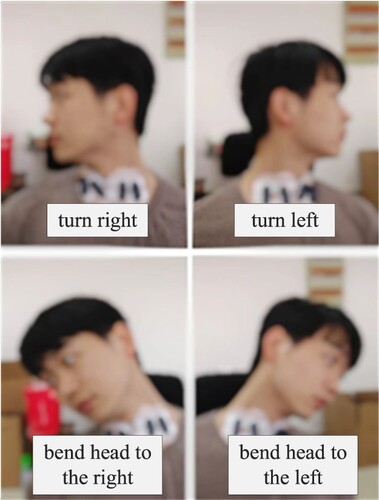

As the connection between the torso and the head, the neck typically does not require a large range of motion in daily life or in tactical movements. Its strength requirements are usually low, and its muscles are typically kept in a relatively stable state to maintain the sensory stability of the head. The neck muscles are numerous and prominent, easy to locate, and covered by a thin layer of fat, which makes them ideal sites for sEMG signal collection. To make the robotic arm's movements conform to human observation habits, four dynamic movements are designed as instructions, as shown in Figure 2.

When an anomaly appears on the left, people habitually turn their heads to the left, so turning the head to the left corresponds to scanning and targeting the left space: when the combat robotic arm recognises this command, it quickly moves to the left area and begins searching for targets. The same applies to the right side. Leaning the head to the left corresponds to frontal aiming, used for multi-target strikes or for striking around cover, and leaning the head to the right corresponds to scanning the rear area.

To prevent head movements during normal operations from being misinterpreted as commands and adjusting the robotic arm's posture, this article divides each of the four dynamic movement commands into two movement primitives (a starting phase and a holding phase). Within each recording window, the class predicted most often is taken as the window's result, and a command is triggered only when the corresponding movement primitives appear in a specific order. In addition, muscle states that correspond to a relaxed neck and to minor head movements during regular work are labelled as a ninth class, so that ordinary activity is not mistaken for a command primitive; commands are still recognised only when primitives appear in the specified order.
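The command logic described above can be sketched in Python. This is a minimal illustration rather than the authors' implementation: the label names (such as `turn_left_start`), the per-window majority vote, and the exact ordering rule (a start primitive in one window immediately followed by the matching hold primitive in the next) are assumptions, while the movement-to-area mapping follows the description of the four commands.

```python
from collections import Counter

# Hypothetical label names: "<movement>_start", "<movement>_hold", and "rest" (ninth class).
MOVEMENT_TO_AREA = {
    "turn_left": "left",    # turn head left  -> scan the left area
    "turn_right": "right",  # turn head right -> scan the right area
    "lean_left": "front",   # lean head left  -> frontal aiming
    "lean_right": "back",   # lean head right -> scan the rear area
}
REST = "rest"


def window_vote(predictions):
    """Majority vote over the per-frame class predictions inside one recording window."""
    return Counter(predictions).most_common(1)[0][0]


def decode_commands(window_predictions):
    """Return the target areas triggered by a sequence of windows.

    Assumed rule: a command fires when a window voted '<movement>_start' is
    immediately followed by a window voted '<movement>_hold'.
    """
    commands = []
    previous = REST
    for preds in window_predictions:
        label = window_vote(preds)
        for movement, area in MOVEMENT_TO_AREA.items():
            if previous == f"{movement}_start" and label == f"{movement}_hold":
                commands.append(area)
        previous = label
    return commands
```

Requiring the start-then-hold order means that a head simply held turned during ordinary work (a hold primitive without a preceding start) does not trigger a command.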

2.3. Choosing neck muscles

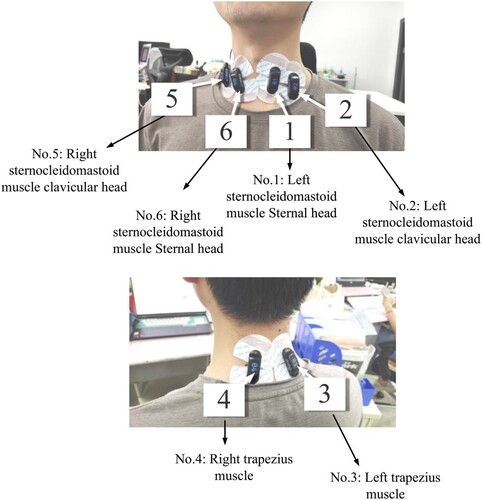

Neck movements are accomplished through the coordination of multiple muscles, and sEMG signals from the neck muscles have been used in various studies of human motion. In 2016, Mingxing Zhu and colleagues analysed pharyngeal muscle activity during swallowing using high-density sEMG signals from the neck muscles (Zhu et al., 2016), and in 2018 Mingxing Zhu studied the mechanism of human vocalisation through the neck muscles (Zhu et al., 2018). In 2019, high-density sEMG (HD-sEMG) was used to collect signals from the facial and neck muscles and to analyse the role of each muscle during speech (Zhu et al., 2019). Beyond the mechanisms of neck movement, sEMG signals from the neck muscles are also used to evaluate fatigue in the neck and upper back. In 2018, Xi Yang tested the effect of the AGS backpack on relieving fatigue in the neck, shoulder, and back muscles using muscles such as the neck extensors and the trapezius (Yang et al., 2018). In the same year, Miao Jia and colleagues studied the effects on the neck of prolonged head-down posture while using mobile phones, mainly collecting data from the sternocleidomastoid (an anterior cervical muscle), the cervical trapezius, and the cephalic muscle (mainly a posterior cervical muscle) (Jia et al., 2018). Kavita Kushwah and colleagues analysed head movements using sEMG signals from the neck muscles, measuring upper trapezius activity in different head positions in both standing and sitting postures. They found statistically significant differences in the root mean square (RMS) values of the sEMG signals across head positions, indicating that sEMG signals can be used to study good head posture (Kushwah et al., 2018).
This article collects electromyography signals from two neck muscles on each side, the sternocleidomastoid (sternal and clavicular heads) and the trapezius, giving three recording sites per side and six in total. These muscles are relatively superficial in the neck region and directly involved in head movements. The sternocleidomastoid is the most superficial muscle of the neck and is divided into two bundles: the slender bundle attached to the sternum is the sternal head, and the flat bundle attached to the anterior surface of the medial third of the clavicle is the clavicular head. Electrodes for this muscle are placed on the mid-lower belly of each bundle. The lower portion of the trapezius is strongly affected by shoulder movements; given the uncertainty of shoulder movements in complex environments, the upper part was chosen as the collection site for this muscle. The designed head movements have minimal effect on electrodes at this location, so invalid signals caused by electrode compression during head movement are less likely. Following common neck-muscle exercise practice, participants turn their heads to one side against resistance while inhaling, which makes the sternocleidomastoid more prominent. The collection positions are shown in Figure 3.

3. Data preprocessing and classification algorithms

This section introduces the algorithms used in this study. The raw signals are first band-pass filtered and rectified. iEMG and entropy features are then extracted from this initial signal for classification. Next, VMD is performed, and the first six intrinsic mode functions (IMFs) are extracted for further classification comparison. Classification is performed with the random forest algorithm.

In this study, we used the sliding window technique for feature extraction and extracted two time-domain features: iEMG and entropy.

iEMG: the integrated EMG is the integral (sum) of the absolute sEMG signal over the detection period. It reflects the total activation level of the muscle within that period and characterises muscle contraction:

$$\mathrm{iEMG} = \sum_{i=1}^{N} \lvert x_i \rvert \tag{1}$$

where $x_i$ represents the signal value at sample $i$ and $N$ is the number of samples in the period.

Entropy: the information entropy of the sEMG signal describes how its values are distributed and can therefore be used to describe its complexity; the more random or complex the signal, the higher the entropy:

$$H = -\sum_{i} p(x_i)\log p(x_i) \tag{2}$$

where $x_i$ represents the signal value and $p(x_i)$ represents the probability that the signal takes the value $x_i$.
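As a sketch of the sliding-window extraction of the features in Equations (1) and (2), the following Python code computes iEMG and entropy for every channel. The window length, step, and the histogram-based estimate of the probabilities (32 bins, base-2 logarithm, so entropy is in bits) are assumptions, not values reported in this study.

```python
import numpy as np


def iemg(window):
    """Equation (1): sum of absolute sample values in the window."""
    return float(np.sum(np.abs(window)))


def shannon_entropy(window, bins=32):
    """Equation (2), with p(x_i) estimated from an amplitude histogram (assumed bin count)."""
    counts, _ = np.histogram(window, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def sliding_window_features(emg, win=200, step=100):
    """Return an array of shape (n_windows, 2 * n_channels).

    emg: array of shape (n_samples, n_channels). Columns are ordered
    [iEMG ch0, entropy ch0, iEMG ch1, entropy ch1, ...].
    win/step are hypothetical (200 samples = 100 ms at 2000 Hz).
    """
    emg = np.asarray(emg, dtype=float)
    rows = []
    for start in range(0, emg.shape[0] - win + 1, step):
        seg = emg[start:start + win]
        rows.append([f(seg[:, c]) for c in range(emg.shape[1])
                     for f in (iemg, shannon_entropy)])
    return np.array(rows)
```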

3.1. Feature extraction based on VMD

To improve classification accuracy, this paper applies VMD to the signals and extracts features from the resulting IMFs. VMD was proposed by Dragomiretskiy and Zosso (2014). It assumes that any signal is composed of a series of sub-signals (the IMFs), each with a specific centre frequency and a limited bandwidth. Building on classical Wiener filtering theory, it solves a variational problem to determine the centre frequency and bandwidth of each sub-signal; the components corresponding to each centre frequency are then recovered in the frequency domain, yielding the mode functions.

The VMD algorithm has several parameters, including the number of components K, the regularisation parameter α, the stopping tolerance ε, and the number of iterations n, and their choice affects its performance. Because the characteristics of sEMG signals vary greatly across actions, it is difficult to find a single optimal parameter set, and a unified set may not give the best classification results for every action. However, varying the VMD parameters in real time during classification would make it difficult to build a classification model from the resulting features, and running VMD with many parameter sets would produce excessive data. As a compromise, a unified parameter set is used: the VMD decomposition was optimised for multiple sets of actions to determine the approximate range of the optimal solution, and one parameter set within that range was selected for signal processing.
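For reference, a compact VMD can be written in a few dozen lines of NumPy. The sketch below follows the alternating update scheme of Dragomiretskiy and Zosso (a Wiener-filter update of each mode spectrum followed by a power-weighted update of its centre frequency), with default parameters set to the values used in the experiments (K = 8, α = 450, ε = 1e-7, n = 100). It writes the penalty as 1 + α(ω − ω_k)² as common reference implementations do, omits the mirror extension those implementations apply, removes the signal mean, and uses a relative-change stopping rule; all of these simplifications are assumptions, and a tested library should be preferred for real analysis.

```python
import numpy as np


def vmd(signal, K=8, alpha=450.0, tol=1e-7, max_iter=100, tau=0.0):
    """Minimal variational mode decomposition sketch.

    Returns (modes, centre_freqs): modes has shape (K, len(signal)); centre
    frequencies are normalised (cycles per sample, 0 to 0.5). Both are sorted
    by centre frequency. No mirror extension is applied.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                       # assume zero-mean input (DC is not modelled)
    T = x.size
    freqs = np.fft.fftfreq(T)              # normalised frequency of each FFT bin
    pos = freqs >= 0                       # work on the non-negative half-spectrum
    f_hat = np.where(pos, np.fft.fft(x), 0)

    u_hat = np.zeros((K, T), dtype=complex)   # mode spectra
    omega = 0.5 * np.arange(K) / K            # uniformly spread initial centre frequencies
    lam = np.zeros(T, dtype=complex)          # Lagrange multiplier spectrum

    for _ in range(max_iter):
        u_old = u_hat.copy()
        for k in range(K):
            # Wiener-filter update: residual of all other modes, centred on omega[k]
            residual = f_hat - (u_hat.sum(axis=0) - u_hat[k])
            u_hat[k] = (residual + lam / 2) / (1 + alpha * (freqs - omega[k]) ** 2)
            # centre frequency = power-weighted mean frequency of the mode
            power = np.abs(u_hat[k, pos]) ** 2
            omega[k] = np.sum(freqs[pos] * power) / (np.sum(power) + 1e-30)
        # dual ascent on the reconstruction constraint (tau = 0 disables it)
        lam = lam + tau * (f_hat - u_hat.sum(axis=0))
        change = np.sum(np.abs(u_hat - u_old) ** 2) / (np.sum(np.abs(u_old) ** 2) + 1e-30)
        if change < tol:
            break

    # a one-sided spectrum maps back to a real signal as 2 * Re(IFFT)
    modes = 2.0 * np.real(np.fft.ifft(u_hat, axis=1))
    order = np.argsort(omega)
    return modes[order], omega[order]
```

With the study's settings, `modes[:6]` would give the first six IMFs used for feature extraction.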

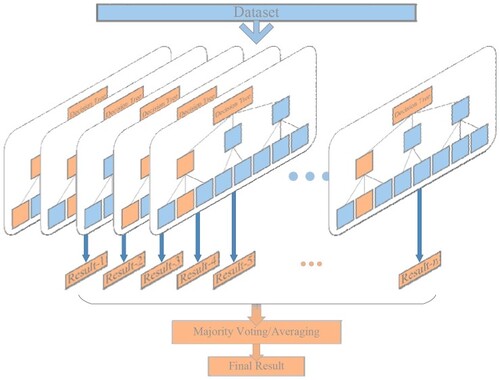

3.2. Random forest

Random forest is a classifier or regressor composed of multiple decision trees. During training, each decision tree is built from a random subset of the original dataset, as shown in Figure 4. It is one of the most widely used machine learning algorithms, with applications including facial recognition, drug design, financial risk control, and stock market prediction.

The training process of a random forest includes the following steps: (1) Use bootstrap sampling (sampling with replacement) to draw r different sample sets from the training set, each serving as the root-node sample set of one of r decision trees; the data left out of each sample are used as out-of-bag data for estimating the model error. (2) For each decision tree, when splitting a node, randomly select a subset of all features and choose the optimal split among them; each tree is grown to its maximum extent. (3) Repeat step (2) until all decision trees have been grown, at which point training of the random forest is complete.
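Steps (1) to (3) correspond to options of scikit-learn's `RandomForestClassifier`, and the sketch below maps each step to a parameter. Using scikit-learn here is an assumption, suggested by the `n_estimators` and `random_state` parameter names reported in the experiments; `max_features="sqrt"` is a common choice for the size of the random feature subset, not a value taken from this study.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def train_random_forest(features, labels, n_trees=100, seed=42):
    """Fit a random forest whose options mirror training steps (1) to (3)."""
    model = RandomForestClassifier(
        n_estimators=n_trees,   # r decision trees
        bootstrap=True,         # step (1): each tree gets a sample drawn with replacement
        oob_score=True,         # step (1): left-out samples estimate the error
        max_features="sqrt",    # step (2): random feature subset at every split
        max_depth=None,         # step (2): grow each tree to its maximum extent
        random_state=seed,
    )
    model.fit(features, labels)  # step (3): all trees are grown here
    return model
```

After fitting, `model.oob_score_` gives the out-of-bag accuracy described in step (1).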

4. Experimental results and analysis

The experimental equipment was INFO Instrument's PicoX wireless sEMG sensor, which is suitable for clinical gait analysis and other studies that require high signal quality. It samples EMG at 2000 Hz and its IMU at 500 Hz, has a battery life of up to 10 h, can be configured with up to 36 synchronous channels, and has a wireless range of up to 40 m.

sEMG signals are weak bioelectrical signals that can be affected by interference such as respiration and heart activity, so they cannot be used directly; they must first be filtered and otherwise processed before feature extraction. The useful energy of sEMG lies mainly in the range of about 20–500 Hz, so band-pass filters with a 20–500 Hz passband are commonly used to remove high- and low-frequency noise while retaining the main working frequencies. Baseline drift removal then moves the baseline of the signal to a constant level, making signals easier to analyse and compare.
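The preprocessing described above might look like this with SciPy. The 20–500 Hz passband and the 2000 Hz sampling rate come from the text; the fourth-order Butterworth design, zero-phase filtering, and mean subtraction as the baseline correction are assumptions. Rectification follows the pipeline summary at the start of Section 3.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 2000.0  # Hz, EMG sampling rate of the sensor


def preprocess_emg(raw, fs=FS, band=(20.0, 500.0), order=4):
    """Band-pass filter, remove baseline offset, and rectify.

    raw: array of shape (n_samples,) or (n_samples, n_channels).
    Filter order and zero-phase filtering are assumptions.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, np.asarray(raw, dtype=float), axis=0)
    filtered = filtered - filtered.mean(axis=0)  # assumed baseline correction
    return np.abs(filtered)                       # full-wave rectification
```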

Five volunteers participated in the experiment; for ethical reasons, they were informed of the experimental content beforehand. sEMG signals were collected from six positions on each volunteer. Each of the four movements to be classified was divided into two segments, giving eight classes. In addition, sEMG data from normal working conditions were collected as a ninth class to avoid erroneous instructions.

Each action is divided into two phases: a starting phase and a holding phase. For the starting phase, the subject relaxes the neck muscles and holds a posture between facing forward and the holding-phase position. On the start command, sEMG recording begins while the subject moves from the current posture to the holding-phase posture within about 1 s, after which recording stops. After a one-minute rest, the next set of data is collected. For the holding phase, the subject holds the specified posture while data are collected for 5 s, followed by a one-minute rest before the next set.

The collected data are band-pass filtered and baseline drift is removed. iEMG and information entropy features are extracted with a sliding window and used as input to train the random forest classifier. To compare classification performance for different numbers of muscles, features from 2 to 6 muscles are used; for fewer than six muscles, every combination of the six sites is evaluated: 15 combinations for 2 muscles, 20 for 3, 15 for 4, 6 for 5, and 1 for 6. Each data set undergoes 50 rounds of classification training, and the evaluation metrics are averaged over all combinations with the same number of muscles. The random forest parameters are set as follows: n_estimators is 100 and random_state is 42.
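The muscle-combination experiment can be sketched as below; `itertools.combinations` reproduces the subset counts given above. How the data were split in each of the 50 rounds is not stated, so the stratified 70/30 split re-drawn each round, and the column layout of the feature matrix, are assumptions; the forest uses `n_estimators=100` and `random_state=42` as reported.

```python
from itertools import combinations

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def site_combinations(n_sites=6, min_size=2):
    """All subsets of the recording sites, grouped by subset size."""
    return {k: list(combinations(range(n_sites), k)) for k in range(min_size, n_sites + 1)}


def mean_accuracy_by_size(X, y, feats_per_site=2, rounds=50, n_trees=100):
    """Mean test accuracy for each number of muscles.

    X: (n_windows, n_sites * feats_per_site), columns grouped by site
    (assumed layout). The per-round 70/30 stratified split is an assumption.
    """
    X = np.asarray(X)
    y = np.asarray(y)
    n_sites = X.shape[1] // feats_per_site
    results = {}
    for k, combos in site_combinations(n_sites).items():
        accs = []
        for combo in combos:
            cols = [s * feats_per_site + f for s in combo for f in range(feats_per_site)]
            for r in range(rounds):
                X_tr, X_te, y_tr, y_te = train_test_split(
                    X[:, cols], y, test_size=0.3, stratify=y, random_state=r)
                clf = RandomForestClassifier(n_estimators=n_trees, random_state=42)
                accs.append(clf.fit(X_tr, y_tr).score(X_te, y_te))
        results[k] = float(np.mean(accs))
    return results
```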

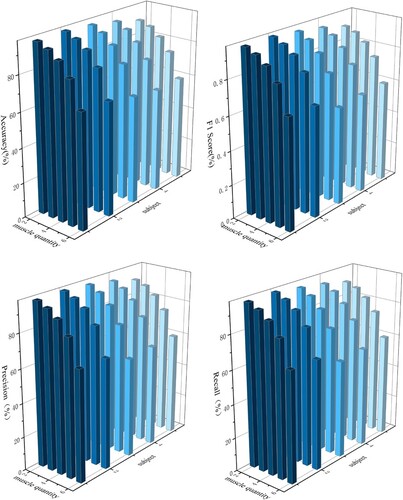

The classification results are shown in Figure 5.

As the figure shows, the effect of the number of muscles on classification results is consistent across subjects and follows a clear trend. All six collection sites contribute to classification performance: accuracy rises as more muscles are collected and is highest with all six. As the number of muscles decreases, accuracy falls increasingly quickly. Hence, when few muscles are collected, adding even a small number of muscles markedly improves accuracy, whereas adding further muscles to an already large set yields little additional gain. The average values for the five subjects are shown in Table 1.

Table 1. The classification performance of the five subjects.

In addition, the standard deviation of the classification results across the 50 rounds is shown in Figure .

When multiple muscles are collected, stable classification performance can be achieved by extracting only iEMG and information entropy features.

After comparing classification performance based on the information entropy and iEMG features of the initial signal, VMD features were added for comparison. Classification performance was compared among three feature sets: initial-signal features, VMD features, and the combination of both. The VMD parameters were: number of components K = 8, regularisation parameter α = 450, stopping tolerance ε = 1e-7, and maximum number of iterations n = 100.
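One way to assemble the three feature sets compared here is sketched below. It assumes that the same iEMG and entropy features are computed on the first six IMFs as on the initial signal (the study does not state which features were taken from the IMFs); `imfs` would come from a VMD with K = 8, sorted by centre frequency. The histogram entropy estimate (32 bins, base-2 logarithm) is also an assumption.

```python
import numpy as np


def _iemg(x):
    """Equation (1): sum of absolute values."""
    return float(np.sum(np.abs(x)))


def _entropy(x, bins=32):
    """Equation (2) with a histogram estimate of p(x_i) (assumed bin count)."""
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def combined_features(window, imfs, n_imfs=6):
    """Feature vector for one channel and one window.

    Layout: [iEMG, entropy] of the initial signal, then [iEMG, entropy]
    of each of the first n_imfs IMFs. imfs: array of shape (K, len(window)).
    """
    parts = [np.asarray(window, dtype=float)] + list(np.asarray(imfs)[:n_imfs])
    return np.array([f(p) for p in parts for f in (_iemg, _entropy)])
```

Slicing the result gives the other two variants: `features[:2]` for initial-signal features only and `features[2:]` for VMD features only.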

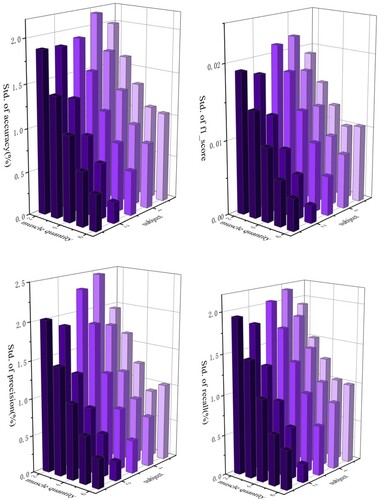

The results are shown in Figure .

As the figure shows, adding VMD component features significantly improved accuracy, especially when only a few muscles were used. Combining VMD features with initial-signal features gave the highest accuracy of the three feature sets, but its gain over VMD features alone was small. The average values for the five subjects are shown in Table 2.

Table 2. Classification accuracy performance based on different features and muscle quantities

Table 2 shows that combining VMD features with initial-signal features achieves the highest accuracy, but the improvement over VMD features alone is not significant. Two factors may explain this.

First, the VMD features may already capture the key information that characterises neck movements, so VMD features alone achieve high classification accuracy and adding initial-signal features provides limited further improvement. Second, the initial-signal features may introduce noise or redundant information, which can lead to overfitting and weaken the model's performance on the classification task, making the accuracy gain less pronounced.

Therefore, although combining VMD decomposition features with initial signal features improves accuracy to some extent, the improvement is relatively limited due to the aforementioned reasons. In future research, further optimisation of feature selection and model design can be explored to fully leverage the potential of initial signal features and enhance the degree of accuracy improvement.

Classification performance may also vary among muscle combinations. Because adding VMD features yielded higher classification accuracy and a lower standard deviation across the 50 rounds, that is, more stable performance, the following analysis of muscle combinations uses both VMD and initial-signal features. The classification accuracy for the different muscle combinations is shown in Table 3.

Table 3. Classification accuracy performance based on different muscle combinations.

The classification accuracy for different muscle combinations is summarised in Table 3. Among combinations with the same number of muscles, the lowest-accuracy groups contained the clavicular head of the right sternocleidomastoid least often (only twice) and the clavicular head of the left sternocleidomastoid most often (18 times). Conversely, the highest-accuracy groups contained the right clavicular head most often (24 times) and the left clavicular head least often (10 times). This suggests that the clavicular head of the right sternocleidomastoid may have a greater impact on neck movement recognition, while the contribution of the left clavicular head to classification performance may be smaller or unclear.

The classification accuracy data from the five subjects were integrated, and a weighted average method was used to score the performance of single muscle classification. The formula is as follows:

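$$S_i = \frac{1}{m}\sum_{j \in G_i} A_j \tag{3}$$

where $i$ is the identifier of the muscle, $j$ is the identifier of the group, $G_i$ is the set of groups that contain muscle $i$, $A_j$ is the accuracy of group $j$, and $m = \lvert G_i \rvert$ is the total number of groups that contain muscle $i$. The muscle scoring results for muscle groups containing 3, 4, and 5 muscles are shown in Table 4.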
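Reading Equation (3) as the plain mean accuracy of all groups that contain a given muscle (the exact weighting is not recoverable from this text, so this is an assumption), the score can be computed as follows. The group-to-accuracy mapping used in the usage example is made-up illustrative data, not results from the study.

```python
import numpy as np


def muscle_scores(group_accuracy, n_muscles=6):
    """Score of muscle i = mean accuracy over the m groups that contain i.

    group_accuracy: dict mapping a tuple of muscle indices (one group) to
    that group's classification accuracy. Muscles in no group get NaN.
    """
    scores = {}
    for i in range(n_muscles):
        accs = [acc for group, acc in group_accuracy.items() if i in group]
        scores[i] = float(np.mean(accs)) if accs else float("nan")
    return scores
```

For example, `muscle_scores({(0, 1, 2): 0.9, (0, 1, 3): 0.8})` averages 0.9 and 0.8 for muscle 0, because both groups contain it.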

Table 4. Different muscle scoring results.

Because the experimenters differ in their movement habits and understanding of the movements, muscle scores vary among individuals, but certain trends emerge. As shown in Table 4, the highest score within a group was most often obtained by the clavicular head of the right sternocleidomastoid (11 times), followed by the sternal head of the right sternocleidomastoid (4 times). The second-highest scores were concentrated on the right sternal head (6 times), while the right trapezius, the left trapezius, and the right clavicular head each received the second-highest score 3 times. The lowest scores were mostly obtained by the clavicular head of the left sternocleidomastoid (6 times), followed by the right trapezius (5 times). The second-lowest scores were also concentrated on the left clavicular head (6 times), and the right sternal head received the second-lowest score 4 times. These results are summarised in Table 5.

Table 5. Summary of muscle scoring performance.

Across participants, the higher-scoring muscles were mostly located on the right side of the neck and the lower-scoring muscles on the left. The highest- and lowest-scoring muscles were also the two muscles identified earlier as having the greatest and the least influence on classification accuracy, respectively. This suggests that features from right-side neck muscles may contribute more to classification performance. This asymmetry departs from the bilateral symmetry expected of the human body; one possible explanation is that all participants were right-handed.
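One simple way to summarise the left/right pattern is to average the muscle scores on each side. The sketch assumes hypothetical labels prefixed with `R-` or `L-` and placeholder scores; it is descriptive only and does not test whether a difference between sides is statistically significant.

```python
from statistics import mean


def side_means(scores):
    """Average muscle scores separately for the right and left side.

    scores: dict mapping a muscle label to its score. Labels are assumed
    (hypothetically) to begin with "R-" or "L-" to mark the side.
    """
    sides = {"right": [], "left": []}
    for name, score in scores.items():
        if name.startswith("R-"):
            sides["right"].append(score)
        elif name.startswith("L-"):
            sides["left"].append(score)
    # Skip a side with no muscles rather than calling mean() on an empty list.
    return {side: mean(vals) for side, vals in sides.items() if vals}


# Placeholder scores for one participant (not study data).
scores = {"R-SCM-C": 0.94, "R-SCM-S": 0.92, "R-TRAP": 0.90,
          "L-SCM-C": 0.86, "L-SCM-S": 0.89, "L-TRAP": 0.90}
print(side_means(scores))
```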

5. Conclusion

This article proposes a control strategy that uses sEMG signals from the neck muscles to command a robotic arm mounted on wearable combat equipment, addressing the difficulty of issuing commands to such an arm. The wearer performs predefined movements that generate sEMG signals; features are extracted from these signals and used to train a random forest (RF) classifier that recognises the different movement primitives. When movement primitives are performed in a specific order, the sequence is interpreted as a command for the combat robotic arm to execute the corresponding action. The results show that classification accuracy increases with the number of muscles used for data collection. Although applying VMD with a single, unified parameter set to all sEMG data cannot achieve an optimal decomposition for every signal, it still improves classification accuracy: adding VMD-derived features significantly raises accuracy compared with extracting features directly from the raw signals. Classification performance was better when the right sternocleidomastoid clavicular head was included and slightly worse when the left sternocleidomastoid clavicular head was used. For the five participants in this study, neck muscles on the right side yielded higher classification accuracy than those on the left.
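The step in which an ordered sequence of primitives becomes a command can be sketched as a small sequence decoder. The primitive names (`UP`, `DOWN`, `LEFT`, `RIGHT`, standing in for the four aiming directions), the command names, and the reset rule are all hypothetical; the paper does not specify its command vocabulary or decoding logic.

```python
# Hypothetical command table: primitive names and command names are
# illustrative placeholders, not the paper's vocabulary. Assumes every
# command is at least two primitives long and none is a prefix of another.
COMMANDS = {
    ("UP", "UP"): "raise_arm",
    ("DOWN", "DOWN"): "lower_arm",
    ("LEFT", "RIGHT"): "grip",
    ("RIGHT", "LEFT"): "release",
}


class SequenceDecoder:
    """Turn a stream of recognised movement primitives into commands."""

    def __init__(self, commands):
        self.commands = dict(commands)
        self.buffer = []

    def _is_prefix(self, seq):
        # True if seq is the start of at least one known command sequence.
        return any(key[:len(seq)] == seq for key in self.commands)

    def push(self, primitive):
        """Add one classifier output; return a command name or None."""
        self.buffer.append(primitive)
        seq = tuple(self.buffer)
        if seq in self.commands:
            self.buffer.clear()
            return self.commands[seq]
        if not self._is_prefix(seq):
            # Invalid sequence: restart from the latest primitive if it can
            # begin a command, otherwise discard the buffer entirely.
            self.buffer = [primitive] if self._is_prefix((primitive,)) else []
        return None


decoder = SequenceDecoder(COMMANDS)
stream = ["UP", "UP", "LEFT", "LEFT", "RIGHT", "DOWN"]
emitted = [cmd for cmd in map(decoder.push, stream) if cmd]
print(emitted)  # ['raise_arm', 'grip']
```

A practical decoder would probably also need a timeout so that a stale partial sequence is discarded; that is omitted here for brevity.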

6. Discussion

The results show that VMD processing can expand the feature space of sEMG signals, yielding higher accuracy without adding muscles, even when few muscles are recorded or only a limited number of features is extracted. When the number of muscles available for data collection is limited, choosing more right-side muscles for feature extraction can improve classification performance. Future work will include left-handed participants to investigate why right-side neck muscles give higher classification accuracy. The findings can also help address a practical problem: non-invasive sEMG sensors detach easily in complex environments. When a sensor falls off or its signal becomes abnormal, processing and analysis of that channel should stop, and feature extraction methods should be designed so that command recognition remains accurate even when some channels fail, improving the system's adaptability in complex environments.
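The channel-dropout strategy described above could be sketched in two steps: flag channels whose signal looks detached (near-zero RMS) or saturated, then fall back to a classifier trained only on the remaining channels, preferring right-side muscles when candidate subsets are equally large. The thresholds, channel labels, and the `models` dictionary of pre-trained classifiers are hypothetical placeholders; suitable values would depend on the acquisition hardware.

```python
import math


def channel_rms(samples):
    """Root-mean-square amplitude of one sEMG channel window."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def healthy_channels(window, flat_rms=0.005, clip_level=4.9, clip_ratio=0.05):
    """Return names of channels that look usable in this window.

    window: dict channel name -> list of samples (hypothetical units).
    A channel is rejected if its RMS is below flat_rms (electrode likely
    detached) or if more than clip_ratio of its samples reach clip_level
    (amplifier saturation). All thresholds are placeholders.
    """
    usable = []
    for name, samples in window.items():
        if not samples:
            continue
        clipped = sum(1 for x in samples if abs(x) >= clip_level) / len(samples)
        if channel_rms(samples) >= flat_rms and clipped <= clip_ratio:
            usable.append(name)
    return usable


def pick_model(models, usable):
    """Choose a classifier whose channel set is fully available.

    models: dict frozenset(channel names) -> trained model (hypothetical).
    Prefers more channels, then more right-side ("R-") channels.
    """
    candidates = [chans for chans in models if chans <= set(usable)]
    if not candidates:
        return None
    best = max(candidates,
               key=lambda c: (len(c), sum(n.startswith("R-") for n in c)))
    return models[best]


window = {
    "R-SCM-C": [0.2, -0.3, 0.25, -0.2],
    "L-SCM-C": [0.0, 0.001, -0.001, 0.0],  # near-flat: likely detached
    "R-TRAP": [5.0, 5.0, -5.0, 0.1],       # mostly clipped: saturated
    "L-TRAP": [0.1, -0.15, 0.12, -0.1],
}
models = {  # strings stand in for trained classifiers
    frozenset({"R-SCM-C", "L-SCM-C", "R-TRAP", "L-TRAP"}): "model_all",
    frozenset({"R-SCM-C", "L-TRAP"}): "model_rc_lt",
    frozenset({"L-TRAP"}): "model_lt",
}
usable = healthy_channels(window)
print(usable)                      # ['R-SCM-C', 'L-TRAP']
print(pick_model(models, usable))  # model_rc_lt
```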

Code or data availability

The data used and analysed during the study are available from the first author on reasonable request.

Acknowledgements

Thanks to the experiment participants for their help and to Nanjing University of Science and Technology for its support of this study. Authors’ contributions: Kaifan Zou, Meng Zhu, Boxuan Zheng, Zihui Zhu, and Changlong Jiang contributed to the design and implementation of the research and the analysis of the results. Kaifan Zou contributed to writing the first draft of the manuscript. Xiaorong Guan commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Kaifan Zou

Kaifan Zou is pursuing the Ph.D. degree with the School of Mechanical Engineering, Nanjing University of Science and Technology, Nanjing, China. His research focuses on wearable exoskeletons and mechanical arms, with technical expertise in path planning, surface electromyography signal processing, and mechatronic control.

Xiaorong Guan

Xiaorong Guan is an Associate Professor at Nanjing University of Science and Technology. Main research areas include individual soldier exoskeletons and intelligent equipment, lightweight electromechanically integrated weapon equipment, weapon gas dynamics, weapon system simulation and optimisation technology, automatic weapons based on new concepts and principles, and specialised experimental equipment for light weapons.

Meng Zhu

Meng Zhu received the B.S. degree from the School of Mechanical Engineering, Yangzhou University, Yangzhou, China, in 2018. He is currently pursuing the Ph.D. degree with the School of Mechanical Engineering, Nanjing University of Science and Technology, Nanjing, China. His current research interests include exoskeleton robot design and kinetic analysis, exoskeleton control strategies, sEMG signal analysis, and applications of MMG signals.

Boxuan Zheng

Boxuan Zheng received the B.S. degree from Southeast University, Nanjing, China, in 2018 and the M.S. degree in mechanical engineering from Nanjing University of Science and Technology, Nanjing, China, in 2023. He is currently a software engineer. His research interests include human-machine interaction, hybrid EMG-based control systems, and brain-like intelligence.

Zihui Zhu

Zihui Zhu received the Master of Engineering degree from Nanjing University of Science and Technology in 2024, majoring in Artillery, Automatic Weapons, and Ammunition Engineering. His research focuses on individual soldier intelligent equipment technology.

Changlong Jiang

Changlong Jiang is pursuing the Ph.D. degree with the School of Mechanical Engineering, Nanjing University of Science and Technology, Nanjing, China, where his research focuses on the human-machine coupling dynamics of exoskeleton robots. He has both theoretical knowledge and practical experience in the structural design and manufacture of exoskeleton robots and in dynamic simulation analysis.

References

- Al-ada, M., Höglund, T., Khamis, M., Urbani, J., & Nakajima, T. (2019). Orochi: Investigating requirements and expectations for multipurpose daily used supernumerary robotic limbs. In AH2019: Proceedings of the 10th Augmented Human International Conference 2019, New York, NY, USA, March (pp. 1–9), Article 37.

- Amoruso, E., Dowdall, L., Kollamkulam, M. T., Ukaegbu, O., Kieliba, P., Ng, T., Dempsey-Jones, H., Clode, D., & Makin, T. R. (2022). Intrinsic somatosensory feedback supports motor control and learning to operate artificial body parts. Journal of Neural Engineering, 19(1), Article 016006. https://doi.org/10.1088/1741-2552/ac47d9

- Aronson, R. M., & Admoni, H. (2019). Semantic gaze labeling for human-robot shared manipulation. In The 11th ACM Symposium, June (pp. 1–9), Article 2.

- Bright, Z., & Asada, H. H. (2017). Supernumerary robotic limbs for human augmentation in overhead assembly tasks. In Robotics: Science and Systems (pp. 1–10).

- Dragomiretskiy, K., & Zosso, D. (2014). Variational mode decomposition. IEEE Transactions on Signal Processing, 62(3), 531–544. https://doi.org/10.1109/TSP.2013.2288675

- Fukuoka, M., Verhulst, A., Nakamura, F., Takizawa, R., Masai, K., & Sugimoto, M. (2019). Facedrive: Facial expression driven operation to control virtual supernumerary robotic arms. In International Conference on Computer Graphics and Interactive Techniques, New York, NY, USA (pp. 9–10).

- Gopinath, D., & Weinberg, G. (2016). A generative physical model approach for enhancing the stroke palette for robotic drummers. Robotics and Autonomous Systems, 86, 207–215. https://doi.org/10.1016/j.robot.2016.08.020

- He, Y. T., Eguren, D., Azorin, J. M., Grossman, R. G., Luu, T. P., & Contreras-Vidal, J. L. (2018). Brain-machine interfaces for controlling lower-limb powered robotic systems. Journal of Neural Engineering, 15(2), 1–15.

- Jia, M., Yang, Z. L., Chen, Y. M., & Wen, Y. L. (2018). Neck muscles fatigue evaluation of subway phubber based on sEMG signals. In 2018 11th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China (pp. 141–144).

- Kieliba, P., Clode, D., Maimon-Mor, R., & Makin, T. (2021). Robotic hand augmentation drives changes in neural body representation. Science Robotics, 6(54), 1–13. https://doi.org/10.1126/scirobotics.abd7935

- Kieliba, P., Clode, D., Maimon-Mor, R. O., & Makin, T. R. (2020). Neurocognitive consequences of hand augmentation. Cold Spring Harbor Laboratory.

- Koochaki, F., & Najafizadeh, L. (2018). Predicting intention through eye gaze patterns. In 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA (pp. 1–4).

- Kushwah, K., Narvey, R., & Singhal, A. (2018). Head posture analysis using sEMG signal. In 2018 International Conference on Advanced Computation and Telecommunication (ICACAT), Bhopal, India (pp. 1–5).

- Llorens-Bonilla, B., Parietti, F., & Asada, H. H. (2012). Demonstration-based control of supernumerary robotic limbs. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Algarve, Portugal, October 7–12 (pp. 3936–3942).

- Maekawa, A., Takahashi, S., Saraiji, M. Y., Wakisaka, S., Iwata, H., & Inami, M. (2019). Naviarm: Augmenting the learning of motor skills using a backpack-type robotic arm system. In The 10th Augmented Human International Conference 2019, March (pp. 1–8).

- Nabeshima, J., Saraiji, M. Y., & Minamizawa, K. (2019). Arque: Artificial biomimicry-inspired tail for extending innate body functions. In ACM SIGGRAPH 2019 posters (SIGGRAPH ’19), New York, NY, USA (pp. 1–2), Article 52.

- Nguyen, P. H., Imran Mohd, I. B., Sparks, C., Arellano, F. L., Zhang, W., & Polygerinos, P. (2019). Fabric soft poly-limbs for physical assistance of daily living tasks. In 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada (pp. 8429–8435).

- Parietti, F., & Asada, H. H. (2014). Supernumerary robotic limbs for aircraft fuselage assembly: Body stabilization and guidance by bracing. In 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China (pp. 1176–1183).

- Parietti, F., Chan, K. C., Hunter, B., & Asada, H. H. (2015). Design and control of supernumerary robotic limbs for balance augmentation. In 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA (pp. 5010–5017).

- Penaloza, C., Hernandez-Carmona, D., & Nishio, S. (2018). Towards intelligent brain-controlled body augmentation robotic limbs. In 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan (pp. 1011–1015).

- Sasaki, T., Saraiji, M. Y., Fernando, C. L., Minamizawa, K., & Inami, M. (2017). Metalimbs: Multiple arms interaction metamorphism. In SIGGRAPH ’17: ACM SIGGRAPH 2017 Emerging Technologies, July (pp. 1–2), Article 16.

- Sasaki, T., Saraiji, M. Y., Minamizawa, K., & Inami, M. (2018). Metaarms: Body remapping using feet-controlled artificial arms. In Adjunct Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, October (pp. 140–142).

- Seo, W., Shin, C. Y., Choi, J., Hong, D., & Han, C. S. (2016). Applications of supernumerary robotic limbs to construction works: Case studies. In ISARC 2016 - 33rd International Symposium on Automation and Robotics in Construction (pp. 1041–1047).

- Tomoya, S., Saraiji, M. Y., Fernando, C. L., Minamizawa, K., & Inami, M. (2017). Metalimbs: Metamorphosis for multiple arms interaction using artificial limbs. In ACM SIGGRAPH 2017 Posters, July 30–August 03.

- Veronneau, C., Denis, J., Lebel, L. P., Denninger, M., & Plante, J. S. (2020). Multifunctional remotely actuated 3-DOF supernumerary robotic arm based on magnetorheological clutches and hydrostatic transmission lines. IEEE Robotics and Automation Letters, 5(2), 2546–2553. https://doi.org/10.1109/LRA.2020.2967327

- Xu, C. L., Lin, K., Yang, C., Wu, C. H., & Gao, X. R. (2016). Cooperative intelligent robot control method using leg surface EMG signals. Chinese Journal of Biomedical Engineering, 35(4), 385–393.

- Yang, X., Li, Y., Guo, L., Sui, X. Y., Ng, P. P.-T., & Kwan, B. W.-F. (2018). A comparative study of AGS and Non-AGS backpacks on relieving fatigue of neck and back muscles. In 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan (pp. 2370–2375).

- Zhang, B. K., Liu, H., Zheng, W. X., Ji, H., Zhang, J. H., Li, T., & Zhang, Q. J. (2022). Development of robot chess-playing system based on voice control. Scientific and Technological Innovation, 25, 159–162.

- Zhang, Y. Z., Zheng, S. J., & Pan, L. (2020). Writing robot system with four degree of freedom trajectory planning based on speech control. Journal of Computer Applications, 40(S01), 284–288.

- Zhu, M. X., Huang, Z., Wang, X. C., Zhuang, J. S., Zhang, H. S., Wang, X., Yang, Z. J., Lu, L., Shang, P., Zhao, G. R., Chen, S. X., & Li, G. L. (2019). Contraction patterns of facial and neck muscles in speaking tasks using high-density electromyography. In 2019 13th International Conference on Sensing Technology (ICST), Sydney, NSW, Australia (pp. 1–5).

- Zhu, M. X., Lu, L., Yang, Z. J., Wang, X., Liu, Z. Z., Wei, W. H., Chen, F., Li, P., Chen, S. X., & Li, G. L. (2018). Contraction patterns of neck muscles during phonating by high-density surface electromyography. In 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), Shenzhen, China (pp. 572–575).

- Zhu, M. X., Yang, W. Z., Samuel, O. W., Xiang, Y., Huang, J. P., Zou, H. Q., & Li, G. L. (2016). A preliminary evaluation of myoelectrical energy distribution of the front neck muscles in pharyngeal phase during normal swallowing. In 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA (pp. 1700–1703).

- Zhu, P. L., Su, Z. X., Tang, J. X., Wu, Y. X., Yu, S. J., & Tang, H. Y. (2022). Lower limb exoskeleton control system based on shoulder muscle surface electromyogram. Beijing Biomedical Engineering, 41(5), 510–515.