Abstract

This study investigates teachers’ and students’ perceptions of formative assessment (FA) in higher education. The researchers developed a perception scale with four constructs: self-assessment, interactive-informal assessment, in-class diagnostic assessment, and subject-performance assessment. Data were collected from 216 participants (91 teachers and 125 students). The findings showed that teachers and students perceived interactive-informal and in-class diagnostic assessment similarly. However, their perceptions of self-assessment and subject-performance assessment differed significantly: students rated self-assessment higher than teachers did, whereas they rated subject-performance assessment lower. The findings suggest that English as a foreign language (EFL) or English as a second language (ESL) learners benefit from formative assessment when teachers evaluate students’ progress against their own development rather than in comparison with other students’ development.

PUBLIC INTEREST STATEMENT

Formative assessment plays a significant role in supporting students’ learning. This study aimed to develop a scale to measure how university English teachers and students perceive different types of formative assessment, and to examine whether the two groups’ perceptions differ significantly. The researchers administered an online survey to 91 university teachers and 125 students. The results revealed that teachers and students perceived in-class diagnostic and interactive assessments similarly but perceived subject-performance assessment and self-assessment differently. More specifically, students rated self-assessment higher than teachers did but rated subject-performance assessment lower. The researchers offer the following suggestions: reconceptualizing formative assessment; assessing students’ progress according to their own development without comparing them with other learners; improving students’ motivation so that it positively influences their perceptions of formative assessment; conducting formative assessment regularly; giving timely feedback; and providing institutional support.

1. Introduction

Educational researchers and teachers have taken considerable interest in formative assessment (FA) over the past few decades because this type of assessment both reflects and enhances student learning (Brazeal et al., 2016; Ozan & Kincal, 2018). Contemporary literature documents the significant impact of formative assessment on student achievement. Researchers have proposed broadly similar definitions of formative assessment: a process of exchanging feedback that aims to improve the quality of learning and teaching and, in doing so, enhances learner autonomy and maximizes learning outcomes (Black & Wiliam, 2018; McManus, 2008; Van der Kleij et al., 2018). Formative assessment allows teachers to become reflective practitioners who collect ongoing feedback about student progress and use it to plan future lessons (Wuest & Fisette, 2012). Described as a “policy pillar of education significance” (Van der Kleij et al., 2018, p. 620), formative assessment is broadly accepted both as a classroom practice for teachers and as a basis for decision-making by educational authorities (Torrance, 2012).

Researchers in various contexts, particularly ESL/EFL settings, have investigated the effectiveness of formative assessment in teaching and learning. In a mixed-methods study in Turkey, Ozan and Kincal (2018) found that ESL students in their experimental group had a higher level of achievement. Similarly, in a case study in China, Lee (2011) found that formative assessment had a long-term positive impact on students’ writing skills. Formative assessment has also received considerable attention in Afghanistan’s neighboring countries, such as Pakistan and Iran. For instance, Haq et al. (2020) found that formative assessment facilitated the development of Pakistani college students’ English writing skills, and Naghdipour (2017) found that although formative assessment benefits learning and teaching, incorporating it into teaching is challenging.

2. Problem statement

Most university teachers believe that the feedback they offer their students is unbiased, in-depth, comprehensible, motivating, and productive (Mulliner & Tucker, 2017). Teachers also frequently assume that learners understand how to act on their feedback and improve their performance. However, learners do not necessarily perceive and interpret feedback in the same way as their teachers do (Orsmond & Merry, 2011; Weaver, 2006).

There is limited empirical evidence on how formative assessment could inform university teachers’ effective practices and shape learners’ performance (Dunn & Mulvenon, 2009), especially in Afghanistan. This context is underrepresented in English language studies (Nazari et al., 2021), and no previous research has explored teachers’ and students’ perceptions of formative assessment, a relatively new concept, in Afghanistan. Because individuals’ perceptions are shaped by context (Borg, 2003), it is important to develop a context-relevant scale and use it to investigate how Afghan EFL teachers and learners perceive formative assessment.

To address this gap, this quantitative study aimed to design and validate a scale for EFL classroom assessment. To this end, the literature on classroom assessment was examined in detail, and four sub-constructs were extracted: self-assessment, interactive-informal assessment, in-class diagnostic assessment, and subject-performance assessment. The following research questions guide this study:

To what extent does the formative assessment perception scale demonstrate acceptable psychometric properties (reliability and validity)?

How do Afghan EFL university teachers and students perceive formative assessment?

Are there any significant differences between Afghan teachers’ and students’ perceptions of formative assessment?

This study of students’ and teachers’ perceptions of formative assessment opens avenues for further discussion of formative assessment, and its contributions have broad applicability in the field. Teachers in both ESL and EFL contexts can benefit from understanding how teachers’ perceptions of a concept may differ from those of their students and how those perceptions might influence their practices. These insights can also inform policymakers’ and curriculum designers’ decisions about the materials they develop and the number of sessions they allocate to each subject or course.

3. Concept clarification

Assessment, as a core concept, is the process of gathering data on and appraising students’ knowledge of a particular language and their skill in applying it (Chapelle & Brindley, 2010). Brown and Abeywickrama (2019) argued that assessment is also a continuous process that embraces various methodological strategies and tactics and takes different forms, among which formative assessment is a salient one. Bennett (2011) argued that the term formative assessment does not yet represent a precise set of practices and artefacts. Because existing definitions admit different enactments, its effects can be expected to vary considerably from one learner population and enactment to the next. Brown and Abeywickrama (2019) defined formative assessment as:

Evaluating students in the process of “forming” their competencies and skills with the goal of helping them to continue that growth process. The key to such a formation is the delivery (by the teacher) and internalization (by the student) of appropriate feedback on performance, with an eye toward the future continuation (or formation) of learning. (p. 8)

Formative assessment comprises various conceptual categories. Self-assessment is a “wide variety of mechanisms and techniques through which students describe (i.e., assess) and possibly assign merit or worth to (i.e., evaluate) the qualities of their own learning processes and products” (Panadero et al., 2016, p. 804). Informal assessment, in contrast, embraces a wide continuum of feedback provided to learners (Brown, 2004). It can take several forms, from nonjudgmental, random, spontaneous remarks and replies to accompanying instructions and other impromptu comments to learners (Brown & Abeywickrama, 2019). A diagnostic assessment, by contrast, aims to identify the features of a language that a learner needs to improve, as well as what a language course needs to cover (Brown & Abeywickrama, 2019). Scaffolding, in turn, refers to the process of helping less experienced learners realize their potential through support from a more knowledgeable other (Vygotsky, 1978). This support is given through social interaction within a learner’s zone of proximal development, the gap between the learner’s actual and potential development. The teacher identifies learning gaps and uses various scaffolding strategies as formative assessment, observing the learner’s development and engagement and subsequently providing feedback.

4. Theoretical framework to develop perception scale

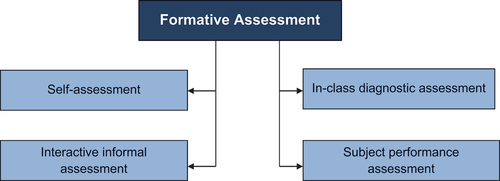

Gan et al. (2019) found that the most salient formative assessment categories were (1) self-assessment (Butler & Lee, 2010; Qasem, 2020; Vasu et al., 2020), (2) interactive-informal assessment, (3) in-class diagnostic assessment, (4) subject-performance assessment, and (5) teacher scaffolding. However, because of its limited scope, this study used only the first four categories to develop a scale, measure university teachers’ and students’ perceptions, and identify possible discrepancies between the two groups (See ).

Gan et al.’s (2019) study was selected as the theoretical framework for two reasons: (1) the research focused mainly on appraising the assessment practices EFL students experience in the classroom and their impact on students’ emotional investment in the learning process; and (2) it developed a classroom assessment practices questionnaire using exploratory factor analysis, since there were no prior hypotheses about the scale’s underlying structure.

Qasem (2020) defined learners’ self-assessment (LSA) as a facilitating and supportive task that is well integrated into task-based teaching and student-centered approaches. Self-assessment can offer ESL learners ample opportunities to take control of their learning (Vasu et al., 2020). M. Oscarson (2013) argued that self-assessment skills are important for learners to evaluate their outcomes and to see a meaningful connection between this practice and their learning. Qasem (2020) also found significant effects of LSA on students’ learning performance: LSA develops students’ engagement, emotional investment, and English language skills because they receive indirect but constructive feedback that supports their success, and it enhances their autonomy in the learning process. In a similar vein, Butler and Lee (2010) found that LSA boosted students’ confidence and performance in learning English. LSA also has good potential to reduce maladaptive behaviors compared with indirect teacher feedback (Vasu et al., 2020). Furthermore, A. D. Oscarson (2009) identified two major benefits of LSA for students: they perform self-assessed learning tasks more effectively, and LSA enhances their self-regulated practices. LSA develops self-regulated learning, namely “goal setting, strategy planning, strategy use, attribution and adaptive behavior” (Vasu et al., 2020, p. 1). Accordingly, teachers and learners have tended to hold positive perceptions of self-assessment. However, Butler and Lee (2010) found that perceptions of LSA practice and its effectiveness varied across contexts. Despite these benefits, Qasem (2020) noted that some students do not engage in this type of assessment because their ESL proficiency is insufficient.

Interactive-informal assessment is another formative assessment strategy that English language teachers use in L2 learning contexts to check and ensure that students have learned the concepts covered during in-class activities. It can include tasks and strategies such as questioning, one-on-one conferencing, and dialogic feedback (Ruiz-Primo, 2011). Gan et al. (2019) argued that this type of formative assessment could serve as a good strategy for understanding and promoting learners’ emotional investment and internal drive. Interactive-informal assessment works well when it is dynamic and engages both teacher and students in meaningful dialogue (Carless, 2011). Despite its significance, this assessment type is not part of university assessment policies, and some English teachers even ignore it.

Diagnostic assessment is another type of formative assessment. Jang and Wagner (2013) stated, “Diagnostic assessment enables teachers to make inferences about learners’ strengths and weaknesses in the skills being taught” (p. 1). Gan et al. (2019) found that in-class diagnostic assessment was frequently used in English language classrooms. In this type of assessment, a teacher may use group discussion, assess the resulting collective dialogic interaction, and administer quizzes to check learners’ comprehension of the concepts. Xiao and Yang (2019) stated that in-class diagnostic assessment also supports learners’ self-regulation in L2 learning. Examining student-generated quizzes, they found that designing quizzes empowered learners by giving them opportunities to evaluate the quizzes at the process level and to learn through doing so. In a similar vein, Jang and Wagner (2013) questioned the assumption that students play a passive role as mere recipients of feedback. The authors extended diagnostic assessment beyond the cognitive domain, viewing students as change agents in the process and examining non-cognitive characteristics such as their perceptions of assessment and learning. They claimed that learners’ goal orientations shape how students interpret feedback and apply it in their learning. Kim (2015) discussed the feasibility of cognitive diagnostic assessment across attributes such as the strategies, skills, and knowledge students have gained. Despite its perceived usefulness, some teachers have formed negative perceptions of diagnostic assessment and its ultimate purpose in the classroom (Jimola & Ofodu, 2019).

Finally, Gan et al. (2019) found that learners frequently experienced performance-oriented assessment practices. Marhaeni et al. (2019) suggested that this type of assessment can support students’ ownership of learning and promote their writing achievement in English classes. Palm (2008) argued that performance assessment could provide ample opportunities to better measure students’ communication and other complex skills. Despite these affordances, teachers’ perceptions of, and willingness to conduct, performance assessment depend heavily on the context and on whether the relevant instruments are available.

5. Literature review

Much of the existing literature defines formative assessment as assessment for learning (Lee et al., 2019; Leong et al., 2018; Wei, 2010). Contemporary literature shows the crucial roles of formative assessment in higher education. Some scholars argue that using formative assessment in teaching empowers students to grow and become self-regulated learners (Xiao & Yang, 2019; Yastibas & Yastibas, 2015; Yorke, 2003; Zou & Zhang, 2013), while others claim that formative assessment functions as an external factor motivating students to learn (Andersson & Palm, 2017; Yu et al., 2020; Zhan, 2019). According to Shana and Abd Al Baki (2020), formative assessment allows learners and teachers to understand the extent to which students have learned during a given period. Despite the numerous published studies on formative assessment, the concept is still considered an enigma in the field.

Recent studies have focused on the effects of formative assessment on student motivation (Fong et al., 2019; Hwang & Chang, 2011; Lee et al., 2018; Tang & Liu, 2018; Waller & Papi, 2017; Yu et al., 2020; Zhan, 2019) and on learning progress (Black & Wiliam, 2006; Carless, 2011; Clinchot et al., 2017). These studies have reported either similar or conflicting results regarding university teachers’ and students’ perceptions, depending on contextual factors, research methods, and theoretical frameworks.

6. Formative assessment and students’ learning motivation

Dweck (2017) argued that students’ goal orientation is not fixed and can be changed by the way feedback is given. Determination and feedback sign (whether feedback is positive or negative) predict changes in motivation and performance (Richard, 2003). Many scholars have examined FA and learning motivation. Noting the lack of evidence on the effects of feedback on writing motivation, Yu et al. (2020) developed comprehensive L2 feedback measures to examine how different types of feedback influence students’ writing motivation in the Chinese EFL context. This Likert-scale instrument was designed to evaluate aspects of teacher writing feedback, including writing process-oriented feedback, scoring/evaluative feedback, expressive feedback, written corrective feedback (WCF), and peer feedback. The study revealed that expressive feedback was the most common type of L2 writing feedback and written corrective feedback the least common. It also showed that students’ writing motivation decreased with process-oriented and written corrective feedback, whereas expressive, self, peer, and scoring feedback improved their motivation. Waller and Papi (2017) also investigated how writing motivation relates to learners’ orientations toward WCF and found that the two were related. Tang and Liu (2018) argued that L2 writing feedback might affect students’ motivation in terms of anxiety, writing self-efficacy, self-regulation, and goal-seeking behaviors.

In a related study, Zhan (2019) used semi-structured interviews to examine students’ perceptions of the functions of continuous assessment (CA) in their learning in a college English course. The study revealed that learners’ perceptions of this assessment type supported its formative promises, and most students believed that it served as a motivating force for learning. It also revealed that fewer than half of the students perceived the judgmental role of continuous assessment. The study further argued that weak informing and strong extrinsic motivational roles could limit students’ continuous learning. The participants’ perception of CA as a stimulus was ecologically rational given the education system, examination culture, and university assessment policies in China, whereas lecturer-based feedback and grading, as well as the shift from secondary school to college, led to lower recognition of CA as a motivator.

7. Effects of formative assessment on learning progress

Evans et al. (2014) found that formative assessment can promote students’ engagement in the learning process and help learners maintain positive responses to various assessments. Most teachers redefined formative assessment as an interactive, learning-oriented, dynamic, and reflective pedagogy: Assessment for Learning. This form of formative assessment engages students to take key roles in the learning process by adopting its values. It also requires teachers to support students’ understanding of goals, provide constructive feedback, incorporate assessment results into the design of subsequent lessons, and offer enough scaffolding to fill learning gaps (Black & Wiliam, 2006). Clinchot et al. (2017) argued that formative assessment would be much more effective if teachers made it responsive to students’ needs. Lee et al. (2019) also claimed that assessment as learning could enhance students’ learning. The authors examined the application of this type of assessment in two writing classrooms from teachers’ and students’ viewpoints, specifically to explore its perceived advantages and disadvantages. The study revealed that the teachers’ attempts improved writing assessment literacy, and that assessment as learning could be effective if teachers participate regularly in relevant professional development programs to enrich their current practices and help learners become adept at assessment. Consistent with students’ central role, Jiang (2014) examined questioning as a formative assessment strategy used to encourage learners’ thinking, reveal their level of learning, and inform pedagogical decisions in the classroom. Cropley and Cropley (2016) further suggested that formative computer-assisted assessment can help teachers promote students’ creativity; such technology can also offer feedback on the four aspects of creativity: product, person, process, and press.

A burgeoning body of research exists on students’ and teachers’ perceptions of ways to implement formative assessment. Bhagat and Spector (2017) argued that technology supports formative assessment in improving learning attitudes, motivation, and performance across disciplines. In a similar vein, Baleni (2015) examined online formative assessment in higher education and its affordances from university teachers’ and students’ points of view. After using different formative assessment techniques, including online tests and forums, the researcher identified several benefits: online formative assessment enhanced learners’ commitment, gave them flexibility in taking tests, provided quicker feedback, and saved time and cost in grading and administration.

8. Perceptions of formative assessment in different contexts

In the past, feedback was mostly viewed as a unidirectional process from teacher to students (Hattie & Gan, 2011); this perspective did little to support students’ learning. Similarly, scholars previously emphasized the significance of the sender, the receiver, and the quality of feedback for learning progress while overlooking how teachers and students perceived the feedback. More recently, however, it has become apparent that students’ perceptions and the extent to which they meaningfully engage with feedback determine learning success (Winstone et al., 2017). A direct relationship exists between the level of learning engagement and perceptions of formative assessment and feedback. Hence, several scholars have examined perceptions of formative assessment across different contexts and its effects on learning. For instance, in Saudi Arabia, formative assessment is perceived positively because it improves college students’ learning outcomes, and university teachers’ perceptions are influenced by factors including educational level and teaching experience (Alsubaiai, 2021). In the Netherlands, Leenknecht et al. (2020) examined the extent to which formative assessment supports university students’ motivation and investigated the mediating role of need satisfaction. The findings revealed that learners’ perceptions of FA use were positively related to their autonomy, competence, and autonomous motivation.

At Kent State University, USA, college students were assessed to explore the extent to which they learned the tested topics. Through predictive category learning judgments (CLJs), they estimated how many questions they could answer correctly for a range of designated topics, and after the test they completed postdictive CLJs for the same topics. The results revealed that their evaluations were better afterward, that is, their postdictive CLJs were more accurate. The students’ average performance could also limit their ability to evaluate their topical knowledge (Rivers et al., 2019). Because teachers do not use formative assessment practices regularly, Box et al. (2015) examined the contextual factors, internal or external, that limited or supported this type of assessment. Using Cornett’s curriculum development model of personal practice theories as a framework, the study showed considerable differences among teacher participants in terms of personal practice. Many constraining or supporting factors were also identified that influenced perceptions of formative assessment use, including teachers’ knowledge and habits, high-stakes tests, learners’ dispositions, teacher-student expectations, the use of a directive rather than a non-directive constructive teaching method, and, finally, the pressure to cover the curriculum. Chow and Hollo (2018) argued that teachers’ perceptions of students’ language and behavioral performance deserve greater attention. Their study revealed a low degree of agreement between teacher language ratings and assessment, and it showed that language measures differed distinctly and varied with students’ behavioral characteristics.

Andersson and Palm (2017) examined the impact of formative assessment training in a teacher professional development (PD) program on students’ performance in a Swedish context. The intervention group outperformed the control group, indicating that the training shaped teachers’ perceptions of formative assessment practices across different strategies, which in turn improved students’ academic achievement. However, the study called for greater collaboration and identified highly varied, multi-level learner groups and the short duration of related PD programs as constraints on developing formative assessment practices. Similarly, Widiastuti et al. (2020) examined the discrepancies between teachers’ perceptions and their formative assessment practices in EFL classrooms, finding that teachers with more continuous professional development held more positive perceptions of formative assessment.

Havnes et al. (2012) found that teachers’ and students’ perceptions of feedback practices varied significantly by gender and within subjects. Xiao and Yang (2019) argued that formative assessment improves students’ self-regulated English learning in a Chinese context: students engaged in formative assessment practices became self-regulated learners and held positive perceptions of these practices, which supported their English learning and self-regulation skills throughout the process. However, perceptions of formative assessment are subject to contextual influence (Borg, 2003). More importantly, assessing teachers’ and students’ perceptions and identifying discrepancies between them provides significant insight into the effective implementation of formative assessment in language classrooms. Therefore, developing a perception scale can improve the quality of FA and, in turn, maximize learning outcomes (Van der Kleij, 2019). Moreover, although the perceived usefulness of formative assessment in higher education seems to be well understood, little is known about teachers’ and students’ perceptions of FA, and the discrepancies between them, in an underrepresented context such as Afghanistan.

9. Methodology

9.1. Participants and settings

The participants were 91 Afghan EFL university teachers (42.1%) and 125 students (57.9%). Using convenience sampling, the researchers recruited academic staff and students from different higher education institutions, including Herat University. Most teacher participants held an MA degree (n = 85), and a few held a PhD (n = 6). They taught English as a foreign language and various subjects, including American and British Literature, Introduction to Research, Academic, Business, and Creative Writing, and many other courses. The students ranged from undergraduates (n = 95) to MA students (n = 30). The students were aged 18 to 24 years (M = 21.50, SD = 1.71), whereas the teachers were aged 25 to 40 years (M = 29.60, SD = 4.21). The participants’ native languages were Dari and Pashto. Before conducting the study, the researchers obtained the participants’ consent and approval. The authors also maintained confidentiality by storing the data securely and assuring participants that their personal data would not be disclosed without their permission.

Education in Afghanistan has been influenced by various administrative and ideological factors over the past decades. Several international organizations supported the reconstruction process, especially in education. English language instruction has likewise undergone various changes in response to societal demand. Educational institutions highlighted the importance of English, and the Ministry of Higher Education (MoHE) even planned to make English the primary medium of instruction in universities. It therefore focused on teaching English and assessing students’ language knowledge and skills. MoHE set out assessment policies and types, including both formative and summative assessment, in the Ministry of Higher Education Development Program (2018). However, MoHE assigned more weight to summative than to formative assessment, mainly because of top-down policies that undermined the significance of formative assessment for maximizing learning outcomes and limited teachers’ agency in this regard (Golzar et al., 2022; Nazari et al., 2021).

9.2. Data collection

To examine university teachers’ and students’ perceptions of formative assessment, a survey questionnaire was designed based on the results of Gan et al. (2019), who identified five most salient formative assessment categories: (1) self-assessment (Butler & Lee, 2010; Qasem, 2020; Vasu et al., 2020), (2) interactive-informal assessment, (3) in-class diagnostic assessment, (4) subject-performance assessment, and (5) teacher scaffolding. Because of the limited scope of this study, the researchers focused only on the first four categories. Twenty-five items were then developed based on the literature review: 6 items for self-assessment, 8 for interactive-informal assessment, 6 for in-class diagnostic assessment, and 5 for subject-performance assessment.

To reach participants more easily, the researchers used Google Forms. The online survey included questions on demographic information (age and education) and 25 five-point Likert-scale items (strongly agree = 5, agree = 4, neutral = 3, disagree = 2, strongly disagree = 1) to gather quantitative data. The same scale was used for both university teachers and students. For face validity, four expert EFL teachers were asked to review the items and point out any ambiguity; they all confirmed that the items were fully comprehensible. Finally, the survey link was sent to university teachers and students via email and Telegram. The scale was further validated and improved by removing some items. Data were collected between November and December 2021.
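As an illustration of how the Likert coding described above turns raw responses into construct scores, the following Python sketch maps each label to its numeric value and averages a respondent’s items within each construct. It is a minimal sketch, not the authors’ procedure: the item order (Q1 to Q6 self-assessment, Q7 to Q14 interactive-informal, Q15 to Q20 in-class diagnostic, Q21 to Q25 subject-performance) is an assumption, since the source gives only the item counts, and the helper names `build_item_map` and `construct_scores` are hypothetical.

```python
from statistics import mean

# Coding described in the survey: strongly agree = 5 ... strongly disagree = 1.
LIKERT = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}

# ASSUMPTION: items are numbered consecutively by construct in this order.
# Only the counts (6 + 8 + 6 + 5 = 25) come from the study.
CONSTRUCT_SIZES = [
    ("self_assessment", 6),
    ("interactive_informal", 8),
    ("in_class_diagnostic", 6),
    ("subject_performance", 5),
]


def build_item_map(sizes):
    """Return {construct: [item numbers]}, numbering items from 1 (hypothetical helper)."""
    item_map, start = {}, 1
    for name, size in sizes:
        item_map[name] = list(range(start, start + size))
        start += size
    return item_map


def construct_scores(responses, item_map):
    """Mean coded score per construct for one respondent (hypothetical helper).

    responses maps item number -> Likert label, e.g. {1: "agree", 2: "neutral"}.
    """
    coded = {item: LIKERT[label.strip().lower()] for item, label in responses.items()}
    return {name: mean(coded[i] for i in items) for name, items in item_map.items()}


if __name__ == "__main__":
    items = build_item_map(CONSTRUCT_SIZES)
    # A made-up respondent who answers "agree" to every item (not study data).
    demo = {i: "agree" for i in range(1, 26)}
    print(construct_scores(demo, items))
```

Averaging rather than summing keeps construct scores on the original 1 to 5 scale, which makes constructs with different numbers of items directly comparable.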

9.3. Data analysis

After data collection, confirmatory factor analysis (CFA) was run in the Analysis of Moment Structures (AMOS) software to validate the scale. The Statistical Package for the Social Sciences (SPSS) was used to obtain descriptive statistics (mean, standard deviation, standard error of the mean, etc.) describing university teachers’ and students’ perceptions of formative assessment, and to run independent-samples t-tests comparing the two groups’ perceptions.
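The group comparison described above can be sketched as follows. This is an illustrative reimplementation, not the authors’ SPSS procedure: it computes Student’s pooled-variance t (equal variances assumed) and, as an alternative SPSS also reports, Welch’s t with Welch-Satterthwaite degrees of freedom. The score lists are hypothetical, not the study’s data. Passing teachers first yields a negative t whenever students score higher, which is the sign convention of the t values reported in Section 10.3.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's independent-samples t (equal variances assumed)."""
    n1, n2 = len(group_a), len(group_b)
    v1, v2 = variance(group_a), variance(group_b)  # sample variances (n - 1)
    pooled_var = ((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(group_a) - mean(group_b)) / se, n1 + n2 - 2


def welch_t(group_a, group_b):
    """Welch's t with Welch-Satterthwaite degrees of freedom (unequal variances)."""
    n1, n2 = len(group_a), len(group_b)
    q1, q2 = variance(group_a) / n1, variance(group_b) / n2
    t = (mean(group_a) - mean(group_b)) / sqrt(q1 + q2)
    df = (q1 + q2) ** 2 / (q1 ** 2 / (n1 - 1) + q2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical sub-construct sum scores (4 items each, range 4-20).
teachers = [15, 16, 17, 16, 15, 17, 16]
students = [17, 18, 16, 17, 18, 17, 16, 18]

t, df = pooled_t(teachers, students)
print(f"pooled: t = {t:.2f}, df = {df}")
t_w, df_w = welch_t(teachers, students)
print(f"Welch:  t = {t_w:.2f}, df = {df_w:.1f}")
```

The p-value then comes from the t distribution with the given degrees of freedom; with 91 teachers and 125 students the pooled test has df = 214. In practice, `scipy.stats.ttest_ind(a, b, equal_var=True)` returns the same t together with a two-sided p-value.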

10. Results

10.1. CFA and reliability of the scale

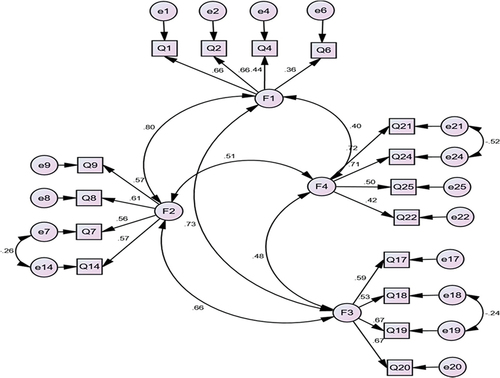

Confirmatory factor analysis (CFA) was used to substantiate the construct validity of the newly developed formative assessment perception scale (see the figure above). The scale comprises four sub-constructs: self-assessment, interactive informal assessment, in-class diagnostic assessment, and subject performance assessment. To improve model fit, items with factor loadings below 0.4 (Q3, Q5, Q10, Q11, Q12, Q13, Q15, Q16, and Q23) were removed from the scale. Table 1 presents the initial factor loadings of the scale items. CFA was then rerun, and the factor loadings after item removal are presented in Table 2. Goodness-of-fit indices are reported in Table 3. Acceptance criteria differ across researchers; in the present study, χ²/df should be less than 3 (Ullman, 2001), TLI and CFI should exceed .90, and RMSEA should be less than .08 (Browne & Cudeck, 1993). Based on these criteria, the model fits the data adequately, confirming the structure of the formative assessment perception scale. In addition, Cronbach’s alpha for the scale was .82, supporting its reliability. After the removal of nine items, the refined scale comprised 16 items, four per sub-construct (see Appendix A).
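The reliability coefficient reported above follows the standard Cronbach’s alpha formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The sketch below computes it for a respondents × items matrix of Likert scores; the demo matrix is hypothetical and far smaller than the study’s sample, and the function name is illustrative only.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix of Likert scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))                  # transpose to item columns
    sum_item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Hypothetical responses: 5 respondents x 3 items (not the study's data).
demo = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 3],
    [4, 4, 3],
]
print(f"alpha = {cronbach_alpha(demo):.2f}")  # alpha = 0.93
```

Sample variances (n − 1) are used throughout; because alpha is a ratio of variances, the choice of denominator cancels as long as it is applied consistently.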

Table 1. Initial item factor loadings

Table 2. Final item factor loadings

Table 3. Goodness of fit indices
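The acceptance criteria stated above (χ²/df < 3, TLI and CFI > .90, RMSEA < .08) can be applied mechanically, as in the sketch below. The χ² value and the TLI and CFI values are hypothetical placeholders, not the figures in Table 3. The RMSEA helper uses one common point-estimate formula; software packages differ slightly (for example, N versus N − 1). df = 98 assumes a simple 16-item, 4-factor model with correlated factors and no correlated errors (136 observed variances and covariances minus 38 free parameters), and n = 216 assumes the CFA was fitted on all participants.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class FitIndices:
    chi2: float
    df: int
    tli: float
    cfi: float
    rmsea: float


def meets_criteria(fit: FitIndices) -> dict:
    """Apply the cut-offs adopted in this study to a set of CFA fit indices."""
    return {
        "chi2/df < 3": fit.chi2 / fit.df < 3,
        "TLI > .90": fit.tli > 0.90,
        "CFI > .90": fit.cfi > 0.90,
        "RMSEA < .08": fit.rmsea < 0.08,
    }


def rmsea_from_chi2(chi2: float, df: int, n: int) -> float:
    """One common point estimate of RMSEA: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Hypothetical chi-square, TLI and CFI; df and n as explained in the lead-in.
chi2, df, n = 150.0, 98, 216
rmsea = rmsea_from_chi2(chi2, df, n)
fit = FitIndices(chi2=chi2, df=df, tli=0.93, cfi=0.94, rmsea=rmsea)
print(f"RMSEA = {rmsea:.3f}")
print(meets_criteria(fit))
print("all criteria met:", all(meets_criteria(fit).values()))
```

Returning each criterion separately, rather than a single pass/fail flag, makes it easy to report which index falls short when a model is rejected.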

10.2. Perceptions of teachers and students

Table 4 presents the descriptive statistics for the two groups. On the first three sub-constructs, i.e., self-assessment, interactive informal assessment, and in-class diagnostic assessment, teachers and students obtained mean scores between 16 and 17. Since each sub-construct comprises four items scored from 1 to 5 (a possible range of 4 to 20), this indicates that both groups broadly agreed with the statements in these sub-constructs (the scale items are listed in Appendix A). Regarding self-assessment, university teachers and students both agreed that students should determine their own learning needs and objectives, assess their progress, and use concept mapping to evaluate their learning. With respect to interactive informal assessment, there was consensus on the usefulness of peer feedback for learners and on teachers’ responsibility to correct learners’ mistakes, assess their responses, and check their understanding through interaction. With regard to in-class diagnostic assessment, there was general agreement that university teachers should conduct diagnostic assessments and announced quizzes to identify learners’ needs and assess their understanding, respectively. Teachers were also expected to examine learners’ knowledge gaps and use the available materials to check their understanding. On the fourth sub-construct, subject performance assessment, however, university teachers and students showed different levels of agreement. In general, the teachers agreed that they should use multiple-choice questions, essay writing, gap-fill, and short-answer questions for evaluation purposes, whereas students viewed these items somewhat neutrally.
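The interpretation of a sub-construct score of 16 to 17 as “agree” can be made explicit by dividing the sum score by the number of items and mapping the result to the nearest Likert anchor. The sketch below does this; nearest-anchor rounding is an assumed heuristic for illustration, not a procedure stated by the authors, and `interpret_subscale` is a hypothetical helper.

```python
ANCHORS = {1: "strongly disagree", 2: "disagree", 3: "neutral", 4: "agree", 5: "strongly agree"}


def interpret_subscale(sum_score, n_items=4):
    """Map a sub-construct sum score to its average item score and nearest Likert anchor.

    With four items coded 1-5, sum scores range from 4 to 20.
    """
    if not n_items <= sum_score <= 5 * n_items:
        raise ValueError("sum score outside the possible range")
    item_mean = sum_score / n_items
    nearest = int(item_mean + 0.5)   # round half up (Python's round() rounds half to even)
    return item_mean, ANCHORS[nearest]


for score in (16, 17):
    print(score, interpret_subscale(score))
```

Under this heuristic, sum scores of 16 and 17 correspond to average item scores of 4.0 and 4.25, both closest to the “agree” anchor.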

10.3. Mean differences

To examine the mean differences between the students (English language learners) and the university teachers on the four factors of the study, a series of independent-samples t-tests was run. Table 5 presents the results for the four factors. As Table 5 shows, the two groups differed significantly on self-assessment (t = −2.06, p < .05) and subject performance assessment (t = −3.71, p < .05). According to Table 4, the university teachers’ mean scores on the self-assessment factor (teachers, M = 16.26; students, M = 16.89) and the subject performance assessment factor (teachers, M = 14.80; students, M = 16.08) were lower than those of the students. For interactive informal assessment and in-class diagnostic assessment, no significant difference between the university teachers and students was observed, indicating that the two groups perceived these two sub-constructs similarly.

Table 4. Descriptive statistics of the teachers and learners

Table 5. Results of the Independent Samples T-test on Teachers and Students

11. Discussion

Using the newly developed formative assessment perception scale, the study found that teachers and students perceived interactive informal assessment and in-class diagnostic assessment almost identically. However, their perceptions of self-assessment (t = −2.06, p < .05) and subject performance assessment (t = −3.71, p < .05) differed significantly. More specifically, the learners reported higher scores than the teachers on both the subject performance assessment and self-assessment constructs. By contrast, Hansen (2020) found that teachers and students shared many perceptions. She argued that it is highly important for university teachers “to develop a mutual learning dialogue and the active effort and participation by both parties in such formative activities as self-assessment, reflection as feedback and dialogue” (p. 1). Similarly, Winstone et al. (2017) stated that the discrepancy between teachers’ and students’ perceptions can be bridged by fostering dialogue among stakeholders.

The results revealed that teachers’ and students’ perceptions of self-assessment and its usefulness differed significantly. Wang (2016) argued that students’ perceptions of self-assessment were tied to their perceptions of the rubric used by their teachers. University EFL students perceived the rubric as a helpful instrument for developing their self-regulatory practices, supporting them through the goal-setting, planning, self-monitoring, and self-reflection stages. Based on several statistical analyses, Butler and Lee (2010) argued that self-assessment had positive effects on learners’ language performance and their confidence in learning English. Butler and Lee (2010) also stated that teachers’ understanding of feedback quality and assessment affected their success in employing novel self-assessment activities. Munoz and Álvarez (2007) examined the relationship between teachers’ assessment and learners’ self-assessment. They found that the two were strongly correlated and that students held positive attitudes toward self-assessment. Learners’ accuracy in self-assessment is closely associated with their experience in carrying out the relevant procedures. Munoz and Álvarez (2007) also proposed several pedagogical implications: implementing ongoing self-assessment with continuous teacher support, raising cultural awareness so that self-assessment is accepted, providing scaffolding so that students can use self-assessment to recognize cognitive and metacognitive strategies in learning, and developing teachers’ professionalism in promoting student autonomy and providing quality feedback.

Examining teachers’ and students’ perceptions of FA, Van der Kleij (2019) found that teachers perceived feedback quality more positively than students did in particular subjects. Moreover, learners’ self-efficacy, self-regulation, and innate beliefs predicted their perceptions of feedback quality. These individual characteristics mediated the relationship between learners’ level of achievement and their perceptions of feedback quality. Such characteristics are pivotal in governing how learners perceive the ways formative assessment supports learning, involves them in the feedback process, and promotes their understanding of course content (Shute, 2008).

In contrast to the present findings, Jimola and Ofodu (2019) found that most English language teachers in their sample had unclear perceptions of the aims of diagnostic assessment and also held negative perceptions of it. Teachers who apply diagnostic assessment need to provide detailed feedback on learners’ learning status within a cognitive domain. To maximize learning outcomes and the usefulness of diagnostic assessment, it is effective to recognize the different constituents of a cognitive domain, for example, reading and its components. Doing so improves the English learning process (Kim, 2015).

Teachers could think carefully about how to implement formative assessment in ways aligned with their perceptions and belief systems (Widiastuti et al., 2020). Black and Wiliam (2006) identified five important ways in which formative assessment facilitates learning: it clarifies learning expectations and criteria for success, reveals students’ level of understanding to teachers, provides feedback that moves students forward through instructional adjustments and self-assessment, encourages peer interaction, and fosters students’ ownership of their learning. Drawing on these five objectives, Brazeal et al. (2016) examined students’ perceptions of formative assessment. Most participants held positive views and perceived FA as supporting their learning in different ways. However, their perceptions of FA types varied with respect to particular objectives. Brazeal et al. (2016) advised teachers and administrators not to oversimplify learners’ perceptions or rely on linear, fixed assumptions. In contrast, Ogange et al. (2018) reported that students’ perceptions of FA showed almost no significant variation. Their study revealed that participants received more early feedback from computer-marked and peer assessments than from teacher-marked assessments. This perception resulted in mounting resistance toward FA.

Students resisted formative assessment because of grading criteria and instructional design, including testing time and group-project arrangements (Brazeal et al., 2016). Similarly, learners’ negative perceptions of unfair grading and related policies resulted in such resistance (Chory-Assad & Paulsel, 2004). According to Wiggins et al. (2005), teachers may reduce students’ resistance by aligning learning goals, course content, exam items, and FA activities. Teachers could minimize specific types of resistance by adjusting grading, using FA tasks designed to engage students fully, encouraging emotional investment, and improving the dynamics of preferred tasks in the classroom.

In a critical review of research, Dunn and Mulvenon (2009) argued that there is little empirical evidence to support the most effective formative assessment practices. In a critical review of the existing literature, Bennett (2011) found that although a wide range of formative assessment practices support learning, formative assessment varies from one implementation to another and from one population to the next. In a systematic review of 52 articles, Yan et al. (2021) identified two groups of factors influencing teachers’ intentions and implementations regarding FA: personal and contextual. The personal factors included education and training, instrumental attitude, teaching beliefs, skills, self-efficacy, affective attitude, and subjective norm. The contextual factors included the educational institution’s environment, internal institutional support, working conditions, learners’ characteristics, external policies, and cultural norms. Sach (2012) also reported that teachers recognized formative assessment as pivotal in supporting learning, yet felt less confident than they claimed in employing the relevant strategies. Their perceptions of FA changed with teaching experience. Moreover, teachers’ positive perceptions of FA have not been fully translated into classroom practice, because FA is mistakenly viewed as an add-on to regular instruction rather than an integral part of teaching. Teachers with a favorable instrumental attitude, a positive subjective norm, and greater self-efficacy were expected to incorporate formative assessment into their classroom practice to a greater extent (Yan & Cheng, 2015).
Bennett (2011) also suggested that it could be highly effective and beneficial to conceptualize formative methods and techniques as integral constituents of a comprehensive system in which all parts work together to support learning.

12. Concluding remarks

This study developed a formative assessment perception scale comprising 16 items in four constructs: self-assessment, interactive informal assessment, in-class diagnostic assessment, and subject performance assessment. The findings showed that teachers and students perceived interactive informal and in-class diagnostic assessments almost identically. However, their perceptions of self-assessment and subject performance assessment differed significantly. The students rated self-assessment higher than the teachers did, whereas they rated subject performance assessment lower than the teachers did.

The results of the present study suggest several implications. Pedagogically, it is important to remember that teachers’ and students’ perceptions play a pivotal role in the use of formative assessment in EFL classrooms. Teachers could identify the sources of these perceptions and plan accordingly to improve FA activities. It would be more effective for teachers to take a critical view of the four constructs in order to develop and implement context-specific FA tasks in their actual teaching practice. Formative assessment initiatives need to emphasize well-defined, more specific approaches grounded in the methods and processes of particular content areas (Bennett, 2011).

It is worth noting that emotional investment can positively shape FA perceptions. Teachers could strengthen the effects of FA on learning by highlighting the significance of continuous assessment and by introducing innovative FA activities that students prefer. In this sense, formative assessment allows teachers to evaluate each student’s progress against that student’s own development rather than in comparison with other students’ growth. Teachers’ and students’ perceptions depend on their contexts and on the facilities available for implementing the various FA constructs.

Furthermore, incorporating regular student self-assessment into courses, providing feedback for learning, and holding both students and teachers accountable for assessment results enhance the practicality of FA in language classrooms. In large classes, where assessing individual students is difficult, teachers could use focus-group assessment. Assessments designed to identify how students interact with the curriculum and its learning outcomes could be conducted from the viewpoints of both the students and the curriculum. Such assessments could help teachers go beyond judgmental evaluation and collect descriptive data on their students’ performance.

Administrators and policymakers could improve the EFL learning process by investing in teachers’ FA knowledge and by providing logistical, technical, and professional support. Finally, there is a considerable need for cross-context studies of teachers’ and students’ perceptions of formative assessment in various countries, which could form an agenda for future research.

To situate the findings and implications of this study, its limitations should be acknowledged. First, all student participants were recruited from one university rather than from several institutions across the country. Second, data were obtained only through a survey questionnaire, whereas the reasons for the discrepancy between teachers’ and students’ perceptions could be investigated further through semi-structured interviews with the two target groups. Third, the study did not include the voices of administrators and policymakers, which could help explain the sources of these inconsistencies and what should be done to improve perceptions, formative assessment practices, and learning in general. Lastly, since this study examined perceptions of formative assessment types rather than the effectiveness of specific formative assessment designs, future research could explore whether some designs are more effective than others, and why.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Jawad Golzar

Jawad Golzar is a faculty member in the English Department at Herat University, Afghanistan. He holds a master’s degree in TESOL from Indiana University of Pennsylvania, USA, which he obtained through a Fulbright Scholarship. He has participated in numerous academic, personal, and professional development programs in the past few years. His research interests include teacher identity, educational technology, teaching methods, and issues related to giving voice to others.

References

- Alsubaiai, H. S. (2021). Teachers’ perception towards formative assessment in Saudi Universities’ Context: A review of literature. English Language Teaching, 14(7), 107–119. https://doi.org/10.5539/elt.v14n7p107

- Andersson, C., & Palm, T. (2017). The impact of formative assessment on student achievement: A study of the effects of changes to classroom practice after a comprehensive professional development programme. Learning and Instruction, 49, 92–102. https://doi.org/10.1016/j.learninstruc.2016.12.006

- Baleni, Z. G. (2015). Online formative assessment in higher education: Its pros and cons. Electronic Journal of E-Learning, 13(4), 228–236.

- Bennett, R. E. (2011). Formative assessment: A critical review. Assessment in Education: Principles, Policy & Practice, 18(1), 5–25. https://doi.org/10.1080/0969594X.2010.513678

- Bhagat, K. K., & Spector, J. M. (2017). Formative assessment in complex problem-solving domains: The emerging role of assessment technologies. Journal of Educational Technology & Society, 20(4), 312–317.

- Black, P., & Wiliam, D. (2006). Assessment for learning in the classroom. In J. Gardner (Ed.), Assessment and learning: Theory, practice and policy (pp. 9–25). Sage.

- Black, P., & Wiliam, D. (2018). Classroom assessment and pedagogy. Assessment in Education: Principles, Policy & Practice, 25(6), 551–575. https://doi.org/10.1080/0969594X.2018.1441807

- Borg, S. (2003). Teacher cognition in language teaching: A review of research on what language teachers think, know, believe, and do. Language Teaching, 36(2), 81–109. https://doi.org/10.1017/S0261444803001903

- Box, C., Skoog, G., & Dabbs, J. M. (2015). A case study of teacher personal practice assessment theories and complexities of implementing formative assessment. American Educational Research Journal, 52(5), 956–983. https://doi.org/10.3102/0002831215587754

- Brazeal, K. R., Brown, T. L., Couch, B. A., & Brickman, P. (2016). Characterizing student perceptions of and buy-in toward common formative assessment techniques. CBE—Life Sciences Education, 15(4), 1–14. https://doi.org/10.1187/cbe.16-03-0133

- Brown, H. D. (2004). Language assessment: Principles and classroom practices (1st ed.). Pearson Education.

- Brown, H. D., & Abeywickrama, P. (2019). Language assessment: Principles and classroom practices (3rd ed.). Pearson Education.

- Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136–162). Sage.

- Butler, Y. G., & Lee, J. (2010). The effects of self-assessment among young learners of English. Language Testing, 27(1), 5–31. https://doi.org/10.1177/0265532209346370

- Carless, D. (2011). From testing to productive learning: Implementing formative assessment in Confucian-heritage settings. Routledge. https://doi.org/10.4324/9780203128213

- Chapelle, C. A., & Brindley, G. (2010). What is language assessment? In N. Schmitt (Ed.), An introduction to applied linguistics (2nd ed., pp. 247–267). Hodder Education.

- Chory‐Assad, R. M., & Paulsel, M. L. (2004). Classroom justice: Student aggression and resistance as reactions to perceived unfairness. Communication Education, 53(3), 253–273. https://doi.org/10.1080/0363452042000265189

- Chow, J. C., & Hollo, A. (2018). Language ability of students with emotional disturbance: Discrepancies between teacher ratings and direct assessment. Assessment for Effective Intervention, 43(2), 90–95. https://doi.org/10.1177/1534508417702063

- Clinchot, M., Ngai, C., Huie, R., Talanquer, V., Lambertz, J., Banks, G., Weinrich, M., Lewis, R., Pelletier, P., & Sevian, H. (2017). Better formative assessment. The Science Teacher, 84(3), 69. https://doi.org/10.2505/4/tst17_084_03_69

- Cropley, D., & Cropley, A. (2016). Promoting creativity through assessment: A formative computer-assisted assessment tool for teachers. Educational Technology, 56(6), 17–24.

- Dunn, K. E., & Mulvenon, S. W. (2009). A critical review of research on formative assessments: The limited scientific evidence of the impact of formative assessments in education. Practical Assessment, Research, and Evaluation, 14(1), 1–11. https://doi.org/10.7275/jg4h-rb87

- Dweck, C. S. (2017). From needs to goals and representations: Foundations for a unified theory of motivation, personality, and development. Psychological Review, 124(6), 689. https://doi.org/10.1037/rev0000082

- Evans, D. J., Zeun, P., & Stanier, R. A. (2014). Motivating student learning using a formative assessment journey. Journal of Anatomy, 224(3), 296–303. https://doi.org/10.1111/joa.12117

- Fong, C. J., Patall, E. A., Vasquez, A. C., & Stautberg, S. (2019). A meta-analysis of negative feedback on intrinsic motivation. Educational Psychology Review, 31(1), 121–162. https://doi.org/10.1007/s10648-018-9446-6

- Gan, Z., He, J., & Liu, F. (2019). Understanding classroom assessment practices and learning motivation in secondary EFL students. Journal of Asia TEFL, 16(3), 783. https://doi.org/10.18823/asiatefl.2019.16.3.2.783

- Golzar, J., Miri, M. A., & Nazari, M. (2022). English language teacher professional identity aesthetic depiction: An arts-based study from Afghanistan. Professional Development in Education, 1–21. https://doi.org/10.1080/19415257.2022.2081248

- Hansen, G. (2020). Formative assessment as a collaborative act. Teachers’ intention and students’ experience: Two sides of the same coin, or? Studies in Educational Evaluation, 66, 1–10. https://doi.org/10.1016/j.stueduc.2020.100904

- Haq, M. N. U., Mahmood, M., & Awan, K. (2020). Assistance of formative assessment in the improvement of English writing skills at intermediate level. Global Language Review, 5(3), 34–41. https://doi.org/10.31703/glr.2020(V-III).04

- Hattie, J., & Gan, M. (2011). Instruction based on feedback. In P. Alexander & R. E. Mayer (Eds.), Handbook of research on learning and instruction (pp. 249–271). Routledge.

- Havnes, A., Smith, K., Dysthe, O., & Ludvigsen, K. (2012). Formative assessment and feedback: Making learning visible. Studies in Educational Evaluation, 38(1), 21–27. https://doi.org/10.1016/j.stueduc.2012.04.001

- Hwang, G.-J., & Chang, H.-F. (2011). A formative assessment-based mobile learning approach to improving the learning attitudes and achievements of students. Computers & Education, 56(4), 1023–1031. https://doi.org/10.1016/j.compedu.2010.12.002

- Jang, E. E., & Wagner, M. (2013). Diagnostic feedback in the classroom. In A. Kunnan (Ed.), The companion to language assessment (Vol. 2, pp. 693–711). Wiley-Blackwell. https://doi.org/10.1002/9781118411360.wbcla081

- Jiang, Y. (2014). Exploring teacher questioning as a formative assessment strategy. RELC Journal, 45(3), 287–304. https://doi.org/10.1177/0033688214546962

- Jimola, F. E., & Ofodu, G. O. (2019). ESL teachers and diagnostic assessment: Perceptions and practices. ELOPE: English Language Overseas Perspectives and Enquiries, 16(2), 33–48. https://doi.org/10.4312/elope.16.2.33-48

- Kim, A. Y. (2015). Exploring ways to provide diagnostic feedback with an ESL placement test: Cognitive diagnostic assessment of L2 reading ability. Language Testing, 32(2), 227–258. https://doi.org/10.1177/0265532214558457

- Lee, I. (2011). Formative assessment in EFL writing: An exploratory case study. Changing English, 18(1), 99–111. https://doi.org/10.1080/1358684X.2011.543516

- Lee, I., Yu, S., & Liu, Y. (2018). Hong Kong secondary students’ motivation in EFL writing: A survey study. TESOL Quarterly, 51(1), 176–187. https://doi.org/10.1002/tesq.364

- Lee, I., Mak, P., & Yuan, R. E. (2019). Assessment as learning in primary writing classrooms: An exploratory study. Studies in Educational Evaluation, 62, 72–81. https://doi.org/10.1016/j.stueduc.2019.04.012

- Leenknecht, M., Wijnia, L., Köhlen, M., Fryer, L., Rikers, R., & Loyens, S. (2021). Formative assessment as practice: The role of students’ motivation. Assessment & Evaluation in Higher Education, 46(2), 236–255. https://doi.org/10.1080/02602938.2020.1765228

- Leong, W. S., Ismail, H., Costa, J. S., & Tan, H. B. (2018). Assessment for learning research in East Asian countries. Studies in Educational Evaluation, 59, 270–277. https://doi.org/10.1016/j.stueduc.2018.09.005

- Marhaeni, A. A. I. N., Kusuma, I. P. I., Dewi, N. L. P. E. S., & Paramartha, A. A. G. Y. (2019). Using performance assessment to empower students’ learning ownership and promote achievement in EFL writing courses. International Journal of Humanities, Literature & Arts, 2(1), 9–17.

- McManus, S. (2008). Attributes of effective formative assessment. Council of Chief State School Officers.

- Mulliner, E., & Tucker, M. (2017). Feedback on feedback practice: Perceptions of students and academics. Assessment and Evaluation in Higher Education, 42(2), 266–288. https://doi.org/10.1080/02602938.2015.1103365

- Munoz, A., & Álvarez, M. E. (2007). Students’ objectivity and perception of self-assessment in an EFL classroom. The Journal of Asia TEFL, 4(2), 1–25.

- Naghdipour, B. (2017). Incorporating formative assessment in Iranian EFL writing: A case study. The Curriculum Journal, 28(2), 283–299. https://doi.org/10.1080/09585176.2016.1206479

- Nazari, M., Miri, M. A., & Golzar, J. (2021). Challenges of second language teachers’ professional identity construction: Voices from Afghanistan. TESOL Journal, 12(3), e587. https://doi.org/10.1002/tesj.587

- Ogange, B. O., Agak, J. O., Okelo, K. O., & Kiprotich, P. (2018). Student perceptions of the effectiveness of formative assessment in an online learning environment. Open Praxis, 10(1), 29–39. https://doi.org/10.5944/openpraxis.10.1.705

- Orsmond, P., & Merry, S. (2011). Feedback alignment: Effective and ineffective links between tutors’ and students’ understanding of coursework feedback. Assessment and Evaluation in Higher Education, 36(2), 125–136. https://doi.org/10.1080/02602930903201651

- Oscarson, A. D. (2009). Self-assessment of writing in learning English as a foreign language: A study at the upper secondary school level [Doctoral dissertation, University of Gothenburg].

- Oscarson, M. (2013). Self-assessment in the classroom. In A. Kunnan (Ed.), The companion to language assessment (pp. 712–729). Wiley-Blackwell.

- Ozan, C., & Kincal, R. Y. (2018). The effects of formative assessment on academic achievement, attitudes toward the lesson, and self-regulation skills. Educational Sciences: Theory and Practice, 18(1), 85–118. https://doi.org/10.12738/estp.2018.1.0216

- Palm, T. (2008). Performance assessment and authentic assessment: A conceptual analysis of the literature. Practical Assessment, Research & Evaluation, 13(4), 1–11. https://doi.org/10.7275/0qpc-ws45

- Panadero, E., Brown, G. T., & Strijbos, J. W. (2016). The future of student self-assessment: A review of known unknowns and potential directions. Educational Psychology Review, 28(4), 803–830.

- Qasem, F. A. A. (2020). The effective role of learners’ self-assessment tasks in enhancing learning English as a second language. Arab World English Journal, 11(3), 502–514. https://doi.org/10.24093/awej/vol11no3.33

- Richard, M. E. (2003). Goal orientation and feedback sign as predictors of changes in motivation and performance [Master’s thesis, Louisiana State University].

- Rivers, M. L., Dunlosky, J., & Joynes, R. (2019). The contribution of classroom exams to formative evaluation of concept-level knowledge. Contemporary Educational Psychology, 59, 1–11. https://doi.org/10.1016/j.cedpsych.2019.101806

- Ruiz-Primo, M. A. (2011). Informal formative assessment: The role of instructional dialogues in assessing students’ learning. Studies in Educational Evaluation, 37(1), 15–24. https://doi.org/10.1016/j.stueduc.2011.04.003

- Sach, E. (2012). Teachers and testing: An investigation into teachers’ perceptions of formative assessment. Educational Studies, 38(3), 261–276. https://doi.org/10.1080/03055698.2011.598684

- Shana, Z. A., & Abd Al Baki, S. (2020). Using plickers in formative assessment to augment student learning. International Journal of Mobile and Blended Learning, 12(2), 57–76. https://doi.org/10.4018/ijmbl.2020040104

- Shute, V. J. (2008). Focus on formative feedback. Review of Educational Research, 78(1), 153–189. https://doi.org/10.3102/0034654307313795

- Tang, C., & Liu, Y. T. (2018). Effects of indirect coded corrective feedback with and without short affective teacher comments on L2 writing performance, learner uptake and motivation. Assessing Writing, 35, 26–40. https://doi.org/10.1016/j.asw.2017.12.002

- Torrance, H. (2012). Formative assessment at the crossroads: Conformative, deformative and transformative assessment. Oxford Review of Education, 38(3), 323–342. https://doi.org/10.1080/03054985.2012.689693

- Ullman, J. B. (2001). Structural equation modeling. In B. G. Tabachnick & L. S. Fidell (Eds.), Using multivariate statistics (4th ed., pp. 653–771). Allyn & Bacon.

- van der Kleij, F. M., Cumming, J. J., & Looney, A. (2018). Policy expectations and support for teacher formative assessment in Australian education reform. Assessment in Education: Principles, Policy & Practice, 25, 620–637. https://doi.org/10.1080/0969594X.2017.1374924

- van der Kleij, F. M. (2019). Comparison of teacher and student perceptions of formative assessment feedback practices and association with individual student characteristics. Teaching and Teacher Education, 85, 175–189. https://doi.org/10.1016/j.tate.2019.06.010

- Vasu, K. A., Mei Fung, Y., Nimehchisalem, V., & Md Rashid, S. (2020). Self-regulated learning development in undergraduate ESL writing classrooms: Teacher feedback versus self-assessment. RELC Journal, 51(3). https://doi.org/10.1177/0033688220957782

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

- Waller, L., & Papi, M. (2017). Motivation and feedback: How implicit theories of intelligence predict L2 writers’ motivation and feedback orientation. Journal of Second Language Writing, 35, 54–65. https://doi.org/10.1016/j.jslw.2017.01.004

- Weaver, M. (2006). Do students value feedback? Students’ perception of tutors’ written feedback. Assessment & Evaluation in Higher Education, 31(3), 379–394. https://doi.org/10.1080/02602930500353061

- Wei, L. (2010). Formative assessment: Opportunities and challenges. Journal of Language Teaching and Research, 1(6), 838–841. https://doi.org/10.4304/jltr.1.6.838-841

- Widiastuti, I. A. M. S., Mukminatien, N., Prayogo, J. A., & Irawati, E. (2020). Dissonances between teachers’ beliefs and practices of formative assessment in EFL classes. International Journal of Instruction, 13(1), 71–84. https://doi.org/10.29333/iji.2020.1315a

- Wiggins, G., & McTighe, J. (2005). Understanding by design. ASCD.

- Winstone, N. E., Nash, R. A., Parker, M., & Rowntree, J. (2017). Supporting learners’ agentic engagement with feedback: A systematic review and a taxonomy of recipience processes. Educational Psychologist, 52(1), 17–37. https://doi.org/10.1080/00461520.2016.1207538

- Wuest, D. A., & Fisette, J. L. (2012). Foundations of physical education, exercise science, and sport (17th ed.). McGraw-Hill.

- Xiao, Y., & Yang, M. (2019). Formative assessment and self-regulated learning: How formative assessment supports students’ self-regulation in English language learning. System, 81, 39–49. https://doi.org/10.1016/j.system.2019.01.004

- Yan, Z., & Cheng, E. C. K. (2015). Primary teachers’ attitudes, intentions and practices regarding formative assessment. Teaching and Teacher Education, 45, 128–136. https://doi.org/10.1016/j.tate.2014.10.002

- Yan, Z., Li, Z., Panadero, E., Yang, M., Yang, L., & Lao, H. (2021). A systematic review on factors influencing teachers’ intentions and implementations regarding formative assessment. Assessment in Education: Principles, Policy & Practice, 28(3), 228–260.

- Yastibas, A. E., & Yastibas, G. C. (2015). The use of e-portfolio-based assessment to develop students’ self-regulated learning in English language teaching. Procedia - Social and Behavioral Sciences, 176, 3–13. https://doi.org/10.1016/j.sbspro.2015.01.437

- Yu, S., Jiang, L., & Zhou, N. (2020). Investigating what feedback practices contribute to students’ writing motivation and engagement in Chinese EFL context: A large scale study. Assessing Writing, 44, 1–15. https://doi.org/10.1016/j.asw.2020.100451

- Zhan, Y. (2019). Motivated or informed? Chinese undergraduates’ beliefs about the functions of continuous assessment in their college English course. Higher Education Research & Development, 39(5), 1055–1069. https://doi.org/10.1080/07294360.2019.1699029

- Zou, X., & Zhang, X. (2013). Effect of different score reports of Web-based formative test on students’ self-regulated learning. Computers & Education, 66, 54–63. https://doi.org/10.1016/j.compedu.2013.02.016

Appendix A

Factor 1 (Self-Assessment)

(1) Learners should determine their learning needs.

(2) Learners should implement concept mapping to evaluate their learning.

(3) Learners should choose their learning objectives.

(4) Learners should assess their own learning progress.

Factor 2 (Interactive-Informal Assessment)

(5) Learners should benefit from peer feedback as an evaluation instrument.

(6) Teachers should check learners’ understanding and progress via interactions.

(7) Teachers should evaluate learners’ responses.

(8) Teachers should rectify learners’ mistakes.

Factor 3 (In-class Diagnostic Assessment)

(9) Teachers should conduct diagnostic assessments to identify learners’ needs.

(10) Teachers should administer announced quizzes to evaluate learners’ understanding.

(11) Teachers should examine the learners’ gap of knowledge.

(12) Teachers should use the contents available to check learners’ understanding.

Factor 4 (Subject Performance Assessment)

(13) Teachers should use multiple choice questions to evaluate learners.

(14) Teachers should use essay writing to evaluate learners.

(15) Teachers should use gap fill to evaluate learners.

(16) Teachers should use short-answer questions to evaluate learners.