Abstract

The COVID-19 pandemic has changed many aspects of society, including education. While online learning was adopted to limit transmission of the virus, what determines its success or failure still needs to be investigated. This study addresses that question by analyzing how self-regulated learning and digital literacy, mediated by course satisfaction, influence students’ academic performance in the online learning situation caused by the COVID-19 pandemic in Indonesia. We applied Partial Least Squares Structural Equation Modeling (PLS-SEM) to 358 responses to an online survey questionnaire completed by undergraduate students during the pandemic. The study finds that self-regulated learning is the key factor, followed by digital literacy. Course satisfaction also mediates the effects of self-regulated learning and digital literacy on academic performance.

1. Introduction

It has been two years since the COVID-19 pandemic was officially declared to have reached Indonesia. Many aspects of society have changed as a result of social distancing measures, ranging from partial to full lockdowns. Numerous practices have become the new norm: people are required to wear masks in public spaces, gathering places are limited in capacity and operating hours, and places of worship have been temporarily closed. The most striking change is that face-to-face teaching and learning in schools and on campuses was prohibited and replaced with online learning. This happened not only in Indonesia; schools were closed around the world. Following UNESCO recommendations, educational institutions were encouraged to replace face-to-face learning with online learning (Crawford et al., 2020).

Applying a learning management system (LMS) in online learning is challenging. The challenge comes from two sources: first, the physical absence of lecturers, and second, the loss of the campus academic atmosphere, so that students tend to spend their productive time on activities other than studying (Elvers et al., 2003; Levy & Ramim, 2012; Michinov et al., 2011). Because online learning relies heavily on learner autonomy, self-regulation plays an important role in the process. In regular classrooms, self-regulatory behaviors have been shown to play an important role in students’ academic performance (Gonzalez-Nucamendi et al., 2021; Lan, 1996; Orange, 1999; Perry et al., 2012). If these behaviors are vital in regular classrooms, self-regulatory skills can be expected to be even more critical in online learning, where students are isolated from each other in their own rooms, because a lack of self-regulation can lead to negative behaviors such as procrastination (Hong et al., 2021). Students who lack self-regulated learning skills are suspected of misusing their autonomy, so that the learning tasks they should have completed in online courses remain unfinished.

In addition to self-regulated learning, the ability to use digital devices, known as digital literacy, also plays an important role. Online learning is not only about using devices to access the LMS but also about synthesizing information and avoiding the risks that may arise when accessing the internet (Rodríguez-de-Dios & Igartua, 2016). Literacy was traditionally understood as the capacity to extract sense from what one reads (Prior et al., 2016), and digital literacy has similar characteristics: the skills of reading and writing alone are not enough to identify a person as literate (Miranda et al., 2018). A person can be said to be digitally literate if they can understand and use information obtained from a variety of digital sources (Gilster, 1997).

Researchers have shown that self-regulated learning influences students’ course satisfaction (Kuo et al., 2014; Puzziferro, 2008; Wang et al., 2013). Course satisfaction, in turn, is known to have implications for students’ academic performance (Blanz, 2014; Topală, 2014). Previous studies also indicate that self-regulated learning and digital literacy influence academic performance directly, but that research focused mainly on the regular classroom setting (Bail et al., 2008; Leung & Lee, 2012; Lucieer et al., 2016; Vrana, 2014). As far as the authors know, no study has examined how digital literacy influences course satisfaction in the online learning environment, even though the use of digital devices is indispensable in online learning. How course satisfaction mediates the effects of digital literacy and self-regulated learning on academic performance has also not been studied before. This study aims to fill these gaps. It is important because it offers a broader understanding of how self-regulated learning, digital literacy, and course satisfaction influence students’ academic performance in the online learning situation caused by the COVID-19 pandemic in Indonesia.

2. Theoretical background and hypothesis development

2.1. Self-regulated learning

Zimmerman (2000) defines self-regulation as self-generated thoughts, feelings, and actions that are planned and cyclically adjusted to achieve personal goals. Bandura (1986) states that self-regulated learning represents the relationship among three (triadic) processes: personal, behavioral, and environmental. According to Schunk and Ertmer (2000), self-regulation is a cycle in which personal, environmental, and behavioral aspects change during the learning process. In online learning, students have complete control over their learning. They must therefore independently plan, regulate, monitor, and evaluate their own learning. Successful self-regulated learning is characterized by active engagement and by the adjustment and readjustment of learning strategies in response to the circumstances students encounter.

Although students in online learning need to be independent and autonomous, they are also expected to manage themselves. Self-regulated learning has much in common with learners’ ability to exert self-control. Previous literature has shown that resisting temptation, resisting distractions, focusing on long-term goals, and delaying short-term gratification are all part of self-regulation (Zhu et al., 2016). However, this is not easy to maintain (Elvers et al., 2003; Levy & Ramim, 2012; Michinov et al., 2011). Previous literature states that self-regulation tends to be more difficult for students in online learning settings than in face-to-face learning (Lajoie & Azevedo, 2006; Lee et al., 2008; Samruayruen et al., 2013; Tsai & Tsai, 2010). Moreover, a disorganized self-regulated learning profile is associated with poor academic outcomes, such as a low GPA (Barnard et al., 2009).

2.2. Digital Literacy

The concept of digital literacy has changed in recent years, and the term is often used inconsistently because there is no general consensus among academics on its definition (Bawden, 2001, 2008; Hockly, 2012). Initially, the term referred specifically to knowledge of hardware and software: people were considered digitally literate if they knew how to use a word processing application such as Microsoft Word. With the advancement of internet technology, around the 1990s, some academics used the term to refer to the ability to read and understand hypertext and multimedia (Bawden, 2001). The concept is now seen as more than just using software or the device itself. It covers the technical skills needed to operate devices as well as the knowledge and skills to use them for different purposes (Chisholm, 2006). The importance of technology lies not merely in the capacity to use it but also in its intellectual, social, and ethical aspects, and this is where the concept of digital literacy must be taken into account. It is relevant in today’s classrooms, where education depends on technology even from primary school (Buckingham, 2015; Casey & Bruce, 2011; Unsworth, 2005). For adolescents and adults, digital literacy is a vital capacity not only for daily tasks and routines but also in all sectors of society, including higher education and the professional world (Ahmed & Roche, 2021; Mohammadyari & Singh, 2015; Siddiq et al., 2017). Students with strong digital literacy skills know how to use technology, which benefits their learning: technology gives students easy access to academic resources, makes them more productive, and helps them feel connected, with a more immersive, engaging, and relevant experience (Burton et al., 2015).

Digital literacy can be interpreted as an individual’s awareness, attitude, and ability to use digital tools and facilities appropriately to identify, access, manage, integrate, evaluate, analyze, and synthesize digital resources, build new knowledge, create media expressions, and communicate with others, in the context of specific life situations, to enable constructive social action, and for reflection (Hockly, 2012; Martin, 2005). Although today’s generation consists of digital natives (Al-htaybat et al., 2018) at the forefront of new technologies, this does not guarantee that they will use those technologies wisely: they have many difficulties managing information, assessing its credibility, building their digital identity, and managing privacy in their online activities (Fernández-Villavicencio, 2012). Rodríguez-de-Dios and Igartua (2016) identify five dimensions of digital literacy:

Technological or Instrumental Skill

Communication Skill

Information Skill

Critical Skill

Security Skill

2.3. Course Satisfaction

Satisfaction in education is referred to by different names, such as student satisfaction (Xiao & Wilkins, 2015), learning satisfaction (Topala & Tomozii, 2014), and course satisfaction (Frey et al., 2003; Wang et al., 2013). This study uses the term course satisfaction. In most countries, students are required to pay tuition fees; like consumers in the business sector, their satisfaction therefore needs to be considered by universities (Xiao & Wilkins, 2015). According to Rashidi and Moghadam (2014), course satisfaction can be interpreted as the relationship between students’ expectations and what they actually get. A previous study has shown that students with high course satisfaction tend to earn higher grades on their final exam (Puzziferro, 2008).

This is particularly important in online learning. With limited interaction and many potential disruptions, course satisfaction can be in jeopardy (Dong et al., 2020; Watermeyer et al., 2022), while a large body of evidence shows that course satisfaction plays a significant role in academic performance (Abuhassna et al., 2020; Blanz, 2014; Hanus & Fox, 2015; Ko & Chung, 2014). Hence, online learning can succeed when students are satisfied with their learning experience (course satisfaction), which in turn leads to satisfactory academic performance (Chang & Smith, 2008; Marks et al., 2005; Puzziferro, 2008).

Based on the theoretical grounding and previous findings, we propose five hypotheses as follows:

H1: Self-regulated learning has a significant positive direct effect on course satisfaction

H2: Digital literacy has a significant positive direct effect on course satisfaction

H3: Course satisfaction has a significant positive direct effect on academic performance

H4: Course satisfaction mediates the relationship between self-regulated learning and academic performance

H5: Course satisfaction mediates the relationship between digital literacy and academic performance

3. Research Model

The model proposed in this study can be seen in Figure , which was generated from the theoretical grounding and the hypotheses postulated. In this model, self-regulated learning and digital literacy act as exogenous latent variables. Course satisfaction acts as an endogenous latent variable with a dual role as both an independent and a dependent variable, and academic performance acts as an endogenous latent variable.

4. Method

4.1. Research design and sample

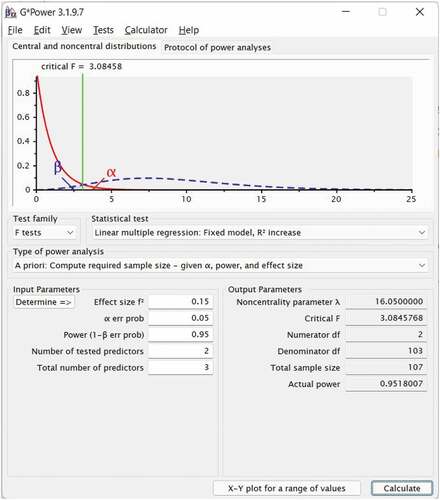

This study used a survey research design. Survey research designs are quantitative research procedures in which researchers administer a survey to a sample or to the entire population to describe the attitudes, opinions, behaviors, or characteristics of the population (Creswell, 2012). Specifically, the study used a cross-sectional survey design, in which data are collected at one point in time (Creswell, 2012). The sample was selected using convenience sampling. In determining the sample size, the authors followed the recommendation of J. F. Hair et al. (2016) that the required sample size should be determined in line with the statistical power. To calculate the required sample size and the statistical power, the authors used G*Power software (Faul et al., 2007). The authors set the type I error rate at α = 0.05 and the power at (1 − β) = 0.95 (that is, a type II error rate of β = 0.05), with an effect size of 0.15 so that a medium effect is the minimum threshold (Cohen, 2013; J. F. Hair et al., 2016). The model has 3 predictors in total, of which 2 are tested predictors. The calculation shows that the minimum sample required in this study is 107. The complete settings used in the sample size analysis and the results can be seen in Figure . The sample consisted of undergraduate students from two universities in Indonesia.
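The quantities behind this power analysis can be written out as a short worked sketch. It assumes that the G*Power test family was the F-test for linear multiple regression (fixed model, R² increase), which is consistent with the reported "total" and "tested" predictor counts but is not stated explicitly in the text; the symbols λ, df₁, df₂, p, and q are introduced here for illustration.

```latex
% Assumed test: F-test, linear multiple regression, fixed model, R^2 increase
% f^2 = 0.15 (effect size), p = 3 total predictors, q = 2 tested predictors
\begin{align*}
  \lambda &= f^{2} N            && \text{(noncentrality parameter)} \\
  df_{1}  &= q = 2              && \text{(numerator degrees of freedom)} \\
  df_{2}  &= N - p - 1 = N - 4  && \text{(denominator degrees of freedom)} \\
  \text{power} &= P\!\left( F'_{df_{1},\,df_{2},\,\lambda} > F_{1-\alpha;\,df_{1},\,df_{2}} \right)
\end{align*}
% At the reported minimum sample size N = 107:
%   \lambda = 0.15 \times 107 = 16.05, \qquad df_{2} = 107 - 4 = 103
```

Under these assumptions, the required sample size is the smallest N for which the power reaches 0.95 at α = 0.05, which the authors report as 107.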

4.2. Instrumentation and data collection

The instruments used for data collection in this study were validated in previous research. Self-regulated learning was measured with the Online Self-Regulated Learning Questionnaire (OSLQ; Barnard et al., 2009), and digital literacy with the instrument developed by Rodríguez-de-Dios et al. (2016). Course satisfaction was measured with items adopted from the Course Satisfaction Questionnaire (CSQ; Frey et al., 2003), and academic performance with an instrument validated by Nayak (2018). All variables were measured on a five-point Likert scale, where 1 refers to “strongly disagree” and 5 refers to “strongly agree”.

For data collection, the authors conducted a web-based survey among Indonesian students. A total of 358 completed questionnaires were received, which exceeds the minimum sample size of 107 calculated with G*Power.

4.3. Sample demographic background

Table shows the background of the respondents who participated in this study. The sample (n = 358) is broken down by gender, university, and the device most frequently used in online learning. More than two-thirds were female (69.55%), and the remainder were male (30.45%). The two universities contributed comparable numbers of respondents: 201 from Universitas Negeri Medan (56.15%) and 157 from Universitas Islam Negeri Sumatera Utara (43.85%). Interestingly, the majority of respondents attended online lectures using mobile phones (300 respondents, 83.8%), while the remainder used laptops (16.2%).

Table 1. Sample demographic background

4.4. Data analysis procedure

Because the constructs examined are complex and contain two layers of constructs, hierarchical component models (HCMs) in Partial Least Squares Structural Equation Modeling (PLS-SEM) were employed for data analysis. HCMs have two elements: the higher-order component (HOC), which captures the more abstract higher-order entity, and the lower-order components (LOCs), which capture the subdimensions of the higher-order entity (J. F. Hair et al., 2016). The HCMs used in this study are reflective-formative (Type II) models. There are three approaches to estimating the parameters of HCMs in PLS-SEM: the repeated indicator approach (Lohmöller, 2013; Wold, 1982), the two-stage or sequential approach (Ringle et al., 2012; Wetzels et al., 2009), and the hybrid approach (Becker et al., 2012; Ciavolino & Nitti, 2013). The authors used the repeated indicator approach with Mode B (formative measurement) to estimate the parameters of the HCMs in this study. The advantage of the repeated indicator approach is that it assesses all constructs simultaneously instead of assessing lower-order and higher-order dimensions separately (Becker et al., 2012). Mode B is also considered more appropriate for the repeated indicator approach, following Becker et al. (2012).
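The core idea of the repeated indicator approach can be illustrated with a minimal sketch: the higher-order component is specified by reusing every indicator of its lower-order components. The function name `repeated_indicators` and the item labels are hypothetical and are not the study's actual item lists; the sketch only shows the model specification step, not the PLS estimation itself.

```python
from typing import Dict, List


def repeated_indicators(locs: Dict[str, List[str]]) -> List[str]:
    """Build the indicator list of a higher-order component (HOC).

    In the repeated indicator approach, the HOC is measured by all
    indicators of its lower-order components (LOCs), used a second time.
    """
    hoc_indicators: List[str] = []
    for indicators in locs.values():
        hoc_indicators.extend(indicators)
    return hoc_indicators


# Hypothetical LOCs and item labels, for illustration only
digital_literacy_locs = {
    "Communication Skill": ["com1", "com2", "com3"],
    "Critical Skill": ["cri1", "cri2"],
    "Security Skill": ["sec1", "sec2"],
}

# The HOC "Digital Literacy" reuses all seven items of its LOCs
print(repeated_indicators(digital_literacy_locs))
```
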

PLS-SEM was chosen because this research is exploratory and predictive in nature (Henseler et al., 2016; J. F. Hair et al., 2016). PLS-SEM is also preferred because it allows researchers to estimate complex models with many constructs, indicators, and structural paths without imposing distributional assumptions on the data, since PLS-SEM is non-parametric (J. F. Hair et al., 2016). The results were analyzed in three main steps: (1) evaluation of the measurement models for the first-order constructs, (2) evaluation of the first-order constructs on the second-order constructs, and (3) evaluation of the structural model (Becker et al., 2012; J. F. Hair et al., 2016; Ringle et al., 2015). Each step is explained in the next section.

5. Results

5.1. Evaluation of measurement models for first-order constructs

The first-order constructs in this study are reflective. The assessment of reflective constructs involves convergent validity, internal consistency reliability, and discriminant validity (J. F. Hair et al., 2016). Convergent validity is the degree to which a measure correlates with other measures of the same construct (J. F. Hair et al., 2016); it requires both the factor loadings and the Average Variance Extracted (AVE) to exceed 0.5. Internal consistency reliability indicates whether the items measuring a construct produce similar scores; it requires composite reliability and Cronbach’s alpha to be above 0.6 (J. F. Hair et al., 2016). The last aspect in evaluating the measurement models for first-order constructs is discriminant validity. There are several approaches to evaluating discriminant validity, such as cross-loadings (Henseler et al., 2009), the Fornell-Larcker criterion (Fornell & Larcker, 1981), and the heterotrait-monotrait ratio (HTMT; Henseler et al., 2015). HTMT is considered more accurate because cross-loadings and the Fornell-Larcker criterion often fail to detect discriminant validity issues (Henseler et al., 2015). As a threshold, the HTMT confidence interval must not include 1; as a more conservative threshold, an HTMT value below 0.85 seems warranted (Henseler et al., 2015).
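The convergent validity and reliability criteria above can be sketched with the standard textbook formulas: AVE as the mean squared standardized loading, composite reliability from the loadings and their error variances, and Cronbach’s alpha from item and total-score variances. This is a minimal illustration with made-up loadings and scores, not output from the study; the function names are chosen here for readability.

```python
from statistics import variance
from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability from standardized loadings."""
    total = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)  # indicator error variances
    return total ** 2 / (total ** 2 + error)


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; `items` holds one list of scores per item."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sums
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1.0 - item_var / variance(totals))


# Made-up standardized loadings for one reflective construct
loadings = [0.7, 0.8, 0.9]
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)
print(f"AVE = {ave:.3f} (threshold in the text: > 0.5)")
print(f"CR  = {cr:.3f} (threshold in the text: > 0.6)")
```

With these illustrative loadings, both values clear the thresholds quoted in the text, so the construct would be retained.
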

The results shown in Table are from the second run of the analysis. In the first run (all indicators can be seen in ), the indicators that did not meet the requirements were removed, namely SRL13, SRL14, SRL15, DL6, DL7, DL8, DL22, DL23, DL24, and DL25. SRL13, SRL14, and SRL15 belonged to the Time Management sub-construct of self-regulated learning. DL6 and DL7 belonged to the Technological Skill sub-construct of digital literacy. DL8 belonged to the Personal Security Skill sub-construct of digital literacy. DL22, DL23, DL24, and DL25 belonged to the Information Skill sub-construct of digital literacy. As a result, two sub-constructs were discarded completely: Time Management from self-regulated learning and Information Skill from digital literacy. Table shows that all constructs have adequate convergent validity, internal consistency reliability, and discriminant validity. The details of the HTMT results can be seen in Table . Once the measurement models for the first-order constructs are confirmed to be adequate, the analysis can proceed to the evaluation of the second-order constructs.

Table 2. Convergent validity and internal consistency reliability measures

Table 3. HTMT values for discriminant validity
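For readers unfamiliar with HTMT, the ratio can be sketched as follows: the mean correlation between indicators of two different constructs, divided by the geometric mean of the average within-construct indicator correlations. The sketch below assumes raw indicator scores are available and that each construct has at least two indicators; the data and item labels are invented for illustration and do not come from the study.

```python
from itertools import combinations, product
from math import sqrt
from statistics import mean
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def htmt(data: Dict[str, List[float]],
         items_a: Sequence[str], items_b: Sequence[str]) -> float:
    """Heterotrait-monotrait ratio for two constructs."""
    # Correlations between indicators of different constructs
    hetero = mean([pearson(data[a], data[b])
                   for a, b in product(items_a, items_b)])
    # Correlations among indicators of the same construct
    mono_a = mean([pearson(data[p], data[q])
                   for p, q in combinations(items_a, 2)])
    mono_b = mean([pearson(data[p], data[q])
                   for p, q in combinations(items_b, 2)])
    return hetero / sqrt(mono_a * mono_b)


# Invented scores for two constructs with two indicators each
scores = {
    "a1": [1, 2, 3, 4, 5], "a2": [2, 4, 6, 8, 10],
    "b1": [2, 1, 4, 3, 5], "b2": [4, 2, 8, 6, 10],
}
value = htmt(scores, ["a1", "a2"], ["b1", "b2"])
print(f"HTMT = {value:.2f}; below the conservative 0.85 threshold: {value < 0.85}")
```
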

5.2. Evaluation of the first-order constructs on the second-order constructs

The evaluation of the second-order constructs follows a process analogous to that used for the first-order constructs (Chin, 1998a). However, because the second order is formative, several researchers have emphasized that the traditional validity assessments used for reflective constructs do not apply (Götz et al., 2010; Henseler et al., 2009; J. F. Hair et al., 2016; Petter et al., 2007). Fundamentally, formative models assume that the indicators influence or shape the construct (J. F. Hair et al., 2016; Jarvis et al., 2003). This calls for a different interpretation and evaluation of the measurement model (Götz et al., 2010; J. F. Hair et al., 2016). In general, the evaluation of the second-order constructs therefore involves two steps: the indicator level (in which the first-order constructs now act as indicators) and the second-order construct level (Henseler et al., 2009).

In the first step, we need to assess whether every first-order construct contributes to forming the second-order construct (Chin, 1998a; Hair et al., 2011). As a reminder, the paths from the first-order constructs to the second-order constructs are treated as weights rather than loadings. Note that the weights are the scores obtained in the first stage. Following Andreev et al. (2009), each indicator’s weight should exceed 0.1. Bootstrapping should also be employed to verify significance (Hair et al., 2011; Henseler et al., 2009). Table shows that all first-order constructs’ weights are higher than 0.10 and are significant based on bootstrapping with 5,000 resamples, which means there is empirical support for the contribution of the first-order constructs to the formative second-order constructs (Hair et al., 2011).
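The logic of bootstrapped significance testing can be sketched in a few lines: resample respondents with replacement, re-estimate the coefficient on each resample, and divide the original estimate by the standard deviation of the bootstrap estimates to obtain a t-value. The sketch below is a simplified stand-in, not the SmartPLS procedure: it bootstraps a standardized slope between two synthetic variables instead of re-estimating a full PLS model, and all names and data are hypothetical.

```python
import random
from math import sqrt
from statistics import mean, stdev


def standardized_slope(x, y):
    """Standardized slope of y on x (equals Pearson's r for one predictor)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None  # degenerate resample with no variance
    return sxy / sqrt(sxx * syy)


def bootstrap_t(x, y, n_resamples=5000, seed=42):
    """Return (estimate, bootstrap standard error, t-value)."""
    rng = random.Random(seed)
    n = len(x)
    estimate = standardized_slope(x, y)
    draws = []
    while len(draws) < n_resamples:
        # Resample cases (respondents) with replacement
        idx = [rng.randrange(n) for _ in range(n)]
        value = standardized_slope([x[i] for i in idx], [y[i] for i in idx])
        if value is not None:
            draws.append(value)
    std_error = stdev(draws)
    return estimate, std_error, estimate / std_error


# Synthetic data, for illustration only
data_rng = random.Random(1)
x = [data_rng.gauss(0, 1) for _ in range(100)]
y = [0.5 * xi + data_rng.gauss(0, 1) for xi in x]
estimate, std_error, t_value = bootstrap_t(x, y, n_resamples=1000)
print(f"weight = {estimate:.3f}, SE = {std_error:.3f}, t = {t_value:.2f}")
```

In a PLS-SEM tool the same resampling loop wraps the entire model estimation, so every weight and path coefficient receives its own bootstrap standard error.
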

Table 4. Weights of the first-order constructs on the second-order constructs

Furthermore, it is necessary to assess nomological validity at the second-order construct level. This validity is manifested in the magnitude and significance of the relationships between the second-order formative constructs and the other constructs in the model (Henseler et al., 2009). The results in Table indicate significant relationships between the second-order formative constructs in this study and the other constructs in the model, which means nomological validity has been established.

Table 5. Hypotheses tests and effect size results

5.3. Evaluation of the structural model (inner model)

Once the outer model has been shown to be reliable and valid, the inner model estimates should be examined to test the hypothesized relationships among the constructs (Hair et al., 2012; J. F. Hair et al., 2016). PLS-SEM differs from CB-SEM, so the goodness-of-fit measures of CB-SEM are not fully transferable to PLS-SEM. The inner model in this study was therefore evaluated following Chin (1998a, 1998b), Henseler et al. (2009), and J. F. Hair et al. (2016) by assessing the f² and Q² effect sizes. In addition, the standardized path coefficients and their significance levels, obtained from bootstrapping with 5,000 resamples, allow the proposed hypotheses to be tested.

Many researchers rely on R² to examine the explained variance of the endogenous constructs. However, because the second-order constructs in this model use repeated indicators, the variance of each second-order construct is perfectly explained by its first-order constructs, so its R² equals 1. Therefore, another measure is used, Stone-Geisser’s Q² (Geisser, 1975; Stone, 1974). In PLS-SEM, this measure is obtained through a blindfolding procedure, which omits part of the data and then tries to estimate the omitted part using the estimated parameters (Vinzi et al., 2010). In this study, the researchers used the SmartPLS blindfolding feature with an omission distance of 7. This value follows the recommendation by Chin (1998a) and Henseler et al. (2012) that the omission distance should be between 5 and 10. If Q² > 0, the model has predictive relevance; if Q² < 0, it lacks predictive relevance (Henseler et al., 2009; Vinzi et al., 2010).
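The blindfolding idea can be illustrated with a simplified sketch: every seventh data point is omitted in turn, the model is fitted on the remaining points, the omitted points are predicted, and Q² is computed as 1 − SSE/SSO, where SSE is the squared prediction error of the model and SSO is the squared error of a trivial mean prediction. This is only an approximation of the procedure under strong assumptions: a one-predictor least-squares line stands in for the PLS path model, and the data are synthetic. It is not the SmartPLS implementation.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / \
        sum((xi - mx) ** 2 for xi in x)
    return my - b * mx, b


def q_squared(x, y, omission_distance=7):
    """Blindfolding-style Q^2 = 1 - SSE / SSO."""
    n = len(y)
    sse = sso = 0.0
    for start in range(omission_distance):
        # Omit every d-th point, beginning at `start`
        omitted = set(range(start, n, omission_distance))
        kept = [i for i in range(n) if i not in omitted]
        x_kept = [x[i] for i in kept]
        y_kept = [y[i] for i in kept]
        a, b = fit_line(x_kept, y_kept)
        y_mean = mean(y_kept)
        for i in omitted:
            sse += (y[i] - (a + b * x[i])) ** 2   # model prediction error
            sso += (y[i] - y_mean) ** 2           # mean-replacement error
    return 1.0 - sse / sso


# Synthetic, perfectly linear data: the omitted points are fully predictable
x = [float(v) for v in range(20)]
y = [2.0 * v + 1.0 for v in x]
print(f"Q2 = {q_squared(x, y):.3f}")
```

Across the seven rounds each data point is omitted exactly once, which is what the omission distance controls.
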

Besides Q², numerous scholars (Henseler et al., 2009; J. F. Hair et al., 2016; Ringle et al., 2020) also recommend evaluating the effect size of each path using f² (Cohen’s effect size; Cohen, 2013). Values between 0.02 and 0.15, between 0.15 and 0.35, and over 0.35 represent small, medium, and large effect sizes, respectively (Henseler et al., 2012; Vinzi et al., 2010). These three levels can be applied to Q² as well (Henseler et al., 2009).
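As a small illustration, Cohen’s f² for a path can be computed from the R² of the endogenous construct with and without the predictor in question, and then classified with the thresholds quoted above. The R² values below are invented for the example, and the label "negligible" for values under 0.02 is an assumption added here, since the text does not name that range.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f^2 for one predictor: change in R^2 relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def effect_size_label(value: float) -> str:
    """Classify an f^2 (or Q^2 effect size) with the thresholds in the text."""
    if value >= 0.35:
        return "large"
    if value >= 0.15:
        return "medium"
    if value >= 0.02:
        return "small"
    return "negligible"  # label assumed here; not named in the text


# Invented example: R^2 drops from 0.50 to 0.40 when the predictor is removed
f2 = f_squared(0.50, 0.40)
print(f"f2 = {f2:.2f} ({effect_size_label(f2)})")
```
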

Table presents the path coefficients and the significance levels. The direct effects show that self-regulated learning has a stronger effect on course satisfaction (β = 0.51, p < 0.001) than digital literacy does (β = 0.24, p < 0.001). Course satisfaction has a significant effect on academic performance (β = 0.11, p < 0.01). Thus, hypotheses 1, 2, and 3 are supported. Regarding the indirect effects on academic performance through course satisfaction, both self-regulated learning (β = 0.05, p < 0.05) and digital literacy (β = 0.03, p < 0.05) have significant effects. Therefore, hypotheses 4 and 5 are supported. The f² effect sizes in Table also show that the path from self-regulated learning to course satisfaction has a medium effect size, while the paths from digital literacy to course satisfaction and from course satisfaction to academic performance have small effect sizes. The results in Table also indicate that the Q² values of the constructs in the model are adequate: self-regulated learning and digital literacy have large predictive relevance, while course satisfaction has medium predictive relevance.
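The reported indirect effects can be cross-checked with the usual product-of-coefficients rule for a simple mediation chain, in which the indirect effect equals the path into the mediator multiplied by the path out of it. The worked arithmetic below uses the rounded coefficients reported above, so it can match the reported indirect effects only up to rounding; the subscripts are labels chosen here for illustration.

```latex
% Indirect effect = (predictor -> course satisfaction) x (course satisfaction -> academic performance)
\begin{align*}
  \beta_{\mathrm{SRL} \to \mathrm{CS} \to \mathrm{AP}}
    &= \beta_{\mathrm{SRL} \to \mathrm{CS}} \times \beta_{\mathrm{CS} \to \mathrm{AP}}
     = 0.51 \times 0.11 = 0.0561 \\
  \beta_{\mathrm{DL} \to \mathrm{CS} \to \mathrm{AP}}
    &= \beta_{\mathrm{DL} \to \mathrm{CS}} \times \beta_{\mathrm{CS} \to \mathrm{AP}}
     = 0.24 \times 0.11 = 0.0264
\end{align*}
```

These products are close to the reported values of 0.05 and 0.03; the small gap for self-regulated learning is plausibly due to the two-decimal rounding of the input coefficients.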

6. Discussion and implications

This study aimed to explore how self-regulated learning, digital literacy, and course satisfaction shaped academic performance in online learning settings during the COVID-19 pandemic in Indonesia. Based on the literature review, the authors hypothesized that both self-regulated learning and digital literacy directly affect course satisfaction, and that course satisfaction directly influences students’ academic performance. Furthermore, course satisfaction was hypothesized to mediate the relationships of self-regulated learning and digital literacy with students’ academic performance.

Our results show that self-regulated learning positively affects students’ course satisfaction. This finding supports Kuo et al. (2014), who found that interaction, internet self-efficacy, and self-regulated learning are predictors of student satisfaction in online education courses. In the setting of Massive Open Online Courses (MOOCs), Li (2019) reported a similar result: self-regulated learning strategy was a key variable in students’ course satisfaction. The empirical evidence in this study also shows that digital literacy predicts students’ course satisfaction. This finding corroborates Eshet-Alkalai (2004), who stated that digital literacy is a survival skill in the digital era, including in the learning process. Understanding how to use digital tools in learning is therefore crucial. Students without adequate digital literacy may experience distress when using technology, known as technostress, which decreases the academic productivity of university students (Upadhyaya, 2021). Another important finding is that course satisfaction positively affects students’ academic performance. This matches recent studies: Bossman and Agyei (2022) showed that the technology and instructor dimensions and e-learning satisfaction influence the academic performance of distance students in Ghana.

The mediating effects of course satisfaction found in our study suggest that self-regulated learning does not lead straightforwardly to higher academic performance but does so through course satisfaction. The same applies to digital literacy. These results suggest that nurturing digital literacy and self-regulated learning raises students’ course satisfaction, which in turn positively affects academic performance. They support other scholars’ view that course satisfaction plays a crucial mediating role between variables. For example, Ko and Chung (2014) found that student satisfaction mediates the relationship between teachers’ teaching quality and students’ academic performance. Nye et al. (2021) reported a similar pattern.

The present results are significant in at least two major respects. First, in the sample and setting of this study, digital literacy and self-regulated learning do not act as stand-alone variables that affect students’ academic performance; a high level of course satisfaction is needed first so that academic performance can be fostered. Second, during online learning, self-regulated learning was the biggest predictor of students’ academic performance. Successful online learning therefore requires a high level of self-regulated learning from students. Practically speaking, in a community of students with low self-regulated learning, the authors doubt that online learning can run smoothly or achieve the expected outcome.

These findings may help us understand the online learning situation in Indonesia. Although digital literacy was only the second biggest predictor of academic performance, its coefficient was still high, which means digital literacy is important. However, Indonesia is a country with high inequality in many education indicators, let alone in socioeconomic status (Ikeda & Echazarra, 2021; OECD, 2018). Is it possible to have a high level of digital literacy when many Indonesian children do not have access to a digital device at home? Again, we doubt it, unless the government can guarantee access to digital devices. With regard to self-regulated learning, this study has important implications for using or developing teaching strategies that foster students’ self-regulated learning. Dignath et al. (2008) suggest using self-regulated learning training programs, which proved to be effective even at the primary school level. Educators therefore need to realize that self-regulated learning is more a matter of nurture than of nature. They cannot merely teach students to understand the subject matter; they also need to stimulate students’ self-regulated learning.

Although COVID-19 is still present, teaching and learning in Indonesia have started to return to schools and campuses, so online learning is no longer fully implemented. Many parties think that online learning should continue in order to reduce the number of transmissions and deaths caused by COVID-19. This study gives a glimpse that online learning can be implemented successfully, but only with a high level of caution from every stakeholder involved, so that the noble ideals being pursued do not instead plunge students into the abyss of ignorance. This study suggests that the government and educational policymakers move the learning process from the “virtual world” back to the actual classroom as soon as possible, especially in Indonesia, where online learning readiness is low (Afrianti & Aditia, 2020). If online learning really needs to be conducted, a blended learning platform is preferable to fully online learning because blended learning combines the benefits of both worlds (Dziuban et al., 2018); even so, its implementation needs an enormous amount of caution. As the findings of this study indicate, academic performance in an online environment depends heavily on digital literacy. If students cannot use digital devices well, educators should not rely on technological devices to deliver learning.

As a closing statement, and to keep us from malpractice in education, we need to take to heart what the distinguished pedagogy scholar Paulo Freire said: “We have methods to approach the content, methods to make us get closer to the learners. Some methods of approaching students can in fact push us very far from the student.” (Horton & Freire, 1990).

Acknowledgements

Not Applicable

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abuhassna, H., Al-Rahmi, W. M., Yahya, N., Zakaria, M. A. Z. M., Kosnin, A. B. M., & Darwish, M. (2020). Development of a new model on utilizing online learning platforms to improve students’ academic achievements and satisfaction. International Journal of Educational Technology in Higher Education, 17(1), 1–23. https://doi.org/10.1186/s41239-020-00216-z

- Afrianti, N., & Aditia, R. (2020). Online Learning Readiness in Facing the Covid-19 Pandemic at MTS Manunggal Sagara Ilmi, Deli Serdang, Indonesia. Journal of International Conference Proceedings (JICP), 3(2), 59–66.

- Ahmed, S. T., & Roche, T. (2021). Making the connection: Examining the relationship between undergraduate students’ digital literacy and academic success in an English medium instruction (EMI) university. Education and Information Technologies, 26(4), 4601–4620. https://doi.org/10.1007/s10639-021-10443-0

- Al-Htaybat, K., von Alberti-Alhtaybat, L., & Alhatabat, Z. (2018). Educating digital natives for the future: Accounting educators’ evaluation of the accounting curriculum. Accounting Education, 1(1), 1–25. https://doi.org/10.1080/09639284.2018.1437758

- Andreev, P., Heart, T., Maoz, H., & Pliskin, N. (2009). Validating Formative Partial Least Squares (PLS) Models: Methodological Review and Empirical Illustration. ICIS 2009 Proceedings. https://aisel.aisnet.org/icis2009/193

- Bail, F. T., Zhang, S., & Tachiyama, G. T. (2008). Effects of a self-regulated learning course on the academic performance and graduation rate of college students in an academic support program. Journal of College Reading and Learning, 39(1), 54–73. https://doi.org/10.1080/10790195.2008.10850312

- Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall, Inc.

- Barnard, L., Lan, W. Y., To, Y. M., Paton, V. O., & Lai, S. L. (2009). Measuring self-regulation in online and blended learning environments. Internet and Higher Education, 12(1), 1–6. https://doi.org/10.1016/j.iheduc.2008.10.005

- Bawden, D. (2001). Information and digital literacies: A review of concepts. Journal of Documentation, 57(2), 218–259. https://doi.org/10.1108/EUM0000000007083

- Bawden, D. (2008). Origins and Concepts of Digital Literacy. Digital Literacies: Concepts, Policies and Practices, Peter Lang.

- Becker, J. M., Klein, K., & Wetzels, M. (2012). Hierarchical Latent Variable Models in PLS-SEM: Guidelines for Using Reflective-Formative Type Models. Long Range Planning, 45(5–6), 359–394. https://doi.org/10.1016/j.lrp.2012.10.001

- Blanz, M. (2014). How do study satisfaction and academic performance interrelate? An investigation with students of Social Work programs. European Journal of Social Work, 17(2), 281–292. https://doi.org/10.1080/13691457.2013.784190

- Bossman, A., & Agyei, S. K. (2022). Technology and instructor dimensions, e-learning satisfaction, and academic performance of distance students in Ghana. Heliyon, 8(4), e09200. https://doi.org/10.1016/j.heliyon.2022.e09200

- Buckingham, D. (2015). Defining digital literacy-What do young people need to know about digital media? Nordic Journal of Digital Literacy, 10(Jubileumsnummer), 21–35. https://doi.org/10.18261/1891-943X-2015-Jubileumsnummer-03

- Burton, L. J., Summers, J., Lawrence, J., & Noble, K. (2015). Digital Literacy in Higher Education: The Rhetoric and the Reality. https://doi.org/10.1057/9781137476982_9

- Casey, L., & Bruce, B. C. (2011). The practice profile of inquiry: Connecting digital literacy and pedagogy. E-Learning and Digital Media, 8(1), 76–85. https://doi.org/10.2304/elea.2011.8.1.76

- Chang, S.-H.-H., & Smith, R. A. (2008). Effectiveness of personal interaction in a learner-centered paradigm distance education class based on student satisfaction. Journal of Research on Technology in Education, 40(4), 407–426. https://doi.org/10.1080/15391523.2008.10782514

- Chin, W. W. (1998a). Commentary: Issues and opinion on structural equation modeling. MIS Quarterly, 22(1), vii–xvi. https://www.jstor.org/stable/249674

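- Chin, W. W. (1998b). The partial least squares approach to structural equation modeling. In G. A. Marcoulides (Ed.), Modern methods for business research (pp. 295–336). Psychology Press.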

- Chisholm, J. F. (2006). Cyberspace violence against girls and adolescent females. Annals of the New York Academy of Sciences, 1087(1), 74–89. https://doi.org/10.1196/annals.1385.022

- Ciavolino, E., & Nitti, M. (2013). Using the hybrid two-step estimation approach for the identification of second-order latent variable models. Journal of Applied Statistics, 40(3), 508–526. https://doi.org/10.1080/02664763.2012.745837

- Cohen, J. (2013). Statistical power analysis for the behavioral sciences. Academic press.

- Crawford, J., Henderson, K. B., Rudolph, J., Malkawi, B., Glowatz, M., Burton, R., Magni, P. A., & Lam, S. (2020). COVID-19: 20 countries’ higher education intra-period digital pedagogy responses. Journal of Applied Learning & Teaching, 3(1), 1–20. https://doi.org/10.37074/jalt.2020.3.1.7

- Creswell, J. W. (2012). Educational research: Planning, conducting and evaluating (4th ed.). Pearson.

- Dignath, C., Buettner, G., & Langfeldt, H. P. (2008). How can primary school students learn self-regulated learning strategies most effectively? A meta-analysis on self-regulation training programmes. Educational Research Review, 3(2), 101–129. https://doi.org/10.1016/j.edurev.2008.02.003

- Dong, C., Cao, S., & Li, H. (2020). Young children’s online learning during COVID-19 pandemic: Chinese parents’ beliefs and attitudes. Children and Youth Services Review, 118, 105440. https://doi.org/10.1016/j.childyouth.2020.105440

- Dziuban, C., Graham, C. R., Moskal, P. D., Norberg, A., & Sicilia, N. (2018). Blended learning: The new normal and emerging technologies. International Journal of Educational Technology in Higher Education, 15(1), 1–16. https://doi.org/10.1186/s41239-017-0087-5

- Elvers, G. C., Polzella, D. J., & Graetz, K. (2003). Procrastination in Online Courses: Performance and Attitudinal Differences. Teaching of Psychology, 30(2), 159–162. https://doi.org/10.1207/S15328023TOP3002_13

- Eshet-Alkalai, Y. (2004). Digital Literacy: A Conceptual Framework for Survival Skills in the Digital era. Journal of Educational Multimedia and Hypermedia, 13, 93–106. https://www.learntechlib.org/primary/p/4793/

- Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G* Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146

- Fernández-Villavicencio, N. G. (2012). Alfabetización para una cultura social, digital, mediática y en red. Revista Española de Documentación Científica, 35, 17–45. https://doi.org/10.3989/redc.2012.mono.976

- Fornell, C., & Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement error: Algebra and statistics. Journal of Marketing Research, 18(3), 382–388.

- Frey, A., Faul, A., & Yankelov, P. (2003). Student perceptions of web-assisted teaching strategies. Journal of Social Work Education, 39(3), 443–457. https://doi.org/10.1080/10437797.2003.10779148

- Geisser, S. (1975). The predictive sample reuse method with applications. Journal of the American Statistical Association, 70(350), 320–328. https://doi.org/10.1080/01621459.1975.10479865

- Gilster, P. (1997). Digital Literacy. Wiley & Sons.

- Gonzalez-Nucamendi, A., Noguez, J., Neri, L., Robledo-Rella, V., García-Castelán, R. M. G., & Escobar-Castillejos, D. (2021). The prediction of academic performance using engineering student’s profiles. Computers & Electrical Engineering, 93, 107288. https://doi.org/10.1016/j.compeleceng.2021.107288

- Götz, O., Liehr-Gobbers, K., & Krafft, M. (2010). Evaluation of structural equation models using the partial least squares (PLS) approach. In V. E. Vinzi, W. W. Chin, & H. Wang (Eds.), Handbook of partial least squares (pp. 691–711). Springer.

- Hair, J. F., Hult, G. T. M., Ringle, C., & Sarstedt, M. (2016). A primer on partial least squares structural equation modeling (PLS-SEM). Sage publications.

- Hair, J. F., Ringle, C. M., & Sarstedt, M. (2011). PLS-SEM: Indeed a silver bullet. Journal of Marketing Theory and Practice, 19(2), 139–152. https://doi.org/10.2753/MTP1069-6679190202

- Hair, J. F., Sarstedt, M., Ringle, C. M., & Mena, J. A. (2012). An assessment of the use of partial least squares structural equation modeling in marketing research. Journal of the Academy of Marketing Science, 40(3), 414–433. https://doi.org/10.1007/s11747-011-0261-6

- Hanus, M. D., & Fox, J. (2015). Assessing the effects of gamification in the classroom: A longitudinal study on intrinsic motivation, social comparison, satisfaction, effort, and academic performance. Computers & Education, 80, 152–161. https://doi.org/10.1016/j.compedu.2014.08.019

- Henseler, J., Hubona, G., & Ray, P. A. (2016). Using PLS path modeling in new technology research: Updated guidelines. Industrial Management and Data Systems, 116(1), 2–20. https://doi.org/10.1108/IMDS-09-2015-0382

- Henseler, J., Ringle, C. M., & Sarstedt, M. (2012). Using partial least squares path modeling in advertising research: Basic concepts and recent issues. In S. Okazaki (Ed.), Handbook of research on international advertising. Edward Elgar Publishing. https://doi.org/10.4337/9781781001042

- Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43(1), 115–135. https://doi.org/10.1007/s11747-014-0403-8

- Henseler, J., Ringle, C. M., & Sinkovics, R. R. (2009). The use of partial least squares path modeling in international marketing. New challenges to international marketing, 20, 277–319. https://doi.org/10.1108/S1474-7979(2009)0000020014

- Hockly, N. (2012). Digital literacies. ELT Journal, 66(1), 108–112. https://doi.org/10.1093/elt/ccr077

- Hong, J. C., Lee, Y. F., & Ye, J. H. (2021). Procrastination predicts online self-regulated learning and online learning ineffectiveness during the coronavirus lockdown. Personality and Individual Differences, 174, 110673. https://doi.org/10.1016/j.paid.2021.110673

- Horton, M., & Freire, P. (1990). We make the road by walking: Conversations on education and social change. Temple University Press.

- Ikeda, M., & Echazarra, A. (2021). How socio-economics plays into students learning on their own: Clues to COVID-19 learning losses. Pisa in Focus, 114, 1–7. https://doi.org/10.1787/2417eaa1-en

- Jarvis, C. B., MacKenzie, S. B., & Podsakoff, P. M. (2003). A critical review of construct indicators and measurement model misspecification in marketing and consumer research. Journal of Consumer Research, 30(2), 199–218. https://doi.org/10.1086/376806

- Ko, W.-H., & Chung, F.-M. (2014). Teaching Quality, Learning Satisfaction, and Academic Performance among Hospitality Students in Taiwan. World Journal of Education, 4(5), 11–20. https://doi.org/10.5430/wje.v4n5p11

- Kuo, Y. C., Walker, A. E., Schroder, K. E. E., & Belland, B. R. (2014). Interaction, Internet self-efficacy, and self-regulated learning as predictors of student satisfaction in online education courses. Internet and Higher Education, 20, 35–50. https://doi.org/10.1016/j.iheduc.2013.10.001

- Lajoie, S. P., & Azevedo, R. (2006). Teaching and learning in technology-rich environments. Lawrence Erlbaum Associates Publishers.

- Lan, W. Y. (1996). The effects of self-monitoring on students’ course performance, use of learning strategies, attitude, self-judgment ability, and knowledge representation. Journal of Experimental Education, 64(2), 101–115. https://doi.org/10.1080/00220973.1996.9943798

- Lee, T.-H., Shen, P.-D., & Tsai, C.-W. (2008). Applying web-enabled problem-based learning and self-regulated learning to add value to computing education in Taiwan’s vocational schools. Journal of Educational Technology & Society, 11(3), 13–25. https://www.jstor.org/stable/jeductechsoci.11.3.13

- Leung, L., & Lee, P. S. N. (2012). Impact of Internet Literacy, Internet Addiction Symptoms, and Internet Activities on Academic Performance. Social Science Computer Review, 30(4), 403–418. https://doi.org/10.1177/0894439311435217

- Levy, Y., & Ramim, M. (2012). [Chais] A Study of Online Exams Procrastination Using Data Analytics Techniques. Interdisciplinary Journal of E-Learning and Learning Objects, 8(1), 97–113. https://www.learntechlib.org/p/180892/

- Li, K. (2019). MOOC learners’ demographics, self-regulated learning strategy, perceived learning and satisfaction: A structural equation modeling approach. Computers & Education, 132, 16–30. https://doi.org/10.1016/j.compedu.2019.01.003

- Lohmöller, J.-B. (2013). Latent variable path modeling with partial least squares. Physica Heidelberg.

- Lucieer, S. M., Jonker, L., Visscher, C., Rikers, R. M. J. P., & Themmen, A. P. N. (2016). Self-regulated learning and academic performance in medical education. Medical Teacher, 38(6), 585–593. https://doi.org/10.3109/0142159X.2015.1073240

- Marks, R. B., Sibley, S. D., & Arbaugh, J. B. (2005). A structural equation model of predictors for effective online learning. Journal of Management Education, 29(4). https://doi.org/10.1177/1052562904271199

- Martin, A. (2005). DigEuLit–a European framework for digital literacy: A progress report. Journal of ELiteracy, 2(2), 130–136. https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=7e9ba99227c94e610b9ff2a6ee08c187c179dc25

- Michinov, N., Brunot, S., Le Bohec, O., Juhel, J., & Delaval, M. (2011). Procrastination, participation, and performance in online learning environments. Computers & Education, 56(1), 243–252. https://doi.org/10.1016/j.compedu.2010.07.025

- Miranda, P., Isaias, P., & Pifano, S. (2018). Digital Literacy in Higher Education: A Survey on Students’ Self-assessment. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 10925(LNCS), 71–87. https://doi.org/10.1007/978-3-319-91152-6_6

- Mohammadyari, S., & Singh, H. (2015). Understanding the effect of e-learning on individual performance: The role of digital literacy. Computers and Education, 82, 11–25. https://doi.org/10.1016/j.compedu.2014.10.025

- Nayak, J. K. (2018). Relationship among smartphone usage, addiction, academic performance and the moderating role of gender: A study of higher education students in India. Computers and Education, 123(May), 164–173. https://doi.org/10.1016/j.compedu.2018.05.007

- Nye, C. D., Prasad, J., & Rounds, J. (2021). The effects of vocational interests on motivation, satisfaction, and academic performance: Test of a mediated model. Journal of Vocational Behavior, 127, 103583. https://doi.org/10.1016/j.jvb.2021.103583

- OECD. (2018). Equity in Education. Pisa. OECD. https://doi.org/10.1787/9789264073234-en

- Orange, C. (1999). Using peer modeling to teach self-regulation. Journal of Experimental Education, 68(1), 21–39. https://psycnet.apa.org/doi/10.1080/00220979909598492

- Perry, V., Albeg, L., & Tung, C. (2012). Meta-analysis of single-case design research on self-regulatory interventions for academic performance. Journal of Behavioral Education, 21(3), 217–229. https://doi.org/10.1007/s10864-012-9156-y

- Petter, S., Straub, D., & Rai, A. (2007). Specifying formative constructs in information systems research. MIS Quarterly, 31(4), 623–656. https://doi.org/10.2307/25148814

- Prior, D. D., Mazanov, J., Meacheam, D., Heaslip, G., & Hanson, J. (2016). Attitude, digital literacy and self efficacy: Flow-on effects for online learning behavior. The Internet and Higher Education, 29, 91–97. https://doi.org/10.1016/j.iheduc.2016.01.001

- Puzziferro, M. (2008). Online technologies self-efficacy and self-regulated learning as predictors of final grade and satisfaction in college-level online courses. American Journal of Distance Education, 22(2), 72–89. https://doi.org/10.1080/08923640802039024

- Rashidi, N., & Moghadam, M. (2014). The Effect of Teachers’ Beliefs and Sense of Self-Efficacy on Iranian EFL Learners’ Satisfaction and Academic Achievement. Tesl-Ej, 18(2), 1–23. http://www.tesl-ej.org/pdf/ej70/a3.pdf

- Ringle, C. M., Sarstedt, M., Mitchell, R., & Gudergan, S. P. (2020). Partial least squares structural equation modeling in HRM research. The International Journal of Human Resource Management, 31(12), 1617–1643. https://doi.org/10.1080/09585192.2017.1416655

- Ringle, C. M., Sarstedt, M., & Straub, D. W. (2012). A critical look at the use of PLS-SEM in MIS quarterly. MIS Quarterly: Management Information Systems, 36(1), 1. https://doi.org/10.2307/41410402

- Ringle, C. M., Wende, S., & Becker, J.-M. (2015). SmartPLS 3.

- Rodríguez-de-Dios, I., & Igartua, J.-J. (2016). Skills of Digital Literacy to Address the Risks of Interactive Communication. Journal of Information Technology Research, 9(1), 54–64. https://doi.org/10.4018/JITR.2016010104

- Rodríguez-de-Dios, I., Igartua, J.-J., & González-Vázquez, A. (2016). Development and validation of a digital literacy scale for teenagers. Proceedings of the Fourth International Conference on Technological Ecosystems for Enhancing Multiculturality, 1067–1072.

- Samruayruen, B., Enriquez, J., Natakuatoong, O., & Samruayruen, K. (2013). Self-regulated learning: A key of a successful learner in online learning environments in Thailand. Journal of Educational Computing Research, 48(1), 45–69. https://doi.org/10.2190/EC.48.1.c

- Schunk, D. H., & Ertmer, P. A. (2000). Self-regulation and academic learning: Self-efficacy enhancing interventions. In M. Boekaerts, M. Zeidner, P. R. Pintrich (Eds.), Handbook of self-regulation (pp. 631–649). Elsevier.

- Siddiq, F., Gochyyev, P., & Wilson, M. (2017). Learning in Digital Networks – ICT literacy: A novel assessment of students’ 21st century skills. Computers and Education, 109, 11–37. https://doi.org/10.1016/j.compedu.2017.01.014

- Stone, M. (1974). Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society: Series B (Methodological), 36(2), 111–133. https://www.jstor.org/stable/2984809

- Topală, I. (2014). Attitudes towards Academic Learning and Learning Satisfaction in Adult Students. Procedia - Social and Behavioral Sciences, 142, 227–234. https://doi.org/10.1016/j.sbspro.2014.07.583

- Topala, I., & Tomozii, S. (2014). Learning satisfaction: Validity and reliability testing for students’ learning satisfaction questionnaire (SLSQ). Procedia - Social and Behavioral Sciences, 128, 380–386. https://doi.org/10.1016/j.sbspro.2014.03.175

- Tsai, M. J., & Tsai, C. C. (2010). Junior high school students’ Internet usage and self-efficacy: A re-examination of the gender gap. Computers and Education, 54(4), 1182–1192. https://doi.org/10.1016/j.compedu.2009.11.004

- Unsworth, L. (2005). E-literature for children: Enhancing digital literacy learning. Routledge.

- Upadhyaya, P. (2021). Impact of technostress on academic productivity of university students. Education and Information Technologies, 26(2), 1647–1664. https://doi.org/10.1007/s10639-020-10319-9

- Vinzi, V. E., Chin, W. W., Henseler, J., & Wang, H. (2010). Handbook of partial least squares (Vol. 201). Springer.

- Vrana, R. (2014). Digital literacy as a prerequisite for achieving good academic performance. Communications in Computer and Information Science, 492, 160–169. https://doi.org/10.1007/978-3-319-14136-7_17

- Wang, C. H., Shannon, D. M., & Ross, M. E. (2013). Students’ characteristics, self-regulated learning, technology self-efficacy, and course outcomes in online learning. Distance Education, 34(3), 302–323. https://doi.org/10.1080/01587919.2013.835779

- Watermeyer, R., Crick, T., & Knight, C. (2022). Digital disruption in the time of COVID-19: Learning technologists’ accounts of institutional barriers to online learning, teaching and assessment in UK universities. International Journal for Academic Development, 27(2), 148–162. https://doi.org/10.1080/1360144X.2021.1990064

- Wetzels, M., Odekerken-Schröder, G., & van Oppen, C. (2009). Using PLS path modeling for assessing hierarchical construct models: Guidelines and empirical illustration. MIS Quarterly, 33(1), 177–195. https://doi.org/10.2307/20650284

- Wold, H. (1982). Systems under Indirect Observation. North-Holland.

- Xiao, J., & Wilkins, S. (2015). The effects of lecturer commitment on student perceptions of teaching quality and student satisfaction in Chinese higher education. Journal of Higher Education Policy and Management, 37(1), 98–110. https://doi.org/10.1080/1360080X.2014.992092

- Zhu, Y., Au, W., & Yates, G. (2016). University students’ self-control and self-regulated learning in a blended course. The Internet and Higher Education, 30, 54–62. https://doi.org/10.1016/j.iheduc.2016.04.001

- Zimmerman, B. J. (2000). Attaining self-regulation: A social cognitive perspective. In M. Boekaerts, P. R. Pintrich, M. Zeidner (Eds.), Handbook of self-regulation (pp. 13–39). Elsevier.