Abstract

Artificial intelligence-based tools are rapidly transforming higher education, yet their impact on the practices that higher education institutions (HEIs) have adopted for continuous learning improvement remains largely unexplored, given the sparse literature and the lack of empirical experiments in undergraduate degree programs. Since the arrival of ChatGPT, a conversational artificial intelligence (AI) tool that uses a deep learning model to generate human-like text in response to a given input, it has become crucial for HEIs to understand the implications of AI-based tools for students’ learning outcomes, which are commonly measured using an assessment-based approach to improve program quality, teaching effectiveness, and other learning support. An empirical study was conducted to test ChatGPT’s capability of solving a variety of assignments (from courses at different levels of undergraduate degree programs) and to compare its performance with that of the highest-scoring student(s). Further, the ChatGPT-generated assignments were checked with the best-known plagiarism detection tools, namely Turnitin, GPTZero, and Copyleaks, to determine whether they could pass academic integrity tests. The study reports the limitations of the bot and highlights the implications of the newly launched ChatGPT for academia, which call for HEIs’ managers and regulators to revisit the practices they use to monitor students’ learning progress and improve their educational programs.

PUBLIC INTEREST STATEMENT

The recent arrival of the conversational artificial intelligence tool ChatGPT is widely regarded as a disruptive technology. On this premise, the researchers investigated whether ChatGPT can respond effectively to the variety of assessment tools that instructors use at the undergraduate level to develop students’ technical, problem-solving, critical thinking, and communication skills, among others, as part of the program learning outcomes. The findings confirmed that ChatGPT can easily solve a variety of assessments and that existing plagiarism detection software is still far from able to validate the academic integrity of students’ worked answers. The findings suggest that HEIs need to revisit both their current performance-based evaluation systems for monitoring students’ learning and development and their academic integrity policies. Instructors also need to rethink their current assessment tools and devise innovative techniques to ensure academic integrity among students.

1. Introduction

The use of artificial intelligence by students to improve their learning and performance is not new to the education sector (Du Boulay, 2016). Several artificial intelligence (AI)-assisted tools, such as learning management systems, online discussion boards, exam integrity tools, lecture transcription, and assistive chatbots, are used daily inside as well as outside the classroom to make the learning experience effective and exciting (Chai et al., 2021; Chassignol et al., 2018; Ciolacu et al., 2018). ChatGPT (Generative Pretrained Transformer) is a recent addition to this list. It is a language model developed by OpenAI that can understand natural language and generate human-like responses (Lund & Wang, 2023; Brown et al., 2020). It works as a smart conversational tool that can understand complex inputs and provide detailed, original, and accurate answers to the questions asked (Van Dis et al., 2023). It thus supports and hampers students’ learning at the same time, because students no longer need to put hours of effort into solving assignment questions on their own, the effort through which they would develop the skills necessary for future work roles.

Although most people use ChatGPT as an alternative to search engines or for creative writing, it can also write a student’s paper or computer code, critically analyze research writings, solve case studies, or perform any other writing task, such as composing emails, letters, or poetry, with or without instructions (Atlas, 2023). “It can be used in a variety of educational contexts, such as providing students with immediate feedback on their homework or helping them with research projects or to personalize the learning experience for each student by providing them with tailored responses based on their specific needs and abilities” (ChatGPT itself, in response to the question “what you can offer to the students?”). It has the potential to build on a conversation and provide holistic answers to follow-up questions based on the entire exchange (Bhattacharya et al., 2023). This conversational interface and dialogic nature give ChatGPT a marked advantage in user interpretability and allow users to gain a broader perspective on the concept under discussion (Gilson et al., 2023).

University professors continue to contend with the fact that students have access to ChatGPT, which can write anything from cookie recipes to computer code to relationship advice (Gordon, 2023; Stokel-Walker, 2022). In the absence of any tool capable of detecting AI-assisted writing or plagiarized work, universities and schools fear cheating and false claims of authorship by students (Ventayen, 2023), which opens the door to a discussion of the legitimacy of the performance evaluation models that higher educational systems use to determine students’ learning achievements and education quality.

Academic integrity issues are not new to academia, but the arrival of AI-powered chatbots, which answer questions in a few seconds in human-like language, has baffled academics across the world about their implications and ethical use (Flores-Vivar & García-Peñalvo, 2023; Gelman, 2023). Under existing practice in academia, the information gathered from students’ evaluations is used to shape strategies for learning improvement at each level of the educational system. In the classroom, teachers use this information to adjust their teaching approaches and strategies to meet students’ learning needs (CERI, 2008). Likewise, management teams use this information at the policy level to monitor students’ learning, provide support, and set priorities for improving learning outcomes at the institutional level. These efforts are regulated by the relevant government authorities, who stay vigilant about students’ learning and career development through enforced compliance mechanisms and regular audits of the educational systems. Learning outcomes have thus become central to education policy development, marking a paradigm shift in education toward a greater emphasis on student learning rather than on teaching alone (Aasen, 2012; Hopmann, 2008; Prøitz, 2015).

Student learning outcome information is useful to teachers, institutional management, and academic regulators for developing strategies to improve learning only if it truly reflects students’ performance in the course (Prøitz, 2015; Shepard, 2007). If the assignments and reports that students complete during the instruction period are AI-written but still satisfy academic integrity criteria, existing performance evaluation approaches are no longer adequate for assessing students’ learning outcomes, whether at the course, program, or institutional level, forcing teachers, educational institutions, and academic regulators to revisit their approach to managing students’ continuous learning and development. As Jeff Maggioncalda, CEO of Coursera, states, “people using ChatGPT will not only outsource writing but also the thinking”, and outsourcing critical thinking to technology leaves people vulnerable to manipulation and misinformation and is harmful to society as a whole (HR Observer, 2023).

Since the launch of ChatGPT, educational institutions, as custodians of knowledge creation and knowledge sharing, have been unsure how to respond to this new tool: on the one hand, we promote the use of technology for learning new information; on the other hand, we must be ethical, responsible, and cautious (Gelman, 2023). Emirates-based higher educational institutions face the same question, and their regulators have advised them to embrace AI-based tools and explore how they can be used safely instead of blocking them (see García-Peñalvo, 2023; Telecom Review, 2023). Emirates institutions have started embracing the new technology (Badam, 2023) and offering free training programs to students in smart learning (see Hamdan Bin Mohammed Smart University, Dubai). It is imperative to identify the impact of this tool on the knowledge sector (Krugman, 2022) before the technology penetrates deeper into the region and word spreads among students who are yet to be trained in the ethical use of ChatGPT in the learning process (Van Dis et al., 2023).

This matter is timely and significant because our current performance evaluation model, which ensures students’ learning outcomes at the course, program, and university levels through an assortment of tools (whether in traditional classroom settings or using online applications), runs the risk of awarding a high grade point average (GPA) to everyone and confirming the achievement of their respective program learning outcomes simply on the basis of timely submission of work or presence in class, which might not truly reflect students’ knowledge and mastery of the course or field. Negative word of mouth in the market about low-quality graduates can inevitably damage educational institutions’ credibility (Altbach, 2004) and may raise questions about whether they are still needed to prepare the future workforce or entrepreneurs.

1.1. Study Objectives

This article presents a case study using an experiment-based approach to observe whether ChatGPT can provide fully developed solutions to the assortment of assessment tools used for developing students’ skills in critical thinking, problem solving, communication, knowledge application, research, teamwork, ethics, and so on, all of which are critical for their professional and career development. It compares the solutions provided by ChatGPT with the worked answers of the highest-scoring students to determine the difference in performance between the two, and further assesses whether the tools commonly used to check academic integrity can validate authorship and determine whether the answers are AI-generated. The current study fills an existing gap in the field by investigating the potential consequences of students relying too heavily on chatbots for learning purposes, so that universities can take timely action in preparing effective guidelines and policies to facilitate the appropriate and ethical use of this new technology in the education sector (Tlili et al., 2023). The study contributes by determining a) ChatGPT’s capability of responding effectively to the assortment of tools used for assessing students’ learning outcomes at the undergraduate level; b) ChatGPT’s capability of passing academic integrity thresholds; and c) the inability of the existing performance evaluation model adopted by educational systems to monitor and ensure that students are developing their program-level skills in an AI-fueled learning environment. In essence, it poses the important question of whether ChatGPT supports students in learning or hampers their critical thinking, problem-solving, and writing skills, leaving them less prepared for future roles in the industry. To the best of our knowledge, no such investigation has been conducted in the region at the higher education level. The research findings will add to the knowledge domain of AI-assisted learning technologies in the educational sector (Atlas, 2023; Van Dis et al., 2023).

The next section elaborates on prior literature, followed by the study methodology, experimental findings, and discussion of the implications of the findings. This study concludes with its limitations and directions for future research.

1.2. Performance Monitoring Evaluation Models Adopted by Higher Educational Institutions (HEIs)

The performance monitoring evaluation model (Gallegos, 1994) adopted by HEIs aids the review of students’ learning outcomes against a defined set of standards and helps identify the strengths and weaknesses of learners, which can be used as feedback to improve their performance (Kluger & DeNisi, 1996; Rheinberg et al., 2000). This puts student learning at the heart of the evaluation approach adopted in educational systems as a foundation for continuous improvement. Evidence of students’ learning outcomes at the course or program level leads to transformational changes in ongoing institutional support and teaching practices, and to advances in students’ learning outcomes in response to the areas of weakness identified (Mason & Calnin, 2020), so that progress in student learning and development continues (Cambridge Assessment International Education, 2021). As CERI (2008) reinforced, establishing learning goals and tracking students’ progress towards those goals makes the learning process transparent and builds students’ confidence.

Within higher educational systems, in each instructional period, students enrolled in different courses work on different activities (in-class and out-of-class) to demonstrate their knowledge and skills through varied work designed to meet the needs of a variety of students (OECD, 2002, 2003). Various assessments help to determine students’ ability to transfer learning to different situations. The instructor evaluates the students’ performance against established criteria, which outline the requirements and expectations of the assignments (usually explained in advance) or exams, to correct and deepen their understanding (CERI, 2008). This performance assessment helps to evaluate students’ learning outcomes in relation to course-level learning goals and to develop their self-esteem, intrinsic motivation, and so on (Trautwein & Köller, 2003), by providing timely feedback and adjusting teaching strategies as well as course quality (Parker, 2013). Students’ achievement of the learning outcomes of each course confirms that they have built the adequate knowledge base and skills mastery expected as program learning outcomes.

The program learning outcomes essentially guide the development of the skills most in demand by local and global industry. Technical skills are not the only criterion for students’ success in their future professional roles; soft skills, such as communication, teamwork, leadership, research, critical thinking, problem solving, and other related interpersonal skills, are important in each program’s learning outcomes to prepare students for their future work roles (Shuman et al., 2005; Willmot & Colman, 2016). In a Wall Street Journal survey of 900 executives, 92% reported that soft skills, such as communication, critical thinking, and curiosity, are as important as technical skills and mostly in short supply (Ashford, 2019; Davidson, 2016). While technical skills are essential for securing a job, it is the right mix of soft skills that ultimately drives career advancement and growth in tight labor markets with heated competition (Willmot & Perkin, 2011). In addition, individuals do not work in isolation; they continually work in diverse teams and collaborate with others on multidisciplinary projects, and the overall success of a project depends on these skills (Deepa & Seth, 2013).

These soft skills are developed through practice-oriented activities conducted during the instructional period of the program (Willmot & Colman, 2016), such as oral presentations, group projects, creative writing, problem-solving activities, critical thinking exercises, case analysis, data analysis, and research work, among many others. These activities are the ones most commonly used in higher education to develop students’ soft skills along with technical skills, which are measured as program learning outcomes to verify that students are sufficiently prepared in their respective programs for success in their work roles. While developing these skills, the importance of effort cannot be neglected (Ames, 1992).

Technology has long played an important role in providing learning platforms to increase students’ engagement and learning. Recently, a new name has been added to the list of artificial intelligence (AI)-assisted tools available to students for their learning. ChatGPT is a new tool, and research is ongoing to understand its strengths and weaknesses. The literature on the strengths of ChatGPT as an AI-assisted learning tool is discussed in the next section.

1.3. ChatGPT in Academia

Launched on 30 November 2022, ChatGPT gained 1 million users in less than a week after its public release (Jooris, 2023) and had reached up to 100 million users as of 2 February 2023 (Reuters, 2023). GPT is built on the “Transformer architecture” model. The Transformer architecture, first introduced by Google researchers in 2017, is a neural network based on an attention mechanism (Vaswani et al., 2017). It can handle long-range dependencies in sequences of data, which makes it a strong choice for natural language processing and computer vision.
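
For readers unfamiliar with the attention mechanism at the heart of the Transformer, the following is a minimal sketch of scaled dot-product attention, the core operation described by Vaswani et al. (2017). It is a toy illustration in plain Python under simplifying assumptions (a single head, no learned projection matrices, tiny hand-written vectors); the function names are hypothetical, and it does not represent ChatGPT's actual implementation.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Illustrative inputs (assumed): one query that matches the first key best.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(queries, keys, values))
```

Because every position can attend to every other position in a single step, the weights are not limited by the distance between two words, which is one way to see why the architecture copes well with long-range dependencies.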

The underlying model has more than 175 billion parameters and is consistently updated with new data (Gilson et al., 2022). To train ChatGPT, an unsupervised learning process was used: the model was given existing data as input without explicit instructions on what the correct output should be. This process allowed ChatGPT to adapt to new situations without the need for manual programming or rule writing. During training, the model was refined using a “language modeling” technique, in which it learns to predict the next word in a sequence of text. At its core, ChatGPT utilizes a combination of advanced AI models and techniques, such as multi-layered transformer networks, attention mechanisms, and adaptive computation, to achieve its results (Campello de Souza et al., 2023).

This combination of advanced AI models enables AI chatbots to achieve high-level performance and to excel in generating human-like text, recognizing patterns, correcting errors, debugging code, and generalizing information (Surameery & Shakor, 2023). ChatGPT is a large language model that uses machine learning to answer questions by drawing on huge datasets. Ryan Watkins, Professor of Educational Technology at George Washington University, said that ChatGPT works like autocorrect on a cell phone but is 100 times more powerful, having been trained on billions of pieces of information available on the Internet (Rivera, 2023). Unlike other natural language processing tools that extract text from existing materials, ChatGPT uses a complex neural network model to generate text by predicting the most probable next word.
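
The idea of generating text by predicting the next word from probabilities can be illustrated with a deliberately tiny example. The sketch below is not how ChatGPT works internally (it uses a neural network rather than word counts); it is a simple bigram model, with a made-up corpus and hypothetical function names, that estimates the probability of each next word from how often it followed the current word.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(model, word):
    """Convert follower counts for a word into probabilities."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {w: c / total for w, c in followers.items()}


def predict_next(model, word):
    """Return the most probable next word, or None for an unseen word."""
    probs = next_word_probabilities(model, word)
    return max(probs, key=probs.get) if probs else None


# Made-up training text, for illustration only.
corpus = (
    "students learn skills . students learn theories . "
    "students write reports ."
)
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "students"))
print(predict_next(model, "students"))
```

A large language model replaces the count table with billions of learned parameters and conditions on the whole preceding context rather than a single word, but the output is still a probability distribution over possible next words.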

Using ChatGPT, one may feel that one is interacting with a domain expert who can understand complex composite queries and answer accordingly. Its dialogic nature and conversational capability separate it from previous models and make it an effective educational tool (Gilson et al., 2023). As Pavlik (2023) reported, ChatGPT arguably has the potential to pass the Turing test, proposed by Turing (1950), in which a human conversing interactively cannot tell whether he or she is interacting with a machine or a person. However, its information is limited to data up to 2021 because it was trained on data that did not include information created after that year. On the other hand, Jenna Lyle, the deputy press secretary for New York public schools, said in an interview with the Associated Press that because the tool provides quick answers to questions, it does not build critical thinking and problem-solving skills and thus negatively affects student learning and lifelong success (Korn & Kelly, 2023; Novak, 2023).

1.4. Academic integrity—A must have

Unethical behavior and cheating run rampant in society, and higher educational institutions are not immune. McCabe and Trevino (1996) reported, in a study based on 31 colleges and 60,000 students, that one in three students engaged in academic misconduct and fraud. In another study, McCabe and Pavela (1993) shared that more than half of all undergraduate students cheat, a finding confirmed by Nonis and Swift (2001). Likewise, in a poll at Duke University, more than 2000 students confirmed that they had plagiarized at least once (Owings, 2002). Similarly, Bloomfield (2005) reported that 30% of students plagiarize on all their papers. The growing number of studies reporting high levels of academic dishonesty and cheating among students (Ludeman, 2005) suggests that, beyond the foundation of trust between students and instructors, a layer of accountability and credibility is essential for ensuring “academic integrity”, as integrity failures can damage the credibility and reputation of higher educational institutions (Altbach, 2004).

Academic integrity, a term open to wide interpretation, is commonly used as a proxy for students’ conduct in relation to plagiarism and cheating (Macfarlane et al., 2014). A relatively new focus in educational policy points to the importance of preventing academic misconduct by building academic norms and integrity through standards and regulations (Sun, 2006). Most educational institutions have academic integrity policies in place to deal with academic dishonesty and strengthen academic standards, and faculty communicate these policies to students on a regular basis.

To establish better practices for ensuring good academic conduct, it is important to verify the identity and authorship of students’ submitted work (Macfarlane et al., 2014) without intruding on their privacy (Amigud et al., 2017). Technology plays an important role in achieving this goal by providing platforms for secure and efficient evaluation of students’ work. These technological tools have been classified into five types (Heussner, 2012): 1) remote live proctoring; 2) remote web processing; 3) browser lockdowns; 4) keystroke pattern recognizers; and 5) plagiarism detectors. These technologies are used to verify identity, validate authorship, and regulate the learning environment. The available tools have limitations, as each serves only one specific purpose. For instance, plagiarism checks on online assignments can confirm authorship but fail to verify identity (Amigud et al., 2017), whereas the Lockdown Browser can verify identity but fails to validate authorship claims in the case of cheating. Although none of the tools provides a foolproof solution, and a raised flag often requires further investigation and verification by the instructor, they have still proved effective in ensuring academic integrity to some extent, e.g., plagiarism-detection tools such as TurnItIn and Edutie (Denisova-Schmidt, 2016; Boehm, Justice, & Weeks, 2009).

Moving forward, with the arrival of ChatGPT, a new AI tool that allows anyone to generate essays and scripts close to human writing in a few seconds, an important question is how academic integrity can be ensured when work submitted by students has been produced by ChatGPT (Ventayen, 2023).

2. Methodology

2.1. Study design

A quasi-experimental design was adopted to conduct the research and achieve the study objectives without involving human subjects. Instead, students’ graded work (control group) from randomly selected courses was compared against the answers generated by ChatGPT (experimental set) to determine the performance of both on the existing assessment tools adopted by the instructors. This research design allowed us to compare the learning performance of student vs. bot where a true experiment was deemed unethical: ChatGPT and its use should not be introduced to students for experimental purposes until university management and educational regulators have given clear directions on the appropriate course of action for introducing and regulating its use among students for learning purposes.

Experiments were conducted using the program learning outcomes of the Bachelor of Business Administration in Human Resource Management (BBA-HRM) program (Figure 1) offered by a high-ranking private-sector HEI based in the Emirate of Abu Dhabi. Of the eight program learning outcomes, only two focused on technical knowledge and technical skills development, while the rest focused on developing the necessary soft skills (critical thinking, research, communication, teamwork and leadership, ethics, and autonomy), as required by the current job market. All learning outcomes associated with the courses picked for the experiment, whether at the course, program, or university level, have been reworded in view of the institution’s intellectual property rights.

Figure 1. Key elements of undergraduate program learning outcomes.

The study plan (Table 1) categorizes courses into five sections: University General Education Courses (33 credit hours), College Requirement Courses (45 credit hours), Specialization Courses (33 credit hours), Supporting Courses (9 credit hours), and Practical Training (3 credit hours). Practical training is an internship course in which students gain hands-on experience in the industry, and its assessment is based primarily on their work performance (evaluated by the field supervisor) and an oral presentation of their work report; it was therefore not included in the current experiment.

Table 1. Study plan of undergraduate program

A Python program was developed to randomly select one course from each section of the study plan using the SAMS random selector application. The “random.choice()” function from the “random” library was used to perform the selection. Subsequently, one assignment from each selected course was chosen for the experiment. A variety of assessments was included, namely case analysis, an empirical study report, self-reflection group work, and calculation-based assignments, to assess ChatGPT’s capability of solving assessments of different complexity levels, which instructors use to ensure that students develop important skills through the effort of solving them.
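
The selection step can be sketched as follows. This is a minimal illustration and not the program used in the study: apart from the four courses reported in the findings, the course names are hypothetical placeholders; the function name select_courses and the seed parameter are assumptions added so that a draw can be reproduced; and the SAMS application is not modelled. Practical Training is left out because it was excluded from the experiment.

```python
import random

# Study plan sections mapped to candidate courses.
# Placeholder names ("... course B") are hypothetical, not from the study.
STUDY_PLAN = {
    "University General Education Courses": [
        "Leadership & Teamwork", "GUE course B", "GUE course C",
    ],
    "College Requirement Courses": [
        "Organizational Behavior", "College course B", "College course C",
    ],
    "Specialization Courses": [
        "HR Staffing", "Specialization course B", "Specialization course C",
    ],
    "Supporting Courses": [
        "Quality Management", "Supporting course B", "Supporting course C",
    ],
}


def select_courses(study_plan, seed=None):
    """Pick one course per section with choice() from the random library."""
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return {
        section: rng.choice(courses)
        for section, courses in study_plan.items()
    }


selection = select_courses(STUDY_PLAN, seed=2023)
for section, course in selection.items():
    print(f"{section}: {course}")
```

Recording the seed alongside the result is one way to let an auditor re-run the draw and confirm that the courses were not hand-picked.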

The stepwise details of the experiment and the results are discussed in the next section.

3. Findings & analysis

3.1. ChatGPT performance vs. highest student performance

To begin with, four courses were selected at random from the four main sections of the study plan: one from General University Education (Leadership & Teamwork, a year-one-level course), one from College Requirement Courses (Organizational Behavior, a year-two-level course), one from Specialization Courses (HR [.] Staffing, a year-three-level course), and one from Supporting Courses (Quality Management, a year-four-level course). The random selection was restricted to theoretical courses, given the significance of verbal comprehension in HR-based degree programs.

Next, the selected courses’ files were retrieved from the college archives to access the assessment tools used by the instructor concerned to evaluate students’ learning outcomes in the previous semester, along with the instructor’s answers and work samples from the highest-graded students. These 13-item course e-files include course study plans and materials, assessment tools and worked answers, students’ performance samples and grades, and reports on actions taken and proposed to improve students’ learning experiences. They are prepared and archived by course instructors at the end of each semester for audit, knowledge sharing, and continuous improvement purposes.

The list of assessments conducted in each selected course was used to determine the assessments to be included in the experiment from the assortment of tools (quiz, exams, assignment, case study, projects, presentation, etc.) used to assess students’ learning outcomes in the respective courses. The selection was made keeping in mind the inclusion of a variety of assessment tools of different complexity levels. The tool available at the discretion of the faculty members to ensure academic integrity of the students’ work is TurnItIn.

The selected assignments were reviewed, and the related questions were entered into ChatGPT to obtain responses. These responses were then given for marking to the instructors who had led the respective courses in the most recent semester. To avoid bias, the instructors were not told that the assignments had been answered by ChatGPT, which was given the pseudonym “Ghanam P. Tareq”.

This randomly selected experimental trial was conducted to examine the academic performance of ChatGPT compared with the control group of students, who had completed plagiarism-free assignments on their own as part of their course-learning assessments before this AI tool was launched and made available for public use. The assignments answered by ChatGPT were marked by the same instructors, and the marks given earlier to the students’ work were compared with those given to the work of “Ghanam”.

3.1.1. Session 1: general university education course—leadership & teamwork

Leadership and Teamwork (a GUE course) was last taught in academic year 2022–2023. A case study was randomly selected from all the assessment tools (exam, quiz, applied assignments, case analysis, research project, and presentation) used in this course to assess GUE learning outcomes. The selected case study helped in achieving course learning outcome (CLO) 2 (analyse leadership practices in organizations), aligned with GUE learning outcomes B (communication skills [written]), D (application of knowledge), and E (self & professional development).

The case study was based on TATA Corporation and discussed Tata’s leadership characteristics in the context of cultures at different levels, and whether his leadership traits, behaviors, or style were suitable for Emirates-based leaders. The students answered three questions after reading the case: 1) What are the characteristics of Tata’s leadership? 2) Which levels of culture would Tata Corporation need to consider? 3) Can his management style be implemented in UAE-based firms? The highest mark, scored by a student on a 421-word worked solution, was 6 out of 6, with 3% plagiarism, checked using the Turnitin application, coming from the cover sheet only.

The same questions were put to ChatGPT without sharing the case study (Table 2). It answered all three questions without any contextual information in less than 5 seconds. The answers were uploaded to Turnitin to check the plagiarism percentage. For the written assignment of 371 words, the plagiarism percentage was zero. In addition, no flag was raised for the submission, which returned the message “no hidden text and no replaced characters.” Thus, the answers passed the academic integrity test. Subsequently, the assignment was given for grading to the same faculty member who had taught this course in the previous semester and graded the students’ assignments. “Ghanam” also scored 6 out of 6. The faculty member was asked to compare the highest-graded student’s worked answers with the responses from “Ghanam” and, using experiential judgement, reported that the solutions provided by “Ghanam” were more coherent, critical, and well communicated, with no language errors.

Table 2. ChatGPT response – general university education course

3.1.2. Session 2: college requirement course—organizational behavior

Next, the Organizational Behavior course (a College Requirement course usually taught in the second year of the program) was randomly selected. This course was taught in the most recent semester of academic year 2022–2023. An in-class activity was then selected from among the six types of assessment tools (exams, quizzes, in-class activities, case analysis, research projects, and presentations) used in the course to assess students’ learning outcomes. The selected activity supports course learning outcome (CLO) 4 (examine motivational theories and their practical applications), which is aligned with Program Learning Outcomes 3 (critical-thinking skills) and 5 (autonomy and responsibility).

The in-class activity was a self-reflection exercise given to the students after completing the discussion on the topic of “motivation” to help them understand the practical application of motivational theories to their personal life choices. The students were asked (1) to individually determine what motivated them when they decided to attend college, what motivated them to expend more effort in this class, and what motivates them to continue attending college and expend the effort necessary to graduate; (2) to decide which motivational theory best fitted the results of their discussion and to give a short description of the most common motivators found among the group, with justification of the chosen motivational theory; and (3) as a team, to offer motivational suggestions for improving their study performance. The students were marked on the spot during the class activity, individually for question 1 and as a group for questions 2 and 3. The highest score achieved by a student/group was 3 out of 3.

The same questions were put to ChatGPT without sharing the study material or context specific to the topic (Table ). In response to the first question, on identifying self-motivation for joining a college, course, or degree program, it began, “as an AI language model, I do not have personal motivations or the ability to attend college or participate in discussions. However, I can provide some general reasons that students might choose to attend college and put effort into their studies,” and provided a list of general motivators for attending college, taking a course, or pursuing a degree. Likewise, in response to the next question, which was based on group thinking and mutual agreement, ChatGPT responded that

“as an AI language model, I cannot participate in discussions or make decisions as a group. However, I can suggest that the motivations mentioned in the previous answer could potentially be explained by Self-Determination Theory.” ChatGPT thus built on its previous response and chose an appropriate motivational theory as a determinant of students’ motivation.

Table 3. ChatGPT response—college requirement course

Subsequently, the ChatGPT responses were given for grading to the instructor who had taught this course in the previous semester and conducted this in-class activity. “Ghanam” received a perfect score. The instructor reported that “Ghanam’s” responses were critical, well thought out, adequate, and well communicated.

3.1.3. Session 3: specialization course—HR (.) Staffing

Continuing the experiment, the HR (.) staffing course (usually taught in the third year of the program) was randomly selected from the Specialization Courses section using a random generator application. It is a mandatory specialization course for all students registered in the HRM program. Case studies, exams, projects, and presentations were used as assessment tools to evaluate students’ learning outcomes. A project was randomly selected to assess whether ChatGPT could follow the guidelines given and prepare a report (2000 words) on the field study findings. The group-based empirical case study required students to analyze the existing human resource planning, recruitment, and staffing processes (including staffing plans, recruitment strategies, selection tools/assessment methods, and retention techniques) of any organization based in Emirates and to identify the best practices implemented by the firm to achieve its HR staffing strategy and goals (Table ).

Table 4. ChatGPT response – specialization course

The query was run, and it was clear from the ChatGPT response that it understood the requirements of the field investigation well. It selected the Emirates Group (a Dubai-based international airline) as the firm for the case study, gathered relevant information regarding its existing staffing system, analyzed its strengths and areas for improvement, and proposed a viable plan of action to further improve its staffing strategy. ChatGPT followed all the requirements of the project except for the required length of the report (it generated a 623-word report). A follow-up query was given to ChatGPT in the same conversation: “answer should be 2000 words, try again.” ChatGPT responded, “I apologize for the previous response. Here’s a more detailed answer that meets the required word count,” and regenerated a case report of 627 words for the same firm. Hence, ChatGPT can also prepare empirical case-based reports on local firms, but only at limited length. ChatGPT was then asked, “what is the maximum number of words ChatGPT can generate as an answer?” It responded that “as an AI language model, ChatGPT can generate responses of various lengths from few words to several hundred words (.) depending on factors like complexity of question, amount of information available, the level of detail required in the answer”. Currently, the limited word count appears to be a limitation of the bot.

The case study report prepared by “Ghanam” was handed to the instructor who had prepared this assignment and assessed the students’ performance on this project in the previous semester. The instructor awarded 7 out of 10, as in-depth analysis and recommendations were missing. The report was also tested for academic integrity, and 14% plagiarism was detected in “Ghanam’s” work.

3.1.4. Session 4: supporting compulsory course—production (.) management

Finally, continuing our series of experiments toward the first study objective, the Production (.) Management course (usually taught in the fourth year of the program) was randomly selected from the compulsory supporting course section using a random generator application. The assessments used by the instructor to monitor students’ performance in the previous semester included exams, projects, quizzes, and problem-solving assignments. A problem-solving assignment was selected to test ChatGPT’s capability of using quantitative and qualitative techniques to analyze key operational dimensions (e.g., capacity management, inventory management, and quality management) for productivity, decision-making, and control purposes. An assignment of eight questions was given to ChatGPT along with information on the products, work centers, and processing times (Table ). In less than 2 minutes, ChatGPT responded to the first four questions. During the experiments, it was noted that the bot’s response time was limited to less than 2 minutes; any question not answered by then was left unanswered. To work around this limitation, the unanswered questions were put to ChatGPT again, and responses were received in less than a minute. In total, the bot took approximately 3 minutes to solve the assignment, which passed the Turnitin academic integrity test with only 4% (trivial) plagiarism detected.

Table 5. ChatGPT response—supporting course

“Ghanam’s” worked answers were given for scoring to the instructor who had graded the students on this assignment in the previous semester. The instructor commented that the submitted work was perfect and scored it 10 out of 10. The analysis provided was detailed, including all the required steps and an adequate explanation for the given recommendations.
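The outcomes of the four sessions can be collected in one place. The following is a minimal sketch that only restates figures reported above; the dictionary keys, the short session labels, and the helper names are introduced here for illustration, and the Session 2 score assumes that the reported "perfect score" means 3 out of 3. No similarity figure is reported for Session 2, so it is recorded as `None`.

```python
# Figures transcribed from Sessions 1 to 4 above. None marks a value the text
# does not report; labels and key names are shorthand assumed for this sketch.
SESSIONS = [
    {"label": "Session 1 (GUE, case study)",
     "score": 6, "max_score": 6, "similarity_pct": 0},
    {"label": "Session 2 (college requirement, in-class activity)",
     "score": 3, "max_score": 3, "similarity_pct": None},
    {"label": "Session 3 (specialization, project report)",
     "score": 7, "max_score": 10, "similarity_pct": 14},
    {"label": "Session 4 (supporting course, problem solving)",
     "score": 10, "max_score": 10, "similarity_pct": 4},
]


def score_percentages(sessions):
    """Return each instructor-awarded score as a percentage of the maximum."""
    return [100 * s["score"] / s["max_score"] for s in sessions]


def reported_similarities(sessions):
    """Return only the Turnitin similarity percentages the text reports."""
    return [s["similarity_pct"] for s in sessions
            if s["similarity_pct"] is not None]


if __name__ == "__main__":
    for s, pct in zip(SESSIONS, score_percentages(SESSIONS)):
        print(f'{s["label"]}: {pct:.0f}% of marks, '
              f'similarity = {s["similarity_pct"]}')
```

Laid out this way, the only session in which the bot fell short of full marks is the 2000-word project report, which is also the session with the highest reported similarity.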

3.2. Academic Integrity Assessment of the ChatGPT Responses

Instructors use different techniques to verify academic integrity and validate the authorship of work. During in-class activities, instructors physically monitor the learning environment to ensure that students work on the assigned tasks. Outside-the-class assignments or projects (whether individual or group-based), on the other hand, are usually submitted online through the university’s learning management system. The common practice in the higher education sector is to use the Turnitin application (or a similar tool) to validate authorship and ensure the academic integrity of each assignment submitted, which helps instructors evaluate students’ learning achievement at the course level as well as the program level.

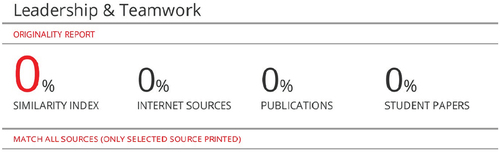

To assess the academic integrity of the ChatGPT responses, the Turnitin application was used to determine whether it could detect AI-generated text. The ChatGPT responses to the case analysis assignment (GUE course) were uploaded to Turnitin, which detected 0% plagiarism (Figure ), so ChatGPT passed the academic integrity test. In the case study report (specialization course), 14% plagiarism was detected in the bot’s work, and only 4% in the problem-solving assignment (supporting course).

Figure 2. Turnitin report – ChatGPT responses academic integrity assessment.

Once it was confirmed that Turnitin is of little or no help in detecting AI-based writing, two tools that claim to detect AI-generated text, GPTZero and Copyleaks, were selected, and the ChatGPT responses were uploaded to each in turn to test their ability to detect AI content (Table ).

Table 6. Academic integrity assessment reports

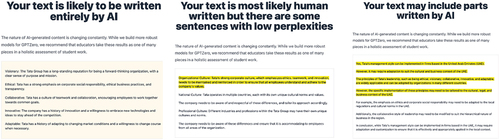

GPTZero detected that the ChatGPT response to question 1 was “written entirely by AI.” However, the question 2 response was not fully detected; GPTZero reported it as “most likely human written (.) some sentences with low perplexity”. For the question 3 response, GPTZero reported that “text may include part written by AI.” This confirms that GPTZero still needs greater precision in its AI content detection (Figure ). Moreover, it provides instructors with no evidential support, of the kind Turnitin offers, for a breach of academic integrity, which might result in high marks for students who falsely claim ownership of work completed by the AI bot.

Figure 3. GPTZero report – ChatGPT responses academic integrity assessment.

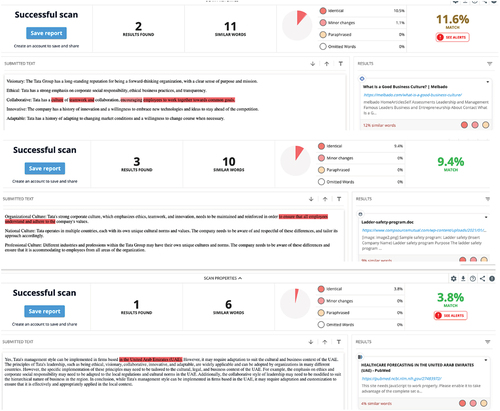

Next, Copyleaks was used to check whether it could identify the responses to all three questions as AI-generated rather than human-written. However, like GPTZero, this tool was unable to detect that the complete text was AI-generated. Instead, a small percentage of words in each answer was identified as matching existing resources (Figure ), leading to the same issue of high marks being scored by students who falsely claim authorship.

Figure 4. Copyleaks report—ChatGPT responses academic integrity report.

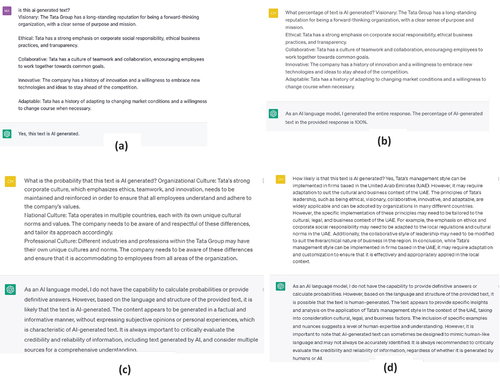

Lastly, ChatGPT itself was tested to see whether it correctly reports on its own responses to the assignment questions. Its answer to question 1 was input with the query “this is AI generated text?” ChatGPT replied, “yes, this text is AI generated” (Figure ). The query was then rephrased as “what percentage of text is AI generated?”, to which ChatGPT replied, “as an AI language model I generated entire response. The percentage of AI-generated text in the provided response is 100%” (Figure ). Hence, in this instance, ChatGPT was able to state the percentage of AI-generated text in the given essay.

Figure 5. ChatGPT self-assessment – academic integrity report.

ChatGPT was further tested on its answers to the next two assignment questions. This time the query was rephrased: for its answer to question 2, ChatGPT was asked “what is the probability that this text is AI generated?”, to which it responded that “as an AI model I do not have the capability to calculate probabilities or provide definitive answers (.) and based on language and structure it is likely that text is AI generated” (Figure ). For its answer to question 3, the query input was “how likely is that this text is AI generated?”, to which ChatGPT answered that “based on the language and structure of the provided text, it is possible that the text is human-generated (.) it is important to note that AI-generated text sometimes be designed to mimic human-like language and may not always be accurately identified.” (Figure ).

The different responses of ChatGPT to different query keywords confirmed that this tool cannot accurately validate the authorship of work, although its response is better when it is asked about AI-generated text in terms of percentage rather than probability or likelihood. In short, it is not a completely reliable tool for assessing the academic integrity of students’ work. Instructors still need to apply their subjective judgement to each student’s worked answer, which might not be an effective solution. Even using ChatGPT to determine the percentage of AI-generated text does not seem viable, as each assignment needs to be uploaded individually to validate the authorship claim, a time-consuming effort that instructors may not be willing to make.
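The detector outcomes described in this section can be tallied in a few lines. This is only an illustrative sketch: the three verdict categories (`"ai"`, `"hedged"`, `"not_flagged"`) and the coding of each reported message into one of them are assumptions made here, not classifications used in the study. For example, GPTZero’s “most likely human written” is coded as `"not_flagged"`, and the Copyleaks results are coded as `"not_flagged"` because only source matches, not AI authorship, were reported.

```python
from collections import Counter

# Verdicts for the ChatGPT answers to questions 1, 2, and 3, in that order.
# The category coding is an interpretation introduced for this sketch:
#   "ai"          - the tool stated the text was AI-written
#   "hedged"      - the tool said AI authorship was partial, likely, or possible
#   "not_flagged" - the tool did not identify the text as AI-written
VERDICTS = {
    "GPTZero": ["ai", "not_flagged", "hedged"],
    "Copyleaks": ["not_flagged", "not_flagged", "not_flagged"],
    "ChatGPT self-check": ["ai", "hedged", "not_flagged"],
}


def tally(verdicts):
    """Count how often each verdict category occurs for every tool."""
    return {tool: Counter(results) for tool, results in verdicts.items()}


def definitive_detections(verdicts):
    """Number of answers each tool unambiguously identified as AI-written."""
    return {tool: results.count("ai") for tool, results in verdicts.items()}


if __name__ == "__main__":
    for tool, hits in definitive_detections(VERDICTS).items():
        total = len(VERDICTS[tool])
        print(f"{tool}: {hits} of {total} answers flagged as AI-written")
```

Under this coding, no tool gives a definitive AI verdict for more than one of the three answers, which is the pattern the paragraph above describes in prose.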

4. Discussion

An experimental trial was conducted on randomly selected courses from each of the four major sections of the study plan of the undergraduate program (BBA in HRM): General University Education Courses, College Requirement Courses, Specialization Courses, and Supporting Compulsory courses, and the study findings confirmed:

ChatGPT’s capability to respond effectively to an assortment of tools used for assessing students’ learning outcomes at the undergraduate level.

ChatGPT’s capability of passing academic integrity thresholds.

From among the assortment of assessment tools used in each course to evaluate student performance and learning outcomes in the previous semester, one was selected to compare the academic performance of ChatGPT on that assignment with that of the highest-performing student(s) (control group), who had completed the assignment on their own as part of their course learning assessments before ChatGPT was launched and made available for public use. The assignments answered by ChatGPT were marked by the same instructors, and the marks given to the student work and to ChatGPT’s work were compared to determine whether the AI bot could comprehend different sets of instructions and complete a variety of assignments, such as case analysis, critical thinking assignments, project reports, and problem-solving. The ChatGPT responses to all four assignments were scored by the respective faculty members who had taught those courses in the previous semester (without disclosing that the text was AI-generated). The scores confirmed that ChatGPT performed on par with the highest-performing student(s) in the class in the case analysis, the self-reflection group work, and the problem-solving assignment. It also generated an empirical case study report on an Emirates-based firm following the guidelines given; however, it was not able to meet the report length requirement (i.e., 2000 words). Despite repeated queries, the response was limited to approximately 625 words (623 and 627 words in the two attempts). It is worth testing in future studies whether project requirements can be broken down into four to five segments (introduction, literature review, analysis, findings, recommendations, etc.) in a continuing conversation, with the responses generated for each segment combined to meet the report length requirement.
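The segment-by-segment idea proposed for future studies could be prototyped along the following lines. This is a hedged sketch, not a tested method: `generate` is a hypothetical callable standing in for whatever chatbot interface a researcher uses, `fake_generate` is a stub that returns filler text so the sketch runs offline, and the prompt wording is an assumption. Unlike a continuing conversation, each call here is independent, and whether a real chatbot would honor the per-section length request is precisely what remains to be tested.

```python
from typing import Callable, Sequence

# Segment names taken from the suggestion in the text above.
SECTIONS = ("introduction", "literature review", "analysis",
            "findings", "recommendations")


def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def build_report(brief: str, target_words: int,
                 generate: Callable[[str], str],
                 sections: Sequence[str] = SECTIONS) -> str:
    """Request each section separately and join the pieces into one report."""
    per_section = -(-target_words // len(sections))  # ceiling division
    parts = []
    for name in sections:
        prompt = (f"{brief}\nWrite only the {name} section, "
                  f"in about {per_section} words.")
        parts.append(f"{name.title()}\n{generate(prompt)}")
    return "\n\n".join(parts)


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a chatbot call: returns 400 filler words."""
    return " ".join(["word"] * 400)


if __name__ == "__main__":
    report = build_report("Analyze staffing practices at a local firm.",
                          2000, fake_generate)
    print(word_count(report))
```

With the stub, five sections of 400 words each reach the 2000-word target; with a real chatbot, the combined report would also need checking for coherence and repetition across sections.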

The series of experiments established ChatGPT’s capability to handle a variety of assignments given to students during the instructional period to improve their knowledge application skills along with the soft skills of critical thinking, problem-solving, effective written communication, and research. During the experiment, two limitations of the bot in its current form were identified. First, it is limited in the length of its response: it generated answers of fewer than approximately 650 words despite separate and repeated requests for the required word count. Second, it processes queries for less than 2 minutes, and queries left unanswered once approximately 2 minutes have passed are ignored. This gives instructors room to redesign their assignments around these two limitations, although the limitations may not last long given the speed at which this technology is learning and developing.

The next question was whether technology is available to support academic integrity (Caren, 2022). Turnitin, a name familiar to all educators and institutions and a well-known tool for checking academic integrity, gave a promising response to the growing concern that ChatGPT raises the risk of contract cheating and of students misrepresenting AI-assisted writing as their own work. The company claimed that it had been working for the last two years on technology to recognize AI-assisted writing, and that its new products for educators would incorporate AI writing detection capabilities able to detect some forms of AI-assisted writing and so assess the authenticity of students’ work. However, the study confirmed that ChatGPT passed the academic integrity tests of Turnitin, the most widely used plagiarism detection tool in academia. Turnitin was not able to verify whether the text was AI-generated, and it detected only a small percentage of plagiarism (in the course 3 and 4 assignments) or none at all (in the course 1 assignment) in the ChatGPT responses, as also reported by Ventayen (2023). Recently, Turnitin has introduced a new AI writing indicator feature, which may detect the percentage of AI-generated text in a document, although it is not visible in similarity reports. Turnitin further states that the percentage is interpretive and may not indicate misconduct, and that instructors must decide how to handle work that may have been produced or partially produced by AI. This new feature needs testing over time to determine whether it lives up to its claims and can identify AI-fueled plagiarism with 100% confidence, instead of leaving the matter to the instructor’s subjective judgement.

Furthermore, the new tools GPTZero and Copyleaks, which claim to detect AI-generated text, were tested to determine whether they can validate the academic integrity of AI-fuelled assignments (Spencer, 2023). The results confirmed that neither tool in its current form can detect AI-generated text completely. GPTZero performed better than Copyleaks, but it was not 100% accurate, which answers the concern raised by Spencer (2023). Moreover, Copyleaks is about to launch a new section for detecting AI content, which needs further testing and validation by instructors.

ChatGPT was tested as well. Its own response to assignment question 1 was input with a query asking whether it was AI-generated text, and the response was affirmative. When the query was rephrased and the bot was asked what percentage of the text was AI-generated, it accurately determined that 100% of the text had been generated by it. However, when the key terms “probability” and “likelihood” were used to determine whether its responses to questions 2 and 3 of the assignment were AI-generated, ChatGPT stated that it cannot calculate probabilities or provide definitive answers, and it gave mixed responses. It stated that, based on language and structure, the question 2 response is likely to be AI-generated, whereas the question 3 response is possibly human-generated. This indicates that ChatGPT can detect AI-fueled plagiarism when prompted with specific keywords, but not with precision or with any evidence. It also does not highlight the specific sentences that it judges to be AI-generated. Even if it is used to gain some insight into whether part or all of a text is AI-generated, the question remains how instructors can use this tool to determine whether students’ submitted work passes academic integrity thresholds. Manually inputting the responses to at least five to seven assessments for each student in a class of at least 30 students does not seem feasible. It would be cumbersome, exhausting, and overwhelming for the instructor, who could put this time to better use contributing to students’ learning experiences instead of spending hours asking ChatGPT to confirm whether students’ work is authentic or AI-generated.
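The scale of this manual effort can be made concrete with simple arithmetic. In the sketch below, the class size (30) and the number of assessments per student (five to seven) come from the paragraph above; the figure of 2 minutes per manual check is a purely hypothetical assumption used for illustration, not a measurement from the study.

```python
def manual_checks(students: int, assessments_per_student: int) -> int:
    """Number of individual submissions an instructor would paste into the bot."""
    return students * assessments_per_student


def hours_needed(checks: int, minutes_per_check: float) -> float:
    """Total time in hours, given an assumed time per manual check."""
    return checks * minutes_per_check / 60


if __name__ == "__main__":
    ASSUMED_MINUTES_PER_CHECK = 2  # hypothetical value, not from the study
    for per_student in (5, 7):
        checks = manual_checks(30, per_student)
        hours = hours_needed(checks, ASSUMED_MINUTES_PER_CHECK)
        print(f"{per_student} assessments x 30 students = {checks} checks, "
              f"about {hours:.1f} hours per class")
```

Even at this optimistic pace, one class of 30 students implies 150 to 210 separate checks per semester, and the total grows in proportion for instructors who teach several sections.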

Currently, the available tools for validating work authorship are not capable of completely identifying AI-generated texts. They lack precision and are not adequately reliable for assessing students’ work in their current state. AI-fueled plagiarism detection tools might take some time to meet the required expectations. In the absence of any such tool, educators would have to rely on traditional approaches to enforce academic integrity by a) redesigning their assessment tools so that AI tools cannot provide ready-made answers and students have to put in the required effort, b) clearly communicating the rules and regulations regarding the use of ChatGPT to the students, c) encouraging original work and discouraging direct copying of information from ChatGPT, d) educating students on the importance of academic integrity (Flores-Vivar & García-Peñalvo, 2023), and e) conducting occasional assessments and exams in controlled environments.

Effective teacher leadership is required to develop students’ character so that they adopt new technology for their learning and development (Crawford et al., 2023). Students need to be informed by academic faculty on a regular basis about the importance of academic integrity and the consequences of academic misconduct. They must be trained to make ethical use of AI bots for learning purposes and taught the importance of originality in ideas (Mhlanga, 2023). They should be educated on how overreliance on AI-generated work can hamper their integrity, technical know-how, critical-thinking skills, and problem-solving skills, which may affect their psychological well-being (Salah et al., 2023).

Although ChatGPT poses a challenge to the world of education, its potential advantages in facilitating students’ learning, owing to its dialogic nature (Gilson et al., 2023) and its ability to understand human language, cannot be ignored. ChatGPT has the potential to improve students’ learning outcomes by providing them with an interactive learning experience in which they can ask follow-up questions on a subject and gain in-depth knowledge (Crawford et al., 2023). It also has the potential to provide real-time feedback on students’ work and give them a chance to improve by offering personalized support based on their needs and learning styles.

It is a multilingual model and can process text input in several languages, including Arabic and English (Christensen, 2023); although it may take more time, it still provides answers. This offers an opportunity to students who are learning in their second language (i.e., English in the case of Emirates). They can use ChatGPT to understand concepts in their native language by submitting queries in their first language (i.e., Arabic). The bot can also help students brainstorm ideas and think outside the box by providing different perspectives and alternative approaches, thus stimulating critical thinking skills.

Moreover, ChatGPT claims that it can understand poorly written text with grammatical and spelling errors and still identify its meaning based on the context of the sentence (Starcevic, 2023). If that is the case, ChatGPT might be able to improve the inclusion of students with communication disabilities that affect their ability to speak, understand, or write. It can read poorly structured sentences and simplify complex texts into simple summaries for early-stage readers (Starcevic, 2023). However, its value as an assistive technology, along with the attendant questions of authorship, remains to be established by further investigating whether ChatGPT can facilitate the learning and engagement of students with learning disabilities.

Likewise, it can help instructors in several ways to be more effective in delivering teaching material, increasing student engagement, and assessing student performance (Christodoulou, 2023). In response to a query regarding its benefits in advancing teaching and learning, ChatGPT responded that a) it can grade essays automatically and give human-like feedback (see Kim et al., 2019), b) it can translate material into different languages, making it accessible to a wider audience, c) it can provide an interactive learning experience and personalized tutoring to students through its conversational agent (see Chen et al., 2020), and d) it can help teachers implement an adaptive learning system by adjusting their teaching methods based on students’ progress (Baidoo-Anu & Owusu Ansah, 2023). Over time, more information will emerge on the transformative use of ChatGPT to support teachers in improving their teaching effectiveness and increasing students’ learning outcomes.

Initially, public educational institutions across New York City banned teachers and students from using ChatGPT on the district’s network and devices (Korn & Kelly, 2023), followed by several other jurisdictions across the D.C. region. Likewise, public schools in Virginia blocked it on county-issued devices under “Children’s Internet Protection Act” educational guidelines so that its suitability for minors can be confirmed (Gelman, 2023). Regulators across Europe are also scrutinizing this AI chatbot to determine whether it complies with privacy regulations, after Italy blocked its use citing data privacy concerns. Although the preliminary response of educational institutions to this intelligent chatbot has been mixed, with a few doomsayers predicting the end of education in its current form (Crawford et al., 2023), banning students from using the new technology is not an option (Codina, 2022), particularly in a country like Emirates, which aspires to be among the world’s leading countries in artificial intelligence by 2031 (U.AE, 2022). The country’s AI strategy sets clear objectives to deploy an integrated smart digital system capable of delivering rapid services to customers and to build a new market with high economic value that is well connected through AI across important industries (U.AE, 2018). Given that integrating AI into enterprises, personal lives, and government systems is a strategic priority for the country (as stated by Sheikh Mohammed bin Rashid Al Maktoum, Vice President of Emirates) (Arabian Business, 2019), HEIs must train their students to use AI-assisted tools to prepare them for AI-powered future jobs instead of banning their use altogether to deal with academic integrity issues.
Higher education institutions must revisit their current performance-based evaluation approach to assessing students’ learning outcomes and teaching effectiveness, and develop innovative assessment methods that ensure the development of students’ technical, creative thinking, problem-solving, communication, and other important skills most needed in new AI-oriented job markets.

5. Study Implications

The current study’s findings fill an existing gap in the domain by uncovering the challenges that academia will soon face in managing students’ lifelong learning and career development with current performance-monitoring evaluation models in the era of AI-based chatbots (Gallegos, 1994). Higher education institutions base their educational policies, and the diagnosis and design of their programs, on the outcomes of students’ learning. They assess student performance against a defined set of standards based on program learning outcomes, which helps to identify gap areas (used as feedback) for improving program quality, teaching effectiveness, and students’ learning experience. Student performance-based evaluations of learning outcomes are conducted regularly, with or without interventions, whenever required, as they are the foundation for evidence-based continuous improvement in students’ learning outcomes.

In a situation where ChatGPT allows students to generate highly precise and original text in a few seconds without any effort, and calls into question the traditional methods of plagiarism detection, the question is how assessment-based performance evaluation models will serve their purpose in HEIs. In the absence of any academic integrity verification tool, ChatGPT will give students who use it an unfair advantage over those who do not use the tool and work on their own, who may not score best on assessments but still develop the necessary skills for future work roles. Students’ achievement in their assignments will no longer reflect their learning or confirm that they have built the knowledge base and skills mastery expected as program learning outcomes, making the available information on students’ achievements less reliable for policy decisions (Prøitz, 2015). The easy availability of half-cooked or fully cooked solutions from an AI bot undermines the very goal of education, which is to challenge and develop students’ knowledge, technical expertise, and soft skills, and may diminish the value of the educational systems that we have long been familiar with.

Therefore, it is time to revisit the existing performance evaluation approaches, as well as the design of assessments, adopted by HEIs to monitor and ensure students’ learning and skills development in the new era of ChatGPT (D’Agostino, 2023; Koenders & Prins, 2023), keeping in mind the limitations of ChatGPT reported in the current study. To move forward, faculty members face an important question: are they ready to step up and design innovative assessment methods in which academic integrity can be ensured, or do they want to go back to the handwritten or oral exams conducted before technology became widespread in education? In summary, this is the beginning of an era of intelligent learning tools in academia that are smart enough to solve complex queries in just a few seconds in a continuing conversation. As custodians of knowledge and learning, higher education institutions and educators are responsible for adapting to the new technologies and continuing to find ways to ensure the development of students’ technical and soft skills for their lifelong learning and career success, while ensuring honesty and integrity in their academic conduct.

6. Conclusion

The existing performance-based evaluation systems adopted by HEIs to ensure that students are learning and developing the necessary skills for future job markets seem likely to become unserviceable with the arrival of ChatGPT. This article confirms that ChatGPT is smart enough to write assignments, analyze case studies, develop project reports, and provide solutions to work-based problems; however, it is limited in the length of the assignment it creates and in its response time. Regardless, it passes the academic integrity tests of Turnitin, the tool commonly used by instructors in academia to validate authorship. In addition, the self-proclaimed tools for detecting AI-generated text, such as GPTZero and Copyleaks, seem insufficient in their current form to assess essays generated by ChatGPT.

The study proposes that if HEIs in the Emirates look forward to utilizing this latest technology for learning purposes and allow students to use it for their class and home assignments, they may need to revisit their performance-based evaluation model. Existing assessment tools (e.g., knowledge-based exams, problem-solving questions, critical thinking assignments, and creative writing) are not adequate to confirm students’ learning and performance in the absence of any tool capable of validating the authorship of the work. The work produced by ChatGPT can easily be claimed by students as their own, and mere submission on time can help them achieve the highest score, which calls into question the utility of the existing performance evaluation system as a foundation for maintaining and improving program quality and students’ learning outcomes in the first place.

It is evident that ChatGPT is an effective tool for improving students’ academic performance. The results suggest that ChatGPT can be used to personalize instruction and provide immediate feedback to students. Additionally, ChatGPT can be used to provide students with tailored responses based on their specific needs and abilities. Overall, the implementation of ChatGPT in education has the potential to improve students’ academic performance and enhance their overall learning experience.

Therefore, it is important for educational institutions to have open discussions with all important stakeholders to set achievable expectations for students regarding the acceptable use of AI-assisted writing tools, rather than accepting these tools thoughtlessly. It is important to understand at the national level how students can be allowed to use them under the right circumstances and under the supervision and guidance of instructors, with effective training on their ethical use.

7. Limitations and future directions

The current study was limited in its design: we were not able to involve students, keeping in mind that ChatGPT should not be introduced to students unless planned appropriately by HEIs and academic regulators. In future studies, once teachers and students are trained enough to make appropriate use of ChatGPT in the learning process, it would be interesting to see its impact on students’ learning outcomes using pre- and post-test assessments, that is, analyzing students’ performance before and after they have used ChatGPT for learning purposes. It would also be interesting to conduct surveys and interviews to understand students’ perceptions of ChatGPT’s support in improving their learning engagement and outcomes. In addition, the current study is limited by its timeframe. ChatGPT entered academia roughly two to three months ago, and its uses and weaknesses are yet to be explored. Therefore, longitudinal and time-series studies measuring the impact of AI-based learning interventions over time at the student level can help academic managers and regulators collect data, set benchmarks, and make comparisons over time to evaluate and redesign learning approaches and teaching effectiveness. It would also be interesting to replicate this study in other Middle Eastern countries as well as in the Western world to assess how the new technology has influenced their existing assessment designs and program evaluation approaches.

Availability of Data & Material

All data generated or analyzed during this study are included in the figures and tables submitted along with the manuscript.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes on contributors

Iffat Sabir Chaudhry

Iffat Sabir Chaudhry received her PhD in Management with a focus on organizational behavior and its psychological and social aspects. Her research activities are primarily focused on system designs, processes, and technology, and their influence on productivity. In line with this research focus, the authors set out to investigate whether an artificial intelligence-based chatbot has influenced higher educational institutions’ approach of using performance-based evaluation models for setting policies and taking corrective actions to improve learning and teaching effectiveness, especially in the Emirates, which aspires to be the hub of AI technology in the region and is already shifting towards a smart economy aligned with its Vision-2030. Iffat and Sayed conceptualized the study, designed the framework, conducted the test, and analyzed the findings to draw results and conclusions; Prof. Ghaleb reviewed the project and helped in its final editing; Habib participated in the testing phase of the study. These findings helped us to learn that ChatGPT is detrimental to the academic integrity of students and, therefore, HEIs need to revisit their approach to dealing with this disruptive technology as well as revise their academic integrity policies and communicate them effectively to students. This research is part of our institution’s endeavors to acknowledge and contribute to the government’s efforts to embrace artificial intelligence in education as part of its Vision-2030 by highlighting the areas that HEIs’ management and regulators need to focus on.

References

- Aasen, P. (2012). Accountability under ambiguity. Dilemmas and contradictions in education. In A.-L. Østern, K. Smith, T. Krüger, M.-B. Postholm, & T. Ryghaug (Eds.), Teacher education research between national identity and global trends (pp. 77–30). Academics.

- Altbach, P. G. (2004). The question of corruption in academe. International Higher Education, 34(March), 8–10.

- Ames, C. (1992). Classrooms: Goals, structures, and student motivation. Journal of Educational Psychology, 84(3), 261–271. https://doi.org/10.1037/0022-0663.84.3.261

- Amigud, A., Arnedo-Moreno, J., Daradoumis, T., & Guerrero-Roldan, A.-E. (2017). Using learning analytics for preserving academic integrity. International Review of Research in Open and Distributed Learning, 18(5), 192–210. https://doi.org/10.19173/irrodl.v18i5.3103

- Arabian Business. (2019). UAE adopts new national AI strategy aimed at becoming global leader, 22 April 2019. Retrieved from https://www.arabianbusiness.com/industries/technology/418254-uae-adopts-new-national-ai-strategy-aimed-at-becoming-global-leader

- Ashford, E. (2019). Employers stress need for soft skills. 16 January 2019. Retrieved from https://www.ccdaily.com/2019/01/employers-stress-need-soft-skills/

- Atlas, S. (2023). ChatGPT for higher education and professional development: A guide to conversational AI. Retrieved from https://digitalcommons.uri.edu/cba_facpubs/548/

- Badam, R. (2023). UAE working on ‘GPT-powered AI tutors’ to transform education. The National UAE, Mar 4, 2023. Retrieved from https://www.thenationalnews.com/uae/education/2023/03/04/uae-working-on-gpt-powered-ai-tutors-to-transform-education/

- Baidoo-Anu, D., & Owusu Ansah, L. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. SSRN 4337484.

- Bhattacharya, K., Bhattacharya, A. S., Bhattacharya, N., Yagnik, V. D., Garg, P., & Kumar, S. (2023). ChatGPT in surgical practice—a New Kid on the Block. The Indian Journal of Surgery, 1–4. https://doi.org/10.1007/s12262-023-03727-x

- Brown, T. B., Mann, B., Ryder, N., Subbiah, M., & Kaplan, J. D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

- CAIE-Cambridge Assessment International Education. (2021). Education brief: School evaluation. Retrieved from https://www.cambridgeinternational.org/Images/637044-education-brief-school-evaluation.pdf

- Campello de Souza, B., Serrano de Andrade Neto, A., & Roazzi, A. (2023). ChatGPT, the cognitive mediation networks theory and the emergence of sophotechnic thinking: How Natural Language AIs Will Bring a New Step in Collective Cognitive Evolution. Available at SSRN 4405254.

- Caren, C. (2022). AI writing: The challenge and opportunity in front of education now. 15 December 2022. Retrieved from https://www.turnitin.com/blog/ai-writing-the-challenge-and-opportunity-in-front-of-education-now

- CERI. (2008). Assessment for Learning Formative Assessment. Paper presented in OECD/CERI International Conference titled Learning in the 21st Century: Research, Innovation and Policy. Retrieved from https://www.oecd.org/site/educeri21st/40600533.pdf

- Chai, C. S., Lin, P. Y., Jong, M. S. Y., Dai, Y., Chiu, T. K., & Qin, J. (2021). Perceptions of and behavioral intentions towards learning artificial intelligence in primary school students. Educational Technology & Society, 24(3), 89–101.

- Chassignol, M., Khoroshavin, A., Klimova, A., & Bilyatdinova, A. (2018). Artificial Intelligence trends in education: A narrative overview. Procedia computer science, 136, 16–24. https://doi.org/10.1016/j.procs.2018.08.233

- Chen, Y., Chen, Y., & Heffernan, N. (2020). Personalized math tutoring with a conversational agent. arXiv preprint arXiv:2012.12121.

- Christensen, A. (2023). How many languages does ChatGPT support? Complete ChatGPT Language List, February 3, 2023. Retrieved from https://seo.ai/blog/how-many-languages-does-chatgpt-support

- Christodoulou. (2023). Could ChatGPT mark your students’ essays? 10 January 2023. Retrieved from https://www.tes.com/magazine/teaching-learning/general/ai-marking-teachers-could-chatgpt-mark-your-students-essays

- Ciolacu, M., Tehrani, A. F., Binder, L., & Svasta, P. M. (2018, October). Education 4.0-Artificial Intelligence assisted higher education: Early recognition system with machine learning to support students’ success. In 2018 IEEE 24th International Symposium for Design and Technology in Electronic Packaging(SIITME), Romania (pp. 23–30). IEEE.

- Codina, L. (2022). How to use ChatGPT in the classroom with an ethical perspective and critical thinking: A proposition for teachers and educators. Retrieved from http://bit.ly/3iKBFAE

- Crawford, J., Cowling, M., & Allen, K. A. (2023). Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). Journal of University Teaching & Learning Practice, 20(3), 02. https://doi.org/10.53761/1.20.3.02

- D’Agostino, S. (2023). Designing Assignments in the ChatGPT Era, 31 January 2023. Retrieved from https://www.insidehighered.com/news/2023/01/31/chatgpt-sparks-debate-how-design-student-assignments-now

- Davidson, K. (2016). Employers find ‘soft skills’ like critical thinking in short supply, The Wall Street Journal, August 30, 2016. Retrieved from https://www.wsj.com/articles/employers-find-soft-skills-like-critical-thinking-in-short-supply-1472549400

- Deepa, S., & Seth, M. (2013). Do soft skills matter? – Implications for educators based on recruiters’ perspectives. IUP Journal of Soft Skills, 7(1), 7–20.

- Denisova-Schmidt, E. (2016). The slippery business of plagiarism. Center for International Higher Education, May 24. Retrieved from https://www.insidehighered.com/blogs/world-view/slippery-business-plagiarism

- Du Boulay, B. (2016). Artificial intelligence as an effective classroom assistant. IEEE Intelligent Systems, 31(6), 76–81. https://doi.org/10.1109/MIS.2016.93

- Flores-Vivar, J. M., & García-Peñalvo, F. J. (2023). Reflections on the ethics, potential, and challenges of artificial intelligence in the framework of quality education (SDG4). Comunicar, 31(74), 35–44. https://doi.org/10.3916/C74-2023-03

- Gallegos, A. (1994). Meta-evaluation of school evaluation models. Studies in Educational Evaluation, 20(1), 41–54. https://doi.org/10.1016/S0191-491X(00)80004-8

- García-Peñalvo, F. (2023). La percepción de la Inteligencia Artificial en contextos educativos tras el lanzamiento de ChatGPT: disrupción o pánico. Education in the Knowledge Society (EKS), 24, e31279. https://doi.org/10.14201/eks.31279

- Gelman, S. (2023). DC region schools ban AI tool ChatGPT. 12 January 2023. Retrieved from https://wtop.com/local/2023/01/dc-region-schools-ban-ai-tool-chatgpt/

- Gilson, A., Safranek, C., Huang, T., Socrates, V., Chi, L., Taylor, R. A., & Chartash, D. (2022). How well does ChatGPT do when taking the medical licensing exams? The implications of large language models for medical education and knowledge assessment. medRxiv, 9(Feb), e45312.

- Gordon, B. (2023). North Carolina Professors Catch Students Cheating with ChatGPT, Government Technology, 12 January 2023. Retrieved from https://www.govtech.com/education/higher-ed/north-carolina-professors-catch-students-cheating-with-chatgpt

- Heussner, K. M. (2012). 5 ways online education can keep its students honest. Retrieved from https://gigaom.com/2012/11/17/5-ways-online-education-can-keep-its-students-honest/

- Hopmann, S. T. (2008). No child, no school, no state left behind: Schooling in the age of accountability. Journal of Curriculum Studies, 40(4), 417–456. https://doi.org/10.1080/00220270801989818

- HR Observer. (2023). Coursera CEO Says Impact of Linguistic Technology Unclear, HR Observer, February 14, 2023. Retrieved from https://www.thehrobserver.com/talent-management/coursera-ceo-says-impact-of-linguistic-technology-unclear/

- Jooris, L. (2023). Why ChatGPT is set to revolutionise business. 17 January 2023. Retrieved from https://www.arabianbusiness.com/opinion/why-chatgpt-is-set-to-revolutionise-business

- Kim, S., Park, J., & Lee, H. (2019). Automated essay scoring using a deep learning model. Journal of Educational Technology Development and Exchange, 2(1), 1–17.

- Kluger, A. N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119(2), 254–284. https://doi.org/10.1037/0033-2909.119.2.254

- Koenders, L., & Prins, F. (2023). The influence of ChatGPT on assessment: can you still use take-home exams and essays?, Educational development and training, Utrecht University. Retrieved from https://www.uu.nl/en/education/educational-development-training/knowledge-dossier/the-influence-of-chatgpt-on-assessment-can-you-still-use-take-home-exams-and-essays

- Korn, J., & Kelly, S. (2023). New York City public schools ban access to AI tool that could help students cheat, 6 January 2023. Retrieved from https://edition.cnn.com/2023/01/05/tech/chatgpt-nyc-school-ban/index.html

- Krugman, P. (2022, December 6th). Does ChatGPT Mean Robots are Coming for the Skilled Jobs? The New York Times. http://bit.ly/3HdnAp2

- Ludeman, R. (2005). Student leadership and moral accountability. Journal of College and Character, 2. Retrieved from http://www.collegevalues.org/ethics.cfmin=460&d=1