Abstract

As educational settings increasingly integrate innovative technologies into teaching methodologies, it becomes crucial to understand how teachers, especially those in rural schools, develop and apply these integrative competencies. Neglecting this endeavour risks leading to the abandonment or underutilisation of these technologies once they are deployed in schools. This descriptive study explored the technological pedagogical content knowledge (TPACK) of life sciences teachers, focusing on their competencies to integrate virtual labs (VLs) into classroom practices. The research, framed by Koehler and Mishra’s TPACK framework, involved a quantitative analysis of data collected from 186 life sciences teachers. The findings indicate that, overall, respondents exhibited low levels of TPACK, suggesting a limited proficiency in integrating VL technologies into their teaching methods. However, analysis of the individual TPACK domains revealed a notable mastery of non-technology-related domains, particularly in content knowledge (CK), pedagogical knowledge (PK), and pedagogical content knowledge (PCK). The emphasis on in-service teachers suggests that teaching experience significantly contributes to the development of these foundational knowledge components. Despite the proficiency in non-technology domains, a significant disparity is evident in technology-related TPACK domains, with lower competency levels observed in technological knowledge (TK), technological pedagogical knowledge (TPK), and overall TPACK. Notably, this study addresses a gap in educational research by emphasising the significance of rural school teachers, a population often overlooked in such studies. The study recommends that tailored professional development, coupled with more widespread adoption of VLs, has the potential to enhance teachers’ overall TPACK to adeptly leverage this technology in their teaching.

1. Introduction

Teaching science is widely perceived as a complex task, primarily due to the inherent abstractness of scientific concepts necessitating advanced cognitive abilities in learners to logically connect arguments and reconstruct meanings (Dewey & Bentley, Citation1949; Holbrook et al., Citation2022; Vlachos et al., Citation2024). This complexity is further amplified by specialised terminologies within the major branches of science – biology, chemistry, and physics. The intricacies escalate in rural schools, which face distinct challenges such as high teacher turnover and difficulty in attracting and retaining qualified and skilled science teachers (Gray et al., Citation2016; Hlalele & Mosia, Citation2020; Nemes & Tomay, Citation2022). Moreover, science teachers in rural schools regularly find themselves stretched across many subjects, teaching multiple grades and navigating demanding learner-to-teacher ratios (Kokela & Malatji, Citation2023). Consequently, despite initial interest, competent teachers frequently migrate to urban schools, leaving rural schools with a shortage of qualified science teachers and relying on underqualified and unqualified personnel. Research on teacher demographics underscores the prevalence of low competency among teachers in rural schools in the subjects they teach, particularly with respect to integrating technology into pedagogy (Goldhaber et al., Citation2020; Ingersoll & Tran, Citation2023).

Historically, the criteria for teacher competence were rooted in the command of subject content (content knowledge) and adept application of teaching techniques (pedagogical knowledge) (Shulman, Citation1986). Nevertheless, Shulman (Citation1987) subsequently argued that, although a robust grasp of the subject content and the effective implementation of pedagogical methods were essential, they alone could not entirely encapsulate the knowledge of a proficient teacher. To address this, Shulman (Citation1987) proposed the notion of pedagogical content knowledge (PCK). PCK entails integrating pedagogical knowledge (PK) and content knowledge (CK) into one framework, shaping how specific subject areas are structured, adapted, and delivered to enhance learner understanding (Shulman, Citation1986). While Shulman’s perspective continues to be relevant (Shulman, Citation1987, Citation2015), a significant departure in teacher knowledge discourse since the 1980s is the widespread integration of innovative technologies in contemporary teaching–learning spaces, including in rural schools.

One such innovation in contemporary science education is the virtual lab (VL), a subset in the category of simulations. VLs, founded on computer models derived from physical laboratories and associated experimental processes, provide distinct advantages. For example, early adopters of this technology have heralded it as valuable in supporting experimentation related to complex phenomena such as thermodynamics, chemical reactions, or electricity (Benbelkacem et al., Citation2024; Mahling et al., Citation2023; Pellas et al., Citation2020). Moreover, specific scholars in the realm of science education have posited that VLs could function as an alternative platform, providing an avenue for teachers and students in schools without physical science laboratories and equipment to conduct experiments (Engel et al., Citation2023; Kapilan et al., Citation2021). This suggests a potential opportunity for teachers and students in rural schools to leverage this technology for enhanced science education.

Despite the recognised benefits of VLs for rural school science teaching and their anticipated widespread adoption in such settings in South Africa, concerns have arisen about whether teachers in these environments possess the required knowledge to leverage this technology meaningfully in their teaching. This concern is particularly relevant for teachers in rural schools who may not have been formally or informally trained in technology integration as part of their initial teacher education programs. According to Koehler and Mishra (Citation2006), meaningful integration of technology takes place when there is a purposeful pedagogical intention guiding the choice of particular technologies to augment specific teaching approaches. This pedagogical intent has to be firmly rooted in curriculum-related and teaching–learning activities. Koehler and Mishra (Citation2009) further contended that effective technology integration into pedagogy is only achievable if teachers have specialised knowledge to teach with technology, known as technological pedagogical content knowledge (TPACK). TPACK encapsulates a teacher’s knowledge grounded in a nuanced appreciation of the intricate interactions between content, pedagogy, and technology, enabling them to design appropriate and context-specific teaching methodologies. TPACK, therefore, becomes the foundation for competent teaching with educational technologies, demanding an understanding of “epistemological theories and how technologies can advance knowledge, introduce new epistemologies, or reinforce existing ones” (Koehler & Mishra, Citation2006, p. 3). Thus, for rural school science teachers, acquiring TPACK would signify their ability to effectively enrich their science teaching by harnessing the inherent potential in successful VL integration.

Presently, since VL discourse has gone global and garnered considerable scholarly interest, it is crucial to highlight a significant disparity in the representation of studies related to VLs in science education between the Global North and the Global South. To date, most research in this domain has been conducted in the Global North, leaving a notable void in representation from the Global South. Cox and Graham (Citation2009) emphasised that TPACK is “unique, temporary, situated, idiosyncratic, adaptive, and influenced by different contexts” (p. 47). This emphasis highlights the need for diverse perspectives, including those from rural schools in the Global South. While some studies on VLs in developing countries exist, such as those by Okono et al. (Citation2023) in Kenya, Umukozi et al. (Citation2023) in Rwanda, and Aliyu and Talib (Citation2019) in Nigeria, these studies primarily focused on the impact of VLs on learners’ academic achievements. In the South African context, limited investigations into teachers’ perspectives on virtual learning environments have been conducted, particularly in higher education, by scholars such as Shambare et al. (Citation2022), Jantjies and Matome (Citation2021), Umesh and Penn (Citation2019), and Solomon et al. (Citation2019). While these studies primarily centred on perceptions and mainly reported teachers’ positive perceptions toward VLs, our argument transcends mere perceptions as an ingredient for successful VL adoption. We contend that the successful adoption of any technology into teaching, to a greater extent, hinges on teachers’ competency or knowledge (TPACK), enabling them to leverage the technology skillfully.

This paper aimed to answer the question: What are the TPACK levels of rural school life sciences teachers in the integration of the VL into teaching? A significant contribution of this study is its exploration of teachers’ TPACK, an underexplored area in research in South Africa. Notably, the focus is on science teachers in rural schools, a group often overlooked in the global educational research discourse. The additional significance of this paper lies in its analysis of the individual TPACK components of rural school science teachers, identifying strengths and knowledge areas needing improvement for meaningful VL integration. The subsequent sections are structured as follows: Section 2 reviews prior studies, Section 3 discusses the theoretical underpinnings of the study, Section 4 details the methodology, and Section 5 presents the results, which are discussed in Section 6. Sections 7 to 10 conclude the paper by addressing potential limitations, contemplating implications, and suggesting future directions.

2. Literature review

2.1. Meaning of virtual lab

Our literature review shows that the term “Virtual Lab” is often used in a broad sense. Generally, VL refers to web-based platforms or software that provide a rich and immersive learning environment. These platforms typically use interactive applications, visualisations, and graphics sourced from networked content to allow users to conduct scientific experiments online. In fact, we note that there are three types of VL, but the distinctions between them are often blurred:

Simulations available online that contain several aspects of laboratory experiments and are chiefly used for visualisations. These are called “CyberLabs” or classical simulations (Lee & Lee, Citation2019).

Real experiments managed through a network whose output and settings are presented via the internet. Such labs are called Remote Laboratories (García-Zubía, Citation2021).

Simulations that undertake to imitate real laboratory experiments as closely as possible through teachers’ and learners’ engagement in “hands-on” experiences. These are called VLs, which is the focus of this paper (Tatli & Ayas, Citation2013).

Therefore, to begin defining VL, we acknowledge that a widely accepted definition is yet to emerge. For example, Lee and Lee (Citation2019, p. 3) define VL as:

A website or software designed for interactive learning through the simulation of actual phenomena. It enables students to investigate a subject by comparing and contrasting various scenarios, pause and resume applications for note-taking and reflection, and gain practical, experiential knowledge over the internet.

2.2. Virtual lab components

A VL comprises several components that collectively create a simulated lab environment. At the core, there are the experiments themselves, designed to replicate real-world lab activities (García-Zubía, Citation2021). These experiments can vary in complexity, from simple tasks like mixing chemicals to more intricate simulations such as modelling chemical reactions or engineering processes. The interactive interface is another critical component, providing users with an intuitive, user-friendly way to engage with the VL (Alam & Mohanty, Citation2023). This interface typically includes drag-and-drop functionality, sliders, and buttons that allow users to adjust experimental parameters and observe changes in real time. The visualisations provided by VLs are also significant, offering graphical representations such as charts, graphs, or animations that help users understand the experiment’s outcomes (Aslan Akyol & Yucel, Citation2024). VLs also include tools for data collection and analysis (Lee & Lee, Citation2019). Users can gather experimental data and analyse it within the VL or export it for further analysis using external software. This capability is especially useful in educational settings, where students can learn data analysis skills alongside traditional scientific methods.

2.3. Application of VL in education

VLs have become indispensable in education, providing innovative alternatives to traditional classroom and laboratory settings. These digital platforms offer safe and cost-effective environments where students can conduct experiments without the risks and constraints of physical setups (Aliyu & Talib, Citation2019). For instance, chemistry students can mix chemicals and observe reactions, while physics students can explore complex concepts like magnetism and electricity without the need for specialised equipment. The flexibility of VLs has proven particularly valuable in remote learning contexts, allowing students to continue their studies from any location with an internet connection (Reginald, Citation2023). This adaptability was crucial during the COVID-19 pandemic, ensuring educational continuity despite physical distancing measures. Beyond flexibility, VLs can enhance learning through interactive and engaging experiences. They feature intuitive interfaces, rich visualisations, and robust data analysis tools, encouraging students to actively participate in experiments, adjust variables, and see real-time outcomes (García-Zubía, Citation2021). Additionally, Ghazali et al. (Citation2024) assert that VLs foster collaboration, enabling students to work together on experiments, share data, and discuss results remotely, thus promoting peer-to-peer learning and teamwork.

2.4. Assessing science teachers’ TPACK

The understanding of teachers’ TPACK has grown increasingly intricate due to its context-specific nature. Additionally, discord and debates surrounding TPACK and its components have been documented in the literature (Cox & Graham, Citation2009; Niess & Gillow-Wiles, Citation2017), prompting diverse methods for assessing teachers’ TPACK. These approaches encompass self-assessment surveys (Kartal & Çınar, Citation2022), classroom observations (Choi & Paik, Citation2021), lesson plan evaluation (Bingimlas, Citation2018), as well as various tools such as pre- and post-interviews and video recordings of teaching (Durdu & Dag, Citation2017). Among these multiple assessment methods, self-reported evaluations, which may not accurately depict teachers’ classroom practices, continue to dominate as the primary data-gathering instrument. The studies subsequently discussed provide insights into the examination of teachers’ TPACK.

First, Akyuz’s (Citation2018) research in Türkiye investigated the interrelationships among the TPACK components. Lesson plans from 138 pre-service mathematics teachers enrolled in a technology-integration course spanning 5 years were analysed. A specialised instrument delineating each TPACK component was devised and employed to assess the performance of pre-service teachers. To enhance the analysis, a self-assessment survey was administered to discern any disparities between the two evaluation methods. The findings reveal the identification of four distinct knowledge domains within the TPACK framework – referred to as TPACK-C, TPACK-P, Tech, and Core. Notably, self-assessment and performance measures exhibited similar findings, except for domains related to pedagogy, specifically PK, TPK, and TPACK.

Second, Evens et al. (Citation2018) in Belgium delved into the integrated and explicit presentation of CK, PK, and PCK in teacher education. The investigation involved 174 first year science education students. The study sought to determine whether exclusive presentation of CK and PK is sufficient for developing PCK, whether exclusive presentation of PCK is adequate for developing CK and PK, and whether integrating PK, CK, and PCK affects PCK development. Results indicate that presenting only two knowledge constructs to students proved inadequate for the development of the third, and amalgamating knowledge constructs did not influence the development of PCK.

Third, Kaplon-Schilis and Lyublinskaya’s (Citation2020) work in the United States examined whether PK, TK, content knowledge in science and mathematics (CKS and CKM, respectively), and TPACK are distinct domains of the TPACK framework. The investigation also sought to formulate tools for evaluating each core domain within the theoretical TPACK framework. By subjecting the newly devised instruments to exploratory and confirmatory factor analyses, the research proposed that the TPACK model maintain its distinctiveness from CKS, CKM, PK, and TK. Further examination through multiple linear regression revealed that CK, PK, and TK do not function as predictors for TPACK. These results open possibilities for independently assessing different facets of teacher knowledge as outlined within the TPACK framework.

Fourth, Valtonen et al. (Citation2020) measured 86 pre-service teachers’ TPACK confidence in Finland. The study utilised data derived from 86 lesson plans, each featuring technology integration, designed by the first year student teachers. These lesson plans included a dedicated section wherein students delineated their areas of confidence and challenge. A quantitative analysis of these sections was undertaken within the theoretical framework of TPACK. The results reveal four TPACK areas that were categorised as either challenging, confident, or both for the students. Notably, PK emerged as the pivotal factor, emphasising specific facets of PK that can be targeted for the enhancement of TPACK in teacher education.

Fifth, Thohir et al.’s (Citation2022) work assessed the competencies among pre-service teachers to integrate technology into science teaching. The research employed a three-round Delphi method involving 30 science experts. In Round 1, 19 experts participated, followed by 17 in Round 2 and 15 in Round 3. The experts responded to a series of questions through two questionnaires in Rounds 2 and 3, with their identity remaining anonymous. The findings reveal a consensus among experts, leading to the establishment of four TPACK dimensions (4D-TPACK), namely meta-learning, character, skill, and knowledge. Furthermore, the researchers identified 26 pivotal competencies, subsequently refined into 14 and categorised across the 4 dimensions. Notably, PK and TK received high-level competence ratings from the experts.

Sixth, Luo et al. (Citation2023) investigated the significance of selected TPACK domains in Early Childhood Education (ECE) in China. A survey instrument comprising 29 items categorised into 4 domains aligned with the theoretical TPACK model and tailored to the ECE setting was formulated and administered to 1192 currently employed early childhood teachers participating in nationwide training initiatives. Confirmatory factor analysis (CFA) and path analysis were employed to construct a model with a satisfactory fit. The validated model revealed the following key findings: (1) TPK, PK, and TK were significantly correlated; (2) TPK, PK, and TK each positively correlated with TPACK; and (3) PK, nevertheless, exerted a comparatively lesser impact on TPACK. These findings imply that ECE training programs should redirect their emphasis away from singular technological skills (i.e. TK) and, instead, concentrate on equipping teachers with a mastery of pedagogical methods for seamlessly incorporating digital technology into teaching practices.

Our literature review unveiled certain research gaps that this study aims to address. First, a predominant focus on pre-service teachers was observed in the existing research on TPACK. Notably, Setiawan et al.’s (Citation2019) review spanning the years 2011–2017 underscored this trend, revealing that the majority of studies (66%) centred on pre-service teachers, with only about one-third (31%) examining in-service teachers. This discrepancy highlights a notable dearth of understanding regarding the development of TPACK among in-service teachers, particularly in comparison to their pre-service counterparts. Therefore, our study endeavours to fill this void by investigating the TPACK development of in-service teachers, particularly within the domain of life sciences.

Second, a geographical imbalance was identified in the distribution of TPACK research. Handayani et al.’s (Citation2023) bibliometric analysis of TPACK research trends revealed that the United States of America led in the number of studies conducted, followed by Türkiye, Australia, Singapore, and South Korea. This observation underscores a significant research gap, wherein a limited number of studies have been conducted on TPACK in the Global South as opposed to the Global North. Although some research has explored teachers’ TPACK in developing countries, the emphasis has been on pre-service teachers. Hence, our research aims to contribute to the existing knowledge base by investigating in-service teachers’ TPACK, specifically in the Global South, and providing valuable insights into leveraging the VL in teaching within this context.

3. Technological pedagogical content knowledge (TPACK) framework

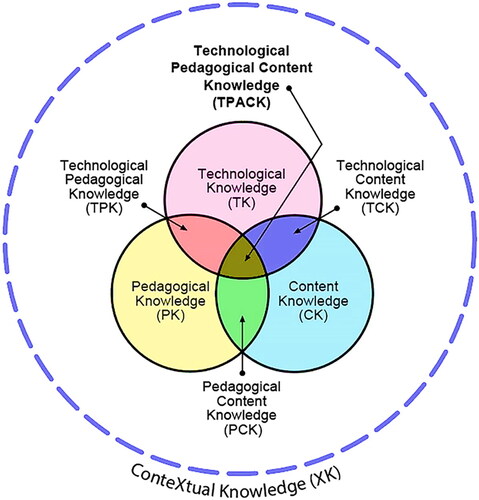

The TPACK framework serves as a vehicle for defining teachers’ essential knowledge to proficiently integrate educational technologies into their classrooms (Koehler & Mishra, Citation2006). TPACK draws upon the seminal work of Shulman (Citation1987), who initially proposed the notion of a point of convergence between PK and CK (PCK). By exploring the convergence of three distinct domains of teacher knowledge – pedagogy, content, and technology – the TPACK model offers a valuable lens to comprehend the intricate dynamics of technology integration in teaching. Scholars, notably Leahy and Mishra (Citation2023), have emphasised the significance of the TPACK framework in illuminating how the incorporation of technology influences the complex interplay between content expertise, pedagogical strategies, and the effective utilisation of technological tools in the classroom. In this regard, Koehler and Mishra (Citation2006) asserted that TPACK directs focus toward the notion that:

New technological resources reshape pedagogical knowledge, content knowledge, and pedagogical content knowledge. Furthermore, effective teaching with technology is context-dependent, and it necessitates a profound understanding of how technology interacts with pedagogy and content. (p. 13)

Good teaching is not simply adding technology to the existing teaching and content domain. Instead, the introduction of technology causes the representation of new concepts. It requires developing a sensitivity to the dynamic, transactional relationship between all three components suggested by the TP[A]CK framework. (p. 87)

Figure 1. Updated TPACK diagram (Mishra, Citation2019, p. 2).

Table 1 below shows the resulting TPACK knowledge constructs.

Table 1. The eight TPACK framework constructs.

We adopted the TPACK framework due to its potential to offer lenses to understand the kinds of knowledge we sought. This framework not only guided our research design but also allowed us to explore the nuanced relationships between teachers’ self-reported content expertise, pedagogical strategies, and the adept use of VLs in rural schools.

4. Methodology

This research employed a quantitative approach and a descriptive survey design. A descriptive study is “one that is designed to describe the distribution of one or more variables, without regard to any causal or other hypotheses” (Aggarwal & Ranganathan, Citation2019, p. 34). Notable scholars such as Harris and Hofer (Citation2011), Bada and Jita (Citation2021), and Zheng et al. (Citation2024) employed this design in their research and deemed it suitable for describing phenomena without any manipulation. We present our methodology under the following headings: research population and respondents, questionnaire design, data collection procedures, and data analysis.

4.1. Sampling design and recruitment of respondents

4.1.1. Study context

This study explored TPACK among secondary school teachers in the Eastern Cape province.

4.1.2. Population

The target population includes all Life Sciences teachers in rural schools in the Eastern Cape province.

4.1.3. Sampling method

We employed a random sampling method for our study. This method was considered random because, after distributing the questionnaires, we had no control over who would choose to respond (Hirose & Creswell, Citation2023). Participation in the survey was entirely voluntary.

4.1.4. Sample size

This investigation involved 186 individuals selected from the pool of secondary school teachers in the Eastern Cape province, South Africa. To be eligible for involvement, survey respondents were required to be qualified life sciences teachers working in rural and underprivileged schools, and it was a prerequisite that they had access to technological resources such as computers at their places of work. Table 2 displays the specific demographics of the respondents, encompassing gender, age, teaching experience, and educational level.

Table 2. Demographics of the respondents.

The respondents exhibited a notable gender disparity, with a higher representation of female teachers (n = 119; 64%) compared to male teachers (n = 67; 36%). The average age of the respondents was 34, with 22.5% falling into this category. Furthermore, most respondents (n = 115; 61.8%) were distributed across the 31–40 years and 41–50 years age brackets. Concerning teaching experience, the largest segment (n = 68; 36.6%) of surveyed respondents reported teaching experience of 5–10 years. Additionally, regarding the level of education, a significant proportion of the respondents (n = 97; 52.2%) indicated holding a Bachelor of Education degree.

4.2. Questionnaire design

This study employed a measurement scale derived from the classical scale proposed by Schmidt et al. (Citation2009), utilising a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). The questionnaire was structured into two distinct sections: The initial section focused on collecting demographic information (7 items), while the second section gathered responses related to the TPACK constructs (TK = 6 items, PK = 8 items, PCK = 5 items, CK = 6 items, TPK = 3 items, and TPACK = 3 items). It is noteworthy that, apart from minor wording modifications tailored to the specific technology under investigation, no alterations were made to the user acceptance scale. The questionnaire employed in the study comprised a total of 38 items.
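The structure of the instrument described above can be sketched in code. The following is a minimal illustration, assuming that a respondent's score on each construct is the mean of that construct's 1–5 ratings (the text does not state the scoring rule, so this is an assumption). The item counts come from this section; the example ratings are hypothetical and are not study data.

```python
from statistics import mean

# Item counts per construct as reported in Section 4.2.
ITEMS_PER_CONSTRUCT = {"TK": 6, "PK": 8, "PCK": 5, "CK": 6, "TPK": 3, "TPACK": 3}
DEMOGRAPHIC_ITEMS = 7


def total_items() -> int:
    """Questionnaire length: demographic items plus all TPACK items."""
    return DEMOGRAPHIC_ITEMS + sum(ITEMS_PER_CONSTRUCT.values())


def construct_scores(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Average one respondent's ratings within each construct.

    Assumption: construct score = mean of its items on the 1-5 scale.
    """
    scores = {}
    for construct, n_items in ITEMS_PER_CONSTRUCT.items():
        items = ratings[construct]
        if len(items) != n_items:
            raise ValueError(f"{construct}: expected {n_items} ratings, got {len(items)}")
        if any(r < 1 or r > 5 for r in items):
            raise ValueError(f"{construct}: ratings must lie between 1 and 5")
        scores[construct] = mean(items)
    return scores


# Hypothetical respondent (illustrative values only, not study data).
example = {
    "TK": [3, 4, 3, 3, 4, 4],
    "PK": [4, 4, 5, 4, 4, 5, 4, 4],
    "PCK": [4, 4, 3, 4, 4],
    "CK": [5, 4, 4, 5, 4, 4],
    "TPK": [3, 3, 3],
    "TPACK": [3, 2, 4],
}
print(total_items())
print(construct_scores(example))
```

The item count check confirms that the 7 demographic items and 31 construct items add up to the 38 items reported.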

4.2.1. Reliability and validity of the questionnaire instrument

Prior to this study, several researchers had already evaluated the reliability of this study’s instrument using the widely accepted Cronbach alpha coefficient test of inter-item consistency reliability (Cronbach, Citation1951; Cliff, Citation1984; Hajjar, Citation2018). Paulsen and BrckaLorenz (Citation2017) asserted that “using existing, previously tested measures indicates that the data are reliable and can help increase the likelihood that new data are reliable” (p. 53). The Cronbach alpha coefficients obtained from the collected data demonstrate high reliability, with values falling within the range of 0.75 to 0.94. This is in line with Cohen et al. (Citation2017), who stated as follows:

Cronbach’s alpha is a metric used to assess internal consistency, yielding a reliability coefficient ranging from 0 to 1. The interpretation typically considers scores above 0.90 as very highly reliable, 0.80–0.90 as highly reliable, 0.70–0.79 as reliable, 0.60–0.69 as minimally reliable, and scores below 0.60 as unacceptable. (pp. 638–641)
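For readers who wish to reproduce this kind of reliability check, the sketch below computes Cronbach's alpha with the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of summed scores), and maps the result to the bands quoted above. It is an illustrative sketch in Python rather than the SPSS procedure used in the study, and the ratings shown are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha; rows are respondents, columns are items of one construct."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("summed scores have zero variance; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def interpret(alpha: float) -> str:
    """Map alpha to the bands quoted from Cohen et al. in the text."""
    if alpha > 0.90:
        return "very highly reliable"
    if alpha >= 0.80:
        return "highly reliable"
    if alpha >= 0.70:
        return "reliable"
    if alpha >= 0.60:
        return "minimally reliable"
    return "unacceptable"


# Hypothetical ratings for a three-item construct (not study data).
sample = [[4, 5, 4], [3, 3, 3], [5, 5, 4], [2, 3, 2], [4, 4, 4]]
alpha = cronbach_alpha(sample)
print(round(alpha, 3), interpret(alpha))
```

Under these bands, the reported range of 0.75 to 0.94 spans the "reliable" to "very highly reliable" categories.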

Table 3. Questionnaire reliability statistics.

4.3. Data collection procedures

The questionnaire was randomly distributed to 200 life sciences teachers via email. Respondents were allocated three weeks to complete and return the questionnaire voluntarily. Upon receiving the completed questionnaires, the quantitative data were coded and captured in a Microsoft Excel spreadsheet. Subsequently, an initial data inspection (data cleaning) was conducted to identify missing values before the data were transferred to the SPSS program for analysis. Fourteen questionnaires contained incomplete information, rendering them unsuitable for contribution to this research. Ultimately, a total of 186 questionnaires were deemed usable for subsequent data analysis.
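The screening step can be illustrated with a short sketch. It assumes that a questionnaire counts as usable only when every required item has an answer, which is one reasonable reading of "incomplete information"; the item labels (`TK1`, `TK2`, `PK1`) and the three example records are hypothetical. The cleaning in the study itself was done in Excel and SPSS, not with this code.

```python
def split_complete(responses: list[dict], required_items: list[str]):
    """Separate questionnaires with every required item answered from incomplete ones."""
    usable, incomplete = [], []
    for response in responses:
        answered = all(response.get(item) is not None for item in required_items)
        (usable if answered else incomplete).append(response)
    return usable, incomplete


def usable_percentage(distributed: int, unusable: int) -> float:
    """Share of distributed questionnaires that remain usable, in percent."""
    return 100 * (distributed - unusable) / distributed


# Hypothetical returned questionnaires (item labels are illustrative).
returned = [
    {"TK1": 4, "TK2": 3, "PK1": 5},     # complete
    {"TK1": 2, "TK2": None, "PK1": 4},  # one item left blank
    {"TK1": 5, "PK1": 4},               # one item missing entirely
]
usable, incomplete = split_complete(returned, ["TK1", "TK2", "PK1"])
print(len(usable), len(incomplete))

# Figures from the text: 200 distributed, 14 excluded as incomplete.
print(usable_percentage(200, 14))
```

With the figures reported here, 186 of the 200 distributed questionnaires (93%) were retained for analysis.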

4.4. Data analysis

The data captured on the Excel spreadsheet were transferred to SPSS version 29 for analysis. We utilised descriptive statistical analysis, with a primary emphasis on central tendency, frequency distribution, and measures of association and dispersion, including standard deviation (SD), mean (M), and frequency (N), to examine respondents’ perceptions of the VL for rural school science teaching.
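As an illustration of the descriptive measures named above, the sketch below computes N, M, SD, minimum, and maximum for a list of scores, plus a frequency table with percentages. It uses Python's standard library rather than SPSS, and it assumes the sample standard deviation (n − 1 denominator). The domain scores are hypothetical; the gender counts are those reported in Section 4.1.4.

```python
from collections import Counter
from statistics import mean, stdev


def describe(scores: list[float]) -> dict[str, float]:
    """N, mean, sample SD, minimum and maximum of one variable."""
    return {
        "N": len(scores),
        "M": round(mean(scores), 2),
        "SD": round(stdev(scores), 2),
        "min": min(scores),
        "max": max(scores),
    }


def frequency_table(categories: list[str]) -> dict[str, tuple[int, float]]:
    """Count and percentage for each category of a nominal variable."""
    counts = Counter(categories)
    n = len(categories)
    return {label: (count, round(100 * count / n, 1)) for label, count in counts.items()}


# Hypothetical domain scores for five respondents (not study data).
print(describe([3.0, 4.0, 5.0, 4.0, 4.0]))

# Gender counts as reported in the text: 119 female and 67 male respondents.
print(frequency_table(["female"] * 119 + ["male"] * 67))
```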

4.5. Ethics approval

We obtained ethical clearance for the study from the General/Human Research Ethics Committee at the University of the Free State, with approval number UFS-HSD2022/1276/22. This committee rigorously reviewed our study protocol, ensuring compliance with ethical standards. Prior to their participation, we provided all participants with detailed information about the study, including its purpose, procedures, potential risks, and benefits. Participants were given ample opportunity to ask questions and seek clarification before providing their informed consent. Consent was documented through signed consent forms, and in cases where verbal consent was obtained, it was recorded appropriately. We affirm that our research was conducted in accordance with ethical principles, and the rights and dignity of all participants were upheld throughout the study process.

5. Results

To understand and explain the respondents’ perceptions of VLs, we utilised Fisher and Marshall’s (Citation2009) classification for interpreting the mean scores of the five-point Likert scale. Table 4 below shows the mean score classification.

Table 4. Classification of mean scores.

5.1. Respondents’ demographic information

This section delineates the key demographic characteristics of the respondents, encompassing their age, gender, teaching experience, and educational level. The random selection process in this study ensured equal opportunities for both male and female teachers, resulting in a predominant female representation (n = 119; 64%) (Table 2). This aligns with existing literature, exemplified by the work of Moosa and Bhana (Citation2023), who characterised the South African teaching profession as predominantly female or feminised. Furthermore, these findings mirror the Department of Basic Education (DBE, Citation2022) statistics, reporting a composition of 73.5% female teachers and 26.5% male teachers in South Africa.

Concerning age distribution, a substantial cohort (34.4%) fell within the 31–40-year age bracket, while a combined 61.8% were distributed across the 31–40- and 41–50-year age groups (Table 2). These results are in line with patterns observed in the existing literature and may reflect the current demographic landscape of the teaching profession in South Africa. Conversely, a minor segment of respondents (n = 3; 1.6%) were 61 years or older, potentially influenced by retirement patterns in South Africa. Despite potential age-related differences in technology perception, existing research (e.g. Moosa & Bhana, Citation2023) has not identified conclusive correlations between age and technology use in educational settings. Related to age is teaching experience, in which regard a substantial proportion of respondents (n = 68; 36.6%) held 5–10 years of experience, with the second largest group (n = 39; 21.0%) having 11–15 years (Table 2). A substantial majority of the respondents (83.9%) possessed over five years of experience. This may imply positive perceptions toward technology adoption due to heightened familiarity and exposure to professional development opportunities.

Regarding the level of education, the majority of the respondents (n = 97; 52.2%) held a Bachelor of Education degree, and a smaller percentage (n = 11; 5.9%) held a master’s degree, with no reported doctoral degrees. The category “other” (n = 13; 7.0%) accommodates respondents with qualifications beyond those explicitly provided, such as unqualified teachers. Notably, only 7.0% of the respondents can be classified as unqualified or underqualified, indicative of adherence to government recruitment policies over the past decade (DBE, 2022). This broad inclusion of teachers with diverse qualifications is rooted in the assumption that all teachers, regardless of their formal qualifications, contribute valuable perceptions pertinent to understanding the integration of VLs for teaching.
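The percentages quoted in this section can be checked against the reported counts. The sketch below is a minimal illustration, assuming that every percentage is taken over the full sample of N = 186 and rounded to one decimal place; the dictionary labels and the helper name `percentage` are ours and do not come from the study.

```python
# Counts and percentages as reported in the text; N = 186 respondents.
N = 186
reported = {
    "female": (119, 64.0),
    "aged 61 or older": (3, 1.6),
    "5-10 years of experience": (68, 36.6),
    "11-15 years of experience": (39, 21.0),
    "Bachelor of Education": (97, 52.2),
    "master's degree": (11, 5.9),
    "other qualification": (13, 7.0),
}


def percentage(count, total=N):
    """Share of the sample in percent, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Recompute each percentage from its count (hypothetical helper, see lead-in).
computed = {label: percentage(count) for label, (count, _) in reported.items()}

for label, (count, stated) in reported.items():
    print(f"{label}: n = {count}, computed {computed[label]}%, reported {stated}%")
```

Under that assumption, each recomputed value matches the figure given in the text.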

5.2. TPACK descriptive statistics

The results presented here derive from a descriptive statistical analysis of the surveyed teachers’ questionnaire responses. Considering teachers’ TPACK was crucial because, although teachers might have positive perceptions of VLs, meaningful adoption of such a technology may be hindered by a lack of the knowledge and skills needed to teach with it. Table 5 below presents the descriptive statistics for each TPACK domain.

Table 5. Descriptive statistics: TPACK domains.

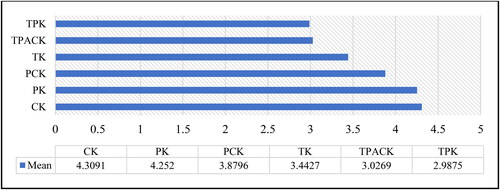

Table 5 shows the mean, standard deviation, minimum, and maximum values for the six TPACK domains, grouped into two categories – three domains associated with non-technology constructs and three associated with technology constructs. The non-technology constructs displayed higher mean scores, all exceeding 3.8: CK (M = 4.31; SD = 0.46), PK (M = 4.25; SD = 0.38), and PCK (M = 3.88; SD = 0.68). The standard deviations for these constructs were also lower, signalling stronger agreement among respondents that they possessed a solid grasp of the subject content and teaching strategies. In contrast, the technology-related TPACK domains showed lower mean values: TK (M = 3.44; SD = 1.00), TPK (M = 2.99; SD = 1.04), and TPACK (M = 3.03; SD = 0.96). This observation implies that life sciences teachers may need to enhance their TK and technology-related pedagogical skills. The accompanying figure provides a graphical representation of the descriptive statistics of the TPACK domains, offering a clearer visualisation and facilitating comparison of the different TPACK components.
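The contrast between the two groups of domains can be summarised with a simple calculation on the means reported in Table 5. The sketch below is illustrative only: the unweighted average of domain means per group and the resulting gap are our own descriptive summary and are not statistics reported by the study.

```python
from statistics import mean

# Domain means as reported in Table 5 of the text.
non_technology = {"CK": 4.31, "PK": 4.25, "PCK": 3.88}
technology = {"TK": 3.44, "TPK": 2.99, "TPACK": 3.03}

# Unweighted average of the three domain means in each group
# (an illustrative summary, not a statistic from the study).
non_tech_avg = mean(list(non_technology.values()))
tech_avg = mean(list(technology.values()))
gap = non_tech_avg - tech_avg

print(f"Non-technology domains: {non_tech_avg:.2f}")
print(f"Technology domains:     {tech_avg:.2f}")
print(f"Gap between groups:     {gap:.2f}")

# Even the lowest non-technology mean lies above the highest technology mean.
print(min(non_technology.values()) > max(technology.values()))
```

On these figures the groups differ by roughly one scale point, and the lowest non-technology mean (PCK) still lies above the highest technology mean (TK).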

Interestingly, the TK among the respondents was substantial (M = 3.44; SD = 1.00). However, the mean decreased when TK was combined with the other two knowledge constructs to form TPK (M = 2.99; SD = 1.04) and TPACK (M = 3.03; SD = 0.96). These findings suggest that life sciences teachers are more proficient in their PK and CK than in other knowledge domains. This underscores the significance of subject content expertise and its effective delivery to learners, and highlights a lower confidence level in their TK. One potential explanation for their high level of CK and PK may stem from the South African education system’s emphasis on subject specialisation. Teachers in South Africa are required to hold a degree in their subjects, which provides them with comprehensive CK. Moreover, continuous professional teacher development programmes prioritise the CK and PK domains, which may have contributed to life sciences teachers’ advanced knowledge in these areas. In addition, the higher TK mean score compared to other technology-related domains highlights the importance of TK in particular and its impact on life sciences teachers’ use of VLs. As discussed earlier, the teachers may benefit from professional development programmes that focus on developing their TK and skills to keep up with the continuous introduction of new technology tools in education. To fully comprehend the respondents’ knowledge, we further analysed the individual TPACK components, as presented below.

5.2.1. Technological knowledge

The questionnaire required the respondents to respond to seven items for TK. These statements and the relevant statistics are shown in Table 6.

Table 6. Descriptive statistics: Technological knowledge (N = 186).

Based on the mean ratings of all the individual items presented in Table 6, the respondents exhibited high levels of TK. Notably, the statement with the highest mean rating related to the respondents’ ability to learn technology easily (M = 3.82; SD = 1.03), implying that they possess a certain level of adaptability and willingness to explore new technological tools (in this case, VLs) in their classrooms. Furthermore, the survey findings suggest that the respondents are comfortable using various technologies in their teaching, as reflected in the similar scores obtained for the items “I frequently play around with technologies” (M = 3.39; SD = 1.19), “I know which technologies would work best for my life sciences teaching” (M = 3.31; SD = 1.13), and “I can teach with the use of different technologies” (M = 3.31; SD = 1.13). These results suggest that the respondents are self-assured in their technological capabilities and well equipped to use educational technologies in their pedagogical approaches.

However, the questionnaire findings also show that the item concerning respondents’ knowledge of a lot of different technologies registered the lowest mean value (M = 3.13; SD = 1.24), suggesting that some respondents may lack confidence in their familiarity with various technological tools. The high standard deviation for this item (1.24) indicates considerable variance in respondents’ levels of TK, with some exhibiting greater proficiency than others. This finding implies that teachers who frequently employ technology may still need to acquire the necessary skills to remain abreast of emerging technology tools and their uses in teaching and learning spaces. Overall, the statistical analysis shows that respondents exhibited a high level of TK and can effectively work with various technologies. The overall mean rating (M = 3.44; SD = 1.00) indicates that the respondents are well versed in using technological tools. These results are encouraging and suggest that these respondents are well prepared to meet the technological demands of contemporary teaching practices, such as those involving VLs.

5.2.2. Content knowledge

The questionnaire required the respondents to respond to six statements concerning their CK. Table 7 below displays the statistics in this regard.

Table 7. Descriptive statistics: Content knowledge (N = 186).

Table 7 above shows how the respondents rated their CK. The highest mean was recorded for the statement “I am familiar with the life sciences content that CAPS prescribes” (M = 4.46; SD = 0.53), showing that the respondents have a strong familiarity with the subject matter outlined in the CAPS. This was followed by the item on having sufficient knowledge to answer most learners’ life sciences questions (M = 4.38; SD = 0.57), suggesting that the respondents felt confident in their ability to address learners’ queries related to the subject. In contrast, the statement with the lowest mean rating was “I have various ways and strategies of developing my own life sciences understanding” (M = 4.17; SD = 0.52). The low standard deviation for this item (0.52) indicates that the respondents were in close agreement regarding their level of proficiency in developing their understanding of life sciences content through various methods and strategies.

Overall, the findings suggest that the respondents regarded themselves as highly knowledgeable in their subject content, as evidenced by the individual items’ mean scores exceeding 4. The standard deviation values, ranging from 0.52 to 0.64, also suggest high agreement levels among the respondents. The CK construct had a very high mean rating (M = 4.31) and a low standard deviation (SD = 0.46), signifying that the respondents’ CK level was high and that most respondents responded similarly. These findings are not surprising, given that subject specialisation is a prerequisite for life sciences teachers to graduate from their initial teacher training courses. However, it is essential to recognise that there may be a mismatch between the respondents’ self-reported CK levels and their actual competency in the subject content. Future studies may explore teachers’ actual CK using objective measures to afford a more comprehensive understanding of their competencies.
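The CK construct’s standard deviation (0.46) is smaller than that of any individual item (0.52 to 0.64). One arithmetic reason this can happen is sketched below, under the assumption that a construct score is each respondent’s average across the construct’s items; the text does not state how construct scores were computed, and the responses are toy values invented purely for illustration, not study data.

```python
from statistics import mean, stdev

# Toy 5-point Likert responses invented for illustration only (NOT study data).
# Rows are respondents; columns are the items of a single construct.
responses = [
    [5, 4, 4],
    [4, 5, 4],
    [4, 4, 5],
    [5, 5, 4],
]

# Sample standard deviation of each item (column) across respondents.
item_sds = [stdev(column) for column in zip(*responses)]

# Assumed construct score: each respondent's mean across the items.
construct_scores = [mean(row) for row in responses]
construct_sd = stdev(construct_scores)

print("Item SDs:    ", [round(sd, 2) for sd in item_sds])
print("Construct SD:", round(construct_sd, 2))
```

Because item-level differences partly cancel when averaged within a respondent, the construct-level spread in this toy example is smaller than the spread of every single item.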

5.2.3. Pedagogical knowledge

The questionnaire required respondents to respond to eight statements concerning their PK, with the resulting statistics displayed in Table 8.

Table 8. Descriptive statistics: Pedagogical knowledge (N = 186).

Table 8 provides insight into the respondents’ PK domain. As seen from the table, the mean ratings for all the PK statements are above 4. This finding suggests that the respondents held their aptitude for directing learners in adopting suitable learning approaches and supervising their learning progress in high regard. Notably, the item “I know how to organise and maintain class management and control” recorded the highest mean rating (M = 4.41; SD = 0.58), emphasising the importance of effective class management and control as a basis for successful teaching. Additionally, the respondents strongly perceived that they are familiar with the prescribed life sciences textbooks and other learning resources used in most South African classrooms (M = 4.29; SD = 0.59). The lowest mean value on the PK scale was for the item on knowing how to assess learners’ performance in life sciences, including knowledge of different cognitive levels, degrees of question difficulty, and the concept of a “reasonable learner” (M = 4.10; SD = 0.56). Even so, this value shows that the respondents believed that they could assess learners’ performance in life sciences. In sum, the respondents reported very high PK levels, with an overall mean value of 4.25, along with a very high agreement level for all the items (SD = 0.38). Furthermore, the high mean scores for PK indicate that the respondents believed they could stretch their learners’ thinking by crafting challenging activities.

5.2.4. Pedagogical content knowledge

The questionnaire required the respondents to respond to five PCK items, with Table 9 below displaying the statistics.

Table 9. Descriptive statistics: Pedagogical content knowledge (N = 186).

The descriptive statistics in Table 9 show the mean ratings and standard deviation values of the participating life sciences teachers’ PCK levels. A closer examination of the individual item mean ratings demonstrates that all the mean values exceeded 3.5, revealing high levels of teacher PCK. Specifically, the statement with the highest mean score was “I have knowledge to teach life sciences using audios and videos, e.g., from YouTube” (M = 4.15; SD = 0.75), highlighting the importance of multimedia resources in life sciences teaching from the respondents’ perspectives. This was followed closely by three items that had similar means: “I know which life sciences concepts/topics to teach using simulations” (M = 3.88; SD = 0.81), “I know how to teach life sciences using digital boards, e.g., data projectors and smartboards” (M = 3.86; SD = 1.00), and “I know how to teach specific life sciences concepts/topics using specific virtual lab experiments” (M = 3.86; SD = 0.96). All three of these items had mean scores above 3.8, indicating very high self-reported teacher PCK levels.

On the other hand, the lowest mean rating was recorded for the statement “I have the knowledge to teach life sciences using virtual labs on mobile devices such as cell phones, tablets, and iPads” (M = 3.65; SD = 1.04). Generally, the overall PCK mean score was high (M = 3.88; SD = 0.68), suggesting that the teachers in this study possessed the requisite knowledge and skills to connect PK and CK to provide their learners with diverse learning opportunities. The results imply that life sciences teachers in rural schools know various teaching strategies and resources to improve their learners’ learning outcomes in life sciences. However, there is still room for improvement in some areas of teacher knowledge, such as incorporating VLs in teaching.

5.2.5. Technological pedagogical knowledge

The questionnaire required respondents to respond to three TPK items, and Table 10 below shows the statistics obtained.

Table 10. Descriptive statistics: Technological pedagogical knowledge (N = 186).

The statistical analysis results of the six TPACK constructs (Table 5) revealed that the lowest mean score (M = 2.99; SD = 1.04) is attributed to TPK. This result indicates that respondents responded neutrally to the TPK items, which is evidence of their poor TPK levels in the use of the VL as a pedagogical tool. To delve deeper into the TPK construct, Table 10 above displays the results of the three individual TPK items. The mean values for the individual items ranged from 2.79 to 3.10, signifying that respondents’ confidence in integrating VLs into their pedagogy was low to moderate. In particular, the item “I can choose technologies that enhance learners’ understanding of a lesson” (M = 3.10; SD = 1.15) recorded the highest mean value. Further scrutiny of the individual TPK statements shows that the lowest mean rating (M = 2.79; SD = 1.13) was observed for the item “I always think critically about how to use virtual labs in my life sciences class”. This suggests that most respondents were either unsure or lacked confidence in their ability to think critically about using VLs in their teaching. Moreover, the high standard deviation (1.13) suggests considerable variation in the responses. The overall TPK mean score (M = 2.99; SD = 1.04) signifies low levels regarding respondents’ ability to choose which aspects of VL experiments can enhance their teaching. These results are not unexpected given the novelty of VLs, where much is yet to be known about their potential to improve teaching. It can thus be expected that, with more widespread adoption and use of VLs, most life sciences teachers in rural schools will develop the higher TPK levels required to use this tool effectively for teaching.

5.2.6. Technological pedagogical content knowledge

The questionnaire required the respondents to respond to three TPACK items, with the statistics obtained from the analysis shown in Table 11.

Table 11. Descriptive statistics: Technological pedagogical content knowledge (N = 186).

The statistical analysis revealed an overall mean rating for TPACK of 3.03 (SD = 0.96) and that the mean values for all the TPACK statements exceeded 3.0, revealing moderate to high TPACK levels. This finding shows that the respondents were moderately confident in their competency to teach life sciences concepts/topics that appropriately combine the content with different teaching strategies using science experiments in a virtual lab (M = 3.04; SD = 0.99). The same applies to the respondents’ belief in their ability to teach life sciences concepts/topics that appropriately combine the content with technology skills using science experiments in a virtual lab (M = 3.03; SD = 1.00). Moreover, the survey findings indicate that the respondents further concurred that they could adapt and use particular experiments in virtual labs to meet their different learners’ learning capabilities (M = 3.01; SD = 1.04). The standard deviation values for the TPACK items are all close to 1.0 (0.99 to 1.04), suggesting more variation in the responses than in the non-technology domains. Notably, when compared with other technology-related domains, the overall mean for the TPACK domain (M = 3.03; SD = 0.96) slightly exceeded that for TPK (M = 2.99; SD = 1.04), but it was lower than the TK mean score (M = 3.44; SD = 1.00). This is an interesting finding, as it suggests that the teachers in this research believed that they could integrate VLs in their classrooms despite reporting lower competence in their ability to use them.

6. Discussion

This study examined teachers’ self-reported levels of TPACK and its application in VL integration across six TPACK constructs. These constructs are grouped into two categories: non-technology-related domains (PCK, PK, and CK) and technology-related domains (TPACK, TPK, and TK). Notably, our findings (see Table 5) reveal higher mean scores for the non-technology domains, indicating a strong grasp of subject content and pedagogy among the respondents. However, the technology-related TPACK domains exhibited lower mean scores. These results contrast with previous research by Kaplon-Schilis and Lyublinskaya (2020), Valtonen et al. (2020), and Luo et al. (2023), which found lower levels of PCK, PK, and CK in their participants, whereas our study uncovered higher levels of PCK, PK, and CK among the participating teachers. One possible explanation lies in our focus on in-service teachers, who, through teaching experience, may have had the opportunity to develop these foundational knowledge components.

This study aligns with earlier research by Koehler and Mishra (2009), Cox and Graham (2009), and Thohir et al. (2022) affirming that practicing teachers generally exhibit higher levels of PK, CK, and PCK compared to TK, TPK, and TPACK. This observation raises questions about when teachers in rural schools will attain advanced proficiency in technology-related TPACK domains. The higher mean scores for non-technology domains compared to technology-related domains suggest that life sciences teachers have a better command of subject content and teaching approaches than of technology. This aligns with the teacher training system of South Africa, where qualified teachers must have a degree in their specific subject. Continuous professional development programmes prioritise workshops addressing content gaps and enhancing pedagogical skills, further strengthening teachers’ proficiency in CK, PK, and PCK.

Moreover, in line with Luo et al.’s (2023) findings, our study identified TK as the most prominent domain among the three technology-related components. While life sciences teachers may possess a robust understanding of TK on its own, the integration of TK with CK and PK presents challenges, as evidenced by the lower mean scores for TPK and TPACK (see Table 5). This finding aligns with earlier studies by Jang and Tsai (2013), Choi and Paik (2021), and Ong and Annamalai (2023), supporting Koehler and Mishra’s (2006) observation that teachers often struggle to comprehend the dynamic interplay and transactional relationships between CK, PK, and TK. It is essential to recognise the constant evolution of technology, which requires ongoing updates and adaptations. The dynamic nature of technology may contribute to the delay in developing technology-related knowledge domains compared to more stable domains such as CK and PK. Therefore, the swift changes in technology and its integration into teaching may explain the comparatively lower self-reported knowledge levels in technology-related domains among life sciences teachers in rural schools. Recognising this dynamic context underscores the importance of continuous professional development to keep teachers abreast of technological advancements and their effective integration into teaching practices.

7. Conclusion

The early stages of VL implementation in many Global South countries, including South Africa, raise the need for a comprehensive understanding of teachers’ competence in utilising VLs for teaching, given their anticipated widespread adoption. A lack of understanding of this competency could lead to potential risks, such as abandonment or underutilisation after deployment in schools. This paper examined teachers’ self-reported TPACK concerning the integration of VLs into rural school teaching. The findings uncovered an overall low level of TPACK among teachers in rural schools, signalling the necessity for enhanced competencies to integrate VLs effectively. The paper recommends tailored professional development and increased VL adoption to address this challenge. While there is a notable mastery of non-technology domains, especially in CK, PK, and PCK, the study highlighted a significant disparity in technology-related TPACK domains, with lower competency levels observed in TK, TPK, and overall TPACK. The research breaks new ground by investigating the specific context of rural and poorly resourced schools, an under-researched area in South Africa, laying the foundation for valuable insights crucial to understanding teachers’ TPACK and facilitating the successful and widespread adoption of VLs in these settings.

8. Implications

The integration of VLs in teaching represents an emerging research area in South African science education. This study serves as one of the initial steps in investigating teachers’ TPACK for successful VL adoption in rural schools, establishing the foundation for this field of study. The insights from rural school science teachers’ TPACK levels provide guidance for designing targeted interventions and support systems. Policymakers can utilise these insights to develop initiatives aimed at enhancing teachers’ knowledge to effectively leverage technology in teaching, thereby ensuring equitable access to quality education in remote areas. The implications extend globally to the discourse on educational technology integration in diverse learning environments, including rural and marginalised regions. South African rural schools offer valuable lessons for teachers, researchers, and policymakers worldwide, fostering collaboration to address challenges and optimise the benefits of virtual learning. As technology evolves, the research findings contribute to a dynamic framework for adapting and refining virtual learning practices, not only in South Africa but also in various other socio-economic and cultural contexts. Ultimately, this study enriches the ongoing dialogue on the intersection of technology and education, advocating for a more inclusive and responsive approach to advancing learning opportunities for all.

9. Limitations

Every research study has inherent strengths and limitations, and the current study is no exception. This paper explored the perceptions of rural science teachers regarding VLs. However, certain limitations need acknowledgement. The study faced constraints related to the data collection timeframe, as it was conducted during a demanding period for teachers. This timeframe coincided with the pressure to cover the curriculum for year-end examinations, potentially impacting the thoroughness of participating teachers’ responses to questionnaires.

10. Future studies

This research anticipates the imminent widespread adoption of VLs by countries in the Global South. With the expected roll-out in rural schools and similar contexts, the study recommends longitudinal, scalability, and sustainability investigations to assess teachers’ TPACK development over time. Given the evolution of technology adoption and teaching practices, conducting longitudinal studies spanning an extended period would yield valuable insights into the long-term impacts and challenges associated with VL integration in rural school classrooms. These studies could focus on interventions such as professional development concerning VL integration in resource-poor rural schooling contexts.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Brian Shambare

Brian Shambare is a Lecturer in the Department of Mathematics, Natural Sciences and Technology Education, University of the Free State. His research primarily focuses on integrating innovative technologies into science education, with a special interest in addressing the needs of marginalised communities, such as those in rural regions.

Thuthukile Jita

Thuthukile Jita is an associate professor in the Department of Curriculum Studies and Higher Education and a program director for Work Integrated Learning at the University of the Free State (UFS). Her research interests include curriculum studies, pre-service teacher education, the use of Information and Communication Technologies (ICT) in subject teaching, E-Learning, Work Integrated Learning (WIL), and science education.

References

- Aggarwal, R., & Ranganathan, P. (2019). Study designs: Part 2–descriptive studies. Perspectives in Clinical Research, 10(1), 34–36. https://doi.org/10.4103/picr.PICR_154_18

- Akyuz, D. (2018). Measuring technological pedagogical content knowledge (TPACK) through performance assessment. Computers & Education, 125, 212–225. https://doi.org/10.1016/j.compedu.2018.06.012

- Alam, A., & Mohanty, A. (2023). Design, development, and implementation of software engineering virtual laboratory: A boon to computer science and engineering (CSE) education during Covid-19 pandemic. In Proceedings of third international conference on sustainable expert systems: ICSES 2022 (pp. 1–20). Springer Nature Singapore. https://doi.org/10.1007/978-981-19-7874-6_1

- Aliyu, F., & Talib, C. A. (2019). Virtual chemistry laboratory: A panacea to problems of conducting chemistry practical at science secondary schools in Nigeria. International Journal of Engineering and Advanced Technology, 8(5c), 544–549. https://doi.org/10.35940/ijeat.E1079.0585C19

- Aslan Akyol, Z., & Yucel, M. (2024). Remote accessible/fully controllable fiber optic systems laboratory design and implementation. Computer Applications in Engineering Education, 32(1), e22694. https://doi.org/10.1002/cae.22694

- Bada, A. A., & Jita, L. C. (2021). E-learning facilities for teaching secondary school physics: Awareness, availability and utilisation. Research in Social Sciences and Technology, 6(3), 227–241. https://doi.org/10.46303/ressat.2021.40

- Benbelkacem, G., Elzawawy, A., & Rahemi, H. (2024). The development and implementation of a UAS certificate program at Vaughn College of Aeronautics and Technology. AIAA SCITECH 2024 Forum, 8, Orlando, FL. https://doi.org/10.2514/6.2024-0919

- Bingimlas, K. (2018). Investigating the level of teachers’ knowledge in technology, pedagogy, and content (TPACK) in Saudi Arabia. South African Journal of Education, 38(3), 1–12. https://doi.org/10.15700/saje.v38n3a1496

- Choi, K., & Paik, S. H. (2021). Development of pre-service teachers’ TPACK evaluation framework and analysis of hindrance factors of TPACK development. Journal of the Korean Association for Science Education, 41(4), 325–338. https://doi.org/10.14697/jkase.2021.41.4.325

- Cliff, N. (1984). An improved internal consistency reliability estimate. Journal of Educational Statistics, 9(2), 151–161. https://doi.org/10.3102/10769986009002151

- Cohen, L., Manion, L., & Morrison, K. (2017). Observation. In L. Cohen, L. Manion & K. Morrison (Eds.), Research methods in education (pp. 542–562). Routledge.

- Cox, S., & Graham, C. R. (2009). Using an elaborated model of the TPACK framework to analyse and depict teacher knowledge. TechTrends, 53(5), 60–69. https://doi.org/10.1007/s11528-009-0327-1

- Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334. https://doi.org/10.1007/BF02310555

- DBE. (2022). National policy on quintile schools. DBE. https://www.gov.za/sites/default/files/gcis_document/202212/47756gon2874.pdf

- Dewey, J., & Bentley, A. F. (1949). Knowing and the known. Beacon Press. https://books.google.com/books/about/Knowing_and_the_Known.html?id=u6hs208TpWwC

- Durdu, L., & Dag, F. (2017). Pre-service teachers’ TPACK development and conceptions through a TPACK-based course. Australian Journal of Teacher Education, 42(11), 150–171. https://doi.org/10.14221/ajte.2017v42n11.10

- Engel, K. T., Davidson, J., Jolley, A., Kennedy, B., & Nichols, A. R. (2023). Development of a virtual microscope with integrated feedback for blended geology labs. Journal of Geoscience Education, 71(1), 1–15. https://doi.org/10.1080/10899995.2023.2202285

- Evens, M., Elen, J., Larmuseau, C., & Depaepe, F. (2018). Promoting the development of teacher professional knowledge: Integrating content and pedagogy in teacher education. Teaching and Teacher Education, 75, 244–258. https://doi.org/10.1016/j.tate.2018.07.001

- Fisher, M. J., & Marshall, A. P. (2009). Understanding descriptive statistics. Australian Critical Care, 22(2), 93–97. https://doi.org/10.1016/j.aucc.2008.11.003

- García-Zubía, J. (2021). Remote laboratories: Empowering STEM education with technology. World Scientific. https://doi.org/10.1142/9781786349439_0008

- Ghazali, A. K. A., Aziz, N. A. A., Aziz, K., & Tse Kian, N. (2024). The usage of virtual reality in engineering education. Cogent Education, 11(1), 2319441. https://doi.org/10.1080/2331186X.2024.2319441

- Goldhaber, D., Strunk, K. O., Brown, N., Naito, N., & Wolff, M. (2020). Teacher staffing challenges in California: Examining the uniqueness of rural school districts. AERA Open, 6(3), 233285842095183. https://doi.org/10.1177/2332858420951833

- Gray, M. L., Johnson, C. R., & Gilley, B. J. (Eds.). (2016). Queering the countryside: New frontiers in rural queer studies (Vol. 11). NYU Press. https://0-muse.jhu.edu.wam.seals.ac.za/pub/193/monograph/book/76201ER

- Hajjar, S. T. (2018). Statistical analysis: Internal-consistency reliability and construct validity. International Journal of Quantitative and Qualitative Research Methods, 6(1), 27–38. https://doi.org/10.15091/ijqqrm.2018.v6i1.617

- Handayani, S., Hussin, M., & Norman, M. (2023). Technological pedagogical content knowledge (TPACK) model in teaching: A review and bibliometric analysis. Pegem Journal of Education and Instruction, 13(3), 176–190. https://doi.org/10.47750/pegegog.13.03.19

- Harris, J. B., & Hofer, M. J. (2011). Technological pedagogical content knowledge (TPACK) in action: A descriptive study of secondary teachers’ curriculum-based, technology-related instructional planning. Journal of Research on Technology in Education, 43(3), 211–229. https://doi.org/10.1080/15391523.2011.10782570

- Hirose, M., & Creswell, J. W. (2023). Applying core quality criteria of mixed methods research to an empirical study. Journal of Mixed Methods Research, 17(1), 12–28. https://doi.org/10.1177/15586898221086346

- Hlalele, D., & Mosia, M. (2020). Teachers’ sense of community in rural learning ecologies. Alternation – Interdisciplinary Journal for the Study of the Arts and Humanities in Southern Africa, 27(2), 101–124. https://doi.org/10.29086/2519-5476/2020/v27n2a6

- Holbrook, J., Chowdhury, T. B. M., & Rannikmäe, M. (2022). A future trend for science education: A constructivism-humanism approach to trans-contextualisation. Education Sciences, 12(6), 413. https://doi.org/10.3390/educsci12060413

- Ingersoll, R. M., & Tran, H. (2023). Teacher shortages and turnover in rural schools in the US: An organisational analysis. Educational Administration Quarterly, 59(2), 396–431. https://doi.org/10.1177/0013161X231159922

- Jang, S. J., & Tsai, M. F. (2013). Exploring the TPACK of Taiwanese secondary school science teachers using a new contextualised TPACK model. Australasian Journal of Educational Technology, 29(4), 566–580. https://doi.org/10.14742/ajet.282

- Jantjies, M., & Matome, T. J. (2021). Student perceptions of virtual reality in higher education. In D. Ifenthaler, D. G. Sampson, & P. Isaías (Eds.), Balancing the tension between digital technologies and learning sciences (pp. 167–181). Springer. https://doi.org/10.1007/978-3-030-65657-7_10

- Kapilan, N., Vidhya, P., & Gao, X. Z. (2021). Virtual laboratory: A boon to the mechanical engineering education during Covid-19 pandemic. Higher Education for the Future, 8(1), 31–46. https://doi.org/10.1177/2347631120970757

- Kaplon-Schilis, A., & Lyublinskaya, I. (2020). Analysis of relationship between five domains of TPACK framework: TK, PK, CK math, CK science, and TPACK of pre-service special education teachers. Technology, Knowledge and Learning, 25(1), 25–43. https://doi.org/10.1007/s10758-019-09404-x

- Kartal, B., & Çınar, C. (2022). Pre-service mathematics teachers’ TPACK development when they are teaching polygons with Geogebra. International Journal of Mathematical Education in Science and Technology, 55(5), 1171–1203. https://doi.org/10.1080/0020739X.2022.2052197

- Koehler, M. J., & Mishra, P. (2006). What happens when teachers design educational technology? The development of technological pedagogical content knowledge. Journal of Educational Computing Research, 32(2), 131–152. https://doi.org/10.2190/0EW7-01WB-BKHL-QDYV

- Koehler, M., & Mishra, P. (2009). What is technological pedagogical content knowledge (TPACK)? Contemporary Issues in Technology and Teacher Education, 9(1), 60–70. https://doi.org/10.1177/0963297X0900900106

- Kokela, S. J., & Malatji, K. S. (2023). An evaluation of the capacity of South African schools to offer multi-grade teaching: A case study of schools in the Sekhukhune South District, www.emporepublishers.co.za. https://doi.org/10.4102/emr.v22i1.2533

- Leahy, S., & Mishra, P. (2023). TPACK and the Cambrian explosion of AI. Society for Information Technology & Teacher Education International Conference (pp. 2465–2469). Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/primary/p/222145/

- Lee, S. G., & Lee, J. H. (2019). Student-centered discrete mathematics class with cyber lab. Communications of Mathematical Education, 33(1), 1–19. https://doi.org/10.7468/jksmee.2019.33.1.1

- Luo, W., Berson, I. R., Berson, M. J., & Park, S. (2023). An exploration of early childhood teachers’ technology, pedagogy, and content knowledge (TPACK) in mainland China. Early Education and Development, 34(4), 963–978. https://doi.org/10.1080/10409289.2022.2079887

- Mahling, M., Wunderlich, R., Steiner, D., Gorgati, E., Festl-Wietek, T., & Herrmann-Werner, A. (2023). Virtual reality for emergency medicine training in medical school: Prospective, large-cohort implementation study. Journal of Medical Internet Research, 25, e43649. https://doi.org/10.2196/43649

- Mishra, P. (2019). Considering contextual knowledge: The TPACK diagram gets an upgrade. Journal of Digital Learning in Teacher Education, 35(2), 76–78. https://doi.org/10.1080/21532974.2019.1588611

- Mishra, P., Blankenship, R., Mourlam, D., Berson, I., Berson, M., Lee, C. Y., Peng, L. W., Jin, Y., Lyublinskaya, I., Du, X., & Warr, M. (2022). Reimagining practical applications of the TPACK framework in the new digital era. Society for Information Technology & Teacher Education International Conference (pp. 2198–2203). Association for the Advancement of Computing in Education (AACE). https://doi.org/10.1145/3591512.3592457

- Moosa, S., & Bhana, D. (2023). Men who teach early childhood education: Mediating masculinity, authority and sexuality. Teaching and Teacher Education, 122, Article 103959. https://doi.org/10.1016/j.tate.2022.103959

- Nemes, G., & Tomay, K. (2022). Split realities: Dilemmas for rural/gastro tourism in territorial development. Regional Studies, 56(1), 1–10. https://doi.org/10.1080/00343404.2022.2084059

- Niess, M. L., & Gillow-Wiles, H. (2017). Expanding teachers’ technological pedagogical reasoning with a systems pedagogical approach. Australasian Journal of Educational Technology, 33(3), 3473. https://doi.org/10.14742/ajet.3473

- Okono, E., Wangila, E., & Chebet, A. (2023). Effects of virtual laboratory-based instruction on the frequency of use of experiment as a pedagogical approach in teaching and learning of physics in secondary schools in Kenya. African Journal of Empirical Research, 4(2), 1143–1151. https://doi.org/10.51867/ajernet.4.2.116

- Ong, Q. K. L., & Annamalai, N. (2023). Technological pedagogical content knowledge for twenty-first century learning skills: The game changer for teachers of Industrial Revolution 5.0. Education and Information Technologies, 29(2), 1939–1980. https://doi.org/10.1007/s10639-023-11852-z

- Paulsen, J., & BrckaLorenz, A. (2017). Internal consistency. Faculty Survey of Student Engagement. https://hdl.handle.net/2022/24498

- Pellas, N., Dengel, A., & Christopoulos, A. (2020). A scoping review of immersive virtual reality in STEM education. IEEE Transactions on Learning Technologies, 13(4), 748–761. https://doi.org/10.1109/TLT.2020.3019405

- Reginald, G. (2023). Teaching and learning using virtual labs: Investigating the effects on students’ self-regulation. Cogent Education, 10(1), 2172308. https://doi.org/10.1080/2331186X.2023.2172308

- Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123–149. https://doi.org/10.1080/15391523.2009.10782544

- Setiawan, H., Phillipson, S., Isnaeni, W., & Sudarmin. (2019). Current trends in TPACK research in science education: A systematic review of literature from 2011 to 2017. Journal of Physics: Conference Series, 1317(1), 012213. https://doi.org/10.1088/1742-6596/1317/1/012213

- Shambare, B., Simuja, C., & Olayinka, T. A. (2022). Understanding the enabling and constraining factors in using the virtual lab: Teaching science in rural schools in South Africa. International Journal of Information and Communication Technology Education, 18(1), 1–15. https://doi.org/10.4018/IJICTE.307110

- Shulman, L. (1987). Knowledge and teaching: Foundations of the new reform. Harvard Educational Review, 57(1), 1–23. https://doi.org/10.17763/haer.57.1.j463w79r56455411

- Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14. https://doi.org/10.3102/0013189X015002004

- Shulman, L. S. (2015). PCK: Its genesis and exodus. In A. Berry, P. Friedrichsen, & J. Loughran (Eds.), Re-examining pedagogical content knowledge in science education (pp. 13–23). Routledge. https://doi.org/10.4324/9781315735665.1

- Solomon, Z., Ajayi, N., Raghavjee, R., & Ndayizigamiye, P. (2019). Lecturers’ perceptions of virtual reality as a teaching and learning platform. In S. Kabanda, H. Suleman, & S. Gruner (Eds.), ICT Education: 47th Annual Conference of the Southern African Computer Lecturers’ Association, SACLA 2018 (pp. 299–312). Springer International Publishing. https://doi.org/10.17159/02221753/2018/v67n1a11

- Tatli, Z., & Ayas, A. (2013). Effect of a virtual chemistry laboratory on students’ achievement. Journal of Educational Technology & Society, 16(1), 159–170. https://www.jstor.org/stable/jeductechsoci.16.1.159

- Thohir, M. A., Jumadi, J., & Warsono, W. (2022). Technological pedagogical content knowledge (TPACK) of pre-service science teachers: A Delphi study. Journal of Research on Technology in Education, 54(1), 127–142. https://doi.org/10.1080/15391523.2020.1814908

- Umesh, R., & Penn, M. (2019). The use of virtual learning environments and achievement in physics content tests. Proceedings of the International Conference on Education and New Development (pp. 493–497). https://doi.org/10.36315/2019v1end112

- Umukozi, A., Yadav, L. L., & Bugingo, J. B. (2023). Effectiveness of virtual labs on advanced level physics students’ performance in simple harmonic motion in Kayonza District, Rwanda. African Journal of Educational Studies in Mathematics and Sciences, 19(1), 85–96.

- Valtonen, T., Leppänen, U., Hyypiä, M., Sointu, E., Smits, A., & Tondeur, J. (2020). Fresh perspectives on TPACK: Pre-service teachers’ own appraisal of their challenging and confident TPACK areas. Education and Information Technologies, 25(4), 2823–2842. https://doi.org/10.1007/s10639-019-10092-4

- Vlachos, I., Stylos, G., & Kotsis, K. T. (2024). Primary school teachers’ attitudes towards experimentation in physics teaching. European Journal of Science and Mathematics Education, 12(1), 60–70. https://doi.org/10.30935/scimath/13830

- Zheng, L., Umar, M., Safi, A., & Khaddage-Soboh, N. (2024). The role of higher education and institutional quality for carbon neutrality: Evidence from emerging economies. Economic Analysis and Policy, 81, 406–417. https://doi.org/10.1016/j.eap.2023.12.008