Abstract

Emotional engagement is essential in human communication, and the meaning of emotions often involves multimodal relationships. Besides language, multimodality and emotions are semiotic systems that have increasingly attracted researchers' attention, especially regarding their contribution to the meaning-making process in communication. However, how emotions and multimodal components (hereafter multimodal emotions) complement each other in the meaning-making process has not been extensively researched. Hence, this research aims to identify research trends in studies of multimodality and emotions published from 2018 to 2022. The method used was a systematic literature review (SLR) to review, identify, evaluate and interpret all existing research on this topic. Data were obtained from the IEEE Xplore, Science Direct and Emerald databases, and the records were then screened following the PRISMA method. This analysis yielded comprehensive information on the multimodal components of emotions, data acquisition technologies, datasets, emotional focus and trends. This SLR demonstrated that emotion research involving multimodality has focused on the audio-visual mode, relied on machine-based tools (namely EEG and LSTM), drawn mostly on the IEMOCAP dataset and concentrated on positive and negative emotions, with anger receiving the most attention. The findings also showed the interconnectedness of the multimodal components of emotions. Based on these findings, this study suggests that multimodal emotion research should focus on identifying and investigating the meanings generated from emotions in order to support good communication. Lack of subject privacy and uncontrolled bias are further issues to consider in future research.

IMPACT STATEMENT

Communication is a meaning-making endeavor comprising language and various semiotic systems, including multimodality and emotion. No research has yet identified how emotion and multimodal components complement each other. This study aims to identify research trends on multimodality and emotion in journal articles published in 2018–2022, examining the multimodal components and datasets used to explore the meaning-making process and how emotions are comprehensively accessed. Using the PRISMA method, the data were analyzed to obtain comprehensive information on the multimodal components of emotions, data acquisition technologies, datasets, emotional focus and research trends. This SLR showed that emotion research involving multimodality has been dominated by the audio-visual mode, relied on machine-based tools (namely EEG and LSTM) and drawn mostly on the IEMOCAP dataset. In addition, the dominant emotional focus was on positive and negative emotions, with anger receiving the most attention.

1. Introduction

Emotions are enacted on the basis of each person's subjective experience, whereas multimodal emotions are patterns of human emotion in interaction that convey meaning in communication. The concept has been developed for about two decades, since it first appeared in the 1990s (Lim et al., 2022; Morton et al., 2020; Song et al., 2019). Studies of multimodal emotions initially belonged to psychology before expanding into various research themes. Over the last five years, multimodal emotion research has focused on recognizing emotions (Barrett et al., 2019; Keltner et al., 2019; Waterloo et al., 2018), identifying emotions (Davies et al., 2019; Lim et al., 2022; Swain et al., 2018) and experimenting with tools or technologies that can evoke emotions (Baig & Kavakli, 2019; Muszynski et al., 2021; Zhang et al., 2020).

The urgency of multimodal emotion research in communication rests on four points:
1. Effective communication: Multimodal emotions enable clearer and more effective message delivery. Combining facial expressions, tone of voice, body language and other elements can strengthen verbal messages and reduce misunderstandings.
2. Understanding emotional nuances: Multimodal emotions help us grasp emotional nuances that words alone might not convey. For instance, a hesitant tone of voice may indicate uncertainty even if the words sound positive.
3. Building relationships: Multimodal emotional components can foster trust and better connections between individuals. Showing interest and attention multimodally can make others feel comfortable and valued.
4. Enhancing social skills: The ability to comprehend and use multimodal emotions is crucial for improving social skills. By recognizing multimodal emotional components, we can interact with others more appropriately for each situation.

As the concept has developed, multimodal emotions have been researched in relation to communication in general: daily interaction between people, educational contexts between teachers and students, film between actors, business between sellers and buyers, and companies between employees and customers or between superiors and subordinates. This coverage shows that multimodal emotions are a resource for making meaning and interpreting the messages conveyed in communication (Fröhlich et al., 2019; Löffler et al., 2018; Mills & Unsworth, 2018). For example, research by Davies et al. (2019), Chou and Budenz (2020), Men and Yue (2019), Strandberg et al. (2020), Wirz (2018) and Amini et al. (2018) shows the importance of emotional meaning in communication. Based on the preceding explanation and literature review, no prior research has focused on the meaning-making process involving multimodal emotions in communication; existing studies have concentrated on emotion recognition, emotion identification and the development of tools to elicit emotions. This research therefore aims to identify common components of multimodal emotions in order to formulate more in-depth and comprehensive directions for future research, particularly on emotional meaning.

The increasingly broad context of communication calls for a literature review of how meaning is made in communication that involves multimodal emotions. Multimodal research on human emotions is important because it helps establish appropriate interaction contexts for good and effective communication. To determine the methods and data collection techniques used in previous multimodal emotion studies, data were collected from publications exploring multimodal emotions from 2018 to 2022. This period was chosen because of the significant impact of the Industrial Revolution 4.0 (e.g. in Germany, the USA, China and Singapore) on the growing number of studies on multimodal emotions, reflected in the extensive use of technology such as datasets and applications for identifying the emotions that participants display. This research relies on a systematic literature review (SLR) to review and identify journal articles methodically, following predetermined stages or protocols in each phase (see Mohamed Shaffril et al., 2021; van Dinter et al., 2021; Williams et al., 2021), and to avoid subjectivity in the article identification procedure (van Dinter et al., 2021).

Because no literature review has yet examined the relationship between multimodality and emotions in terms of meaning formation, this SLR was intended to answer the following questions: 1) How are the different multimodal components analyzed and categorized? 2) What tools and technologies can be used to obtain multimodal emotion data? 3) What datasets are available to analyze multimodal emotions, and what are their main features? and 4) What emotional focus is available to obtain better multimodal accuracy?

2. Theoretical framework

2.1. Previous research

The study of multimodal emotions builds on human recognition of emotion through diverse modalities, such as sound, gaze, movement and other natural behaviors, to characterize individuals' sentiments expressed through various modes, such as voice, writing and actions. Multimodal components are the various elements humans employ to express and communicate their emotions. These elements extend beyond sound and encompass a broader range of nonverbal cues: 1) Gaze: the direction of one's gaze, eye contact and facial expressions can convey a wide spectrum of emotions, including happiness, sadness, anger and confusion. 2) Movement: body movements such as gestures, posture and facial expressions provide additional information about an individual's emotional state. 3) Natural behaviors: actions like smiling, frowning or crying serve as nonverbal ways to express emotions. 4) Voice: the tone, intonation and volume of one's voice can effectively convey emotions. 5) Actions: physical actions, such as touching someone or giving a gift, can also communicate emotions. Many studies have characterized such recognition in terms of expressions of emotions (Barrett et al., 2019; Keltner et al., 2019; Millar & Lee, 2021; Uusberg et al., 2019; Van Kleef, 2021; Waterloo et al., 2018), identification of emotions (Davies et al., 2019; Lim et al., 2022; Swain et al., 2018), exploration of emotions (Jeon et al., 2018; Li, Ch'ng, et al., 2018; Li, Song, et al., 2018; Nabi et al., 2018) and experimentation with tools to capture the emergence of emotions (Askool et al., 2019; Athavipach et al., 2019; Mehta et al., 2018). The search for the meanings of emotions evolves in tandem with the human need to identify emotional meanings, which tend to be abstract.
With recent scientific developments, emotions are studied not only in interactions between humans but also in human interactions with computers and robots, and vice versa (Kim et al., 2022; Tsiourti et al., 2019).

Research on multimodal emotions has developed rapidly in recent years. This development has also been driven by the Industrial Revolution 4.0, in which meanings can be interpreted in relation to human language and other semiotic systems with the assistance of digital tools. With computer assistance in particular (Clayton & Ogunbode, 2023; Jiang et al., 2020; Marechal et al., 2019), the analysis of meanings produced by human emotions and other multimodal resources can be supported by data-rendering tools that generate and cluster enormous amounts of data. In using the available data-rendering tools, this study leverages existing datasets to help search for data that were previously gathered manually (Marshall & Wallace, 2019). Many scholars have used similar approaches to derive findings on specific problems quickly while maintaining high data accuracy (Boehm et al., 2022; Sharma & Giannakos, 2020), for example in research identifying consumer emotions toward a company's employees and the emotional influence of leaders on their subordinates (Keller et al., 2020; Kumari et al., 2022; Wang & Xie, 2020; Winasis et al., 2021).

Numerous studies have combined several computer-based techniques for identifying emotions in order to keep study outcomes reliable and avoid research bias (Seeber et al., 2020; Zhang et al., 2020). However, using machines to record people's emotions still has drawbacks, such as a lack of privacy for research subjects.

3. Method

3.1. Design

The search design followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) standards to obtain relevant literature (Jamshidi et al., 2022; Xu et al., 2021). The notion of multimodal emotions in the Industrial Revolution 4.0 era has been widely known since 2018, the year marking a transformation in the merging of information and communication technologies; the review period therefore starts in that year.

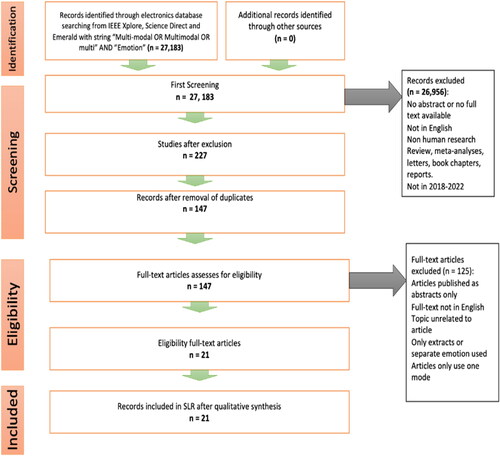

Gaps and trends were identified systematically from the collected articles, which were then selected according to specific criteria. Because our access to databases was limited, we selected three databases that we considered relevant to the subject of this research.

3.2. Search strategy and sample

Identification is the initial stage of the PRISMA standard. A literature search was conducted with the keywords or strings 'Multi-modal OR Multimodal OR multi' AND 'Emotion', in accordance with the title of this research. According to Papadakis (2021) and Toorajipour et al. (2021), literature searches should be based on inclusion and exclusion criteria. At this stage, 27,183 scientific publications were retrieved from the IEEE Xplore, Science Direct and Emerald databases. At the screening stage, articles were selected using the exclusion criteria: we eliminated articles that contained only abstracts, were not written in English, or were reviews, meta-analyses, newspaper articles, essays, reports or book chapters, and retained only articles published from 2018 to 2022. This left 277 articles. After duplicate articles were removed, 147 articles remained. At the eligibility stage, only full-text articles were selected as data, and the final number of articles that met the criteria was 21. This selection also took into account the diversity of scientific fields, countries, dataset components and emotions generated. In this last stage, each article was reviewed for the consistency of its title, abstract, keywords and research methodology, techniques, field of case study and the distinctive strategies used.

Three indicators were used to check alignment with the research focus, 'The Meaning-Making Process Involving Multimodal Emotions in Communication':
1) Presence of multimodal components: does the study analyze at least two multimodal components (e.g. facial expressions and tone of voice) to comprehend the communicated emotions?
2) Consistency of multimodal components: are the analyzed components (e.g. facial expressions and tone of voice) consistent in conveying the same emotions, or are there inconsistencies that could affect the intended meaning?
3) Contribution of multimodal components: do the analyzed components contribute more to understanding the communicated emotional meaning than a single component would?

Research data were evaluated during sample selection in three steps:
1) Literature search: using relevant keywords and credible database sources.
2) Inclusion and exclusion criteria: establishing clear criteria for selecting articles relevant to the research.
3) Phased selection: applying the established criteria to filter the data and obtain a more focused sample.
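The PRISMA selection described above can be checked with simple arithmetic. The following Python sketch tabulates how many records remained, and how many were removed, at each stage, using only the counts reported in this section; the stage labels and the function name `prisma_flow` are illustrative and do not come from any PRISMA tool.

```python
# Record counts at each PRISMA stage, as reported in this review.
stages = [
    ("Identification (IEEE Xplore, Science Direct, Emerald)", 27_183),
    ("Screening (exclusion criteria, 2018-2022)", 277),
    ("Duplicates removed", 147),
    ("Eligibility (full text, final sample)", 21),
]


def prisma_flow(stages):
    """Return (stage, remaining, removed_since_previous_stage) tuples."""
    rows = []
    previous = None
    for name, remaining in stages:
        removed = 0 if previous is None else previous - remaining
        rows.append((name, remaining, removed))
        previous = remaining
    return rows


for name, remaining, removed in prisma_flow(stages):
    print(f"{name:<55} remaining={remaining:>6,} removed={removed:>6,}")
```

Running it shows that screening removed 26,906 records, deduplication removed 130 and the eligibility check removed a further 126.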

3.3. Data analysis

After collecting all of the data, the researchers read each article in depth and recorded information on the categories of multimodal emotional components in a Microsoft Excel table. These categories included research focus, scientific field, research method and design, data collection tools, research subjects (dataset and age) and emotions (emotional focus, emotional stimulus, theory applied and emotional experience). The results for each category were then interpreted to identify trends in multimodal emotion research over the past five years.

4. Results

4.1. Bibliographical information

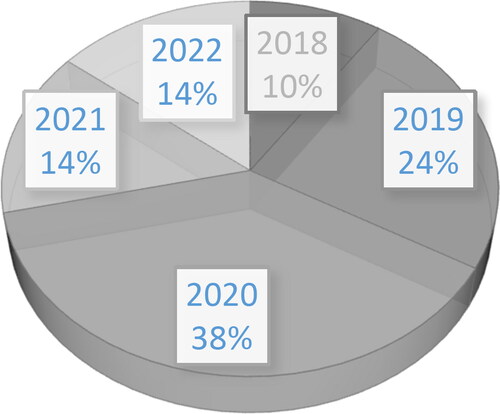

This section presents the 21 main articles: journal articles (n = 12) and international proceedings articles (n = 9). More articles on multimodal emotions were published in 2020 than in the two years before or after it. The fewest were published in 2018 (n = 2); the number rose in 2019 (n = 5), peaked in 2020 (n = 8) and then fell to three articles in each of 2021 and 2022 (n = 3). This downward trend may indicate that publications on this topic in academic repositories are reaching saturation. The conjecture is supported by the strong tendency of multimodal emotion studies to focus on emotion recognition, which all 21 articles address (see the tables and pie charts for the bibliographical information).
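The year-by-year counts above can also be expressed as shares of the 21-article sample, which makes the drop after 2020 easier to quantify. This short Python sketch uses only the reported counts; the variable names are illustrative.

```python
# Publications per year in the final sample, as reported above.
counts = {2018: 2, 2019: 5, 2020: 8, 2021: 3, 2022: 3}
total = sum(counts.values())  # 21 articles

# Share of the sample published in each year, in percent.
shares = {year: 100 * n / total for year, n in counts.items()}

for year, share in shares.items():
    print(f"{year}: n={counts[year]}, {share:.2f}%")

# Change in share from the peak year (2020) to the following year.
drop = shares[2020] - shares[2021]
print(f"2020 -> 2021: {drop:.2f} percentage points")
```

The share falls from about 38.10% in 2020 to about 14.29% in 2021, a drop of roughly 24 percentage points; in raw counts, publications fell from eight to three.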

Table 1. Multimodal research in understanding emotion.

4.2. Scientific information

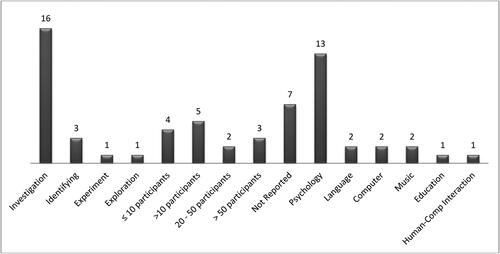

This section reviews general information about the collected articles: the research methods used, the inquiry process model applied, the research participants involved and the scientific fields most widely researched. All 21 primary studies used quantitative methods. By inquiry process model, 16 studies used investigation, 3 used identification, and exploration and research development accounted for one article each (n = 1). The type of data strongly influenced the choice of research subjects. The 21 studies drew on videoed speech from ≤ 10 participants (n = 4), from more than 10 but fewer than 20 participants (n = 5), from 20 to 50 participants (n = 2) and from > 50 participants (n = 3), while seven studies (n = 7) used data not taken from participants, such as tweets, songs and data quantified by time duration (hours). The collected articles highlighted emotion recognition using machines as tools to help analyze data derived from datasets. Over the past five years, the study of multimodal emotions has spread across diverse disciplines, with psychology the most dominant field (n = 13).

This is unsurprising, since emotion is a central topic in psychology. Music, language and computer science follow, with two articles each (n = 2). Human–computer interaction and education each account for one article (n = 1).

4.3. Research locations

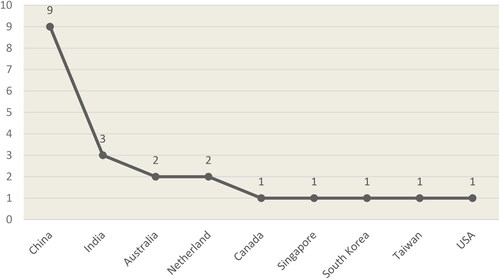

The distribution of research locations shows that most multimodal emotion studies were conducted in China (n = 8). This may be related to the COVID-19 pandemic, which was first reported in China in 2019. The remaining studies were spread evenly across other countries, each contributing only one or two articles. The data show that multimodal emotions have attracted the interest of researchers in China, India, the Netherlands, Australia, Canada, Singapore, South Korea, Taiwan and the USA.

4.4. How can the different multimodal components be analyzed and categorized?

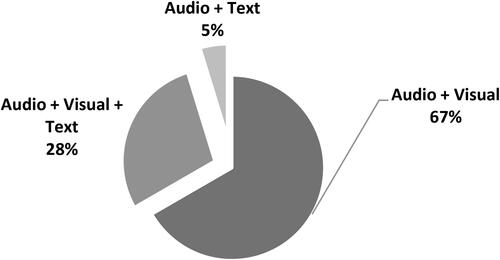

The literature indicates that the multimodal components of emotion draw on three modes (audio, visual and text) in different combinations, and these combinations have defined the research trends of the last five years. Fourteen of the 21 studies (66.67%) used the audio + visual combination. This preference mirrors the way humans commonly express emotions multimodally (Bhattacharya et al., 2023; Cai et al., 2020; Caihua, 2019; Dahmane et al., 2022; Garg et al., 2021; Ghaleb et al., 2019a, 2019b; Guanghui & Xiaoping, 2021; Hu et al., 2022; Jo et al., 2020; Priyasad et al., 2020; Song et al., 2019; Tung et al., 2019).

The audio + visual combination dominates as a means of observing emotional expressions (see He et al., 2022; Kossaifi et al., 2019; Ma et al., 2019; Wang & Xie, 2020). Individuals' inner feelings can hardly be identified directly, but emotions can be observed through their outward expressions (Ruba & Repacholi, 2020; Zerback & Wirz, 2021). Therefore, according to those studies, a tool or technology is needed to identify and analyze multimodal components in stages. In our analysis, each of the 21 studies was assigned a code, and the frequency of each mode combination was then calculated to show how many of the 21 studies used which multimodal components. The results show that 28.57% of the studies selected audio + visual + text components and 4.76% selected the audio + text combination.
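The coding and frequency count described above can be sketched in Python. Here each study is represented by a single mode-combination label, which is an assumption made for illustration (the review's actual coding sheet was a Microsoft Excel table); the labels reproduce the counts reported in this section, and the function name `mode_frequencies` is hypothetical.

```python
from collections import Counter

# One coded entry per reviewed study (n = 21); labels follow the mode
# combinations reported in this section.
coded_studies = (
    ["audio + visual"] * 14
    + ["audio + visual + text"] * 6
    + ["audio + text"] * 1
)


def mode_frequencies(codes):
    """Count each mode combination and express it as a percentage of all studies."""
    counts = Counter(codes)
    total = len(codes)
    return {mode: (n, round(100 * n / total, 2)) for mode, n in counts.most_common()}


for mode, (n, pct) in mode_frequencies(coded_studies).items():
    print(f"{mode:<22} n={n:>2}  {pct:.2f}%")
```

The three percentages (66.67%, 28.57% and 4.76%) sum to 100%, which confirms that every study falls into exactly one mode combination.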

4.5. What tools and technologies can be used to obtain multimodal emotions data?

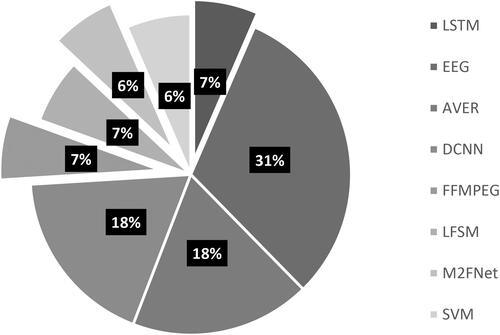

The literature indicates that researchers have relied on machines for data acquisition over the past five years. As the research reports suggest, the use of machines was driven by the Industrial Revolution 4.0, which extended into the virtual world (Egger et al., 2019). More specifically, according to Egger, humans, machines and data are inseparable in making everything available everywhere, which significantly influences the meaning-making process involving multimodal emotions. Thus, the study of emotions has developed into multimodal research (Azmi et al., 2022; Ninaus et al., 2019; Zhang et al., 2020). Among the tools and technologies for multimodal emotion research, six of the 21 studies (28.57%) used LSTM networks to identify emerging emotions (Cai et al., 2020; Dahmane et al., 2022; Li, Ch'ng, et al., 2018; Li, Song, et al., 2018; Song et al., 2019; Sun et al., 2020; Zhang et al., 2020). EEG was the next most common tool, used in five studies (23.81%), while other tools and technologies used in emotion research included AVER, DCNN, FFMPEG, LFSM, M2 Net and SVM.

4.6. What datasets are available for analyzing multimodal emotions and what are their main features?

Using datasets, that is, raw data in tabular form that can be processed further, has become a trend in multimodal emotion research over the past five years (Bayoudh et al., 2022; Gandhi et al., 2022). Datasets are generally owned by institutions or agencies that grant permission to use them for scientific purposes (Deinsberger et al., 2020).

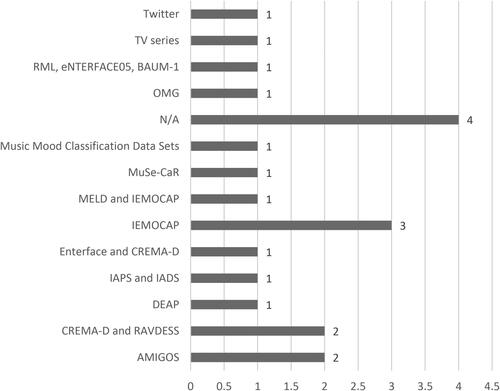

The third research question addresses the use of datasets in multimodal emotion research. Datasets are chosen according to different research purposes, and the literature analysis shows that all 21 studies used tools and technologies that shaped how data were used. Seventeen of the 21 studies (80.95%) used a dataset as the research object. Four studies (19.05%) used the IEMOCAP dataset (Cai et al., 2020; Chudasama et al., 2022; Priyasad et al., 2020; Zhang et al., 2020). The same proportion (19.05%) did not use any dataset. The next most frequently used datasets were CREMA-D and RAVDESS (14.29%). The remaining studies used datasets related to and adapted for their research objectives, such as datasets drawn from TV series and Twitter.

4.7. What is the focus of emotions available to gain better multimodal accuracy?

The literature also reveals the emotional focus of each study, that is, which emotions the researchers aimed to examine with a multimodal approach. This analysis was carried out to determine which emotions are investigated and how often particular emotions become the researchers' focus. Nine of the 21 articles (42.86%) targeted positive, negative and neutral emotions (Cai et al., 2020; Caihua, 2019; Chudasama et al., 2022; Hu et al., 2022; Jo et al., 2020; Priyasad et al., 2020; Zhang et al., 2020). The second most frequent focus was positive and negative emotions (23.81%). An equal share of studies (23.81%) did not state their emotional focus. One article (4.76%) focused on negative emotions only.

Table 2. Frequency of multimodal emotions research by focus of emotions.

Table 3. Frequency of multimodal emotions research by emotions focus cluster.

Grouped by emotion-focus cluster, the largest share of studies (50%) focused on positive, negative and neutral emotions over the last five years, as the researchers intended to study emotions thoroughly and comprehensively (see Li et al., 2022; Bashir et al., 2021). Some researchers did not state the emotional focus of their research objectives (e.g. Arthanarisamy & Palaniswamy, 2022; Garg et al., 2021; Ghaleb et al., 2019a, 2019b; Gordon et al., 2018).

4.8. Summary of findings from the systematic literature review

The bibliographical results show that the share of multimodal emotion publications fell by approximately 24 percentage points from 2020 to 2021 (from 38.10% to 14.29% of the 21 articles). This does not mean that multimodal emotions no longer interest researchers, because many studies on the topic are still being published in venues beyond the scope of this review.

The theoretical analysis in the reviewed studies focused on a multimodal framework employing machines. A consequence of this focus is that the same topics are revisited repeatedly, with researchers revising only some parts. The search thus leads to the observation of semiotic modes, which are significantly influenced by technological advancement (Singh, 2019). In these studies, researchers capitalized on the role of digital devices and machines in their multimodal research.

This analytical procedure was undertaken to provide a systematic and comprehensive review of multimodal emotion research. As described above, audio + visual is the mode combination researchers used most frequently to examine emotions, because most researchers used machines to analyze emotions. In addition, audio-visual material such as video can evoke emotions naturally and in various ways (Bagila et al., 2019; Ismail et al., 2021; Pandeya & Lee, 2021). For example, watching a film (audio + visual) evokes emotions in the viewer; these emotions are captured by a machine or tool, which outputs whichever emotions it detects.
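The reviewed studies do not share a single method for combining audio and visual information. One common pattern is late fusion, in which separate audio and visual classifiers each output class probabilities that are then combined. The numpy sketch below assumes that setup; the emotion labels, the equal weighting and the probability values are all hypothetical and are not taken from any reviewed study.

```python
import numpy as np

EMOTIONS = ["anger", "happiness", "sadness", "neutral"]  # illustrative label set


def late_fusion(audio_probs, visual_probs, audio_weight=0.5):
    """Combine per-modality class probabilities by a weighted average.

    audio_probs, visual_probs: arrays of shape (n_classes,) that each sum to 1,
    e.g. the softmax outputs of separate audio and visual classifiers.
    """
    audio_probs = np.asarray(audio_probs, dtype=float)
    visual_probs = np.asarray(visual_probs, dtype=float)
    fused = audio_weight * audio_probs + (1.0 - audio_weight) * visual_probs
    return fused / fused.sum()  # renormalize to guard against rounding drift


# Hypothetical classifier outputs for one video clip.
audio = [0.60, 0.10, 0.20, 0.10]   # voice suggests anger
visual = [0.30, 0.05, 0.15, 0.50]  # face looks mostly neutral
fused = late_fusion(audio, visual)
print({e: round(float(p), 3) for e, p in zip(EMOTIONS, fused)})
print("predicted:", EMOTIONS[int(np.argmax(fused))])
```

In this toy example, the visual channel alone would predict "neutral", but fusing it with the audio channel yields "anger", which illustrates why combining modes can change the recognized emotion.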

The literature review found that tools for recognizing the emotions humans experience have been the most frequently used means of acquiring multimodal emotional data over the past five years. Such systems are considered more economical for certain purposes (Dzedzickis et al., 2020; Kim et al., 2019; Mittal et al., 2020), because low-cost equipment can readily capture the data needed to assess emotions (Laureanti et al., 2020). Furthermore, machines can analyze large and varied amounts of data (Ngiam & Khor, 2019; Sarker, 2021).

In those studies, the most frequently used tools and technologies relied on physiological and sensory data to capture and identify emotions. For example, the long short-term memory (LSTM) network, a type of recurrent neural network, was used to retain information over long sequences while discarding information that is no longer relevant. This makes LSTMs efficient at processing, predicting and classifying time-sequenced data. Those studies also used electroencephalography (EEG), a method for recording the brain's electrical activity from the surface of the scalp (Beniczky & Schomer, 2020). EEG records fluctuations in the electrical potentials produced by the activity of brain cells, and as measurement tools become more sophisticated, it is increasingly used in research on cognitive function. Despite these advantages, machine-based multimodal emotion research still has drawbacks, such as lack of subject privacy and uncontrollable research bias (Bhattacharya et al., 2023).
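To make the "retain or discard" behavior concrete, here is a minimal numpy sketch of a single LSTM time step in its standard formulation (forget, input and output gates plus a candidate cell update). It is not taken from any of the reviewed studies: the weights are random and untrained, the sizes are arbitrary, and the function name `lstm_step` is hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    x: input at this step, shape (n_in,)
    h_prev, c_prev: previous hidden and cell states, shape (n_hidden,)
    W: stacked gate weights, shape (4 * n_hidden, n_in + n_hidden)
    b: stacked gate biases, shape (4 * n_hidden,)
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f = sigmoid(z[0:n])          # forget gate: how much old memory to keep
    i = sigmoid(z[n:2 * n])      # input gate: how much new information to write
    g = np.tanh(z[2 * n:3 * n])  # candidate values for the cell state
    o = sigmoid(z[3 * n:4 * n])  # output gate: how much memory to expose
    c = f * c_prev + i * g       # long-term memory (cell state)
    h = o * np.tanh(c)           # short-term output (hidden state)
    return h, c


# Run a random input sequence through an untrained cell.
rng = np.random.default_rng(0)
n_in, n_hidden, T = 3, 4, 10
W = rng.normal(scale=0.5, size=(4 * n_hidden, n_in + n_hidden))
b = np.zeros(4 * n_hidden)
h = np.zeros(n_hidden)
c = np.zeros(n_hidden)
for x in rng.normal(size=(T, n_in)):
    h, c = lstm_step(x, h, c, W, b)
print("final hidden state:", h.round(3))
```

In an emotion-recognition model, the input at each step would typically be a feature vector (for example, from an audio frame or EEG window), and the final hidden state would be passed to a classifier layer.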

Most researchers used datasets of recorded dialogs in video form, an audio + visual format that supports the expression of emotions. For example, the Interactive Emotional Dyadic Motion Capture (IEMOCAP) dataset from the University of Southern California contains approximately 12 hours of audiovisual recordings of dialogs between male and female actors, recorded in five sessions with two speakers per session. Researchers used IEMOCAP more frequently than other datasets because it covers a more varied range of emotions, a diversity needed to identify which emotions emerge by gender and over time. This review also identified four studies that did not use datasets as research objects. Instead, their researchers recruited adult participants and identified the emotions that arose in response to a stimulus. For example, a participant wore an EEG device while watching a video, and the EEG recordings were then analyzed to detect the emotions elicited. These researchers intended only to identify the emotions generated after the treatment. Indeed, most studies stopped at the identification stage, without attempting to reach the stage of emotional meaning. Yet the meaning of emotion is no less important to study, because emotion carries an abstract meaning that depends on the causes and effects of its appearance, its context and even the speakers' cultures.
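EEG-based emotion studies commonly summarize the raw signal as power in standard frequency bands (e.g. theta, alpha and beta) before classification. The sketch below assumes that approach, which is a common choice rather than the documented pipeline of the four studies described here. The signal is synthetic, and the sampling rate, amplitudes, band edges and the function name `band_power` are illustrative assumptions.

```python
import numpy as np


def band_power(signal, fs, low, high):
    """Mean spectral power of `signal` between `low` and `high` Hz (FFT periodogram)."""
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2 / signal.size
    mask = (freqs >= low) & (freqs < high)
    return power[mask].mean()


# Synthetic 4-second "EEG" trace: a 10 Hz (alpha) rhythm plus noise, in volts.
fs = 256  # samples per second (assumed)
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(0)
eeg = 20e-6 * np.sin(2 * np.pi * 10 * t) + 5e-6 * rng.normal(size=t.size)

bands = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}
features = {name: band_power(eeg, fs, lo, hi) for name, (lo, hi) in bands.items()}
for name, value in features.items():
    print(f"{name:>5}: {value:.3e}")
```

The resulting band powers form a small feature vector per recording window; in a real study, such features would be computed per channel and passed to a classifier, and the alpha band here dominates only because the synthetic signal was built around a 10 Hz rhythm.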

Furthermore, anger was a focus in almost every study (90.48%). The prominence of anger, a negative emotion, may reflect researchers' need to understand its meaning from many aspects, such as biological, emotional, intellectual, social and spiritual aspects (Jakovljević & Jakovljević, 2021); few other emotions share this complexity. In addition, anger is an acute emotional reaction that arises from several stimulating situations, such as threats, self-restraint, verbal attacks, disappointment and frustration (Muhajarah, 2022; Mufid et al., 2021).

Technological tools such as artificial intelligence (AI) and machine learning (ML) are widely used in the workplace, where they can help leaders understand their employees' emotions regarding decisions on salary increases, job promotions and assignments. These tools can also be used to detect a person's suicidal tendencies, detect drunk drivers, check a person's health when entering a country or support credit-granting systems. AI and ML are also used in court decision-making, in selecting job applicants and in assigning academics in the education system. The emotional realm, however, is different. Although several studies show that AI and ML can read the emotions a person produces, the results cannot be treated as absolute. The meaning of an emotion must be based on the context surrounding it, both before and after the emotion is produced, and this is what AI and ML cannot translate or define. Questions about when, how and where certain emotions are generated cannot be answered by AI and ML.

5. Conclusion

Multimodal emotion research has gained significance for its contribution to the meaning-making process (Illendula & Sheth, 2019; Marechal et al., 2019). In this review, we focused on bibliographical information, methods and researchers, as well as the general components of multimodal emotion research, i.e. multimodal components, data acquisition tools and technologies, datasets and emotional focus, to identify the trends and directions of the field. Regarding multimodal components, this review confirms that most researchers chose audio + visual components, a choice influenced by their research objectives, the datasets they used and the available data acquisition tools. Regarding data acquisition tools and technologies, all of the reviewed studies (100%), published from 2018 onward, used machines, facilitated by easy access to digital tools in the Industrial Revolution 4.0 era. Low costs, short processing times and large, varied quantities of data have driven the use of machines. However, the use of machines has drawbacks, such as the absence of privacy for research subjects and a lack of control over research bias.

In terms of datasets, most of the reviewed studies (80.95%) used datasets, which is in line with the use of machines for data acquisition. In terms of emotional focus, many researchers investigated positive, negative and neutral emotions (42.86%). Of all the emotions, anger received the most attention (90.48%). However, the study of these emotions reached only the identification stage. The findings can provide an initial reference for the prudent design of psychological and pedagogical approaches and can inform more appropriate strategies that optimize all modes and generate positive emotions in every learning process.
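The percentages reported in this section are mutually consistent with a corpus of 21 reviewed studies, although that total is inferred here rather than stated in this section: four studies did not use a dataset, and 17 of 21 gives the reported 80.95%. The short Python sketch below assumes that total and back-calculates hypothetical counts (19 and 9 are likewise inferred, not reported) to show how the reported shares follow from simple proportions.

```python
# Minimal sketch: back-calculating the reported percentages.
# ASSUMPTION: the corpus size (21 studies) and the counts below are inferred
# from the reported percentages; they are not stated in this section.
N_STUDIES = 21


def share(count: int, total: int = N_STUDIES) -> float:
    """Return count/total as a percentage, rounded to two decimal places."""
    return round(100 * count / total, 2)


# Hypothetical counts inferred from the text (four studies used no dataset).
counts = {
    "used a dataset": N_STUDIES - 4,
    "included anger": 19,
    "positive, negative and neutral focus": 9,
}

for label, n in counts.items():
    print(f"{label}: {n}/{N_STUDIES} = {share(n):.2f}%")
```

Under this assumption the sketch prints 17/21 = 80.95%, 19/21 = 90.48% and 9/21 = 42.86%, matching the reported figures; if the actual corpus size differs, only `N_STUDIES` and the counts would need to change.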

Based on the literature review, and taking into account the general components of multimodal emotion research, this study suggests that researchers examine emotions using more modes. The more modes are used, the more comprehensive and higher in quality the results will be. Furthermore, a greater variety of modes can minimize bias in research (Bhattacharya et al., 2023). It is important to note that the choice of mode or component should also be matched to the research objectives and subjects. If the subjects' conditions do not permit the use of multiple modes, the audiovisual mode is highly recommended (Bhattacharya et al., 2023; Cai et al., 2020).

A holistic perspective on multimodal emotion components:

1) Analyzed components: Current research typically focuses on audiovisual components (sound and visuals) for emotion analysis. This choice is influenced by research objectives and by the ease of access to tools and technologies in the Industrial Revolution 4.0 era.
2) Limitations of components: Relying solely on audiovisual components has its drawbacks. This research therefore suggests analyzing emotions using a wider range of multimodal components to achieve more comprehensive and higher-quality results.
3) Component variation: The greater the variety of multimodal components employed, the lower the likelihood of bias in research. Some studies suggest that component selection should be tailored to the research aims and subjects.
4) Recommended components: If research subjects are unable to use multiple components, audiovisual components remain highly recommended because they are user-friendly.

We acknowledge that this research has substantial limitations. Owing to limited database access, we restricted our search to three databases: IEEE Xplore, Science Direct and Emerald. Future research should therefore draw on more diverse database sources, such as Scopus (Elsevier), PubMed and DOAJ. Larger result sets from multiple databases would allow deeper investigation and interpretation of multimodal emotions.

This study also recommends that other researchers examine not only the basic positive, negative and neutral emotions but also more derivative emotions. Ideally, multimodal emotion research should examine the meaning of emotions beyond the identification stage. Researchers could also focus on how emotions construct meaning in different regions or countries, since this construction shapes cross-cultural knowledge of those countries and regions. Another implication is that future researchers can compare several data acquisition tools and technologies to find a more representative tool for analyzing the resulting emotions.

Author details

Reny Rahmalina*

ORCID ID: https://orcid.org/0000-0003-1016-7448

Wawan Gunawan

ORCID ID: https://orcid.org/0000-0003-1792-8350

Linguistics Study Program, Postgraduate School, Universitas Pendidikan Indonesia, Indonesia.

Correction

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Citation information

Cite this article as: What multimodal components, tools, dataset and focus of emotion are used in current research of multimodal emotion: A systematic literature review, Reny Rahmalina and Wawan Gunawan, Cogent Social Sciences.

Disclosure statement

The author(s) declared no potential conflicts of interest with respect to the research, authorship and/or publication of this article.

Additional information

Funding

Notes on contributors

Reny Rahmalina

Reny Rahmalina is a doctoral student in the linguistics program at the graduate school, Universitas Pendidikan Indonesia (Bandung, Indonesia). Her research interests include linguistics, innovation, education and teaching. Her work has been published in national and international journals, including those published by Atlantis Press.

Wawan Gunawan

Wawan Gunawan, M.Ed., Ph.D., is a lecturer in the linguistics doctoral program at the graduate school, Universitas Pendidikan Indonesia. In recent years, one of his main fields of focus has been multimodal studies. Having observed the close relationship between emotion and multimodality, we decided to investigate how emotion and multimodal components complement each other, and we collaborated to accomplish this work. Future research is expected to focus on the meaning of the emotions generated. The use of data from datasets requires consideration of subject privacy and the resulting bias.

References

- Amini, M., Amini, M., Nabiee, P., & Delavari, S. (2018). The relationship between emotional intelligence and communication skills in healthcare staff. Shiraz E-Medical Journal, 20(4). https://doi.org/10.5812/semj.80275

- Arthanarisamy, R. M. P., & Palaniswamy, S. (2022). Subject independent emotion recognition using EEG and physiological signals–a comparative study. Applied Computing and Informatics. https://doi.org/10.1108/aci-03-2022-0080

- Askool, S., Pan, Y. C., Jacobs, A., & Tan, C. (2019). Understanding proximity mobile payment adoption through technology acceptance model and organisational semiotics: An exploratory study. https://aisel.aisnet.org/ukais2019/38/

- Athavipach, C., Pan-Ngum, S., & Israsena, P. (2019). A wearable in-ear EEG device for emotion monitoring. Sensors (Basel, Switzerland), 19(18), 4014. https://doi.org/10.3390/s19184014

- Azmi, A., Ibrahim, R., Abdul Ghafar, M., & Rashidi, A. (2022). Smarter real estate marketing using virtual reality to influence potential homebuyers’ emotions and purchase intention. Smart and Sustainable Built Environment, 11(4), 870–890. https://doi.org/10.1108/SASBE-03-2021-0056

- Bagila, S., Kok, A., Zhumabaeva, A., Suleimenova, Z., Riskulbekova, A., & Uaidullakyzy, E. (2019). Teaching primary school pupils through audio-visual means. International Journal of Emerging Technologies in Learning (iJET), 14(22), 122–140. https://doi.org/10.3991/ijet.v14i22.11760

- Baig, M. Z., & Kavakli, M. (2019). A survey on psycho-physiological analysis & measurement methods in multimodal systems. Multimodal Technologies and Interaction, 3(2), 37. https://doi.org/10.3390/mti3020037

- Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest: A Journal of the American Psychological Society, 20(1), 1–68. https://doi.org/10.1177/1529100619832930

- Bashir, S., Bano, S., Shueb, S., Gul, S., Mir, A. A., Ashraf, R., Noor, N., & Shakeela. (2021). Twitter chirps for Syrian people: Sentiment analysis of tweets related to Syria Chemical Attack. International Journal of Disaster Risk Reduction, 62, 102397. https://doi.org/10.1016/j.ijdrr.2021.102397

- Bayoudh, K., Knani, R., Hamdaoui, F., & Mtibaa, A. (2022). A survey on deep multimodal learning for computer vision: Advances, trends, applications, and datasets. The Visual Computer, 38(8), 2939–2970. https://doi.org/10.1007/s00371-021-02166-7

- Beniczky, S., & Schomer, D. L. (2020). Electroencephalography: Basic biophysical and technological aspects important for clinical applications. Epileptic Disorders: International Epilepsy Journal with Videotape, 22(6), 697–715. https://doi.org/10.1684/epd.2020.1217

- Bhattacharya, P., Gupta, R. K., & Yang, Y. (2023). Exploring the contextual factors affecting multimodal emotion recognition in videos. IEEE Transactions on Affective Computing, 14(2), 1547–1557. https://doi.org/10.1109/TAFFC.2021.3071503

- Boehm, K. M., Khosravi, P., Vanguri, R., Gao, J., & Shah, S. P. (2022). Harnessing multimodal data integration to advance precision oncology. Nature Reviews. Cancer, 22(2), 114–126. https://doi.org/10.1038/s41568-021-00408-3

- Cai, L., Dong, J., & Wei, M. (2020). Multi-modal emotion recognition from speech and facial expression based on deep learning [Paper presentation]. 2020 Chinese Automation Congress (CAC) (pp. 5726–5729). https://doi.org/10.1109/CAC51589.2020.9327178

- Caihua, C. (2019). Research on multi-modal mandarin speech emotion recognition based on SVM [Paper presentation]. 2019 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS) (pp. 173–176). https://doi.org/10.1109/ICPICS47731.2019.8942545

- Chou, W. Y. S., & Budenz, A. (2020). Considering emotion in COVID-19 vaccine communication: Addressing vaccine hesitancy and fostering vaccine confidence. Health Communication, 35(14), 1718–1722. https://doi.org/10.1080/10410236.2020.1838096

- Chudasama, V., Kar, P., Gudmalwar, A., Shah, N., Wasnik, P., & Onoe, N. (2022). M2FNet: Multi-modal fusion network for emotion recognition in conversation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 4652–4661). https://doi.org/10.48550/arXiv.2206.02187

- Clayton, S., & Ogunbode, C. (2023). Looking at emotions to understand responses to environmental challenges. Emotion Review, 15(4), 275–278. https://doi.org/10.1177/17540739231193757

- Dahmane, M., Alam, J., St-Charles, P. L., Lalonde, M., Heffner, K., & Foucher, S. (2022). A multimodal non-intrusive stress monitoring from the pleasure-arousal emotional dimensions. IEEE Transactions on Affective Computing, 13(2), 1044–1056. https://doi.org/10.1109/TAFFC.2020.2988455

- Davies, S. R., Halpern, M., Horst, M., Kirby, D. S., & Lewenstein, B. (2019). Science stories as culture: Experience, identity, narrative and emotion in public communication of science. Journal of Science Communication, 18(05), A01. https://doi.org/10.22323/2.18050201

- Deinsberger, J., Reisinger, D., & Weber, B. (2020). Global trends in clinical trials involving pluripotent stem cells: A systematic multi-database analysis. NPJ Regenerative Medicine, 5(1), 15. https://doi.org/10.1038/s41536-020-00100-4

- Dzedzickis, A., Kaklauskas, A., & Bucinskas, V. (2020). Human emotion recognition: Review of sensors and methods. Sensors (Basel, Switzerland), 20(3), 592. https://doi.org/10.3390/s20030592

- Egger, M., Ley, M., & Hanke, S. (2019). Emotion recognition from physiological signal analysis: A review. Electronic Notes in Theoretical Computer Science, 343, 35–55. https://doi.org/10.1016/j.entcs.2019.04.009

- Fröhlich, M., Sievers, C., Townsend, S. W., Gruber, T., & van Schaik, C. P. (2019). Multimodal communication and language origins: Integrating gestures and vocalizations. Biological Reviews of the Cambridge Philosophical Society, 94(5), 1809–1829. https://doi.org/10.1111/brv.12535

- Gandhi, A., Adhvaryu, K., Poria, S., Cambria, E., & Hussain, A. (2022). Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Information Fusion, 91, 424–444. https://doi.org/10.1016/j.inffus.2022.09.025

- Garg, S., Patro, R. K., Behera, S., Tigga, N. P., & Pandey, R. (2021). An overlapping sliding window and combined features based emotion recognition system for EEG signals. Applied Computing and Informatics. https://doi.org/10.1108/ACI-05-2021-0130

- Ghaleb, E., Popa, M., & Asteriadis, S. (2019a). Metric learning-based multimodal audio-visual emotion recognition. IEEE Multimedia, 27(1), 1–1. https://doi.org/10.1109/MMUL.2019.2960219

- Ghaleb, E., Popa, M., & Asteriadis, S. (2019b). Multimodal and temporal perception of audio-visual cues for emotion recognition [Paper presentation]. 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII) (pp. 552–558). https://doi.org/10.1109/ACII.2019.8925444

- Gordon, R., Ciorciari, J., & van Laer, T. (2018). Using EEG to examine the role of attention, working memory, emotion, and imagination in narrative transportation. European Journal of Marketing, 52(1–2), 92–117. https://doi.org/10.1108/EJM-12-2016-0881

- Guanghui, C., & Xiaoping, Z. (2021). Multi-modal emotion recognition by fusing correlation features of speech-visual. IEEE Signal Processing Letters, 28, 533–537. https://doi.org/10.1109/LSP.2021.3055755

- He, L., Niu, M., Tiwari, P., Marttinen, P., Su, R., Jiang, J., Guo, C., Wang, H., Ding, S., Wang, Z., Pan, X., & Dang, W. (2022). Deep learning for depression recognition with audiovisual cues: A review. Information Fusion, 80, 56–86. https://doi.org/10.1016/j.inffus.2021.10.012

- Hu, L., Li, W., Yang, J., Fortino, G., & Chen, M. (2022). A sustainable multi-modal multi-layer emotion-aware service at the edge. IEEE Transactions on Sustainable Computing, 7(2), 324–333. https://doi.org/10.1109/TSUSC.2019.2928316

- Illendula, A., & Sheth, A. (2019). Multimodal emotion classification [Paper presentation]. Companion Proceedings of the 2019 World Wide Web Conference (pp. 439–449). https://doi.org/10.1145/3308560.3316549

- Ismail, S. N. M. S., Aziz, N. A. A., Ibrahim, S. Z., Khan, C. T., & Rahman, M. A. (2021). Selecting video stimuli for emotion elicitation via online survey. Human-Centric Computing and Information Sciences, 11(36), 1–18. https://doi.org/10.22967/HCIS.2021.11.036

- Jakovljević, M., & Jakovljević, I. (2021). Sciences, arts and religions: The triad in action for empathic civilization in Bosnia and Herzegovina. Science, Art and Religion, 1(1–2), 5–22. https://doi.org/10.5005/sar-1-1-2-5

- Jamshidi, L., Heyvaert, M., Declercq, L., Fernández-Castilla, B., Ferron, J. M., Moeyaert, M., Beretvas, S. N., Onghena, P., & Van den Noortgate, W. (2022). A systematic review of single-case experimental design meta-analyses: Characteristics of study designs, data, and analyses. Evidence-Based Communication Assessment and Intervention, 17(1), 6–30. https://doi.org/10.1080/17489539.2022.2089334

- Jeon, L., Buettner, C. K., & Grant, A. A. (2018). Early childhood teachers’ psychological well-being: Exploring potential predictors of depression, stress, and emotional exhaustion. Early Education and Development, 29(1), 53–69. https://doi.org/10.1080/10409289.2017.1341806

- Jiang, Y., Li, W., Hossain, M. S., Chen, M., Alelaiwi, A., & Al-Hammadi, M. (2020). A snapshot research and implementation of multimodal information fusion for data-driven emotion recognition. Information Fusion, 53, 209–221. https://doi.org/10.1016/j.inffus.2019.06.019

- Jo, W., Kannan, S. S., Cha, G. E., Lee, A., & Min, B. C. (2020). Rosbag-based multimodal affective dataset for emotional and cognitive states [Paper presentation]. 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (pp. 226–233). https://doi.org/10.1109/SMC42975.2020.9283320

- Keller, S. B., Ralston, P. M., & LeMay, S. A. (2020). Quality output, workplace environment, and employee retention: The positive influence of emotionally intelligent supply chain managers. Journal of Business Logistics, 41(4), 337–355. https://doi.org/10.1111/jbl.12258

- Keltner, D., Sauter, D., Tracy, J., & Cowen, A. (2019). Emotional expression: Advances in basic emotion theory. Journal of Nonverbal Behavior, 43(2), 133–160. https://doi.org/10.1007/s10919-019-00293-3

- Kim, B., de Visser, E., & Phillips, E. (2022). Two uncanny valleys: Re-evaluating the uncanny valley across the full spectrum of real-world human-like robots. Computers in Human Behavior, 135, 107340. https://doi.org/10.1016/j.chb.2022.107340

- Kim, J. H., Kim, B. G., Roy, P. P., & Jeong, D. M. (2019). Efficient facial expression recognition algorithm based on hierarchical deep neural network structure. IEEE Access, 7, 41273–41285. https://doi.org/10.1109/ACCESS.2019.2907327

- Kossaifi, J., Walecki, R., Panagakis, Y., Shen, J., Schmitt, M., Ringeval, F., Han, J., Pandit, V., Toisoul, A., Schuller, B., Star, K., Hajiyev, E., & Pantic, M. (2019). SEWA DB: A rich database for audio-visual emotion and sentiment research in the wild. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(3), 1022–1040. https://doi.org/10.1109/TPAMI.2019.2944808

- Kumari, K., Abbas, J., Hwang, J., & Cioca, L. I. (2022). Does servant leadership promote emotional intelligence and organizational citizenship behavior among employees? A structural analysis. Sustainability, 14(9), 5231. https://doi.org/10.3390/su14095231

- Laureanti, R., Bilucaglia, M., Zito, M., Circi, R., Fici, A., Rivetti, F., Valesi, R., Oldrini, C., Mainardi, L. T., & Russo, V. (2020). Emotion assessment using Machine Learning and low-cost wearable devices [Paper presentation]. 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) (pp. 576–579). https://doi.org/10.1109/EMBC44109.2020.9175221

- Lee, J. H., Kim, H. J., & Cheong, Y. G. (2020). A multi-modal approach for emotion recognition of TV drama characters using image and text [Paper presentation]. 2020 IEEE International Conference on Big Data and Smart Computing (BigComp) (pp. 420–424). https://doi.org/10.1109/BigComp48618.2020.00-37

- Li, M., Ch’ng, E., Chong, A. Y. L., & See, S. (2018). Multi-class Twitter sentiment classification with emojis. Industrial Management & Data Systems, 118(9), 1804–1820. https://doi.org/10.1108/IMDS-12-2017-0582

- Li, W., Huan, W., Hou, B., Tian, Y., Zhang, Z., & Song, A. (2022). Can emotion be transfered? A review on transfer learning for EEG-Based Emotion Recognition. IEEE Transactions on Cognitive and Developmental Systems, 14(3), 833–846. https://doi.org/10.1109/TCDS.2021.3098842

- Li, X., Song, D., Zhang, P., Zhang, Y., Hou, Y., & Hu, B. (2018). Exploring EEG features in cross-subject emotion recognition. Frontiers in Neuroscience, 12, 162. https://doi.org/10.3389/fnins.2018.00162

- Lim, F. V., Toh, W., & Nguyen, T. T. H. (2022). Multimodality in the English language classroom: A systematic review of literature. Linguistics and Education, 69, 101048. https://doi.org/10.1016/j.linged.2022.101048

- Liu, G., & Tan, Z. (2020). Research on multi-modal music emotion classification based on audio and lyirc [Paper presentation]. 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC) (Vol. 1, pp. 2331–2335). https://doi.org/10.1109/ITNEC48623.2020.9084846

- Löffler, D., Schmidt, N., & Tscharn, R. (2018). Multimodal expression of artificial emotion in social robots using color, motion and sound [Paper presentation]. Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (pp. 334–343). https://doi.org/10.1145/3171221.3171261

- Ma, Y., Hao, Y., Chen, M., Chen, J., Lu, P., & Košir, A. (2019). Audio-visual emotion fusion (AVEF): A deep efficient weighted approach. Information Fusion, 46, 184–192. https://doi.org/10.1016/j.inffus.2018.06.003

- Marechal, C., Mikolajewski, D., Tyburek, K., Prokopowicz, P., Bougueroua, L., Ancourt, C., & Wegrzyn-Wolska, K. (2019). Survey on AI-based multimodal methods for emotion detection. In High-performance modelling and simulation for big data applications (Lecture Notes in Computer Science, Vol. 11400, pp. 307–324). https://doi.org/10.1007/978-3-030-16272-6

- Marshall, I. J., & Wallace, B. C. (2019). Toward systematic review automation: A practical guide to using machine learning tools in research synthesis. Systematic Reviews, 8(1), 163. https://doi.org/10.1186/s13643-019-1074-9

- Mehta, D., Siddiqui, M. F. H., & Javaid, A. Y. (2018). Facial emotion recognition: A survey and real-world user experiences in mixed reality. Sensors (Basel, Switzerland), 18(2), 416. https://doi.org/10.3390/s18020416

- Men, L. R., & Yue, C. A. (2019). Creating a positive emotional culture: Effect of internal communication and impact on employee supportive behaviors. Public Relations Review, 45(3), 101764. https://doi.org/10.1016/j.pubrev.2019.03.001

- Millar, B., & Lee, J. (2021). Horror films and grief. Emotion Review, 13(3), 171–182. https://doi.org/10.1177/17540739211022815

- Mills, K. A., & Unsworth, L. (2018). iPad animations: Powerful multimodal practices for adolescent literacy and emotional language. Journal of Adolescent & Adult Literacy, 61(6), 609–620. https://doi.org/10.1002/jaal.717

- Mittal, T., Bhattacharya, U., Chandra, R., Bera, A., & Manocha, D. (2020). M3er: Multiplicative multimodal emotion recognition using facial, textual, and speech cues. Proceedings of the AAAI Conference on Artificial Intelligence, 34(02), 1359–1367. https://doi.org/10.1609/aaai.v34i02.5492

- Mohamed Shaffril, H. A., Samsuddin, S. F., & Abu Samah, A. (2021). The ABC of systematic literature review: The basic methodological guidance for beginners. Quality & Quantity, 55(4), 1319–1346. https://doi.org/10.1007/s11135-020-01059-6

- Morton, D. P., Hinze, J., Craig, B., Herman, W., Kent, L., Beamish, P., Renfrew, M., & Przybylko, G. (2020). A multimodal intervention for improving the mental health and emotional well-being of college students. American Journal of Lifestyle Medicine, 14(2), 216–224. https://doi.org/10.1177/1559827617733941

- Mufid, M., Masruri, S., & Azhar, M. (2021). Controlling anger on Al-Qur’an and psychology of Islamic education perspective (A study at on private higher education). Review of International Geographical Education Online, 11(9), 56–70. https://doi.org/10.17762/pae.v58i2.3313

- Muhajarah, K. (2022). Anger in Islam and its relevance to mental health. Jurnal Ilmiah Syi’ar, 22(2), 141–153. https://doi.org/10.29300/syr.v22i2.7417

- Muszynski, M., Tian, L., Lai, C., Moore, J. D., Kostoulas, T., Lombardo, P., Pun, T., & Chanel, G. (2021). Recognizing induced emotions of movie audiences from multimodal information. IEEE Transactions on Affective Computing, 12(1), 36–52. https://doi.org/10.1109/TAFFC.2019.2902091

- Nabi, R. L., Gustafson, A., & Jensen, R. (2018). Framing climate change: Exploring the role of emotion in generating advocacy behavior. Science Communication, 40(4), 442–468. https://doi.org/10.1177/1075547018776019

- Ngiam, K. Y., & Khor, W. (2019). Big data and machine learning algorithms for health-care delivery. The Lancet Oncology, 20(5), e262–e273. https://doi.org/10.1016/S1470-2045(19)30149-4

- Ninaus, M., Greipl, S., Kiili, K., Lindstedt, A., Huber, S., Klein, E., Karnath, H.-O., & Moeller, K. (2019). Increased emotional engagement in game-based learning–A machine learning approach on facial emotion detection data. Computers & Education, 142, 103641. https://doi.org/10.1016/j.compedu.2019.103641

- Pandeya, Y. R., & Lee, J. (2021). Deep learning-based late fusion of multimodal information for emotion classification of music video. Multimedia Tools and Applications, 80(2), 2887–2905. https://doi.org/10.1007/s11042-020-08836-3

- Papadakis, S. (2021). Tools for evaluating educational apps for young children: A systematic review of the literature. Interactive Technology and Smart Education, 18(1), 18–49. https://doi.org/10.1108/ITSE-08-2020-0127

- Priyasad, D., Fernando, T., Denman, S., Sridharan, S., & Fookes, C. (2020). Attention driven fusion for multi-modal emotion recognition [Paper presentation]. ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 3227–3231). https://doi.org/10.1109/ICASSP40776.2020.9054441

- Ruba, A. L., & Repacholi, B. M. (2020). Do preverbal infants understand discrete facial expressions of emotions? Emotion Review, 12(4), 235–250. https://doi.org/10.1177/1754073919871098

- Sarker, I. H. (2021). Machine learning: Algorithms, real-world applications and research directions. SN Computer Science, 2(3), 160. https://doi.org/10.1007/s42979-021-00592-x

- Seeber, I., Bittner, E., Briggs, R. O., de Vreede, T., de Vreede, G. J., Elkins, A., Maier, R., Merz, A. B., Oeste-Reiß, S., Randrup, N., Schwabe, G., & Söllner, M. (2020). Machines as teammates: A research agenda on AI in team collaboration. Information & Management, 57(2), 103174. https://doi.org/10.1016/j.im.2019.103174

- Sharma, K., & Giannakos, M. (2020). Multimodal data capabilities for learning: What can multimodal data tell us about learning? British Journal of Educational Technology, 51(5), 1450–1484. https://doi.org/10.1111/bjet.12993

- Singh, N. (2019). Big data technology: Developments in current research and emerging landscape. Enterprise Information Systems, 13(6), 801–831. https://doi.org/10.1080/17517575.2019.1612098

- Song, T., Zheng, W., Lu, C., Zong, Y., Zhang, X., & Cui, Z. (2019). MPED: A multi-modal physiological emotion database for discrete emotion recognition. IEEE Access, 7, 12177–12191. https://doi.org/10.1109/ACCESS.2019.2891579

- Strandberg, C., Styvén, M. E., & Hultman, M. (2020). Places in good graces: The role of emotional connections to a place on word-of-mouth. Journal of Business Research, 119, 444–452. https://doi.org/10.1016/j.jbusres.2019.11.044

- Sun, L., Lian, Z., Tao, J., Liu, B., & Niu, M. (2020). Multi-modal continuous dimensional emotion recognition using recurrent neural network and self-attention mechanism [Paper presentation]. Proceedings of the 1st International on Multimodal Sentiment Analysis in Real-Life Media Challenge and Workshop (pp. 27–34). https://doi.org/10.1145/3423327.3423672

- Swain, D. L., Langenbrunner, B., Neelin, J. D., & Hall, A. (2018). Increasing precipitation volatility in twenty-first-century California. Nature Climate Change, 8(5), 427–433. https://doi.org/10.1038/s41558-018-0140-y

- Toorajipour, R., Sohrabpour, V., Nazarpour, A., Oghazi, P., & Fischl, M. (2021). Artificial intelligence in supply chain management: A systematic literature review. Journal of Business Research, 122, 502–517. https://doi.org/10.1016/j.jbusres.2020.09.009

- Tsiourti, C., Weiss, A., Wac, K., & Vincze, M. (2019). Multimodal integration of emotional signals from voice, body, and context: Effects of (in)congruence on emotion recognition and attitudes towards robots. International Journal of Social Robotics, 11(4), 555–573. https://doi.org/10.1007/s12369-019-00524-z

- Tung, K., Liu, P. K., Chuang, Y. C., Wang, S. H., & Wu, A. Y. A. (2019). Entropy-assisted multi-modal emotion recognition framework based on physiological signals [Paper presentation]. 2018 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), IEEE (pp. 22–26). https://doi.org/10.1109/IECBES.2018.8626634

- Uusberg, A., Taxer, J. L., Yih, J., Uusberg, H., & Gross, J. J. (2019). Reappraising reappraisal. Emotion Review, 11(4), 267–282. https://doi.org/10.1177/175407391986261

- Van Dinter, R., Tekinerdogan, B., & Catal, C. (2021). Automation of systematic literature reviews: A systematic literature review. Information and Software Technology, 136, 106589. https://doi.org/10.1016/j.infsof.2021.106589

- Van Kleef, G. A. (2021). Comment: Moving (further) beyond private experience: On the radicalization of the social approach to emotions and the emancipation of verbal emotional expressions. Emotion Review, 13(2), 90–94. https://doi.org/10.1177/1754073921991231

- Wang, Z., & Xie, Y. (2020). Authentic leadership and employees’ emotional labour in the hospitality industry. International Journal of Contemporary Hospitality Management, 32(2), 797–814. https://doi.org/10.1108/IJCHM-12-2018-0952

- Waterloo, S. F., Baumgartner, S. E., Peter, J., & Valkenburg, P. M. (2018). Norms of online expressions of emotion: Comparing Facebook, Twitter, Instagram, and WhatsApp. New Media & Society, 20(5), 1813–1831. https://doi.org/10.1177/1461444817707349

- Williams, R. I., Jr, Clark, L. A., Clark, W. R., & Raffo, D. M. (2021). Re-examining systematic literature review in management research: Additional benefits and execution protocols. European Management Journal, 39(4), 521–533. https://doi.org/10.1016/j.emj.2020.09.007

- Winasis, S., Djumarno, D., Riyanto, S., & Ariyanto, E. (2021). The effect of transformational leadership climate on employee engagement during digital transformation in Indonesian banking industry. International Journal of Data and Network Science, 5(2), 91–96. https://doi.org/10.5267/j.ijdns.2021.3.001

- Wirz, D. (2018). Persuasion through emotion? An experimental test of the emotion-eliciting nature of populist communication. International Journal of Communication, 12, 1114–1138. https://doi.org/10.5167/uzh-149959

- Xu, C., Furuya-Kanamori, L., Kwong, J. S. W., Li, S., Liu, Y., & Doi, S. A. (2021). Methodological issues of systematic reviews and meta-analyses in the field of sleep medicine: A meta-epidemiological study. Sleep Medicine Reviews, 57, 101434. https://doi.org/10.1016/j.smrv.2021.101434

- Zerback, T., & Wirz, D. S. (2021). Appraisal patterns as predictors of emotional expressions and shares on political social networking sites. Studies in Communication Sciences, 21(1), 27–45. https://doi.org/10.5167/uzh-206208

- Zhang, X., Wang, M. J., & Guo, X. D. (2020). Multi-modal emotion recognition based on deep learning in speech, video and text [Paper presentation]. 2020 IEEE 5th International Conference on Signal and Image Processing (ICSIP) (pp. 328–333). https://doi.org/10.1109/ICSIP49896.2020.9339464