Abstract

Background: DriveSafe DriveAware (DSDA) is a cognitive fitness-to-drive screen that can accurately predict on-road performance. However, administration is restricted to trained assessors. General practitioners are ultimately responsible for determining fitness to drive in many countries but lack suitable tools. We converted DSDA to touchscreen to provide general practitioners and other health professionals with a practical fitness-to-drive screen. This necessitated the development of an automatic data collection system. We took a user-centred design approach to test usability of the system with older adults, the group most likely to take the test. Method: Middle-aged and older adult volunteers were asked to try an iPad application to assist in the development of a fitness-to-drive screen. Seventeen males and 18 females (mean age 70 years) participated in four trials; each participant was tested only once. We tested all text and function changes until all older adults could successfully self-administer the screen. Results: Older adults found basic touchscreen functions easy to perform, even when unfamiliar with the technology. Conclusion: Usability testing allowed us to develop a user-friendly touchscreen data collection system and ensured that design errors were not missed. Psychometric evaluation of data gathered with touchscreen DSDA was conducted in a separate study prior to use in clinical practice.

PUBLIC INTEREST STATEMENT

DriveSafe DriveAware (DSDA) is a valid, predictive test that occupational therapists have used for many years to determine if drivers can manage the cognitive aspects of driving. However, DSDA is not practical for medical practice. General practitioners (GPs) are ultimately responsible for determining medical fitness to drive, but lack the tools. Therefore, a touchscreen version of DSDA was developed to provide GPs with a practical, valid, predictive test to determine patient cognitive fitness to drive.

Older adults are the patients most likely to take touchscreen DSDA due to age-related changes and the onset of medical conditions such as stroke and dementia that may affect driving. However, older adults can have difficulty with technology due to cognitive changes and reduced vision, hearing, and reaction time. Therefore, older adults were consulted in the touchscreen DSDA development stage to ensure the test was user-friendly. It would not have been possible to develop a successful test without usability testing because we could not have predicted what difficulties older adults may encounter.

1. Introduction

People in many cultures consider driving one of their most valued daily living activities (Al-Hassani & Alotaibi, 2014; Dickerson, Reistetter, & Gaudy, 2012; Fricke & Unsworth, 2001). Gaining a license is considered a rite of passage for young people, and older adults want to drive for as long as possible, often with no plan for cessation (Coxon & Keay, 2015; Kostyniuk & Shope, 2003). However, driving is complex and therefore easily disrupted by illness, injury, or age-related changes. Chronic medical conditions, particularly amongst older drivers, are associated with increased crash risk and driving errors (Austroads, National Transport Commission, 2016; Charlton et al., 2010; Dobbs, Heller, & Schopflocher, 1998; Fildes, 2008; Jang et al., 2007; Marshall, 2008; Marshall & Man-Son-Hing, 2011; Sims, Rouse-Watson, Schattner, Beveridge, & Jones, 2012). Despite this, researchers recommend that fitness to drive be determined on an individual basis, with a focus on functional status rather than diagnosis (Charlton et al., 2010; Marshall, 2008; Marshall & Man-Son-Hing, 2011).

General practitioners are the professionals ultimately responsible for determining medical fitness to drive in most countries and are in an ideal position to screen drivers because: 1) patients usually present to them in the first instance; 2) they are required to fill out license authority medical forms (Dobbs et al., 1998; Sims et al., 2012); and 3) there is mandatory reporting of medically “at risk” drivers in jurisdictions of many countries including the US, Canada, and Australia (Austroads, National Transport Commission, 2016; Jang et al., 2007). Surveys show general practitioners believe they should be responsible for making determinations about fitness to drive but lack valid and reliable driver screens that are practical for use in medical practice (Dobbs et al., 1998; Fildes, 2008; Jang et al., 2007; Marshall, Demmings, Woolnough, Salim, & Man-Son-Hing, 2012; Molnar, Patel, Marshall, Man-Son-Hing, & Wilson, 2006; Sims et al., 2012; Wilson & Kirby, 2008; Woolnough et al., 2013; Yale, Hansotia, Knapp, & Ehrfurth, 2003).

The desktop (original) version of DSDA is a cognitive fitness-to-drive test showing promise as a driver-screening instrument. Data gathered with original DSDA are face valid, reliable, sufficiently predictive, test-retest reliable, and trichotomise patients via two evidence-based cut-off scores based on the likelihood of passing an on-road assessment (i.e. “Likely to Pass”, “Requires Further Testing”, and “Likely to Fail”) (Hines & Bundy, 2014; Kay, Bundy, & Clemson, 2008, 2009a, 2009b; Kay, Bundy, Clemson, Cheal, & Glendenning, 2012; O’Donnell, Morgan, & Manuguerra, 2018). However, original DSDA is not practical for medical practice and requires a trained administrator. Therefore, we further developed the test so it would be suitable for administration by general practitioners. Prior to development of the new screen, we surveyed a representative sample of 200 Australian general practitioners to identify their preferences regarding a driver screen (Brown, Cheal, Cooper, & Joshua, 2013). General practitioners reported they needed a brief (mean and median 10 min), valid and simple test. Thus, we designed touchscreen DSDA to be largely self-administered via iPad, with capacity for a practice nurse to set up and supervise the self-administered components.

Because the majority of drivers likely to take touchscreen DSDA will be older adults, we wanted to be sure that older adults could use tablet technology and feel comfortable with it (Cook et al., 2014; Dixon, Bunker, & Chan, 2007; Matthew et al., 2007; Ryan, Corry, Attewell, & Smithson, 2002; White, Janssen, Jordan, & Pollack, 2015). Older adults experience age-related changes that could affect interaction with a digital tablet (e.g. reduced vision, hearing loss, reduced reaction time, reduced coordination, and cognitive changes). We wanted to design an interface that would consider these limitations so that touchscreen DSDA examined the desired construct (i.e. awareness of the driving environment and one’s own driving performance) (Kay et al., 2009a) and not individual differences in ability to enter responses via a touchscreen. First, we reviewed optimum touchscreen design guidelines for older adults. The most relevant for this project included: large targets (minimum 0.31” or 8 mm); large, simple fonts; high-contrast colours; contrasting targets and backgrounds; caution in the design of drag tasks including testing with seniors first; avoidance of scrolling; simple and meaningful icons; and avoidance of distracting or irrelevant elements (Kobayashi et al., 2011; Loureiro & Rodrigues, 2014; Schneider, Wilkes, Grandt, & Schlick, 2008).

We took a user-centred design approach to avoid potentially costly, time-consuming user-interface problems in the clinical research phase. Hegde (2013) described usability testing as the cornerstone of best practice when designing medical devices. Usability is defined as the extent to which a product or service can be used with efficiency, effectiveness, and satisfaction by the target users to achieve specified goals in the specified context of use (International Organization for Standardization, 2010). The user-centred design philosophy places end users at the centre of the design process (Dorrington, Wilkinson, Tasker, & Walters, 2016; McCurdie et al., 2012). Elements of the design are refined via an iterative process (Hegde, 2013; McCurdie et al., 2012; Rogers & Mitzner, 2016).

We sought to make the touchscreen version of DSDA as similar as possible to the original version in order to retain test validity. However, we were transitioning from a test where a trained administrator collected, interpreted, and scored variable data via participant verbal responses, to a test where variable data were collected via participant touchscreen responses and scored automatically. This necessitated the development of an automatic variable data collection and scoring system that would reflect the decisions that would otherwise have been made by a trained assessor. In the present study, we addressed the research question “Does the touchscreen data collection system we designed collect variable data in a way that is user-friendly for older adults who may be unfamiliar with the technology?” We sought to answer this question by testing the usability of the touchscreen DSDA data collection system with older adults concurrently with touchscreen DSDA software design and programming.

2. Method

The University of Sydney Human Research Ethics Committee provided approval for the study. We conducted four rounds of usability testing on 4 days over 1 month. Results from each round informed the next stage of design and programming. We aimed to test approximately 10 participants per round. Testing with larger numbers was not considered beneficial because a repeated pattern of errors emerged after trials with 7–10 individuals. These errors needed to be addressed in programming before further feedback was useful. Each participant was tested only once.

2.1. Setting

We conducted Round-1 and Round-4 at a large aged-care residential facility. We conducted Round-2 and Round-3 at a community centre. Round-2 occurred within the context of a social group for older adults. Both centres were located in Sydney, Australia.

2.2. Participants

We placed an advertisement in community meeting areas at both centres, asking for older adult volunteers to assist in the development of a fitness-to-drive screen for general practitioners. Potential volunteers were informed that their information would be anonymous and that we would provide no advice regarding their driving. Volunteers advised centre staff if they wished to participate. A total of 35 adults volunteered: 17 males and 18 females aged between 41 and 89 (mean age 70 years); 83% (29 participants) were over 65. We collected no identifying data. No one withdrew or was excluded after agreeing to participate. Participant characteristics including iPad use are listed in Table 1.

Table 1. Summary of participant characteristics

Round-1 and Round-4 participants lived in supported care units. Eleven were ambulant; two mobilised via wheelchair. Three reported a past stroke; three reported hearing impairment; one reported significant vision impairment. Round-2 participants were all retired, ambulant, generally well, driving, and living independently in the community. Round-3 participants were younger and more active than the other groups. All were in paid employment and driving. Inclusion of a middle-aged group allowed comparison with a different generational cohort. The educational status of the sample was: post graduate degree (number [n] = 1), university degree (n = 7), college certificate (n = 6), completion of high school (n = 5), completion of middle high school (n = 9), and completion of primary school (n = 1), not reported (n = 6).

2.3. Instruments

DriveSafe measures awareness of the driving environment (Kay et al., 2008, 2009a). Touchscreen DriveSafe consists of 10 images of a 4-way intersection (see sample image in Figure 1). Each image includes between two and four potential hazards (i.e. people or vehicles). These hazards appear for 4 s then disappear, leaving only the blank intersection. Participants are asked to recall the hazards that were displayed, touching the blank intersection to identify hazard type, location, and direction of movement.

Figure 1. DriveSafe image sample.

Reproduced with permission from Cheal B, Kuang H. DriveSafe DriveAware for Touch Screen Administration Manual Pearson Australia, 2015

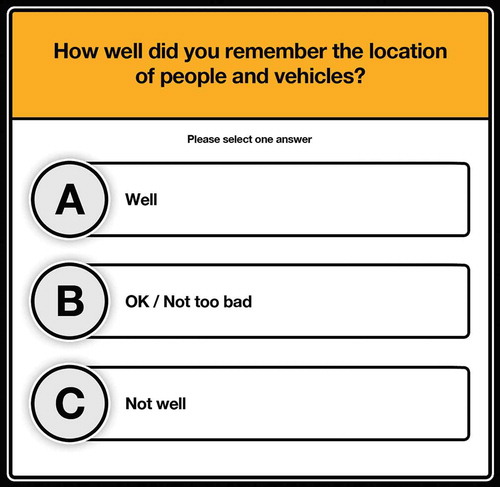

DriveAware measures awareness of one’s own driving abilities (Kay et al., 2009a, 2009b). Touchscreen DriveAware (Kay et al., 2009b) consists of two self-administered questions and five questions administered by a general practitioner or suitably qualified health professional (see sample question in Figure 2). The DriveAware items yield a discrepancy score based on the difference between the patient’s self-ratings and the clinician’s ratings, or performance in DriveSafe.

Figure 2. DriveAware image sample.

Reproduced with permission from Cheal B, Kuang H. DriveSafe DriveAware for Touch Screen Administration Manual Pearson Australia, 2015

Touchscreen DSDA was presented to participants via a 3rd generation iPad (running iOS 9), with a 9.7-inch, 2048 × 1536 pixel (264 pixels per inch), multitouch “retina” display. Headphones and a stylus were available to use depending on each participant’s preference.
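To make the relationship between the physical target-size guideline cited in the Introduction (minimum 8 mm) and this display concrete, the following minimal Python sketch converts millimetres to device pixels at 264 pixels per inch. The function names `mm_to_pixels` and `meets_minimum_target` are hypothetical and used only for illustration; they are not part of the touchscreen DSDA software.

```python
MM_PER_INCH = 25.4


def mm_to_pixels(size_mm: float, pixels_per_inch: float = 264.0) -> float:
    """Convert a physical length in millimetres to device pixels."""
    return size_mm / MM_PER_INCH * pixels_per_inch


def meets_minimum_target(width_px: float, height_px: float,
                         min_mm: float = 8.0,
                         pixels_per_inch: float = 264.0) -> bool:
    """Return True if both sides of a touch target are at least min_mm long."""
    min_px = mm_to_pixels(min_mm, pixels_per_inch)
    return width_px >= min_px and height_px >= min_px


if __name__ == "__main__":
    # An 8 mm target spans roughly 83 device pixels on a 264 ppi display.
    print(round(mm_to_pixels(8.0), 1))
```

Under these assumptions, a target drawn at 84 × 84 pixels would satisfy the 8 mm guideline, whereas one drawn at 84 × 80 pixels would not.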

3. Procedure

We developed a pilot version of touchscreen DSDA based on screen blueprints, which provided a visual guide of the skeletal framework and arrangement of elements in the iPad application. We performed several rounds of in-house testing and quality checks until we had created a satisfactory version for the present study.

The first author trialled the pilot version at the two centres. At the time of the trial, each participant sat on a chair with the iPad on a table in front. Volume and brightness were adjusted to full. Participants adjusted the position of the iPad to suit their focal length and chose whether to use a stylus or headphones. The examiner recorded participant actions and comments during testing with attention to apparent ability to understand test requirements, operate functions (e.g. tap, drag, undo, and buttons), and evidence of any anxiety or frustration. The examiner also recorded any technical difficulties related to programming. The first author conducted a brief interview with each participant post testing including questions regarding test difficulty, ability to understand and follow written and audio instructions, and ability to operate the device.

After each round of testing, the first author discussed any difficulties encountered and potential solutions with the iPad application developers. Agreed programming and design changes were made and quality checks performed, followed by re-testing with participants. Testing continued until touchscreen DSDA was fully programmed and participants could independently self-administer the test.

3.1. Analysis

The analysis focused on functional outcomes: whether participants could understand the test requirements, successfully perform the associated actions via the touchscreen (e.g. tap a target or adjust an arrow direction), and complete the required task in a timely manner and without errors. We assessed these outcomes against the project goals and objectives presented in Table 2.

Table 2. Touchscreen DriveSafe DriveAware development goals and objectives

4. Results

The following is a summary of the main challenges encountered and the solutions implemented prior to testing in subsequent rounds.

4.1. Test set-up

Usability testing provided important insight regarding optimum test set-up. For example, four Round-1 participants forgot to wear their reading glasses and all failed to attend to the screen at the commencement of each item. Some had difficulty entering responses via touch, largely due to incorrect finger angle when the iPad was flat on the table. We addressed these difficulties by adding a written and audio prompt to put reading glasses on if worn; adding a countdown (i.e. “3, 2, 1”) and bell to cue timely attention; and placing the iPad on a stand angled to 20 degrees. Results from Round-2 and Round-3 testing indicated these measures had resolved the testing difficulties; we identified no further test set-up challenges.

Three participants reported hearing loss in Round-4. One had a significant loss, did not wear hearing aids, and could not hear the instructions with full volume. However, all three participants reported hearing the instructions clearly once wearing headphones. One participant had a significant hand tremor that impaired touch ability. A stylus solved this problem. Round-2 participants were asked to try both stylus and finger inputs and could use either equally successfully.

4.2. DriveSafe

The primary goal for the DriveSafe evaluation was to determine user-friendly input methods.

4.2.1. Object location input

Round-1 participants (n = 9) triggered unwanted object location responses by resting a hand on the screen or by incorrect touch. Also, we encountered technical difficulties for “tap” functions because some objects were situated too close together. We resolved all difficulties via use of an iPad stand and optional stylus, a programmed 0.5-s delay between taps to allow menus to open, and adjustment of object proximity. We encountered no further difficulties in subsequent rounds.
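The 0.5-s delay between taps can be illustrated with a small filter that discards any tap arriving too soon after the last accepted one. This is a minimal Python sketch of one way such a delay could be enforced, not the implementation used in the iPad application; the class name `TapFilter` and its fields are hypothetical, and timestamps are assumed to be supplied in seconds by the caller.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TapFilter:
    """Accept a tap only if enough time has passed since the last accepted tap."""

    min_interval_s: float = 0.5  # delay reported in the text
    last_accepted_s: Optional[float] = None

    def accept(self, timestamp_s: float) -> bool:
        """Return True and record the tap if it falls outside the delay window."""
        if (self.last_accepted_s is not None
                and timestamp_s - self.last_accepted_s < self.min_interval_s):
            # Too soon after the previous accepted tap: treat as accidental.
            return False
        self.last_accepted_s = timestamp_s
        return True


if __name__ == "__main__":
    tap_filter = TapFilter()
    for t in (0.0, 0.3, 0.5, 0.9, 1.2):
        print(t, tap_filter.accept(t))
```

In this sketch a rejected tap does not restart the delay window, so a resting hand that generates a burst of touches blocks input only for 0.5 s after the last tap that was actually accepted. That choice is an assumption made for the illustration.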

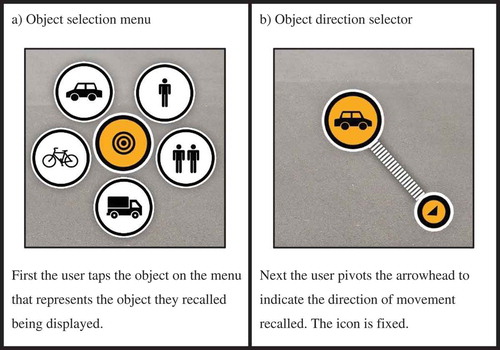

4.2.2. Object type input

Round-1 participants had difficulty with object-type menus overlapping each other or the screen edge, preventing option selection. We overcame these difficulties by programming a 1-cm exclusion zone around the perimeter of the screen and by moving close objects further apart. Round-2 participants failed to notice or use the icon depicting two people (see Figure )). Therefore, we enlarged it and moved it to a more prominent location on the menu (from left to right). We added forced selection of a couple icon to a practice item and inserted an error message to provide clarification (i.e. “For 2 people walking together, use the 2-person icon”). We encountered no further difficulties in subsequent rounds.
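One possible reading of the 1-cm exclusion zone is that a pop-up menu is repositioned so that it never extends into a margin around the screen edge. The Python sketch below illustrates that reading only. The function name `clamp_menu_origin`, the coordinate convention (origin at the top-left corner, units in pixels), and the margin of 104 pixels (approximately 1 cm at 264 pixels per inch) are assumptions, not details taken from the application.

```python
from typing import Tuple


def clamp_menu_origin(x: float, y: float, menu_w: float, menu_h: float,
                      screen_w: float = 2048.0, screen_h: float = 1536.0,
                      margin: float = 104.0) -> Tuple[float, float]:
    """Move a menu's top-left corner so the whole menu stays inside the margin.

    All values are in pixels; margin=104 approximates 1 cm at 264 ppi.
    """
    max_x = screen_w - margin - menu_w
    max_y = screen_h - margin - menu_h
    if max_x < margin or max_y < margin:
        raise ValueError("menu is too large to fit inside the exclusion zone")
    clamped_x = min(max(x, margin), max_x)
    clamped_y = min(max(y, margin), max_y)
    return clamped_x, clamped_y


if __name__ == "__main__":
    # A menu requested at the bottom-right corner is pulled back on screen.
    print(clamp_menu_origin(2000, 1500, menu_w=300, menu_h=200))
```

A menu requested well inside the screen is returned unchanged, so the adjustment only affects objects placed near an edge.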

4.2.3. Direction of movement input

We trialled two direction input methods in Round-1: an 8-way arrow icon (i.e. tap the arrow representing the object’s direction) and a drag icon (i.e. drag the object in the desired direction). More people preferred “drag” to “arrows” (n = 10:2). One participant found both options difficult. Participants reported drag provided a more accurate reflection of their intended direction stating, “The arrows are not really spot on” and “I wish there was one in the middle”. Because the drag function was the most successful, we discontinued arrow testing. Five participants in Round-2 had difficulty with the drag motion, either not dragging the icon far enough or trying to drag it too far. We resolved this in subsequent rounds by fixing the icon at the tap location and snapping out a ghosted arrow once the participant selected the object type. The ghosted arrow became solid once touched (see Figure )). We also extended the drag radius and required a pivot action, which older adults found easier than drag alone.
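The participants' comments that the arrows were "not really spot on" can be understood geometrically: an 8-way arrow restricts the response to multiples of 45 degrees, so an intended direction can be misrepresented by up to 22.5 degrees, whereas a drag can express any angle. The Python sketch below illustrates this under stated assumptions; the function names are hypothetical, and angles are measured counter-clockwise from the positive x-axis with screen y-coordinates increasing downward.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def drag_angle_deg(start: Point, end: Point) -> float:
    """Direction of a drag in degrees (0 = right, 90 = up), in [0, 360)."""
    dx = end[0] - start[0]
    dy = start[1] - end[1]  # flip because screen y grows downward
    return math.degrees(math.atan2(dy, dx)) % 360.0


def snap_to_8_way(angle_deg: float) -> float:
    """Nearest of the eight arrow directions (multiples of 45 degrees)."""
    return (round(angle_deg / 45.0) * 45.0) % 360.0


def snap_error_deg(angle_deg: float) -> float:
    """Angular difference between an intended direction and its 8-way arrow."""
    diff = abs(angle_deg - snap_to_8_way(angle_deg)) % 360.0
    return min(diff, 360.0 - diff)


if __name__ == "__main__":
    intended = drag_angle_deg((100.0, 100.0), (160.0, 70.0))
    print(round(intended, 1), snap_to_8_way(intended),
          round(snap_error_deg(intended), 1))
```

For example, a participant who intends a direction exactly midway between two arrows has no arrow closer than 22.5 degrees, which is consistent with the comment "I wish there was one in the middle".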

We wanted to make the touchscreen interface user-friendly without prompting responses. For example, in the original version of DriveSafe, patients could “forget” to provide a verbal response for the “direction” category; this contributed to scoring and served to discriminate amongst individuals. We initially tried “no cue” for this category but each of the 13 participants forgot to enter at least one response. Lack of a visual cue was therefore not useful for discriminating ability amongst participants of varying ability levels. We addressed the problem of eliciting a response, whilst minimising cueing, by inserting the ghosted arrow. This prompted participants to enter a direction response but, if they failed to respond, they could still proceed.

4.2.4. Item progression

In Round-1, we identified a significant problem in self-administration of item progression via the “next” button. All participants over-focused on tapping this button, missing the brief display of objects. We corrected this by a) delaying the appearance of the “next” button until a first response had been entered; b) adding written and audio instructions once objects had disappeared (i.e. “Tap where you saw the object”); and c) inserting a message at the conclusion of each item (i.e. “Are you sure you have completed this screen?”). Some participants tried to enter responses before objects had disappeared. This was largely resolved by the aforementioned programming changes. Inserting an error message was not viable, as this would have distracted participants from committing the hazards to memory.
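The item-progression rules described above can be sketched as a small state object: hazards are visible for 4 s, and the “next” button appears only after the hazards have disappeared and at least one response has been entered. This is an illustrative Python sketch, not the application's code. The class name `ItemState` is hypothetical, and silently ignoring taps made while hazards are still visible is an assumption; the text states only that an error message was not viable.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

HAZARD_DISPLAY_S = 4.0  # hazards are displayed for 4 s, as described in Section 2.3


@dataclass
class ItemState:
    """Tracks one DriveSafe item from hazard display to response entry."""

    elapsed_s: float = 0.0
    responses: List[Tuple[float, float]] = field(default_factory=list)

    def tick(self, dt_s: float) -> None:
        """Advance the item clock by dt_s seconds."""
        self.elapsed_s += dt_s

    @property
    def hazards_visible(self) -> bool:
        return self.elapsed_s < HAZARD_DISPLAY_S

    def tap(self, x: float, y: float) -> bool:
        """Record a location response; taps during the display are ignored."""
        if self.hazards_visible:
            return False  # assumption: early taps are dropped without a message
        self.responses.append((x, y))
        return True

    @property
    def next_button_visible(self) -> bool:
        """The button appears only after the first response has been entered."""
        return not self.hazards_visible and len(self.responses) > 0


if __name__ == "__main__":
    item = ItemState()
    print(item.tap(10, 20), item.next_button_visible)  # during display
    item.tick(4.0)
    print(item.tap(10, 20), item.next_button_visible)  # after display
```

Gating the button on the first response removes the opportunity to tap “next” during the hazard display, which is the behaviour the Round-1 participants over-focused on.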

Designing a user-friendly “undo” process proved challenging in all rounds. Middle-aged and older participants had contrasting experiences and difficulties. We added undo instructions into the demonstration image and forced undo practice because Round-1 and Round-2 older adults did not understand how to use the undo button. However, the middle-aged participants strongly disliked being forced to practice (e.g. stating, “I don’t think I should have to press undo. I didn’t make a mistake” and “No. I’m not undoing because I did it right”). Making practice optional in conjunction with additional instructions worked well for all adults.

4.2.5. Understanding of test requirements

In Round-1, only one of the 13 participants understood the test requirements. Participants continually pressed the next button, missing many items. We resolved this in Round-2 with the forced practice of incorrect demonstration items and two levels of in-application instructions. To avoid disrupting test flow for participants who quickly understood test requirements, only participants having difficulties received second-level instructions. The wording of some test instructions confused participants in Round-2. For example, one DriveSafe instruction stated, “You will now see 10 images of an intersection”. Including numerals confused the participants (e.g. “That’s a problem. I thought I needed to look for 10 items”), so we removed them. Field-testing allowed identification of particular words or colours that were problematic. For example, participants failed to understand the word “marker”. Thus, we substituted the word “arrow”. Participants reported a red message background made them feel like they had done something wrong. We resolved this by changing the background colour to blue.

Round-4 participants had the least iPad exposure: only one participant reported owning an iPad but rarely using it. The others had never used one. One 79-year-old male with significant hearing loss, full vision loss in one eye, and glaucoma in the other eye, presented a particular challenge (he was still driving). He reported he had never seen an iPad and did not understand the touchscreen concept. Despite this, he completed the test successfully and with ease, wearing headphones. The only difficulty he had was working out how to drag the direction icon. The second level of in-application instructions addressed the difficulty and he required no administrator assistance.

We learned about the need to create an administrator-assisted option for test administration because two participants struggled with the practice items (neither had used an iPad before). A brief verbal prompt addressed their difficulties but the first participant became frustrated after five item repetitions. We developed an administrator-assist procedure to meet needs we observed during the study. For example, we concluded that an administrator should intervene after four unsuccessful practice attempts.

We chose not to develop a solution for every observed problem. For example, one participant took twice as long as the others to complete the test and demonstrated behaviours not observed amongst other participants (e.g. placing a car in the foreground of every image although there was no object at this location). These difficulties may have related to test design or to the participant’s cognitive deficits. Because we did not observe a similar problem in any other participant, we did not develop a programming solution.

4.3. DriveAware

Participants self-administered DriveAware with ease through all rounds of testing. Therefore, we made no adjustments. The original design worked well in practice.

5. Discussion

Older adults in this study found basic touchscreen operations easy to perform without training, even when unfamiliar with the technology. All participants quickly understood how to interact with the iPad, although 23 of 29 participants aged over 65 had never or rarely used one. Participants’ overall response to the touchscreen test was positive; spontaneous comments from four participants indicated they found the test face-valid (e.g. “I think it is fair in the fact that it makes you look at your surrounds, which a lot of older people don’t, and just basic road rules I guess” and “That seemed quite good. If my doctor made me do this to check my driving, that would be fair”).

DriveSafe was the more difficult subtest to convert to iPad administration because it was the more complex aspect of DSDA. The original version relied on an administrator to interpret patient verbal responses. It was fundamental to consider motor and cognitive performance required for touch-based functions such as “tap” and “drag” in the DSDA conversion (Findlater, Froehlich, Fattal, Wobbrock, & Dastyar, 2013). Consistent with other research (Cockburn, Ahlström, & Gutwin, 2012; Findlater et al., 2013; Kobayashi et al., 2011), participants found tap intuitive and drag more difficult. However, participants were not precise when entering responses via either action. This may be due to parallax, because the target is obscured by the finger, or due to the additional dexterity and pressure required to “hold” and slide the target against resistance (Cockburn et al., 2012; Findlater et al., 2013; Kobayashi et al., 2011; Loureiro & Rodrigues, 2014). The arrow design that avoided the need for a precise dragging action worked better than the other options trialled. We considered this lack of precision when determining scoring in a subsequent study, allowing generous zones to be scored as correct. Consistent with design guidelines for older adults (Loureiro & Rodrigues, 2014), we found timing of interface elements critical to smooth progression of the test. For example, participants encountered difficulties in Round-1 because the “next” button appeared before it was needed, resulting in over-focus on this button and misapplication.

Cueing timely attention to the small iPad screen was fundamental in the conversion because participants determined when to progress to the next item. In touchscreen DSDA, a loud bell and countdown timer successfully cued attention via auditory and visual prompts. Following touchscreen design guidelines for older adults, which recommend that seniors be free to adjust the iPad distance for comfortable viewing (Loureiro & Rodrigues, 2014), we did not standardise distance to the screen. This worked well in practice. Participants did not have difficulty observing the smaller hazards, which varied in size from 0.4” (10 mm) to 2.4” (62 mm).

We tested all text and function changes across age groups as any change significantly impacted performance. The middle-aged cohort had difficulty with different instructions and functions than the older age groups. Inclusion of participants with challenges common to older age, such as hearing and vision loss, tremors, and potential cognitive changes, avoided user-interface problems that might have occurred if we had considered only the needs of able-bodied users. The performance of participants with these challenges informed test set-up and administration procedures and identified the need for an examiner-assisted version of administration.

5.1. Limitations

A limitation of this study was the small sample size, which may have resulted in low-probability errors not being detected (Hegde, 2013). However, we considered a range of other sources to mitigate this: literature review, task analysis, prototyping, interviews, expert reviews, and continuous quality checking.

5.2. Conclusion

A user-centred design process allowed us to develop a user-friendly touchscreen data collection system for older adults, the group most likely to take touchscreen DSDA and most likely to struggle with the technology. The approach taken allowed us to test and validate our design assumptions and ensured that design errors were not missed. We believe that conducting usability testing concurrently with iPad application design, programming and evaluation resulted in significant cost, time, and efficiency benefits. A further study was conducted to develop an automatic data scoring system to reflect the decisions that would otherwise have been made by an expert rater (Cheal, Bundy, Patomella, Scanlan, & Wilson, 2018). Additionally, a further study was conducted to examine the psychometric properties and predictive validity of data gathered with touchscreen DSDA before it could be used in clinical practice (Cheal & Kuang, 2015).

Competing interests

The authors declare no competing interests.

Implications for rehabilitation

● Desktop (original) DSDA is a cognitive fitness-to-drive test that requires a trained administrator.

● Conversion of DSDA to touchscreen provides general practitioners and other health professionals without driver-assessment training, with a practical, clinical cognitive fitness-to-drive screen.

● Usability testing of touchscreen DSDA throughout the design and programming phase provided information critical to ensuring the test was user-friendly for older adults and design errors were not missed.

Beth Cheal was employed by Pearson to project manage the conversion to touchscreen. Anita Bundy is a test author and receives royalties from sale of DSDA.

Acknowledgements

We acknowledge the contributions of the iPad application developer Very Livingstone.

Additional information

Funding

Notes on contributors

Beth Cheal

Touchscreen DriveSafe DriveAware (DSDA) is a standardised assessment of cognitive fitness to drive that has been administered by driver-trained occupational therapists for many years. Our group converted DSDA into a practical and predictive touchscreen test for general practitioners to use in medical practice. This involved a number of research phases. First, we tested usability of touchscreen DSDA with older adults throughout the design and programming phases to ensure the test was user-friendly. Next, we developed and tested an automatic data collection and scoring system that reflected the decisions that would have been made by a trained assessor. Finally, we conducted a study to examine the internal validity, reliability and predictive validity of data gathered with touchscreen DSDA. A standardised on-road assessment was the criterion measure. Rasch analysis provided evidence that touchscreen DSDA had retained the strong psychometric properties of original DSDA. The present paper relates to the test development stage.

References

- Al-Hassani, S. B., & Alotaibi, N. M. (2014). The impact of driving cessation on older Kuwaiti adults: Implications to occupational therapy. Occupational Therapy in Health Care, 28, 264–276. doi:10.3109/07380577.2014.917779

- Austroads, National Transport Commission. (2016). Assessing fitness to drive for commercial and private vehicle drivers. Sydney: Austroads.

- Brown, F., Cheal, B., Cooper, M., & Joshua, N. (2013). DriveSafe drive aware digital development: General practitioner survey findings. Sydney, Australia: Pearson Clinical Assessment Australia & New Zealand.

- Charlton, J., Sjaanie, K., Odell, M., Devlin, A., Langford, J., O’Hare, M., … Scully, M. (2010). Influence of chronic illness on crash involvement of motor vehicle drivers (2nd ed.). Melbourne, Australia: Monash University Accident Research Centre.

- Cheal, B., Bundy, A., Patomella, A., Scanlan, J. N., & Wilson, C. (2018). Converting the DriveSafe subtest of DriveSafe DriveAware for touchscreen administration. Australian Occupational Therapy Journal. (In press). doi:10.1111/1440-1630.12558

- Cheal, B., & Kuang, H. (2015). DriveSafe drive aware for touch screen administration manual. Sydney, Australia: Pearson Australia Group Pty Ltd. Retrieved from: https://www.pearsonclinical.com.au/products/view/563

- Cockburn, A., Ahlström, D., & Gutwin, C. (2012). Understanding performance in touch selections: Tap, drag and radial pointing drag with finger, stylus and mouse. International Journal of Human-Computer Studies, 70, 218–233. doi:10.1016/j.ijhcs.2011.11.002

- Cook, D. J., Moradkhani, A., Douglas, K. S., Prinsen, S. K., Fischer, E. N., & Schroeder, D. R. (2014). Patient education self-management during surgical recovery: Combining mobile (iPad) and a content management system. Telemedicine and e-Health, 20, 312–317. doi:10.1089/tmj.2013.0219

- Coxon, K., & Keay, L. (2015). Behind the wheel: Community consultation informs adaptation of safe-transport program for older drivers. BMC Research Notes, 8, 764. doi:10.1186/s13104-015-1745-0

- Dickerson, A. E., Reistetter, T., & Gaudy, J. R. (2012). The perception of meaningfulness and performance of instrumental activities of daily living from the perspectives of the medically at-risk older adults and their caregivers. Journal of Applied Gerontology, 32, 749–764. doi:10.1177/0733464811432455

- Dixon, S., Bunker, T., & Chan, D. (2007). Outcome scores collected by touchscreen: Medical audit as it should be in the 21st century? Annals of the Royal College of Surgeons of England, 89, 689–691. doi:10.1308/003588407X205422

- Dobbs, A. R., Heller, R. B., & Schopflocher, D. (1998). A comparative approach to identify unsafe older drivers. Accident Analysis & Prevention, 30, 363–370. doi:10.1016/S0001-4575(97)00110-3

- Dorrington, P., Wilkinson, C., Tasker, L., & Walters, A. (2016). User-centered design method for the design of assistive switch devices to improve user experience, accessibility, and independence. Journal of Usability Studies, 11, 66–82.

- Fildes, B. N. (2008). Future directions for older driver research. Traffic Injury Prevention, 9, 387–393. doi:10.1080/15389580802272435

- Findlater, L., Froehlich, J. E., Fattal, K., Wobbrock, J. O., & Dastyar, T. (2013). Age-related differences in performance with touchscreens compared to traditional mouse input. ACM conference on human factors in computing systems (CHI). 2013. Paris, France.

- Fricke, J., & Unsworth, C. (2001). Time use and importance of instrumental activities of daily living. Australian Occupational Therapy Journal, 48, 118–131. doi:10.1046/j.0045-0766.2001.00246.x

- Hegde, V. (2013). Role of human factors/usability engineering in medical device design. Proceedings annual Reliability and Maintainability Symposium (RMAS). Orlando, FL: IEEE.

- Hines, A., & Bundy, A. (2014). Predicting driving ability using DriveSafe and DriveAware in people with cognitive impairments: A replication study. Australian Occupational Therapy Journal, 61, 224–229. doi:10.1111/1440-1630.12112

- International Organization for Standardization. (2010). ISO 9241-210:2010 Ergonomics of human-system interaction - Part 210: Human-centered design for interactive systems. Genève, Switzerland: Author.

- Jang, R. W., Man-Son-Hing, M., Molnar, F. J., Hogan, D. B., Marshall, S. C., Auger, J., … Naglie, G. (2007). Family physicians’ attitudes and practices regarding assessments of medical fitness to drive in older persons. Journal of General Internal Medicine, 22, 531–543. doi:10.1007/s11606-006-0043-x

- Kay, L., Bundy, A., & Clemson, L. (2008). Predicting fitness to drive using the visual recognition slide test (USyd). The American Journal of Occupational Therapy, 62, 187–197.

- Kay, L., Bundy, A., & Clemson, L. (2009a). Predicting fitness to drive in people with cognitive impairments by using DriveSafe and DriveAware. Archives of Physical Medicine and Rehabilitation, 90, 1514–1522. doi:10.1016/j.apmr.2009.03.011

- Kay, L., Bundy, A., & Clemson, L. (2009b). Validity, reliability and predictive accuracy of the driving awareness questionnaire. Disability and Rehabilitation, 31, 1074–1082. doi:10.1080/09638280802509553

- Kay, L., Bundy, A., Clemson, L., Cheal, B., & Glendenning, T. (2012). Contribution of off-road tests to predicting on-road performance: A critical review of tests. Australian Occupational Therapy Journal, 59, 89–97. doi:10.1111/j.1440-1630.2011.00989.x

- Kobayashi, M., Hiyama, A., Miura, T., Asakawa, C., Hirose, M., & Ifukube, T. (2011). Elderly user evaluation of mobile touchscreen interactions. (P. Campos, Ed.). Lisbon, Portugal: International Federation for Information Processing.

- Kostyniuk, L. P., & Shope, J. T. (2003). Driving and alternatives: Older drivers in Michigan. Journal of Safety Research, 34, 407–414.

- Loureiro, B., & Rodrigues, R. (2014). Design guidelines and design recommendations of multi-touch interfaces for elders. ACHI 2014: The seventh international conference on advances in computer-human interactions. Barcelona, Spain.

- Marshall, S. C. (2008). The role of reduced fitness to drive due to medical impairments in explaining crashes involving older drivers. Traffic Injury Prevention, 9, 291–298. doi:10.1080/15389580801895244

- Marshall, S. C., Demmings, E. M., Woolnough, A., Salim, D., & Man-Son-Hing, M. (2012). Determining fitness to drive in older persons: A survey of medical and surgical specialists. Canadian Geriatrics Journal, 15, 101–119. doi:10.5770/cgj.15.30

- Marshall, S. C., & Man-Son-Hing, M. (2011). Multiple chronic medical conditions and associated driving risk: A systematic review. Traffic Injury Prevention, 12, 142–148. doi:10.1080/15389588.2010.551225

- Matthew, A. G., Currie, K. L., Irvine, J., Ritvo, P., Santa Mina, D., Jamnicky, L., … Trachtenberg, J. (2007). Serial personal digital assistant data capture of health-related quality of life: A randomized controlled trial in a prostate cancer clinic. Health and Quality of Life Outcomes, 5, 38. doi:10.1186/1477-7525-5-38

- McCurdie, T., Taneva, S., Casselman, M., Yeung, M., McDaniel, C., Ho, W., & Cafazzo, J. (2012, Fall). mHealth consumer apps: The case for user-centered design. Horizons, 49–54.

- Molnar, F. J., Patel, A., Marshall, S. C., Man-Son-Hing, M., & Wilson, K. G. (2006). Clinical utility of office-based cognitive predictors of fitness to drive in persons with dementia: A systematic review. Journal of the American Geriatrics Society, 54, 1809–1824. doi:10.1111/j.1532-5415.2006.00967.x

- O’Donnell, J. M., Morgan, M. K., & Manuguerra, M. (2018, March 2). Patient functional outcomes and quality of life after microsurgical clipping for unruptured intracranial aneurysm. A prospective cohort study. Journal of Neurosurgery, 1–8.

- Rogers, W. A., & Mitzner, T. L. (2016). Envisioning the future for older adults: Autonomy, health, well-being, and social connectedness with technology support. Futures, 87, 133–139.

- Ryan, J. M., Corry, J. R., Attewell, R., & Smithson, M. J. (2002). A comparison of an electronic version of the SF-36 general health questionnaire to the standard paper. Quality of Life Research, 11, 19–26.

- Schneider, N., Wilkes, J., Grandt, M., & Schlick, C. (2008). Investigation of input devices for the age-differentiated design of human-computer interaction. Proceedings of the Human Factors and Ergonomics Society 52nd Annual Meeting - 2008. New York, Santa Monica, CA: Human Factors and Ergonomics Society.

- Sims, J., Rouse-Watson, P., Schattner, A., Beveridge, A., & Jones, K. (2012). To drive or not to drive: Assessment dilemmas for GPs. International Journal of Family Medicine, 1–6. doi:10.1155/2012/417512

- White, J. H., Janssen, H., Jordan, L., & Pollack, M. (2015). Tablet technology during stroke recovery: A survivor’s perspective. Disability and Rehabilitation, 37, 1186–1192. doi:10.3109/09638288.2014.958620

- Wilson, L. R., & Kirby, N. H. (2008). Individual differences in South Australian general practitioners’ knowledge, procedures and opinions of the assessment of older drivers. Australasian Journal on Ageing, 27, 121–125. doi:10.1111/j.1741-6612.2008.00304.x

- Woolnough, A., Salim, D., Marshall, S. C., Weegar, K., Porter, M. M., Rapoport, M. J., … Vrkljan, B. (2013). Determining the validity of the AMA guide: A historical cohort analysis of the assessment of driving related skills and crash rate among older drivers. Accident Analysis & Prevention, 61, 311–316. doi:10.1016/j.aap.2013.03.020

- Yale, S., Hansotia, P., Knapp, D., & Ehrfurth, J. (2003). Neurologic conditions: Assessing medical fitness to drive. Clinical Medicine & Research, 1, 177–188. doi:10.3121/cmr.1.3.177