ABSTRACT

Since the first descriptions of design research (DR), there have been calls to better define it to increase its rigour. Yet five uncertainties remain: (1) the processes for conducting DR, (2) how DR differs from other forms of research, (3) how DR differs from design, (4) the products of DR, and (5) why DR can answer certain research questions more effectively than other methodologies. To resolve these uncertainties, we define educational design research as a meta-methodology conducted by education researchers to create practical interventions and theoretical design models through a design process of focusing, understanding, defining, conceiving, building, testing, and presenting, that recursively nests other research processes to iteratively search for empirical solutions to practical problems of human learning. By better articulating the logic of DR, researchers can more effectively craft, communicate, replicate, and teach DR as a useful and defensible research methodology.

Design research (DR) provides educational researchers with a methodology for “use-inspired basic research” (Stokes, Citation1997). In DR, researchers design and study interventions that solve practical problems in order to generate effective interventions and theory useful for guiding design (Brown, Citation1992; Collins, Joseph, & Bielaczyc, Citation2004; McKenney & Reeves, Citation2012; O’Neill, Citation2012; Richey, Klein, & Nelson, Citation2004; Sandoval & Bell, Citation2004; van den Akker, Citation1999). DR recognises that neither theory nor interventions alone are sufficient; theory and interventions drive each other in complex, iterative ways. Existing models of the relationship between research and design, in which basic research leads to applied research, then to development, and then to products, oversimplify how real-world innovations are created: basic research does not always provide the foundation for, nor inexorably lead to, practical application (Clark & Guba, Citation1965; Rogers, Citation2003; Stokes, Citation1997), and “innovative design must occasionally precede theoretical understanding” (O’Neill, Citation2012, p. 130). Conversely, interventions unguided by theory are likely to be incremental and haphazard. Theory derives its purpose from application and application derives its power from theory.

Although DR promises to merge theory and application, DR still needs work. After three decades of work on DR, including early descriptions by Brown (Citation1992), special issues of Educational Researcher (Kelly, Citation2003) and the Journal of the Learning Sciences (Barab & Squire, Citation2004), and several edited volumes and books (Kelly, Lesh, & Baek, Citation2008; McKenney & Reeves, Citation2012; Plomp & Nieveen, Citation2007; van den Akker, Citation1999), some have concluded that “as promising as the methodology is, much more effort … is needed to propel the type of education innovation that many of us feel is required” (Anderson & Shattuck, Citation2012, p. 24). Even advocates within the DR community note that the “…chorus of voices makes clear that if you are really thinking as a design-based researcher, you regularly wonder what you are up to” (O’Neill, Citation2012, p. 120). Dede put it more bluntly: “…neither policymakers nor practitioners want what the design research community is selling right now. We appropriately don’t match the narrow conceptions of science currently in vogue at the federal level, but have much internal standard-setting to accomplish before we can put forward a defensible alternative” (Dede, Citation2004, p. 114). The benefits of increased methodological consensus warrant a renewed attempt to provide a formal definition of DR (Anderson & Shattuck, Citation2012; Hoadley, Citation2004; Kelly, Citation2004; O’Neill, Citation2012; van den Akker, Citation1999).

Although it is difficult to evaluate an entire research methodology (McKenney & Reeves, Citation2013), as proponents of DR we must take these criticisms as a friendly challenge to more rigorously define DR and its products (Hoadley, Citation2004), especially if we wish researchers from other methodological traditions in education to accept DR as a credible methodology.

Challenges to paradigmatic development of design research

Although DR has “…won many adherents, including both practitioners and career researchers … not all of them necessarily agree on what it is” (O’Neill, Citation2012, p. 120). Many uncertainties about design research (DR) arise because we have not sufficiently defined its logic. Specifically, current accounts have not sufficiently articulated: (1) the processes for conducting DR, (2) what distinguishes DR from other forms of research, (3) what distinguishes DR from design practice, (4) the products of DR, and (5) the characteristics of DR that make it more effective than other methodologies for answering certain questions.

Challenge 1: uncertainty about the design research process

The process of DR remains uncertain. Both “within and without the learning sciences there remains confusion about how to do DR, with most scholarship on the approach describing what it is rather than how to do it” (Sandoval, Citation2014, p. 18).

Articulating the process of DR is necessary to: make coherent decisions about which methods to apply and when; explain the high-level process of DR to new researchers; effectively communicate DR methodology in the concise form required for scholarly publication; and understand similarities and differences across different instantiations of DR, which allows us to borrow methods and improve the DR methodology. Without a clearly defined logic of the DR process(es), we cannot justify how DR achieves the twin goals of producing theory and interventions, we cannot distinguish DR from design, and we cannot distinguish DR from other forms of research. Articulating the DR process allows us to better research and better communicate.

There seems to be no accepted, precisely described DR process at the level of specificity dedicated to other methodologies such as experiments, case study research, or grounded theory. A precise account of the DR process would define analytically distinct phases describing the different activities and goals of DR projects. Many of the foundational definitions of DR (Sandoval & Bell, Citation2004; van den Akker, Citation1999) do not aim to define the phases of DR and those that do (for example, Richey et al., Citation2004) define the phases at too coarse a level (e.g., design, development, and evaluation) to craft, communicate, replicate, and teach DR as a useful and defensible research methodology. Other foundational accounts of the DR process (Bannan, Citation2007; Bannan-Ritland, Citation2003; McKenney & Reeves, Citation2012; Plomp & Nieveen, Citation2007) have described much of the content of the phases of DR, but not clear, analytically distinct phases.

The phases of the DR process should be defined by their goals; however, many descriptions of DR conflate the goals with time. For example, some descriptions of the DR process might include phases such as “early prototyping” and “final prototyping”, where the intermediate product created in one phase (e.g., the prototype) is finalised in another phase. Defining phases in this way leads to multiple phases that share the same goal (e.g., both early prototyping and final prototyping have the goal of prototyping) and are therefore not analytically distinct. The same problem occurs when descriptions of the DR process conflate phase with implementation, for example, a design process that has phases such as “evaluation of local impact” and “evaluation of broader impact” (both of which have the goal of testing). In this case, the description of DR defines phases based on the spread of the intervention (local or broad), again leading to phases that are not analytically distinct. When phases are defined by time, one must follow them in sequential order, which implies lockstep, recipe-like descriptions of the design process that obscure its iterative nature. DR theorists explicitly argue against these types of misrepresentations of DR (e.g., McKenney & Reeves, Citation2013; O’Neill, Citation2012; Plomp & Nieveen, Citation2007). Using different labels for activities with the same goal also makes it more difficult to understand the building blocks of DR and how they can be flexibly combined for different projects. For example, if a design researcher conducts three rounds of prototyping and testing, the researcher would have to create additional phases such as “early prototyping”, “intermediate prototyping”, and “final prototyping”. If the design researcher later conducts four rounds of testing, even more phases would need to be created, leading to an unnecessary proliferation of phases to describe the same activity and making DR more difficult to understand. Defining phases by time hinders the development of a shared, field-wide understanding of the DR process.

At the other, more concrete extreme, some of the most popular design processes used by practitioners, such as Instructional Systems Design (ISD) (Dick, Carey, & Carey, Citation2014), provide a clearly articulated process and methods for designing instruction but do not attempt to define the general phases of design that can apply to different types of educational design, nor how such a process might be used for research.

Unfortunately, the phases of DR remain uncertain.

Challenge 2: uncertainty about how DR differs from other forms of research

There is general agreement that DR is unique among research methodologies in that it produces both theory and interventions (Brown, Citation1992; Collins et al., Citation2004; McKenney & Reeves, Citation2012; O’Neill, Citation2012; Richey et al., Citation2004; Sandoval & Bell, Citation2004; van den Akker, Citation1999). But upon closer inspection, it is unclear how DR differs from other research methodologies, if at all.

For example, in reconstructive studies, design researchers use case studies, qualitative observation and experiments to retrospectively examine the design process, typically as conducted by designers outside the research team (Type 2 studies in Richey et al., Citation2004; or reconstructive studies in van den Akker, Citation1999). So the methodology of reconstructive DR studies does not differ substantially from existing research methodologies – the only difference is that the object of study is the design process. This (lack of) difference between DR and other methodologies is reflected in claims that: “methods of [design] research are not necessarily different from those in other research approaches” (van den Akker, Citation1999, p. 9) and that DR is the study, rather than the performance, of instructional design (Richey et al., Citation2004). In the case of reconstructive studies, one could argue that DR does not qualify as a distinct methodology. Moreover, one could further argue that the claim that DR produces novel interventions is misleading, because these interventions are the result of the practical activities of designers, with researchers in the role of passive observer.

At the other extreme, design researchers may themselves perform design activities to produce new interventions (Type 1 studies in Richey et al., Citation2004; or formative research in van den Akker, Citation1999). In this case, the methods of DR must be different from other research methodologies such as case studies or experiments that do not produce new interventions. So at certain times, DR is claimed to be no different from other methodologies and at other times it is claimed to result in innovations that cannot be produced using existing methodologies.

The ambiguity about the DR processes creates further uncertainty about the methods and goals of DR. Many imagine DR as a form of qualitative research useful for building theory, that is, for addressing the problem of meaning (Kelly, Citation2004) or used in the context of discovery (Kelly, Citation2006) as opposed to verifying an existing theory. Although qualitative, it is distinct not just from laboratory experiments but also from ethnography and large-scale trials (Collins et al., Citation2004). Others argue that DR can be productively interleaved with quantitative methods, for example, as a mixed methods approach crossing the field and lab (Brown, Citation1992; Kelly, Citation2006), as a point on an interleaved continuum (Hoadley, Citation2004), or as a methodology with an agnostic stance towards quantitative and qualitative perspectives (Bannan-Ritland, Citation2003). Other writings describe DR as a way to integrate other research methods (Collins et al., Citation2004) or disciplines (Buchanan, Citation2001b) and that “methods of development research are not necessarily different from those in other research approaches” (van den Akker, Citation1999, p. 9). These research methods are applied in a stage appropriate manner (Bannan-Ritland, Citation2003; Kelly, Citation2004, Citation2006).

Challenge 3: uncertainty about how DR differs from design

Proponents seek to establish DR as a distinct and valid methodology. However, in arguing for DR, we often ignore how DR differs (if at all) from design as practised in industry. Is DR simply what design practitioners already do, does it sit apart from design practice, or does it require some integration and modification of the two? We need to understand the distinction between DR and design practice because design practice is presumably what allows DR to produce novel interventions, which makes it distinct from other research methodologies. Definitions within educational research often do not clearly distinguish DR from design (Plomp & Nieveen, Citation2007; Sandoval & Bell, Citation2004).

Other definitions draw a clear distinction between design and DR by arguing that there is a difference between performing and studying design. This implies that in the forms of DR that produce the most generalised conclusions, it is not necessary for researchers to design at all (Type 2 studies in Richey et al., Citation2004). As noted earlier in Challenge 2, this undermines the claim that DR produces novel theory and interventions because Type 2 studies do not produce interventions. Presumably, these interventions are the result of design practice or other forms of DR such as Type 1 studies that are not so distinct from design practice.

In other accounts, DR is framed as similar to design practice but with a more explicit connection to theory during preliminary investigation and when embedding theory to inform design choices, more empirical testing, and more systematic documentation (van den Akker, Citation1999). Specifically, DR differs from design because it: (1) is research driven, that is, it addresses research questions, references literature, produces theoretical claims, and seeks to generalise; and (2) involves systematic evaluation, including the formative data collection, documentation, and analysis necessary for reproducing research (Bannan, Citation2007; Edelson, Citation2002). Bannan-Ritland (Citation2003) claims that these are not attributes of practitioner methodologies like ISD (Dick et al., Citation2014).

Unfortunately, many of these distinctions lead to problematic claims about design practice that would be rejected by practitioners, such as the implication that non-research design projects do not conduct preliminary investigations, base design decisions on theory, test interventions, or reflect on process and outcomes. If these claims were true in the past, they certainly do not characterise the current practices of industry designers, whose aim is not to write academic papers but who often: develop and apply novel, generalisable models described in forms such as software patterns (Gamma, Helm, Johnson, & Vlissides, Citation1995); use qualitative methods from social science (Beyer & Holtzblatt, Citation1998); evaluate qualitative and quantitative data through user-testing labs (Thompson, Citation2007); use interviews and analytics to iteratively test hypotheses and models (Maurya, Citation2016); and conduct large-scale experiments such as Google’s A/B testing (Christian, Citation2012). If DR adds nothing beyond design practice, then there is no need to formalise DR as a methodological approach distinct from design.

Challenge 4: uncertainty about the use-inspired and theoretical products of DR

The lack of clarity about the nature of DR creates uncertainty about the nature of its products. Few have tried to address the nature of an educational intervention or precisely what kind of theories DR produces, and how they differ from other forms of research.

Challenge 5: uncertainty about what might make DR effective (if it is)

The lack of clarity about the nature of DR makes it difficult to justify the effectiveness of DR as a methodology. DR is only useful if it allows researchers to reliably produce useful interventions and, like other research methods, effective theories. Without a clear description of the DR process and its outcomes, we cannot make a coherent argument about the trade-offs between DR and other methodologies.

Defining the logic of design research

To increase the rigour of DR, we need to define its logic. The five challenges arise because we do not have a clear definition of how DR is conducted at the level of specificity in other methodologies such as randomised controlled experiments, case studies, or grounded theory.

As described earlier, foundational definitions of DR (McKenney & Reeves, Citation2012; Richey et al., Citation2004; Sandoval & Bell, Citation2004; van den Akker, Citation1999) are primarily descriptive definitions, meant to highlight important aspects of DR, rather than formal definitions that “identify several causes and bring them all together in a single balanced formulation” (Buchanan, Citation2001b, p. 8) that articulate the logic of DR in a way that resolves the five challenges. And because these definitions make differing claims about the nature of the DR process, its relation to research, and its relation to practice, we cannot simply paste them together.

To better articulate the logic of DR, we build on the foundational definitions to describe a new approach to DR that synthesises methodological work in the Learning Sciences and its sister disciplines of cognitive science, educational psychology, computer science, anthropology, sociology, information sciences, education, design studies, instructional design, and others (Sawyer, Citation2014), but also draws heavily from advances in new design fields including human-centred design (Norman, Citation2013), human-computer interaction (Zimmerman, Forlizzi, & Evenson, Citation2007), interaction design (Cooper, Reimann, & Cronin, Citation2007), agile software development (Rasmusson, Citation2010), lean organisational design (Maurya, Citation2016), and design theory (Buchanan, Citation2001a, Citation2001b). This approach is based on our collective four decades of experience as design researchers who have widely published both theory and products. This paper was developed out of necessity to give clear guidance to mentees who struggled to reconcile the underspecified, even conflicting, accounts in the literature of how to conduct and justify DR as an effective research methodology to funding agencies. The goal here is not to describe or provide evidence for the efficacy of every possible method. Rather, it is to provide concepts at the right level of abstraction, namely the phases of the process, that can account for DR activities and allow for comparison across DR studies.

Design research process

To define the logic of design research (DR) we must understand how design researchers generate useful products and effective theory for solving individual and collective problems of education. What is the theory that design researchers use to do their work?

Answering this question requires us to understand the process of DR. Relative to other methodological approaches such as experiments or grounded theory, DR requires a comprehensive process that integrates other approaches (as we will later show). As such, we build on the work of Bannan (Citation2007) in defining DR as a meta-methodology that integrates other methods within a process. DR does not commit researchers to a specific theoretical stance, type of data collection, or method of analysis. So to understand DR, it is important to codify the process both at the level of specific methods (such as creating a design argument) and at the more abstract level of phases that describe the goal of a set of methods within a design process. For example, a survey is a method in a data collection phase of a research process.

The phases of DR are carried out iteratively. Our purpose in defining the phases is to make an analytical distinction between the different activities of DR, not to provide a precise, lock-step recipe, which is neither desirable nor possible. Any presentation of the design process in linear prose and diagrams read left-to-right invites the misinterpretation that the design process should be carried out as a linear recipe, no matter how often the authors protest. Although this criticism is unavoidable, we will try to convince the sceptic that our motives are pure by providing examples of how the design phases are applied iteratively in our own DR projects.

We illustrate our points with examples from the Immigrant Voices DR project. Immigrant Voices began as a project to teach immigrant students in urban public schools how to make 5-minute video documentaries about how policy affects their community, in order to develop their abilities in policy argumentation, civic journalism, and digital media.

Design process: phases

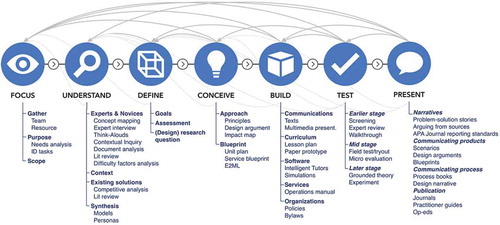

The DR process consists of seven iterative phases in which designers: focus the problem, understand the problem, define goals, conceive the outline of a solution, build the solution, test the solution, and present the solution (Figure 1).

Figure 1. The design process consists of seven iterative phases: focus, understand, define, conceive, build, test, and present.

Focus

In the focus phase, designers bound the scope of the project. This includes identifying a general problem and initial direction of the project. For example, in the Immigrant Voices DR project, we began with an initial focus as a foundation-funded collaboration between a high school and university to create a civic media programme that develops media literacy and English language arts competencies for students in an urban immigrant community. Bounding the scope of the project requires specifying the stakeholders, their interests, their roles, and the resources of the project. The stakeholders include those who can affect or are affected by the product, including learners, parents, employers, the community, and clients initiating the project. The stakeholders’ interests bound the space of potential problems and solutions that this product should address. Different stakeholders take on different roles in different projects; for example, learners and teachers might be members or leaders of the DR team in co- or participatory-design (Sannino, Engeström, & Lemos, Citation2016; Severance, Penuel, Sumner, & Leary, Citation2016; Simonsen & Robertson, Citation2013). Stakeholders might also advise on decisions on a daily or weekly basis, such as in agile design approaches (Rasmusson, Citation2010). Focusing thus impacts who does what in other phases of the process. Project resources include human resources such as the team and its advisors, financial resources, physical resources such as space, and intellectual resources such as technologies or patents owned by the team. Focusing may also include identifying key partners that provide products and services that make the project possible, and specifying who is designing the product and their reasons for participating.

Why

Focusing sets the direction of the project. A design is meant to achieve an intentional goal and there can be no goal without some space for potential problems or opportunities to address. Design projects typically begin with stakeholders’ hope for a better future situation, which may be based on: some ethical rationale (e.g., unequal outcomes for female undergraduates); an analytical rationale, such as when the current situation could be improved by applying social research findings or new technology; or political and electoral rationales that require adjustment to a new reality. Focusing ensures that there is something worth designing (e.g., ethical considerations) and that the team has the resources to potentially succeed.

The scope of a project will often shift due to changes in the stakeholders’ context, their interests and available resources, or in response to insights gained during the design process. Focusing ensures that the design project preserves its importance to stakeholders. However, the scope is typically more stable and broader than the particular, narrower problem that the design team will choose to solve in the define phase. Focusing also restricts the class of problems the design team can select to address. The usefulness of the focus phase is therefore more about identifying what is out of bounds rather than defining the problem that will eventually be solved.

In research

Focusing also includes treating the academic community as a stakeholder, understanding its interests and identifying its available resources. The research community includes research journals that determine what is published; funders who decide which projects receive support; and research managers (such as senior faculty, graduate advisors or research lab managers) who decide which projects to pursue. For a research project to move forward it must address the interests of the research community. This includes the goal of building theoretical understanding that can guide the design of future educational interventions, as well as expectations of relating to (or explicitly breaking from) previous contributions. Research projects may also have access to additional institutional resources such as expertise, research libraries, equipment, facilities, and so on.

DR examples

When designing middle school science classrooms, Edelson (Citation2002) describes bounding the problem in the Progress Portfolio project through initial “problem analysis” identifying that students struggle in “sustaining effective inquiry strategies” (p. 111). In designing networked improvement communities – networks of researchers and educational practitioners – to improve instruction at community colleges, network initiation teams create a network charter and enlist participants to address a common issue (Bryk, Gomez, Grunow, & LeMahieu, Citation2015).

Understand

In the understand phase, designers study learners, domains, contexts, stakeholder needs, and existing solutions. For example, in the Immigrant Voices project, the design team developed models of the knowledge, skills, and dispositions required to create civic media; conducted observations that identified interviewing as a primary learning challenge for students; administered interviews and surveys that identified students’ bilingualism, interest in immigration policy, and access to local sources in the community that could be leveraged in the design; and analysed existing curriculum for teaching video documentary. The understand phase investigates the problem through empirical methods and secondary sources and synthesises that knowledge into a form that can be easily used later. Empirical methods include techniques of observation, interviewing, surveys, and data analytics. Review of secondary sources focuses on research that helps understand the problem, such as models of learning and cultural contexts, and on analysis of current solutions to similar or related problems. The empirical data and research literature are commonly synthesised through methods such as identifying themes, building graphical models, and creating learner personas.

Why

Typically a situation for which existing solutions do not work or a novel solution is desirable provides the initial impetus for the project, so designers must work to understand the nature and causes of the current situation. Secondary sources can be helpful in understanding the problem or avoiding dead ends, but typically the problem arises in the first place because the root causes are unclear or because existing knowledge is insufficient to solve the problem. Furthermore, design requires detailed knowledge of user needs and context so designers need empirical methods that can be deployed quickly to understand the problem.

Just as uncovering new constructs through observing nature can be a core contribution in the natural sciences, developing better models of the learning context in the understand phase can be the core innovation of a design or theoretical contribution (diSessa & Cobb, Citation2004), such as building a better model of expertise, identifying the learning challenges in a particular domain, or identifying common participation structures that can be leveraged and designed.

In research

Researchers use all the methods of educational designers as well as methods from educational research required to develop theory. Design researchers, at the very least, must use previous research to identify the open theoretical questions that the design will address. Furthermore, the understand phase can lead to an entire, stand-alone research study. For example, the Immigrant Voices design researchers creating a civic journalism curriculum could have conducted an ethnographic study of learners’ media practices, or a lab-based observational study on immigrant learners’ abilities to critique journalistic products and their parents’ reactions to their work. Whereas a lack of knowledge is a disadvantage in educational design, it presents an opportunity for design researchers to contribute new knowledge.

DR examples

Design researchers use a variety of approaches to understand the problem. For example, van Merriënboer and Kirschner (Citation2012) describe methods for understanding different aspects of expertise such as cognitive strategies, mental models, dispositions, tasks, and tools. In teaching high school students literary analysis, Lee’s (Citation1995) culturally based cognitive apprenticeship project modelled students’ existing cultural knowledge to inform a design, developed in the conceive phase, that leveraged this knowledge to produce more effective instruction.

Define

In the define phase, designers delineate the problem, including the learning goals and assessments; constraints; and research question. For example, in the Immigrant Voices project, the team decided that an important learning goal was to teach students how to explain to a peer audience how immigration policies affect people in their local community and assess this competency through students’ 5-minute video documentaries. In service of this practical learning goal, researchers decided that the most interesting research question was how online project-based learning platforms might allow a teacher to orchestrate the activities of multiple student media project teams, community sources, university student mentors, and expert journalist contributors.

Defining means converting an indeterminate problem, which has no solution, into a determinate problem that can be solved (Buchanan, Citation1992). There are many ways to frame a problem. For example, suppose that in the Immigrant Voices project, the designer finds that: (1) the target learners are from immigrant communities, (2) their client wants to improve learners’ performance on Common Core literacy and civic education standards, and (3) there are gaps in research literature about how to leverage learners’ cultural resources. The problem could be defined by questions such as: how might we engage students in debates about legal status?, how might we teach students to construct video documentaries about immigration policy?, or how might we teach students to analyse the political values in English/Spanish-language youth media? By completing these “how might we …?” sentences the designer selects a goal from the infinite and unknown number of goals that could be defined (Parnes, Citation1967). Defining precisely what an interesting question is involves considerations of both the practical value and broader conversation with the research community.

For learning, defining goals and assessments includes specifying the changes in knowledge, skills, and dispositions that one is seeking to promote. These learning goals are relative to a specific set of learners in a given context so the emphasis of the goal may be for whom, for how much, how quickly, and so on. For example, the learning goal in Margolis and Fisher’s (Citation2003) study was to increase computer science abilities for female undergraduates by increasing applications and retention, an equity goal.

Why

A design topic, for example civic journalism, identified in the focus phase and developed in the understand phase, by definition, cannot be solved because it describes no determinate (specific) goal – that is, there is nothing explicit to solve. It is up to the designers to define the goal, taking into account the stakeholder needs that can productively be addressed. Only after the goal has been defined can a design be said to succeed or fail.

In research

The researcher also defines a research question which can take the form “What are the characteristics of an <intervention X> for the purpose/outcome Y (Y1, Y2, …, Yn) in context Z?” (van den Akker, Citation1999, p. 19). The research question is a goal because it defines a question that, if answered, will result in a research contribution, thus defining the theoretical aims of the project just as a learning goal defines the practical aims of the project.

Note that a new framing of learning goals can be the core innovation, because it can lead to novel designs. Applying existing approaches to new problems can create new patterns of use and may prompt design modifications (Arthur, Citation2009, chap. 9) in other phases. Applying existing theories and methods to a new problem can be a theoretical contribution. For example, in Immigrant Voices, defining a Common Core argumentation goal as one of using digital media to persuade peers that immigration policies affect their communities suggests new ways to motivate learners (and also new pedagogical challenges). Redesigning traditional project management software to work in the classroom suggests new ways to support project-based learning and requires different kinds of teacher facilitation. Applying human-centred design to teaching journalism requires new theoretical models for expertise in both domains.

Assessment is typically an important part of DR projects because researchers have an obligation to provide evidence of learning. Assessment for a DR project must take into account the aspects of learning that are most relevant to the research question, which should be related to, but can be different from, the overall learning goals of the project. For example, the learning goals of a civic journalism curriculum for immigrant students might be to teach students to create stories that explain how politics affects a community issue, whereas a research question might only ask whether video games in the civic journalism curriculum (a subcomponent of the entire curriculum) can teach policy analysis skills.

DR examples

Lee’s (Citation1995) culturally based cognitive apprenticeship project described earlier sought to teach literary analysis, and Edelson’s (Citation2002) progress portfolio project sought to teach strategies for recording, monitoring, and using evidence. DR goals may be far reaching. For example, the goals of DR social design experiments include a “social agenda of ameliorating and redressing historical injustices and inequities, and the development of theories focused on the organization of equitable learning opportunities” (Gutiérrez & Jurow, Citation2016, p. 567).

Conceive

In the conceive phase, designers imagine the solution. For example, the Immigrant Voices designers created design models for how the curriculum should achieve its learning goals in the form of design arguments further elaborated by service blueprints describing a scenario of how the different components of the learning environment interact including the civic-media lessons, the professional-amateur mentoring model, and the activities conducted on the online learning platform over time. Later after the build and test phases revealed shortcomings of the original model, researchers proposed revised design models for an online project-based learning platform with a combination of online media production guides, computer-supported group critique, and project management tools.

Given a problem definition (even if implicit) from the define phase, the designer can plan a design intended to reach the goal. These design ideas can describe prototypes of vastly different levels of complexity, ranging from communications, to physical artefacts, software, services, programmes, organisations, and systems (Buchanan, Citation2001b; Penuel, Fishman, Haugan Cheng, & Sabelli, Citation2011). Design ideas might include both products-for-use such as the learning environments that directly impact learning and products-for-diffusion such as schools that spread products-for-use (Cole & Packer, Citation2016; Easterday, Rees Lewis, & Gerber, Citation2016a). In this conceive phase, the designer has not yet instantiated the product as a functional prototype, but rather creates a non-functional, symbolic representation that allows the designer to analyse the product by determining its components and their relations.

The distinction between the conceive and build phases is that between a conceptual plan constrained only by the designer’s knowledge and a prototype that is at least partially functional, constrained by a medium, and usable by stakeholders. This analytical distinction between conceive and build does not dictate a specific level of fidelity for the design model developed in the conceive phase. Design researchers, including the authors, sometimes choose to build and test with a simple and rapidly constructed design model created in the conceive phase. This rapid cycling through conceive, build, and test (often leading to re-assessing the problem in the define phase) is consistent with approaches advocated by agile software development (e.g., Rasmusson, Citation2010), organisational design (Maurya, Citation2016), and other design researchers (O’Neill, Citation2012).

Why

In some sense, the conceive phase is the heart of the design process – without generating an idea for solving the design problem, there is no “design” to speak of, because there is nothing to build, test, or present, and no purpose for the focus and understand work. Although abridging the other phases might lead to a less effective project, a project that does not generate a new design idea would not be recognisable as a design project at all.

In research

Design researchers usually make their primary theoretical contribution by developing design models in the conceive phase that make arguments about how we can best promote learning (Easterday et al., Citation2016a). Although all designers must understand the “principle of the thing” (Arthur, Citation2009, p. 33) to create useful products, design researchers have an additional obligation to make the rationale behind the design explicit in the form of design models.

One form of design model, the design argument (Figure 2), specifies the goals, context, substantive focus (intervention characteristics), and procedural focus (process required to create the intervention) of the design, along with arguments for why the intervention will achieve the goal. Design arguments thus identify the nature of the intervention and its intended effects.

Figure 2. Design argument form as described by van den Akker (Citation1999).

The design models created in the conceive phase can describe the characteristics of the intervention (substantive emphasis of the design argument) as well as the characteristics of the design process required to create the intervention (procedural emphasis of the design argument) (Easterday et al., Citation2016a).

Design models can also describe the interactions between the components of the interventions, learners and context (in the form of service blueprints) and the causal mechanisms by which interventions achieve their effects (in the form of conjecture maps or driver diagrams).

DR examples

Design researchers use many methods to describe the theoretical products of design research (Easterday et al., Citation2016a). For example, the Community College Pathways project used driver diagrams to sketch out solutions – including creating learning communities, faculty training, and using real-world problems – to meet the goal from the define phase of doubling students who earn college maths credits (Bryk, Gomez, Grunow, & LeMahieu, Citation2015). Other projects have used conjecture maps to describe the experimental technologies, collaborative practices, and epistemic reflection used to teach scientific argumentation in elementary schools (Sandoval, Citation2014, p. 25). Design researchers often propose new design models in the conceive phase in the form of principles such as reciprocal teaching (Brown, Citation1992).

Build

In the build phase, designers instantiate the solution as a usable prototype. For example, in Immigrant Voices, building includes writing the lesson plans and online civic media guides; recruiting, training, and coordinating the mentors; implementing the online learning platform, and so on. Once a design has been conceived, the designer can implement it in a form that can be used. This implementation can be the minimal viable level of fidelity needed to test the designer’s idea, which may concern a particular aspect of the intervention, or whether the entire intervention can achieve the goal, depending on the stage of the project.

Why

A design must be instantiated to achieve a goal, and because a design is never completely finished, each iteration of the build phase produces a prototype that can answer questions about whether the goal has been achieved.

In research

Ideally, research prototypes should be built just like prototypes intended for practical use. However, design researchers are often most interested in testing theoretical claims so they may sacrifice (or not have the resources to implement) features required for a fully effective or commercially viable learning environment. So they will favour implementing features that test theory over features that may make the product more successful for accomplishing the goals of other stakeholders but do not test theory.

DR examples

Examples of the practical products and prototypes developed in the build phase are too numerous to count, but consider the example projects we have discussed so far. These products cut across all orders of design (Buchanan, Citation2001b): multimedia explanations (communication design; Mayer, Citation2009); software to support reflection (software design; Edelson, Citation2002); lesson plans detailing facilitation of collaboration and discursive norms (service design; Lee, Citation1995; Sandoval, Citation2014); and networked organisations linking schools, and the re-design of schools themselves (organisational design; Bryk et al., Citation2015).

Test

In the test phase, designers evaluate the efficacy and behaviour of the solution in context. For example, in Immigrant Voices, designers can test whether the practical learning goals have been achieved and test the validity of the design argument by examining pre/post assessments of the arguments in students’ video documentaries; interviewing students and teachers about the dynamics of the learning environment; conducting qualitative studies of the computer-supported group critique; analysing online log data about students’ involvement; and so on.

Iterative user testing involves testing successive (often parallel) versions of the design at increasing levels of fidelity. Early testing of prototypes focuses on questions of relevance and consistency and then later on expected practicality, with expert reviews and walkthroughs. Later testing on prototypes constructed in the build phase focuses on questions of actual practicality and effectiveness using 1–1, small group, field trials and their variants (Tessmer, Citation1993).

Testing often consists of short formative evaluations, which may not establish warrants of the same strength as those produced by more costly methods, such as randomised controlled experiments. However, short formative evaluations can quickly reject bad designs or suggest promising designs. This increases the likelihood of finding an effective design that can be re-tested through later evaluations. Later tests can include a range of methods appropriate to researcher commitments. This also allows DR research teams to mitigate risk, for example, by avoiding costly methods such as randomised controlled trials until they have developed a more robust design. We consider all these approaches valid forms of testing in DR.

Why

Testing provides the designer with feedback about the success of the design and the validity of the theoretical propositions. It tells the designer whether the design has achieved its practical goals and provides the evidence for judging theoretical claims.

In research

There is often a greater obligation to provide evidence for the effectiveness of the theory/product, and therefore probably a greater value placed on more rigorous and often summative testing. Testing in DR uses the methods of other empirical disciplines, both qualitative and quantitative. However, the focus and amount of testing in DR differs from other types of research. First, testing focuses on interaction of the product with the environment (including the learner). Second, design researchers are specifically interested in producing a desired effect, so although they still act as dispassionate observers in test, their overall intent is to understand whether and how a desired change occurs. Third, designers work in contexts affected by designed products, so during the formative stages of design, design researchers are interested in gathering just enough data to understand success and failures well enough to guide the next change. This means that testing will be iterative, and therefore individual testing cycles are shorter than in other types of research. It also means that testing methods change over the project from quicker methods for detecting large problems in the beginning, to more costly, but sensitive methods at the end as certainty in the effectiveness of the design (hopefully) increases.

DR examples

Examples of testing in design research can be found in the findings section of any empirical research paper studying a design intervention. In Lee’s (Citation1995) culturally based cognitive apprenticeship project teaching literary analysis, Lee employed pre-post tests on essay scores (experimental, control, no-treatment conditions), and discourse analysis on classroom interactions to examine and explain the differences between the conditions.

Present

In the present phase, designers communicate to key stakeholders why and whether the design better solves a problem that addresses their interests. The Immigrant Voices project required a number of communications such as: presentations and reports to school administrators (and other practitioners) about the value of the programme for developing civic, media, and literacy competencies; scientific reports and presentations about the theoretical value and validity of the design arguments developed; reports to the foundation funders about the practical and academic output of the project; public presentations of student work to parents, mentors, and community members involved in the project including the impact on individual students; and handoff of the curricular materials, blueprints, and software to a publisher or some other distributor.

Presenting requires the designers to explain: the problem of concern to the stakeholders; a new product that addresses that problem; evidence showing that the design works and how; and often the process and insights that led to the design. The form of communication often consists of presentations or reports depending on the setting. The present phase includes communication at the end of the project, as well as pitching at the beginning of the project and also throughout the project to build and maintain support.

In the present phase, designers attempt to convince decision makers to continue (or discontinue) the project. Communicating to users is often embedded within another design process, for instance creating a startup that can market products to customers.

Why

Design process descriptions often overlook the present phase. However, in both industry and research, designers undertake the project on behalf of other stakeholders, often with the benefit of those stakeholders’ resources, making designers accountable to those stakeholders. The purpose of the design process is not just to develop a better solution, but also to demonstrate that the new products better address stakeholder needs.

In research

For research, key stakeholders include the research community, reviewers, and funders, so the present phase requires oral presentations, publications, and proposals. Academic researchers aim to develop novel theoretical contributions that build, extend, or replace existing theory, and share these contributions back to their academic community. In contrast, industry researchers may aim to create private intellectual property, a trade secret, or industry knowledge that provides an advantage to their company by improving the market value of a specific product. Although the academic and industry standards for communication may differ in aim and style, both groups share a need for pitching and presenting.

DR examples

One only has to open a design research journal or attend a design research conference to see examples of presentations of design research. While design research presentations typically follow academic research paper conventions (American Psychological Association, Citation2010, Appendix: Journal reporting standards; McKenney & Reeves, Citation2013), design research papers may describe multiple iterations that are repeated sections of: intervention, setting, participants, data collection, analysis, and findings (as in Easterday, Rees Lewis, & Gerber, Citation2016b). This allows the design researcher to explain the design argument space searched across multiple iterations or studies.

Design process: iteration

The phases describe the subgoals of the design process, and while earlier phases generally produce intermediate products used in later phases, this does not mean that the phases are necessarily or always carried out in order. Rather, design phases are carried out iteratively.

For example, after building a project-based, civic journalism curriculum for Immigrant Voices, testing revealed that the challenge of orchestrating the activities of student teams, undergraduate mentors, community members, and visiting experts was too great, so designers revisited their understanding of how other organisations coordinate project activities to conceive a new project-based learning platform to orchestrate the course. In another project, initial work might concentrate on the present phase such as in making a pitch, or in an academic project by writing a grant. The bulk of another project might centre almost exclusively on rapid cycling through the phases of conceive, build, and test as in agile software development (e.g., Rasmusson, Citation2010). Or projects may use a more principled, gated approach iterating between development stages of organisational design (Maurya, Citation2016). As a meta-methodology, this process commits the researcher to iterative development of the theoretical and empirical products of design research on the basis of empirical evidence.

The principle behind rapid iteration is to learn early, learn often (Beckman & Barry, Citation2007). Rapid iteration is a tenet of modern human-centred design (Norman, Citation2013). It decreases the risks of designing interventions that are over-budget and behind schedule by quickly testing the designer’s assumptions. Rather than design an entire intervention and discover only at the end that it does not work, iterative design argues for quickly building low fidelity prototypes, testing them, and re-designing – gradually evolving the intervention over time. Gaining new knowledge through rapid iteration is one of the core lessons that educational DR can take from other, more agile, design disciplines (O’Neill, Citation2012).

Iteration also addresses the problem of over-collecting data. Rather than collect data on “everything that moved within a 15-foot radius of the phenomenon” (Dede, Citation2004, p. 107) designers can focus on core metrics (Maurya, Citation2016) that test a subset of the design argument instantiated in the current iteration. If the design fails, the argument can be adjusted and tested again. If the design passes the test, designers can collect data for a more rigorous test or move on to the next subset or version of the design argument.

There is a delicate balance between planning, iteration, and the medium of the prototype. In cases where planning allows designers to avoid mistakes and the medium makes testing costly (e.g., building bridges), there will be little iteration or at least a greater emphasis on lower-fidelity prototyping and modelling. However, in cases where the ability to avoid bad designs through planning is limited and the medium makes the costs of testing low (e.g., web applications), then iteration is likely to be quick and frequent. Because education is a complex environment, our ability to predict the effect of an intervention is often low. The cost of testing in education is often relatively moderate – although the cost of implementing a lesson is low, the cost of testing may be greater depending on the type of question/evaluation. However, there is another potential cost in providing learners a lower quality intervention than they could otherwise receive (either by implementing a lower quality treatment, or a control group not receiving the new design). Split and now-and-later research study designs, which establish a high degree of causal inference, can also mitigate these costs (Carver, Citation2006).

Design process: recursively nested processes

Our description of DR to this point better defines the process of DR, but only partially resolves the confusions about what type of methodology DR is (for example the debates about whether DR is qualitative or quantitative) or how DR differs from other scientific research methodologies (Challenge 2). The resolution is not intuitive. We attempt to resolve the confusion by describing how design and research processes interact.

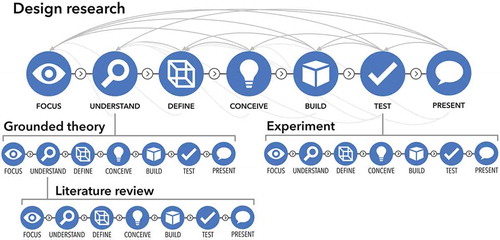

We must first recognise that scientific findings are products created by a design process. For example, scientists may conduct an experiment in which they focus on a topic, understand the background literature, define a hypothesis, conceive of an experiment, build evidence by gathering and analysing data, and finally test the validity of their findings, perhaps through peer-review. Qualitative research methodologies such as grounded theory follow a similar set of phases, except the purpose is to build theory rather than verify a hypothesis.

Products that serve one purpose, such as verification of a hypothesis, can be used as components in the design of another product, such as an educational intervention (Figure 3). That means that in designing a learning environment, we might conduct other sub-design processes (such as a qualitative study or an experiment) as part of the DR process. For example, a DR study of a journalism curriculum might conduct a qualitative study about learners’ media practices in the understand phase, or an experiment evaluating the curriculum in the test phase.

Figure 3. Scientific research methodologies (both qualitative and quantitative) follow a design process and produce products such as theories and models that can be incorporated into the design of another product such as an educational intervention – DR thus recursively nests different scientific processes to do its work.

In other words, design processes can be recursively nested within each other. As a meta-methodology, DR does not commit researchers to a specific theoretical perspective, type of data collection, or analytical approach, but rather, the researcher must deploy these methods in a way that is appropriate to the iterative, empirical development of the theoretical and empirical products of design research. This explains the shape-shifting nature of DR – DR looks like other forms of research because it incorporates these methodologies to do its work.

Design process: stage-dependent search

Understanding DR as a design process that incorporates other scientific design processes in a nested manner allows us to make a more compelling argument for why DR can be an effective educational research meta-methodology. DR uses a stage-dependent search strategy (Bannan-Ritland, Citation2003; Kelly, Citation2004, Citation2006), in which designers choose different build and test methods depending on the stage of the design. In early stages of a project, such as when the problem context is poorly understood and there are few effective implementations, researchers are likely to produce unsuccessful designs, so they must choose a research and development strategy that allows them to quickly reject failures and understand the theoretical issues that must be addressed. So in the early stages of a project, researchers should focus on low-fidelity prototyping and collect the minimal amount of data needed to quickly reject failures and identify potential successes (see stage-dependent search using low-fidelity prototyping strategy in ). As researchers identify promising prototypes, they can focus on theory building with qualitative methods to better understand the issues a design might address and the mechanism through which it affects learning. Once researchers have a plausible, well-grounded theory and implementation with some evidence of success as judged by the standards of their research community, they can conduct randomised controlled experiments to verify the efficacy of the theory and intervention. If researchers use randomised, controlled experiments at the beginning stages of a complex design problem, they are likely to waste resources verifying a bad design. Likewise, if researchers never advance beyond theory building and radically novel designs, they are unlikely to provide strong evidence for the efficacy of an intervention or principle.
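The logic of stage-dependent search can be illustrated with a minimal sketch. This is only one possible reading of the strategy described above, not a procedure prescribed by the cited authors. The stage names, the three yes/no judgements, and the function names are hypothetical labels introduced for illustration; in practice these judgements are made by the research team against the standards of its research community.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # Hypothetical stage labels, introduced only for this illustration.
    EXPLORING = "exploring"  # problem poorly understood, few effective designs
    PROMISING = "promising"  # a prototype shows promise, theory still thin
    GROUNDED = "grounded"    # plausible theory plus some evidence of success


@dataclass
class ProjectState:
    # Simplified yes/no judgements (an assumption; real projects are messier).
    has_promising_prototype: bool
    has_grounded_theory: bool
    has_evidence_of_success: bool


# Strategy wording paraphrases the paragraph above.
STRATEGY = {
    Stage.EXPLORING: "low-fidelity prototyping with minimal data to quickly reject failures",
    Stage.PROMISING: "theory building with qualitative methods",
    Stage.GROUNDED: "randomised controlled experiments to verify efficacy",
}


def classify_stage(state: ProjectState) -> Stage:
    """Map the team's current judgements onto a stage of the design."""
    if not state.has_promising_prototype:
        return Stage.EXPLORING
    if state.has_grounded_theory and state.has_evidence_of_success:
        return Stage.GROUNDED
    return Stage.PROMISING


def choose_strategy(state: ProjectState) -> str:
    """Return the build-and-test strategy suggested for the current stage."""
    return STRATEGY[classify_stage(state)]


if __name__ == "__main__":
    early = ProjectState(False, False, False)
    print(choose_strategy(early))
```

Under these assumptions, a project with no promising prototype is always routed to low-fidelity prototyping, even if the team believes it has a sound theory. This mirrors the warning above against spending resources on randomised controlled experiments that verify a bad design.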

Design process: implementation as process, not phase

Unlike other accounts of DR (e.g., Bannan, Citation2007), we do not consider promoting diffusion (implementation, adoption, spread, dissemination, etc., see Clark & Guba, 1965; Rogers, Citation2003) as a design phase but rather a separate and interleaved design process.

The design process creates a product to achieve some desired goal. The product may be of different sorts: communications, artefacts, services, organisations, policies, and so on (Buchanan, Citation2001b). Although the particular methods for producing these products will differ, the phases of design process are the same.

In some cases, the goal of design is to produce a product (such as a curriculum or online computer tutor) that will be useful/usable/desirable to a group of people. In other cases, the goal is to produce a second product (such as a new organisation) to promote diffusion of the first product (e.g., a curriculum). Both the product-for-use (e.g., curriculum) and the product-for-diffusion (e.g., organisation) are designed via a design process of focusing, understanding, defining, conceiving, building, testing, and presenting. For example, if the product-for-diffusion is a company, the design process (e.g., Maurya, Citation2016) might involve: focusing on an initial market, team, and resources; understanding the market segments and their needs; defining a goal of becoming sustainable; conceiving a business model including value propositions, sales channels, and revenue streams; building different portions of the model; testing the model according to the impact on customers; and presenting to funders. Of course, organisations are only one type of product-for-diffusion; one could also design programmes, partnerships, licensing agreements, government policies and so on to disseminate products-for-use.

Rather than see diffusion as a sub-phase of the design process, it is better to think of the goal of educational change as requiring two interleaved design processes, one for creating a product-for-use and one for creating a product-for-diffusion. The design process for creating a product-for-use has varyingly and inconsistently been referred to as design research, research and development, innovation, invention, design, and innovation-development. Similarly, the design process for creating a product-for-diffusion has been referred to as diffusion, adoption, implementation, spread, dissemination, and innovation-decision (see Clark & Guba, 1965; Penuel et al., Citation2011; Rogers, Citation2003). Each process is intimately connected and dependent on the other, but each has a goal of producing a separate product.

Thinking of educational change as requiring two interleaved design processes is consistent with accounts of product development researchers West and Farr (Citation1990) who define innovation as product development and implementation. It also resolves the problem of definitions of DR conflating phase and iteration.

More importantly, thinking of educational change as two interleaved design processes (a research and development process for designing products-for-use and a diffusion design process for designing products-for-diffusion) may explain why educational researchers have struggled to scale their interventions – previous accounts of DR process have either ignored diffusion, or relegated it to a sub-phase of designing a product-for-use. Design-Based Implementation Research (DBIR) has emerged as a response, arguing that DR must develop an increased capacity to address change in systems (Penuel et al., Citation2011). Yet one of DBIR’s greatest challenges is a lack of “research on the processes” of DBIR for achieving systems change (Penuel et al., Citation2011, p. 335). The design process described here, when imagined as twin, interleaved processes of research and development and diffusion, provides the analytic framework for advancing research on the processes of DBIR.

The practical and theoretical products of design research

Although we can say much about the products of DR (Easterday et al., Citation2016a), it is sufficient to make a few points about the nature of these products to provide a formal definition of educational DR.

The products of educational DR are arguments for how people should learn. These include the practical products of design and DR, which are prototypes that promote learning in the real world. These also include the theoretical products of DR, which are the representational design models describing how to design learning environments that help people learn. Design models take the form of blueprints that describe the necessary and sufficient characteristics of the learning environment and the causal mechanisms by which it promotes learning in a given context. These design models also include design arguments and mock-ups, which are supported by principles and frameworks that aid in the construction of design models that guide design.

DR is different from other educational research methodologies in that it studies the effects of previously non-existent interventions on learning. Therefore, it is the approach of choice when current interventions are not sufficient for promoting the desired learning and stakeholders desire interventions to promote that desired learning.

In cases where solutions exist, or where it is sufficient merely to understand the nature of existing solutions, other methodologies that do not require designing new interventions, such as grounded theory or experimentation, may be sufficient. However, when these other methodologies are applied outside the context of DR, they may not produce theoretical contributions that are helpful for guiding the design of interventions. Therefore, if the goal is to promote learning, then it is best to apply these methodologies as nested sub-processes of DR.

A formal definition of design research

We can now provide a formal definition of DR that captures the logic of design research described previously. The existing foundational definitions we build upon were intended as descriptive definitions that identify “… a single important cause of a subject and point towards how that cause may be explored in greater depth and detail, allowing an individual to create connections among matters that are sometimes not easily connected …” rather than formal definitions that “… identify several causes and bring them all together in a single balanced formulation” (Buchanan, 2001b, p. 8). With the new understanding of the DR process described previously, we can now provide a formal definition of educational DR:

Educational design research is a meta-methodology conducted by education researchers to create practical interventions and theoretical design models through a design process of focusing, understanding, defining, conceiving, building, testing and presenting, that recursively nests other research processes to iteratively search for empirical solutions to practical problems of human learning.

This formal definition of DR identifies the material, form, agents, and purpose of DR:

The material of the meta-methodology, the stuff of which it is made, resides in the breadth of knowledge and activities related to the interaction of learners, contexts, and learning environments designed to support learning. This subject matter, almost unlimited in application, includes both the design knowledge and the projects that promote learning.

The form this knowledge activity takes is that of design models of how learning environments promote learning (such as design arguments, blueprints, mock-ups) and the iterative, nested design process that integrates the methods of research and design.

The agents immediately responsible for creating the meta-methodology are those we call design researchers, including practitioners and academics who work through the various associations and institutions to fund, design, research, publish, and train people in DR.

The end purpose of this meta-methodology is to devise how to better create learning environments, including the creation of innovative prototypes, theory and methods that expand the meta-methodology and our ability to promote learning.

Educational DR is thus a design science (Collins et al., 2004; Sawyer, 2014), like engineering or the sciences of the artificial (Simon, 1996), that integrates design and research to simultaneously create new solutions and build theory.

Resolving the uncertainties

Defining the logic of design research in this way resolves the five uncertainties described earlier.

Resolution 1: uncertainty about the design research process

The formal definition resolves the uncertainty about the phases of design by clearly defining the phases of DR and describing the diffusion of educational interventions as two interleaved design processes: creating a product-for-use and creating a product-for-diffusion. For example, the different phases of design allow us to more clearly specify the most important objectives in the complex set of activities in the Immigrant Voices project across the phases of focusing, understanding, defining, conceiving, building, testing, and presenting (see Table 1). This clarity allows us to better conduct DR, train new researchers, improve the DR meta-methodology, and communicate the process within and outside the DR community.

Table 1. The seven phases of design and design research process.

Resolution 2: uncertainty about how DR differs from other forms of research

DR creates products to solve problems of education by using other methodologies as nested processes (sub-phases) of design. The formal definition shows how DR differs from other forms of research because it studies previously non-existing, use-inspired products that are created during the research process. For example, the Immigrant Voices project used existing research methodologies, such as task analysis of journalism expertise and semi-structured interviews on students’ cultural resources, within a design process that developed a novel design argument and intervention. DR is similar to other forms of research because it incorporates those other scientific processes into the design process for creating educational interventions in a recursive, stage-appropriate, and nested manner. However, although other sciences can produce theory used for DR, it is unlikely that other sciences will ask the right question if not engaged in design. That is, when scientific investigations are not initiated as part of a DR process to solve a real-world problem, they will often produce theory that cannot guide design because they do not address “intervenable” design factors or operationalise them for a design context. Thus, DR is particularly well suited for developing theory to guide the design of products that solve educational problems when those products do not yet exist.

Resolution 3: uncertainty about whether design research adds anything beyond design practice

DR differs from design practice in that it does not just produce an educational intervention but makes use of nested scientific processes to produce theory in the form of novel design models of how people learn that do not just promote learning but expand our capacity to promote learning. For example, in the Immigrant Voices project, ongoing work analysing the content of the student video documentaries tests the effectiveness of the design argument (that we can best teach policy argumentation to immigrant students through civic journalism projects that deliberately juxtapose the perspectives of policy makers, community members, and the students themselves, on immigration policy). By incorporating other research processes, DR produces theories connected to literature and more rigorously tests interventions. Of course, there is no hard line separating the work of practitioners and researchers because practitioners use similar methods – the difference is one of degree and intent.

Resolution 4: uncertainty about the products of design research

DR produces theories and interventions that make arguments about how people should learn (Easterday et al., 2016a). For example, further iterations of the Immigrant Voices project have led to new theoretical models describing how digital studios can orchestrate project-based learning by helping teachers and students manage the self-directed learning cycle. These arguments about how people learn can operate at different scales, such as the individual, classroom, organisation, community, or wider network. Although DR incorporates other sciences, it focuses on the interaction between learners and designed interventions to develop new abilities. As such, it favours theories such as design principles, patterns, and ontological interventions that describe these interactions.

Resolution 5: uncertainty about what might make design research effective (if it is)

DR produces gains by deploying the appropriately nested scientific process in a stage-dependent manner. DR efficiently develops theory by identifying plausible interventions and constructs in early phases that are more rigorously verified in later stages. For example, over several iterations, the Immigrant Voices project designed, implemented, and tested journalism curriculum across multiple schools, leading to new questions about orchestrating project-based learning. To address issues of orchestration, the team designed a novel online learning platform, whose effectiveness was evaluated first qualitatively and later through pre/posttests of learning. This then led to the current iteration, which investigates the diffusion of the platform across a network of thousands of students. Rather than fully implementing the initial concept for an intervention and testing it through a randomised, controlled experiment (which is expensive), the DR process advocates testing multiple initial ideas through low-cost methods such as paper prototyping to quickly identify failures, then investing more implementation and testing resources in later iterations, so that prototypes are fully implemented and rigorously tested only in later stages. Furthermore, DR can achieve greater theoretical and practical impact by expanding its focus from products-for-use to products-for-diffusion.

Conclusion

We have defined DR as a process that integrates design and research methods to allow researchers to generate useful educational interventions and effective theory for solving individual and collective problems of education. This definition of the DR process is neither a way, nor the way, to conduct DR; rather, it describes the fundamental nature of all forms of DR in order to help us better communicate and think about DR. This definition is not just an academic exercise, but is necessary to establish DR as a meta-methodology, allowing us to better replicate the design process, to apply methods from other design methodologies, to better teach DR to new design researchers, to acquire more resources, and ultimately to accumulate theory relevant to practice.

Defining the logic of DR helps us to better practise DR, which leads to improved learning outcomes, training of new researchers, methodology, and communication of DR within and outside the DR community. Taken together, this work furthers the paradigmatic development of educational design research in the following ways.

Better design

Defining the process of DR helps us to better determine which methods to use and when. For example, when planning DR projects, thinking about the test phase has prevented us from jumping to formal evaluation too early or dwelling in theory building too long. DR projects work under constraints of people, resources, and time, and the phases have allowed us to more deliberately deploy those resources.

Training new researchers and engaging stakeholders

There is a bewildering array of methods applicable to DR projects and it is challenging for new researchers to make sense of these methods. Similarly, the array of different methods and decisions can make DR unclear to stakeholders. We use the DR phases to explain how the DR process works, to help novice researchers organise sets of research methods, and to describe the meta-cognitive strategies we use to conduct design-based research. Just as design phases help researchers think precisely, they also serve as a tool to make design logic explicit to those new to DR.

Improving design research process

A clear definition of the phases also helps us to improve the DR process. In struggling to consolidate learner data gathered in the understand phase, we have used human-centred design methods for synthesising user data, such as personas. The phases allow us to more easily borrow methods from other methodologies.

Communicating research process

We have also used the phases to describe the choices made during a DR project. In research publications and grant applications, the phases more concisely communicate the history or future plans of a DR project. Well-defined DR phases allow us to explain the logic of DR to other researchers. For example, quantitative psychologists may see the lack of inter-rater reliability in the early stages of a DR project as a lack of rigour. Researchers from other disciplines will be inclined to judge DR by the methodological standards of their own discipline. However, when design researchers can explain the methodological logic of shifting from an early focus on design concepts and theory building to a later focus on verification, we have found that those outside the discipline are often sympathetic to the aims of DR. Others will only accept DR as a credible methodological approach when design researchers clearly and precisely articulate the rationale behind the DR meta-methodology.

By formally defining the logic of DR, we establish its credibility as a legitimate meta-methodology of educational research.

Acknowledgments

We would like to thank the members of Delta Lab for their ongoing support, and Pryce Davis, Bruce Sherin, Stina Krist, and Julie S. Hui, for their feedback on an earlier draft of this work. We thank ISLS for granting permission to reuse portions of: Easterday, M. W., Rees Lewis, D., & Gerber, E. M. (2014). Design-Based research process: Problems, phases, and applications. In Proceedings of the International Conference of the Learning Sciences, June 23-27, 2014, Colorado, USA (pp. 317-324), copyright ISLS. This work was first published in Learning: Research and Practice 17th February 2017 available online at: http://www.tandfonline.com/10.1080/23735082.2017.1286367.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- American Psychological Association. (2010). Publication manual of the American Psychological Association (6th ed.). Washington DC: Author.

- Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41, 16–25. doi:10.3102/0013189X11428813

- Arthur, W. B. (2009). The nature of technology: What it is and how it evolves. New York, NY: Free Press.

- Bannan, B. (2007). The integrative learning design framework: An illustrated example from the domain of instructional technology. In T. Plomp & N. Nieveen (Eds.), An introduction to educational design research (pp. 53–73). Enschede, Netherlands: Netherlands Institute for Curriculum Development.

- Bannan-Ritland, B. (2003). The role of design in research: The integrative learning design framework. Educational Researcher, 32, 21–24. doi:10.3102/0013189X032001021

- Barab, S., & Squire, K. (2004). Introduction: Design-based research: Putting a stake in the ground. Journal of the Learning Sciences, 13, 1–14. doi:10.1207/s15327809jls1301_1