ABSTRACT

The function of rapid perceptual learning for speech in adult listeners is poorly understood. On the one hand, perceptual learning of speech results in rapid and long-lasting improvements in the perception of many types of distorted and degraded speech signals. On the other hand, this learning is highly specific to stimuli that were encountered during its acquisition. Therefore, it is unclear whether past perceptual learning could support future speech perception under ecological conditions. Here, we hypothesize that rapid perceptual learning is a resource that is recruited when new speech challenges are encountered and used to support perception under those specific conditions. We review three lines of evidence related to aspects of this hypothesis: the specificity of learning, the general nature of associations between rapid perceptual learning and speech perception, and rapid perceptual learning in populations with poor perception under adverse conditions.

Perceptual learning is defined as a long-lasting increase in the ability to extract information from the environment following experience or practice (Gibson, 1969; Green, Banai, Lu, & Bavelier, 2018). Although perceptual learning has been observed throughout life, its function in adults is not obvious. For speech stimuli, we believe that a major role for perceptual learning in adults is to support perception in less than optimal conditions (Samuel & Kraljic, 2009). Consistent with this idea, multiple studies have documented rapid improvements in the perception of various types of degraded and distorted speech such as time-compressed, noise-vocoded, accented, and dysarthric speech (Borrie, McAuliffe, & Liss, 2012; Davis, Johnsrude, Hervais-Adelman, Taylor, & McGettigan, 2005; Dupoux & Green, 1997; Gordon-Salant, Yeni-Komshian, Fitzgibbons, & Schurman, 2010). But while it is easy to elicit perceptual learning of speech in laboratory conditions, this learning is quite specific to the conditions under which it emerged. Therefore, it is hard to see how past perceptual learning could support future speech perception under realistic conditions in which the acoustic characteristics of the environment and of the communication partners are not fixed. Instead, we hypothesized that rapid perceptual learning is a resource that supports speech perception online, as new speech challenges are met (Manheim, Lavie, & Banai, 2018; Rotman, Lavie, & Banai, 2020). Consistent with this hypothesis, a recent study of visual tasks and auditory frequency discrimination suggests that a common perceptual learning factor accounts for approximately 30% of the variance across different learning conditions (Yang et al., 2020), but whether the same holds true for rapid learning of speech is at present unclear.

In the following sections, we briefly review findings consistent with three predictions derived from the above hypothesis. First, if rapid perceptual learning supports speech perception online, a new challenge should yield rapid learning that allows listeners to adapt to this challenge. Furthermore, this learning should be fairly specific to the circumstances that yield it. Otherwise, past learning could support future speech perception with no need for rapid, online learning to occur, which we know from past auditory training studies not to be the case (Karawani, Bitan, Attias, & Banai, 2016; Karawani, Lavie, & Banai, 2017; Saunders et al., 2016; Song, Skoe, Banai, & Kraus, 2012). Second, individual differences in rapid perceptual learning of speech should correlate with individual differences in speech perception under independent challenging speech conditions. Third, based on the idea of falsifiability or refutability, good rapid learning should be impossible under conditions in which speech perception is severely impaired (modus tollens). We review evidence consistent with a softer version of this claim: that listeners with speech perception difficulties should exhibit reduced or slower rapid perceptual learning for the conditions that pose perceptual difficulties, even when initial difficulties are controlled for by using different levels of challenge or adaptive procedures. We conclude by discussing some of the implications for perceptual learning in clinical settings and the limitations of current research in deciphering causality. The large literature on speech category learning (e.g., Eisner & McQueen, 2005; Kraljic & Samuel, 2006, 2007; McQueen, Cutler, & Norris, 2006) is beyond the scope of the present brief review because these studies generally involve manipulating single features or segments in isolation (with or without lexical guidance). Furthermore, it is not clear if category learning is correlated with the learning on which we focus. For example, Zheng and Samuel (2020) found no correlation between category learning and accent adaptation.

New Acoustic Challenges Yield Rapid but Specific Perceptual Learning

Rapid perceptual learning has been documented for a large range of challenging speech conditions created naturally or artificially. In naturally challenging conditions, rapid learning was documented with rapid (Adank & Janse, 2009), accented (Adank & Janse, 2010; Baese-Berk, Bradlow, & Wright, 2013; Bradlow & Bent, 2008), or pathological (Borrie, Lansford, & Barrett, 2017) speech. Under lab-created conditions, rapid learning arises with time compression (Dupoux & Green, 1997; Pallier, Sebastian-Galles, Dupoux, Christophe, & Mehler, 1998), noise vocoding (Davis et al., 2005; Huyck & Johnsrude, 2012; Loebach & Pisoni, 2008), or speech synthesis (Greenspan, Nusbaum, & Pisoni, 1988; Lehet, Fenn, & Nusbaum, 2020). In a typical experiment on rapid perceptual learning of speech, listeners are presented with a set of perceptually difficult speech stimuli, and their recognition of this speech improves with additional brief experience. For example, Dupoux and Green (1997) presented listeners with 20 sentences that were time-compressed to 35% of their initial duration. On the first five sentences, mean recognition accuracy was 29%, improving to >38% on the final five sentences. Likewise, for noise-vocoded speech, rapid learning also occurs with 20 or fewer sentences (Davis et al., 2005; Huyck, Smith, Hawkins, & Johnsrude, 2017; Wayne & Johnsrude, 2012).

But how general is this rapid learning? As explained above, training-induced learning is quite specific. Nevertheless, if rapid perceptual learning for speech generalized broadly from one acoustic condition to another, past learning could support speech perception under a variety of new conditions even without additional online learning. However, it seems that perceptual learning is quite specific to the acoustics of the challenge that triggered it and does not generalize (or transfer) to new conditions. For example, for time-compressed speech, learning generalizes across compression rates (Dupoux & Green, 1997; Peelle & Wingfield, 2005), but less so across talkers (Dupoux & Green, 1997; Tarabeih-Ghanayim, Lavner, & Banai, 2020). Furthermore, 2 weeks after exposure to 20 time-compressed sentences, listeners recognized the compressed sentences to which they were previously exposed more accurately than naïve listeners with no previous experience with time-compressed speech. This learning was specific and did not generalize to stimuli that were not encountered during the initial exposure phase (Banai & Lavner, 2014). Thus, while rapid learning can be retained over time, it does not generalize, perhaps because the stimuli encountered during the exposure period do not sufficiently characterize the structure underlying the new stimuli presented at test (Greenspan et al., 1988).

Consistent with the idea that transfer of learning is limited by the range and characteristics of stimuli encountered while learning, transfer of other types of degraded speech learning also depends on the similarity between “learned” and “new” stimuli (Borrie et al., 2017; Eisner, Melinger, & Weber, 2013; Hervais-Adelman, Davis, Johnsrude, Taylor, & Carlyon, 2011). For example, perceptual learning induced by listening to a brief paragraph read by a person with dysarthria (35 phrases) and receiving written lexical feedback was fairly talker specific (Borrie et al., 2017). In this study, participants tested with new speech presented by the same person with dysarthria to whom they listened during exposure were substantially more accurate than participants who were tested with dysarthric speech from new talkers. Furthermore, the amount of learning depended on the acoustic similarity (as judged in previous work) of the different talkers. More transfer was observed across talkers who shared stress rhythm or vocal quality with the talker encountered during the learning phase. Likewise, transfer of the perceptual learning of noise-vocoded speech is also limited by the acoustic characteristics of the carrier signal (Hervais-Adelman et al., 2011). Following rapid learning of 20 noise-vocoded sentences, transfer of learning was observed across frequency regions and talkers with different accents (Hervais-Adelman et al., 2011; Huyck & Johnsrude, 2012). Nevertheless, only partial generalization was observed when the carrier signal used to create the vocoded speech changed (e.g., from noise to sinewave or pulse train), suggesting that learning is at least partially specific to the acoustics of the vocoder (Hervais-Adelman et al., 2011).

Finally, outcomes of studies on ambiguous speech-sound learning are also consistent with the current claim that the generalization of perceptual learning for speech is limited and constrained by the acoustic or acoustic-phonetic features of the trained stimuli and talkers (Eisner & McQueen, 2005; Kraljic & Samuel, 2006, 2007). For example, although learning to recognize an ambiguous fricative sound generalizes to new words (McQueen, Cutler, & Norris, 2006), generalization is talker dependent (Eisner & McQueen, 2005; Kraljic & Samuel, 2007). This is not the case for stop consonants that do not convey systematic talker information, and for which learning generalized more broadly across speech continua and talkers (Kraljic & Samuel, 2006, 2007).

Rapid Learning versus Perception of Independent Adverse Speech Conditions

Whether a general speech perception factor accounts for individual differences across different speech conditions is still debated, but the literature indicates that cognitive resources are recruited to support speech perception under different adverse conditions (Banks, Gowen, Munro, & Adank, 2015; Bent, Baese-Berk, Borrie, & McKee, 2016; Kennedy-Higgins, Devlin, & Adank, 2020; McLaughlin, Baese-Berk, Bent, Borrie, & Van Engen, 2018; Vermeire, Knoop, De Sloovere, Bosch, & van den Noort, 2019). For example, Kennedy-Higgins et al. (2020) reported that a principal component based on both working memory and vocabulary correlated with perception of speech in quiet, speech in noise and time-compressed speech. Against these findings, we ask if rapid perceptual learning of speech might also be a resource that supports speech perception.

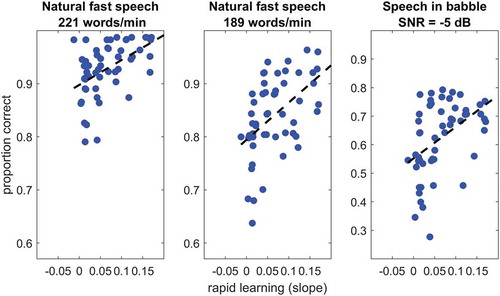

In a series of studies, we found that individual differences in rapid perceptual learning of one type of distorted speech (e.g., time-compressed speech) were consistently related to individual differences in speech perception under different adverse conditions (e.g., speech in noise) (Banai & Lavie, 2020; Karawani et al., 2017; Manheim et al., 2018; Rotman et al., 2020). Furthermore, these associations could not be explained by correlations in performance across adverse conditions. One study assessed rapid learning of time-compressed speech, perception of natural-fast speech and speech in noise, hearing, attention, working memory, and vocabulary (Rotman et al., 2020). Rapid learning was assessed with 10 (highly) time-compressed sentences. Speech perception was assessed with natural fast speech at two different rates produced by two different talkers and with speech in 4-talker babble noise. Figure 1 shows the correlations between rapid learning and speech perception. Speech perception was modeled as a function of all other variables, including speech perception prior to learning, using mixed-effects modeling. Rapid learning and vocabulary were the only significant predictors, with odds ratios ranging from 1.45 to 1.68 for rapid learning slopes and from 1.21 to 1.30 for vocabulary. In other words, speech perception was more accurate in individuals with larger rapid learning slopes and higher vocabulary scores.
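To make the reported odds ratios concrete, the following worked example assumes that accuracy was modeled with a logistic link, which the use of odds ratios suggests but which is not stated in this review. The symbols $\beta$, $p_0$, and $p_1$ are introduced here for illustration only, and the scaling of the predictors in the original analysis is not specified.

$$
\mathrm{OR} = e^{\beta}, \qquad \frac{p_1}{1 - p_1} = \mathrm{OR} \cdot \frac{p_0}{1 - p_0}
$$

Under these assumptions, a listener whose probability of correct recognition is $p_0 = 0.50$ (odds of 1) would, with $\mathrm{OR} = 1.45$ and a one-unit increase in the predictor, have odds of 1.45, that is, $p_1 = 1.45 / 2.45 \approx 0.59$.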

Figure 1. Speech perception (proportion correct) vs. rapid learning of time-compressed speech (rate of improvement over 10 sentences, quantified as the slope of the accuracy vs. sentence number curve). Dashed lines show linear fits. From Rotman et al. (2020), CC-BY-NC
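The slope measure named in the caption can be illustrated with a minimal sketch. It assumes an ordinary least-squares fit of per-sentence accuracy against sentence number; the function name `learning_slope` and the example scores are hypothetical and are not taken from Rotman et al. (2020), whose exact fitting procedure is not described here.

```python
from statistics import mean


def learning_slope(accuracy_by_sentence):
    """Least-squares slope of accuracy (proportion correct) against
    sentence number (1..n). Positive values indicate improvement."""
    n = len(accuracy_by_sentence)
    if n < 2:
        raise ValueError("need at least two sentences to estimate a slope")
    xs = list(range(1, n + 1))
    x_bar = mean(xs)
    y_bar = mean(accuracy_by_sentence)
    # Covariance of (sentence number, accuracy) divided by variance of sentence number
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, accuracy_by_sentence))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


# Hypothetical listener: proportion of words reported correctly on each of 10 sentences
scores = [0.2, 0.3, 0.2, 0.4, 0.4, 0.5, 0.4, 0.6, 0.6, 0.7]
slope = learning_slope(scores)
print(f"learning slope: {slope:.3f} proportion correct per sentence")
```

A single slope per listener is convenient for correlational analyses, but it treats improvement as linear over the exposure period; that is a simplification of this sketch, not a claim about the original data.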

Rapid perceptual learning of speech contributes to speech perception in different conditions across age groups, hearing levels and tasks (Manheim et al., 2018; Rotman et al., 2020). Furthermore, the association is not limited to rapid learning of time-compressed speech. Learning of speech in noise with one type of task (listening to passages) also accounted for unique variance in independent speech-in-noise tasks (sentence verification and pseudo-word discrimination) (Karawani et al., 2017). Together, these findings suggest that when listeners encounter a new speech challenge, they may recruit a general rapid learning resource which is at least partially independent of other cognitive resources such as working memory and vocabulary. Furthermore, findings are not likely to reflect the generalization of past rapid learning to new future challenges because the studies reviewed in the previous section suggest that rapid learning is quite specific to the conditions under which it occurs.

Finally, the neural changes that accompany rapid learning of speech also suggest that perception under challenging conditions recruits general resources (e.g., working memory, prediction error, or language) which could be shared across challenging conditions, although the details are beyond the scope of the present manuscript (Adank & Devlin, 2010; Eisner, McGettigan, Faulkner, Rosen, & Scott, 2010; Sohoglu & Davis, 2016). Furthermore, with further training, plastic changes to sensory processing seem to occur only after perceptual learning has already been established (Reetzke, Xie, Llanos, & Chandrasekaran, 2018). For a discussion of why this does not necessarily result in generalization to untrained features, see Ahissar, Nahum, Nelken, and Hochstein (2009).

Rapid Perceptual Learning Seems Reduced in Groups with Speech Perception Difficulties

The hypothesis that good rapid perceptual learning contributes to good speech perception in adverse conditions also implies that listeners with severe speech perception difficulties should be unlikely to exhibit good rapid learning. This is in line with the idea that negating the consequent of an if-then statement (if A, good rapid learning, then B, good speech perception) also negates its antecedent (not B, therefore not A). In other words, we can attempt to refute our hypothesis by finding examples of good rapid learning in populations known for their poor speech perception under challenging conditions, such as older adults with hearing loss and nonnative listeners.
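The inference pattern invoked here (modus tollens) can be written compactly. In this sketch, $A$ stands for "the listener shows good rapid learning" and $B$ for "the listener shows good speech perception under adverse conditions"; the notation is added for illustration.

$$
\big( (A \rightarrow B) \land \neg B \big) \;\Rightarrow\; \neg A
$$

An observation of $A \land \neg B$, that is, good rapid learning in a listener with poor speech perception under adverse conditions, would contradict $A \rightarrow B$. This is the kind of counterexample that the studies reviewed in this section could have produced.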

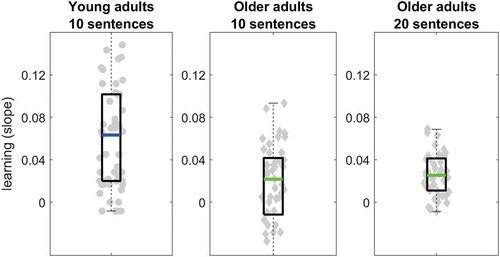

Older adults appear to display reduced and more specific learning than young adults (see Bieber & Gordon-Salant, 2020 for a recent review). For time-compressed speech, Peelle and Wingfield (2005) reported intact rapid learning, but reduced transfer of learning across compression rates in older compared to younger adults. To equate starting performance across participants, they administered a calibration session during which listeners were presented with four sentences at nine different compression rates. Then, listeners were given an extra 20 sentences at the compression rate that yielded recognition of approximately 30%. It is possible that the calibration phase masked differences in rapid learning across groups. To test this, we preselected compression rates that would result in similar distributions of starting performances across younger and older adults with intact hearing and older adults with age-related hearing loss (Manheim et al., 2018). In this study, a smaller proportion of older adults (with either relatively intact hearing or with age-related hearing loss) improved on time-compressed speech recognition over the course of 20 sentences compared with young adults, despite similar starting distributions in the two groups. Furthermore, the magnitude of learning was smaller in older than in younger adults and in older adults with age-related hearing loss than in older adults with relatively intact hearing. We replicated this finding with new listeners and new speech materials (Rotman et al., 2020). As shown in Figure 2 (left and middle panels), learning rates (expressed as slopes) were ~50% slower in older adults with age-related hearing loss, with the overall amount of improvement over the first 10 trials smaller in this population. Given additional trials, older adults continued to improve at approximately the same rate (compare middle and rightmost plots in Figure 2), and the proportion of older adults who managed to improve increased. Nevertheless, during a fixed period of listening to time-compressed speech it appears that older adults learn less than younger adults. Real-life differences are probably even larger because in daily communication settings older and younger adults listen to speech at the same rates (e.g., on TV or in conversation).

Figure 2. Rapid learning slopes in young and older adults. Box edges mark the 25th and 75th percentiles; lines within each box mark the median; whiskers are 1.5 times the interquartile range. Grey symbols mark individual slopes. Re-plotted from the data reported in Rotman et al. (2020), CC-BY-NC

Nonnative speech learning provides another case in which rapid learning is reduced when speech perception is heavily challenged (Banai & Lavner, 2016; Cooper & Bradlow, 2018; Perrachione, Lee, Ha, & Wong, 2011). The perceptual difficulties of nonnative listeners under challenging listening situations are well documented (for review, see Lecumberri, Cooke, & Cutler, 2010). As for learning, with similar rates of compression, rapid learning of time-compressed speech is reduced in nonnative compared to native speakers of Hebrew (Banai & Lavner, 2016). A recent study on noise-vocoded speech reported that within a group of native Arabic speakers, rapid learning of vocoded speech in Hebrew (participants’ second language) was reduced compared to rapid learning of vocoded speech in Arabic (Bsharat & Karawani, 2020). Finally, learning of a new foreign-language phonological contrast may depend on perceptual abilities (Perrachione et al., 2011). In this study, native English speakers were asked to learn a new pitch-based phonological contrast (characteristic of tonal languages). Listeners who had better pitch-contour perception prior to learning learned the new contrast significantly faster than listeners with poorer pitch-contour perception. Additionally, learning in listeners with poorer pitch processing was particularly poor under conditions with high trial-by-trial acoustic-phonetic variability.

Conclusion, Implications, and Limitations

The findings reviewed in this manuscript are consistent with the idea that rapid perceptual learning serves as an individual resource that can support speech perception under adverse conditions. In line with this hypothesis, correlations between learning and perception characterize the full scale of perceptual abilities such that perceptual learning is reduced in groups with perceptual limitations compared to groups with good perceptual abilities (discussed in section II). Furthermore, populations with speech perception difficulties often exhibit abnormal rapid learning for speech, thus failing to refute our hypothesis (discussed in section III).

It is important to note that most of the findings discussed in this review originate in studies from which causality is impossible to infer. Furthermore, with a few exceptions (described in section II), most studies assessed perception and learning along a single dimension of interest, making it hard to discuss the generality of rapid learning. A few studies lend further support to our hypothesis by showing that the correlations between learning and perception are not limited to the same acoustic challenge but, in fact, extend across challenges and are not accounted for by either speech perception or cognitive abilities. Because it is not clear how to test this hypothesis under experimental conditions, future studies should determine whether rapid perceptual learning of speech is indeed correlated across different conditions, as was recently shown for perceptual learning induced by massive training (Yang et al., 2020).

The hypothesis presented in this review is quite general, but several types of findings could refute it. First, it assumes that rapid perceptual learning is constrained by the acoustics of the stimuli and environment that elicit it. Evidence for complete “acoustic” or “talker” independence would weaken our claim. Second, if learning is conceived as a general individual resource, learning should correlate across different auditory challenges. Findings for lack of correlations would therefore refute this hypothesis, even though it is hard to prove a “null” effect. Third, as we suggest that good learning should not be observed in groups with severely impaired perception, demonstrating good learning in such groups would also limit the generality and usefulness of the hypothesis.

Finally, the disappointing outcomes of training studies in the area of hearing rehabilitation (Henshaw & Ferguson, 2013; Rayes, Al-Malky, & Vickers, 2019; Saunders et al., 2016) are not surprising when considered under our proposed framework. Rather than being a “bug,” specificity is a feature of learning (as reviewed in section I) that may serve to rapidly support perception under new conditions without compromising the stability of long-term knowledge (Guediche, Blumstein, Fiez, & Holt, 2014; Irvine, 2018). If this is the case, future research could perhaps focus on training protocols to speed up learning under new conditions rather than on protocols to broaden the scope of generalization.

Acknowledgments

This manuscript is based on a talk at the 2020 meeting of the Auditory Perception & Cognition Society. The National Institute for Psychobiology in Israel and the Israel Science Foundation supported our original work on perceptual learning for speech. We thank Ranin Khayr and Liat Shechter-Shvartzman for their thoughtful comments on the manuscript.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

References

- Adank, P., & Devlin, J. T. (2010). On-line plasticity in spoken sentence comprehension: Adapting to time-compressed speech. Neuroimage, 49(1), 1124–1132.

- Adank, P., & Janse, E. (2009). Perceptual learning of time-compressed and natural fast speech. Journal of the Acoustical Society of America, 126(5), 2649–2659.

- Adank, P., & Janse, E. (2010). Comprehension of a novel accent by young and older listeners. Psychology and Aging, 25(3), 736–740.

- Ahissar, M., Nahum, M., Nelken, I., & Hochstein, S. (2009). Reverse hierarchies and sensory learning. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 364(1515), 285–299.

- Baese-Berk, M. M., Bradlow, A. R., & Wright, B. A. (2013). Accent-independent adaptation to foreign accented speech. Journal of the Acoustical Society of America, 133(3), EL174–180.

- Banai, K., & Lavie, L. (2020). Perceptual learning and speech perception: A new hypothesis. Proceedings of the International Symposium on Auditory and Audiological Research, 7, 53–60. Retrieved from https://proceedings.isaar.eu/index.php/isaarproc/article/view/2019-07

- Banai, K., & Lavner, Y. (2014). The effects of training length on the perceptual learning of time-compressed speech and its generalization. Journal of the Acoustical Society of America, 136(4), 1908.

- Banai, K., & Lavner, Y. (2016). The effects of exposure and training on the perception of time-compressed speech in native versus nonnative listeners. Journal of the Acoustical Society of America, 140(3), 1686.

- Banks, B., Gowen, E., Munro, K. J., & Adank, P. (2015). Cognitive predictors of perceptual adaptation to accented speech. Journal of the Acoustical Society of America, 137(4), 2015–2024.

- Bent, T., Baese-Berk, M., Borrie, S. A., & McKee, M. (2016). Individual differences in the perception of regional, nonnative, and disordered speech varieties. Journal of the Acoustical Society of America, 140(5), 3775.

- Bieber, R. E., & Gordon-Salant, S. (2020). Improving older adults’ understanding of challenging speech: Auditory training, rapid adaptation and perceptual learning. Hearing Research, 108054. doi:10.1016/j.heares.2020.108054

- Borrie, S. A., Lansford, K. L., & Barrett, T. S. (2017). Generalized adaptation to dysarthric speech. Journal of Speech, Language, and Hearing Research, 60(11), 3110–3117.

- Borrie, S. A., McAuliffe, M. J., & Liss, J. M. (2012). Perceptual learning of dysarthric speech: A review of experimental studies. Journal of Speech, Language, and Hearing Research, 55(1), 290–305.

- Bradlow, A. R., & Bent, T. (2008). Perceptual adaptation to non-native speech. Cognition, 106(2), 707–729.

- Bsharat, D., & Karawani, H. (2020). Bilinguals in challenging listening conditions: How challenging can it be? In 19th Annual Auditory Perception, Cognition, and Action Meeting, Virtual. Retrieved from https://docs.google.com/document/d/1WljOe3RyaNADdt1yNIp0jJtUE1kOte90gp-pPVEqmVY/view

- Cooper, A., & Bradlow, A. (2018). Training-induced pattern-specific phonetic adjustments by first and second language listeners. Journal of Phonetics, 68, 32–49.

- Davis, M. H., Johnsrude, I. S., Hervais-Adelman, A., Taylor, K., & McGettigan, C. (2005). Lexical information drives perceptual learning of distorted speech: Evidence from the comprehension of noise-vocoded sentences. Journal of Experimental Psychology. General, 134(2), 222–241.

- Dupoux, E., & Green, K. (1997). Perceptual adjustment to highly compressed speech: Effects of talker and rate changes. Journal of Experimental Psychology-Human Perception and Performance, 23(3), 914–927.

- Eisner, F., McGettigan, C., Faulkner, A., Rosen, S., & Scott, S. K. (2010). Inferior frontal gyrus activation predicts individual differences in perceptual learning of cochlear-implant simulations. Journal of Neuroscience, 30(21), 7179–7186.

- Eisner, F., & McQueen, J. M. (2005). The specificity of perceptual learning in speech processing. Perception & Psychophysics, 67(2), 224–238.

- Eisner, F., Melinger, A., & Weber, A. (2013). Constraints on the transfer of perceptual learning in accented speech. Frontiers in Psychology, 4, 148.

- Gibson, E. J. (1969). Principles of perceptual learning and development. New York, NY: Appleton-Century-Crofts.

- Gordon-Salant, S., Yeni-Komshian, G. H., Fitzgibbons, P. J., & Schurman, J. (2010). Short-term adaptation to accented English by younger and older adults. Journal of the Acoustical Society of America, 128(4), EL200–204.

- Green, C. S., Banai, K., Lu, Z. L., & Bavelier, D. (2018). Perceptual learning. Stevens’ Handbook of Experimental Psychology and Cognitive Neuroscience, 2, 1–47.

- Greenspan, S. L., Nusbaum, H. C., & Pisoni, D. B. (1988). Perceptual learning of synthetic speech produced by rule. Journal of Experimental Psychology. Learning, Memory, and Cognition, 14(3), 421–433.

- Guediche, S., Blumstein, S. E., Fiez, J. A., & Holt, L. L. (2014). Speech perception under adverse conditions: Insights from behavioral, computational, and neuroscience research. Frontiers in Systems Neuroscience, 7, 126.

- Henshaw, H., & Ferguson, M. A. (2013). Efficacy of individual computer-based auditory training for people with hearing loss: A systematic review of the evidence. PloS One, 8(5), e62836.

- Hervais-Adelman, A. G., Davis, M. H., Johnsrude, I. S., Taylor, K. J., & Carlyon, R. P. (2011). Generalization of perceptual learning of vocoded speech. Journal of Experimental Psychology. Human Perception and Performance, 37(1), 283–295.

- Huyck, J. J., & Johnsrude, I. S. (2012). Rapid perceptual learning of noise-vocoded speech requires attention. Journal of the Acoustical Society of America, 131(3), EL236–242.

- Huyck, J. J., Smith, R. H., Hawkins, S., & Johnsrude, I. S. (2017). Generalization of perceptual learning of degraded speech across talkers. Journal of Speech, Language, and Hearing Research, 60(11), 3334–3341.

- Irvine, D. R. F. (2018). Auditory perceptual learning and changes in the conceptualization of auditory cortex. Hearing Research, 366, 3–16.

- Karawani, H., Bitan, T., Attias, J., & Banai, K. (2016). Auditory perceptual learning in adults with and without age-related hearing loss. Frontiers in Psychology, 6, 2066.

- Karawani, H., Lavie, L., & Banai, K. (2017). Short-term auditory learning in older and younger adults. Proceedings of the International Symposium on Auditory and Audiological Research, 6, 1–8. Retrieved from https://proceedings.isaar.eu/index.php/isaarproc/article/view/2017-01

- Kennedy-Higgins, D., Devlin, J. T., & Adank, P. (2020). Cognitive mechanisms underpinning successful perception of different speech distortions. Journal of the Acoustical Society of America, 147(4), 2728.

- Kraljic, T., & Samuel, A. G. (2006). Generalization in perceptual learning for speech. Psychonomic Bulletin & Review, 13(2), 262–268.

- Kraljic, T., & Samuel, A. G. (2007). Perceptual adjustments to multiple speakers. Journal of Memory and Language, 56(1), 1–15.

- Lecumberri, M. L. G., Cooke, M., & Cutler, A. (2010). Non-native speech perception in adverse conditions: A review. Speech Communication, 52(11–12), 864–886.

- Lehet, M. I., Fenn, K. M., & Nusbaum, H. C. (2020). Shaping perceptual learning of synthetic speech through feedback. Psychonomic Bulletin & Review, 27(5), 1043–1051.

- Loebach, J. L., & Pisoni, D. B. (2008). Perceptual learning of spectrally degraded speech and environmental sounds. Journal of the Acoustical Society of America, 123(2), 1126–1139.

- Manheim, M., Lavie, L., & Banai, K. (2018). Age, hearing, and the perceptual learning of rapid speech. Trends in Hearing, 22, 2331216518778651.

- McLaughlin, D. J., Baese-Berk, M. M., Bent, T., Borrie, S. A., & Van Engen, K. J. (2018). Coping with adversity: Individual differences in the perception of noisy and accented speech. Attention, Perception, & Psychophysics, 80(6), 1559–1570.

- McQueen, J. M., Cutler, A., & Norris, D. (2006). Phonological abstraction in the mental lexicon. Cognitive Science, 30(6), 1113–1126.

- Pallier, C., Sebastian-Galles, N., Dupoux, E., Christophe, A., & Mehler, J. (1998). Perceptual adjustment to time-compressed speech: A cross-linguistic study. Memory & Cognition, 26(4), 844–851.

- Peelle, J. E., & Wingfield, A. (2005). Dissociations in perceptual learning revealed by adult age differences in adaptation to time-compressed speech. Journal of Experimental Psychology. Human Perception and Performance, 31(6), 1315–1330.

- Perrachione, T. K., Lee, J., Ha, L. Y., & Wong, P. C. (2011). Learning a novel phonological contrast depends on interactions between individual differences and training paradigm design. Journal of the Acoustical Society of America, 130(1), 461–472.

- Rayes, H., Al-Malky, G., & Vickers, D. (2019). Systematic review of auditory training in pediatric cochlear implant recipients. Journal of Speech, Language, and Hearing Research, 62(5), 1574–1593.

- Reetzke, R., Xie, Z., Llanos, F., & Chandrasekaran, B. (2018). Tracing the trajectory of sensory plasticity across different stages of speech learning in adulthood. Current Biology, 28(9), 1419–1427 e1414.

- Rotman, T., Lavie, L., & Banai, K. (2020). Rapid perceptual learning: A potential source of individual differences in speech perception under adverse conditions? Trends in Hearing, 24, 2331216520930541.

- Samuel, A. G., & Kraljic, T. (2009). Perceptual learning for speech. Attention, Perception, & Psychophysics, 71(6), 1207–1218.

- Saunders, G. H., Smith, S. L., Chisolm, T. H., Frederick, M. T., McArdle, R. A., & Wilson, R. H. (2016). A randomized control trial: Supplementing hearing aid use with listening and communication enhancement (LACE) auditory training. Ear and Hearing, 37(4), 381–396.

- Sohoglu, E., & Davis, M. H. (2016). Perceptual learning of degraded speech by minimizing prediction error. Proceedings of the National Academy of Sciences of the United States of America, 113(12), E1747–1756.

- Song, J. H., Skoe, E., Banai, K., & Kraus, N. (2012). Training to improve hearing speech in noise: Biological mechanisms. Cerebral Cortex, 22(5), 1180–1190.

- Tarabeih-Ghanayim, M., Lavner, Y., & Banai, K. (2020). Tasks, talkers, and the perceptual learning of time-compressed speech. Auditory Perception & Cognition, 3, 1–22.

- Vermeire, K., Knoop, A., De Sloovere, M., Bosch, P., & van den Noort, M. (2019). Relationship between working memory and speech-in-noise recognition in young and older adult listeners with age-appropriate hearing. Journal of Speech, Language, and Hearing Research, 62(9), 3545–3553.

- Wayne, R. V., & Johnsrude, I. S. (2012). The role of visual speech information in supporting perceptual learning of degraded speech. Journal of Experimental Psychology. Applied, 18(4), 419–435.

- Yang, J., Yan, F. F., Chen, L., Xi, J., Fan, S., Zhang, P., … Huang, C. B. (2020). General learning ability in perceptual learning. Proceedings of the National Academy of Sciences of the United States of America, 117(32), 19092–19100.

- Zheng, Y., & Samuel, A. G. (2020). The relationship between phonemic category boundary changes and perceptual adjustments to natural accents. Journal of Experimental Psychology. Learning, Memory, and Cognition, 46(7), 1270–1292.