Abstract

Purpose

The Relational Aspects of Care Questionnaire (RAC-Q) is an electronic instrument which has been developed to assess staff’s interactions with patients when delivering relational care to inpatients and those accessing accident and emergency (A&E) services. The aim of this study was to reduce the number of questionnaire items and explore scoring methods for “not applicable” response options.

Patients and methods

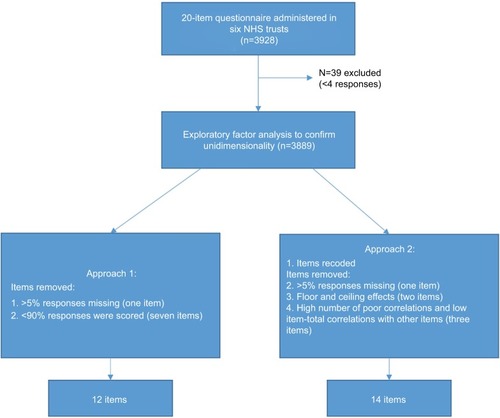

Participants (n=3928) were inpatients or A&E attendees across six participating hospital trusts in England during 2015–2016. The instrument, consisting of 20 questionnaire items, was administered by trained hospital volunteers over a period of 10 months. Items were subjected to exploratory factor analysis to confirm unidimensionality, and the number of items was reduced using a range of a priori psychometric criteria. Two alternative approaches to scoring were undertaken: the first treated “not applicable” responses as missing data, while the second adopted a problem score approach in which “not applicable” was considered “no problem with care.”

Results

Two short-form RAC-Qs, one for each scoring option, were identified. The first (the RAC-Q-12) contained 12 items, while the second (the RAC-Q-14) contained 14 items. Scores from both short forms correlated highly with the full 20-item parent form score (RAC-Q-12, r=0.93; RAC-Q-14, r=0.92), displayed high internal consistency (Cronbach’s α: RAC-Q-12=0.92; RAC-Q-14=0.89) and showed high levels of agreement with the parent form (intraclass correlation coefficient [ICC]=0.97 for both scales).

Conclusion

The RAC-Q is designed to offer near-real-time feedback on staff’s interactions with patients when delivering relational care. The new short-form RAC-Qs and their respective methods of scoring produce scores that reflect those derived using the full 20-item parent form. The new short-form RAC-Qs may be incorporated into inpatient surveys to enable the comparison of ward or hospital performance. Using either the RAC-Q-12 or the RAC-Q-14 offers a method to reduce missing data and response fatigue.

Introduction

Person-centered care is considered to be a key component of high-quality health care in the UK.Citation1–Citation4 Achieving person-centered care, however, can be challenging, as it concerns not only how services are delivered but also the relationships between health care professionals and patients who have varying levels of dependency.Citation5,Citation6 It is important that experiences of care are monitored to provide insights into how care delivery can be improved. A number of patient experience measures are currently in use in the National Health Service (NHS). These measures predominantly focus on transactional or “functional” aspects of care, such as cleanliness, waiting times and pain management.Citation7–Citation10 While functional aspects of care are important, they do not reflect “relational” aspects of care, which focus on interactions between health care professionals and patients.Citation11 Fostering a relationship-centered approach to care, particularly for older patients, is believed to be key to providing positive experiences of care and can contribute to patients’ emotional comfort.Citation12–Citation14 “Relational” care concerns interpersonal aspects of care, such as communication, providing space for patients to discuss concerns or fears, and treating patients with respect and dignity.Citation11,Citation15

Despite the importance of relational care, a recent independent inquiryCitation16 into the care provided by the UK Mid Staffordshire NHS Foundation Trust identified deficiencies in its delivery. A key recommendation of the report was the need for a suitable instrument to assess relational aspects of care, in particular for use among older inpatients and those accessing accident and emergency (A&E) services. The inquiry also highlighted real-time data collection as a key mechanism for monitoring relational care in a timely and efficient manner. There is currently no electronic instrument with which to measure staff’s interactions with older people or those attending A&E services with regard to relational care. With these considerations in mind, the University of Oxford (Oxford, UK) and Picker Institute Europe (Oxford, UK) developed an instrument, the Relational Aspects of Care Questionnaire (RAC-Q),Citation17 for use within this context.

The RAC-Q was designed for administration on a tablet computer so that responses could be fed back to participating wards in “near real time.” In brief, questionnaire items were constructed following a review of relevant literature, a focus group and eight interviews carried out with recent inpatients and A&E attendees. Eight overarching themes reflecting staff’s interactions with patients when delivering relational care were identified, and existing questionnaire items were sourced to reflect each theme. Questionnaire items, representing likely manifestations of staff’s interaction style when delivering relational care, were selected in the belief that positive patient responses would indicate the delivery of good relational care.Citation18,Citation19 Existing items were sourced from the 2013 National NHS Inpatient and Emergency Department surveys, and additional items were developed where no existing item was deemed suitable. A total of 62 items were reviewed by an expert advisory group (n=5) consisting of public and patient representatives, hospital patient experience representatives and academics, who reduced the 62 identified items to 22. Finally, cognitive interviews (n=30) were conducted with current inpatients and A&E patients at three hospitals in England to identify duplicate items and ensure participants’ understanding. A detailed account of the instrument’s development is given elsewhere.Citation17 During testing, the questionnaire was administered via a tablet computer to confirm its suitability for use in the inpatient context. The process resulted in 20 confirmed items that were regarded as relevant and acceptable for measuring staff’s interactions with regard to delivering relational care. Item responses were coded from 0 to 100, where 0=worst care and 100=best care. Nine items also included additional response options to indicate that the question was not applicable to the respondent.
The 20-item questionnaire was subsequently administered during a 10-month pilot study across six trusts in selected wards which provided care primarily to people aged 75 years and older and among those attending A&E.

A detailed evaluation of the barriers and facilitators to the implementation of the RAC-Q is given elsewhere.Citation20 While many barriers to collecting real-time data can relate to technology resources and staff engagement, reducing the number of questionnaire items to be administered is one way to reduce the burden of questionnaire completion. Fewer items are particularly welcome given that older people and A&E attendees can present unique challenges when completing lengthy questionnaires. For example, many are likely to be living with conditions affecting hearing, speech, vision and cognitive processing, and those attending A&E services may be in acute pain, in shock or experiencing trauma.Citation39,Citation40 The first aim of the analyses reported in this study was to investigate the feasibility of reducing the length of the original 20-item questionnaire through the creation of a short-form RAC-Q. Short forms have been developed for a large number of widely used questionnaires, and many have been found to be acceptable and informative, providing results similar to those of their original parent form.Citation21–Citation23 Fewer items can be advantageous in studies or evaluations where additional items or measures are administered concurrently or where patient burden should be minimized (e.g., with older patients or those who have recently experienced acute pain or shock).Citation24

The second aim of these analyses was to explore methods of scoring the questionnaire in the case of missing or nonevaluative data. This included exploring the feasibility of allocating scores to the “not applicable” response options of the nine relevant items within the instrument, so that information was not lost and missing data were reduced. Categorizing “not applicable” responses, which are typically left unscored, can maximize the number of usable responses and may improve the interpretation of results for health care providers. Two analytic approaches were taken to address these aims. The first approach left the “not applicable” responses unscored, similar to the methods used in the NHS patient survey program.Citation25 The second approach introduced a “problem score” approach, in which responses were rescored to indicate the presence or absence of a problem with staff’s interaction styles in the context of relational care. Problem score approaches have been used successfully in the pastCitation26,Citation27 and, in this instance, “not applicable” responses were recoded from “no score” to “100,” indicating “no problem with care” (Table 1).
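The two scoring rules can be expressed as one short function per approach. The Python sketch below is illustrative only: the string codes for nonevaluative answers are hypothetical placeholders (the actual codes are those in Table 1), and it assumes that “do not know” and skipped responses remain unscored under both approaches, which the text does not state explicitly.

```python
from typing import Optional, Union

# Hypothetical codes for nonevaluative answers; the actual RAC-Q response
# codes are those listed in Table 1 and are not reproduced here.
NOT_APPLICABLE = "not applicable"
DO_NOT_KNOW = "do not know"

# A raw answer is a 0-100 score, a nonevaluative string, or None if skipped.
Raw = Union[float, str, None]


def score_approach1(response: Raw) -> Optional[float]:
    """Approach 1: 'not applicable' (like other nonevaluative answers) stays unscored."""
    if response is None or isinstance(response, str):
        return None
    return float(response)  # already coded 0 = worst care, 100 = best care


def score_approach2(response: Raw) -> Optional[float]:
    """Approach 2 (problem score): 'not applicable' becomes 100, 'no problem with care'."""
    if response == NOT_APPLICABLE:
        return 100.0
    # Assumption: 'do not know' and skipped items stay unscored here as well.
    return score_approach1(response)
```

Applied to the same set of answers, the two functions differ only for “not applicable,” which becomes 100 under approach 2 and remains unscored under approach 1.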

Table 1 Response option codes for approach 1 and approach 2

Patients and methods

Data source

The purpose of this study was to explore two approaches to item reduction and scoring for the RAC-Q. The study focused on whether reducing the number of items and differences in the scoring of items had any implications for the interpretation of the overall score. Data reported in this study were collected over 10 months in six participating trusts in England during 2015–2016. The instrument, consisting of 20 questionnaire items, was administered by hospital volunteers who had received training on the use of the tablet, the practice of administering questionnaires and how to approach patients. Two study sites opted to use a freestanding tablet kiosk within their A&E department instead of volunteers due to environmental constraints. All participants provided written informed consent. The East of Scotland Research Ethics Service reviewed this study and provided a favorable opinion in August 2014 (14/ES/1065).

Analysis

Two approaches to item reduction and scoring were applied (Table 1). Analyses for both approaches were initially restricted to 3889 of the 3928 patient cases, which were deemed “useable” because at least four valid item responses were recorded. To optimize efficiency and minimize respondent burden, the analysis aimed to retain only the most meaningful and relevant items. After a preliminary exploratory factor analysis confirmed the unidimensionality of responses,Citation28 items were checked for suitability for inclusion against a range of a priori criteria, as outlined for each approach. Items with more than 5% nonresponse (skipped or missing responses) were excluded. In the first scoring approach (approach 1), items with high numbers of unscored “not applicable” responses, which limited the information they provided, were excluded. Items with <90% scored responses were also excluded.
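As an illustration, the case-level and approach 1 item-level screens described above might be sketched as follows. The function names and the data layout (one list per patient, with a number for a scored response, a string for a nonevaluative answer and None for a skipped item) are assumptions, and the sketch applies the 5% nonresponse and 90% scored-response thresholds in a single pass, whereas the authors removed items in stages.

```python
from typing import List, Union

# Hypothetical layout: one list per patient; a number is a scored 0-100 response,
# a string is a nonevaluative answer (e.g. "not applicable"), None is a skipped item.
Raw = Union[float, str, None]


def is_scored(response: Raw) -> bool:
    """True for a numeric (scored) response."""
    return isinstance(response, (int, float)) and not isinstance(response, bool)


def usable_cases(cases: List[List[Raw]], min_valid: int = 4) -> List[List[Raw]]:
    """Keep patients with at least `min_valid` scored item responses."""
    return [case for case in cases if sum(is_scored(r) for r in case) >= min_valid]


def screen_items_approach1(cases: List[List[Raw]],
                           max_nonresponse: float = 0.05,
                           min_scored: float = 0.90) -> List[int]:
    """Return indices of items passing the nonresponse and scored-response checks."""
    n = len(cases)
    kept = []
    for j in range(len(cases[0])):
        item = [case[j] for case in cases]
        nonresponse = sum(r is None for r in item) / n  # skipped or missing
        scored = sum(is_scored(r) for r in item) / n    # excludes "not applicable"
        if nonresponse <= max_nonresponse and scored >= min_scored:
            kept.append(j)
    return kept
```

On a toy three-item dataset, an item with 20% skipped answers fails the nonresponse check, and an item where one patient in five answered “not applicable” fails the 90% scored-response check.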

The second approach to scoring (approach 2) aimed to maximize usable patient data and identify the absence or presence of a problem with the delivery of relational care. Items with “not applicable” categories were recoded as outlined in Table 1, and items were removed if they had exceptionally high (>90%) floor or ceiling effects, as these indicated poor variation and provided little information. Items that displayed a high number of poor correlations (<0.3) with other items in the questionnaire were also removed, because poor correlations with a large number of items can indicate that a particular item is not measuring the same construct as the other items in the scale.Citation29,Citation30 Finally, reliability analysis was used to identify items with low item-to-total correlations (<0.3) and items that lowered the Cronbach’s α value.Citation29 Items displaying a high number of poor correlations with other items, or items that lowered the Cronbach’s α value, were removed iteratively.
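The statistics used in the approach 2 screen can be computed directly from a matrix of complete scored responses. The Python sketch below is a hypothetical illustration of those quantities, not the authors’ code: the floor or ceiling share, counts of inter-item correlations below 0.3, item-to-total correlations (here assumed to be the corrected form, correlating each item with the sum of the remaining items), Cronbach’s α = k/(k − 1)·(1 − Σσ²ᵢ/σ²ₓ) and α if an item is deleted. The iterative decision about which item to drop at each step is left to the analyst.

```python
from statistics import mean, variance
from typing import List, Sequence

Matrix = List[List[float]]  # rows: respondents with complete scored data; columns: items


def column(data: Matrix, j: int) -> List[float]:
    return [row[j] for row in data]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    # A constant item has no defined correlation; treat it as 0 (i.e. "poor").
    return sxy / (sxx * syy) ** 0.5 if sxx and syy else 0.0


def extreme_share(values: List[float], floor: float = 0.0, ceiling: float = 100.0) -> float:
    """Largest share of responses sitting at the floor or at the ceiling."""
    return max(values.count(floor), values.count(ceiling)) / len(values)


def poor_correlation_counts(data: Matrix, threshold: float = 0.3) -> List[int]:
    """For each item, how many other items it correlates with below `threshold`."""
    cols = [column(data, j) for j in range(len(data[0]))]
    return [sum(1 for i, other in enumerate(cols) if i != j and pearson(col, other) < threshold)
            for j, col in enumerate(cols)]


def cronbach_alpha(data: Matrix) -> float:
    k = len(data[0])
    item_variances = sum(variance(column(data, j)) for j in range(k))
    total_variance = variance([sum(row) for row in data])
    return k / (k - 1) * (1 - item_variances / total_variance)


def corrected_item_total(data: Matrix, j: int) -> float:
    """Correlation of item j with the sum of the remaining items."""
    rest = [sum(row) - row[j] for row in data]
    return pearson(column(data, j), rest)


def alpha_if_deleted(data: Matrix, j: int) -> float:
    """Cronbach's alpha recomputed without item j."""
    return cronbach_alpha([[v for i, v in enumerate(row) if i != j] for row in data])
```

An item would be a removal candidate under the stated criteria if `extreme_share` exceeds 0.9, if it has many entries in `poor_correlation_counts`, if `corrected_item_total` falls below 0.3, or if `alpha_if_deleted` exceeds the current `cronbach_alpha`.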

Score comparisons

For each item, scored response categories were valued from 0 (worst care) to 100 (best care). Scale scores were obtained by calculating the mean item score for cases with complete scored data only (i.e., where a scored response was obtained for all 20 items). In the case of non-normal distributions, scale scores were also standardized using the Blom approach for normalization.Citation31
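The scale score and the Blom transformation can be sketched as follows. The Blom normal score for an observation of rank r among n values is Φ⁻¹((r − 3/8)/(n + 1/4)), where Φ⁻¹ is the inverse standard normal distribution function. The Python sketch assumes that tied scores, which are common with highly skewed data, receive the average of their ranks; the text does not say how ties were handled.

```python
from statistics import NormalDist
from typing import List, Optional, Sequence


def scale_score(item_scores: Sequence[Optional[float]]) -> Optional[float]:
    """Mean item score, or None unless every item has a scored (0-100) response."""
    if any(score is None for score in item_scores):
        return None
    return sum(item_scores) / len(item_scores)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def blom(values: Sequence[float]) -> List[float]:
    """Blom normal scores: inverse normal of (rank - 3/8) / (n + 1/4)."""
    n = len(values)
    standard_normal = NormalDist()  # mean 0, SD 1
    return [standard_normal.inv_cdf((r - 3 / 8) / (n + 1 / 4)) for r in average_ranks(values)]
```

Because `scale_score` returns None for any patient with an unscored item, the complete-case samples used for the scale comparisons are necessarily smaller than the full usable sample.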

Differences between the 20-item survey and the new reduced scales were assessed using a paired-sample t-test. Agreement between scales was measured using two-way mixed-model intraclass correlation coefficients (ICCs) for consistency and exact agreement, and Spearman’s ρ for ordinal agreement.Citation32
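A minimal Python sketch of these comparison statistics follows, assuming two equal-length lists of scale scores for the same patients. It computes the paired t statistic, single-measure two-way ICCs for consistency and absolute agreement following the McGraw and Wong formulas, and Spearman’s ρ from average ranks. Whether the study reported single- or average-measure ICCs is not stated, so the single-measure choice is an assumption; p values are omitted, and a library routine such as SciPy’s `scipy.stats.ttest_rel` would normally supply them.

```python
from math import sqrt
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired-sample t statistic and degrees of freedom for the differences a - b."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / (stdev(d) / sqrt(len(d))), len(d) - 1


def icc_two_way(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Single-measure two-way ICCs: (consistency, absolute agreement)."""
    rows = list(zip(a, b))
    n, k = len(rows), 2
    grand = mean([x for r in rows for x in r])
    ss_rows = k * sum((mean(r) - grand) ** 2 for r in rows)
    ss_cols = n * sum((m - grand) ** 2 for m in (mean(a), mean(b)))
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ms_r = ss_rows / (n - 1)                       # between-patient mean square
    ms_c = ss_cols / (k - 1)                       # between-scale mean square
    ms_e = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    consistency = (ms_r - ms_e) / (ms_r + (k - 1) * ms_e)
    agreement = (ms_r - ms_e) / (ms_r + (k - 1) * ms_e + k / n * (ms_c - ms_e))
    return consistency, agreement


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks."""
    ra, rb = average_ranks(a), average_ranks(b)
    ma, mb = mean(ra), mean(rb)
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    return cov / sqrt(sum((x - ma) ** 2 for x in ra) * sum((y - mb) ** 2 for y in rb))
```

With a constant offset between two scales (for example, one scale always 5 points higher), the consistency ICC is 1 while the absolute agreement ICC falls below 1, which shows how the two ICC forms differ.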

Scores for the new reduced questionnaires were compared across patient characteristics (sex, age) and hospital trusts to investigate whether the instrument could detect differences between patient groups. Modes of completion were also compared to ensure that patient responses did not differ depending on the device used to administer the survey.

Results

Characteristics

The average time taken to complete the survey, when restricted to questionnaire sessions that started and finished on the same day (n=3908), was 8.5 min (SD 9.9). Restricting the calculation to sessions completed on the same day gave a more accurate estimate of completion time, because it reduced the number of included submissions that had not been uploaded at the time of completion.

All further analyses for item reduction were restricted to responses that were deemed “useable” (those having at least four valid responses to scored items). The sample (n=3889) included 1687 (43.4%) men and 2044 (52.6%) women, with a mean age of 65 years (SD 20.9, median 70 years). Further sample characteristics are summarized in Table 2. Most participants completed the survey on a tablet (n=3590, 92.3%), while the remainder (n=299, 7.7%) used a kiosk.

Table 2 Characteristics (for cases with at least four responses)

Approach 1 analysis

Eight items were removed according to the a priori criteria, as follows. One item (item 17) was removed due to a high proportion of missing data from nonresponse (6.2%); for all other questions, at least 95% of respondents recorded an answer. While the proportion giving a “do not know” response was generally low (<2% of responses), the numbers of possible “not applicable” responses (e.g., “I have not asked for help”) were high. In this reduction approach, “not applicable” responses were left unscored. Taking into account the extent of nonevaluative (not applicable) item data, two further items (items 9 and 16) were removed. The 17 retained items still included some items with relatively high proportions of missing data, which presented potential problems in computing an overall score: only 1561 cases had complete data on the reduced 17-item set. A further step of item removal was therefore undertaken, and five items (items 7, 8, 12, 14 and 15) with <90% response were removed, leaving 12 items for a new short-form RAC-Q to be compared against the full RAC-Q. Removed items are summarized in Table 3.

Table 3 Items removed during the study for approaches 1 and 2

Approach 2 analysis

Six items were removed according to the a priori criteria outlined in the “Patients and methods” section. One item (item 17) was removed due to a high proportion of missing data (6.2%) and a high number of poor correlations with other questionnaire items. Two items (items 18 and 20) were removed due to ceiling effects, with >90% of respondents giving the same answer. Following their removal, one item (item 12) was removed because its removal increased the internal consistency of the instrument and it had a high number of poor correlations with other items. Two final items (items 1 and 2) were removed due to high numbers of poor correlations with other items. Removed items are summarized in Table 3.

Scale comparisons

Scale statistics are reported in Table 4 for cases with complete scored data on both the full 20-item parent form and the respective short form (approach 1, n=609; approach 2, n=2967). For both approaches, agreement and consistency between the parent form and its short-form scale were high. Internal consistency was excellent for both the 12- and 14-item scales, with item-total correlations ranging from 0.54 to 0.79 and from 0.51 to 0.70, respectively. Differences between the parent form and the RAC-Q-12 short form were assessed using a paired-samples t-test. The difference was statistically significant for raw scores (t=−11.05, df=608, p<0.0001) but not when both scales were normalized (t=0.37, df=608, p=0.71). Similarly, the difference between the parent form and RAC-Q-14 raw scores was statistically significant (t=−19.15, df=2966, p<0.001); after normalization the test statistic was much smaller but, as reported (t=3.00, df=2966, p<0.05), still significant at the 5% level. Overall, these results indicate that both the RAC-Q-12 and the RAC-Q-14 short-form scores are reflective of the full 20-item parent form score.

Table 4 Scale descriptive statistics

Approach 2 (RAC-Q-14) retained more cases, with 3215 respondents providing scored responses for 14 items, compared with 3087 respondents providing scored responses for 12 items under approach 1 (RAC-Q-12). Scores were highly skewed toward the top end of the scale (best care), so statistical analyses for the new scales were carried out using nonparametric statistics. Significant differences were found between men and women (men reporting more positive experiences of care than women) for both scales. No differences were found between modes of completion for the RAC-Q-14; however, slight differences by mode of completion were found for the RAC-Q-12 (Table 5). Short-form scores were not significantly correlated with age (RAC-Q-12: Spearman’s ρ=0.02, p=0.30, n=3021; RAC-Q-14: Spearman’s ρ=−0.02, p=0.34, n=3140). Kruskal–Wallis tests for k independent samples indicated a significant difference between trusts, demonstrating that the reduced questionnaires are capable of detecting differences between trusts (Table 5).

Table 5 Differences between sex, mode of completion (Mann–Whitney U-test of significance) and hospital trusts (Kruskal–Wallis k-independent samples test)
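For the nonparametric comparisons summarized in Table 5, a self-contained Python sketch of the Mann–Whitney U statistic and the tie-corrected Kruskal–Wallis H statistic is given below. It is illustrative only and returns test statistics without p values; in practice, library routines such as `scipy.stats.mannwhitneyu` and `scipy.stats.kruskal` would typically be used. The tie correction matters here because highly skewed scores produce many tied values at the top of the scale.

```python
from collections import Counter
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> float:
    """U statistic for sample x, from average ranks in the pooled sample."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    return rank_sum_x - len(x) * (len(x) + 1) / 2


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Tie-corrected Kruskal-Wallis H for k independent samples (e.g. trusts)."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start : start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0  # all values tied: no information
```

When every score is tied the correction term is zero and H is undefined; the sketch returns 0.0 in that case rather than dividing by zero.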

Discussion

Measuring staff’s interactions with patients is an important way to assess the delivery of relational care. Monitoring these interactions using a patient self-report instrument in near real time may provide the most efficient way in which hospital staff can address any inadequacies of care.Citation16 In addition to monitoring levels of relational care within wards, administering the RAC-Q offers hospital staff the opportunity to compare performance between wards and has the flexibility to be incorporated into existing data collections which may be ongoing within a trust.

This study aimed to address the data completion and scoring challenges experienced when administering the full 20-item RAC-Q in a busy hospital setting. Two new short-form RAC-Qs were identified, the RAC-Q-12 and the RAC-Q-14, consisting of 12 and 14 items, respectively. These short forms require patients to answer fewer questions, yet were found to produce results very similar to those of the parent 20-item RAC-Q. Fewer administered items will reduce patient burden, which is particularly welcome in a busy hospital environment or where patients are in acute pain or shock. The short-form RAC-Qs also provide more scope for relational care to be monitored alongside other measures, for example, indicators of functional care. As with other established short-form questionnaires,Citation21–Citation23 analyses confirmed that the short-form RAC-Qs have good psychometric properties, with excellent internal consistency and high agreement with the parent form. Therefore, while the full 20-item RAC-Q may offer slightly more precision, the short-form RAC-Qs are recommended where brevity is required. The choice between the two new short forms offers some flexibility in the item content of the questionnaire administered. This is important because, while many studies to date have concluded that shorter questionnaires can improve response rates,Citation33,Citation34 there is also evidence that questionnaire length does not always affect response rates or data quality.Citation35 The choice of questionnaire should therefore always be based on content rather than length.Citation36

The second aim of this study was to explore potential scoring options for responses to RAC-Q items that would otherwise have been excluded from analysis because their response options were left unscored.

While the RAC-Q-12 has the advantage of fewer items to administer, large numbers of unscored “not applicable” responses can limit the interpretation of total scores. The RAC-Q-14 scoring structure retains and values “not applicable” responses, minimizing missing or “nonevaluative” data. Assigning a fixed value to “not applicable” responses simplifies the calculation of the RAC-Q-14 total score and means that minimal training is needed to calculate and interpret scores. Simple scoring algorithms and reduced missing data are particularly advantageous in clinical settings. By contrast, multiple imputation techniques for handling missing responses can be complex and impractical to apply, with various pitfalls.Citation37 Alternative techniques for handling missing data, such as “hot decking,” where missing responses are replaced with values obtained from a similar respondent (e.g., one with similar characteristics), also exist in many variants, which can be complex and would benefit from further study to support their application.Citation38

The RAC-Q-12 and RAC-Q-14 are short questionnaires to assess staff’s interactions with patients when delivering relational care. Questions should be applicable to all patients and have been specifically tested for their suitability among older inpatients and A&E attendees. In practice, the short-form RAC-Qs provide a resource for health care providers not only to monitor relational care but also to use information collected to drive improvement in targeted hospital settings. Continuous electronic data collection using real-time feedback can then provide a mechanism with which to evaluate the success of initiatives introduced to address relational care. Evidence collected using these instruments may be of interest to a range of groups including clinical staff, quality improvement teams and board members to provide assurances in the standards of relational care being delivered.

Limitations

While the RAC-Q will provide a valuable means of monitoring staff’s interactions with patients in hospital settings, it is important to note that mean reported scores within the dataset were high. This may have implications for interpreting scores and for the instruments’ sensitivity to continually improving scores. Nonetheless, initial analysis has shown that the instruments can detect differences: while results largely indicate good delivery of relational care, differences were detected between the six participating trusts and between scores reported by men and women. RAC-Q-14 scores did not differ by mode of administration, going some way to indicating that responses do not differ between tablet computer and kiosk completion. Slight differences by mode of administration were, however, found for RAC-Q-12 scores. This may require further investigation in the future with a larger sample of kiosk completions. It is also worth noting that the two sites that opted to use kiosks during the 10-month pilot study stopped using them during data collection due to operational difficulties and poor recruitment uptake. These experiences go some way to suggesting that standalone kiosks are generally unsuitable, even where the instrument’s properties are compatible with tablet computer completion.

Conclusion

The RAC-Q provides a set of questions measuring staff’s interactions with patients when delivering relational care that are applicable across hospital trusts and relevant to all inpatients and those attending A&E. The RAC-Q-12 and RAC-Q-14 are very highly associated with the parent questionnaire and can be used for action planning and policy decisions within a trust, or analyzed at the individual item level.

Acknowledgments

We thank all volunteers, staff, the study team and advisory group members who worked with us. We also thank the collaborators at the six case study sites. This project was funded by the National Institute for Health Research Health Services and Delivery Research Program (project number 13/07/39). The views and opinions expressed therein are those of the authors and do not necessarily reflect those of the Health Services and Delivery Research Program, the National Institute for Health Research (NIHR), the NHS or the Department of Health. This article presents independent research funded by the NIHR. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health.

Disclosure

The authors report no conflicts of interest in this work.

References

- Department of Health. The NHS Plan: A Plan for Investment, A Plan for Reform. Norwich: HMSO; 2000.

- Darzi A; Department of Health. High Quality Care for All: NHS Next Stage Review Final Report. London: Department of Health; 2008.

- Department of Health. The NHS Constitution for England. London: Department of Health; 2013.

- Berwick D. A Promise to Learn – A Commitment to Act: Improving the Safety of Patients in England. London: Williams Lea; 2013.

- The Health Foundation. Person-Centred Care Made Simple. What Everyone Should Know About Person-Centred Care. London: Health Foundation; 2014.

- Paparella G. Person-Centred Care in Europe: A Cross-Country Comparison of Health System Performance, Strategies and Structures. Oxford, England: Picker Institute Europe; 2016.

- Campbell J, Smith P, Nissen S, Bower P, Elliott M, Roland M. The GP Patient Survey for use in primary care in the National Health Service in the UK – development and psychometric characteristics. BMC Fam Pract. 2009;10:57.

- Roberts JI, Sauro K, Jette N. Using a standardized assessment tool to measure patient experience on a seizure monitoring unit compared to a general neurology unit. Epilepsy Behav. 2012;24(1):54–58.

- Gremigni P, Sommaruga M, Peltenburg M. Validation of the Health Care Communication Questionnaire (HCCQ) to measure outpatients’ experience of communication with hospital staff. Patient Educ Couns. 2008;71(1):57–64.

- Davies EA, Madden P, Coupland VH, Griffin M, Richardson A. Comparing breast and lung cancer patients’ experiences at a UK Cancer Centre: implications for improving care and moves towards a person centered model of clinical practice. Eur J Pers Cent Healthc. 2011;1(1):177–189.

- Doyle C, Lennox L, Bell D. A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open. 2013;3(1):e001570.

- Williams AM, Irurita VF. Therapeutic and non-therapeutic interpersonal interactions: the patient’s perspective. J Clin Nurs. 2004;13(7):806–815.

- Williams AM, Kristjanson LJ. Emotional care experienced by hospitalised patients: development and testing of a measurement instrument. J Clin Nurs. 2009;18(7):1069–1077.

- Bridges J, Flatley M, Meyer J. Older people’s and relatives’ experiences in acute care settings: systematic review and synthesis of qualitative studies. Int J Nurs Stud. 2010;47(1):89–107.

- Coulter A. Engaging Patients in Healthcare. Maidenhead: McGraw-Hill Education; 2011.

- Francis R. Report of the Mid Staffordshire NHS Foundation Trust Public Inquiry. London: The Stationery Office; 2013.

- Graham C, Käsbauer S, Cooper R. An Evaluation of a Near Real-Time Survey for Improving Patients’ Experiences of the Relational Aspects of Care. Health Services and Delivery Research. Southampton: NIHR Health Technology Assessment Programme; 2017.

- Fayers PM, Hand DJ. Factor analysis, causal indicators and quality of life. Qual Life Res. 1997;6(2):139–150.

- Fayers PM, Hand DJ. Causal variables, indicator variables and measurement scales: an example from quality of life. J R Stat Soc A Stat Soc. 2002;165(2):233–253.

- Käsbauer S, Cooper R, Kelly L, King J. Barriers and facilitators of a near real-time feedback approach for measuring patient experiences of hospital care. Health Policy Technol. 2016;6(1):51–58.

- De Bruin AF, Diederiks JPM, De Witte LP, Stevens FCJ, Philipsen H. The development of a short generic version of the sickness impact profile. J Clin Epidemiol. 1994;47(4):407–418.

- Ware JE Jr, Sherbourne CD. The MOS 36-item short-form health survey (SF-36). Conceptual framework and item selection. Med Care. 1992;30(6):473–483.

- Bohlmeijer E, ten Klooster PM, Fledderus M, Veehof M, Baer R. Psychometric properties of the five facet mindfulness questionnaire in depressed adults and development of a short form. Assessment. 2011;18(3):308–320.

- Dillman DA, Smyth JD, Christian LM. Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method. Hoboken, NJ: Wiley Publishing; 2014.

- NHS Surveys [webpage on the Internet]. NHS Adult Inpatient Survey 2015. 2015. Available from: http://nhssurveys.org/survey/1641. Accessed April 01, 2017.

- Jenkinson C, Coulter A, Gyll R, Lindstrom P, Avner L, Hoglund E. Measuring the experiences of health care for patients with musculoskeletal disorders (MSD): development of the Picker MSD questionnaire. Scand J Caring Sci. 2002;16(3):329–333.

- Jenkinson C, Coulter A, Bruster S. The Picker Patient Experience Questionnaire: development and validation using data from in-patient surveys in five countries. Int J Qual Health Care. 2002;14(5):353–358.

- Fabrigar LR, Wegener DT. Exploratory Factor Analysis. Oxford: Oxford University Press; 2012.

- Nunnally J, Bernstein IH. Psychometric Theory. 3rd ed. New York: McGraw-Hill; 1994.

- Hinkin TR. A brief tutorial on the development of measures for use in survey questionnaires. Organ Res Meth. 1998;1(1):104–121.

- Kraja AT, Corbett J, Ping A. Rheumatoid arthritis, item response theory, Blom transformation, and mixed models. BMC Proc. 2007;1(Suppl 1):S116.

- McGraw KO, Wong SP. Forming inferences about some intraclass correlation coefficients. Psychol Methods. 1996;1(1):30–46.

- Taylor-West P, Saker J, Champion D. The benefits of using reduced item variable scales in marketing segmentation. J Market Commun. 2014;20(6):438–446.

- Iglesias C, Torgerson D. Does length of questionnaire matter? A randomised trial of response rates to a mailed questionnaire. J Health Serv Res Policy. 2000;5(4):219–221.

- Jenkinson C, Coulter A, Reeves R, Bruster S, Richards N. Properties of the Picker Patient Experience questionnaire in a randomized controlled trial of long versus short form survey instruments. J Public Health Med. 2003;25(3):197–201.

- Rolstad S, Adler J, Ryden A. Response burden and questionnaire length: is shorter better? A review and meta-analysis. Value Health. 2011;14(8):1101–1108.

- Sterne JAC, White IR, Carlin JB. Multiple imputation for missing data in epidemiological and clinical research: potential and pitfalls. BMJ. 2009;338:b2393.

- Andridge RR, Little RJA. A review of hot deck imputation for survey non-response. Int Stat Rev. 2010;78(1):40–64.

- Murrells T, Robert G, Adams M, Morrow E, Maben J. Measuring relational aspects of hospital care in England with the ‘Patient Evaluation of Emotional Care during Hospitalisation’ (PEECH) survey questionnaire. BMJ Open. 2013;3(1):e002211.

- Brostow DP, Hirsch AT, Kurzer MS. Recruiting older patients with peripheral arterial disease: evaluating challenges and strategies. Patient Prefer Adherence. 2015;9:1121–1128.