Abstract

Background

Concept maps (CMs) visually represent hierarchical connections among related ideas. They foster logical organization and clarify idea relationships, potentially aiding medical students in critical thinking (to think clearly and rationally about what to do or what to believe). However, there are inconsistent claims about the use of CMs in undergraduate medical education. Our three research questions are 1) What studies have been published on concept mapping in undergraduate medical education; 2) What was the impact of CMs on students’ critical thinking; 3) How and why have these interventions had an educational impact?

Methods

Eight databases were systematically searched, supplemented by a manual search and an additional search. After eliminating duplicate entries, two authors independently screened titles and abstracts and then full texts. Data extraction and quality assessment of the studies were also performed independently by two authors. Qualitative and quantitative data were integrated using a mixed-methods approach. The results were reported using the STructured apprOach to the Reporting In healthcare education of Evidence Synthesis (STORIES) statement and BEME guidance.

Results

Thirty-nine studies from 26 journals were included (19 quantitative, 8 qualitative, and 12 mixed-methods studies). CMs were considered a tool to promote critical thinking, both in the perception of students and tutors and in the assessment of students’ knowledge and/or skills. In addition to their role as facilitators of knowledge integration and critical thinking, CMs were considered both a teaching and a learning method.

Conclusions

CMs are teaching and learning tools that seem to help medical students develop critical thinking. This appears to be due to the flexibility of the tool as a facilitator of knowledge integration and as a learning and teaching method. The wide range of contexts, purposes, and variations in how CMs and instruments to assess critical thinking are used increases our confidence that the positive effects are consistent.

Introduction

Medical students need more than just knowledge. To solve clinical problems, such as establishing a diagnosis and managing patients, students need to develop higher-level cognitive skills (Swanwick et al. 2019). Despite the importance of these cognitive skills, the terminology and boundaries between concepts are not clear-cut. Clinical reasoning, critical thinking, decision-making, problem-solving, clinical judgement, and diagnostic reasoning are often used interchangeably (Brentnall et al. 2022). For this manuscript, however, we think of clinical reasoning as an overarching concept that can be used to refer to reasoning skills, reasoning performance, reasoning processes, outcomes of reasoning, contexts of reasoning, or purpose of reasoning (Young et al. 2020). We define critical thinking as “the application of higher cognitive skills (…) to information (…) in a way that leads to action that is precise, consistent, logical, and appropriate” (Huang et al. 2014). In this manuscript, the focus is on the role of concept maps (CMs) as an instructional tool to support critical thinking, one of many reasoning skills under the overarching concept of clinical reasoning.

CMs were created by Novak and have been used in education since 1984 as tools to visually represent information as interrelated knowledge. They can be defined as a “schematic device for representing a set of concept meanings imbedded in a framework of propositions” (Novak 2003). Davies (2011) described six criteria for Novakian CMs: 1) develop a declarative-type focus question; 2) brainstorm concepts and ideas and place the concepts in circles or boxes to designate them as concepts; 3) put concepts in hierarchical order; 4) draw link lines between the hierarchical concepts from top to bottom; 5) devise suitable cross-links for key concepts in the map to show the relationship between the key concepts; 6) add examples to the terminal points of a map representing the concepts. However, the use of CMs in the literature is heterogeneous, and some maps do not meet all the criteria (non-Novakian CMs).

CMs may support the development of critical thinking throughout undergraduate medical training. They are based on the cognitive theory of Ausubel (Ausubel 2000), which states that meaningful learning occurs when new knowledge is connected to relevant concepts that the learner has already understood and that exist in the learner’s cognitive structure. CMs help students understand the organization and integration of relevant concepts and establish links between basic and clinical sciences (Pinto and Zeitz 1997; Daley et al. 2016). CMs force students to make thinking explicit and to justify how they arrived at their reasoning; they also slow the pace of learning, enabling students to digest and apply knowledge. These characteristics correspond to three strategies that can be used to teach critical thinking (Huang et al. 2014). While much of the CM literature seems to have been developed with pre-clerkship medical students, CMs may also be useful for developing critical thinking applied to clinical problems. In the clinical setting, there is a need for methods of teaching and learning that provide a pathway to the clinical reasoning process through networks of understanding (Kinchin 2008). CMs can help organize knowledge structures that explain how the clinical elements are connected among themselves and with the basic concepts. CMs have been used to develop clinical reasoning skills (Daley et al. 2016) and to facilitate efficient teacher-learner interactions from the classroom to the bedside (Pierce et al. 2020).

In BEME Guide No. 61 (Pierce et al. 2020), CMs were considered an effective strategy for teaching clinical reasoning in the clinical setting, but most of the evidence comes from studies with nursing students. Since clinical reasoning is conceptualized differently across health professions (Young et al. 2020), a review focused on medical students is relevant. The existing literature reviews on this topic focus on the educational effectiveness of CMs. In addition, health educators need to know what resources are required to implement CMs.

This review builds on evidence from Pierce et al. (2020), BEME Guide No. 61, and Daley et al. (2016). Our goal is to expand the understanding of the effectiveness of CMs in undergraduate medical education by reviewing the current body of research. We aim to answer three research questions:

What studies have been published on concept mapping in undergraduate medical education? (Description)

What was the impact of CMs on students’ critical thinking? (Justification)

How and why have these interventions had an educational impact? (Clarification)

Additionally, we aim to identify and reflect on the resources needed to implement the use of CMs, such as teacher training time and software.

Methods

In this review, we integrated qualitative and quantitative methodologies using a mixed-methods approach. Data from each report were treated with an integrated model to maximize the findings (Crandell et al. 2011). We reported the results using the STructured apprOach to the Reporting In healthcare education of Evidence Synthesis (STORIES) statement (Gordon and Gibbs 2014) and BEME guidance (Hammick et al. 2010). A study protocol was completed a priori and uploaded to the study repository on the BEME website.

Search strategy

After a pilot search, we conducted an electronic search within eight databases (PubMed, Scopus, Web of Science, Embase, Academic Search Complete, ERIC, b-on, and PsycINFO). We conducted a manual electronic search within Academic Medicine, Medical Education, Advances in Health Sciences Education, Medical Teacher, MedEdPublish, and grey literature sources to identify additional relevant articles. An additional search drew on the reference lists of the reviews detected in the initial search and on the databases of some authors who have been working with concept mapping for more than 20 years. The search strategy was developed with a medical librarian. The full search strategy for each database can be found in Supplementary Appendix 1. The search covered the period from database inception until May 2022, and an updated search was conducted in March 2023. After eliminating duplicate entries, titles and abstracts were independently screened by two authors according to the inclusion criteria described below. Inter-rater reliability was calculated using Cohen’s Kappa statistic. Full texts were obtained for the studies considered potentially eligible by at least one of the reviewers. The same two authors independently assessed the full texts to make a final judgment about study eligibility. Disagreements were resolved by discussion, involving a third reviewer when needed.
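As an illustration of the inter-rater statistic mentioned above, the following is a minimal sketch of how Cohen's Kappa can be computed for two screeners. The function name `cohens_kappa` and the ten include/exclude decisions are hypothetical toy values chosen for the example; they are not the review's screening data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, per label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both raters used a single identical label throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions for ten records (illustration only).
reviewer_1 = ["include", "include", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "exclude", "exclude"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"Cohen's kappa: {kappa:.3f}")
```

Kappa corrects raw agreement for the agreement expected by chance, which matters in screening because most records are excluded and two reviewers would agree often even if they decided at random.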

Study selection

The following inclusion criteria were used:

Study design: experimental (randomized trials, nonrandomized trials, interrupted time-series, regression discontinuity designs, pre-test post-test designs, removed treatment designs, repeated treatment designs), observational quantitative (cohort, case-control, cross-sectional), qualitative studies (qualitative descriptive studies, grounded theory, ethnography, phenomenology, action research) and mixed-methods studies.

Participants: studies performed with medical students in any discipline.

Intervention: studies describing the use of Novakian CMs.

Language: studies written in English, Portuguese, French, German, or Spanish.

No date limits were applied to the study selection.

Data extraction

Two authors independently extracted data from the studies. The extraction form was piloted in five studies to ensure consistency between reviewers and to improve the form. Differences were resolved through discussion or involvement of a third author until a full consensus was reached. The data extracted included the following (adapted from a BEME coding sheet):

Administrative (search method, authors, title, journal, publication year);

Evaluation methods (type of research, study design, data collection methods);

The study aims (implied/stated, description);

Context (country/city/university, curriculum type, discipline, sample size, inclusion and exclusion criteria, CM’s definition, how CMs were used, resources, teacher training, CMs evaluation);

The study classification according to its purposes of research in medical education (description, justification, clarification) (Cook et al. 2008);

The impact of the intervention (intervention/comparators, Kirkpatrick outcome level (Boet et al. 2012), educational impacts);

Conclusions;

Lessons learned;

The strength of findings;

Overall impression of the article.

Quality assessment

Quality assessment is an important component of a systematic review. However, the lack of a consensus method and the diversity of the included studies make it a challenging task. Two major areas were considered: 1) quality of reporting and 2) quality of study design. The four scales used are described in Supplementary Appendix 2.

For the first point, we used a visual Red-Amber-Green (RAG) ranking system as previously used by Gordon et al. (2018) and Stojan et al. (2022). The areas assessed included underpinning theories, resources, setting, education (pedagogy), and content. Items were judged to be of high quality (green), unclear quality (yellow), or low quality (red). For the second point, we used the three scales described below. The Critical Appraisal Skills Programme (CASP) checklist (CASP Qualitative Checklist, Critical Appraisal Skills Programme 2018) was used as an appraisal tool for qualitative studies. Three sections were considered: section A) “Are the results of the study valid?”; section B) “What are the results?”; section C) “Will the results help locally?”. The Medical Education Research Study Quality Instrument (MERSQI) score for methodological evaluation of medical education studies (Cook and Reed 2015) was used to assess the study design of quantitative research. The MERSQI is a ten-item instrument to assess the methodological quality of experimental, quasi-experimental, and observational medical education studies. The ten items reflect six domains of study quality: study design, sampling, type of data, validity of assessments, data analysis, and outcomes. The total and domain MERSQI scores were calculated. We used the Mixed Methods Appraisal Tool (MMAT) (Hong et al. 2018) for mixed-methods studies. As this tool also allows the appraisal of qualitative and quantitative studies, we applied it to all studies so that every study was assessed with a common tool.

Two authors extracted data from the studies, and differences were resolved through discussion or involvement of a third author until a full consensus was reached. Quality assessment was conducted to describe studies on the use of CMs in undergraduate medical education. For the justification question (what was the impact on critical thinking) and the clarification question (how and why the interventions had an educational impact), no study was excluded from the analysis due to its methodological quality. A lower rating does not necessarily mean lower quality; it may, however, indicate a greater risk that the study is of poor quality.

Synthesis of evidence

A descriptive synthesis was used to summarize data from studies on the use of concept mapping in medical students (“What was done”). This summary describes the results of the research, timing of publication, geographical location, classification of the studies (according to its methodology and purposes of research), settings and description of the use of CMs (including curriculum type, learner level and disciplines, number of participants, type of CMs and other pedagogical tools), Kirkpatrick outcome level, and quality assessment. To answer the justification question “What was the impact of CMs on students’ critical thinking” a synthesis integrating qualitative and quantitative data was performed. Studies with an impact on students’ critical thinking, whose research purposes were justification or justification and clarification, were considered. To answer the clarification question “How and why have these interventions had an educational impact?” an inductive content analysis of the educational impacts of CMs was performed. Direct quotations were extracted from papers concerning the explicit use of theories, limitations, lessons learned, and conclusions. We close the results with a description of the resources required to implement CMs.

Results

Results of the search

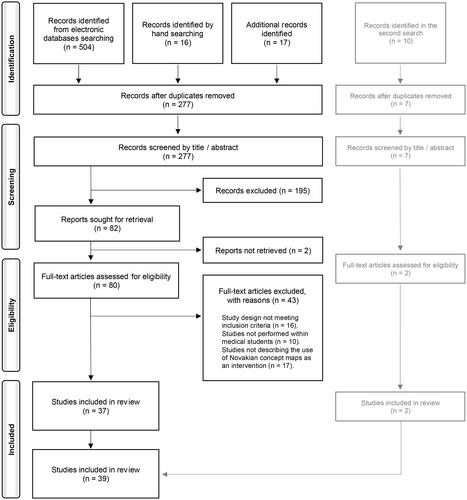

A total of 537 records were identified through electronic database searching, hand searching, and additional research. After the elimination of duplicate entries, 277 records remained. After title and abstract screening, 195 records were excluded, and 82 were selected (Kappa 0.8820). The full text was unavailable for two studies: one was a poster abstract for which the author did not publish more data; the other was a paper published in 2010 that was unavailable in the 5 libraries contacted and whose main author could no longer be contacted due to retirement. Eighty studies underwent full-text assessment for eligibility, following which 43 were excluded. Thirty-seven studies from this literature search and 2 additional studies from the updated search were included in the synthesis. Thirty-two studies were retrieved from the electronic search, 5 from the hand search, and 2 from the additional search. Study identification and selection are summarized in a PRISMA flow diagram (adapted from Page et al. 2021).
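The record counts reported above can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers stated in the text; the variable names are illustrative assumptions, not terms from the review.

```python
# Counts taken from the text of the search results.
identified = 537
after_duplicates = 277
excluded_on_title_abstract = 195
full_text_unavailable = 2
excluded_on_full_text = 43
added_by_updated_search = 2

# Derive each stage of the flow from the previous one.
duplicates_removed = identified - after_duplicates
selected_for_full_text = after_duplicates - excluded_on_title_abstract
assessed_full_text = selected_for_full_text - full_text_unavailable
included_initial = assessed_full_text - excluded_on_full_text
included_total = included_initial + added_by_updated_search

# Included studies broken down by how they were retrieved.
by_source = {"electronic search": 32, "hand search": 5, "additional search": 2}

print(f"Duplicates removed: {duplicates_removed}")
print(f"Selected after screening: {selected_for_full_text}")
print(f"Assessed in full text: {assessed_full_text}")
print(f"Included: {included_total} (by source: {sum(by_source.values())})")
```

Each derived value matches the figure reported in the text (82 selected, 80 assessed, 37 plus 2 included), and the breakdown by retrieval source sums to the same 39 studies.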

Publication date, geographical location, and journal

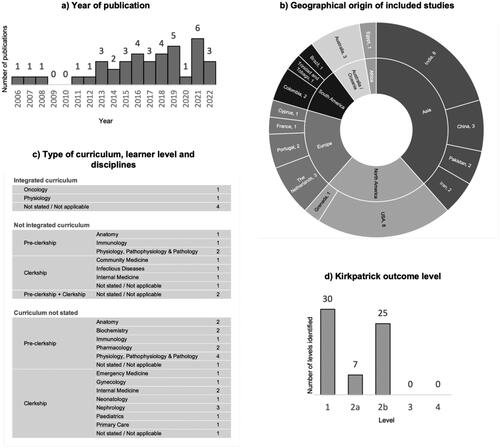

The 39 included studies were published between 2006 and 2022, and 87.2% (n = 34) were published between 2013 and 2022. Publication year and origin by country and continent are shown in the accompanying figures. Most publications were from India and the USA (n = 8, 20.5%, each).

Studies were published in 26 journals, and 19 (48.7%) were published in top-ranked medical education journals according to the impact factor.

Classification of the studies

We found 19 quantitative, 8 qualitative, and 12 mixed-methods studies. Among the quantitative studies, 7 were randomized controlled trials, 1 was a nonrandomized controlled trial, 1 was a randomized cross-over trial, 6 were single-group pre-test and post-test, 1 was a two-group post-test only, and 3 were single-group post-test only.

We identified 74 research purposes in the 39 studies: description was present in 27 (69.2%) studies, justification in 24 (61.5%) studies, and clarification in 23 (59.0%) studies. All the qualitative studies had the purpose of description, and 6 (75.0%) also had clarification, with none whose purpose was justification. In quantitative studies, most had the purpose of justification (n = 17, 89.5%), followed by description (n = 9, 47.4%), and less frequently by clarification (n = 5, 26.3%). All the mixed-methods studies had clarification as a purpose, followed by description (n = 10, 83.3%) and justification (n = 7, 58.3%).
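The breakdown of research purposes by study type can be checked for internal consistency. The sketch below tabulates the counts given in the paragraph above and recomputes the totals and percentages; the dictionary layout and the helper `pct` are illustrative assumptions.

```python
# Counts per study type, taken from the text.
purposes = {
    "qualitative":   {"n": 8,  "description": 8,  "justification": 0,  "clarification": 6},
    "quantitative":  {"n": 19, "description": 9,  "justification": 17, "clarification": 5},
    "mixed-methods": {"n": 12, "description": 10, "justification": 7,  "clarification": 12},
}
purpose_names = ("description", "justification", "clarification")


def pct(count, total):
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * count / total, 1)


total_studies = sum(row["n"] for row in purposes.values())
totals = {p: sum(row[p] for row in purposes.values()) for p in purpose_names}
total_purposes = sum(totals.values())

print(f"Studies: {total_studies}, research purposes: {total_purposes}")
for p in purpose_names:
    print(f"{p}: {totals[p]} ({pct(totals[p], total_studies)}%)")
```

The per-type counts add up to the overall figures in the text: 27, 24, and 23 studies for description, justification, and clarification, for 74 purposes across 39 studies.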

Setting and description of the use of CMs

The studies varied in the type of curriculum, learner level, and disciplines reported. There was no reference to the discipline in 3 of the 6 studies reporting an integrated curriculum, and in one study the CMs were applied to create a student’s portfolio for reflective exercises about learning (Sieben et al. 2021). Ten (25.6%) studies described the use of CMs in a non-integrated curriculum, and 23 (59.0%) studies did not provide enough detail about the curriculum. In these 33 studies, there was no difference regarding the learning level of recruited students (pre-clerkship: n = 16, clerkship: n = 15). The number of participants per study ranged between 6 and 440. In qualitative studies, the number of participants ranged between 6 and 136, and the median was 45 participants, with an interquartile range from 13 to 65. In quantitative studies, the number of participants ranged between 20 and 440, and the median was 75 participants, with an interquartile range from 52 to 122. In two studies, the number of participants was not reported.

Regarding how CMs were used, in 29 studies the students constructed the CMs, in four studies half-filled pre-prepared CMs were used, and in three studies teachers constructed the CMs. In three studies, student-constructed CMs and half-filled CMs were used together. Leng and Gijlers (2015) and Kumar et al. (2011) described half-filled diagrams as a less valuable tool for the more experienced students, who benefited more from drawing diagrams from scratch.

Regarding the evaluation of the CMs built by the students, specific tools were used to score the maps in nine studies (Anantharaman et al. 2019; Bhusnurmath et al. 2017; Brondfield et al. 2021; Choudhari et al. 2021; Ezequiel et al. 2019; Peñuela-Epalza and Hoz 2019; Radwan et al. 2019; Wu et al. 2014; Wu et al. 2016). These tools were quite heterogeneous, and their results were not comparable.

In some studies, CMs were used together with other pedagogical tools. The most used were problem-based learning (PBL) (Addae et al. 2012; Anantharaman et al. 2019; Leng and Gijlers 2015; Rendas et al. 2006; Thomas et al. 2016), case-based learning (CBL) (Fonseca et al. 2020; Peñuela-Epalza and Hoz 2019), and team-based learning (TBL) (Knollmann-Ritschel and Durning 2015). In three articles, the CMs were integrated into a cognitive representation tool with argument maps (Wang et al. 2018; Wu et al. 2014; Wu et al. 2016) and in two studies with a portfolio mapping tool to promote effective reflection (Heeneman et al. 2019; Sieben et al. 2021). In one article, CMs were used together with mind maps (Choudhari et al. 2021), in another with wordclouds (Loizou et al. 2022) and in another with body painting (Bilton et al. 2017). A different context was described in 2 studies (Langer et al. 2021; Richards et al. 2013) in which the CMs were constructed by faculty members in interactive conferences involving tutors, pre-clerkship, and clerkship students.

Some of these studies are pilot interventions that paved the way for subsequent interventions. Examples are Wu et al. (2014, 2016) and Wang et al. (2018); Richards et al. (2013) and Langer et al. (2021); Torre et al. (2007) and Torre, Daley, et al. (2017); and Heeneman et al. (2019) and Sieben et al. (2021).

A table summarizing the key features of the included studies is presented in Supplementary Appendix 3.

Kirkpatrick outcome level

The Kirkpatrick outcome levels of the studies were as follows. Kirkpatrick level 1 (perception/reaction) was documented in 30 (76.9%) studies. Kirkpatrick level 2a (change in attitudes) was documented in 7 (17.9%) studies, and Kirkpatrick level 2b (change in knowledge) in 25 (64.1%) studies. No study reported outcomes at Kirkpatrick level 3 (change in behaviour) or 4 (change in professional practice).

Quality of reporting and study methodology

Regarding the quality of reporting, 10 (25.6%) studies were considered of high quality, which means low risk of bias, in all five domains of the RAG ranking system (Addae et al. 2012; Anantharaman et al. 2019; Fonseca et al. 2020; González et al. 2008; Kumar et al. 2011; Langer et al. 2021; Nath et al. 2021; Rendas et al. 2006; Sieben et al. 2021; Torre et al. 2007). Thirty-six (92.3%) studies had a clear and relevant description of theoretical models or conceptual frameworks that supported their development; 22 (56.4%) studies had a clear description of the cost/time/resources needed for the development; 25 (64.1%) studies had clear details of the educational context and learner characteristics of the study, and also a clear description of relevant educational methods employed to support delivery; and 21 (53.8%) studies provided detailed materials or details of access. Five (12.8%) studies were considered of low quality, with a high risk of bias in at least 1 of the 5 domains (Baig et al. 2016; Bala et al. 2016; Bixler et al. 2015; Choudry et al. 2019; Surapaneni and Tekian 2013).

Regarding the study design quality, the CASP checklist was applied to the eight qualitative studies. In question C10 (How valuable is the research?), one study had an excellent value, four had a very good value, and three had a good value. The MERSQI score was applied to the 19 quantitative studies. Scores ranged from 9 to 14.5, with a median of 12.6. MMAT was applied to all 39 studies. Two articles received a negative answer to screening question 2 and therefore could not be appraised further against the five criteria. The results of the quality assessment are presented in Supplementary Appendix 3.

The impact on students’ critical thinking

The synthesis performed to answer the justification question “What was the impact of CMs on students’ critical thinking” integrates qualitative and quantitative data. Table 1 displays the 18 studies that provided results directly related to critical thinking, whose research purposes were justification or justification and clarification, stratified by Kirkpatrick outcome level. This is not a comprehensive summary of all these studies’ results, as we only mention results that relate to critical thinking and address a justification purpose.

Table 1. Results of the studies with impact on students’ critical thinking, whose research purposes were justification or justification and clarification.

Of the 18 studies, 9 evaluated students’ perceptions and reactions (Kirkpatrick level 1), mainly through Likert-scale questionnaires. Only a few studies used qualitative methods to obtain more comprehensive information about students’ views on using CMs to develop critical thinking skills. Overall, the general findings were positive, indicating that students saw CMs as a valuable means of promoting critical thinking. Veronese et al. (2013) also conducted tutor interviews as part of their evaluation (Kirkpatrick level 2a). Thirteen studies evaluated knowledge and/or skills (Kirkpatrick level 2b). Some studies used critical thinking scales, while others assessed knowledge with multiple-choice questions, open-answer questions, problem-solving questions, and CM scoring. Due to the diversity of instruments applied and the heterogeneity of settings using CMs, no direct comparisons could be made. Nevertheless, most of these studies found statistically significant positive results in critical thinking, except for Bixler et al. (2015), who found similar scores on the California Critical Thinking Skills Test before and after the use of CMs. Bixler et al. (2015) attributed their results to the insufficient duration of the intervention, which did not allow the development and consolidation of such complex thinking processes and skills.

Educational impacts of interventions

An inductive content analysis of the educational impacts of CMs was performed to answer the clarification question “How and why have these interventions had an educational impact?”. Table 2 lists all the educational impacts of CMs described in the 39 selected studies, and in Supplementary Appendix 4 they are listed according to each study. We organized the educational impacts identified into the three main themes described by Torre et al. (2007): CMs can facilitate the integration of knowledge and critical thinking (n = 31, 79.5%), and can be used as a learning method (n = 31, 79.5%) and as a teaching method (n = 20, 51.3%).

Table 2. Educational impacts of CMs on undergraduate medical students.

Twenty-two (56.4%) studies stated that CMs promote critical thinking skills. Seventeen of these studies were described in the previous section and are shown in Table 1. The other five studies addressed research purposes of description (Peñuela-Epalza and Hoz 2019; Radwan et al. 2019) and clarification (Richards et al. 2013; Sannathimmappa et al. 2022; Torre et al. 2007). CMs were described in 13 (33.3%) studies as a support for clinical reasoning, in 10 (25.6%) studies as promoting the integration of basic sciences and clinical concepts, and in 9 (23.1%) studies as a visual representation of knowledge. There was also reference to their role in enhancing problem-solving ability, in facilitating information synthesis, and as a reflective tool.

As a learning method, CMs stimulated knowledge acquisition (n = 19, 48.7%), allowed better assessment performance (n = 16, 41.0%), and promoted meaningful and deep learning (n = 15, 38.5% and n = 11, 28.2%, respectively). They also allowed knowledge gaps to be identified and knowledge to be constructed progressively. Brondfield et al. (2021) described some strategies to expand the benefit of using CMs and maximize their impact: introducing serial CMs earlier in the curriculum, iterative practice and regular tutor feedback, and developing CMs in collaboration with other students. Periods of particularly high academic demand should be avoided.

Finally, studies reported the role of CMs as a teaching tool (n = 13, 33.3%), as a stimulus for collaborative learning (n = 8, 20.5%), and as an assessment tool (n = 4, 10.3%). Regarding their use as a teaching tool, Peñuela-Epalza and Hoz (2019) and Torre et al. (2007) highlighted two features as essential for the success of the activity: dynamic interaction between students and teachers while students build their CMs, and ongoing teacher feedback. The contexts were different in the four studies in which the CMs were used as an evaluation tool (Brondfield et al. 2021; Knollmann-Ritschel and Durning 2015; Loizou et al. 2022; Radwan et al. 2019). The students built their maps in all of them, mostly as individual tasks but sometimes in groups and using other pedagogical tools. Brondfield et al. (2021) highlighted that concept mapping was a feasible and reliable assessment tool, but additional validity evidence may improve its utility. Radwan et al. (2019) concluded that the significant correlation between 6th-year medical students’ ability to construct CMs and their clinical reasoning skills was a starting point for the application of CMs as an assessment tool for evaluating clinical reasoning skills.

Resources required to implement CMs

Most studies did not make direct reference to the resources. The need for student training was stated in several references as a prerequisite for the successful implementation of CMs. These sessions varied between studies. Some groups described them as sensitization sessions, where detailed instructions were given and pre-prepared maps were used. In other studies, an introductory video was created, which included sample maps for students’ reference, or explanatory written documents were given to students (Anand et al. 2018; Aslami et al. 2021; Brondfield et al. 2021; Choudhari et al. 2021; Bixler et al. 2015; Ezequiel et al. 2019; Fonseca et al. 2020). Using CMs mostly required curricular time allocated for this purpose (Anand et al. 2018; Ben-Haddour et al. 2022). Brondfield et al. (2021) stated that these sessions usually occurred in extra time. Some articles referred to teacher training, which took the form of prior sessions specifically oriented to the use of CMs or of a recommended bibliography on CMs (Bhusnurmath et al. 2017; Fonseca et al. 2020; Nath et al. 2021; Veronese et al. 2013). The teacher’s role was also mentioned as a key factor, both as a facilitator and guide and as a provider of feedback to the student (Surapaneni and Tekian 2013).

Regarding the support used to build the CMs, there were references to specific software in 18 (46.2%) studies. CmapTools software was used in eight studies, a specific electronic web-based platform was used in two studies, and a specific E-learning system was used in two studies. The other articles referenced other software such as Inspiration, Gephi, cognitive mapping software, and SMART Ideas. In 7 (17.9%) articles, the support was paper and pen or whiteboard. The support was not mentioned in 18 (46.2%) articles.

Discussion

CMs were described by Novak more than 30 years ago and are widely used in health sciences (Daley and Torre 2010). Specifically applied to undergraduate medical students, 39 primary studies have been published since 2006, mostly in the USA and India. CMs have been used in medical schools with an integrated curriculum and in those with a non-integrated curriculum. Curriculum information was not provided in some studies; based on the description of the context, these likely corresponded to medical schools without an integrated curriculum. The number of studies that used CMs in the pre-clerkship and clerkship groups was similar. It is interesting to note that CMs were used at almost all learning levels and in different disciplines with different types of curricula, underscoring the plasticity of CMs as a tool that can be used in a wide variety of settings. In some of the studies, other educational strategies were used in conjunction with CMs, mostly PBL, CBL, and TBL. The strategies were well aligned and produced good results, but their combined use makes it difficult to assess the actual weight of each strategy individually.

Constructing CMs requires that students learn the tool itself, so it is important that they learn how to build CMs beforehand. This tool development process takes time, just like any other pedagogical strategy. Appropriate software can also improve the user experience, and the use of half-filled pre-prepared maps is another strategy often used to reduce the amount of time students spend and avoid the perception that maps may be time-consuming (Baliga et al. 2021; Heeneman et al. 2019; Veronese et al. 2013). Initial resistance often gives way to widespread acceptance as students come to appreciate the benefits of CMs (Veronese et al. 2013). However, as a learning method, CMs may benefit some learners and not others, depending on students’ learning preferences, as Torre et al. (2007) stated.

Also noteworthy is the great heterogeneity in the visual representation of the CMs. Although the presented definition of CMs met the inclusion criteria of this review, the diagrams described in some studies were less Novakian and more flexible, sometimes evolving into other layouts with a higher degree of complexity (Wang et al. 2018; Wu et al. 2014; Wu et al. 2016). In two studies, this greater graphic flexibility was also associated with the goal of promoting effective reflection by CMs (Heeneman et al. 2019; Sieben et al. 2021). In some other studies, the diagrams were not presented, not even in supplementary material, which made it difficult to analyze the data and affected comparisons across studies.

As with students, teacher training is seen as a key factor in maximizing the potential of CMs in teaching. As a graphical representation, CMs enable teachers to observe gradual knowledge acquisition as well as student difficulties, knowledge gaps, and misconceptions, which makes them important teaching tools. CMs were also described as tools that improved collaborative learning. Collaborative concept mapping has also been linked to the potential benefits of an active learning approach that stimulates peer feedback and inquisitive competencies (Bixler et al. 2015; Richards et al. 2013; Torre, Daley, et al. 2017).

Regarding the assessment of learning outcomes, some studies have scored CMs. No consensus has yet been reached on these scoring tools, and their results are not comparable. Although work has been published in this area (West et al. 2000; Torre, Durning, et al. 2017), no validated scores can be extrapolated to the different uses of CMs.

The impact of CMs on medical teaching and learning reported in the studies included in this review is similar to that reported in the recent AMEE Guide No. 157 (Torre, German, et al. 2023). In this publication, Torre, German, et al. (2023) highlighted that CMs are useful tools in teaching and learning because they also encourage peer teaching and allow learners to both view and reflect on their knowledge structures, fostering metacognition and critical reflection. CMs can accompany students’ progress, enabling a graphical representation of knowledge. Their ability to visually organize and connect knowledge allows learners to identify connections between ideas, deepen comprehension, and develop critical thinking skills. By providing a structure of ideas and concepts, CMs act as a scaffold that facilitates the understanding of complex topics and ideas (Choudhari et al. 2021; Leng and Gijlers 2015; Wang et al. 2018; Wu et al. 2016) and supports the development of higher metacognitive functioning (Thomas et al. 2016). In this review, we found relevant studies that stated a positive effect of CMs on critical thinking, both in terms of students’ and teachers’ perceptions and in terms of quantitative outcomes. However, it became clear that critical thinking remained difficult to measure, largely because the concept is hard to define objectively and because mental processes and attitudes are inherently hard to measure. On the other hand, as stated by Huang et al. (2014), to assess attitudes toward critical thinking, teachers may use a range of inventories in addition to direct observation of the learner. Thus, no direct comparisons could be made due to the diversity of the instruments applied and the heterogeneity of the settings using CMs. Nevertheless, this review found a clear relationship between CMs and critical thinking.

CMs are pedagogical tools that stimulate higher metacognitive functioning in medical students, allowing them to connect basic sciences with clinical concepts at any stage of learning through a visual representation of knowledge, thus promoting reflective processes and clinical reasoning. Torre, Chamberland, et al. (2023) also mentioned the concept mapping exercise as one of the clinical reasoning teaching tools in the AMEE Guide No. 150. As stated by Demeester et al. (2010) in a review of CMs as a strategy to learn clinical reasoning, CMs can help students summarize clinical cases or plan a course of action, helping them develop critical thinking in an analytical form and progress towards the competence of clinical reasoning.

Quality of reporting and study methodology

The broad scope and diversity of the studies under analysis allowed for collecting data related to the specific use of CMs in different contexts. Overall, the studies had a good quality of reporting, particularly regarding the description of theoretical models or conceptual frameworks that supported their development. High-quality studies stand out for their clarity and detail, which allows a better understanding of the specific context reported. On the other hand, better reporting of relevant educational methods and the provision of more detailed materials (or details of access) would be desirable to reduce the risk of bias and ensure the reproducibility of the interventions.

Regarding the study methodology, qualitative studies had good to excellent quality when assessed by the CASP tool and MMAT. In some, better reporting of participant recruitment, data collection, participant-researcher relationship, and data analysis could have helped make their findings more robust. In the MERSQI score, the main discrepancy between the quantitative studies was related to the study design. The randomized controlled trials, the nonrandomized controlled trial, and the randomized cross-over trial had better scores in domain I. Regarding domain VI, the maximum value obtained was 1.5 due to Kirkpatrick level 2 being the highest. Regarding the mixed-methods studies, the weaknesses identified by the MMAT were the inappropriate use of the mixed methodology, the ineffective integration of the components, and the way of dealing with divergences between the data. Given the heterogeneity in the use of CMs, it is crucial to provide a clear and comprehensive description of how CMs were used, this being the point of improvement in studies whose quality assessment was lower. We expect that this assessment will guide the reader: the methodology adopted in each intervention and how it is written may affect its reproducibility.

Strengths and limitations

This work corresponds to a mixed-methods systematic review carried out following the guidelines of BEME. The search strategy was robust, carried out in several databases, and conducted in collaboration with a medical librarian. A search update was conducted to keep the results current. The process of selecting articles, extracting data, and quality assessment was carried out independently by two authors.

The main limitation of this work is related to the difficulty in defining critical thinking and its related constructs, such as diagnostic reasoning, problem-solving, and clinical reasoning. Our understanding of clinical reasoning (Young et al. 2020) and the working definition of critical thinking (Huang et al. 2014) helped us make sense of the data. However, the working definition of these concepts was not always clear in primary studies, which led to difficulties in interpreting and synthesizing some results.

Another limitation relates to how the mixed-methods review could be conducted. The translation of the results of the quantitative synthesis into qualitative themes, or vice versa, was not possible due to the heterogeneous use of CMs in the different articles. In some studies, CMs were the intervention and were assessed by other tools. In other studies, they were the tool used to evaluate the intervention itself, which in some contexts involved simultaneously using other educational tools.

Implications for practice

How CMs are introduced in the undergraduate medical student curriculum may dictate the success of their use. CMs should be a thoughtful tool made available for many disciplines of undergraduate medical education, preferably at the beginning of the course, to allow for continuous integration of basic sciences and clinical concepts. Introducing them in the early years allows CMs to be revisited and improved later. CMs should be carried out serially and in significant numbers, since it has been documented that an increase in student performance correlates with the number and quality of CMs used in the teaching and learning sessions (Bhusnurmath et al. 2017). The possibility for students to improve their CMs over time is positive and promotes critical thinking skills. Construction and discussion of CMs in a group can be beneficial in certain situations. Clear guidelines for CM construction should be given to students at the beginning of the task. The use of complementary pedagogical strategies can also be positive; however, their application should be adapted to the context and used strategically to respond to the purpose of their use (Torre et al. 2007). The time for construction should be safeguarded to avoid colliding with other priority demands or clinical activities (Ben-Haddour et al. 2022) and to prevent a reduction in student motivation. Resources and strategies should be implemented to reduce the perception of CM construction as a time-consuming activity, such as using specific, user-friendly software with prior training sessions (Torre, Daley, et al. 2017). Alternatively, starting with half-filled pre-prepared maps and gradually increasing the degree of autonomy in their construction as students evolve can also be an option. The role of the teacher as a facilitator, motivator, and guide, who must also be prepared, is fundamental in giving feedback to students.

Implications for research

From a research perspective, several studies point out the need to include a control group, more participants, and randomization to allow for the generalization of results and to abolish the selection bias of voluntary participants. The non-contamination of the intervention and control groups is also an important point, as is the non-contamination from other educational tools used simultaneously.

It would be interesting to extrapolate to other disciplines and systematically conduct multicentre studies (Torre et al. 2007) involving collaborative learning. As mentioned by González et al. (2008), longitudinal evaluation may allow for drawing conclusions on the impact of the intervention on the permanence of meaningful learning and the ability to transfer knowledge to other and more complex contexts. This could be studied by tracking the student participants through subsequent semesters.

Many studies present only Kirkpatrick outcome level 1, and their conclusions rely only on the perceptions of students and sometimes tutors. It is useful, as is done in some studies, to compare these perceptions with other measures of student performance. One suggestion for future studies is to analyze the connection with other knowledge or clinical skills measurements such as USMLE or OSCEs (Torre et al. 2007). Measuring changes in the behaviour of subjects and/or changes in professional practice remains to be done.

The scoring of CMs continues to be an area to be further explored to allow their use as an assessment tool. Clear evidence of reliability and validity is still necessary (Wang et al. 2018).

Conclusions

CMs are teaching and learning tools which seem to help medical students develop critical thinking. This is due to the flexibility of the tool as a facilitator of knowledge integration, as a learning and teaching method. The wide range of contexts, purposes, and variations in how CMs and instruments to assess critical thinking are used increases our confidence that the positive effects are consistent. At the same time, this large variation makes it unclear how large the effect of CMs on critical thinking is.

We encourage medical education professionals to explicitly mention all outcomes, including relevant educational theories, so they can serve as a scaffold for debating and acquiring new knowledge. Careful reporting of sound educational innovations is essential for reproducibility and advancement of scientific knowledge.

Acknowledgements

The authors would like to thank Teresa Costa (PhD, Head Medical Librarian, NOVA Medical School, Portugal) and Susana Oliveira Henriques (Medical Librarian, Center for Information and Documentation of the University of Lisbon School of Medicine, Portugal) for their expert contribution to the search strategy and assistance in executing the database search.

The authors would also like to thank the reviewers for their comments and suggestions, which helped improve the manuscript to its final version.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Additional information

Notes on contributors

Marta Fonseca

Marta Fonseca, MD, is a Pathophysiology Lecturer and a PhD student at NOVA Medical School, where she has been teaching Pathophysiology to medical students using problem-based learning and concept maps since 2002. She is also a board-certified practicing Family Physician.

Pedro Marvão

Pedro Marvão, PhD, is an Assistant Professor of Physiology and Head of the Office of Education. He was involved in setting up and running an integrated medical curriculum taught with problem-based learning from 2009 to 2016. He is presently leading a major overhaul of the NOVA Medical School curriculum.

Beatriz Oliveira

Beatriz Oliveira, MD, is a Urology Resident at Centro Hospitalar Universitário de Santo António and has collaborated with the Pathophysiology Department of NOVA Medical School since 2017, as a medical student and as a Pathophysiology Lecturer, on the application of concept maps in medical learning.

Bruno Heleno

Bruno Heleno, MD, PhD, is an Assistant Professor of Family Medicine and Family Physician. He has been teaching clinical reasoning since 2016. He has published two systematic reviews and three scoping reviews.

Pedro Carreiro-Martins

Pedro Carreiro-Martins, MD, PhD, is an Assistant Professor of Pathophysiology and a consultant in Allergy and Clinical Immunology. For the last 15 years, he has taught Pathophysiology to medical students using problem-based learning and concept maps.

Nuno Neuparth

Nuno Neuparth, MD, PhD, is Vice-Dean, President of the Pedagogical Council, Full Professor of Pathophysiology, and Head of the Department. He is a clinician specialized in Immunoallergology with a main interest in respiratory pathophysiology. He has been teaching pathophysiology to medical students for the last 40 years, using problem-based learning and concept maps.

António Rendas

António Rendas, MD, PhD, is Emeritus Professor of Pathophysiology. After his PhD in Experimental Pathology at the University of London, Cardiothoracic Institute, Brompton Hospital, he founded the Department of Pathophysiology at NOVA Medical School, committed to teaching and learning methods, and introduced problem-based learning and concept mapping in the undergraduate medical curriculum in Portugal.

Notes

1 b-on, being a national consortium, provides a discovery service based on EBSCO's Discovery Service (EDS) that allows simultaneous research in several resources such as, for example, 1) the Freedom Collection by Elsevier; 2) the periodical collection from Springer, Wiley, Taylor & Francis, and Sage; and 3) the databases Academic Search Complete and Eric from EBSCO.

References

- Addae JI, Wilson JI, Carrington C. 2012. Students’ perception of a modified form of PBL using concept mapping. Med Teach. 34(11):e756–e762. doi: 10.3109/0142159X.2012.689440.

- Anand MK, Singh O, Chhabra PK. 2018. Learning with concept maps versus learning with classical lecture and demonstration methods in Neuroanatomy - A comparison. Natl J Clin Anat. 7(02):95–102. doi: 10.1055/s-0040-1701785.

- Anantharaman LT, Shankar N, Rao M, Pauline M, Nithyanandam S, Lewin S, Stephen J. 2019. Effect of two-month problem-based learning course on self-directed and conceptual learning among second year students in an Indian medical college. JCDR. 13(5):5–10. doi: 10.7860/JCDR/2019/40969.12874.

- Aslami M, Dehghani M, Shakurnia A, Ramezani G, Kojuri J. 2021. Effect of concept mapping education on critical thinking skills of medical students: a quasi-experimental study. Ethiop J Health Sci. 31(2):409–418. doi: 10.4314/ejhs.v31i2.24.

- Ausubel DP. 2000. The acquisition and retention of knowledge: a cognitive view. Boston, MA: Kluwer. doi: 10.1007/978-94-015-9454-7.

- Baig M, Tariq S, Rehman R, Ali S, Gazzaz ZJ. 2016. Concept mapping improves academic performance in problem solving questions in biochemistry subject. Pak J Med Sci. 32(4):801–805. doi: 10.12669/pjms.324.10432.

- Bala S, Dhasmana D, Kalra J, Kohli S, Sharma T. 2016. Role of concept map in teaching general awareness and pharmacotherapy of HIV/AIDS among second professional medical students. Indian J Pharmacol. 48(Suppl 1):S37–S40. doi: 10.4103/0253-7613.193323.

- Baliga SS, Walvekar PRW, Mahantshetti GJ. 2021. Concept map as a teaching and learning tool for medical students. J Educ Health Promot. 10:35. doi: 10.4103/jehp.jehp_146_20.

- Ben-Haddour M, Roussel M, Demeester A. 2022. Étude Exploratoire De L’Utilisation Des Cartes Conceptuelles Pour Le Développement Du Raisonnement Clinique Des Étudiants De Deuxième Cycle En Stage Dans Un Service D’Urgence [exploratory study of the use of concept maps for the development of clinical reasoning in graduate students practicing in an emergency Department]. Ann Fr Med Urgence. 12(5):285–293. doi: 10.3166/afmu-2022-0423.

- Bhusnurmath S, Bhusnurmath B, Goyal S, Hafeez S, Abugroun A, Okpe J. 2017. Concept map as an adjunct tool to teach pathology. Indian J Pathol Microbiol. 60(2):226–231. doi: 10.4103/0377-4929.208410.

- Bilton N, Rae J, Logan P, Maynard G. 2017. Concept mapping in health sciences education: conceptualizing and testing a novel technique for the assessment of learning in anatomy [version 1]. MedEdPublish. 6:131. doi: 10.15694/mep.2017.000131.

- Bixler GM, Brown A, Way D, Ledford C, Mahan JD. 2015. Collaborative concept mapping and critical thinking in fourth-year medical students. Clin Pediatr. 54(9):833–839. doi: 10.1177/0009922815590223.

- Boet S, Sharma S, Goldman J, Reeves S. 2012. Review article: medical education research: an overview of methods. Can J Anaesth. 59(2):159–170. doi: 10.1007/s12630-011-9635-y.

- Brentnall J, Thackray D, Judd B. 2022. Evaluating the clinical reasoning of student health professionals in placement and simulation settings: a systematic review. IJERPH. 19(2):936. doi: 10.3390/ijerph19020936.

- Brondfield S, Seol A, Hyland K, Teherani A, Hsu G. 2021. Integrating concept maps into a medical student Oncology curriculum. J Cancer Educ. 36(1):85–91. doi: 10.1007/s13187-019-01601-7.

- Choudhari SG, Gaidhane AM, Desai P, Srivastava T, Mishra V, Zahiruddin SQ. 2021. Applying visual mapping techniques to promote learning in community-based medical education activities. BMC Med Educ. 21(1):210. doi: 10.1186/s12909-021-02646-3.

- Choudry A, Shukr I, Sabir S. 2019. Do concept maps enhance deep learning in medical students studying puberty disorders? Pak Armed Forces Med J. 69(5):959–964.

- Crandell JL, Voils CI, Chang YK, Sandelowski M. 2011. Bayesian data augmentation methods for the synthesis of qualitative and quantitative research findings. Qual Quant. 45(3):653–669. doi: 10.1007/s11135-010-9375-z.

- Critical Appraisal Skills Programme. 2018. CASP qualitative checklist. Online. Available from: http://www.casp-uk.net/casp-tools-checklists. Accessed: 19/04/2022.

- Cook DA, Bordage G, Schmidt HG. 2008. Description, justification and clarification: a framework for classifying the purposes of research in medical education. Med Educ. 42(2):128–133. doi: 10.1111/j.1365-2923.2007.02974.x.

- Cook DA, Reed DA. 2015. Appraising the quality of medical education research methods: the medical education research study quality instrument and the newcastle-ottawa scale-education. Acad Med. 90(8):1067–1076. doi: 10.1097/ACM.0000000000000786.

- Daley BJ, Durning SJ, Torre DM. 2016. Using concept maps to create meaningful learning in medical education. MedEdPublish. 5(1):19. doi: 10.15694/mep.2016.000019.

- Daley BJ, Torre DM. 2010. Concept maps in medical education: an analytical literature review. Med Educ. 44(5):440–448. doi: 10.1111/j.1365-2923.2010.03628.x.

- Davies M. 2011. Concept mapping, mind mapping and argument mapping: what are the differences and do they matter? High Educ. 62(3):279–301. doi: 10.1007/s10734-010-9387-6.

- Demeester A, Vanpee D, Marchand C, Eymard C. 2010. Formation au raisonnement clinique: perspectives d’utilisation des cartes conceptuelles [Clinical reasoning training: perspectives on using concept maps]. Pédagogie Médicale. 11(2):81–95. doi: 10.1051/pmed/2010013.

- Ezequiel OS, Tibiriçá SHC, Moutinho ILD, Lucchetti ALG, Lucchetti G, Grosseman S, Marcondes-Carvalho P Jr. 2019. Medical students’ critical thinking assessment with collaborative concept maps in a blended educational strategy. Educ Health. 32(3):127–130. doi: 10.4103/efh.EfH_306_15.

- Fonseca M, Oliveira B, Carreiro-Martins P, Neuparth N, Rendas A. 2020. Revisiting the role of concept mapping in teaching and learning pathophysiology for medical students. Adv Physiol Educ. 44(3):475–481. doi: 10.1152/advan.00020.2020.

- González HL, Palencia AP, Umaña LA, Galindo L, Villafrade LA. 2008. Mediated learning experience and concept maps: a pedagogical tool for achieving meaningful learning in medical physiology students. Adv Physiol Educ. 32(4):312–316. doi: 10.1152/advan.00021.2007.

- Gordon M, Gibbs T. 2014. STORIES statement: publication standards for healthcare education evidence synthesis. BMC Med. 12(1):143. doi: 10.1186/s12916-014-0143-0.

- Gordon M, Hill E, Stojan JN, Daniel M. 2018. Educational interventions to improve handover in health care: an updated systematic review. Acad Med. 93(8):1234–1244. doi: 10.1097/ACM.0000000000002236.

- Hammick M, Dornan T, Steinert Y. 2010. Conducting a best evidence systematic review. Part 1: from idea to data coding. BEME Guide No. 13. Med Teach. 32(1):3–15. doi: 10.3109/01421590903414245.

- Heeneman S, Driessen E, Durning SJ, Torre D. 2019. Use of an e-portfolio mapping tool: connecting experiences, analysis and action by learners. Perspect Med Educ. 8(3):197–200. doi: 10.1007/s40037-019-0514-5.

- Hong QN, Pluye P, Fàbregues S, Bartlett G, Boardman F, Cargo M, Dagenais P, Gagnon M-P, Griffiths F, Nicolau B, et al. 2018. Mixed Methods Appraisal Tool (MMAT), version 2018. Registration of Copyright (#1148552), Canadian Intellectual Property Office, Industry Canada. [accessed 2022 Apr 19]. http://mixedmethodsappraisaltoolpublic.pbworks.com/w/file/fetch/127916259/MMAT_2018_criteria-manual_2018-08-01_ENG.pdf.

- Huang GC, Newman LR, Schwartzstein RM. 2014. Critical thinking in Health Professions Education: summary and consensus statements of the millennium conference 2011. Teach Learn Med. 26(1):95–102. doi: 10.1080/10401334.2013.857335.

- Kinchin IM. 2008. The qualitative analysis of concept maps: some unforeseen consequences and emerging opportunities. Proceedings of the Third International Conference on Concept Mapping; June 1–8; Tallinn, Estonia & Helsinki, Finland.

- Knollmann-Ritschel BEC, Durning SJ. 2015. Using concept maps in a modified team-based learning exercise. Mil Med. 180(4 Suppl):64–70. doi: 10.7205/MILMED-D-14-00568.

- Kumar S, Dee F, Kumar R, Velan G. 2011. Benefits of testable concept maps for learning about pathogenesis of disease. Teach Learn Med. 23(2):137–143. doi: 10.1080/10401334.2011.561700.

- Langer AL, Block BL, Schwartzstein RM, Richards JB. 2021. Building upon the foundational science curriculum with physiology-based grand rounds: a multi-institutional program evaluation. Med Educ Online. 26(1):1937908. doi: 10.1080/10872981.2021.1937908.

- Leng BD, Gijlers H. 2015. Collaborative diagramming during problem based learning in medical education: do computerized diagrams support basic science knowledge construction? Med Teach. 37(5):450–456. doi: 10.3109/0142159X.2014.956053.

- Loizou S, Nicolaou N, Pincus BA, Papageorgiou A, McCrorie P. 2022. Concept maps as a novel assessment tool in medical education [version 3]. MedEdPublish. 12:21. doi: 10.12688/mep.19036.3.

- Nath S, Bhattacharyya S, Preetinanda P. 2021. Perception of students and faculties towards implementation of concept mapping in Pharmacology: a cross-sectional interventional study. JCDR. 15(4):8–13. doi: 10.7860/JCDR/2021/48561.14797.

- Novak JD. 2003. The promise of new ideas and new technology for improving teaching and learning. Cell Biol Educ. 2(2):122–132. doi: 10.1187/cbe.02-11-0059.

- Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, et al. 2021. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 372:n71. doi: 10.1136/bmj.n71.

- Peñuela-Epalza M, De la Hoz K. 2019. Incorporation and evaluation of serial concept maps for vertical integration and clinical reasoning in case-based learning tutorials: perspectives of students beginning clinical medicine. Med Teach. 41(4):433–440. doi: 10.1080/0142159X.2018.1487046.

- Pierce C, Corral J, Aagaard E, Harnke B, Irby DM, Stickrath C. 2020. A BEME realist synthesis review of the effectiveness of teaching strategies used in the clinical setting on the development of clinical skills among health professionals: BEME Guide No. 61. Med Teach. 42(6):604–615. doi: 10.1080/0142159X.2019.1708294.

- Pinto AJ, Zeitz HJ. 1997. Concept mapping: a strategy for promoting meaningful learning in medical education. Med Teach. 19(2):114–121. doi: 10.3109/01421599709019363.

- Radwan A, Abdel Nasser A, El Araby S, Talaat W. 2019. Correlation between assessment of concept maps construction and the clinical reasoning ability of final year medical students at the faculty of medicine, Suez Canal University, Egypt. EIMJ. 10(4):43–51. doi: 10.21315/eimj2018.10.4.5.

- Rendas AB, Fonseca M, Pinto PR. 2006. Toward meaningful learning in undergraduate medical education using concept maps in a PBL pathophysiology course. Adv Physiol Educ. 30(1):23–29. doi: 10.1152/advan.00036.2005.

- Richards J, Schwartzstein R, Irish J, Almeida J, Roberts D. 2013. Clinical physiology grand rounds. Clin Teach. 10(2):88–93. doi: 10.1111/j.1743-498X.2012.00614.x.

- Saeidifard F, Heidari K, Foroughi M, Soltani A. 2014. Concept mapping as a method to teach an evidence-based educated medical topic: a comparative study in medical students. J Diabetes Metab Disord. 13(1):86. doi: 10.1186/s40200-014-0086-1.

- Sannathimmappa MB, Nambiar V, Aravindakshan R. 2022. Concept maps in immunology: a metacognitive tool to promote collaborative and meaningful learning among undergraduate medical students. J Adv Med Educ Prof. 10(3):172–178. doi: 10.30476/JAMP.2022.94275.1576.

- Sieben JM, Heeneman S, Verheggen MM, Driessen EW. 2021. Can concept mapping support the quality of reflections made by undergraduate medical students? A mixed method study. Med Teach. 43(4):388–396. doi: 10.1080/0142159X.2020.1834081.

- Stojan J, Haas M, Thammasitboon S, Lander L, Evans S, Pawlik C, Pawilkowska T, Lew M, Khamees D, Peterson W, et al. 2021. Online learning developments in undergraduate medical education in response to the COVID-19 pandemic: a BEME systematic review: BEME Guide No. 69. Med Teach. 44(2):109–129. doi: 10.1080/0142159X.2021.1992373.

- Surapaneni KM, Tekian A. 2013. Concept mapping enhances learning of biochemistry. Med Educ Online. 18(1):1–4. doi: 10.3402/meo.v18i0.20157.

- Swanwick T, Forrest K, O’Brien BC. 2019. Understanding medical education: evidence, theory, and practice. Hoboken, NJ: Wiley-Blackwell.

- Thomas L, Bennett S, Lockyer L. 2016. Using concept maps and goal-setting to support the development of self-regulated learning in a problem-based learning curriculum. Med Teach. 38(9):930–935. doi: 10.3109/0142159X.2015.1132408.

- Torre D, Chamberland M, Mamede S. 2023. Implementation of three knowledge-oriented instructional strategies to teach clinical reasoning: self-explanation, a concept mapping exercise, and deliberate reflection: AMEE Guide No. 150. Med Teach. 45(7):676–684. doi: 10.1080/0142159X.2022.2105200.

- Torre D, German D, Daley B, Taylor D. 2023. Concept mapping: An aid to teaching and learning: AMEE Guide No. 157. Med Teach. 45(5):455–463. doi: 10.1080/0142159X.2023.2182176.

- Torre D, Daley BJ, Picho K, Durning SJ. 2017. Group concept mapping: An approach to explore group knowledge organization and collaborative learning in senior medical students. Med Teach. 39(10):1051–1056. doi: 10.1080/0142159X.2017.1342030.

- Torre DM, Daley B, Stark-Schweitzer T, Siddartha S, Petkova J, Ziebert M. 2007. A qualitative evaluation of medical student learning with concept maps. Med Teach. 29(9):949–955. doi: 10.1080/01421590701689506.

- Torre DM, Durning SJ, Daley BJ. 2017. Concept maps: definition, structure, and scoring. Acad Med. 92(12):1802–1802. doi: 10.1097/ACM.0000000000001969.

- Veronese C, Richards JB, Pernar L, Sullivan AM, Schwartzstein RM. 2013. A randomized pilot study of the use of concept maps to enhance problem-based learning among first-year medical students. Med Teach. 35(9):e1478–e1484. doi: 10.3109/0142159X.2013.785628.

- Wang M, Wu B, Kirschner PA, Spector JM. 2018. Using cognitive mapping to foster deeper learning with complex problems in a computer-based environment. Comput Human Behav. 87:450–458. doi: 10.1016/j.chb.2018.01.024.

- West DC, Pomeroy JR, Park JK, Gerstenberger EA, Sandoval J. 2000. Critical thinking in graduate medical education. A role for concept mapping assessment? JAMA. 284(9):1105–1110.

- Wu B, Wang M, Grotzer TA, Liu J, Johnson JM. 2016. Visualizing complex processes using a cognitive-mapping tool to support the learning of clinical reasoning. BMC Med Educ. 16(1):216. doi: 10.1186/s12909-016-0734-x.

- Wu B, Wang M, Johnson JM, Grotzer TA, Lazarus CJ. 2014. Improving the learning of clinical reasoning through computer-based cognitive representation. Med Educ Online. 19(1):25940. doi: 10.3402/meo.v19.25940.

- Young ME, Thomas A, Lubarsky S, Gordon D, Gruppen LD, Rencic J, Ballard T, Holmboe E, Silva AD, Ratcliffe T, et al. 2020. Mapping clinical reasoning literature across the health professions: A scoping review. BMC Med Educ. 20(1):107. doi: 10.1186/s12909-020-02012-9.