ABSTRACT

This paper describes the Centers of Excellence in Primary Care Education (CoEPCE), a seven-site collaborative project funded by the Office of Academic Affiliations (OAA) within the Veterans Health Administration of the United States Department of Veterans Affairs (VA). The CoEPCE was established to fulfill OAA’s vision of large-scale transformation of the clinical learning environment within VA primary care settings. To that end, OAA funded new Centers within VA facilities to develop models of interprofessional education (IPE) that teach health professions trainees to deliver high-quality interprofessional team-based primary care to Veterans. Using reports and data collected and maintained by the National Coordinating Center over the first six years of the project, we describe program inputs, the multicomponent intervention, the activities undertaken to develop the intervention, and short-term outcomes. The findings offer lessons for others seeking large-scale transformation of education within the clinical workplace and the development of interprofessional clinical learning environments. Within the VA, the CoEPCE has laid the foundation for IPE and collaborative practice, but much work remains to disseminate this work throughout the national VA system.

Introduction

Despite efforts to move education and clinical care out of professional silos and toward interprofessional collaborative learning and practice, such transformations remain complex and challenging (Brienza, 2016; Brienza, Zapatka, & Meyer, 2014; Cox, 2013; Institute of Medicine, 1972). Most reports describe new models of education and clinical care at single programs (Long, Dann, Wolff, & Brienza, 2014; Mazanec et al., 2015; Murray, Christen, Marsh, & Bayer, 2012; Nasir, Goldie, Little, Banerjee, & Reeves, 2017; Pare, Maziade, Pelletier, Houle, & Iloko-Fundi, 2012; Ruddy, Borresen, & Myerholtz, 2013). Implementing interprofessional education (IPE) programs across multiple clinical care sites, however, provides the opportunity to describe a process for large-scale system transformation. This paper describes the first six years of a coordinated initiative within the Department of Veterans Affairs (VA) to implement the vision of the Office of Academic Affiliations (OAA): to transform education and clinical care within VA primary care settings into interprofessional team-based learning and practice, thereby improving health professions education and primary care outcomes for Veterans. Lessons learned from our experiences may guide other large-scale transformational efforts to improve the educational preparation of our national healthcare workforce.

Background

The VA’s nationwide implementation of Patient Aligned Care Teams (PACT) in 2010 introduced fundamental changes in VA primary care based on the patient-centered medical home model (Nelson et al., 2014; Rosland et al., 2013). Soon afterwards, OAA recognized the need to redesign primary care education to keep it aligned with primary care delivery (Bowen & Schectman, 2013; Cox, 2013; Gilman, Chokshi, Bowen, Rugen, & Cox, 2014). In 2010, OAA released the first of three national requests for proposals (RFPs) inviting VA facilities to seek funding to develop and implement interprofessional team-based curricula that integrate clinical practice and education (Department of Veterans Affairs, 2010). To ensure consistency across sites, the RFP required partnerships with academic affiliates; incorporation of physician residents and nurse practitioner (NP) students; inclusion of other professions as resources and expertise became available; curriculum development in four core educational domains (shared decision-making, interprofessional collaboration, sustained relationships, and performance improvement); and workplace learning as an instructional strategy. Staffing requirements included a leadership team of a physician co-director and an NP co-director, and Center faculty including at least four clinician educators with protected time for curriculum development, teaching, and mentoring. Within these requirements, Centers were free to create strategies that fit their local VA context. Five VA facilities (Boise, Idaho; Cleveland, Ohio; San Francisco, California; Seattle, Washington; and West Haven, Connecticut) were awarded $1 million per Center per year in January 2011 (Stage 1, 2010–2015) for training activities beginning in July 2011.

To guide multisite activities, OAA established a National Coordinating Center (NCC) consisting of individuals with expertise in education, program administration, and evaluation across the health professions. The role of the NCC was to develop IPE national policy, distribute and monitor resources, guide and facilitate Center work, identify promising educational practices to incorporate into common curricula across Centers, conduct cross-site evaluation, develop performance improvement and population registries, and communicate results to OAA, other VA staff and leadership, and the broader academic and health professions communities.

In 2015, OAA launched two additional RFPs for Stage 2, 2015–2019 (Department of Veterans Affairs, 2015a, 2015b). The five original Centers, plus two new Centers at VA facilities in Los Angeles, California, and Houston, Texas, were funded for another four years at $750,000 per site per year.

Methods

Enterprise evaluation across Centers was implemented early in Stage 1. Using a mixed-methods approach, NCC evaluators conducted both formative and summative evaluation to inform ongoing intervention development and implementation. The enterprise evaluation was guided by a logic model and consisted of individual projects, each guided by its own evaluation question. In Stage 2, evaluation capacity was enhanced by engaging an external health services research group at the Portland VA to further evaluate impacts on patients (including clinical outcomes), on clinical staff, and on the VA healthcare system.

This evaluation is categorized as an operations improvement activity under VHA Handbook 1058.05, in which the information generated is used for business operations and quality improvement. The overall project was therefore subject to administrative oversight rather than oversight by a Human Subjects Institutional Review Board.

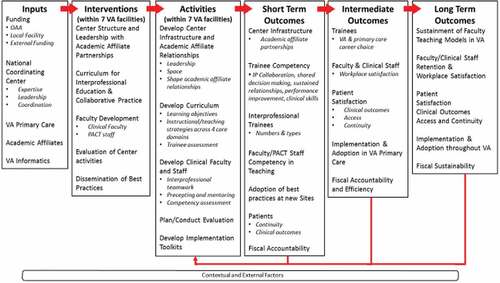

CoEPCE logic model

The logic model in Figure 1 illustrates the inputs for CoEPCE development, the multicomponent CoEPCE “intervention,” and the activities undertaken to develop the intervention, leading to short-, intermediate-, and long-term outcomes (Petersen, Taylor, & Peikes, 2013). Inputs included funding from OAA, local VA facilities and affiliates; NCC expertise and leadership; the VA Office of Primary Care, which directs the clinical care Veterans receive; and VA informatics, including the electronic medical record system. The multicomponent intervention was instituted at each of the seven VA facilities and comprised the Center structure and leadership, the IPE curriculum, faculty development, Center evaluation, and dissemination of best practices. Over the first six years, activities focused on establishing individual Center infrastructure, engaging academic affiliates to develop the new logistics required for IPE, planning and implementing faculty development programs, developing and implementing the IPE curriculum, and developing and implementing individual Center evaluation activities. The Centers undertook these activities with NCC input, so that the resulting interventions fit the local facility context while remaining standardized across Centers.

Data sources

We conducted descriptive analyses of evaluation reports and administrative data collected and maintained by the NCC. Data sources included the following:

Coordinating Center Annual Report 2011–2012: This document describes clinical transformation, operational and evaluation activities during the first two years of Stage 1.

Semi-annual evaluation reports: Reports were submitted by all Centers to assess Center infrastructure, curriculum, faculty, and trainees. Semi-annual reports from 2013–2014 and 2015–2016 were used in this analysis.

Trainee data: These data were collected from Centers twice a year and were used to describe the numbers and types of trainees over the six-year period.

Nurse Practitioner Resident Competency Tool: This tool was administered at each Center to evaluate the competency of NP residents at one, six, and twelve months in the domains of clinical care, leadership, interprofessional team collaboration, patient-centered care, shared decision-making, sustained relationships, and performance improvement (Rugen, Speroff, Zapatka, & Brienza, 2016). NP residents rated items within each domain on a scale ranging from 0 (not performed/not observed) to 5 (able to supervise others). A qualitative component asked NP residents open-ended questions about what they do well, what they would like to improve, and their short- and long-term goals. Psychometric analysis of the quantitative component demonstrated high internal consistency reliability for each of the seven domains, with Cronbach’s alpha ranging from 0.86 to 0.95 (Rugen, Dolansky, Dulay, King, & Harada, 2017).

Participant survey: This survey assessed trainee satisfaction with the overall program, the curriculum including the four core domains, and interest in VA and/or primary care employment. The survey is administered during the spring of each year and the findings are communicated back to Centers for program quality improvement purposes. Internal consistency reliability is high with Cronbach’s alpha ranging from .83 to .93 for subscales measuring learning in each of the four core domains, program satisfaction, program practices, and systems impact (unpublished data).
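Both instruments above report reliability as Cronbach’s alpha, α = (k / (k − 1)) · (1 − Σσᵢ² / σ_T²), where k is the number of items, σᵢ² is the variance of item i, and σ_T² is the variance of respondents’ total scores. For readers less familiar with the statistic, the following is a minimal illustrative sketch in Python under stated assumptions: the function name and the small ratings matrix are hypothetical, sample variances (n − 1 denominator) are assumed, and this is not the analysis code behind the published coefficients.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items scored by the same n respondents.

    Hypothetical helper for illustration. `items` holds one list per item;
    position i in every list belongs to the same respondent. Sample
    variances (n - 1 denominator) are used throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(scores) != n for scores in items):
        raise ValueError("each item needs the same number (>= 2) of respondents")

    # Sum of the individual item variances.
    item_var_sum = sum(variance(scores) for scores in items)

    # Each respondent's total (scale) score, then the variance of those totals.
    totals = [sum(scores[i] for scores in items) for i in range(n)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")

    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical ratings on a 0-5 scale: three items, three respondents.
example = [
    [2, 4, 3],
    [3, 5, 4],
    [1, 2, 2],
]
print(round(cronbach_alpha(example), 3))  # prints 0.947
```

In this toy matrix the respondents are ranked almost identically on every item, so the variance of the totals is large relative to the summed item variances and alpha is high; items that disagree with one another would pull it toward zero.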

Data analysis

Content analysis of textual data. The NCC Annual Report and semi-annual reports were analyzed using qualitative content analysis. Reports were uploaded into Atlas.ti software version 7 (Atlas.ti, 2017), and portions of text were coded by logic model component and time point (baseline [2011–2012], intermediate [2013–2014], or current [2016]). Queries were run in Atlas.ti to group text by code and time point. Two authors (NH and LT) reviewed the query reports and abstracted key data into tables for each time point. A third author (KWR) reviewed the tables to confirm accuracy.

Quantitative analysis

The quantitative data from the semi-annual reports and trainee data were analyzed using Excel 2010 to generate frequencies and graphs. Statistical analyses of data from the NP Resident Competency Tool are described elsewhere (Rugen et al., 2017). Participant Survey data were analyzed using IBM SPSS version 23 (IBM Corp, 2015).

Results

Development of the CoEPCE multicomponent intervention

Center Infrastructure and Leadership

Over the six-year period, Center infrastructure was established within seven VA facilities. Initial Center leadership requirements were for a director and co-director, one physician and one NP representing the interprofessional trainee focus. The NCC realized that this implied a hierarchy, and modified the requirement to a co-director leadership model where the physician and NP were to be equal partners. However, this proved to be a difficult leadership model to sustain as there was high turnover in the NP co-director position at four of the Centers.

In Stage 2, Centers were given the freedom to change their leadership structure to a director only model, a director/co-director model, or maintain the Stage 1 co-director model. Three Centers proposed a physician director model, two proposed a director/co-director model, and two proposed co-director models.

Based on Stage 1 experiences demonstrating the importance of interprofessional leadership for clinical and educational endeavors, Stage 2 introduced new requirements for the inclusion of associate director positions for newly mandated professions of RN care managers, pharmacists, psychologists, and evaluators. Two Centers also opted to include a social work associate director.

Center space

All Centers identified space limitations as a barrier to integrating clinical care and educational activities. All required additional space so that trainees from multiple professions could participate in integrated activities such as didactic sessions, team huddles, and group visits. Workplace learning was enhanced when interprofessional trainees were co-located “backstage” in a teamwork room. To address these needs, the NCC provided additional funding during the first year for the Centers to restructure their space, and engaged VA Office of Primary Care leadership to attend site visits for space assessments and to develop new VA policy on architectural standards for academic primary care.

VA and academic affiliate partnerships

Over the first six years, the Centers worked to increase the number of academic affiliate partnerships. In 2011, the five Centers reported a total of 11 affiliate partnerships with schools of nursing and schools of medicine. By 2016, there were a total of 33 affiliate partnerships across seven Centers. New affiliations were established with schools of pharmacy, schools of social work, programs in management, and health services research.

One of the biggest challenges was scheduling collaborative educational activities for different trainee professions while providing continuity between trainees and patients, trainees and PACT teams, and trainees and faculty supervisors. Consequently, the Centers had to work closely with their academic affiliates on the logistics required for IPE. Academic affiliates had to adopt new ways of scheduling learners of different professions. For example, block immersion models for physician residents were adjusted so that residents were present at the same time as NP students, who were in clinic 1 to 2 days per week for 6–15 weeks, and NP residents, who were present full time for 1 year.

Interprofessional faculty

In Stage 1, interprofessional clinical faculty consisted mainly of physicians and NPs with fewer pharmacists and psychologists. Because of the longstanding emphasis on physician training in the VA, most physician faculty had academic appointments and protected time for education. NP faculty, however, were generally clinicians with little experience in academic roles and VA facilities did not have a culture of providing NPs with protected time from their clinical role for education, leadership, faculty development, or scholarly activities. Across Centers, all physician co-directors and most physician faculty had academic appointments at their affiliated school of medicine, whereas only two NP co-directors had academic faculty appointments at their affiliated school of nursing.

By 2016, the interprofessional faculty and staff at each Center included NPs, RN Care Managers, pharmacists, physicians (internal medicine, psychiatry), psychologists, social workers, and evaluators/researchers. Four Centers reported working with their affiliates to initiate new academic appointments for their RN, NP, pharmacy, social work, and/or psychology faculty. The rate of academic appointments varied by profession in a pattern that followed training priorities historically set by VA, ranging from 84% for physician faculty to none for social work faculty (Table 1).

Table 1. CoEPCE faculty academic appointments, 2016.

Interprofessional teaching activities

In Stage 1, the Centers began developing curricula by adapting existing didactic activities or courses to address CoEPCE curricular goals. For example, most of the Centers implemented Team Strategies and Tools to Enhance Performance and Patient Safety (TeamSTEPPS®), a course that teaches communication and teamwork skills (Clancy & Tornberg, 2007). The Centers focused on developing teaching activities within a single domain, such as team conferences to develop interprofessional collaboration skills and lectures on motivational interviewing to teach shared decision-making skills.

To align IPE with clinical care, the Centers faced the challenge of developing new workplace learning strategies in which teaching occurs within the context of clinical practice, linking didactic to clinical instruction within and across professions. Each Center developed workplace learning strategies to fit its local VA context. For example, one Center developed a standardized huddle checklist because its trainees were spread across several PACT teams and needed a more uniform experience (Shunk, Dulay, Chou, Janson, & O’Brien, 2014). Another Center developed a panel management curriculum because proactive chronic disease management was an institutional priority and an ideal training space with technology support was available (Kaminetzky & Nelson, 2015). This led each Center to take ownership of a promising practice (huddles, panel management, PACT interdisciplinary care update, polypharmacy clinic, or dyads) that could be disseminated throughout the VA system (Centers of Excellence in Primary Care Education, 2017).

In 2015, the NCC introduced a new framework, based on a three-element model developed for non-health care settings, to guide further development of the interprofessional curriculum (Fink, 2003). The framework treats curriculum as comprising learning objectives, instructional strategies (i.e., workplace learning, didactics, and reflective practice), and assessment of trainees’ accomplishment of the learning objectives. This framework was applied across the four core domains of shared decision-making, interprofessional collaboration, performance improvement, and sustained relationships, and was also used to integrate mental health into the primary care curriculum.

By 2016, the curricula had evolved so that many teaching activities integrated learning objectives across the four core domains and mental health, and combined instructional strategies within a single teaching activity. Examples of integrated teaching activities are listed in Table 2.

Table 2. Examples of center reported teaching activities integrating instructional strategies and core domains.

Center (single-site) evaluation

In Stage 1, each Center established its own evaluation program, which initially focused on trainees and on assessment of teamwork components such as team structure, communication, attitudes, team development, and team performance. Centers used established instruments such as the Team Development Measure (Salem-Schatz, Ordin, & Mittman, 2010) and the TeamSTEPPS® Teamwork Attitudes Questionnaire (T-TAQ) (Baker, Krokos, & Amodeo, 2008). Centers also initiated evaluation in the performance improvement domain, using instruments such as the Quality Improvement Knowledge Application Tool (QIKAT) (Singh et al., 2014) and panel management registry tools from the VA electronic record (Kaminetzky & Nelson, 2015). Trainee competency assessment within the Centers focused on NP residents, using a tool developed for cross-site use, the NP Resident Competency Tool (Rugen et al., 2017), while physician residents were assessed through traditional professional accreditation methods. By Stage 2, the Centers were evaluating faculty performance in teaching activities, precepting, and mentoring. Some were evaluating patient satisfaction, and one site began to evaluate the impacts of specific teaching activities on health system outcomes, as well as their dissemination to and adoption by other sites (King et al., 2017).

Short-term outcomes of enterprise evaluation

Interprofessional trainees: numbers and types

The first cohort of trainees began in academic year 2011–2012 with two mandated trainee professions: physician residents and NP students. The Centers were encouraged to include other trainee professions such as pharmacists, psychologists, and social workers. Physician residents, primarily from internal medicine residency programs, and NP students were assigned to the CoEPCE by their affiliate training programs. These trainees had to spend at least 30% of their total training time in the CoEPCE, a requirement the NCC set, based on a review of physician residency program requirements, to ensure an adequate “dose” of interprofessional training.

Early in Stage 1, NP residency programs in primary care were established at each Center as core CoEPCE training programs (Rugen et al., 2014). NP residents were graduates of master’s or doctor of nursing practice NP programs who participated in year-long specialized training in primary care delivery, leadership, and scholarly activities (Rugen, Gilman, & Traylor, 2015; Rugen et al., 2016, 2014).

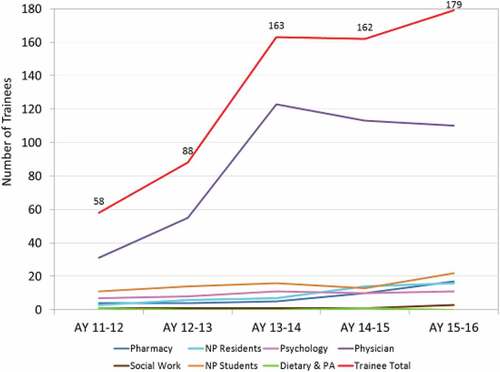

The types and numbers of trainees by profession are displayed in Figure 2. Prior to the CoEPCE, no trainees were receiving IPE in primary care. In 2011–2012, 58 trainees received IPE, increasing to 179 trainees in 2015–2016. Physician residents comprised two-thirds of all CoEPCE trainees because of the historical emphasis on physician training in the VA. In addition, space limitations and preceptor availability within professions restricted the growth of other trainee professions such as NP residents and social work interns.

Trainee competencies

In Stage 1, cross-site evaluation of professional competencies focused on NP residents and used the NP Resident Competency Tool. Results from three cohorts of NP residents show significant improvement in each competency domain (p < .0001) over the year-long program, as rated by both trainees and their mentors (Rugen et al., 2017). Furthermore, by the end of the program, mentors rated NP residents as able to practice without supervision in all competency domains (Rugen et al., 2017). The aggregated results have been used as feedback for program improvement. For example, because the clinical competency domain was among the lowest scored in the quantitative findings, and NP residents reported in the qualitative findings that they wanted to improve their differential diagnosis skills, the NCC developed a course on this topic for NP faculty (Rugen et al., 2017).

Trainee perceptions of the CoEPCE program

Results from the Participant Survey administered to three consecutive trainee cohorts (2014 and earlier, 2014–2015, and 2015–2016) show an upward trend in ratings of the extent to which the CoEPCE met its overall mission, with mean ratings of 3.7, 3.8, and 4.0, respectively, on a scale of 1–5 where 5 is best. That mission is to foster transformation of clinical education by preparing graduates of health professional programs to work in, lead, and improve Veteran/patient-centered interprofessional teams that provide coordinated, longitudinal care. Ratings of the overall CoEPCE learning experience also trended upward, with mean ratings of 3.9, 4.0, and 4.2, respectively. Similar upward trends were seen for interest in future primary care or VA employment. The 2015–2016 survey also showed differences between professions in learning specific skills; for example, psychologists reported less learning than other professions in performance improvement skills such as using information technology to manage patient panels. More detailed longitudinal analyses are ongoing to explore trends in ratings of global indicators, program satisfaction, and curriculum over time.

Impacts on primary care clinic staff and patients

Cross-site evaluation of clinic staff and patients was initiated at the beginning of Stage 2. Impacts on primary care clinic staff were evaluated through semi-structured qualitative interviews with clinic staff (n = 35) working in CoEPCE teams. The overall finding was that working with trainees contributed positively to staff work experience, and that including clinic staff in workplace learning activities such as huddles contributed to effective interpersonal working relationships with trainees (Newell et al., 2017). Evidence on patient impacts comes from a cohort study comparing primary care patients assigned to CoEPCE teams with a control group of patients assigned to non-CoEPCE teams (Edwards et al., 2017). Among CoEPCE patients, the likelihood of poor diabetic A1c control declined by 1.9% per patient per year, the likelihood of timely mental health referral increased by 2.1%, and the likelihood of Primary Care–Mental Health Integration (PCMHI) visits increased by 1.9% per patient per year (Edwards et al., 2017).

Discussion

The introduction of PACT within VA facilities gave OAA the opportunity to begin transforming the clinical education workplace to develop a collaboration-ready health workforce for the future. Our work over the first six years of CoEPCE implementation can be summarized as laying a foundation for the interprofessional clinical learning environment, which Simpson and colleagues (2017) describe as consisting of people, facilities, and processes. The “people” are the interprofessional clinical leaders and faculty; the “facilities” are clinical sites and provider readiness for IPE that are patient-centered and team-oriented; and the “processes” are workplace-based IPE, performance improvement, and trainee placement. Table 3 summarizes lessons learned that may help others seeking large-scale system transformation.

Table 3. Lessons learned for development of the interprofessional clinical learning environment within a national healthcare system.

The first lesson is that building the foundation for IPE in VA primary care settings required an initial outlay of financial resources. Because primary care IPE was a new educational model, protected time of clinicians and staff was necessary to identify needs, develop interprofessional workplace learning strategies and faculty, and shape the interprofessional clinical learning environment within each Center. Experiences across Centers could then be used to identify important features of the interprofessional clinical learning environment that could be generalized for future educational transformation.

In addition to financial resources, OAA established the NCC to link individual Centers with system transformation efforts, monitor fidelity to project goals, and monitor appropriate use of funding. The NCC facilitated interaction between individual Centers to advance a standardized enterprise-wide curriculum that could be disseminated nationally. The NCC shaped the development of national VA IPE policy informed first-hand by individual Center experiences. For example, based on identified barriers encountered by Centers, the NCC successfully modified national business rules within the VA’s electronic health record to facilitate interprofessional graduated supervision and to properly relate trainee clinical effort to workload calculations and staffing policies. Finally, the NCC contributed to national policy and professional accreditation discussions to advance IPE, such as the establishment of IPE competencies for NP resident training.

Another lesson is that strong collaborative relationships between the VA and academic affiliates, and among academic affiliates themselves, are required to develop IPE training programs. These relationships support logistics and resource sharing, curriculum innovation, faculty development, and educational/program evaluation. The differing rates of academic faculty appointments by profession among VA interprofessional faculty highlight the need to further explore profession-specific barriers to faculty appointment at the academic affiliate.

With respect to the multicomponent intervention, we learned that interprofessional leadership is vital to developing and delivering effective interprofessional curricula, as well as to modeling leadership behaviors for trainees across professions. The high rate of leadership turnover points to the need for a deeper understanding of effective interprofessional leadership models, which may be influenced by profession-specific cultural issues. Space was a key resource enabling IPE. We sought space design consultation to find more efficient ways to use existing space; however, because new construction was a long-term solution, we engaged the VA Office of Primary Care to address policy on architectural standards that enable IPE in VA settings. The Centers built interprofessional curricula that eventually integrated three instructional strategies (didactics, workplace learning, and reflection) across the four core domains of interprofessional collaboration, shared decision-making, sustained relationships, and performance improvement. These curricula are exportable to the rest of the VA and to the national health care system. Finally, within clinical workplace settings, interprofessional faculty include not only profession-specific faculty but all clinic staff who work collaboratively to deliver quality patient care. Faculty development for IPE should therefore include both profession-specific faculty and the clinic staff who work with trainees.

Our analyses have several limitations. First, the NCC Annual Report and the semi-annual reports were written by the NCC and the Centers, respectively, and may therefore be biased toward the NCC’s or a Center’s interpretation of events. We addressed this limitation by triangulating across data sources and data types (qualitative and quantitative) to arrive at valid descriptions of what occurred. Second, this project took place in the VA, and some of these experiences and results may not generalize beyond VA. However, the VA conducts the largest education and training effort for health professionals in the United States and provides education in collaboration with 135 of 144 allopathic medical schools, 30 of 33 osteopathic medical schools, and more than 40 other clinical health professions education programs (Office of Academic Affiliations, 2016). Finally, for various reasons the enterprise evaluation was initiated later than other NCC functions, and assessment of some short-term outcomes did not fully begin until Stage 2, underscoring the need to initiate an enterprise evaluation strategy early and with a clear purpose (Reeves, Boet, Zierler, & Kitto, 2015). As we move forward, we will continually review and monitor the enterprise evaluation strategy to evaluate IPE impacts on trainees, faculty, patients, the VA population, and the health care delivery system (Cox, Cuff, Brandt, Reeves, & Zierler, 2015).

Concluding comments

Large-scale systems transformation of education and clinical care is a complex undertaking that requires dedicated work of national leadership and policymakers, clinical and academic leadership, faculty, clinical staff and the trainees themselves. Future work will focus on improving our understanding of the interprofessional clinical learning environment to support workplace learning leading to quality Veteran care. Ultimately, these models will be disseminated throughout VA to achieve system-wide transformation.

Declaration of interest

The authors report no conflicts of interest. The authors alone are responsible for the writing and content of this paper.

Acknowledgements

The authors would like to thank the following individuals for their contributions to the project: Malcolm Cox, MD; Ward Newcomb, MD; David Latini, PhD, LMSW; and Jennifer Hayes, EdM. Logic model development was informed by Bridget O’Brien, PhD, Anne Poppe, PhD, Anais Tuepker, PhD, and Mimi Singh, MD. Bridget O’Brien, PhD and Mary Dolansky, PhD, RN, FAAN provided thoughtful reviews of this manuscript. In addition, this project could not be where it is today without the leadership and staff within VA’s Office of Academic Affiliations and Office of Primary Care. Special thanks to the interprofessional leadership, faculty, clinical staff, trainees and academic affiliates of CoEPCE Centers at Boise, Idaho; Cleveland, Ohio; Houston, Texas; Los Angeles, California; San Francisco, California; Seattle, Washington and West Haven, Connecticut.

References

- Atlas.ti. (2017). Qualitative data analysis (Version 7). Berlin: Scientific Software Development. Retrieved from http://atlasti.com/product/v7-windows/

- Baker, D., Krokos, K., & Amodeo, A. (2008). TeamSTEPPS Teamwork Attitudes Questionnaire Manual. Retrieved from https://www.ahrq.gov/teamstepps/instructor/reference/teamattitudesmanual.html

- Bowen, J. L., & Schectman, G. (2013). VA Academic PACT: A blueprint for primary care redesign in academic practice settings. Washington, DC: Department of Veterans Affairs, Offices of Primary Care and Academic Affiliations.

- Brienza, R. S. (2016). At a crossroads: The future of primary care education and practice. Academic Medicine, 91(5), 621–623. doi:10.1097/acm.0000000000001119

- Brienza, R. S., Zapatka, S., & Meyer, E. M. (2014). The case for interprofessional learning and collaborative practice in graduate medical education. Academic Medicine, 89(11), 1438–1439. doi:10.1097/acm.0000000000000490

- Centers of Excellence in Primary Care Education. (2017). Compendium of Five Case Studies: Lessons for Interprofessional Teamwork in Education and Workplace Learning Environments 2011-2016. In S. Gilman & L. Traylor (Eds.). Washington, DC: United States Department of Veterans Affairs, Veterans Health Administration, Office of Academic Affiliations. Retrieved from https://www.va.gov/OAA/docs/VACaseStudiesCoEPCE.pdf

- Clancy, C. M., & Tornberg, D. N. (2007). TeamSTEPPS: Assuring optimal teamwork in clinical settings. American Journal of Medical Quality, 22(3), 214–217. doi:10.1177/1062860607300616

- Cox, M. (2013). Patient-centered model for continuous improvement in clinical education and practice. Paper presented at the Interprofessional Education for Collaboration: Learning How to Improve Health From Interprofessional Models Across the Continuum of Education to Practice: Workshop Summary, New York, NY.

- Cox, M., Cuff, P., Brandt, B. F., Reeves, S., & Zierler, B. (2015). Measuring the impact of interprofessional education on collaborative practice and patient outcomes. Washington, DC: Institute of Medicine.

- Department of Veterans Affairs. (2010). Request for proposals: VA centers of excellence in primary care education. Washington, DC: Office of Academic Affiliations. Retrieved from https://www.va.gov/oaa/trainingannouncements.asp

- Department of Veterans Affairs. (2015a). Request for proposals, new sites-Stage II, Centers of excellence in primary care education/I-APACT, FY16-FY19. Washington, DC: Office of Academic Affiliations. Retrieved from https://www.va.gov/OAA/docs/CoEPCE_Stage_2_RFP_Continuation_FY16_FY19.pdf

- Department of Veterans Affairs. (2015b). Request for proposals, Stage 2 continuation support, centers of excellence in primary care education, FY15-FY19. Washington, DC: Office of Academic Affiliations. Retrieved from https://www.va.gov/OAA/docs/CoEPCE_Stage_2_RFP_Continuation_FY16_FY19.pdf

- Edwards, S., Kim, H., Forsberg, C., Shull, S., Harada, N., & Tuepker, A. (2017). Association of participation in the Office of Academic Affiliations’ Centers of Excellence in Primary Care Education Initiative with Quality of Care and Utilization. Paper presented at the HSR&D/QUERI National Conference: Accelerating Innovation and Implementation in Health System Science, Crystal City, VA.

- Fink, L. D. (2003). Creating significant learning experiences: an integrated approach to designing college courses. San Francisco: John Wiley & Sons, Inc.

- Gilman, S. C., Chokshi, D. A., Bowen, J. L., Rugen, K. W., & Cox, M. (2014). Connecting the dots: Interprofessional health education and delivery system redesign at the Veterans Health Administration. Academic Medicine, 89(8), 1113–1116. doi:10.1097/acm.0000000000000312

- IBM Corp. (2015). IBM SPSS Statistics for Windows, Version 23.0. Armonk, NY: Author.

- Institute of Medicine. (1972). Educating for the health team. Paper presented at the Interrelationships of Educational Programs for Health Professionals, National Academy of Sciences, Washington, D.C.

- Kaminetzky, C. P., & Nelson, K. M. (2015). In the office and in-between: The role of panel management in primary care. Journal of General Internal Medicine, 30(7), 876–877. doi:10.1007/s11606-015-3310-x

- King, I. C., Strewler, A., Wipf, J. E., Singh, M., Painter, E., Shunk, R., … Smith, C. S. (2017). Translating innovation: Exploring dissemination of a unique case conference. Journal of Interprofessional Education & Practice, 6, 55–60. doi:10.1016/j.xjep.2016.12.004

- Long, T., Dann, S., Wolff, M. L., & Brienza, R. S. (2014). Moving from silos to teamwork: Integration of interprofessional trainees into a medical home model. Journal of Interprofessional Care, 28(5), 473–474. doi:10.3109/13561820.2014.891575

- Mazanec, P., Arfons, L., Smith, J., Curry, S., Berman, S., Dimick, J., … Latini, D. M. (2015). Preparing trainees to deliver patient-centered care in an ambulatory cancer clinic. Journal of Cancer Education, 30(3), 460–465. doi:10.1007/s13187-014-0719-6

- Murray, O., Christen, K., Marsh, A., & Bayer, J. (2012). Fracture clinic redesign: Improving standards in patient care and interprofessional education. Swiss Medical Weekly, 142, w13630. doi:10.4414/smw.2012.13630

- Nasir, J., Goldie, J., Little, A., Banerjee, D., & Reeves, S. (2017). Case-based interprofessional learning for undergraduate healthcare professionals in the clinical setting. Journal of Interprofessional Care, 31(1), 125–128. doi:10.1080/13561820.2016.1233395

- Nelson, K. M., Helfrich, C., Sun, H., Hebert, P. L., Liu, C. F., Dolan, E., … Fihn, S. D. (2014). Implementation of the patient-centered medical home in the Veterans Health Administration: Associations with patient satisfaction, quality of care, staff burnout, and hospital and emergency department use. JAMA Internal Medicine, 174(8), 1350–1358. doi:10.1001/jamainternmed.2014.2488

- Newell, S., Brienza, R., Dulay, M., Strewler, A., Manuel, J., O’Brien, B., & Tuepker, A. (2017). Experiences of patient-centered medical home team members working in interprofessional training environments. Paper presented at AcademyHealth, New Orleans.

- Office of Academic Affiliations. (2016). 2016 Statistics: Health Professions Trainees. Washington, DC: U.S. Department of Veterans Affairs, Office of Academic Affiliations.

- Pare, L., Maziade, J., Pelletier, F., Houle, N., & Iloko-Fundi, M. (2012). Training in interprofessional collaboration: Pedagogic innovation in family medicine units. Canadian Family Physician, 58(4), e203–e209.

- Petersen, D., Taylor, E., & Peikes, D. (2013). Logic models: The foundation to implement, study, and refine patient-centered medical home models. Rockville, MD: Agency for Healthcare Research and Quality.

- Reeves, S., Boet, S., Zierler, B., & Kitto, S. (2015). Interprofessional education and practice Guide No. 3: Evaluating interprofessional education. Journal of Interprofessional Care, 29(4), 305–312. doi:10.3109/13561820.2014.1003637

- Rosland, A. M., Nelson, K., Sun, H., Dolan, E. D., Maynard, C., Bryson, C., … Schectman, G. (2013). The patient-centered medical home in the Veterans Health Administration. The American Journal of Managed Care, 19(7), e263–e272.

- Ruddy, N. B., Borresen, D., & Myerholtz, L. (2013). Win/win: Creating collaborative training opportunities for behavioral health providers within family medicine residency programs. International Journal of Psychiatry in Medicine, 45(4), 367–376. doi:10.2190/PM.45.4.g

- Rugen, K., Dolansky, M. A., Dulay, M., King, S., & Harada, N. (2017). Evaluation of Veterans Affairs primary care nurse practitioner residency: Achievement of competencies. Nursing Outlook. doi:10.1016/j.outlook.2017.06.004

- Rugen, K., Gilman, S., & Traylor, L. (2015). Interprofessional education as the future of education. In C. Rick & P. Kritek (Eds.), Realizing the future of nursing: VA nurses tell their story. Washington, D.C.: Government Printing Office.

- Rugen, K., Speroff, E., Zapatka, S. A., & Brienza, R. (2016). Veterans Affairs interprofessional nurse practitioner residency in primary care: A competency-based program. The Journal for Nurse Practitioners, 12(6), e267–e273. doi:10.1016/j.nurpra.2016.02.023

- Rugen, K., Watts, S. A., Janson, S. L., Angelo, L. A., Nash, M., Zapatka, S. A., … Sax, J. M. (2014). Veteran affairs centers of excellence in primary care education: Transforming nurse practitioner education. Nursing Outlook, 62, 78–88. doi:10.1016/j.outlook.2013.11.004

- Salem-Schatz, S., Ordin, D., & Mittman, B. (2010). Guide to the team development measure. Retrieved from http://www.queri.research.va.gov/tools/TeamDevelopmentMeasure.pdf

- Shunk, R., Dulay, M., Chou, C. L., Janson, S., & O’Brien, B. C. (2014). Huddle-coaching: A dynamic intervention for trainees and staff to support team-based care. Academic Medicine, 89(2), 244–250. doi:10.1097/acm.0000000000000104

- Simpson, D., Brill, J., Hartlaub, J., Rivera, K., Rivard, H., Hageman, H., & Huggett, K. (2017). Interprofessional education and the clinical learning environment: Key elements to consider. Paper presented at the Alliance of Independent Academic Medical Centers Annual Meeting, Amelia Island, Florida.

- Singh, M., Ogrinc, G., Cox, K., Dolansky, M., Brandt, J., Morrison, L., … Headrick, L. (2014). The quality improvement knowledge application tool revised (QIKAT-R). Academic Medicine, 89, 1386–1391. doi:10.1097/ACM.0000000000000456