ABSTRACT

At the heart of changing institutional assessment and feedback practices is the need to transform university teachers’ ways of thinking about feedback and assessment. In this article, we present a case study of a three-year Master’s in Education offered to UK STEMM university teachers as an opportunity to develop critically reflective and theoretically underpinned approaches to their practice. We outline the extent to which, in Mezirow’s terms, through a disorientating combination of studentship, self-reflection and paradigm crossing, the programme has the potential to change the participants’ frames of reference. Drawing on our experiences of working with these students and in-depth interviews we discuss the impact the programme has had on the participants’ assumptions around feedback and assessment, their identity, own practice and wider institutional perspectives and practice. Barriers identified by participants that inhibit assessment and feedback change are also explored.

Introduction

Persuading busy university teachers of the need to evolve long-established teaching practices into more effective and inclusive approaches can be challenging. This task becomes even more difficult if the focus of practice is assessment and feedback (Ferrell, 2012) and further still in a Science, Technology, Engineering, Maths and Medicine (STEMM) context, where more traditional, teacher-centric approaches still dominate (Stains et al., 2018). Whilst top-down initiatives can potentially standardise practice across an institution, they tend to ignore persistent material and socio-political structures within departmental communities (Goodyear, Casey, & Kirk, 2017). They are often not effective in inspiring university teachers to genuinely transform assessment and feedback practices in a way that is embedded and sustainable. In this paper, we argue that at the heart of transforming assessment in higher education (HE) is the need to transform the way that university teachers perceive assessment. This builds on research around the value of teacher development programmes (Cilliers & Herman, 2010; Gibbs & Coffey, 2004; Knight, 2006). Drawing on Jack Mezirow’s transformative learning, we will use his concepts of frames of reference, habits of mind, points of view and disorientating dilemmas to explore to what extent and how assumptions and practice around assessment in HE can be transformed. Mezirow (2009) describes transformative learning as ‘the process by which we transform problematic frames of reference … – sets of assumption and expectation – to make them more inclusive, discriminating, open, reflective and emotionally able to change’ (p. 92).
We understand problematic frames of reference to include over-emphasis of summative assessment leading to overload and bunching; prioritising summative over formative assessment; over-insistence on high reliability of assessment compromising creativity (Waterfield & West, 2006); assessment rewarding competition over collaboration; feedback as purely justification of the mark given. According to Mezirow a frame of reference comprises habits of mind, ‘habitual ways of thinking, feeling and acting influenced by assumptions that constitute a set of codes’ and resulting points of view. Points of view are based on ‘beliefs, memory, value judgement, attitude and feeling that shapes a particular interpretation’ (Mezirow, 2009, p. 92) and are more open to influence based on self-reflection and external feedback. Mezirow cites ethnocentrism, the belief in the inherent superiority of one’s own culture, as an example of a habit of mind. Applying this example to HE we regularly see evidence of international (Carroll, 2008) and disciplinary cultural differences (Iannone & Simpson, 2016; Neuman, Parry, & Becher, 2002) that determine epistemological beliefs and assumptions about what is to be assessed and how, as well as notions of validity, reliability and fairness that result in negative points of view regarding those that believe differently. A disorientating dilemma provides the catalyst for transformation by causing the individual to question their current understanding and views (Mezirow, 2009), in this case regarding the purpose and process of assessment.

Table 1. Participants’ characteristics.

Context

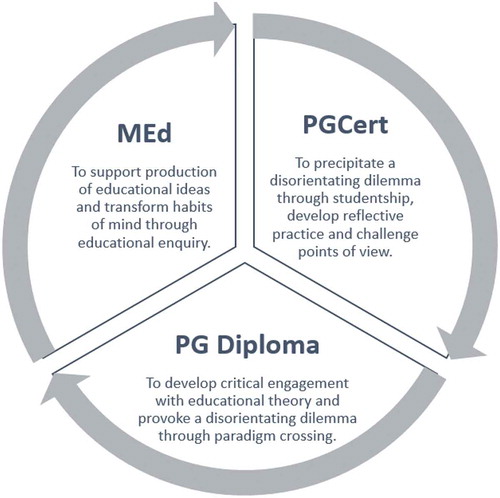

This case study centres on a social sciences-informed Master’s in University Learning and Teaching (MEd) designed for STEMM university teachers in a research-intensive university. The three-year part-time programme, with 96 students enrolled at the time of this study, consists of the PGCert, PG Diploma and MEd stages, each with an exit point. It is offered as a non-compulsory opportunity for academics to develop a critically reflective and theoretically underpinned approach to university teaching and learning. These MEd students come from all four faculties and the cross-university departments and range from senior academics with substantial programme management responsibilities to early-career academics with varied teaching loads. They are taught in small groups with class sizes ranging from 10 (on optional PGCert modules) to 24 (in Diploma block mode sessions), allowing an opportunity for dialogue and rapport building between peers and tutors.

Although by no means homogeneous, the predominantly STEMM-focussed nature of our cohort affords us the opportunity to open the horizons of academics whose practice may tend towards teacher-centred approaches (Lindblom-Ylänne, Trigwell, Nevgi, & Ashwin, 2006). Student evaluations, reflection activities and dialogue indicate that they also unanimously appreciate the attention we devote to developing their academic literacy around reading and writing in a social science. In this way, the programme creates great potential for personal and disciplinary points of view and habits of mind to be compared and critically examined. By scaffolding participants’ experiences of crossing paradigms between science and social science, we attempt to transform their thinking and augment their identity. When considering identity here we are guided by Illeris’ (2014) argument that transformative learning gains currency and utility when the target area for transformation is defined as an individual’s identity. Put simply, our aim is to create a network of dispersed expertise, across the institution, of practitioners who identify, and are identified by others, as combining educational expertise with being a disciplinary expert (see Kinchin, Kingsbury, & Buhmann, 2018). As identified by Bush, Rudd, Stevens, Tanner, and Williams (2016) in their study of Science Faculty with Education Specialities in US universities, it is anticipated they will become ‘local change agents’ (Bush et al., 2016, p. 15) with impact on their colleagues’ assessment beliefs and practices, programme-level assessment transformation and discipline-specific research to provide an evidence base for future enhancement.
The network is significant as, despite their disciplinary differences, they draw strength from their collective experience of undergoing a transformation in understanding and identity; the explicitly socially-constructivist-underpinned programme is designed to facilitate this. As identified by van Lankveld, Schoonenboom, Volman, Croiset, and Beishuizen (2017) in their discussion about the positive impact of connectedness on university teacher identity development, in departments where research dominates ‘teachers may therefore be more likely to find like-minded colleagues in other departments’ (p. 334). This concurs with our observations.

Our MEd aims to encourage and equip participants to design and facilitate a more effective, varied, inclusive, transparent and constructively aligned assessment diet, including student-centred feedback approaches (Boud & Falchikov, 2007; Bloxham & Boyd, 2007). We achieve this through: introducing the participants to the theory underpinning assessment and feedback practices; experiential learning, by consciously exposing them to a variety of assessment approaches (see Appendix); and individual or peer/tutor supported reflection on their experiences. This means that regardless of participants’ initial assumptions and beliefs they are required to ‘suck it and see’ and experience those generally new techniques just like their own students would. Based on this experience and reflection they are encouraged to assess the value of these interventions for their contexts.

Each stage of the MEd is designed to contribute to the transformative learning process by catalysing and supporting individual and collective disorientating dilemmas and challenging, through increasingly scholarly dialogue, easier-to-influence points of view and more entrenched habits of mind. Our intention is that over the course of the three-year programme and beyond we facilitate, in Mezirow’s (2009) words, the two major elements of transformative learning … ‘first, critical reflection or critical self-reflection on assumptions … and second, participating fully and freely in dialectical discourse to validate a best reflective judgement’ (p. 94).

Methodology

Oakley (1999) argues that the choice of a research paradigm is an interplay between the questions, the characteristics of the context as well as researchers’ philosophical positions. Our ontological and epistemological beliefs, as well as the nature of the research and our context, align with the interpretive paradigm. Our aim is to gain an understanding of the MEd participants’ lived experiences of being assessed again and what impact being on the programme has on them (i.e. in terms of transforming their frames of reference) and their wider context. We focus on the following questions:

What is the evidence of changes in participants’ assumptions and practices around assessment and feedback?

What is the evidence of transforming assessment more widely?

What are the barriers to sustainable and manageable assessment change at programme, faculty and institutional level?

We draw on several data sources. Since we are both teachers on the programme and it is impossible for the researchers to be detached from the reality they are investigating (Guba & Lincoln, 1994), first we have drawn on our experience of working with our students, including marking their reflective assignments. Second, in order to gain an in-depth understanding of our students’ unique lived experiences, we conducted one-to-one semi-structured interviews. The 56 MEd students who had completed at least one stage of the programme were invited to participate in interviews. Nine volunteers who represented each level of the programme plus graduates were interviewed (please see Table 1 for interviewee profiles). Given that these were our own students and in Foucauldian terms we are aware of the power we exercise over them through the regulatory act of assessing and grading the way they make sense of knowledge in their assignments (Burke, Bennett, Burgess, Gray, & Southgate, 2016), we were very mindful of ethical issues related to power imbalance. To reduce the influence of our dual researcher-assessor roles on both interview responses and assessment processes we did not assess interviewees’ subsequent summative assessments. The data was anonymised and pseudonyms that retain the gender distinction are used throughout. The research gained institutional ethical approval and was conducted in line with BERA guidelines. The transcripts were first coded independently by both researchers; the codes were discussed and compared, then organised into themes. To make the process of thematic analysis more robust we were guided by Braun and Clarke’s (2006) decision-making advice. Therefore, our analysis was theoretically driven, as opposed to inductive, and focused on a detailed account of particular aspects of our data set linked to our research questions (Braun & Clarke, 2006).

Findings and discussion

Owing to our approach, the thematic analysis identified themes closely linked to our research questions. In terms of evidencing a change in our interviewees’ assumptions and practices, themes identified were linked to changes in the understanding of the purpose of assessment and what it might mean to the learners, changes in identity and, as a result, change in practice at a local and wider departmental level. The themes identified in relation to the question about the barriers preventing change focused on pragmatic issues as well as problematic frames of reference of various stakeholders. The discussion below unpacks these themes and is organised around our research questions.

Evidence of changes in participants’ assumptions and practices

Understanding of the purpose of assessment and learner perspectives

Interviewees talked about a shift in their conception of assessment from a way of measuring individual achievement, to creating an integrated opportunity to help learners develop. Brian demonstrates a significant change in his habit of mind:

I suppose with the exposure that I’ve had on the MEd in the three years is to see … it’s not about marking and it’s not necessarily just about feedback. … I see my assessment now as quite a collaboration between me and the course lead and the students doing the pieces of work that they do … So, I see it much more of a way of understanding where we both are, the learner and the teacher, rather than a hurdle that the student needs to, sort of, jump over. (Brian, MEd student)

Traditionally academics tend to think about the time burden that implementation and delivery of new assessment and feedback interventions impose on them. The realisation that students also need ‘the time to reflect’ was one of Ken’s ‘big learnings’. This shift was facilitated by an opportunity (and challenge) to become a student again. While this was valued, it surfaced contradictions in interviewees’ points of view as teachers and their habits of mind as learners, as Brad reveals: ‘But with peer feedback, it’s funny coz I always try to promote it with my students and when I have to do it myself, “can I just get it from the tutor.”’ (Brad, PGDip student)

This resonates with Burke and Crozier’s (2012) observations that ‘post-structural insight suggests that students (and teachers) might have different and contradictory responses and emotions at play’ (p. 8). Interviewees often talked about the emotions that experiencing being on the receiving end of assessment and feedback provoked. Karla recalled peer feedback being ‘quite tough and a bit destructive’ reflecting ‘so maybe in the same way I was kind of upset and maybe my feedback upset people’. As teachers, we are tempted to design out discomfort, but we would argue this emotional disruption may be the catalyst for changing points of view and even habits of mind. Kinchin et al. (2017) acknowledge the need for teaching teams to take into account the management of learner discomfort when designing such a Master’s in Education. Indeed, they have inspired us to reflect on whether we frame learner emotion negatively when actually we believe that the surfacing of emotions is a positive outcome and should be made more explicit to learners. In addition, our findings suggest a need to balance offering a variety of ways to engage learners with feedback against repeating those approaches over a period of time to enable familiarity, confidence and impact to grow.

Changes in practice

Like Cilliers and Herman’s (2010), our programme adopts a ‘practice what you preach’ approach. Analysis of data revealed a strong impact of the programme in terms of interviewees adopting techniques that we modelled in their local practice. This included a reported increase in formative assessment generally and specifically the integration of technology (e.g. Mentimeter, Padlet), explicit development of their learners’ academic literacy, team-based learning (TBL), enhanced use of assessment criteria and marking schemes, feedback as dialogue and peer assessment. It was also recognised by interviewees that principles underpinning good assessment were strongly and explicitly embedded within the programme and, as a result, most demonstrated a theoretically underpinned rationale for the assessment-related changes they made. At a basic level, exposure to the theory behind effective practice made interviewees more aware of where connections between their practice and theoretical understanding had developed, as illustrated by Richard and his newfound appreciation for explicit constructive alignment: ‘I think there’s a much closer link in my mind about the signposting you give to people about learning objectives, that kind of thing up front, linking that to the assessment.’ (Richard, graduate)

As Jennifer described, the change she recognised in herself was useful for justifying and explaining changes in practice to colleagues:

[Before] I didn’t necessarily have a justification, or I couldn’t necessarily go through and find a relevant theory that would support what I was doing. Now I have all that at my fingertips. So I can say something crazy and I don’t have it tough it up. I can actually justify it. I can discuss it with my colleague more easily. (Jennifer, graduate)

Furthermore, we were pleasantly surprised to find that approaches used to develop our learners’ academic literacy and ability to develop narrative arguments in education were crossing paradigms and being accepted in different disciplinary contexts, as Ken explained:

even talking to my students with some of the projects that they do, I find myself talking about narratives and arguments now in that context too. (Ken, MEd student)

This cycle of exposure and reflection seems especially important as we sometimes see STEMM academics reject an idea that is not immediately applicable to their context. Reflection allowed interviewees to step back and consider the ideas and rationale underlying practical implementation of our interventions and how those could be adopted in their contexts. One example of this is how our approach of using a marking form which asks students to specify what they would like to receive feedback on was repurposed by Jennifer:

rather than in the assignment say, ‘what do you want feedback on in this assignment?’, I ask the students at the start of the course what they’re good at and what they’re gonna need more help with. So I started that conversation sort of divorced from any assignments to get more of a sense of each student. I think that then helps me to tailor their feedback … I recognised that that form is important but … I couldn’t make it work for me and I know that with my students they all want to know ‘have I got a certain grade?’. They don’t want to know about a particular element. So it’s finding a different way to ask that same question coz I think that question is still important. (Jennifer, graduate)

Jennifer, therefore, recognised the value of our technique but adapted it to fit the habits of mind she identified in her students. Taking our ideas and developing them further was common, particularly for MEd stage students, who had designed an essay-a-thon using the ongoing feedback discussions that we model, employed technology to document formative assessment of group work and adapted TBL.

Interviewees’ responses revealed that they tended to attribute their change in habits of mind to a combination of factors but principally re-experiencing what being assessed as a learner felt like, receiving feedback, reflecting and identifying a significant opportunity to experiment with new ideas:

I think when you hear these things and the name Team-based Learning, to me it sounds a bit wishy-washy. But when you start doing it, because I was redesigning a course at the time, and when I was doing that, that started off during my PGCert, and kind of carried through. (Ken, MEd student)

The combination of discussion and experimentation appeared to be particularly powerful, but it takes time to learn from and recognise the value of what Mezirow (2009) describes as ‘dialectical discourse to validate a best reflective judgement’ (p. 94). This was illustrated by Jennifer:

I didn’t realize how useful it was just sitting and listening to everybody else’s ideas and listening to what they were stuck with and what solutions they were coming up with so I think that’s probably the bit I didn’t get before and once I’ve done it once or twice I thought that’s really useful. (Jennifer, graduate)

Changes to identity

To claim to have an impact on someone’s identity is bold but our data did suggest a change in the way our interviewees saw themselves, as well as how others saw them. Angie talked about valuing the dual identity as a scientist with social science expertise described earlier: ‘[I]t was a completely new area of learning for me because I’m a hard scientist and I think I have a very, very strong grounding in qualitative research as a result of the whole experience.’ (Angie, graduate)

Karla could pinpoint when the MEd enabled her to become aware of a transformation in identity but also indicated the potentially destabilising effect of this:

when I compared mine [my assignment] to the others it was completely different and at that point I started to realise I’m more on your side than on the other side … it was good because I expected to still be on the scientist side, so it was a bit like ‘hold on a moment, who are you?’ (Karla, MEd student)

Jennifer talked about the transition from being considered secretive to becoming recognised institutionally as an award-winning teaching role model:

So when I first started … [I]t was almost as if I was doing some secret thing that no one could know about and it wasn’t like that at all … I just didn’t have an opportunity to tell people so now I get out more and I can be more open about it. (Jennifer, graduate)

This personal story reflects wider changes to points of view regarding the relative prestige of teaching across an institution where traditionally research has occupied a significantly higher status. This is largely as a result of a university-wide, well-funded curriculum review and development project which it is hoped will transform habits of mind to effect more embedded and sustainable change.

Evidence of transforming assessment more widely

By changing their own practice, interviewees reported having a further impact on colleagues:

It’s brilliant. You have an idea. You do all the hard work to start with. And then, by doing it successfully, you convince other course directors that it’s a good thing. … And that works really well because then you give scientists evidence that it works and even better if you do it with their students. (Lexy, MEd student)

Therefore, the ‘lead by example’ approach also worked within interviewees’ departments. Successful interventions generated evidence of effectiveness that appealed to their colleagues, challenged existing frames of reference and inspired change at the departmental/programme level. This was undoubtedly helped by the catalyst of curriculum review and re-design.

The increase in our interviewees’ self-efficacy, also observed by Postareff, Lindblom-Ylänne, and Nevgi (2007), was evident in their ability to offer support to colleagues who were open to change but lacked confidence:

He has all the ideas, but he just didn’t have the confidence … and he felt really liberated once he’d done it … He said, ‘we don’t need all of these small assessments’. … and I think we managed to get it back to three really important, deeper assessments. (Brian, MEd student)

Barriers to sustainable and manageable assessment change

Pragmatic resistance – lack of time and scalability

The most commonly mentioned barriers to change related to aspects of time and additional workload that suggested interventions impose, what Deneen and Boud (2014) refer to as pragmatic resistance. From interviewees’ own experience but also from observing us modelling good practices there was recognition from Angie, and others, that impactful feedback ‘takes willingness, it takes engagement from both sides and all of these require time.’ Similarly, better approaches to assessment or feedback were often associated with issues related to scalability, for example increases in marking load which, given academics’ competing priorities, can result in resistance. However, having been persuaded by her experiences of receiving feedback, Lexy had developed a time-efficient approach, combining dictation software and dialogic feedback for her own students. In doing so she had inadvertently found the freedom to challenge her deeper-seated habits of mind:

Having cut the time typing makes, it then gives you the freedom to actually say, right, fine, I can sit down and I can actually think what I’m trying to tell them. And having to face the student, typing a proper summary of how they’ve done, you actually have to think why didn’t you give them a merit when they only get the pass. (Lexy, MEd student)

Problematic frames of reference of participants, colleagues and students

Many of the barriers interviewees talked about related to problematic frames of reference in themselves, colleagues and students. A problematic frame of reference amongst our students could be attributed to interpretivist vs positivist ‘paradigm wars’:

I found it really hard to find basically solid knowledge in the field, so I found a paper that says something and then I would find another paper that says something else and none of them have virtually any evidence behind them … I would want to see something more quantitative which would help with discriminating which one was more trustworthy. (Kostas, PGCert graduate)

As this quotation suggests, Kostas’ resistance to changing his frames of reference was linked to his inability to comprehend and relate to the rules governing what counts as evidence in a new paradigm. When new rules are rejected, even if points of view are challenged by exposure to instinctively appealing assessment techniques, habits of mind are not sufficiently changed for the desired transformation to happen. Kostas’ persisting belief in the superiority of quantitative evidence and his current inability to recognise qualitative data as valid and reliable thus far limits the programme’s impact on him and his practice. From our observations and interactions with students, we notice that problematic frames of reference tend to transform by the end of the Diploma year when the participants have a better understanding of what constitutes evidence in education.

Traditionalism, and more specifically a belief that things had always been done that way for a very valid, if tacit, reason was cited as a problematic frame of reference that took courage to overcome: ‘Most people who are passionate about education, they just don’t feel they can speak up and the safest thing to do is to do what’s come before.’ (Brian, MEd student)

An enduring institutional belief that research is a more prestigious activity than teaching (van Lankveld et al., 2017) appeared to result in a habit of mind that dismissed teaching as a trivial endeavour, rather than a complex process requiring effort to develop. This is exemplified by Ken’s quote relating to his success in teaching: ‘it was never said directly but it was “because Ken’s friendly, young, and he makes it fun.” It wasn’t “because Ken’s using different techniques.”’ (Ken, MEd student)

This problematic frame of reference of Ken’s colleagues creates a barrier to wider change as his success in applying new techniques to transform his own practice is not recognised or adopted but attributed only to his personal characteristics.

The issue with external examiners’ beliefs limiting our interviewees’ creativity with assessment design emerged as an important theme. Some described proposing interventions considered to be in line with good assessment practice, such as continuous formative assessment or assessing skills and processes as well as knowledge (Gibbs & Simpson, 2004; Nicol & Macfarlane-Dick, 2006); however, this would often be met with disapproval:

For me, it’s more about skills … And all five external examiners said, ‘this is an MSc in X’, they have to know x facts and therefore you should keep the short-answer question … And I think this is not … how it’s done. And then they said, maybe you can have six, four out of a choice of six. And then I said, how does then that test their breadth of knowledge if you then give them the choice? So then we agreed to keep that. (Lexy, MEd student)

While external examiners’ frames of reference could present barriers, those participants with transformed frames of reference appeared equipped with a knowledge-base that enabled them to question recommendations and negotiate. Conversely, examples exist across the sector of external examiners encouraging academics to be less conservative in their persisting preference for exams, only to fail in changing even points of view. Kinchin et al. (2018) highlight the limitations of existing external examiner involvement due to over-emphasis on the mechanics of assessment processes and constraints of academic calendar timings. The possibilities they present for dialogue around better linking of teaching and research offer exciting opportunities for collective transformation of frames of reference on the part of external examiners, teaching staff and students.

A final barrier related to students’ problematic frames of reference and beliefs about assessment. In a sense, we identified a meeting of habits of mind when competitive students find themselves together in an environment that they perceive to reward competition. This means even when an assessment is intended to be formative and collaborative, as Brian identified: ‘some of the students, I think, can’t get out of the mode of feeling like they’re on a course that’s extremely competitive.’ Interestingly similar problematic habits of mind were noted by some interviewees when speaking about fellow MEd students:

I think it’s completely useless to be competitive because we already survived and we are here so what’s the point saying ‘I have 75. You have 74. I am better than you!’ I don’t care. And this part is what I didn’t like over the Diploma. (Karla, MEd student)

As Karla explains, the competitive nature of the institutional environment participants are immersed in also influenced the realms of their learning. Hence, what the interviewees criticised in their own students is also a pattern in their own behaviour, although one that they might not yet recognise.

Students were reported as having particularly strong habits of mind about what made assessment reliable and valid and this resulted in negative points of view about peer assessment, as our interviewees had themselves voiced. Interviewees reported varying degrees of success in influencing their students’ habits of mind as, in Richard’s account, this felt like a complex and risky decision:

I’d wanted to include a peer contribution to the overall mark and that’s a difficult thing to try and include actually because you have the … pedagogical reasoning that people should, if they’re in a team in the TBL stuff you need to be able to tell the other team mates that they aren’t pulling their weight, but equally you have a slightly conflicting view that, or your traditional view, that your mark can’t be tied to somebody else’s performance or somebody else’s view who isn’t an academic member of staff. (Richard, graduate)

We hope that this presentation of our key findings, alongside discussion that frames their interpretation through Mezirow’s transformative learning concepts, offers additional insight for considering the possibilities and challenges for transforming assessment and feedback practices in HE. The dual identity of our students as both teaching staff and MEd students highlights the complex, shifting and contradictory emotions and positionings that assessing and being assessed provokes and allows frames of reference to be critically examined.

We recognise that our dual identity as teachers and researchers in this context presents both strengths and limitations. In terms of strengths, we have great insight (through observations, conversations, evaluations, supervision and marking) into our students’ experiences of our programme and their attempts to transfer their learning to transform practice. In a sense, this wider contextual understanding reduces the potential limitation of a small number of interviewees, as interviews are not the only source of insight but merely provide illuminating depth. That said, we also recognise the potential for our role as teachers to affect what interviewees are willing to share. The size of this data set did not enable us to investigate whether catalysts for disorientating dilemmas were more likely to be awareness of new theory, studentship and experiencing practice being modelled, or participating in practice, and this could be an interesting aspect for further exploration with a larger number of programme graduates.

Conclusions and implications

In this article, we have argued that the key to sustainable and manageable change to assessment and feedback practice at programme, department, and/or institutional level is to change teaching staff’s assessment-related frames of reference through the process of transformative learning. Inspired by Mezirow, we combine critical reflection on assumptions and dialectical discourse to support colleagues through the disorientating dilemmas of studentship and learning to use theory and evidence from the social science paradigm to inform and justify their assessment and feedback choices. In doing so we can transform their identity so that they see themselves and are seen by others as educational experts and change agents within their disciplinary community of staff and students. We hope to have illustrated the extent to which we achieve this through our case study, in which we outline where the participants’ frames of reference around the purpose and process of assessment have been challenged and often transformed. Furthermore, we can see that the assessment techniques MEd participants experience are being cascaded to their colleagues; this suggests a step towards institutional change. While barriers still occur, those interviewees who have transformed their frames of reference and augmented their identities appear equipped to address those barriers. Based on our experience and analysis, we recommend that the emotional impact of the disorientating dilemma should not be underestimated but carefully managed. Given the evidence identified in this study of personal transformation, and of the potential for our disciplinary experts with educational expertise to transform their colleagues’ and students’ frames of reference, we now recognise a need to better support them in this process. To do this we recommend giving attention to fostering supportive, interdisciplinary networks of people with educational expertise, including meaningful engagement with external examiners.

Finally, from our survey of comparable institutions’ programme specifications, we conclude that offering just the PGCert (or PGCAP) is the predominant model within the UK. While, as Postareff et al. (2007) report, those one-year interventions do have an impact on self-efficacy and practice, we would recommend that for participants to undergo meaningful transformation they need to further engage in critiquing educational theories and interrogating social science evidence through active enquiry. As we hope to have illustrated, these latter stages of the full MEd programme help participants to transform habits of mind in addition to points of view. Hence, we recommend that there is value in extending structured development opportunities beyond the PGCert and widening the offer of optional MEd programmes to university teachers. This may sound counter-cultural in a sector where equivalent programmes, particularly at research-intensive universities, are being withdrawn, but we believe the non-compulsory nature of this programme, combined with a promotion system that increasingly values teaching activity, is key to its successful recruitment and outcomes. Despite this being a single case study, we hope that the evidence presented of the transformative potential of our extended experiential, reflective and evidence-based approach will be useful for readers involved in assessment change and, more particularly, in the design and delivery of MEd programmes at other institutions. Our approach might also be of interest to HE managers working with educational developers to implement change through inspiring transformation.

Acknowledgments

The authors wish to thank participants, particularly interviewees, for their time and insight and the Centre for Higher Education Research and Scholarship, Imperial College London, particularly Professor Martyn Kingsbury, for their guidance.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Bloxham, S., & Boyd, P. (2007). Developing effective assessment in higher education: A practical guide. Maidenhead: Open University Press.

- Boud, D., & Falchikov, N. (2007). Introduction: Assessment for the longer term. In D. Boud & N. Falchikov (Eds.), Rethinking assessment for higher education: Learning for the longer term (pp. 4–13). London: Routledge.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3, 77–101. doi:10.1191/1478088706qp063oa

- Burke, P.J., Bennett, A., Burgess, C., Gray, K., & Southgate, E. (2016). Capability, belonging and equity in higher education: Developing inclusive approaches. Report submitted to the National Centre for Student Equity in Higher Education (NCSEHE), Curtin University. Perth.

- Burke, P.J., & Crozier, G. (2012). Teaching inclusively: Changing pedagogical spaces. Higher Education Academy. Retrieved from https://www.newcastle.edu.au/__data/assets/pdf_file/0004/305968/UN001_Teaching_Inclusively_Resource_Pack_Online.pdf

- Bush, S.D., Rudd, J.A., II, Stevens, M.T., Tanner, K.D., & Williams, K.S. (2016). Fostering change from within: Influencing teaching practices of departmental colleagues by science faculty with education specialties. PLoS ONE, 11(3). doi:10.1371/journal.pone.0150914

- Carroll, J. (2008). Assessment issues for international students and for teachers of international students. HEA. Retrieved from https://www.heacademy.ac.uk/system/files/carroll.pdf

- Cilliers, F.J., & Herman, N. (2010). Impact of an educational development programme on teaching practice of academics at a Research-Intensive University. International Journal for Academic Development, 15(3), 253–267. doi:10.1080/1360144X.2010.497698

- Deneen, C., & Boud, D. (2014). Patterns of resistance in managing assessment change. Assessment & Evaluation in Higher Education, 39(5), 577–591. doi:10.1080/02602938.2013.859654

- Ferrell, G. (2012). A view of the assessment and feedback landscape: Baseline analysis of policy and practice from the JISC assessment and feedback programme. JISC. Retrieved from https://www.heacademy.ac.uk/knowledge-hub/marked-improvement

- Gibbs, G., & Coffey, M. (2004). The impact of training of university teachers on their teaching skills. Active Learning in Higher Education, 5(1), 87–100. doi:10.1177/1469787404040463

- Gibbs, G., & Simpson, C. (2004). Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education, 1, 3–31.

- Goodyear, V.A., Casey, A., & Kirk, D. (2017). Practice architectures and sustainable curriculum renewal. Journal of Curriculum Studies, 49(2), 235–254. doi:10.1080/00220272.2016.1149223

- Guba, E.G., & Lincoln, Y.S. (1994). Competing paradigms in qualitative research. In N.K. Denzin & Y.S. Lincoln (Eds.), Handbook of qualitative research (pp. 105–117). Thousand Oaks, CA: Sage.

- Iannone, P., & Simpson, A. (2016). University students’ perceptions of summative assessment: The role of context. Journal of Further and Higher Education, 41(6), 785–801.

- Illeris, K. (2014). Transformative learning and identity. Journal of Transformative Education, 12(2), 148–163. doi:10.1177/1541344614548423

- Kinchin, I., Hosein, A., Medland, E., Lygo-Baker, S., Warburton, S., Gash, D., … Usherwood, S. (2017). Mapping the development of a new MA programme in higher education: Comparing privately held perceptions of a public endeavour. Journal of Further and Higher Education, 41(2), 155–171. doi:10.1080/0309877X.2015.1070398

- Kinchin, I.M., Kingsbury, M., & Buhmann, S.Y. (2018). Research as pedagogy in academic development. In E. Medland, R. Watermeyer, A. Hosein, I.M. Kinchin, & S. Lygo-Baker (Eds.), Pedagogical peculiarities: Conversations at the edge of University teaching and learning (pp. 49–67). Rotterdam: Brill/Sense.

- Knight, P. (2006). The effects of post-graduate certificates: A report to the project sponsors and partners. Retrieved from http://www.inspire.anglia.ac.uk/e107_files/downloads/knight_2006_epgc_report_september_2006.pdf

- Lindblom-Ylänne, S., Trigwell, K., Nevgi, A., & Ashwin, P. (2006). How approaches to teaching are affected by discipline and teaching. Studies in Higher Education, 31(3), 285–298. doi:10.1080/03075070600680539

- Mezirow, J. (2009). An overview on transformative learning. In K. Illeris (Ed.), Contemporary theories of learning: Learning theorists … in their own words (pp. 90–105). London: Routledge.

- Neumann, R., Parry, S., & Becher, T. (2002). Teaching and learning in their disciplinary contexts: A conceptual analysis. Studies in Higher Education, 27(4).

- Nicol, D.J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218. doi:10.1080/03075070600572090

- Oakley, A. (1999). Paradigm wars: Some thoughts on a personal and public trajectory. International Journal of Social Research Methodology, 2(3), 247–254. [Online]. Retrieved from http://www.soc.uoc.gr/socmedia/papageo/paradigm%20wars-why%20researchers%20choose%20their%20methods.pdf

- Postareff, L., Lindblom-Ylänne, S., & Nevgi, A. (2007). The effect of pedagogical training on teaching in higher education. Teaching and Teacher Education, 23, 557–571. doi:10.1016/j.tate.2006.11.013

- Stains, M., Harshman, J., Barker, M.K., Chasteen, S.V., Cole, R., DeChenne-Peters, S.E., … Young, A.M. (2018). Anatomy of STEM teaching in North American universities. Science, 359(6383), 1468–1470. doi:10.1126/science.aap8892

- van Lankveld, T., Schoonenboom, J., Volman, M., Croiset, G., & Beishuizen, J. (2017). Developing a teacher identity in the university context: A systematic review of the literature. Higher Education Research & Development, 36(2), 325–342. doi:10.1080/07294360.2016.1208154

- Waterfield, J., & West, B. (2006). Inclusive assessment in higher education: A resource for change. University of Plymouth. Retrieved from https://www.plymouth.ac.uk/uploads/production/document/path/3/3026/Space_toolkit.pdf