Abstract

A growing body of research has recognized the importance of students’ having active roles in feedback processes. Feedback literacy refers to students’ understandings of and participation in feedback processes, and research on students’ feedback literacy has so far focused on higher education; secondary schools have not received attention. This case study investigates secondary students’ feedback literacy and its development in the context of formative peer assessment. From various data sources, three categories of students’ feedback literacy were identified, and criteria for the levels of literacy in each category were created. The criteria were used in the coding of seventh- and eighth-grade students’ skills. The results show that students were able to develop their feedback literacy skills. Thus, secondary school students should be introduced to feedback literacy via, for example, formative peer assessment.

Introduction

Feedback can significantly enhance or inhibit learning (Hattie & Timperley, 2007), and it has therefore generated great interest and considerable research. Ideally, feedback helps students achieve their goals (Hattie & Timperley, 2007). The studies on feedback have mainly concentrated on the features of effective feedback, thus implying that the responsibility for a successful feedback process lies with the provider of feedback, who must consider its content, tone, and timing. Similar attention to the quality of feedback can be seen in research on peer assessment. Only recently has receiving feedback generated steady attention from scholars (Boud & Molloy, 2013; Delva et al., 2013; Jonsson, 2013; McLean et al., 2015; Sutton, 2012; Wiliam, 2012; Winstone et al., 2017). Carless and Boud (2018) introduced a framework of feedback literacy describing the competences necessary to participate in feedback processes; it has generated considerable interest and encouraged further research. Studies have investigated students of higher education (Han & Xu, 2019a, 2019b; Hey-Cunningham et al., 2020; Molloy et al., 2019) and academics (Gravett et al., 2020), but so far no study has investigated the feedback literacy of a much larger group: secondary students. Exploring the feedback literacy of secondary school students is the first goal of this study.

Carless and Boud (2018) expressed the need for research on the development of students’ feedback literacy combined with intervention. This article is such a study, and its second goal is to investigate the development of lower secondary school students’ feedback literacy when formative peer assessment is repeatedly used in students’ physics and chemistry lessons. Peer assessment is used not only as a tool to uncover students’ perceptions about assessment and feedback, but also as an intervention that provides students with the opportunities to reflect and practice feedback processes and hence to develop their feedback literacy.

Feedback literacy

Sutton (2012) conceptualized feedback literacy as “the ability to read, interpret and use written feedback” (p. 31). Carless and Boud (2018) built on his work and defined feedback literacy as “the understandings, capacities and dispositions needed to make sense of information and use it to enhance work or learning strategies” (p. 1316). Feedback literacy highlights the need for students’ own activity in feedback processes. Feedback from teachers, no matter how useful, does not automatically benefit the receiver. The feedback needs to be accepted, processed, and acted on by the receiver. Feedback literacy denotes these competences.

The framework of Carless and Boud (2018) presents four features of students’ feedback literacy: appreciating feedback, making judgments, managing affect, and taking action. Appreciating feedback includes understanding that feedback is for improvement, understanding that the recipient must have an active role in the feedback process, appreciating different forms and sources of feedback, and the ability to use technology in feedback processes. Making judgments comprises capacities of judging one’s own and others’ work, participating productively in peer feedback processes, and developing self-evaluative skills. Managing affect includes maintaining emotional balance, avoiding defensiveness, having dialogue about feedback, and striving for continuous improvement. These three features (appreciating feedback, making judgments, and managing affect) increase students’ possibilities for the fourth feature, which is taking action. Taking action comprises understanding that using feedback requires the recipient’s activity and developing strategies for acting on feedback.

The work of Carless and Boud (2018) has prompted further research. Molloy et al. (2019) explored higher education students’ feedback literacy from the students’ perspective. Their results present seven groups containing altogether 31 categories of knowledge, capabilities, and skills of feedback literacy. Hey-Cunningham et al. (2020) designed a pilot of blended learning for postgraduate research students in academic writing that contained principles, exemplars, self-assessment, and peer assessment, and noticed that it improved students’ feedback literacy. In particular, students felt that they learned skills and gained experience about how to proceed with the received feedback. Han and Xu (2019b) explored the development of higher education students’ feedback literacy and used teachers’ feedback on peer feedback as an intervention. The researchers reported a growth in feedback literacy, especially in students’ ability to assess their peers’ work, that is, judging the quality and providing feedback for learning. They also reported considerable individual variation in students’ development. Han and Xu (2019a) investigated higher education students’ feedback literacy and its impact on engagement in the context of teachers’ written corrective feedback. They found that individual students’ skills of feedback literacy were unequally developed, which limited students’ engagement with feedback. The researchers noticed not only that students’ feedback literacy was dynamic and developed in the feedback processes, but also that the development appeared rather randomly.

Peer assessment

Building on previous research (Carless & Boud, 2018; Sadler, 1989; Topping, 2013), formative peer assessment is defined as a process in which students evaluate or are evaluated by their peers with the intention that both the assessors and assessees enhance their work or learning strategies in the process. When discussing peer assessment, the concept of feedback must be understood broadly, as both assessing and being assessed provide opportunities for receiving feedback. Carless and Boud (2018) defined feedback as “a process through which learners make sense of information from various sources and use it to enhance their work or learning strategies” (p. 1315). In peer assessment, the potential sources of information are many, including assessment criteria, assessed work, received feedback, interaction with classmates, and coaching from the teacher.

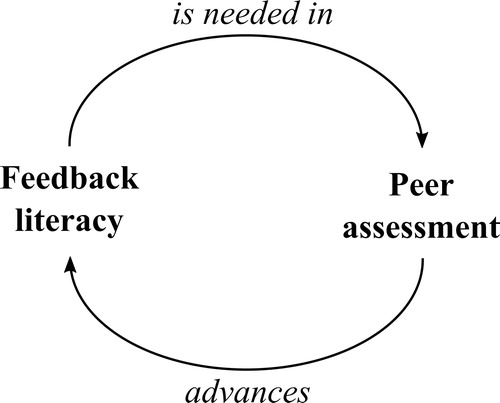

Relationship of peer assessment and feedback literacy

Peer assessment and feedback literacy are inter-related (). Participating productively in peer assessment requires feedback literacy (Han & Xu, 2019b), and peer assessment provides opportunities to advance it. In the best case, feedback literacy and peer assessment support each other, as positive experiences of peer assessment predict teachers’ frequent use of peer assessment (Panadero & Brown, 2017). Students’ advancement in feedback literacy makes peer assessment a more applicable tool; therefore, feedback-literate students are more likely to get further opportunities to develop their feedback literacy with peer assessment. Researchers have a common understanding that peer assessment requires training (Gielen et al., 2010; Hovardas et al., 2014; Lu & Law, 2012; Topping, 2009; van Zundert et al., 2010), which implies that a certain level of feedback literacy is needed to enable a productive peer assessment process. If students are not feedback-literate enough to benefit from peer assessment, the practice is more likely to be experienced as dysfunctional and rejected.

Prior research on peer assessment does not generally mention feedback literacy but recognizes its features. The next sections introduce research that presents how each of the four features of feedback literacy (Carless & Boud, 2018) is needed in peer assessment, as well as research that shows that peer assessment has the potential to advance each feature of feedback literacy.

Appreciating feedback

When students understand that feedback is a learning tool, they are more likely to benefit from peer assessment (Ketonen et al., 2020). Incomprehension in this area may result in unproductive practices, such as the provision of superficial positive feedback (Tasker & Herrenkohl, 2016) or friendship marking (Foley, 2013; Panadero et al., 2013), which occurs when students let social relationships affect the feedback they provide. Peer assessment can help tackle these issues because it is a tool for discussing the different aims of summative and formative assessment (Davis et al., 2007). Tasker and Herrenkohl (2016) reported that peer assessment provides a context for training and reflection that advances students’ appreciation of critical feedback and leads them from providing positive, superficial peer feedback to providing guidance. Another essential component of feedback literacy in peer assessment is appreciating feedback from different sources. Students tend to undervalue and disregard feedback from their peers (Foley, 2013). Peer assessment can help with this issue as well because it advances students’ appreciation of peers as a source of feedback (Crane & Winterbottom, 2008).

Making judgments

Peer assessment requires the skills of judging received feedback and judging others’ work. Concerning judgments, Carless and Boud’s (2018) framework for feedback literacy concentrates on judging one’s own and others’ work, and Molloy et al. (2019) further included the aspect of processing received feedback. The ability to critically interpret feedback is an important skill that enhances the benefits of peer assessment (To & Panadero, 2019). Guiding students to be active in the assessee’s role by evaluating received feedback improves their motivation to engage in peer assessment (Minjeong, 2009). Students need to understand the assessment criteria in order to judge their peers’ work (Cartney, 2010; Foley, 2013; Panadero et al., 2013). Moreover, a shared understanding of the criteria increases students’ comfort with peer assessment (Panadero et al., 2013). Peer assessment can be used to train students to deepen their understanding of the criteria (Anker-Hansen & Andrée, 2019; Black & Wiliam, 2018) and develop the ability to judge others’ work (Han & Xu, 2019b).

Managing affect

Peer feedback may raise negative emotions (Cartney, 2010; Panadero, 2016). Assessees can be defensive against corrective peer feedback (Anker-Hansen & Andrée, 2019; Ketonen et al., 2020; Tasker & Herrenkohl, 2016), and assessors may worry about assessees’ negative emotions (Cartney, 2010; Davis et al., 2007). However, because of peer assessment’s interactive nature, affective issues cannot be avoided (Panadero, 2016). Managing affect is hence necessary, and for productive peer assessment, students need support with the emotional aspects related to both the assessors’ and assessees’ roles (Cartney, 2010). Fortunately, peer assessment improves psychological safety within a group (van Gennip et al., 2010), meaning that group members feel safer sharing their opinions and asking for help. This promotes elicitation of suggestions from peers and discussions about them, which relate to managing affect in Carless and Boud’s (2018) framework.

Taking action

Studies have noted students’ reluctance to revise their work according to peer feedback (Anker-Hansen & Andrée, 2019; Tsivitanidou et al., 2011, 2012). As theorized by Carless and Boud (2018), this reluctance is the result of insufficient feedback literacy, such as an insufficient understanding of assessment criteria (Tsivitanidou et al., 2011) or formative assessment (Ketonen et al., 2020). As explained previously, peer assessment can help address these issues and is generally considered useful in encouraging students to act on feedback (Jonsson, 2013), especially when integrated in a deeper conversation about assessment (Cartney, 2010).

Research questions

Feedback literacy seems an inherent requirement of productive peer assessment, but it is little researched at the secondary level. In order to investigate secondary students’ feedback literacy and its development, the following research questions were addressed:

Research Question 1: What kind of skills of feedback literacy do students have in the context of formative peer assessment?

Research Question 2: How does students’ feedback literacy develop during one year of using peer assessment repeatedly?

Method

Participants

The study was carried out in an ordinary urban school in Finland. The intervention was conducted in cooperation with an experienced subject teacher and her two science classes (15 and 16 students, respectively); the study started at the beginning of the students’ Grade 7 year and lasted until the middle of their Grade 8 year. At the beginning of the intervention, the students did not know each other well, but most had at least one friend in the class. In Finland, students study general science in Grades 1–6 with a class teacher and begin physics and chemistry in Grade 7 with a subject teacher. This transition into more specialized learning seemed like an ideal moment to introduce a new practice. Peer assessment is not yet a well-established practice in Finland (Atjonen et al., 2019), and when asked, approximately half the students had experiences with peer assessment, though none had used it regularly.

The teacher was asked to join the study because of her well-organized, thoughtful working style, which enabled the intervention’s careful co-planning, and for her considerate but not overly individual teaching style. The study aimed at understanding and explaining the phenomena of peer assessment and feedback literacy instead of making broad generalizations, and the number of participants was kept small so that the researchers could get to know the students and gather rich data. Two students chose not to participate in the study; the total number of participants was 29. Twenty-two students participated in all parts of the first peer assessment, and 15 of those 22 participated in all parts of the second peer assessment, which allowed the researcher to track their development. Two of these 15 students missed three or more of the five peer assessments that were arranged between the two abovementioned peer assessments, which diluted the intervention, and they were therefore left out of the study. Altogether, 13 students provided a sufficient dataset, and they were included in the analysis of the study.
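The participant flow described above can be checked with a few lines of arithmetic. The sketch below is only an illustration that restates the figures reported in the text; the variable names are our own and are not part of the study.

```python
# Participant flow, using only the counts reported in the text.
# Variable names are illustrative assumptions, not the authors' terminology.
class_sizes = [15, 16]        # the two science classes
declined = 2                  # students who chose not to participate

enrolled = sum(class_sizes)
participants = enrolled - declined

completed_pa1 = 22            # took part in all parts of the first peer assessment
completed_both = 15           # of those 22, also took part in all parts of the second
excluded_low_exposure = 2     # missed three or more of the five intermediate peer assessments

analysed = completed_both - excluded_low_exposure

print(participants, analysed)  # prints: 29 13
```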

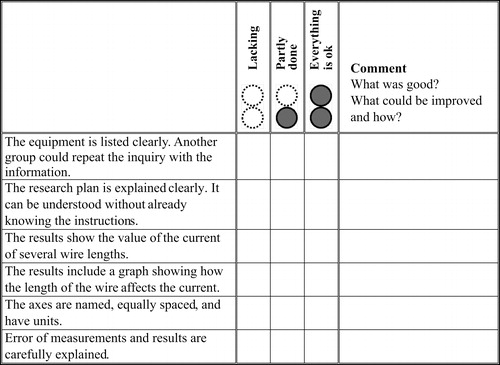

Procedure

The study examined students in two similar peer assessments. The first (PA1) was conducted during the fall semester of Grade 7, and the second (PA2) was conducted a year later. In PA1 and PA2, the students conducted and reported on a physics inquiry. The topics (determining the speed of an object and determining the resistance of a wire) were different due to the curricula, but the difficulty of the tasks, the form of the lab reports, and the assessment criteria were similar. The students conducted the inquiry in groups but created the reports individually. After the reports were finished, they were assessed by their peers. The researcher and the teacher planned the pairings so that students were not partnered with someone they conducted the inquiry with and with sensitivity to social issues, such as pairing vulnerable students with considerate classmates. The students were provided with premade criteria in which each criterion was assessed with a 3-point Likert scale and optional written comments (). After the peer assessment, the reports and feedback were returned to the students, and they had time to improve their work before returning it to the teacher for summative assessment.

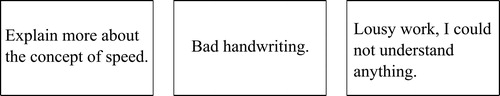

In addition to these two peer assessments, training sessions and several other peer assessments were arranged. Before PA1, the students had six training sessions, and they practiced peer assessment once. Between PA1 and PA2, they had five more peer assessments and one training session. All parts of the interventions are presented in Table 1. The training sessions and peer assessments were embedded in the curricula and consisted of class discussions led by the researcher or the teacher, both of whom used cards displaying examples of feedback (Figure 3), principles of peer assessment, written tasks (e.g., creating or testing assessment criteria), and actual peer assessments. The training focused on issues that previous research noted as important for successful peer assessment, specifically understanding the idea of formative assessment, understanding the qualities of useful feedback, internalizing assessment criteria, comparing the criteria with the work, social rules, and practicing actual peer assessment. The key message was that peer assessment is for helping others learn and receiving help from others.

Figure 3. The feedback example cards discussed in one training session. The class agreed that the first critique was worth considering, whereas the third was unhelpful, offensive, and not worth keeping.

Table 1. Interventions of the study.

The students remained focused during the class discussions and seemed to find the topics interesting. The intention of the discussions was not to find “the right answer,” but rather to encourage the students to share their thoughts. The training was flexible, as the intent was to respond to the students’ needs. For example, when peer assessment was first practiced, the students struggled to deal with critical feedback; the issue was therefore included in the training and discussed before PA1. The teacher and the researcher had prolonged experience in co-teaching at the school, which helped co-planning the interventions and made sharing the responsibility in the classroom natural. During the written tasks and peer assessments, they circulated throughout the classroom and held discussions with students who needed help, encouraging them to use and trust their own judgment. Even though class sessions were planned so that the students were equipped with the necessary information, discussions with individual students and working groups appeared necessary and influential.

Research design and data

A case study design was adopted because of the exploratory nature of the research. The case study approach allowed us to know all the students and gather versatile data. The data include field notes and audio recordings of lessons, student interviews, and students’ written work and feedback. The researcher placed recorders on the tables of each student pair and took field notes on each lesson in which she participated (approximately 60 lessons of 1.5 hr each). Students’ original and revised work and provided feedback were scanned. In addition to being analyzed, these documents were used as a basis of conversation in semi-structured, audio-recorded student interviews soon after PA1 and PA2.

Analysis

The analysis comprised three parts. In the first and second parts, the data were analyzed using a thematic analysis (Braun & Clarke, 2006) to identify and report patterns in the data. A thematic analysis was chosen for its flexibility: it is not bound to any specific theoretical framework, and it works well in analyzing data consisting of various forms. It can be used to find similarities and differences within the data, and it can produce unexpected insights. The patterns can be driven by either data or theory.

The first part of the analysis was driven by theory. The aim was to recognize the features of feedback literacy that appeared in the data during peer assessment and to adjust the features of Carless and Boud’s (2018) framework to the context of formative peer assessment. We looked for data extracts that contained information on the students’ feedback literacy and attached codes to them. The codes described the students’ actions and attitudes relating to the elements of feedback literacy: appreciating feedback, making judgments, managing affect, and acting on feedback. After coding the whole dataset, the codes were gathered into preliminary categories. The distinctiveness of the categories was then examined, and the categories were adjusted and named. The data were recoded, and categories were re-examined and readjusted until no further changes were undertaken. In the end, three categories of feedback literacy skills were discerned.

The goal of the second part of the analysis was to examine the skills that students had in the three categories of feedback literacy. During the first part of the analysis, we noticed students had varying feedback literacy skills, and we defined and described them in an iterative process. The analysis was driven by data, but its scope was limited to the previously identified categories. Within each category, we formed case groups with similar skill levels. Then, we examined and described the groups and returned to the data with revised descriptions. We revised the groups until new rounds did not produce changes to the groups or their descriptions. While remaining sensitive to the theory, we organized the groups from the most basic to the most advanced, thereby creating a criteria-based rubric for feedback literacy skills. In two cases, a skill level was created from the data of only one student, and we enriched that data with interviews with additional students in the spring of Grade 7 (). This enabled us to test our rubric and find more examples to describe the levels.

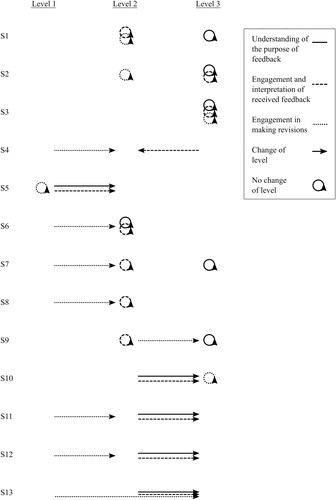

In the third phase of analysis, the category criteria were used in evaluating seventh- and eighth-grade students’ feedback literacy, and multiple data sources were also used. We coded students’ “Understanding of the purpose of feedback” and “Engagement and interpretation of received feedback” skills using interviews as a primary data source, and to ensure reliability, we compared students’ narratives to their own work, their received and provided feedback, and the work that they had assessed. If the findings needed further affirmation, we used field notes from and audio recordings of the lessons. In two cases (Students 4 and 8), even these data did not provide enough information to determine the category level, and these skills were left out of these students’ results (). The “Engagement in making revisions” skill was coded using students’ original and revised work to recognize the changes they had made. Their engagement in making these changes was interpreted using interviews with students, field notes from the lessons, and audio recordings of the lessons.

This study’s authors engaged in peer negotiation to test the levels and categories. Coding students’ skills was a complex task that required precise interpretation and the management of versatile data because students expressed their feedback literacy skills in diverse parts of the interview, and as explained previously, the comments were interpreted with other data. Therefore, negotiation among the authors was considered appropriate to examine the reliability of coding. Laura Ketonen, who was the only one involved in teaching, prepared the data of three regular and two specifically challenging cases for negotiation. The data from each student were gathered into one document, and the data extracts used in defining the levels of the categories were discussed. Pasi Nieminen and Markus Hähkiöniemi explained their views on Laura Ketonen’s coding, and discrepancies were carefully discussed. After the regular cases, the challenging ones were examined. We had a mutual agreement that, due to scant interviewee input from students, these cases were considerably more challenging than the regular cases, and definitively determining the level of students’ skills was not possible. The decision to leave one skill out of the results in two cases (Students 4 and 8) was made in the meeting.

Results

The results are twofold. The first part of the results consists of the categories with levels of students’ feedback literacy (Tables 2, 3, and 4), and the second part consists of the paths of development of secondary students’ feedback literacy.

Table 2. Understanding of the purpose of feedback.

Table 3. Engagement and interpretation of received feedback.

Table 4. Engagement in making revisions.

Categories and levels for students’ feedback literacy

Three categories of students’ skills of feedback literacy were recognized in the context of formative peer assessment: understanding of the purpose of feedback, engagement and interpretation of received feedback, and engagement in making revisions. Understanding of the purpose of feedback (Table 2) relates to students’ dispositions to feedback. The students on the first level do not appreciate any kind of feedback. The second level, appreciation of positive feedback, reflects the understanding of feedback as a judgment. By this conception, corrective feedback communicates a shortcoming or a failure, and it is not wanted. Conversely, students on the third level welcome corrective feedback, as they see it as a mediator of learning and improvement.

Engagement and interpretation of received feedback (Table 3) describes students’ activity in judging the received feedback. The first level is not being interested in feedback at all, and it recalls the first level of the previous category. This is natural, as students’ passive role and reluctance to accept feedback are reflected in several aspects of feedback literacy. The second level describes the student reading the feedback but staying in the passive role of a receiver. The third level comprises active interpretation and evaluation of the received feedback.

Engagement in making revisions (Table 4) describes students’ active engagement in making revisions. Do students improve their work, and if so, do they merely follow the instructions of the feedback, or do they have an active role in finding new knowledge or feedback, for example from the teacher, the material, or their peers?

Development of students’ feedback literacy skills

The development of students’ feedback literacy in Grades 7 and 8 is presented in . Different line types represent the different categories of feedback literacy. Arrows show the changes in students’ feedback literacy from Grades 7 to 8, and circles indicate staying on the same level. Changes primarily happened from the lower to the higher level. Improvement was noticed in all categories: understanding of the purpose of feedback (five cases), engagement and interpretation of received feedback (four cases), and engagement in making revisions (seven cases). One transition happened from the higher to the lower level in engagement and interpretation of received feedback.
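For readers who wish to reproduce this kind of tally from their own rubric codings, the following is a minimal sketch, not the authors’ procedure. It assumes each student has a level from 1 to 3 per category at PA1 and PA2; the category keys and function names are hypothetical. The single entry uses the two transitions reported in this article for Student 10 (Level 2 to Level 3 in both categories), and a category left uncoded for a student is simply omitted.

```python
from collections import Counter

# Hypothetical short keys for the three categories identified in the study.
CATEGORIES = ("understanding_purpose", "interpreting_feedback", "making_revisions")


def classify_change(level_pa1, level_pa2):
    """Label the change between two rubric levels (1 = lowest, 3 = highest)."""
    if level_pa2 > level_pa1:
        return "improved"
    if level_pa2 < level_pa1:
        return "declined"
    return "unchanged"


def tally_changes(codings):
    """Count improved/unchanged/declined cases per category.

    codings maps student id -> {category: (level at PA1, level at PA2)}.
    Categories that could not be coded for a student are left out of the dict.
    """
    tallies = {category: Counter() for category in CATEGORIES}
    for levels in codings.values():
        for category, (pa1, pa2) in levels.items():
            tallies[category][classify_change(pa1, pa2)] += 1
    return tallies


# Only the two transitions stated in the text for Student 10 are entered here.
codings = {
    "S10": {
        "understanding_purpose": (2, 3),
        "interpreting_feedback": (2, 3),
    },
}
tallies = tally_changes(codings)
print(tallies["understanding_purpose"]["improved"])  # prints: 1
```

Keeping uncoded categories out of the input, rather than entering a placeholder level, mirrors the decision to leave undeterminable skills out of the results.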

Next, we present students’ development of feedback literacy with two examples. The first example demonstrates improvement in the understanding of the purpose of feedback, and the second demonstrates improvement in engagement and interpretation of received feedback.

Example: Improving the understanding of the purpose of feedback

Student 10 showed strong development in feedback literacy. She was already on the highest of three levels on “engagement in making revisions” in PA1, but in “understanding of the purpose of feedback” and “engagement and interpretation of received feedback,” she advanced from Level 2 to Level 3. In PA1, the researcher inquired into the student’s feelings about the received, predominantly positive feedback:

R: When you read the feedback, how did you feel about it?

S10: It was good, like I had thought about the task quite a lot and tried to make it, like, as good as possible, so it is encouraging when you kind of succeed.

R: Was the feedback useful to you in some way?

S10: Yeah. Or it was fun, like, have your classmate assess you. That was sort of useful. I don’t know. It was nice anyway.

The student stated that she had enjoyed the positive feedback. She could not actually say that it was useful, but nevertheless, she was content with receiving positive feedback. A year later, the researcher asked the student the same question about PA2:

R: You received this feedback. So what did you think about it?

S10: Like, it was pretty good, like I received positive feedback, but maybe, like, it could have been, like, I don’t know, cause there was nothing that I could have improved or anything.

R: Would you have hoped for something like that?

S10: Well, maybe.

R: Mm?

S10: Yes. So I could have used it for improvement.

A year later, the student was no longer happy with positive feedback. She stated that she had hoped for guiding feedback that would have helped her to improve her work. Her expectations for feedback had changed as she had learned to appreciate corrective feedback.

The student’s development in “engagement in making revisions” was connected to learning to appreciate critical feedback. In PA1, the student only made the one change that was suggested in the feedback. In PA2, she actively looked for feedback. Even though her peer assessor did not provide guidance, she utilized the feedback that her work partner had received and the feedback that assessing the other student’s work provided her. She had adopted a view of feedback as being meant for improvement and become more active in making revisions.

Example: Improving engagement and interpretation of received feedback

Student 12 was less feedback-literate than Student 10 in the earlier example. In PA1, Student 12 was on Level 1 on “engagement in making revisions” and Level 2 on “understanding of the purpose of feedback” and “engagement and interpretation of received feedback.” This meant that he superficially performed his role as a peer assessor and did not revise his work. After PA1, the researcher asked for the student’s thoughts about the received feedback:

R: Was it useful for you, the feedback?

S12: Yes, it was.

R: Tell me how?

S12: Well, there is, for example, that I could tell more clearly how to make the measurements … there was, for example, that it [conducting measurement] was told clearly, so it points out how to make it in the future.

The student’s narration of the feedback is contradictory. He had received a feedback comment that the work was hard to interpret. Student 12 had looked at the feedback but not worked to understand its meaning. Instead of comparing the feedback to his work and deciding to reject the feedback or to improve the work, he explained that he had taken the feedback as general guidance for the future (Level 2). A year later, after PA2, the student explained his interpretations of his feedback as follows:

S12: I had thought that if I received good tips, I could improve my work a little, since I hadn’t come up with ideas myself. But then [after receiving feedback] I started figuring out what I should do and, erm, like, I was running out of time, so I finished this [shows a part of the task]. And, erm, I added here …

R: Yes?

S12: I took, like, at least the most important ones [feedback comments] and then …

R: Yes?

S12: Well, the others I ignored, there was not time [for] everything.

The student explained that he had evaluated which feedback comments were the most important and ignored the less important ones. In addition, he stated that he had considered some of the feedback comments wrong and therefore ignored them (Level 3). When the researcher asked for an example of such a comment, he answered:

S12: Well, maybe this one, since it said, “how many wires,” since, erm, usually you can take enough and, erm, like, see how many you will use.

The student’s engagement and active interpretation of received feedback advanced from PA1 to PA2. In PA2, the student had several strategies for dealing with feedback: to approve, to prioritize, and to reject. The student did not improve his work after PA1, but after PA2, he made two meaningful improvements that he had judged important to make. Active interpretation of received feedback appeared to enable him to revise his work meaningfully.

Discussion

The present study explored secondary students’ use of formative peer assessment and showed that seventh-grade students developed feedback literacy skills after one year of practicing peer assessment. The research on feedback literacy has so far focused on higher education, but these results demonstrate that Carless and Boud’s (Citation2018) framework is relevant at the secondary level. Prior studies did not explicitly describe the levels of students’ feedback literacy (Han & Xu, Citation2019b; Hey-Cunningham et al., Citation2020), but this study identified three levels of development in three categories of feedback literacy. Building an understanding of the levels of development in feedback literacy will enable the tracking of students’ longitudinal development across disciplines and school sectors, and it will help educators identify students’ strengths and needs. In this study, the levels were used to determine seventh- and eighth-grade students’ feedback literacy. On average, advancement was observed in all three categories throughout the year of practicing peer assessment in the students’ science studies, but individual variations were observed, as they were in the context of higher education (Han & Xu, Citation2019b). Han and Xu (Citation2019b) and Hey-Cunningham et al. (Citation2020) explored and reported development in graduate and postgraduate students’ feedback literacy, respectively. Features in common with the present study were the explicit discussion of the feedback processes and the use of peer feedback, implying that these are promising practices to promote the development of students’ feedback literacy.

The identified categories of feedback literacy in which the students showed different skill levels during peer assessment were “understanding of the purpose of feedback,” “engagement and interpretation of received feedback,” and “engagement in making revisions.” The levels of the first category, “understanding of the purpose of feedback,” described the students’ expectations of feedback: did they want it, and if so, did they want to be told they had done a good job or to find opportunities for further improvement? The students’ development was shown through their increased interest in feedback and appreciation of criticism. Other studies have presented similar findings. Tasker and Herrenkohl (Citation2016) found that students learn to value critical feedback, and Han and Xu (Citation2019b) reported a cultivated willingness among students to ask for peers’ opinions. Assessment practices in Finland are prevalently summative (Atjonen et al., Citation2019), and students are accustomed to receiving evaluative feedback at the end of courses when criticism cannot be used to improve their performance. In this study, peer assessment was used to emphasize formative assessment. Peer feedback was specifically designated for improvement, and the time given for revising work strengthened this message. In formative peer assessment, students are persuaded to focus on improvement and hence gain experience with corrective feedback and find it desirable.

The levels of the second category, “engagement and interpretation of received feedback,” related to the students’ actions when receiving peer feedback: did they read the feedback, and if so, were they active in interpreting it and able to reject it when reasonable? Hey-Cunningham et al. (Citation2020) noticed a similar range of conceptions among postgraduate students. A considerable portion of students in their study indicated that they would not question supervisors’ feedback, while some expressed that they would think about it critically. After attending a program concerning the use of feedback, all students understood the value of critically considering feedback. In the present study, the critical reception of feedback appeared challenging to the students. They tended to expect clear, unambiguous feedback that did not require active interpretation, a factor also found by O’Donovan (Citation2017) and one that stems from becoming accustomed to teacher feedback that is not expected to be questioned or rejected. Receiving feedback from a peer exposed the students to the need to judge it. Additionally, the topic was explicitly addressed, and the appraisal of feedback was encouraged.

The levels of the third category, “engagement in making revisions,” described whether the students improved their work and, if so, whether that was due to their following the explicit advice of the feedback or as a result of their own activity. Hey-Cunningham et al. (Citation2020) found similar levels of feedback literacy, stating that postgraduate students learned to understand revision as a process that was not about following feedback but engaging in learning. Carless and Boud (Citation2018) theorized that the ability to take action stems from developing other feedback literacy skills. Understanding corrective feedback as a mediator of improvement is pivotal in peer assessment (Ketonen et al., Citation2020; McConlogue, Citation2015; Tasker & Herrenkohl, Citation2016), and the results of this study support that idea. Several studies on secondary students mentioned their struggles to act on peer feedback (Anker-Hansen & Andrée, Citation2019; Hovardas et al., Citation2014; Tseng & Tsai, Citation2007), and we suggest that the reason might be students’ undeveloped feedback literacy skills.

This study has implications for practice. The levels of the first category show that the students were not necessarily inclined to read feedback or use it for improvement. In such cases, there is no point in pushing feedback, but the issues concerning students’ goals and their understanding of feedback must be dealt with. Feedback cannot help students achieve their goals if they do not have any or if students construe guiding feedback as offensive criticism. The levels of the second category revealed the students’ passive approach to feedback. Even when interested in it, most of the students were not initially prepared to invest in interpreting it but expected the feedback to tell them unambiguously how to proceed. If students are not accustomed to judging feedback, they need to be encouraged to do so. The freedom of whether to act on feedback may assist in seeing critical feedback as a gift that potentially advances learning instead of a burden that automatically leads to more work.

We acknowledge several study limitations. The first is that the research is not experimental; therefore, it is unclear to what extent the use of peer assessment affected the development of students’ feedback literacy. Physics and chemistry contexts comprise only 5% of studies in lower secondary schools, and during the school year, the students naturally faced experiences other than this intervention. The second limitation is this study’s specific context, as it focused on two student groups at the same school. The third limitation relates to the method. Methodologically, this study differs from previous ones exploring the development of feedback literacy (Han & Xu, Citation2019b; Hey-Cunningham et al., Citation2020) because it created criteria for the levels of feedback literacy and thus made the features of development explicit. However, other feedback literacy categories could probably be found with more data sources. For example, the managing of affect could not be detected, as the students were predominantly private about their negative feelings. Hence, further research on the variety of secondary students’ feedback literacy skills is needed. The criteria for the levels of the students’ feedback literacy were found to be functional in the coding of their skills, and these criteria can be used and elaborated on in other studies.

The present study shows that secondary students can be led to develop their feedback literacy skills. The development of their feedback literacy will be of value in their university studies (O’Donovan, Citation2017) and workplaces (Carless & Boud, Citation2018). Given that most people will not attend higher education but that feedback literacy skills are relevant to everyone, feedback literacy should be developed in secondary education.

References

- Anker-Hansen, J., & Andrée, M. (2019). Using and rejecting peer feedback in the science classroom: A study of students’ negotiations on how to use peer feedback when designing experiments. Research in Science & Technological Education, 37(3), 346–365.

- Atjonen, P., Laivamaa, H., Levonen, A., Orell, S., Saari, M., Sulonen, K., Tamm, M., Kamppi, P., Rumpu, N., Hietala, R., & Immonen, J. (2019). Että tietää missä on menossa. Oppimisen ja osaamisen arviointi perusopetuksessa ja lukiokoulutuksessa. The publications of the Finnish Education Evaluation Centre.

- Black, P., & Wiliam, D. (2018). Classroom assessment and pedagogy. Assessment in Education: Principles, Policy & Practice, 25(6), 551–575.

- Boud, D., & Molloy, E. (2013). What is the problem with feedback? In D. Boud & E. Molloy (Eds.), Feedback in Higher and Professional Education (pp. 1–10). Routledge.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Carless, D., & Boud, D. (2018). The development of student feedback literacy: Enabling uptake of feedback. Assessment & Evaluation in Higher Education, 43(8), 1315–1325.

- Cartney, P. (2010). Exploring the use of peer assessment as a vehicle for closing the gap between feedback given and feedback used. Assessment & Evaluation in Higher Education, 35(5), 551–564.

- Crane, L., & Winterbottom, M. (2008). Plants and photosynthesis: Peer assessment to help students learn. Journal of Biological Education, 42(4), 150–156. https://doi.org/10.1080/00219266.2008.9656133

- Davis, N., Kumtepe, E. G., & Aydeniz, M. (2007). Fostering continuous improvement and learning through peer assessment: Part of an integral model of assessment. Educational Assessment, 12(2), 113–135. https://doi.org/10.1080/10627190701232720

- Delva, D., Sargeant, J., Miller, S., Holland, J., Alexiadis Brown, P., Leblanc, C., Lightfoot, K., & Mann, K. (2013). Encouraging residents to seek feedback. Medical Teacher, 35(12), e1625–1631. https://doi.org/10.3109/0142159X.2013.806791

- Foley, S. (2013). Student views of peer assessment at the International School of Lausanne. Journal of Research in International Education, 12(3), 201–213.

- Gielen, S., Peeters, E., Dochy, F., Onghena, P., & Struyven, K. (2010). Improving the effectiveness of peer feedback for learning. Learning and Instruction, 20(4), 304–315. https://doi.org/10.1016/j.learninstruc.2009.08.007

- Gravett, K., Kinchin, I., Winstone, N., Balloo, K., Heron, M., Hosein, A., Lygo-Baker, S., & Medland, E. (2020). The development of academics’ feedback literacy: Experiences of learning from critical feedback via scholarly peer review. Assessment & Evaluation in Higher Education, 45(5), 651–665.

- Han, Y., & Xu, Y. (2019a). Student feedback literacy and engagement with feedback: A case study of Chinese undergraduate students. Teaching in Higher Education. Advance online publication, 1–16. https://doi.org/10.1080/13562517.2019.1648410

- Han, Y., & Xu, Y. (2019b). The development of student feedback literacy: The influences of teacher feedback on peer feedback. Assessment & Evaluation in Higher Education, 45(5), 680–696.

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

- Hey-Cunningham, A. J., Ward, M.-H., & Miller, E. J. (2020). Making the most of feedback for academic writing development in postgraduate research: Pilot of a combined programme for students and supervisors. Innovations in Education and Teaching International. Advance online publication. https://doi.org/10.1080/14703297.2020.1714472

- Hovardas, T., Tsivitanidou, O. E., & Zacharia, Z. C. (2014). Peer versus expert feedback: An investigation of the quality of peer feedback among secondary school students. Computers & Education, 71, 133–152.

- Jonsson, A. (2013). Facilitating productive use of feedback in higher education. Active Learning in Higher Education, 14(1), 63–76.

- Ketonen, L., Hähkiöniemi, M., Nieminen, P., & Viiri, J. (2020). Pathways through peer assessment: Implementing peer assessment in a lower secondary physics classroom. International Journal of Science and Mathematics Education, 18, 1465–1484. https://doi.org/10.1007/s10763-019-10030-3

- Lu, J., & Law, N. (2012). Online peer assessment: Effects of cognitive and affective feedback. Instructional Science, 40(2), 257–275. https://doi.org/10.1007/s11251-011-9177-2

- McConlogue, T. (2015). Making judgements: Investigating the process of composing and receiving peer feedback. Studies in Higher Education, 40(9), 1495–1506.

- McLean, A. J., Bond, C. H., & Nicholson, H. D. (2015). An anatomy of feedback: A phenomenographic investigation of undergraduate students’ conceptions of feedback. Studies in Higher Education, 40(5), 921–932. https://doi.org/10.1080/03075079.2013.855718

- Minjeong, K. (2009). The impact of an elaborated assessee’s role in peer assessment. Assessment & Evaluation in Higher Education, 34(1), 105–114.

- Molloy, E., Boud, D., & Henderson, M. (2019). Developing a learning-centered framework for feedback literacy. Assessment & Evaluation in Higher Education, 45(4), 527–540. https://doi.org/10.1080/02602938.2019.1667955

- O’Donovan, B. (2017). How student beliefs about knowledge and knowing influence their satisfaction with assessment and feedback. Higher Education, 74(4), 617–633.

- Panadero, E. (2016). Is it safe? Social, interpersonal, and human effects of peer assessment: A review and future directions. In G. T. L. Brown & L. R. Harris (Eds.), Human factors and social conditions of assessment (pp. 1–39). Routledge.

- Panadero, E., & Brown, G. T. L. (2017). Teachers’ reasons for using peer assessment: Positive experience predicts use. European Journal of Psychology of Education, 32(1), 133–156. https://doi.org/10.1007/s10212-015-0282-5

- Panadero, E., Romero, M., & Strijbos, J. W. (2013). The impact of a rubric and friendship on peer assessment: Effects on construct validity, performance, and perceptions of fairness and comfort. Studies in Educational Evaluation, 39(4), 195–203. https://doi.org/10.1016/j.stueduc.2013.10.005

- Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instructional Science, 18(2), 119–144.

- Sutton, P. (2012). Conceptualizing feedback literacy: Knowing, being, and acting. Innovations in Education and Teaching International, 49(1), 31–40.

- Tasker, T., & Herrenkohl, L. (2016). Using peer feedback to improve students’ scientific inquiry. Journal of Science Teacher Education, 27(1), 35–59. https://doi.org/10.1007/s10972-016-9454-7

- To, J., & Panadero, E. (2019). Peer assessment effects on the self-assessment process of first-year undergraduates. Assessment & Evaluation in Higher Education, 44(6), 920–932.

- Topping, K. J. (2009). Peer assessment. Theory into Practice, 48(1), 20–27.

- Topping, K. J. (2013). Peers as a source of formative and summative assessment. In J. H. McMillan (Ed.), SAGE handbook of research on classroom assessment (pp. 394–412). Sage Publications, Inc.

- Tseng, S. C., & Tsai, C. C. (2007). On-line peer assessment and the role of the peer feedback: A study of high school computer course. Computers & Education, 49(4), 1161–1174. https://doi.org/10.1016/j.compedu.2006.01.007

- Tsivitanidou, O. E., Zacharia, Z. C., Hovardas, T., & Nicolaou, A. (2012). Peer assessment among secondary school students: Introducing a peer feedback tool in the context of a computer supported inquiry learning environment in science. Journal of Computers in Mathematics and Science Teaching, 31(4), 433–465.

- Tsivitanidou, O. E., Zacharia, Z. C., & Hovardas, T. (2011). Investigating secondary school students’ unmediated peer assessment skills. Learning and Instruction, 21(4), 506–519. https://doi.org/10.1016/j.learninstruc.2010.08.002

- van Gennip, N. A. E., Segers, M. S. R., & Tillema, H. H. (2010). Peer assessment as a collaborative learning activity: The role of interpersonal variables and conceptions. Learning and Instruction, 20(4), 280–290. https://doi.org/10.1016/j.learninstruc.2009.08.010

- van Zundert, M., Sluijsmans, D., & van Merriënboer, J. (2010). Effective peer assessment processes: Research findings and future directions. Learning and Instruction, 20(4), 270–279. https://doi.org/10.1016/j.learninstruc.2009.08.004

- Wiliam, D. (2012). Feedback: Part of a system. Educational Leadership, 70(1), 30–34.

- Winstone, N. E., Nash, R. A., Rowntree, J., & Parker, M. (2017). It’d be useful, but I wouldn’t use it: Barriers to university students’ feedback seeking and recipience. Studies in Higher Education, 42(11), 2026–2041. https://doi.org/10.1080/03075079.2015.1130032