Abstract

The Collaborative Knowledge Practices Questionnaire (CKP) is an instrument designed to measure the learning of knowledge-work competence in education. The focus is on qualities of knowledge work which can be learned and taught in multiple educational settings and which may be especially important for courses with collaborative assignments. The original instrument was theoretically based on the knowledge-creation metaphor of learning. The instrument has been validated in Finnish based on student responses from a large number of higher education courses. The validation of the instrument resulted in seven scales relating to different aspects of interdisciplinary, collaborative development of knowledge-objects using digital technology. This study aimed to cross-culturally translate and adapt the original instrument into English and perform an exploratory structural equation modelling (ESEM) analysis in order to investigate whether the same factorial solution of the instrument also works in English in higher education courses in international settings. The original instrument was translated according to established guidelines for cross-cultural adaptation of self-report measures. The translated version has been tested in courses in medical education, online teaching and problem solving. The results provided evidence that the latent factor model found in the original instrument provided a good fit also for the adapted questionnaire.

Background

There is an increased interest in generic, subject-independent skills in higher education, especially skills that are important in working life and that could be better addressed already during education (Hyytinen, Toom, and Postareff 2018). Today’s students will have to tackle jobs that are profoundly different from existing ones, with requirements to develop competence in various practices that are typically not taught in higher education (European Union 2010). It has been pointed out that educational systems need to equip young people with new skills and competence adapted to the developments in society and the economy, which have come to view knowledge as the main asset (Ananiadou and Claro 2009). There is also a mismatch between formal education and the challenges of society regarding fostering skills for innovation (Clarke and Clarke 2009; Cobo 2013; Klusek and Bernstein 2006). In order to manage the changing requirements in society and in working life, new types of skills are therefore needed, such as collaborative learning, cultural awareness, self-leadership and flexibility, besides the ‘traditional’ working-life skills, such as teamwork and social skills (Ilomäki, Lakkala, and Kosonen 2013). In addition, the transforming labour market generates new professions and changes in existing professions. For instance, outsourcing and entrepreneurship require competencies that typically are not taught in higher education (European Union 2010). Most such skills – often referred to as ‘21st century skills and competencies’ (Voogt and Roblin 2012) to indicate that they are related to emerging models of economic and social development – can either be supported or enhanced by information technology (Ananiadou and Claro 2009).

Formal education is also expected to support students in acquiring abilities to use technologies for collaboration and innovation, but research indicates that pedagogical changes have not materialised as expected, and this is a concern for both higher education and upper secondary schools (Clarke and Clarke 2009; Klusek and Bernstein 2006; Tynjälä 2008). While there is an emerging theoretical trend to highlight knowledge creation practices as a basis for understanding modern knowledge work (Knorr Cetina 2001), prevalent pedagogical methods and practices focus on content learning rather than on fostering the learning of professional knowledge work practices (Muukkonen et al. 2010). Students are reported to leave higher education with underdeveloped abilities to collaborate, manage their work processes, use computers, or solve open-ended problems (Arum and Roksa 2011; The National Commission on Accountability in Higher Education 2005; Tynjälä 2008).

Previous research has addressed generic skills related to collaboration and group work in various ways by, e.g. asking students to assess their skills and attitudes to collaboration, by asking them to assess activities influencing group work and by asking them to self-evaluate their skills at the end of study programmes (Muukkonen et al. 2019). Badcock and colleagues (Badcock, Pattison, and Harris 2010) found that university students’ grade point averages (GPAs) were significantly related to four generic skills – critical thinking, interprofessional understandings, problem solving and written communication. But while a grade point average provides some measure of students’ generic skills levels, the authors conclude that GPA should be considered an imperfect indicator of levels of skills attainment. More precise instruments are needed for addressing how the skills develop and for explicating which activities are involved in such development.

For example, in the field of engineering, little is known about how students perceive generic skills in the context of their discipline and there has been a lack of instruments for assessing students’ perceptions of these skills (Chan, Zhao, and Luk 2017). A questionnaire intended for self-assessment of first-year engineering students’ perceived levels of competency in generic skills within an engineering context was therefore developed by Chan and colleagues. The Generic Skills Perception Questionnaire was made up of eight scales and 35 items, and exploratory and confirmatory factor analyses found the questionnaire to be a reliable and valid instrument for the target group. Similarly, but in a business school context, de la Harpe and colleagues described the process of identifying a set of generic skills – communication, computer literacy, information literacy, team working and decision-making – and how these were implemented in teaching (de la Harpe, Radloff, and Wyber 2010). The authors argued that universities may have to change their curricula to ensure quality and better meet the requirements of workplaces and, therefore, measures of effectiveness such as student questionnaires addressing such changes need to be developed (ibid.).

In a study by Crebert and colleagues, university graduates from three different study areas with work placement as a part of their studies perceived teamwork, being given responsibility and collaborative learning as the most important factors for effective learning not only at university but also during work placement and employment (Crebert et al. 2004). Crebert et al. pointed out the contrast between learning at universities, where the emphasis is on the individual student’s needs, and learning in the workplace, where the emphasis is on the organisation’s or the client’s needs. While much research has focused on investigating the individual dimensions of generic skills, collective dimensions such as collaborative idea generation or knowledge production and skills associated with shared knowledge advancement have not been addressed to the same extent by existing questionnaire instruments (Muukkonen et al. 2017). In addition, the existing instruments are often multiple-choice tests focusing on measuring only some aspects of generic skills, for example critical thinking (Hyytinen et al. 2015). They also lack the link to the authentic competence learning situations and pedagogical practices utilised in courses.

To address the above-mentioned shortcomings in previous instruments, Muukkonen et al. have developed the Collaborative Knowledge Practices Questionnaire (CKP), which is an instrument designed to evaluate self-assessed competence development during courses that specifically use group work or collaborative knowledge creation assignments as instructional approaches (Muukkonen et al. 2019, 2017). The instrument is not tied to a specific discipline and has a theoretical basis in socio-cultural theories of learning and particularly the knowledge creation metaphor and the Trialogical learning approach (Muukkonen et al. 2019; Paavola and Hakkarainen 2005). While many learning theories have emphasised how individual learners assimilate prevailing knowledge, or how learners adapt to existing cultural and communal practices (Sfard 1998), Trialogical learning theory adds the object-centred approach to learning and development of expertise, typical of knowledge work. This approach draws attention to the presence of artefacts, practices, and products – ‘objects’ – and shared work on advancing them.

Such objects are viewed as being able to mediate knowledge advancement in several ways, e.g. by externalising ideas, supporting collaborative work practices and encouraging reflection on these (Hakkarainen 2009; Paavola and Hakkarainen 2005; Paavola et al. 2011). It is therefore important for knowledge creation to set up collaborative work practices around the objects in ways that promote knowledge advancement. A set of design principles has been developed to characterise the main features of trialogical learning in order to promote them theoretically in pedagogical practices and for the design of educational technology (Paavola et al. 2011). The design principles are the following: (1) Organising activities around shared ‘objects’; (2) Supporting integration of personal and collective agency and work through developing shared objects; (3) Emphasising development and creativity in working on shared objects through transformations and reflection; (4) Fostering long-term processes of knowledge advancement with shared objects (artefacts and practices); (5) Promoting cross-fertilisation of various knowledge practices and artefacts across communities and institutions; and (6) Providing flexible tools for developing artefacts and practices (Paavola et al. 2011). The set of principles provided a starting point for the development of the CKP questionnaire (Muukkonen et al. 2019). There was, however, no one-to-one correspondence between the design principles and the scales of the CKP; while the former were developed for designing and developing educational practices, the exact same structure was not found in the items addressing students’ learning experiences.

Whereas many instruments attempt to measure the level of competences in general, the CKP questionnaire addresses the self-evaluated learning of knowledge work practices in context, typically at the end of a course. The instrument focuses on a number of different aspects relating to course-related learning of collaborative practices and consists of seven scales, namely (1) Learning to collaborate on shared objects, (2) Integrating individual and collaborative working, (3) Development through feedback, (4) Persistent development of knowledge objects, (5) Understanding various disciplines and practices, (6) Interdisciplinary collaboration and communication and (7) Learning to exploit technology. The construct validity of the instrument has been investigated and measurement invariance was obtained in two disciplinary fields, media engineering and life sciences. The instrument has, however, not been systematically translated and tested in English.

Cross-cultural translation, adaptation and factorial evaluation

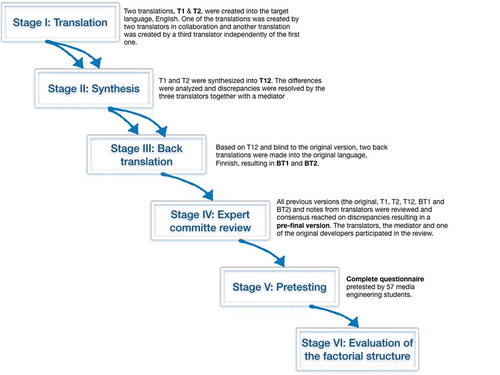

Numerous papers have addressed the challenges associated with translating instruments from one language and cultural context to another and have proposed procedures and recommendations to ensure the quality of the instruments (Beaton et al. 2000, 2007; Borsa, Damásio, and Bandeira 2012; Chen and Boore 2010; Guillemin, Bombardier, and Beaton 1993; Morales 2001; Schmidt, Power, and Bullinger 2002; van Widenfelt et al. 2005). Translation is viewed as the first step, but when an instrument is intended to be used in a different context, cultural, idiomatic, linguistic and contextual aspects need to be considered when adapting it to the new context (Borsa, Damásio, and Bandeira 2012). The literature suggests a number of essential stages for performing the cross-cultural translation and adaptation: (1) Translation of the instrument from the source language into the target language involving at least two independent, bilingual translators (Beaton et al. 2007; Borsa, Damásio, and Bandeira 2012) to minimise risks for biases and to detect ambiguous wording in the original language or discrepancies in translations. (2) Creating a synthesis during which semantic, idiomatic, conceptual, experiential, linguistic and contextual aspects are compared in the different translations. (3) Back-translation of the synthesised version into the original language by two new translators who are blind to the original version. This stage is a kind of validity checking ensuring that the translation reflects the item content of the original version and may detect unclear wordings in the translations (Beaton et al. 2007). (4) Next, the translations should be reviewed by an expert committee to develop a pre-final version of the questionnaire including instructions and scoring documentation (Beaton et al. 2007). The original developers should be involved as well as all translators. Decisions need to be made regarding semantic equivalence (checking that words mean the same thing), idiomatic equivalence (colloquialisms or idioms may need to be replaced), experiential equivalence (as daily experiences may differ in different cultures, items may have to be replaced by something addressing a similar intent in the target culture), and conceptual equivalence (checking that the ‘same’ word does not hold a different conceptual meaning in the culture) (Beaton et al. 2007). (5) The final step is to pre-test the new instrument with a small sample reflecting the target population. Beaton et al. recommended using 30–40 persons. Variants of these sequences exist, e.g. Borsa et al. described the expert review as taking place before the back-translation and also suggested an instrument evaluation by the target population (Borsa, Damásio, and Bandeira 2012). Borsa et al. also recommended an additional stage that is normally not included in the adaptation process to confirm whether the instrument structure is stable compared to the original instrument, namely evaluation of the factorial structure of the instrument accomplished by exploratory and confirmatory factor analyses. Recent research has suggested that exploratory structural equation modelling (ESEM) combines the flexibility of exploratory factor analysis with access to the typical parameters and statistical advances of confirmatory factor analysis/structural equation modelling (Morin, Marsh, and Nagengast 2013). While confirmatory factor analysis has largely superseded exploratory factor analysis, ESEM has been argued to be a more general framework and less restricted than confirmatory factor analysis and structural equation modelling and, therefore, preferable in clinical psychology research (Marsh et al. 2014).

Knowledge gap identified in instruments for assessing generic skills

To sum up the knowledge gap, there is a lack of validated instruments in English for assessing the learning of generic skills, especially instruments which cover the collaborative aspects of modern knowledge work practised in authentic course settings. Poorly adapted instruments may risk generating inconsistent and unreliable data (Borsa, Damásio, and Bandeira 2012). The trustworthiness of research collected in one language and presented in another relies heavily on translation-related decisions (Chen and Boore 2010). Using established measures allows for comparison of findings in different countries, but usually little is reported about the translation and adaptation process of the instruments used, making it difficult to evaluate the equivalency and quality of the instruments and the data that they have generated (van Widenfelt et al. 2005). The Collaborative Knowledge Practices Questionnaire (CKP) has been demonstrated to be a valid tool in Finnish, but a validated English version is lacking.

Aim

This study aimed to cross-culturally translate and adapt the original instrument into English and perform an exploratory structural equation modelling (ESEM) analysis in order to investigate whether the factorial solution of the original instrument also works in English in higher education courses in international settings.

The cross-cultural translation and adaptation of the instrument examined the methodological questions about the steps, criteria, and challenges of the translation and adaptation of the questionnaire to another context while the analysis of the factorial solution aimed to answer whether the same factorial solution could be found for the data in the new context.

Methods

Cross-cultural translation and adaptation of the original instrument

The original instrument was translated into English in line with the guidelines proposed for cross-cultural adaptation of self-report measures (Beaton et al. 2000; Borsa, Damásio, and Bandeira 2012). First, two translations of the instrument were created independently (see Figure 1). One of the forward translations (T1) was done by two bilingual translators in collaboration while the other forward translation (T2) was done independently by a third bilingual translator. All three translators were knowledgeable about the topic. To synthesise the results, a fourth bilingual person was added to the team who acted as a mediator in discussions of the differences in the translations. Issues were resolved by consensus and one common translation (T-12) was produced. Working from the T-12 version of the questionnaire, and blind to the original version, two new bilingual translators translated back from English into Finnish to ensure validity by enabling comparison of the new translation to the original version. The back-translations (BT1 and BT2) were produced by two bilingual persons with Finnish as their mother tongue. The two translators worked independently of each other and were neither aware nor informed of the concepts explored to avoid information bias and to elicit unexpected meanings of the items in the translated questionnaire (T-12) (Beaton et al. 2007; Guillemin, Bombardier, and Beaton 1993). All versions of the instrument were collected and colour-coded in one document for easier comparison. An expert committee consisting of the translators, the mediator and an additional person who is one of the original developers reviewed the material. The importance of involving the original instrument’s authors in the evaluation has been emphasised by Borsa and colleagues (Borsa, Damásio, and Bandeira 2012). The differences between the versions were discussed until reaching a consensus on a pre-final version.

Altogether 57 students taking part in three media engineering courses in Finland participated in pretesting of the instrument. The instrument worked in a satisfactory manner; no complaints about the comprehensibility of items were expressed and all respondents responded to each item; therefore, the pilot did not lead to any further modifications of the instrument.

Participants

In the evaluation of the factorial solution, data were collected from two research-intensive universities and one applied sciences university. Students (n = 169) in seven courses responded to the CKP questionnaire at the end of their courses. Four courses were organised in Sweden (in medical education and in online tools for teaching) and three courses in Finland (two problem solving courses in the educational field and one in engineering) for international students in English. In all, 59 participants were male (34.9%), 108 were female (63.9%) and 2 responded as other (1.2%). Age varied from 19 to 61 years (M = 39.3, SD = 11.07, Median = 40). The response rate was 37.3%.

The sample included courses with intensive virtual or face-to-face collaboration with participants from diverse international backgrounds.

Data collection

All documents from the translators, observations made at review meetings, different versions of the questionnaire items and suggestions for changes and comments were stored using a web-based software with a revision history functionality.

The CKP questionnaire (Muukkonen et al. 2019, 2017) was used for data collection. Students evaluated how 27 statements corresponded to their learning during the course. Each statement completed the stem ‘During the course I have learned …’, for example ‘to develop ideas further together with others’. The statements were assessed using a five-point Likert scale ranging from 1 (not at all) to 5 (very much). The statements make up the seven scales of the questionnaire: (1) Learning to collaborate on shared objects, (2) Integrating individual and collaborative working, (3) Development through feedback, (4) Persistent development of knowledge objects, (5) Understanding various disciplines and practices, (6) Interdisciplinary collaboration and communication and (7) Learning to exploit technology.
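As an illustration of how responses to such an instrument might be summarised, the following is a minimal sketch that computes one mean score per scale from a single respondent's answers. The item-to-scale assignment in `SCALE_ITEMS` is hypothetical (the text above gives only the seven scale names and the total of 27 items), and averaging items within a scale is a common convention assumed here, not a scoring rule stated for the CKP.

```python
from statistics import mean

# HYPOTHETICAL item-to-scale assignment, for illustration only.
# The source states that 27 items form seven scales, but not which
# item numbers belong to which scale.
SCALE_ITEMS = {
    "Learning to collaborate on shared objects": [1, 2, 3, 4],
    "Integrating individual and collaborative working": [5, 6, 7, 8],
    "Development through feedback": [9, 10, 11, 12],
    "Persistent development of knowledge objects": [13, 14, 15, 16],
    "Understanding various disciplines and practices": [17, 18, 19, 20],
    "Interdisciplinary collaboration and communication": [21, 22, 23, 24],
    "Learning to exploit technology": [25, 26, 27],
}


def scale_scores(responses):
    """Return the mean response per scale for one respondent.

    `responses` maps item number (1..27) to a Likert value from
    1 (not at all) to 5 (very much).
    """
    for item, value in responses.items():
        if value not in (1, 2, 3, 4, 5):
            raise ValueError(f"Item {item}: {value!r} is not a 1-5 Likert response")
    return {
        scale: mean(responses[item] for item in items)
        for scale, items in SCALE_ITEMS.items()
    }


# Example respondent who answered 3 on every item.
example = {item: 3 for item in range(1, 28)}
scores = scale_scores(example)
```

Scale means of this kind are descriptive summaries only; the factorial structure itself is examined with the latent variable analysis described under Data analysis.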

Responses to the questionnaires were collected at the end of each course using web-based surveys. Participants who gave informed consent were included in the study.

Data analysis

The data were screened for outliers and unmotivated responses, which were removed, resulting in N = 163 included in the analysis. We conducted an exploratory structural equation modelling (ESEM) (Asparouhov and Muthén 2009; Marsh et al. 2014, 2009) factor analysis with the CKP items as ordered categorical variables. When a structural model includes more than a few cross-loadings recognised by theory or by item content, ESEM can be preferred to conventional confirmatory factor analysis (CFA), which usually constrains items to load on only one factor and can lead to inflated factor correlations in applied research (Marsh et al. 2014, 2009). Since none of the respondents had selected the alternative ‘Not at all’ as a response to two of the items (10 and 12), these only had four ordered categories while the other 25 items had five categories. In the rotation, target loadings of one were assigned to items belonging to the same hypothesised factor and target loadings of zero to other items (see Table 1).
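The target pattern described above can be sketched as a 27 × 7 matrix of ones and zeros. This is only an illustration of the idea: the assignment of items to factors in `ITEM_TO_FACTOR` is hypothetical, and the snippet builds the target matrix without performing the rotation or the ESEM estimation itself.

```python
N_FACTORS = 7

# HYPOTHETICAL assignment of the 27 items to the seven hypothesised
# factors (factor index 0..6); the actual item composition of each
# scale is not given in the text.
ITEM_TO_FACTOR = [0] * 4 + [1] * 4 + [2] * 4 + [3] * 4 + [4] * 4 + [5] * 4 + [6] * 3


def target_matrix(item_to_factor, n_factors):
    """Build an items x factors target matrix.

    Each row has a target loading of 1 on the item's hypothesised
    factor and a target loading of 0 on every other factor.
    """
    return [
        [1 if factor == k else 0 for k in range(n_factors)]
        for factor in item_to_factor
    ]


TARGET = target_matrix(ITEM_TO_FACTOR, N_FACTORS)

# Print the first few rows to show the pattern.
for row in TARGET[:5]:
    print(row)
```

In a target rotation the estimated loadings are rotated towards such a pattern, while cross-loadings remain free to deviate from zero, which is what distinguishes ESEM from a CFA with cross-loadings fixed at zero.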

Table 1. ESEM solution for a target rotated 7 factor model: standardised loadings, factor variances, and correlations.

Results

In certain cases, the different translators consistently selected differing terms in the translations. For instance, the Finnish word ‘arvioida’ was translated as ‘assess’, ‘evaluate’ or ‘consider’ and, depending on the part of the instrument, decisions needed to be made about which translation corresponded best to the original, intended meaning (e.g. ‘to evaluate the development of a shared product’ was selected), or which translation worked best stylistically in English (‘to understand how important the expertise of others is … ’). Another observation was that decisions needed to be made whether to follow the original instrument strictly or strive for consistency in the new translation. For instance, during the translation process, discussions were conducted about the importance of keeping (seemingly unnecessary) variations of the ‘same’ concept in the original instrument, such as mixing singular and plural forms of a concept in different items. Similar decisions needed to be made in several other cases of observed differences in the translations regarding closely related concepts such as expertise/competency/skills; develop/produce/construct/prepare; field/domain; understanding/seeing/perceiving; knowledge/information/what I have learned, etc.

Certain concepts in the original CKP were particularly challenging to translate because a corresponding word did not exist or because a translation would place emphasis on an aspect which was not intended in the original language. One example of this was one of the most central phrases, namely ‘kehittää tuotoksia’ in the original instrument. The phrase is roughly equivalent to ‘developing products’ or ‘developing outputs’. Numerous translations needed to be considered. For instance, the word ‘product’ was a strong candidate but was considered to sound slightly more businesslike in English and to be less obviously suited to an educational context than the original phrase. ‘Product’ as well as ‘object’ or ‘thing’ also imply something more concrete than the original phrase does and thereby risked excluding more abstract results such as plans and reports. Alternative terms that were considered were the more general ‘artifact’, ‘knowledge object’ and ‘object’, but these were considered to be more theory-laden and risked appearing unfamiliar to respondents. Another possible translation was ‘assignment’ but this word was perceived as sounding too school-related with too little focus on the concrete outcomes that the original included. Yet another candidate word was ‘production’ but it was also considered abstract and risked unintentionally directing respondents’ thinking to the outputs of specific businesses such as film or television. It was finally decided that the best translation was to use ‘product’ in combination with examples to help respondents understand the intended meaning: ‘the development of products (e.g. plans, reports, models)’. The use of such examples was also included in the original version of the instrument.

The results of the ESEM solution for the target rotated seven factor model are presented in Table 1. The fit of the ESEM model was good (root mean square error of approximation [RMSEA] = .047, comparative fit index [CFI] = .989, Tucker–Lewis index [TLI] = .979). Threshold levels RMSEA < .07, CFI > .95, and TLI > .95 are considered indicative of good fit (Hooper, Coughlan, and Mullen 2008). All items except one (item 26, which nevertheless had a loading of .206) loaded on their respective factors, although many cross-loadings were also present (Table 1). The factors correlated somewhat strongly, but this was expected based on the original factorial solution.
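The comparison of the reported fit indices with the cited threshold levels can be written out explicitly. The sketch below uses only the values and cut-offs given in the paragraph above; the names `THRESHOLDS`, `reported_fit` and `meets_thresholds` are introduced here for illustration.

```python
# Cut-offs cited in the text (Hooper, Coughlan, and Mullen 2008):
# RMSEA should fall below its cut-off, CFI and TLI above theirs.
THRESHOLDS = {
    "RMSEA": ("below", 0.07),
    "CFI": ("above", 0.95),
    "TLI": ("above", 0.95),
}

# Fit indices reported for the target rotated seven factor ESEM model.
reported_fit = {"RMSEA": 0.047, "CFI": 0.989, "TLI": 0.979}


def meets_thresholds(fit):
    """Return, per index, whether the value satisfies its cut-off."""
    result = {}
    for name, (direction, cutoff) in THRESHOLDS.items():
        value = fit[name]
        result[name] = value < cutoff if direction == "below" else value > cutoff
    return result


check = meets_thresholds(reported_fit)
for name, ok in check.items():
    print(f"{name} = {reported_fit[name]:.3f}: {'meets' if ok else 'fails'} the cut-off")
```

All three reported indices satisfy their cut-offs, which is the basis for describing the model fit as good.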

Discussion

Translation of an instrument calls attention to the fact that different languages cover phenomena in differing ways. While striving for equivalence to the original instrument and at the same time attempting to achieve comprehensibility and consistency in the English version, the translation work needed to balance goals that were occasionally in conflict. Firstly, the main goal was naturally to follow the phrasing of the original instrument. At the same time, literal translation is not always ideal as such translation may result in a lack of language fluency in the target language, and therefore a literal translation needed to be replaced with a more appropriate and comprehensible wording in certain items. A second issue concerned the opposite case, when observations were made that the original instrument was not entirely consistent in its phrasing or form. Should the new instrument keep seemingly unintentional variation of wordings or should it strive for consistency in its use of a term? A consistent use of wording may in itself be helpful for future respondents but such consistency would mean taking a step away from following the original version. The third issue concerned the need to balance the tension between creating an accessible instrument without jargon and retaining the precision of the theoretical concepts underlying the original item. This posed a challenge which was different from the cases usually discussed in the literature about cross-cultural translation and adaptation, as it did not stem from idiomatic, linguistic, contextual or otherwise cultural adaptation but, instead, from the fact that the phenomenon referred to by the questionnaire item was novel. The difficulty was thus not one of identifying the appropriate everyday language expression in English but rather the fact that the concept itself is innovative in an educational context in a way which diverges from everyday language. Conceptualising ‘learning’ as a collaborative process involving the creation of shared objects over time, as the Trialogical approach to learning (Paavola and Hakkarainen 2005) does, is atypical and not quite the ordinary, everyday understanding of what ‘learning’ refers to. As the approach reconceptualises or expands the concept of learning, individual questionnaire items probing about learning are difficult to phrase in ‘ordinary language’ if they are to keep the intended meaning. Therefore, certain such terms may have to, at least to a certain degree, initially be experienced as unfamiliar by the target group. This challenge was handled by accompanying such questionnaire items with explanations and examples. Such modifications were also made in the original instrument, making this a case of ‘decentering’, i.e. opening the original instrument for improvement during the translation procedure (van Widenfelt et al. 2005).

As mentioned in the background, cultural and contextual aspects may also need to be considered when adapting an instrument into a new context (Borsa, Damásio, and Bandeira 2012). The organisation of courses and the design of learning activities may vary significantly between different educational contexts. Rules and legislation as well as expectations and attitudes towards students, learning and teaching may differ considerably. Innovation and creativity among learners may be more or less encouraged by teachers and embraced by the students themselves. Attitudes towards the use of technologies may differ. Examinations may be strictly individual or allow different degrees of collaboration among students. Such attitudes and expectations may affect students’ interactions and therefore the possibilities of implementing the learning activities addressed by the CKP questionnaire. It is therefore conceivable that very competitive university cultures may undermine the development of fruitful collaborative interaction. Moreover, if students have little agency and initiative in orchestrating their studying and learning practices, the setting may not be appropriate for the activities indicated by the items of the questionnaire.

Recent literature on the adaptation of instruments stresses adding an evaluation of the instrument’s factorial structure to the adaptation process (Borsa, Damásio, and Bandeira 2012); consequently, an ESEM analysis was added to the process of developing an international version of the CKP. A test of the factorial solution of CKP provided evidence that the factorial solution found in the Finnish language sample could be applied to the international English language sample, as the ESEM solution model provided a good fit for the data. We found that items designated to factors 5 (Understanding various disciplines and practices) and 6 (Interdisciplinary collaboration and communication) in the present data loaded more onto both factors than in the original data. In the original data, students more often came from a single discipline, i.e. media engineering or life sciences, even though there were cases where teachers were from different disciplines or external customers provided experiences from their respective organisational practices. A true case of interdisciplinary collaboration would entail that students worked in interdisciplinary teams during the course. We expect that the reason for the difference in loadings is that most of the courses in the present data involved interdisciplinary collaboration among the students and, therefore, the participants did not differentiate between the two factors regarding understanding various disciplines and interdisciplinary collaboration. To further examine the differences between these two factors, additional data are needed representing both types of pedagogical designs.

Conclusion

The CKP questionnaire aims to assess new aspects of a generic competence related to collaboration, interdisciplinarity and technology use. In the literature, competencies are mostly defined and measured as pertaining solely to individual skills or abilities. Here, the aim is to advance understanding of collective aspects of competence in knowledge work and of how these develop in various pedagogical settings and cultural contexts. The questionnaire provides an instrument for making comparisons across local and intercultural contexts. The adaptation process brought out the conceptual challenges of adjusting theoretically grounded questionnaire items to another language. However, it also helped to clarify the intended meaning of the items in both languages and cultural contexts. The results of the statistical validation provided evidence that the latent factor model found in the original instrument also provided a good fit for the adapted questionnaire. As a next step, it would be valuable to test the questionnaire empirically in diverse contexts.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Klas Karlgren

Klas Karlgren is a senior researcher in the areas of simulation and technology-enhanced learning. He leads the MINT research team at the department of Learning, Informatics, Management and Ethics at Karolinska Institutet, a medical university in Stockholm, Sweden. He is also a researcher and research coordinator at the Södersjukhuset hospital in Stockholm, Sweden and associate professor at the SimArena simulation center at the Western Norway University of Applied Sciences, Ber

Minna Lakkala

Minna Lakkala, Ph.D. (psychology), works as a university researcher at the University of Helsinki. She specializes in technology-enhanced teaching and learning, knowledge creation pedagogy and competence learning at all educational levels. She has participated in several national and international research and development projects concerning educational practices since 1998.

Auli Toom

Auli Toom, PhD, is a professor of higher education and director of the Centre for University Teaching and Learning (HYPE) at the University of Helsinki, Finland. She is a visiting professor at the University of Tartu, Estonia. Dr. Toom is also the director of the doctoral programme PsyCo (Psychology, Learning and Communication) and the vice president of the Finnish Educational Research Association. Her research interests include teacher knowing, competence, expertise and agency among students and teachers, which she investigates in basic education, teacher education and higher education contexts. She leads several research projects on higher education and teacher education.

Liisa Ilomäki

Liisa Ilomäki, EdD, docent (adjunct professor) in education and technology, works at the University of Helsinki, Finland. Her research interests include the use of digital technologies in learning and teaching and the development of students’ knowledge work competence. Ilomäki has been involved in several European and national research projects in technology-enhanced learning.

Pekka Lahti-Nuuttila

Pekka Lahti-Nuuttila is a product manager at Hogrefe Psykologien Kustannus Oy. He currently also does research on developmental language disorder in the Helsinki Longitudinal SLI Study at the department of Otorhinolaryngology and Phoniatrics, Head and Neck Surgery at the Helsinki University Hospital and the University of Helsinki. The research is part of his doctoral studies at the department of Psychology and Logopedics in the Faculty of Medicine, University of Helsinki.

Hanni Muukkonen

Hanni Muukkonen is a professor in educational psychology at the Faculty of Education, University of Oulu, Finland. Her current research focuses on collaborative learning, knowledge creation, learning analytics, and technology in education.

References

- Ananiadou, K., and M. Claro. 2009. “21st Century Skills and Competences for New Millennium Learners in OECD Countries.” OECD Education Working Papers, OECD Publishing, no. 41.

- Arum, R., and J. Roksa. 2011. Academically Adrift. Limited Learning on College Campuses. Chicago: University of Chicago Press.

- Asparouhov, T., and B. Muthén. 2009. “Exploratory Structural Equation Modeling.” Structural Equation Modeling: A Multidisciplinary Journal 16 (3): 397–438. doi:10.1080/10705510903008204.

- Badcock, P. B. T., P. E. Pattison, and K.-L. Harris. 2010. “Developing Generic Skills through University Study: A Study of Arts, Science and Engineering in Australia.” Higher Education: The International Journal of Higher Education and Educational Planning 60 (4): 441–458.

- Beaton, D., C. Bombardier, F. Guillemin, and M. B. Ferraz. 2000. “Guidelines for the Process of Cross-cultural Adaptation of Self-report Measures.” Spine 25 (24): 3186–3191. doi:10.1097/00007632-200012150-00014.

- Beaton, D., C. Bombardier, F. Guillemin, and M. B. Ferraz. 2007. “Recommendations for the Cross-Cultural Adaptation of the DASH & QuickDASH Outcome Measures.” Accessed February 3 2019. http://dash.iwh.on.ca/sites/dash/files/downloads/cross_cultural_adaptation_2007.pdf

- Borsa, J. C., B. F. Damásio, and D. R. Bandeira. 2012. “Cross-Cultural Adaptation and Validation of Psychological Instruments: Some Considerations.” Paidéia 22 (53): 423–432.

- Chan, C. K. Y., Y. Zhao, and L. Y. Y. Luk. 2017. “A Validated and Reliable Instrument Investigating Engineering Students’ Perceptions of Competency in Generic Skills.” Journal of Engineering Education 106 (2): 299–325. doi:10.1002/jee.20165.

- Chen, H.-Y., and J. R. Boore. 2010. “Translation and Back-translation in Qualitative Nursing Research: Methodological Review.” Journal of Clinical Nursing 19 (1–2): 234–239. doi:10.1111/j.1365-2702.2009.02896.x.

- Clarke, T., and E. Clarke. 2009. “Born Digital? Pedagogy and Computer-assisted Learning.” Education & Training 51 (5/6): 395–407. doi:10.1108/00400910910987200.

- Cobo, C. 2013. “Skills for Innovation: Envisioning an Education that Prepares for the Changing World.” Curriculum Journal 24 (1): 67–85. doi:10.1080/09585176.2012.744330.

- Crebert, G., M. Bates, B. Bell, C. J. Patrick, and V. Cragnolini. 2004. “Developing Generic Skills at University, during Work Placement and in Employment: Graduates’ Perceptions.” Higher Education Research & Development 23 (2): 147–165. doi:10.1080/0729436042000206636.

- de la Harpe, B., A. Radloff, and J. Wyber. 2010. “Quality and Generic (professional) Skills.” Quality in Higher Education 6 (3): 231–243. doi:10.1080/13538320020005972.

- European Union. 2010. “2010 Joint Progress Report of the Council and the Commission on the Implementation of the ‘Education and Training 2010 Work Programme’.” Official Journal of the European Union C 117: 1–7.

- Guillemin, F., C. Bombardier, and D. Beaton. 1993. “Cross-Cultural Adaptation of Health-Related Quality of Life Measures: Literature Review and Proposed Guidelines.” Journal of Clinical Epidemiology 46 (12): 1417–1432. doi:10.1016/0895-4356(93)90142-n.

- Hakkarainen, K. 2009. “A Knowledge-practice Perspective on Technology-mediated Learning.” International Journal of Computer-Supported Collaborative Learning 4 (2): 213–231. doi:10.1007/s11412-009-9064-x.

- Hooper, D., J. Coughlan, and M. R. Mullen. 2008. “Structural Equation Modelling: Guidelines for Determining Model Fit.” The Electronic Journal of Business Research Methods 6 (1): 53–60.

- Hyytinen, H., K. Nissinen, J. Ursin, A. Toom, and S. Lindblom-Ylänne. 2015. “Problematising the Equivalence of the Test Results of Performance-based Critical Thinking Tests for Undergraduate Students.” Studies in Educational Evaluation 44: 1–8. doi:10.1016/j.stueduc.2014.11.001.

- Hyytinen, H., A. Toom, and L. Postareff. 2018. “Unraveling the Complex Relationship in Critical Thinking, Approaches to Learning and Self-efficacy Beliefs among First-year Educational Science Students.” Learning and Individual Differences 67: 132–142. doi:10.1016/j.lindif.2018.08.004.

- Ilomäki, L., M. Lakkala, and K. Kosonen. 2013. “Mapping the Terrain of Modern Knowledge Work Competencies.” Paper presented at the 15th Biennial EARLI Conference for Research on Learning and Instruction, Munich, Germany, August 27–31.

- Klusek, L., and J. Bernstein. 2006. “Information Literacy Skills for Business Careers - Matching Skills to the Workplace.” Journal of Business & Finance Librarianship 11 (4): 3–21. doi:10.1300/J109v11n04_02.

- Knorr Cetina, K. 2001. “Objectual Practice.” In The Practice Turn in Contemporary Theory, edited by T. R. Schatzki, K. Knorr Cetina, and E. von Savigny, 184–197. London and NY: Routledge.

- Marsh, H. W., A. J. S. Morin, P. D. Parker, and G. Kaur. 2014. “Exploratory Structural Equation Modeling: An Integration of the Best Features of Exploratory and Confirmatory Factor Analysis.” Annual Review of Clinical Psychology 10: 85–110. doi:10.1146/annurev-clinpsy-032813-153700.

- Marsh, H. W., B. Muthén, T. Asparouhov, O. Lüdtke, A. Robitzsch, A. J. S. Morin, and U. Trautwein. 2009. “Exploratory Structural Equation Modeling, Integrating CFA and EFA: Application to Students’ Evaluations of University Teaching.” Structural Equation Modeling: A Multidisciplinary Journal 16: 439–476. doi:10.1080/10705510903008220.

- Morales, L. S. 2001. “Cross-Cultural Adaptation of Survey Instruments: The CAHPS.” Chap. 2 in Assessing Patient Experiences with Healthcare in Multi-Cultural Settings. http://www.Rand.org

- Morin, A. J. S., H. W. Marsh, and B. Nagengast. 2013. “Exploratory Structural Equation Modeling.” In Structural Equation Modeling: A Second Course, edited by G. R. Hancock and R. O. Mueller, 395–436. Charlotte, NC, US: IAP Information Age Publishing.

- Muukkonen, H., M. Lakkala, J. Kaistinen, and G. Nyman. 2010. “Knowledge Creating Inquiry in a Distributed Project Management Course.” Research and Practice in Technology Enhanced Learning 5 (2): 1–24. doi:10.1142/S1793206810000827.

- Muukkonen, H., M. Lakkala, P. Lahti-Nuuttila, L. Ilomäki, K. Karlgren, and A. Toom. 2019. “Assessing the Development of Collaborative Knowledge Work Competence: Scales for Higher Education Course Contexts.” Scandinavian Journal of Educational Research 1–19. doi:10.1080/00313831.2019.1647284.

- Muukkonen, H., M. Lakkala, A. Toom, and L. Ilomäki. 2017. “Assessment of Competences in Knowledge Work and Object-bound Collaboration during Higher Education Courses.” In Higher Education Transitions: Theory and Research, edited by E. Kyndt, V. Donche, K. Trigwell, and S. Lindblom-Ylänne, 288–305. London: Routledge.

- The National Commission on Accountability in Higher Education. 2005. Accountability for Better Results: A National Imperative for Higher Education. Boulder, CO: State Higher Education Executive Officers’ Association.

- Paavola, S., and K. Hakkarainen. 2005. “The Knowledge Creation Metaphor – An Emergent Epistemological Approach to Learning.” Science and Education 14 (6): 535–557. doi:10.1007/s11191-004-5157-0.

- Paavola, S., M. Lakkala, H. Muukkonen, K. Kosonen, and K. Karlgren. 2011. “The Roles and Uses of Design Principles for Developing the Trialogical Approach on Learning.” Research in Learning Technology 19 (3): 14. doi:10.3402/rlt.v19i3.17112.

- Schmidt, S., M. Power, and M. Bullinger. 2002. Cross-Cultural Analysis of Relationships of Health Indicators across Europe: First Results based on the EUROHIS Project. Copenhagen: WHO Regional Office for Europe.

- Sfard, A. 1998. “On Two Metaphors for Learning and the Dangers of Choosing Just One.” Educational Researcher 27 (2): 4–13. doi:10.3102/0013189X027002004.

- Tynjälä, P. 2008. “Perspectives into Learning at the Workplace.” Educational Research Review 3: 130–154.

- van Widenfelt, B. M., P. D. A. Treffers, E. de Beurs, B. M. Siebelink, and E. Koudijs. 2005. “Translation and Cross-Cultural Adaptation of Assessment Instruments Used in Psychological Research with Children and Families.” Clinical Child and Family Psychology Review 8 (2): 135–147.

- Voogt, J., and N. P. Roblin. 2012. “A Comparative Analysis of International Frameworks for 21st Century Competences: Implications for National Curriculum Policies.” Journal of Curriculum Studies 44 (3): 299–321. doi:10.1080/00220272.2012.668938.