?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Discrimination and fairness are major concerns in algorithmic models. This is particularly true in insurance, where protected policyholder attributes are not allowed to be used for insurance pricing. Simply disregarding protected policyholder attributes is not an appropriate solution as this still allows for the possibility of inferring protected attributes from non-protected covariates, leading to the phenomenon of proxy discrimination. Although proxy discrimination is qualitatively different from the group fairness concepts discussed in the machine learning and actuarial literature, group fairness criteria have been proposed to control the impact of protected attributes on the calculation of insurance prices. The purpose of this paper is to discuss the relationship between direct and proxy discrimination in insurance and the most popular group fairness axioms. We provide a technical definition of proxy discrimination and derive incompatibility results, showing that avoiding proxy discrimination does not imply satisfying group fairness and vice versa. This shows that the two concepts are materially different. Furthermore, we discuss input data pre-processing and model post-processing methods that achieve group fairness in the sense of demographic parity. As these methods induce transformations that explicitly depend on policyholders' protected attributes, it becomes ambiguous whether direct and proxy discrimination is, in fact, avoided.

1. Introduction

1.1. Problem context

For legal and societal reasons, there are several policyholder attributes that are not allowed to be used in insurance pricing (Avraham et al., Citation2014; Chibanda, Citation2021; European Commission, Citation2012; European Council, Citation2004; Prince & Schwarcz, Citation2020); for instance European law does not allow the use of information on sex in insurance pricing. Furthermore, ethnicity is a critical attribute that is typically viewed as a protected characteristic. In the actuarial and insurance literature, Charpentier (Citation2022), Frees and Huang (Citation2022) and Xin and Huang (Citation2021) give extensive overviews on the potential use (direct or indirect) of policyholders' protected attributes and the implications for insurance prices, while Avraham et al. (Citation2014), Prince and Schwarcz (Citation2020) and Maliszewska-Nienartowicz (Citation2014) provide legal viewpoints on this topic. Closely related is the recent report of the European Insurance and Occupational Pension Authority (EIOPA) (EIOPA, Citation2021), which discusses governance principles towards an ethical and trustworthy use of artificial intelligence in the insurance sector.

A critical observation from this literature is that just ignoring (being unaware of) protected information does not guarantee a lack of discrimination in pricing. In the presence of statistical associations between covariates used in pricing, it can occur that protected attributes are inferred from non-protected covariates, which thus act as undesirable proxies for, e.g. sex or ethnicity. As a result, the calculated insurance prices are subject to proxy discrimination; for a wide-ranging overview of this idea see (Tschantz, Citation2022).

Defining, identifying and addressing proxy discrimination presents a number of interrelated challenges and here we outline but a few. First, such discrimination need not be intentional, as the inference of protected attributes can take place implicitly through the fitting procedure of a predictive model. The complexity of models often used in insurance pricing can make this inference process quite opaque to the user. Second, the non-protected covariates implicitly used as proxies cannot just be removed from models, as, besides their proxying effect, they are typically considered legitimate predictors of policyholders' risk (e.g. smoking status can correlate with sex, while at the same time having a clear and established link to health outcomes). Third, proxy discrimination relates to the way that prices are calculated and does not necessarily imply adverse outcomes for any protected demographic group – in fact, in some situations proxy discrimination can mask rather than exacerbate demographic disparities (see Remark 9 in Lindholm et al. (Citation2022).)

The third challenge above can be a source of confusion when discussing indirect discriminatory effects, as it relates to the complex relation between proxy discrimination and notions of group fairness, which place requirements on the joint statistical behaviour of insurance prices, protected attributes and actual claims (for example, independence between prices and protected attributes is known as demographic parity). Common definitions of indirect discrimination appear to require – and maybe even conflate with each other – both the proxying of protected attributes and an adverse impact on protected groups; see (Maliszewska-Nienartowicz, Citation2014), but also the broader discussion of Barocas et al. (Citation2019), Chapter 4.

There have been several approaches to prevent proxy discrimination, including restrictions in the use of covariates, discussed in Section 6 of Frees and Huang (Citation2022). More technical approaches and price adjustments include: a counter factual approach drawing from causal inference, see see (Araiza Iturria et al., Citation2022; Charpentier, Citation2022; Kusner et al., Citation2017); the probabilistic approach of Lindholm et al. (Citation2022) focussing specifically on implicit inferences; and the projection method of Frees and Huang (Citation2022). The latter approach finds itself within in a broader literature which considers adjustments to covariates, which produce independence of protected attributes from non-protected covariates; see also (Grari et al., Citation2022). On the face of it, this seems an attractive proposition: by breaking the dependence between protected attributes and their potential proxies, proxy discrimination is prevented. In other words: satisfying a group fairness perspective may also have the additional beneficial effect of addressing proxy discrimination. In the sequel, we will take a critical perspective to this particular rationale.

1.2. Aims and outline of the paper

In this paper, we aim to investigate the relationship between proxy discrimination – and the requirement to avoid it – and notions of group fairness. In particular, we will focus on the question of whether standard notions of group fairness (namely: demographic parity, equalized odds, and predictive parity) are consistent with avoiding proxy discrimination. This is a pertinent question, not least in the context of literature advocating the former as a solution to the latter.

In Section 2, we provide a technical definition of avoiding proxy discrimination as an individual fairness property. Individual fairness, broadly, requires that policyholders with the same characteristics receive the same premium (Charpentier, Citation2022; Dwork et al., Citation2012). In our context, we require that whether or not policyholder profiles are treated as equivalent should not hinge upon the dependence structure between protected attributes and non-protected covariates. We show through examples how standard unawareness pricing, arising from optimal claims prediction by ignoring protected information, leads to proxy discrimination, and how this issue can be addressed by the approach of Lindholm et al. (Citation2022).

Then, we turn our attention to the compatibility of the individual fairness property of avoiding proxy discrimination with standard group fairness properties. We show that avoiding proxy discrimination does not imply satisfying any of the three group fairness properties considered. Conversely, satisfying demographic parity does not imply avoiding proxy discrimination. These results indicate that neither of the two requirements of group fairness or avoiding proxy discrimination is strictly stronger then the other; hence the former cannot be viewed as a quick fix for the latter. As these results are negative, they are derived by designing concrete (counter-)examples that demonstrate potential trade-offs and incompatibilities.

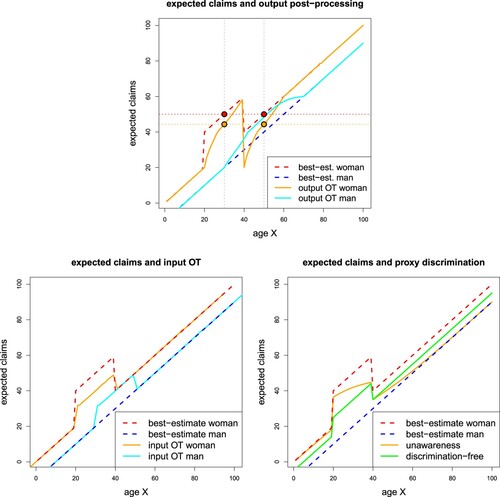

In Section 3, we discuss in more detail the impact that strategies to effect group fairness have on insurance prices, focussing specifically on demographic parity. The theory of optimal transport has recently been promoted to make statistical models fair, via its application in input pre-processing and model post-processing methods, see (Chiappa et al., Citation2020; del Barrio et al., Citation2019); an early application of these ideas in an insurance context w.r.t. creating gender-neutral policies in life insurance using mean-field approximations can be found in Example 5.1 of Djehiche and Lofdahl (Citation2016). We study these pre- and post-processing methods, and conclude that they may be helpful tools for achieving fairness objectives in insurance pricing. Specifically, model post-processing, which is more frequently used in machine learning, is simpler to apply and allows for optimal modelling choices from the perspective of predictive accuracy. However, model post-processing can lead to results that are not easily explainable to insurance customers and policymakers. In addition, the adjustments made by these methods depend on the statistical relations between protected attributes and non-protected covariates. As these relations are often driven by portfolio composition rather than causal relations, their strength and direction remains portfolio-specific. This means that any adjustments (e.g. to model inputs) in order to achieve group fairness will have to be different from insurer to insurer. Such arbitrariness is hard to imagine in practice, for both regulatory and commercial reasons.

Furthermore, the extent to which the resulting prices can be considered free of discrimination is a matter of interpretation. Focusing on the case where model inputs are transformed to achieve independence, these adjustments are explicit functions of protected attributes and hence subject to direct discrimination. Unless the transformed inputs have an interpretation that is justifiable in its own right, we would end up in a paradoxical situation where proxy discrimination appears addressed (by independence between transformed protected and unprotected attributes), at the price of introducing direct discrimination. But this of course does not make sense, since the whole idea of avoiding proxy discrimination is conceptually predicated on the lack of direct discrimination.

In Section 4, we discuss our overall conclusions and further aspects of the problem. Mathematical results are proved in Appendix 1.

1.3. Relation to the machine learning literature

The issues we address in this paper from an insurance perspective are closely related to extensive discussions in the machine learning literature; for wide overviews of those discussions see (Barocas et al., Citation2019; Mehrabi et al., Citation2019; Tschantz, Citation2022). One particular difference of the discussions of fairness in the insurance pricing and machine learning contexts is that, in the former, responses of predictive models are discrete numerical or continuous, while in the latter they are typically binary/categorical. This means that one cannot assume that proofs and technical arguments developed in the machine learning literature on the relation between different notions of fairness necessarily transfer to the insurance context. Furthermore, the regulatory emphasis in insurance is more on avoiding direct and indirect (or proxy) discrimination, rather than comparing the outcomes on different demographic groups (European Commission, Citation2012; European Council, Citation2004).

We consider proxy discrimination as a type of individual fairness – since its focus is on the way similar policyholders should be treated – and we introduce a suitable notion of similarity. Our perspective on proxy discrimination is essentially the same as omitted variable bias; see (Mehrabi et al., Citation2019; Tschantz, Citation2022). We note that a substantial variety of alternative notions of proxy discrimination exist and these are typically formulated via the rich framework of causal inference, e.g. (Kilbertus et al., Citation2017; Kusner et al., Citation2017; Qureshi et al., Citation2016). In contrast, we make no assumptions regarding causality. There are three reasons for this. First, our focus is on indirect inference of protected attributes and this is an issue of statistical association, rather than causality. Second, the statistical relations between covariates are often not the result of any causal relations, but instead artefacts of the composition of insurance portfolios. Third, any causal relations that do exist between covariates are not necessarily well understood in practice, particularly in high-dimensional insurance pricing applications. Hence our approach is motivated by a mix of conceptual and pragmatic arguments that apply in the insurance context.

Substantial literature exists on the incompatibility of different notions of fairness, see for example the seminal contribution of Kleinberg et al. (Citation2016) and the related discussion by Hedden (Citation2021). Our contribution to this literature thus consists of demonstrating incompatibility of avoiding proxy discrimination with group fairness notions, from an insurance perspective. In a sense, such incompatibility is not particularly surprising, given the rather different scope of individual and group fairness. The potential conflict between those two classes of fairness criteria is discussed in Binns (Citation2020) and Friedler et al. (Citation2016), using, respectively, discursive and technical arguments but reaching consistent conclusions: that such conflicts demonstrate the need to clarify ideas about justice and the particular types of harm that should be prevented in specific contexts. While we do not examine the moral foundations of the technical fairness criteria, this is a conclusion we support. More practically, trade-offs between individual and group fairness are operationalized by reflecting them within model fitting processes, see for example (Awasthi et al., Citation2020; Lahoti et al., Citation2019; Zemel et al., Citation2013), noting that these papers do not specifically consider proxy discrimination as a type of individual (un)fairness.

Finally, the applications of methods from Optimal Transport has received prominence both in the machine learning literature, see (Chiappa et al., Citation2020; del Barrio et al., Citation2019), and more recently in actuarial science, e.g. (Charpentier et al., Citation2023). Our contribution to this strand of literature is primarily conceptual. We show how the incompatibility between avoiding proxy discrimination and group fairness manifests through the generation of directly discriminatory prices, when optimal transport methods are deployed to achieve demographic parity in insurance. Furthermore, we highlight the communication challenges associated with the transformations of model inputs and outputs.

2. Discrimination and fairness in insurance pricing

2.1. Proxy discrimination

To set the stage, we fix a probability space with

describing the real world probability measure. We consider the random triplet

on this probability space. The response variable Y describes the insurance claim that we try to predict (and price). The vector

describes the non-protected covariates (non-discriminatory characteristics), and

describes the protected attributes (discriminatory characteristics). We assume that the partition into non-protected covariates

and protected attributes

is given exogenously, e.g. by law or by societal norms and preferences. We use the distribution

to describe an insurance portfolio and its claims, in particular, the random selection of a policyholder from the insurance portfolio, based on their characteristics, is given by the distribution

. Different insurance companies may have different insurance portfolio distributions

, and this insurance portfolio distribution typically differs from the overall population distribution in a given society because the insurance penetration is not uniform across the entire population. For simplicity, in this paper, we assume that the protected attributes

are discrete and finite, only taking values in a finite set

.

In our context, concern for proxy discrimination arises from the understanding that even when the protected attributes are not used explicitly in pricing, they may still be used implicitly, because the pricing mechanisms deployed may include inference of

from the non-protected covariates

. Hence, we require that insurance prices do not depend on the conditional distribution

, such that a modification of that conditional distribution does not impact the individual prices. To formalize this concern, we first note that the distribution

is specific to a particular portfolio and insurance company. Let

be the set of all distributions over

, such that any alternative insurance portfolio can be identified with a distribution

; one may think of

as a modification of the portfolio distribution

or as another portfolio in the same idealized insurance market. Further, assume that

takes values in a set

, i.e.

for all

. To start with, we consider proxy discrimination as a property of pricing functionals, defined as follows.

Definition 2.1

A pricing functional π is a mapping , such that for a portfolio

, a policyholder with non-protected covariates

is charged the insurance price

.

Note that, by construction, a pricing functional as defined above avoids direct discrimination since is not an explicit input to it. Avoiding proxy discrimination is a more stringent requirement, given as follows.

Definition 2.2

A pricing functional π on avoids proxy discrimination if for any two portfolios

that satisfy

,

and

, we have

(1)

(1)

Definition 2.2 of (lack of) proxy discrimination requires that in comparable insurance portfolios, prices should be identical. Comparability means that the portfolio distributions and

should be identical in all aspects apart from the dependence structure between

and

, which is precisely the source of potential proxy discrimination. We may thus view the property of avoiding proxy discrimination as a particular form of individual fairness. That is, broadly, the requirement that policyholders with similar profiles regarding non-protected covariates

, receive in similar circumstances the same premium (Charpentier, Citation2022; Dwork et al., Citation2012). In the current context ‘similar circumstances’ refers to the insurance portfolios having the same structure, except for the dependence between the protected attributes

and the non-protected covariates

. This dependence is insurance company specific and originates from the specific structure of the insurance portfolio.

In Definition 2.2 no specific pricing (or predictive) model is assumed – the definition can be applied to any functional of non-protected covariates and portfolio distribution. We note that a pricing functional violating (Equation1(1)

(1) ) in general does not allow us to conclude that such violations will be material in the context of a specific portfolio. To talk about materiality of proxy discrimination we need to consider a reference portfolio structure

that is comparable to

. By convention, we will choose

such that under that measure

are independent.

Definition 2.3

Proxy discrimination is material for the pricing functional π and the portfolio , if, for the measure

with

it holds that

(2)

(2)

The positive probability in (Equation2(2)

(2) ) is calculated with respect to the distribution of

which is the same under

and

. This formulation aims to avoid assigning materiality to scenarios where

for policies with

that do not actually occur in the portfolio.

Our aim is to examine standard types of insurance prices from the perspective of proxy discrimination.

2.2. Discrimination-free insurance prices

Best-estimate price: For insurance pricing, one aims at designing a regression model that describes the conditional distribution of Y, given the explanatory variables . Moreover, the main building block for technical insurance prices is the conditional expectation of claims, given the policyholder characteristics. This motivates the following definition.

Definition 2.4

For a portfolio the best-estimate price of Y, given full information

, is given by

(3)

(3)

This price is called ‘best-estimate’ because it has minimal mean squared error (MSE), i.e. it is the most accurate predictor for Y, given , in the

-sense; for simplicity, we assume that all considered random variables are square-integrable with respect to

.

In general, the best-estimate price directly discriminates because it uses the protected attributes as an input, see (Equation3

(3)

(3) ). As such, it does not provide a pricing functional in the sense of Definition 2.1.

Unawareness prices : The simplest response to the direct discrimination of best-estimate prices is to obtain a pricing functional by conditioning on the non-protected covariates only. This approach corresponds to the concept of fairness through unawareness (FTU) in machine learning, motivating the following definition.

Definition 2.5

For a portfolio the unawareness price of Y, given

, is defined by

(4)

(4)

The unawareness price does not directly discriminate because it does not use protected attributes as explicit inputs. However, the unawareness price is generally not free from proxy discrimination, as it allows implicit inference of

through the tower property

(5)

(5) From Equation (Equation5

(5)

(5) ) it is apparent that a modification of the conditional distribution

would generally impact the calculation of

and Equation (Equation1

(1)

(1) ) will not generally be satisfied. If there is statistical dependence (association) between

and

with respect to

, unawareness prices implicitly use this dependence for inference of

from

; in Example 2.12, below, we illustrate this inference on an explicit example.

Nonetheless, in practice one still needs to establish whether, under the unawareness price and for a specific portfolio distribution , proxy discrimination is material. Hence, we need to compare

, given in (Equation5

(5)

(5) ), to the corresponding formula under

, given by

(6)

(6) The comparison of formulas (Equation5

(5)

(5) ) and (Equation6

(6)

(6) ) highlights that there are two necessary conditions for proxy discrimination becoming material for

; note that

by assumption. First, we need to have, for some

, a conditional probability

(7)

(7) i.e. we need to have dependence between

and

that allows us to (partly) infer the protected attributes

from the non-protected covariates

, such that

is used as a proxy for

. Second, the functional

needs to have a sensitivity in

, otherwise, if

(8)

(8) the inference potential from

to

is not exploited in the construction of

, and there is no proxy discrimination, see (Equation5

(5)

(5) ). In fact, under property (Equation8

(8)

(8) ) we may choose any portfolio distribution

and we receive equal unawareness and best-estimate prices. In that case, there cannot be any material proxy discrimination because

is sufficient to compute the best-estimate price (Equation3

(3)

(3) ). As an example, we suppose that (non-protected) telematics data

makes gender information

superfluous to predict automobile claims Y. This would imply a (causal) graph

, which means that

does not carry any additional information to predict claims Y, given

. Therefore, (Equation8

(8)

(8) ) holds in this telematics data example.

We summarize this discussion in the following proposition.

Proposition 2.6

The unawareness price µ on

is a pricing functional that generally does not avoid proxy discrimination.

For the unawareness price µ and a given portfolio

, consider the subset of policyholders with attributes

, such that:

for each

.

for each

, where

.

is a necessary condition for proxy discrimination for µ in portfolio

to be material.

The previous proposition gives a necessary condition for proxy discrimination to be material. Note that in the binary case this necessary condition is also sufficient, but in the general case this may not be true.

Discrimination-free insurance price : In order to address the issue of proxy discrimination, Lindholm et al. (Citation2022) proposed to break the inference potential in (Equation5(5)

(5) ), to arrive at what they term a discrimination-free insurance price. The idea is to replace the conditional distribution

in (Equation5

(5)

(5) ) by a (marginal) pricing distribution

, which thus breaks the statistical association between

and

.

Definition 2.7

For a portfolio , a discrimination-free insurance price (DFIP) of Y, given

, is defined by

(9)

(9) where the distribution

is dominated by

.

It follows directly from the construction of Definition 2.7 that the DFIP avoids proxy discrimination.

Proposition 2.8

Let be either exogenously given or, alternatively,

. In either of these cases, the DFIP

on

is a pricing functional that avoids proxy discrimination.

Remarks 2.9

A number of observations regarding Definition 2.7 and Proposition 2.8 apply.

The price (Equation9

(9)

(9) ) can be viewed as a conditional expectation under a pricing measure that satisfies

such that the covariates

and

are independent under

, and

. If we set

, then

and

; see also Proposition 2.10 below. If the distribution

is exogenous, then Definition 2.7 does not pose a specific requirement on how to choose it, except its support being dominated by

, since to make the DFIP (Equation9

(9)

(9) ) well-defined we need to assume that

exists for all

,

-a.s.

Under (Equation8

(8)

(8) ), i.e. if

does not have any sensitivity in

, the best-estimate price

, the unawareness price

and the DFIP

all coincide. In such a model proxy discrimination is not a material concern for the calculation of insurance prices – and even the best-estimate price avoids both direct and proxy discrimination. This is because

becomes sufficient to compute the best-estimate price and the specific dependence structure between

and

becomes irrelevant.

Under additional assumptions on causal graphs, the DFIP (Equation9

(9)

(9) ) coincides with the causal impact of

on Y, see (Araiza Iturria et al., Citation2022; Lindholm et al., Citation2022). However, as discussed in the introduction causal considerations are often too restrictive in insurance pricing as, generally, they require that there are no unmeasured confounders or that these unmeasured confounders satisfy additional restrictive causal assumptions, otherwise one cannot adjust for the protected attributes

; we refer to Pearl (Citation2009). In an insurance pricing context there are always policyholder attributes that cannot be observed and act as unmeasured confounders for which it is difficult/impossible to verify the necessary causal assumptions; e.g. in car driving the current health and mental states may matter to explain propensity to claims.

Motivated by the observation that the DFIP can be understood as an expectation under a change of probability measure, we note that we may then view as the

-optimal

-measurable price of Y in a model where

and

are independent. Following this argument, the DFIP can be represented according to the following proposition.

Proposition 2.10

Let , such that

Then, the DFIP of (Equation9

(9)

(9) ) can be represented as

for

-almost every

.

The proof of Proposition 2.10 is given in Appendix 1.

Remark 2.11

The DFIPs (Equation9(9)

(9) ) require the knowledge of

, hence they require collection and modelling of protected attributes

, a form of ‘fairness through awareness’, see (Dwork et al., Citation2012). When data on protected attributes are only partially available, then calculation of

is challenging; see (Lindholm et al., Citation2023) for a technical solution to this issue. Proposition 2.10 gives us a different means of addressing this problem, as it implies that we can estimate the DFIP directly from an i.i.d. sample

of

, without going via the best-estimate price. Let us consider here the case that

, and assume that we have access to (estimated) population probabilities

and

. Then, we can find an estimate for the DFIP by solving the weighted square loss problem

(10)

(10) where

is a restricted class of regression functions on

(e.g. GLMs); the solution

estimates the DFIP

. Naturally, this approach requires reliable estimation of the conditional distribution,

, using a partial but representative sample – otherwise it may introduce a different kind of bias and discrimination.

Furthermore, notice that calculation of the DFIP via (Equation9(9)

(9) ), that is, by first estimating

and then averaging out

, is a form of model-post processing. On the other hand, estimating DFIP via (Equation10

(10)

(10) ), is an in-process adjustment of the model, since proxy discrimination is removed as part of the estimation process. In Section 3, we will see how model pre- and post-processing is used to address a different criterion, demographic parity.

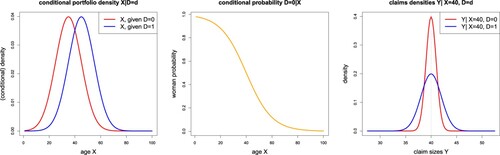

Examples

To illustrate the ideas of this section, and to set the stage for concepts discussed in later sections, we introduce two examples. First, we consider a situation where we have a response variable Y whose conditional expectation is fully described by the non-protected covariates , and the protected attributes

do not carry any additional information about the mean of the response Y. Therefore, for this model, proxy discrimination is immaterial and the best-estimate price is identical with the unawareness price and the DFIP, as discussed in the second item of Remarks 2.9. Moreover, this model is simple enough to be able to calculate all quantities of interest, and, even if it is unrealistic in practice, it allows us to gain intuition about the relationship between proxy discrimination and the group fairness concepts that will be introduced in the sequel.

Note that from now on, we will drop the dependence of various functionals on when there is no danger of confusion, e.g.

and

.

Example 2.12

No discrimination despite dependence of

Assume we have two-dimensional covariates having a mixture Gaussian portfolio density

(11)

(11) with

,

,

,

,

, and where we set

Thus, D is a Bernoulli random variable taking the values 0 and 1 with probability 1/2, and X is conditional Gaussian, given D = d, with mean

and variance

. Below, we make explicit choices for

and

which are kept throughout all examples. To make our examples more concrete, here and in subsequent sections, let X be the age of the policyholder, and D the gender of the policyholder with D = 0 for women and D = 1 for men.

For the response Y we assume conditionally, given ,

(12)

(12) That is, the mean of the response does not depend on the protected attributes

, but only on the non-protected covariates

. This means that

is sufficient to describe the mean of Y and Proposition 2.6 directly tells us that the corresponding unawareness prices are not subject to proxy discrimination. In fact, the best-estimate, unawareness, and discrimination-free insurance prices coincide in this example and they are given by

(13)

(13) Therefore, in this example, we do not have proxy discrimination and the best-estimate price is itself discrimination-free, see second item of Remarks 2.9.

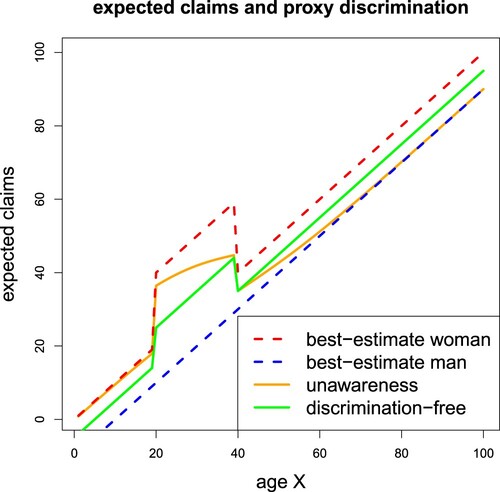

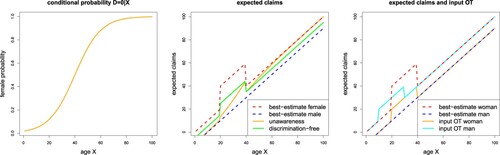

In Figure (lhs) we give an explicit example for model (Equation11(11)

(11) ). This plot shows the conditional Gaussian densities of X, given

; we select

, age gap

(providing

), and

. We can easily calculate the conditional probability of D = 0 (being woman), given age X,

(14)

(14) Figure (middle) shows these conditional probabilities as a function of the age variable X = x. For small X we have likely a woman, D = 0, and for large X a man, D = 1. Figure (rhs) shows the Gaussian densities of the claims Y at the given age X = 40 and for both genders D = 0, 1. The vertical dotted line shows the resulting means (Equation13

(13)

(13) ). These means coincide for both genders D = 0, 1, and the protected attribute D only influences the width of the Gaussian densities, see (Equation12

(12)

(12) ).

We give some general remarks on Example 2.12.

Figure 1. (lhs) Conditional Gaussian densities for

; (middle) conditional probability

as a function of

; (rhs) densities of claims Y for age X = 40 and genders D = 0, 1.

Remarks 2.13

A crucial feature of Example 2.12 is that the non-protected covariates

are sufficient to describe the mean of the response Y, and the protected attributes

only impact higher moments of Y. Therefore, no material proxy discrimination arises in this example from using the unawareness price, because (Equation13

(13)

(13) ) holds. From a practical point of view we may question such a model, but it has the advantage for the subsequent discussions that we do not need to rely on any type of proxy discrimination debiasing for stating the crucial points about group fairness and discrimination. We could modify (Equation12

(12)

(12) ) to include

also in the first moment of Y and derive similar conclusions, but then we would first need to convince the reader that the DFIP

is indeed the right way to correct for proxy discrimination.

A situation where protected attributes

only impact higher moments may arise in the case of a lack of historical data of a demographic group. This may lead to higher uncertainty, reflected in higher moments, but not the means. From an insurance pricing point of view, this manifests in higher risk loadings, which may then be subject to discrimination. Even though the use of risk loadings is not inconsistent with our Definitions 2.1 and 2.2, pricing functionals involving loadings are not discussed further in this paper. The situation where predictions for different demographic groups are subject to higher uncertainty finds parallels in the machine learning literature, where there is concern about poor performance of predictive models for populations that are under-represented in training samples, e.g. in the context of facial recognition see (Buolamwini & Gebru, Citation2018). The crucial point is whether such increased uncertainty has adverse impacts on these demographic groups, such as a higher likelihood of misidentification leading to systematic penalties, see, e.g. (Vallance, Citation2021).

We now present a variation of the previous example, where the dependence of leads to proxy discrimination, which requires correction in the sense of Equation (Equation9

(9)

(9) ).

Example 2.14

Proxy discrimination and DFIP

We again assume two-dimensional covariates having the same mixture Gaussian distribution as in (Equation11

(11)

(11) ). For the response variable Y we now assume that conditionally, given

,

(15)

(15) For Y representing health claims, the interpretation of this model is that female policyholders (D = 0) between ages 20 and 40 generate higher costs due to a potential pregnancy,Footnote1 and male policyholders generally have lower costs.

The resulting best-estimate prices, illustrated in Figure by the red and blue dotted lines, are given by

Hence, the above best-estimate prices have a sensitivity in

and

, and Proposition 2.6 directly tells us that the corresponding unawareness prices are subject to proxy discrimination. Another crucial difference of these best-estimate prices compared to the ones in Example 2.12 is that we do not have monotonicity in

for women, e.g. there is not a unique age x that leads to the best-estimate price

. This feature will become important later, when in Example 3.6 we apply output Optimal Transport methods to the same model.

We calculate the unawareness price

where we have used (Equation14

(14)

(14) ). This unawareness price is illustrated in orange colour in Figure . Not surprisingly, it closely follows the best-estimate prices for woman policyholders for small ages and men for large ages, because we can infer the gender D from the age X quite well, see Figure (middle). Thus, except in the age range from 20 to 60, we almost charge the best-estimate price to the corresponding genders, except to a few ‘mis-allocated’ men at small ages and women at high ages. This is precisely proxy discrimination and, in our understanding, consistent with what is described in paragraph 5 of Section 2 of Maliszewska-Nienartowicz (Citation2014), and can be interpreted as generating a disproportionate impact on (woman) policyholders.

Subsequently, the DFIP, using the choice , is shown in green colour in Figure and reads as

The price

exactly interpolates between the two best-estimate prices for women and men. As a result we have a cost reallocation between different ages which leads to a loss of predictive power and to cross-financing of claim costs within the portfolio.

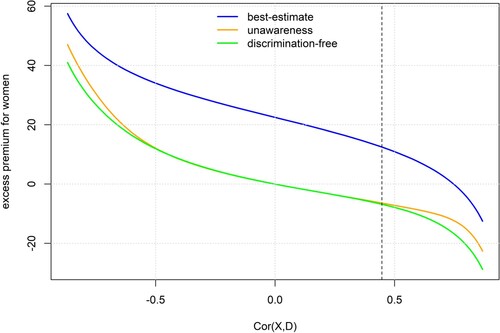

We now turn our attention to the differential outcomes for each gender, under each of the pricing mechanisms considered. Specifically, we calculate the ‘excess premium’ for women, as the difference of the average price for women (prices conditional on D = 0, minus the average price for men (prices conditional on D = 1). Furthermore, we consider how this excess premium varies in the correlation , which we can control via the model parameter δ (age gap) and plot the results in Figure . We observe that, as correlation increases, there is a sharper distinction between older male and younger female policyholders, which, given the effect of age on claims, reduces the excess premium for women. Furthermore, as expected, the excess premium is reduced by switching from best-estimate (blue) to either unawareness prices (green) or DFIP (orange). Furthermore, for all correlation values, the excess premium for the unawareness price dominates that for the DFIP, since the proxying of gender by age (more pronounced for correlation close to

), increases prices for women. However, this does not mean that using the DFIP produces more equal outcomes for each gender. Specifically, for high correlation values we see that the excess premium for

is the highest in absolute value.

Figure 3. Average excess premium for women D = 0 compared to men D = 1, in Example 2.14, as a function of . The dashed vertical line corresponds to the baseline scenario of

,

.

Finally, it is also of interest to establish how the different pricing functionals we consider perform as predictors of Y. Let Π be a random variable, representing the statistical behaviour under of insurance prices derived by a given pricing functional. For example, if

is the unawareness price,

. Then, the performance of the price Π as a predictor of Y can be measured by the mean squared error (MSE), given by

. We also consider a potential bias by providing the average prediction

of the prices, over the portfolio distribution. We calculate the resulting MSEs using Monte Carlo simulation with a pseudo-random sample of size 1 million. The results in Table show the negative impact of deviating from the optimal predictors, based on

and

, respectively. This is the price we pay for avoiding proxy discrimination with respect to the protected attributes

. Our pricing measure choice

produces a bias as can be seem from the last column of Table .

2.3. Group fairness axioms

As discussed in Section 2.1, the property of avoiding proxy discrimination can be understood as an individual fairness property, in the sense that it requires that similar policyholders, in the sense specified by Definition 2.2, be treated similarly. This has implications on how the pricing functionals (Equation9(9)

(9) ) avoiding proxy discrimination are constructed, without exploiting the dependence structure of

and

. On the other hand, as demonstrated in Example 2.14, Figure , addressing proxy discrimination does not consider at all the statistical properties of DFIPs; for example, for

, it will generally hold that

(16)

(16) such that different demographic groups, on average, are charged different premiums.

Table 1. MSEs and average prediction of the different prices in Example 2.14.

To address concerns about the implications of using any pricing method for the outcomes for different demographic groups, we need to consider the resulting prices as random variables. As an example, the right-hand side of (Equation16(16)

(16) ) uses the random selection of an insurance policy

and its related price

, respectively, from the insurance portfolio, conditioned on selecting an insurance policy with protected attributes

. Throughout this section, we denote the prices in an insurance portfolio by the random variable Π. We may interpret

as the price for a policyholder with profile

,

. If π is a pricing functional, then we can set

, such that Π is

-measurable; note however that the definitions of the group fairness properties below do not rely on such a measurability condition on Π.

We now introduce the three most popular group fairness properties in the machine learning literature, which are essentially properties of the joint distribution of . The properties we consider here are demographic parity, equalized odds and predictive parity; we refer to Barocas et al. (Citation2019), Xin and Huang (Citation2021) and Charpentier (Citation2022). In the next section, we show that the DFIP of Example 2.12, given in Equation (Equation13

(13)

(13) ), violates all three of these group fairness axioms. These three notations of group fairness are collected next definition.

Definition 2.15

The prices Π, in the context of portfolio distribution , satisfy:

Demographic parity, if Π and

are independent under

, implying that

-a.s.,

(17)

(17)

Equalized odds, if Π and

are conditionally independent under

, given Y, implying that

-a.s.,

(18)

(18)

Predictive parity, if Y and

are conditionally independent under

, given Π, implying that

-a.s.,

(19)

(19)

We comment on each of the three group fairness notions of Definition 2.15 below, focussing on the conditions needed for pricing mechanisms to satisfy them and whether they can be realistically expected to hold within insurance portfolios. We note that in the fairness and machine learning literature, see, e.g. (Barocas et al., Citation2019), the equalized odds and predictive parity properties are primarily used for binary responses, which is of less relevance for actuarial pricing applications.

Remarks 2.16

Demographic parity (Agarwal et al., Citation2019; also: statistical parity, independence axiom) is the simplest notion to interpret. If Π satisfies demographic parity, this directly implies

for all

, which can be contrasted with (Equation16

(16)

(16) ). Hence, policyholders in different protected demographic groups are on average charged the same premium. If the prices Π are

-measurable, then a sufficient (but not necessary) condition for Π to satisfy demographic parity is that

and

are independent. In practice that would mean that the insurance portfolio is composed in a way such that the conditional distribution of the non-protected covariates

, given

, is the same for all demographic groups

. This condition is hard to achieve in a portfolio, even by design. If

describes gender, there may be general insurance products where this is feasible (property insurance). However, e.g. in commercial accident insurance this may not be possible, because the genders are represented with different frequencies in different job profiles, which may make it impossible to compose a portfolio such that the selected jobs have the same distribution for both genders.

Moreover, we may have two different insurance companies with portfolio distributions

and

that only differ in the dependence structure, and which apply the same pricing mechanism Π to the same insurance product Y. It may happen, under specific assumptions on

and

, that one company satisfies demographic parity and the other one not. This seems difficult to explain and accept.

Equalized odds (Hardt et al., Citation2016; also: disparate mistreatment, separation axiom) implies that within groups of policyholders that produce the same level claims, the prices are independent of protected attributes. In general, independence between

and

is not sufficient to receive equalized odds for a

-measurable predictor Π. It is generally difficult for prices to satisfy equalized odds, as – particularly in the non-binary response case of insurance portfolios – this property depends on the structure of the predictors. Specifically, there are scenarios where conditional independence is impossible, as when

and Y jointly fully determine

(and hence a

-measurable price Π), e.g. in the case of sex-specific claims that only occur within disjoint age groups. The key limitation is that, while the portfolio composition

is to an extent in the hands of the insurers, risk factor design is not always possible through insurance cover design.

The notion of predictive parity (Barocas et al., Citation2019; also: sufficiency axiom) can be motivated by the definition of a sufficient statistic in statistical estimation theory. We can interpret

as a family of distributions of Y being parameterized by

. If prices Π are

-measurable and we interpret statistically Π as a predictor of Y, then Π is called sufficient for

if (Equation19

(19)

(19) ) holds. Essentially, this means that Π carries all the necessary information needed to predict Y, such that explicit knowledge of

becomes redundant. However, such an assumption seems unrealistic in an insurance pricing context, because there is hardly any example in which all relevant information for claims prediction can be fully condensed into a single predictor Π. Note that even in the case that

are conditionally independent given

, it does not follow that (Equation19

(19)

(19) ) holds true.

Regardless of the actuarial relevance of the fairness notions of Definition 2.15 it is clear that rather special conditions are needed in order for all of them to hold jointly. The following proposition provides such a sufficient condition:

Proposition 2.17

Assume that the prices Π, in the context of portfolio distribution , satisfy

The prices Π then satisfies fairness notions (i)–(iii) from Definition 2.15.

The proof is given in Appendix 1.

2.4. Discrimination-free vs. fair insurance prices

In Example 2.14 and Section 2.3, we discussed how avoiding proxy discrimination and achieving outcomes across demographic groups that satisfy a group fairness criterion are rather different requirements. We now formalize this insight via the following two propositions.

Proposition 2.18

Consider the pricing functional π and the respective prices . If π avoids proxy discrimination, it is not implied that Π satisfies any of demographic parity, equalized odds or predictive parity.

Proposition 2.19

Consider the pricing functional π and the respective prices . If Π satisfies demographic parity, it is not implied that π avoids proxy discrimination.

A particular implication of Propositions 2.18 and 2.19 is that avoiding proxy discrimination is generally not a stronger requirement than avoiding group fairness notions (and vice versa). As both propositions are negative results, they can be proved by counter-examples. For Proposition 2.18 this is Example 2.12. In that example, the DFIP produces violations of all three group fairness properties considered here. The required derivations to show this are somewhat laborious and, thus, are delegated to Appendix 1. As the DFIP in that example is identical to the unawareness price, one cannot claim that these violations are specific to the construction of . The crucial feature of Example 2.12 is that the non-protected covariates

are sufficient for describing the conditional expectation of the response Y, but they are not sufficient to describe the full conditional distribution of Y, given

.

To prove Proposition 2.19, a suitable counter-example is given in Example 2.20, below; here we provide a situation where the unawareness price does not materially avoid proxy discrimination, while at the same time it satisfies demographic parity. Furthermore, in an additional Example 2.21, below, we offer a situation which produces prices that satisfy all of demographic parity, equalized odds and predictive parity, but which directly discriminate, in the sense that they are explicit functions of protected attributes . Of course, if such direct discrimination takes place, one cannot meaningfully say that proxy discrimination is avoided.

Remark that in the following examples, we consider a real-valued Gaussian distributed protected attribute . This is in contrast to assuming that

is finite, see Section 2.1. The reason for this different choice is a computational one because in a multivariate Gaussian setting all quantities of interest can be calculated explicitly. In the examples the protected attribute

and the non-protected covariates will be positively correlated, which allows inferring one from the other.

Example 2.20

Demographically fair prices that produce proxy discrimination

We choose three-dimensional Gaussian covariates

(20)

(20) For the response variable we assume

This gives us the best-estimate price

(21)

(21) A standard result on multivariate Gaussian random variables tells us, see, e.g. Corollary 4.4 in Wüthrich and Merz (Citation2015),

This allows us to calculate the unawareness price by

(22)

(22) which is different to the DFIP,

. We know that the unawareness price in general does not avoid proxy discrimination. Since the best-estimate price has a sensitivity in D and because there is dependence between

and D, proxy discrimination is material; recall Proposition 2.6. In fact, not considering non-protected covariates

leads to a prediction of the protected attribute D of

. Since

and D are positively correlated, we can (partly) infer D from

by using the (informed) prediction

, e.g. if both

and

take positive values, we get a positive predicted value for D, given

.

The random vector is two-dimensional Gaussian with independent components because

This implies that the unawareness price

is independent of D, hence, it satisfies demographic parity. This also proves Proposition 2.19.

We now give an example that satisfies all three group fairness criteria of demographic parity, equalized odds and predictive parity, but at the same time directly discriminates.

Example 2.21

Group fair prices that directly discriminate

Assume the non-protected covariates and the protected attribute

are real-valued. We choose a three-dimensional Gaussian distribution

with fixed covariance parameter

. The best-estimate is given by

(23)

(23) this uses again Corollary 4.4 of Wüthrich and Merz (Citation2015). This best-estimate price directly discriminates because it uses D as an input. We now show that

satisfies all three notions of group fairness. For this, we derive the joint distribution of

. Note that

Hence,

This shows that

and D are independent, which is precisely the sufficient condition presented in Proposition 2.17. As a result, all three group fairness axioms above are fulfilled for the best-estimate price

. On the other hand, this best-estimate directly discriminates as can be seen from (Equation23

(23)

(23) ).

We now give some additional remarks on Propositions 2.18 and 2.19 and Example 2.21.

Remarks 2.22

Propositions 2.18 and 2.19 indicate that avoiding proxy discrimination and satisfying group fairness are rather different concepts, and, in general, one does not imply the other. For this reason, satisfying simultaneously both is more restrictive than just complying with one of them – and sometimes even impossible if one wants to have a non-trivial predictor. Currently, many regulators focus on proxy discrimination, though corresponding legislation leaves room for interpretation. Therefore, constraining pricing models with group fairness criteria does not seem to solve this particular regulatory problem.

Proxy discrimination is caused by two factors that need to hold simultaneously, namely, (1) there needs to be a dependence between the non-protected covariates

and the protected attributes

, and (2) there needs to be a sensitivity of the best-estimate price

in

, recall Proposition 2.6. These conditions (or the lack of them) do not tell us anything about the dependence structure between a DFIP

and

. In general,

and

are correlated, namely, observe that the dependence structure between

and

is completely irrelevant for the calculation of the DFIP from (Equation9

(9)

(9) ). Therefore, we can always find a portfolio distribution

under which the price

and the protected attributes

are dependent, unless

does not depend on

.

Focusing on the example of demographic parity fairness, this notion solely relates to the independence of the resulting prices Π and protected attributes

. Let

, such that prices are

-measurable. If this price Π satisfies demographic parity, then

can be interpreted as a projection that only extracts the information from

that is orthogonal to/independent of

; this is similar to the linear adversarial concept erasure of Ravfogel et al. (Citation2020, Citation2022); see also Example 2.20. That Π becomes independent of

is a specific property of the pricing functional

in relation to

, but this does not account for the full dependence structure in

nor for the properties in the best-estimate price

. Therefore, in general, demographic parity does not constitute evidence regarding proxy discrimination.

If we wanted all participants in an insurance market to comply with demographic parity, we would need to choose projections

that vary from company to company because they all have different portfolio distributions

. As a result, every company would consider non-protected covariates in a different way. This would be difficult to explain to customers and may be impossible to regulate; we also refer to the first item of Remark 2.16 (last paragraph). Therefore, stronger assumptions are typically explored, like aiming at full independence between

and

, see Section 3.2, below.

A crucial feature of Example 2.20 is that independence between

and

is a sufficient condition to have demographic parity fairness, but not a necessary one. This is used in an essential way, namely,

and

are dependent, but the projection

only extracts a part of information from

that is independent of

. Example 2.21 goes even further, by demonstrating a situation where a price that satisfies demographic parity, equalized odds and predictive parity directly discriminates.

Examples 2.20 and 2.21 use multivariate Gaussian distributions, since these make all relevant calculations straightforward. This is not a limitation, as similar examples can be constructed with discrete protected attributes

. However, such discrete examples typically become more demanding computationally, making them less transparent in terms of exposition. Note that the counter-examples are only used to prove the negative results of Propositions 2.18 and 2.19 and this is mathematically correct regardless of whether these counter-example are realistic or not. If we restrict our attention to demographic parity and proxy discrimination it is easy to construct non-Gaussian counter-examples verifying the statements (in this restricted sense) of Propositions 2.18 and 2.19. This is done in Appendix 2.

3. Achieving demographic parity by optimal transport methods

3.1. Rationale

In Section 2, we formalized our view of direct and proxy discrimination, and we discussed pricing functionals that avoid them. Furthermore, we established that group fairness concepts are not generally consistent with the requirement of avoiding direct and proxy discrimination; essentially they provide answers to different problems. Next, we focus on methods to create pricing functionals that satisfy group fairness and discuss their implications for both direct and proxy discrimination.

In this section, we will specifically focus on demographic parity as a group fairness concept. The reason for this is three-fold:

Let us take as a starting point the need to avoid proxy discrimination. We have noted that in the special case where

and

are independent, the unawareness price is identical to the DFIP, henceforth, using the unawareness price would not introduce material proxy discrimination. This motivates the question: if

and

are not independent, is there a way to make them so? We will show in this section how optimal transport (OT) methods can help to achieve precisely that. But note also that independence of

and

implies the independence of any

-measurable price from the protected attributes

and, hence, demographic parity. This means that, despite the conflict between the two concepts we already discussed, there is further scope to interrogating their relationship.

Demographic parity is a much simpler concept to explain to stakeholders, including policyholders. While no form of group fairness is mandated by regulators, insurers will remain sensitive to reputational risk, which itself derives from those violations of group fairness that are most easily monitored; see, e.g. the Citizens Advice report (Cook et al., Citation2022). We do not envisage that insurance companies will or indeed should aim to satisfy demographic parity and, in fact, we argue against this in the sequel. But companies may be motivated to monitor demographic disparities and in some cases partially smooth out these effects, e.g. using the methods of Grari et al. (Citation2022).

As argued in Remarks 2.16, demographic parity may sometimes be achieved by a careful selection of the policyholders in the portfolio (aiming to have

independent of

under

) or by introducing direct discrimination. The latter approach is reflected in Example 2.21 and, in a sense, underlies the methods of the current section (which can be criticized on precisely that basis). Therefore, verifying/satisfying demographic parity is often easier than equalized odds and predictive parity. In particular, it requires less insurance policy engineering.

In the rest of this Section, we will use the theory of optimal transport (OT) for input pre-processing and output post-processing, see (Chiappa et al., Citation2020; del Barrio et al., Citation2019), with the aim of achieving demographic parity. By using these techniques it will also be possible to relate the price deformations needed in order to achieve demographic parity to the construction of DFIPs. For both types of OT, independence of prices from protected attributes is achieved by a -dependent transformation of the non-protected covariates

. An important difference between input pre-processing and model post-processing is that the former transforms the inputs

, and retains the dimension of the original non-protected covariates

. In fact, up to technical conditions (continuity), the OT input transformation

is one-to-one (for given

) which allows us to reconstruct the original features

from the pre-processed ones

. Model post-processing, using an OT map, transforms the (one-dimensional) regression output

, by making

independent of the protected attributes

. We have already seen in Example 2.21 a situation where the best-estimate price

is independent of

, hence satisfies demographic parity. In that example the best-estimate price can be identified with

and the OT output map is the identity map.

3.2. Input (data) pre-processing

A sufficient way to make an insurance price satisfy demographic parity is to pre-process the non-protected covariates such that the transformed version

becomes independent of the protected attributes

under

. First, we emphasize that this pre-processing is only performed on the input data

(and using

), but it does not consider the response Y. Second, independence between

and

is a sufficient condition for satisfying demographic parity with respect to

, but not a necessary one, see Example 2.20.

One method of input pre-processing is to apply an OT map to obtain a covariate distribution that is independent of the protected attributes; for references see (Chiappa et al., Citation2020; del Barrio et al., Citation2019). More specifically, for given , we change the conditional distribution

(24)

(24) to an unconditional distribution

for the non-protected covariates

(25)

(25) meaning that the transformed covariates

are independent of

. Intuitively, to minimally change the predictive power by this transformation from (Equation24

(24)

(24) ) to (Equation25

(25)

(25) ), the unconditional distribution

should be as similar as possible to the conditional ones

, for all

; we come back to this in Remark 3.4, below. In this approach, the covariates

and

preserve their meanings because they live on the same covariate space, but the OT map locally perturbs the original covariate values

, based on

.

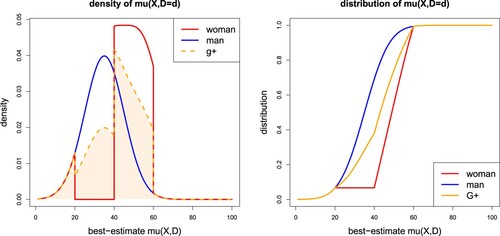

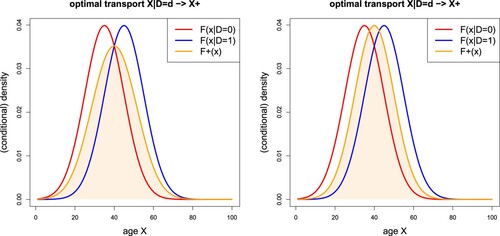

We revisit Examples 2.12 and 2.14 illustrated in Figure , and we give two different proposals for in Figure . The plot on the left hand side shows the average density

of the two Gaussian densities

, given

, i.e. we have a Gaussian mixture for

on the left hand side of Figure . The plot on the right hand side shows the Gaussian density for

, that averages the means

and

; we also refer to (Equation31

(31)

(31) )–(Equation32

(32)

(32) ), below. For the moment, it is unclear which of the two choices for

gives a better predictive model for Y; we also refer to Remark 3.4, below.

Assume we have selected an unconditional distribution to approximate

,

, and we would like to optimally transform the random variable

to its unconditional counterpart

. This is precisely where OT comes into play. Choose a distance function ϱ on the covariate space. The (2-)Wasserstein distance between

and

w.r.t. ϱ is defined by

(26)

(26) where

is the set of all joint probability measures having marginals

and

, respectively. The Wasserstein distance (Equation26

(26)

(26) ) measures the difference between the two probability distributions

and

by optimally coupling them. Colloquially speaking, this optimal coupling means that we try to find the (optimal) transformation

such that we can perform this change of distribution at a minimal effort;Footnote2 this optimal transformation

is called an OT map or a push forward. Under additional technical assumptions, determining the OT map

is equivalent to finding the optimal coupling

.

Figure 4. Example 2.14, revisited: conditional densities , for

, and two different choices for

,

; for a formal definition we refer to (Equation31

(31)

(31) )–(Equation32

(32)

(32) ).

Remarks 3.1

The input OT approach can also be thought of in relation to context-sensitive covariates. For example, the European Commission (European Commission, Citation2012), footnote 1 to Article 2.2(14) – life and health underwriting – mentions the waist-to-hip ratio as a non-protected (useful) context-sensitive covariate for health prediction. Note that the waist-to-hip ratio is gender-, age- and race- dependent. Furthermore the impact of the waist-to-hip ratio on predictions of health outcomes depends specifically on factors like gender, age, and race, that is, the same value should be interpreted differently depending on the demographic group the policyholder belongs to. This means that a

-dependent transformation of the waist-to-hip ratio is desirable to achieve consistency.

Applying an OT map will modify the waist-to-hip ratio such that it has the same distribution for both genders, which can then be treated coherently as an input to a predictive model. However, this does not mean that the transformed variable will reflect health impacts in a demographic-group-appropriate way, if the OT map produces a transformation specifically with the aim of removing dependence between

and

and, therefore, reflects the rather arbitrary dependence of those features in a particular portfolio. This also means that care should be taken more generally when considering OT-transformed covariates

, since their interpretation may not be straightforward. Still, if a transport map is derived from a population distribution of

(e.g. of policyholders across a market), then demographic parity is expected to hold across the market (rather than on individual portfolios), and the transformed variables

can be interpreted as

-agnostic versions of features

.

In many situations the OT map

,

, can be explicitly calculated, e.g. in the discrete covariate case it requires solving a linear program (LP); see (Cuturi & Doucet, Citation2014). The only difficulty in this discrete case is a computational one. Furthermore, the OT map is deterministic for continuous distributions, while in the case of discrete distributions we generally have a random OT map, see also (Equation29

(29)

(29) ) below.

The Wasserstein distance (Equation26

(26)

(26) ) can also be defined for categorical covariates. The main difficulty in that case is that one needs to have a suitable distance function ϱ that captures the distance between categorical levels in a meaningful way.

In general, this OT map should be understood as a local transformation of the covariate space, so that the main structure remains preserved, but the local assignments are perturbed differently for different realizations of

. In that, the non-protected covariates

and

keep their original interpretation, e.g. age of policyholder, but through a local perturbation some policyholders receive a slightly smaller or bigger age to make their distributions identical for all

,

; note that these perturbations do not use the response Y, i.e. it is a pure input data transformation.

Assume we have a (one-dimensional) real-valued non-protected covariate

and we choose the Euclidean distance for ϱ. The dual formulation of the Wasserstein distance (Equation26

(26)

(26) ) gives in this special case the simpler formula

(27)

(27) where U has a uniform distribution on the unit interval

. The OT map

,

, is then in the one-dimensional continuous covariate case given by

(28)

(28) This justifies the statement in the previous bullet point that the OT map is a local transformation, since the topology is preserved by (Equation28

(28)

(28) ). In the case of a non-continuous

, the OT map needs randomization. In the one-dimensional case we replace the last term in (Equation28

(28)

(28) ) by

(29)

(29) where U is independent of everything else and uniform on

, and where we set for the left limit

in X. As a result, V is uniform on

, and we set

.

We emphasize that (Equation28

(28)

(28) ) and (Equation29

(29)

(29) ) reflects the OT map only in the one-dimensional case, and for the multi-dimensional (empirical) case we have to solve a linear program, as indicated in the second bullet point of these remarks.

Next, we state that the OT input pre-processed version of the non-protected covariates satisfies demographic parity and avoids proxy discrimination with respect to the transformed inputs . Also, interestingly, these notions do not touch the response Y, but it is sufficient to know the best-estimate price

. The proof of the next proposition is straightforward.

Proposition 3.2

OT input pre-processing

Consider the triplet and choose the OT maps

,

, with

being independent of

(under

). The unawareness price

avoids proxy discrimination with respect to

and satisfies demographic parity.

We emphasize that Proposition 3.2 makes a statement about the transformed input and not about the original covariates

. Hence, whether we can consider the price

to be truly discrimination-free depends on the interpretation we attach to the transformed inputs

, see the first bullet in Remarks 3.1. Moreover, Proposition 3.2 applies to any transformation

,

that makes

independent of

, and which does not add more information to

with respect to the prediction of Y; this is what we use in the last equality statement.

Now, we consider one-dimensional OT in the context of our Example 2.14. The method is similar to the (one-dimensional) proposals in Section 4.3 of Xin and Huang (Citation2021), called there ‘debiasing variables’. However, the OT approach works in any dimension, and also takes care of the dependence structure within , given

. Nevertheless, we consider a one-dimensional example for illustrative purposes.

Example 3.3

Application of input OT

We apply the OT input pre-processing to the situation of Example 2.14, which considered age- and gender-dependent costs, including excess costs for women between ages 20 and 40. Our aim is to obtain an insurance price that both satisfies demographic parity and avoids proxy discrimination (with respect to the transformed inputs). In this set-up we have a real-valued non-protected covariate , and we can directly apply the one-dimensional OT formulations (Equation27

(27)

(27) ) and (Equation28

(28)

(28) ). The conditional distributions satisfy for d = 0, 1 and for given

and

, see (Equation11

(11)

(11) ),

(30)

(30) where Φ denotes the standard Gaussian distribution. For the transformed distribution

we select the two examples of Figure ; the first one is given by

(31)

(31) and the second one by

(32)

(32) Selections (Equation31

(31)

(31) ) and (Equation32

(32)

(32) ) are two possible choices by the modeller, but any other choice for

which does not depend on D is also possible. The first choice is the average of the two conditional distributions (Equation30

(30)

(30) ), the second one is their Wasserstein barycenter; we refer to Proposition 3.8 and Remarks 3.4 and 3.9, below.

We start by calculating the Wasserstein distances (Equation27(27)

(27) ) using Monte Carlo simulation and a discretized approximation to

in the case of the Gaussian mixture distribution (Equation31

(31)

(31) ). The results are presented in Table . We observe that the second option (Equation32

(32)

(32) ) is closer to the conditional distributions

, d = 0, 1, in Wasserstein distance; in fact, in this second option we have

for all

, and there is no randomness involved in the calculation of the expectation in (Equation27

(27)

(27) ).

Table 2. Wasserstein distances for the two examples (Equation31

(31)

(31) )–(Equation32

(32)

(32) ) for

.

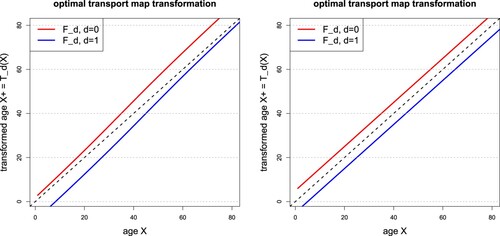

Figure shows the OT maps (Equation28(28)

(28) ) for the two choices of

given by (Equation31

(31)

(31) )–(Equation32

(32)

(32) ). We observe that in the second option we generally make women older by

years, and we generally make men younger by

years, so that the distributions

of the OT transformed ages

coincide for both genders d = 0, 1. The first option (Equation31

(31)

(31) ) leads to an age dependent transformation. If we focus on the y-axis in Figure , we can identify the ages of women and men that are assigned to the same age cohort. For instance, following the horizontal grey dotted line at level

, we find for the second option (Equation32

(32)

(32) ) that women of age 35 and men of age 45 will be in the same age cohort (and hence same price cohort). This seems a comparably large age shift which may be difficult to explain to customers. However, in real insurance portfolios we expect more similarity between women and men so that we need smaller age shifts. Additionally, this picture will be superimposed by more non-protected covariates which will require the multi-dimensional OT map framework.

Based on this OT input transformed data, we construct a regression model . In this (simple) one-dimensional problem

we simply fit a cubic spline to the data

using the locfit package in R; see (Loader et al., Citation2022).

Figure 5. OT maps for examples (Equation31

(31)

(31) )–(Equation32

(32)

(32) ) of

with the original age X on the x-axis and the transformed ages

on the y-axis; the black dotted line is the 45

diagonal.

Table presents the prediction accuracy of the OT input transformed models. At first sight it is surprising that the input OT transformed model has a better predictive performance than the unawareness price model

. However, by considering the details of the true model, this is not that surprising. Women have generally higher costs than men at the same age

under model assumption (Equation15

(15)

(15) ), and considering the age shifts of the OT maps makes women and men more similar with respect to claim costs in this example. The MSE of the unawareness price

is calculated as

The first term on the right hand side is the MSE of the best-estimate predictor

based on all information

, and the second term corresponds to the loss of accuracy by using the unawareness price

. The OT maps (Equation31

(31)

(31) ) and (Equation32

(32)

(32) ) make women older and men younger, and as a result their risk profiles with respect to the transformed inputs

become more similar in this example. This precisely leads, in this case, to a smaller MSE of

over

. Namely, we have

(33)

(33) with the last term being smaller than the last one in the unawareness price case because the

-dependent transformation

makes

more similar to

compared to

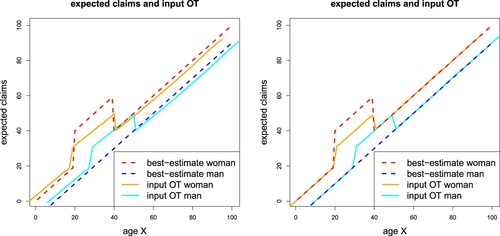

. This is specific to our example which can be better understood by discussing Figure . Figure illustrates the OT input transformed model prices

for choices (Equation31

(31)

(31) )–(Equation32

(32)

(32) ) for

. For Figure we map these prices back to the original features

, separated by gender

. This back-transformation can be done because the OT maps

are one-to-one under continuous non-protected covariates

, and for given

, see Remarks 3.1. Figure then evaluates the prices

, where we consider

as a function of age

for fixed gender

. The right hand side shows choice (Equation32

(32)

(32) ) for

, which leads to parallel shifts for the transformed age assignments

, see Figure (rhs). As a consequence, the excess pregnancy costs of women with ages in

are shared with men having ages in

in our example, see orange and cyan lines in Figure (rhs). This should be contrasted to the DFIP

(green line in Figure ) which shares the excess pregnancy costs within the age class

for both genders. The transformation for choice (Equation31

(31)

(31) ) for

leads to a distortion along the age cohorts as we do not have parallel shifts, see Figures (lhs) and (lhs).

Coming back to (Equation33(33)

(33) ) and focusing on choice (Equation32

(32)

(32) ) for

, which corresponds to Figure (rhs), we observe that the age shifts of 5 years lead to OT input transformed prices

that rather perfectly match the best-estimates

. In fact, the age shifts of 5 years exactly compensate the term

in (Equation15

(15)