?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Loss reserving generally focuses on identifying a single model that can generate superior predictive performance. However, different loss reserving models specialise in capturing different aspects of loss data. This is recognised in practice in the sense that results from different models are often considered, and sometimes combined. For instance, actuaries may take a weighted average of the prediction outcomes from various loss reserving models, often based on subjective assessments. In this paper, we propose a systematic framework to objectively combine (i.e. ensemble) multiple stochastic loss reserving models such that the strengths offered by different models can be utilised effectively. Our framework contains two main innovations compared to existing literature and practice. Firstly, our criteria model combination considers the full distributional properties of the ensemble and not just the central estimate – which is of particular importance in the reserving context. Secondly, our framework is that it is tailored for the features inherent to reserving data. These include, for instance, accident, development, calendar, and claim maturity effects. Crucially, the relative importance and scarcity of data across accident periods renders the problem distinct from the traditional ensembling techniques in statistical learning. Our framework is illustrated with a complex synthetic dataset. In the results, the optimised ensemble outperforms both (i) traditional model selection strategies, and (ii) an equally weighted ensemble. In particular, the improvement occurs not only with central estimates but also relevant quantiles, such as the 75th percentile of reserves (typically of interest to both insurers and regulators). The framework developed in this paper can be implemented thanks to an R package, ADLP, which is available from CRAN.

1. Introduction

1.1. Background

The prediction of outstanding claims is a crucial process for insurers, in order to establish sufficient reserves for future liabilities, and to meet regulatory requirements. Due to the stochastic nature of future claims payments, insurers not only need to provide an accurate central estimate of the reserve, but they also need to forecast the distribution of the reserve accurately. Furthermore, in many countries, provision of the predictive distribution of outstanding claims is required by regulatory authorities. For instance, the Australian Prudential Regulation Authority (APRA) requires general insurers to establish sufficient reserves to cover outstanding claims with at least probability (APRA, Citation2019). Therefore, insurers need to ensure that their models achieve satisfactory predictive performance beyond the central estimate (expectation) of outstanding claims. This is sometimes referred to as ‘stochastic loss reserving’ (Wüthrich & Merz, Citation2008).

Early stochastic loss reserving approaches include the distribution-free stochastic Chain-Ladder model proposed by Mack (Citation1993). As computational power increased, Generalised Linear Models (GLMs) became the classical stochastic modelling approach (Taylor & McGuire, Citation2016). They have been widely applied in both practice and loss reserving literature due to their stochastic nature and high flexibility in modelling various effects on claims payments, such as accident period effects, development period effects, calendar period effects, and effects of claims notification and closure. Common distribution assumption for GLMs include the log-normal distribution (Verrall, Citation1991), over-dispersed Poisson (ODP) distribution (Renshaw & Verrall, Citation1998), and gamma distribution (Mack, Citation1991). Parametric curves to describe claim development patterns, such as the Hoerl curve and the Wright curve (Wright, Citation1990) can also be incorporated in the GLM context.

In recent years, machine learning models have become popular in loss reserving due to their potential in prediction accuracy as well as the potential for saving human labour costs. Notable examples include the smoothing spline (England & Verrall, Citation2001), Generalised Additive Model for Location, Scale and Shape (GAMLSS) (Spedicato et al., Citation2014), and Neural Networks (Al-Mudafer et al., Citation2022; Kuo, Citation2019; Wüthrich, Citation2018).

Despite the sophisticated development of loss reserving models, the main focus of current loss reserving literature is to identify a single model that can generate the best performance according to some measure of prediction quality. This approach is usually referred to as the ‘model selection strategy’. However, due to the difference in model structures and assumptions, different loss reserving models are usually specialised at capturing specific claims effects, which are different from one model to the other. However, multiple claims effects are typically present in reserving data. Furthermore, these effects are likely to appear inhomogenously accross accident and/or development years, which are noted in Friedland (Citation2010) and CitationTaylor (Chapter 5 of Citation2000, pp. 151–165). This makes it challenging for a single model to capture all of them. Using a single model to fit claims payments occurred in all accident periods may not be the most effective way to utilise the strengths offered by all the different available models.

Those concerns highlight the potential to develop a model combination (as opposed to selection) strategy.

In practice, ensembles of different deterministic loss reserving models may have been typically constructed based on ad-hoc rules. The weights allocated to component models are commonly selected subjectively based on the model properties (see, e.g. Friedland, Citation2010; Taylor, Citation2000). However, due to the subjective nature of this combination strategy, experience based on model properties may not generalise well to new claims data, and the weights allocated to component models are not optimised (Friedland, Citation2010). Therefore, the information at hand may not be utilised most effectively. The subjective model combination strategy can further cause complications in cases where one wants information on the random distribution of claims. To address this, a more rigorous framework for combining stochastic loss reserving models was developed by CitationTaylor (Chapter 12 of Citation2000, pp. 151–165), who proposed to select model weights by minimising the variance of total estimated outstanding claims. While a great step forward, this approach only considers the first and the second moments of the claims distribution. This may not be sufficient to derive the required quantiles from the distribution of reserves accurately.

Beyond the loss reserving literature, stacked regression has been recently applied in combining mortality forecasts (Kessy et al., Citation2022; Li, Citation2022). Under the stacked regression approach, the combination weights are determined by minimising the Mean Squared Error (MSE) achieved by the ensemble. Despite showing success for combining point forecasts, this approach does not take into account the ensemble's performance over the entire distribution, which is critical for loss reserving purposes.

The so-called ‘linear pool’ (Gneiting et al., Citation2005), which has been widely applied in combining distributional forecasts outside the field of loss reserving, may offer an alternative model combination approach that could overcome the aforementioned limitations of model selection strategy, and of traditional combination strategies for loss reserving models. The linear pool uses a proper scoring rule to determine the weights allocated to component distributional forecasting models. A proper scoring rule assigns a numeric score to a distributional forecasting model when an event materialises. This presents a number of advantages when assessing the quality of distributional forecasts (Gneiting & Raftery, Citation2007). Literature in the areas of weather forecast (Baars & Mass, Citation2005; Gneiting & Raftery, Citation2007; Gneiting et al., Citation2005; Raftery et al., Citation2005; Vogel et al., Citation2018), stocks return forecast, and GDP growth rate forecast (Hall & Mitchell, Citation2004, Citation2007; McDonald & Thorsrud, Citation2011; Opschoor et al., Citation2017) all suggest that linear pool ensembles generate more accurate distributional forecast than any single model in their respective contexts. A common choice for the proper score is the Log Score. Furthermore, linear pools are akin to finite mixture models in producing a weighted average of predictive distributions, yet they differ in weight determination. Finite mixture models simultaneously train model parameters and weights using the same dataset (Baran & Lerch, Citation2018). Conversely, the linear pooling method first calibrates model parameters on a training set and then optimises combination weights on a separate validation set. This is likely to improve the ensemble's overall predictive accuracy compared to standard finite mixture models. In this paper, we start by developing a linear pool approach to loss reserving, and extend the methodology to best fit the reserving context, as detailed in the following section.

1.2. Statement of contributions

To our knowledge, linear pools have not appeared in the loss reserving literature. Unfortunately, existing linear pool implementations cannot be applied in this context directly for a number of reasons outlined below. This paper aims to fill this gap by developing an adjusted linear pool ensemble forecasting approach that is particularly suitable to the loss reserving context. Although actuaries may combine different loss reserving models in practice, we argued earlier that the model combination is then generally performed subjectively and may not optimise the ensemble's performance. Compared with the subjective model combination approach, our proposed scheme has two advantages. Firstly, data driven weights reduce the amount of manual adjustment required by the actuary, particularly in cases where a new experience has emerged. Secondly, by using a proper score as the objective function, the distributional forecast accuracy of the ensemble can be optimised. As Shapland (Citation2022) briefly suggests in his monograph, the future development of model combination strategies for loss reserving models should consider the relative predictive power of component models, and the weighted results should ‘reflect the actuary's judgements about the entire distribution, not just a central estimate’. Since the proper score can formally assess the quality of distributional forecast, the linear pools satisfy the two conditions proposed by Shapland (Citation2022) by using a proper score as the optimisation criterion.

In this paper, we introduce a distributional forecast combination framework that is tailored to the loss reserving context. Although the standard ensemble modelling approach has been extensively studied in the literature, it can not be applied directly without modification due to the special data structures and characteristics of the loss reserving data. Specifically, we introduce ‘Accident and Development period adjusted Linear Pools (ADLP)’, which have the following attributes:

| – | Triangular shape of aggregate loss reserving data: To project out-of-sample incremental claims, the training data must have at least one observation from each accident period and development period in the upper triangle. Our proposed ensemble ensures that this constraint is satisfied when partitioning the training and validation sets. | ||||

| – | Time-series characteristics of incremental claims: Under the standard ensemble approach, the in-sample data is randomly split into the training set for training component models and the validation set for estimating combination weights (Breiman, Citation1996). However, since the main purpose of loss reserving is to predict future incremental claims, a random split of the data can not adequately validate the extrapolating capability of loss reserving models. In light of the above concern, we allocate incremental claims from the most recent calendar periods to the validation set (rather than a randomly selected sample) as they are more relevant to the future incremental claims. This is a common partition strategy used in loss reserving literature (e.g. Taylor & McGuire, Citation2016). Therefore, the combination weights estimated from the validation set more accurately reflect the component models' predictive performance on the most recent out-of-sample data. | ||||

| – | Accident Period effects: The standard ensemble strategy usually derives its combination weights by using the whole validation set, which implies each component model will receive a single weight for a given data set. However, it is a very well known fact that the performance of loss reserving models tends to differ by accident periods (Chapter 5 of Taylor, Citation2000, pp. 151–165). Hence, assigning the same weights (for each model) across all accident years might not be optimal, and it may be worthwhile allowing those to change over time. To address this, we allow the set of weights to change at arbitrary ‘split points’. For instance, the set might be calibrated differently for the 10 most recent (immature) accident years. More specifically, we further partition the validation set based on accident periods to train potentially different combination weights, such that the weights can best reflect a models' performance in the different accident period sets. Our paper shows that the ensemble's out-of-sample performance can be substantially improved – based on the whole range of evaluation metrics considered here – by adopting the proposed Accident-Period-dependent weighting scheme. Additionally, we also empirically discuss the choice of number and location of accident year split points. | ||||

| – | Development Period effects: The accident year split described in the previous item requires some care. One cannot strictly isolate and focus on a given set of accident years (think of a horizontal band) and use the validation data of that band only as this will lack data from later development years which are required for the projection of full reserves. Hence, we start with the oldest accident year band (or split set), and when moving to the next band, we (i) change the training set according to the accident year effect argument discussed above, but (ii) add the new layer of validation points to the previously used set of validation data when selecting weights for that band. Note that the data kept from one split to the next is the validation data only, which by virtue of the second item above is always the most recent (last calendar year payments). Hence, this tweak does not contradict the probabilistic forecasting literature's recommendation of using only the data in the most recent periods (see, e.g. Opschoor et al., Citation2017) when selecting weights. | ||||

| – | Data Scarcity: Data is scarce for aggregate loss reserving, particularly for immature accident periods (i.e. the recent accident periods), where there are only a few observed incremental claims available. A large number of component models relative to the number of observations may cause the prediction or weights estimation less statistically reliable (Aiolfia & Timmermann, Citation2006). Our proposed ensemble scheme mitigates this data scarcity issue by incorporating incremental claims from both mature and immature accident periods to train the model weights in immature accident periods. | ||||

The out-of-sample performance of the ADLP is evaluated and compared with single models (model selection strategy), as well as other common model combination methods, such as the equally weighted ensemble, by using synthetic data sets that possess complicated features inspired by real world data.

Overall, we show that our reserving-oriented ensemble modelling framework ADLP yields more accurate distributional forecasts than either (i) a model selection strategy, or (ii) the equally weighted ensemble. The out-performance of the proposed ensembling strategy can be credited to its ability to effectively combine the strengths offered by different loss reserving models and capture the key characteristics of loss reserving data as discussed above. In fact, the mere nature of the challenges described above justify and explain why an ensembling approach is favourable. Finally, we have also developed an R package ADLP, which has been published in CRAN, to promote the usability of the proposed framework.

1.3. Outline of the paper

In Section 2, we discuss the design and properties of component loss reserving models that will constitute the different building blocks for the model combination approaches discussed in this paper. Section 3 discusses how to best combine those component models, and describes the modelling frameworks of the standard linear pool ensemble and the Accident and Development period adjusted Linear Pools (ADLP) that are tailored to the general insurance loss reserving data. The implementation of our framework is not straighforward, and is detailed in Section 4. Section 5 discusses the evaluation metrics and statistical tests used to assess and compare the performance of different models. The empirical results are illustrated and discussed in Section 6. Section 7 concludes.

2. Choice of component models

Given the extensive literature in loss reserving, which offers a plethora of modelling options, we start by defining criteria for choosing models to include in the ensemble – the ‘component models’. We then describe the structure of each of the component models we selected, as well as the characteristics of loss data that they aim to capture.

2.1. Notation

We first define the basic notation that will used in this paper:

i: the index for accident periods, where

j: the index for development periods, where

and J=I

t: the calendar period of incremental claims; t=i+j−1, where i and j denote the accident period and the development period, respectively

: the out-of-sample loss data (i.e. the lower part of an aggregate loss triangle)

: the loss data used for training component models

: the validation loss data for selecting component models and optimising the combination weights;

and

constitute the in-sample loss data (i.e. the upper part of an aggregate loss triangle)

: the observed incremental claims paid in accident period i and development period j

: the estimated incremental claims in accident period i and development period j

: the true reserve, defined as

: the estimated reserve, defined as

: the observed reported claims count occurred in accident period i and development period j

: the observed number of finalised claims in accident period i and development period j

: the predicted mean for the distribution of

(or

for the Log-Normal distribution)

: the predicted variance for the distribution of

(or

for the Log-Normal distribution)

ϕ: the dispersion parameter for the distribution of

; for distributions coming from Exponential Distribution Family (EDF),

is a function of ϕ and

: the linear predictor for incremental claims paid in accident period i and development period j that incorporates information from explanatory variables into the model;

, where

is a link function

: the predicted density by model m evaluated at the observation

m: the index for component models, where

2.2. Criteria for choosing component models

There is a wide range of loss reserving models available in the literature. With an aim to achieve a reasonable balance between diversity and computational cost, we propose the following criteria to select which component models to include in our ensembles:

The component models should be able to fit mechanically without requiring substantial user adjustment in order to avoid the potential subjective bias in model fitting, and to save time spent on manually adjusting each component model.

This can be of particular concern when there is a large number of component models contained in the ensemble. This criterion is consistent with the current literature on linear pool ensembles, where parametric time-series models with minimum manual adjustments being required are used as component models (Gneiting & Ranjan, Citation2013; Opschoor et al., Citation2017).

The component models should have different strengths and limitations so as to complement each other in the ensemble.

This criterion ensures that different patterns present in data can be potentially captured by the diverse range of component models in the ensemble. As Breiman (Citation1996) suggests, having diverse component models could also reduce the potential model correlation, thus improving the reliability and accuracy of the prediction results.

The component models should be easily interpretable and identifiable, and hence are restricted to traditional stochastic loss reserving models and statistical learning models with relatively simple structures.

Although advanced machine learning models, such as Neural Networks, have demonstrated outstanding potential in forecast accuracy in literature (Kuo, Citation2019), they might place a substantial challenge on the interpretability of the ensemble and the data size requirement if they are included in the ensemble. Therefore, their implementation into the proposed ensemble has not been pursued in this paper.

In summary, the ensemble should be automatically generated (1), rich (2) and constituted of known and identifiable components (3).

Note, however, that our framework works for any number and type of models in principle, provided the optimisation described in Section 3 can be achieved.

2.3. Summary of selected component models

Based on the criteria for choosing component models introduced in the previous section, we have selected eighteen reserving models with varying distribution assumptions and model structures from the literature. These include GLM models with various effects and various dispersions, zero-adjusted models, smoothing splines, as well as GAMLSS models with varying dispersions. While Appendix 1 provides a detailed description of those models, Table summarises the common patterns in claim payments data that actuaries could encounter in modelling outstanding claims, and the corresponding component models that can capture those patterns.

Table 1. Summary of component models.

Remark 2.1

Another group of models that are easily interpretable and could be fitted mechanically is the age-period-cohort type of models proposed in Harnau and Nielsen (Citation2018) and Kuang et al. (Citation2008). These models have a close connection to the model with calendar period effects (i.e. ) and the cross–classified model (i.e.

), which will be discussed in more details in Appendix A.2.

3. Model combination strategies for stochastic ensemble loss reserving

In this section, we first define which criterion we will use for determining the relative strength of one model when compared to another. We then review the two most basic ways of using models within an ensemble: (i) the Best Model in the Validation set (BMV), and (ii) the Equally Weighted ensemble (EW). Both are deficient, in that (i) considers the optimal model, but in doing so discards all other models, and (ii) considers all models, but ignores their relative strength. To improve these and combine the benefits of both approaches, both the Standard Linear Pool (SLP) and Accident and Development period adjusted Linear Pools (ADLP) are next developed and tailored to the reserving context. The section concludes with details of the optimisation routine we used, as well as how predictions were obtained.

3.1. Model combination criterion

3.1.1. Strictly proper scoring rules and probabilistic forecasting

We consider ‘strictly proper’ scoring rules, which have wide application in probabilistic forecasting literature (Gneiting & Katzfuss, Citation2014), to measure the accuracy of distributional forecast. Scoring rules assign an numeric score to a distributional forecasting model when an event materialises. Define as the score received by the forecasting model P when the event x is observed, and

as the score assigned to the true model Q. A scoring rule is defined to be ‘strictly proper’ if

always holds, and if the equality holds if and only if P=Q. The definition of proper scoring rules ensures the true distribution is always the optimal choice, thus encouraging the forecasters to use the true distribution (Gneiting & Katzfuss, Citation2014; Gneiting & Raftery, Citation2007). This property is crucial as improper scoring rules can give misguided information about the predictive performance of forecasting models (Gneiting & Katzfuss, Citation2014; Gneiting & Raftery, Citation2007).

Furthermore the performance of a distributional forecasting model can be evaluated by its calibration and sharpness. Calibration measures the statistical consistency between the predicted distribution and the observed distribution, while sharpness concerns the concentration of the predicted distribution. A general goal of distributional forecasting is to maximise the sharpness of the predictive distributions, subject to calibration. By using a proper scoring rule, the calibration and sharpness of the predictive distribution can be measured at the same time. For introduction to scoring rules and the advantages of using strictly proper scoring rules, we refer to Gneiting and Katzfuss (Citation2014); Gneiting and Raftery (Citation2007).

Actuaries commonly employ goodness-of-fit criteria such as the Kolmogorov–Smirnov or Anderson–Darling statistics, to assess the level of agreement between theoretical distributions and empirical distributions. However, it is crucial to recognise the fundamental distinction between evaluating probabilistic forecasts and assessing the goodness-of-fit of a theoretical distribution. In probabilistic forecasting, our objective is to evaluate the predictive distribution based on a single value, as the true distribution of a particular instance is typically unobservable in practical scenarios. Given this consideration, proper scoring rules are better suited for our purposes.

3.1.2. Log score

In this paper, we use the Log Score, which is a strictly proper scoring rule proposed by Good (Citation1992), to assess our models' distributional forecast accuracy. Log score is also a local scoring rule, which is any scoring rule that depends on a density function only through its value at a materialised event (Parry et al., Citation2012). Under certain regularity conditions, it can be shown that every proper local scoring rule is equivalent to the Log Score (Gneiting & Raftery, Citation2007). For full discussion of the advantages of Log Score, we refer to (Gneiting & Raftery, Citation2007; Roulston & Smith, Citation2002).

For a given dataset D, the average Log Score attained by a predictive distribution can be expressed as:

(1)

(1) where

is the predicted density at the observation

, and

is the number of observations in the dataset D.

Remark 3.1

Here, the Log Score is chosen to calibrate model component weights in the validation sets (the latest diagonals). Strictly proper scoring rules are also used to assess the quality if the distributional forecasting properties of the ensembles developed in this paper, but this time applied in the test sets (in the lower triangle). This is further described in Section 5.

3.2. Best model in the validation set

BMV

BMV

Traditionally, a single model, usually the one with the best performance in the validation set, is chosen to generate out-of-sample predictions. In this paper, the Log Score is used as the selection criterion, and the selected model is referred to as the ‘best model in validation set’ – abbreviated ‘BMV’. The model selection strategy can be regarded as a special ensemble where a weight of is allocated to a single model (i.e. the BMV). Although model selection is based on the relative predictive performance of various models, it is usually hard for a single model to capture all the complex patterns that could be present in loss data (Friedland, Citation2010). In our alternative ensembles, we aim to incorporate the strengths of different models by combining the prediction results from various models.

3.3. Equally weighted ensemble

EW

EW

The simplest model combination strategy is to assign equal weights to all component models, which is commonly used as a benchmark strategy in literature (Hall & Mitchell, Citation2007; McDonald & Thorsrud, Citation2011; Ranjan & Gneiting, Citation2010). For this so-called ‘equally weighted ensemble’ – abbreviated ‘EW’, the combined predictive density can be specified as

(2)

(2) where M is the total number of component models, and

is the predicted density from the component model m evaluated at the observation

. As the equally weighted ensemble can sometimes outperforms more complex model combination schemes (Claeskens et al., Citation2016; Pinar et al., Citation2012; Smith & Wallis, Citation2009), we also consider it as a benchmark model combination strategy in this paper.

3.4. Standard linear pool ensembles

SLP

SLP

A more sophisticated, while still intuitive approach is to let the combination weights be driven by the data so that the ensemble's performance can be optimised. A prominent example of such combination strategies in probabilistic forecasting literature is the linear pool approach (Gneiting & Ranjan, Citation2013), which seeks to aggregate individual predictive distributions through a linear combination formula. The combined density function of such ‘standard linear pool’ – abbreviated ‘SLP’ can be then specified as

(3)

(3) at observation

, where

is the weight allocated to predictive distribution m (Gneiting & Ranjan, Citation2013). The output of linear pools, as defined in (Equation3

(3)

(3) ), closely resembles that of finite mixture models, with both being a weighted average of an ensemble of predictive distributions. However, unlike finite mixture models, the calibration of a linear pool ensemble usually involves two stages. In the first stage, the component models are fitted in the training data, and the fitted models will generate predictions for the validation set, which constitute the Level 1 predictions. In the second stage, the optimal combination weights are learned from the Level 1 predictions by maximising (or minimising) a proper score. Since the weights allocated to component models should reflect their out-of-sample predictive performance, it is important to determine the combination weights by using models' predictions for the validation set instead of the training set.

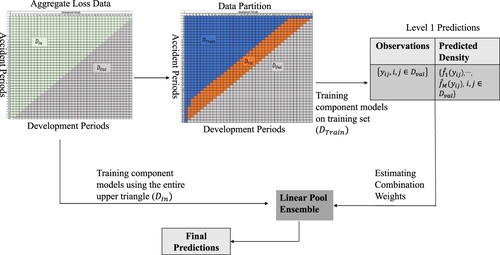

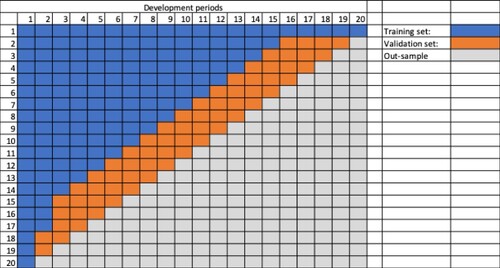

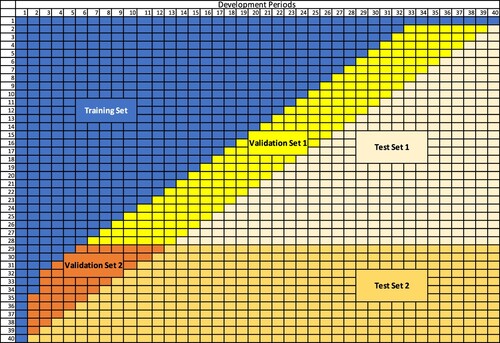

Figure illustrates the modelling framework for constructing a standard linear pool (SLP) ensemble, with the following steps:

Partition the in-sample data (i.e. the upper triangle

) into training set

(shaded in blue) and validation set

(shaded in orange). We allocate the latest calendar periods to the validation set to better validate the projection accuracy of models, which is also common practice in the actuarial literature.

Fit the M component models to the training data (i.e.

), that is, find the model parameters that maximise the Log Score within

(equivalent to Maximum Likelihood estimation).

Use the fitted models to generate predicted densities for incremental claims in the validation set, which forms the Level 1 predictions; Denote the Level 1 predictions as

Estimate the optimal combination weights from the Level 1 predictions by maximising the mean Log-Score achieved by the ensemble, subject to the constraint that combination weights must sum up to one (i.e.

) and each weight should be non-negative (i.e.

). We have then

(4)

(4) where

.

The sum-to-unity and non-negativity constraint on model weights are important for two main reasons. Firstly, those constraints ensure the ensemble not to perform worse than the worst component model in out-of-sample data (proof in Appendix C.2 ). Additionally, the non-negativity constraint can mitigate the potential issue of model correlation by shrinking the weights of some of the highly correlated models towards zero (Breiman, Citation1996; Coqueret & Guida, Citation2020).

Fit the component models in the entire upper triangle

, and then use the fitted models to generate predictions for out-of-sample data

. The predictions are combined using the optimal combination weights determined in step 4.

3.5. Accident and development period adjusted linear pools

ADLP

ADLP

driven by general insurance characteristics

driven by general insurance characteristics

The estimation of the SLP combination weights specified in (Equation4(4)

(4) ) implies that each model will receive the same weight in all accident periods. However, as previously argued, loss reserving models tend to have different predictive performances in different accident periods, making it potentially ideal for combination weights to vary by accident periods. In industry practice, it is common to divide the aggregate claims triangle by claims maturity and fit different loss reserving models accordingly (Friedland, Citation2010).

For instance, the PPCF model tends to yield better predictive performance in earlier accident periods (i.e. the more mature accident periods) by taking into account the small number of outstanding claims. In contrast, the PPCI model and the Chain-Ladder tend to have better performance in less mature accident periods as they are insensitive to claims closure (Chapter 5 of Taylor, Citation2000, pp. 151–165). Therefore, in practice, actuaries may want to assign a higher weight to the PPCF model in earlier accident periods and larger weights to the PPCI or Chain-Ladder model in more recent accident periods (Chapter 5 of Taylor, Citation2000, pp. 151–165).

The argument above can be applied to other loss reserving models. To take into account the accident-period-dependent characteristics of loss reserving models, we partition the loss reserving data by claims maturity, with the first subset containing incremental claims from earlier accident periods (i.e. the mature data) and the second subset containing incremental claims from more recent accident periods (i.e. the immature data). The data is thus split an arbitrary number of times K. The weight allocated to component model m for projecting future incremental claims in the kth subset (i.e. ) is then optimised using the kth validation subset (denoted as

):

(5)

(5) subject to

. The choice of split points, as well as

require care, as discussed below. We will mainly focus on the case K=2, but this can be easily extended to K>2; see Remark 3.4 and Appendix D.1, which consider K=3 and briefly discuss how K could be chosen.

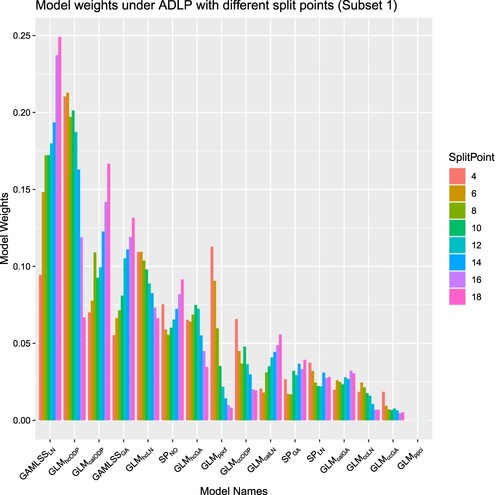

Table summarises multiple ensembles constructed by using different split points between the two subsets and the corresponding proportion of data points in the upper triangle in the subset 1. We do not test split points beyond accident period 33 due to the scarcity of data beyond this point. Results for all those options will be compared in Section 6, with the objective of choosing the optimal split point.

Table 2. Details of two-subsets data partition strategies.

Remark 3.2

The ‘empiricalness’ of the choice of split point might be seen as a limitation. It is hard to avoid as there might not be a closed formula for the best split point. While this does require extra work each period, the additional computation time is not significant. Also, many reserving models are iterative, suggesting minimal shifts in optimal partitions with a period's worth of extra experience. This would suggest that a relatively stable choice of split point can be made early in the analysis, and maintained over future periods, assuming consistent historical experience.

We now turn our attention to the definition of the validation sets to be used in (Equation5(5)

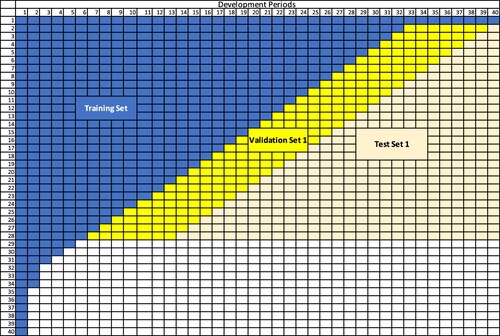

(5) ). This pure ‘band’ approach leads to the definitions depicted in Figure . Unfortunately this may ignore very important development period effects, because the second validation set (in orange) does not include any of the development periods beyond 12.

Figure 2. Data Partition Diagram: An illustrative example for ensembles that only consider impacts from accident periods.

More specifically, the training scheme for combination weights specified by (Equation5(5)

(5) ) implies the weights in Subset 2 (i.e.

) only derive from Subset 2 validation data (i.e.

). However,

does not capture the late development period effects in out-of-sample data: as shown in Figure ,

(shaded in orange) contains incremental claims from Development Period 2 to 12. However,

(shaded in light orange) comprises incremental claims from Development Period 2 to 40. Depending on the lines of business, the impact from late development periods on claims could be quite significant. For example, for long-tailed lines of business, such as the Bodily Injury liability claims, it usually takes a considerably long time for the claims to be fully settled, and large payments can sometimes occur close to the finalisation of claims (Chapter 4 of Taylor, Citation2000, pp. 151–165). In such circumstances, adequately capturing the late development period effects is critically important as missing those effects tends to substantially increase the risk of not having sufficient reserve to cover for those large payments that could occur in the late development periods.

This situation can be exacerbated by the data scarcity issue in for ensembles with late split points. For instance, partition strategy 17 only uses claims from Accident Period 34 to 40 in

, which corresponds to only 20 observations. However, those 20 observations are used to train the 18 model weights. When the data size is small relative to the number of model weights that need to be trained, the estimation of combination weights tend to be less statistically reliable due to the relatively large sampling error (Aiolfia & Timmermann, Citation2006; Bellman, Citation1966).

In light of the above concerns, we modify the training scheme such that the mature claims data in , which contains valuable information about late development period effects, can be utilised to train the weights in less mature accident periods. Additionally, since both

and

are used to train the weights in the second subset, the data scarcity issue in

can be mitigated. Under the modified training scheme, (Equation5

(5)

(5) ) is revised as

(6)

(6) subject to

. Since the combination weights estimated using (Equation6

(6)

(6) ) take account into impacts and features related to different Accident and Development period combinations, we denote the resulting ensembles as the Accident and Development period adjusted Linear Pools (ADLP).

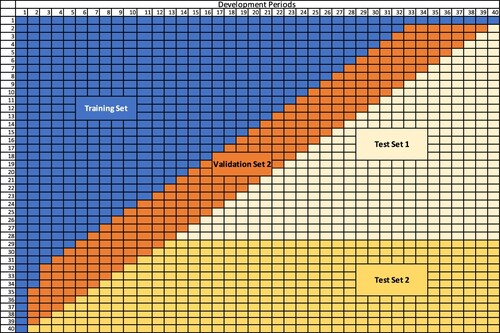

An example of data partition strategy under ADLP ensembles with K=2 is given in Figure and , where the new second validation set overlaps the previous first and the second validation set. Since the bottom set uses validation data from all accident periods, its results will hence correspond to the SLP results.

Based on the different partition strategies in Table , we have 17 ADLP ensembles in total. We use to denote the ADLP ensemble constructed from the

partition strategy. The fitting algorithm for ADLP is similar to the algorithm for fitting SLP ensembles described in Section 3.4, except for Step 4, where the combination weights are estimated using (Equation6

(6)

(6) ) instead of (Equation5

(5)

(5) ).

Remark 3.3

The partition of training and validation data is not new in the aggregate loss reserving context. In Lindholm et al. (Citation2020) and Gabrielli et al. (Citation2020), the data partition is performed on the individual claims level. The loss triangle for models training is constructed by aggregating half of the individual claims, and the validation triangle is built by grouping the other half of the individual claims. This is to accommodate the fact that only aggregate reserving triangles with size 12 x 12 are available in the original study (Gabrielli et al., Citation2020), which makes partitioning based on the aggregate level difficult due to data scarcity issue. In such scenario, this approach is advantageous as it efficiently uses the limited loss data by using the whole triangle constructed on the validation set to assess the model. Since a relatively large reserving triangle (i.e. 40 x 40) is available in this study and we are more interested on the component models' performance on the latest calendar periods to allocate combination weights based on individual projection accuracy, we decide to directly partition the loss triangle on the aggregate level.

Another approach for partitioning the aggregate loss reserving data is the rolling origin method (Al-Mudafer et al., Citation2022; Balona & Richman, Citation2020), which does not rely on the availability of individual claims information. However, this approach is mainly designed for selecting candidate models based on their projection accuracy rather than model combination.

The data partition strategy proposed in this paper is novel in three different ways. Firstly, it is dedicated to the combination of loss reserving models, as it explicitly considers the difference in component models' performance in different accident periods by partitioning the data by claims maturity. The additional partition step is generally unnecessary for model selection and has not been undertaken in the aforementioned loss reserving literature to our knowledge. However, as discussed in Section 3.5 and further illustrated in Section 6.2.2, this step is critical for combining loss reserving models as it allows the ensemble to effectively utilise the strengths of component models in different accident periods. Secondly, the proposed partition strategy takes into account the combination of effects from accident periods and development periods in the triangular data format by bringing forward the validation data in older accident periods to train the combination weights in subsequent accident periods. Thirdly, this paper is also the first one that investigates the impact of various ways of splitting the validation data on the ensemble's performance in the aggregate loss reserving context. This paper has found that the ensemble's performance can be sensitive to the choice of split point, which has not been explicitly addressed in the current literature. Motivated by the findings above, the optimal point to split the validation data (see detailed illustrations in Section 6.2.2) has been proposed in this paper, which might provide useful guidance for implementation.

Remark 3.4

This paper focuses on the case when K=2 (i.e. partitioning the data into two subsets). However, one can divide the data into more subsets and train the corresponding combination weights by simply using K>2 in (Equation6(6)

(6) ). To illustrate this idea, we provide an example when K=3. For instance, for

, another split point, say accident period 15, can be introduced after the first split at the accident period 5. We denote the resulting ensemble as

. Similarly, the third subset can be introduced to other ADLP ensembles. Table shows some examples of the three-subsets partition strategies based on the current ADLP ensembles.

Table 3. Details of three-subsets data partition strategies.

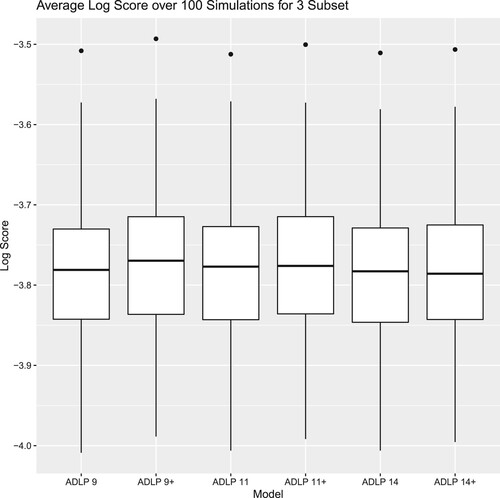

The details of the performance analysis of the ensembles listed in Table is given in Appendix D.1. Overall, there is no significant difference between the out-of-sample performance of the ADLP ensembles and the corresponding

ensembles. At least with the data set used in this study, we do not find enough evidence to support the improvement over the current ADLP ensembles by introducing more subsets for training the combination weights. This phenomenon might be explained by the small difference in the performance of component models after the accident period 20, limiting the ensemble's potential benefit gained from the diversity among the performance of component models (Breiman, Citation1996). Therefore, adding additional subsets in the later accident periods seems redundant at least in this example.

3.6. Relationship to the stacked regression approach

Another popular ensemble scheme is the stacked regression approach proposed by Breiman (Citation1996), which uses the Mean Square Error (MSE) as combination criterion. The idea of stacked regression approach has been applied in the mortality forecasts literature. For detailed implementation, one may refer to Kessy et al. (Citation2022) and Li (Citation2022). In Lindholm and Palmborg (Citation2022), the authors propose averaging the predictions from an ensemble of neural networks with random initialisation and training data manipulation to obtain the final mortality forecast, which could be thought of as a special case of stacked regression approach where an equal weight is applied to each component model.

In summary, under the stacked regression approach, the weights allocated to component models are determined by minimising the MSE achieved by the combined forecast:

(7)

(7) where

is the predicted mean from component model m.

As Yao et al. (Citation2018) suggest, both stacked regression and linear pools correspond to the idea of ‘stacking’. When MSE is used as optimisation criterion (i.e. the stacked regression approach proposed by Breiman (Citation1996)), the resulting ensemble is a stacking of means. When using the Log Score, the corresponding ensemble is a stacking of predictive distributions.

Although both the stacked regression and linear pools are stacking methods, their objectives are different: the stacked regression seeks to optimise the ensemble's central forecast accuracy, whereas linear pools strive to optimise the calibration and sharpness of distributional forecast. Despite showing success in combining point forecasts, stacked regression is not recommended to combine distributional forecasts (Knüppel & Krüger, Citation2022; Yao et al., Citation2018). The reasons are twofold as explained below.

Firstly, the combination criterion under the stacked regression approach, which is the Mean Squared Error, is not a strictly proper scoring rule (Gneiting & Raftery, Citation2007; Knüppel & Krüger, Citation2022). As discussed in Section 3.1, a strictly proper scoring rule guarantees that the score assigned to the predictive distribution is equal to the score given to the true distribution if and only if the predictive distribution is the same as the true distribution. However, since the MSE only concerns the first moment, a predictive distribution with misspecified higher moments could potentially receive the same score as the true distribution. Therefore, using MSE as the evaluation criterion does not give forecasters the incentives to correctly model higher moments. For an illustrative example, one might refer to Appendix C.3. In contrast, the Log Score is a strictly proper scoring rule, which evaluates the model's performance over the entire distribution, and encourages the forecaster to model the whole distribution correctly (Gneiting & Raftery, Citation2007). This property is particularly desirable for loss reserving, where the model's performance over the higher quantile of the loss distribution is also of interest to insurers and regulators.

The second advantage of using the linear pooling scheme over the stacked regression approach in combining distributional forecasts comes from the potential trade-off between the ensemble's performance on the mean and variance forecasts. In short, minimising the MSE will always improve the mean forecasts, but might harm the ensemble's variance forecasts when the individual variances are unbiased or over-estimated (Knüppel & Krüger, Citation2022). An illustrative proof of this idea can be found in Appendix C.3. To balance the trade-off between mean and variance forecasts, the combination criterion should take into account the whole distribution (or at least the first two moments). This can be achieved by using a strictly proper scoring rule (e.g. the Log Score).

4. Implementation of the SLP and ADLP

This section is dedicated to the implementation of the SLP and ADLP framework. It provides theoretical details for such implementation, and it complements the codes available at https://github.com/agi-lab/reserving-ensemble.

4.1. Minorisation–Maximisation strategy for log score optimisation

To solve the optimisation problem specified in (Equation5(5)

(5) ) and (Equation6

(6)

(6) ), we implement the Minorisation–Maximisation strategy proposed by Conflitti et al. (Citation2015) which is a popular and convenient strategy for maximising the Log Score. Instead of directly maximising the Log Score, a surrogate objective function is maximised. Define

(8)

(8) where

is an arbitrary weight, λ is a Lagrange Multiplier, and where

. Note that for the SLP, we have

. The weights are then updated iterativily by maximising (Equation8

(8)

(8) ):

(9)

(9) By setting

we have

Now, using the constraint

yields

Therefore,

, and the updated weights in each iteration become:

(10)

(10) where the weights will be initialised as

such that the constraints

and

can be automatically satisfied in each iteration. The iterations of weights are expected to converge due to the monotonically increasing property of the surrogate function (Conflitti et al., Citation2015). To promote computational efficiency, the algorithm is usually terminated when the difference between the resulting Log Scores from the two successive iterates is less than a tolerable error ϵ (Conflitti et al., Citation2015). We set

, which is a common tolerable error used in the literature. An outline for the Minorisation–Maximisation Strategy is given in Algorithm 1.

Note that this process can be used to optimise Equation4(4)

(4) , as this is a special case of the ADLP with K=1.

4.2. Prediction from ensemble

Based on (Equation3(3)

(3) ), the central estimate of incremental claims by the ensemble can be calculated as follows:

(11)

(11) To avoid abuse of notation,

in this section can be either the weight under SLP or the weight under ADLP. For ADLP ensembles,

is set to

in the calculation below if

.

To provide a central estimation for reserve, we simply aggregate all the predicted mean in the out-of-sample data: . Since insurers and regulators are also interested in the estimation of

reserve quantile, simulation is required. The process for this simulation is outlined in Algorithm 2.

5. Comparison of the predictive performance of the ensembles

5.1. Measuring distributional forecast accuracy: log score

To assess and compare the out-of-sample distributional forecast performance of different models, the Log Score is averaged across all the cells in the lower triangle :

(12)

(12) Since loss reserving models tend to perform differently in different accident periods, we also summarise the Log Score by accident periods:

(13)

(13) where

denotes the set of out-of-sample incremental claims in accident period i.

Remark 5.1

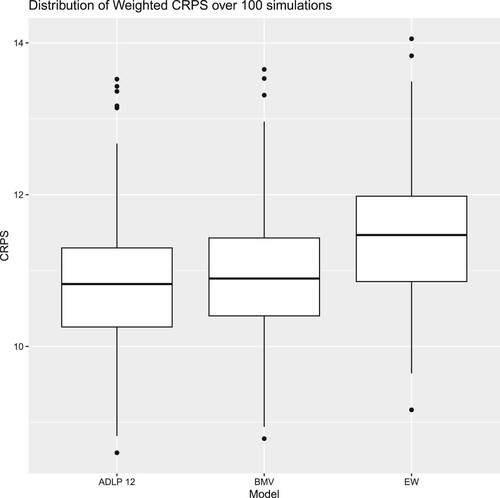

Besides Log Score, another popular proper score in literature is the Continuously Ranked Probability Score (CRPS) (Gneiting & Ranjan, Citation2011; Opschoor et al., Citation2017). This paper (and associated R package) also incorporates the CRPS as an alternative evaluation metric for the methods proposed. Further details and illustrative results are provided in Appendix D.3. Both scores generally lead to consistent conclusions.

5.2. Statistical tests

To determine whether the difference between the performance of two competing forecasting models is statistically significant, statistical tests are necessary. We consider the Diebold-Mariano test in this paper due to its high flexibility and wide application in probabilistic forecasting literature (Diebold & Mariano, Citation2002; Gneiting & Katzfuss, Citation2014). Diebold-Mariano test is one of the few tests that does not require loss functions to be quadratic and the forecast errors to be normally distributed, which is what we need in our framework. Furthermore, the Diebold-Mariano test is specifically designed to compare forecasts and inherently emphasises out-of-sample performance (Diebold, Citation2015). This feature aligns well with our objectives, as actuaries conducting reserving exercises are primarily concerned with the accuracy of projections. The performance of the Diebold-Mariano test has been discussed in Costantini and Kunst (Citation2011), who discovered a tendency for the test to favour simpler benchmark models. As a result, the Diebold-Mariano test serves as a conservative criterion for evaluating the performance of our proposed ensemble strategies.

Consider the null hypothesis stating that model G has equal performance as model F. The Diebold–Mariano test statistic can then be specified as Diebold and Mariano (Citation2002)

(14)

(14) where

and

are the average scores (here, Log Score) received by models F and G in out-of-sample data, and where

is the estimated standard deviation of the score differential. That is,

(15)

(15) Assuming the test statistic to asymptotically follow a standard Normal distribution, the null hypothesis will be rejected at the significance level of α if the test statistic is greater than the

quantile of the standard Normal distribution.

5.3. Measuring performance on the aggregate reserve level

On the aggregate level, an indication of the performance of the central estimate for reserve by model m can be provided by the relative reserve bias:

(16)

(16) Since insurers and regulators are also interested on the estimation of the

quantile of reserve, a model's performance in this quantile can be indicated by its relative reserve bias at the

quantile:

(17)

(17) where

is the

quantile of the true outstanding claims, and

is the estimated

quantile by model m. The calculation details for

can be found in Appendix C.1.

Remark 5.2

Of course, and

are not observable in practice. Thanks to our use of simulated data (see Section 6.1) though, we are able to calculate those from the full simulations and here have been calculated using simulated data in the bottom triangles. Furthermore, as these metrics are not proper scoring rules, care should be taken in usage of these results in ranking models.

6. Results and discussion

After presenting the ensemble modelling framework, this section provides numerical examples to illustrate the out-of-sample performance of the proposed linear pool ensembles SLP and ADLP, as well as the BMV and EW. Following the introduction of the synthetic loss reserving data in Section 6.1, Section 6.2.1 and Section 6.2.2 analyse the distributional forecast performance of the SLP and the ADLP ensembles on incremental claims level, respectively. Section 6.2.3 analyses the difference in models' performance using statistical test results. The performance of the proposed ensembles is also analysed at the aggregate reserve level in Section 6.3. Finally, we share some insights on the properties of component models by analysing the optimal combination weights yielded by our framework.

6.1. Example data

Our models are tested using the SynthETIC simulator (Avanzi et al., Citation2021), from which triangles for aggregate paid loss amounts, reported claim counts, and finalised claim counts are generated and aggregated into 40x40 triangles. To better understand the models' predictive performance and test the consistency of the performance, 100 loss data sets are simulated, and models are fit to each of the 100 loss data sets.

Simulated data is preferred in this study as it allows the models' performance to be assessed over the distribution of outstanding claims, and the quality of distribution forecast is the main focus of this paper. This paper uses the default version of SynthETIC, with the following key characteristics (Avanzi et al., Citation2021):

Claim payments are long-tailed: In general, the longer the settlement time, the more challenging it is to provide an accurate estimate of the reserve due to more uncertainties involved; (Jamal et al., Citation2018)

High volatility of incremental claims;

Payment size depends on claim closure: The future paid loss amount generally varies in line with the finalisation claims count (i.e. the number of claims being settled), particularly in earlier accident periods.

The key assumptions above are inspired by features observed in real data and aim to simulate the complicated issues that actuaries can encounter in practice when modelling outstanding claims (Avanzi et al., Citation2021). With such complex data patterns, it is challenging for a single model to capture all aspects of the data. Therefore, relying on a single model might increase the risk of missing important patterns present in the data.

6.2. Predictive performance at the incremental claims level

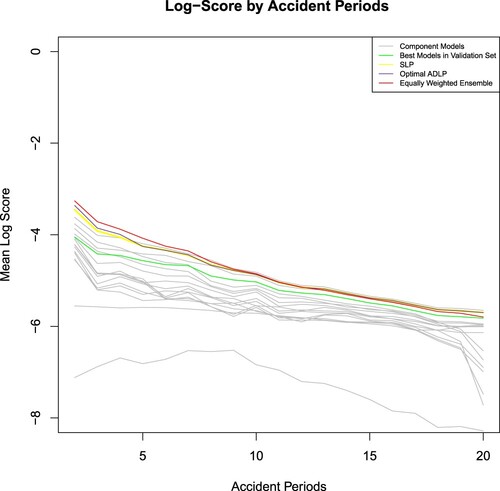

In terms of distributional forecast accuracy, the linear pool ensembles SLP and ADLP outperform both the EW ensemble and the BMV at the incremental claims level, measured by the average Log Score over 100 simulations. Furthermore when appropriately partitioning the validation data into two subsets, the performance of the ADLP is better than that of the SLP.

6.2.1. SLP: the standard linear pool ensemble

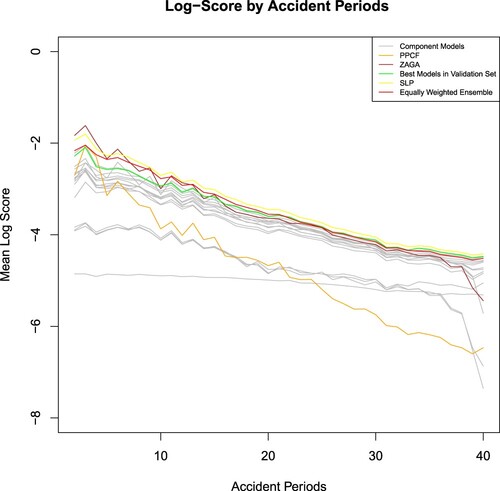

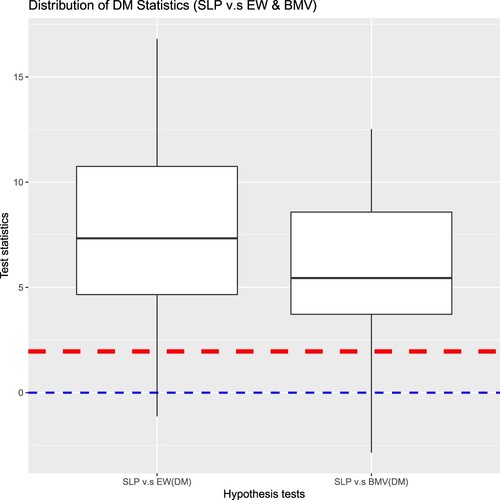

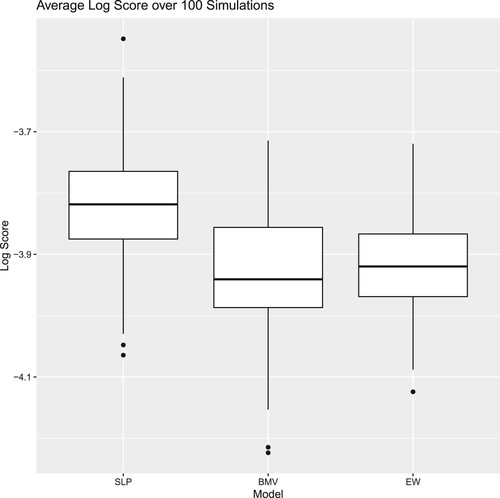

The distribution of the Log Score received by SLP, BMV, EW (i.e. the equally weighted ensemble) is illustrated in Figure . Even without any partition of the validation set for training combination weights, the linear pool ensemble achieves a higher average Log Score than its competing strategies (which means it is preferred), with similar variability in performance.

Figure 5. Distribution of Log Score over 100 simulations (higher is better): comparison among SLP, BMV and EW.

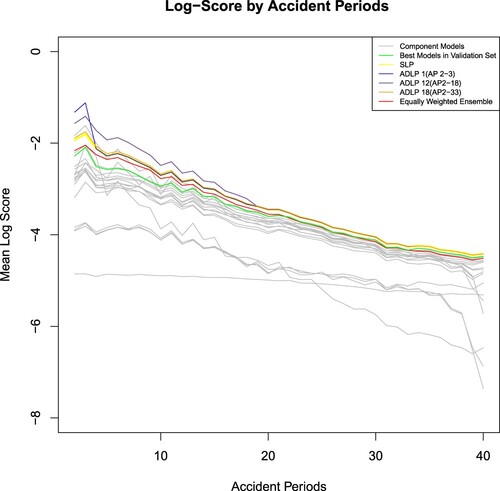

The performance of different methods in each accident period is illustrated in Figure , which shows the Log Score by accident periods being calculated using (Equation13(13)

(13) ), averaging over 100 simulations. The SLP strictly outperforms the BMV in all accident periods. Although the BMV, which is the GAMLSS model with Log-Normal distributional assumption (i.e.

) in this example, has the highest average Log Score among the component models, it does not yield the best performance in every accident period. For instance, the BMV under-performs the PPCF model in accident periods 3, and it is beaten by ZAGA GLM in earlier accident periods. Therefore, the common model selection strategy, which relies on the BMV to generate predictions for all accident periods, might not be the optimal solution.

Additionally, the SLP strictly outperforms the equally weighted ensemble after accident period 20. The under-performance of the equally weighted ensemble might be explained by the relatively poor performance of several models in late accident periods, which drag down the Log Score attained by the equally weighted ensemble. This relative poor performance is due to the fact that some models are more sensitive to data scarcity in more immature (later) accident years. For instance, the cross-classified models assume a separate parameter for each accident period, and later accident periods have little data available for training. Therefore, despite the simplicity of the equally weighted ensemble, blindly assigning equal weight to all component models tends to ignore the difference in the component models' strengths and might increase the risk of letting a few inferior models distort the ensemble's performance.

However, there is no substantial improvement over the equally weighted ensemble brought by SLP at the earlier half of the accident periods. The unsatisfactory predictive performance of SLP at earlier accident periods (i.e. mature accident periods) might be explained by the fact that a component model receives the same weight across all accident periods under the SLP, which is what motivated the development of the ADLP in Section 3.5. More specifically, and in this example, although the PPCF and the ZAGA model have relatively low overall Log Score due to their poor performance in immature accident periods, they have the best predictive performance among the component models at mature accident periods. However, since the weight allocated to each model reflects its overall predictive performance in all accident periods, the PPCF and the ZAGA GLM are assigned small weights as shown in Section 6.4, regardless of their outstanding predictive performance in earlier accident periods. The ADLP offers a solution to this problem, as illustrated in the following section.

6.2.2.

to

to

: linear pool ensembles with different data partition strategies

: linear pool ensembles with different data partition strategies

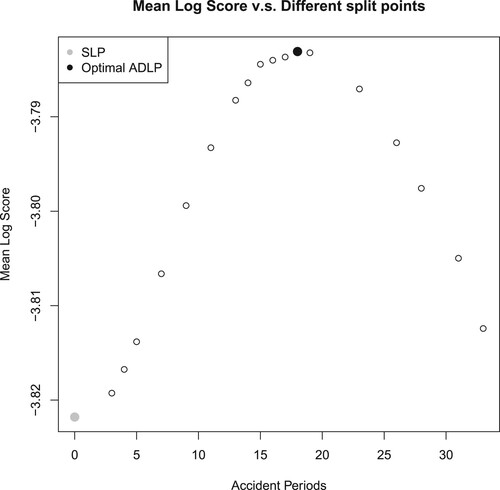

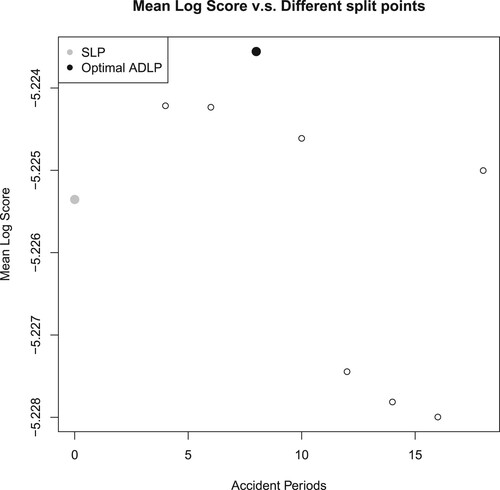

Figure plots the out-of-sample Log Score attained by ADLP ensembles with the different split points specified in Table , averaging over 100 simulations. The grey point represents the mean Log Score attained by SLP, which is a special case of ADLP ensemble with split point at accident period 0 (i.e. there is no subset). All the ADLP ensembles outperform SLP based on Log Score, supporting the advantages of data partitioning as discussed in previous sections.

As per Figure , the mean Log Score forms an inverse U shape, with the peak when splitting at accident period 18. The shape of the mean Log Score can be explained by the following reasons. If the split point is too early (e.g. split point at accident period 3), there is little improvement brought by ADLP over SLP due to the small number of data points in the first subset. This idea can also be illustrated in Figure , which dissects the ensembles' performance into accident periods. Although , represented by the orange line in Figure , has the best performance in the second and third accident period, its Log Score falls to the SLP's level afterward. Therefore, the overall difference between

and SLP is relatively tiny. As more accident periods are incorporated into the first subset, there is a greater overall difference between ADLP ensembles and SLP, which explains the increasing trend of mean Log Score before accident period 18.

Figure 8. Log score plot by accident periods (higher is better): comparison among different partition strategies.

However, for late split points (e.g. the split point at accident period 33), the performance of ADLP ensembles also tends to SLP as shown in Figure . This is because the first subset of those ADLP ensembles with late split points covers most of the accident periods, and the SLP can be interpreted as a special case of ADLP ensembles with one set that includes all accident periods. Additionally, late split points might make it hard for the ensemble to focus on and thus take advantage of the high-performing models in earlier accident periods.

Based on the above reasoning, an ideal partition strategy should balance the ensemble's performance in both earlier and later accident periods. In this study, the optimal split point is attained at accident period 18. As per Figure , , which has a split point on accident period 18, substantially outperforms its competitors in the first fifteen accident periods by effectively capturing the high Log Score attained by a few outstanding models.

However, we want to acknowledge that this example is for illustration purposes. The recommendation made above may be more relevant to the loss data sets that share similar characteristics with the synthetic data used in this paper. For practical application, if there is no substantial difference among the performance of component models in earlier accident periods, one may consider simply allocating the first half of accident periods to the first subset.

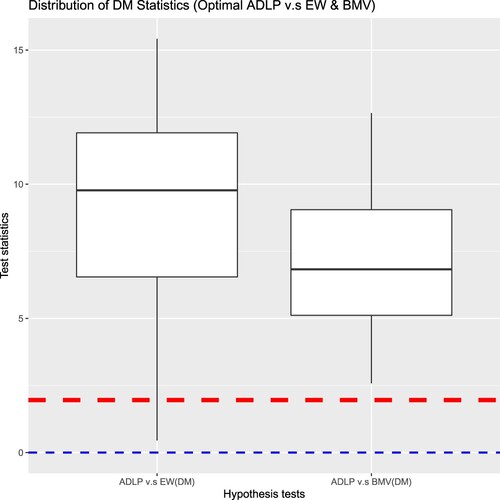

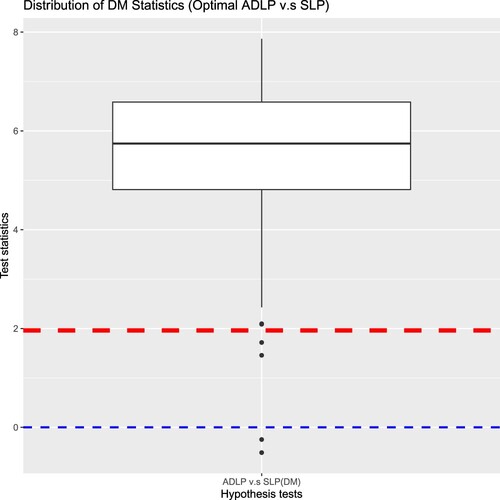

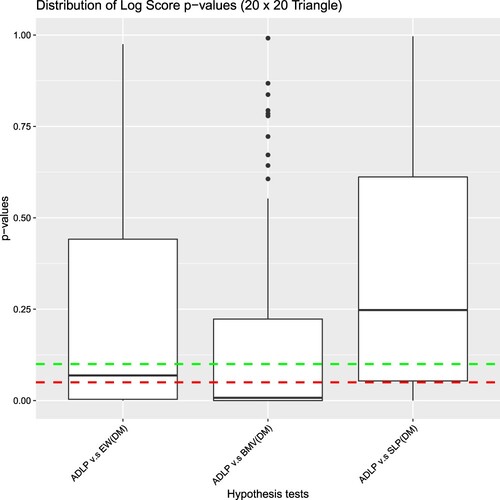

6.2.3. Analysing the difference in distributional forecast performance using statistical tests

The Log Scores of the ADLP in Figure are clearly higher than that of the SLP, but we haven't established whether this difference is statistically significant yet. To analyse the statistical significance of the improvement over the traditional approaches brought by the ADLP ensembles, we run the Diebold-Mariano test on each simulated dataset (see (Equation14(14)

(14) )), with the null hypothesis stating that the two strategies have equal performance. In particular, we test

, which has the best predictive performance measured by Log Score, against the EW and BMV, respectively. If the null hypothesis is rejected, the

should be favoured.

Figure illustrates the distribution of test statistics under the Diebold-Mariano test, with the red dashed line marking the critical value at the customary significance level. The test statistics above the red dashed line indicate those datasets where the null hypothesis has been rejected, and the number of rejections under each test is summarised in Table . Since the null hypothesis is rejected for most simulations and almost all the test statistics are above zero (as marked by the blue dashed line),the DM test implies a decision in favour of

.

Table 4. The number of datasets (out of 100) when is rejected under

significance level.

We now investigate where the difference in the performance between and BMV or the EW comes from. Since we expect the improvement over the traditional approaches to partially come from the ability of linear pools to optimise the combination of component models based on Log Score, firstly, we test the Standard Linear Pool (SLP) against the traditional approaches for each simulated data set. As per Figure and Table , the null hypothesis is rejected for most simulated data sets, and most of the test statistics are positive, SLP is favoured over BMV and the EW in most cases.

As per the discussion in Section 3.5, we expect to improve SLP by better taking into the typical reserving characteristics found in the simulated data set. Based on the results shown in Table and Figure ,

is favoured by the statistical tests in most simulated data sets.

Therefore, the outstanding performance of can be decomposed into two parts. Firstly, it improves the performance of the model selection strategy and the equally weighted ensemble, which can be credited to the advantages of linear pools in model combination. Secondly, as a linear pool ensemble being specially tailored to the general insurance loss reserving purpose,

further improves the performance of SLP by considering the impact from accident periods and development periods on the ensemble's performance.

6.3. Predictive performance on aggregate reserve level

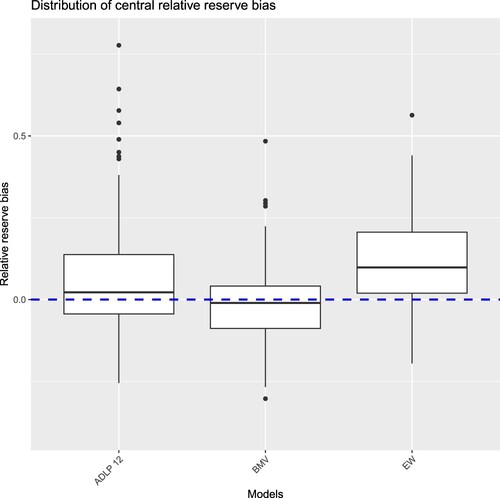

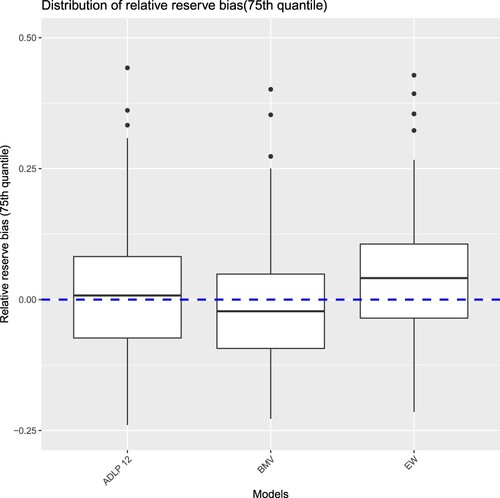

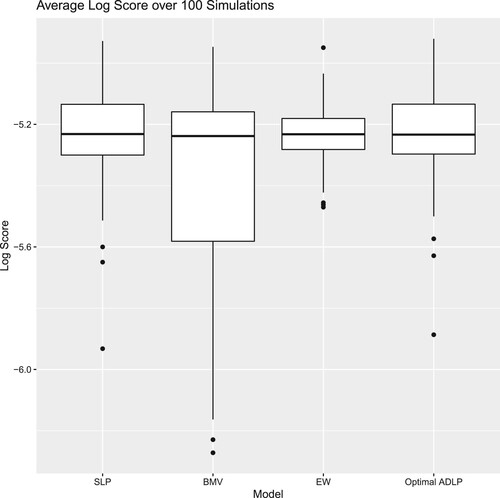

Since the central estimation of reserve and its corresponding risk margin at the quantile are required to be reported by insurers under Australian prudential regulatory standards (APRA, Citation2019), we took this as an example of actuarial application and have also calculated those two quantities following the methodology specified in Section 5.3 in order to gain some insights regarding the performance of the three different models (ADLP, EW, and BMV). The box plots in Figures and illustrate the distributions of relative reserve bias for central and quantile estimations, respectively, across 100 simulations. A blue dashed line indicates the point of

bias in each plot.

The equally weighted ensemble displays a notably significant inclination towards overestimation, evident under both central and quantile estimation approaches. Although the BMV demonstrates relatively good performance in central reserve estimation, it frequently under-predicts at the quantile. In contrast, the ADLP ensemble experiences fewer instances of underestimation or overestimation biases under both central and quantile reserve estimation methods.

Remark 6.1

Note that, as discussed in Section 5.3, the reserve bias metrics are not proper scoring rules, and so care needs to be taken in interpreting the above results. Nevertheless, we consider the above useful in terms of developing intuition regarding the performance of each model.

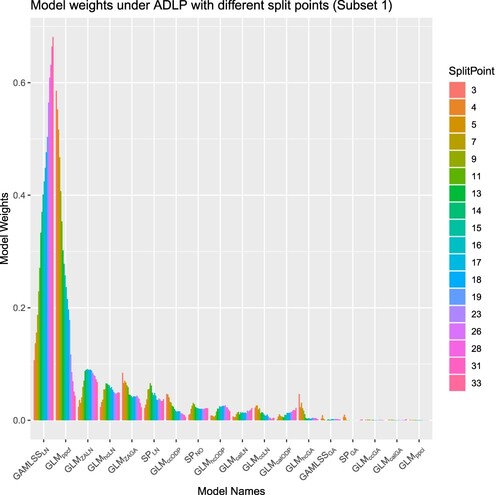

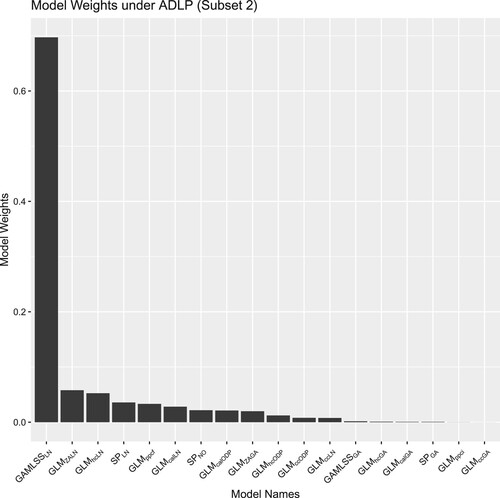

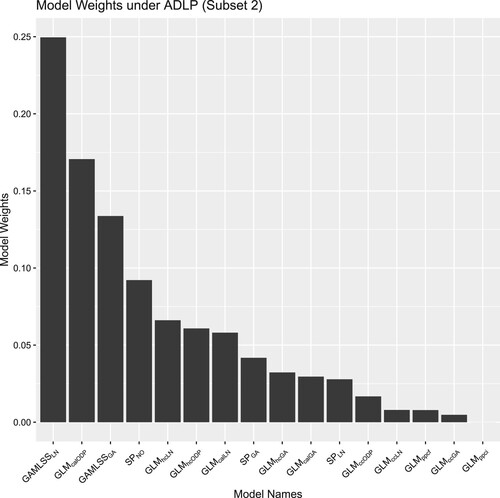

6.4. Analysis of optimal model weights

Analysing the combination weights can potentially help modelers gain insights about the component models' properties, and examples can be found in CitationTaylor (Chapter 5 of Citation2000, pp. 151–165) and Kessy et al. (Citation2022). Therefore, we extract the combination weights from ADLPs, with Figure plotting the model weights in subset 1 using different split points, and Figure illustrating the model weights in subset 2. Since model weights under ADLPs in the second subset are trained using the whole validation data – remember (Equation6(6)

(6) ), they will be identical to the combination weights under the SLP.

Several models are worth highlighting. The weight allocated to , which is the BMV (identified as the model with the highest Log Score averaging over different accident periods) in this example, is increasing with the split point as shown in Figure . Since the combination weights reflect the relative validation performance of component models in the ensemble,

tends to have better performance in more recent accident periods as its weight increases when data from later accident periods are added to the validation set. Indeed, as per Figure ,

is allocated with the largest weight in the second subset. However, the weight allocated to

has demonstrated an opposite pattern: there is a substantial weight given to

with the earliest split point, whereas the weight decreases to a small value as the split point increases. This phenomenon might be explained by the ability of

to capture claims finalisation information. Since claims in earlier accident periods are close to being finalised,

tends to yield better predictive performance in earlier accident periods by taking this factor into account. This property of PPCF models has also been noted by CitationTaylor (Chapter 5 of Citation2000, pp. 151–165).

Besides analysing individual models, we also analyse the weights' pattern at the group level by clustering the component models based on their structure and distributional assumptions. In terms of model structure, all models with standard cross-classified structures are assigned with small weights based in Figures and . Due to the relatively complex characteristics of the simulated data, the standard cross-classified models tend to perform poorly as they only consider the accident and development period effects. We have also found that only one of them will be allocated with a large weight for those models with similar structures. In fact, the models with the four largest weights in both Figures and all have different structures. This finding highlights the advantage of using linear pools as the weights of potential correlated models can be shrunk towards zero by imposing the non-negativity constraint on weights.

From the angle of distributional assumptions, all models with ODP distributional assumptions are assigned relatively small weights, whereas models with Log-Normal distributional assumptions are allocated larger weights. Due to the relatively large tail-heaviness of incremental claims' distribution, Log-Normal tends to provide a better distributional fit as it has a heavier tail than the ODP distribution. Additionally, we have also found that Zero-Adjusted Gamma distribution (ZAGA) is assigned with a larger weight when the split occurs earlier as shown in Figure . The weight allocation might be explained by the tendency for a greater proportion of zero incremental claims in earlier accident periods as the claims from earlier accident periods are usually closer to their maturity: these are the ones that are the most likely to have 0 claims in the very late development periods (the later accident years haven't reached that stage in the training set). Since the design of the ZAGA model allows the probability mass of zero incremental claims to be modeled as a function of development periods (i.e. the closeness of claims to their maturity), ZAGA tends to yield better performance on when only the most mature accident periods are used. Interestingly, the Zero-Adjusted Log-Normal (ZALN) model has been assigned with small weights for earlier accident periods splits, which might be explained by the fact that it provides overlapping information with the ZAGA model.

6.5. Evaluating performance across varying data sizes

To assess the robustness of our results against different data sizes, we conducted additional tests using a reserving triangle, which represents the aggregation of claims at semi-annual levels. To ensure consistency, we employed the same sets of simulation parameters from the SynthETIC generator to simulate the claims payments in both cases.

Using a similar partition strategy as the case, the

triangle is split into a training set, a validation set and a out-of-sample set to build the ensembles and evaluate models' performance. This split is illustrated in Figure in Appendix D.2.

Following the same modelling framework as in Section 3, the results for the triangle are obtained and listed in Appendix D.2. In summary, the ADLP ensembles continue to out-perform the SLP, the equally weighted ensemble and the BMV in most simulations based on the Log Score, even when considering the smaller triangle size. Based on the statistical test results, the majority of p-values falls below the conventional

level when assessing the score differential between the ADLP and the BMV (indicating statistical significance). Although the count of statistically significant outcomes experiences a slight reduction when assessing the performance difference between the ADLP and the equally weighted ensemble, it remains substantial, constituting over half of the total simulations. However, the difference between SLP and ADLP becomes less significant as the triangle size decreases. This suggests that (unsurprisingly) simpler data partition strategies may be suitable when dealing with relatively small triangle sizes.

As for the change in the composition of the ensembles, we have found (again unsurprisingly) that more parsimonious models receive larger weights on the triangle. Since there are no instances of zero incremental claims at higher aggregation levels, models utilising zero-adjusted distributions (specifically, ZALN and ZAGA) are excluded from the ensembles before fitting.

The results above suggest that, while it may be possible to apply our ensembling framework to even smaller triangles, the added value of doing so might not be as significant. In practice and nowadays, we expect that most companies would be in a position to analyse data at sufficient level of granularity in order to reap the potential benefits of the approach developed in this paper.

7. Conclusions

In this paper, we have designed, constructed, analysed and tested an objective, data-driven method to optimally combine different models for reserving. Using the Log Score as the combination criterion, the proposed ensemble modelling framework has demonstrated its potential in yielding high distributional forecast accuracy.

Although the standard ensembling approach (e.g. the standard linear pool) has demonstrated success in non-actuarial fields, it does not yield the optimal performance in the loss reserving context considered in this paper. To address the common general insurance loss data characteristics as listed in Section 1.2, we introduced the Accident and Development period adjusted Linear Pools (ADLP) modelling framework. This includes a novel validation strategy that takes account into the triangular loss reserving data structure, impacts and features related to different Accident and Development period combinations.

In particular, the partitioning strategy not only effectively utilises variations in component models' performance in different accident periods, but also captures the impact from late development periods on incremental claims, which can be particularly important for long-tailed lines of insurance business. Based on the illustrative examples which use simulated aggregate loss reserving data, the ADLP ensembles outperform traditional approaches (i.e. the model selection strategy BMV and the equally weighted ensemble EW) and the standard linear pool ensemble, when the Log Score, CRPS, or relative reserve bias at the quantile are used for the assessment.

Furthermore, we also investigate the impact of a split point on the ensemble's performance. Given the composition of models included in the ensemble, we have found that splitting data into two subsets close to the middle point (i.e. between accident period 15 and 20 for a 40 x 40 loss triangle) can generally yield satisfactory performance by taking the advantages of several high-performing models in earlier accident periods while maintaining sufficient data size for training the combination weights in the both subsets.

Acknowledgments

Authors are very grateful for comments from two anonymous referees, which led to significant improvements of the paper. Earlier versions of this paper were presented at the Actuaries Institute 2022 All Actuaries Summit, the 25th International Congress on Insurance: Mathematics and Economics (Online), the 2022 Virtual ASTIN/AFIR Colloquium, the One World Actuarial Research Seminars, as well as at the 2023 CAS Annual Meeting in Los Angeles. The authors are grateful for constructive comments received from colleagues who attended those events.