ABSTRACT

Studies have shown the potential of explainer videos. An alternative is a written explanation, as found in science textbooks. Prior research suggests that instructional explanations sometimes lead to the belief that a topic has been fully understood, even though that is not the case. This ‘illusion of understanding’ may be affected by the medium of the explanation. Also, prior studies comparing the achievement from explainer videos and written explanations come to ambiguous results. In the present experimental study (video group: nV = 78, written explanation group: nW = 72), we compared the effects of an explainer video introducing the concept of force with those of a written explanation containing the script of the video, presented as though it were a page from a textbook. Both groups achieved comparable degrees of declarative knowledge; however, the written explanation group had a significantly higher belief of understanding (partial η² = 0.043) that did not correspond with their actual learning progress. Consequences of this may include lower cognitive activation and less motivation in science classrooms if learning environments exclude further learning tasks that allow for a more realistic picture of understanding. This suggests that it may sometimes be harmful to leave physics learners to their own devices with instructional material.

Introduction

In both informal and formal science education, explainer videos have gained immense popularity in recent years. Students watch explainer videos online, for example, to prepare for exams or simply for entertainment (Wolf & Kratzer, 2015; Wolf & Kulgemeyer, 2016). Formats such as the flipped classroom have further fostered the use of explainer videos. Many teachers now produce explainer videos for students who watch them at home and work on related learning tasks in the classroom afterwards (Wagner & Urhahne, 2021). Lately, the various purposes of explainer videos have led to numerous studies in science education and psychology on their effectiveness (e.g. Findeisen et al., 2019; Kulgemeyer, 2018a; Mayer, 2021). Such studies have addressed not only the potential of explainer videos for science teaching but also their limitations, including a potential shift to non-constructivist learning environments (Kulgemeyer, 2018a).

Kulgemeyer and Wittwer (2022) showed that, similar to instructional explanations, some physics explainer videos presenting common misconceptions as explanations fostered an ‘illusion of understanding’ – the false belief that an explanation was easy and that a topic has been thoroughly understood and requires no further instruction. The illusion of understanding has potentially harmful consequences for further teaching. It might lead to perceiving further instruction as redundant and irrelevant (similar to Acuna et al., 2011) and, ultimately, to lower cognitive activation in science teaching (Kulgemeyer & Wittwer, 2022). Psychological research suggests that pictures may influence one’s sense of how much has been understood (Salomon, 1984), thus leading to an inaccurate estimation of one’s own learning progress. Students sometimes assume that they perform better in a knowledge test when learning with video than when learning with print media (Dahan Golan et al., 2018). The ‘shallowing hypothesis’ suggests that the medium is important to the perception of the explanation. For example, learners are used to superficially interacting with online media regularly, aiming for quick rewards such as a ‘thumbs up’ (Salmerón et al., 2020). This may overshadow how they interact with explainer videos in the context of learning. Explainer videos involving complex principles (as in physics) may be more likely to lead to an illusion of understanding than written explanations. However, it is also possible that written explanations, especially in the form of textbook entries, are more likely to lead to an illusion of understanding. A (reverse) shallowing hypothesis may result in textbook entries and written explanations appearing to be more reliable and formal to learners. Supposedly, learners’ regular interactions with textbook entries are less superficial as the latter appear mostly in a formal learning context. Thus, they may even be convinced that they have learned more from textbook entries than they actually did, again resulting in an illusion of understanding.

Regarding achievement, Kulgemeyer (2018a) suggests that explainer videos primarily foster the achievement of declarative knowledge. Studies, however, come to ambiguous results. Lee and List (2019) found an advantage of learning from text, whereas List and Ballenger (2019) did not find a clear difference.

Since explainer videos have become an increasingly established medium in science teaching, their limitations should be examined as closely as their potential; the present study contributes to this goal. In the present experimental study, we address the question of whether physics explainer videos, compared to written explanations (as present in science textbooks), are more likely to result in the ‘illusion of understanding’. Also, we explore the effects on the achievement of declarative knowledge for the case of a short introduction to the concept of force. The comparison between written explanations and explainer videos is noteworthy for science education as it may help teachers choose between an explainer video and a textbook when the need arises. Consequences for science teaching are also discussed.

Literature review

Learning science with explainer videos

Explainer videos are usually short videos providing comprehensible explanations of principles (Findeisen et al., 2019; Kulgemeyer, 2018a). According to Findeisen et al. (2019), explainer videos should be limited to a length of 6 min, taking into consideration the limitations of one’s working memory. In recent studies, they have alternatively been referred to as, e.g. educational videos (Brame, 2016), or instructional videos (Schroeder & Traxler, 2017).

Wolf and Kulgemeyer (2016) argue that explainer videos can contribute to science education in many different ways depending on who the explainer and explainee are (science teacher/online source versus science learner and science teacher versus science learner, respectively). Learning from videos is a common sight in (flipped) science classrooms. Wolf and Kulgemeyer (2016), however, remind us that tasks in which students produce their own videos also have high educational value. They are potential learning opportunities for both science content knowledge and science communication skills. Wolf and Kulgemeyer (2016) further argue that analysing explainer videos can be useful for science teachers (e.g. to learn alternative explaining approaches) and for learners (e.g. to see alternatives to their teachers’ explanations). In an analysis of the literature since 1957, Pekdag and Le Marechal (2010) highlight the potential benefits of including videos in learning environments in chemistry education. They argue that explainer videos might even replace written explanations in textbooks (Pekdag & Le Marechal, 2010, 14) – a question the present study addresses.

Over the years, numerous studies have examined the videos’ effects on students’ achievements. Hartsell and Yuen (2006) remind us that online explainer videos have the potential to deconstruct teachers’ monopoly of knowledge presentation by offering alternative explanations that are easily accessible. Other studies provide evidence for interactivity being key to learning (e.g. Delen et al., 2014; Hasler et al., 2007).

One of the main research topics in this area involves the design principles underlying explainer videos and their impact on the videos’ effectiveness. These design principles have mostly been derived from multimedia learning (Findeisen et al., 2019; Mayer, 2021) and science education research (Kulgemeyer, 2018b).

For example, Brame (2016) postulates guidelines based on the cognitive load theory, including ways to reduce the extraneous cognitive load by ‘weeding’ (erasing unnecessary information). In addition, Schroeder and Traxler (2017) suggest that reducing distractions positively influences achievement. This finding mirrors the one from studies on instructional explanations, which highlight the benefits of focusing solely on the concept instead of on irrelevant details (‘minimal explanations’) (Anderson et al., 1995).

Hoogerheide et al. (2016) observed that, compared to peer explanations, adults as explainers in videos appear more competent, thus influencing the learning outcome. Seidel et al. (2013) suggest that the method of introducing the explained principle and, afterwards, illustrating it with examples might be more fruitful for achieving content knowledge (rule-example strategy). The main quality criterion, however, is adapting to prior knowledge (Wittwer & Renkl, 2008). The tools required to reach such levels of adaptation have been empirically validated for science education in particular, and may even be domain-specific for science (Kulgemeyer & Schecker, 2009, 2013): (1) the language level, (2) the level of mathematisation, (3) representation forms and demonstrations, and (4) examples, models, and analogies.

Adaptation cannot easily be achieved through a medium such as explainer videos (Findeisen et al., 2019; Kulgemeyer, 2018a; Kulgemeyer & Peters, 2016) because, unlike instructional explanations provided by teachers, they cannot include a diagnosis of the students’ understanding, followed by a more elaborate process of adaptation. Integrating interactive elements (Merkt et al., 2011) is, therefore, important. In addition, the integration of explainer videos into a learning process, for instance, by incorporating learning tasks, is a criterion for success (Altmann & Nückles, 2017; Webb et al., 2006). Lloyd and Robertson (2012) suggest that procedural knowledge may be better gained from explainer videos as compared to print media. For learning physics, explainer videos are effective in imparting declarative knowledge: the explained principle can be used after the video to explain the examples that are shown in the video. To achieve flexible conceptual knowledge, it might be necessary to incorporate learning tasks that require the application of the explained principle to unknown examples (Kulgemeyer, 2018b).

In addition, explainer videos are most effective for learners with low prior knowledge; for learners with higher prior knowledge, self-explanatory attempts work better (Kulgemeyer, 2018b; Wittwer & Renkl, 2008). Acuna et al. (2011) found that learners with low prior knowledge benefitted from instructional explanations while learners with high prior knowledge did not. Wittwer and Renkl (2008) highlighted that instructional explanations were likely to fail for learners with high prior knowledge. While they have a role when introducing a new concept to novice learners, they are not useful in a more advanced stage of a teaching unit (Kulgemeyer, 2018b). Thus, for the present study, we decided to focus on learners with low prior knowledge because they are the target group of explainer videos.

The ‘illusion of understanding’ and learning from text and video

The ‘illusion of understanding’ refers to the mistaken belief that one has understood a topic and that the instruction was easy, neither of which is actually the case (Chi et al., 1994; Wittwer & Renkl, 2008). Therefore, it is a special case of a belief in understanding, marked by a difference between the assumed degree of understanding and objective comprehension but also by the impression that learning material is easier than it actually is. Kulgemeyer and Wittwer (2022) provide a definition that differentiates between two criteria of how an instructional explanation is perceived by the learners and two criteria of how learners perceive their own understanding of the topic:

An illusion of understanding is the mistaken belief that (1) an instructional explanation is (a) a scientifically correct and (b) a high-quality explanation in terms of comprehensibility, and (2) the learners themselves (c) understood the concept, and (d) do not require further instruction.

That is close to Paik and Schraw’s (2013) view of the illusion of understanding hypothesis; they also include characteristics of (1) the material and of (2) the learners in their concept. Research provides several possible factors that influence these four criteria with respect to explainer videos. For example, Lowe (2003) suggests that graphical animations, in general, support an illusion of understanding. Graphical animations may turn a learner’s focus to attention-grabbing information rather than the relevant principles that are explained. In an early work in this field, Salomon (1984) found that learners using video feel that the medium is more efficient than print, while participants learning in print outperformed the video group in an inference-making test. Wiley (2019) calls this a potential ‘seduction effect’ of images. Kulgemeyer and Wittwer (2022) found that presenting misconceptions, close to everyday experiences, as alternative explanations is highly convincing and fosters the illusion of understanding. They also highlight that misconceptions constitute a major educational obstacle for those who learn science from explainer videos.

A difference between the perceived learning gain and the objective learning gain may be more prevalent in learning from digital media when compared to learning from print media. Studies show that students who read print outperform those reading digital media in reading comprehension tests, while the digital media group believes they score higher (Dahan Golan et al., 2018; Singer Trakhman et al., 2019). Reading print also seems more efficient than listening to the same information in a podcast; however, learners prefer podcasts (Daniel & Woody, 2010).

Researchers have identified a particular tendency for learners from print to outperform those learning from video (Walma van der Molen & van der Voort, 2000). For complex principles (such as those that physics learning would require), different studies show an advantage in the case of print (DeFleur et al., 1992; Furnham et al., 1987; Gunter et al., 1986; Walma van der Molen & van der Voort, 2000). In a more recent work, Lee and List (2019) found an advantage to learning from text, while List and Ballenger (2019) did not find a clear difference. Similarly, Zinn et al. (2021) compared text and video in technical education and did not find a difference between the media. Merkt et al. (2011), however, provide evidence that the reason may lie in the level of interactivity; videos with interactive elements are as effective as print. This further underlines the need to integrate explainer videos into the cognitive activities described above.

However, the cognitive theory of multimedia learning suggests that learning via two channels (e.g. by learning from graphical representations that are commented on by a narrator) can, under certain conditions, help to overcome the limitations of working memory (Mayer, 2001). A key criterion for success is that learners be cognitively activated and use their prior knowledge to integrate the information from both channels. Explainer videos tend to fulfil these criteria and, being a multimodal form of information presentation, may be superior to textual explanations.

In a nutshell, results regarding learning from video compared to print media are ambiguous, with a tendency towards finding text to have an advantage. However, it remains unclear which medium is better, and this also holds for explanations in physics.

Recent research has shown that the context of learning from instructional explanations is also important for both actual learning and the potential illusion of understanding. Salmerón et al. (2020) deem the so-called ‘shallowing hypothesis’ important when learning with explanations from online videos. It refers to the fact that interactions with online media in everyday contexts are often superficial and oriented toward quick rewards such as ‘likes’ (Annisette & Lafreniere, 2017). Indeed, processing the information presented in a digital medium may follow this superficial approach (Salmerón et al., 2020). Also, the shallowing hypothesis may imply an advantage of written explanations over explainer videos with respect to achievement.

The basic idea is that the medium overshadows how the explanation is dealt with. Thus, a medium that learners are used to interacting with only superficially might lead to the mistaken impression that an explanation was easy and that they understood it, while that was actually not the case. Explainer videos, therefore, might lead to an illusion of understanding.

However, the underlying idea of the shallowing hypothesis might also suggest a potential illusion of understanding when learning from written explanations, especially when they appear like formal textbooks. Contrary to their interaction with explainer videos, learners may tend not to interact superficially with written explanations. They interact with such explanations first and foremost in a formal teaching context, which may lead to written explanations from textbooks appearing to be a more reliable source. Therefore, they may believe that they have learned more from textbook entries than from explainer videos about the same subject because the information seems more trustworthy and this impression overshadows the actual achievement.

When it comes to comparing written explanations and explainer videos, there is no clear evidence that one should be preferred over the other in science classrooms. In particular, online explainer videos have a broad range of qualities because anyone can upload content on online platforms like YouTube (Bråten et al., 2018; Kulgemeyer & Wittwer, 2022). However, while several studies in educational psychology suggest that written explanations are at least as efficient as explainer videos, it is unclear whether explainer videos foster an unjustified belief of understanding – or, in other words, if science learners using explainer videos feel more competent than learners using written explanations from textbooks. As described above, a potential illusion of understanding may highly influence how learners perceive their classroom lessons and, in particular, how open they are to further instruction. This forms the premise of the present study.

Materials and methods

Research questions

Learners with low prior knowledge are often considered the main target group for instructional explanations in general (Acuna et al., 2011; Wittwer & Renkl, 2008). Based on the aforementioned theoretical considerations, we identify a desideratum in the research on the effects of physics explainer videos versus those of written explanations that appear textbook-like on learners with low prior knowledge.

First, based on the ambiguous evidence from prior studies, it is unclear whether an explainer video or a written explanation has an advantage in terms of achievement.

Regarding a possible illusion of understanding, previous research suggests that video and, in particular, the animations and images contained in the videos have a ‘seductive effect’ (Wiley, 2019) which makes learners overestimate their knowledge and regard further instruction as unnecessary. However, the ‘shallowing hypothesis’ reminds us that the medium matters in one’s perception of an explanation. Written explanations that appear to be textbook entries give the impression of being more reliable and convincing, which also results in learners overestimating their knowledge gains.

Therefore, the research questions of the present study are:

Do explainer videos foster declarative knowledge when compared to textbook-like written explanations?

Do explainer videos foster an illusion of understanding when compared to textbook-like written explanations?

Since, as described above, the evidence from prior studies is ambiguous, it is not possible to predict which medium has an advantage in terms of achievement. Thus, in an attempt to answer Question 1, we test the following hypotheses:

Hypothesis 1: The medium of an explainer video leads to greater achievement of declarative knowledge than written explanations.

Alternative hypothesis 1a: Textbook-like written explanations lead to greater achievement of declarative knowledge than explainer videos.

Alternative hypothesis 1b: Explainer videos and written explanations lead to equal achievement of declarative knowledge.

Hypothesis 2: The medium of an explainer video leads to a greater illusion of understanding than written explanations.

Alternative hypothesis 2a: Textbook-like written explanations lead to a greater illusion of understanding than explainer videos.

Alternative hypothesis 2b: Explainer videos and written explanations lead to an equal illusion of understanding.

Kulgemeyer (2018a) suggests that the learning progress from explainer videos is primarily limited to declarative knowledge directly presented in the explanation. For conceptual knowledge, which includes flexibly transferring the newly learned principles to various other examples not directly included in the explanation, more active learning followed by learning tasks is likely to be required. Therefore, our study addresses declarative knowledge as a construct of interest.

Context

The study took place in the introductory physics course for pre-service elementary teachers at a German university. The course is mandatory for first-semester students and covers basic school physics. The experiment was conducted before they were introduced to the concept of force. It was mandatory to participate; however, pre-service teachers could opt not to have their data used for further analysis.

Participants and design

The sample consisted of 181 learners, 31 of whom opted out of having their data used, so that 150 learners remained in the sample. As is often the case, none of them had opted to enrol in a physics course in the last three years of school, the academic preparation phase of the German school system. Thus, they can be expected to have low prior knowledge in physics, comparable to that of secondary school students. The sample is, therefore, a convenience sample. However, it is suitable for an exploration because the characteristics that distinguish these learners from school students being introduced to the concept of force are not expected to strongly affect the dependent variables of the study (Gollwitzer et al., 2017).

Of these 150 learners, 18 identified themselves as male, 132 as female, and none as non-binary. This is not unusual since primary education is a subject that is, at least in Germany, predominantly elected by female pre-service teachers. Their final school exam grade was, on average, M = 2.37 (SD = 0.490) on a scale from 1 (‘very good’) to 6 (‘unsatisfactory’). In the German state of North Rhine-Westphalia, where the course was taught, the average final school exam grade was 2.43 in 2020 (Footnote 1), which does not differ significantly from the sample (t(105) = −1.34; p = .18). A random generator assigned the learners to two groups: (1) video (nV = 78) and (2) written explanation (nW = 72).

Materials: explainer video and written explanation

For this study, we decided to use the explainer video from Kulgemeyer and Wittwer (2022), which introduced the concept of force. It has been designed following a framework for explainer videos that has been empirically researched for validity (Kulgemeyer, 2018a, 2018c). This framework has emerged from a systematic literature review regarding the criteria for successful instructional explanations with a focus on physics education (Geelan, 2012; Kulgemeyer, 2018a; Wittwer & Renkl, 2008). It is in line with other frameworks on the quality of explainer videos, for example, Brame (2016) and Findeisen et al. (2019). The framework includes seven factors influencing the effectiveness of explainer videos (Kulgemeyer, 2018a): (1) the structure of the video, (2) adaptation to a group of addressees, (3) the use of appropriate tools to achieve adaptation, (4) minimal explanations that avoid digressions and keep the cognitive load low, (5) highlighting that the explanation itself is relevant to the learner and the other highly relevant parts, (6) providing learning tasks following the video, and (7) a focus on a scientific principle. These seven factors can be manipulated using 14 different features (Table 1).

Table 1. Framework for effective explanation videos (from Kulgemeyer, 2018a).

Kulgemeyer and Wittwer (2022) report, based on an expert rating, that these criteria have been fulfilled in the explainer video. The video is designed to be comparable to online explainer videos (e.g. those found on YouTube). Therefore, this study addresses learning from such videos in a laboratory setting.

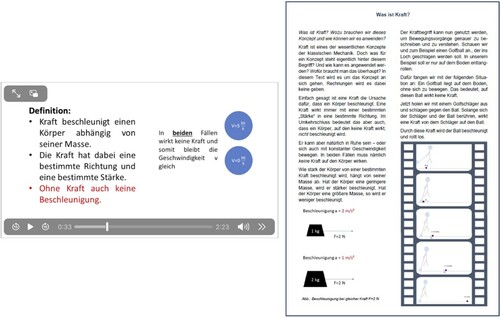

To test the effect of the medium, the content should remain unchanged. The written explanation, therefore, includes just the script of the video, i.e. the literal transcript. This written explanation is presented combined with two images directly from the video, as the presence of images is a prerequisite for a good explanation (Table 1). All the features included in Table 1 are, therefore, included in the written explanation to a comparable degree as in the video. The written explanation, including the illustrations, covers 1.5 pages of text and is presented in a digital format, not in print. The readability coefficient LIX works well with the German language (Lenhard & Lenhard, 2014) and indicates low text complexity (LIX = 35.6).
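The LIX value reported above can be reproduced in principle with a few lines of code. The following is a minimal sketch, assuming the common definition of LIX as the average sentence length plus the percentage of long words (more than six letters). The function name `lix` and the simplified sentence splitting are illustrative assumptions and not the tool used in the study.

```python
import re


def lix(text: str, long_word_min_len: int = 7) -> float:
    """Return the LIX readability index of `text` (assumed standard definition)."""
    # Words are runs of letters; this also covers German umlauts and ß.
    words = re.findall(r"[^\W\d_]+", text)
    # Simplified sentence splitting on ., !, ? and : (an assumption of this sketch).
    sentences = [s for s in re.split(r"[.!?:]+", text) if s.strip()]
    if not words or not sentences:
        raise ValueError("text must contain at least one word and one sentence")
    # Long words have more than six letters, i.e. at least seven.
    long_words = sum(1 for w in words if len(w) >= long_word_min_len)
    return len(words) / len(sentences) + 100.0 * long_words / len(words)


# Illustrative German sentences, not taken from the study material.
print(lix("Eine Kraft wirkt. Der Ball rollt weiter."))
```

Lower values indicate easier texts, so short sentences with few long words yield a low index.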

Figure 1. Overview of the materials used (in German as originally used). Left: screenshot of the video, right: illustrated written explanation using the same text and illustrations as used in the video (1 of 1.5 pages) (video and text by Erber, 2019).

Table 2 provides a brief overview of the script of the video. The content of the textbook page is identical (translated from the German language). Both the video (2:23 min) and the textbook page (1.5 pages) were comparable under the criteria of Kulgemeyer (2018a). They followed a rule-example structure with a summary at the end. They made use of examples (e.g. a rolling golf ball) and representation forms (e.g. pictures with stick figures). The levels of mathematisation and language were identical. They focused on the concept (minimal explanation) and avoided digressions. They used prompts to highlight the most important parts and addressed the explainee directly.

Table 2. Parts of the script of the explainer video and the textbook page, translated from the German language. Representation forms are not included, and the examples used are only summarised briefly. Adapted from Kulgemeyer and Wittwer (2022).

Measures

We used the same instruments as those used by Kulgemeyer and Wittwer (2022). In addition to demographics, we measured (1) control variables (gender, final school grade, experience with explainer videos, academic self-concept in physics [all pre-test]), (2) the declarative knowledge that was part of the explanation (pre- and post-test), and (3) the illusion of understanding (just post-test).

Control variables

A core aspect of the control variables was the final school exam grade (‘Abitur’). This grade is a good indicator of one’s success in academics (e.g. Binder et al., 2021) and, therefore, reflects an academic skill set that is useful for learning from both textual and video content. This should be comparable between the groups. We also measured the gender of the participants and the academic self-concept in physics (5-point Likert scale, six items, α = 0.82, e.g. ‘I get good grades in physics’; scale taken from Kulgemeyer & Wittwer, 2022).

The experience with explainer videos was measured using 12 different items to obtain insights into various possible reasons for using explainer videos and the learner’s perception of explainer videos in general. All the items were 5-point Likert scales ranging from 1 (total agreement) to 5 (total disagreement). The items included the experience (e.g. ‘I have often watched explainer videos regarding school or university topics’), the perception of videos on YouTube (e.g. ‘I usually select the explainer video with the highest number of likes’), and possible reasons for watching explainer videos (e.g. ‘I watch explainer videos to prepare for exams’). The primary purpose of these items was to ensure comparability between the experimental conditions. The items were not expected to form a coherent scale; thus, no reliability was reported.

We decided against including similar items to measure the experience with written explanations and textbook entries. Unlike with explainer videos, we did not expect any student to leave school without any experience with textbooks. Because the learners were assigned randomly to an experimental condition, the likelihood that the groups vary in that characteristic was very low compared to the danger of a longer test instrument harming the test motivation. This decision is addressed as a limitation of the study in the discussion section.

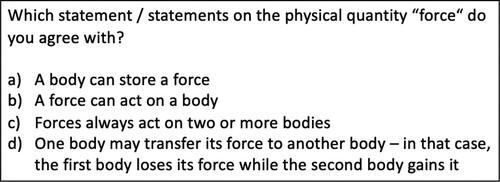

Declarative knowledge on the concept of force

The scale for declarative knowledge of the concept of force was originally designed by Kulgemeyer and Wittwer (2022) by analyzing the script of the explainer video. To ensure content validity, explicit learning opportunities need to exist in both the explainer video and the written explanation for all items in the test instrument. The final scale consisted of 11 multiple-choice items. A sample item is shown in Figure 2. The reliability of the scale is sufficient in both the pre-test (α = 0.77) and the post-test (α = 0.78). The same scale has been used in Kulgemeyer and Wittwer (2022) and is motivated by instruments such as the force concept inventory (Hestenes et al., 1992). Since the test items mirror the learning opportunities in video and text, the test instrument was designed for learners with low prior knowledge. More arguments concerning validity can be found in Kulgemeyer and Wittwer (2022).
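For readers who want to check a reliability coefficient of this kind on their own data, the following minimal sketch computes it under the assumption that α denotes Cronbach's alpha. The function name `cronbach_alpha` and the example scores are hypothetical and do not stem from the study.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a table of scores: one row per respondent, one column per item."""
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in scores]) for i in range(n_items)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical item scores (1 = correct, 0 = incorrect) for five respondents.
example = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 1, 0],
]
print(round(cronbach_alpha(example), 2))
```

The coefficient approaches 1 when the items vary together and drops towards 0 when they are unrelated.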

Figure 2. Sample item for conceptual knowledge and misconceptual knowledge (translated from the German language, taken from Kulgemeyer and Wittwer, 2022).

Illusion of understanding

To measure the illusion of understanding, we used the instrument of Kulgemeyer and Wittwer (2022) that covers all four criteria for an illusion of understanding mentioned above: An illusion of understanding is the mistaken belief that (1) an instructional material is (a) a scientifically correct and (b) a high-quality explanation in terms of comprehensibility, and (2) the learners themselves (c) understood the concept, and (d) did not require further instruction.

This is close to the conception of an illusion of understanding described by Paik and Schraw (2013). The final scale consisted of 7 items. The reliability was found to be sufficient (α = 0.76). Six items were 5-point Likert scale items ranging from 1 ‘totally agree’ to 5 ‘totally disagree’. Sample items are ‘I need to learn more on the concept force’, ‘I would advise a friend to prepare with this video/text for an exam’, and ‘I understood what force means in physics’. In one further item, administered before both the pre-test and the post-test, we asked the learners to estimate how many of the test items they thought they could solve. The post-test item was included in the scale.

In the present article, we use the following wording: the scale is called the ‘illusion of understanding scale’, while the score on this scale is given the more neutral label ‘belief of understanding’. Following the research reported above, the belief of understanding can be qualified as an illusion of understanding when it does not reflect objectively measured understanding. The score is therefore called an ‘illusion of understanding’ only when there is empirical evidence of a difference between the belief and actual understanding. How this was carried out in the analysis is discussed in the following section.

Overview of the study, procedure, and analysis

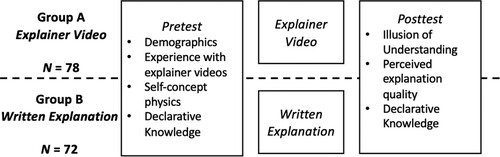

The study followed an experimental design. Testing time was limited to 45 min.

After a short introduction, the learners were assigned to either the explainer video group or the written explanation group by a random generator. Afterwards, they were given a pre-test covering demographics, experience with explainer videos, and declarative knowledge of the concept of force. Then, they worked with the medium of the experimental condition they had been assigned to. They took the test individually and were not allowed to browse the internet or use any material other than the medium they had been given.

The pre-test and post-test instruments were identical between the groups, except for the phrasing of some statements in the perceived explanation quality scale, which matched the respective medium (e.g. ‘the video is well explained’ versus ‘the text is well explained’). Both groups were tested online in a testing environment based on LimeSurvey. The video and written explanations were embedded in this online testing environment.

We analyzed the learning gains of the two groups (hypothesis 1 and alternatives). We used t-tests, one-way ANOVAs, and ANCOVAs to compare the results from the pre-tests and the post-tests of declarative knowledge, and the scores on the illusion of understanding scale. Subsequently, we compared the correlations between declarative knowledge and the scores on the illusion of understanding scale for each experimental condition to determine whether the learning gains and the belief of understanding were associated.

If hypothesis 2 were true, we would expect a significant difference between the experimental conditions in their illusion of understanding scores, while the actual learning progress would be comparable. In addition, we expected the belief of understanding to show no correlation with actual declarative knowledge, since it would be an illusion and not a realistic view of the learners' understanding.

Results

Descriptive statistics

The descriptive statistics of the study variables, including the control variables, are presented in Table 3.

Table 3. Descriptive Statistics of Study Variables.

Control variables

Using t-tests, we did not find any significant differences between the groups regarding the control variables. Most importantly, gender (χ²(1) = 0.10, p = 0.75), the final school grade (t(104) = 0.01, p = 0.99, d = 0.00), and the academic self-concept in physics (pre: t(148) = 1.15, p = 0.25, d = 0.19; post: t(142) = 0.56, p = 0.57, d = 0.09) did not differ. This comparability is a prerequisite for comparing the groups.
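The effect sizes d reported alongside the t-tests can be related to the test statistics. The following is a minimal sketch, assuming an independent-samples t-test with pooled variance and the group sizes given in the abstract (78 and 72); under these assumptions, d = t · √(1/n₁ + 1/n₂). The function name `cohens_d_from_t` is hypothetical, and the group sizes for the post-test (which had fewer respondents) are not stated, so only the pre-test value is recomputed.

```python
import math


def cohens_d_from_t(t_value, n1, n2):
    """Cohen's d for two independent groups, recovered from a pooled-variance t statistic."""
    return t_value * math.sqrt(1 / n1 + 1 / n2)


# Pre-test academic self-concept in physics: t(148) = 1.15.
# Assumption: group sizes 78 (video) and 72 (written explanation).
d_pre = cohens_d_from_t(1.15, 78, 72)
print(f"d = {d_pre:.2f}")
```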

Research question 1: do explainer videos foster declarative knowledge when compared to textbook-like written explanations?

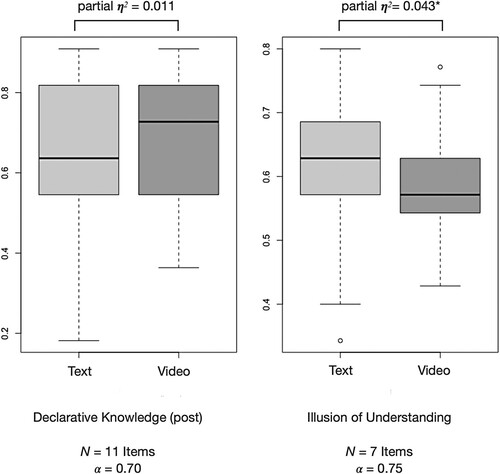

We did not find a difference between the groups in their performance in the post-test (F(1,148) = 1.55, p = 0.215, η² = 0.010) or the pre-test (F(1,147) = 0.048, p = 0.828, η² = 0.000) on declarative knowledge. The figure above shows a comparison of the groups on this scale. The learning gain (ANCOVA of the post-test results adjusted for the pre-test scores) did not reveal any differences either (F(1,147) = 1.70, p = 0.20, partial η² = 0.011). These results are in line with alternative hypothesis 1b.

Research question 2: do explainer videos foster an illusion of understanding when compared to textbook-like written explanations?

Hypothesis 2: greater illusion of understanding from explainer videos

The written explanation group scored higher than the video group on the illusion of understanding scale with a small effect (F(1,148) = 5.87, p = 0.017, η² = 0.038). An ANCOVA adjusted for the knowledge in the post-test (to control for objectively measured declarative knowledge) also revealed this difference (F(1,147) = 6.65, p = 0.011, partial η² = 0.043).
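The reported effect sizes can be cross-checked against the F statistics. As a minimal sketch, (partial) η² follows from an F value and its degrees of freedom as η² = F · df₁ / (F · df₁ + df₂); for a one-way ANOVA this coincides with η². The function name `partial_eta_squared` and the dictionary labels are hypothetical; the numbers are the ones reported above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """(Partial) eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for the illusion of understanding scale.
reported_tests = {
    "ANOVA": (5.87, 1, 148),
    "ANCOVA adjusted for post-test knowledge": (6.65, 1, 147),
}

effect_sizes = {
    label: partial_eta_squared(f_value, df_effect, df_error)
    for label, (f_value, df_effect, df_error) in reported_tests.items()
}
for label, eta_sq in effect_sizes.items():
    print(f"{label}: eta squared = {eta_sq:.3f}")
```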

To gain further insights into how strongly the belief of understanding and the actual understanding were associated, we conducted a correlational analysis. A positive correlation between the actual understanding and the belief of understanding would hint at a realistic picture of one’s own performance. We did not find a correlation between the belief of understanding and declarative knowledge in the post-test (video group: r = 0.08, p = 0.49; written explanation group: r = −0.16, p = 0.17). Following Gollwitzer et al. (2017, p. 547), the difference between these correlation coefficients is not significant (Z = 1.45, p = 0.148). Overall, these results support the alternative hypothesis 2a.
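The comparison of the two correlation coefficients can be illustrated with a short sketch. It assumes that the procedure is the common Fisher z test for two independent correlations and that the group sizes are those given in the abstract (78 and 72); neither assumption is stated explicitly in this section. The function name `compare_independent_correlations` is hypothetical.

```python
import math
from statistics import NormalDist


def compare_independent_correlations(r1, n1, r2, n2):
    """Two-sided Fisher z test for the difference between two independent correlations."""
    # Fisher transformation makes the sampling distribution approximately normal.
    z1, z2 = math.atanh(r1), math.atanh(r2)
    standard_error = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z_stat = (z1 - z2) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))
    return z_stat, p_value


# Correlations between belief of understanding and post-test knowledge, as reported.
# Assumption: n = 78 (video group) and n = 72 (written explanation group).
z_stat, p_value = compare_independent_correlations(0.08, 78, -0.16, 72)
print(f"Z = {z_stat:.2f}, p = {p_value:.3f}")
```

Under these assumptions the sketch yields values that agree with the reported Z and p after rounding.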

Discussion

We did not find a difference between the explainer video group and the written explanation group in their performance on the post-test on declarative knowledge. This result is in line with alternative hypothesis 1b and with prior research on media comparison regarding print and video, which has yielded ambiguous results. In our study, video was explicitly not the superior medium in terms of achievement.

The results, overall, are in line with the alternative hypothesis 2a. The written explanation group was significantly more convinced that they understood the topic and did not require further instruction. The video group was more critical of their own performance. To label this as an illusion of understanding, we need evidence to identify this result as an erroneous belief. Two arguments support the conclusion of an illusion of understanding:

The first argument comes from a theoretical perspective and deals with learning the concept of force. From what we know about this, it may be argued that both groups are most likely to require further instruction to fully understand the complex concept of force. It is well known in physics education that this particular concept is difficult to grasp because of multiple misconceptions and because a conceptual change is very hard to accomplish in this context (Alonzo & Elby, 2019). A short video or a short text is most probably not sufficient. Also, as science educators, we would like our students to be sufficiently critical toward themselves so that they remain open to further instruction. The written explanation group was significantly more convinced of their understanding. Since, as argued, it is likely that they require further instruction, this is probably a false belief and, therefore, an illusion of understanding.

The second argument comes from an empirical perspective. First of all, the ANCOVA of the illusion of understanding scale adjusted for knowledge in the post-test reveals a significantly higher belief of understanding in the written explanation group. In addition, both groups score between 67% and 70% (with no ceiling effect) in a declarative knowledge test that was designed only for learners with low content knowledge (Kulgemeyer & Wittwer, 2022). Both groups, however, are significantly positive about their understanding. That suggests an illusion of understanding. Also, the correlational analysis revealed no relationship between test performance in the post-test and the belief of understanding in either group. This supports the assumption that neither group has a realistic belief of understanding.

In a nutshell, neither group reflected well on their actual learning gains, but the written explanation group performed worse in this respect.

Based on these two arguments, we posit that both groups have an illusion of understanding, but the written explanation group has a higher degree of an illusion of understanding. The medium of written explanation (appearing as a textbook entry) is the most probable, but not the only, reason for this (cf. ‘study limitations’).

Overall, our study shows that written explanations similar to textbook entries can potentially lead to an illusion of understanding. As discussed above, the reason might lie in an inverse ‘shallowing hypothesis’ (Salmerón et al., 2020). The ‘shallowing hypothesis’ suggests that learners transfer their strategies of superficial everyday interaction with online content to learning with digital media. Its inverse may explain why learners felt they learnt more from the written explanation. They may interact less superficially with textbook-like explanations because they have barely any everyday strategies for interacting with written explanations; they encounter such explanations first and foremost within the context of formal teaching. Thus, students may perceive textbook entries as more convincing because they appear more formal, closer to past classroom experiences, and closer to what their teachers advise them to learn from. This may affect how they perceive the content of an explanation in this format, resulting in an illusion of understanding. Novice physics learners, in particular, may be distracted by the superficial appearance as they lack the skill to critically evaluate the content. Written explanations might appear to be more reliable than explainer videos, even though the actual achievement is not necessarily superior (as in the present study). However, the effect is rather small. Both groups show signs of an illusion of understanding.

Study limitations

There are several limitations to the present study. First, the results were derived in the context of physics and, in particular, for only one topic in the subject. We cannot safely assume that the results will be the same for other domains or topics. In addition, a limitation of the design is that learning skills, including reading and video comprehension skills, were not assessed in this study. This was partly due to restrictions on the testing time, and also because learning from text and video involves a multitude of academic skills, not all of which could have been assessed using a single test instrument. Both video and text were rather short, which might lead to an underestimation of the effects of the media; longer videos might outperform longer texts. On the other hand, shorter material probably reduced the impact of reading or video comprehension skills on the results.

We decided that our approach is suitable for an explorative study. We used the final school exam grade as a measure of the academic skills that are useful for learning from both text and video and, additionally, measured the participants’ experience with explainer videos. Neither differed between the groups. The participants had average final school exam grades. That indicates average academic skills including reading skills, especially because the average final school grade (the ‘Abitur’) is a well-known predictor of one’s success in academics (Binder et al., 2021). However, it still is a convenience sample that does not fully mirror average learners at schools. Overall, the results should be treated as first insights and should not be generalised. Also, we conducted an experimental laboratory study, and external validity, therefore, was rather low.

The sample size (N = 150) might have resulted in the study being insufficiently powered, limiting the informative value of the correlational analysis between the actual understanding and the belief of understanding. We conducted a post hoc power analysis using G*Power (Erdfelder et al., 1996), which revealed that, for the effect size observed in the present study (r = −0.16, |ρ| = 0.3), the power to detect an effect of this size was 0.97 (with α = 0.05), which is above the recommended power of 0.8 (Cohen, 1988). Thus, even a very small effect for the written explanation group is unlikely.

Furthermore, the null result regarding the achievement of declarative knowledge may be due to a lack of statistical power. Therefore, we additionally conducted a post hoc power analysis. The effect size of this ANOVA of the learning gains was η² = 0.01 (a small effect following Cohen (1988)). The power to detect an effect of this size was determined to be 0.23 with α set at 0.05. Thus, we cannot completely rule out a small effect. However, this effect would have been far below the desired effect size for education (e.g. Hattie, 2009). For medium effect sizes (η² = 0.06), the power was determined to be 0.86, above the recommended power of 0.8. We therefore regard our study as having adequate statistical power. The sample size is already relatively large for an experiment in science education research and very small effects might not be meaningful. Therefore, the power analyses do not affect our interpretations of the outcomes.
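The order of magnitude of these power values can be approximated without G*Power. The following is a rough sketch, assuming a two-group comparison with N = 150 and a normal approximation to the noncentral test distribution (for one numerator degree of freedom, the F test is equivalent to a two-sided t-test). G*Power uses the exact noncentral distribution, so its values differ slightly from this approximation. The function name `approx_power_two_groups` is hypothetical.

```python
import math
from statistics import NormalDist


def approx_power_two_groups(eta_squared, n_total, alpha=0.05):
    """Approximate power of a two-group F test (df1 = 1) via a normal approximation."""
    # Cohen's f squared from eta squared, then the noncentrality parameter delta.
    f_squared = eta_squared / (1 - eta_squared)
    delta = math.sqrt(f_squared * n_total)
    normal = NormalDist()
    z_crit = normal.inv_cdf(1 - alpha / 2)
    # Probability of exceeding the critical value in either tail.
    return normal.cdf(delta - z_crit) + normal.cdf(-delta - z_crit)


# Effect sizes discussed in the text; N = 150 is the total sample size.
power_small = approx_power_two_groups(0.01, 150)
power_medium = approx_power_two_groups(0.06, 150)
print(f"small effect: {power_small:.2f}, medium effect: {power_medium:.2f}")
```

The approximation lands close to the reported values of 0.23 and 0.86, which illustrates why a small effect cannot be ruled out while a medium effect would very likely have been detected.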

Study Implications

First of all, our results support the growing evidence that learning with digital media is not automatically superior in terms of achievement.

We see no reason that written explanations from textbooks should not remain a part of physics teaching. Also, we argue that our results highlight the potential dangers of leaving students to their own devices with either explainer videos or written explanations. Both groups show signs of an illusion of understanding. Prior research also indicated that learning with explanations, in general, works only when the explanations are well-embedded in ongoing cognitive activities such as learning tasks (Wittwer & Renkl, 2008). Perhaps working with learning tasks helps learners assess their own performance better. Employing such learning tasks that build on the content of an instructional explanation may, therefore, serve as a countermeasure to the effect of written explanations on the illusion of understanding. Further research is needed to gain more insights into learning through explanations using different media. However, we reiterate that an illusion of understanding has potentially harmful consequences for physics learning (Acuna et al., 2011; Kulgemeyer & Wittwer, 2022): if learners are convinced they have fully understood a topic, they may reject further teaching, deeming it redundant and irrelevant. This way, they are less cognitively activated (cognitive activation being a core dimension of instructional quality in science). Also, if they get to choose further instructional material (e.g. in self-directed learning), they might stop once they are convinced of their understanding, and that might apply to both explainer videos and written explanations. This underscores that physics teaching does not stop with providing an explanation in a particular medium; further learning tasks are required to make learners aware of what they do not understand. Further studies exploring the effects of an illusion of understanding are required.

Ethical statement

The study met the ethics/human subject requirements of the University of Paderborn at the time the data was collected.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Acuna, S. R., Rodicio, H. G., & Sanchez, E. (2011). Fostering active processing of instructional explanations of learners with high and low prior knowledge. European Journal of Psychology of Education, 26(4), 435–452. https://doi.org/10.1007/s10212-010-0049-y

- Alonzo, A. C., & Elby, A. (2019). Beyond empirical adequacy: Learning progressions as models and their value for teachers. Cognition and Instruction, 37(1), 1–37. https://doi.org/10.1080/07370008.2018.1539735

- Altmann, A., & Nückles, M. (2017). Empirische Studie zu Qualitätsindikatoren für den diagnostischen Prozess [empirical studies on quality criteria for a diagnostic process]. In A. Südkamp, & A.-K. Praetorius (Eds.), Diagnostische Kompetenz von Lehrkräften: Theoretische und methodische Weiterentwicklungen (pp. 134–141). Waxmann.

- Anderson, J. R., Corbett, A. T., Koedinger, K. R., & Pelletier, R. (1995). Cognitive tutors: Lessons learned. Journal of the Learning Sciences, 4(2), 167–207. https://doi.org/10.1207/s15327809jls0402_2

- Annisette, L. E., & Lafreniere, K. D. (2017). Social media, texting, and personality: A test of the shallowing hypothesis. Personality and Individual Differences, 115, 154–158. https://doi.org/10.1016/j.paid.2016.02.043

- Binder, T., Waldeyer, J., & Schmiemann, P. (2021). Studienerfolg von Fachstudierenden im Anfangsstudium der Biologie. Zeitschrift für Didaktik der Naturwissenschaften, 27(1), 73–81. https://doi.org/10.1007/s40573-021-00123-4

- Brame, C. J. (2016). Effective educational videos: Principles and guidelines for maximizing student learning from video content. CBE—Life Sciences Education, 15(4), es6–es6. https://doi.org/10.1187/cbe.16-03-0125

- Bråten, I., McCrudden, M. T., Stang Lund, E., Brante, E. W., & Strømsø, H. I. (2018). Task-oriented learning with multiple documents: Effects of topic familiarity, author expertise, and content relevance on document selection, processing, and use. Reading Research Quarterly, 53(3), 345–365. https://doi.org/10.1002/rrq.197

- Chi, M. T. H., Leeuw, N. D., Chiu, M.-H., & Lavancher, C. (1994). Eliciting self-explanations improves understanding. Cognitive Science, 18(3), 439–477.

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Lawrence Erlbaum Associates.

- Dahan Golan, D., Barzillai, M., & Katzir, T. (2018). The effect of presentation mode on children’s reading preferences, performance, and self-evaluations. Computers & Education, 126, 346–358. https://doi.org/10.1016/j.compedu.2018.08.001

- Daniel, D. B., & Woody, W. D. (2010). They hear, but do not listen: Retention for podcasted material in a classroom context. Teaching of Psychology, 37(3), 199–203. https://doi.org/10.1080/00986283.2010.488542

- DeFleur, M. L., Davenport, L., Cronin, M., & DeFleur, M. (1992). Audience recall of news stories presented by newspaper, computer, television, and radio. Journalism Quarterly, 70(3), 585–601. https://doi.org/10.1177/107769909206900419

- Delen, E., Liew, J., & Willson, V. (2014). Effects of interactivity and instructional scaffolding on learning: Self-regulation in online video-based environments. Computers & Education, 78, 312–320. https://doi.org/10.1016/j.compedu.2014.06.018

- Erber, T. (2019). Auswirkungen des Vorhandenseins von Schülervorstellungen in Lernvideos auf die Verstehensillusion von Schülern [Effects of the presence of misconceptions on students’ illusion of understanding]. Master’s thesis, University of Bremen, Germany.

- Erdfelder, E., Faul, F., & Buchner, A. (1996). Gpower: A general power analysis program. Behavior Research Methods, Instruments, & Computers, 28(1), 1–11. https://doi.org/10.3758/BF03203630

- Findeisen, S., Horn, S., & Seifried, J. (2019). Lernen durch Videos – empirische Befunde zur Gestaltung von Erklärvideos. MedienPädagogik: Zeitschrift für Theorie und Praxis der Medienbildung, 16–36. https://doi.org/10.21240/mpaed/00/2019.10.01.X

- Furnham, A., Benson, I., & Gunter, B. (1987). Memory for television commercials as a function of the channel of communication. Social Behaviour, 2(2), 105–112.

- Geelan, D. (2012). Teacher explanations. In B. Fraser, K. Tobin, & C. McRobbie (Eds.), Second International handbook of science education (pp. 987–999). Springer.

- Gollwitzer, M., Eid, M., & Schmitt, M. (2017). Statistik und forschungsmethoden [Statistics and research methods]. Beltz Verlagsgruppe.

- Gunter, B., Furnham, A., & Leese, J. (1986). Memory for information from a party political broadcast as a function of the channel of communication. Social Behaviour, 1(2), 135–142.

- Hartsell, T., & Yuen, S. C.-Y. (2006). Video streaming in online learning. AACE Review (Formerly AACE Journal), 14(1), 31–43.

- Hasler, B. S., Kersten, B., & Sweller, J. (2007). Learner control, cognitive load and instructional animation. Applied Cognitive Psychology, 21(6), 713–729. https://doi.org/10.1002/acp.1345

- Hattie, J. (2009). Visible learning. Routledge.

- Hestenes, D., Wells, M., & Swackhamer, G. (1992). Force concept inventory. The Physics Teacher, 30(3), 141–158. https://doi.org/10.1119/1.2343497

- Hoogerheide, V., van Wermeskerken, M., Loyens, S. M. M., & van Gog, T. (2016). Learning from video modeling examples: Content kept equal, adults are more effective models than peers. Learning and Instruction, 44, 22–30. https://doi.org/10.1016/j.learninstruc.2016.02.004

- Kulgemeyer, C. (2018a). A framework of effective science explanation videos informed by criteria for instructional explanations. Research in Science Education, 50(6), 2441–2462. https://doi.org/10.1007/s11165-018-9787-7

- Kulgemeyer, C. (2018b). Towards a framework for effective instructional explanations in science teaching. Studies in Science Education, 54(2), 109–139. https://doi.org/10.1080/03057267.2018.1598054

- Kulgemeyer, C. (2018c). Wie gut erklären Erklärvideos? Ein Bewertungs-Leitfaden [How to rate the explaining quality of explainer videos]. Computer + Unterricht, 109, 8–11.

- Kulgemeyer, C., & Peters, C. (2016). Exploring the explaining quality of physics online explanatory videos. European Journal of Physics, 37(6), 065705–14. https://doi.org/10.1088/0143-0807/37/6/065705

- Kulgemeyer, C., & Schecker, H. (2009). Kommunikationskompetenz in der physik: Zur Entwicklung eines domänenspezifischen Kompetenzbegriffs [communication competence in physics: Developing a domain-specific concept]. Zeitschrift für Didaktik der Naturwissenschaften, 15, 131–153.

- Kulgemeyer, C., & Schecker, H. (2013). Students explaining science—assessment of science communication competence. Research in Science Education, 43(6), 2235–2256. https://doi.org/10.1007/s11165-013-9354-1

- Kulgemeyer, C., & Wittwer, J. (2022). Misconceptions in physics explainer videos and the illusion of understanding: An experimental study. International Journal of Science and Mathematics Education, 1–21. https://doi.org/10.1007/s10763-022-10265-7

- Lee, H. Y., & List, A. (2019). Processing of texts and videos: A strategy-focused analysis. Journal of Computer Assisted Learning, 35(2), 268–282. https://doi.org/10.1111/jcal.12328

- Lenhard, W., & Lenhard, A. (2014). Berechnung des Lesbarkeitsindex LIX nach Björnson [calculating the readability score LIX following Björnson]. Psychometrica. http://www.psychometrica.de/lix.html

- List, A., & Ballenger, E. E. (2019). Comprehension across mediums: The case of text and video. Journal of Computing in Higher Education, 31(3), 514–535. https://doi.org/10.1007/s12528-018-09204-9

- Lloyd, S. A., & Robertson, C. L. (2012). Screencast tutorials enhance student learning of statistics. Teaching of Psychology, 39(1), 67–71. https://doi.org/10.1177/0098628311430640

- Lowe, R. K. (2003). Animation and learning: Selective processing of information in dynamic graphics. Learning and Instruction, 13(2), 157–176. https://doi.org/10.1016/S0959-4752(02)00018-X

- Mayer, R. E. (2001). Multimedia learning. Cambridge University Press.

- Mayer, R. E. (2021). Evidence-based principles for how to design effective instructional videos. Journal of Applied Research in Memory and Cognition, 229. https://doi.org/10.1016/j.jarmac.2021.03.007

- Merkt, M., Weigand, S., Heier, A., & Schwan, S. (2011). Learning with videos vs. learning with print: The role of interactive features. Learning and Instruction, 21(6), 687–704. https://doi.org/10.1016/j.learninstruc.2011.03.004

- Paik, E. S., & Schraw, G. (2013). Learning with animation and illusions of understanding. Journal of Educational Psychology, 105(2), 278–289. https://doi.org/10.1037/a0030281

- Pekdag, B., & Le Marechal, J. F. (2010). Movies in chemistry education. Asia-Pacific Forum on Science Learning and Teaching, 11(1), 1–19.

- Salmerón, L., Sampietro, A., & Delgado, P. (2020). Using internet videos to learn about controversies: Evaluation and integration of multiple and multimodal documents by primary school students. Computers & Education, 148, 103796. https://doi.org/10.1016/j.compedu.2019.103796

- Salomon, G. (1984). Television is "easy" and print is "tough": The differential investment of mental effort in learning as a function of perceptions and attributions. Journal of Educational Psychology, 76(4), 647–658. https://doi.org/10.1037/0022-0663.76.4.647

- Schroeder, N. L., & Traxler, A. L. (2017). Humanizing instructional videos in physics: When less is more. Journal of Science Education and Technology, 26(3), 269–278. https://doi.org/10.1007/s10956-016-9677-6

- Seidel, T., Blomberg, G., & Renkl, A. (2013). Instructional strategies for using video in teacher education. Teaching and Teacher Education, 34, 56–65. https://doi.org/10.1016/j.tate.2013.03.004

- Singer Trakhman, L. M., Alexander, P. A., & Berkowitz, L. E. (2019). Effects of processing time on comprehension and calibration in print and digital mediums. The Journal of Experimental Education, 87(1), 101–115. https://doi.org/10.1080/00220973.2017.1411877

- Wagner, M., & Urhahne, D. (2021). Disentangling the effects of flipped classroom instruction in EFL secondary education: When is it effective and for whom? Learning and Instruction, 75, 101490. https://doi.org/10.1016/j.learninstruc.2021.101490

- Walma van der Molen, J., & van der Voort, T. (2000). Children’s and adults’ recall of television and print news in children’s and adult news formats. Communication Research, 27(2), 132. https://doi.org/10.1177/009365000027002002

- Webb, N. M., Ing, M., Kersting, N., & Nemer, K. M. (2006). Help seeking in cooperative learning groups. In S. A. Karabenick, & R. S. Newman (Eds.), Help seeking in academic settings: Goals, groups, and contexts (pp. 45–88). Lawrence Erlbaum Associates.

- Wiley, J. (2019). Picture this! effects of photographs, diagrams, animations, and sketching on learning and beliefs about learning from a geoscience text. Applied Cognitive Psychology, 33(1), 9–19. https://doi.org/10.1002/acp.3495

- Wittwer, J., & Renkl, A. (2008). Why instructional explanations often do not work: A framework for understanding the effectiveness of instructional explanations. Educational Psychologist, 43(1), 49–64. https://doi.org/10.1080/00461520701756420

- Wolf, K., & Kratzer, V. (2015). Erklärstrukturen in selbsterstellten Erklärvideos von Kindern [explaining structures in pupils’ self-made explanation videos.]. In K. Hugger, A. Tillmann, S. Iske, J. Fromme, P. Grell, & T. Hug (Eds.), Jahrbuch medienpädagogik 12 [yearbook media pedagogy 12] (pp. 29–44). Springer.

- Wolf, K., & Kulgemeyer, C. (2016). Lernen mit Videos? Erklärvideos im Physikunterricht [explainer videos in physics teaching]. Naturwissenschaften im Unterricht Physik, 27(152), 36–41.

- Zinn, B., Tenberg, R., & Pittich, D. (2021). Erklärvideos – im naturwissenschaftlich-technischen Unterricht eine Alternative zu Texten? [explanatory videos as an alternative to text-based learning using the problem-solving methodology SPALTEN as an example?] Journal of Technical Education, 9(2), 168–187. https://doi.org/10.48513/joted.v9i2.217