ABSTRACT

Although we know that asking questions is an essential aspect of online tutoring, there is limited research on this topic. The aim of this paper was to identify commonly used direct question types and explore the effects of using these question types on conversation intensity, approach to tutoring, perceived satisfaction and perceived learning. The research setting was individual online synchronous tutoring in mathematics. The empirical data was based on 13,317 logged conversations and a questionnaire. The tutors used a mix of open, more student-centred questions, and closed, more teacher-centred questions. In contrast to previous research, this study provides a more positive account indicating that it is indeed possible to train tutors to focus on asking questions, rather than delivering content. Frequent use of many of the question types contributed to increased conversation intensity. However, there were few question types that were associated with statistically significant effects on perceived satisfaction or learning. There are no silver bullet question types that by themselves led to positive effects on perceived satisfaction and learning. The question types could be used by teachers and teacher students when reflecting on what types of questions they are asking, and what kind of questions they could be asking.

Introduction

The use of questions has been considered a common method of teaching ever since Plato’s academy (Mills, Rice, Berliner, & Rosseau, 1980). Asking questions is a critical and challenging part of teachers’ work (Boaler & Brodie, 2004) and, together with the providing of answers, is central to the process of reasoning and understanding (Ram, 1991). When explaining to oneself, and to others, constructive cognitive activities take place and such activities seem to promote understanding (Webb, 1989). When students explain to others, such as a teacher, the material needs to be well organised, relevant, and described in an appropriate way. Based on questions asked, the student might need to search for more information, restructure the material and generate new explanations. This kind of successive construction of explanations supports understanding (Webb, 1989). Based on questions asked, the teacher can stimulate student thinking, uncover students’ current level of understanding, and use the responses to inform pedagogic strategies (Jiang, 2014). Teacher questions have been found to be pivotal in facilitating students’ access to content but also in learning the specific language of science (Ernst-Slavit & Pratt, 2017).

Researchers have called for the need to support teachers with further knowledge in order to use questions in effective ways (Araya & Aljovin, 2017; Jiang, 2014). However, most previous research has focused on tutoring groups and encouraging collaborative learning (McPherson & Nunes, 2004, 2009; Salmon, 2000). This paper pays special attention to individual online synchronous tutoring in mathematics. It has been found that individual online synchronous tutoring in mathematics contributed to statistically significant improvements in assessment scores for low-achieving children (Chappell, Arnold, Nunnery, & Grant, 2015; Tsuei, 2017). Tutors need to pose a range of different types of questions in line with learning objectives (Guldberg & Pilkington, 2007). Araya and Aljovin (2017) analysed 23,653 written responses submitted by 984 elementary school students to open-ended questions in science and mathematics. It was found that certain key words in the questions, such as “explain”, had a significant effect on the length of responses and the type of words the students used. Another study reported that tutors argued that questions are important to encourage students to reflect, understand the level of knowledge of the student, understand what mistakes students have made, understand different areas of mathematics and how they are connected, and guide students toward how to address a problem (Hrastinski, Cleveland-Innes, & Stenbom, 2018).

The aim of this paper is to identify commonly used direct question types and explore effects of using these question types on conversation intensity, approach to tutoring, perceived satisfaction and perceived learning in online tutoring. The research setting is the Math coach project, which offers K-12 students help with their homework in mathematics from online tutors. The empirical data is based on a database with 13,317 conversations from the project. More specifically, the paper is guided by the following research questions:

Which types of direct questions are frequently used by tutors in individual online synchronous tutoring in mathematics?

What are the relationships between the identified question types and conversation intensity, approach to tutoring, perceived satisfaction and perceived learning?

Individual tutoring and tutor questions

The tutorial process can be described as “the means whereby an adult or ‘expert’ helps somebody who is less adult or less expert” (Wood, Bruner, & Ross, 1976, p. 89). The construction of knowledge in individual tutoring could result from two types of interactivity in the learning process: a private activity between the student and the learning materials, and a social activity between the student and the tutor (Bates, 1991). Social interaction could promote development by interacting with a tutor who is more skilled in solving the problems emerging from the learning activities (McPherson & Nunes, 2004, 2009; Vygotsky, 1978).

Individual tutoring and its effect was studied by Bloom (1984) and his PhD students (Anania, 1983; Burke, 1983). They compared three conditions of teaching: conventional control class, mastery learning and individual tutoring. The conventional control class was based on students learning the subject matter with about 30 students per teacher. Mastery learning was the same as in the conventional control class, but formative tests were also used, followed by corrective procedures and then repeated tests to determine the extent to which the students had mastered the subject matter. Individual tutoring meant that students learned the subject matter with a tutor for each student (or for two or three students simultaneously). It also included formative tests and corrective procedures, although the need for corrective work during tutoring was very small. The study was replicated with four different samples of students at grades four, five and eight in the subject matters probability and cartography.

The average student under individual tutoring was about two standard deviations above the average of the control class in final achievement measures (Bloom, 1984). That is, the tutored student was above 98% of the students in the control class. Students undergoing mastery learning performed one standard deviation better than the control class, i.e. the mastery learning student was above 84% of the students in the control class. It should be noted that the research conducted by Bloom and his students was carried out more than three decades ago. Teaching methods might have improved over these years. A test cannot measure all the learning that has taken place and the results might have looked different with other methods of teaching and learning (Dron & Anderson, 2014). There are also conflicting findings. For example, in a study of 100 students in grade 4 through 6 that used a computer-supported collaborative environment, it was found that student achievement in large groups without a teacher was equal to the achievement of students individually tutored (Schacter, 2000). That said, the evidence for individual tutoring is still convincing.
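The percentages quoted for Bloom's results follow from the standard normal distribution. The sketch below assumes that achievement scores in the control class are approximately normally distributed, which the percentages imply but the text does not state; the function name is illustrative only.

```python
from statistics import NormalDist


def share_of_control_class_below(sd_above_mean: float) -> float:
    """Share of a normally distributed control class that scores below a
    student sitting `sd_above_mean` standard deviations above its mean.
    Normality of the control-class scores is an assumption."""
    return NormalDist().cdf(sd_above_mean)


tutoring = share_of_control_class_below(2.0)  # individual tutoring
mastery = share_of_control_class_below(1.0)   # mastery learning

print(f"Individual tutoring: above {tutoring:.0%} of the control class")
print(f"Mastery learning:    above {mastery:.0%} of the control class")
```

Under this assumption, two standard deviations correspond to roughly the 98th percentile and one standard deviation to roughly the 84th percentile, in line with the figures above.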

What might be the reasons that students in individual tutoring outperform more conventional models of teaching? One reason is that students might be exposed to better questions because tutors are able to focus on deeper levels of understanding and reasoning (Graesser & Person, 1994). The essential purpose of a question is to elicit a response from the addressee (Chafe, 1970). Questions may, however, be expressed in several ways. Kearsley (1976) distinguished between indirect and direct questions, where indirect questions contain an embedded partial interrogative phrase, such as “It isn’t obvious what you mean” or “Sixty percent of fifteen is … ” (Boaler & Brodie, 2004; Kearsley, 1976). Although these are not “true” questions in a syntactical sense since they lack question marks, they have the purpose of a question, i.e. to elicit a response from the addressee. Direct questions could be subdivided into two major groups: open and closed questions. There are essentially infinite possibilities in answering an open question. The answer to a closed question is from a fixed alternative explicitly or implicitly contained in the question. Teachers primarily use closed questions (Mills et al., 1980). Students become skilled in recalling facts, but have difficulties when open questions are asked, and higher order thinking is required (Dohrn & Dohn, 2018).

Mills et al. (1980) identified five types of empirical studies on the use of questions in teaching. The first type presented background information about questions and classification systems. The second type investigated the relationship between questions and student achievement outcomes. Most studies indicated that students who had been exposed to higher cognitive questioning attained higher achievement scores, although there has been some contrary evidence to this conclusion. The third type investigated teacher use of questions. It has been found that most teacher questions were at low intellectual levels, primarily requiring recall or memory. The fourth type investigated effects of training on increasing teachers’ use of higher cognitive questions. It was found that the level of questioning for trained teachers was significantly higher as compared with untrained teachers. The fifth type analysed the relations between teacher questions and student answers. The quality of these studies was questioned, although it was found that students of trained teachers gave significantly higher cognitive answers than students of untrained teachers.

Although most research seems to assume that questions are essential in teaching, there are also more critical findings. In a literature review, it was found that tutors, even when trained, focus more on delivering rather than developing knowledge (Roscoe & Chi, 2007). Mills et al. (1980) investigated the degree of correspondence between the cognitive level of teachers’ questions and the cognitive level of students’ answers. They found that the chances were about even that there would be a correspondence. Questions could lead students’ thinking, which might prevent students from spontaneously developing their ideas. This is especially the case in questions which the teacher knows the answer to (Flammer, 1981). Similarly, in a study of Mathematics lecturers, it was found that lecturers asked many questions, but did not encourage students to generate mathematical contributions and reasoning (Paoletti et al., 2018).

Individual tutoring is too costly to be conducted on a large scale in most societies. In more recent research, complementary alternatives have been suggested (Bloom, 1984). One approach, like that of this paper, is to use a computer-assisted tutoring approach. Madden and Slavin (2017) used a system where a tutor could work with up to six children at a time. They found that reading outcomes were strongly favoured when struggling readers in Grades 1–3 received tutoring. Another suggestion has been to develop adaptive practice systems that function as individual tutors. The online learning environment Math Garden

exploits the estimated difficulties of the problems, and makes sure each student receives little to no problems that are either too easy or too hard, and thus by balancing on the boundary of what a student can do without instruction. (Savi, Ruijs, Maris, & van der Maas, 2018, p. 85)

In summary, individual tutoring has been shown to be an effective teaching method in face-to-face settings (Bloom, 1984), and there is at least some evidence that it can be effective in online settings (Chappell et al., 2015; Tsuei, 2017). There is convincing evidence that asking questions is a critical part of teachers’ work (Mills et al., 1980; Ram, 1991; Webb, 1989), although there is more limited research into online settings (Araya & Aljovin, 2017; Guldberg & Pilkington, 2007). Conducting individual tutoring in an online setting might be one way to address the fact that it is considered too costly to be conducted on a large scale (Bloom, 1984; Madden & Slavin, 2017). It has been suggested that one reason that individual tutoring is of high quality is that students might be exposed to better questions (Graesser & Person, 1994). This paper contributes to a deeper understanding of individual tutoring in online settings, with a special focus on the process of asking questions in individual online synchronous tutoring in Mathematics.

Method

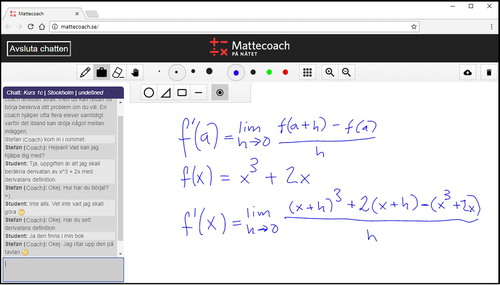

Math coach (mattecoach.se) was started in 2009 and offers K-12 students help with their homework in Mathematics from online tutors. The tutors are teacher students and work evenings Monday to Thursday. All tutors attend a 2 ECTS credit course in individual online Mathematics tutoring when entering Math coach. Tutor training has been reported to be associated with expected online tutoring strategies (De Smet, Van Keer, De Wever, & Valcke, 2010). The tutors have access to Swedish K-12 mathematics books and digital material. They use software developed for Math coach that includes text-based chat and the possibility of drawing on a digital whiteboard with a digital pen. The combination of chat and whiteboard has been used in similar research (Chappell et al., 2015). One advantage is that the tutor can work with several students simultaneously (Madden & Slavin, 2017). The student can also take the time to work independently on a problem and continue the conversation later. A screenshot of the software is displayed in Figure 1. The Math coach project meets several research-based recommendations for online Mathematics instruction, such as office hours, tailored advising, frequent communication and tutor professional development (Coleman, Skidmore, & Martirosyan, 2017), but is a complement to conventional K-12 education.

All conversation content and metadata are stored in databases. Math coach has, since its start in 2009, used three generations of software. The data for this current study is based on the third-generation software. The reason for not using all conversations is that the most recent software makes data analysis considerably simpler. The dataset for the present study consists of the 13,317 conversations that were carried out between December 2015 and December 2017. The student and parent provide consent that the data that is collected as part of the Math coach project is treated confidentially and is used for research purposes. Since the data include all conversations during a two-year period, it gives a representative view of the conversations of the Math coach project. The conversations were performed by 79 tutors located at three different universities. The median conversation length was 40 min, and the conversations covered all educational levels from compulsory school to upper secondary school. Many of the conversations concern the basic courses at an upper secondary level.

In addressing the aim and research questions, key variables were question types, conversation intensity, approach to tutoring, perceived satisfaction and perceived learning. The question types and conversation intensity were automatically retrieved from log-data based on semantic similarity (i.e. identical or very similar wording of questions), while the other variables were studied through an online questionnaire that appeared after each conversation.

Log data

Question types

In order to address the first research question, i.e. which types of direct questions are frequently used by tutors, frequently used question types were retrieved from the log data. An alternative approach would have been to use a content analysis framework. There are a number of content analysis schemes that could have been used, such as a scheme on teacher questions during group discussion in second-grade arithmetic (Hiebert & Wearne, 1993), a scheme on teacher questions based on a longitudinal study of Mathematics teaching across three schools (Boaler & Brodie, 2004), a scheme that was used to investigate tutor and student questions in research methods and algebra (Graesser & Person, 1994; Guldberg & Pilkington, 2007) and a scheme that was used to analyse one-to-one online Mathematics tutoring (Stenbom, Jansson, & Hulkko, 2016). However, all these schemes are dependent on manual content analysis. We used automatic analysis in order to be able to analyse a very large data set. A downside of this automated approach is that we could not manually analyse each conversation regarding which question types were being asked. It is likely that the automated identification of question types does not fully capture the purpose behind the questions that the tutors were asking. That said, in the Results section we provide examples of how the question types have been used in different ways.

The data was based on the 12,615 conversations carried out between December 2015 and April 2017. We retrieved two datasets with questions. The first dataset included 92,793 direct questions. They were identified through automated data analysis, in which all chat lines that included a question mark were retrieved from the database. As noted above, Kearsley (1976) distinguished between an indirect question that does not include a question mark and a direct question that includes a question mark. Thus, it was only possible to include direct questions in our study. The conversations were in Swedish and the questions were translated into English after the analysis was conducted. There is a risk that the meaning of some question types might change slightly because of the translation. Commonly used questions were “What can I help you with?”, “What do you mean?” and “Are you with me?” The second dataset comprised the first five words from all utterances that included a question mark and five or more words. This dataset included 76,175 utterances. The reason for also including this second dataset was that many questions share the initial wording but add details in the latter part of the question. Commonly used initial wordings of questions were: “Do you have an idea … ”, “How did you come up … ” and “How do you think that … ”.
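The two retrieval steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's actual pipeline: the function names and the sample chat lines are hypothetical, and splitting on whitespace is only one plausible way of counting words.

```python
from collections import Counter


def direct_questions(chat_lines):
    """First dataset: every chat line that contains a question mark."""
    return [line.strip() for line in chat_lines if "?" in line]


def five_word_prefixes(questions):
    """Second dataset: the first five words of each question that has
    five or more words (words are split on whitespace, an assumption)."""
    prefixes = []
    for question in questions:
        words = question.split()
        if len(words) >= 5:
            prefixes.append(" ".join(words[:5]))
    return prefixes


# Hypothetical tutor chat lines, using translated examples from the paper.
sample_lines = [
    "What can I help you with?",
    "Then we put in x = 2",
    "Do you have an idea how we could start?",
    "What do you mean?",
    "Do you have an idea there on task 38?:)",
]

questions = direct_questions(sample_lines)
prefixes = five_word_prefixes(questions)
prefix_counts = Counter(prefixes)
print(prefix_counts.most_common(1))
```

Counting the prefixes groups questions that share their opening words even when the remainder differs, which is the motivation given for the second dataset.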

Then, we created a dataset that included all questions that had been used at least 50 times, providing us with a list with 82 questions. Many of these questions were similar. Therefore, we created types of questions that used a similar wording. For example, there were 14 different formulations of the question type “*What can I help you*”, such as “What can I help you with?:)” and “Hi:) What can I help you with?”. The character * before the question type denotes that there might be other characters preceding the question type, while using the character * after the question type denotes that there might be other characters following the question type. After combining similar questions into types, there were 23 question types, which are presented in the Results section.
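The frequency threshold and the wildcard notation can be illustrated with the standard-library `fnmatch` module, whose `*` has the same "any characters" meaning as in the question types. The sketch below is an assumption-laden illustration: the helper names and sample conversations are hypothetical, and case-insensitive matching is assumed because one reported example of “*Do you have an idea*” begins with “Okay, do you have an idea”.

```python
from collections import Counter
from fnmatch import fnmatchcase


def frequent_questions(question_counts, min_count=50):
    """Questions used at least `min_count` times (50 in the paper)."""
    return {q: n for q, n in question_counts.items() if n >= min_count}


def matches_type(chat_line, question_type):
    """True if the line fits the wildcard pattern, ignoring case (assumed)."""
    return fnmatchcase(chat_line.strip().lower(), question_type.lower())


def conversations_containing(conversations, question_type):
    """Number of conversations in which the question type occurs at least
    once; repeated use within one conversation is counted once."""
    return sum(
        any(matches_type(line, question_type) for line in conversation)
        for conversation in conversations
    )


# Hypothetical conversations, each a list of tutor chat lines.
conversations = [
    ["Hi:) What can I help you with?", "Why?", "Why is that?"],
    ["What can I help you with?:)", "What is 0.4/0.2?"],
    ["Okay, do you have an idea how we could start?"],
]

for question_type in ["*What can I help you*", "Why*", "*Do you have an idea*"]:
    print(question_type, conversations_containing(conversations, question_type))

counts = Counter({"What do you mean?": 120, "Are you sure?": 12})
print(frequent_questions(counts))
```

Note that a pattern without a leading `*`, such as “Why*”, only matches lines that start with the wording, whereas a leading `*` also admits greetings or other text in front of it.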

In the next step we analysed to what extent the identified question types occurred in the conversations. In addition to the actual conversation text, metadata was collected for all conversations. The log and metadata analysis used in this step was based on 13,317 conversations that had been conducted between December 2015 and December 2017. The reason that this dataset is larger is that we also included the time period May 2017 to December 2017 that had elapsed while we worked on analysing the first data set.

Conversation intensity

Inspired by previous research on conversation intensity when using mobile phones, we developed such a measure for text-based online tutoring (Irwin, Fitzgerald, & Berg, 2000). For each conversation, we collected data on the length of the conversation. This was measured as the number of characters and the duration of the conversation measured in seconds. Based on this, conversation intensity was calculated as the ratio between the number of characters and duration. We believe that this is an important metric since it identifies conversations where the tutor and tutee engage in intense discussion, by writing many messages to each other. The t-test was used to test for statistical significance between each question type and conversation intensity.
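The measure can be written as intensity = characters / seconds. The sketch below computes it and a t statistic for comparing two groups of conversations. The class and function names are hypothetical, the sample numbers are invented for illustration, and Welch's unequal-variance form of the t statistic is an assumption, since the text does not state which variant of the t-test was applied.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import mean, variance


@dataclass
class Conversation:
    n_characters: int        # total characters written in the chat
    duration_seconds: float  # length of the conversation in seconds


def conversation_intensity(conversation: Conversation) -> float:
    """Characters written per second of conversation."""
    if conversation.duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return conversation.n_characters / conversation.duration_seconds


def welch_t(group_a, group_b) -> float:
    """Welch's t statistic for two independent samples (assumed variant)."""
    standard_error = sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_a) - mean(group_b)) / standard_error


# Invented example: 3,600 characters over 40 minutes (2,400 seconds).
example = Conversation(n_characters=3600, duration_seconds=2400)
print(conversation_intensity(example))

# Invented intensities for conversations with and without a question type.
with_type = [2.0, 4.0, 6.0]
without_type = [1.0, 2.0, 3.0]
print(round(welch_t(with_type, without_type), 3))
```

A positive t statistic here would indicate higher average intensity in conversations that contain the question type; a p-value would additionally require the t distribution, which is omitted to keep the sketch dependency-free.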

Questionnaire

A brief questionnaire with questions on approaches to tutoring, perceived satisfaction, and perceived learning automatically appeared after each conversation, to be completed by the student and tutor. The questionnaire was available between September and December 2017. Previous research has found that short questionnaires have a higher response rate (Deutskens, De Ruyter, Wetzels, & Oosterveld, 2004). Although it might be argued that constructs should preferably be detailed, comprising a set of items, this leads to long questionnaires. In online learning literature, it has generally been accepted to use one item to, for example, measure perceived learning (Richardson & Swan, 2003). In total, 392 questionnaires (13% response rate) were completed. Thus, unfortunately the response rate was low, despite the fact that the questionnaire was brief. The effects of different types of tutor questions were measured using nonparametric Mann-Whitney U tests.
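For readers unfamiliar with the test, the following sketch shows how a Mann-Whitney U statistic and an effect size r could be computed for questionnaire ratings from conversations with and without a given question type. It is a textbook formulation, not the analysis code used in the study: the function names and sample ratings are hypothetical, the normal approximation with tie correction is assumed, and r = |Z| / sqrt(N) is a common convention that the paper does not explicitly confirm.

```python
from collections import Counter
from math import sqrt


def average_ranks(values):
    """Map each distinct value to its average rank (ties share a rank)."""
    ordered = sorted(values)
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and ordered[j] == ordered[i]:
            j += 1
        # 0-based positions i..j-1 hold ranks i+1..j; their mean is (i+1+j)/2
        ranks[ordered[i]] = (i + 1 + j) / 2
        i = j
    return ranks


def mann_whitney(with_type, without_type):
    """Return (U, Z, r) using the normal approximation with tie correction."""
    n1, n2 = len(with_type), len(without_type)
    n = n1 + n2
    pooled = list(with_type) + list(without_type)
    ranks = average_ranks(pooled)
    rank_sum_1 = sum(ranks[value] for value in with_type)
    u1 = rank_sum_1 - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    tie_term = sum(t**3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        raise ValueError("all ratings are identical; the test is undefined")
    z = (u - n1 * n2 / 2) / sqrt(var_u)
    r = abs(z) / sqrt(n)
    return u, z, r


# Invented 7-point Likert ratings for illustration only.
u, z, r = mann_whitney([1, 2, 3], [4, 5, 6])
print(u, round(z, 2), round(r, 2))
```

Because Likert ratings produce many tied values, the tie correction in the variance matters more here than it would for continuous data.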

Approach to tutoring

Two questions were included with the aim of assessing to what extent the student perceived that the tutor taught according to the acquisition metaphor or the participation metaphor of learning. Both metaphors might be necessary in processes of teaching and learning (Sfard, 1998), although, as noted above, using questions is essential in the tutoring process. Previous research has found that tutors tend to focus on knowledge-telling, i.e. the acquisition metaphor (Roscoe & Chi, 2007). The students were asked whether the tutor told/lectured/explained how to solve the mathematical problem (Lecture approach), and whether the tutor asked questions to encourage the student to think about how to solve the problem (Question approach). The questions were on a 7-point Likert scale ranging from “Strongly disagree” to “Strongly agree”.

Perceived satisfaction

One question on perceived satisfaction was included, based on the net-promoter score (Reichheld, 2003). It is an established measure from the customer relationship field. On an 11-point Likert scale ranging from “Not at all likely” (0) to “Extremely likely” (10), the students were asked whether they would recommend Math coach to a friend. According to Reichheld, a score of 0–6 means that respondents are “detractors” and unlikely to recommend a service, a score of 7–8 is interpreted as “passively satisfied” and a score of 9–10 means that respondents are “promoters” and very likely to recommend a service.
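The classification can be expressed directly in code. The sketch below uses the cut-offs given above; the function names and the sample ratings are hypothetical. The overall score, computed as the percentage of promoters minus the percentage of detractors, follows the general definition of the net-promoter score and is not a figure reported in this paper.

```python
from collections import Counter


def nps_category(score: int) -> str:
    """Classify a 0-10 recommendation rating using Reichheld's cut-offs."""
    if not 0 <= score <= 10:
        raise ValueError("score must be between 0 and 10")
    if score <= 6:
        return "detractor"
    if score <= 8:
        return "passively satisfied"
    return "promoter"


def net_promoter_score(scores) -> float:
    """Percentage of promoters minus percentage of detractors."""
    categories = Counter(nps_category(score) for score in scores)
    return 100 * (categories["promoter"] - categories["detractor"]) / len(scores)


# Invented ratings for illustration only.
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 10, 9]
print(Counter(nps_category(score) for score in ratings))
print(net_promoter_score(ratings))
```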

Perceived learning

One question on perceived learning was included. The students were asked to assess how much they learned during the conversation on an 11-point Likert scale ranging from “Nothing” to “Very much”. This question was inspired by the net-promoter score, while still being comparable to established measures of perceived learning in the online learning literature. For example, Richardson and Swan (2003) asked students to what extent they agreed that the learning that took place in a course was of the highest quality. It can be noted that we have used both 7-point and 11-point Likert scales. It is common practice to use 7-point (or 5-point) Likert scales (Matell & Jacoby, 1971), but for questions based on the net-promoter score, it is recommended to use an 11-point Likert scale (Reichheld, 2003).

Results

Types of tutor questions

Table 1 presents in how many of the conversations each question type occurred. In some conversations, a question type occurred several times, which is not reflected in the table. Two of the question types, “*What can I help you*” and “*How can I help you*”, are common ways to initiate a conversation. “Why*” was a frequently used question type. This question type was followed by three types of What questions (“What is*”, “What do we get*” and “What do you mean*”) and one type of How question (“How do you think*”). It can also be noted that some question types were rarely used, such as “Is there anything else*”, “Do you want help*” and “Have you heard about*”, which were used in less than 1% of the conversations.

Table 1. The number and percentage of conversations that included each question type.

Exploring effects of different types of tutor questions

For each variable, calculations were made to test whether the question type had a statistically significant impact. Thus, each question type was used as an independent variable while conversation intensity, approach to tutoring, perceived satisfaction and perceived learning were used as dependent variables. The effects of different types of tutor questions were measured using either t-tests or nonparametric Mann-Whitney tests. It should be noted that most identified statistically significant relationships were weak. Every question type was tested against every dependent variable. However, for simplicity, we present below only the question types that achieved statistical significance; no other question type did. It should be noted that the question types occurred to varying extents in the conversations that were complemented by a questionnaire. The question type “Have you heard about*” did not occur, while the most common question type occurred 68 times (“*What can I help you*”).

Conversation intensity

Statistical significance was tested using t-tests between the question types and conversation intensity. Thirteen of the question types (57%) achieved statistical significance (see Appendix). Thus, many of the question types contributed towards increased conversation intensity.

Approach to tutoring

A Wilcoxon Signed-Ranks test (Z = −5.80, p < 0.01) indicated that the tutors were more likely to ask questions (M = 6.2, SD = 1.7, N = 363) as compared with lecturing (M = 5.7, SD = 2.0, N = 371). In conversations where the question type “*How can I help you*” was present, students felt that the tutors were more likely to ask questions (U = 6994, p = 0.02, r = 0.13). This question type is typically used in the beginning of a conversation. The following are examples: “How can I help you?:)”, “Hi and welcome! How can I help you?” and “Hello:) How can I help you?” Another statistical significance was found with the question type “*Do you have an idea*” (U = 3050, p = 0.03, r = 0.11). The following are three examples of how this question type has been used: “Do you have an idea how we should find a side now?:)”, “Do you have an idea there on task 38?:)” and “Okay, do you have an idea how we could start?” There was also a statistical significance with the question type “What is*” (U = 4612, p < 0.01, r = 0.16). The following are three examples of how this question type has been used: “What is x when y is 2?”, “What is 0.4/0.2?” and “What is that?:)”. It can be noted that two of the types of questions that achieved statistical significance are quite different. The question type “*Do you have an idea*” is typically an open question, while the question type “What is*” is typically a closed question.

There was a statistical significance between students that felt that tutors lectured and the question type “Right*” (U = 1975, p < 0.01, r = 0.16). This question type is typically used to follow up what was written on previous chat lines. The following are examples: “Then we put in x = 2 and get y = 2*2 + 1 = 5 // Right?”, “Ok, so the volume for cylinder then gets pi r^2 *2r // Right?” and “It gets quite complicated // Right?:)”. There was also a statistical significance for the question type “Do you recognise*” (U = 1396, p = 0.02, r = 0.12). This question type is typically used to follow up what was written on the whiteboard or in the chat. The following are examples: “Do you understand what I have written [on the whiteboard]?:) // Do you recognise it?”, “Do you recognise that formulae s = v*t?:)” and “First there is a logarithm rule so we can write 2lg(x) to lg(x^2) // Do you recognise that?”

Perceived satisfaction

The students were very positive when asked how likely on a Likert scale from 0 to 10 it was that they would recommend Math coach to a friend. According to the net-promoter score, 72% of the responses were classified as “promoters” (rated 9–10), 11% “passively satisfied” (rated 7–8), and 17% “detractors” (rated 0–6). There was a statistical significance between perceived satisfaction and the question type “*How far have you*” (U = 1634, p = 0.01, r = 0.14). The following are examples: “How far have you come with the task?:)”, “Aa, how far have you come on your own?” and “How far have you understood? What’s the matter, or do you want to go through an example?”

Perceived learning

The students were positive on a Likert scale from 0 to 10 when asked to what extent they learnt during the conversation (M = 7.3, SD = 3.4, N = 397). There was a statistical significance with the tutor question type “Right*” (U = 2607, p = 0.04, r = 0.15). Examples of this question type were provided above. There was also a statistical significance for the tutor question type “How will it*” (U = 2058, p = 0.02, r = 0.11). The following are three examples of how this question type has been used: “How will it be then? // Can you draw?”, “Then you see there are 4x in both? // How will it be then?” and “There is a formula that is called ‘double angle’ // Have you encountered that one? // How will it be if use that one to rewrite the expression?”

Discussion

The first research question investigated which types of direct questions were frequently used by tutors in individual online synchronous tutoring in mathematics. Apart from the two question types “*What can I help you*” and “*How can I help you*”, which are commonly used to initiate a conversation, several frequently used question types were identified. “Why*” was a very frequently used question type. Other common question types included three types of What questions (“What is*”, “What do we get*” and “What do you mean*”) and one type of How question (“How do you think*”). Why and How questions are typically more open, student-centred questions, while What questions are typically more closed, teacher-centred questions (Kearsley, 1976).

Mills et al. (1980) reviewed studies investigating teacher use of questions and found that most teacher questions were at low intellectual levels, primarily closed questions requiring recall or memory. However, the tutors of the Math coach project seem to use a mix of open, more student-centred questions, and closed, more teacher-centred questions. One reason might be that training has been reported to increase teachers’ use of higher cognitive questions (Mills et al., 1980). Researchers have called for the need to support teachers with further knowledge in order to use questions in effective ways (Araya & Aljovin, 2017; Jiang, 2014). The tutors of the Math coach project are teacher students who also attend a 2 ECTS credit course in individual online Mathematics tutoring when entering the Math coach project. One essential part of the course is to encourage and analyse the use of questions in the tutoring process. Thus, this paper provides an example of how teachers could be prepared for online tutoring in general, and more specifically, on how questions can be used in the tutoring process.

The second research question explored the relationships between the identified question types and conversation intensity, approach to tutoring, perceived satisfaction and perceived learning. As noted previously, asking questions is a critical and challenging part of teachers’ work (Boaler & Brodie, 2004; Ram, 1991; Webb, 1989). Our findings suggest that frequent use of questions is an effective technique for encouraging discussion. In fact, many of the question types contributed to conversation intensity, measured as the ratio between the number of characters and the length of the conversation in seconds. These findings are complemented by a study that found that certain key words in the questions, such as “explain”, had a significant effect on the length of students’ responses and the type of words the students used (Araya & Aljovin, 2017).
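The intensity measure described above can be sketched as follows. This is a minimal illustration only: the `Message` structure and the choice to take the conversation length as the time between the first and last message are assumptions, not details reported in this paper.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Message:
    sent_at: datetime  # timestamp of the chat message (hypothetical field)
    text: str          # message content (hypothetical field)


def conversation_intensity(messages):
    """Characters written per second over the span of one conversation."""
    if len(messages) < 2:
        return 0.0
    start = min(m.sent_at for m in messages)
    end = max(m.sent_at for m in messages)
    duration = (end - start).total_seconds()
    if duration <= 0:
        return 0.0
    total_chars = sum(len(m.text) for m in messages)
    return total_chars / duration
```

For example, a conversation with 1,200 characters in total that lasts 600 seconds would have an intensity of 2 characters per second.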

The students perceived that the tutors were more likely to ask questions than to lecture. This practice is encouraged in the Math coach project and its introductory course, which underlines the potential of professional development to improve tutoring practice. Interestingly, a literature review found that tutors, even when trained, focused more on delivering than on developing knowledge (Roscoe & Chi, 2007). Thus, this study provides a more positive account indicating that it is indeed possible to train tutors to focus on asking questions, rather than delivering content.

Very few question types were significantly related to perceived satisfaction and perceived learning. The only question type that was significantly related to perceived satisfaction was “*How far have you*”. One interpretation is that students appreciated it when the tutor tried to understand how far they had come in the problem-solving process. This is an important aspect of individual online tutoring, especially since the tutor often does not know the student. The response from the student can be used to understand the zone of proximal development of the student (Vygotsky, 1978) and to inform tutoring strategies (Hrastinski et al., 2018; Jiang, 2014). It might therefore be assumed that students value tutors’ efforts to understand the specific challenges they face.

Mills et al. (1980) reported that most reviewed studies indicated that students who had been exposed to higher cognitive questioning attained higher achievement scores, although there has been some evidence contradicting this conclusion. Our study identified only two question types that were significantly related to perceived learning. These were a closed teacher-centred question type (“Right*”) and a more open student-centred question type (“How will it*”). It could be assumed that these question types are useful. For example, if a tutor engages in direct instruction, it would be beneficial to use confirmatory question types, such as “Right*”, to check whether the student is following. From a learning perspective, it also seems useful to ask “How will it*” and let the student show and explain how they address a problem. These findings can be contrasted with a recent study that suggested that individual tutoring contributed to significant gains in student assessment (Chappell et al., 2015). One difference was that, in that study, a diagnostic assessment was administered before tutoring began in order to develop individualised learning objectives for each student. This might be challenging in more ad-hoc online tutoring projects, such as the one described in this paper, but there might be other ways that tutoring could be individualised, for example, by drawing on previous conversations with the student.

Further research and implications

The main contribution of this paper has been to identify question types and to start exploring the effects of using these questions. Some significant relationships were identified, although most of them were weak, which reflects the explorative character of the research. This paper could serve as a starting point for exploring the role of tutor questions in individual online synchronous tutoring in mathematics, and there are many opportunities for future research. It is important to continue investigating the relationship between using different question types and key variables of online learning in order to improve our understanding of the use of questions in online tutoring. Qualitative approaches need to complement the big data approach of this paper to provide a more detailed understanding. While the log data included data from a two-year period, the complementary questionnaire was conducted within a shorter time frame between September and December 2017, which resulted in 392 responses. We suggest that further research be based not only on big data, but also on ambitious complementary data collection to help explain findings from big data sets.

The findings suggest that frequent use of questions can encourage conversation intensity. This conclusion is complemented by another study using big data, which found that the use of certain words in questions had a significant effect on the length of students’ responses and the words the students used (Araya & Aljovin, 2017). Jointly, these studies indicate a further need to investigate how certain types of questions, and the wording of questions, affect how students respond. It has been suggested that annotation software could be used to mark up human-to-human online teaching interactions with successful teaching interaction signifiers (Cukurova, Mavrikis, Luckin, Clark, & Crawford, 2017). It would be interesting to explore whether tutors, and possibly students, could be asked to mark what they perceive to be questions of high quality during the tutoring process. In projects primarily based on text-based chat, such as the one reported here, it could be straightforward to introduce such annotation possibilities. In face-to-face and video conferencing sessions this might be more challenging, although current research is developing methods for “automatic detection of teacher questions from audio recordings collected in live classrooms with the goal of providing automated feedback to teachers” (Donnelly et al., 2017, p. 218).

We also believe that the question types can be a useful foundation in the design of intelligent tutoring systems. There are different ways that the identified question types could be used in practice. For example, the question types could be used by teachers and teacher students when reflecting on what types of questions they are asking, and what kind of questions they could be asking. The art of facilitating meaningful mathematics discussion is a complex practice that teachers need to learn and continuously improve (Crespo, 2018). Crespo suggests an approach where representations of what high-quality mathematical discussions might look like are discussed among teachers and teacher students, and then further refined. High-quality teacher questions are an essential aspect of such mathematical representations. Further research could explore how different types of questions could be used during different phases of a conversation, e.g. the introduction, problem solving and synthesis phases.

In conclusion, this paper indicates that it is indeed possible to train tutors to focus on asking questions, rather than delivering content. However, there are no question types that by themselves will lead to positive effects on learning. The question types could be used by teachers and teacher students when reflecting on what types of questions they are asking, and what kind of questions they could be asking.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Anania, J. (1983). The influence of instructional conditions on student learning and achievement. Evaluation in Education, 7(1), 1–92. doi: 10.1016/0191-765X(83)90002-2

- Araya, R., & Aljovin, E. (2017). The effect of teacher questions on elementary school students’ written responses on an online STEM platform. Paper presented at the international conference on applied human factors and ergonomics.

- Arroyo, I., Wixon, N., Allessio, D., Woolf, B., Muldner, K., & Burleson, W. (2017). Collaboration improves student interest in online tutoring. Paper presented at the international conference on artificial intelligence in education.

- Bates, A. (1991). Third generation distance education: the challenge of new technology. Research in Distance Education, 3, 10–15.

- Bloom, B. S. (1984). The 2 sigma problem: The search for methods of group instruction as effective as one-to-one tutoring. Educational Researcher, 13(6), 4–16. doi: 10.3102/0013189X013006004

- Boaler, J., & Brodie, K. (2004). The importance, nature, and impact of teacher questions. Paper presented at the proceedings of the twenty-sixth annual meeting of the North American chapter of the international group for the psychology of mathematics education.

- Burke, A. J. (1983). Students’ potential for learning contrasted under tutorial and group approaches to instruction (Doctoral Dissertation). University of Chicago.

- Chafe, W. L. (1970). Meaning and the structure of language. Chicago, IL: The University of Chicago Press.

- Chappell, S., Arnold, P., Nunnery, J., & Grant, M. (2015). An examination of an online tutoring program’s impact on low-achieving middle school students’ mathematics achievement. Online Learning, 19(5), 37–53. doi: 10.24059/olj.v19i5.694

- Coleman, S. L., Skidmore, S. T., & Martirosyan, N. M. (2017). A review of the literature on online developmental mathematics: Research-based recommendations for practice. The Community College Enterprise, 23(2), 9–26.

- Crespo, S. (2018). Generating, appraising, and revising representations of mathematics teaching with prospective teachers. In Scripting approaches in mathematics education (pp. 249–264). Cham: Springer.

- Cukurova, M., Mavrikis, M., Luckin, R., Clark, J., & Crawford, C. (2017). Interaction analysis in online maths human tutoring: The case of third space learning. Paper presented at the international conference on artificial intelligence in education.

- De Smet, M., Van Keer, H., De Wever, B., & Valcke, M. (2010). Cross-age peer tutors in asynchronous discussion groups: Exploring the impact of three types of tutor training on patterns in tutor support and on tutor characteristics. Computers & Education, 54(4), 1167–1181. doi: 10.1016/j.compedu.2009.11.002

- Deutskens, E., De Ruyter, K., Wetzels, M., & Oosterveld, P. (2004). Response rate and response quality of internet-based surveys: An experimental study. Marketing Letters, 15(1), 21–36. doi: 10.1023/B:MARK.0000021968.86465.00

- Dohrn, S. W., & Dohn, N. B. (2018). The role of teacher questions in the chemistry classroom. Chemistry Education Research and Practice, 19(1), 352–363. doi: 10.1039/C7RP00196G

- Donnelly, P. J., Blanchard, N., Olney, A. M., Kelly, S., Nystrand, M., & D’Mello, S. K. (2017). Words matter: Automatic detection of teacher questions in live classroom discourse using linguistics, acoustics, and context. Paper presented at the proceedings of the seventh international learning analytics & knowledge conference.

- Dron, J., & Anderson, T. (2014). Teaching crowds: Learning and social media. Edmonton: Athabasca University Press.

- Ernst-Slavit, G., & Pratt, K. L. (2017). Teacher questions: Learning the discourse of science in a linguistically diverse elementary classroom. Linguistics and Education, 40, 1–10. doi: 10.1016/j.linged.2017.05.005

- Flammer, A. (1981). Towards a theory of question asking. Psychological Research, 43(4), 407–420. doi: 10.1007/BF00309225

- Graesser, A. C., & Person, N. K. (1994). Question asking during tutoring. American Educational Research Journal, 31(1), 104–137. doi: 10.3102/00028312031001104

- Guldberg, K., & Pilkington, R. (2007). Tutor roles in facilitating reflection on practice through online discussion. Journal of Educational Technology & Society, 10, 61–72.

- Hiebert, J., & Wearne, D. (1993). Instructional tasks, classroom discourse, and students’ learning in second-grade arithmetic. American Educational Research Journal, 30(2), 393–425. doi: 10.3102/00028312030002393

- Hrastinski, S., Cleveland-Innes, M., & Stenbom, S. (2018). Tutoring online tutors: Using digital badges to encourage the development of online tutoring skills. British Journal of Educational Technology, 49(1), 127–136. doi: 10.1111/bjet.12525

- Irwin, M., Fitzgerald, C., & Berg, W. P. (2000). Effect of the intensity of wireless telephone conversations on reaction time in a braking response. Perceptual and Motor Skills, 90(3_suppl), 1130–1134. doi: 10.2466/pms.2000.90.3c.1130

- Jiang, Y. (2014). Exploring teacher questioning as a formative assessment strategy. RELC Journal, 45(3), 287–304. doi: 10.1177/0033688214546962

- Kearsley, G. P. (1976). Questions and question asking in verbal discourse: A cross-disciplinary review. Journal of Psycholinguistic Research, 5(4), 355–375. doi: 10.1007/BF01079934

- Madden, N. A., & Slavin, R. E. (2017). Evaluations of technology-assisted small-group tutoring for struggling readers. Reading & Writing Quarterly, 33(4), 327–334. doi: 10.1080/10573569.2016.1255577

- Matell, M. S., & Jacoby, J. (1971). Is there an optimal number of alternatives for Likert scale items? Study I: Reliability and validity. Educational and Psychological Measurement, 31(3), 657–674. doi: 10.1177/001316447103100307

- McPherson, M., & Nunes, M. B. (2004). The role of tutors as an integral part of online learning support. European Journal of Open, Distance and E-Learning, 7(1).

- McPherson, M., & Nunes, M. B. (2009). The role of tutors as a fundamental component of online learning support. Distance and e-Learning in Transition, 235–246.

- Mills, S. R., Rice, C. T., Berliner, D. C., & Rosseau, E. W. (1980). The correspondence between teacher questions and student answers in classroom discourse. The Journal of Experimental Education, 48(3), 194–204. doi: 10.1080/00220973.1980.11011735

- Paoletti, T., Krupnik, V., Papadopoulos, D., Olsen, J., Fukawa-Connelly, T., & Weber, K. (2018). Teacher questioning and invitations to participate in advanced mathematics lectures. Educational Studies in Mathematics, 98(1), 1–17. doi: 10.1007/s10649-018-9807-6

- Ram, A. (1991). A theory of questions and question asking. Journal of the Learning Sciences, 1(3-4), 273–318. doi: 10.1080/10508406.1991.9671973

- Reichheld, F. F. (2003). The one number you need to grow. Harvard Business Review, 81(12), 46–55.

- Richardson, J. C., & Swan, K. (2003). Examining social presence in online courses in relation to students’ perceived learning and satisfaction. Journal of Asynchronous Learning Networks, 7, 68–88.

- Roscoe, R. D., & Chi, M. T. (2007). Understanding tutor learning: Knowledge-building and knowledge-telling in peer tutors’ explanations and questions. Review of Educational Research, 77(4), 534–574. doi: 10.3102/0034654307309920

- Salmon, G. (2000). E-moderating: The key to teaching and learning online. London: Kogan Page.

- Savi, A. O., Ruijs, N. M., Maris, G. K., & van der Maas, H. L. (2018). Delaying access to a problem-skipping option increases effortful practice: Application of an A/B test in large-scale online learning. Computers & Education, 119, 84–94. doi: 10.1016/j.compedu.2017.12.008

- Schacter, J. (2000). Does individual tutoring produce optimal learning? American Educational Research Journal, 37(3), 801–829. doi: 10.3102/00028312037003801

- Sfard, A. (1998). On two metaphors for learning and the dangers of choosing just one. Educational Researcher, 27(2), 4–13. doi: 10.3102/0013189X027002004

- Stenbom, S., Jansson, M., & Hulkko, A. (2016). Revising the Community of Inquiry framework for the analysis of one-to-one online learning relationships. The International Review of Research in Open and Distributed Learning, 17(3), 36–53. doi: 10.19173/irrodl.v17i3.2068

- Tsuei, M. (2017). Learning behaviours of low-achieving children’s mathematics learning in using of helping tools in a synchronous peer-tutoring system. Interactive Learning Environments, 25(2), 147–161. doi: 10.1080/10494820.2016.1276078

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

- Webb, N. M. (1989). Peer interaction and learning in small groups. International Journal of Educational Research, 13(1), 21–39. doi: 10.1016/0883-0355(89)90014-1

- Wood, D., Bruner, J. S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, 17, 89–100. doi: 10.1111/j.1469-7610.1976.tb00381.x