Abstract

The learning sciences, as an academic community investigating human learning, emerged more than 30 years ago. Since then, graduate learning sciences programs have been established worldwide. Little is currently known, however, about their disciplinary backgrounds and the topics and research methods they address. In this document analysis of the websites of 75 international graduate learning sciences programs, we examine central concepts and research methods across institutions, compare the programs, and assess the homogeneity of different subgroups. Results reveal that the concepts addressed most frequently were real-world learning in formal and informal contexts, designing learning environments, cognition and metacognition, and using technology to support learning. Among research methods, design-based research (DBR), discourse and dialog analyses, and basic statistics stand out. Results show substantial differences between programs, yet programs focusing on DBR show the greatest similarity regarding the other concepts and methods they teach. Interpreting the similarity of the graduate programs using a community of practice perspective, there is a set of relatively coherent programs at the core of the learning sciences, pointing to the emergence of a discipline, and a variety of multidisciplinary and more heterogeneous programs “orbiting” the core in the periphery, shaping and innovating the field.

The learning sciences as an academic community started to grow some 30 years ago, when the idea of investigating learning and teaching in the real world brought together scientists from various research areas. Stemming from fields like psychology, sociology, computer science, design studies, science, mathematics or medical education, social work, and the young field of cognitive science, these scientists had different academic backgrounds regarding both theory and methods (Hoadley & Van Haneghan, 2011). Thus, from the very beginning, a characteristic of the learning sciences was and continues to be its multifaceted nature, originating from the involvement of diverse scientific fields, each contributing to research on learning and supporting learning in its own way.

Since then, an academic community has evolved and flourished, which has increased considerably regarding its scientific impact and popularity. Today, there are many universities across the globe offering graduate programs in learning sciences. Still, up to now little has been known about the disciplines involved in offering these programs and the theories, concepts, and (research) methods they teach. As Yoon and Hmelo-Silver (2017) point out, knowing the extent to which learning sciences programs align might be crucial knowledge to understand both the current status of learning sciences and its future development, which depends on what future learning scientists learn in these programs (Nathan, Rummel, & Hay, 2016). The present study aims to answer the question of the current alignment of graduate learning sciences programs and thereby adds to and extends the insights compiled by Packer and Maddox (2016), who briefly reviewed 17 learning sciences programs while determining the “conceptual territory” of the learning sciences. Based on the selected programs, they present several keywords that “were mentioned frequently” by the programs, for example, apprenticeship, collaboration, instructional-design, community, and informal. However, they do not provide further analyses, do not assess the relative importance of the different topics, and do not provide further quantitative details regarding these topics. Our study can be seen as a step toward providing a quantitative answer to questions regarding backgrounds of graduate learning sciences programs, the concepts and research methods taught within these, and the coherence of graduate learning sciences programs. Beyond the analysis of the contents of the graduate programs, we put particular focus on the way the programs present themselves, what they emphasize and advertise on their websites, and how these programs and thus also the learning sciences are publicly perceived.
This aspect is of special interest, as the relatively young field of learning sciences is still in the process of finding its own profile to stand out from related fields such as educational psychology and cognitive science (Packer & Maddox, 2016). Here, the question of whether learning sciences is a discipline on its own with a clear common core and a learning sciences “brand” or whether it instead represents a tent (Nathan et al., 2016) for various research from different disciplines related to learning is repeatedly brought up. To generate further evidence for answering this question, we use the results of our document analysis on concepts and methods taught in graduate learning sciences programs worldwide as indicators for determining similarities and differences between the programs. Building on additional network and similarity analyses of these data, we adopt the concept of a community of practice (CoP; Lave & Wenger, 1991; Wenger, 1998) to give a more detailed description of the learning sciences as a community and network of graduate programs. In particular, we identify subgroups of programs that may contribute empirical arguments to the discipline versus tent discussion. For this, we explore whether the methods taught in the programs can be used as anchor points for subsamples with high similarity.

THE LEARNING SCIENCES COMMUNITY

In the following sections, we give a brief overview of five main themes within the learning sciences. Subsequently, the concept of CoPs is presented and suggested for the analysis of the graduate learning sciences programs.

Some Characteristics of the Learning Sciences

Various books, chapters, and articles describe the past and present of the learning sciences (e.g., Evans, Packer, & Sawyer, 2016; Hoadley, in press; Hoadley & Van Haneghan, 2011; Pea, 2016; Seel, 2012). According to these, the vision of the International Society of the Learning Sciences (ISLS, 2009), and a recent ISLS membership survey (Yoon & Hmelo-Silver, 2017), the learning sciences has been from its beginning, and still is, a research field involving multiple disciplines investigating learning and supporting learning in real-world contexts (Kolodner, 1991). Based on the structure of the first Cambridge Handbook of the Learning Sciences (Sawyer, 2006) and the categories used for the Network of Academic Programs in the Learning Sciences (NAPLeS; see Footnote 1) webinar series, five main aspects of learning sciences research can be derived from existing literature (Evans et al., 2016; Hoadley, in press; Sawyer, 2014b; Schank, 2016; Seel, 2012; Yoon & Hmelo-Silver, 2017): Regarding content, these aspects include how people learn, supporting learning, learning in the disciplines, and technology-enhanced learning and collaboration, including computer-supported collaborative learning. In addition, there is an emphasis on methodologies for the learning sciences, focusing on the research approaches and instruments used to investigate real-world learning and teaching.

The first major focus of learning sciences research is on how people learn with a special and increasing interest in real-world conditions (e.g., Barab & Squire, 2004; Kolodner, 2009; Yoon & Hmelo-Silver, 2017). This includes how children and adults process, gather, and interpret information or actions and how they develop knowledge, skills, and expertise, as well as other dispositions in different contexts such as schools, museums, or workplaces. Approaches to address these questions have been heavily influenced by theories of situated learning and cognition as well as sociocultural approaches (e.g., Collins, Brown, & Newman, 1988; Scardamalia & Bereiter, 2014; Stahl, 2010). These theories emphasize the importance of the social and physical context of cognition and hence the proclivity for authentic learning tasks (Greeno & Engeström, 2014).

Based on the multidisciplinary roots of the learning sciences and the emphasis on the situated nature of cognition, learning sciences research often addresses learning in the disciplines. That is, many learning scientists situate their research in one or more disciplines, striving to understand learning as it is enacted in a specific context, instead of assuming immediate domain-general answers to their research questions. Disciplines frequently involved are mathematics, science, computer science, language, or medicine (e.g., Herrenkohl & Cornelius, 2013; Kollar et al., 2014; Tabak & Radinsky, 2015).

Another major aspect of learning sciences research is that it aims at supporting learning and teaching by (re-)designing rich learning environments, be it in formal learning environments like school classrooms or informal learning environments like museums or libraries. In particular, research focuses on various forms of supporting learning and approaches to structuring and designing all kinds of learning environments. Here, scaffolding stands out as a learner-focused, highly adaptive support for learning processes (e.g., Pea, 2004) often implemented in conjunction with complex pedagogical approaches such as inquiry- and problem-based learning (e.g., Hmelo-Silver, 2004; Krajcik et al., 1998; Reiser, 2004).

A fourth, partially overlapping main emphasis of learning sciences research is on technology-enhanced learning and collaboration. As digitalization is changing the world rapidly, there are certainly several reasons that technologies could be of interest to researchers concerned with teaching and learning. Technologies have the potential to modify our ways of thinking and interacting with others and thus have the potential to change and enable learning processes. Emphasizing the social nature of cognition, research on computer-supported collaborative learning has focused on the question of how collaborative learning can be enabled and supported by digital technologies, partially reflecting the roots of the learning sciences in computer science and artificial intelligence research (Hoadley & Van Haneghan, 2011). This involves a broad variety of topics such as computer-supported knowledge building in schools (Scardamalia & Bereiter, 2014), in online communities, and through mass collaboration as, for example, in Wikipedia (e.g., Kimmerle, Moskaliuk, Oeberst, & Cress, 2015). Furthermore, digital technologies are also considered optimal tools to facilitate the implementation of adaptive support for individuals and groups. Here, two main lines of research are concerned with awareness support for groups (Janssen & Bodemer, 2013) as well as scaffolding and scripting of interaction in groups of learners (e.g., Kollar et al., 2014). The former collects information about the group and its members and feeds it back to the group, which can regulate its behavior based on this information. The latter, scaffolding and scripting, suggest certain roles and activities to a group or even guide the composition of individual contributions to dialogs and discussions (Dillenbourg, Järvelä, & Fischer, 2009).
Moreover, several forms of individual learning supported by technologies can be mentioned here, for example, game- and simulation-based learning (De Jong, Lazonder, Pedaste, & Zacharia, in press) and augmented reality. Furthermore, technology can afford a multitude of different experiences for learners, for example, via different forms of modeling and (participatory) simulations (e.g., Colella, 2000).

To investigate questions related to these four broad topics, learning sciences research uses a broad variety of methods. Methods used for data collection and analysis in the learning sciences are of particular interest for two reasons. First, as a multidisciplinary research field, learning sciences is shaped by various methods from different disciplines (Hoadley & Van Haneghan, 2011). Second, many researchers in the learning sciences are driven by the desire to advance theory as well as practice through their research (Pasteur’s quadrant; Stokes, 2011), avoiding the dichotomous distinction of “applied versus pure” in other disciplines concerned with learning (Hoadley & Van Haneghan, 2011; Packer & Maddox, 2016). Based on this second aspect, design-based research (DBR; Collins, Joseph, & Bielaczyc, 2004; The Design-Based Research Collective, 2003) is sometimes suggested as a key method of the “design science” learning sciences (e.g., Nathan & Sawyer, 2014; Sawyer, 2014a), as it integrates the development of theory and the design of learning environments through an iterative, holistic approach (Hoadley & Van Haneghan, 2011). Nevertheless, other methods are also part of the learning sciences’ methodological portfolio, including quantitative (multilevel) methods, educational data mining, and learning analytics (Siemens & Baker, 2012), as well as qualitative methods, for instance, to analyze small group discussions and argumentation, reflecting the learning sciences’ focus not only on learning of individuals but on their interaction in groups (e.g., Scardamalia & Bereiter, 2014; Stahl, 2010).

Learning Sciences as a Community

The learning sciences can be considered an academic community; there is hardly any disagreement on this. Scientifically, this status as a community can be further underpinned using some of the criteria developed in the literature to characterize CoPs. Lave and Wenger (1991) introduced the concept of a CoP to describe a group of practitioners who form a community with several joint characteristics. Wenger (1998) points out three main characteristics shaping CoPs: mutual engagement, that is, the shared engagement of the various members of a group, their relations, and their working together; a joint enterprise, that is, a common aim and shared understanding that binds the members of the community together and is constantly renegotiated to fit the members’ individual aims; and a shared repertoire, that is, a set of coherent concepts, resources, and especially methods shared among the members of the group (see also Wenger, McDermott, & Snyder, 2002). Barab, MaKinster, and Scheckler (2003) suggested adding other characteristics, of which overlapping histories and mechanisms for reproduction seem particularly important for characterizing academic communities.

Examining these core characteristics in the context of learning sciences, there is a joint enterprise in developing theories of learning as it takes place in the real world and theories of how learning can be facilitated. Although many members’ roots are in several different disciplines including psychology, science education, and computer science (Hoadley & Van Haneghan, 2011), the last 30 years are at least a starting point for a shared or overlapping history. There is mutual engagement with two dedicated conferences, the International Conference of the Learning Sciences (ICLS) and Computer-Supported Collaborative Learning (CSCL) organized by the ISLS. ISLS also steers two well-established, dedicated journals, The Journal of the Learning Sciences and the International Journal of Computer-Supported Collaborative Learning. However, beyond the mutual engagement steered by the ISLS, many more activities take place at other conferences and in other societies, for example, in education or in computer science, which have special interest groups or dedicated strands devoted to learning sciences research (see Footnote 2). There are several further journals publishing learning sciences research, such as Instructional Science, Learning and Instruction, and American Educational Research Journal.

Further, there appears to be a shared repertoire of concepts, such as scaffolding, inquiry-based learning, or computer-supported collaborative learning, as well as research methods, such as dialog analysis and DBR, as they can be found repeatedly in learning sciences publications (e.g., Evans et al., 2016; Hoadley, in press; Sawyer, 2014b; Schank, 2016; Seel, 2012; Yoon & Hmelo-Silver, 2017).

The learning sciences community offers several reproduction mechanisms, but two seem crucial. First, there are multiple session types in learning sciences conferences, including the relatively short New Members Meetings aimed at integrating newcomers, as well as two more extensive two-day workshops, the Doctoral Consortium and the Early Career Workshop at ICLS and CSCL, focusing on the integration of young researchers into the community. Second, an increasing number of academic degree programs educate young learning scientists around the globe, shaping their students as future learning scientists. However, up until now little has been known about how the learning sciences is being taught in these programs, how the programs conceptualize the learning sciences, and whether their curricula are targeted at those concepts and methods commonly regarded as core to the learning sciences (e.g., Packer & Maddox, 2016; Sawyer, 2014b; Schank, 2016) or rather entail diverse foci.

So far, characterizations of the learning sciences tend to focus mainly on what is perceived as the core of the community (Nathan & Sawyer, 2014; Schank, 2016), that is, those concepts and methods that are assumed to be crucial for the community based on the historical development of the learning sciences. This is fundamental knowledge, and more knowledge about key concepts and signature methods within the learning sciences is certainly necessary. However, from a theoretical point of view, the core of a CoP may not be the only interesting and certainly not the most dynamic aspect of a community, as it is characterized by high similarity and coherence (Lave & Wenger, 1991; Shaffer, 2004; Wenger et al., 2002). In contrast, the more peripheral members of a CoP may be moving closer to the core or may move toward other CoPs and thus change the boundaries of the learning sciences, potentially bringing in new concepts and methods from other CoPs.

Often, the meaning of peripheral participation is reduced to an early stage of participation (“newcomers,” “apprentices”) with a clear trajectory toward the core of a CoP, where the participants develop identity and knowledge as by-products of their “journey” (e.g., Lave & Wenger, 1991). However, peripheral participation may have other important aspects and characteristics. In particular, peripheral participation can take place without a trajectory toward the core because, for example, the peripheral members are deeply rooted in one or more other communities and just share certain interests with the community under consideration (“peripheral experts”). Thus, peripheral members may be committed to an interdisciplinary enterprise involving learning sciences phenomena but may have diverse backgrounds, methods, or resources and thus do not (fully) align with the other characteristics of the community. This diversity of trajectories within a CoP and the importance of peripheral members for innovation within a CoP have also been highlighted in prior research (Justesen, 2004).

Based on this conception, both core and periphery should be considered when describing the structure of the learning sciences as a CoP with various degree programs, each representing students and scientists affiliated with the programs. Thus, not only those topics and methods regarded as core to the learning sciences but also the whole breadth of learning sciences research are worth investigating.

Guiding Questions

In the present study, we examine which disciplines are involved in graduate learning sciences programs; which concepts and methods are taught within these; and how programs, concepts, and methods relate to each other, respectively. In addition to these descriptive data, we apply a CoP perspective to interpret our results with respect to these graduate learning sciences programs. More specifically, we aim to explore conceptual and methodological commonalities and differences among currently existing graduate learning sciences programs and, based on this information, aim to shed light on the status of the learning sciences community as depicted by the programs and their members and identify core and peripheral aspects. Using the websites of the graduate programs for a document analysis, we address the following questions with respect to teaching the learning sciences:

RQ1.

Where are graduate learning sciences programs located, and which disciplines are involved in teaching the learning sciences?

RQ2.

What are the core concepts and methods taught in graduate learning sciences programs?

RQ3.

What concepts and methods are explicitly highlighted and emphasized by the programs?

Based on the results regarding concepts and methods, we use network analytical methods to examine interrelations among the various programs, concepts, and methods, respectively. We further examine the similarity of graduate learning sciences programs and several subsamples based on shared methods, trying to identify core and peripheral programs within the learning sciences community. We address the following three questions:

RQ4.

What are important concept-related connections among graduate learning sciences programs?

RQ5.

What are the relations among the various concepts and among the various methods that are taught in the graduate learning sciences programs, respectively? Can subgroups of co-occurring concepts or methods be identified?

RQ6.

Can we identify a core of more homogeneous graduate learning sciences programs, defined by a specific methodological approach?

METHOD

General Approach

We conducted a document analysis (Bowen, 2009) on the website contents of graduate learning sciences programs across the globe. This method is recommended for qualitative case studies of events, organizations, or programs with rich verbal as well as pictorial material (e.g., Stake, 1995; Yin, 1994). In particular, this method mimics a genuine outsider’s (e.g., potential student’s) view of the programs as someone who would also only be able to access the English websites of the programs, thus enabling us to answer which concepts and methods are emphasized by the programs and thus likely shape the public view of the learning sciences. Furthermore, this approach has several advantages in comparison to other methods, for example, questionnaires, which are prone to availability errors or social desirability biases and often have rather low return rates (56% in the case of Yoon & Hmelo-Silver, 2017).

Working with the documents, we followed standard procedures of qualitative content analysis (Mayring, 2001, 2014), underpinned by quantitative as well as network analytical approaches. We gathered data from the websites of universities offering master’s and/or PhD programs in learning sciences and analyzed them in a skimming, reading, and interpretation process, as recommended by Atkinson and Coffey (2004). Besides gathering data on the involved disciplines and various descriptive information, special emphasis was put on the concepts and research methods taught to graduate students enrolled in the programs.

The sample of graduate learning sciences programs for this study was compiled using three approaches. First, we conducted an inductive online search using common Internet search engines (Google, Bing, DuckDuckGo), starting with the combinations of the terms “learning science*” or “sciences of learning” with “master,” “PhD,” or “degree program.” In addition, we used materials available from the ISLS and its conferences to find further graduate learning sciences programs (e.g., conference proceedings). Finally, we complemented the already identified programs by using the list of learning sciences programs within NAPLeS (see Footnote 3). Currently, NAPLeS, which strives to advance the learning and teaching of the learning sciences worldwide, includes programs from more than 30 universities across the globe. The main criteria for institutional membership in NAPLeS are that a running program has been labeled or categorized by its faculty as a learning sciences program and that three of this program’s faculty members are also individual members of ISLS.

To ensure high coding quality and avoid validity threats due to comprehension or translation errors, only programs with English-language websites and a solid amount of descriptive information (i.e., more than administrative or admission details) were considered in the present study. While the approach to include only English-language websites is selective, it not only is common practice in research synthesis (i.e., systematic reviews and meta-analyses; e.g., Card, 2015; Cooper, Hedges, & Valentine, 2009) to ensure high coding accuracy but also had the instrumental purpose of enabling us to implement the use of the labels “learning sciences,” “learning science,” and “sciences of learning” as an objectively workable selection criterion. This criterion marks an important distinction between programs considering themselves part of the broader learning sciences community, as of interest to us, and those that focus on learning but do not identify themselves as belonging to learning sciences. To extract those concepts and methods that are addressed within graduate learning sciences programs, the inclusion of programs identifying themselves as part of the learning sciences community and exclusion of other programs that do not identify themselves as part of the learning sciences community was crucial.

After compiling a list of 75 graduate learning sciences programs in early 2015, we downloaded the information contained on the individual program websites. The download process started in late June 2015 and ended on July 31, 2015. Any modifications to the websites after this date were not included in the analyses. For each program, we considered only websites and documents that could be retrieved from the study programs’ index or welcome page and that were (at least partially) specifically related to the program.

Sample

The sample consisted of 75 graduate learning sciences programs: 38 master’s degree programs (51%) and 37 PhD programs (49%). Out of the total sample, 57% (n = 43) were located at NAPLeS member universities, 20 being master’s degree programs and 23 being PhD programs. The sample also included two “learning specialist” programs, which were coded together with the PhD programs because they are post-master’s degree programs. For 90% of the programs, the teaching language was English, yet almost 30% of the programs were from non-English-speaking countries.

Coding Procedures

For data analysis, deductive categories (Mayring, 2001) were developed based on the ICLS keyword list (ICLS, 2014), which has been used to tag concepts (e.g., argumentation or scaffolding) and methods (e.g., DBR or eye tracking) for submissions to the ICLS. In addition, we took into consideration concepts and methods included in the Cambridge Handbook of the Learning Sciences (Sawyer, 2014b) and the NAPLeS webinar series as well as those mentioned in the ISLS vision document (ISLS, 2009). After initial exploratory coding, we refined our coding scheme by removing and merging particular categories, since some categories were difficult to distinguish based on the descriptions provided on the websites. For example, we merged categories such as multimedia learning and cognitive load theory into the new category nonsituative instructional approaches since it was often hard to determine the specific underlying framework from the given descriptions on the websites. Furthermore, we introduced two broader categories for the methods: data collection and data analysis. All existing method categories were included in either of the broader categories as subcodes (see Table 1). To make maximum use of available information, ambiguous descriptions of data collection or data analysis were coded as unspecified. This category was assigned to explicit references to data collection or analysis that did not state a specific method (e.g., courses labeled “data analysis in the learning sciences”). The final categories are reported in Table 1. During the coding process, all categories mentioned in Table 1 were applied at least once to the programs’ websites. No additional categories were needed to capture concepts and methods mentioned in the online information.

TABLE 1 Concept-Related and Method-Related Categories

To facilitate coding, short definitions of each category were created. For example, the category collaborative learning was used for any kind of explicit focus on learning or working together in dyads or larger groups, be it for analyzing effects on content elaboration, argumentation quality, or social aspects of the collaboration. The coding manual further detailed rules regarding the segmentation of the online materials.

Since learning sciences is multifaceted, including researchers from various disciplines, it was assumed that graduate learning sciences programs are often offered in collaboration with several departments, faculties, or research centers, each representing different disciplines and research fields. To analyze these relations, we extracted all available information regarding any contributing research field in the program, for example, courses offered by other departments. Here, we only included fields that were clearly involved in the teaching of courses for the whole degree program and therefore likely interact with most, if not all, enrolled students. We were also cautious in relabeling or grouping the research fields to prevent mixing up, for example, cognitive sciences, psychology, and related fields.

After an extended rater training, the percentage agreement on the frequencies of assigned codes per program was adequate, ranging from 75% to 94% (M = 84%, SD = 7%) for all four coders based on 16 programs (> 20% of the included programs). In addition, we calculated the weighted percentage agreement that takes into account the actual number of used categories and the overall number of assigned codes and thus takes into account agreement by chance. It ranged from 66% to 92% (M = 79%, SD = 10%). Following the calculation of the interrater agreement, the programs were equally distributed among four coders (four authors of this contribution), individually coded, and discussed among the four coders in case of difficulties.

Network Analytical Approaches

To find relations among the programs, the concepts taught, and the methods taught, respectively, the free R package igraph (Csardi & Nepusz, 2006) and its network analytical tools were used. For each analysis, a network consisting of nodes and connecting edges was created. Within the network, the nodes, representing the objects of interest (e.g., the programs), are connected by lines (edges) representing their connection (e.g., based on the common use of certain concepts and methods). The edges are weighted according to the strength of the connection between both nodes (e.g., determined by the number of shared concepts or methods). To illustrate this, consider a network of programs where the connections are based on shared concepts. If programs A and B both cover the concepts using technology to support learning and informal learning and have no other concepts in common, the connection between programs A and B would be assigned a strength value of 2 (Barrat, Barthélemy, Pastor-Satorras, & Vespignani, 2004) as they cover exactly two shared concepts. If program A is further connected to m out of the 74 other programs, then its degree, that is, the number of connections to other programs, would be m and its normalized degree would be m/74 (Borgatti, Everett, & Johnson, 2013). The visual display of the established network in conjunction with data on the degrees and strengths allows the description of how connected the various programs are regarding the concepts they address.
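The strength and degree definitions can be illustrated with a minimal sketch, written here in plain Python rather than with igraph. The three programs and their concept sets are invented toy data, and the helper names `edge_strengths` and `degrees` are hypothetical; with only three programs, the normalized degree divides by 2 rather than by 74.

```python
from itertools import combinations

# Hypothetical toy data (not from the study): concepts covered by each program.
programs = {
    "A": {"using technology to support learning", "informal learning", "scaffolding"},
    "B": {"using technology to support learning", "informal learning"},
    "C": {"cognition and metacognition"},
}

def edge_strengths(coverage):
    """Return {(p, q): number of shared concepts} for each pair sharing at least one."""
    strengths = {}
    for p, q in combinations(sorted(coverage), 2):
        shared = len(coverage[p] & coverage[q])
        if shared > 0:  # an edge exists only if at least one concept is shared
            strengths[(p, q)] = shared
    return strengths

def degrees(coverage, strengths):
    """Return {program: (degree, normalized degree)}, normalizing by the n - 1 other programs."""
    n_others = len(coverage) - 1
    counts = {p: 0 for p in coverage}
    for p, q in strengths:
        counts[p] += 1
        counts[q] += 1
    return {p: (d, d / n_others) for p, d in counts.items()}

strengths = edge_strengths(programs)
program_degrees = degrees(programs, strengths)
print(strengths)        # {('A', 'B'): 2}
print(program_degrees)  # A and B have degree 1 (normalized 0.5); C is isolated
```

In this toy network, programs A and B share exactly two concepts, so their edge has strength 2, mirroring the example in the text; program C shares nothing and remains unconnected.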

Determining Core Programs and Signature Methods of the Learning Sciences

Shared methods are an essential aspect of a joint practice and have been highlighted as an important aspect of CoPs (e.g., Wenger et al., 2002). Thus, we were interested in analyzing the similarity of those programs teaching a shared method, because high similarity among such programs would indicate that the shared method is a core method of the learning sciences. To this end, we created subsamples of the overall sample of graduate learning sciences programs sharing a specific method, such as DBR or video analysis. Each subsample was then analyzed regarding its similarity in terms of the remaining concepts and methods. For this, a measure of similarity fulfilling the following criteria was needed:

It allows the similarity of groups, not only pairs of graduate learning sciences programs, to be measured.

It is independent of the number of graduate learning sciences programs included in the examined subsample.

Both the teaching of the same method or concept in two programs and the “not teaching” of the same method or concept in these programs increase similarity.

Differences regarding the teaching of a method or concept have a negative influence on similarity.

The measure of similarity accounts for chance and the probability of certain concepts or methods being taught.

The network analytical tools used to visualize the connections among the graduate learning sciences programs, concepts, and methods, respectively, do not comply with these criteria. For example, only shared methods and concepts add to the connection between two programs, but not the “not teaching” of other methods and concepts. Thus, an alternative measure was needed. For this, Fleiss’ kappa (Fleiss, 1971; Fleiss & Cohen, 1973) was selected, which is usually used to determine interrater reliability but can also be used to determine the similarity of “objects” other than raters.
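To make this use of Fleiss’ kappa concrete, the sketch below computes it for a group of programs. It assumes one plausible mapping that the text does not spell out: each program plays the role of a “rater,” each concept or method is a rated item, and the two categories are “taught” (1) and “not taught” (0). The function name `fleiss_kappa_binary` and the toy matrices are hypothetical.

```python
def fleiss_kappa_binary(matrix):
    """Fleiss' kappa for a group of programs coded on binary items.

    matrix: one row per program ("rater"), one column per concept or
    method ("item"); entries are 1 (taught) or 0 (not taught).
    Assumed mapping for illustration, not the authors' documented procedure.
    """
    n_programs = len(matrix)
    n_items = len(matrix[0])
    if n_programs < 2:
        raise ValueError("Fleiss' kappa needs at least two programs.")

    agreement_sum = 0.0
    total_taught = 0
    for item in range(n_items):
        taught = sum(row[item] for row in matrix)
        not_taught = n_programs - taught
        # Share of agreeing program pairs for this item; shared "taught"
        # and shared "not taught" both count as agreement.
        agreement_sum += (taught ** 2 + not_taught ** 2 - n_programs) / (
            n_programs * (n_programs - 1)
        )
        total_taught += taught

    observed = agreement_sum / n_items
    p_taught = total_taught / (n_programs * n_items)
    expected = p_taught ** 2 + (1 - p_taught) ** 2  # chance agreement
    if expected == 1.0:
        raise ValueError("Kappa is undefined when all codes are identical.")
    return (observed - expected) / (1 - expected)


# Hypothetical subsamples: three programs coded on four concepts/methods.
identical = [[1, 0, 1, 0], [1, 0, 1, 0], [1, 0, 1, 0]]
mixed = [[1, 0, 1, 0], [1, 0, 0, 1], [1, 1, 0, 0]]

kappa_identical = fleiss_kappa_binary(identical)  # perfect similarity
kappa_mixed = fleiss_kappa_binary(mixed)          # chance-level similarity
```

Under this mapping, the measure applies to groups of any size, rewards both shared teaching and shared non-teaching, penalizes differences, and corrects for chance, which corresponds to the criteria listed above.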

RESULTS

Geographical Distribution and Disciplinary Background of the Programs

Regarding location, the graduate learning sciences programs were highly concentrated in North America, which accounted for 71% (n = 53) of all programs. Still, 20% (n = 15) of the programs were located in Europe and the remaining 9% (n = 7) of the sample’s programs were in Asia and Australia, so that a substantial number of international programs were included. None of the programs included in the sample were located in South America or Africa.

In terms of disciplines involved in delivering the programs, a total of 45 different disciplines were found. The “top 10” disciplines can be found in Table 2, led by computer science (48%), psychology (35%), and science and science education (35%).

TABLE 2 Percentage of Programs Mentioning the Discipline as Involved in Offering the Program (Top 10 Disciplines)

Concepts and Methods Taught in Graduate Learning Sciences Programs

To evaluate which concepts and methods are taught in graduate learning sciences programs, we analyzed how many programs mention a specific concept or method. The assigned codes for the coverage of concepts and methods by the various programs revealed that six concepts are covered by more than 50% of the programs. Using technology to support learning is the most prevalent, with 76% of the programs explicitly mentioning it, but is closely followed by learning in formal environments, cognition and metacognition, informal learning environments, designing learning environments and scaffolding, and disciplinary learning, with around 60% of the programs addressing each of them. In contrast, nonsituative instructional approaches (e.g., cognitive load theory) are explicitly mentioned by only 13% of the programs.

In a more fine-grained analysis, we contrasted graduate learning sciences programs that are part of NAPLeS to nonmembers. We found that all concepts except for nonsituative instructional approaches are addressed relatively more often throughout the NAPLeS programs, indicating a higher degree of conceptual similarity among these programs (). Designing learning environments and scaffolding, motivation, emotion, and cognition and metacognition show a particularly pronounced difference between NAPLeS programs and nonmember programs.

Analyzing differences in coverage of concepts regarding regional distribution, the programs show the same pattern for the first three places (using technology to support learning, designing learning environments and scaffolding, and involving cognition and metacognition). Besides this similarity across regions, results reveal that these three amount to 56% of the concepts identified in the North American programs, 58% in European, and 82% in Asian and Australian programs, possibly implying that the latter programs have a more specific conceptual focus as compared to those in North America and Europe.

The coding results regarding the methods for data analysis and data collection were less straightforward to interpret. Most of the methods mentioned on the websites either fell into the data collection–unspecified (39%) or data analysis–unspecified (47%) categories due to very general levels of descriptions of methods on the programs’ websites. Looking at the remaining, more specific methods, two of them stand out (). DBR as well as basic statistics are each covered by more than 20% of the programs, whereas all other methods are mentioned by less than 15% of the programs each. The low percentages for each of the methods reflect that most programs provide fairly unspecific descriptions of the methods they teach on their websites.

Comparing NAPLeS to non-NAPLeS programs, the results show that NAPLeS programs are more concerned with most of the methods, again showing greater homogeneity. Here, in particular, DBR, multilevel analysis, and big data and learning analytics stood out as more frequent in NAPLeS programs. However, it appears that other recent technological approaches such as physiological measures or eye tracking are also more common within the NAPLeS programs. In contrast, qualitative methods relating to video and audio are more often addressed across the non-NAPLeS programs. Of interest, questionnaires as a research method are more often mentioned on websites of NAPLeS programs.

Concepts and Methods Highlighted by the Programs

To identify concepts and methods specifically highlighted and advertised by the programs, we used a different coding strategy. Instead of focusing on whether a specific concept or method was mentioned, we counted how often it was explicitly mentioned on the programs’ websites. The corresponding codes for what the programs emphasize in this sense were assigned quite frequently (more than 2,500 times). The analysis of these new coding results reveals that the number of assignments for each category varied considerably, indicating differing emphases on the various concepts and methods, respectively ( and ).

Using technology to support learning is by far the most emphasized concept, representing 27% of all assigned codes, followed by designing learning environments and scaffolding (17%) and cognition and metacognition (15%). The concepts with a relatively low frequency on the websites are argumentation (1.2%), as well as the various nonsituative instructional approaches (1.0%). Regarding methods, DBR and basic statistics stand out again, each holding more than 20% of the number of assigned codes.

Content-Related Connections Among the Programs

To identify concept-related connections among the graduate learning sciences programs, we constructed a first network by defining the graduate programs as nodes and pairwise co-occurrences of concepts within these programs as connections among them. The emerging network is quite complex, containing 75 programs and 4,284 connections among them. The average normalized degree of the resulting network is dav = .77. Thus, on average, the programs have at least one concept in common with 57 other programs. Although this number may seem high at first, this implies that, according to the information on the website, each program on average does not share a single concept with 17 other programs. This underlines the diversity of the programs at least in the programs’ descriptive online documents. Furthermore, the average strength amounts to sav = 209 (SD = 108), that is, on average, each degree program has 3 (out of 13) concepts in common with the other programs.
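The averages reported in this paragraph can be retraced with simple arithmetic. The short check below assumes that the average strength is divided by all 74 other programs (not only by the connected ones) to arrive at the “3 out of 13” figure; that reading is an assumption, and the variable names are illustrative.

```python
n_programs = 75
avg_normalized_degree = 0.77  # d_av as reported in the text
avg_strength = 209            # s_av as reported in the text

n_others = n_programs - 1  # each program can connect to 74 others

# Average number of programs with at least one shared concept: 0.77 * 74
avg_neighbors = round(avg_normalized_degree * n_others)

# Average number of programs with no shared concept at all
avg_non_neighbors = n_others - avg_neighbors

# Average number of shared concepts per other program (assumed reading)
avg_shared_per_program = avg_strength / n_others

print(avg_neighbors, avg_non_neighbors, round(avg_shared_per_program, 1))
# prints: 57 17 2.8
```

Rounded, the last value gives the roughly three shared concepts per pair of programs mentioned in the text.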

Relations Within the Concepts and Relations Within the Methods

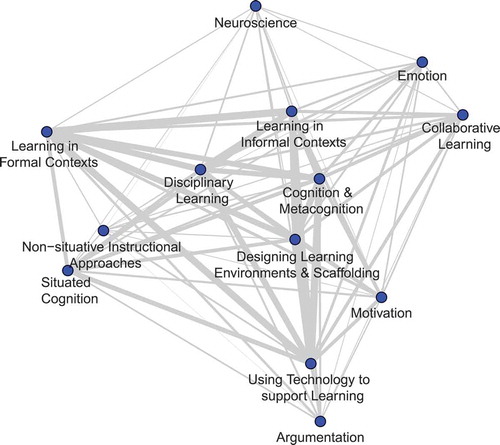

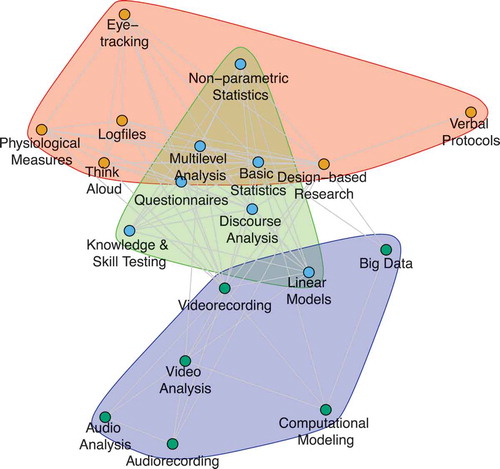

To examine the connections within concepts and methods, respectively, we created two additional networks: one focusing on the connections among the various concepts and one on the connections among the various methods. For the concept network, the various concepts were represented as nodes and defined to be connected when they co-occurred in the same degree program. Co-occurrences of two concepts in multiple programs (e.g., both program A and B mention both designing learning environments and scaffolding and argumentation) increased the connection weight between these concepts, which is represented by thicker gray lines in the concept network plot. The analogous procedure was used for the method network (Figure 6). A distributed recursive layout was used for the creation of the graphs since it yielded more instructive representations than the common Fruchterman-Reingold layout. The layout is force-directed, implying that, broadly speaking, concepts or methods that co-occur more frequently with other concepts or methods, and thus attain a higher connection strength, are represented closer to each other and more centrally. The emerging networks show close relations, especially for the various concepts, represented by thick gray lines.
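The co-occurrence weighting described above can be sketched as follows. This is a plain-Python illustration with hypothetical toy data, not the igraph code used for the published networks; the function names `concept_cooccurrence` and `concept_strength` are assumptions.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: program -> set of concepts mentioned on its website.
programs = {
    "A": {"designing learning environments and scaffolding", "argumentation"},
    "B": {"designing learning environments and scaffolding", "argumentation",
          "emotion"},
    "C": {"emotion"},
}


def concept_cooccurrence(programs):
    """Count, for each pair of concepts, the programs mentioning both."""
    weights = Counter()
    for concepts in programs.values():
        # Sorting makes each pair appear in one canonical order.
        for first, second in combinations(sorted(concepts), 2):
            weights[(first, second)] += 1
    return weights


def concept_strength(weights):
    """Sum the edge weights attached to each concept (its strength)."""
    strength = Counter()
    for (first, second), weight in weights.items():
        strength[first] += weight
        strength[second] += weight
    return strength


weights = concept_cooccurrence(programs)
strength = concept_strength(weights)
```

Here the pair argumentation and designing learning environments and scaffolding co-occurs in programs A and B and therefore receives a weight of 2, which would correspond to a thicker line in the plot. The same functions could be applied to sets of methods instead of concepts.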

FIGURE 6 Network plot of all methods including three emerging clusters representing methods aimed at individuals (red) and their interaction (blue) and methods at the intersection of both other clusters (green).

In line with the analyses presented above, the concepts show a high average degree of dav = 1, indicating that among all possible concepts, each combination of two concepts occurs at least once. Again, taking the connection weight into account, the average strength amounts to s = 192 (SD = 89). The five most strongly connected concepts are using technology to support learning (s = 312), cognition and metacognition (s = 281), informal learning (s = 281), formal learning (s = 277), and designing learning environments and scaffolding (s = 260). The least connected concepts are emotion (s = 119), argumentation (s = 99), neuroscience (s = 68), and the various nonsituative instructional approaches (s = 63). In addition, we performed a cluster analysis based on eigenvalues on the concepts to identify potential subgroups within the concepts, following Newman’s (2006) approach. The results revealed a single cluster including all concepts, thus indicating no more closely related subgroups within the set of concepts.

In contrast, the method network shows a lower average degree of dav = .44. The average strength amounts to s = 13 (SD = 10). The five most strongly connected (most frequently co-occurring) methods are basic statistics (s = 35), DBR (s = 24), multilevel analysis (s = 24), linear models (s = 24), and discourse analysis (s = 23). The subsequent cluster analysis on the methods revealed three clusters that are highlighted in red, blue, and green in Figure 6. Both the red and the blue cluster contain six methods, whereas the green cluster consists of seven methods. The three clusters yield a classification of the methods used and taught within learning sciences. The red and blue clusters contain methods either targeting individuals (red cluster) or interaction between them (blue cluster). The third, green cluster may then be interpreted as those methods in the intersection of both other clusters, that is, those needed in both areas of research.

Homogeneous Cores Within the Graduate Learning Sciences Programs and Signature Methods

To identify homogeneous cores within the graduate learning sciences programs, we examined those programs that share a specific method, for example, multilevel analysis or discourse analysis, and used Fleiss’ kappa to determine their similarity regarding all other concepts and methods. These analyses revealed that those programs teaching DBR show the highest similarity (κ = .43; see Table 3), whereas those programs sharing basic statistics (κ = .21) or questionnaires (κ = .10) as a method show the lowest similarity with respect to the remaining concepts and methods. Further, the group of graduate programs not teaching DBR shows a lower similarity (κ = .27) than those programs teaching DBR (κ = .43).

TABLE 3 Similarity of the Group of Programs Teaching a Specific Method With Respect to All Other Concepts and Methods

Examining the DBR subsample more closely, not only can a higher similarity of the programs be observed, but also certain differences in the composition of their disciplinary backgrounds may be seen. Here, a specific influence of computer science (53% of all programs within the group), psychology (47%), education (24%), and science and science education (24%) can be observed, whereas the corresponding numbers in the other programs are lower (less than 20% each) and spread more evenly across many different disciplines that are involved in delivering the programs (see Table 4).

TABLE 4 Top Five Disciplines Involved in Offering Design-Based Research (DBR) and Non-DBR Graduate Learning Sciences Programs

Further, the DBR subsample also shows a differing distribution regarding the covered concepts (Figure 7). Within the DBR subsample, learning in formal and informal contexts are the most important topics, as both are mentioned by 94% of the programs, followed by using technology to support learning (82%), disciplinary learning (82%), and designing learning environments and scaffolding (76%). In the non-DBR subsample, using technology to support learning is the most important topic (74%), followed by cognition and metacognition (62%) and learning in formal contexts (55%). Besides these differences in sequence, the percentages in the non-DBR subsample are overall lower than those in the DBR subsample, underlining that these programs are less focused on specific concepts.

FIGURE 7 Percentage of design-based research (DBR) and non-DBR programs mentioning the concepts (in descending order for DBR programs).

In addition, we examined the similarity of the programs within NAPLeS and the similarity of those programs that are not members of the network, showing that those within the network are more coherent (κ = .31) than those that are not members (κ = .26). Still, the similarity within NAPLeS is lower than the similarity within the subsample focusing on DBR.

DISCUSSION

On a simple and descriptive level, our analysis of graduate learning sciences programs illustrates that graduate programs worldwide identify themselves as learning sciences. The center of gravity of the learning sciences is still at its point of origin in North America (e.g., Hoadley, in press; Hoadley & Van Haneghan, 2011) and no programs could yet be identified in Africa or South America. The contemporary disciplinary makeup of the graduate learning sciences programs further reflects its history. Specifically, computer science, psychology, education, science and science education, mathematics, and engineering turned out to be the most frequently contributing disciplines.

According to the documents that are publicly available on the programs’ websites, designing learning environments and scaffolding, using technology to support learning, cognition and metacognition, learning in formal and informal contexts, and disciplinary learning seem to constitute the conceptual core of many graduate learning sciences programs. Nevertheless, there is a tremendous conceptual diversity in the self-descriptions of the graduate learning sciences programs. These results are empirical support for common, yet anecdotally derived conceptions of the learning sciences. Furthermore, the results also match those of the survey of ISLS members by Yoon and Hmelo-Silver (2017), who found a focus on learning environment design and on learning technologies in their data, which stand out as the two most frequently mentioned areas of interest among a total of 24 different areas in their study. In contrast, learning scientists within their survey mention collaborative knowledge building, communities of practice, and inquiry learning as primary research foci, which are not equivalently prominent in our analyses of the graduate programs’ websites. This difference can have methodological reasons, one related to social desirability and selection bias within the survey by Yoon and Hmelo-Silver (2017) and another related to the lack of detail on the examined websites in the document analysis presented here. However, if this difference is not a methodological artifact, the lower prevalence of collaborative knowledge building, communities of practice, and inquiry learning may indicate that these approaches are prominent in current research but are not equivalently reflected in the teaching within graduate learning sciences programs. Adding to these different emphases, the analysis of how programs present themselves on their websites indicates that some topics and methods are heavily stressed. In this regard, using technology to support learning as well as designing learning environments and scaffolding stand out on the concept side, whereas DBR stands out on the method side. All three are proposed as core aspects of the learning sciences in the literature (e.g., Sawyer, 2014a).

The programs’ websites do not provide a detailed insight into the methods taught within the graduate learning sciences programs, as methods are often only broadly described. Still, even based on these broad descriptions (e.g., data collection, data analysis), our results clearly show that graduate learning sciences programs do typically have an empirical research orientation. Several core methods can be identified, and there is evidence that beyond basic behavioral and cognitive methods, DBR is a frequently taught methodological approach within the graduate learning sciences programs. An additional focus of many programs appears to be on methods for analyzing video as well as discourse and dialog data, reflecting the interest of learning scientists in the analysis of activities and interaction related to learning processes (ISLS, 2009).

Comparing the results of the network analyses for the concepts and methods, it is striking that the average degree (relating to the number of connections within the network), as well as the average strength (relating to the magnitude of the connections), of the method network are considerably lower than the corresponding values of the concept network. This can at least in part be attributed to the broad descriptions being used for the methods on the websites but may also underline that the programs are much more diverse regarding the methods they teach than regarding their core concepts, reflecting the history of the learning sciences shaped by research from different disciplines with different methods (Hoadley & Van Haneghan, 2011).

Finally, the analysis regarding the similarity of different method-based subgroups within the overall sample underlines several of these findings. Programs including DBR appear to represent a core of relatively coherent and similar programs at different universities. We suggest that these programs can be considered to form a core of the learning sciences as an emerging CoP. In contrast, basic statistics and questionnaires, although widely taught in graduate learning sciences programs, cannot be considered signature methods, as programs addressing them differ markedly with respect to the other concepts and methods they teach.

The chosen methodology for this study bears some limitations related mostly to the sampling method and data availability. Graduate programs that teach what we identify as learning sciences but name it differently or that do not identify with the terms “learning science,” “learning sciences,” or “sciences of learning” might have been neglected in this document analysis. Yet, we believe that the self-classification of a degree program as a learning sciences program is a useful starting point and important prerequisite for the validity of this study. Furthermore, programs might have been missed by our search altogether, for example, due to language reasons (e.g., websites in Dutch or Hebrew), novelty (published after the end of our data collection), or the inductive search process. Therefore, further analyses including those programs in other languages would present valuable additions to this report. Yet, these analyses may pose methodological questions regarding the accurate translation of the websites and are jeopardized by the fact that many languages do not have a sound and broadly shared translation of “learning sciences” (e.g., German).

Regarding data availability, the universities involved might not have put a valid representation of their programs’ contents or all of the contributing disciplines explicitly on their websites so that the data available may not perfectly reflect the programs. In addition, websites are created at least partially for marketing purposes, which means that not everything announced on the website and in flyers, program descriptions, and syllabi is enacted in teaching and vice versa. However, although our analyses of the program descriptions on their websites might not fully reflect the actual teaching of the programs, they do reflect the characteristics of the learning sciences that the universities and the faculties want to be publicly noticed. Thus, our findings reflect well what potential students and members of other faculties perceive as learning sciences “from the outside,” when they use the web as their source of information.

CONCLUSIONS AND IMPLICATIONS

This study provides empirical evidence on how graduate learning sciences programs teach learning sciences, that is, about their core concepts and methods. We argue that these findings enable a more informed discussion about the learning sciences as a CoP. Our analyses of 75 graduate learning sciences programs are a solid starting point for this research and highlight the current status of the learning sciences. Moreover, our study substantially extends the study by Packer and Maddox (2016), who only included 17 learning sciences programs. As approximately 30% of the programs are from non-English-speaking countries, a substantial number of international programs is included.

To date, there have been several approaches to capture the essence of the learning sciences (Hoadley, in press; ISLS, 2009; Nathan et al., 2016; Sawyer, 2014b). Based on the public representation of graduate learning sciences programs examined in the present study, we suggest the following description: learning sciences targets the analysis and facilitation of real-world learning in formal and informal contexts. Learning activities and processes are considered crucial and are typically captured through the analysis of cognition, metacognition, and dialog. To facilitate learning activities, the design of learning environments is seen as fundamental. Technology is key for supporting and scaffolding individuals and groups to engage in productive learning activities. Graduate learning sciences programs bring together faculties from several disciplines including computer science, psychology, education, science and science education, mathematics, and engineering. The basic methodological orientation of the learning sciences is empirical. Together with established behavioral and cognitive research methods focusing on the individual as well as on interaction between learners, DBR has emerged as a signature method specifically suited to the investigation of learning and teaching in real-world settings. In particular, those programs emphasizing DBR approaches in their teaching appear to constitute a more coherent core within the examined set of graduate programs.

But what about the majority of graduate learning sciences programs that are not part of this nucleus? Is it reasonable to say they are not part of the learning sciences community? Probably not, as merely 25% of the graduate programs that self-categorize as learning sciences programs explicitly address DBR. The question is rather how to represent those programs well that do not belong to the relatively small core. In what follows, we explore the CoP metaphor and suggest a possible view on the specific relationship of the core and more peripheral programs in the learning sciences.

We conceive a CoP roughly as a group of people, or in this case graduate programs representing the people affiliated with it, with partially shared histories, identities, and practices. They further share goals, use the same tools, aim to advance a joint body of knowledge, and maintain the community through mechanisms of reproduction (Barab et al., 2003). Participation in a CoP can take place in several degrees of centrality with core members and more or less peripheral members. Although core members typically started as peripheral members many years ago, the trajectory of members (here, programs) is not limited to moving from the periphery to the core. Instead, some already more central members may become more peripheral again, for example, as they shift their (research) focus or start identifying with another CoP, and some peripheral members may constantly stay peripheral. Often, peripheral members are core members of other disciplines (i.e., other CoPs) and are thus participating centrally in the advancement of practices in other fields.

Through this theoretical lens, the conceptually and methodologically more homogeneous group of graduate programs identified in our analyses may be considered as belonging to the core of the learning sciences CoP. The second, larger, more heterogeneous set of programs can be located at different distances from this core as peripheral members, partially also comprising some smaller peripheral clusters. However, the group of more heterogeneous programs is misconceived if we think of them as less mature and not yet fully developed programs. While some of the mostly very young programs may move toward the core of the learning sciences CoP in the future, others will intentionally remain peripheral, prioritizing their engagement within other disciplines. From a CoP perspective, both developments are crucial. The former, that is, programs moving from peripheral to more central participation, can be seen as an indicator of the consolidation and maturation of the young discipline of the learning sciences. The latter programs, which stay peripheral, address crucial problems of the learning sciences but consider disciplines other than the learning sciences as their “home.” In bringing new methodological and conceptual developments from other disciplines to the learning sciences—that is, to its research, to its conferences, and to its journals—this type of peripheral participation is a crucial mechanism for innovation of the learning sciences CoP.

Based on this conception of the learning sciences as a discipline entailing orbiting peripheral members and programs in addition to the small and more coherent nucleus, the question of what we should teach when we teach the learning sciences is important. The current empirical findings represent a first, evidence-based starting point for discussing this question. Still, further research is needed to analyze changes in the teaching of the learning sciences longitudinally, to examine differences between core and peripheral programs in more detail, and to investigate the teaching of various concepts and methods more deeply, especially regarding how certain concepts are used and how concepts from different disciplines have developed in learning sciences research.

For the future of the learning sciences as a discipline, it will become even more important that learning sciences conferences and journals bring together individuals and programs participating with different degrees of centrality. There seems to be a center of gravity in the core of the learning sciences, and we are lucky that this core is surrounded by multidisciplinary hubs and programs that are important motors of innovation within the learning sciences. We should refrain from excluding more peripheral research from learning sciences conferences and journals because it is not using certain concepts or methods. Reducing the learning sciences to its core (e.g., to DBR) will likely cause stagnation. Instead, we should embrace new methodological developments coming from other disciplines, as can currently be seen in the context of big data, learning analytics, and educational data mining methods (Koedinger, D’Mello, McLaughlin, Pardos, & Rosé, 2015; Wise & Shaffer, 2015). Learning sciences is a discipline and, if done well, a center of gravity for a powerful orbit of interdisciplinary collaborations on learning sciences themes.

Notes

1 NAPLeS is an international platform for networking and collaboration between learning sciences degree programs. More information can be found here: http://naples.isls.org/.

2 For example, at the annual meeting of the American Educational Research Association, at the biennial conference of the European Association for Research on Learning and Instruction, or at the International Conference on Computers in Education.

3 See http://isls-naples.psy.lmu.de/members/programs/index.html for the list of NAPLeS member programs.

4 The term is ambiguous. Here it is not meant in the classical way of computational modeling of cognition (e.g., Anderson, 2013; Lewandowsky & Farrell, 2010), but in the sense of computational modeling of interaction (e.g., Dillenbourg, 1999; Shaffer et al., 2009).

REFERENCES

- Anderson, J. R. (2013). The architecture of cognition. New York, NY: Psychology Press.

- Atkinson, P., & Coffey, A. (2004). Analysing documentary realities. In D. Silverman (Ed.), Qualitative research: Theory, method and practice (2nd ed., pp. 56–75). London, UK: Sage Publications Ltd.

- Barab, S., MaKinster, J., & Scheckler, R. (2003). Designing system dualities: Characterizing a web-supported professional development community. The Information Society, 19(3), 237–256. doi:10.1080/01972240309466

- Barab, S., & Squire, K. (2004). Design-based research: Putting a stake in the ground. Journal of the Learning Sciences, 13(1), 1–14. doi:10.1207/s15327809jls1301_1

- Barrat, A., Barthélemy, M., Pastor-Satorras, R., & Vespignani, A. (2004). The architecture of complex weighted networks. Proceedings of the National Academy of Sciences of the United States of America, 101(11), 3747–3752. doi:10.1073/pnas.0400087101

- Borgatti, S. P., Everett, M. G., & Johnson, J. C. (2013). Analyzing social networks. New York, NY: Sage Publications Ltd.

- Bowen, G. A. (2009). Document analysis as a qualitative research method. Qualitative Research Journal, 9(2), 27–40. doi:10.3316/QRJ0902027

- Card, N. A. (2015). Applied meta-analysis for social science research. New York, NY: Guilford Publications.

- Colella, V. (2000). Participatory simulations: Building collaborative understanding through immersive dynamic modeling. Journal of the Learning Sciences, 9(4), 471–500. doi:10.1207/S15327809JLS0904_4

- Collins, A., Brown, J. S., & Newman, S. E. (1988). Cognitive apprenticeship: Teaching the craft of reading, writing and mathematics. Thinking: the Journal of Philosophy for Children, 8(1), 2–10.

- Collins, A., Joseph, D., & Bielaczyc, K. (2004). Design research: Theoretical and methodological issues. Journal of the Learning Sciences, 13(1), 15–42. doi:10.1207/s15327809jls1301_2

- Cooper, H., Hedges, L. V., & Valentine, J. C. (2009). The handbook of research synthesis and meta-analysis. New York, NY: Russell Sage Foundation.

- Csardi, G., & Nepusz, T. (2006). The igraph software package for complex network research. InterJournal, Complex Systems, 1695(5), 1–9.

- De Jong, T., Lazonder, A., Pedaste, M., & Zacharia, Z. (in press). Simulations, games, and modeling tools for learning. In F. Fischer, C. E. Hmelo-Silver, S. Goldman, & P. Reimann (Eds.), International handbook of the learning sciences. New York, NY: Routledge.

- The Design-Based Research Collective. (2003). Design-based research: An emerging paradigm for educational inquiry. Educational Researcher, 32(1), 5–8. doi:10.3102/0013189X032001005

- Dillenbourg, P. (1999). What do you mean by collaborative learning? Collaborative-Learning: Cognitive and Computational Approaches, 1, 1–15.

- Dillenbourg, P., Järvelä, S., & Fischer, F. (2009). The evolution of research on computer-supported collaborative learning. In N. Balacheff, S. Ludvigsen, T. De Jong, A. Lazonder, & S. Barnes (Eds.), Technology-enhanced learning (pp. 3–19). Dordrecht, Netherlands: Springer Netherlands.

- Evans, M. A., Packer, M. J., & Sawyer, R. K. (2016). Reflections on the learning sciences. Cambridge, UK: Cambridge University Press.

- Fleiss, J. L. (1971). Measuring nominal scale agreement among many raters. Psychological Bulletin, 76(5), 378–382. doi:10.1037/h0031619

- Fleiss, J. L., & Cohen, J. (1973). The equivalence of weighted kappa and the intraclass correlation coefficient as measures of reliability. Educational and Psychological Measurement, 33(3), 613–619. doi:10.1177/001316447303300309

- Greeno, J. G., & Engeström, Y. (2014). Learning in activity. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (2nd ed., pp. 128–147). Cambridge, UK: Cambridge University Press.

- Herrenkohl, L. R., & Cornelius, L. (2013). Investigating elementary students’ scientific and historical argumentation. Journal of the Learning Sciences, 22(3), 413–461. doi:10.1080/10508406.2013.799475

- Hmelo-Silver, C. E. (2004). Problem-based learning: What and how do students learn? Educational Psychology Review, 16(3), 235–266. doi:10.1023/B:EDPR.0000034022.16470.f3

- Hoadley, C. (in press). A short history of the learning sciences. In F. Fischer, C. E. Hmelo-Silver, S. Goldman, & P. Reimann (Eds.), International handbook of the learning sciences. New York, NY: Routledge.

- Hoadley, C., & Van Haneghan, J. (2011). The learning sciences: Where they came from and what it means for instructional designers. In R. A. Reiser, & J. V. Dempsey (Eds.), Trends and issues in instructional design and technology (Vol. 3, pp. 53–63). New York, NY: Pearson.

- ICLS. (2014). Keyword list for the international conference of the learning sciences [Unpublished list].

- ISLS. (2009). The international society of the learning sciences. Retrieved from https://www.isls.org/images/documents/ISLS_Vision_2009.pdf

- Janssen, J., & Bodemer, D. (2013). Coordinated computer-supported collaborative learning: Awareness and awareness tools. Educational Psychologist, 48(1), 40–55. doi:10.1080/00461520.2012.749153

- Justesen, S. (2004). Innoversity in communities of practice. In P. Hildreth, & C. Kimble (Eds.), Knowledge networks: Innovation through communities of practice (pp. 79–95). Hershey, PA: IGI Global.

- Kimmerle, J., Moskaliuk, J., Oeberst, A., & Cress, U. (2015). Learning and collective knowledge construction with social media: A process-oriented perspective. Educational Psychologist, 50(2), 120–137. doi:10.1080/00461520.2015.1036273

- Koedinger, K. R., D’Mello, S., McLaughlin, E. A., Pardos, Z. A., & Rosé, C. P. (2015). Data mining and education. Wiley Interdisciplinary Reviews: Cognitive Science, 6(4), 333–353. doi:10.1002/wcs.1350

- Kollar, I., Ufer, S., Reichersdorfer, E., Vogel, F., Fischer, F., & Reiss, K. (2014). Effects of collaboration scripts and heuristic worked examples on the acquisition of mathematical argumentation skills of teacher students with different levels of prior achievement. Learning and Instruction, 32, 22–36. doi:10.1016/j.learninstruc.2014.01.003

- Kolodner, J. L. (1991). The journal of the learning sciences: Effecting changes in education. Journal of the Learning Sciences, 1(1), 1–6. doi:10.1207/s15327809jls0101_1

- Kolodner, J. L. (2009). Note from the outgoing editor-in-chief. Journal of the Learning Sciences, 18(1), 1–3. doi:10.1080/10508400802663920

- Krajcik, J., Blumenfeld, P. C., Marx, R. W., Bass, K. M., Fredricks, J., & Soloway, E. (1998). Inquiry in project-based science classrooms: Initial attempts by middle school students. Journal of the Learning Sciences, 7(3/4), 313–350. doi:10.1080/10508406.1998.9672057

- Lave, J., & Wenger, E. (1991). Situated learning: Legitimate peripheral participation. Cambridge, UK: Cambridge University Press.

- Lewandowsky, S., & Farrell, S. (2010). Computational modeling in cognition: Principles and practice. Los Angeles, CA: Sage Publications Ltd.

- Mayring, P. (2001). Combination and integration of qualitative and quantitative analysis. Forum Qualitative Sozialforschung/Forum: Qualitative Social Research, 2(1), 6. doi:10.17169/fqs-2.1.967

- Mayring, P. (2014). Qualitative content analysis: Theoretical foundation, basic procedures and software solution. Retrieved from http://nbn-resolving.de/urn:nbn:de:0168-ssoar-395173

- Nathan, M. J., Rummel, N., & Hay, K. E. (2016). Growing the learning sciences: Brand or big tent? Implications for graduate education. In M. A. Evans, M. J. Packer, & R. K. Sawyer (Eds.), Reflections on the learning sciences (pp. 191–209). Cambridge, UK: Cambridge University Press.

- Nathan, M. J., & Sawyer, R. K. (2014). Foundations of the learning sciences. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (2nd ed., pp. 21–43). Cambridge, UK: Cambridge University Press.

- Newman, M. E. (2006). Finding community structure in networks using the eigenvectors of matrices. Physical Review E, 74(3). doi:10.1103/PhysRevE.74.036104

- Packer, M. J., & Maddox, C. (2016). Mapping the territory of the learning sciences. In M. A. Evans, M. J. Packer, & R. K. Sawyer (Eds.), Reflections on the learning sciences (pp. 126–154). Cambridge, UK: Cambridge University Press.

- Pea, R. D. (2004). The social and technological dimensions of scaffolding and related theoretical concepts for learning, education, and human activity. Journal of the Learning Sciences, 13(3), 423–451. doi:10.1207/s15327809jls1303_6

- Pea, R. D. (2016). The prehistory of the learning sciences. In M. A. Evans, M. J. Packer, & R. K. Sawyer (Eds.), Reflections on the learning sciences (pp. 32–58). Cambridge, UK: Cambridge University Press.

- Reiser, B. J. (2004). Scaffolding complex learning: The mechanisms of structuring and problematizing student work. Journal of the Learning Sciences, 13(3), 273–304. doi:10.1207/s15327809jls1303_2

- Sawyer, R. K. (2006). The Cambridge handbook of the learning sciences. Cambridge, UK: Cambridge University Press.

- Sawyer, R. K. (2014a). The new science of learning. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (2nd ed., pp. 1–18). Cambridge, UK: Cambridge University Press.

- Sawyer, R. K. (Ed.). (2014b). The Cambridge handbook of the learning sciences (2nd ed.). Cambridge, UK: Cambridge University Press.

- Scardamalia, M., & Bereiter, C. (2014). Knowledge building and knowledge creation: Theory, pedagogy, and technology. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (2nd ed., pp. 397–417). Cambridge, UK: Cambridge University Press.

- Schank, R. C. (2016). Why learning sciences? In M. A. Evans, M. J. Packer, & R. K. Sawyer (Eds.), Reflections on the learning sciences (pp. 19–31). Cambridge, UK: Cambridge University Press.

- Seel, N. M. (2012). History of the sciences of learning. In N. M. Seel (Ed.), Encyclopedia of the sciences of learning (pp. 1433–1442). New York, NY: Springer.

- Shaffer, D. W. (2004). Pedagogical praxis: The professions as models for postindustrial education. Teachers College Record, 106(7), 1401–1421. doi:10.1111/tcre.2004.106.issue-7

- Shaffer, D. W., Hatfield, D., Svarovsky, G. N., Nash, P., Nulty, A., Bagley, E., … Mislevy, R. (2009). Epistemic network analysis: A prototype for 21st-century assessment of learning. The International Journal of Learning and Media, 1(2), 33–53. doi:10.1162/ijlm.2009.0013

- Siemens, G., & Baker, R. (2012, April). Learning analytics and educational data mining: Towards communication and collaboration. Paper presented at the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC.

- Stahl, G. (2010). Group cognition as a foundation for the new science of learning. In M. S. Khine, & I. M. Saleh (Eds.), New science of learning (pp. 23–44). New York, NY: Springer.

- Stake, R. E. (1995). The art of case study research. Thousand Oaks, CA: Sage Publications Ltd.

- Stokes, D. E. (2011). Pasteur’s quadrant: Basic science and technological innovation. Washington, DC: Brookings Institution Press.

- Tabak, I., & Radinsky, J. (2015). Paving new pathways to supporting disciplinary learning. Journal of the Learning Sciences, 24(4), 501–503. doi:10.1080/10508406.2015.1091704

- Wenger, E. (1998). Communities of practice: Learning, meaning, and identity. Cambridge, UK: Cambridge University Press.

- Wenger, E., McDermott, R. A., & Snyder, W. (2002). Cultivating communities of practice: A guide to managing knowledge. Boston, MA: Harvard Business School Press.

- Wise, A. F., & Shaffer, D. W. (2015). Why theory matters more than ever in the age of big data. Journal of Learning Analytics, 2(2), 5–13. doi:10.18608/jla.2015.22.2

- Yin, R. K. (1994). Discovering the future of the case study method in evaluation research. Evaluation Practice, 15(3), 283–290. doi:10.1016/0886-1633(94)90023-X

- Yoon, S. A., & Hmelo-Silver, C. E. (2017). What do learning scientists do? A survey of the ISLS membership. Journal of the Learning Sciences, 26(2), 167–183. doi:10.1080/10508406.2017.1279546