ABSTRACT

The Canadian English Language Proficiency Index Program (CELPIP) Test was designed for immigration and citizenship in Canada. CELPIP is a computer-based English-language proficiency test which covers all four skills. This test review provides a description of the test and its construct, tasks, and delivery. Then, it appraises CELPIP for reliability, fairness, and validity before identifying future directions for research.

Introduction

Canada currently accepts two English-language proficiency tests for immigrants to demonstrate their level in reading, writing, listening, and speaking: the IELTS General Training and the Canadian English Language Proficiency Index Program (CELPIP) Test. It will also accept the Pearson Test of English – Core (PTE Core) by the end of 2023. CELPIP is a computer-based test designed specifically for Canadian immigration and citizenship by Paragon Testing Enterprises, a private subsidiary of the University of British Columbia (UBC). Immigration, Refugees and Citizenship Canada (IRCC) has accepted CELPIP since 2014 (Paragon Testing Enterprises, 2021b). Canada uses a points-based immigration system with minimum required scores on English- or French-language proficiency tests and requires that a test score report be included in permanent residency applications. Meeting the minimum score may not always be sufficient, as some competitive immigration programs award extra points for test scores above the minimum requirements (Immigration, Refugees and Citizenship Canada, 2022a), thus increasing the importance of a high test score. Outside of immigration, 17 Canadian professional organizations accept CELPIP for licensing purposes and 28 post-secondary institutions (colleges and vocational programs) accept CELPIP for admission (Paragon Testing Enterprises, n.d.-d). CELPIP is not accepted for university admission.

Canada plans to accept 1.3 million new permanent residents from 2022 to 2024 (IRCC, 2022b). Although Paragon has not released the number of CELPIP test takers, it is important to critically appraise CELPIP and its role within the Canadian context of language proficiency testing for global migration. This review outlines CELPIP’s purpose, construct, delivery, resources, and test tasks. Then, we use the Standards for Educational and Psychological Testing (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014) to examine CELPIP’s reliability, fairness, and validity.

Test construct

CELPIP is a general English language proficiency test whose construct is “to measure the functional language proficiency required for successful communication in general Canadian social, educational, and workplace contexts” (Paragon Testing Enterprises, 2021b, p. 1). The target language use (TLU) domain encompasses such everyday activities as communicating with work colleagues and superiors, understanding and responding to news stories or other print materials, and socializing with friends. Paragon defines functional language proficiency as being able “to integrate language knowledge and skills in order to perform various social functions” (2021a, p. 1) and considers CELPIP to align with the theoretical model of communicative language ability (Bachman & Palmer, 1996, 2010), which serves as the foundation for the Canadian Language Benchmarks (CLB) (Centre for Canadian Language Benchmarks, 2012). The CLB was introduced in 1996 to describe language development for adult immigrants (Centre for Canadian Language Benchmarks, 2012) and connects to settlement and citizenship requirements. The CLB’s 12 levels aim to enable precise decision making regarding language learners’ proficiency levels and the services they need as well as “convey the brand and values of Canadian identity” (Jezak & Piccardo, 2017, p. 9). For full details on the CLB, see https://www.language.ca/product/pdf-e-01-clb-esl-for-adults-pdf/. Paragon calibrated CELPIP’s test scores against the CLB (Paragon Testing Enterprises, 2018) and identified CELPIP levels 4–10 as those most relevant to its score users (Paragon Testing Enterprises, 2021c). Table 1 below shows each CELPIP level with its corresponding description and CLB level equivalency (Paragon Testing Enterprises, n.d.-a).

Table 1. CELPIP levels, descriptors, and CLB equivalencies.

Test delivery

The CELPIP-General (CELPIP-G) assesses listening, reading, writing, and speaking. It is available at over 60 testing centres across Canada and abroad and is accepted for Canadian permanent residency applications. Test fees for CELPIP-G in Canada are $280 CAD plus applicable taxes and internationally range from roughly $200 CAD in India up to $340 CAD in the UAE. Because Canadian citizenship applications only require listening and speaking test scores, test takers can sit CELPIP-General Listening and Speaking (CELPIP-G LS) with only the listening and speaking tasks. The CELPIP-G LS is only available in Canada and costs $195 CAD plus applicable taxes (Paragon Testing Enterprises, n.d.-a).

Resources & registration

The website, www.celpip.ca, provides information about the test, scoring, test registration, and preparation materials, in addition to research publications and information for stakeholders. As for test preparation materials, the website has for-cost practice test bundles, online programs, and course books, and free materials such as videos, online programs, practice tests, and live webinars. The website provides a comprehensive list of acceptable identification documents required for registration and test day but makes no mention of collecting biometric data from test takers. Test takers can apply for special accommodations on a case-by-case basis. The website provides a four-page document complete with guidelines for test takers and medical professionals, an accommodation application form, and examples of available accommodations (see https://www.celpip.ca/wp-content/uploads/2019/07/CELPIP_Special_Accommodations_Request_Form.pdf).

Test tasks

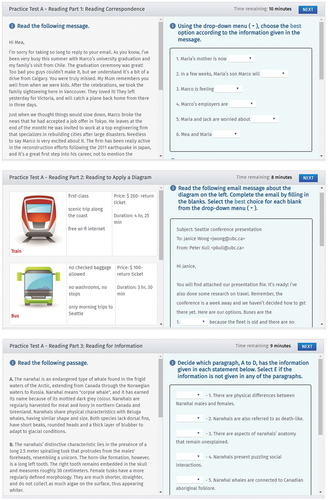

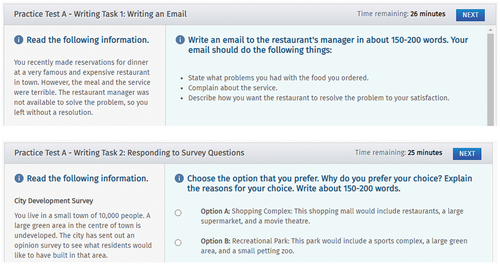

The four sections of the CELPIP-G test take roughly 3.5 hours to complete. Table 2 below outlines the general topics and number of questions per listening, reading, writing, and speaking task (Paragon Testing Enterprises, n.d.-b). Figures 1 and 2 are screenshots of sample tasks found in the free online practice tests. Based on the construct described above, the tasks are meant to assess a test taker’s proficiency in the rather broad domains of Canadian social, educational, and workplace contexts. It is difficult to assess how well the tasks match the TLU domain as detailed test specifications do not seem to be publicly available.

Table 2. CELPIP-G Test Structure.

Listening

The listening section takes 47–55 minutes and has one practice question and six distinct parts which have five to eight questions each. Each part takes roughly six minutes, and each listening stimulus is played once. A few statements or a picture are provided for context before the audio starts. The questions are played on the audio track and are not written on the test screen. The listening track may be audio only or a video. Question types include multiple choice with either pictures or statements to choose from, sentence stems with a drop-down menu of possible endings, and information questions with a drop-down menu of possible answers.

Reading

The reading section takes 55–60 minutes and has one practice question and four distinct parts with eight to eleven questions each. Each part takes roughly ten minutes. Question types include sentence stems with a drop-down menu of possible endings, cloze readings with drop-down menus of possible options, and matching information statements to the appropriate paragraphs.

The listening and reading sections may contain additional questions used only for research purposes.

Writing

The writing section takes 53–60 minutes and has two distinct parts with one question each. The time limit for Task 1 is 27 minutes and for Task 2 is 26 minutes. In the first task, test takers write a 150–200 word email in response to a context and list of items to include. In the second task, test takers write a 150–200 word response to a survey question. Both tasks include a word counter and there is a built-in spell checker (Li et al., 2020).

Speaking

The speaking section takes 15–20 minutes and has one practice question and eight distinct parts with one question each. Each speaking task has 30–60 seconds preparation time and 60–90 seconds recording time. There is a written prompt for each task and some include pictures. Some speaking tasks allow test takers to choose between prompts.

Test taker demographics

The majority of test takers took CELPIP-G for immigration purposes, increasing from 79.6% in 2018 to 90% in 2021 (Paragon Testing Enterprises, 2018, 2021a). Test takers reported approximately 130 different home countries and 100 different first languages, with 67.7% of test takers self-reporting English, Spanish, or Chinese as their first language. Between March 2017 and March 2020, most test takers were between the ages of 26 and 40 (71%) and just over half were male (55.4%) (Paragon Testing Enterprises, 2021c). Table 3 below shows the mean band scores for each subskill of the CELPIP-G (Paragon Testing Enterprises, 2021a). The CELPIP-G LS mean band scores are potentially lower because citizenship has a lower English-language proficiency requirement than many immigration programs.

Table 3. 2021 CELPIP-G and CELPIP-G LS Mean band scores.

Levels & scoring

The reading and listening sections are computer-scored out of 38 points with questions marked dichotomously and blank questions marked as incorrect. Writing and speaking are both assessed with four rating categories. Content and coherence, vocabulary, and task fulfillment are common for both subskills with writing also assessed for readability (grammar, cohesion, fluency) and speaking for listenability (pronunciation, intonation, grammar, fluency). The scoring rubrics are proprietary, but CELPIP writing and speaking level descriptor charts are available on the website. Information on how band scores are determined is not available.
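As a minimal illustration of dichotomous marking with blank questions treated as incorrect, the sketch below counts correct responses against an answer key. The function name, answer key, and responses are hypothetical; the sketch does not reproduce Paragon’s scoring system or the conversion of raw scores to CELPIP levels, which is not publicly documented.

```python
from typing import Optional, Sequence


def raw_score(responses: Sequence[Optional[str]], key: Sequence[str]) -> int:
    """Return the number of correct answers.

    Each item is worth 1 point if the response matches the key and 0 otherwise.
    A blank response (None or an empty string) never matches, so it scores 0.
    """
    if len(responses) != len(key):
        raise ValueError("responses and key must have the same length")
    return sum(
        1
        for given, correct in zip(responses, key)
        if given is not None and given == correct
    )


# Hypothetical four-item example; a real section would have 38 scored items.
key = ["A", "C", "B", "D"]
responses = ["A", None, "B", "A"]  # second item left blank, fourth item wrong
score = raw_score(responses, key)
print(score)
```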

Trained human raters are randomly assigned to score the anonymized writing and speaking responses using scoring rubrics. Two raters rate each writing response. Three raters rate a set of speaking responses with some overlap between them and four of the responses being double rated. Responses are automatically sent for benchmarking if the raters disagree in the first round. The writing and speaking ratings are used to calculate the scores, which are then converted into a CELPIP level (Paragon Testing Enterprises, n.d.-c).

Reporting

Test takers can view their scores and download an official PDF score report in their online account 4–5 days after their test. The score report includes a test taker’s name, address, date of birth, photo, the test date, and the name of the testing centre. Each skill is listed with its corresponding CELPIP level. The score report is signed and dated by a CELPIP official (Paragon Testing Enterprises, 2020). CELPIP score reports are available from Paragon for two years from the exam date, but the website states that the score validity period is determined by individual institutions’ policies. Test takers can order a hardcopy of their official score reports for $20 CAD each. Test takers have the option of having specific sections or their entire test re-scored within six months of the test date. This can only be done once for each section with fees depending on which sections are re-evaluated. Re-evaluations take up to two weeks (Paragon Testing Enterprises, n.d.-c).

Appraisal of the test

According to Paragon’s technical reports on CELPIP, Paragon follows the description of validity in the Standards (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014). Using technical reports and research studies from both internal and external researchers as well as news articles, we appraised CELPIP for its reliability, fairness, and validity.

Reliability

In the Standards (AERA et al., 2014), reliability refers to equivalency across various test forms and score consistency across multiple testing procedures. Paragon analyzed all test forms which have had a minimum of 200 test takers using classical test theory. The reliability of the 166 listening test forms and the 165 reading test forms was a minimum Cronbach’s alpha of 0.84. For the 215 writing forms, the minimum Cronbach’s alpha was 0.88, and for the 162 speaking test forms it was at least 0.95 (Paragon Testing Enterprises, 2021c). The average Cronbach’s alpha was 0.89 for both the listening and reading sections, 0.92 for the writing section, and 0.97 for the speaking section (Paragon Testing Enterprises, n.d.-c). Additionally, Paragon used a two-parameter item response theory analysis (2PL IRT) of the 20 most common listening and reading test forms to equate test scores and calibrate items, which then accounted for some test form differences. The 2PL IRT analysis also found there may not be sufficient items targeting higher proficiency test takers.
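For readers less familiar with the statistic, the sketch below shows how Cronbach’s alpha is conventionally computed from an item-score matrix: the number of items k, the sum of the item variances, and the variance of the total scores. The function name and the toy data are our own assumptions for illustration; they are not CELPIP data, and Paragon’s actual computation may differ in detail.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a matrix with one row per test taker, one column per item.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != k for row in scores):
        raise ValueError("every test taker needs a score on every item")
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical dichotomous scores: four test takers, three items.
toy_scores = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
alpha = cronbach_alpha(toy_scores)
print(round(alpha, 2))
```

Values closer to 1 indicate that the items vary together, which is why the reported minimums of 0.84 and above are read as evidence of internal consistency.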

For scoring levels 4–10, the decision consistency and accuracy were high at 0.88 and higher across all four skills. The rates of false positives and negatives across these levels for all four skills were 0.06 or lower. The conditional standard error of measurement (CSEM) was rather low across all four skills, ranging from 0.20 in Level 10 Writing to 0.41 in Level 10 Listening (Paragon Testing Enterprises, 2021c).

The website and technical reports provide a detailed description of Paragon’s rater qualifications, rater training, and benchmark rating. An analysis of rater drift using three multifaceted Rasch models revealed an overlap of the rater severity measures’ distributions, suggesting the rater severity effect was consistent between 2017 and 2020.

Fairness

A fair test is one that tests the same construct, whose scores have the same meaning for all test takers, and that does not (dis)advantage some test takers with any characteristics that are construct irrelevant (AERA et al., 2014). Paragon uses regression-based differential item functioning (DIF) methods and systematically removes items identified as having a moderate to large DIF. DIF analyses for both test taker gender and first language suggested neither played a role in a test taker’s CELPIP results (Paragon Testing Enterprises, 2021c).
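The technical report does not specify the exact regression model used. As an assumption for illustration only, a common regression-based approach for dichotomous items is logistic regression DIF, which models the probability of a correct response to an item from an ability proxy (often the total score), group membership, and their interaction. The symbols below are ours, not Paragon’s.

```latex
% Logistic regression DIF for one item (illustrative form, not Paragon's stated model)
% Y_i      : 1 if test taker i answers the item correctly, 0 otherwise
% \theta_i : ability proxy for test taker i (e.g., total score)
% G_i      : group indicator (e.g., 0 = reference group, 1 = focal group)
\[
  \log \frac{P(Y_i = 1)}{1 - P(Y_i = 1)}
  = \beta_0 + \beta_1 \theta_i + \beta_2 G_i + \beta_3 \, \theta_i G_i
\]
% \beta_2 \neq 0 suggests uniform DIF; \beta_3 \neq 0 suggests non-uniform DIF.
```

Under this form, an item shows no DIF when test takers of equal ability have the same probability of success regardless of group, that is, when both the group term and the interaction term are negligible.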

Paragon researchers found a negligible DIF effect from gender (Chen et al., 2016) and only a very small DIF effect of educational backgrounds on CELPIP writing tasks (Chen, Liu, et al., 2020a). In a corpus-based study, Li et al. (2020) identified linguistic features from the CELPIP writing rubric and found a small writing difference between genders for cohesion (Cohen’s d ranging from −0.11 to −0.14) and syntactic features (Cohen’s d ranging from −0.115 to −0.152). These differences could affect test takers’ scores positively or negatively, thus potentially cancelling each other out. In a differential option functioning (DOF) analysis of test takers’ reading task multiple choice item responses, Wu et al. (2021) reported a few items had both uniform and non-uniform DOF. Items that require test takers to make their own interpretations were more difficult for male test takers, while items that require making inferences from unfamiliar information were more difficult for female test takers. Both genders had difficulties with multiple-choice items that required making personal interpretations and understanding more sophisticated language (Wu et al., 2021).
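To make the effect sizes above concrete, the sketch below computes Cohen’s d as the difference between two group means divided by the pooled standard deviation. This is the textbook pooled-variance form; Li et al. (2020) may have used a different variant, and the function name and data are hypothetical.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence


def cohens_d(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Cohen's d with a pooled standard deviation.

    d = (mean_a - mean_b) / sqrt(pooled variance), where the pooled variance
    weights each group's sample variance by its degrees of freedom.
    The sign depends only on which group is listed first.
    """
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Hypothetical feature counts for two small groups of writing samples.
group_a = [2, 4, 6]
group_b = [3, 5, 7]
d = cohens_d(group_a, group_b)
print(d)
```

By the usual rule of thumb, absolute values around 0.2 are considered small, so the reported values between −0.11 and −0.152 indicate group means that differ by well under a fifth of a standard deviation.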

Fairness also includes accessibility, which is “the notion that all test takers should have an unobstructed opportunity to demonstrate their standing on the construct(s) being measured” (AERA et al., 2014, p. 49). In a Canadian public policy report on temporary workers who transition to permanent residency, migrant workers complained about the high cost of testing, the lack of time for test preparation, and the difficulty in typing their written responses when their work, such as customer or food service, did not require any typing skills whatsoever (Nakache & Dixon-Perera, 2015). Although the report does not name CELPIP, it is currently the only computer-based test accepted by IRCC, so one can assume the participants were referring to CELPIP. CELPIP is used as a public policy tool, so it needs to be responsive to sometimes fast-changing immigration programs. This can certainly be challenging. As test takers rushed to apply for a one-time permanent residency program during COVID-19, the CELPIP (and IELTS) booking system crashed (The Canadian Press, 2021) and many temporary foreign workers were unable to access language classes or pay test fees (Juha, 2021; Rodriguez, 2021).

Validity

Paragon aims to make a consistent and ongoing effort to collect evidence that supports the intended use and interpretation of CELPIP scores as well as to stay up-to-date on the context in which they are used (Paragon Testing Enterprises, 2021b). Several published studies gathered evidence of test takers’ strategies, or response processes, in order “to suggest and illustrate a useful approach for validation” (Wu & Stone, 2016, p. 263). Test takers reported relying more on reading comprehension strategies than on irrelevant strategies, and higher scoring test takers reported using comprehension strategies. Fewer test takers reported using strategies for test wiseness or test management, and only lower-scoring test takers reported adding in test wiseness strategies as item difficulty increased (Wu & Stone, 2016; Wu et al., 2018). Similarly, Chen, Wu, et al. (2020b) reported that using the appropriate listening strategies for test items had a positive association with item-level performance, while inappropriate strategies, such as guessing blindly, predicted lower item-level performance. Lastly, Wu et al. (2016) found that test takers who engaged in more language-learning enabling behaviours scored higher across all four CELPIP skills than those who did not. These findings supported CELPIP’s claim of measuring functional English-language proficiency and not test-taking abilities (Wu & Stone, 2016; Wu et al., 2018).

There are also works that provide lexical evidence for concurrent validity, examine repeat test takers, and map workplace communication to the CELPIP. Douglas (2016) found that, overall, test takers who produced more words and used a broader range of words, including medium and high-frequency words, scored higher on their CELPIP speaking and writing tasks. Li and Volkov (2017) found higher scoring test takers used more lexical bundles in their writing tasks. Lin and Chen (2020) reported cohesion, syntax, and lexical features remained relatively constant in repeat test takers’ writing samples. There were small changes in writing scores over time, with high scorers on their first attempt showing small drops in scores on subsequent attempts and low scorers on their first attempt having the greatest score increases over time. These findings suggested language proficiency does influence CELPIP test takers’ results (Lin & Chen, 2020). Finally, Doe et al. (2019) found a match between CELPIP-G LS levels 4 to 6 and the CLB by identifying and mapping newcomers’ workplace communication successes and challenges to both the CLB and CELPIP-General listening and speaking descriptors. This could inform test design because it offered a foundation of workplace language in use.

Conclusion & future directions

CELPIP is the only English-language proficiency test developed, delivered, and licensed specifically for the Canadian migration context, and it seems to be a well-designed test. Available technical reports and research studies indicate Paragon takes its professional role in such a high-stakes testing context seriously and is committed to exploring how alternative methodologies can contribute to a validation argument. Although technological savvy is needed to sit an online test of this nature, the clear instructions, well-designed screens, instructional videos, and practice questions provide appropriate technological support. The test covers all four skills, and the tasks seem to mirror typical daily life contexts and activities. That said, more transparency and discussion of this public policy tool, particularly of its target language use (TLU) domain, test specifications, test taker numbers, and equitable access, in addition to more external research studies, would go a long way in contributing to CELPIP’s reliability, fairness, and validity arguments. Paragon’s work so far has made little to no mention of the policies driving CELPIP’s test score use, while the focus on alternative methodologies does not necessarily validate CELPIP test score interpretation and use.

Finally, focusing on test tasks which may match with what a hypothetical new Canadian may need in their daily life potentially hides the values underlying the policy that demands the use of such test scores (McNamara & Ryan, 2011). There is no evidence to suggest CELPIP’s construct accounts for linguistic performance in a Canadian English-language milieu or that CELPIP scores are appropriate for Canadian immigration or citizenship applications. CELPIP also claims to test Canadian English and be 100% Canadian, thus giving an advantage to test takers already in Canada (CELPIP Director, 2019), but Mendoza (2018) argued Paragon’s claim was entirely ideological. CELPIP is also continuing to expand beyond the Canadian market as Prometric Canada, a global assessment services provider, acquired Paragon Testing Enterprises in 2021 to increase global availability of the CELPIP and contribute to UBC’s goal of creating “research-based spinoff companies” (Paragon Testing Enterprises, 2021d). To date there are no studies on CELPIP’s washback, impact, or consequences, or studies focused on policy makers’, test score users’, or test takers’ perspectives. There is potentially some influence of construct-irrelevant skills in both the listening and speaking sections as they require test takers to read instructions, answers, and prompts. This may have implications for lower-proficiency test takers who sit the CELPIP-G LS for citizenship applications or employment purposes.

Addressing these issues is critical because when a test has a political function such as controlling immigration and citizenship, “its very respectability – what we in our innocence call validity – in some ways may suit policy makers, as it can tend to disguise its function” (McNamara, 2005, p. 367).

Acknowledgement

This work was supported by an Insight Grant from the Social Sciences and Humanities Research Council (SSHRC) of Canada.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (2014). Standards for educational and psychological testing. American Educational Research Association. https://www.testingstandards.net/open-access-files.html

- Bachman, L. F., & Palmer, A. (1996). Language testing in practice: Designing and developing useful language tests. Oxford University Press.

- Bachman, L. F., & Palmer, A. (2010). Language assessment practice: Developing language assessments and justifying their use in the real world. Oxford University Press.

- The Canadian Press. (2021, April 22). Retaking language test unfair during COVID-19: Applicants to new residency pathway. CityNews Toronto. https://toronto.citynews.ca/2021/04/22/retaking-language-test-unfair-during-covid-19-applicants-to-new-residency-pathway/

- CELPIP (Director). (2019, November 12). CELPIP: Canada’s Leading English Language Test. https://www.youtube.com/watch?v=-Ry35MjUsqU

- Centre for Canadian Language Benchmarks. (2012). Canadian language benchmarks: English as a second language for adults. Citizenship and Immigration Canada. https://www.deslibris.ca/ID/235626

- Chen, M. Y., Liu, Y., & Zumbo, B. D. (2020a). A propensity score method for investigating differential item functioning in performance assessment. Educational and Psychological Measurement, 80(3), 476–498. https://doi.org/10.1177/0013164419878861

- Chen, M. Y., Wu, A. D., & Liu, Y. (2020b). Linking test-taking process to performance through mixed-effects regression models: A response process–based validation study. Journal of Psychoeducational Assessment, 38(3), 389–401. https://doi.org/10.1177/0734282919861269

- Chen, M. Y., Zumbo, B. D., & Lam, W. (2016). Testing for differential item functioning with no internal matching variable and continuous item ratings [Poster Presentation]. Language Testing Research Colloquium. https://www.paragontesting.ca/wp-content/uploads/2018/12/2016-LTRC_Chen-M.-Y.-Lam-W.-Zumbo-B.-D.pdf

- Doe, C., Douglas, S. R., & Cheng, L. (2019). Mapping language use and communication challenges to the Canadian Language Benchmarks and CELPIP-General LS within workplace contexts for Canadian new immigrants (p. 59). Paragon Testing Enterprises. https://www.paragontesting.ca/wp-content/uploads/2019/06/Final-Report_June-21.pdf

- Douglas, S. R. (2016). The relationship between lexical frequency profiling measures and rater judgements of spoken and written general English language proficiency on the CELPIP-General test. TESL Canada Journal, 32(9), 43–64. https://doi.org/10.18806/tesl.v32i0.1217

- Immigration, Refugees and Citizenship Canada. (2022a, March 3). Language Testing—Skilled Immigrants (Express Entry) [Government Website]. https://www.canada.ca/en/immigration-refugees-citizenship/services/immigrate-canada/express-entry/documents/language-requirements/language-testing.html

- Immigration, Refugees and Citizenship Canada. (2022b, February 14). New Immigration Plan to Fill Labour Market Shortages and Grow Canada’s Economy [News Releases]. https://www.canada.ca/en/immigration-refugees-citizenship/news/2022/02/new-immigration-plan-to-fill-labour-market-shortages-and-grow-canadas-economy.html

- Jezak, M., & Piccardo, E. (2017). Introduction: The Canadian Language Benchmarks and Niveaux de compétence linguistique canadiens – Canadian Language Framework in the era of globalization. In Language is the Key: The Canadian Language Benchmarks Model (pp. 7–30). University of Ottawa Press/Les Presses de l’Université d’Ottawa. https://doi.org/10.26530/OAPEN_631397

- Juha, J. (2021, April 29). Ottawa offers migrant workers new path to permanent residency. The London Free Press. https://lfpress.com/news/local-news/ottawa-offers-migrant-workers-new-path-to-permanent-residency

- Li, Z., Chen, M. Y., & Banerjee, J. (2020). Using corpus analyses to help address the DIF interpretation: Gender differences in standardized writing assessment. Frontiers in Psychology, 11, 1–11. https://doi.org/10.3389/fpsyg.2020.01088

- Lin, Y.-M., & Chen, M. Y. (2020). Understanding writing quality change: A longitudinal study of repeaters of a high-stakes standardized English proficiency test. Language Testing, 37(4), 523–549. https://doi.org/10.1177/0265532220925448

- Li, Z., & Volkov, A. (2017). “To whom it may concern”: A study on the use of lexical bundles in email writing tasks in an English proficiency test. TESL Canada Journal, 34(3), 54–75. https://doi.org/10.18806/tesl.v34i3.1273

- McNamara, T. (2005). 21st century Shibboleth: Language tests, identity and intergroup conflict. Language Policy, 4(4), 351–370. https://doi.org/10.1007/s10993-005-2886-0

- McNamara, T., & Ryan, K. (2011). Fairness versus justice in language testing: The place of English literacy in the Australian citizenship test. Language Assessment Quarterly, 8(2), 161–178. https://doi.org/10.1080/15434303.2011.565438

- Mendoza, A. (2018). Measuring intra- and international linguistic competence: Appropriation of WEs and ELF discourse in the commercials for two standardized English tests. Critical Inquiry in Language Studies, 15(3), 187–204. https://doi.org/10.1080/15427587.2017.1388171

- Nakache, D., & Dixon-Perera, L. (2015). Temporary or transitional? Migrant workers’ experiences with permanent residence in Canada. Institute for Research on Public Policy. https://irpp.org/research-studies/temporary-or-transitional/

- Paragon Testing Enterprises. (2018). CELPIP test 2018 annual report (p. 22). https://www.paragontesting.ca/wp-content/uploads/2019/02/CELPIP-Test-Report-2018-min.pdf

- Paragon Testing Enterprises. (2020, April 8). Download your official online score report! CELPIP. https://www.celpip.ca/download-your-official-online-score-report/

- Paragon Testing Enterprises. (2021a). CELPIP annual report of 2021 test takers. https://www.paragontesting.ca/wp-content/uploads/2022/08/CELPIP-Test-Report-2021-1.pdf

- Paragon Testing Enterprises. (2021b). CELPIP test review report I: Test content, development, and validation (No. 2021–01; p. 22). https://www.paragontesting.ca/wp-content/uploads/2021/05/CELPIP_Test_Report_I_2021.pdf

- Paragon Testing Enterprises. (2021c). CELPIP test review report II: Psychometric properties (No. 2021–2; p. 23). https://www.paragontesting.ca/wp-content/uploads/2021/05/CELPIP_Test_Report_II_2021.pdf

- Paragon Testing Enterprises. (2021d, April 7). Prometric Canada® acquires paragon testing enterprises. Paragon Testing Enterprises. https://www.paragontesting.ca/prometric-canada-acquires-paragon-testing-enterprises/

- Paragon Testing Enterprises. (n.d.-a). Overview. CELPIP. Retrieved November 23, 2021, from https://www.celpip.ca/take-celpip/overview/

- Paragon Testing Enterprises. (n.d.-b). Test Format. CELPIP. Retrieved September 10, 2022, from https://www.celpip.ca/take-celpip/test-format/

- Paragon Testing Enterprises. (n.d.-c). Test Results. CELPIP. Retrieved February 28, 2022, from https://www.celpip.ca/take-celpip/test-results/

- Paragon Testing Enterprises. (n.d.-d). Who Accepts CELPIP. CELPIP. Retrieved September 10, 2022, from https://www.celpip.ca/take-celpip/score-users/

- Rodriguez, J. (2021, May 4). Thousands left out of plan to give temporary workers permanent residency: Migrant rights network. CTV News. https://www.ctvnews.ca/canada/thousands-left-out-of-plan-to-give-workers-permanent-residency-migrant-rights-network-1.5414144

- Wu, A. D., Chen, M. Y., & Stone, J. E. (2018). Investigating how test-takers change their strategies to handle difficulty in taking a reading comprehension test: Implications for score validation. International Journal of Testing, 18(3), 253–275. https://doi.org/10.1080/15305058.2017.1396464

- Wu, A. D., Park, M., & Hu, S.-F. (2021). Gender fairness in immigration language testing: A study of differential options functioning on the CELPIP-G reading multiple-choice questions. International Journal of Quantitative Research in Education, 5(3), 244–264. https://doi.org/10.1504/IJQRE.2021.119811

- Wu, A. D., & Stone, J. E. (2016). Validation through understanding test-taking strategies: An illustration with the CELPIP-General reading pilot test using structural equation modeling. Journal of Psychoeducational Assessment, 34(4), 362–379. https://doi.org/10.1177/0734282915608575

- Wu, A. D., Stone, J. E., & Liu, Y. (2016). Developing a validity argument through abductive reasoning with an empirical demonstration of the latent class analysis. International Journal of Testing, 16(1), 54–70. https://doi.org/10.1080/15305058.2015.1057826