Abstract

Success for All (SFA) is a comprehensive whole-school approach designed to help high-poverty elementary schools increase the reading success of their students. It is designed to ensure success in grades K-2 and then build on this success in later grades. SFA combines instruction emphasizing phonics and cooperative learning, one-to-small group tutoring for students who need it in the primary grades, frequent assessment and regrouping, parent involvement, distributed leadership, and extensive training and coaching. Over a 33-year period, SFA has been extensively evaluated, mostly by researchers unconnected to the program. This quantitative synthesis reviews the findings of these evaluations. Seventeen US studies meeting rigorous inclusion standards had a mean effect size of +0.24 (p < .05) on independent measures. Effects were largest for low achievers (ES= +0.54, p < .01). Although outcomes vary across studies, mean impacts support the effectiveness of Success for All for the reading success of disadvantaged students.

The reading performance of students in the United States is a source of deep concern. American students perform at levels below those of many peer nations on the Program for International Student Assessment (PISA; OECD, 2019). Most importantly, there are substantial gaps in reading skills between advantaged and disadvantaged students, between different ethnic groups, and between proficient speakers of English and English learners (National Center for Education Statistics [NCES], 2019). These gaps lead to serious inequalities in the American economy and society. America’s reading problem is far from uniform. On PISA Reading Literacy (OECD, 2019), American 15-year-old students in schools with fewer than 50% of students qualifying for free lunch scored higher than those in any country. The problem in the United States is substantially advancing the reading skills of students in high-poverty schools. The students in these schools are capable of learning at high levels, but they need greater opportunities and support to fully realize their potential.

Research is clear that students who start off with poor reading skills are unlikely to recover without significant assistance (Cunningham & Stanovich, 1997; National Reading Panel, 2000). A study by Lesnick et al. (2010) found that students reading below grade level in third grade were four times more likely than other students to drop out before high school graduation.

Evidence about the role of early reading failure in long-term school failure (e.g. National Reading Panel, 2000) has led to a great deal of research and development focused on ensuring that students succeed in reading in the elementary grades. Recent reviews of programs for struggling readers by Neitzel, Lake, et al. (2020) and Wanzek et al. (2016) have identified many effective approaches, especially tutoring and professional development strategies. However, in high-poverty schools in which there may be many students at risk of reading failure, a collection of individual approaches may be insufficient or inefficient. In such schools, coordinated whole-school approaches may be needed to ensure that all students succeed in reading.

Success for All

Success for All (SFA) was designed and first implemented in 1987 in an attempt to serve very disadvantaged schools, in which it is not practically possible to serve all struggling readers one at a time. The program emerged from research at Johns Hopkins University, and since 1996 has been developed and disseminated by a nonprofit organization, the Success for All Foundation (SFAF). SFA was designed from the outset to provide research-proven instruction, curriculum, and school organization to schools serving many disadvantaged students.

Theory of Action

Success for All was initially designed in a collaboration between researchers at Johns Hopkins University (JHU) and leaders of the Baltimore City Public Schools (BCPS), whose high-poverty schools had large numbers of students falling behind in reading in the early elementary grades, losing motivation, and developing low expectations for themselves. Ultimately, these students entered middle school lacking basic skills and, in too many cases, no longer believing that success was possible. The JHU-BCPS team was charged with developing a whole-school model capable of ensuring success from the beginning of students’ time in school. The theory of action the team developed focused first on ensuring that students were successful in reading in first grade, providing a curriculum with a strong emphasis on phonemic awareness and phonics (National Reading Panel, 2000; Shaywitz & Shaywitz, 2020; Snow et al., 1998), and using proven instructional methods such as cooperative learning (Slavin, 2017) and effective classroom management methods (e.g. Good & Brophy, 2018). Students in grades 1–5 are grouped by reading level across grade lines, so that each reading teacher has one reading group. For example, a reading group at the 3–1 level (third grade, first semester) might contain some high-performing second graders, many third graders, and some low-performing fourth graders, all reading at the 3–1 level. Students in the primary grades, but particularly first graders, may receive daily, 30-minute computer-assisted tutoring, usually in groups of four, to enable most struggling readers to keep up (Neitzel, Lake, et al., 2020; Wanzek et al., 2016).

The core focus of the SFA model is to make certain that every student succeeds in basic reading. In addition to the reading instruction and tutoring elements, students who need them can receive services to help them with attendance, social-emotional development, parent involvement, and other needs. After students reach the 2–1 reading level, they continue to receive all program services except tutoring. The upper-elementary program is an adaptation of Cooperative Integrated Reading and Composition (CIRC; Stevens et al., 1987). The design of the SFA program at reading levels 3–5 is focused on cooperative learning, comprehension, metacognitive skills, and writing.

The theory of action for SFA, therefore, assumes that students must start with success, whatever this takes, in the expectation that early success builds a solid base for later learning, positive expectations for future success, and motivation to achieve. However, success in the early grades is seen as necessary, but not sufficient. Evidence on the difficulties of ensuring long-term maintenance of reading gains from highly successful first grade tutoring programs (e.g. Blachman et al., 2014) demonstrates that early-grade success in reading cannot be assumed to ensure lifelong reading success. The designers of SFA intended to build maintenance of first-grade effects by continuing high-quality instruction and classroom organization after an intensive early primary experience sets students up for success. Beyond reading and tutoring, the design seeks to build on students’ strengths by involving their parents, teaching social-emotional skills, and ensuring high attendance.

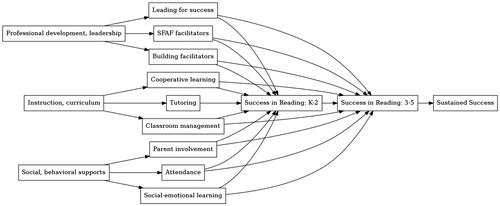

Figure 1 summarizes the SFA theory of action. At the center is success in reading in grades K-2, and then 3–5. All other components of the model support these outcomes. Only tutoring is limited to Grades 1 and 2. Other elements continue through the grades.

The logic of Success for All is much like that of response to intervention (Fuchs & Fuchs, 2006), now often called Multi-Tier Systems of Support (MTSS). That is, teachers receive extensive professional development and in-class coaching to help them use proven approaches to instruction and curriculum. Students who do not succeed despite enhanced teaching may receive one-to-small group or, if necessary, one-to-one tutoring. Ongoing assessment, recordkeeping, and flexible grouping are designed to ensure that students receive instruction and supportive services at their current instructional level, as they advance toward higher levels. Program components focus on parent involvement, classroom management, attendance, and social-emotional learning, to solve problems that may interfere with students’ reading and broader school success. Each school has a full-time facilitator to help manage professional development and other program elements, some number of paraprofessional tutors, and coaches from the nonprofit Success for All Foundation, who visit schools approximately once a month to review the quality of implementation, review data, and introduce additional components.

Program Components

Success for All is a whole-school model that addresses instruction, particularly in reading, as well as schoolwide issues related to leadership, attendance, school climate, behavior management, parent involvement, and health (see Slavin et al., 2009, for more detail). The program provides specific teacher and student materials and professional development to facilitate use of proven practices in each program component.

Literacy Instruction

Learning to read and write effectively is essential for success in school. Success for All provides in-depth support for reading acquisition. Instructional practices, teacher’s guides, student materials, assessments, and job-embedded professional development are combined to create a comprehensive reading program.

The Success for All reading program is based on research and effective practices in beginning reading (e.g. National Reading Panel, 2000), and appropriate use of cooperative learning to enhance motivation, engagement, and opportunities for cognitive rehearsal (Slavin, 2017; Stevens et al., 1987).

Regrouping

As noted earlier, students in grades one and up are regrouped for reading. The students are assigned to heterogeneous, age-grouped classes most of the day, but during a regular 90-minute reading period they are regrouped by reading performance levels into reading classes of students all at the same level. For example, a reading class taught at the 2–1 level might contain first, second, and third grade students all reading at the same level. The reading classes are smaller than homerooms because tutors and other certified staff (such as librarians or art teachers) teach reading during this common reading period.

Regrouping allows teachers to teach the whole reading class without having to break the class into reading groups. This greatly reduces the time spent on seatwork and increases direct instruction time. The regrouping is a form of the Joplin Plan, which has been found to increase reading achievement in the elementary grades (Slavin, 1987).
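The regrouping logic described above can be illustrated with a minimal Python sketch. The roster, the student names, and the function `regroup_for_reading` are hypothetical and are not part of the SFA materials. Reading levels are written with a plain hyphen (for example, "2-1" for second grade, first semester).

```python
from collections import defaultdict

# Hypothetical roster: (student name, homeroom grade, assessed reading level).
roster = [
    ("Ana", 1, "2-1"),
    ("Ben", 2, "2-1"),
    ("Cy", 3, "2-1"),
    ("Dee", 2, "1-2"),
    ("Eli", 3, "3-1"),
]


def regroup_for_reading(students):
    """Group students by reading level, ignoring their homeroom grade."""
    classes = defaultdict(list)
    for name, grade, level in students:
        classes[level].append((name, grade))
    return dict(classes)


reading_classes = regroup_for_reading(roster)

# The 2-1 reading class draws students from three different homeroom grades.
grades_in_2_1 = sorted(grade for _, grade in reading_classes["2-1"])
```

In this illustration the 2-1 class contains a first, a second, and a third grader, which mirrors the cross-grade example given in the text. An actual schedule would also have to balance class sizes across the available reading teachers, which this sketch does not attempt.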

Preschool and Kindergarten

Most Success for All schools provide a half-day preschool and/or a full-day kindergarten for eligible students. Research supports a balance between development of language, school skills, and social skills (Chambers et al., 2016). The SFA preschool and kindergarten programs provide students with specific materials and instruction to give them a balanced and developmentally appropriate learning experience. The curriculum emphasizes the development and use of language. It provides a balance of academic readiness and nonacademic music, art, and movement activities in a series of thematic units. Readiness activities include use of language development activities and Story Telling and Retelling (STaR), which focuses on the development of concepts about print as well as vocabulary and background knowledge. Structured phonemic awareness activities prepare students for success in early reading. Big books as well as oral and written composing activities allow students to develop concepts of print and story structure. Specific oral language experiences are used to further develop receptive and expressive language.

Curiosity Corner, Success for All’s pre-kindergarten program, offers theme-based units designed to support a language-rich half-day program for 3- and 4-year olds that supports the development of social emotional skills and early literacy.

KinderCorner offers a full-day theme-based kindergarten program designed to support the development of oral language and vocabulary, early literacy, and social and emotional skills needed for long term success. KinderCorner provides students with materials and instruction designed to get them talking using cooperative discussion with an integrated set of activities. Opportunities for imaginative play increase both self-regulation and language. Formal reading instruction is phased in during kindergarten. Media-based phonemic awareness and early phonics ease students into reading, and simple but engaging phonetically regular texts are used to provide successful application of word synthesis skills in the context of connected text.

Beginning Reading

Reading Roots is a beginning reading program for grades K-1. It has a strong focus on phonemic awareness, phonics, and comprehension (Shaywitz & Shaywitz, 2020; Snow et al., 1998). It uses as its base a series of phonetically regular but interesting minibooks and emphasizes repeated oral reading to partners as well as to the teacher. The minibooks begin with a set of “shared stories,” in which part of a story is written in small type (read by the teacher) and part is written in large type (read by the students). The student portion uses a phonetically controlled vocabulary. Taken together, the teacher and student portions create interesting, worthwhile stories. Over time, the teacher portion diminishes and the student portion lengthens, until students are reading the entire book. This scaffolding allows students to read interesting stories when they only know a few letter sounds.

Letters and letter sounds are introduced in an active, engaging set of activities that begins with oral language and moves into written symbols. Individual sounds are integrated into a context of words, sentences, and stories. Instruction is provided in story structure, specific comprehension skills, metacognitive strategies for self-assessment and self-correction, and integration of reading and writing. Brief video segments use animations to reinforce letter sounds, puppet skits to model sound blending, and live action skits to introduce key vocabulary.

Adaptations for Spanish Speakers

Spanish bilingual programs use an adaptation of Reading Roots called Lee Conmigo (“Read With Me”). Lee Conmigo uses the same instructional strategies as Reading Roots, but is built around shared stories written in Spanish. SFA also has a Spanish-language kindergarten program, Descubre Conmigo (“Discover with Me”). Students who receive Lee Conmigo typically transition to the English SFA program in Grade 2 or 3, using special materials designed to facilitate transition. Schools teaching English learners only in English are provided with professional development focused on supporting the language and reading development of English learners.

Upper Elementary Reading

When students reach the second grade reading level, they use a program called Reading Wings, an adaptation of Cooperative Integrated Reading and Composition (CIRC) (Stevens et al., 1987). Reading Wings uses cooperative learning activities built around story structure, prediction, summarization, vocabulary building, decoding practice, and story-related writing. Students engage in partner reading and structured discussion of stories or novels, and work toward mastery of the vocabulary and content of the story in teams. Story-related writing is also shared within teams. Cooperative learning both increases students' motivation and engages students in cognitive activities known to contribute to reading comprehension, such as elaboration, summarization, and rephrasing (see Slavin, 2017). Research on CIRC has found it to significantly increase students' reading comprehension and language skills (Stevens et al., 1987).

Reading Tutors

A critical element of the Success for All model is the use of tutoring, the most effective intervention known for struggling readers (Neitzel, Lake, et al., 2020; Wanzek et al., 2016). In the current version of SFA, computer-assisted tutoring is provided by well-qualified paraprofessionals to groups of four children with reading problems. However, students with very serious problems may receive one-to-two or one-to-one tutoring. The tutoring occurs in 30-minute sessions during times other than reading or math periods.

Leading for Success

Schools must have systems that enable them to assess needs, set goals for improvement, make detailed plans to implement effective strategies, and monitor progress on a child by child basis. In Success for All, the tool that guides this schoolwide collaboration is called Leading for Success.

Leading for Success is built around a distributed leadership model, and engages all school staff in a network of teams that address key areas targeted for continuous improvement. The leadership team manages the Leading for Success process and convenes the staff at the beginning of the school year and at the end of each quarter to assess progress and set goals and agendas for next steps. Staff members participate in different teams to address areas of focus that involve schoolwide supports for students and families as well as support for improving implementation of instructional strategies to increase success.

Schoolwide Solutions Teams

A Parent and Family Involvement Team works to build good relations with parents and to increase their involvement in the school. Team members organize “welcome” visits for new families, opportunities for informal chats among parents and school staff members, workshops for parents on supporting achievement and general parenting issues, and volunteer opportunities. Solutions teams also focus on improving attendance and intervening with students having learning and behavioral problems.

Program Facilitator

A program facilitator works at each school to oversee (with the principal) the operation of the Success for All model. The facilitator helps plan the Success for All program, helps the principal with scheduling, and visits classes and tutoring sessions frequently to help teachers and tutors with individual problems. He or she works directly with the teachers on implementation of the curriculum, classroom management, and other issues, helps teachers and tutors deal with any behavior problems or other special problems, and coordinates the activities of the Family Support Team with those of the instructional staff.

Teachers and Teacher Training

Professional development in Success for All emphasizes on-site coaching after initial training. Teachers and tutors receive detailed teacher's manuals supplemented by three days of in-service at the beginning of the school year, followed by classroom observations and coaching throughout the year. For classroom teachers of grades 1 and above and for reading tutors, training sessions focus on implementation of the reading program (either Reading Roots or Reading Wings), and their detailed teacher’s manuals cover general teaching strategies as well as specific lessons. Preschool and kindergarten teachers and aides are trained in strategies appropriate to their students' preschool and kindergarten models. Tutors later receive two additional days of training on tutoring strategies and reading assessment.

Throughout the year, in-class coaching and in-service presentations focus on such topics as classroom management, instructional pace, and cooperative learning. Online coaching is also used after coaches and teachers have built good relationships.

Special Education

Every effort is made to deal with students' learning problems within the context of the regular classroom, as supplemented by tutors. Tutors evaluate students' strengths and weaknesses and develop strategies to teach in the most effective way. In some schools, special education teachers work as tutors and reading teachers with students identified as learning disabled, as well as other students experiencing learning problems who are at risk for special education placement. One major goal of Success for All is to keep students with learning problems out of special education if at all possible, and to serve any students who do qualify for special education in a way that does not disrupt their regular classroom experience (see Borman & Hewes, 2002).

Consistency and Variation in Implementation

Success for All is designed to provide a consistent set of elements to each school that selects it. When SFAF begins working with a school, school and district staff are asked to agree to implement a set of program elements that the developers have found to be most important. These include the following:

A full-time facilitator employed by the school. Typically, the facilitator is an experienced teacher, Title I master teacher, or vice principal already on the school staff, whose roles and responsibilities are revised to focus on within-school management of the SFA process.

At least one full-time tutor, usually a teaching assistant, to work primarily with first graders who are struggling in reading.

Implementation of the SFA KinderCorner (or Descubre Conmigo) program in kindergarten, Reading Roots (or Lee Conmigo) in grades 1 and 2, and Reading Wings in grades 2 and above (for students who have tested out of Reading Roots). KinderCorner and Reading Roots are complete early reading approaches, but Reading Wings is built around widely used traditional or digital texts and/or trade books selected by schools.

Professional development by SFA coaches, consisting of 2 days for all teachers, plus monthly on-site visits by SFA coaches.

Regrouping for reading. During a daily 90-minute reading period, students are regrouped for reading starting in grade 1, as described above.

These elements are considered essential to SFA, and SFAF does not engage with schools that decline to implement and maintain all of them. After program inception, schools sometimes cannot keep to their initial commitments, and some accommodations have to be made. For example, a school under financial pressure may have to use a half-time facilitator rather than a full-time one.

With respect to other elements of SFA, such as leadership, parent involvement, and special education policies, SFAF negotiates variations to accommodate school characteristics and district policies. As a result of its strong emphasis on consistency, the program elements believed to be most essential to reading outcomes do not vary significantly from school to school.

Evolution of Program Components over Time

The basic design and operation of Success for All has remained constant for its entire 33-year history, but there has been constant change in the specific components. Changes are introduced because of learnings from experiences in schools, demand from schools and districts, findings of research, external grants, and advances in technology (see Peurach, 2011). The Reading Roots (K-1) reading program, for example, developed technology to help teachers present lessons and manage regular assessments. Reading Wings (2–5) has increasingly focused on the teaching of reading comprehension using metacognitive strategies. The tutoring program has evolved substantially. The main driver has been a quest for cost-effectiveness, as tutoring is expensive. Initially, tutoring was done by certified teachers one-to-one. However, this was not economically sustainable for most schools, so in the mid-1990s, SFAF developed a new model appropriate for use by teaching assistants. In the 2000s, SFAF began to introduce computer-assisted tutoring, taking advantage of increasing availability of computers in schools. SFAF then began to develop and evaluate small group tutoring. In 2016, SFAF developed a computer-assisted small-group model that teaching assistants could use reliably with success in groups of four. This model requires one-eighth the personnel costs per tutored student of the original model, and gets equal outcomes, so it allows schools to serve many more students for the same cost (Madden & Slavin, 2017).

Some whole programs have been added, to enable SFAF to serve additional populations. SFAF added a preschool program in the mid-1990s, and added Spanish bilingual and sheltered English programs around the same time. SFAF added the Leading for Success component in the 2000s, to improve schools’ capacities to distribute leadership among their staff.

Any program as comprehensive as Success for All has to evolve to keep up with the times and to constantly improve its outcomes and reduce its cost and complexity. Success for All has always learned from its partners and its own staff, and incorporates these learnings continuously, in ways large and small.

Research on Success for All

Success for All has been in existence for 33 years, and currently (2020) provides services to about 1,000 schools in the United States. About half of these use the full program, and half use major components (most often, the K-2 reading program). The program has placed a strong emphasis on research and evaluation, and has always carried out or encouraged experimental or quasi-experimental evaluations to learn how the program is working and what results it is achieving for which types of students and settings. Studies of Success for All have usually been done by third party evaluators (i.e. researchers unrelated to the program developers). They have taken place in high-poverty schools and districts throughout the United States.

The present synthesis of research on Success for All includes every study of reading outcomes carried out in US schools that evaluated the program using methods that meet a set of inclusion standards described below. The purpose of the synthesis is to summarize the evidence and to identify moderators of program effects, and then to consider the implications of the findings for theory, practice, and policy.

The Need for a Meta-Analysis on SFA

Over the past 33 years, SFA programs have been widely applied and evaluated throughout the United States to help youngsters with their reading progress. However, most reports focus on a single evaluation of the intervention rather than synthesizing all high-quality experiments over time. A meta-analysis of SFA studies was reported as part of a meta-analysis of comprehensive school reforms by Borman et al. (2003), and another meta-analysis was part of a synthesis of research on elementary reading programs by Slavin et al. (2009). SFA outcomes for struggling readers were included in a synthesis on that topic by Neitzel, Lake, et al. (2020). However, the present synthesis is the first to focus in detail on Success for All alone, enabling much more of a focus on its evidence base than was possible in reviews of many programs. Also, the review uses up-to-date methods for quantitative synthesis (e.g. Borenstein et al., 2009; Pigott & Polanin, 2020).

The main objective of the current meta-analysis is to investigate the average impact of SFA on reading achievement. The three main research questions are as follows:

What is the overall effect of SFA on student reading achievement?

Are there differential impacts of SFA on the reading achievement of different subgroups of students?

What study features moderate the effects of SFA on reading achievement?

Methods

Data Sources

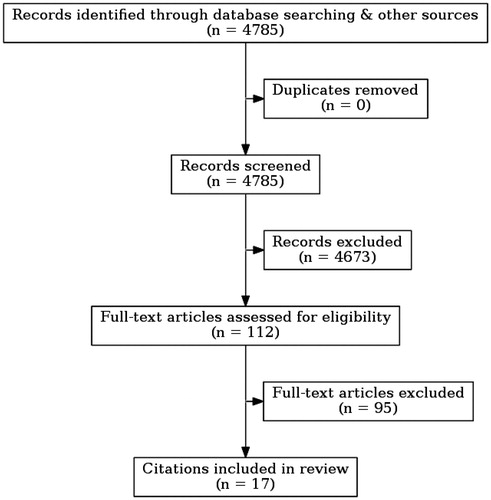

The document retrieval process consisted of several steps (see Figure 2). The research team employed various strategies to identify all possible studies that have been done to evaluate reading outcomes of SFA. First, the team carried out a broad literature search. Electronic searches were made of educational databases (ERIC, PsycINFO, Dissertation Abstracts) using different combinations of key words (for example, “Success for All,” “SFA,” “reading,” “Comprehensive School Reform”) and the years 1989–2020. In addition, previous meta-analyses on reading interventions were searched and the reference lists of these meta-analyses were examined to identify any SFA studies. The authors contacted the Success for All Foundation, the developer of the program, to identify studies that might have been missed in the search, especially unpublished studies. Articles from any published or unpublished source that met the inclusion standards were independently read and examined by at least two researchers. Any disagreements in coding were resolved by discussion, and additional researchers read any articles on which there remained disagreements.

Inclusion and Exclusion Criteria

Criteria for inclusion and exclusion of studies were similar to those of the What Works Clearinghouse (WWC, 2020). They are as follows.

The studies evaluated SFA programs used in elementary schools. Studies had to appear between 1989 and 2020.

Studies had to be of students who started SFA in grades pre-K, K, or 1, as most tutoring (a key element of the theory of action) takes place in first grade.

The studies compared children taught in schools using SFA with those in control schools using an alternative program or standard methods.

Random assignment or matching with appropriate adjustments for any pretest differences (e.g. analyses of covariance) had to be used. In randomized experiments, a number of schools volunteered to participate, and half were assigned at random to use SFA, while the remaining schools continued using existing methods. In matched studies, schools assigned to use SFA were matched in advance with control schools on factors such as pretests, poverty indicators, ethnicity, and school size. Post-hoc studies in which matching was done after experimental and control schools completed implementation were excluded. Studies without control groups, such as pre-post comparisons and comparisons to “expected” scores, were also excluded.

Pretest data had to be provided. Studies with pretest differences of more than 25% of a standard deviation were excluded, as required by WWC (2020) standards.

The dependent measures included quantitative measures of reading performance not created by SFA developers or researchers.

A minimum study duration of one school year was required.

Studies had to have at least two schools in each treatment group. This criterion avoided having treatment and school effects be completely confounded.

Studies had to report results at the end of the intervention period (for the main analyses) or interim results (for exploratory analyses examining impacts over time).
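The inclusion criteria above amount to a screening checklist, which the following Python sketch makes explicit. This is an illustration only, not the authors' screening instrument: the `Study` record, its field names, and the design labels are hypothetical, and only the criteria that can be checked mechanically are represented.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass
class Study:
    # All field names are hypothetical; they mirror the criteria in the text.
    year: int
    start_grade: str                  # "pre-K", "K", "1", "2", ...
    has_control_group: bool
    design: str                       # "randomized", "matched_prior", "matched_post_hoc"
    pretest_diff_sd: Optional[float]  # pretest gap in SD units; None if not reported
    measure_made_by_developers: bool
    duration_school_years: float
    schools_per_arm: int              # smaller of the treatment and control school counts


def meets_inclusion_standards(s: Study) -> bool:
    """Return True only if every coded criterion is satisfied."""
    return (
        1989 <= s.year <= 2020
        and s.start_grade in {"pre-K", "K", "1"}
        and s.has_control_group
        and s.design in {"randomized", "matched_prior"}
        and s.pretest_diff_sd is not None
        and abs(s.pretest_diff_sd) <= 0.25
        and not s.measure_made_by_developers
        and s.duration_school_years >= 1
        and s.schools_per_arm >= 2
    )


# Illustrative records, not real studies.
eligible = Study(2005, "K", True, "randomized", 0.10, False, 3, 20)
too_unbalanced = replace(eligible, pretest_diff_sd=0.40)
post_hoc = replace(eligible, design="matched_post_hoc")
```

Here `eligible` passes every check, while `too_unbalanced` fails the pretest-equivalence criterion and `post_hoc` fails the design criterion.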

Coding

Studies that met the inclusion criteria were coded by one of the study team members and verified by another study team member. The fully coded data are available on the Johns Hopkins University Data Archive (Neitzel, Cheung, et al., 2020). Data to be coded beyond outcome measures, sample sizes, and effect sizes included substantive factors, methodological factors, and extrinsic factors. These are described below.

Substantive Factors

Substantive factors describe the intervention, population, and context of the study. These coded factors included duration of intervention, student grade level, and population description (race, ethnicity, English learner status, and free/reduced price meals status). Schools were categorized as being primarily African-American, Hispanic, or White if more than half of students were of that race (or if there were subgroup analyses by race). They were considered high-poverty if at least 66% of students qualified for free lunch.
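The two school-level coding rules in this paragraph can be written as simple threshold checks. The sketch below is illustrative only; the function names and the enrollment shares are hypothetical, and the subgroup-analysis exception mentioned in the text is not modeled.

```python
def majority_group(enrollment_shares):
    """Return the group that makes up more than half of students, or None."""
    for group, share in enrollment_shares.items():
        if share > 0.5:
            return group
    return None


def is_high_poverty(free_lunch_share):
    """High-poverty means at least 66% of students qualify for free lunch."""
    return free_lunch_share >= 0.66


# Illustrative shares, not data from any included study.
school = {"African-American": 0.70, "Hispanic": 0.20, "White": 0.10}
coded_group = majority_group(school)
coded_poverty = is_high_poverty(0.80)
```

A school with no group above one half would receive no primary-group code under this rule.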

Methodological Factors

Methodological factors included the research design (randomized or quasi-experimental) and the type of outcome. Outcomes were categorized into three groups: general reading/comprehension, fluency, and alphabetics (WWC, 2014). Alphabetics includes subskills of reading such as letter identification and phonics outcomes, fluency includes reading accuracy and reading with expression, and comprehension outcomes assess the ability to understand connected text. General reading includes all types of reading outcomes. Comprehension is weighted heavily in general reading measures, so we combined general reading and comprehension scores into a single factor. The reading posttest scores used as the main outcome measures were those reported from the final year of implementation for a given cohort. For example, in a 3-year study with a K-2 and a 1-3 cohort, the third-year scores in grades 2 and 3 would be the main outcomes, and these would be averaged to get a study mean.

Extrinsic Factors

Extrinsic factors coded included publication status, year of publication, and evaluator independence. Studies were considered independent if the list of authors did not include any of the original developers of SFA.

Statistical Analysis

The effect sizes of interest in this study are standardized mean differences. These are effect sizes that quantify the difference between the treatment and control group on outcome measures, adjusted for covariates, divided by standard deviations. This allows the magnitude of impacts to be compared across interventions and outcome measures. Effect sizes were calculated as the difference between adjusted posttest scores for treatment and control students, divided by the unadjusted standard deviation of the control group. Alternative procedures were used to estimate effect sizes when unadjusted posttests or unadjusted standard deviations were not reported (Lipsey & Wilson, 2001). Studies with cluster assignments that used student-level analysis rather than HLM or other multi-level modeling were re-analyzed to estimate significance while accounting for clustering (Hedges, 2007a).
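As a minimal sketch of the calculation just described, the function below divides the covariate-adjusted treatment-control difference by the unadjusted control-group standard deviation. The function name and the numbers in the example are illustrative assumptions, not values from any included study.

```python
def effect_size(adj_mean_treatment: float,
                adj_mean_control: float,
                sd_control_unadjusted: float) -> float:
    """Standardized mean difference: adjusted posttest difference / unadjusted control SD."""
    if sd_control_unadjusted <= 0:
        raise ValueError("control-group standard deviation must be positive")
    return (adj_mean_treatment - adj_mean_control) / sd_control_unadjusted


# Hypothetical scale scores: a 4-point adjusted difference on a test with SD = 16.
example_es = effect_size(52.0, 48.0, 16.0)
print(example_es)  # 0.25
```

Using the unadjusted standard deviation in the denominator keeps the effect size on the scale of the raw outcome, so that studies with different covariate sets remain comparable.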

In meta-analysis models, studies were weighted to give more weight to studies with the greatest precision (Hedges et al., 2010). In practice, this primarily involves weighting for sample size. Weights for each study were calculated according to the following formula:

$$w_j = \frac{1}{k_j\left(\bar{v}_j + \tau^2\right)}$$

where $w_j$ is the weight for study j, $k_j$ is the number of findings in study j, $\bar{v}_j$ is the average finding-level variance for study j, and $\tau^2$ is the between-study variance in the study-average effect sizes (Hedges et al., 2010; Tipton, 2015). Variance estimates were adjusted for studies with cluster-level assignment, using the total variance for unequal cluster sample sizes (Hedges, 2007b).
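The weighting scheme can be illustrated with a short sketch. It assumes the correlated-effects weights of Hedges et al. (2010), in which each study's weight is the inverse of the number of findings times the sum of the average finding-level variance and the between-study variance. The function name and input values are hypothetical.

```python
def study_weight(n_findings: int, mean_variance: float, tau_squared: float) -> float:
    """Weight for one study: 1 / (k_j * (average finding variance + between-study variance))."""
    if n_findings < 1:
        raise ValueError("a study needs at least one finding")
    return 1.0 / (n_findings * (mean_variance + tau_squared))


# Two made-up studies with the same precision per finding: the one that
# reports four findings gets one quarter of the per-finding weight.
w_single = study_weight(1, 0.0625, 0.0625)
w_multi = study_weight(4, 0.0625, 0.0625)
print(w_single, w_multi)  # 8.0 2.0
```

Dividing by the number of findings keeps a study that reports many outcomes from dominating the average simply because it contributes more rows to the data set.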

We used a multivariate meta-regression model with robust variance estimation (RVE) to conduct the meta-analysis (Hedges et al., 2010). This approach has several advantages. First, our data included multiple effect sizes per study, and robust variance estimation accounts for this dependence without requiring knowledge of the covariance structure (Hedges et al., 2010). Second, this approach allows for moderators to be added to the meta-regression model and calculates the statistical significance of each moderator in explaining variation in the effect sizes (Hedges et al., 2010). Tipton (2015) expanded this approach by adding a small-sample correction that prevents inflated Type I errors when the number of studies included in the meta-analysis is small or when the covariates are imbalanced. We estimated three meta-regression models. First, we estimated a null model to produce the average effect size without adjusting for any covariates. Second, we estimated a meta-regression model with the identified moderators of interest and covariates. Both the first and second models included only the outcomes at the end of the intervention period. Third, we estimated an exploratory meta-regression model including the same identified moderators of interest and covariates, but that added results from interim reports, to better explore the change in impact over time. Both of the meta-regression models took the general form:

$$T_{ij} = \beta_0 + \boldsymbol{\beta}_1 \mathbf{X}_{1ij} + \boldsymbol{\beta}_2 \mathbf{X}_{2j} + \eta_j + \varphi_{ij}$$

where $T_{ij}$ is the effect size estimate i in study j, $\beta_0$ is the grand mean effect size for all studies, $\boldsymbol{\beta}_1$ is a vector of regression coefficients for the covariates at the effect size level, $\mathbf{X}_{1ij}$ is a vector of covariates at the effect size level, $\boldsymbol{\beta}_2$ is a vector of regression coefficients at the study level, $\mathbf{X}_{2j}$ is a vector of covariates at the study level, $\eta_j$ is the study-specific random effect, and $\varphi_{ij}$ is the effect size specific random effect. The $\mathbf{X}_{1ij}$ and $\mathbf{X}_{2j}$ included substantive, methodological, and extrinsic factors, as outlined above. All moderators and covariates were grand-mean centered to facilitate interpretation of the intercept. All reported mean effect sizes come from this meta-regression model, which adjusts for potential moderators and covariates. The packages metafor (Viechtbauer, 2010) and clubSandwich (Pustejovsky, 2020) were used to estimate all random-effects models with RVE in the R statistical software (R Core Team, 2020).
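To make the idea of robust variance estimation concrete, the sketch below handles only the simplest case: an intercept-only (null) model in which every effect size in a study shares that study's weight. It uses the basic sandwich estimator of Hedges et al. (2010) and omits both the moderators and the small-sample correction of Tipton (2015), so it is not a substitute for the metafor and clubSandwich analyses reported here. The function name and the data are hypothetical.

```python
import math
from typing import Sequence, Tuple


def rve_mean(studies: Sequence[Tuple[Sequence[float], float]]) -> Tuple[float, float]:
    """Weighted mean effect size and its cluster-robust standard error.

    Each item is (effect sizes from one study, weight applied to each of them).
    """
    total_weight = sum(w * len(effects) for effects, w in studies)
    mean = sum(w * sum(effects) for effects, w in studies) / total_weight
    # Sandwich "meat": the weighted residuals are summed within each study
    # before squaring, so dependence among a study's effect sizes is absorbed
    # without having to specify their covariance.
    meat = sum((w * sum(t - mean for t in effects)) ** 2 for effects, w in studies)
    return mean, math.sqrt(meat) / total_weight


# Made-up data: study A reports two effect sizes, study B reports one.
example = [([0.2, 0.4], 1.0), ([0.1], 2.0)]
mean_es, robust_se = rve_mean(example)
print(round(mean_es, 3), round(robust_se, 4))
```

With only a handful of studies this uncorrected standard error tends to be too small, which is the problem the small-sample correction cited above is meant to address.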

Results

Since SFA was first implemented in Baltimore in 1987, over 60 studies have been carried out to examine its effectiveness. However, only 17 studies met the inclusion criteria for this review. Common reasons for exclusion (see Online Supplementary Appendix 1) included having fewer than two schools in each treatment condition (k = 17); no appropriate data, or nonequivalent or missing pretests (k = 13); non-US locations (k = 17); a program start after first grade (k = 2); comparison to normed performance (k = 2); and comparison of two forms of SFA (k = 4).

Characteristics of Studies

The majority of the included studies were quasi-experiments (k = 15), and only two were randomized studies. Three of the included studies were published articles and 14 were unpublished documents such as dissertations, conference papers, and technical reports. In terms of the relationship of the developer to the evaluator, most of the studies were determined to be independent (k=13), while the remaining studies included at least one of the developers in the author list of the study (k=4). All but one of the studies took place in schools with very high levels of economic disadvantage, with at least 66% of students receiving free or reduced-price lunches (k=16).

Across these 17 studies, a total of 221 separate effect sizes were coded, with an average of 13 effect sizes per study. Six studies reported final effect sizes after 1 year (n = 55), 3 studies reported effect sizes after 2 years (n = 20), and 9 studies reported effect sizes after 3 or more years (n = 146). Six studies reported 85 outcomes for African-American students, either by reporting on a predominantly African-American student sample or by reporting on outcomes for African-American students separately, within a heterogeneous sample. Outcomes for Hispanic students were reported in 3 studies (n=34). One study reported outcomes for White students (n=4). Four studies reported outcomes separately for English Learners (ELs), while eight studies reported on outcomes for low achievers separately. Outcomes were mainly of general reading or comprehension measures (n=90) and alphabetics (n=97), with fewer findings reported on fluency measures (n=34).

Overall Effects

The results for the null model and full meta-regression model are shown in Table 1. The meta-regression model controlled for research design, independence of evaluator, duration of study, race/ethnicity of students, language status of students, baseline achievement level, and outcome type. There was an overall positive impact of SFA on reading achievement across all qualifying studies (ES= +0.24, p < .05). However, these outcomes vary considerably, with a 95% prediction interval of −0.27 to +0.75. The prediction interval provides a sense of the heterogeneity of the outcomes, with 95% of the effect sizes in the population expected within this range. Study characteristics and findings of the 17 included studies are summarized in Table 2, which lists the two randomized studies and then all quasi-experiments in order of school sample size. More detailed study-by-study information is shown in Appendix 2 in the Online Appendix.
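A prediction interval of this kind is conventionally built from the mean effect size, the between-study variance, and the standard error of the mean (Borenstein et al., 2009). The sketch below uses a normal approximation, which is an assumption: the article does not state how the interval was computed, and a t-based interval would imply a somewhat smaller spread. Working backward from the reported bounds gives a rough sense of the combined standard deviation they imply.

```python
import math
from statistics import NormalDist


def prediction_interval(mean: float, tau_squared: float, se_mean: float,
                        level: float = 0.95) -> tuple:
    """Normal-approximation prediction interval for the effect in a new setting."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * math.sqrt(tau_squared + se_mean ** 2)
    return mean - half_width, mean + half_width


# Reported 95% prediction interval.
lower, upper = -0.27, 0.75
center = (lower + upper) / 2
# Combined SD (between-study spread plus uncertainty in the mean) implied
# by the interval width under the normal approximation.
implied_sd = (upper - lower) / 2 / NormalDist().inv_cdf(0.975)
print(round(center, 2), round(implied_sd, 2))  # 0.24 0.26
```

The interval is centered on the reported mean of +0.24, and its width corresponds to a combined standard deviation of roughly 0.26 under these assumptions, which is why individual settings could plausibly see effects anywhere from slightly negative to large.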

Table 1. Meta-regression results.

Table 2. Features and summary of outcomes of included studies.

Only two of the studies of SFA were large-scale cluster randomized experiments. Borman et al. (2007) carried out the first randomized, longitudinal study. Forty-one schools (21T, 20C) throughout the United States were randomly assigned to either the treatment or control condition. Children were pretested on the PPVT and then individually tested on the Woodcock Reading Mastery Test each spring for 3 years, kindergarten to second grade. At the end of this 3-year study, 35 schools and over 2,000 students remained. Using pretests as covariates, the HLM results indicated that the treatment schools significantly outperformed the controls on all three outcome measures, with an overall effect size of +0.25 (p < .05). The effect sizes were +0.22, +0.33, and +0.21 for Word Identification, Word Attack, and Passage Comprehension, respectively.

The second large-scale cluster randomized longitudinal study was carried out by Quint et al. (2015). In a design similar to that of the Borman study, 37 low-SES schools from five school districts in the United States were randomly assigned to treatment (N = 19) or control (N = 18) conditions. Students were followed from kindergarten to second grade. The treatment schools scored significantly higher than the controls on phonics skills for second-graders who had been in the treatment group for all three years. No statistically significant differences were found on reading fluency and comprehension posttests. However, among the lowest-performing students at pretest, those in the treatment group scored significantly higher than their counterparts in the control group on phonics skills, word recognition, and reading fluency.

All other US studies of SFA used quasi-experimental designs, in which schools were matched on pretests and demographics, and then students in both groups were assessed each year for 1 to 5 years. Most of these quasi-experiments involved small numbers of schools, and would not have had sufficient numbers of clusters (schools) for adequate statistical power on their own. However, this meta-analysis combines these with other studies, weighting for sample size and other covariates, to obtain combined results that are adequately powered.

One of the QEDs was notable for its large size and longitudinal design. Slavin et al. (1993; also see Madden et al., 1993) evaluated the first five schools to use Success for All. The schools, all high-poverty schools in Baltimore, were each matched with control schools with very similar pretests and demographics. All students were African American and virtually all students qualified for free lunches. Within schools, individual students were matched with control students. Students were followed from first grade onward, in a total of five cohorts. The mean effect size across all five cohorts after 3 years was +0.59 (p = .05) for all students and +1.17 (p < .01) for low achievers. The mean effect size for fifth graders who had been in treatment or control schools since first grade was +0.46 (n.s.) overall and +1.01 (p < .01) for low achievers. A follow-up study of these schools was carried out by Borman and Hewes (2002). It obtained data from three cohorts of students followed to the eighth grade, so students would have been out of the K-5 SFA schools for at least three years. Results indicated lasting positive effects on standardized reading achievement measures (ES = +0.29, n.s.), and SFA students were significantly less likely to have been retained in elementary school (ES = +0.27, n.s.) or assigned to special education (ES= +0.18, n.s.), in comparison to controls.

The second major, large-scale QED was a part of the University of Michigan’s Study of Instructional Improvement (Rowan et al., 2009). This study compared more than 100 schools throughout the United States that were implementing one of three comprehensive school reform models: Success for All, America’s Choice, or Accelerated Schools. There was also a control group. Students in the SFA portion of the study were followed from kindergarten to second grade. The detailed findings were reported by Correnti (2009), who found an overall effect size of +0.43 (p < .01).

Substantive and Methodological Moderators

Several important demographic and methodological moderators of treatment impacts were identified and explored statistically (see Table 3). Not all coded factors and potential moderators could be examined, because of very unequal distributions of studies within moderators, or substantial correlations between moderators and study features.

Table 3. Substantive and methodological moderators.

Research Design

Differences in effect sizes between studies that used randomized designs (k = 2, ES= +0.23) and studies that used quasi-experimental designs incorporating matching (k = 15, ES= +0.24) were tested. This difference was not statistically significant.

Evaluator Status

We also compared differences in effect sizes for studies conducted independently from the SFA developers and those conducted in collaboration with SFA. Effect sizes for studies from independent evaluations (ES= +0.21, p < .10) were similar to those from studies conducted with the program developers (ES= +0.30, p < .10). This difference was not statistically significant.

Duration

Effect sizes were compared for studies at the end of 1, 2, and 3 or more years. Effect sizes averaged +0.25 after 1 year, +0.46 after 2 years, and +0.19 after 3 or more years. Appendix 3 in the Online Appendix shows year-by-year outcome trends for longitudinal studies, with mean outcomes by year similar to the duration findings.

Race & Ethnicity

Outcomes for samples of mostly African-American students averaged +0.30 (p < .05; k = 6). In mostly Hispanic samples (k = 3), effect sizes averaged +0.24 (n.s.). One study included mostly White students, with average effects of +0.63 (p < .05). The remaining 10 studies included outcomes for a mix of races and ethnicities, with mean effect sizes of +0.23 (n.s.).

English Learner Status

Impacts were similar for English Learners (ES= +0.13, p < .05), non-English Learners (ES= +0.36, p < .05) and mixed samples (ES= +0.23, p < .05). These differences were not statistically significant.

Achievement Status

Outcomes including all students had a mean effect size of +0.24 (k = 17). Outcomes for low achievers averaged +0.54 (p < .01), significantly higher than outcomes for average/high achievers (ES= +0.07, n.s.), and those for mixed samples (ES= +0.16, n.s.).

Outcome Type

Differences in effect sizes across outcome types were also statistically examined. The mean effect size across studies with general reading or comprehension outcomes was +0.20 (n = 90). This contrasted with mean effect sizes across alphabetics outcomes (ES= +0.32, n = 97), and fluency outcomes (ES= +0.14, n = 34). Alphabetics outcomes were significantly higher than fluency outcomes (p < .01).

Discussion

Success for All is a very unusual educational reform program, unique in many ways. It has operated for 33 years with the same basic philosophy and approach, although it has constantly changed its specific components in response to its learnings (Peurach, 2011). Its dissemination has waxed and waned with changing educational policies; SFA served as many as 1,500 schools at one time, in 2000–2001. Currently, there are about 500 schools using the full program and another 500 schools using components. In contrast, in two prominent charter networks, KIPP serves 242 schools, and New York’s Success Academies serve 45. Also, the program is relatively long-lasting. Data reported by Slavin et al. (2009) indicate that the median SFA school stays in the program for 11 years, and several have used it for more than 20 years. At a cost of $117 per student per year (as reported by Quint et al., 2015), SFA is relatively cost-effective (Borman & Hewes, 2002).

In its long history, Success for All has frequently been evaluated, mostly by third parties. There were 17 studies that met rigorous inclusion standards. In contrast, the great majority of programs that met the inclusion standards of the What Works Clearinghouse or Evidence for ESSA have been evaluated in just one qualifying study, and very few have been evaluated more than twice.

Across the 17 qualifying US studies, the mean effect size was +0.24 for students in general, and among 8 studies that separately analyzed effects for the lowest achievers, the mean was +0.54. These are important outcomes. As a point of comparison, the mean difference in National Assessment of Educational Progress (NAEP) reading achievement between students who qualify for free lunch and those who do not is approximately an effect size of 0.50 (National Center for Education Statistics [NCES], 2019). The mean outcomes of Success for All are almost half of this gap, and the outcomes for lowest achievers equal the entire gap.
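The comparison with the NAEP gap is simple arithmetic, sketched below for transparency. It uses the overall mean of +0.24, the low-achiever mean of +0.54 reported in the Achievement Status results, and the approximate gap of 0.50; the function name is an illustrative assumption.

```python
NAEP_FREE_LUNCH_GAP = 0.50  # approximate gap in effect size units, as cited above


def share_of_gap(effect_size: float, gap: float = NAEP_FREE_LUNCH_GAP) -> float:
    """Express an effect size as a fraction of the free-lunch reading gap."""
    return effect_size / gap


overall_share = share_of_gap(0.24)
low_achiever_share = share_of_gap(0.54)
print(f"{overall_share:.0%} of the gap overall")           # 48% of the gap overall
print(f"{low_achiever_share:.0%} of the gap for lowest achievers")  # 108% of the gap for lowest achievers
```

Because effect sizes from different tests and samples are only roughly comparable, these fractions are best read as orders of magnitude rather than precise shares.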

An important and interesting question for policy and practice is whether SFA works particularly well with sub-populations. The only important factor with sufficient studies to permit subsample analyses was lowest-achieving students (usually students in the lowest 25% of their classes). As noted earlier, the mean effect size for low achievers was +0.54.

It is possible to speculate about what aspects of SFA made the program more effective for lowest achieving students. Low achievers are most likely to receive one-to-one or one-to-small group tutoring, known to have a substantial impact on reading achievement (Neitzel, Lake, et al., 2020; Wanzek et al., 2016). Also, there is evidence that cooperative learning, used throughout SFA, is particularly beneficial for low achievers (Slavin, 2017).

The findings of the subgroup analyses with low achievers may be especially important for schools serving large numbers of students who are poor readers. Quint and her colleagues argued that the cost of SFA, which they estimated at $117 per pupil per year, was relatively modest when compared to that of business-as-usual reading programs. In other words, for schools with a high percentage of poor readers, SFA offers a pragmatic alternative supported by evidence of effectiveness.

The effects of SFA are generally maintained as long as the program remains in operation. In the one study to assess lasting impacts (Borman & Hewes, 2002), outcomes were maintained at follow-up as well. This is an unusual finding, and contrasts with the declining impacts over time seen for intensive early tutoring (e.g. Blachman et al., 2014; Pinnell et al., 1994). Beyond SFA itself, this set of findings suggests that a strategy of intensive tutoring and other services, followed by continued interventions to improve classroom instruction and maintain early gains, may have more promise than intensive early intervention alone.

The importance of tutoring for struggling readers in the early elementary grades is suggested by the substantially greater short- and long-term impacts of SFA for the lowest-achieving students, who are those most likely to receive tutoring. Another interesting point of comparison also speaks to the importance of tutoring as part of the impact of SFA. Of the four largest evaluations of SFA, three found strong positive impacts. In these, schools were able to provide adequate numbers of tutors to work with most struggling readers in grades 1–3. However, the fourth study, by Quint et al. (2015), took place at the height of the Great Recession (2011–2014). School budgets were severely impacted, and during this study, most schools did not have tutors. This study reported significant positive effects for low achievers, but all outcomes were much smaller than those found in the other studies.

Many phonetic reading programs emphasizing early intervention show substantial positive effects on measures of alphabetics, but not comprehension or general reading. The outcomes of SFA are strongest on measures of alphabetics (ES= +0.32), but are also positive on general reading/comprehension (ES= +0.19), indicating that the program is more than just phonics.

A distressingly common finding in studies of educational programs is that studies carried out by program developers produce much more positive outcomes than do independent evaluations (Borman et al., 2003; Wolf et al., 2020). In the case of Success for All, studies including SFA developers as co-investigators (k = 4) do obtain higher effect sizes than do independent studies (k = 13) (ES= +0.30 versus +0.21, respectively), but this difference is not statistically significant. However, this analysis was underpowered, with only 17 studies, so these results must be interpreted with caution.

Policy Importance of Research on Success for All

Attempts to improve the outcomes of education for disadvantaged and at-risk students fall into two types. One focuses on systemwide policies, such as targeted funding, governance, assessment/accountability schemes, standards, and regulations. These types of strategies are rarely found to be very effective, but they do operate on a very large scale. In contrast, research and development often creates effective approaches, proven to make a meaningful difference in student achievement. However, these proven approaches rarely achieve substantial scale, and if they do, they often do not maintain their effectiveness at scale (see Cohen & Moffitt, 2009, for a discussion of this dilemma).

Success for All is one of very few interventions capable of operating at a scale that is meaningful for policy without losing its effectiveness. At its peak, Success for All operated nationally in more than 1,500 schools, and its growth was only curtailed by a shift in federal policies in 2002. Its many evaluations, mostly done by third party evaluators, have found positive outcomes across many locations and over extended periods of time.

In the current policy climate in the United States, in which evidence of effectiveness is taking on an ever-greater role, Success for All offers one of very few approaches that could, in principle, produce substantial positive outcomes at large scale, and this should have meaning for national policies.

The importance of Success for All for policy and practice is best understood by placing the program in the context of other attempts to substantially improve student achievement in elementary schools serving many disadvantaged students. A recent review of research on programs for struggling readers in elementary schools by Neitzel, Lake, et al. (2020) found that there were just three categories of approaches with substantial and robust evidence of positive outcomes with students scoring in the lowest 25% or 33% of their schools in reading. One was one-to-one or one-to-small group tutoring, by teachers or teaching assistants, with a mean effect size of +0.29. Another was multi-tier whole-school approaches, consisting of Success for All and one other program. The third was whole class Tier 1 programs, mostly using cooperative learning. What these findings imply is that in schools with relatively few students struggling in reading, tutoring may be the best solution for the individuals who are struggling. Even though tutoring is substantially more expensive per student than Success for All, in a school with few struggling readers, it may not be sensible to intervene with all students.

On the other hand, when most students need intervention in reading, it is not sensible or cost-effective to solve the problem with tutoring alone. In the United States, the average large urban school district has only 28% of fourth graders scoring “proficient” or better on the National Assessment of Educational Progress (National Center for Education Statistics [NCES], 2019), and in cities such as Dallas, Milwaukee, Baltimore, Cleveland, and Detroit, fewer than 15% of students in the entire district score at or above “proficient.” In such districts, and in individual low-performing schools even in higher-performing districts, trying to reach high levels of proficiency through tutoring alone would be prohibitively expensive.

The findings of the evaluations of Success for All have particular importance for special education policies. The structure of SFA adheres closely to the concept of Response to Intervention (RTI). SFA emphasizes professional development, coaching, and extensive programming to improve outcomes of Tier 1 classroom instruction, which is then followed up by closely coordinated Tier 2 (small-group tutoring) or Tier 3 (one-to-one tutoring) for students who need it. Longitudinal research found substantial and lasting impacts on the achievement of the lowest achievers, and on reductions in assignment to special education as well as retentions in grade (Borman & Hewes, 2002).

Beyond the program itself, the research on Success for All, as applied to low-achieving students, illustrates that the educational problems of low-achieving students are fundamentally solvable. Perhaps someday there will be many approaches like Success for All, each of which is capable of improving student achievement on a substantial scale. Research on Success for All suggests that disadvantaged students and struggling readers could be learning to read at significantly higher levels than they do today, and that substantial improvement can be brought about at scale. The knowledge that large-scale improvement is possible should lead to policies that both disseminate existing proven approaches, and invest in research and development to further increase the effectiveness and replicability of programs that can reliably produce important improvements in reading for disadvantaged and low-achieving readers.

Open Scholarship

This article has earned the Center for Open Science badge for Open Data. The data are openly accessible at https://archive.data.jhu.edu/dataset.xhtml?persistentId=doi:10.7281/T1/VDZAZY. To obtain the author's disclosure form, please contact the Editor.


References

- **A double asterisk indicates studies included in the main meta-analysis (final reports).

- *A single asterisk indicates studies included in the exploratory meta-analysis (interim reports).

- Blachman, B. A., Schatschneider, C., Fletcher, J. M., Murray, M. S., Munger, K. A., & Vaughn, M. G. (2014). Intensive reading remediation in grade 2 or 3: Are there effects a decade later? Journal of Educational Psychology, 106(1), 46–57. https://doi.org/10.1037/a0033663

- Borenstein, M., Hedges, L. V., Higgins, J. P., & Rothstein, H. R. (2009). Introduction to meta-analysis. John Wiley & Sons, Ltd.

- Borman, G. D., & Hewes, G. M. (2002). The long-term effects and cost-effectiveness of Success for All. Educational Evaluation and Policy Analysis, 24(4), 243–266. https://doi.org/10.3102/01623737024004243

- Borman, G. D., Hewes, G. M., Overman, L. T., & Brown, S. (2003). Comprehensive school reform and achievement: A meta-analysis. Review of Educational Research, 73(2), 125–230. https://doi.org/10.3102/00346543073002125.

- **Borman, G. D., Slavin, R. E., Cheung, A. C. K., Chamberlain, A. M., Madden, N. A., & Chambers, B. (2007). Final reading outcomes of the national randomized field trial of Success for All. American Educational Research Journal, 44(3), 701–731. https://doi.org/10.3102/0002831207306743.

- Chambers, B., Cheung, A., & Slavin, R. (2016). Literacy and language outcomes of balanced and developmental-constructivist approaches to early childhood education: A systematic review. Educational Research Review, 18, 88–111. https://doi.org/10.1016/j.edurev.2016.03.003

- **Chambers, B., Slavin, R. E., Madden, N. A., Cheung, A., & Gifford, R. (2005). Enhancing Success for All for Hispanic students: Effects on beginning reading achievement. Success for All Foundation. http://eric.ed.gov/?id=ED485350

- Cohen, D. K., & Moffitt, S. L. (2009). The ordeal of equality: Did federal regulation fix the schools? Harvard University Press.

- **Correnti, R. (2009, March). Examining CSR program effects on student achievement: Causal explanation through examination of implementation rates and student mobility [Paper presentation]. 2nd Annual Conference of the Society for Research on Educational Effectiveness, Washington, DC.

- Cunningham, A. E., & Stanovich, K. E. (1997). Early reading acquisition and its relation to reading experience and ability 10 years later. Developmental Psychology, 33(6), 934–945. https://doi.org/10.1037/0012-1649.33.6.934

- **Datnow, A., Stringfield, S., Borman, G., Rachuba, L., & Castellano, M. (2001). Comprehensive school reform in culturally and linguistically diverse contexts: Implementation and outcomes from a 4-year study. Center for Research on Education, Diversity, and Excellence.

- Fuchs, D., & Fuchs, L. (2006). Introduction to response to intervention: What, why, and how valid is it? Reading Research Quarterly, 41(1), 93–128. https://doi.org/10.1598/RRQ.41.1.4

- Good, T., & Brophy, J. (2018). Looking in classrooms (10th ed.). Allyn & Bacon.

- Hedges, L. V. (2007a). Correcting a significance test for clustering. Journal of Educational and Behavioral Statistics, 32(2), 151–179. https://doi.org/10.3102/1076998606298040

- Hedges, L. V. (2007b). Effect sizes in cluster-randomized designs. Journal of Educational and Behavioral Statistics, 32(4), 341–370. https://doi.org/10.3102/1076998606298043.

- Hedges, L. V., Tipton, E., & Johnson, M. C. (2010). Robust variance estimation in meta-regression with dependent effect size estimates. Research Synthesis Methods, 1(1), 39–65. https://doi.org/10.1002/jrsm.5.

- Lesnick, J., Goerge, R., Smithgall, C., & Gwynne, J. (2010). Reading on grade level in third grade: How is it related to high school performance and college enrollment? Chapin Hall at the University of Chicago.

- Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. SAGE.

- **Livingston, M., & Flaherty, J. (1997). Effects of Success for All on reading achievement in California schools. WestEd.

- Madden, N. A., & Slavin, R. E. (2017). Evaluations of technology-assisted small-group tutoring for struggling readers. Reading & Writing Quarterly, 33(4), 327–334. https://doi.org/10.1080/10573569.2016.1255577.

- **Madden, N. A., Slavin, R. E., Karweit, N. L., Dolan, L. J., & Wasik, B. A. (1993). Success for All: Longitudinal effects of a restructuring program for inner-city elementary schools. American Educational Research Journal, 30(1), 123–148. https://doi.org/10.3102/00028312030001123.

- **Muñoz, M. A., Dossett, D., & Judy-Gullans, K. (2004). Educating students placed at risk: Evaluating the impact of Success for All in urban settings. Journal of Education for Students Placed at Risk, 9(3), 261–277. https://doi.org/10.1207/s15327671espr0903_3

- National Center for Education Statistics (NCES). (2019). The condition of education 2019. https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2019144.

- National Reading Panel (NRP). (2000). Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction (NIH Pub. No. 00-4754). http://www.nichd.nih.gov/publications/pubs/nrp/pages/report.aspx.

- Neitzel, A., Cheung, A. C., Xie, C., Zhuang, T., & Slavin, R. (2020). Data associated with the publication: Success for All: A quantitative synthesis of U.S. evaluations (V1 ed.). Johns Hopkins University Data Archive.

- Neitzel, A., Lake, C., Pellegrini, M., & Slavin, R. (2020). Effective programs for struggling readers: A best-evidence synthesis [Manuscript submitted for publication]. www.bestevidence.org

- **Nunnery, J. A., Slavin, R., Ross, S., Smith, L., Hunter, P., & Stubbs, J. (1996). An assessment of Success for All program component configuration effects on the reading achievement of at-risk first grade students [Paper presentation]. Annual Meeting of the American Educational Research Association, New York.

- OECD. (2019). PISA 2018 technical report. OECD Publishing.

- Peurach, D. J. (2011). Seeing complexity in public education: Problems, possibilities, and Success for All. Oxford University Press.

- Pigott, T. D., & Polanin, J. R. (2020). Methodological guidance paper: High-quality meta-analysis in a systematic review. Review of Educational Research, 90(1), 24–46. https://doi.org/10.3102/0034654319877153

- Pinnell, G. S., Lyons, C. A., DeFord, D. E., Bryk, A. S., & Seltzer, M. (1994). Comparing instructional models for the literacy education of high risk first graders. Reading Research Quarterly, 29(1), 8–38. https://doi.org/10.2307/747736

- Pustejovsky, J. (2020). clubSandwich: Cluster-robust (sandwich) variance estimators with small-sample corrections (R package version 0.4.1) [Computer software]. https://CRAN.R-project.org/package=clubSandwich

- **Quint, J., Zhu, P., Balu, R., Rappaport, S., & DeLaurentis, M. (2015). Scaling up the Success for All model of school reform: Final report from the Investing in Innovation (i3) evaluation. MDRC.

- R Core Team. (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

- **Ross, S. M., & Casey, J. (1998a). Longitudinal study of student literacy achievement in different Title I school-wide programs in Ft. Wayne Community Schools, year 2: First grade results. University of Memphis, Center for Research in Educational Policy.

- *Ross, S. M., & Casey, J. (1998b). Success for All evaluation, 1997–98 Tigard-Tualatin School District. University of Memphis, Center for Research in Educational Policy.

- **Ross, S. M., Nunnery, J. A., & Smith, L. J. (1996). Evaluation of Title I reading programs: Amphitheater Public Schools, year 1: 1995–1996. University of Memphis, Center for Research in Educational Policy.

- **Ross, S. M., Smith, L. J., & Bond, C. (1994). An evaluation of the Success for All program in Montgomery, Alabama schools. University of Memphis, Center for Research in Educational Policy.

- **Ross, S. M., Smith, L. J., & Casey, J. P. (1995). Final report: 1994–1995 Success for All program in Fort Wayne, Indiana. University of Memphis, Center for Research in Educational Policy.

- **Ross, S. M., Smith, L. J., & Casey, J. P. (1997). Preventing early school failure: Impacts of Success for All on standardized tests outcomes, minority group performance, and school effectiveness. Journal of Education for Students Placed at Risk, 2(1), 29–53. https://doi.org/10.1207/s15327671espr0201_4

- *Ross, S. M., Smith, L. J., Casey, J. P., Johnson, B., & Bond, C. (1994b). Using Success for All to restructure elementary schools: A tale of four cities [Paper presentation]. Annual Meeting of the American Educational Research Association, New Orleans, LA. (ERIC Document Reproduction Service No. ED 373456)

- **Ross, S. M., Smith, L. J., Lewis, T., & Nunnery, J. (1996). 1995–96 evaluation of Roots & Wings in Memphis City Schools. University of Memphis, Center for Research in Educational Policy.

- Rowan, B., Correnti, R., Miller, R., & Camburn, E. (2009). School improvement by design: Lessons from a study of comprehensive school reform programs. http://www.cpre.org/school-improvement-design-lessons-study-comprehensive-school-reform-programs.

- Shaywitz, S. E., & Shaywitz, J. (2020). Overcoming dyslexia (2nd ed.). Penguin Random House.

- Slavin, R. E. (1987). Ability grouping and student achievement in elementary schools: A best-evidence synthesis. Review of Educational Research, 57(3), 293–336. https://doi.org/10.3102/00346543057003293

- Slavin, R. E. (2017). Instruction based on cooperative learning. In R. Mayer & P. Alexander (Eds.), Handbook of research on learning and instruction. Routledge.

- Slavin, R. E., Lake, C., Chambers, B., Cheung, A., & Davis, S. (2009). Effective reading programs for the elementary grades: A best-evidence synthesis. Review of Educational Research, 79(4), 1391–1466. https://doi.org/10.3102/0034654309341374

- Slavin, R. E., Madden, N. A., Chambers, B., & Haxby, B. (2009). Two million children: Success for All. Corwin.

- **Slavin, R. E., Madden, N. A., Dolan, L. J., & Wasik, B. A. (1993). Success for All in the Baltimore City Public Schools: Year 6 report. Johns Hopkins University, Center for Research on Effective Schooling for Disadvantaged Students.

- **Slavin, R. E., Madden, N. A., Dolan, L. J., Wasik, B. A., Ross, S. M., & Smith, L. J. (1994). Success for All: Longitudinal effects of systemic school-by-school reform in seven districts [Paper presentation]. Annual Meeting of the American Educational Research Association, New Orleans, LA.

- Snow, C. E., Burns, S. M., & Griffin, P. (Eds.). (1998). Preventing reading difficulties in young children. National Academy Press.

- Stevens, R. J., Madden, N. A., Slavin, R. E., & Farnish, A. M. (1987). Cooperative integrated reading and composition: Two field experiments. Reading Research Quarterly, 22(4), 433–454. https://doi.org/10.2307/747701

- Tipton, E. (2015). Small sample adjustments for robust variance estimation with meta-regression. Psychological Methods, 20(3), 375–393. https://doi.org/10.1037/met0000011

- Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

- **Wang, W., & Ross, S. M. (1999). Results for Success for All program. University of Memphis, Center for Research in Educational Policy.

- Wanzek, J., Vaughn, S., Scammacca, N., Gatlin, B., Walker, M. A., & Capin, P. (2016). Meta-analyses of the effects of tier 2 type reading interventions in grades K-3. Educational Psychology Review, 28(3), 551–576. https://doi.org/10.1007/s10648-015-9321-7

- Wolf, R., Morrison, J. M., Inns, A., Slavin, R. E., & Risman, K. (2020). Average effect sizes in developer-commissioned and independent evaluations. Journal of Research on Educational Effectiveness, 13(2), 428–447. https://doi.org/10.1080/19345747.2020.1726537

- What Works Clearinghouse. (2014). Review protocol for beginning reading interventions version 3.0. Institute of Education Sciences, US Department of Education.

- What Works Clearinghouse. (2020). Standards handbook (version 4.1). Institute of Education Sciences, US Department of Education.