Abstract

The accomplishment of Sustainable Development Goals (SDGs) is intrinsically connected to improving livelihoods in the Rural Global South (RGS). RGS livelihoods are complex, showing multiple dimensions beyond mere economic considerations. However, many related development policies (over)simplify livelihoods to income thresholds, leading to flawed interventions. Adequate strategies to address RGS livelihoods require a much deeper understanding of their various dimensions and complexities. Q methodology (Q) is a powerful participatory research technique that enables the systematic study of different viewpoints on subjective topics. Moreover, it has the potential to identify and reveal previously unheard narratives, thus allowing us to question traditional understandings of RGS livelihoods. Yet, as a time- and assistance-intensive technique, its implementation faces methodological challenges that are currently overlooked and ought to be considered. We selected and reviewed 50 Q studies applied to different forms of RGS livelihoods. First, we discuss several field limitations of Q associated with physical, logistical, social, and cultural constraints. Second, we draw on reported good practices to outline strategies for coping with these limitations. Beyond these limitations and strategies, we advocate building local Q capacity and the gender-balanced empowerment of local researchers. This may contribute to a better understanding of the nuances and challenges of RGS livelihoods.


1. Introduction

The accomplishment of several Sustainable Development Goals (SDGs) is intrinsically connected to the generation and improvement of sustainable livelihoods in the Rural Global South¹ (RGS) (Tambe, 2022). As 84% of the 1.3 billion multidimensionally poor people (i.e., those deprived of several resources and services) live in the RGS, improving their means of living is a cornerstone of meeting global development targets (Tambe, 2022). RGS livelihoods are varied and complex, with multiple dimensions that go far beyond the economic considerations typically observed: capabilities, activities, material resources, and social assets (Nunan et al., 2023). In contrast, related development policies still resort to income thresholds [e.g., the World Bank's International Poverty Line (Lang and Lingnau, 2015; United Nations, 2021)] as the compass to evaluate and benchmark livelihood conditions (Ascher, 2021). Such definitions and metrics overshadow the context-dependent diversity and complexity of RGS livelihoods (Chambers, 2017; Nunan et al., 2023). In addition, facilitators of development programs are usually outsiders who impose their own priorities, often shaped by substantial biases (e.g., spatial, project, person, and seasonal biases) (Chambers, 2017; Chambers, 1983; Datta, 2019). These limited approaches have led to a distorted understanding of the issues facing RGS populations and, therefore, to ineffective livelihood interventions (Ascher, 2021).

Smallholder agriculture is a good example of how an inadequate understanding of the dynamics of RGS livelihoods has resulted in many failures (Fan and Rue, 2020; Waarts et al., 2021). This sector represents the most prominent livelihood in RGS economies: it is the main occupation of 70% of the RGS poor, supplies up to 80% of the food consumed in Asia and sub-Saharan Africa, and is the main activity of approximately 50% of RGS women in several countries (Poole, 2017). Investing in smallholder farming is therefore a crucial strategy for boosting RGS economies, securing and increasing incomes (SDG 1), providing decent and inclusive work (SDG 8), and supporting food security (SDG 2) (Fan et al., 2013; Giordano et al., 2019; Mellor and Malik, 2017; Poole, 2017). Unfortunately, many policies and interventions have not addressed farmers' actual needs and expectations (Giordano et al., 2019). Instead, they have resulted in failures such as biases in agricultural mechanization and technology adoption (Devkota et al., 2020; Van Loon et al., 2020), a lack of empowerment of female farmers (Akter et al., 2017; Slavchevska et al., 2019; Theis et al., 2018), and high rates of rural youth disengagement and unemployment (Gc and Hall, 2020; Hazell and Rahman, 2014).

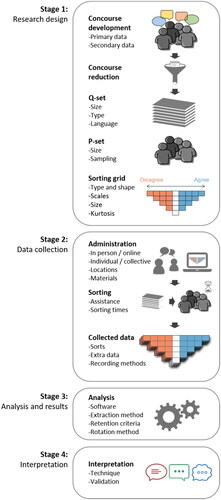

Adequate strategies to address RGS livelihoods require a much deeper understanding of their various dimensions and multifaceted characteristics (Chambers, 2017; Lang and Lingnau, 2015; Nunan et al., 2023). Numerous research approaches and methods for unraveling the complexities of RGS livelihoods have arisen in response to this need (Chambers, 2017; Nunan et al., 2023). These include quantitative approaches, quantitative and qualitative longitudinal studies, ethnographic studies, participatory rural appraisal, and participatory video research, among others. Alongside these approaches and methods, Q methodology (henceforth Q) has emerged as a powerful participatory research technique for studying human subjectivity. It allows researchers to move from single (and perhaps oversimplified) definitions of a particular topic or phenomenon (e.g., RGS livelihoods) to the systematic analysis of diverse perspectives on it (Previte et al., 2007). At the same time, Q embraces this diversity while maintaining a reductionist approach, which results in consistently clustered viewpoints that represent the spectrum of individual perceptions. Moreover, by systematically incorporating grassroots voices throughout its four stages (Figure 1), Q helps identify and reveal previously unheard narratives, potentially allowing us to question traditional and/or dominant understandings of RGS livelihoods. For these reasons, we argue that Q holds strong potential for studying the complex nature of RGS livelihoods and for supporting interventions on them. More background information on Q can be found in Appendix A of the Data availability statement.

Figure 1. Stages and steps of Q, adapted from Zabala et al. (2018).

The potential of Q has been systematically assessed in review articles focused on psychology and behavioral studies (Dziopa and Ahern, 2011), conservation research (Zabala et al., 2018), and healthcare research (Churruca et al., 2021), and even on its methodological choices across a wide range of disciplines (Dieteren et al., 2023). Despite this potential and its effective implementation across disciplines (Watts and Stenner, 2012; Zabala and Pascual, 2016), Q has seldom been applied with a focus on RGS livelihoods. Even in such cases, on-field methodological choices and points of attention appear to be underreported. Hardly any study has critically examined the methodological implications of Q in these contexts, let alone in those related to any form of (smallholder) agriculture. Considering the challenges of conducting fieldwork in the RGS (Breman, 1985; Casale et al., 2013; Chacko, 2004; Potnis and Gala, 2020; Strijker et al., 2020), and as part of a larger Q-led doctoral project conducted at Delft University of Technology (Intriago et al., 2018), in this article we aim to analyze and discuss (1) the methodological challenges of implementing Q to study RGS livelihoods and (2) the best reported practices for coping with these challenges, with emphasis on the stages that involve on-field methodological choices (i.e., research design, data collection, and interpretation). Through this study, we expect to make two key contributions: first, to expand understanding of the methodological implications of Q in RGS settings; and second, to help researchers make informed methodological choices when using Q to study RGS livelihoods.

2. Methodology

We employed a semi-systematic approach in this review. As Snyder (2019) argues, this is an appropriate strategy for reviewing mixed qualitative/quantitative information and identifying knowledge gaps in the literature. This approach enabled us to synthesize state-of-the-art knowledge on the application of Q in RGS settings, its intrinsic methodological issues, and best practices.

2.1. Sources of information

We chose database search as the preferred technique to search for references. Because this review focuses on the application of Q across different fields instead of discipline-specific studies, we opted for two multidisciplinary scientific databases, namely Scopus and Web of Science. Complementarily, we triangulated these databases using Google Scholar to prevent location bias. In addition to the database search, we also used snowball sampling (through bibliographic references and hyperlinks) to identify additional documents that did not appear in the iterative searches.

2.2. Search criteria

To search the literature in these scientific databases, we used the terms "Q methodology" and "Q-methodology" in combination with one or more of the following terms: "rural", "farm", "farmer", "farming", "smallholder", "agriculture", "irrigation", "water", "forest", "forestry". We acknowledge the possible biases introduced into the review by screening the literature exclusively with English-language terms. Nonetheless, we believe that our results offer sufficient detail and discussion to justify this language restriction.
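The combination of terms described above amounts to a Boolean query: at least one Q term AND at least one context term. The sketch below assembles such a string in Python. It is a hypothetical reconstruction, since the exact strings submitted to Scopus and Web of Science are not reported; the constant and function names are illustrative only.

```python
# Hypothetical reconstruction of the Boolean search string; the exact
# strings used in Scopus and Web of Science are not reported in the review.
Q_TERMS = ["Q methodology", "Q-methodology"]
CONTEXT_TERMS = [
    "rural", "farm", "farmer", "farming", "smallholder",
    "agriculture", "irrigation", "water", "forest", "forestry",
]


def or_group(terms):
    """Quote each term as an exact phrase and join the phrases with OR."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(q_terms, context_terms):
    """Require at least one Q term AND at least one context term."""
    return f"{or_group(q_terms)} AND {or_group(context_terms)}"


QUERY = build_query(Q_TERMS, CONTEXT_TERMS)
```

Each database wraps such a string in its own field syntax (for instance, Scopus's `TITLE-ABS-KEY(...)` or the Web of Science `TS=(...)` topic tag), so the syntax should be checked against each database's search documentation before reuse.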

We searched for references between April and August 2020, covering the publication period 2010–2020. Iterative searches showed that very few studies published before that period fit within the scope of this review.

2.3. Selection criteria

Within the scope of the present study, we employed the following inclusion criteria to determine the relevance of selected documents:

Application of Q as (one of the) main research technique(s);

Addressing topics around RGS livelihoods, with particular emphasis on any form of (smallholder) agriculture;

Direct involvement of RGS dwellers during the methodological cycle of Q, with a specific emphasis on smallholder farmers; and,

Given the incipient and unfamiliar use of Q in RGS settings, publication as a peer-reviewed scientific article in a SCImago-indexed journal, with emphasis on journals ranked in the Q1/Q2 quartiles.

Notwithstanding the above inclusion criteria, the final selection of studies was based on our judgement. In the discussion below, we left aside five studies that, although they fulfilled the criteria, lacked (Q) methodological clarity (Dingkuhn et al., 2020; Leong and Lejano, 2016; Nijnik et al., 2017) or considered RGS livelihoods from the perspective of non-rural actors (i.e., extension officers) (Bond, 2016; Easdale et al., 2020).
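The selection procedure above works as a conjunctive filter (every inclusion criterion must hold) followed by a judgement-based exclusion step. A minimal Python sketch of that two-step logic, under simplifying assumptions: the `Record` fields are hypothetical, each criterion is reduced to a yes/no flag (the "emphasis" qualifiers are ignored), and the judgement step is simply a set of record keys supplied by the reviewers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical screening record; each flag stands for one criterion."""
    key: str
    q_is_main_technique: bool    # criterion 1: Q as (one of the) main technique(s)
    rgs_livelihood_topic: bool   # criterion 2: topic around RGS livelihoods
    rgs_dwellers_involved: bool  # criterion 3: RGS dwellers directly involved
    peer_reviewed_scimago: bool  # criterion 4: peer-reviewed, SCImago-indexed


def meets_inclusion_criteria(record: Record) -> bool:
    """A record passes only if it satisfies every criterion."""
    return (record.q_is_main_technique
            and record.rgs_livelihood_topic
            and record.rgs_dwellers_involved
            and record.peer_reviewed_scimago)


def screen(records, excluded_by_judgement=frozenset()):
    """Keep criteria-compliant records not set aside by reviewer judgement."""
    return [r for r in records
            if meets_inclusion_criteria(r) and r.key not in excluded_by_judgement]
```

Keeping the judgement step as an explicit, separate input makes it easy to report which studies were set aside after passing the formal criteria, as done above for five studies.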

2.4. Analytical methods and abstracted data

We analyzed the selected documents through content analysis. Using this technique, we abstracted two types of information: descriptive information, and the effects and findings of each study (Snyder, 2019). The former comprised the general characteristics of the studies, that is, subject of study, category of Q study, (non-)open access, and the geographical foci of both the study areas and the researchers' affiliations. This information helped reveal possible underlying gaps in Q research between the Global South and the Global North. The latter consisted of Q methodological choices and their consequent findings, following the four methodological stages of Q outlined above (Figure 1), with special emphasis on fieldwork, that is, research design and data collection.

3. Main findings

We selected a total of 50 studies based on the above selection criteria. Table 1 summarizes the data extracted from these studies. The complete dataset with qualitative and quantitative information obtained during the semi-systematic review process can be found in Appendix B of the Data availability statement.

Table 1. Data extracted from the 50 selected studies.

3.1. Characteristics of studies

The selected studies belonged mainly to the subjects² of environmental studies (n = 15), conservation (n = 7), forests and forestry (n = 5), agriculture (n = 4), and international development (n = 4) (Figure 2a). Although rural studies have traditionally focused on these subjects (Strijker et al., 2020; Wang and Liu, 2014), this focus leaves aside other relevant yet still neglected (Q) research themes in the RGS, such as rural health, women's empowerment, food safety, environmental justice, responsible mechanization, and education. Furthermore, we categorized the 50 selected studies according to the Q themes proposed by Zabala et al. (2018). Most of these studies fall within the category of management alternatives (n = 36), followed by policy appraisals (n = 10) and conflict resolution (n = 4) (Figure 2b).

Figure 2. Characteristics of the selected studies. (a) Number of studies across disciplines of agriculture (AG), international development (ID), environmental studies (ES), conservation (CO), forests and forestry (FF), veterinary sciences (VS), and others (OT). (b) Number of studies per category of Q study [as defined by Zabala et al. (2018)]: conflict resolution (CR), management alternatives (MA) and policy appraisal (PA). (c) Number of studies published as (non-)open-access documents. (d) Number of studies per geographical region, across Australasia (AA), East Asia (EA), Eastern Europe (EE), Middle East (ME), Central America (CA), South America (SA), South Asia (SAs), Southeast Asia (SEA) and sub-Saharan Africa (SSA); solid dark gray, solid light gray and diagonal-line patterns on each bar represent the proportions of low-, lower-middle- and upper-middle-income countries, respectively.

![Figure 2](/cms/asset/cec50e90-2790-4c20-88d1-51e78b5a69f4/oass_a_2359018_f0002_b.jpg)

Only 16% (n = 8) of the selected papers were published as open access (Carmenta et al., 2017; Giannichi et al., 2018; Mayett-Moreno et al., 2017; Rust, 2017; Schuman et al., 2018; Sumberg et al., 2017; Truong et al., 2017; Truong et al., 2019) (Figure 2c). Given the financial, legal, and technical restrictions faced by low- and middle-income countries, open access to scientific knowledge and data is crucial for the development of their research (Arunachalam, 2017; Chan et al., 2005; Serwadda et al., 2018; Zachariah et al., 2014). It seems paradoxical that most of the selected studies, which could directly benefit (Q) researchers in Global South countries, are not (easily) accessible to these scholars.

3.2. Geographical foci

Despite their strong focus on RGS populations, only two publications (Schuman et al., 2018; Truong et al., 2019) were authored by researchers exclusively affiliated with institutions in their respective target countries. As illustrated on the world map in Figure 3, most studies were conducted by (main) authors exclusively (n = 25) or partially (n = 6) affiliated with organizations located in countries of the Global North. The selected studies showed a strong emphasis on Southeast Asia (n = 15), South America (n = 14), and sub-Saharan Africa (n = 9) (Figures 2d and 3). As represented in Figure 2d, only 10% of the studies (n = 5) (Hamadou et al., 2016; Hilhorst et al., 2012; Jiren et al., 2020; Stoudmann et al., 2017; Weldegiorgis and Ali, 2016) focused specifically on low-income countries, the lowest income category, in which the livelihoods of RGS dwellers face more profound subsistence challenges. Moreover, none of the studies focused on low-income countries were (exclusively) carried out by researchers and institutions within those countries' national boundaries, or by researchers and institutions from any other Global South country (Figure 3). This might reflect the access and equality issues that researchers from these geographical areas have to confront³. This clear decoupling between where a study is envisaged and carried out and where its data are collected could place further constraints on the (still limited) research capacities in the Global South. According to ESSENCE on Health Research (2014), research capacity building should be a long-term, explicit process that extends beyond the temporal scope of a single project or grant, whereas Shucksmith and Brown (2016) advocate the international co-production of knowledge among (non-)academic actors, with outputs that are more accessible and understandable to wider audiences.

In addition, this detachment, which leads to sporadic, spatially biased contacts, could undermine the robust relationships and trust between researchers and communities that are key requirements in rural studies (Chambers, 2017; Chambers, 1983).

Figure 3. Geographic location of main authors' affiliations and studies per theme of Q study [as defined by Zabala et al. (2018)].

![Figure 3](/cms/asset/23825b8c-fea4-4660-bdb6-90871eb7bedb/oass_a_2359018_f0003_c.jpg)

3.3. Research design

3.3.1. Concourse development

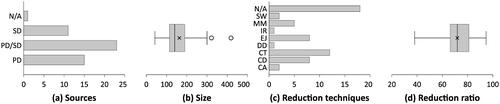

A minority (n = 15) of the selected studies relied purely on primary data to develop their concourse, collected either exclusively for their respective studies (Brannstrom, 2011; Frate and Brannstrom, 2015; Kopytko and Pruneddu, 2018; Mayett-Moreno et al., 2017; Rodriguez-Piñeros et al., 2012; Rodríguez-Piñeros et al., 2018; Schuman et al., 2018; Stoudmann et al., 2017; Truong et al., 2017; Truong et al., 2019; Vargas et al., 2019) or as part of a larger umbrella project (Lairez et al., 2020; Nordhagen et al., 2017; Pirard et al., 2016; Schneider et al., 2015). Moreover, only five of these studies relied solely on RGS dwellers (Mayett-Moreno et al., 2017; Rodriguez-Piñeros et al., 2012; Schuman et al., 2018; Stoudmann et al., 2017; Truong et al., 2017). Approximately half of the studies (n = 23) employed a mixed primary/secondary data approach (Alexander et al., 2018; Astari and Lovett, 2019; Bumbudsanpharoke et al., 2009; Cammelli et al., 2019; Carmenta et al., 2017; Forouzani et al., 2013; Giannichi et al., 2018; Hamadou et al., 2016; Hilhorst et al., 2012; Hugé et al., 2016; Jaung et al., 2016; Jiren et al., 2020; Lansing, 2013; Rodríguez-Piñeros and Mayett-Moreno, 2015; Rust, 2017; Sumberg et al., 2017; Taheri et al., 2020; Tuokuu et al., 2019; Weldegiorgis and Ali, 2016; Wijaya and Offermans, 2019; Yeboah et al., 2017; Zabala et al., 2017; Zobeidi et al., 2016), whereas 11 studies used only secondary data (Anderson and Jacobson, 2018; Barbosa et al., 2020; Huaranca et al., 2019; Leite et al., 2019; Moros et al., 2020; Nguyen et al., 2018; Nhem and Lee, 2020; Nhem and Lee, 2019; Pereira et al., 2016; Rijneveld and Marhaento, 2020; Vela-Almeida et al., 2018) (Figure 4a). The concourses varied in size from as few as 42 statements (Brannstrom, 2011) to as many as 419 (Bumbudsanpharoke et al., 2009) (Figure 4b).

Figure 4. Characteristics of the concourse. (a) Number of studies per source for concourse construction, based on primary data (PD), secondary data (SD) and mixed sources (PD/SD). (b) Size of the constructed concourse in number of statements across studies. (c) Number of studies per concourse reduction technique, comprising software (SW), matrix method (MM), iterative refinement (IR), expert judgement (EJ), division in discourses (DD), categorization (CT), combination and deletion of similar statements (CD), and content analysis (CA). (d) Concourse reduction ratio across studies, expressed as the percentage reduction from the concourse to the Q-set.

The development of the concourse requires time and rigor to ensure that the eventual Q-set represents an acceptable range of the voices involved in the topic under study (Simons, 2013; Watts and Stenner, 2012). Although the concourse can be built purely from secondary data (Donner, 2001), it makes sense to incorporate primary data to ensure proper representation of the range of discourses (Simons, 2013). When addressing understudied topics, geographic areas, and/or human groups, collecting primary data from RGS dwellers for concourse development might become the only (or at least the main) option. Seven studies (Nordhagen et al., 2017; Rodriguez-Piñeros et al., 2012; Rodríguez-Piñeros et al., 2018; Schuman et al., 2018; Stoudmann et al., 2017; Truong et al., 2017; Truong et al., 2019) are remarkable examples of such cases, especially because of their exhaustive primary data sources. In certain cases, however, RGS dwellers may live in areas so remote that they are almost unreachable, or their political-cultural values or legal status could hide potential participants (e.g., lower-caste individuals, refugees and displaced groups, women in particular societies, individuals involved in illegal activities). Moreover, relying purely on primary data for the concourse is neither always feasible nor necessarily the best approach when (financial) resources are a main limiting factor (Barbosa et al., 2020; Schneider et al., 2015) or when participants are difficult to (re)visit (Giannichi et al., 2018; Kopytko and Pruneddu, 2018; Schneider et al., 2015; Truong et al., 2019; Yeboah et al., 2017).

Considering these possible limitations, three strategies for concourse development are worth considering. First, (partially) resort to reliable secondary data, particularly if produced for the same study area or population. Second, reuse primary data from previous fieldwork activities, especially when these were part of a larger research program, as done by Alexander et al. (2018), Cammelli et al. (2019), and Schneider et al. (2015). Third, build the concourse from the discourses of proxies (i.e., experts, advisors, scholars, etc.), as reported in several studies (Astari and Lovett, 2019; Hamadou et al., 2016; Jiren et al., 2020; Kopytko and Pruneddu, 2018; Pirard et al., 2016; Rust, 2017; Taheri et al., 2020; Truong et al., 2019; Wijaya and Offermans, 2019), although researchers should be aware that this may compromise the accuracy and representativeness of the viewpoints (Cobb, 2018).

3.3.2. Concourse reduction

There is no specific recipe or fixed methodology for reducing the collected concourse to a set of statements, let alone for determining the number of statements a study requires. An appropriate approach is to consider the coverage and balance of the statements, so that they are as representative and balanced as possible across the different discourses (Watts and Stenner, 2012; Zabala et al., 2018). The reduction process should not eliminate any relevant statement from a given discourse, as this would introduce biases into the subsequent sorts. It is worth recalling here that the Q-set aims to enable combinations of statements that express diverse perspectives; the statements should therefore be sufficiently diverse in themselves, but they need not individually cover every possible perspective.

The largest group of the selected studies (n = 10) (Brannstrom, 2011; Huaranca et al., 2019; Hugé et al., 2016; Jiren et al., 2020; Lansing, 2013; Rodriguez-Piñeros et al., 2012; Truong et al., 2019; Vargas et al., 2019; Weldegiorgis and Ali, 2016; Zabala et al., 2017) relied on categorization, that is, classifying statements into different categories within the identified discourses, to filter statements out of the general concourse. Other studies (Barbosa et al., 2020; Giannichi et al., 2018; Nhem and Lee, 2019; Nordhagen et al., 2017; Rust, 2017; Tuokuu et al., 2019) applied a basic method of combining similar statements and deleting duplicate, redundant, and/or unclear ones. Further methods for selecting statements were expert judgement alone (Alexander et al., 2018; Anderson and Jacobson, 2018; Frate and Brannstrom, 2015; Nhem and Lee, 2019; Pirard et al., 2016; Rodríguez-Piñeros et al., 2018), the matrix method (Astari and Lovett, 2019; Bumbudsanpharoke et al., 2009; Cammelli et al., 2019; Forouzani et al., 2013; Zobeidi et al., 2016), content analysis (Mayett-Moreno et al., 2017; Rodríguez-Piñeros and Mayett-Moreno, 2015), division of statements according to the identified discourses (Astari and Lovett, 2019), and funnel-like iterative refinement (Carmenta et al., 2017). Other authors (Moros et al., 2020; Taheri et al., 2020; Wijaya and Offermans, 2019) employed combinations of these techniques. Moreover, Astari and Lovett (2019) and Rodríguez-Piñeros et al. (2018) were the only studies that used dedicated qualitative data analysis software (NVivo 11 and ATLAS.ti 7.5.9, respectively) to select statements systematically (Figure 4c).
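Combining similar statements and deleting duplicates is largely a matter of researcher judgement, but near-identical wordings can be flagged automatically beforehand. A minimal Python sketch, assuming statements are plain strings and that character-level similarity from the standard `difflib` module is an acceptable proxy for redundancy. It cannot detect statements that express the same idea in different words, so its output still needs manual review; the 0.9 threshold is an illustrative assumption, not a value reported by the reviewed studies.

```python
import difflib
import re


def normalise(statement: str) -> str:
    """Lower-case, drop punctuation and collapse whitespace before comparing."""
    without_punct = re.sub(r"[^\w\s]", "", statement.lower())
    return " ".join(without_punct.split())


def drop_near_duplicates(statements, threshold=0.9):
    """Keep a statement only if it is not too similar to one already kept.

    Similarity is difflib's SequenceMatcher ratio on normalised text;
    the first occurrence of each near-duplicate group is retained.
    """
    kept = []
    for statement in statements:
        candidate = normalise(statement)
        if all(difflib.SequenceMatcher(None, candidate, normalise(k)).ratio() < threshold
               for k in kept):
            kept.append(statement)
    return kept
```

For example, "Land titles give us security." and "land titles give us security" collapse to a single statement, while an unrelated statement is kept.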

Q studies dealing with conflict resolution may produce an unbalanced representation of discourses when reducing the concourse, typically in favor of the most powerful voices. This can be exacerbated when less-empowered RGS individuals are involved alongside stronger actors (Vela-Almeida et al., 2018; Weldegiorgis and Ali, 2016). Here, the matrix method is particularly useful, as it aims to capture several dimensions of both discourses and categories of statements, thereby ensuring representativeness across viewpoints. Three studies (Astari and Lovett, 2019; Forouzani et al., 2013; Zobeidi et al., 2016) enriched this technique with political discourse theory, as explained by Dryzek and Berejikian (1993).
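The matrix method can be pictured as a grid that crosses discourses with categories of statements, with the same number of statements taken from each cell so that no discourse dominates. A minimal Python sketch under stated assumptions: each concourse statement already carries a discourse label and a category label, and statements within a cell are drawn at random, whereas the reviewed studies may have chosen them by judgement. Labels such as `D1` or `C1` in the test are hypothetical.

```python
import itertools
import random
from collections import defaultdict


def matrix_sample(concourse, discourses, categories, per_cell, seed=0):
    """Draw `per_cell` statements from every (discourse, category) cell.

    `concourse` holds (statement, discourse, category) tuples. A cell with
    too few statements raises ValueError, so gaps are filled by the
    researcher instead of silently under-representing a viewpoint.
    """
    cells = defaultdict(list)
    for statement, discourse, category in concourse:
        cells[(discourse, category)].append(statement)

    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    q_set = []
    for cell in itertools.product(discourses, categories):
        pool = cells.get(cell, [])
        if len(pool) < per_cell:
            raise ValueError(f"cell {cell} has {len(pool)} statements, needs {per_cell}")
        q_set.extend(rng.sample(pool, per_cell))
    return q_set
```

With two discourses, two categories and `per_cell=2`, for instance, the result is an eight-statement Q-set with exactly two statements from every cell. Raising an error on thin cells, rather than taking whatever is available, is a deliberate choice: it surfaces the kind of imbalance toward powerful voices described above.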

There is no ideal concourse reduction percentage; it largely depends on the concourse type, the number of sources, and the amount of information extracted into the initial statements. Accordingly, this percentage varied across the selected studies. Of the 23 studies that provided sufficient information to calculate this reduction, two (Anderson and Jacobson, 2018; Brannstrom, 2011), 13 (Forouzani et al., 2013; Giannichi et al., 2018; Hugé et al., 2016; Lansing, 2013; Nhem and Lee, 2020; Nhem and Lee, 2019; Pereira et al., 2016; Rust, 2017; Taheri et al., 2020; Tuokuu et al., 2019; Vargas et al., 2019; Zabala et al., 2017; Zobeidi et al., 2016), six (Barbosa et al., 2020; Frate and Brannstrom, 2015; Moros et al., 2020; Rodriguez-Piñeros et al., 2012; Rodríguez-Piñeros et al., 2018; Wijaya and Offermans, 2019), and two (Bumbudsanpharoke et al., 2009; Leite et al., 2019) reported reductions of <50%, 50%–75%, 75%–90%, and >90%, respectively (Figure 4d).
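The reduction percentage can be read as the share of concourse statements that did not make it into the Q-set, i.e., 100 × (concourse size − Q-set size) / concourse size. A small Python sketch of that arithmetic, assuming this is how the percentages in Figure 4d were computed and assuming how values falling exactly on a band boundary are assigned; the example sizes are hypothetical and not taken from any reviewed study.

```python
def reduction_percentage(concourse_size: int, q_set_size: int) -> float:
    """Percentage by which the concourse shrinks to become the Q-set."""
    if not 0 < q_set_size <= concourse_size:
        raise ValueError("need 0 < Q-set size <= concourse size")
    return 100.0 * (concourse_size - q_set_size) / concourse_size


def reduction_band(percent: float) -> str:
    """Bin a reduction into the bands used above (boundary handling assumed)."""
    if percent < 50:
        return "<50%"
    if percent < 75:
        return "50%-75%"
    if percent <= 90:
        return "75%-90%"
    return ">90%"
```

For instance, a hypothetical 200-statement concourse cut to a 40-statement Q-set gives an 80% reduction, which falls in the 75%–90% band.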

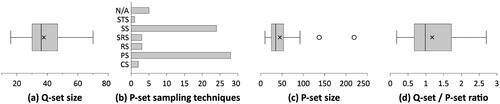

3.3.3. Q-set (size)

The size of the Q-set across studies ranged from 16 to 70 statements, although most comprised around 30–50 (Figure 5a). The decision on Q-set size should not be underestimated, nor should it be treated as a mere output of the concourse reduction process. On the one hand, some authors have reported ideal sizes as large as 40–80, ≥40, ≥60 and 60–90 statements (Watts and Stenner, 2012). On the other hand, large Q-sets lengthen the (already time-consuming) sorting process, possibly discouraging respondents and eventually increasing the dropout rate (Previte et al., 2007; Simons, 2013; Stone et al., 2017). In light of these two opposing positions, and considering RGS-related constraints for Q (e.g., illiteracy, improper site conditions, exposure to the elements), researchers may be inclined to keep the number of statements low (Alexander et al., 2018; Cammelli et al., 2019; Nordhagen et al., 2017; Sumberg et al., 2017), provided this does not compromise the representativeness of the discourses.

Figure 5. Characteristics of research design. (a) Q-set size in number of statements across studies. (b) Number of studies per P-set sampling technique, including convenience sampling (CS), purposive sampling (PS), random sampling (RS), stratified random sampling (SRS), snowball sampling (SS) and structured sampling (STS). (c) P-set size in number of participants across studies. (d) Q-set/P-set ratio across studies.

3.3.4. Q-set (presentation of statements)

The vast majority of the selected studies (n = 47) presented statements solely in written form. The exceptions are Carmenta et al. (2017), which also included images (of unspecified type) next to the written statements; Barbosa et al. (2020), which suggests the use of illustrations along with the wording; and Alexander et al. (2018), the only study conducted with a photo-based Q-set supported by proxy statements. The latter format was intentionally chosen, along with just 16 statements, to reduce the complexity of engaging semi-literate Laotian farmers. Researchers may encounter other potential limitations besides illiteracy. For instance, participants with visual conditions (e.g., visual impairment or color blindness) would require visual items to be carefully designed. Some authors have employed high-contrast designs and even statements written in Braille (Huang and Yu, 2013; Salaj and Kiš-Glavaš, 2017), whereas others advocate non-conventional audiovisual Q-sets (Nazariadli et al., 2019). Notably, the latter are usually tied to digital tools and software such as VQMethod (Nazariadli, 2020), whose availability and/or applicability could be limited in RGS contexts.

Most of the selected studies (n = 36) presented their statements in a Latin-script language (Afaan Oromo, English, French, Malagasy, Malay, Indonesian, Kinyarwanda, Portuguese, Spanish, Tok Pisin, Tswana, and Afrikaans). Of these, (Astari and Lovett, Citation2019) and (Hugé et al., Citation2016) worked with a combination of Indonesian/English and Malay/English, respectively, whereas (Stoudmann et al., Citation2017) used a unique successive translation through French, Malagasy, the Sihanaka dialect, and the Betsimisaraka dialect. In contrast, 14 studies (Alexander et al., Citation2018; Bumbudsanpharoke et al., Citation2009; Forouzani et al., Citation2013; Hilhorst et al., Citation2012; Hu et al., Citation2018; Kopytko and Pruneddu, Citation2018; Lairez et al., Citation2020; Nguyen et al., Citation2018; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Taheri et al., Citation2020; Truong et al., Citation2017; Truong et al., Citation2019; Zobeidi et al., Citation2016) were conducted in non-Latin-script languages (Khmer, Lao, Mandarin, Nepali, Persian, Thai, and Ukrainian). Although non-Latin scripts do not seem to pose any inconvenience for administering hand-written Q-sets, they may well impose further limitations on researchers wishing to rely on digital/electronic platforms and tools (Nazariadli, Citation2020; University of Birmingham, Citation2010; SurveyMonkey, Citation2020; Pruneddu, Citation2011). For example, current popular software offers limited (or no) support for certain non-Latin scripts, which tend to belong to Global South cultures. For some Asian languages, complex and rare characters are not even defined in digital systems (Lee, Citation2019). Routine operations, such as moving files of written statements across platforms and between different software products (word processing, spreadsheets, design, CAD, etc.), may silently alter non-Latin characters, for instance when a program reads a file with the wrong character encoding, thus rendering statements meaningless. This digital constraint might further limit the applicability of the aforementioned inclusive audiovisual tools.
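The encoding problem can be illustrated with a minimal Python sketch. It assumes, hypothetically, that statements are saved as UTF-8 and later opened by a tool that expects a Western single-byte encoding (Latin-1 or Windows-1252); the Thai word is only an example (การเกษตร, 'agriculture'). Misreading the bytes produces unreadable wording that can still be reversed, whereas saving the text in a code page that lacks the script replaces every character and loses the statement for good.

```python
def misread(text: str, wrong_codec: str = "latin-1") -> str:
    """Simulate a tool that opens UTF-8 bytes with the wrong codec."""
    return text.encode("utf-8").decode(wrong_codec)


def undo_misread(garbled: str, wrong_codec: str = "latin-1") -> str:
    """Reverse a misread; possible only because no bytes were lost."""
    return garbled.encode(wrong_codec).decode("utf-8")


def survives(text: str, codec: str) -> bool:
    """True if `text` can be saved in `codec` without losing characters."""
    try:
        text.encode(codec)
        return True
    except UnicodeEncodeError:
        return False


statement = "การเกษตร"  # Thai example word (illustrative only)

garbled = misread(statement)
print(garbled)                              # Latin-looking debris, not Thai
print(undo_misread(garbled) == statement)   # True: the bytes were intact
print(survives(statement, "utf-8"))         # True
print(survives(statement, "cp1252"))        # False: no Thai in this code page
print(statement.encode("cp1252", errors="replace"))  # b'????????': text is lost
```

Keeping Q-set files in UTF-8 end to end, and checking a sample of statements after every conversion step, is a cheap safeguard under these assumptions.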

Using only a national/official language and/or lingua franca, even among native speakers, does not by itself guarantee accuracy or reduce bias. (Pirard et al., Citation2016) highlighted that language could be an issue across the several ethnic groups in their study area; although they relied on a lingua franca (Bahasa Indonesia, in this case), respondents still showed different levels of fluency. Other authors (Schuman et al., Citation2018; Stoudmann et al., Citation2017), who had to deal with successive translations through a series of languages and dialects, resorted to the committee approach [as defined in (Buil et al., Citation2012)], in which researchers and translators discussed the most suitable terms for each statement, thereby reducing the likelihood of misinterpretation. Although (Barbosa et al., Citation2020) and (Zabala et al., Citation2017) conducted their studies in Brazil and Mexico with research teams composed entirely of native Portuguese and Spanish speakers, respectively, they took special care to adapt the statements to local terms through extensive iterative piloting with on-site experts and community members.

3.3.5. P-set (sampling techniques)

The P-sets were mainly sampled through purposive sampling (n = 15) (Bumbudsanpharoke et al., Citation2009; Carmenta et al., Citation2017; Forouzani et al., Citation2013; Frate and Brannstrom, Citation2015; Hamadou et al., Citation2016; Huaranca et al., Citation2019; Jiren et al., Citation2020; Lansing, Citation2013; Mayett-Moreno et al., Citation2017; Nhem and Lee, Citation2020; Rodríguez-Piñeros et al., Citation2018; Rodríguez-Piñeros and Mayett-Moreno, Citation2015; Tuokuu et al., Citation2019; Wijaya and Offermans, Citation2019; Zabala et al., Citation2017), snowball sampling (n = 11) (Alexander et al., Citation2018; Cammelli et al., Citation2019; Hugé et al., Citation2016; Leite et al., Citation2019; Nguyen et al., Citation2018; Nhem and Lee, Citation2019; Schneider et al., Citation2015; Stoudmann et al., Citation2017; Sumberg et al., Citation2017; Vela-Almeida et al., Citation2018; Yeboah et al., Citation2017), and dual-method approaches, usually a combination of the first two (n = 10) (Brannstrom, Citation2011; Giannichi et al., Citation2018; Jaung et al., Citation2016; Kopytko and Pruneddu, Citation2018; Moros et al., Citation2020; Rust, Citation2017; Schuman et al., Citation2018; Taheri et al., Citation2020; Weldegiorgis and Ali, Citation2016; Zobeidi et al., Citation2016), or one of them combined with convenience sampling (Anderson and Jacobson, Citation2018; Barbosa et al., Citation2020), random sampling (Nordhagen et al., Citation2017; Truong et al., Citation2019; Vargas et al., Citation2019), or stratified random sampling (Pereira et al., Citation2016). (Hilhorst et al., Citation2012; Pirard et al., Citation2016) and (Truong et al., Citation2017) are the only studies that relied exclusively on stratified random sampling and structured sampling, respectively.
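For readers less familiar with the probability-based alternatives, stratified random sampling can be sketched in a few lines of Python. The register, the `gender` field, and the equal allocation per stratum are hypothetical assumptions for illustration, not the procedure of any reviewed study; equal allocation is one way of building gender balance into a P-set by design rather than leaving it to referral chains.

```python
import random


def stratified_sample(population, key, per_stratum, seed=0):
    """Draw up to `per_stratum` people at random from each stratum.

    population: list of dicts describing potential respondents (hypothetical)
    key: field that defines the strata, e.g. "gender" or "village"
    """
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    strata = {}
    for person in population:
        strata.setdefault(person[key], []).append(person)
    sample = []
    for name in sorted(strata):
        members = strata[name]
        # Small strata are taken whole rather than raising an error.
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


# Hypothetical village register: 30 men and 10 women.
register = [{"id": f"M{i}", "gender": "male"} for i in range(30)] + [
    {"id": f"W{i}", "gender": "female"} for i in range(10)
]
p_set = stratified_sample(register, key="gender", per_stratum=5)
print(sum(p["gender"] == "female" for p in p_set))  # 5
print(len(p_set))                                   # 10
```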

Both purposive and snowball sampling are practical methods for recruiting potential Q respondents. The selected studies applied these techniques by relying on contacts with governmental representatives (Alexander et al., Citation2018; Forouzani et al., Citation2013; Nguyen et al., Citation2018), (local) organizations (Anderson and Jacobson, Citation2018; Cammelli et al., Citation2019; Giannichi et al., Citation2018; Jiren et al., Citation2020; Leite et al., Citation2019; Nhem and Lee, Citation2019; Schneider et al., Citation2015), local experts (Jaung et al., Citation2016), local community leaders (Alexander et al., Citation2018; Cammelli et al., Citation2019; Forouzani et al., Citation2013; Hugé et al., Citation2016; Nguyen et al., Citation2018; Nhem and Lee, Citation2020; Nordhagen et al., Citation2017; Stoudmann et al., Citation2017), and recruited respondents themselves (Barbosa et al., Citation2020; Hugé et al., Citation2016; Schuman et al., Citation2018; Vela-Almeida et al., Citation2018). Their main shortcoming is that researchers may end up with undesirably homogeneous P-sets (Truong et al., Citation2017; Watts & Stenner, Citation2012; Watts and Stenner, Citation2005) because recruitment follows existing networks (Cohen and Arieli, Citation2011; Sadler et al., Citation2010). This homogeneity can ultimately leave hard-to-reach RGS respondents aside (Woodley and Lockard, Citation2016), possibly biasing the analyzed viewpoints. For instance, although (Pereira et al., Citation2016; Truong et al., Citation2019) aimed at a gender-balanced P-set, their snowball sampling resulted only in male respondents owing to a lack of engagement with/of women. (Schneider et al., Citation2015) acknowledged potential biases among respondents because of their closeness to a local farmers’ aid organization. (Stoudmann et al., Citation2017) reported that snowballing through village heads was a matter of cultural etiquette, which could lead to other, unforeseen cultural interactions. (Truong et al., Citation2017) remarked that sampling through key local informants resulted in a limited representation of certain perspectives, thereby hampering their interpretation. Variations of snowball sampling may help reduce these biases, for instance, turning initial key informants from selectors of respondents into legitimators who vouch for the study as word of it spreads (Sadler et al., Citation2010), or increasing the trust of the desired networks by emphasizing the integrity, transparency, and sensitivity of (local) researchers (Cohen and Arieli, Citation2011).
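The homogeneity mechanism can be made concrete with a minimal Python sketch that treats snowball sampling as a breadth-first traversal of a referral network. The network is entirely hypothetical: referrals stay within two groups (labelled M and W) that share no ties, so a chain started from a single seed never reaches the other group, however large the target sample.

```python
from collections import deque


def snowball_sample(referrals: dict[str, list[str]], seeds: list[str], max_size: int) -> list[str]:
    """Breadth-first snowball sampling: each recruit names further contacts."""
    sampled: list[str] = []
    seen = set(seeds)
    queue = deque(seeds)
    while queue and len(sampled) < max_size:
        person = queue.popleft()
        sampled.append(person)
        for contact in referrals.get(person, []):
            if contact not in seen:  # avoid recruiting the same person twice
                seen.add(contact)
                queue.append(contact)
    return sampled


# Hypothetical village network: referrals never cross between groups.
referrals = {
    "M1": ["M2", "M3"], "M2": ["M4"], "M3": ["M4", "M5"], "M4": [], "M5": [],
    "W1": ["W2"], "W2": ["W3"], "W3": [],
}
print(snowball_sample(referrals, seeds=["M1"], max_size=10))
# ['M1', 'M2', 'M3', 'M4', 'M5']
print(snowball_sample(referrals, seeds=["M1", "W1"], max_size=10))
# ['M1', 'W1', 'M2', 'M3', 'W2', 'M4', 'M5', 'W3']
```

Seeding the chain in both groups, for example through a women's association as well as village heads, is one way to diversify the P-set, although real referral networks are rarely this cleanly separated.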

3.3.6. P-set (size)

Q relies not on large P-sets but on the diversity of viewpoints they contain (Simons, Citation2013; Stenner et al., Citation2017; Watts & Stenner, Citation2012). Hence, there is no ideal minimum number of participants. According to (Watts & Stenner, Citation2012), some authors advocate ranges of 40–60 participants, whereas others favor Q-set/P-set ratios higher than 1, that is, fewer respondents than statements. P-set sizes across the selected studies ranged from 10 to 219, although most were concentrated around 30–50. The Q-set/P-set ratios varied from 0.18 to 2.70, with most around 1.0–1.5. Only two of the selected studies elaborated on this ratio, and they took opposing views: (Jaung et al., Citation2016) appealed to the <1 ratio as an indicator of ideal P-set size, whereas (Wijaya and Offermans, Citation2019) pointed out that a ratio larger than 1 would have increased the likelihood of finding a correlation between loaded respondents.
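As a worked example of the ratio discussed above, the following Python sketch divides the number of statements by the number of participants; the study sizes are hypothetical. A Q-set of 40 statements sorted by 30 participants gives a ratio of about 1.33, inside the 1.0–1.5 band where most of the selected studies fall and above the threshold of 1 favored by some authors.

```python
def q_to_p_ratio(n_statements: int, n_participants: int) -> float:
    """Q-set/P-set ratio: statements per participant."""
    if n_participants <= 0:
        raise ValueError("the P-set must contain at least one participant")
    return n_statements / n_participants


def fewer_participants_than_statements(n_statements: int, n_participants: int) -> bool:
    """True when the ratio exceeds 1, as some authors recommend."""
    return q_to_p_ratio(n_statements, n_participants) > 1


print(round(q_to_p_ratio(40, 30), 2))             # 1.33
print(fewer_participants_than_statements(40, 30))  # True
```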

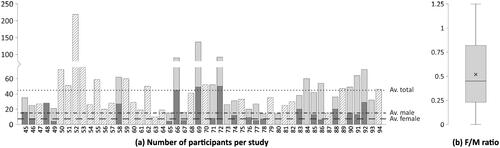

3.3.7. P-set (gender)

Gender representativeness, particularly concerning women’s participation, did not emerge as an active P-set criterion across the selected studies. The aggregated female/male ratiosFootnote4 of the selected studies revealed that female participants typically numbered about half as many as male participants. Notable exceptions are (Barbosa et al., Citation2020), which focused exclusively on a female phenomenon, and (Leite et al., Citation2019; Mayett-Moreno et al., Citation2017; Rodríguez-Piñeros and Mayett-Moreno, Citation2015), which considered a strong gender dimension in conducting their studies and interpreting viewpoints. In addition, only two studies (Stoudmann et al., Citation2017; Vargas et al., Citation2019) were explicit about gender balance, whereas (Pirard et al., Citation2016; Sumberg et al., Citation2017; Tuokuu et al., Citation2019; Weldegiorgis and Ali, Citation2016; Wijaya and Offermans, Citation2019; Yeboah et al., Citation2017) aimed for gender diversity and adequate representation of women.

Figure 6. P-set size and gender. (a) Number and gender of participants in each of the 50 studies. Numbers on the X-axis correspond to the references. Solid dark gray, solid light gray, and diagonal-line patterns on each bar represent the proportions of female, male, and gender-unspecified participants, respectively. Dashed, dotted-dashed, and dotted lines represent the average numbers of female, male, and total participants across studies, respectively. (b) Female/male ratio across the selected studies. Barbosa et al. (Citation2020) considered female participants only and is therefore not represented here.
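The per-study ratio in panel (b), and the reason a female-only study drops out of it, can be sketched in Python. The study names and counts are hypothetical, gender-unspecified participants are assumed to be excluded, and the median is only one possible summary; it is not necessarily how the aggregated ratios above were computed.

```python
from statistics import median


def female_male_ratios(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Female/male ratio per study, given (female, male) counts.

    Studies without male participants are skipped because the ratio is
    undefined (division by zero), as with a female-only P-set.
    """
    return {study: f / m for study, (f, m) in counts.items() if m > 0}


counts = {  # hypothetical (female, male) counts
    "Study A": (10, 20),
    "Study B": (15, 15),
    "Study C": (0, 25),   # male-only P-set: ratio 0
    "Study D": (30, 0),   # female-only P-set: excluded
}
ratios = female_male_ratios(counts)
print(ratios)                   # {'Study A': 0.5, 'Study B': 1.0, 'Study C': 0.0}
print(median(ratios.values()))  # 0.5
```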

Four studies (Pereira et al., Citation2016; Schneider et al., Citation2015; Taheri et al., Citation2020; Zabala et al., Citation2017) relied exclusively on male participants. These numbers should not be attributed solely to a lack of awareness among researchers; they may also reflect political and cultural norms. For instance, (Pereira et al., Citation2016; Truong et al., Citation2019) pointed out that, although some women had a stronger (legal) attachment to their farms, they declined to respond in favor of their husbands. Schneider et al. (Citation2015) and Wijaya and Offermans (Citation2019) indicated that women were too shy to talk or faced cultural constraints, ultimately declining to participate. Conversely, Vargas et al. (Citation2019) highlighted a higher number of female participants without offering any plausible explanation, whereas (Nordhagen et al., Citation2017) argued that the frequent absence of men from the village/farm slightly skewed participation toward women. Given the particular challenges RGS women face in accessing resources (Poole, Citation2017; Giordano et al., Citation2019), gender imbalance can introduce further biases and/or leave researchers with an incomplete picture of the topic they seek to understand. Therefore, it is key for Q researchers in RGS settings to adopt cross-cutting, gender-sensitive approaches, particularly when working in male-dominated societies.

3.3.8. Sorting grid

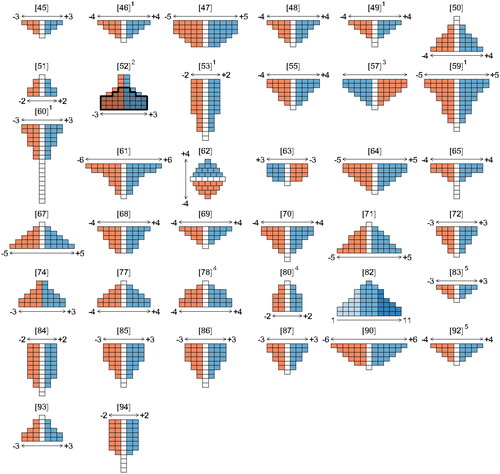

There are no fixed rules for determining the sorting grid on which the Q-set must be sorted. Typical shapes include quasi-normal (pyramid) and inverted quasi-normal (inverted pyramid) forced-sorting grids; 17 of the selected studies used the former and 20 the latter. In contrast, Jiren et al. (Citation2020) used a unique double-pyramid, or diamond, shape. Unlike typical grids, this matrix inverts the axes: the ranking runs along a vertical scale, whereas each row, distributed symmetrically, holds statements with the same value. A non-forced grid was used in Rijneveld and Marhaento (Citation2020) (not depicted), although the authors did not explain the reason for its use (nor the subsequent analytical process). Lansing (Citation2013) piloted a non-forced distribution that was discarded in favor of a forced grid, arguing that the forced approach led participants to reflect more while sorting. In contrast, Hugé et al. (Citation2016) allowed their respondents to deviate from the forced distribution to help them cope with difficult decisions while sorting.

Figure 7. Sorting grids of the selected studies. 1(Anderson and Jacobson, Citation2018; Brannstrom, Citation2011; Forouzani et al., Citation2013; Huaranca et al., Citation2019; Hugé et al., Citation2016) did not report the orientation of their grids; they were assumed to be inverted distributions. 2The shaded area with a thicker border represents the second grid used in (Carmenta et al., Citation2017). 3(Hilhorst et al., Citation2012) did not use a quantitative scale; instead, its authors reported graphical hints (happy/sad faces). 4The reported grids of (Rodríguez-Piñeros and Mayett-Moreno, Citation2015; Schneider et al., Citation2015) did not match their respective numbers of statements. 5(Sumberg et al., Citation2017; Yeboah et al., Citation2017) used two grids; information was provided for only one of them.
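Note 4 shows that a reported grid can fail to match its Q-set. A quick consistency check, sketched here in Python, can catch such mismatches before fieldwork; the column capacities are hypothetical, and an even number of columns is flagged only as a note, since even scales were used in two of the selected studies.

```python
def check_grid(capacities: list[int], n_statements: int) -> list[str]:
    """Return a list of issues with a forced sorting grid (empty if none).

    capacities: number of cards allowed in each column, from the most
    negative to the most positive sorting point.
    """
    issues = []
    if sum(capacities) != n_statements:
        issues.append(
            f"grid holds {sum(capacities)} cards but the Q-set has {n_statements}"
        )
    if capacities != capacities[::-1]:
        issues.append("column capacities are not symmetric")
    if len(capacities) % 2 == 0:
        issues.append("even number of columns: no central neutral column")
    return issues


grid = [2, 3, 4, 5, 6, 5, 4, 3, 2]  # nine points, -4 to +4 (hypothetical)
print(check_grid(grid, 34))  # []
print(check_grid(grid, 36))  # ['grid holds 34 cards but the Q-set has 36']
```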

The shape of the sorting grid does not influence the reliability of the method. The forced distribution should be considered a mere device to encourage respondents to examine each item systematically (McKeown and Thomas, Citation1988; Watts & Stenner, Citation2012). However, unless properly designed and explained, the (inverted) pyramidal shape, with the largest number of statements in the central column, might give participants the impression that the apex of the pyramid should hold the most important statement(s). From this perspective, the diamond grid used by (Jiren et al., Citation2020) would offer a more natural, easy-to-read, top-to-bottom hierarchy, which can be further reinforced by graphical hints or ideograms (e.g., sad/happy faces) depicting the degrees of agreement along the ranking scale (Cammelli et al., Citation2019; Hilhorst et al., Citation2012; Schneider et al., Citation2015).

The sorting grids in Q are structured around two ordinal scales: qualitative and quantitative. The former typically consists of verbal labels measuring the level of agreement. The latter, matching the qualitative scale, generally uses odd, symmetric scales [(Carmenta et al., Citation2017; Pirard et al., Citation2016) being rare even-scale exceptions] with negative and positive sides and several sorting points, whose center corresponds to the neutral position, also referred to as ‘distensive zero’ (Watts & Stenner, Citation2012). Most of the selected studies (n = 39) employed (variations of) the typical disagree/agree qualitative scale. Others resorted to (variations of) scales of importance (Alexander et al., Citation2018; Anderson and Jacobson, Citation2018; Carmenta et al., Citation2017; Jiren et al., Citation2020; Nguyen et al., Citation2018), effectiveness (Carmenta et al., Citation2017; Tuokuu et al., Citation2019), (dis)approval (Schneider et al., Citation2015), affection (Rodríguez-Piñeros et al., Citation2018), and self-identification (Mayett-Moreno et al., Citation2017; Rodriguez-Piñeros et al., Citation2012; Rodríguez-Piñeros and Mayett-Moreno, Citation2015). The last group employed highly personal wording (I don’t identify with/I identify with, Unlike me/Like me), even though these studies examined perceptions of external phenomena (i.e., sustainable management of a community-owned forest reserve and related tourism infrastructure) rather than deeply personal matters. In these cases, sorting impersonal statements such as ‘Agriculture is not profitable’ (Mayett-Moreno et al., Citation2017), ‘The reserve should have more wild animals’ (Rodriguez-Piñeros et al., Citation2012), or ‘Ecotourism is a way to preserve the forest’ (Rodríguez-Piñeros and Mayett-Moreno, Citation2015) could confuse respondents. Researchers should pay close attention to possible mismatches between the wording of statements and the grid’s qualitative scale, to prevent respondents from being biased by a false sense of doubt or neutrality.

The quantitative scales of the selected studies ranged from 5 to 13 points, although most were concentrated on seven (n = 13) and nine (n = 19) points. Although the number of sorting points lengthens or shortens the continuum along which respondents make ranking decisions on a given Q-set (Watts & Stenner, Citation2012), little has been said about its impact on the difficulty of the sorting process. Presumably, the more sorting points are offered, the more time the respondent will take to position each statement, and consequently, the more burdensome the process may become. In turn, this can undermine respondents’ motivation during sorting, possibly reducing the number of well-considered responses as well as participation and completion rates.

Most of the selected studies (n = 41) ordered their quantitative scales from negative to positive. The exceptions are studies with absolute (Stoudmann et al., Citation2017) and positive-to-negative (Frate and Brannstrom, Citation2015; Kopytko and Pruneddu, Citation2018; Leite et al., Citation2019; Rijneveld and Marhaento, Citation2020; Schuman et al., Citation2018) scales [and a vertical variation of the latter (Jiren et al., Citation2020)]. Absolute scales are used to spare participants the discomfort of seemingly forced positive/negative choices while sorting; for example, participants need not feel disagreement with a negatively ranked statement, but merely a lower level of agreement (Watts & Stenner, Citation2012). Positive-to-negative scales could confuse participants from sociolinguistic contexts with right-to-left languages (e.g., Persian, Arabic, Hebrew, Urdu), where the direction of the scale can conflict with the respondents’ reading direction and hence their understanding (Bergen and Chan Lau, Citation2012).

The ranges of these scales, combined with the different Q-set sizes, resulted in a wide diversity of sorting grids in both size and shape. These can be categorized according to the number of statements and kurtosis (Table 2). Most of the selected studies used mesokurtic sorting grids (n = 26), consistent with the traditional shapes depicted in introductory texts on Q (Watts & Stenner, Citation2012); 10% (n = 5) and 16% (n = 8) employed the less common platykurtic (flat) and leptokurtic (steep) shapes, respectively.

Table 2. Characteristics of sorting grids with regard to their size and kurtosis.

According to (Watts & Stenner, Citation2012), choosing the right size and kurtosis of the sorting grid is key to making participants feel comfortable during the sorting process. Two complementary factors should guide this choice: the complexity or specialized nature of the topic and the participants’ related level of knowledge. Steeper grids allow for more neutral placements and fewer decisions, whereas flatter ones suit participants and/or topics that call for more fine-grained decisions. Most of the selected studies seemingly chose their sorting grids arbitrarily; only three justified their choices. Astari and Lovett (Citation2019) implemented platykurtic grids because of the respondents’ knowledgeability [consistent with (Watts & Stenner, Citation2012)]. (Carmenta et al., 2017) preferred a platykurtic shape (for one of its two grids) to enable subtle discrimination among many agreed statements. Nordhagen et al. (Citation2017) opted for a mesokurtic grid to reduce low-literacy cognitive barriers by allowing for more neutral positions. In addition, although Hamadou et al. (Citation2016) did not depict the sorting grid, the authors stated that they chose a simple one because of the respondents’ low educational level.
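Kurtosis can be attached to a concrete number for a given grid. The Python sketch below treats the column capacities as a frequency distribution over the sorting points and computes its moment-based kurtosis; the three 34-statement grids are hypothetical, and this is not necessarily the criterion behind Table 2. Because Q grids are bounded, even a conventional quasi-normal grid usually scores below the value of 3 of a normal distribution, so the figure is best used to compare candidate grids of the same width rather than as an absolute label.

```python
def grid_kurtosis(capacities: list[int]) -> float:
    """Moment-based kurtosis (m4 / m2**2) of a sorting grid.

    capacities: cards per column, from the most negative to the most
    positive sorting point; an odd number of columns is assumed.
    """
    if len(capacities) % 2 == 0:
        raise ValueError("this sketch assumes an odd number of columns")
    half = len(capacities) // 2
    points = range(-half, half + 1)
    n = sum(capacities)
    mean = sum(c * x for c, x in zip(capacities, points)) / n
    m2 = sum(c * (x - mean) ** 2 for c, x in zip(capacities, points)) / n
    m4 = sum(c * (x - mean) ** 4 for c, x in zip(capacities, points)) / n
    return m4 / m2**2


flat = [3, 4, 4, 4, 4, 4, 4, 4, 3]    # flatter, platykurtic-looking
medium = [2, 3, 4, 5, 6, 5, 4, 3, 2]  # classic quasi-normal
steep = [1, 2, 3, 4, 14, 4, 3, 2, 1]  # steeper, leptokurtic-looking

for name, grid in [("flat", flat), ("medium", medium), ("steep", steep)]:
    print(name, sum(grid), round(grid_kurtosis(grid), 2))
```

Under these assumptions the flat grid scores lowest and the steep grid highest, mirroring the platykurtic/leptokurtic distinction drawn above.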

3.4. Data collection

3.4.1. Location and materials

Q is typically a space-demanding technique that requires controlled environments and large, flat workspaces. Appropriate, robust, and durable materials can make a substantial difference during administration (Donner, Citation2001; Watts & Stenner, Citation2012). Only 20% of the studies (n = 10) reported where sorting took place. Most of these (Cammelli et al., Citation2019; Lairez et al., Citation2020; Nhem and Lee, Citation2020; Rodríguez-Piñeros and Mayett-Moreno, Citation2015; Truong et al., Citation2017; Truong et al., Citation2019; Zobeidi et al., Citation2016) mentioned respondents’ houses or farms, whereas others referred generically to each village or community (Schneider et al., Citation2015; Weldegiorgis and Ali, Citation2016), offices of stakeholders (Weldegiorgis and Ali, Citation2016; Zobeidi et al., Citation2016), and schools (Anderson and Jacobson, Citation2018). In RGS contexts, particularly in remote and scattered areas where gathering participants at specific locations is not feasible, ideal site conditions cannot easily be met and controlled. If the sorting location is the dweller’s main workplace, exposure to the elements (i.e., sun, wind, rain, and moisture) will impose further constraints on researchers (Cheema et al., Citation2018). The lack of proper furniture (e.g., large tables and chairs for participants) is another concern that must not be overlooked, as it can hamper participants’ engagement. Probably because such facilities were unavailable, sorting in some of the selected studies took place directly on the floor (Cammelli et al., Citation2019; Lairez et al., Citation2020).

Although 30 of the selected studies mentioned the materials used, most referred only to generic instruments such as ‘cards’ and ‘boards’. Others provided further specifications, such as paper (Huaranca et al., 2019; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Rodríguez-Piñeros and Mayett-Moreno, Citation2015; Sumberg et al., Citation2017; Yeboah et al., 2017), (thin) paper/cardboard (Barbosa et al., Citation2020; Cammelli et al., Citation2019; Lairez et al., Citation2020; Schneider et al., Citation2015), laminated cards and board (Jiren et al., Citation2020), magnetic cards and board (Schuman et al., Citation2018), and a combination of paper, pencil, and eraser (without cards or a sorting board) (Weldegiorgis and Ali, Citation2016). Three studies (Huaranca et al., 2019; Kopytko and Pruneddu, Citation2018; Rust, Citation2017) did not use such materials, at least in part, because they relied on online platforms. Plain paper and/or cardboard can be damaged if sorting is conducted outdoors in drizzle, and overly lightweight materials can be blown about by wind, adding to the respondent’s burden. Selecting adequate materials can help researchers cope with these unforeseen conditions; thus, such logistical issues should not be underestimated. Jiren et al. (Citation2020) and Schuman et al. (Citation2018) are good examples of managing materials so as to facilitate interaction between researchers and respondents. The former used laminated cards, which were waterproof and highly durable throughout the field journeys. Furthermore, it used a system of hook-and-loop fasteners, which made the setup windproof and allowed the board to be used vertically (coping with the lack of flat horizontal space). Finally, the board had a foldable, and thus more portable, design and was made of a (seemingly) waterproof material. The latter provided similar benefits through magnetic materials, although these could be more costly and scarce in certain (rural) settings.

3.4.2. Administration technique

Most of the selected studies were conducted face to face. Of these, 15 were conducted individually with each respondent (Anderson and Jacobson, Citation2018; Barbosa et al., Citation2020; Cammelli et al., Citation2019; Forouzani et al., 2013; Hu et al., Citation2018; Jiren et al., Citation2020; Nguyen et al., Citation2018; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Rijneveld and Marhaento, Citation2020; Rodríguez-Piñeros et al., Citation2018; Stoudmann et al., 2017; Truong et al., Citation2017; Zabala et al., Citation2017; Zobeidi et al., Citation2016), which is more time-consuming for research teams. For this reason, the studies of (Nguyen et al., Citation2018; Nordhagen et al., Citation2017) and (Carmenta et al., 2017), with 92, 137, and 219 effective respondents, respectively, stand out as impressive cases of collected sorts for this type of study. In contrast, the studies of (Mayett-Moreno et al., Citation2017; Pirard et al., Citation2016) and (Vargas et al., Citation2019) were conducted collectively during a 16-person community meeting, in rounds of three people sorting simultaneously, and in a 39-person deliberative workshop, respectively. Given that (Vargas et al., Citation2019) represents an exceptional setup for RGS contexts, it would have been interesting to understand how it was executed; unfortunately, the authors did not provide any details on the process or locations. In addition, it is worth recalling that whether Q is administered individually or collectively can influence the results (Buil et al., Citation2012). Since Q is intended to capture personal viewpoints, undesired group opinions, especially from dominant individuals and/or in collectivistic cultures, could sway some respondents’ own perspectives (Stone et al., Citation2017).

Online-administered Q is an acceptable alternative to its face-to-face version (Watts & Stenner, Citation2012). Some authors (Ormerod, Citation2017; Davis and Michelle, Citation2011; Westwood and Griffiths, Citation2010; Hermans et al., Citation2012; Raadgever et al., Citation2008) have successfully conducted online-administered Q sorts, although this remains a rare choice. Of the selected studies, only three (Huaranca et al., 2019; Kopytko and Pruneddu, Citation2018; Rust, Citation2017) were (partially) conducted using online tools, namely the Partnership Online Evaluation Tool with Q methodology (POETQ) (University of Birmingham, Citation2010; Jeffares and Dickinson, Citation2016), Qsortware (Pruneddu, Citation2011), and SurveyMonkey (SurveyMonkey, Citation2020); none of them took place in a low-income country. Online administration may at times be the only feasible technique, for instance, when addressing an international community (Bordt, Citation2018)Footnote5 or in exceptional yet plausible limited-access scenarios, such as the COVID-19 pandemic (Omary et al., Citation2020). In RGS settings, the online administration of Q is restricted by much more than the researcher’s willingness to use it. RGS dwellers worldwide face a serious lack of Internet access (Villapol et al., Citation2018), deeply limited access to equipment and electricity (Armey and Hosman, Citation2016), and (technological) illiteracy (Jere et al., Citation2013).

On the one hand, the (digital) gap between researchers and RGS populations demands building and/or reinforcing local (Q) research capacities. On the other hand, circumstances like those of the ongoing pandemic may force a sudden, unforeseen shift towards remote research (Omary et al., Citation2020), making that gap more acute and critical than ever. In such circumstances, the way forward should not be limited to relying on local networks (e.g., NGOs, cooperatives, village development centers, extension officers) to bridge the gap. The crisis must also foster the development of innovative, open-source tools that make Q more accessible and less limited, especially in light of increasing access to and use of mobile phones in the Global South (Loo and Ngan, Citation2012).

3.4.3. Assistance and facilitation

Q is an assistance-intensive technique; therefore, for RGS dwellers who may face additional cultural and literacy-related constraints (Roser and Ortiz-Ospina, Citation2018), appropriate facilitation is crucial. Most of the selected studies relied on pre-sort instructions (i.e., an explanation of the purpose and the whole process) (Anderson and Jacobson, Citation2018; Giannichi et al., Citation2018; Hamadou et al., Citation2016; Jiren et al., Citation2020; Moros et al., Citation2020; Nguyen et al., Citation2018; Nhem and Lee, Citation2020; Pirard et al., Citation2016; Schuman et al., Citation2018; Sumberg et al., Citation2017; Truong et al., Citation2017; Truong et al., Citation2019; Vela-Almeida et al., Citation2018; Weldegiorgis and Ali, Citation2016; Wijaya and Offermans, Citation2019; Yeboah et al., 2017), normally accompanied by step-by-step oral guidance (Alexander et al., Citation2018; Bumbudsanpharoke et al., Citation2009; Cammelli et al., Citation2019; Frate and Brannstrom, Citation2015; Jaung et al., Citation2016; Lairez et al., Citation2020; Moros et al., Citation2020; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Nordhagen et al., Citation2017; Sumberg et al., Citation2017; Tuokuu et al., Citation2019; Wijaya and Offermans, Citation2019; Yeboah et al., 2017; Zobeidi et al., Citation2016). Other complementary, more time-consuming activities were the research team reading (almost) every statement aloud (Cammelli et al., Citation2019; Carmenta et al., 2017; Lairez et al., Citation2020; Nordhagen et al., Citation2017; Wijaya and Offermans, Citation2019), mostly because of low literacy levels, and the iterative clarification of statements on demand (Alexander et al., Citation2018; Astari and Lovett, Citation2019; Lairez et al., Citation2020; Moros et al., Citation2020; Pirard et al., Citation2016; Sumberg et al., Citation2017; Wijaya and Offermans, Citation2019; Yeboah et al., 2017).
In-depth explanations and interactions may smooth the sorting process and reduce the risk of participants misunderstanding instructions and misinterpreting statements; however, they may also lengthen sorting times and increase interviewer bias, which can seriously affect respondents’ engagement and the validity of the findings. Moreover, the status of the researchers (e.g., origin, gender, age) may provoke unexpected behavior from participants; in these cases, proper selection, training, and supervision of (local) assistants is highly advisable (Buil et al., Citation2012).

When researchers are not (native) speakers of the P-set language(s), as was the case in 20% (n = 10) of the selected studies (Anderson and Jacobson, Citation2018; Brannstrom, Citation2011; Bumbudsanpharoke et al., Citation2009; Hilhorst et al., Citation2012; Hugé et al., Citation2016; Kopytko and Pruneddu, Citation2018; Lansing, Citation2013; Nguyen et al., Citation2018; Nordhagen et al., Citation2017; Rodriguez-Piñeros et al., Citation2012)Footnote6, they will likely rely on translators and interpreters. In this case, particular attention should be paid to biases beyond the mere accuracy of the terms in the statements. Interpreters and assistants must first thoroughly understand the dynamics of the methodology and the topic under investigation, so that they can explain them accurately to participants (Cheema et al., Citation2018). Similarly, they must take care not to influence respondents’ sorts with their own opinions while facilitating. This potential limitation again points to the need to build (Q) research capacities in the local contexts of the Global South. By intensively involving local scholars, universities, and institutes, these studies could be conducted by native speakers, which would also empower those who better understand the demands of their local realities.

Online-administered studies (Huaranca et al., 2019; Kopytko and Pruneddu, Citation2018; Rust, Citation2017) neither allow nor should require face-to-face facilitation. Their respective platforms let participants read the written instructions as many times as needed to understand the required dynamics. Their three main downsides are that they require participants to have access to the necessary equipment, demand a certain degree of (ICT) literacy, and rely entirely on each respondent’s interpretation of the statements.

Sorting a set of statements holistically on a (relatively) large grid can be a daunting and cumbersome process, especially if respondents are not highly knowledgeable about the topic under study. The so-called three-pile technique is a popular way among researchers to ease this burden (Watts & Stenner, Citation2012). It consists of a preliminary rough sort in which the participant distributes all statements into three piles: agree, neutral, and disagree. This rough sort is then refined by placing the statements on the sorting grid. Of the selected studies, 48% (n = 24) (Alexander et al., Citation2018; Astari and Lovett, Citation2019; Barbosa et al., Citation2020; Cammelli et al., Citation2019; Forouzani et al., 2013; Hamadou et al., Citation2016; Jaung et al., Citation2016; Jiren et al., Citation2020; Kopytko and Pruneddu, Citation2018; Lairez et al., Citation2020; Moros et al., Citation2020; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Nordhagen et al., Citation2017; Rodríguez-Piñeros et al., Citation2018; Stoudmann et al., 2017; Sumberg et al., Citation2017; Truong et al., Citation2017; Truong et al., Citation2019; Tuokuu et al., Citation2019; Wijaya and Offermans, Citation2019; Yeboah et al., 2017; Zabala et al., Citation2017; Zobeidi et al., Citation2016) resorted to this technique. Jaung et al. (Citation2016) implemented an interesting two-step modification in which participants sorted first into three piles and then into nine sub-piles (three per initial pile), thus enabling a smoother transition to the final grid distribution.
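The hand-over from the three piles to a forced grid can be sketched in Python. This is a hypothetical helper, not a procedure reported by the selected studies: it assumes the participant has ordered each pile from most to least agreeable, concatenates the piles (agree, then neutral, then disagree), and fills the grid from the most positive column downwards, so the piles need not line up exactly with the grid's positive, neutral, and negative zones. In practice, participants would still review and swap cards afterwards.

```python
def piles_to_grid(piles: dict[str, list[str]], capacities: list[int]) -> dict[int, list[str]]:
    """Place pre-sorted statements onto a forced grid.

    piles: statement ids under "agree", "neutral" and "disagree", each list
    ordered from most to least agreeable (an assumption of this sketch).
    capacities: cards per column, from the most negative to the most positive
    sorting point (odd number of columns assumed).
    """
    ranking = piles["agree"] + piles["neutral"] + piles["disagree"]
    if len(ranking) != sum(capacities):
        raise ValueError("number of statements does not match the grid")
    half = len(capacities) // 2
    grid: dict[int, list[str]] = {}
    start = 0
    # Walk from the most positive column (+half) to the most negative (-half).
    for point, capacity in zip(range(half, -half - 1, -1), reversed(capacities)):
        grid[point] = ranking[start:start + capacity]
        start += capacity
    return grid


piles = {
    "agree": ["S1", "S2", "S3"],
    "neutral": ["S4", "S5", "S6"],
    "disagree": ["S7", "S8", "S9"],
}
grid = piles_to_grid(piles, [1, 2, 3, 2, 1])  # five points, -2 to +2
print(grid)
# {2: ['S1'], 1: ['S2', 'S3'], 0: ['S4', 'S5', 'S6'], -1: ['S7', 'S8'], -2: ['S9']}
```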

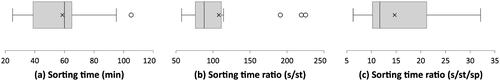

3.4.4. Sorting times

Required sorting times across the selected studies were reported to be as low as 25 min (Brannstrom, Citation2011), and as high as 1.5 h (Frate and Brannstrom, Citation2015; Pereira et al., Citation2016) and (up to) 3.0 h (Hu et al., Citation2018) (Figure 8a). Because these times are closely related to the Q-set size, we can define a sorting time ratio expressed in seconds (s) per statement (st). These ratios varied from approximately 58 s st⁻¹ (45) to 225 s st⁻¹ (Alexander et al., Citation2018), although most ratios were concentrated around 100 s st⁻¹ (Figure 8b). A third variable that influences the time required for sorting, and which is usually overlooked in Q studies, is the number of sorting points across the grid. Larger Q-sets distributed over a wider range of sorting choices naturally take respondents longer to sort. Accounting for this third variable, we define another ratio as the required time in seconds (s) per statement (st) per sorting point (sp). Most of the studies that reported sorting times had ratios of <10 s st⁻¹ sp⁻¹ (Anderson and Jacobson, Citation2018; Brannstrom, Citation2011; Nhem and Lee, Citation2019) or 10–20 s st⁻¹ sp⁻¹ (Giannichi et al., Citation2018; Moros et al., Citation2020; Pereira et al., Citation2016; Pirard et al., Citation2016; Schneider et al., Citation2015; Truong et al., Citation2017; Truong et al., Citation2019; Yeboah et al., 2017) (Figure 8c); others had higher ratios of >20 s st⁻¹ sp⁻¹ (Cammelli et al., Citation2019; Frate and Brannstrom, Citation2015; Hu et al., Citation2018; Zobeidi et al., Citation2016), including an exceptionally high ratio of 32 s st⁻¹ sp⁻¹ (Alexander et al., Citation2018).

Figure 8. Sorting times across the selected studies. (a) Absolute sorting time in minutes. (b) Sorting time ratio, expressed in seconds per statement. (c) Sorting time ratio, expressed in seconds per statement per sorting point.
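The two sorting time ratios defined above can be computed as in the following minimal sketch. It assumes that "sorting points" means the number of columns (distinct ranking values) in the grid, so that a grid from −4 to +4 has nine; the helper names and the example figures (50 min, 30 statements) are hypothetical and do not correspond to any reviewed study.

```python
# Hypothetical helpers (not taken from any of the reviewed studies) that
# compute the two sorting time ratios defined in the text.


def sorting_points(min_value: int, max_value: int) -> int:
    """Number of columns in a grid ranging from min_value to max_value, e.g. -4..+4 -> 9."""
    return max_value - min_value + 1


def sorting_time_ratios(minutes: float, n_statements: int, n_points: int) -> tuple[float, float]:
    """Return (seconds per statement, seconds per statement per sorting point)."""
    seconds = minutes * 60.0
    s_per_st = seconds / n_statements
    s_per_st_sp = s_per_st / n_points
    return s_per_st, s_per_st_sp


def ratio_band(s_per_st_sp: float) -> str:
    """Place a ratio in the bands used in the text (<10, 10-20, >20 s per st per sp)."""
    if s_per_st_sp < 10:
        return "<10"
    if s_per_st_sp <= 20:
        return "10-20"
    return ">20"


# Hypothetical example: 50 min, 30 statements, grid from -4 to +4.
points = sorting_points(-4, 4)  # 9 columns
per_st, per_st_sp = sorting_time_ratios(50, 30, points)
print(f"{per_st:.0f} s/st, {per_st_sp:.1f} s/st/sp, band {ratio_band(per_st_sp)}")
# 100 s/st, 11.1 s/st/sp, band 10-20
```

Dividing by the number of sorting points makes studies with narrow and wide grids easier to compare, although it implicitly treats sorting effort as growing linearly with grid width, which is itself an assumption.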

Only Truong et al. (Citation2017) elaborated on how (overly) long sorting times can hamper the Q process. The high ratios of Alexander et al. (Citation2018) and Cammelli et al. (Citation2019) can be traced to the reported illiteracy of their respective respondents. Some other salient, yet unexplained, time-related observations are worth noting. Although Alexander et al. (Citation2018) used the smallest Q-set, consisting of pictures instead of written statements, it counterintuitively yielded the highest sorting time ratios. Images may ease the sorting flow, but they may also become subjective instruments that demand longer interpretation and discussion. In contrast, although Nhem and Lee (Citation2019) used a large Q-set that additionally had to be sorted vertically (according to the strength of feeling for each statement within a given sorting point), it yielded barely 6 s st⁻¹ sp⁻¹, the lowest reported ratio.

Except for Yeboah et al. (2017), none of the studies with the largest Q-sets (>50 statements) (Astari and Lovett, Citation2019; Carmenta et al., 2017; Forouzani et al., 2013; Huaranca et al., 2019; Stoudmann et al., 2017) reported sorting times. Of these, two cases would have been particularly interesting to analyze: Huaranca et al. (2019), which presented 68 lengthy written statements, and Carmenta et al. (2017), which asked each respondent to sort two Q-sets of 30 and 40 statements in a single sitting.
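As a rough, hypothetical illustration of why these two cases matter, one can assume that the typical ratio of about 100 s st⁻¹ reported above also applied to them (an assumption, since neither study reported sorting times) and estimate the sorting time as the ratio multiplied by the number of statements:

```latex
\begin{aligned}
t_{\text{Huaranca}} &\approx 68 \times 100\ \text{s} = 6800\ \text{s} \approx 113\ \text{min},\\
t_{\text{Carmenta}} &\approx (30 + 40) \times 100\ \text{s} = 7000\ \text{s} \approx 117\ \text{min}.
\end{aligned}
```

Under this assumption, both cases approach 2 h of sorting per respondent.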

3.4.5. Complementary information

To provide Q studies with an accurate and holistic interpretation of viewpoints, authors normally collect qualitatively rich complementary information (e.g., sociodemographic data, reasoning on sorting, etc.) (Watts & Stenner, Citation2012; Zabala et al., Citation2018). The most commonly used technique is the post-sorting interview about the placement of the (most extreme) elements and related topics (Alexander et al., Citation2018; Anderson and Jacobson, Citation2018; Barbosa et al., Citation2020; Brannstrom, Citation2011; Bumbudsanpharoke et al., Citation2009; Cammelli et al., Citation2019; Carmenta et al., 2017; Forouzani et al., 2013; Frate and Brannstrom, Citation2015; Giannichi et al., Citation2018; Hamadou et al., Citation2016; Hilhorst et al., Citation2012; Huaranca et al., 2019; Hugé et al., Citation2016; Jaung et al., Citation2016; Jiren et al., Citation2020; Kopytko and Pruneddu, Citation2018; Lairez et al., Citation2020; Leite et al., Citation2019; Mayett-Moreno et al., Citation2017; Moros et al., Citation2020; Nguyen et al., Citation2018; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Nordhagen et al., Citation2017; Pereira et al., Citation2016; Pirard et al., Citation2016; Rijneveld and Marhaento, Citation2020; Rodriguez-Piñeros et al., Citation2012; Rodríguez-Piñeros et al., Citation2018; Rodríguez-Piñeros and Mayett-Moreno, Citation2015; Rust, Citation2017; Schneider et al., Citation2015; Stoudmann et al., 2017; Sumberg et al., Citation2017; Taheri et al., Citation2020; Truong et al., Citation2017; Truong et al., Citation2019; Tuokuu et al., Citation2019; Vargas et al., Citation2019; Vela-Almeida et al., Citation2018; Weldegiorgis and Ali, Citation2016; Wijaya and Offermans, Citation2019; Yeboah et al., 2017), as well as its collective variant in the form of focus group discussions (Weldegiorgis and Ali, Citation2016; Wijaya and Offermans, Citation2019). 
Other, less common techniques include in-sorting interviews (to clarify statements and the sorting process) (Astari and Lovett, Citation2019; Hu et al., Citation2018; Nguyen et al., Citation2018), pre-sorting interviews (Giannichi et al., Citation2018; Schneider et al., Citation2015), sociodemographic surveys (Sumberg et al., Citation2017; Zobeidi et al., Citation2016), and secondary information from prior interviews (Schuman et al., Citation2018). Notably, according to Truong et al. (Citation2017), overly long sorting times led participants to provide poor-quality complementary information during exit interviews.

3.4.6. Data recording

Q studies require adequate recording of both the sort itself and any other information that contributes to the interpretation (Watts & Stenner, Citation2012). Fewer than half of the selected studies (n = 19) provided relevant information. Most of these used some form of (audio) recording for interviews (Alexander et al., Citation2018; Anderson and Jacobson, Citation2018; Cammelli et al., Citation2019; Giannichi et al., Citation2018; Hu et al., Citation2018; Jiren et al., Citation2020; Pereira et al., Citation2016; Schuman et al., Citation2018; Truong et al., Citation2019; Vela-Almeida et al., Citation2018), answer sheets for recording the sorts (Bumbudsanpharoke et al., Citation2009; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Weldegiorgis and Ali, Citation2016; Zobeidi et al., Citation2016), and written notes (Cammelli et al., Citation2019; Jiren et al., Citation2020; Sumberg et al., Citation2017; Yeboah et al., 2017). Less frequently reported techniques include photographs of the sorts (Alexander et al., Citation2018), structured questionnaires (Nhem and Lee, Citation2020), and a unique approach of collecting notes written by the participants themselves (Rodríguez-Piñeros et al., Citation2018). Web-based Q studies (Huaranca et al., 2019; Kopytko and Pruneddu, Citation2018; Rust, Citation2017) relied on the data-recording methods built into their respective platforms. These studies could also be complemented by other online methods, such as email-based follow-up interviews (Kopytko and Pruneddu, Citation2018).

Recording means may be more restricted, in both quality and quantity, in RGS settings. Although none of the studies reported related limitations, it is sensible to choose methods with certain context-friendly properties: portable and lightweight, particularly for travel between remote areas with low accessibility; weather-resistant, so that rain, dust, heat, and humidity do not compromise recorded data; and capable of off-grid operation, through long-life batteries for electronic equipment and/or non-electronic media. In addition, relying on several complementary and redundant recording methods is a good strategy for reducing the risk of on-field data loss.

3.5. Analysis and results

As this Q research stage typically does not involve on-field methodological choices, the corresponding findings are provided in Appendix C of the Data availability statement.

3.6. Interpretation

Given the subjectivity that interpreting viewpoints entails, and the particularities of each selected study, the content of the interpretations themselves was not considered within the scope of this review. Nevertheless, some commonalities can be identified in the labelling and framing of the interpreted factors. Although labelling is not a mandatory step in Q, it is a common practice among Q methodologists. Labels are intended to convey, in a nutshell, what characterizes each viewpoint and distinguishes it from the others (Donner, Citation2001; Simons, Citation2013; Watts & Stenner, Citation2012). Because these labels depend mostly on the researchers’ creativity, the options for defining them are virtually endless; however, some approaches are recognizable. Some labels assign behavioral characteristics to respondents, whereas others describe a given situation or even briefly explain certain positions.

Most of the selected studies relied on labels for societal scenarios, either in their compact (n = 17) (Barbosa et al., Citation2020; Brannstrom, Citation2011; Huaranca et al., 2019; Jiren et al., Citation2020; Kopytko and Pruneddu, Citation2018; Leite et al., Citation2019; Mayett-Moreno et al., Citation2017; Moros et al., Citation2020; Nhem and Lee, Citation2020; Nhem and Lee, Citation2019; Rodriguez-Piñeros et al., Citation2012; Stoudmann et al., 2017; Truong et al., Citation2017; Truong et al., Citation2019; Tuokuu et al., Citation2019; Vargas et al., Citation2019; Vela-Almeida et al., Citation2018) or longer forms (n = 12) (Alexander et al., Citation2018; Astari and Lovett, Citation2019; Cammelli et al., Citation2019; Carmenta et al., 2017; Frate and Brannstrom, Citation2015; Hugé et al., Citation2016; Jaung et al., Citation2016; Rodríguez-Piñeros et al., Citation2018; Rodríguez-Piñeros and Mayett-Moreno, Citation2015; Sumberg et al., Citation2017; Weldegiorgis and Ali, Citation2016; Yeboah et al., 2017), behavioral adjectives (n = 11) (Bumbudsanpharoke et al., Citation2009; Giannichi et al., Citation2018; Hamadou et al., Citation2016; Hu et al., Citation2018; Lansing, Citation2013; Nordhagen et al., Citation2017; Pereira et al., Citation2016; Schneider et al., Citation2015; Taheri et al., Citation2020; Wijaya and Offermans, Citation2019; Zabala et al., Citation2017), or their combinations (n = 4) (Lairez et al., Citation2020; Pirard et al., Citation2016; Schuman et al., Citation2018; Zobeidi et al., Citation2016). Few authors resorted to longer, descriptive versions of behavioral adjectives (n = 1) (Forouzani et al., 2013) and explanatory labels (n = 1) (Anderson and Jacobson, Citation2018). Other studies (n = 4) (Hilhorst et al., Citation2012; Nguyen et al., Citation2018; Rijneveld and Marhaento, Citation2020; Rust, Citation2017) reported the use of generic nameless labels, distinguished by the use of numbers or letters.

Interpreted factors should ideally be validated through subsequent interaction with respondents. By iteratively reviewing draft interpretations, participants can offer further feedback that helps refine the narratives (Robbins and Krueger, Citation2000; Robbins, Citation2005). This step appears to have been largely overlooked (or underreported) in Q studies: only four of the selected studies (Brannstrom, Citation2011; Kopytko and Pruneddu, Citation2018; Lansing, Citation2013; Schuman et al., Citation2018) mentioned using it. In RGS settings, where even a single (sorting) contact with respondents may be hard to secure, validation becomes a less feasible option. Under such circumstances, an alternative could be to validate the narratives with at least the highest-loading respondents for each factor (Kopytko and Pruneddu, Citation2018; Lansing, Citation2013).
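Selecting the highest-loading respondents for such a reduced validation round can be sketched as follows. This is a minimal illustration under stated assumptions: the loadings are hypothetical, the function names are illustrative, and the significance cut-off uses the commonly applied Q criterion of 2.58 × (1/√N) for p < 0.01, where N is the number of statements. Only positive loadings are kept, since negative loadings describe the mirror image of a factor.

```python
import math

# Hypothetical sketch: pick, for each factor, the respondents whose loadings
# are highest and statistically significant, as candidates for validation.


def significance_threshold(n_statements: int, z: float = 2.58) -> float:
    """Loading needed for p < 0.01, using SE = 1 / sqrt(number of statements)."""
    return z / math.sqrt(n_statements)


def validation_candidates(
    loadings: dict[str, list[float]],
    n_statements: int,
    per_factor: int = 2,
) -> list[list[str]]:
    """Return up to `per_factor` highest-loading respondents for each factor.

    `loadings` maps each respondent to one (rotated) loading per factor.
    Only positive loadings above the threshold are kept; negative loadings
    describe the mirror image of a factor and are left out here.
    """
    threshold = significance_threshold(n_statements)
    n_factors = len(next(iter(loadings.values())))
    candidates = []
    for f in range(n_factors):
        eligible = [(r, row[f]) for r, row in loadings.items() if row[f] > threshold]
        eligible.sort(key=lambda item: item[1], reverse=True)
        candidates.append([r for r, _ in eligible[:per_factor]])
    return candidates


# Hypothetical two-factor solution for a 36-statement Q-set (threshold = 0.43).
loadings = {
    "R1": [0.72, 0.10],
    "R2": [0.55, 0.30],
    "R3": [0.20, 0.81],
    "R4": [0.40, 0.62],
    "R5": [-0.65, 0.05],
}
print(validation_candidates(loadings, n_statements=36))
# [['R1', 'R2'], ['R3', 'R4']]
```

In practice, researchers may also exclude respondents who load significantly on more than one factor, since they are less representative of any single viewpoint; this sketch does not apply that filter.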

3.7. Challenges and the way forward