ABSTRACT

Research skills are challenging to teach in a way that is meaningful to students and has ongoing impact in research practice. This paper investigates constructivist and experiential strategies for effective learning and deep understanding of postgraduate research skills and proposes a game-based learning (GBL) solution. A (non-digital) game called How to Fail Your Research Degree was designed and iteratively developed. Gameplay loop analysis identifies various learning and game mechanics and contextualises them in relation to GBL theory. Evaluation of gameplay (n = 127) demonstrates effective transmission of intended learning outcomes and positive game experience based on Keller’s Attention-Relevance-Confidence-Satisfaction (ARCS) model. Discussion proposes that the game has high cognitive authenticity, relies heavily on tutor facilitation, can create tension between knowledge and confidence, and is applicable to multiple domains and learning situations. GBL is proposed to be an original and effective approach to teaching high-level, functional learning outcomes such as academic research skills.

Introduction

Educational games (also known as ‘serious games’) are widely acknowledged as fruitful tools for learning and skills development across multiple domains, specifically educational enhancement (Bellotti, Kapralos, Lee, Moreno-Ger, & Berta, 2013). The literature on game-based learning (GBL) has moved on from questioning whether educational games can successfully enable learning and now concentrates on how learning occurs: the particular ways in which games and their associated teaching practices can be best exploited to meet learning outcomes (Hanghøj & Hautopp, 2016). The emergence of, and need for, empirical studies which examine different game mechanics and their effects within courses is noted by Aguilar, Holman, and Fishman (2015). Higher Education has lagged behind school-based implementations of GBL, due to the barriers to adoption particular to this context (Moylan, Burgess, Figley, & Bernstein, 2015; Whitton & Moseley, 2012) and the difficulties of evaluating high-level cognitive outcomes (Whitton, 2012), resulting in little research addressing high-level, functional learning outcomes and less still specific to a postgraduate context.

This paper analyses the development and evaluation of How to Fail Your Research Degree, an educational game for teaching postgraduate research skills. The design responded to both pedagogical and practical considerations (see Footnote 1), and gameplay loops have been analysed in detail to demonstrate the links between various game mechanics and their associated learning mechanisms. Literature on the evaluation of serious games informed the collection and analysis of quantitative and qualitative data from an extensive survey (n = 127) of the learning outcomes and experiential factors of playing the game. Results are then discussed in relation to GBL theory and this informs reflections on the game’s implementation.

Development, contextualisation, and evaluation sought to investigate two specific concepts within GBL in a postgraduate context. Firstly, whilst there is significant evidence that games can effectively communicate knowledge, how can high-level, functional learning outcomes (such as research skills) be effectively taught through a game-based intervention? This addresses the research gap in close, empirical studies on GBL interventions within postgraduate learning. Secondly, what are the most fruitful facets of student engagement with this intervention, and does it encourage students to embed these skills into their practice? This, alongside the interdisciplinary analysis of game and learning mechanics, offers insight into how GBL can be effectively deployed and its likely impacts.

Rationale for game-based learning of research skills

Equipping postgraduate students with research skills and critical aptitudes is widely acknowledged as being crucial for their future problem-solving and employment opportunities. Yet, alongside this need, the literature identifies a widespread lack of satisfaction and engagement in research skills courses. ‘[T]he common finding among scholars is that students find methods classes “dry” and “irrelevant”, leading them to not engage with the material as much as they would with a topic-based course.’ (Ryan, Saunders, Rainsford, & Thompson, 2013, p. 88). Studies identify both the difficulty of teaching research skills in a way that is meaningful to students, and also the need to relate skills to real-world problems in order to increase relevance and motivation to learn (Waite & Davis, 2006, p. 406; Ryan et al., 2013, p. 88; Hamnett & Korb, 2017, p. 449; Kirton, Campbell, & Hardwick, 2013). Furthermore, transitions from undergraduate to postgraduate study are recognised as posing particular challenges and, until recently, have presented a research gap (O’Donnell, Tobbell, Lawthom, & Zammit, 2009; Burgess, Smith, & Wood, 2013, p. 4; QAA, 2017). Graduates from diverse backgrounds need to improve their critical reading, thinking, and writing skills in the context of a taught master’s or research degree, often in a very different learning mode than the student has previously experienced (O’Donnell et al., 2009, pp. 35–37). This context requires both deep understanding and the active application of research skills, which is quite different to learning outcomes related to simply retaining knowledge.

Precisely because of these challenges, recent course redesign and interventions in research skills tuition have emphasised constructivism, with educators introducing much more active and experiential teaching and learning methods. Examples include scaffolded real-life academic (Hamnett & Korb, 2017) and extra-academic (Kirton et al., 2013) research activities; exposure to and critique of real-world examples (Ryan et al., 2013); mind maps (Kernan, Basch, & Cadorett, 2017); and metacognitive strategies such as active reflection (Saemah et al., 2014; Kirton et al., 2013) and peer assessment (Burgess et al., 2013). Positive results were reported for learning outcomes, engagement, and practical application of knowledge and skills. These results, specific to teaching research skills, reflect the wider literature on experiential and active learning strategies, and authors note the need for further innovation and a ‘culture shift’ within research skills course delivery (Ryan et al., 2013, p. 88).

An original intervention: game-based learning of research skills

The characteristics of learning and playing games are closely correlated: curiosity, persistence, risk-taking, reward, attention to detail, problem-solving, and interpretation (cf. Klopfer, Osterweil, & Salen, 2009). The research presented here sought to enhance a lecture-based research skills course and improve knowledge retention, deep understanding, and students’ research practice, reflecting previous moves towards active, constructivist approaches. There is no evidence of similar GBL strategies being used within postgraduate research skills teaching, making this approach a novel intervention. The overall goal was to enhance the comprehension and implementation of high-level, functional learning outcomes related to academic research, which is typically taught through lecture or seminar-based interactions. Pedagogy has for years acknowledged the tension between telling (e.g. via a lecture) and immersion in contexts of practice. Clearly, both are needed; however, ‘Educators tend to polarize the debate by stressing one thing (telling or immersion) over the other and not discussing effective ways to integrate the two.’ (Gee, 2014, p. 114). GBL is well established as one way in which to complement the ‘overt telling’ limitations of lectures and other instructional approaches (cf. Boydell, 1976, p. 32; Games & Squire, 2011; Kirkley, Duffy, Kirkley, & Kremer, 2011). Beard & Wilson firmly establish play as an experiential method – ‘play serves to rehearse and exercise skills in a safer environment’ (2002, p. 70) – and the vast majority of games are inherently active and constructivist. Research itself is also fundamentally active, experiential, and constructivist, and postgraduate assessments (e.g. dissertation or thesis) encourage learning activities which are highly goal-driven.

[W]hat is learned is goal-driven, and it is the learners’ goals and their ownership of those goals that shape the learning and problem solving process. […] This epistemological commitment of sense making forms the basis for our design and use of games for learning. (Kirkley et al., 2011, p. 375)

Goal-driven learning, encouraging active participation, and rehearsing a relevant problem were core to the development of this intervention: a non-digital educational game called How to Fail Your Research Degree.

Game design and gameplay loop analysis

Research into serious games shows that it is crucial to understand and integrate the serious game mechanics, which form the relationship between pedagogy and game design, i.e. how mechanisms for learning are mapped to pedagogic goals (Arnab et al., 2015). Arnab et al. reviewed the literature extensively to produce a descriptive and non-exhaustive model to improve mapping of learning mechanics (LMs) to game mechanics (GMs): the LM-GM model (Arnab et al., 2015; Lim et al., 2013). This model, when combined with gameplay loop analysis (Guardiola, 2016), allows insight into the contextualised, dynamic relationships between player actions, pedagogic goals, and GMs (see Footnote 2). The overall design parameters for How to Fail Your Research Degree have been previously published (Abbott, 2015) and full rules are available online (Abbott, 2017); therefore, this paper focusses on detailed mapping of LMs and GMs, incorporating in-game and out-game actions (Guardiola, 2016) in order to analyse purposeful learning within this context.

Overall design

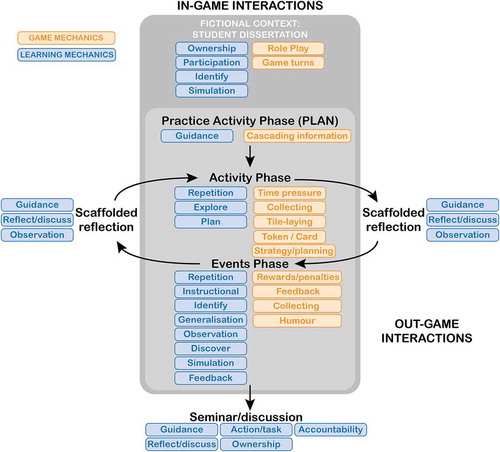

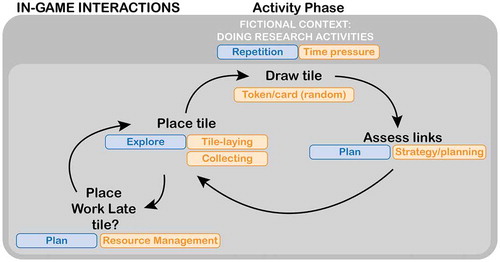

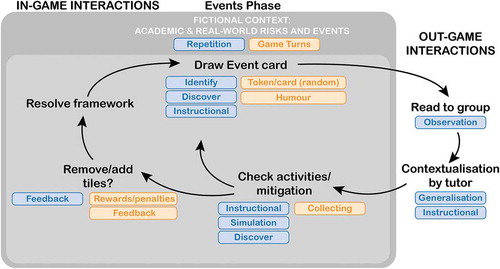

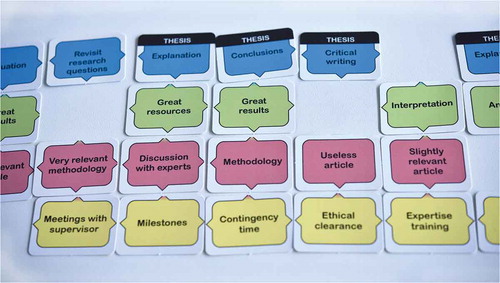

How to Fail Your Research Degree (henceforth How to Fail) is described as ‘undertaking a master’s degree at an unusually busy and calamitous stage of your life’ (Abbott, Citation2017). shows the high-level gameplay loop. Within the fictional context of a research dissertation, players draw and arrange tiles representing research activities (Activity Phase, see ) which are then affected by randomly drawn academic and real-life events (Events Phase, see ). There are four rounds, a ‘practice’ Activity round (Plan), then three subsequent rounds: Context, Implementation, and Write-Up. The purpose of the game is to connect as many Thesis tiles as possible in the Write-Up round, representing a well-written dissertation. Within and between each round, players are supported in active reflection to enhance learning. Gameplay is followed by a seminar/discussion in order to reinforce the lessons learned, propose future actions, and increase accountability.

Figure 2. Example of a completed framework made up of Activity tiles from the four sequential rounds (Plan, Context, Implementation, Write-Up). The relative usefulness of each activity can be inferred from the connections shown on the cards, as well as whether or not it contributes directly to a good dissertation.

The crucial role of reflection within GBL activities is well established: ‘[L]earners must have opportunities to analyse, reflect upon, and abstract what has been learned in the game’ (Kirkley et al., 2011, p. 389). This is supported by Beard and Wilson (2002, p. 17) and by Boydell, who notes that this deeper understanding is core to the process in which an experience becomes conceptual guidance for new experiences (1976, p. 17). Sandford & Williamson note that space for reflection is rarely included in games for learning (2005, p. 15), making its close integration into gameplay here significant.

The intention was to minimise learning about the game in order to maximise learning through the game (cf. Sandford & Williamson, Citation2005, p. 17), therefore rules are as simple as possible, whilst still supporting intended learning mechanisms. As shows, gameplay incorporates social and vicarious learning, out-game reflection, and repeating cycles of constructivist exploration, discovery, and instruction (discussed in more detail below). The overall gameplay loop takes place within an immediately recognisable and relevant fictional context (a simplified simulation of a student research project.) Creating a game environment which is fictional (and therefore safe) yet realistic provides students with a ‘whole-task context’ thought to be more easily mapped to real situations (Easterday, Aleven, Scheines, & Carver, Citation2011, p. 69) whilst also encouraging an element of risk-taking; required to produce original and innovative research (Fry, Ketteridge, & Marshall, Citation2003, p. 303). This approach is supported by the principle of constructive alignment between learning activities, assessments, and intended learning outcomes (ILOs). Biggs’ extensive work on constructive alignment emphasises that aligned course design encourages active construction of meaning and the ability to abstract and reflect on learning in order to apply it to future situations (Biggs & Tang, Citation2011). In the GBL context, enhancing cognitive objectives requires close integration of game learning activities into the curriculum (Tobias, Fletcher, Dai, & Wind, Citation2011, pp.177, 198) and, when aiming to embed critical capabilities into students’ ongoing practice, it is particularly important to align games (subject, gameplay, or both) with the core competency seeking to be taught, in other words reinstating play as a means to establish knowing (Games & Squire, Citation2011, p. 18). 
Therefore, the instructional goals of How to Fail are based directly on elements of the curriculum for the research skills course where the game was initially implemented, focussing on the successful management of a dissertation project. The game’s ILOs enable students to:

Understand the various risks affecting research and their impact on projects;

Recognise dependencies between tasks at different stages of research;

Understand the interrelations of different risks with the activities to negate or mitigate them;

Be aware of the time-critical nature of short research projects. (Abbott, Citation2015)

As discussed above, research skills courses have particular challenges in motivating students to learn and practice these skills. Beard and Wilson state that ‘for learning to occur and an opportunity for learning not to be rejected, there has to be an attitudinal disposition towards the event’ (2002, p. 119). Although it is common to encounter the (incorrect) assumption that all games are inherently motivating, simply because they are often fun, the need for connectivity in games which aim to increase motivation for learning is widely acknowledged. Keller states that:

To be effective, motivational tactics have to support instructional goals. Sometimes the motivational features can be fun or even entertaining, but unless they engage the learner in the instructional purpose and content, they will not promote learning. (2010, p. 25)

Keller also notes the negative effects of non-aligned game experience (p. 222) and states that motivation ‘includes all goal-directed behaviour’ (Keller, 2010, p. 4), noting its complexity as both affective and cognitive (p. 12), extrinsic and intrinsic (p. 18). A wider discussion of the motivation for learning is outside the scope of this paper; however, it is notable that games are specifically mentioned by Keller as a technique to improve motive stimulation (2010, pp. 130, 190), and he describes educational games as having elements of person-centred and interaction-centred motivational design models. Increasing motivation for a subject often seen by students as somewhat dry was a core design goal of How to Fail. Design was student-centric, focussing on interactions that are light-hearted, memorable, and highly relevant to the course and programme outcomes as well as wider research capabilities. The game falls into a category of motivational design defined by Keller as omnibus models which ‘have more pragmatic or pedagogical origins and incorporate both motivational design and instructional design strategies without distinguishing between the two’ (p. 27) and includes a constructivist approach designed ‘to help learners develop meaningful, contextualized bodies of knowledge’ (p. 34). Put more simply, How to Fail explicitly connects the pedagogic method to the goals of learners to influence their attitudes towards core course content based on goal success (or failure) (cf. Keller, 2010, p. 22).

Specific game mechanics for learning

Within the overall gameplay loop, the detailed mechanics were iteratively developed. One set of attributes define GBL as being at its most effective when it includes: active participation; immediate feedback; dynamic interaction; competition; novelty, and goal direction (Tobias et al., Citation2011, p. 177). Mechanics therefore encompass these attributes (with the arguable exception of competition) and emphasise the thoughtful nature of a research project, the player’s control and agency, and the pressures of time (Activity phase, ), alongside luck in encountering different risks and events and active reflection on how to avoid or ameliorate them (Events phase, ).

The Activity phase is largely preparatory: players collect different types of research activity, with elements of strategy and resource management within the metaphorical project. For example, during the Context round, a player draws a ‘Relevant Article’ tile which must be matched using the arrows to existing tiles played (). LMs during this stage are limited to recognising and building the required activities (Plan) and a constructivist, exploratory approach to the relationships between tiles (Explore), proposed to be ‘most appropriate for teaching generalised thinking and problem-solving skills’ (Lim et al., 2013, p. 181).

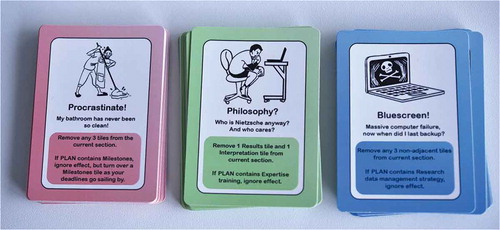

During the Events phase, on the other hand, GMs are simply drawing and following instructions on Event cards and instead the LMs are firmly foregrounded. The risk or event on each card is recognised or learned by the player (Identify/Discover, Instructional) and shared with the rest of the group by reading out the card (Observation). Tutor contextualisation aids direct or inductive learning, relevant to both the Event and the Activities collected by the player which may prevent the card penalty. Checking the Event card against existing Activities creates active learning of the conceptual connections (Discover, Simulation) and the penalties/rewards on the card provide instant Feedback about possible consequences. For example, a player draws a ‘Bluescreen’ event (), reads it out and checks her framework to see if she has collected a ‘Research Data Management Plan’ tile. The tutor defines ‘research data management’ and provides real-world examples of both the event and methods to avoid it happening. The team does not have a ‘Research Data Management Plan’ tile and must, therefore, choose three other tiles to remove, representing work that was lost when the computer crashed.
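As a purely illustrative sketch (not part of the published game or its rules), the Event-resolution step in the ‘Bluescreen’ example above could be expressed in Python as follows. The function name, the tile-selection callback, and every tile name other than ‘Relevant Article’ and ‘Research Data Management Plan’ are hypothetical, and the sketch assumes that removing tiles has no knock-on effect on the connections between the tiles that remain.

```python
def resolve_event(framework, mitigating_tile, penalty_count, choose_tiles):
    """Apply one Event card to a team's framework of Activity tiles.

    framework:       list of tile names the team has already played
    mitigating_tile: the Activity that prevents the card's penalty
    penalty_count:   how many tiles are lost if the penalty applies
    choose_tiles:    callback (hypothetical) through which the team picks
                     which tiles to give up
    Returns a new list; the input framework is left unchanged.
    """
    # The event is negated if the team planned for it in an earlier round.
    if mitigating_tile in framework:
        return list(framework)

    # Otherwise the team chooses which work is lost.
    remaining = list(framework)
    for tile in choose_tiles(framework, penalty_count):
        remaining.remove(tile)
    return remaining


# Example: a 'Bluescreen' event with no 'Research Data Management Plan' in play.
# Tile names other than 'Relevant Article' are invented for illustration.
team = ["Relevant Article", "Set Milestones", "Pilot Study", "Supervisor Meeting"]
after_crash = resolve_event(
    team,
    mitigating_tile="Research Data Management Plan",
    penalty_count=3,
    choose_tiles=lambda tiles, n: tiles[:n],  # simplest possible choice
)
```

In play, the choice of which tiles to remove is where the team’s reflection and discussion occur; the callback merely stands in for that decision.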

Penalties and points of failure within educational games increase the challenge, excitement, and pressure, all of which work to provide meaningful agency over gameplay (Easterday et al., 2011, p. 70). Most games present losing as something to be overcome by strategy or mastering gameplay (cf. Juul, 2013, p. 9), whereas the Penalty/Feedback interplay is central to How to Fail as it highlights academic risks in a meaningful yet safe environment and, combined with luck, moves the focus of the game to process, i.e. engagement with the activity itself rather than results. Crucially, learning opportunities occur during gameplay; it is therefore participation itself which is educational, not whether or not players ‘win’.

Habgood and Ainsworth (2011) discuss intrinsic integration, that is, an intrinsic link between GMs and learning content, and note that directing flow experiences (i.e. gameplay) towards educational goals could increase learning and motivation when core mechanics are intrinsically integrated with ILOs. They also state that learning in the intrinsic condition is more emotionally charged, which is thought to have benefits for long-term retrieval (p. 198). As can be seen from the LM-GM analysis above, gameplay is intrinsically integrated with LMs, especially in the Events phase, and reflects the game’s overall metaphor.

To complement the theoretical analysis above, empirical data on learning and gameplay experience will now be presented.

Evaluation

Serious games (SGs) evaluation literature demonstrates positive outcomes within formal education for knowledge acquisition and content understanding, knowledge retention, and in particular, motivation (Bellotti et al., 2013, p. 2; Tobias et al., 2011, pp. 160–161, 188) whilst simultaneously noting the shortage of rigorous studies and the need for robust frameworks for SG evaluation (Bellotti et al., 2013, p. 2) as well as the highly fragmented community in this discipline (Mayer et al., 2014, pp. 502–504). Recent research attempts to address the lack of universal evaluation and validation procedures for SGs and to bring together fragmented research on this topic (GaLA, 2014). Bellotti et al. state that ‘assessment of a serious game must consider both aspects of fun/enjoyment and educational impact’ (2013, p. 1). The notion of ‘fun’ is broadly accepted as an SG characteristic; however, research has questioned whether emotional engagement need necessarily be a positive experience and analysed the value of less pleasurable facets to the ‘entertainment’ aspects of SGs (cf. ‘pleasantly frustrating’ experiences (Gee, 2007, p. 36) and Beard & Wilson’s description of ‘painful learning’ and learning from mistakes (2002, pp. 22–26)). The balance between engagement and pedagogy is also acknowledged as being of critical importance to the success of any SG (Boughzala, Bououd, & Michel, 2013, p. 845; Bellotti et al., 2013, p. 3; Kirkley et al., 2011, p. 389).

Evaluation methods suitable for non-digital games include post-game questionnaires as well as teacher assessment based on observation during gameplay (Bellotti et al., 2013, p. 3; Moreno-Ger, Torrente, Serrano, Manero, & Fernández-Manjón, 2014, p. 10). The importance of triangulated methods is also specifically noted by Mayer et al. (2014, p. 509) and qualitative evaluation of serious games within Higher Education is identified as a research gap by Boughzala et al. (2013, p. 846).

Methodology

The evaluation methodology for How to Fail draws on the above research context and therefore focusses on testing two aspects: the effectiveness of the game in achieving its intended learning outcomes (ILOs), and the experience of gameplay itself. Dede proposes that a valuable assumption for a research agenda is to focus on individual learning rather than attempting to demonstrate generic effectiveness in a universal way (2011, pp. 236–237); therefore, the target group for evaluation was taught master’s and early-stage PhD students (the intended primary users) whilst also including a small number of final-year undergraduates and postgraduate tutors, where appropriate. After the study gained ethical clearance from the institutional Ethics Committee, 21 voluntary game sessions were run with over 130 players attending. The number of players per game varied from 2 to 28, although 8–12 was typical and 28 occurred in only one extreme case. Where participants exceeded four per game, players were grouped into teams and the facilitator encouraged equal participation by all team members. Game rules were briefly explained before gameplay and reinforced throughout. Gameplay was followed by a short reflection, distribution of the surveys, and then (where possible) a tutorial discussion focussing on reinforcing the learning outcomes. Participation in the survey was voluntary and anonymous and took place after gameplay and reflection, but before further discussion. In total, 127 surveys were returned.

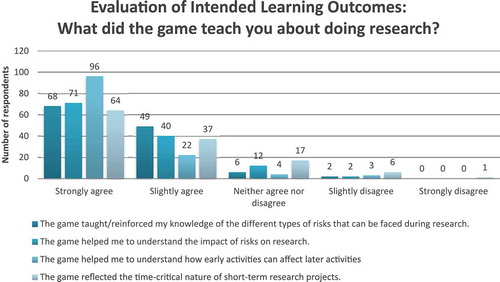

Quantitative data on the perception of cognitive outcomes were collected on a 5-point Likert scale measuring player agreement with four statements based on the game’s ILOs (see ). Qualitative data were sought via free-text response which appeared before the more guided quantitative questions to avoid biasing responses.

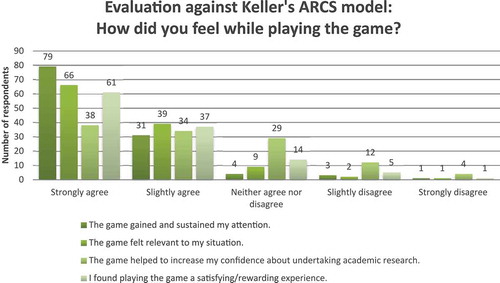

Evaluation of game experience used a widely applicable theoretical framework focussed on emotional engagement as the conduit to increased motivation: the (extensively validated) Attention Relevance Confidence Satisfaction (ARCS) model, which uses an extensive review of motivational literature to cluster motivational concepts into four categories, shown in the first three columns of (Keller & Suzuki, Citation2004; Keller, Citation2010, p. 44–45).

Table 1. ARCS Model (Keller, 2010, p. 45), final column added by author.

As noted in Roodt & Joubert, motivation in higher education is multi-faceted; therefore, the ARCS model is a robust method of evaluating whether the major motivational facets defined by Keller exist within an SG (2009, p. 337). This model encapsulates ‘fun’ within the Satisfaction and Attention facets, and also provides a nuanced framework through which to examine other motivation-related game experiences. As with the evaluation of ILOs, free-text responses were sought before data on attention, relevance, confidence, and satisfaction were collected using a 5-point Likert scale.

Personal data collection was solely a self-assessment of research experience. Respondents could also write additional free-text feedback.

Quantitative data were collated and analysed with nonparametric statistical methods appropriate to ordinal, not normally distributed, data. Outliers were retained to show full range of responses. Qualitative data were hand-coded to triangulate quantitative results and identify any other major themes emerging by identifying synonyms and grouping statements by intent for a macro-analysis. All results were interpreted with the informal knowledge gained by the researcher having participated in the out-game elements of the GBL intervention (see ), observing players’ experiences of gameplay and examples of deep learning in discussions inspired or contextualised by the game. The participation of the game designer as facilitator was invaluable in informing analysis and interpretation of the way the game plays out in different groups and the major lessons learned. However, it is acknowledged that action research of this kind is also a methodological limitation. Rules explanations, gameplay, and reflection were not delivered in exactly the same way as the game is designed to be a responsive teaching tool. Furthermore, the presence of the game designer as facilitator may have influenced survey results, despite best practice being followed to ensure anonymity and encourage honest responses.

Longitudinal study

To complement immediate outcomes, reflection on the second-order learning outcomes over time was facilitated via a longitudinal evaluation (n = 13) (cf. Mayer et al., 2014, p. 511). This sought to establish which lessons had been retained approximately 6 months after playing using free-text responses: what the player remembered learning, feeling, and other comments. The open-ended strategy avoids prompting the respondents’ memories, to gain the most accurate results.

Results and analysis

Intended learning outcomes

A large majority of players agreed with the statements testing each ILO, indicating high levels of success in the knowledge acquisition/reinforcement defined (see Figure 6). shows overall agreement (i.e. slightly agree plus strongly agree) with each ILO, all of which are over 80%. The game appears to be particularly successful at identifying risks and their impacts (94% and 89% agreement, respectively) and enabling the active discovery of interrelations and dependencies between early activities and activities and risks that come later, with 94% overall agreement (77% who agreed strongly).
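To make the ‘overall agreement’ measure concrete, the following minimal Python sketch shows one way the percentages could be derived from raw 5-point Likert responses. It is an illustration under stated assumptions rather than the analysis actually used in the study: the function name and the scale labels other than ‘slightly agree’ and ‘strongly agree’ are hypothetical, and the example counts are invented (they are not the survey data).

```python
from collections import Counter

# Responses counted as agreement: 'slightly agree' plus 'strongly agree'.
AGREE_LABELS = ("slightly agree", "strongly agree")


def agreement_summary(responses):
    """Return overall and strong agreement as whole-number percentages."""
    if not responses:
        raise ValueError("no responses to summarise")
    counts = Counter(responses)
    total = len(responses)
    overall = sum(counts[label] for label in AGREE_LABELS)
    return {
        "overall_pct": round(100 * overall / total),
        "strong_pct": round(100 * counts["strongly agree"] / total),
    }


# Invented example: 100 responses to a single evaluation statement.
example = (
    ["strongly agree"] * 77
    + ["slightly agree"] * 17
    + ["neither agree nor disagree"] * 6  # hypothetical midpoint label
)
summary = agreement_summary(example)
```

Reporting the strong-agreement share alongside the overall figure, as the text does, preserves some of the ordinal detail that a single combined percentage would hide.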

Table 2. Percentage of responses agreeing with each ILO evaluation statement.

Table 3. Percentage of responses agreeing with each motivation evaluation statement.

Specific lessons learned

A number of specific outcomes were identified by players in free-text responses; the most common are presented below, evidenced by selected quotes from players (see Footnote 3).

(1) Interconnectedness/dependencies in research activities. This outcome is directly aligned with the game’s ILOs and triangulates the results of the third evaluation statement in .

‘It demonstrated cause & effect issues in research and emphasised thinking ahead.’ (Participant ID (PID) 22)/‘The dependencies inherent in the structure of almost any research project e.g. if you haven’t set milestones at the planning stage, you are vulnerable to distractions later etc.’ (PID23)/‘Everything is connected.’ (PID123)

(2) The importance of planning. This lesson arises from many of the Event cards being directly negated or ameliorated by activities in the Plan phase (e.g. Contingency Time ameliorates the penalties of getting ill) and is aligned with the ILO of demonstrating how early activities can affect research later on.

‘Reinforced the importance of planning and strategy at the early stages of research.’ (PID14)/‘The need for careful planning.’ (PID24)/‘Remember to factor in contingency time – didn’t do this as a student and should recommend to others.’ (PID19)/‘The better I plan, the more likely I will succeed.’ (PID17)

(3) The impact of both academic and real-world events/risks.

‘Reinforced the idea that research is very much managed on the individual level and is therefore caught up in a person’s life and circumstances.’ (PID14)/‘the importance of support structures (supervisors, friends and family etc) and potential need for training.’ (PID23)/‘bad stuff happens and you have to make tough decisions about what has to go.’ (PID24)

(4) That the game would be most useful played early in the research process.

‘I wish we could have done this or had it required at the beginning of our proposals.’/‘I wish I could have considered these outside factors sooner.’ (PID17)/‘Great game, possibly something that can be introduced at an earlier stage of the final project to know how to deal with blocks or certain obstacles’ (PID125)/‘The game was enjoyable and definitely worth using during induction day/week of embarking on a research project.’ (PID95)

It is notable that the game designer’s plan for most fruitful integration into the research skills course was independently confirmed by players. Delaying direct instruction to allow students to first engage in problem-solving activities has been shown to promote learning and re-learning at university level (Westermann & Rummel, 2012), which supports this position. Several players also noted that the game was a good ice-breaker.

(5) Familiarisation with research terminology, particularly for novice researchers and those with English as an additional language.

‘It was useful in demonstrating & repeating the terminology of research’ (PID19)/‘This would be useful for students needing reassurance in the use of English language terminology.’ (PID22)

Other specific lessons included the importance of considering the ethical implications of research, changing the player’s approach to research data management, and the specifics of planning the project (e.g. setting milestones, getting training where needed, and arranging appropriate meetings with supervisors).

With reference back to the gameplay loop analyses above, it can be seen that, as expected, the bulk of the learning/understanding occurs in the Events phase () with a mixture of Instructional, Discovery, Simulation, and Feedback learning mechanics (evaluation statements 1–3 and specific lessons learned 1–4). However, the Explore, Plan, Collecting, and Time-pressure mechanics during the Activity phase () are also crucial to support evaluation statement 4 and specific lessons 4 and 5.

Analysis of experience of gameplay

Evaluation of gameplay experience used Keller’s framework to measure opinion on attention, relevance, confidence, and satisfaction (see and ).

Attention and engagement

92% agreed that the game gained and sustained their attention (67% strongly). This is the highest result from all four ARCS categories and indicates that the game is both novel and likely to be memorable. The framework suggests that strong success in this factor reflects high perceptual and inquiry arousal, which will stimulate a curiosity to learn and apply understanding (Keller, 2010). Clearly, these causal links were not measured as part of this study; however, the extremely high positive result in this category is notable. Qualitative responses specific to attention and engagement included:

‘It was neat to see a fast-forwarded process of how to write a thesis.’ (PID17)/‘The game taught various elements of research in a fun and interactive manner.’ (PID13)/‘Excited, nervous about the Event cards and engaged with the narration of the game’ (PID91)/‘it definitely sustained my attention and interest throughout … Thoroughly enjoyed playing.’ (PID21)/‘Lots of fun and very instructive!’ (PID106)

Relevance

90% agreed that the game felt relevant to their situation (56% strongly). This strong result in terms of goal-orientation and motive matching also applies to Keller’s third subcategory for relevance: familiarity (ibid.) (especially, as noted above, in terms of embedding appropriate research terminology). Situational relevance is also strongly reflected in the qualitative responses, with many students relating the game directly to their own research projects and processes:

‘I think this was very accurate to myself’/‘Some of the planning cards gave me more ideas for my research.’ (PID17)/‘Timed nature of rounds feels like master’s year.’ (PID24)/‘I also felt I could relate to my own research process throughout the game.’ (PID15)

Perhaps surprisingly, high levels of relevance also applied to more and less experienced players (e.g. supervisors and undergraduates) who were easily able to generalise the fictional context and relate it to their own situations. A number of participants suggested ways in which the game could be made specifically relevant to them by adapting the final round:

Really good tool to brainstorm process of research, could blue deck be altered for researchers i.e. research paper/exhibition be a goal not a thesis? (PID19)

Confidence

63% agreed that the game increased their confidence in undertaking academic research (33% strongly). Overall this category is not as emphatically positive as the other motivational concepts measured. Given the game’s focus on penalties as a memorable learning strategy, this is perhaps not surprising. Keller’s subcategories within Confidence focus on learning requirements, success opportunities, and personal control (2010) and whilst the first two are clear within the game, several players noted a frustration with the lack of personal control over cards drawn and luck-based elements of the game:

‘so happy I could test my understanding of research but slightly frustrated that luck played such a high impact on my performance.’ (PID20)/‘Might be interesting to plan first round (PLAN) by choice and not luck. Felt a bit harsh to be peer-reviewed on what was drawn from pack.’ (PID66)/‘Under pressure – not very happy. It is a great idea and very clever – just not sure about how it might be made more reassuring.’ (PID11)

Responses also revealed that some players felt less confident about research due to having gained an insight into the breadth and depth of procedures required of them.

‘I didn’t realise that ethical clearance was so important and could destroy the validity of your research.’ (PID17)/‘for those well-versed [research processes are] fine, for less experienced [they are] daunting to take into account.’ (PID19)/‘Added worry about how much there is to do.’ (PID36)

This result suggests that increased knowledge can actually come into direct opposition with student confidence about the research they will undertake. Therefore, whilst realising how much you do not yet know is undeniably a useful outcome, it may have a negative impact on motivation.

Satisfaction/reward

83% agreed that the game was satisfying or rewarding (52% strongly). This indicates not only a strong intrinsic reward within the game but also a link that was obvious to players between the game and their extrinsic goals (cf. Keller, 2010). Free-text responses emphasised this finding, identifying enjoyment of the experience (whilst acknowledging stress alongside fun as a useful emotional response) and desire to both use and share the game’s learning outcomes:

‘Slightly stressed! Enlightened.’ (PID18)/‘I found it fun and a bit playful’ (PID16)/‘Entertained and invested’ (PID122)/‘Relaxed at the start and a bit stressed by the time it came to write-up! Very positive experience as it is very well organised and didactic and presents a clear framework for approaching research.’ (PID14)/‘Excited and stressed. Great game, the events especially shows how one thing can mess it up. Would love to play again!’ (PID115)/‘Really excellent way into thinking through the mechanics of research!’ (PID21)/‘Good opportunity for discussion.’ (PID24)/‘I hope my classmates do this’ [author’s emphasis] (PID17)

Player-level analysis

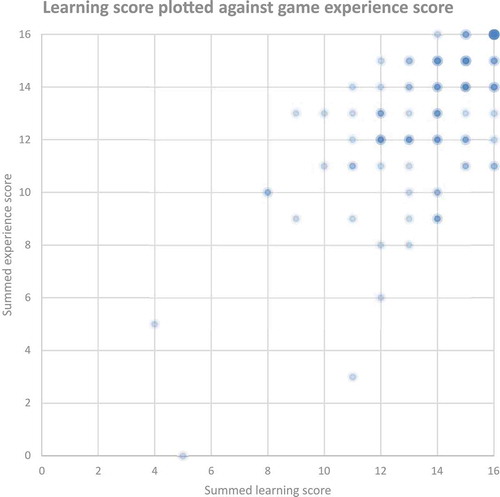

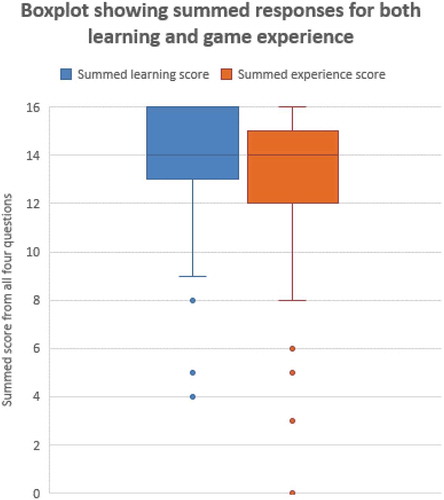

In order to gain further insight into individual player experiences, shows responses converted to numerical data (0 = Strongly Disagree, 4 = Strongly Agree) and summed to provide an overall Learning Score and Experience Score for each player where 16 represents strong agreement with every ILO or experience statement and 8 represents neutral overall (e.g. four Neither Agree Nor Disagree responses). Null value pairs were removed and transparency applied to show the frequency of responses. Acknowledging the limitations of converting Likert scales to numeric data (assuming linearity), it is notable that only outliers had Learning or Experience scores below 8 (Neutral) (see ) and, furthermore, that the maximum value (16, 16) is the most common response. There is a (statistically significant) strong positive correlation between Learning and Experience scores (rs(114) = 0.65, p = 0), suggesting that learning and ‘fun’ are well balanced.
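The scoring and correlation steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's analysis script: the player responses are invented, the function names (`total_score`, `average_ranks`, `spearman_rho`) are hypothetical, and the use of Spearman's rank correlation is inferred from the reported `rs` statistic.

```python
# Likert labels mapped to 0-4, following the conversion described in the text.
LIKERT = {
    "Strongly Disagree": 0,
    "Disagree": 1,
    "Neither Agree Nor Disagree": 2,
    "Agree": 3,
    "Strongly Agree": 4,
}


def total_score(responses):
    """Sum four Likert responses into a 0-16 score; None if any is missing."""
    if len(responses) != 4 or any(r not in LIKERT for r in responses):
        return None
    return sum(LIKERT[r] for r in responses)


def average_ranks(values):
    """Rank values from 1 upwards, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman's rho: Pearson correlation of the tie-averaged ranks.

    Assumes neither list is constant (otherwise the denominator is zero).
    """
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical responses: (four ILO statements, four experience statements).
SA, A, N, D = "Strongly Agree", "Agree", "Neither Agree Nor Disagree", "Disagree"
players = [
    ([SA, SA, SA, SA], [SA, SA, SA, SA]),
    ([SA, A, A, SA], [A, A, SA, A]),
    ([A, N, A, A], [N, A, N, A]),
    ([N, D, N, N], [D, N, N, D]),
    ([A, A, None, A], [A, A, A, A]),  # incomplete pair, removed below
]

pairs = [(total_score(learn), total_score(exp)) for learn, exp in players]
pairs = [(l, e) for l, e in pairs if l is not None and e is not None]

learning = [l for l, _ in pairs]
experience = [e for _, e in pairs]
print("pairs kept:", len(pairs))
print("Spearman rho:", spearman_rho(learning, experience))
```

Ranking before correlating means the result depends only on the ordering of scores, which softens (but does not remove) the linearity assumption involved in treating Likert responses as numbers.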

Three-quarters of players reported very positive learning outcomes and gameplay experience (i.e. averaged 12 or more) with a median value of 14 for both. Results showed no significant differences between players with differing research experience (see Note 4), indicating that the game remains useful at a number of different levels of research expertise. In terms of group size, there were no significant differences in reported learning between individual players, those playing in small groups, and those playing in large groups. However, the very large group (n = 28) demonstrated a statistically significant drop in enjoyment of approximately 2 points (p = 0.01539) compared with groups of up to 12 players, and three of the four outliers who did not enjoy the game were from this large group. It is speculated that this is due to the lack of personalised scaffolding and opportunities for engagement with such a large group. The facilitator’s informal reflections on this game also indicate that scalability is an issue, which is reflected in the literature on educational games (Baalsrud-Hauge et al., 2015). There were no significant differences in enjoyment scores between individual players and those playing in small teams; however, observation indicated that team play was more discursive, which is felt to be a benefit.

Longitudinal evaluation results

Longitudinal evaluation reinforces the results above, albeit with the limitation of a much smaller sample (n = 13). The most common lessons mentioned were the importance of planning (85%, n = 11), having learned about the research process in general (69%, n = 9), issues surrounding time management (e.g. setting milestones) (62%, n = 8), and being made aware of the number and diversity of risks (54%, n = 7).

‘It was a fun and engaging way to understand what the steps for a research project are.’ (Longitudinal PID7)/‘It made me more aware of everything that could potentially go wrong, so reinforced the fact you need to plan well’ (LPID2)/‘Great fun, and a good way of taking a step back and seeing what can go wrong.’ (LPID11)

Responses indicate that the major lessons learned from the game are retained in the medium term and have a role in contextualising research skills and approaches learned subsequent to playing the game. 77% (n = 10) of responses specifically mentioned the impact that the game (and the student’s subsequent reflections on it) had on planning research projects; in fact, 54% (n = 7) reported that they felt the value of having played the game increased over time.

‘I remember at the time the game made me think about how carefully I had planned my research and prompted me to re-think the timescales required for things like ethical clearance. It also encouraged me to rethink my approach to backing up my work. I think overall the game had a positive impact on my approach to planning my research project, as I took a lot of it into consideration when planning the next stages of my project.’ (LPID1)/‘when I had played this game in the beginning of my course, I wasn’t really understand it fully [sic] but now I can relate it to my research.’ (LPID4)/‘It was a good laugh at the time but now, more than half way through the dissertation project, I can appreciate the take home message from the game more.’ [author’s emphasis] (LPID2)

Furthermore, 31% (n = 4) expressed a desire to play the game again.

I think it would be a good idea to play the game again, now that the project proposal is approaching. It could be a good reminder of how we should plan our research. (LPID7)

Despite the small sample, the qualitative longitudinal evaluation appears to support the hypothesis that the game is both memorable and successful in embedding the knowledge acquired into research practice.

Discussion

Overall, How to Fail appears to overcome some of the challenges of teaching postgraduate research skills in a meaningful and memorable way. Players reported high achievement for all four ILOs and qualitative data demonstrates deep understanding of the issues explored, with players actively relating their game experience to their real-life challenges in undertaking postgraduate research. Furthermore, a large majority of players found the game enjoyable and rewarding. The most significant findings and limitations of this research will now be discussed in detail.

Integrating multiple interaction modes with educational content

How to Fail includes both positive and negative emotional states of engagement (humour and failure) and aims to encourage creativity, imagination, and problem-solving within a sufficiently structured and ‘safe’ playful environment (cf. Fry et al., 2003). It makes use of emotional ‘waves’ with periods of high-concentration, high-flow activities (Activity phase) followed by periods of calm which include guided reflection on each phase of the game and the overall result (cf. Beard & Wilson, 2002, pp. 124–130, 147–154). This is particularly important as Habgood and Ainsworth note the competing demands of ‘intrinsically integrating learning content within frantic action-based games’, noting that without the chance for reflection this mechanic could inhibit learning (2011, pp. 175–176). This combination of constructively aligned content and interaction behaviours shows How to Fail to be a content system which delivers understanding of a subject area. However, as a one-off tutorial intervention, it also functions as a trigger system which creates an experimental context for understanding a subject, built on by the tutor in subsequent discussion (Klopfer et al., 2009, p. 23).

Cognitive authenticity

As indicated by the high levels of reported relevance, above, How to Fail focusses on usable knowledge, rather than being designed as ‘a solution looking for a problem’ (Dede, 2011, p. 235) and conforms to the guidelines suggested by Kirkley et al. for problem-centred game design:

The problems or challenges provided via game play should reflect the types of real-world problems, situations, and scenarios faced by people in the field and also meet the curricular needs and requirements of the course or educational program. (2011, p. 388)

In this way, the game functions as a ‘metaphoric intervention’ (Beard & Wilson, 2002, pp. 158–160) within the course it was designed for. The aim in game content, form, and function, was to have cognitive authenticity to both the learning domain and the course content (Kirkley et al., 2011, pp. 376, 388) and to improve connectionism – i.e. cognitive reasoning rooted in specific areas of embodied experience (Gee, 2014, p. 8). It is widely acknowledged that assessment forms have a strong influence on the learning process and approach taken by students (cf. Norton, 2007, pp. 93–95; Ramsden, 2003, pp. 67–72). How to Fail mimics and exploits assessment forms, explicitly linking the research skills course with the student’s future dissertation requirements. The game functions as a ‘practice-run’ for an independent master’s project and bridges the gap between a simulated problem and a real-world problem, highly relevant to the student.

Tension between penalties and confidence

Whilst this game has an overall positive result for increasing confidence about research, this motivational factor was less successful than the others due to two characteristics: first, the lack of control over cards drawn and second, the emphasis on losing tiles as a penalty, which contributed to anxiety about success.

Since initial testing, game rules have been tweaked to increase player agency and optional rules also have an impact on personal control (cf. Abbott, 2017). These additions allow players to actively address research weaknesses, increase the overall chance of success, and also allow tutors to adapt the game to the predicted resilience of their students. The game is designed to also function as a workshop tool with the cards being used as triggers for group discussion (without gameplay). For example, allowing players to rebuild their projects using face-up tiles as part of the post-game tutorial could increase confidence whilst also reinforcing learning outcomes.

Although the majority of player reactions to the penalties were positive, 15% (n = 13/89) of free-text responses used synonyms for feeling nervous/anxious and 11% (n = 10/89) reported a feeling of stress/pressure. Responses also demonstrate a very high level of personal identification with, and commitment to, the fictional project (triangulated by the relevance results, above) which may contribute to negative emotions. However, the majority of these responses contextualise these feelings as positive or fruitful, as a realistic representation of the challenge of completing a dissertation, or a useful way to focus.

‘Generally enjoyed [the game]. I got a heart attack (almost) when I was picking the “fail” card.’ (PID77)/‘A bit unsure – a wee bit unprepared/overwhelmed – which seems totally appropriate!’ (PID9)/‘Stressed about how little time we have to complete the research project – 3 months and no plan!’ (PID36)/‘Excited but nervous’ (PID43)/‘Excited, nervous about the Event cards and engaged with the narration of the game.’ (PID91)

As mentioned above, game mechanics with negative outcomes can have very strong learning potential. Several players acknowledged that the points of loss were where lessons were learned most powerfully, some even expressing disappointment at their lack of penalties within the fantasy context:

Our experience provided us with a relatively pain-free route to the thesis – it was only seeing how other teams ran into difficulties that underlined risks. (PID81)

It is also notable that Event cards resulting in catastrophe caused typically high levels of hilarity across groups, with good-natured acceptance from the affected player(s) and schadenfreude from other teams. These emotional peaks were almost always positive and often the heightened affect led to unprompted reflection and analysis about why the Event had the effect it did. Similarly, where disasters were narrowly averted by the player(s) having previously played Activity tiles that negated an Event, there was usually a sense of delighted relief from the team in question.

Therefore, it is felt that the significant advantages of the emphasis on penalties far outweigh the tiny minority of cases where the penalty was not seen to be productive. It is also hypothesised that the increased player agency created by the rules amendment above will further increase player confidence in research skills and, by implication, motivation for research.

Reliance on the tutor-facilitator

One unexpected result is the extent to which gameplay itself relies on the performance of the tutor/facilitator. Tutor participation is inherent to the game’s design (namely their role in interpreting and contextualising ILOs during the reflective phases of the game); however, the tutor’s role in clearly explaining the rules to allow players to quickly start the learning activity is also critical. The tutor also levels the playing field for players who take longer to grasp the rules, actively helping those teams who are obviously lagging behind in placing their Activity tiles, with verbal reminders of rules and strategy guidance. Furthermore, the tone of the game being actively performed to players by the facilitator helps to maintain a humorous atmosphere. Support structures in productive failure scenarios are important to reduce the risk of unproductive experiences whilst still allowing students to participate in unstructured generation and invention activities (Kapur & Rummel, 2012). In addition to supporting students’ own reflection, techniques used to guide the emotional response of the players included exaggerated dismay at penalties, exaggerated relief at narrow escapes, or ‘playing the villain’ by reversing these reactions to increase humour. This performative role was not anticipated whilst designing the game but developed instinctively during early playtesting. Whilst it appears to increase engagement and emotional peaks during gameplay – a definite advantage – it also requires high familiarity and commitment from the tutor-facilitator, which could impede wider take-up of the game. This reliance has been ameliorated by production of a tutor guide with notes on how to run the game successfully and a short, funny, introductory video (Abbott, 2017).

Wider applicability

The game’s educational context and design principles focus on skill improvement rather than establishing a baseline of knowledge, perhaps explaining why the game appears to be equally successful for players with more or less experience of research (within the evaluation inclusion criteria). Essentially, gameplay appears to function independently of the research expertise of the player, enabling useful self-reflection even in highly experienced researchers, both in terms of their own research and when considering how to best support students.

‘I thought I might feel distant or unreal. But v quickly I applied my own experience as a research student. I was engaged and actively thinking about each task.’ (PID83)/‘Fun but thoughtful! Reinforced good practices I have so felt good. […] I imagine it would be very helpful for new researchers.’ (PID68)/‘I think it would work well with our students.’ (PID110)

The game appears to have wider applicability beyond taught Master’s programmes and first-year doctoral training. Players indicated a strong appetite for use of the game (or a trivially adapted version) within other areas of Higher Education, in particular for early career researchers, students and staff with English as an additional language, and equality and diversity training. Several tutors also expressed a desire to create a version for undergraduate and/or Further Education contexts.

In order to enable wide access and adaptation, How to Fail has been released under a Creative Commons BY-NC-SA licence. It is suitable for adaptation to any fictional context which has a broadly linear activity model with a final outcome measurable in terms of quality (examples suggested so far include arranging a fashion show, managing a group project, or creating an exhibition). A fruitful further study would be to consider further developments in terms of the five-dimensional framework of scalability (Dede, 2011, p. 239) in order to maximise the benefit from the game.

Conclusion

How to Fail Your Research Degree uses a pedagogically robust framework to align game mechanics with learning mechanics and is perceived by players as strongly educational with strongly positive gameplay experience in three of Keller’s four motivational categories (attention, relevance, and satisfaction); fairly positive for confidence. Qualitative and quantitative evaluation results imply an increased motivation for learning and embedding research skills. Learning outcomes appear to be retained over the medium term, although this finding was based on a small sample. The game also demonstrates the potential for adaptation to different learning contexts and wide dissemination. Limitations include scalability, learning mechanisms being relatively minimal in the Activity phase, and the action research methodology of this study which may have skewed results. Despite this, the game has clear potential for benefit to students via successful integration with teaching and learning activities in a number of contexts and provides a useful case study for the serious games research community in terms of integrating learning and game mechanisms.

This research focusses on game-based learning as a complement to postgraduate research skills courses. A fruitful further study would be to perform a comparative analysis between game-based learning and other existing methods for teaching research skills, taking into account prior knowledge and testing short- and long-term skills improvement for a range of learning situations.

In conclusion, How to Fail Your Research Degree has been shown to be an effective step towards an innovative way to teach, embed, and help retain knowledge and skills for undertaking academic research.

Acknowledgments

Thanks are due to everyone who provided feedback on the game and to The Glasgow School of Art for providing the investment needed to release the finished game.

Disclosure statement

No potential conflict of interest was reported by the author.

Notes

1. High-level design goals and practical considerations have been previously discussed in Abbott (2015).

2. Although much of the literature analysing serious games and their mechanics is focussed on digital games, these concepts are equally applicable to tabletop games as interactions are defined as between the player and the game, not as human-computer interactions.

3. Themes were widely represented in responses; however, for brevity, only a few illustrative examples for each are included here.

4. Acknowledging the small sample size for less and more experienced researchers (n = 9 and 12, respectively).

References

- Abbott, D. (2015). “How to fail your research degree”: A serious game for research students in higher education. In S. Göbel, M. Ma, J. Baalsrud-Hauge, M.F. Oliveira, J. Wiemeyer, & V. Wendel (Eds.), Serious games. Lecture notes in computer science (Vol. 9090, pp. 179–185). Switzerland: Springer International Publishing. doi:10.1007/978-3-319-19126-3

- Abbott, D. (2017). How to fail your research degree. Retrieved from http://howtofailyourresearchdegree.com

- Aguilar, S., Holman, C., & Fishman, B. (2015). Game-inspired design: Empirical evidence in support of gameful learning environments. Games and Culture, 13(1), 44–70. doi:10.1177/1555412015600305

- Arnab, S., Lim, T., Carvalho, M.B., Bellotti, F., de Freitas, S., Louchart, S., … De Gloria, A. (2015). Mapping learning and game mechanics for serious games analysis. British Journal of Educational Technology, 46, 391–411. doi:10.1111/bjet.12113

- Baalsrud-Hauge, J.M., Stanescu, I.A., Arnab, S., Ger, P.M., Lim, T., Serrano-Laguna, A., … Degano, C. (2015). Learning through analytics architecture to scaffold learning experience through technology-based methods. International Journal of Serious Games, 2(1), 29–44. doi:10.17083/ijsg.v2i1.38

- Beard, C., & Wilson, J.P. (2002). The power of experiential learning: A handbook for trainers and educators. London: Kogan Page Ltd.

- Bellotti, F., Kapralos, B., Lee, K., Moreno-Ger, P., & Berta, R. (2013). Assessment in and of serious games: An overview. Advances in Human-Computer Interaction, (1), 1–11. doi:10.1155/2013/136864

- Biggs, J., & Tang, C. (2011). Teaching for quality learning at university (4th ed.). Milton Keynes: Open University Press.

- Boughzala, I., Bououd, I., & Michel, H. (2013). Characterization and evaluation of serious games: A perspective of their use in higher education. Proceedings of 46th Hawaii International Conference on System Sciences (HICSS), Wailea, Maui, HI USA. IEEE, 844–852 doi:10.1177/1753193412469127

- Boydell, T. (1976). Experiential learning (p. 5). Manchester: Manchester Monographs.

- Burgess, H., Smith, J., & Wood, P. (2013). Developing peer assessment in postgraduate research methods training. Higher Education Academy: Teaching Research Methods in the Social Sciences. Retrieved from https://www.heacademy.ac.uk/system/files/resources/leicester.pdf

- Dede, C. (2011). Developing a research agenda for educational games and simulations. In S. Tobias & J.D. Fletcher (Eds.), Computer games and instruction (pp. 233–250). Charlotte NC: Information Age Publishing.

- Easterday, M.W., Aleven, V., Scheines, R., & Carver, S.M. (2011). Using tutors to improve educational games. In G. Biswas, S. Bull, J. Kay, & A. Mitrovic (Eds.), Artificial intelligence in education. Lecture notes in computer science (Vol. 6738, pp. 63–71). Berlin, Heidelberg: Springer.

- Fry, H., Ketteridge, S., & Marshall, S. (2003). A handbook for teaching and learning in higher education: Enhancing academic practice (3rd ed.). Abingdon: Routledge.

- Games, A., & Squire, K.D. (2011). Searching for the fun in learning: A historical perspective on the evolution of educational video games. In S. Tobias & J.D. Fletcher (Eds.), Computer Games and Instruction (pp. 7–46). Charlotte, NC: Information Age Publishing.

- Games and Learning Alliance (GaLA). (2014). Network of excellence for serious games. Retrieved from http://cordis.europa.eu/project/rcn/96789_en.html

- Gee, J.P. (2007). Good video games and good learning: Collected essays on video games, learning and literacy. New York: Peter Lang. doi:10.3726/978-1-4539-1162-4

- Gee, J.P. (2014). What video games have to teach us about learning and literacy. New York: Palgrave Macmillan.

- Guardiola, E. (2016). The gameplay loop: A player activity model for game design and analysis. Proceedings of the 13th International Conference on Advances in Computer Entertainment Technology, Osaka, Japan. doi:10.1145/3001773.3001791

- Habgood, M.J., & Ainsworth, S.E. (2011). Motivating children to learn effectively: Exploring the value of intrinsic integration in educational games. The Journal of the Learning Sciences, 20(2), 169–206. doi:10.1080/10508406.2010.508029

- Hamnett, H., & Korb, A. (2017). The coffee project revisited: Teaching research skills to Forensic chemists. Journal of Chemical Education, 94(4), 445–450. doi:10.1021/acs.jchemed.6b00600

- Hanghøj, T., & Hautopp, H. (2016). Teachers’ pedagogical approaches to teaching with Minecraft. Proceedings of the 10th European Conference on Games Based Learning, Paisley, Scotland, UK (pp. 265–272).

- Juul, J. (2013). The art of failure: An essay on the pain of playing video games. London: MIT Press.

- Kapur, M., & Rummel, N. (2012). Productive failure in learning from generation and invention activities. Instructional Science, 40(4), 645–650. doi:10.1007/s11251-012-9235-4

- Keller, J.M. (2010). Motivational design for learning and performance: The ARCS model approach. London: Springer. doi:10.1007/978-1-4419-1250-3

- Keller, J.M., & Suzuki, K. (2004). Learner motivation and E-learning design: A multi-nationally validated process. Journal of Educational Media, 29(3), 229–239. doi:10.1080/1358165042000283084

- Kernan, W., Basch, C., & Cadorett, V. (2017). Using mind mapping to identify research topics: A lesson for teaching research methods. Pedagogy in Health Promotion. doi:10.1177/2373379917719729

- Kirkley, J.R., Duffy, T.M., Kirkley, S.E., & Kremer, D.L.H. (2011). Implications of constructivism for the design and use of serious games. In S. Tobias & J.D. Fletcher (Eds.), Computer games and instruction (pp. 371–394). Charlotte NC: Information Age Publishing.

- Kirton, A., Campbell, P., & Hardwick, L. (2013). Developing applied research skills through collaboration in extra-academic contexts. Higher Education Academy: Teaching Research Methods in the Social Sciences. Retrieved from https://www.heacademy.ac.uk/system/files/resources/liverpool.pdf

- Klopfer, E., Osterweil, S., & Salen, K. (2009). Moving learning games forward. Cambridge, MA: The Education Arcade.

- Lim, T., Louchart, S., Suttie, N., Ritchie, J., Stanescu, I., Roceanu, I., … Moreno-Ger, P. (2013). Strategies for effective digital games development and implementation. In Y. Baek & N. Whitton (Eds.), Cases on digital game-based learning: Methods, models, and strategies (pp. 169–198). Hershey, PA: IGI Global.

- Mayer, I., Bekebrede, G., Harteveld, C., Warmelink, H., Zhou, Q., van Ruijven, T., … Wenzler, I. (2014). The research and evaluation of serious games: Toward a comprehensive methodology. British Journal of Educational Technology, 45, 502–527. doi:10.1111/bjet.12067

- Moreno-Ger, P., Torrente, J., Serrano, A., Manero, B., & Fernández-Manjón, B. (2014). Learning analytics for SGs. Game and Learning Alliance (GALa) The European Network of Excellence on Serious Games. Retrieved from http://www.galanoe.eu/repository/Deliverables/Year%204/DEL_WP2%20+%20TC%20Reports/GALA_DEL2.4_WP2_Report%201%20LearningAnalytics%20final.pdf

- Moylan, G., Burgess, A.W., Figley, C., & Bernstein, M. (2015). Motivating game-based learning efforts in higher education. International Journal of Distance Education Technologies, 13(2), 54–72. doi:10.4018/IJDET.2015040104

- Norton, L. (2007). Using assessment to promote quality learning in higher education. In A. Campbell & L. Norton (Eds.), Learning, teaching and assessing in higher education: Developing reflective practice (pp. 92–101). Exeter: Learning Matters.

- O’Donnell, V., Tobbell, J., Lawthom, R., & Zammit, M. (2009). Transition to postgraduate study. Active Learning in Higher Education, 10(1), 26–40. doi:10.1177/1469787408100193

- Quality Assurance Agency for Higher Education (QAA Scotland). (2017). Enhancement theme: Student transitions. Retrieved from http://enhancementthemes.ac.uk/enhancement-themes/current-enhancement-theme

- Rahman, S., Yasin, R.M., Salamuddin, N., & Surat, S. (2014). The use of metacognitive strategies to develop research skills among postgraduate students. Asian Social Science, 10(19), 271–275. doi:10.5539/ass.v10n19p271

- Ramsden, P. (2003). Learning to teach in higher education. London: Routledge.

- Roodt, S., & Joubert, P. (2009). Evaluating serious games in higher education: A theory-based evaluation of IBMs Innov8. Proceedings of the 3rd European Conference on Games-based Learning, Graz, Austria (pp. 332–338).

- Ryan, M., Saunders, C., Rainsford, E., & Thompson, E. (2013). Improving research methods teaching and learning in politics and international relations: A ‘Reality Show’ approach. Politics, 34(1), 85–97. doi:10.1111/1467-9256.12020

- Sandford, R., & Williamson, B. (2005). Games and learning: A handbook from NESTA Futurelab. Bristol: Futurelab.

- Tobias, S., Fletcher, J.D., Dai, D.Y., & Wind, A.P. (2011). Review of research on computer games. In S. Tobias & J.D. Fletcher (Eds.), Computer games and instruction (pp. 127–221). Charlotte, NC: Information Age Publishing.

- Waite, S., & Davis, B. (2006). Developing undergraduate research skills in a faculty of education: Motivation through collaboration. Higher Education Research & Development, 25(4), 403–419. doi:10.1080/07294360600947426

- Westermann, K., & Rummel, N. (2012). Delaying instruction: Evidence from a study in a university relearning setting. Instructional Science, 40(4), 673–689. doi:10.1007/s11251-012-9207-8

- Whitton, N. (2012). Games-Based learning. In N.M. Seel (Ed.), Encyclopedia of the sciences of learning. Boston, MA: Springer. doi:10.1007/978-1-4419-1428-6_437

- Whitton, N., & Moseley, A. (Eds.). (2012). Using games to enhance learning and teaching: A beginner’s guide. London: Routledge, Taylor & Francis Group.