ABSTRACT

Technical writing skills are vital to professional engineers, but many engineering students find them difficult to master. This paper presents a case study carried out among approximately 300 first- and second-year engineering students who had little previous experience in technical writing. The aim was to help them write better technical reports. Students were asked to write an 800-word report following an experimental laboratory and to include a written reflection on their work. This reflection improved writing skills (as measured by the marks awarded and by questionnaires completed by students before and after the activity) by encouraging self-regulation, and had the additional benefit that students were more satisfied and engaged with the feedback they received on their work.

Introduction

Professional engineers are required to communicate effectively, including in written formats such as technical reports (Engineering Council, 2014). Universities aim to prepare students for the professional environment; however, many new engineering graduates still lack the level of writing skill expected by their employers (Moore & Morton, 2017), and 71% of surveyed engineering employers report having faced problems with candidates who have good technical knowledge but lack workplace skills (Institution of Engineering and Technology, 2017).

Writing is also recognised as an important method of learning, as it forces students to synthesise information; hence, poor writing skills may reduce a student’s ability to learn effectively (Emig, 1977). Poor writing skills are not confined to engineering students; some reports suggest that writing skills are declining among students in all subject areas (Carter & Harper, 2013).

Beer and McMurrey (2009) suggest that the problem of poor writing skills arises in part because students who choose engineering wish to focus on mathematical and practical work rather than on written work. This preference contributes to a lack of interest in developing writing skills. Students may also underrate the importance of writing skills (Lievens, 2012; Nguyen, 1998), which could further reduce their motivation to learn them (Ramsden & Entwistle, 1981). However, other studies have shown that undergraduates do recognise the importance of communication skills in their future careers, although to a lesser extent than that of technical skills (Direito, Pereira, & Duarte, 2012; Itani & Srour, 2016).

Direito et al. (2012) also found that undergraduates report a ‘skill gap’ for many soft skills, including written communication, whereby they rate a skill as important but do not believe they are proficient in it. This lack of confidence can lead students to avoid the task and so miss the chance to practise and improve (Bandura, 1982).

Another contribution to the problem of poor writing skills is that students do not have a clear idea of what ‘good’ writing looks like. Levels of self-regulation determine how well a person can plan, monitor and self-evaluate progress towards a goal, and higher levels result in more motivated, better-performing students (Bandura, 1991; Pintrich & de Groot, 1990; Zimmerman, 1986). If students cannot visualise the goal, they cannot make positive progress towards it, so a student who is unable to visualise ‘good’ writing will not be able to produce it. Good feedback can be a useful tool for developing self-regulation, by helping students to see how their performance compares with ‘good’ performance (Nicol & Macfarlane-Dick, 2006).

Novices often struggle to self-regulate because they do not have a clear idea of what ‘good’ performance looks like in an unfamiliar area, leading to low motivation and loss of interest in the task (McPherson & Zimmerman, 2011). However, research has shown that by placing emphasis on strategies rather than outcomes, students can be taught to be more self-regulated (Boekaerts, 1997; Zimmerman, 2002). Students feel more satisfied and motivated when they master the strategies, even when the resulting improvements in outcomes are small and slow progress in a new subject might otherwise have caused disillusionment.

We attempted to encourage self-regulation in approximately 300 first- and second-year engineering students by prompting them to reflect on their written work before submission. We hypothesised that this reflection would encourage students to compare their work with the guidelines provided and to self-assess their performance – key strategies used by self-regulated individuals. We also hypothesised that using the reflective comments as the starting point for a feedback dialogue would make students more engaged with their feedback and help them to identify ways to improve their performance. This study focuses on a technical report written following a practical laboratory session. Whether a single definition of ‘technical writing’ can be agreed for any given subject area has been much debated in the literature (e.g. Grossberg, 1978; Allen, 1990). For clarity, we expect the technical report produced by students in this study to be a well-structured, objective report that concisely presents and evaluates the aims, methods, and results of the practical laboratory. These expectations are consistent with those placed on students when writing reports in later years of study and in their professional careers. For this study, the report should be written to be understood by an audience of the students’ peers, i.e. intelligent non-experts in the field.

Context

Teaching writing

There are several schools of thought on teaching writing skills to engineering students. Some institutions have specific units or modules within the curriculum in which ‘softer’ skills such as writing are taught (Martin, Maytham, Case, & Fraser, 2005; Robinson & Blair, 1995); others (particularly in the USA) have implemented a ‘Writing Across the Curriculum’ (WAC) approach (Kinneavy, 1983); still others make no specific provision, expecting students to pick up the skills as they progress through other tasks. Given industry concerns about poor writing skills in graduates (Institution of Engineering and Technology, 2017), these approaches do not appear to be working well.

From a delivery point of view, all of these approaches have difficulties. Using specific units to teach soft skills usually involves many written assessments and hence a large marking load. Robinson and Blair (1995) reported that students were unhappy with the lack of detailed feedback on their work and often did not receive feedback in time to use it in their next submission. Melin Emilsson and Lilje (2008) found that teaching soft skills in a separate unit did not provide lasting benefits; they suggested that such skills need to be reinforced over a longer period and also modelled by the staff responsible for teaching the hard skills.

One implementation of the WAC approach relies on all staff incorporating opportunities to write within their units, and securing this support can be difficult, especially in research-intensive institutions (Fulwiler, 1984). Large class sizes also complicate matters, requiring large numbers of highly trained assistants.

Yalvac, Smith, Troy, and Hirsch (2007) found that the presence of a writing element within a unit was not sufficient for students to learn to write well, but that adding oral peer discussions improved the students’ written performance. This highlights the importance of engaging students in a feedback dialogue to encourage better self-regulation and higher learning gains.

Situation at the University of Bristol

The Mechanical Engineering department at the University of Bristol follows the third school of thought described above: it provides no explicit teaching of writing skills and expects students to pick them up as they complete the rest of the curriculum. In the third and fourth years, students are expected to submit a written report on their individual (third year) and group (fourth year) projects. Each project accounts for one-third of the mark for its year. In the first and second years, students follow several compulsory units covering core engineering science topics. Assessment for each unit typically comprises 90% exam and 10% laboratory work. Each year group comprises up to 200 students, and laboratory sessions are delivered to smaller groups of 8–10 students at a time.

A wide range of assessments is used for laboratory work: some units award credit simply for attendance; most require calculations to be completed and/or technical questions to be answered; and a minority require a short written report. This variety is not ideal for teaching writing skills, for three reasons. First, the lack of consistent expectations (even among the few labs that require a written report) can confuse students. Second, there are only a limited number of opportunities to practise writing longer documents, which limits the scope for improving performance. Third, it is not always clear how feedback on one report is relevant to future assessments. These problems run counter to the recommendations made by Nicol and Macfarlane-Dick (2006) for helping students to become more self-regulated.

Method

This case study attempts to improve student writing skills by trialling a new approach in two laboratories. The new approach aims to clarify students’ understanding of what ‘good’ writing looks like and, through a ‘Reflective comments’ section, to encourage them to reflect before submission on how their work compares with this ideal. The two laboratories were practical experiments in thermodynamics, one in first year and one in second year. Both laboratories require submission of a similar technical report, which is assessed and worth 1 credit (out of a total of 120 credits each year). Approximately 300 students were involved in this case study (135 first years and 171 second years). During the laboratory, students are given a set of guidelines that describe the assessors’ expectations for the assessment. Gibbs and Simpson (2005) state that assessments benefit student learning only when the expectations are clearly communicated. As such, the guidelines cover the expected style, length, structure, and content. They also direct students to other useful resources on topics such as referencing and writing style. The guidelines specify the section headings that should be used in the report and the points that should be considered in each section. Students are also informed that the mark scheme is based on how well they satisfy the requirements outlined in the guidelines. The guidelines therefore communicate assessor expectations clearly and provide an ideal against which a self-regulated student can self-assess.

In previous years, a significant number of students appeared not to engage with these guidelines, and assessors noted a range of problems. Basic errors included not following the required formatting instructions or exceeding the maximum word limit. Common presentational mistakes related to figures and tables in the Results section. More serious, content-related problems included omitting necessary sections of the report or not attempting to include all the necessary information in each section. Hence, as part of this work, an extra section was added to the report guidelines. In a pilot year, this section had been optional, but the completion rate was below 10%. Low completion rates are a common problem with optional work, particularly for students in the early years of study (National Center for Academic Transformation, 2006; Twigg, 2015). The marks of the small number of students who completed the section appeared higher than those of the rest of the cohort, but the numbers were too small to draw reliable conclusions. The section was therefore made compulsory for this case study. The guidelines for the ‘Reflective comments’ section stated:

The mark scheme for this lab assesses how well you have fulfilled each of the required contents sections. By spending some time reflecting on your report and how it relates to the required contents, you have the opportunity to improve your work. Consider how well you think you have satisfied the requirements, and state any areas you would like specific feedback on.

By explicitly encouraging students to compare their work with the requirements, we expected them to become more familiar with the structure and content of a formal report. Self-evaluation is also a key strategy in self-regulation, and the additional section encourages students to practise this skill by identifying their own priority areas for specific feedback. It was assumed that students would request the feedback they felt would be most useful to them and would therefore be more likely to use it to improve future work.

Results

The impact of adding the ‘Reflective comments’ section was assessed by monitoring the marks awarded, recording how many students accessed the feedback on their reports, and surveying students twice: once before they completed the lab and once after the reports had been marked. All data were collected anonymously, and students were informed that participation in surveys was voluntary. Ethics approval was obtained from the University of Bristol Research Ethics Committee.

Student perceptions

Students were surveyed in an attempt to identify some of the problems they face when writing reports. When asked how important writing skills would be in their future careers (on a scale from 1 (useless) to 5 (vital)), students gave a mean score of 4.1 (see Figure 1).

Figure 1. Student responses to the question ‘How important do you think writing skills will be in your future career?’ given on a 5-point scale from 1 (useless) to 5 (vital)
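The text does not state how the mean scores were computed. Assuming they are ordinary arithmetic means of the 1–5 responses, and writing $n_k$ (a notation introduced here for illustration) for the number of respondents choosing point $k$ on the scale, the mean score would be:

$$
\bar{x} = \frac{\sum_{k=1}^{5} k \, n_k}{\sum_{k=1}^{5} n_k}
$$

Because the scale is ordinal, such a mean is only a rough summary; the full response distributions shown in the figures carry more information.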

When asked to rate their confidence in writing a technical report on a scale from 1 (no idea where to start) to 5 (very confident), students gave a mean response of 2.6 before the practical laboratory (see Figure 2). This reflects the low confidence expected of novices approaching an unfamiliar task. It was particularly true of first-year students, 85% of whom had previously written fewer than two reports and who gave a mean confidence score of 2.1.

Figure 2. Student responses to the question ‘How confident do you feel about writing a formal lab report?’ given on a 5-point scale from 1 (no idea where to start) to 5 (very confident). The responses are compared before students completed the laboratory and after they completed the written report

After completing the assessment (including discussing the guidelines during the lab and viewing feedback on their submissions), students gave a mean confidence score of 3.1. This is a noticeable improvement after just one attempt at writing a report, and confidence would be expected to improve further if students were given the opportunity to write additional reports.

As part of the process, students were given a detailed set of guidelines on what should be included in the report, to clarify expectations and encourage self-regulation. It was also stressed that the mark scheme had been developed to assess how well they satisfied the guidelines. Students were asked how closely the guidelines matched their expectations for a technical report on a scale from 1 (completely different) to 5 (exactly the same), giving a mean score of 3.6 (see ). This is lower than hoped but unsurprising, given that novices do not know what a report should involve. Students were also asked how clear the mark scheme was on a scale from 1 (unclear) to 5 (very clear), giving a mean score of 3.3 (see ).

‘Reflective comments’ section

Despite the ‘Reflective comments’ section being nominally compulsory, only 70% of the students completed it. As no marks were directly associated with the section, it is likely that the other 30% of the students thought it was optional. Of the students who completed the section, 60% felt that doing so helped them improve their work before submission, with qualitative responses including comments such as ‘made me realise mistakes and correct them’ and ‘made me re-read my work’. Students also highlighted the usefulness of being able to ask for, and receive, feedback on specific areas of their work. The resulting targeted feedback also helps students (especially novices) to identify how their performance compares with the ideal and how they can improve in future.

For the 40% of completing students who did not find the section helpful, the main problem appeared to stem from their being novices. Typical comments explaining why the section was not helpful included ‘couldn’t comment on how I had done as I didn’t have any idea what it should be like because we hadn’t had the feedback’ and ‘didn’t know what was wrong’.
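The 60% and 40% figures refer to students who completed the section, not to the whole cohort, so it can help to re-express them as shares of all students. The sketch below does this arithmetic under the assumption (not stated in the text) that the survey respondents are representative of all completers; the helper name `cohort_shares` is hypothetical.

```python
def cohort_shares(completed: float, helpful_given_completed: float) -> dict[str, float]:
    """Convert shares conditional on completion into shares of the whole cohort.

    Hypothetical helper; assumes survey respondents represent all completers.
    """
    return {
        "completed_and_helpful": completed * helpful_given_completed,
        "completed_not_helpful": completed * (1 - helpful_given_completed),
        "did_not_complete": 1 - completed,
    }


# Figures reported in the text: 70% completed the section, 60% of whom found it helpful.
shares = cohort_shares(completed=0.70, helpful_given_completed=0.60)
for group, share in shares.items():
    print(f"{group}: {share:.0%}")
```

On these assumptions, roughly 42% of the whole cohort both completed the section and found it helpful, 28% completed it without finding it helpful, and 30% did not complete it.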

Engagement with feedback

All reports were submitted through Turnitin, which allows assessors to see whether a student has accessed their feedback. In previous years, approximately 50% of the students accessed their feedback; after the addition of the ‘Reflective comments’ section, 73% did so. Students commented that completing the section made them ‘able to take note where to improve next time’ and gave the usefulness of the feedback for future reports a mean rating of 3.9 (see ). This suggests that the feedback is likely to be used in a feedforward manner.
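The change in feedback access can be expressed either in percentage points or as a relative increase, and the two are easily confused. A minimal sketch of both calculations, using the rounded figures from the text (the earlier rate is only approximate) and a hypothetical helper `access_change`:

```python
def access_change(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change) between two rates."""
    point_change = (after - before) * 100        # difference in percentage points
    relative_change = (after - before) / before  # change as a fraction of the old rate
    return point_change, relative_change


# Rates reported in the text: about 50% before, 73% after.
pp, rel = access_change(before=0.50, after=0.73)
print(f"+{pp:.0f} percentage points ({rel:.0%} relative increase)")
```

That is, an increase of about 23 percentage points, or roughly 46% relative to the earlier access rate.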

Attainment

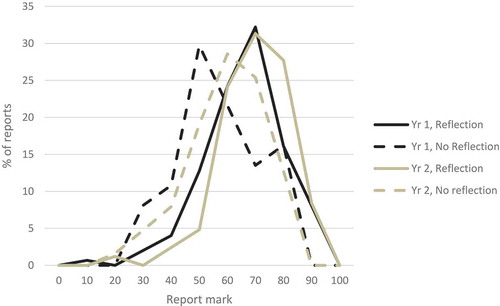

Before the ‘Reflective comments’ section was introduced, the mean mark awarded for these reports was 55% for both year groups. Following its introduction, the mean mark for students who completed the section was 63% in first year and 67% in second year (see ). By comparison, the mean marks for those who did not complete the section were 55% (first year) and 57% (second year).
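The mark differences above can be tabulated directly. The sketch below computes, for each year, the gap between students who did and did not complete the section and the difference from the previous years’ mean of 55%; the helper name `mark_gaps` is hypothetical. Because students in effect chose whether to complete the section, these are descriptive differences between self-selected groups rather than estimates of the section’s causal effect.

```python
# Mean marks (%) reported in the text.
MEAN_MARKS = {
    "first year": {"completed": 63, "not_completed": 55},
    "second year": {"completed": 67, "not_completed": 57},
}
PREVIOUS_MEAN = 55  # mean mark in previous years, for both year groups


def mark_gaps(marks: dict[str, dict[str, int]], previous: int) -> dict[str, tuple[int, int]]:
    """Per year: (completers minus non-completers, completers minus previous mean)."""
    return {
        year: (m["completed"] - m["not_completed"], m["completed"] - previous)
        for year, m in marks.items()
    }


for year, (vs_non, vs_prev) in mark_gaps(MEAN_MARKS, PREVIOUS_MEAN).items():
    print(f"{year}: +{vs_non} vs non-completers, +{vs_prev} vs previous years")
```

This gives gaps of 8 and 10 percentage points between completers and non-completers in first and second year, respectively.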

Discussion

Students recognised the importance of writing skills to their future careers but did not initially feel confident writing a technical report. These findings contradict suggestions in the literature that students write badly because they do not appreciate the importance of writing skills (Beer & McMurrey, 2009), but support the idea that students do not understand what ‘good’ writing looks like and so cannot self-regulate effectively (Bandura, 1991).

The guidelines provided went some way towards clarifying students’ expectations of the report structure and of how they would be assessed, enabling them to self-regulate against a more accurate understanding of ‘good’ performance (Nicol & Macfarlane-Dick, 2006). The ‘Reflective comments’ section explicitly encouraged self-regulation, with the majority of students who completed it reporting that it had made them reflect on their work before submission and attempt to improve it, both key steps in self-regulation. However, a non-negligible proportion of the cohort felt unable to comment on how their work compared with the requirements, suggesting that the guidelines had not clarified expectations enough for them to self-regulate. This was to be expected of novice students; however, given that the guidelines specified the content required in each section and were discussed at the end of each lab, it is disappointing that students still struggled with this reflection. As expected, students who completed the reflective section also achieved higher marks than those who did not.

Student engagement with feedback was substantially higher than in previous years (73% of students accessed their feedback, compared with approximately 50% previously), and students appreciated the possibility of using this feedback in future work, further suggesting that our approach has encouraged students to become more self-regulated. The high engagement with feedback, together with positive student comments in the survey, indicates that the ‘Reflective comments’ section has also moved the traditional feedback monologue towards a more beneficial dialogic process within the constraints of a relatively large class size, as advocated by Nicol (2010).

Limitations and recommendations

Over the course of this study, several areas for future improvement were identified. Despite the ‘Reflective comments’ section being compulsory, 30% of the students did not complete it. As these students achieved lower marks, efforts should be made to increase the proportion of students who engage with this section. One way to do this might be to stress its benefits further, citing the higher marks of students who complete it as motivation, or to reassure students that the content of the section matters less than the process of reflecting on their own work.

Another potential improvement would be to increase the number of reports that students are expected to submit and to ensure that expectations are consistent from one report to the next. To avoid excessively increasing the workload and overwhelming students, a scaffolded approach could be considered (Wood, Bruner, & Ross, 1976), whereby students would be asked to complete a different part of a report for each laboratory. A scaffold with consistent expectations from one report to the next would allow students to implement their feedback easily in future reports, further developing their self-regulation and giving them practice in writing. A scaffolded approach is currently being developed within Mechanical Engineering at the University of Bristol, and initial results are promising (Selwyn, Renaud-Assemat, Lazar, & Ross, 2018).

It is vital to provide clear guidelines so that students can begin to self-regulate their performance. The guidelines provided during this study were perceived by students as less clear than hoped, so further efforts should be made to clarify expectations. These efforts might include adding a seminar in which students discuss the work with their peers and co-develop a detailed set of expectations for it.

Conclusions

The results of this case study were positive overall. Students appreciated the ‘Reflective comments’ section as a means of accessing more specific feedback. The section explicitly encouraged and supported the development of self-regulation skills. Students were more engaged with their feedback, and attainment improved for those students who completed the reflective section.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Allen, J. (1990). The case against defining technical writing. Journal of Business and Technical Communication, 4(2), 68–77. doi:10.1177/105065199000400204

- Bandura, A. (1982). Self-efficacy mechanism in human agency. American Psychologist, 37(2), 122–147. doi:10.1037/0003-066X.37.2.122

- Bandura, A. (1991). Social cognitive theory of self-regulation. Organizational Behavior and Human Decision Processes, 50(2), 248–287. doi:10.1016/0749-5978(91)90022-L

- Beer, D., & McMurrey, D. (2009). A guide to writing as an engineer. USA: John Wiley & Sons, Inc.

- Boekaerts, M. (1997). Self-regulated learning: A new concept embraced by researchers, policy makers, educators, teachers, and students. Learning and Instruction, 7(2), 161–186. doi:10.1016/S0959-4752(96)00015-1

- Carter, M.J., & Harper, H. (2013). Student writing: Strategies to reverse ongoing decline. Academic Questions, 26(3), 285–295. doi:10.1007/s12129-013-9377-0

- Direito, I., Pereira, A., & Duarte, A.M. (2012). Engineering undergraduates’ perceptions of soft skills: Relations with self-efficacy and learning styles. Procedia - Social and Behavioral Sciences, 55, 843–851. doi:10.1016/j.sbspro.2012.09.571

- Emig, J. (1977). Writing as a mode of learning. College Composition and Communication, 28(2), 122–128. doi:10.2307/356095

- Engineering Council. (2014). UK-SPEC UK standard for professional engineering competence (3rd ed.). UK: Author. Retrieved from https://www.engc.org.uk/engcdocuments/internet/Website/UK-SPEC%20third%20edition%20(1).pdf

- Fulwiler, T. (1984). How well does writing across the curriculum work? College English, 46(2), 113–125. doi:10.2307/376857

- Gibbs, G., & Simpson, C. (2005). Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education, 1, 3–31.

- Grossberg, K.M. (1978). ERIC/RCS report: The truth about technical writing. The English Journal, 67(4), 100–104. doi:10.2307/815643

- Institution of Engineering and Technology. (2017). Skills & demand in industry 2017 survey. UK: IET.

- Itani, M., & Srour, I. (2016). Engineering students’ perceptions of soft skills, industry expectations, and career aspirations. Journal of Professional Issues in Engineering Education and Practice, 142(1), 04015005-1–04015005-12.

- Kinneavy, J.L. (1983). Writing across the curriculum. Profession, 13–20.

- Lievens, J. (2012). Debunking the ‘nerd’ myth: Doing action research with first-year engineering students in the academic writing class. Journal of Academic Writing, 2(1), 74–84. doi:10.18552/joaw.v2i1

- Martin, R., Maytham, B., Case, J., & Fraser, D. (2005). Engineering graduates’ perceptions of how well they were prepared for work in industry. European Journal of Engineering Education, 30(2), 167–180. doi:10.1080/03043790500087571

- McPherson, G., & Zimmerman, B.J. (2011). Self-regulation of musical learning: A social cognitive perspective on developing performance skills. In R. Colwell & P.R. Webster (Eds.), MENC handbook of research on music learning (pp. 130–175). Oxford, UK: Oxford University Press.

- Melin Emilsson, U., & Lilje, B. (2008). Training social competence in engineering education: Necessary, possible or not even desirable? An explorative study from a surveying education programme. European Journal of Engineering Education, 33(3), 259–269. doi:10.1080/03043790802088376

- Moore, T., & Morton, J. (2017). The myth of job readiness? Written communication, employability, and the ‘skills gap’ in higher education. Studies in Higher Education, 42(3), 591–609. doi:10.1080/03075079.2015.1067602

- National Center for Academic Transformation. (2006, April). The learning marketspace. NCAT Newsletter. Retrieved from http://www.thencat.org/Newsletters/Apr06.htm

- Nguyen, D.Q. (1998). The essential skills and attributes of an engineer: A comparative study of academics, industry personnel and engineering students. Global Journal of Engineering Education, 2(1), 65–75.

- Nicol, D.J. (2010). From monologue to dialogue: Improving written feedback processes in mass higher education. Assessment & Evaluation in Higher Education, 35(5), 501–517. doi:10.1080/02602931003786559

- Nicol, D.J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218. doi:10.1080/03075070600572090

- Pintrich, P.R., & de Groot, E.V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82(1), 33–40. doi:10.1037/0022-0663.82.1.33

- Ramsden, P., & Entwistle, N.J. (1981). Effects of academic departments on students’ approaches to studying. British Journal of Educational Psychology, 51(3), 368–383. doi:10.1111/bjep.1981.51.issue-3

- Robinson, C.M., & Blair, G.M. (1995). Writing skills training for engineering students in large classes. Higher Education, 30(1), 99–114. doi:10.1007/BF01384055

- Selwyn, R., Renaud-Assemat, I., Lazar, I., & Ross, J. (2018, July). Improving student writing skills using a scaffolded approach. In Proceedings of the 7th International Symposium for Engineering Education. University College London, London, UK.

- Twigg, C.A. (2015). Improving learning and reducing costs: Fifteen years of course redesign. Change: the Magazine of Higher Learning, 47(6), 6–13.

- Wood, D., Bruner, J.S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, 17(2), 89–100. doi:10.1111/jcpp.1976.17.issue-2

- Yalvac, B., Smith, H.D., Troy, J.B., & Hirsch, P. (2007). Promoting advanced writing skills in an upper-level engineering class. Journal of Engineering Education, 96(2), 117–128. doi:10.1002/jee.2007.96.issue-2

- Zimmerman, B.J. (1986). Becoming a self-regulated learner: Which are the key subprocesses? Contemporary Educational Psychology, 11(4), 307–313. doi:10.1016/0361-476X(86)90027-5

- Zimmerman, B.J. (2002). Becoming a self-regulated learner: An overview. Theory into Practice, 41(2), 64–70. doi:10.1207/s15430421tip4102_2